An EEG–MEG dissociation between online syntactic comprehension and post hoc reanalysis

- Language Section, National Institute on Deafness and Other Communication Disorders, National Institutes of Health, Bethesda, MD, USA

Successful comprehension of syntactically complex sentences depends on online language comprehension mechanisms as well as reanalysis in working memory. To differentiate the neural substrates of these processes, we recorded electroencephalography (EEG) and magnetoencephalography (MEG) during sentence-picture-matching in healthy subjects, assessing the effects of two difficulty factors, syntactic complexity (object-embedded vs. subject-embedded relative clauses) and semantic reversibility, on neuronal oscillations during sentence presentation and during a subsequent memory delay prior to picture onset. Synthetic aperture magnetometry (SAM) analysis of MEG showed that semantic reversibility induced left-lateralized perisylvian power decreases in a broad frequency range, approximately 8–30 Hz. This effect followed the relative clause presentation and persisted throughout the remainder of the sentence and the subsequent memory delay period, shifting to a more frontal distribution during the delay. In contrast, syntactic complexity induced enhanced power decreases only during the delay period, in bilateral frontal and anterior temporal regions. These results indicate that detailed syntactic parsing of auditory language input may be augmented in the absence of alternative cues for thematic role assignment, as reflected by selective perisylvian engagement for reversible sentences, compared with irreversible sentences in which world knowledge constrains possible thematic roles. Furthermore, comprehension of complex syntax appears to depend on post hoc reanalysis in working memory implemented by frontal regions in both hemispheres.

Introduction

A central theme in sentence processing is the distinction between automatic, instantaneous processes of comprehension and post hoc processes of reanalysis and repair. While the former is commonly thought to depend on encapsulated, or “modular” mechanisms specialized for language, the latter is generally held to depend on interaction with more general mental resources such as working memory (Carpenter et al., 1995; Caplan and Waters, 1999), executive function (Martin and Allen, 2008), and cognitive control (Novick et al., 2005). The distinction between instantaneous and post hoc mechanisms of comprehension informs our understanding of deficits in sentence-level comprehension occurring in aphasic patients who have intact comprehension on the single-word level. The finding that aphasic patients may fail to understand thematic roles, e.g. “who did what to whom” in the presence of certain more difficult syntactic structures (Caramazza and Zurif, 1976) has generated a great deal of controversy. Some authors have claimed that Broca’s aphasia in particular entails a loss of core aspects of syntactic competence, resulting in a failure to comprehend certain structures but not others (Grodzinsky, 2000). Other authors have claimed instead that sentence comprehension difficulties stem from a more general deficit of cognitive resources not specific to syntax (Caplan et al., 2007a).

Sentence comprehension is commonly assessed with a sentence-picture-matching task. Subjects select a picture from a field of two or more, with the correct picture depicting an action described in an auditorily presented sentence. In this task, subjects are required to understand not only the individual meanings of nouns and verbs, but also the “thematic roles” of the individual nouns, conveyed in English through syntactic cues involving word order. Thus, for a sentence such as “The girl is pushing the boy,” subjects may have to distinguish a correct picture depicting a girl pushing a boy, from a syntactic foil picture in which a boy is pushing a girl. Studies of language comprehension in aphasic patients have identified specific factors of individual sentences that lead to comprehension difficulties. One such factor is generally referred to as “syntactic complexity.” Although the complexity of any individual sentence is dependent on the model of grammar employed to analyze it, there is general agreement that some sentences are more complex than others of the same length. In English, there is a strong tendency for the noun bearing the AGENT role to precede the noun bearing the PATIENT role in linear order, and structures that violate this tendency are considered to be more complex. These include the passive voice, e.g., “The girl is being pushed by the boy,” and object-embedded relative clauses, e.g., “The girl who the boy is pushing is tall.” Broca’s aphasics frequently exhibit chance-level comprehension of thematic roles in such complex sentences (Drai and Grodzinsky, 2006), while comprehension is generally preserved in non-complex alternatives like simple actives “The boy is pushing the girl” or subject-embedded relative clauses “The boy who is pushing the girl is tall.”

Another factor influencing comprehension performance is semantic reversibility. Typically, an irreversible sentence such as “The apple that the boy is eating is red” will be understood by aphasic patients despite the presence of the syntactically complex object-embedded relative clause. This is because the meanings of the words constrain the possible interpretation; people know that boys eat apples and not the other way around. Behavioral studies have suggested that in ordinary language comprehension, a full consideration of syntactic information may occur only “as needed,” but that a so-called “good enough” strategy based mainly on lexical integration of word meaning may be the primary mechanism of language comprehension in most cases (Ferreira et al., 2002; Sanford and Sturt, 2002). According to this viewpoint, semantic reversibility induces an extra need for receptive syntactic processing, and complex syntax (in the context of a reversible sentence) induces a still greater need for syntactic processing. However, it is unclear whether the extra processing induced by these factors occurs automatically in real time, or instead as a consciously controlled reanalysis in working memory.

Previous fMRI studies have examined the effect of syntactic complexity within reversible sentences, finding increased activation in response to complex sentences in the left inferior frontal gyrus (Just et al., 1996; Stromswold et al., 1996; Caplan et al., 1999; Ben-Shachar et al., 2003) and sometimes in other regions (Caplan, 2001; Caplan et al., 2002; Yokoyama et al., 2007). One study performed by our group examined the separate effects of reversibility and complexity in a 2 × 2 factorial design (Meltzer et al., 2010). In that study, we found that reversible sentences induced greater BOLD signals throughout the perisylvian language network, comprising both left frontal and left parietal–temporal regions, consistent with the need to apply a greater degree of syntactic analysis on these sentences. Syntactic complexity within reversible sentences induced an additional signal change on top of that induced by reversibility, but only in the frontal regions. Syntactically complex reversible sentences also evoked a higher hemodynamic response upon subsequent picture presentation in bilateral frontal regions, suggesting a role for these areas in post hoc reanalysis of sentence structure.

Selective activations in fMRI to complex sentences are compatible with extra syntactic processing occurring either instantaneously or as a post hoc process in working memory. Since the reanalysis phase would always follow immediately after the initial phase of online comprehension, the two processes would contribute to a single augmented hemodynamic response, reflecting neural activity integrated over a period of several seconds. Electrophysiological methods such as electroencephalography (EEG) and magnetoencephalography (MEG) can measure the unfolding of sentence parsing in real time, and may offer a useful alternative. EEG studies have examined several event-related potentials (ERPs) in sentence comprehension, including left anterior negativity (LAN), associated with syntactic processing (Friederici and Mecklinger, 1996) and verbal working memory (King and Kutas, 1995), N400, associated with semantic processing (Kutas and Federmeier, 2000), and P600, which is detected in response to linguistic anomalies of various kinds (Osterhout et al., 1997; Kuperberg, 2007). However, relatively few studies have sought to distinguish between activity related to first-pass sentence comprehension and post hoc reanalysis in working memory extending several seconds after sentence presentation.

The distinction between first-pass online comprehension and post hoc reanalysis is likely to play a major role in future understanding of language comprehension, as evidence accumulates that comprehension is heavily influenced by context and attentional factors under volitional control. While reanalysis of auditory language input can be imposed on subjects using such techniques as temporary syntactic ambiguity and “garden-path” sentences, behavioral studies have suggested that effortful reanalysis may be required for correct comprehension of non-ambiguous syntactically complex sentences, and that listeners often fail to carry out sufficient analysis for proper interpretation in casual listening paradigms without explicit task demands (Sanford and Sturt, 2002; Ferreira, 2003). Under the so-called “good enough” view of language comprehension (Ferreira et al., 2002), full syntactic parsing is seen as an optional process that may be carried out as needed. However, such post hoc reanalysis is likely to play a major role in the performance of the gold-standard test for syntactic comprehension, sentence-picture-matching. Therefore, we sought in this study to use novel electrophysiological methods to investigate the timecourse of syntactic processing at a finer level than what is available in conventional methods.

Methodological limitations have largely precluded the study of post hoc reanalysis in neuroimaging experiments on sentence comprehension. Event-related responses in the time domain require precise phase locking to an external stimulus, precluding the examination of activity extended beyond several hundred milliseconds, while hemodynamic methods such as fMRI cannot distinguish between immediate and post hoc processes that are closely spaced in time, although in principle separable. In contrast, induced oscillatory responses can be examined through time–frequency analysis without phase locking, and can be measured in real time over intervals ranging from hundreds of milliseconds up to several seconds and beyond. Given recent advances in MEG-based source localization of oscillatory responses, this technique may offer the ideal combination of spatial and temporal resolution to dissociate different processing stages involved in sentence comprehension. In particular, decreases in oscillatory power in the alpha (8–12 Hz) and beta (15–30 Hz) ranges have been shown to be colocalized with BOLD responses in many different regions of the cortex (Brookes et al., 2005; Hillebrand et al., 2005), and induced in a wide range of cognitive paradigms including language processing (Singh et al., 2002; Kim and Chung, 2008).

In the present study, we exploit the temporal properties of the oscillatory response to examine neural activity occurring during the immediate perception of a sentence and during a subsequent memory delay, two stages that would be impossible to separate using hemodynamic methods such as fMRI. We recorded EEG simultaneously with MEG, analyzing oscillatory activity within specific time windows within the sentence and during a subsequent memory delay. We then analyzed the MEG data with synthetic aperture magnetometry (SAM), yielding statistical maps that can be directly compared with fMRI results. These analyses revealed that both reversibility and syntactic complexity induce enhanced neural responses to sentences, but with temporal and spatial dissociations between the effects, compatible with separable processes of online comprehension and post hoc reanalysis.

Materials and Methods

Subjects

Twenty-four healthy volunteers (12 female, age 22–37) were recruited from the NIH community. All were right-handed monolingual native speakers of English, with normal hearing and (corrected) vision and no history of neurological or psychological disorders. Subjects gave informed consent (NIH protocol 92-DC-0178) and were financially compensated. All subjects participated in two experimental sessions. In the first session, EEG and MEG data were acquired simultaneously, and these are the subject of this report. In the second session, the same subjects completed an fMRI version of the sentence-picture-matching task with a slightly different experimental design. The fMRI data have been reported elsewhere (Meltzer et al., 2010), but will be discussed briefly below for purposes of comparison with MEG localization.

Materials

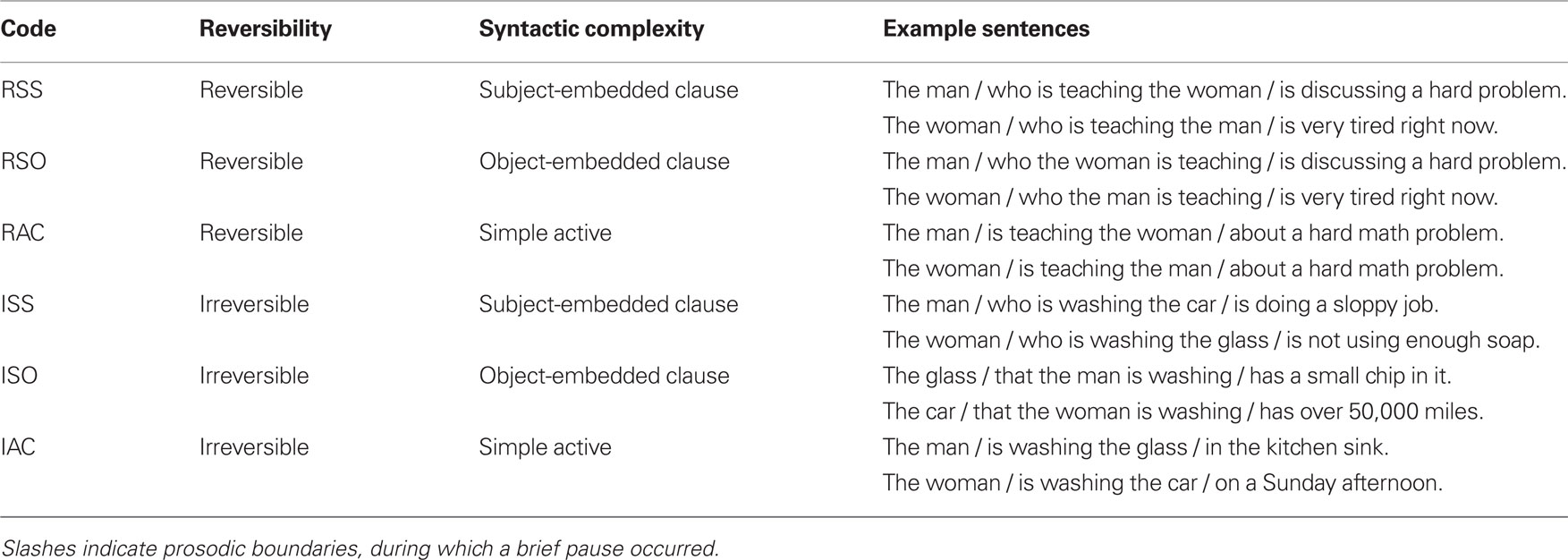

Two hundred fifty-two sentences were used for this experiment, in six categories, for a 2 × 3 factorial design (semantic reversibility × syntactic complexity). These were selected from a pool of 540 sentences constructed for this experiment and the parallel fMRI experiment. All sentences involved one or two out of four possible people, namely “the boy, the girl, the man, and the woman.” Reversible sentences (R) involved a human as both subject and object, and were constructed to avoid plausibility biases. Irreversible sentences (I) involved one human and one inanimate object. Three levels of syntactic complexity were employed, with examples shown in Table 1.

In keeping with previous studies of syntactic processing (e.g., Caplan et al., 2002), we use the abbreviation “Subject subject” (SS) for sentences with the syntactically simpler subject-embedded relative clauses, in which the subject of the relative clause is also the subject of the main clause. Sentences with the more complex object-embedded relative clauses are termed “Subject object” (SO), as the initial noun serves as the subject of the main clause but object of the relative clause. We adopt the abbreviation “AC” for simple active sentences, which are also considered non-complex, as they follow the canonical “agent-first” word order, similar to the SS sentences. The abbreviations (RSS, RSO, etc.) will be used throughout the paper to denote the six conditions. The primary contrast of interest is between the two types of embedded clause, as the lexical content of these sentences was strictly controlled by using the same main clauses with different initial structures (see supplementary information for a full list of sentences). All conditions were matched in length of sentences in words and syllables. The simple active condition was included as an additional control condition, and also as “filler” to reduce subjects’ habituation to the relative clause structure.

For each level of reversibility, 45 verbs were chosen for sentence construction. All were chosen for easy imageability to facilitate the production of photographs for target and distractor stimuli. For reversible sentences, in order to avoid an over-reliance on verbs of violence (bite, pinch, kick, etc.), we also used some phrasal verbs (e.g., pick up, whisper to, sneeze on). Phrasal verbs were also used in the irreversible condition (e.g., put away, spread out, flip over). Reversible sentences were matched item-wise across conditions by using the same ending material in multiple sentences. For the irreversible sentences, it was not possible to use item-wise matching, as the ending material for the object-embedded sentences had to apply to an inanimate object instead of a person. Main verbs used in reversible and irreversible conditions were matched on word frequency (derived from the Brown corpus; Kucera and Francis, 1967) and familiarity (taken from the MRC Psycholinguistic Database). For other words in the sentence, it was an inevitable consequence of the design that the irreversible sentences would have lower-frequency words, given the large number of different inanimate objects occurring in those sentences, whereas “man, woman, boy, girl” are all very frequent words. We compared the mean frequency for open-class words in the second and third portions of the sentence. A strong word-frequency difference was present during the relative clause portion of the sentence (mean Rev: 2.27 × 10⁻⁴, mean Irrev: 1.15 × 10⁻⁴, t = 6.02, p < 0.001), but not during the post-relative clause segment (mean Rev: 1.87 × 10⁻⁴, mean Irrev: 1.73 × 10⁻⁴, t = 0.879, p = 0.38). The difference occurring during the relative clause is unlikely to affect our results, as significant differences in MEG reactivity occurred only during the post-relative clause segment and the subsequent memory delay, likely reflecting the increased syntactic demand of the reversible sentences. Furthermore, any effect of word frequency would most likely be in the opposite direction to our reported results, as the irreversible sentences had words of lower frequency, which have consistently been shown to evoke increased brain activation (Chee et al., 2002; Halgren et al., 2002).

In all relative clause sentences, the imageable information was presented in the relative clause, while the main clause presented extraneous information that was not depicted in the picture. For each verb, six sentences were generated, two of each sentence structure, with separate pools of verbs used for reversible and irreversible categories. Example sextets of sentences for two verbs are presented in Table 1.

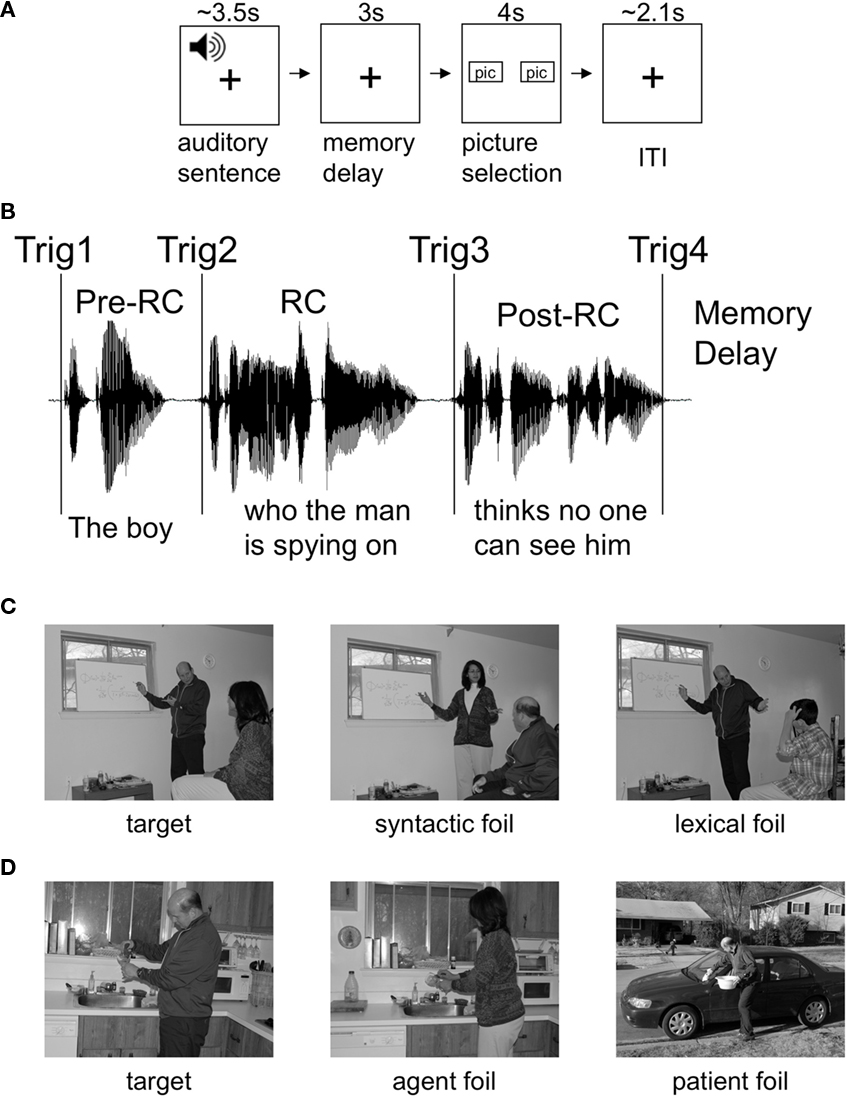

Sentences were digitally recorded by a female voice artist, sampled at 44.1 kHz, and subsequently edited in Audacity software¹ as individual wav files. Spoken sentences averaged 3.45 s in length. Care was taken to record all sentences with a consistent, neutral prosody pattern, and sentences that were judged to deviate from it by the first author and/or a second rater were re-recorded. Each sentence was decomposable into three prosodic units, with a brief pause between them. Digital triggers were manually inserted into the wav files at the boundaries between the three sentence regions, delineating the pre-relative-clause segment (“Pre-RC”), the relative clause (“RC”), and the post-relative-clause segment (“Post-RC”), as shown in Figure 1B. These triggers were inserted into the EEG–MEG acquisition stream to allow for analysis of activity within particular regions of the sentence, and also of activity occurring in the memory delay following the sentence.

Figure 1. Task design. (A) Schematic illustration of trial structure. On each trial, subjects heard a sentence while viewing a fixation cross. After a 3-s delay, two pictures appeared, the target and a foil. (B) Illustration of the placement of digital triggers within the sound files of individual sentences, to allow analysis of EEG–MEG data timelocked to specific points within and following the sentence. (C) A sample picture set for the reversible sentence “The woman who the man is teaching is very tired right now.” The target shows the correct arrangement. A syntactic foil has the thematic roles of the two named actors switched, while a lexical foil substitutes one of the actors. (D) A sample picture set for the non-reversible sentence “The glass that the man is washing has a small chip in it.”

For each group of six sentences involving a particular verb, we produced four photographs for the picture-matching task (Figures 1C,D). The photographs all involved the same set of four actors (man, woman, boy, and girl). Subjects were familiarized with the actors before the experiment so that they could clearly distinguish them. For a reversible sentence such as “The boy who the girl is pushing hopes to win the race,” the target picture depicted a girl pushing a boy, while the syntactic foil depicted a boy pushing a girl. For trials with syntactic foils, it is necessary to use syntactic information to assign thematic roles correctly, as the same two people are mentioned in the sentence. Lexical foils involved a person not mentioned in the sentence, depicting, e.g., a girl pushing a woman, or a woman pushing a girl. For irreversible sentences, only lexical foils were used, but either the human agent or the inanimate patient could be substituted. For reversible sentences, half of the picture pairs involved a syntactic foil, and half a lexical foil. For irreversible sentences, half of the pictures involved a substitution of the human agent, and half of the inanimate patient. Thus, for behavioral purposes, there were a total of 12 conditions (2 reversibility × 3 complexity × 2 foil type), with 21 trials of each. However, since the subjects did not know what type of foil would appear on each trial, EEG–MEG analysis of brain activity occurring prior to picture onset was collapsed across foil types, yielding 42 trials per condition.
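The trial counts above can be checked with a short enumeration. The following is only an illustrative sketch: the condition labels `RAC` and `IAC` and the generic foil labels are hypothetical names introduced here, not terms taken from the study materials.

```python
from itertools import product

REVERSIBILITY = ("R", "I")        # reversible, irreversible
STRUCTURE = ("SS", "SO", "AC")    # subject-embedded, object-embedded, simple active
FOIL = ("foil_a", "foil_b")       # two foil types per reversibility level (hypothetical labels)
TRIALS_PER_BEHAVIORAL_CELL = 21

# 2 x 3 x 2 behavioral design
behavioral_cells = list(product(REVERSIBILITY, STRUCTURE, FOIL))
total_trials = len(behavioral_cells) * TRIALS_PER_BEHAVIORAL_CELL

# Collapse across foil type for the analysis of activity before picture onset.
eeg_meg_trials = {}
for rev, struct, _foil in behavioral_cells:
    condition = rev + struct      # e.g. "RSO"
    eeg_meg_trials[condition] = (
        eeg_meg_trials.get(condition, 0) + TRIALS_PER_BEHAVIORAL_CELL
    )

print(len(behavioral_cells), total_trials, eeg_meg_trials)
```

Collapsing over foil type doubles the number of trials available per sentence condition without changing the total.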

Comparisons of Interest

In this experiment, we sought to evaluate the relative importance of instantaneous comprehension and post hoc reanalysis for understanding reversible sentences. In previous fMRI work with these materials (Meltzer et al., 2010), we showed that reversible sentences induce greater hemodynamic responses than irreversible sentences throughout the perisylvian language cortex, while reversible complex sentences (RSO) induce still greater responses in prefrontal regions. As the hemodynamic response integrates neuronal activity over several seconds, the fMRI experiment does not necessarily distinguish activity related to hearing the sentence from holding it in working memory. The temporal dissociation of these effects into online and post hoc processes is the goal of the present EEG–MEG work. Therefore, rather than a full factorial analysis of all conditions, we focus on specific contrasts. For simplicity, we refer to the contrast (RSO–RSS) as relating to “syntactic complexity,” but strictly speaking, it is syntactic complexity only within the context of reversibility, as it does not include the irreversible trials. For the effect of reversibility, we analyzed [(RSO + RSS) − (ISO + ISS)] as the primary comparison of interest, for comparability with our previous fMRI study that directly contrasted these conditions. We also conducted comparisons including the simple active conditions as additional trials, which resulted in greater sensitivity.

Procedure

Subjects were seated in a padded chair inside a magnetically shielded room containing the MEG instrument. Auditory stimuli were delivered through pneumatic tubes ending in foam ear inserts, with the volume adjusted to the individual subject’s preference. Visual stimuli were projected on a screen approximately 0.5 m in front of the subject’s face. The paradigm is illustrated in Figure 1A. A fixation cross was presented at all times except during picture presentation. Each sentence was followed by a silent delay period of 3 s, during which the relevant information was held in working memory. Next, two pictures appeared on the screen on either side of the fixation cross, which disappeared. Subjects indicated their choice of picture by pressing the left or right button of a fiberoptic response box with the index or middle finger, respectively, of the right hand. Subjects were instructed to make their selection within the time that the pictures remained on the screen (4 s). Upon picture selection, a green box appeared around the selected picture to confirm that the selection was registered, but no feedback about accuracy was given. After each picture trial, a delay of 2.1–2.25 s occurred before the presentation of the next sentence. Subjects were instructed to refrain from head and facial movement, and were informed that minimal blinking during trials was preferred, although they were not asked to completely refrain from blinking. Trials were presented in seven runs of 36 trials each, and subjects were allowed to rest in between runs if desired. Individual main verbs were repeated an average of three times in the experimental session (for a total of six across the separate MEG and fMRI sessions). The different experimental conditions were randomly sorted within each run of the task, with six trials of each condition occurring within each run. This served to prevent any incidental confound from fatigue or repetition of lexical material across the experimental session.
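The run structure described above (seven runs of 36 trials, six trials per condition in each run, randomly ordered within the run) could be generated as in the following sketch. The function name, the seed handling, and the labels `RAC` and `IAC` are assumptions made for illustration; the actual presentation software and randomization procedure are not specified in the text.

```python
import random

CONDITIONS = ["RSS", "RSO", "RAC", "ISS", "ISO", "IAC"]  # RAC/IAC are hypothetical labels
N_RUNS = 7
TRIALS_PER_CONDITION_PER_RUN = 6

def build_runs(seed=0):
    """Return a list of runs, each a shuffled list of condition labels."""
    rng = random.Random(seed)
    runs = []
    for _run in range(N_RUNS):
        run = [
            condition
            for condition in CONDITIONS
            for _rep in range(TRIALS_PER_CONDITION_PER_RUN)
        ]
        rng.shuffle(run)  # random order within the run, balanced across conditions
        runs.append(run)
    return runs

runs = build_runs()
```

Balancing conditions within each run, rather than only across the session, is what keeps fatigue and repetition effects from aligning with any one condition.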

EEG–MEG Recording

Electroencephalography was recorded from 34 Ag–AgCl electrodes, fixed in an elastic cap in an extended 10–20 arrangement. Additional electrodes were placed on the left and right mastoid. Electrode Cz was placed halfway between the nasion and inion for each subject, and one of three caps was used depending on the individual’s head size. The skin under electrode sites was lightly abraded using NuPrep, and conductive gel was inserted between the electrode and the scalp. Scalp impedances were reduced below 5 kΩ. Head localization coils were placed at three fiducial points, the nasion and left and right preauricular points, for MEG head localization and coregistration with structural MRI. Electrode locations and fiducial points were measured with a 3D digitizer (Polhemus, Colchester, VT, USA). EEG was amplified and digitized along with the MEG data at 600 Hz, with an online 125 Hz lowpass filter. EEG recordings were referenced online to the left mastoid, and re-referenced offline to the average of the left and right mastoids. EEG data were downsampled offline to 200 Hz (100 Hz lowpass filter), and submitted to independent component analysis to remove effects of blinks and horizontal eye movements (Jung et al., 2000), using the EEGLAB toolbox (Delorme and Makeig, 2004). In each subject, either two or three components were removed, resulting in satisfactory suppression of ocular artifacts.
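The offline re-referencing step follows from simple arithmetic: if every channel, including the right mastoid, is recorded relative to the left mastoid, then subtracting half of the recorded right-mastoid signal yields the signal relative to the mean of the two mastoids. A minimal sketch of that identity is given below; the function name and the example values are illustrative and not taken from the study.

```python
def rereference_to_average_mastoids(channel, right_mastoid):
    """Re-reference one channel to the mean of the two mastoids.

    Both inputs are lists of samples recorded against the left mastoid:
        channel[i]       = V_ch[i] - V_LM[i]
        right_mastoid[i] = V_RM[i] - V_LM[i]
    The result is V_ch[i] - (V_LM[i] + V_RM[i]) / 2.
    """
    return [x - 0.5 * rm for x, rm in zip(channel, right_mastoid)]

# Illustrative potentials (arbitrary units) to check the identity.
v_ch, v_lm, v_rm = [10.0, 20.0], [2.0, 4.0], [6.0, 8.0]
recorded_ch = [c - l for c, l in zip(v_ch, v_lm)]
recorded_rm = [r - l for r, l in zip(v_rm, v_lm)]
rereferenced = rereference_to_average_mastoids(recorded_ch, recorded_rm)
direct = [c - (l + r) / 2 for c, l, r in zip(v_ch, v_lm, v_rm)]
```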

Magnetoencephalography was recorded with a CTF Omega 2000 system, comprising 273 first-order axial gradiometers. For environmental noise reduction, synthetic third-order gradiometer signals were obtained through adaptive subtraction of 33 reference channels located inside the MEG dewar far from the head. Head position was continuously monitored using the fiducial coils. For source analysis of MEG activity, each subject later underwent a structural MRI on a GE 3T system, a sagittal T1-weighted MPRAGE scan. MR-visible markers were placed at the fiducial points for accurate registration, aided by digital photographs.

EEG Spectral Analysis

We chose to conduct the sensor-level group analysis on the EEG data in order to select time and frequency windows for SAM analysis of the MEG data. Although the spatial sensitivity profiles of EEG and MEG differ somewhat, they are generated by the same underlying neural activity, and can be expected to yield similar results for the purposes of identifying time and frequency windows of significance. We chose the EEG for the sensor-level analysis rather than the MEG, as it is more convenient in several respects: consistent sensor placement across subjects, insensitivity to head position within the MEG helmet, much smaller number of channels (fewer multiple comparisons and computationally cheaper), and interpretable signal polarity. On the other hand, MEG is superior for source localization within individuals, which can then be subjected to group analysis. We capitalized on the strengths of both modalities by using the EEG group results to select the frequency range for the MEG beamforming analysis.

Power spectra of temporal epochs of interest were computed from EEG data using multi-taper Fourier analysis (Percival and Walden, 1993), averaged across trials within each electrode, subject, and condition, and log10-transformed to better approximate a normal distribution. Because we did not have a specific a priori hypothesis about the frequency band of the response, we used a cluster analysis approach that reveals statistically significant patterns of task reactivity while protecting against type I error (Maris, 2004; Maris and Oostenveld, 2007). Cluster analysis routines were implemented in the Fieldtrip toolbox², using the approach outlined in Maris and Oostenveld (2007). Briefly, a paired t-test comparing two conditions was conducted at each electrode at each time (or frequency) point, and t-values exceeding a threshold of p < 0.05 were clustered based on adjacent time (frequency) bins and neighboring electrodes (distance < 6 cm). Cluster-based corrected p-values were produced by randomly permuting the assignment of individual subjects’ values to the two conditions 1000 times, and counting the number of permutations in which larger clusters (defined by the total t-values of all timepoints and electrodes exceeding p < 0.05) were obtained than those in the correct assignment of conditions. Note that this procedure tests for differences in the total spectral power between conditions; no attempt is made to distinguish between “evoked” power timelocked to the stimulus and “induced” power that is not timelocked (Klimesch et al., 1998). Given the relatively long time windows used in this experiment (>1 s) and the imprecise time locking of auditory language stimuli, it is likely that “evoked” responses play a relatively minor role in the activity studied here.
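The logic of the cluster-based permutation test can be illustrated with a deliberately simplified sketch. It is not the Fieldtrip implementation: it clusters only over adjacent bins of a single electrode (no electrode neighborhood), it counts permutations with cluster mass greater than or equal to the observed value (slightly more conservative than a strict "larger" count), and the hard-coded critical value assumes 24 subjects (two-tailed p < 0.05, 23 degrees of freedom). All function names are hypothetical.

```python
import math
import random

T_CRIT_DF23 = 2.069  # two-tailed p < 0.05 for 23 degrees of freedom (assumes 24 subjects)

def paired_t(diffs):
    """t-value of paired differences (condition A minus condition B)."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0:
        return 0.0 if mean == 0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)

def max_cluster_mass(t_values, t_crit):
    """Largest summed |t| over runs of adjacent supra-threshold bins of one sign."""
    best = mass = 0.0
    prev_sign = 0
    for t in t_values:
        sign = (t > t_crit) - (t < -t_crit)
        if sign == 0:
            mass = 0.0
        elif sign == prev_sign:
            mass += abs(t)
        else:
            mass = abs(t)
        prev_sign = sign
        best = max(best, mass)
    return best

def cluster_permutation_p(diffs_by_bin, t_crit, n_perm=1000, seed=0):
    """diffs_by_bin[b][s] is the paired difference for bin b and subject s."""
    rng = random.Random(seed)
    n_subj = len(diffs_by_bin[0])
    observed = max_cluster_mass([paired_t(d) for d in diffs_by_bin], t_crit)
    exceed = 0
    for _perm in range(n_perm):
        # Swapping the condition labels of a subject flips the sign of that
        # subject's difference in every bin.
        signs = [rng.choice((-1, 1)) for _subj in range(n_subj)]
        perm_t = [
            paired_t([s * d for s, d in zip(signs, bin_diffs)])
            for bin_diffs in diffs_by_bin
        ]
        if max_cluster_mass(perm_t, t_crit) >= observed:
            exceed += 1
    return observed, exceed / n_perm
```

Because only the largest cluster statistic of each permutation enters the null distribution, a single comparison is made per test, which is how the procedure controls the family-wise error rate over many bins.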

Synthetic Aperture Magnetometry

Guided by the results of the cluster analysis of the simultaneously recorded EEG data, we used the SAM beamformer technique (Van Veen et al., 1997; Sekihara et al., 2001; Vrba and Robinson, 2001) implemented in CTF software (CTF, Port Coquitlam, BC, Canada) to map changes in oscillatory power in the brain associated with reversibility and complexity in sentence comprehension. A constant frequency band of 8–30 Hz was chosen to encompass all the significant effects seen in the cluster analysis. All comparisons of primary experimental interest were between identical time windows from different conditions, although we also compared averaged activity across all conditions with a pre-stimulus baseline in order to demonstrate the extent of non-specific activity induced by the task independent of the specific sentence conditions. At a regular grid of locations spaced 7 mm apart throughout the brain, we computed the pseudo t-statistic, which is a normalized measure of the difference in signal power between two time windows (Vrba and Robinson, 2001). Due to this “dual-state” analysis approach, multi-subject statistical maps were derived from subtractive contrast images computed on the single-subject level, not from individual conditions. Maps of pseudo t-values throughout the brain were spatially normalized to Talairach space via a non-linear grid-based coregistration routine (Papademetris et al., 2004) applied to the T1-weighted MRI, implemented in the software package bioimagesuite³.
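For orientation, one common formulation of the dual-state pseudo t-statistic in the SAM literature divides the difference in beamformer-estimated source power between the two states by the sum of the projected sensor noise power in those states. The sketch below assumes that formulation; the exact normalization applied by the CTF software is not stated in the text, and the function and argument names are hypothetical.

```python
def pseudo_t(power_active, power_control, noise_active, noise_control):
    """Dual-state pseudo t-value at one grid location (assumed formulation).

    power_*: beamformer-estimated source power in each time window
    noise_*: projected sensor noise power in each time window
    """
    return (power_active - power_control) / (noise_active + noise_control)

# A negative value indicates lower 8-30 Hz power in the first state.
example = pseudo_t(3.0, 5.0, 0.5, 0.5)
```

Under this convention, the power decreases reported for reversible relative to irreversible sentences would appear as negative pseudo t-values in the contrast map.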

Group statistics on SAM results were computed in a fashion similar to that customary in fMRI studies. For each experimental comparison, the spatially normalized whole-brain map of pseudo t-values was submitted to a voxel-wise one-sample t-test across subjects. To correct for multiple comparisons across the whole brain, resulting statistical maps were thresholded at a voxel-wise level of p < 0.001 and a cluster size criterion of 64 voxels, resulting in a corrected p-value of p < 0.05. The cluster size criterion was determined by Monte Carlo simulations conducted in the AFNI program Alphasim, using an estimated spatial smoothness value of 8 mm FWHM. This value was derived by computing a “null” SAM map comparing the pre-stimulus period between two different sentence conditions. Two such null maps agreed on a smoothness value of approximately 8 mm.

For purposes of display, we also calculated timecourses of power changes in virtual signals extracted from regions of interest using the SAM beamformer. Regions were specified in Talairach space on the basis of the prior fMRI study and transformed to each individual subject’s analysis. Virtual signals were filtered at 1–100 Hz, and beamformer weights for this analysis were computed from the entire trial period of all six conditions. Virtual signals from each trial were submitted to time–frequency analysis using a short-time Fourier transform (256 point overlapping Hanning windows, 200 windows per trial). Time–frequency transforms were averaged across trials within condition, normalized to percent change, and averaged across subjects.
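The percent-change normalization of the time–frequency transforms can be sketched as follows. The reference interval is an assumption here: the text does not state which windows served as the baseline, so the sketch takes the indices of the baseline windows as an argument. The function name is hypothetical.

```python
def percent_change(power, baseline_indices):
    """Express trial-averaged power as percent change from a baseline.

    power[f][t]: power for frequency bin f at time window t
    baseline_indices: time-window indices treated as baseline (assumed, e.g. pre-stimulus)
    """
    normalized = []
    for row in power:
        base = sum(row[i] for i in baseline_indices) / len(baseline_indices)
        normalized.append([100.0 * (p - base) / base for p in row])
    return normalized

# One frequency bin, four time windows; the first two windows form the baseline.
example = percent_change([[2.0, 2.0, 1.0, 3.0]], baseline_indices=[0, 1])
```

Normalizing each frequency bin separately compensates for the steep fall-off of raw power with frequency, so that alpha and beta changes are displayed on a comparable scale.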

Results

Behavioral

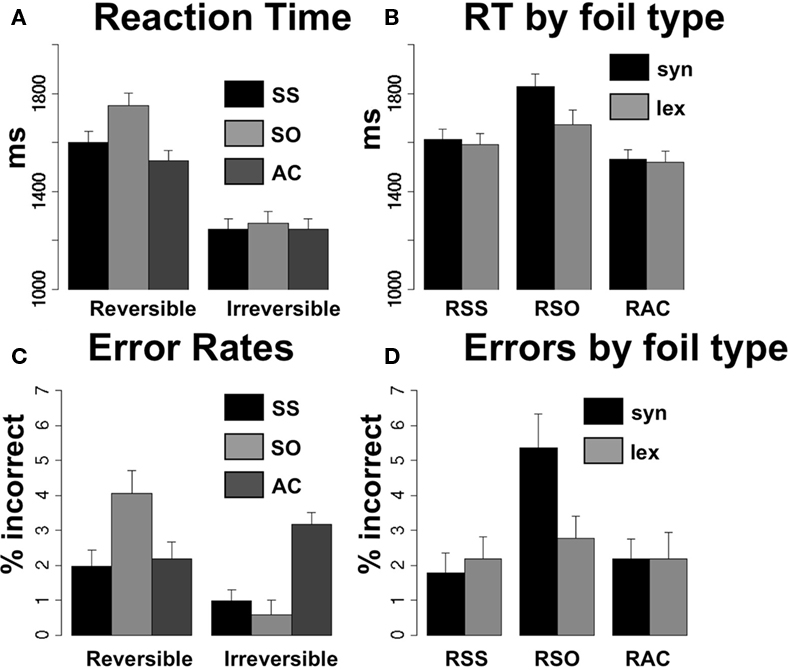

Because the behavioral data from this study were relevant to the fMRI study conducted in the same subjects, they have been reported before in partial form (Meltzer et al., 2010). Reaction time to picture pair onsets is presented in Figure 2A. RTs were averaged within subject for each condition and submitted to a 2 × 3 repeated measures ANOVA, with reversibility (R = reversible and I = irreversible), and syntactic structure (SS = subject-embedded, SO = object-embedded, and AC = simple active) as within-subject factors. Additionally, reaction times were also submitted to item analysis, treating the individual sentence-picture pairs as the random factor, averaging each item across subjects (Clark, 1973). The subject analysis results are referred to as F1 and the item analysis results as F2. There was a main effect of reversibility [F1(1,23) = 560.61, p < 0.0001, F2(1,372) = 132.84, p < 0.0001], indicating that subjects were slower to respond to the reversible pictures involving two people than to the irreversible pictures involving one person and an object, regardless of the grammatical structure of the sentence. There was also a main effect of syntactic structure [F1(2,46) = 40.33, p < 0.0001, F2(2,372) = 6.30, p = 0.002], owing to the fact that the sentences with the non-canonical SO order induced the longest reaction times for both reversible and irreversible scenarios. However, the effect of the non-canonical order was much more pronounced in the reversible sentences, as evidenced by a significant interaction effect between reversibility and syntactic structure [F1(2,46) = 34.49, p < 0.0001, F2(2,372) = 5.33, p = 0.005].
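The logic of the by-subject (F1) and by-item (F2) analyses can be illustrated with a deliberately simplified Python sketch. It considers only the two-level reversibility factor, collapsing across syntactic structure, so it will not reproduce the statistics reported above. It relies on the fact that, for a single two-level factor, the F statistic equals the square of the corresponding t statistic. The function name `f1_f2_two_level` and the data layout are hypothetical.

```python
import numpy as np
from scipy import stats

def f1_f2_two_level(rt, item_is_reversible):
    """By-subject (F1) and by-item (F2) tests for one two-level factor.

    rt: (subjects, items) reaction times.
    item_is_reversible: boolean flag per item.
    """
    rev = np.asarray(item_is_reversible, dtype=bool)
    # F1: average over items within each subject; subjects are the random
    # factor and reversibility varies within subject (paired test).
    subj_rev = rt[:, rev].mean(axis=1)
    subj_irr = rt[:, ~rev].mean(axis=1)
    t1 = stats.ttest_rel(subj_rev, subj_irr).statistic
    # F2: average over subjects within each item; items are the random
    # factor and each item belongs to one level only (independent test).
    item_means = rt.mean(axis=0)
    t2 = stats.ttest_ind(item_means[rev], item_means[~rev]).statistic
    return t1 ** 2, t2 ** 2
```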

Figure 2. Behavioral results. (A) Reaction time across the six sentence types, collapsed across foil type. (B) Reaction time for reversible sentences further divided by foil type, syntactic or lexical. (C) Error rate (% incorrect or no response) across sentence conditions. (D) Error rates for reversible sentences, further divided by foil type.

Reaction time may be influenced both by aspects of the auditory sentence and the pictures. One important variable for reversible sentences is foil type, as trials with syntactic foils (agent and patient reversed) may require more demanding reanalysis of the sentence compared with lexical foils (showing a person not mentioned in the sentence). Because the foil types were different for reversible and irreversible sentences, we did not include foil type as a factor in the general ANOVA. Reaction times from reversible sentences only were averaged within subjects and submitted to a 2 × 3 repeated measures ANOVA, with factors for foil type (syntactic or lexical) and syntactic structure (SS, SO, AC). A main effect of foil type [F1(1,23) = 8.57, p = 0.007] indicated that syntactic foils consistently induced larger reaction times for all three syntactic structures. A main effect of syntactic structure [F1(2,46) = 56.65, p < 0.0001] indicated that SO sentences were associated with the largest reaction times regardless of foil type, as even the reaction time for the SO with lexical foil condition was longer than all SS and AC conditions. Nonetheless, the effect of syntactic foils was particularly pronounced for the SO sentences, as evidenced by an interaction effect between foil type and syntactic structure [F1(2,46) = 7.39, p = 0.002]. Inspection of the bar graph (Figure 2B) shows that the long reaction time associated with syntactic foils in the RSO condition is longer than what would be expected from linear summation of independent effects of foil type and syntactic structure. Thus, the RSO sentence structure, when combined with a syntactic foil in a forced-choice picture-matching paradigm, induces an extra processing cost, most likely due to reanalysis of the sentence to determine which named actor is the agent of the action.
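The idea of a cost beyond the linear summation of two independent effects corresponds to a difference-of-differences contrast. The Python sketch below tests such a contrast for two of the three syntactic structures only (SO vs. SS), which is a simplification of the full 2 × 3 interaction reported above; the function name `interaction_contrast` and its arguments are hypothetical.

```python
import numpy as np
from scipy import stats

def interaction_contrast(rt_so_syn, rt_so_lex, rt_ss_syn, rt_ss_lex):
    """Test whether the foil effect is larger for SO than for SS sentences.

    Each argument holds one mean reaction time per subject for that cell.
    Under purely additive effects the contrast has an expected value of zero.
    """
    contrast = (rt_so_syn - rt_so_lex) - (rt_ss_syn - rt_ss_lex)
    result = stats.ttest_1samp(contrast, 0.0)
    return contrast.mean(), result.statistic, result.pvalue
```

A reliably positive contrast would indicate that syntactic foils add more time for SO sentences than for SS sentences, over and above the separate effects of foil type and structure.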

Accuracy in picture selection patterned similarly with reaction time. Error rates for all sentence conditions are shown in Figure 2C, while error rates for reversible sentences further subdivided by foil type are shown in Figure 2D. A 2 × 3 repeated measures ANOVA for error rates in all sentence conditions showed a main effect of reversibility [F1(1,23) = 13.228, p = 0.001], and an interaction between reversibility and syntactic structure [F1(2,46) = 38.76, p < 0.0001], but no main effect of syntactic structure [F1(2,46) = 2.199, p = 0.123]. A 2 × 3 repeated measures ANOVA for effects of syntactic structure and foil type on error rates within reversible sentences alone revealed a main effect of syntactic structure [F1(2,46) = 6.310, p = 0.004], but not of foil type [F1(1,23) = 2.76, p = 0.11]. An interaction between syntactic structure and foil type was not quite statistically significant [F1(2,46) = 2.66, p = 0.08], but the relatively high error rate for RSO sentences with syntactic foils (∼5%) exceeds the error rate for all other conditions, reflecting the particular difficulty of this condition, as seen also in the reaction time data. Note, however, that differences in error rates are rather small in magnitude, given the ceiling effect on performance and the limited number of trials in each condition. For all electrophysiological comparisons, data were analyzed from time periods prior to picture presentation, resulting in 42 trials per condition. For the analysis of errors by foil type, conducted on responses to pictures, conditions were further divided, yielding 21 trials per condition. Thus, an error rate of 5% on the RSO sentences with syntactic foils represents an average of only one error during the whole experiment, reflecting the fact that overall performance on this task is very good in healthy subjects. The higher error rate seen on IAC trials also reflects an average of one additional error per experiment, and is unlikely to be meaningful.

EEG Spectral Analysis

A major goal of this study was to localize modulations of oscillatory brain activity associated with sentence processing, using the beamforming technique SAM. Because that technique involves computing a virtual signal at each location of interest (typically a regular grid covering the entire brain), the analysis of a five-dimensional data set (three spatial dimensions, time, and frequency) becomes computationally infeasible. This problem is usually addressed by choosing time and frequency windows in advance, and mapping the spatial distribution of power changes in the chosen windows. The time windows of interest in this study were defined by the trial structure. The second segment of the sentence, comprising the relative clause, contains the syntactically challenging structure. However, comparisons of reversibility may not be relevant for this sentence portion, as the reversibility of the sentence is not apparent until the end of this segment, when both nouns have been mentioned. We were particularly interested in the third segment of the sentence, because at this point the different sentence conditions have become fully differentiated from each other, but subjects are still processing the sentence as it continues to unfold. Additionally, we were interested in activity following the sentence, during the memory delay, which may be related to late reanalysis of difficult sentence structures. Rather than choosing a frequency band for SAM a priori, we chose to first use cluster analysis to examine the modulation of spectral power in the EEG data, in order to determine which frequencies were sensitive to the different sentence conditions employed in this study. In this way, we hoped to avoid combining two frequency bands that may react in opposite ways. For example, many studies have shown that increased cognitive demands may induce increases in the theta band (4–8 Hz) and gamma band (35+ Hz) but decreases in the alpha (8–12 Hz) and beta (15–25 Hz) bands (Brookes et al., 2005; Meltzer et al., 2008). Therefore, combining across these bands in SAM may cancel effects of interest.
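The cancellation concern can be made concrete with a toy Python example. The spectra below are invented values chosen only to show the arithmetic, not data from this study, and the function name `band_change` is hypothetical.

```python
import numpy as np

def band_change(freqs, power_hard, power_easy, lo, hi):
    """Percent change in summed power within the band [lo, hi) Hz."""
    sel = (freqs >= lo) & (freqs < hi)
    easy = power_easy[sel].sum()
    return 100.0 * (power_hard[sel].sum() - easy) / easy

# Toy spectra (assumed values): a flat spectrum in the easy condition, with a
# 20% theta increase and a 20% alpha decrease in the hard condition.
freqs = np.arange(4, 12)
power_easy = np.ones(freqs.size)
power_hard = np.where(freqs < 8, 1.2, 0.8)

theta = band_change(freqs, power_hard, power_easy, 4, 8)
alpha = band_change(freqs, power_hard, power_easy, 8, 12)
combined = band_change(freqs, power_hard, power_easy, 4, 12)
print(theta, alpha, combined)
```

With these toy values the two bands change by about 20% in opposite directions, while the combined 4–12 Hz window shows essentially no change, which is the situation the band selection procedure was designed to avoid.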

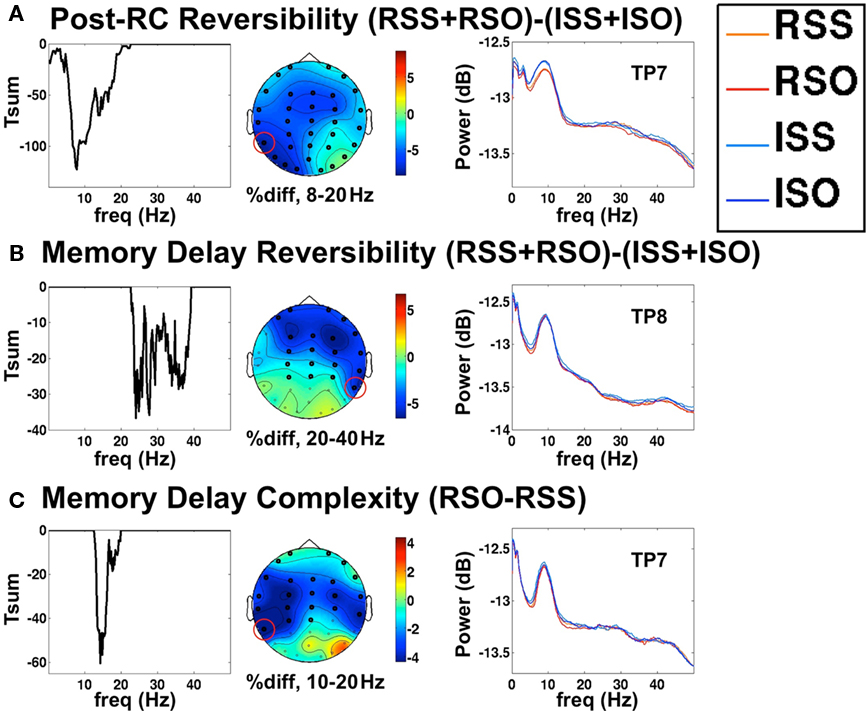

This concern proved to be unfounded, as we invariably found that all significant modulations of spectral power consisted of power decreases in the more challenging condition, in a relatively wide range of frequencies. Significant effects detected in cluster analysis are displayed in Figure 3.

Figure 3. Clusters of EEG oscillatory effects. Here, the left panel depicts the sum of t-values at each frequency point, rather than time point. The middle panel is the approximate topography of the effect (see text) in the indicated frequency range, and the right panel is the power spectra at a selected electrode. (A) Decrease in oscillatory power in the post-RC period following reversible vs. irreversible relative clauses. (B) Decrease in power during the post-sentence memory delay for sentences containing reversible vs. irreversible relative clauses. (C) Power decrease during the post-sentence memory delay following sentences containing reversible object-embedded vs. subject-embedded relative clauses.

Despite the presence of the syntactically challenging relative clause in the second sentence segment, we found no significant effects of either syntactic complexity or semantic reversibility in that time period, either in the EEG cluster analysis or in SAM (see below). Rather, all significant effects occurred during the post-RC segment and the memory delay. The comparison of reversible vs. irreversible post-RC sentence segments (RSS + RSO) − (ISS + ISO), shown in Figure 3A, revealed a widespread cluster of power decreases covering the alpha and beta bands. The topography of the effect was quite distributed, as the cluster included every single electrode, although the magnitude of the effect appeared somewhat left lateralized. Note, however, that topographies of spectral effects are normalized to percentage change, making them dependent on baseline power levels, and are therefore less informative about the underlying generators than are the voltage maps used in ERP topographic maps. For localization of spectral changes, we used SAM analysis, as discussed below. In contrast to the large effects of reversibility, no significant cluster was detected in this time window for the syntactic complexity comparison (RSO–RSS). We also tested the third sentence segment of all three reversible conditions against all three irreversible conditions (RSS + RSO + RAC) − (ISS + ISO + IAC). This gave results very similar to those of the analysis restricted to sentences containing relative clauses, but included an even broader range of frequencies extending up to 50 Hz, the maximum included in the analysis (data not shown).

Similar effects were found during the memory delay following the sentence. The reversibility contrast (RSS + RSO) − (ISS + ISO), shown in Figure 3B, revealed that oscillatory power continued to be depressed following reversible sentences, although statistical significance was achieved in a somewhat higher frequency range. Again, the comparison including all three sentence structures (RSS + RSO + RAC) − (ISS + ISO + IAC) gave qualitatively similar but stronger results, in a broader frequency range (data not shown). While no effect of syntactic structure was seen for the contrast (RSO–RSS) during the sentence, we did detect a difference during the memory delay period, shown in Figure 3C. Power between 10 and 20 Hz was significantly decreased following RSO sentences, predominantly at bilateral frontal electrodes.

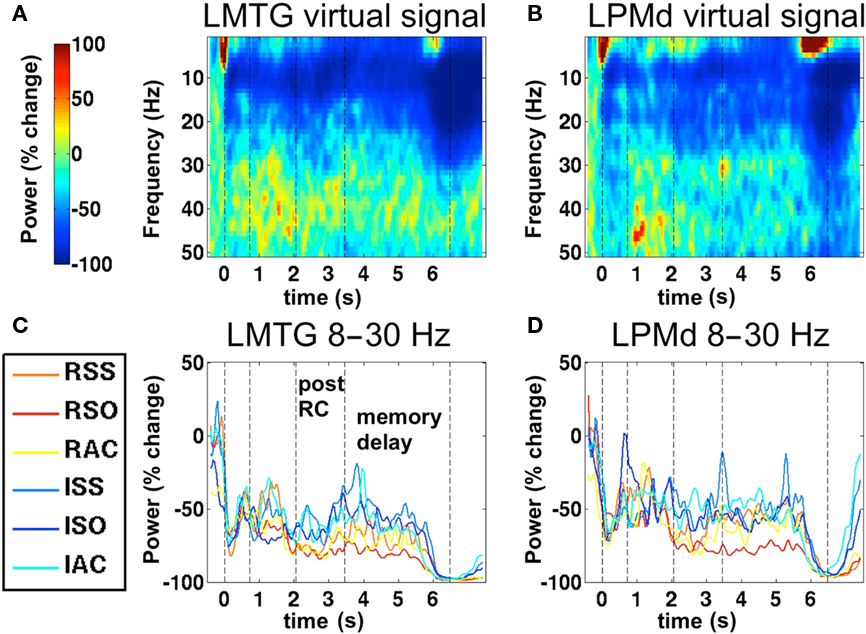

We note that the frequency ranges of significant effects resulting from these cluster analyses represent ranges of adjacent frequency points at which a statistical threshold was passed, allowing for valid inference on the cluster level. However, this does not mean that power modulations were limited to these frequencies. Close inspection of power spectra such as those shown in the right-most column of Figure 3 indicates that differences between conditions tended to extend across a broad portion of the frequency spectrum, despite being quite small at each individual frequency point. We did not observe any striking dissociation between different frequency bands. Therefore, on the basis of these results, we chose to use a consistent frequency range of 8–30 Hz for SAM analysis of the MEG data, as the EEG spectral analysis demonstrated that this range is sufficient to capture differences between conditions without canceling effects of opposite directionality across the frequency spectrum. Furthermore, this frequency range corresponds roughly to the traditional alpha and beta bands, without extending into the gamma band, which frequently exhibits quite different properties. Time–frequency analysis of virtual signals extracted from MEG data using SAM also implicated the 8–30 Hz range as maximally reactive in this task paradigm (see Figure 5, and results below). As an additional check, we also conducted SAM analyses in the traditional alpha (8–12 Hz) and beta (15–25 Hz) bands, but the results were very similar to each other and to the combined 8–30 Hz results (data not shown).
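The notion of a cluster as a run of adjacent frequency points passing a threshold can be sketched in Python. The sketch assumes a one-sided threshold on t-values for power decreases and only forms the clusters and their summed t-values; the cluster-level inference itself (for example, comparing each sum against a permutation distribution) is not shown. The function name `frequency_clusters` is hypothetical.

```python
import numpy as np

def frequency_clusters(t_values, freqs, t_crit):
    """Find runs of adjacent frequency points with t below -t_crit.

    Returns a list of (first_freq, last_freq, summed_t) tuples, one per run.
    """
    t = np.asarray(t_values, dtype=float)
    below = t < -t_crit
    clusters = []
    start = None
    for i, flag in enumerate(below):
        if flag and start is None:
            start = i  # a new run begins here
        run_ends = start is not None and (not flag or i == len(below) - 1)
        if run_ends:
            end = i if flag else i - 1
            clusters.append((freqs[start], freqs[end], float(t[start:end + 1].sum())))
            start = None
    return clusters
```

Because only the points passing the threshold enter a cluster, the reported frequency limits mark where the threshold was crossed rather than where the underlying power modulation begins or ends.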

SAM Localization of Oscillatory Changes

Reversibility

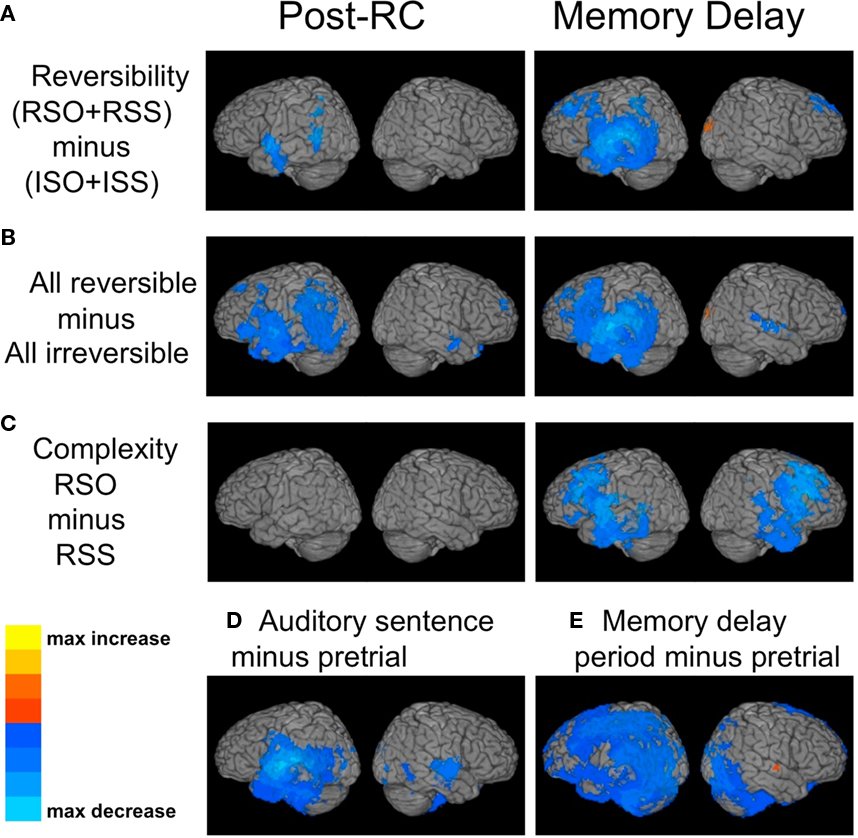

Synthetic aperture magnetometry was applied in a frequency window of 8–30 Hz. In all cases, the comparison of a more challenging condition with an easier one resulted in power decreases. Therefore, we will refer to these power decreases as “activation,” in keeping with common usage, and reflecting the fact that oscillatory decreases in these bands have previously been shown to have good spatial correspondence with positive BOLD activation in fMRI. Power decreases are mapped in a blue color scale on the surface of a standard reference brain in Figure 4. Figure 4A shows the reversibility contrast (RSO + RSS) − (ISO + ISS). This comparison produced left lateralized activation in classical language regions, including the left inferior frontal gyrus, anterior temporal gyrus, posterior middle temporal gyrus (MTG), superior temporal gyrus (STG), and supramarginal gyrus. These activations began during the post-RC portion of the sentence (left column) and expanded during the memory delay after the sentence (right column) to encompass the entire left STG, as well as premotor and dorsolateral prefrontal cortex. Analyses were also conducted during the second sentence segment (the relative clause), but no significant effects of either reversibility or syntactic complexity were detected.

Figure 4. Synthetic aperture magnetometry maps of power changes in the 8–30 Hz frequency range. (A) Power changes following the relative clause for reversible vs. irreversible sentences, during the post-RC portion of the sentence (left panel), and during the post-sentence memory delay (right panel). (B) Same as part (A), except that the simple active conditions are also included in the comparison, not just the sentences containing relative clauses. (C) Power changes following reversible object-embedded vs. subject-embedded relative clauses. (D) Non-specific changes induced by all sentence conditions on average, comparing 1 s timelocked to Trigger 2 (see Figure 1B) with 1 s of pre-stimulus time prior to sentence onset. (E) Non-specific changes induced by working memory for sentence content, comparing 1 s from the middle of the memory delay with 1 s of pre-stimulus time prior to sentence onset.

As with the EEG spectral analysis, effects of reversibility were stronger when the additional sentence condition of simple actives was also included (Figure 4B). Here, reversible sentences induce selective activation in most of classical perisylvian cortex (left column), and this activity persists throughout the delay period (right column).

Syntactic complexity

The results of SAM analysis of MEG data were consistent with the EEG spectral analysis, showing significant effects for syntactic complexity only during the memory delay period. During the period of sentence presentation, SAM analysis of the 8–30 Hz range revealed no significant clusters anywhere in the brain for the contrast RSO–RSS (Figure 4C, left column). During the memory delay period, however, widespread bilateral frontal activation was seen (Figure 4C, right column), including portions of inferior frontal gyrus, premotor and dorsolateral prefrontal cortex, and the anterior temporal lobe.

Non-specific activity

Although the primary focus of this experiment was on a comparison of brain activation between different categories of sentences, based on the factors of reversibility and complexity, it was also of interest to examine “non-specific” activity, i.e., activations induced by the sentences, memory delay, and picture presentation in general, regardless of condition. Here again, all comparisons shown are in the 8–30 Hz frequency range. To map general effects induced by auditory speech presentation, we compared a 1-s pre-stimulus interval of silence with 1 s of sentence presentation, timelocked to the onset of the relative clause (thus avoiding effects specific to the beginning of a sentence). As expected, this produced bilateral activation of primary auditory cortex in STG (Figure 4D), but also more widespread activation in the left temporal lobe, most likely reflecting general processes of language comprehension.

To examine activity associated with holding the contents of a sentence in working memory, we also compared a 1-s silent pre-stimulus interval with 1 s extracted from the middle of the memory delay that occurred between the sentence and the pictures. Note that in both time windows, the subject is silently viewing a fixation cross, but in the memory delay the subject is engaged in a complex cognitive task, preparing to respond to the pictures. This comparison resulted in very widespread activity in frontal, parietal, and temporal cortex, but was strongly left lateralized (Figure 4E). In fact, in the right hemisphere a small but significant power increase was observed in the right STG. This effect was consistently seen in single subjects, but variability in its location resulted in only a small cluster achieving significance on the group level.

Timecourse of Virtual Channel Signals

To better appreciate the temporal dissociation between initial sentence comprehension and post hoc processes occurring during the memory delay, it is useful to view the average time–frequency transforms computed from virtual signal timecourses. Therefore, we extracted virtual signals from two brain regions active in the task and conducted time–frequency analysis for display purposes. We present the temporal evolution of power in the 8–30 Hz band from two regions. These are timelocked only to the start of the sentence, not the individual phrase boundaries, but still show the temporal nature of the effects relative to the average position of the phrase boundaries. These are presented for illustrative purposes only; the SAM statistical maps were based on activity precisely timelocked to the phrase boundaries. Broadband time–frequency decompositions (averaged across all conditions) in both regions are displayed in Figures 5A,B. These figures confirm the conclusion derived from the EEG cluster analysis, that the main response in this task takes the form of a power decrease in the alpha and beta bands, approximately 8–30 Hz. The dotted vertical lines indicate the average position of the digital triggers across trials. In the bottom half of the figure, we present the timecourse of power in this range, in each individual condition. In all conditions, oscillatory power is strongly suppressed by sentence presentation relative to the pre-stimulus baseline, and remains suppressed throughout the memory delay, dropping even further upon picture presentation. In the left middle temporal gyrus (Figure 5C), power diverges between the reversible and irreversible conditions during the post-RC portion of the sentence, and remains suppressed to a greater degree by reversible sentences throughout the delay period. In the left dorsal premotor region (Figure 5D), power diverges during the post-RC period between reversible and irreversible sentences. However, during the memory delay, power in the RSO condition remains especially suppressed relative to all other conditions.

Figure 5. Time–frequency decomposition of SAM virtual signals. (A) Time–frequency decomposition of virtual signals averaged across conditions, timelocked to the start of the sentence, extracted from left middle temporal gyrus (LMTG, Talairach coordinates −55, −52, +11). Vertical dashed lines indicate the average positions of the four digital triggers illustrated in Figure 1B. (B) Time–frequency decomposition of virtual signal extracted from left dorsal premotor cortex (LPMD, Tal. −44, −2, +45). (C) Average timecourse of power in the 8–30 Hz band, in individual conditions, from the virtual signal in LMTG. (D) Average timecourse from virtual signals in LPMD.

Discussion

We employed EEG–MEG to investigate the timecourse of syntactic comprehension, measuring effects of semantic reversibility and syntactic complexity. The combination of these two factors is known to induce comprehension failure in agrammatic aphasics (Caramazza and Zurif, 1976), and in healthy adults under adverse conditions (Dick et al., 2001), but opinions vary on whether the difficulty relates to a failure of automatic syntactic parsing or instead to enhanced working memory demands in reversible complex sentences. Caplan and Waters (1999) distinguish between interpretive and post-interpretive processing, with the latter referring to task-dependent processes extending far beyond the time when a sentence is heard or read. Thus far, few neurocognitive studies have attempted to draw a temporal dissociation between these stages.

In order to distinguish between these temporal stages, we examined the effects of syntactic complexity and semantic reversibility on the brain’s electromagnetic responses in an auditory sentence-picture-matching task occurring in several time windows, including a relative clause, post-relative clause sentence material, and crucially, a 3-s delay period prior to picture onset. Effects of sentence content occurring during the subsequent delay period can be unambiguously attributed to post-interpretive reanalysis and working memory.

The behavioral data in this experiment indicated that the sentence-picture-matching task is more difficult for reversible sentences, and also that object-embedded clauses within reversible sentences pose a special challenge to comprehension beyond reversibility alone. Through analysis of oscillatory activity, we found that reversibility modulates the brain’s response to language input both during and after the sentence, suggesting that the need to explicitly consider syntactic information induces stronger neural activity during online comprehension and also during working memory for sentence content. In contrast, syntactic complexity in the form of object-embedded clauses modulated oscillatory activity only during the post-sentence delay period, suggesting that enhanced brain responses to these sentences are attributable to processes of post hoc reanalysis rather than online comprehension.

Reversible sentences induced a decrease in spectral power compared to irreversible sentences, beginning during the sentence and persisting throughout the delay period, in a broad frequency range including alpha and beta bands. Previous comparisons of MEG and fMRI have suggested that power decreases in alpha and beta bands correspond closely to fMRI activation and are reliably associated with increased neural activity (Brookes et al., 2005; Kim and Chung, 2008). Although the mechanisms of oscillatory desynchronization are not well understood (for discussion, see Neuper and Pfurtscheller, 2001; Jones et al., 2009), it is generally assumed that task-related neural activity interferes with ongoing “idling” rhythms in cortex, resulting in desynchronization of oscillatory activity as detected at the scalp. Here, we use SAM to map 8–30 Hz desynchronization as an indicator of enhanced neuronal activity in specific time periods. Our results demonstrate the utility of desynchronization in the alpha and beta bands as a brain mapping modality for language experiments. In our experiment, we did not detect a robust increase in gamma activity (>35 Hz) accompanying the alpha and beta decreases, as has sometimes been reported (e.g. Brookes et al., 2005). Although gamma oscillations were evoked by linguistic stimulation (Figures 5A,B), they did not significantly distinguish between conditions. Comparisons of intracranial and non-invasive recordings have suggested that gamma oscillations are coherent over a smaller spatial extent, providing superior localization of activity, but are present at a much smaller signal-to-noise ratio at the surface, limiting their utility in non-invasive experiments (Crone et al., 2006). Furthermore, gamma activity is frequently observed most strongly in occipital regions under conditions of visual stimulation (e.g. Brookes et al., 2005; Meltzer et al., 2008), but may not be as general an indicator of neural activity in other paradigms as is low-frequency desynchronization.

Synthetic aperture magnetometry analyses suggested that online comprehension of reversible sentences recruits enhanced activity in core regions of receptive language function, including the anterior temporal lobe, posterior superior temporal lobe, and the temporoparietal junction. This effect is consistent with models of language comprehension that consider full syntactic parsing to be an optional process employed as needed (Ferreira et al., 2002; Sanford and Sturt, 2002), thus leading to increased activation in language areas when semantic reversibility demands explicit consideration of word order information to derive the correct meaning of the sentence. Enhanced power decrease in the 8–30 Hz band during the delay period following reversible sentences occurred in regions overlapping with those seen during the sentence, but with a more frontal distribution, recruiting regions of left inferior frontal gyrus and extending up into the dorsal premotor cortex. In fMRI, we observed enhanced activation to reversible sentences in all of these regions (Meltzer et al., 2010). The present results suggest that the enhanced activity in frontal cortex occurs later than the activity in posterior regions, despite the fact that the temporal difference is not large enough to be dissociable in fMRI. Such a conclusion is also bolstered by the analyses of “non-specific” activity combined across all conditions, which showed that speech comprehension produced activation in bilateral auditory cortex and in widespread portions of the left temporal lobe (Figure 4D), whereas the working memory delay period produced a much wider left lateralized pattern of activation in posterior temporal lobe and large portions of frontal and parietal cortex (Figure 4E).

While the manipulation of syntactic complexity (RSO–RSS) featured sentences that were exactly matched for the semantic and lexical content of the post-RC sentence segment that followed the critical relative clause, a limitation of the present design is that the reversible and irreversible sentences involved different main clause verbs and different action scenarios. Therefore, it is possible that part of the difference between these conditions may be attributable to semantic factors beyond syntactic parsing. Reversible sentences involve social or physical interactions between two human beings, and therefore may engage cognitive mechanisms related to such factors rather than purely linguistic processing. However, we believe that the contribution of such factors cannot fully explain the present findings, on the basis of our previous fMRI results with the same materials (Meltzer et al., 2010). In that study, we also compared the brain’s responses to the pictures following reversible vs. irreversible sentences, finding increased activity for reversible pictures only in bilateral posterior middle temporal regions. In contrast, increased activity for reversible sentences occurred throughout the left perisylvian areas, as seen again in the present MEG study. We would expect that activity related to the general semantic content of the reversible scenarios would be induced by the pictures depicting the actions as well, and therefore we conclude that such factors cannot fully explain the perisylvian activations induced by reversible sentences in the context of a task that demands a thematic role judgment.

Another concern related to the task design is that subjects had to be prepared to make the more difficult syntactic discriminations for the reversible sentences, whereas they may have caught on that no such decision was required for the irreversible sentences, which were tested using only lexical foils. This may have resulted in increased attention and arousal for the reversible sentences, contributing to greater brain activity during and following these sentences. However, our results are unlikely to be driven by general arousal, given that the increased activation to reversible sentences was almost completely left lateralized and localized to perisylvian language areas. This argues that the increased attention induced by reversible sentences is rather specific to the linguistic processing demands of the task, rather than a more general modulation of neural excitability.

The pattern of selective activations observed for the most difficult sentences, the non-canonical RSO condition (contrasted with RSS), was clearly dissociable from activation related to reversibility alone. We observed selective activity to RSO sentences only during the post-sentence delay period. This activity occurred in anterior temporal and frontal regions, and was remarkably symmetric bilaterally. The finding of enhanced activity in bilateral frontal regions is consistent with patterns of activation in these regions observed in fMRI studies of verbal working memory load (Rypma et al., 1999; Narayanan et al., 2005), supporting the hypothesis that enhanced processing of non-canonical sentences occurs mainly in a late reanalysis stage in working memory. This finding is also consistent with the fMRI results showing increased activity specific to the RSO condition timelocked to the onset of the picture probes in bilateral frontal cortex (Meltzer et al., 2010). These selective activations were interpreted as reflecting effortful reanalysis of both the sentence and the pictures. However, the fMRI technique, being dependent on hemodynamic deconvolution with a jittered trial design, was unable to fully resolve activity that peaks in the interval between the sentence presentation and the pictures. Activity occurring during the memory delay but before the picture probes may have appeared to be associated with the initial sentence processing, due to the hemodynamic delay. In the present MEG study, we observed that selective activation of bilateral anterior temporal and frontal regions occurred in response to RSO sentences, but only in the delay period, not during the sentence itself. This distinction would not have been apparent in the BOLD data, which integrate neuronal activity over a timescale of several seconds. With beamforming analysis of MEG data, we have been able to demonstrate for the first time that comprehension of syntactically complex sentences, so often impaired in aphasic patients, does not lead to an appreciable increase in neural activity during the perception of the sentence (at least for the measures employed here), but rather during the period of task-related memory use following the sentence. These findings suggest that comprehension deficits in impaired populations may stem from an impaired capacity for working memory use, rather than a loss of core syntactic competence.

Our results do not necessarily imply that there is no specialized neuronal activity involved in processing syntactic complexity in real time, but given the effects seen in this study, any such real-time activity seems to be dwarfed in magnitude by post hoc processes. Previous ERP studies have associated syntactic reanalysis with such “late” components as the P600 potential (Friederici et al., 1996; Frisch et al., 2002). However, we observed that syntactically challenging sentences induced power decreases that spanned the entire 3-s length of the delay period (as seen in Figure 5). This finding has important implications for processing models. In some models, “reanalysis” in complex syntax implies that subjects take extra time to derive the meaning of a sentence, but do arrive at a stable meaning eventually. Our results suggest instead that, rather than arriving at a stable meaning, subjects continue to engage in mental reanalysis of complex sentences (including but not limited to subvocal rehearsal processes) until the task demands are fulfilled. Furthermore, this extra analysis extends to the picture selection period, as evidenced by the increased reaction time following RSO sentences, as well as the increased hemodynamic responses to picture probes following RSO sentences observed in our previous fMRI study (Meltzer et al., 2010). This notion of temporally extended reanalysis is more in line with the post-interpretive process described by Caplan and Waters (1999) than with the more immediate process described as reanalysis in the P600 literature.

One potential objection to these findings is that they may be related to the task demands, which involved an extended memory delay and a challenging picture-matching probe. Subjects may not engage in such extended reanalysis in the course of ordinary language comprehension. However, we would argue that this “optional” reanalysis of language is an important component of comprehension during the understanding of more difficult sentences, and that its frequent absence in ordinary listening accounts for misinterpretations of complex sentences as shown by Ferreira (2003). Furthermore, the sentence-picture-matching tasks used in offline comprehension tests in clinical populations allow for untimed responses, during which even healthy participants may engage in reanalysis over several seconds. Our observations of increased brain activity following complex sentences both during the memory delay (shown with MEG) and following the actual picture presentation (shown previously with fMRI) suggest that participants must continue to process the most difficult sentences until the task demands are completed. One might expect that participants would quickly determine who did what to whom after the sentence is completed, forming a conceptual representation of the action unrelated to the actual syntactic structure. Our results argue that this does not occur; subjects are more likely to continue to rehearse complex sentences “verbatim” instead of transforming them to a simpler representation.

We are not the first to propose that brain activation in response to syntactically complex sentences is largely due to working memory demands. In an ERP study, King and Kutas (1995) observed Left Anterior Negativities to object-embedded vs. subject-embedded relative clauses, beginning after the main verb of the sentence and extending until after its completion. This activity was much stronger in subjects with higher working memory capacities. It is uncertain why we did not observe increased activity for object-relative sentences during the sentences themselves, either in oscillatory responses or time-domain averages of evoked responses (data not shown), but our interpretation is consistent with theirs: rehearsal and reanalysis of complex sentences are most likely responsible for increased brain activation, rather than automatic parsing processes triggered by syntactic complexity. It is possible that our task design encouraged subjects to delay the reprocessing of syntactically complex sentences to a greater degree than the subjects in the King and Kutas study. In any case, both our results and theirs argue against an automatic parsing process being responsible for the observed neural activity.

The present results suggest that comprehension of syntactically complex sentences may rely upon a highly distributed network of (predominantly frontal) brain regions that are also involved in more general cognitive processes, which are especially activated by the need for continued rehearsal of these sentences in working memory. Such an interpretation can reconcile divergent findings in the literature on syntactic comprehension in aphasia. Although studies have shown an association between damage to Broca’s area and impaired comprehension of non-canonical sentences (Drai and Grodzinsky, 2006), other studies have shown that this association is imperfect, with the same comprehension patterns sometimes occurring after damage to different areas (Berndt et al., 1996; Caplan et al., 2007b). Our findings point to an extended reanalysis involving large portions of the frontal lobe, suggesting that damage to a wide variety of areas could be sufficient to disrupt it. An inability to engage in extended reanalysis of complex sentences may underlie comprehension deficits for complex material in aphasic patients whose comprehension of less syntactically complex material is otherwise undisturbed.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We gratefully acknowledge technical assistance from Joe McArdle, Suraji Wagage, Elizabeth Rawson, Judy Mitchell-Francis, Tom Holroyd, and Fred Carver. This study was funded through the NIH Intramural Research Program, in the National Institute on Deafness and Other Communication Disorders.

Supplementary Material

The Supplementary Material for this article can be found online at http://www.frontiersin.org/Human_Neuroscience/10.3389/fnhum.2011.00010/abstract

References

Ben-Shachar, M., Hendler, T., Kahn, I., Ben-Bashat, D., and Grodzinsky, Y. (2003). The neural reality of syntactic transformations: evidence from functional magnetic resonance imaging. Psychol. Sci. 14, 433–440.

Berndt, R. S., Mitchum, C. C., and Haendiges, A. N. (1996). Comprehension of reversible sentences in “agrammatism”: a meta-analysis. Cognition 58, 289–308.

Brookes, M. J., Gibson, A. M., Hall, S. D., Furlong, P. L., Barnes, G. R., Hillebrand, A., Singh, K. D., Holliday, I. E., Francis, S. T., and Morris, P. G. (2005). GLM-beamformer method demonstrates stationary field, alpha ERD and gamma ERS co-localisation with fMRI BOLD response in visual cortex. Neuroimage 26, 302–308.

Caplan, D. (2001). Functional neuroimaging studies of syntactic processing. J. Psycholinguist. Res. 30, 297–320.

Caplan, D., Alpert, N., and Waters, G. (1999). PET studies of syntactic processing with auditory sentence presentation. Neuroimage 9, 343–351.

Caplan, D., Vijayan, S., Kuperberg, G., West, C., Waters, G., Greve, D., and Dale, A. M. (2002). Vascular responses to syntactic processing: event-related fMRI study of relative clauses. Hum. Brain Mapp. 15, 26–38.

Caplan, D., Waters, G., Dede, G., Michaud, J., and Reddy, A. (2007a). A study of syntactic processing in aphasia I: behavioral (psycholinguistic) aspects. Brain Lang. 101, 103–150.

Caplan, D., Waters, G., Kennedy, D., Alpert, N., Makris, N., Dede, G., Michaud, J., and Reddy, A. (2007b). A study of syntactic processing in aphasia II: neurological aspects. Brain Lang. 101, 151–177.

Caplan, D., and Waters, G. S. (1999). Verbal working memory and sentence comprehension. Behav. Brain Sci. 22, 77–94; discussion 95–126.

Caramazza, A., and Zurif, E. B. (1976). Dissociation of algorithmic and heuristic processes in language comprehension: evidence from aphasia. Brain Lang. 3, 572–582.

Carpenter, P. A., Miyake, A., and Just, M. A. (1995). Language comprehension: sentence and discourse processing. Annu. Rev. Psychol. 46, 91–120.

Chee, M. W., Hon, N. H., Caplan, D., Lee, H. L., and Goh, J. (2002). Frequency of concrete words modulates prefrontal activation during semantic judgments. Neuroimage 16, 259–268.

Clark, H. (1973). The language-as-a-fixed-effect fallacy: critique of language statistics in psychological research. J. Verbal Learn. Verbal Behav. 12, 335–359.

Crone, N. E., Sinai, A., and Korzeniewska, A. (2006). High-frequency gamma oscillations and human brain mapping with electrocorticography. Prog. Brain Res. 159, 275–295.

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21.

Dick, F., Bates, E., Wulfeck, B., Utman, J. A., Dronkers, N., and Gernsbacher, M. A. (2001). Language deficits, localization, and grammar: evidence for a distributive model of language breakdown in aphasic patients and neurologically intact individuals. Psychol. Rev. 108, 759–788.

Drai, D., and Grodzinsky, Y. (2006). A new empirical angle on the variability debate: quantitative neurosyntactic analyses of a large data set from Broca’s aphasia. Brain Lang. 96, 117–128.

Ferreira, F., Ferraro, V., and Bailey, K. G. D. (2002). Good-enough representations in language comprehension. Curr. Dir. Psychol. Sci. 11, 11–15.

Friederici, A. D., Hahne, A., and Mecklinger, A. (1996). Temporal structure of syntactic parsing: early and late event-related brain potential effects. J. Exp. Psychol. Learn. Mem. Cogn. 22, 1219–1248.

Friederici, A. D., and Mecklinger, A. (1996). Syntactic parsing as revealed by brain responses: first-pass and second-pass parsing processes. J. Psycholinguist. Res. 25, 157–176.

Frisch, S., Schlesewsky, M., Saddy, D., and Alpermann, A. (2002). The P600 as an indicator of syntactic ambiguity. Cognition 85, B83–B92.

Grodzinsky, Y. (2000). The neurology of syntax: language use without Broca’s area. Behav. Brain Sci. 23, 1–21; discussion 21–71.

Halgren, E., Dhond, R. P., Christensen, N., Van Petten, C., Marinkovic, K., Lewine, J. D., and Dale, A. M. (2002). N400-like magnetoencephalography responses modulated by semantic context, word frequency, and lexical class in sentences. Neuroimage 17, 1101–1116.

Hillebrand, A., Singh, K. D., Holliday, I. E., Furlong, P. L., and Barnes, G. R. (2005). A new approach to neuroimaging with magnetoencephalography. Hum. Brain Mapp. 25, 199–211.

Jones, S. R., Pritchett, D. L., Sikora, M. A., Stufflebeam, S. M., Hamalainen, M., and Moore, C. I. (2009). Quantitative analysis and biophysically realistic neural modeling of the MEG mu rhythm: rhythmogenesis and modulation of sensory-evoked responses. J. Neurophysiol. 102, 3554–3572.

Jung, T. P., Makeig, S., Westerfield, M., Townsend, J., Courchesne, E., and Sejnowski, T. J. (2000). Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin. Neurophysiol. 111, 1745–1758.

Just, M. A., Carpenter, P. A., Keller, T. A., Eddy, W. F., and Thulborn, K. R. (1996). Brain activation modulated by sentence comprehension. Science 274, 114–116.

Kim, J. S., and Chung, C. K. (2008). Language lateralization using MEG beta frequency desynchronization during auditory oddball stimulation with one-syllable words. Neuroimage 42, 1499–1507.

King, J. W., and Kutas, M. (1995). Who did what and when? Using word- and clause-level ERPs to monitor working memory usage in reading. J. Cogn. Neurosci. 7, 378–397.

Klimesch, W., Russegger, H., Doppelmayr, M., and Pachinger, T. (1998). A method for the calculation of induced band power: implications for the significance of brain oscillations. Electroencephalogr. Clin. Neurophysiol. 108, 123–130.

Kucera, H., and Francis, W. (1967). Computational Analysis of Present-Day American English. Providence, RI: Brown University Press.

Kuperberg, G. R. (2007). Neural mechanisms of language comprehension: challenges to syntax. Brain Res. 1146, 23–49.

Kutas, M., and Federmeier, K. D. (2000). Electrophysiology reveals semantic memory use in language comprehension. Trends Cogn. Sci. 4, 463–470.

Maris, E. (2004). Randomization tests for ERP topographies and whole spatiotemporal data matrices. Psychophysiology 41, 142–151.

Maris, E., and Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190.

Martin, R. C., and Allen, C. M. (2008). A disorder of executive function and its role in language processing. Semin. Speech Lang. 29, 201–210; C 204–205.

Meltzer, J. A., McArdle, J. J., Schafer, R. J., and Braun, A. R. (2010). Neural aspects of sentence comprehension: syntactic complexity, reversibility, and reanalysis. Cereb. Cortex 20, 1853–1864.

Meltzer, J. A., Zaveri, H. P., Goncharova, I. I., Distasio, M. M., Papademetris, X., Spencer, S. S., Spencer, D. D., and Constable, R. T. (2008). Effects of working memory load on oscillatory power in human intracranial EEG. Cereb. Cortex 18, 1843–1855.

Narayanan, N. S., Prabhakaran, V., Bunge, S. A., Christoff, K., Fine, E. M., and Gabrieli, J. D. (2005). The role of the prefrontal cortex in the maintenance of verbal working memory: an event-related fMRI analysis. Neuropsychology 19, 223–232.

Neuper, C., and Pfurtscheller, G. (2001). Event-related dynamics of cortical rhythms: frequency-specific features and functional correlates. Int. J. Psychophysiol. 43, 41–58.

Novick, J. M., Trueswell, J. C., and Thompson-Schill, S. L. (2005). Cognitive control and parsing: reexamining the role of Broca’s area in sentence comprehension. Cogn. Affect. Behav. Neurosci. 5, 263–281.

Osterhout, L., McLaughlin, J., and Bersick, M. (1997). Event-related brain potentials and human language. Trends Cogn. Sci. 1, 203–209.

Papademetris, X., Jackowski, A. P., Schultz, R. T., Staib, L. H., and Duncan, J. S. (2004). “Integrated intensity and point-feature non-rigid registration,” in Medical Image Computing and Computer-Assisted Intervention, eds C. Barillot, D. Haynor, and P. Hellier (Saint-Malo: Springer), 763–770.

Percival, D. B., and Walden, A. T. (1993). Spectral Analysis for Physical Applications. Cambridge: Cambridge University Press.

Rypma, B., Prabhakaran, V., Desmond, J. E., Glover, G. H., and Gabrieli, J. D. (1999). Load-dependent roles of frontal brain regions in the maintenance of working memory. Neuroimage 9, 216–226.

Sanford, A. J., and Sturt, P. (2002). Depth of processing in language comprehension: not noticing the evidence. Trends Cogn. Sci. 6, 392–396.