Perceptual crossing: the simplest online paradigm

- 1 Laboratoire d'Informatique pour la Mécanique et les Sciences de l'Ingénieur, Centre National de la Recherche Scientifique UPR 3251, Orsay, France

- 2 Department of Cognitive Neuroscience, University of Bielefeld, Bielefeld, Germany

Researchers in social cognition increasingly realize that many phenomena cannot be understood by investigating offline situations only, focusing on individual mechanisms and an observer perspective. There are processes of dynamic emergence, specific to online situations in which two or more persons are engaged in real-time interaction, that are more than just the sum of the individual capacities or behaviors, and these require the study of online social interaction. Auvray et al.'s (2009) perceptual crossing paradigm offers possibly the simplest paradigm for studying such online interactions: two persons, a one-dimensional space, one bit of information, and a yes/no answer. This study has resonated across different areas of research, including experimental psychology, computer/robot modeling, philosophy, psychopathology, and even design. In this article, we review and critically assess this body of literature. We give an overview of both the behavioral experiments and the simulated agent models that have used the perceptual crossing paradigm. We discuss different contexts in which work on perceptual crossing has been cited, including the controversy about whether perceptual crossing may play a constitutive role in social cognition. We conclude with an outlook on future research possibilities, in particular those that could elucidate the link between online interaction dynamics and individual social cognition.

Introduction

It is the advent of the interactionist turn in social cognition. It is becoming more and more evident that research cannot be limited to investigating offline situations, where individual mechanisms for processing social situations are examined in isolation. There are social processes specific to online situations, i.e., when two persons are engaged in real-time interaction, and these processes are essential for an understanding of social interaction. As an illustrative example of how the dynamics of an interaction can be determined by the interaction process itself, rather than by the goals and actions of either interactor, De Jaegher (2009) describes a situation where two people try to walk past each other in a narrow corridor. It can happen that both people step toward the same side, readjust and step toward the other side, and subsequently engage repeatedly in such synchronized mirroring of sideways steps. In such a case, the interaction process continues even though neither interactor wants to remain in interaction. There is thus a coordination of synchronized sideways movements in which the two people's behaviors are adjusted as a function of the evolving dynamics of the interaction. In other words, there are aspects of the dyadic system that cannot be assigned to either of the interacting entities. It remains to be seen how important such interaction processes are for social cognition. What is clear, however, is that traditional approaches in social cognition, which study an individual's reaction to social stimuli offline, are unable to capture this kind of interaction dynamics in the first place.

The importance of online interaction for the recognition of others has been illustrated by Murray and Trevarthen (1985). In their studies, 2-month-old infants interacted with their mothers via a double-video projection. The video, displayed to the infants, could either present their mother interacting with them in real-time or a video pre-recorded from a previous interaction. The infants engaged in coordination with the video only when interaction was live, whereas they showed signs of distress if the video was pre-recorded. The fact that the children were able to distinguish a live interaction with their mother from a pre-recorded one suggests that the recognition of another person does not only consist of the simple recognition of a particular shape or pattern of movements, but also involves a property intrinsic to the shared perceptual activity: The perception of how the other's movements are related to our own.

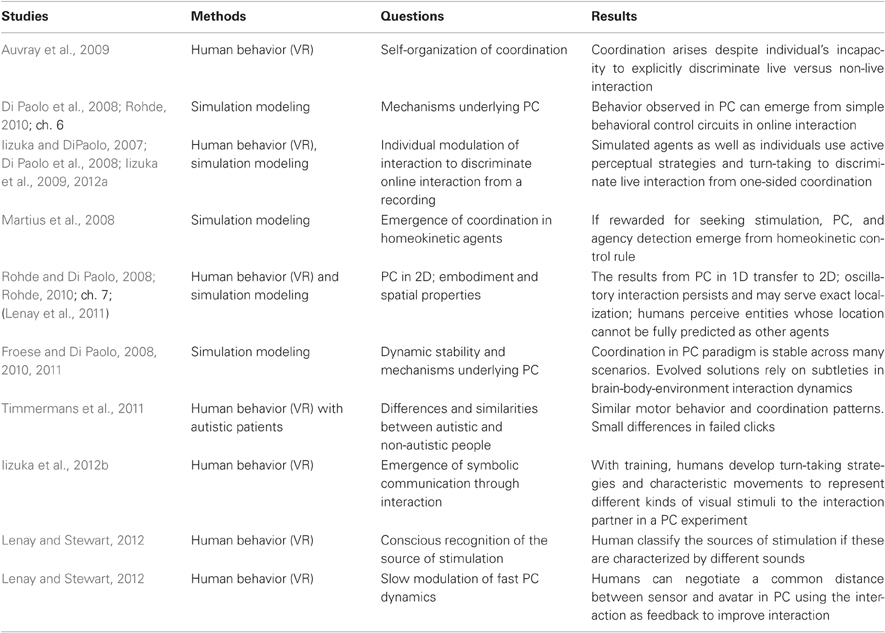

Auvray et al.'s (2009) perceptual crossing paradigm provides the most basic conditions for studying the factors involved in recognizing others in online interactions. In the experiment, pairs of blindfolded participants were placed in separate rooms and interacted in a shared virtual one-dimensional perceptual space (see Figure 1). Each participant moved a cursor (an avatar representing her body) along a line using a computer mouse and received a tactile stimulus on the free hand when encountering something on the line. The participants were asked to click the mouse button when they perceived the presence of the other participant. Besides each other, participants could encounter a static object or a displaced “shadow image” of the partner. Note that this shadow image was strictly identical to the partner's avatar with respect to shape and movement characteristics. Therefore, the only difference between the partner and her shadow image is that the partner can perceive and be perceived at the same time, i.e., that live dyadic interaction is possible. Any solution to the task must thus rely, at least partially, on performing and detecting a live interaction.

Figure 1. Schematic illustration of Auvray et al.'s (2009) experimental set-up.

Participants performed this task well, i.e., they clicked significantly more often when meeting the partner's avatar (65.9% of the clicks ± S.D. of 13.9) than when meeting the shadow image (23.0% ± 10.4) or the static object (11.0% ± 8.9). Like Murray and Trevarthen's (1985) study, the paradigm thus provides sufficient conditions for observing perceptual processes that are sensitive to social contingency.

Analysis of the sources of tactile stimulation revealed an asymmetry: the majority of the stimuli were caused by encounters with the partner's avatar (52.2% ± 11.8 of the received stimuli), followed by the static object (32.7% ± 11.8) and the shadow image (15.2% ± 6.2). Surprisingly, this implies that the relative recognition rate, i.e., the proportion of clicks per type of object divided by the proportion of stimulations per type of object, does not differ between the mobile shadow image and the interaction partner: there are 1.26 clicks per stimulation by the partner's avatar and 1.51 for the shadow image, a difference that is not significant. Only the static object, with 0.33 clicks per stimulation, differs. Participants are thus roughly four times more likely to click after having met a mobile object than after having encountered the static object. The higher proportion of correct clicks when meeting the other is, therefore, not due to an individual ability to recognize the partner, as participants are equally likely to click after encountering the shadow image as after encountering the other. In terms of this click rate, there is no conscious recognition of the other. The correct discrimination instead emerges from the interaction dynamics, as a consequence of the mutual search for one another, which makes encounters between the two participants far more frequent.
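The relative click rates quoted above can be recovered, to within rounding, by dividing each object type's share of clicks by its share of stimulations. The short check below does this with the group means reported in this section; because it works from rounded means, it gives about 0.34 rather than 0.33 for the static object.

```python
# Group means reported above: percent of all clicks and percent of all
# stimulations per object type (standard deviations omitted).
click_share = {"partner": 65.9, "shadow": 23.0, "static": 11.0}
stim_share = {"partner": 52.2, "shadow": 15.2, "static": 32.7}


def relative_click_rate(clicks, stims):
    """Share of clicks divided by share of stimulations, per object type."""
    return {kind: clicks[kind] / stims[kind] for kind in clicks}


rates = relative_click_rate(click_share, stim_share)
for kind, rate in rates.items():
    print(f"{kind:8s} {rate:.2f}")
```

The mobile objects (partner and shadow image) end up close to each other and roughly four times above the static object, which is the pattern the argument relies on.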

In explaining the result that participants predominantly click when meeting their partner, two kinds of processes thus need to be accounted for: first, the participants' ability to click after touching mobile objects but not after touching the fixed object; and second, the fact that perceptual crossings with the other were far more frequent than encounters with the shadow image, which explains why participants had 65.9% correct responses even though their relative click rates for the shadow image and for the other did not differ significantly. A closer examination of the results indicated that participants used a strategy of reversing their direction of movement after a sensory encounter: there is a strong negative correlation (r = −0.72) between the mean acceleration after losing contact and the mean velocity before making contact. This strategy results in oscillatory movement around the source of stimulation, an observation consistent with previous studies using similar minimalist visuo-tactile feedback devices (Stewart and Gapenne, 2003; Sribunruangrit et al., 2004). According to Lenay et al. (2003), this strategy and the successive stimulation events it brings about give rise to the perception of a spatially localized object.

The participants' ability to distinguish between fixed and moving objects could be based on a number of differences in sensorimotor events, listed here with the percentage of clicks each accounts for. First, a change in stimulation occurs although the participants themselves did not move (54.9% of clicks). Second, participants experience two distinct consecutive stimuli even though they have been moving monotonically in a constant direction (32.3%). Third, they experience an apparent width smaller (31.3%) or larger (9.1%) than the object's actual size when stationary. The remaining three types of event occur when participants leave a source of stimulation and then reverse direction to relocate it: if the stimulation is not, as the participants expected, due to a fixed object, they encounter the stimulation again sooner (14.4%) or later (14.7%) than expected, or not at all (11.6%). These criteria are not mutually exclusive and may have been used in combination, which is why the percentages sum to more than 100%. Detecting these subtle differences in sensorimotor patterns is a task that falls to the individual.
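Several of these cues can be read directly off a log of the participant's own position and stimulation. The sketch below is a hypothetical operationalization of a subset of them (a change in stimulation without own movement, and an apparent width that differs from that of a stationary object); the function names, the sampling at a constant time step, and the assumption that the participant "knows" the stationary width are illustrative choices, not the analysis used in the study.

```python
from itertools import groupby


def stimulation_bouts(samples):
    """Split (position, stimulated) samples into runs of consecutive stimulation.

    Returns one list of own positions per stimulation bout.
    """
    bouts = []
    for stimulated, run in groupby(samples, key=lambda sample: sample[1]):
        if stimulated:
            bouts.append([position for position, _ in run])
    return bouts


def detect_cues(samples, fixed_width):
    """Flag a subset of the fixed-versus-moving cues (hypothetical operationalization).

    samples: (own_position, stimulated) pairs recorded at a constant time step.
    fixed_width: number of distinct own positions that are stimulated when
        sweeping across a stationary object (assumed known from experience).
    """
    cues = set()
    # Cue: stimulation switches on or off while the participant stands still.
    for (p0, s0), (p1, s1) in zip(samples, samples[1:]):
        if s0 != s1 and p0 == p1:
            cues.add("change_without_own_movement")
    # Cue: apparent width during a monotonic sweep differs from a stationary object's.
    for bout in stimulation_bouts(samples):
        steps = [b - a for a, b in zip(bout, bout[1:])]
        monotonic = bool(steps) and (all(d > 0 for d in steps) or all(d < 0 for d in steps))
        if not monotonic:
            continue
        width = len(set(bout))
        if width < fixed_width:
            cues.add("narrower_than_fixed")
        elif width > fixed_width:
            cues.add("wider_than_fixed")
    return cues


# Sweeping past an object that moves the opposite way: stimulated at only 3 positions.
crossing = [(p, p in (9, 10, 11)) for p in range(21)]
print(detect_cues(crossing, fixed_width=7))
```

Because one log can trigger several flags at once, the function returns a set, mirroring the point that the criteria are not mutually exclusive.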

The more frequent stimulation of the participants due to perceptual crossing with the other results from the oscillatory scanning strategy they employ. When a participant encounters the partner, both participants receive tactile stimulation and, by reversing the direction of their movement, engage in the same oscillatory behavior. This co-dependence of the two perceptual activities thus forms a relatively stable dynamic configuration. In this situation, the perceptual activities mutually attract each other, just as in everyday situations when two people catch each other's eye. By contrast, when a participant encounters the other's shadow image, she is the only one receiving stimulation and subsequently reversing her direction. This does not allow a stable interaction. Importantly, the correct solution in this instance results from performing the live interaction itself: the coupling of two individuals who both employ the more general perceptual strategy of oscillating around a source of stimulation leads to the emergence of stable interaction. As the individual click rates show, the individual alone does not discriminate between the other and her shadow image. The online interaction and the emergent coordination provide the participants with the distinction between the shadow image and the other for free, without the need to consciously detect differences in the available sensorimotor patterns. The results, therefore, provide an example of how interactive processes (to which individual-based approaches would be blind) can serve a functional role in solving a perceptual task.
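The mutual-attraction argument can be checked in a deliberately minimal toy model. The sketch below assumes a circular one-dimensional space, integer positions, constant unit speed, and a single rule shared by both agents: reverse direction as soon as contact is lost. All parameter values (space length, contact radius, shadow offset, object position) are hypothetical and are not those of the original experiment.

```python
# Hypothetical parameters chosen for illustration only.
LENGTH = 600          # circumference of the circular one-dimensional space
RADIUS = 4            # contact when a receptive field is closer than this to an entity
SHADOW_OFFSET = 150   # each avatar's shadow image trails it at this fixed offset
FIXED_OBJECT = 500    # position of a single static object


def circ_dist(a, b):
    """Shortest distance between two positions on the circular space."""
    d = abs(a - b) % LENGTH
    return min(d, LENGTH - d)


def sensed(x, other_x):
    """Entity under a receptive field at x: 'partner', 'shadow', 'fixed', or None."""
    entities = {
        "partner": other_x,
        "shadow": other_x + SHADOW_OFFSET,
        "fixed": FIXED_OBJECT,
    }
    for kind, pos in entities.items():
        if circ_dist(x, pos) < RADIUS:
            return kind
    return None


def simulate(xa, va, xb, vb, steps):
    """Both agents follow one rule: keep moving, reverse as soon as contact is lost.

    Returns a log of (xa, xb, touched_by_a, touched_by_b) per time step.
    """
    prev_a = prev_b = None
    log = []
    for _ in range(steps):
        touch_a, touch_b = sensed(xa, xb), sensed(xb, xa)
        if prev_a and not touch_a:
            va = -va
        if prev_b and not touch_b:
            vb = -vb
        prev_a, prev_b = touch_a, touch_b
        log.append((xa, xb, touch_a, touch_b))
        xa, xb = (xa + va) % LENGTH, (xb + vb) % LENGTH
    return log


def count(log, kind):
    """Number of time steps in which agent A touched the given kind of entity."""
    return sum(1 for _, _, touch_a, _ in log if touch_a == kind)


# A and B head toward each other and lock into mutual oscillation.
mutual = simulate(0, 1, 20, -1, steps=100)
# A meets B's shadow image while B is elsewhere: one brief crossing only.
one_sided = simulate(270, -1, 100, 1, steps=100)
# A meets the static object while B stands still far away.
fixed_scan = simulate(490, 1, 300, 0, steps=200)
print(count(mutual, "partner"), count(one_sided, "shadow"))  # prints: 69 3
```

With these settings, the two agents remain within contact range of each other after they first meet, whereas the agent that crosses the shadow image loses contact and does not regain it within the simulated period (in this toy, because speeds are equal, it simply trails the shadow just outside contact range). The same rule also keeps a lone agent oscillating around the static object, which is the harder distractor discussed in the section on simulation models.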

Extensions and Models of the Paradigm

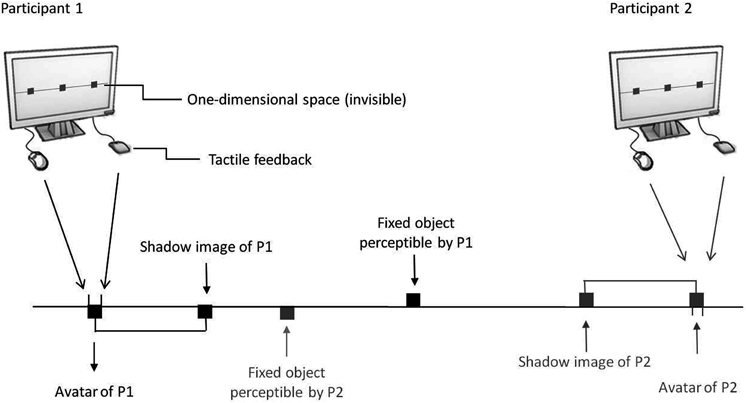

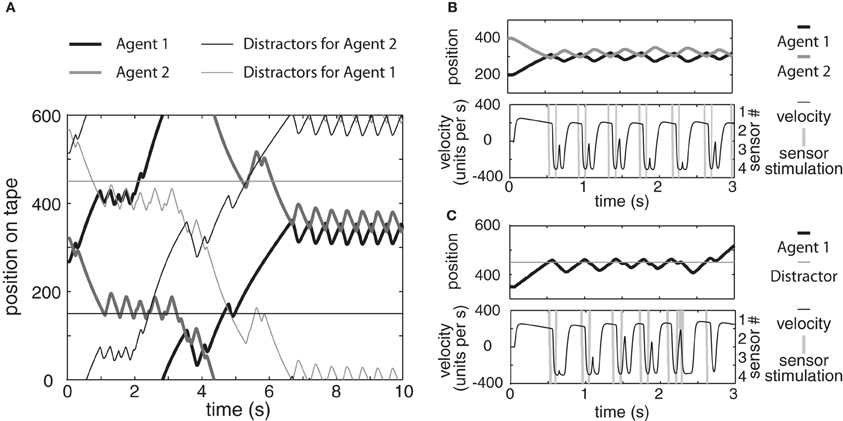

Auvray et al.'s (2009) study has resonated in different areas of research, ranging from experimental and clinical psychology to computer/robot modeling, philosophy, and even engineering and design. Due to its simplicity, the perceptual crossing paradigm serves as an illustrative example of the importance of online interaction dynamics. This section surveys the body of literature that has emerged from the perceptual crossing paradigm, first covering empirical work and then follow-up computational models. In addition, Table 1 lists and summarizes the most important studies on perceptual crossing.

Further Experiments on Perceptual Crossing

To test whether these results generalize to richer environments, Lenay et al. (2011) tested a two-dimensional version of the original experiment. Ten pairs of participants were placed in a two-dimensional virtual space. Apart from differences in the size of the objects and the environment, the set-up and protocol were the same as in the original experiment. Quantitatively, the results were similar, i.e., the other was identified very successfully in terms of the percentage of clicks assigned to the different types of objects. Likewise, the click rate per stimulation was again equal for the partner and the shadow image, showing that the successful distinction between the other and the shadow is due to the different frequencies of stimulation from each source. The emphasis of this extension was, however, on qualitative properties of motor behavior: how would participants perform this task in two dimensions? Lenay et al. (2011) report that, while participants scanned the entire space in search of one another, once contact was established, they reverted to one-dimensional oscillatory interactions. Further analysis of active perceptual strategies was informed by results from robot simulations of the task (Rohde and Di Paolo, 2008; Rohde, 2010; Lenay et al., 2011; cf. Section “Robot Simulation Models”), i.e., patterns of behavior observed in the robotic agents were tested against the human data. There is evidence that a possible functional role of oscillatory scanning is to relocate the other after losing touch, in agreement with the idea that oscillations serve to spatially localize a source of stimulation. Evidence also suggests that “surprise,” i.e., the impossibility of precisely predicting the location of the other despite coordinated interaction, can explain the clicking behavior, as previously suggested by Auvray et al. (2009).
It should be mentioned that it remains unclear whether the reduction of movement to one dimension is related to the anatomy of the human arm, as no postural variables were recorded and there is considerable variability in preferred oscillation direction across subjects.

Another variation on the paradigm was studied by Iizuka et al. (2009, 2012a), who empirically tested an issue that had previously been studied in simulation (Iizuka and Di Paolo, 2007; Di Paolo et al., 2008; cf. Section “Robot Simulation Models”), i.e., agency detection in an environment where one-sided coordination (De Jaegher, 2009) is a theoretical possibility. In the original perceptual crossing paradigm, one-sided coordination with the other's shadow image cannot stabilize: due to the spatial arrangement of the different entities on the line, if one participant interacts with the other's shadow image, the other person is still searching for her partner. Thus, even when not interacting, the participants influence each other's behavior. Iizuka et al. (2009, 2012a) introduced an important change to the original perceptual crossing paradigm. Instead of simultaneously placing a person and her shadow image into the virtual world, trials were randomized to expose participants either to a live interaction (possibility of two-sided coordination) or to a recording of the other participant's behavior in a previous live interaction trial (possibility of one-sided coordination). Participants had to decide at the end of a trial whether they had perceived the interaction to be live. With this change in the paradigm, participants initially have difficulty distinguishing the two kinds of trials. All of them start off scanning and oscillating around the encountered entities of both types. However, after only a few tens of trials, the participants developed turn-taking as an active probing strategy. Turn-taking was quantified as the extent to which dyads relied on a behavioral strategy where, at any point in time, just one interaction partner was moving while the other one was standing still, rather than both moving simultaneously. Nevertheless, only 4 of the 10 dyads tested achieved above-chance performance on the task using the turn-taking strategy.
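The exact formula behind the turn-taking measure is not given here. One plausible operationalization, assumed purely for illustration, is the fraction of time steps with any movement in which exactly one partner moves; the speed threshold separating "moving" from "standing still" is a hypothetical parameter.

```python
def turn_taking_index(speed_a, speed_b, still_threshold=0.5):
    """Fraction of active time steps in which exactly one partner moves.

    speed_a, speed_b: equal-length sequences of absolute speeds per time step.
    still_threshold: hypothetical cut-off below which a partner counts as still.
    Returns None if neither partner ever moves.
    """
    one_moving = both_moving = 0
    for sa, sb in zip(speed_a, speed_b):
        moving_a, moving_b = sa >= still_threshold, sb >= still_threshold
        if moving_a and moving_b:
            both_moving += 1
        elif moving_a or moving_b:
            one_moving += 1
    active = one_moving + both_moving
    return one_moving / active if active else None


# Strict alternation scores 1.0; moving at the same time throughout scores 0.0.
print(turn_taking_index([1, 1, 0, 0], [0, 0, 1, 1]))
```

Steps where both partners stand still are excluded from the denominator, so long pauses do not inflate the score.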

The important difference from Auvray et al.'s (2009) perceptual crossing paradigm is that the procedure used in Iizuka et al.'s (2009, 2012a) experiment makes a one-sided coordination solution theoretically possible. In other words, it allows a situation in which coordination occurs but is entirely attributable to one interaction partner. This translates Murray and Trevarthen's (1985) double TV monitor paradigm more faithfully into a minimal virtual environment but also tackles a slightly different scientific question. Indeed, Auvray et al.'s (2009) focus was on the kinds of environments and behaviors that lead to the emergence of social coordination, whereas Iizuka et al. (2009, 2012a) focus on how an individual can modulate the interaction dynamics to figure out whether an interaction is live or not. This difference in motivation relates to our discussion of whether perceptual crossing constitutes social cognition in Section “Does Perceptual Crossing Constitute Social Cognition?”.

The emergence of symbolic communication without dedicated signaling channels was studied by Iizuka et al. (2012b) in another variant of the perceptual crossing paradigm. Pairs of participants were confronted with different visual stimuli (shapes) and had to decide after 30 s of perceptual crossing whether they saw the same or a different shape (at first there were two possible shapes, later three). Initially, performance was at chance level and participants engaged only in perceptual crossing. Yet, with the feedback provided, participants learned not only to take turns in interaction, but also to negotiate characteristic motion patterns to represent the shape they could see, e.g., by a characteristic oscillation frequency. What is particularly interesting in this instance is that the same sensorimotor coupling afforded by the experimental set-up serves several functions. Oscillatory movement is used to localize the other, to communicate, and to negotiate a common vocabulary. All of these activities are distinguishable, functional processes, yet they all occur concurrently.

In another interesting elaboration on the original paradigm, Lenay and Stewart (2012) tested the extent to which participants are able to explicitly recognize their partner. To do so, participants received feedback sounds instead of tactile feedback, with each sound associated with one of the three kinds of objects, i.e., the partner's avatar, the shadow image, and the fixed object. Ten pairs of participants were tested in 4 sessions, and the mapping from sounds to entities was randomized for each session. In sessions 1 and 2, the moving object followed the same rule of displacement as in the original experiment, i.e., it was located at a fixed distance from the partner's avatar. In sessions 3 and 4, it corresponded instead to the recording of the partner's trajectory during session 2. At the end of each session, participants were asked to assign the tones to the different kinds of entities. The results revealed a very high and statistically significant ability to recognize the fixed object. The results for distinguishing between the partner and the mobile object are less conclusive: participants were able to distinguish between the two in both sessions where the mobile object corresponded to a pre-recording, but in only one of the two sessions where it corresponded to a shadow image.
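To judge what "above chance" means in the tone-assignment task, it helps to spell out the chance levels. Assuming (this is not stated in the text) that each participant gives a one-to-one assignment of the three tones to the three entities, and that a guessing participant picks each of the 3! = 6 assignments with equal probability, the probabilities follow by enumeration:

```python
from fractions import Fraction
from itertools import permutations

# Tone i truly belongs to ENTITIES[i]; an assignment p maps tone i to entity p[i].
ENTITIES = ("partner", "shadow", "fixed")


def chance_levels(entities=ENTITIES):
    """Chance probabilities under uniform random one-to-one tone assignment."""
    perms = list(permutations(entities))  # every possible one-to-one assignment
    n = len(perms)
    all_right = [p for p in perms if p == tuple(entities)]
    fixed_right = [p for p in perms if p[entities.index("fixed")] == "fixed"]
    return {
        "all_correct": Fraction(len(all_right), n),
        "fixed_correct": Fraction(len(fixed_right), n),
        # Partner and shadow correctly separated, given the fixed object is right.
        "partner_vs_shadow_given_fixed": Fraction(len(all_right), len(fixed_right)),
    }


print(chance_levels())
```

So a guessing participant would identify the fixed object one time in three, and, once the fixed object is identified, would separate partner from mobile object half of the time.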

A number of interesting observations emerged from the study. Firstly, there was a non-significant trend for the performance of the two partners in a pair to correlate, suggesting that certain types of interaction ease the assignment for both parties alike. In addition, a common strategy to solve the task could be observed: participants first identified the sound corresponding to the fixed object, then sought the partner's avatar, trying to stay in contact with it, and finally verified their assignment by tracing the mobile object. This shift in strategy from that observed in the original perceptual crossing paradigm (Auvray et al., 2009) indicates, in line with the results by Iizuka et al. (2009, 2012a), that conscious recognition requires modulating the perceptual crossing dynamics that emerge from mutual search. It also suggests that such a modified strategy and characteristic feedback sounds are necessary to identify the source of stimulation. This concurs with the conclusion of the original study (Auvray et al., 2009) that the discrimination between the other and the shadow image does not involve conscious recognition. During debriefing, participants reported finding sessions 3 and 4 (interaction with a recording) harder, because the recording appeared “more human,” moved more, appeared to react more to contacts, and seemed to imitate the oscillation pattern. This is in agreement with the results of Iizuka et al. (2009, 2012a), who reported increased difficulty in discriminating one-sided coordination with a recording of a previous interaction from live online interaction.

Another study (Lenay and Stewart, 2012) investigated whether participants are able to modulate their sensorimotor coupling to ease perceptual crossing. The one-dimensional environment contained only the two partners. In this variation of the paradigm, participants could adjust the distance between their receptive field and their corresponding avatar (which was perceptible to the other). The initial distance between each participant's receptive field and avatar varied from trial to trial and could be adjusted using mouse clicks during perceptual crossing. If both participants agreed on the same distance, the situation corresponds to the setting of the original paradigm (Auvray et al., 2009). If the distances differed between participants, the crossing would be expected to drift in one direction, given the oscillatory strategy described above; if the discrepancy were very large, coordination would not be expected to stabilize. The participants' task was to adjust the distance between their avatar and their receptive field so as to reduce any drift experienced and stabilize perceptual crossing. Using the interaction dynamics as a feedback signal, participants were able to decrease the discrepancy between their distance parameters and thus, over time, to modulate the wiring of their sense organs to achieve smoother interaction.

A different line of experimentation was pursued by Timmermans et al. (2011), who investigated perceptual crossing in High Functioning Autists (HFAs). Their aim was to determine the level at which HFAs have impaired social abilities. While some scientists claim that autistic people have problems with automatic aspects of social cognition (e.g., McIntosh et al., 2006 report a deficit in automatic mimicry), other studies find no impairment in autistic people in cognitive faculties that involve implicit social cognition, such as action representation (Sebanz et al., 2005) or implicit learning (Brown et al., 2010). Studying HFAs in the perceptual crossing paradigm makes it possible to test whether autistic people have difficulty in coordinating online interactions or in consciously perceiving such interactions. If the coordination capacities of HFAs are weaker, there should be relatively less stimulation by the interaction partner. If, however, individual processing is impaired, there should be a decreased ability to distinguish the fixed object from the partner and the shadow image in terms of click rate. Fifteen pairs of participants were tested in the perceptual crossing paradigm: in eight of these pairs, one interaction partner was an HFA; the other seven pairs consisted of two healthy controls. There were no significant differences in motor behavior or coordination patterns between the two groups: in both, most of the encounters occurred with the interaction partner. Thus, HFAs appear not to be impaired at the levels of social interaction that are required for coordination in the perceptual crossing paradigm. In terms of click frequency, unlike in the original study (Auvray et al., 2009), there are so far no significant differences between the three types of objects in either group.
Thus, apart from the reliable emergence of perceptual crossing interaction, whether or not there are more specific differences in how HFAs and healthy controls interact will require further analysis and experiments.

Beyond these direct variations and extensions, the perceptual crossing paradigm has also informed research in other disciplines. In the field of design, Marti (2010) draws inspiration from the perceptual crossing paradigm to develop interactive devices capable of mutual regulation of joint actions. The robot companion Iromec was developed to engage with children with different disabilities. In one of the scenarios presented in the article, the robot companion follows a child at a fixed distance, taking the same trajectory, pace, and speed as the child. If, however, another person comes closer to the robot, it follows the new person until the child again comes closer to it. The author tested this scenario with a 9-year-old child who had a mild cognitive disability involving attentional difficulties and delays in learning. Having analyzed the video recordings, the child's teachers agreed that the child was remarkably able to sustain activity across a number of tasks, suggesting that his capacity to focus attention was better than usual. The author shows how the lessons learned from the perceptual crossing experiment can be used in the design of technological artifacts to improve the motivation to act, attention to mobility, coordination, and basic interpersonal interaction.
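The follow behavior described for Iromec can be summarized as a "follow the nearest person at a fixed distance" rule. The sketch below is a hypothetical reconstruction of that rule in two dimensions, not Iromec's actual control software; the function names and the straight-line placement of the goal point are assumptions (`math.dist` requires Python 3.8 or later).

```python
import math


def choose_target(robot, people):
    """Return the name of the person closest to the robot.

    robot: (x, y) position; people: dict mapping name -> (x, y) position.
    """
    return min(people, key=lambda name: math.dist(robot, people[name]))


def follow_goal(robot, target, distance):
    """Point at `distance` from the target, on the line from the target to the robot."""
    gap = math.dist(robot, target)
    if gap == 0:
        return robot
    ux, uy = (robot[0] - target[0]) / gap, (robot[1] - target[1]) / gap
    return (target[0] + ux * distance, target[1] + uy * distance)


people = {"child": (3.0, 4.0), "adult": (10.0, 0.0)}
print(choose_target((0.0, 0.0), people))  # the child is nearer
people["adult"] = (1.0, 1.0)               # another person comes closer
print(choose_target((0.0, 0.0), people))  # the robot switches to the adult
```

Re-evaluating the nearest person at every control step is what lets the child "win back" the robot simply by approaching it again.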

Ware (2011) was inspired by the perceptual crossing experiments to investigate social interactions in pigeons with a method similar to that of Murray and Trevarthen (1985). A double closed-circuit teleprompter apparatus enabled two birds located in different rooms to interact in real time via a video interface. Ware studied pigeons' courtship behavior, as reflected in how much they walk in circles. The courtship behavior of 12 pigeons (six males and six females) was compared when they viewed a real-time video of an opposite-sex partner versus a recorded video of a previous interaction with that same partner. The results revealed an effect of the interactive condition (live versus playback) on courtship behavior. Additional experiments investigated the temporal and spatial details of how live interaction benefits courtship behavior in pigeons. The results showed that circle walking also decreased when a 9-s delay was introduced, as compared with the live condition. There was no effect of viewing angle: during live interaction, the pigeons behaved similarly whether their partner faced the camera or was displayed rotated 90° away, and in both cases their interactive circle walking decreased when they viewed a playback video. It should be noted that differences in circle walking between playback and live conditions appear only during courtship (two pigeons of opposite sex), not during rivalry (two pigeons of the same sex). Ware's results thus reveal that pigeons' courtship behavior is not based on visual signals alone, but is also influenced by sensitivity to the social contingencies between signals.
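The delay manipulation amounts to inserting a fixed lag into an otherwise live feed. The apparatus details are not given here; a common way to implement such a lag in software, assumed purely for illustration, is a first-in-first-out buffer whose length equals the delay divided by the frame interval (for example, a 9-s delay at a hypothetical 25 frames per second corresponds to 225 frames).

```python
from collections import deque


class DelayLine:
    """Fixed-lag FIFO: push the newest frame, get back the frame from `lag` steps ago.

    Until `lag` frames have been pushed, the placeholder `fill` is returned.
    """

    def __init__(self, lag, fill=None):
        # One slot more than the lag, so the oldest item is exactly `lag` steps old.
        self.buffer = deque([fill] * lag, maxlen=lag + 1)

    def push(self, frame):
        self.buffer.append(frame)  # a full deque drops its oldest item automatically
        return self.buffer[0]


delayed = DelayLine(lag=2)
print([delayed.push(frame) for frame in "abcde"])  # [None, None, 'a', 'b', 'c']
```

A lag of zero passes every frame straight through, so the same component can serve both the live and the delayed condition.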

Robot Simulation Models

The perceptual crossing paradigm lends itself to computational modeling with simulated embodied agents, given that it takes place in a minimal virtual world. The experimental task can only be solved in interaction, not through abstract processing or reasoning. Simulating the behavior in closed-loop agent-agent interaction can reveal possible mechanisms that could underlie perceptual crossing in humans.

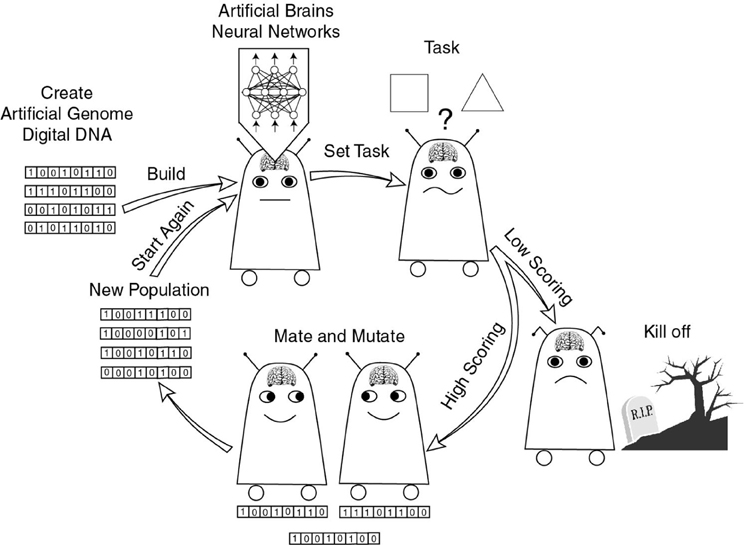

The first computational models of the perceptual crossing paradigm were published by Di Paolo et al. (2008). They used Evolutionary Robotics simulation modeling, a technique in which the parameters of simulated robots are set automatically so as to optimize performance in a task. Performance is measured by a “fitness function” that quantifies success in the task (see Figure 2 for a cartoon illustration of how Evolutionary Robotics works). For instance, one fitness function for the perceptual crossing task rewarded agents for staying as close as possible to another agent over the course of a simulated interaction within the same virtual world as used for humans (Figure 1). The evolved parameters were the weights and biases of a recurrent neural control network, which were initially random. By removing those network controllers that scored low according to the fitness function, and copying the successful ones with modifications to the next generation, the neural controllers evolved (“learned”), over thousands of generations, to locate the other agent. Such Evolutionary Robotics models, as well as other agent simulation models of the task, do not primarily serve the scientific purpose of fitting and describing a data set. Instead, they can be seen as idea generators and proofs of concept, as the algorithm may come up with circuits that a human engineer would not have devised. Evolved solutions typically exploit dynamical properties of the closed-loop agent-environment system and are both simple and robust (cf., e.g., Rohde, 2010).
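A common choice of recurrent controller in Evolutionary Robotics is the continuous-time recurrent neural network (CTRNN), in which each neuron's state y_i obeys τ_i dy_i/dt = −y_i + Σ_j w_ji σ(y_j + θ_j) + I_i, with σ the logistic function. We assume this form purely for illustration; the exact architecture, time constants, and integration scheme of the cited models may differ. The evolved "weights and biases" mentioned above correspond to w_ji and θ_j in this sketch.

```python
import math


def sigmoid(x):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-x))


def ctrnn_step(y, weights, biases, taus, inputs, dt):
    """One forward-Euler step of a continuous-time recurrent neural network.

    y: neuron states; weights[j][i]: connection weight from neuron j to neuron i;
    biases, taus, inputs: per-neuron lists (θ_j, τ_i, I_i). Returns the new states.
    """
    n = len(y)
    outputs = [sigmoid(y[j] + biases[j]) for j in range(n)]
    new_y = []
    for i in range(n):
        net = sum(weights[j][i] * outputs[j] for j in range(n)) + inputs[i]
        new_y.append(y[i] + dt / taus[i] * (-y[i] + net))
    return new_y


# A single unconnected neuron driven by a constant input relaxes toward that input.
state = [0.0]
for _ in range(200):
    state = ctrnn_step(state, [[0.0]], [0.0], [1.0], [1.0], dt=0.1)
print(round(state[0], 4))
```

In an agent, the sensor reading would enter through `inputs` and a motor command would be read from the output of one or more neurons; those mappings are part of what an evolutionary search would tune.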

Figure 2. An illustration of the algorithm used in Evolutionary Robotics. Random strings (“genomes”) are interpreted as parameters for neural network robot control. Robot behavior is simulated and evaluated. The higher scoring agents' genome is recombined and copied with mutations to seed the next generation. Over thousands of repetitions of this cycle, behavior according to the evaluation criterion (“fitness function”) is optimized (source: Rohde, 2010; ch. 3).
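The cycle in Figure 2 can be sketched as a minimal generational genetic algorithm. Everything in this sketch is an illustrative assumption: real-valued genomes, truncation selection of the better half, uniform crossover, Gaussian mutation, elitism, and especially the stand-in fitness function, which simply rewards closeness to a fixed target vector instead of simulating agents in the perceptual crossing world.

```python
import random

# Stand-in task: the "ideal" parameter vector is known. In a real model, fitness
# would decode the genome into controller parameters and score a simulated trial.
TARGET = [0.5, -0.3, 0.8]


def toy_fitness(genome):
    """Higher is better: negative squared distance to TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))


def evolve(fitness, genome_length, pop_size=20, generations=100,
           mutation_sd=0.1, n_elite=2, seed=0):
    """Minimal generational genetic algorithm with elitism.

    Genomes are float lists initialised uniformly in [-1, 1]. Each generation:
    rank by fitness, keep the n_elite best unchanged, and fill the rest with
    mutated recombinations of parents drawn from the better-scoring half.
    Returns (best_genome, best_fitness_per_generation).
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(genome_length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        parents = ranked[: pop_size // 2]
        children = []
        while len(children) < pop_size - n_elite:
            mum, dad = rng.sample(parents, 2)
            child = [rng.choice(pair) for pair in zip(mum, dad)]    # uniform crossover
            child = [g + rng.gauss(0, mutation_sd) for g in child]  # Gaussian mutation
            children.append(child)
        pop = ranked[:n_elite] + children
    best = max(pop, key=fitness)
    return best, history


best, history = evolve(toy_fitness, genome_length=3)
print(history[0], history[-1])
```

Because the best genomes are copied unchanged (elitism), the best fitness per generation can never decrease; the random seed makes runs reproducible.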

In the first simulation experiment (Di Paolo et al., 2008; Rohde, 2010; ch. 6), agents were evolved to locate another identical agent in live interaction in a set-up analogous to that used by Auvray et al. (2009). Circuitry to avoid the shadow image evolved readily. However, it turned out to be much more difficult than anticipated to get controllers to avoid the stationary object. This is because the anti-phase oscillations in which agents interlocked when interacting appeared strikingly similar to the one-sided scanning of a fixed object (see Figure 3): a touch, followed by an inversion of movement direction, followed by another touch, another inversion, and so on. Only subtle differences in the integrated duration of stimulation made this distinction possible at all; the duration of stimulation is shorter in interaction because the two agents pass each other moving in opposite directions (Figure 3B). This result sheds new light on the results of Auvray et al. (2009). In their study, the fixed lure accounts for a third of stimulations (cf. Section “Introduction”), implying that humans spend a considerable amount of time trying to figure out whether the source of stimulation is static or not. The cue ultimately used by the model, i.e., a shorter duration of stimulation when crossing, accounts for 31.3% of human clicks as well. In summary, the evolved circuits generate behavior that qualitatively accounts for every aspect of the human behavior reported in Auvray et al. (2009), demonstrating that simple sensorimotor control circuitry embedded in online interaction is sufficient to explain success in the task, without the need to explicitly process social cues¹. However, it should be noted that another important cue available to humans, i.e., variability in the exact position of the partner (cf. Lenay et al., 2011), is not available to the simulated agents, as the two interacting agents are always identical and there is no sensory or motor noise in the simulations; their interactions are therefore implausibly regular (cf. Figure 3).
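Why a crossing produces shorter stimulation than scanning a fixed object can be seen from elementary kinematics. Assume, as an idealization, that contact lasts while the receptive field is within a contact zone of width w, that each agent moves at constant speed v, and that the two agents cross in opposite directions (v_A = v, v_B = −v), so that their relative speed is 2v:

$$
T_{\text{fixed}} = \frac{w}{v},
\qquad
T_{\text{cross}} = \frac{w}{|v_A - v_B|} = \frac{w}{2v} = \frac{1}{2}\,T_{\text{fixed}}
$$

With unequal speeds the ratio changes, but any crossing in opposite directions still yields a shorter contact than a sweep across a static object at the same speed, since w/(v + u) < w/v for any partner speed u > 0.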

Figure 3. Simulated agents performing perceptual crossing (Di Paolo et al., 2008; Rohde, 2010; ch. 6). (A) An example movement generated in simulated interaction. The two agents (thick lines, gray and black) successively interact, part, engage with the two kinds of distractor objects (thin lines), and eventually find each other and lock in interaction. (B,C) From the observer perspective, interactions with another agent (B) and with the stationary object (C) look very different (top panels). Even so, the sensorimotor plots (sensor activation and motor outputs across time, bottom panels) look strikingly similar. This reveals why discriminating the other agent from the fixed object is difficult for simulated agents.

The difficulties that agents and humans have in avoiding the fixed lure demonstrate the difference that online interaction makes in the study of social interaction and social cognition. Live interaction in the perceptual crossing paradigm may ease the task of avoiding the shadow image, as no stable interaction with it can be established. This runs contrary to our intuition that it should be difficult to distinguish two entities that move in exactly the same way. Conversely, distinguishing another sensing, moving person from a fixed object seems easy when merely thinking of the stimulus properties offline. In interaction, however, it becomes more difficult, as both stay in approximately the same place. Therefore, we have to be sceptical about generalizing findings from offline paradigms in the study of social cognition to live interaction contexts.

The second model (Di Paolo et al., 2008; see also Iizuka and Di Paolo, 2007) anticipated in silico the paradigm that was later put to the empirical test by Iizuka et al. (2009). The task is to distinguish a live interaction with a partner from a recording of an earlier interaction with that same partner (see Section "Further Experiments on Perceptual Crossing"). Agents evolved to solve this problem by an active probing strategy, i.e., they performed step changes of position to test whether the other agent followed them or not. In this scenario, discrimination is up to the individual. Yet the solution is also interactive, as it relies on simple know-how rules for provoking a break-down in one-sided interaction. The evolved controllers do not process the inputs for signs of social contingency; they rely on simple sensorimotor couplings. The turn-taking strategies observed in human participants performing the same task are of a different nature, but the underlying principle is the same: Both humans and robots actively probe the stability of interaction to assess whether they are dealing with another agent or with a recording (Iizuka et al., 2009, 2012a; cf. Section "Further Experiments on Perceptual Crossing").
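The probing strategy can be caricatured as an explicit rule. The sketch below is hypothetical. The evolved agents implemented probing through continuous-time neural dynamics, not through if-statements, and the jump size, waiting time, and partner model are assumptions. The underlying idea is that a live partner, which is itself searching for contact, will follow a sudden step change of position. A replayed trajectory cannot respond to it.

```python
# Hypothetical parameters (arbitrary units), chosen for illustration only.
CONTACT_RANGE = 1.0   # distance below which the two agents count as "in contact"
MAX_STEP = 0.5        # maximum displacement of the live partner per tick


def follow(other_pos: float, target: float) -> float:
    """A live partner steps toward the prober, at most MAX_STEP per tick."""
    delta = target - other_pos
    return other_pos + max(-MAX_STEP, min(MAX_STEP, delta))


def probe_is_live(partner_is_live, recording, jump=5.0, wait=20):
    """Jump away from a contact and report whether contact is regained within `wait` ticks.

    `recording` is a replayed list of partner positions; it is ignored for a live partner.
    """
    me, other = 0.0, 0.0  # start in contact at the same position
    me += jump            # step change of position (the probe)
    for t in range(wait):
        other = follow(other, me) if partner_is_live else recording[t]
        if abs(me - other) < CONTACT_RANGE:
            return True   # the partner followed: treat as live interaction
    return False          # no response to the probe: treat as a recording


recording = [0.3 * (-1) ** t for t in range(20)]  # earlier oscillation around 0
print(probe_is_live(True, recording))   # True
print(probe_is_live(False, recording))  # False
```

The decision is made by an individual. It is only possible, however, because the probe provokes (or fails to provoke) a response in the interaction.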

Froese and Di Paolo (2008, 2010, 2011) replicated and extended these models, providing further analysis of the evolved agents and introducing changes to the paradigm. They showed that the globally attractive properties of the experimental set-up are extremely robust (Froese and Di Paolo, 2008, 2010). Perceptual crossing was established even when the agents were re-wired to receive each other's perceptual inputs. In this scenario, the tactile inputs did not provide any actual cues about the position of any of the entities in the virtual world. Furthermore, they tried to evolve agents to seek interaction with the shadow image. However, the agents always ended up interacting with the other agent, as it is difficult to escape from the coordination that emerges from the mutual search for one another. Finally, a more detailed dynamical analysis showed that the dynamical neural network controllers evolved for perceptual crossing rely on both the internal state of the units and the external relative positioning of the agents. These controllers exploit more subtle properties of the agents' dynamic interaction with one another and with the environment than could be captured by the simple feedback control circuit that Froese and Di Paolo tested as a competing model.

Froese and Di Paolo (2011) additionally evolved agents to solve a variant of the task in which stimulation is limited to a single rectangular input of fixed size upon contact. This variation of the paradigm makes it impossible to distinguish the fixed object from the other merely by integrating the time of stimulation, i.e., the cue used in the first model of the task (Di Paolo et al., 2008; Rohde, 2010; ch. 6). The results confirmed that agents can use cues other than size and velocity to find each other and coordinate.

Martius et al. (2008) modeled the perceptual crossing experiment with simulated agents that were controlled by a homeokinetic controller. Homeokinesis consists of a simultaneous maximisation of sensitivity to changes in inputs and predictability of future sensory inputs. This rule means that an agent's motion should be maximally variable with changing inputs and that behavior in the closed loop should be governed by rules of sensorimotor contingency that the controller can learn. In their variant of the task, the virtual world contained another agent and its shadow image, but no fixed objects. Perceptual crossing emerged from this simple control rule if an additional reward term to seek stimulation was introduced. When tested with either just another agent or a recording of a previous interaction, these agents established perceptual crossing with the other agent but not with the recording. Thus the homeokinetic control rule can explain sensitivity to social contingency in the perceptual crossing paradigm.

Rohde et al. (Rohde, 2010; ch. 7; Rohde and Di Paolo, 2008; Lenay et al., 2011) also modeled the two-dimensional extension of Auvray et al.'s (2009) paradigm described in Section "Further Experiments on Perceptual Crossing." Rohde (2010) compared three agent bodies (a simulated arm, a joystick rooted in Euclidean space, and a wheeled agent) to identify what they had in common and how they differed. In two dimensions, it is not clear to what extent interactive strategies are governed by principles of Euclidean space or of joint space. The different agent bodies make the evolution of one or the other kind of solution more likely. A simulated arm is more likely to operate in joint space, and the joystick agent is more likely to operate in Euclidean space. The small wheeled agent is more likely to use navigational strategies that cannot be transferred to the human. It was found that similar principles governed all solutions. The agents reliably evolved two behavioral sub-modes, one for exploration and one for interaction. Agents that evolved to oscillate around a target were more successful. Oscillation was reliably realized by just one of the two motor outputs, which reacted very fast, while the second motor output modulated behavior on a slower time scale. Yet the geometry and quantitative properties of these solutions varied with different agent bodies. Wheeled agents moved around in circles, joystick agents scanned the two-dimensional world in a grid, and arm agents moved along one of their joint axes. These insights fed back into the analysis of human behavior described in Section "Further Experiments on Perceptual Crossing." The sub-modes of search and oscillatory interaction were observed in humans, too (Lenay et al., 2011). However, only some participants were found to oscillate in a preferred direction, as the simulated arm agents did.

The described body of work on simulation models complements the experimental work with humans using the perceptual crossing paradigm and its variants. The models confirm that the behavior observed in humans can emerge in the absence of any explicit social processing. They have suggested possible underlying mechanisms: Simple feedback rules; small recurrent neural networks exploiting agent-environment interaction (Iizuka and Di Paolo, 2007; Di Paolo et al., 2008; Rohde, 2010; ch. 6 and 7; Froese and Di Paolo, 2011); and homeokinetic forward modeling (Martius et al., 2008). Model predictions were, in turn, tested against empirical data. New variations of the perceptual crossing paradigm were tested in simulation (Iizuka and Di Paolo, 2007; Rohde and Di Paolo, 2008) and were later implemented with humans (Iizuka et al., 2009, 2012a; Lenay et al., 2011). In this sense, the modeling of perceptual crossing also makes an important methodological point. There is an ongoing debate about whether simple agent models can be useful for the study of human or even non-human cognition (e.g., Kirsh, 1991), or whether such modeling should have an explicit target organism and behavior (Webb, 2009). In this debate, the cross-fertilization of behavioral experiments and simulated agent modeling in research on perceptual crossing is a case in point for the scientific benefits of this type of minimal model (cf. Rohde, 2010). Anecdotally, the model by Iizuka and Di Paolo (2007; Di Paolo et al., 2008) that investigates perceptual crossing with a recording was developed independently of the perceptual crossing paradigm, as a mere theoretical exercise. Only later were the parallels to Auvray et al.'s (2009) perceptual crossing paradigm recognized, which eventually led to follow-up experiments with humans (Iizuka et al., 2009, 2012a).

The possibilities for future research are vast, and more and more researchers are picking up on the results and taking them further. For instance, Wilkinson et al. (2011) are working on perceptual crossing of eye movements using the iCub robot platform. This branch of research is potentially relevant for studies on human perceptual crossing of gaze (e.g., Pfeiffer et al., 2011; cf. Section "The Road Ahead").

What Do the Results on Perceptual Crossing Imply?

Owing to the compelling minimalism of the perceptual crossing experiment, its results have become a paradigmatic example in promoting a turn toward more embodied and interactionist approaches in the study of social cognition. In such passionate philosophical debates, it is not always clear where a strict interpretation of the results ends and where a philosophical argument or opinion begins. We therefore discuss the different contexts in which the perceptual crossing experiment, its extensions, and its models have been referred to, and make this line explicit. We start with a number of conclusions that we endorse without reservation. We then focus on two debates that require a more careful evaluation: The discussion of whether perceptual crossing behavior constitutes social cognition, and the question of what perceptual crossing tells us about the experience of affect.

What Can Be Inferred from the Perceptual Crossing Paradigm

In their essence, the results on perceptual crossing presented in Auvray et al. (2009) show that, in dyadic interaction, coordination of behavior can emerge from the interaction partners' mutual search for one another, even in a severely impoverished virtual environment. Furthermore, the study reveals that this emergent coordination produces successful detection of agency. This happens even though, on an individual level (rate of clicks per stimulation), humans cannot discriminate between interacting and non-interacting mobile stimuli. Simulation models of the task (e.g., Di Paolo et al., 2008) demonstrate that this kind of behavior can emerge in online interaction between very simple agents without explicit social reasoning. These results, together with the modifications of the paradigm listed in Section "Extensions and Models of the Paradigm," illustrate the importance of online dynamical interaction in the study of human social interaction and cognition. Perception, as well as decision-making, relies on the active recruitment of the necessary information in interaction. This leads to emergent patterns of interaction behavior (e.g., the stable anti-phase synchronization of perceptual crossing). These patterns change the task in a way that makes comparison with offline paradigms, where stimuli are passively processed, impossible.
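The gap between equal individual click rates and successful collective detection comes down to simple arithmetic. In the sketch below, the per-stimulation click probability and the stimulation counts are hypothetical numbers, not data from Auvray et al. (2009). Suppose a participant clicks with the same probability on every stimulation from a mobile object. Then the share of clicks on the partner equals its share of stimulations, so whatever stabilizes contact with the partner also concentrates clicks on it.

```python
def click_shares(stimulations, click_prob):
    """Expected share of clicks per stimulus when every stimulation is clicked
    with the same probability (i.e., no individual discrimination)."""
    expected = {name: click_prob * n for name, n in stimulations.items()}
    total = sum(expected.values())
    return {name: clicks / total for name, clicks in expected.items()}


# Hypothetical counts: stable mutual coordination yields many more
# stimulations from the partner than from its shadow image.
shares = click_shares({"partner": 300, "shadow": 60}, click_prob=0.1)
print(round(shares["partner"], 3), round(shares["shadow"], 3))  # 0.833 0.167
```

The click probability cancels out of the ratio. The result therefore depends only on the interaction dynamics that determine the stimulation counts.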

There are a number of further interpretations of the results on perceptual crossing that can be endorsed without further reservation:

- As a pioneering study that, though still limited, demonstrates and recognizes the importance of social contingency and/or interactionist approaches (Pereira et al., 2008; De Jaegher, 2009; Cangelosi et al., 2010; De Jaegher et al., 2010; Schilbach, 2010; Gangopadhyay and Schilbach, 2012)

- As a proof that, even in simple environments, embodied and embedded interaction can bring about coordination and/or synchronisation (Cowley, 2008; McGann and De Jaegher, 2009; Niewiadomski et al., 2010; Prepin and Pelachaud, 2011a,b)

- As a demonstration that social coordination can be an autonomous interaction process that cannot be reduced to the sum of the individual intentions or behaviors (e.g., De Jaegher and Di Paolo, 2007; Rohde and Stewart, 2008; Colombetti and Torrance, 2009; De Jaegher and Froese, 2009; Di Paolo et al., 2011; Moran, 2011)

- As a demonstration of a fruitful methodological dialogue between simple agent simulation models and empirical research on sensorimotor behavior (Beer, 2008; Husbands, 2009; Rohde, 2009; Di Paolo et al., 2011; Negrello, 2011)

The above-listed points are primarily derived from the main result of the study that, under certain conditions, coordinated interaction between humans can emerge. However, as will be detailed below, it is more difficult to interpret what, if anything, this finding implies for human social cognition and experience.

Does Perceptual Crossing Constitute Social Cognition?

In a recent paper, De Jaegher et al. (2010) have referred to the perceptual crossing experiment (Auvray et al., 2009) and its model (Di Paolo et al., 2008) as a prime example of how interaction can constitute social cognition, rather than merely playing a contextual or enabling role. They argue that "the variation in the number of clicks is attributable only to the differences in the stability of the coupling and not to individual strategies" (De Jaegher et al., 2010). In their understanding, the distinction between interaction as an enabling factor and as a constitutive factor rests on the fact that participants cannot consciously distinguish between the other and the shadow image. Without self-organized coordination, perceptual discrimination would be at chance level.

This line of argument has been criticized by Herschbach (2012) for a number of reasons. Herschbach argues that the difference between enabling and constitutive factors for social cognition is neither clearly defined nor demonstrated with examples. De Jaegher et al. (2010) refer to the original perceptual crossing study (Auvray et al., 2009) and its model (Di Paolo et al., 2008, first model) as an example where interaction constitutes social cognition. They then refer to Iizuka et al.'s (2009, 2012a) study on interaction with a recording as an example where interaction merely enables social cognition but does not constitute it. In the corresponding model (Di Paolo et al., 2008, second model; see also Iizuka and Di Paolo, 2007), the agents scan the recording but eventually disengage, whereas they remain in indefinite interaction with another agent. Herschbach is puzzled by the nature of this divide and argues that as "the same kind of explanation in terms of the collective dynamics of the social interaction is given in both cases, it is unclear why the language of constitution is only applied to one but not the other" (Herschbach, 2012).

We remain agnostic as to whether or not perceptual crossing constitutes social cognition. The truth of such a statement depends on the definition of social cognition that is adopted and is thus open to interpretation. That said, we would like to point out, in response to Herschbach's (2012) criticism, that there is an important difference between the two models presented in Di Paolo et al. (2008). These respectively model Auvray et al.'s (2009) perceptual crossing experiment and its variant studied by Iizuka et al. (2009, 2012a). The original perceptual crossing experiment (Auvray et al., 2009) is deliberately designed so that interaction with the shadow image is inherently unstable. In Iizuka et al.'s (2009, 2012a) experiment, by contrast, the task and environment afford the possibility of a one-sided coordination with a recording. Indeed, Iizuka et al. (2009, 2012a) report that only 4 out of 10 human couples were able to distinguish recordings from live interactions. All of the participants had to develop turn-taking strategies in order to assess, individually, whether the interaction was live. What matters most for solving the task in Iizuka et al.'s (2009, 2012a) experiment is therefore an individual agent's capacity to modulate coupling so as to engage or disengage. What matters in Auvray et al.'s (2009) experiment is the environment and the mutual search behavior; live interaction and the solution to the task then emerge automatically. This difference may be the reason why De Jaegher et al. (2010) see interaction as merely enabling in Iizuka et al.'s (2009, 2012a) study and as constitutive in the original study (Auvray et al., 2009).

Another line of criticism has been voiced by Michael (2011; Michael and Overgaard, 2012). He argues that, in a hypothetical variant of Auvray et al.'s (2009) experiment, external events could be used to orchestrate a participant's behavior so as to bias how frequently a mobile object or the partner is encountered. In his example, the participants would receive electric shocks whenever they moved away from a certain zone of proximity with the partner. The same main result would then be observed. He argues that if perceptual crossing is an example of social cognition, this hypothetical shock-based variant would also have to be seen as an example of social cognition. His second argument is that at least the detection of animacy is to be attributed to individual participants, as the relative click rate for the fixed object differs from that for the shadow image and the other. Michael feels that this fact is under-appreciated in the interactionist explanation of the paradigm. His third argument is that it is unclear whether the perceptual crossing paradigm is a good example of social cognition in general. If it is not, one should be careful about generalizing from the results.

All three of these critical points can be addressed by pointing out that Michael's (2011; Michael and Overgaard, 2012) idea of social cognition appears to differ fundamentally from De Jaegher et al.'s (2010). This is best illustrated by juxtaposing Michael and Overgaard's (2012) statement that

“for the interaction to constitute social cognition in this sense in the experiment, it would presumably have to constitute the processes by which social judgments are formed” (Michael and Overgaard, 2012)

to De Jaegher and Di Paolo's (2007) notion of “participatory sense-making” in an enactive theory of social cognition. Such a theory

“would be concerned with defining the social in terms of the embodiment of interaction, in terms of shifting and emerging levels of autonomous identity, and in terms of joint sense-making and its experience” (De Jaegher and Di Paolo, 2007).

The former approach places emphasis on what makes an individual perform a click, whereas the latter places emphasis on the interaction process. The former perspective sees no difference between externally orchestrated perceptual crossing and emergence of coordination; for the latter, the emergent coordination process and the mechanism underlying it make all the difference. The former seeks to emphasize what is left of individual strategies in the experiment, whereas the latter seeks to emphasize the importance of emergent processes in interaction. The former attempts to isolate the perceptual crossing experiment as an odd case and establish differences with other examples of social cognition. The latter strives to integrate it and seek commonalities or similar processes in other domains of social cognition.

Coincidentally, this very debate was anticipated as a hypothetical debate in Rohde and Stewart (2008). At stake is how one's choice of theoretical paradigm determines whether the perceptual crossing experiment is perceived to "count" as an example of social cognition. The authors suggest that internalists, who believe that (social) cognition is essentially down to what happens inside the brain of an individual, will consider the experiment "cheating." Michael (2011) notes several times that the emergence of coordination is an obvious consequence of participants using the same strategy. He treats this as a shortcoming of the experiment rather than a deliberate feature. He also states that emergent coordination is fully explained by the individuals' search strategy. This line of argument neglects the role of the virtual environment in producing these results: manipulations of the environment could destroy participants' tendency to synchronize in perceptual crossing. For instance, Ware (2011) showed how the introduction of a delay in an interaction environment can lead to the break-down of otherwise stable coordination. These statements reveal strong internalist premises underlying Michael's (2011) argument. This in turn means that the disagreement with De Jaegher et al.'s (2010) interactionist perspective is much more fundamental than a disagreement about the exact significance of the perceptual crossing paradigm.

Rohde and Stewart (2008) conclude without a recommendation for a paradigm or perspective. They suggest instead that the debate about which explanation is most useful, or suits our intuitions best, should be informed by the scientific study of the underlying mechanisms. We second this recommendation, and want to stress that both the study of the mechanisms of coordination and of individual judgment deserve attention. The mechanisms of coordination matter because external orchestration by electric shocks is not the same thing as self-organized coordination. It in no way resembles the process of catching someone's eye in passing, which, similar to the perceptual crossing experiment, is a result of two people meeting in active perceptual pursuit. However, the individual strategies and how they are modulated by interaction should not be swept under the carpet, as they will both influence interaction and be influenced by interaction.

The reviewed research shows how both questions can be addressed as part of a minimalist and interactionist research agenda. The experiment by Auvray et al. (2009) focuses on the necessary ingredients for the emergence of coordination. It is complemented by Iizuka et al.'s (2009, 2012a) experiment, which focuses on what an individual can do to modulate interaction and improve discriminability. This complementary approach can serve as an example of how to approach problems of social cognition in general. For any particular example of social cognition, one perspective or the other may be more appropriate. Even so, only an alternation and combination of the two perspectives will allow us to tell the full story, whether or not coordinated interaction is seen as constitutive for social cognition.

Perceptual Crossing and Emotion

Lenay (2010) recently mentioned the perceptual crossing study in the context of proposing a theory of emotion. Drawing on a number of different sources, including phenomenology, psychology, and even literature, Lenay seeks to determine what is necessary in order to experience an encounter with another person as emotional and touching. He argues that perceptual crossing is necessary for this emotional experience to occur, and even for experiencing oneself or another as a subject in the first place. A further requirement is to be ignorant of how one's sensors and motors are linked up, which one learns in interaction with the other. In his article, the kind of self-organized dynamics of coordination that occur in the perceptual crossing experiment serve as a proof of concept, illustrating the kind of processes he refers to.

It can be anecdotally reported that participants in the perceptual crossing experiment tend to enjoy the interaction with one another. Declerck et al. (2009) describe this as follows: "this situation […] is immediately richer in an emotional sense and users spontaneously engage in pursuit games or 'dialogues' that take the form of little dances in the shared numerical space." (Declerck et al., 2009, our translation). In the present context of clarifying the exact significance of the results on perceptual crossing reported by Auvray et al. (2009), we want to stress that these observations are, to date, anecdotal. The main result neither supports nor contradicts this hypothesis. This remark should not be seen as a reservation against the theory proposed. Studies on sensory substitution give reason to believe that sharing the same perceptual prosthesis can be used to co-constitute and share an experience of value (e.g., Lenay et al., 2003; Auvray and Myin, 2009; Declerck et al., 2009; Bird, 2011). However, more targeted research will be necessary to understand the possible role of social interaction and communication in the affective experience of perceptual crossing. Lenay et al. have started research in this direction (see Deschamps et al., 2012, for a short description).

The Road Ahead

We have learned a lot from studies using the perceptual crossing paradigm already, and we expect to learn much more in the future, given the numerous ongoing lines of research that build upon it (cf. Section “What do the Results on Perceptual Crossing Imply?”). There are still many open issues in trying to understand even the most fundamental questions of how we perceive, interact with, and understand each other. What the perceptual crossing paradigm has shown is that there can be coordination between humans, a self-organized coupling of mutual perceptual exploration, that occurs without an explicit recognition process, as humans are equally likely to click when meeting the other and when meeting their shadow. The perceptual crossing paradigm is the simplest paradigm we know that generates such online coordination. Computational models have shown that simple neural network controllers can explain these results if agents are coupled online. They exploit the dynamic stability conditions of situated and embodied interaction, rather than passively parsing “social stimuli.” The simplicity of the paradigm, as well as the robustness of the results, make a strong case that similar processes of self-organized coordination between humans should be abundant in real-life interaction scenarios. Therefore, the natural way to implement a system capable of social interaction and social cognition would be to teach it to work with and modulate the natural occurrence of coordination. The assumption that this is what humans do is central in the interactionist turn.

One major open question for interactionist research on social cognition is the study of how the underlying processes are neurally implemented. There is a growing body of research investigating the neural correlates of social cognition and online interaction (e.g., Kampe et al., 2003; Amodio and Frith, 2006; Schilbach et al., 2006, 2010; Fujii et al., 2008, 2009). For instance, Schilbach et al.'s (2010) study highlighted the neural activities that are specifically involved in the sharing of a perceptual experience with a virtual character. In future research, the minimalism of the perceptual crossing paradigm will be a key advantage for neuro-imaging, given that the investigated processes can be very carefully controlled and minimal changes in action-perception can be applied that alter the engagement and perception of agency in a participant.

In the perceptual crossing experiment, there is no conscious distinction between the other and her shadow image, as the equal click rates per stimulation for the other and the shadow reveal. This makes it possible to use the perceptual crossing paradigm to approach the question of social cognition the other way around. The usual approach starts from an individual's perception of social interaction. Perceptual crossing experiments instead start from a stable interaction without conscious recognition. From there, it can be asked what has to be added in order to obtain perception of agency at an individual level. Several routes can be pursued in this endeavor.

Lenay et al.'s (Lenay and Stewart, 2012; cf. Section "Extensions and Models of the Paradigm") results on the classification of encounters marked by different sounds suggest that, in variants of the perceptual crossing experiment, there could be some level of individual discrimination capacity. More fine-grained measures of participants' conscious recognition capacity could be obtained, for instance, by measuring confidence ratings after each click (e.g., Dienes, 2004) or by asking for forced-choice judgments after each encounter. Individual discrimination capacities could thereby be studied parametrically, and different facets of consciously perceived agency could be captured.

Another approach would be to change the stability conditions of the task in order to increase task difficulty and demand discriminative actions from the participant. The research performed by Iizuka et al. (2009, 2012a), which requires participants to distinguish between recordings and live interactions, can be seen as a venture in this direction. As participants can get trapped in one-sided coordination, the task requires them to learn probing and turn-taking strategies over several trials. These strategies modulate the dynamics of perceptual crossing and generate a basis for individual discrimination.

There is a third route to pursue, one so obvious that it is surprising that research in this direction is only just starting. By combining the research strand of behavioral experiments with humans (Section "Further Experiments on Perceptual Crossing") with the strand of computational modeling (Section "Robot Simulation Models"), one can pair humans with machines and study their interaction. This makes it possible to control exactly the kind of dynamics that a participant can engage in and how they influence her clicking behavior. This amounts to a minimal "Turing test" (Turing, 1950). The concept of a "Turing test" goes back to the computing pioneer Alan Turing. He proposed having a human converse with a computer through a text-only interface and using the human's judgment (is it a machine or another human?) as an empirical test for artificial intelligence. Using the same approach in the perceptual crossing paradigm will allow testing experimentally what it is about an animated and responsive stimulus that makes a human perceive a source of stimulation as another intentional entity. It will also reveal what allows stable interaction.

Lenay's group have recently started testing humans against simulated agents with different control strategies (cf. Deschamps et al., 2012). They found an overall difficulty in distinguishing artificial agents from human partners. They also found a seemingly paradoxical trend to rate agents with simple control strategies as more human than those with more sophisticated control strategies. A similar line of research, using a very different paradigm, was explored by Pfeiffer et al. (2011). This experiment used a social gaze paradigm in which participants' eye movements were recorded so that an anthropomorphic virtual character could respond in real time to the participants' fixations (see also Schilbach et al., 2010; Wilms et al., 2010). Participants had to determine whether a virtual character's gaze behavior was controlled by another person or by a computer program. They were instructed to assume a cooperative, a competitive, or a naïve strategy on the part of the potential interaction partner. The study found that the attribution of intentionality is influenced by the presumed dispositions (i.e., naïve, cooperative, or competitive) of the interacting entity as well as by the contingency of interaction. These studies already demonstrate the advantages of being able to control the interactive properties of one interaction partner in order to observe how this influences the behavior and perception of the other.

The perceptual crossing paradigm is but one example of an ongoing paradigm shift in social cognition. Researchers are starting to pay attention to the emergent dynamics of live interaction, which have thus far been neglected. This interactionist turn can, in turn, be seen as part of a more general trend in cognitive science to take seriously embodied interaction with an environment and the emergent properties of situated sensorimotor behavior. These new approaches are characterized by the use of dynamical systems theory as a tool to describe the properties of systems behaving in a closed sensorimotor loop, and by close attention to the influence that the body and the environment have on behavior and cognition. The price of such a more encompassing view is that researchers face systems of remarkable complexity and quickly run into the limits of current mathematical tools. This is why simple paradigms such as perceptual crossing are important: by restricting behavior to a minimal virtual environment, they scale the complexity of the behavior to be explained down to manageable dimensions. The perceptual crossing results demonstrate the power and importance of online interactions in an intelligible way. The variables measured in perceptual crossing (e.g., stability conditions, amount of turn-taking, rules of sensorimotor contingency, and inter-participant correlation of behavior) may be very different from those traditionally used in social cognition research. For some of these measures, it may at first be unclear how they relate to existing individual-level variables, such as perceptual judgments and inferential capacities. Yet they demonstrate on a small scale how an interactionist explanation can unfold. We are curious to see what a future, gradual enrichment of this simplest of online paradigms will reveal.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Ezequiel Di Paolo and Jess Hartcher-O'Brien for their comments on a previous version of this manuscript. Marieke Rohde is funded by the DFG grant ELAPS—Embodied Latency Adaptation and the Perception of Simultaneity.

Footnotes

- ^An even earlier simulation model of the task was implemented by Stewart and Lenay but never published. These agents are controlled by a hand-designed feedback circuit that inverts movement direction when stimulated, and they are subject to inertial forces. The control law and behavior are akin to those evolved in the Evolutionary Robotics simulations.

References

Amodio, D. M., and Frith, C. D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 7, 268–277.

Auvray, M., Lenay, C., and Stewart, J. (2009). Perceptual interactions in a minimalist environment. New Ideas Psychol. 27, 79–97.

Auvray, M., and Myin, E. (2009). Perception with compensatory devices. From sensory substitution to sensorimotor extension. Cogn. Sci. 33, 1036–1058.

Beer, R. D. (2008). “The dynamics of brain-body-environment systems: a status report,” in Handbook of Cognitive Science: An Embodied Approach, eds P. Calvo and A. Gomila (Amsterdam, Boston, London: Elsevier Science), 99–120.

Bird, J. (2011). The phenomenal challenge of designing transparent technologies. Interactions 18, 20–23.

Brown, J., Aczél, B., Jiménez, L., Kaufman, S. B., and Plaisted-Grant, K. (2010). Intact implicit learning in autism spectrum conditions. Q. J. Exp. Psychol. 63, 1789–1812.

Cangelosi, A., Metta, G., Sagerer, G., Nolfi, S., Nehaniv, C., Fischer, K., Tani, J., Belpaeme, T., Sandini, G., Nori, F., Fadiga, L., Wrede, B., Rohlfing, K., Tuci, E., Dautenhahn, K., Saunders, J., and Zeschel, A. (2010). Integration of action and language knowledge: a roadmap for developmental robotics. IEEE Trans. Auton. Ment. Dev. 2, 167–195.

Colombetti, G., and Torrance, S. (2009). Emotion and ethics: an inter-(en)active approach. Phenomenol. Cogn. Sci. 8, 505–526.

Cowley, S. J. (2008). “Social robotics and the person problem,” in AISB 2008 Convention: Communication, Interaction and Social Intelligence, (Aberdeen: The Society for the Study of Artificial Intelligence and Simulation of Behaviour), 28–34.

De Jaegher, H. (2009). Social understanding through direct perception? Yes, by interacting. Conscious. Cogn. 18, 535–542.

De Jaegher, H., Di Paolo, E., and Gallagher, S. (2010). Can social interaction constitute social cognition? Trends Cogn. Sci. 14, 441–447.

De Jaegher, H., and Froese, T. (2009). On the role of social interaction in individual agency. Adapt. Behav. 17, 444–460.

De Jaegher, H., and Di Paolo, E. (2007). Participatory sense-making: an enactive approach to social cognition. Phenomenol. Cogn. Sci. 6, 485–507.

Declerck, G., Lenay, C., and Khatchatourov, A. (2009). Rendre tangible le visible. IRBM (Ingénierie et Recherche BioMédicale) 30, 252–257. Special issue “Technologies pour l'autonomie.”

Deschamps, L., Le Bihan, G., Lenay, C., Rovira, K., Stewart, J., and Aubert, D. (2012). “Interpersonal recognition through mediated tactile interaction,” in Proceedings of IEEE Haptics Symposium (Vancouver, BC, Canada), March 4–7.

Dienes, Z. (2004). Assumptions of subjective measures of unconscious mental states: high order thoughts and bias. J. Conscious. Stud. 11, 25–45.

Di Paolo, E., Rohde, M., and De Jaegher, H. (2011). “Horizons for the enactive mind: values, social interaction, and play,” in Enaction: Towards a New Paradigm for Cognitive Science, eds J. Stewart, O. Gapenne, and E. Di Paolo (Cambridge, MA: MIT Press), 33–87.

Di Paolo, E., Rohde, M., and Iizuka, H. (2008). Sensitivity to social contingency or stability of interaction? Modelling the dynamics of perceptual crossing. New Ideas Psychol. 26, 278–294.

Froese, T., and Di Paolo, E. (2008). “Stability of coordination requires mutuality of interaction in a model of embodied agents,” in From Animals to Animats 10: The 10th International Conference on the Simulation of Adaptive Behavior, (Osaka), 52–61.

Froese, T., and Di Paolo, E. (2010). Modeling social interaction as perceptual crossing: an investigation into the dynamics of the interaction process. Connect. Sci. 22, 43–68.

Froese, T., and Di Paolo, E. (2011). “Toward minimally social behavior: social psychology meets evolutionary robotics,” in Advances in Artificial Life: Proceedings of the 10th European Conference on Artificial Life, eds G. Kampis, I. Karsai, and E. Szathmary (Berlin, Germany: Springer Verlag), 426–433.

Fujii, N., Hihara, S., and Iriki, A. (2008). Social cognition in premotor and parietal cortex. Soc. Neurosci. 3, 250–260.

Fujii, N., Hihara, S., Nagasaka, Y., and Iriki, A. (2009). Social state representation in prefrontal cortex. Soc. Neurosci. 4, 73–84.

Gangopadhyay, N., and Schilbach, L. (2012). Seeing minds: a neurophilosophical investigation of the role of perception-action coupling in social perception. Soc. Neurosci. 7, 410–423.

Herschbach, M. (2012). On the role of social interaction in social cognition: a mechanistic alternative to Enactivism. Phenomenol. Cogn. Sci. doi: 10.1007/s11097-011-9209-z. [Epub ahead of print].

Husbands, P. (2009). Never mind the iguana, what about the tortoise? Models in adaptive behavior. Adapt. Behav. 17, 320–324.

Iizuka, H., Ando, H., and Maeda, T. (2009). “The anticipation of human behavior using ‘Parasitic Humanoid’,” in Human-Computer Interaction. Ambient, Ubiquitous and Intelligent Interaction, ed J. Jacko (Berlin, Heidelberg: Springer-Verlag), 284–293.

Iizuka, H., and Di Paolo, E. (2007). “Minimal agency detection of embodied agents,” in Proceedings of the 9th European Conference on Artificial Life ECAL 2007, LNAI 4648, (Berlin, Heidelberg: Springer-Verlag), 485–494.

Iizuka, H., Ando, H., and Maeda, T. (2012a). “Emergence of communication and turn-taking behavior in nonverbal interaction (in Japanese),” IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences J95-A, 165–174.

Iizuka, H., Marocco, D., Ando, H., and Maeda, T. (2012b). Experimental study on co-evolution of categorical perception and communication systems in humans. Psychol. Res. doi: 10.1007/s00426-012-0420-5. [Epub ahead of print].

Kampe, K. K. W., Frith, C. D., and Frith, U. (2003). “Hey John”: signals conveying communicative intention toward the self activate brain regions associated with mentalizing, regardless of modality. J. Neurosci. 23, 5258–5263.

Lenay, C., Gapenne, O., Hanneton, S., Genouëlle, C., and Marque, C. (2003). “Sensory substitution: limits and perspectives,” in Touching for Knowing, eds Y. Hatwell, A. Streri, and E. Gentaz (Amsterdam: John Benjamins), 275–292.

Lenay, C., and Stewart, J. (2012). Minimalist approach to perceptual interactions. Front. Hum. Neurosci. 6:98. doi: 10.3389/fnhum.2012.00098

Lenay, C., Stewart, J., Rohde, M., and Ali Amar, A. (2011). You never fail to surprise me: the hallmark of the other. Experimental study and simulations of perceptual crossing. Interact. Stud. 12, 373–396.

Martius, G., Nolfi, S., and Herrmann, J. M. (2008). “Emergence of interaction among adaptive agents,” in From Animals to Animats 10: The 10th International Conference on the Simulation of Adaptive Behavior, (Osaka: Springer), 457–466.

McGann, M., and De Jaegher, H. (2009). Self-other contingencies: enacting social perception. Phenomenol. Cogn. Sci. 8, 417–437.

McIntosh, D. N., Reichmann-Decker, A., Winkielman, P., and Wilbarger, J. L. (2006). When the social mirror breaks: deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev. Sci. 9, 295–302.

Michael, J., and Overgaard, S. (2012). Interaction and social cognition: a commentary on Auvray et al.'s perceptual crossing paradigm. New Ideas Psychol. 30, 296–299.

Moran, N. (2011). Music, bodies and relationships: an ethnographic contribution to embodied cognition studies. Psychol. Music. doi: 10.1177/0305735611400174. [Epub ahead of print].

Murray, L., and Trevarthen, C. (1985). “Emotional regulations of interactions between two-month-olds and their mothers,” in Social Perception in Infants, eds T. M. Field and N. A. Fox (Norwood: Ablex), 177–197.

Negrello, M. (2011). “Invariants of behavior: constancy and variability in neural systems,” in Cognitive and Neural Systems Series, ed V. Cutsuridis (USA: Springer).

Niewiadomski, R., Prepin, K., Bevacqua, E., Ochs, M., and Pelachaud, C. (2010). “Towards a smiling ECA: studies on mimicry, timing and types of smiles,” in Proceedings of the 2nd International Workshop on Social Signal Processing, (New York, NY: ACM), 65–70.

Pereira, A. F., Smith, L. B., and Yu, C. (2008). Social coordination in toddler's word learning: interacting systems of perception and action. Connect. Sci. 20, 73–89.

Pfeiffer, U., Timmermans, B., Bente, G., Vogeley, K., and Schilbach, L. (2011). The non-verbal Turing test: differentiating mind from machine in gaze-based social interaction. PLoS ONE 6:e27591. doi: 10.1371/journal.pone.0027591

Prepin, K., and Pelachaud, C. (2011a). “Effect of time delays on agents' interaction dynamics,” in 10th International Conference on Autonomous Agents and Multiagent Systems AAMAS 2011, (Taipei), 1055–1062.

Prepin, K., and Pelachaud, C. (2011b). “Shared understanding and synchrony emergence: synchrony as an indice of the exchange of meaning between dialog partners,” in ICAART2011 International Conference on Agent and Artificial Intelligence, (Rome), 25–34.

Rohde, M. (2010). Enaction, Embodiment, Evolutionary Robotics. Simulation Models in the Study of Human Cognition, Series: Thinking Machines, Vol. 1, Amsterdam, Beijing, Paris: Atlantis Press.

Rohde, M., and Di Paolo, E. (2008). “Embodiment and perceptual crossing in 2D: a comparative evolutionary robotics study,” in Proceedings of the 10th International Conference on the Simulation of Adaptive Behavior SAB 2008, (Berlin, Heidelberg: Springer-Verlag), 83–92.

Rohde, M., and Stewart, J. (2008). Ascriptional and ‘genuine’ autonomy. BioSystems 91, 424–433; Special issue on modelling autonomy.

Schilbach, L., Eickhoff, S. B., Cieslik, E., Shah, N. J., Fink, G. R., and Vogeley, K. (2010). Eyes on me: an fMRI study of the effects of social gaze on action control. Soc. Cogn. Affect. Neurosci. 6, 393–403.

Schilbach, L., Wohlschlaeger, A. M., Kraemer, N. C., Newen, A., Shah, N. J., Fink, G. R., and Vogeley, K. (2006). Being with virtual others: neural correlates of social interaction. Neuropsychologia 44, 718–730.

Sebanz, N., Knoblich, G., Stumpf, L., and Prinz, W. (2005). Far from action-blind: representation of others' actions in individuals with autism. Cogn. Neuropsychol. 22, 433–454.

Sribunruangrit, N., Marque, C., Lenay, C., Gapenne, O., and Vanhoutte, C. (2004). Speed-accuracy tradeoff during performance of a tracking task without visual feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 12, 131–139.

Stewart, J., and Gapenne, O. (2003). Reciprocal modelling of active perception of 2-D forms in a simple tactile-vision substitution system. Minds Mach. 14, 309–330.

Timmermans, B., Schilbach, L., and Vogeley, K. (2011). “Can you feel me: awareness to dyadic interaction in high functioning autism,” in ESCOP 2011 (San Sebastián, Spain), 29 September–2 October.

Ware, E. (2011). Interactive Behaviour in Pigeons: Visual Display Interactions as a Model for Visual Communication, PhD thesis, Centre for Neuroscience Studies, Queen's University, Kingston, ON.

Webb, B. (2009). Animals versus animats: or why not model the real iguana? Adapt. Behav. 17, 269–286.

Wilkinson, N., Metta, G., and Gredebäck, G. (2011). “Inter-facial relations: Binocular geometry when eyes meet,” in Proceedings of ICMC International Conference on Morphology and Computation, (Venice), 70–72.

Keywords: social cognition, online interaction, perceptual crossing, coordination

Citation: Auvray M and Rohde M (2012) Perceptual crossing: the simplest online paradigm. Front. Hum. Neurosci. 6:181. doi: 10.3389/fnhum.2012.00181

Received: 27 February 2012; Accepted: 01 June 2012;

Published online: 19 June 2012.

Edited by:

Bert Timmermans, University Hospital Cologne, Germany

Reviewed by:

Dimitrios Kourtis, Radboud University Nijmegen, Netherlands

Hanne De Jaegher, University of the Basque Country, Spain