Analytical approximations of the firing rate of an adaptive exponential integrate-and-fire neuron in the presence of synaptic noise

- 1Department Theoretical Neuroscience, Bernstein-Center for Computational Neuroscience, Central Institute of Mental Health, Medical Faculty Mannheim/Heidelberg University, Mannheim, Germany

- 2Faculty of Science and Environment, School of Computing and Mathematics, Plymouth University, Plymouth, UK

- 3Departments of Statistics and Neurobiology, University of Chicago, Chicago, IL, USA

Computational models offer a unique tool for understanding the network-dynamical mechanisms which mediate between physiological and biophysical properties, and behavioral function. A traditional challenge in computational neuroscience is, however, that simple neuronal models which can be studied analytically fail to reproduce the diversity of electrophysiological behaviors seen in real neurons, while detailed neuronal models which do reproduce such diversity are intractable analytically and computationally expensive. A number of intermediate models have been proposed whose aim is to capture the diversity of firing behaviors and spike times of real neurons while entailing the simplest possible mathematical description. One such model is the exponential integrate-and-fire neuron with spike rate adaptation (aEIF) which consists of two differential equations for the membrane potential (V) and an adaptation current (w). Despite its simplicity, it can reproduce a wide variety of physiologically observed spiking patterns, can be fit to physiological recordings quantitatively, and, once done so, is able to predict spike times on traces not used for model fitting. Here we compute the steady-state firing rate of aEIF in the presence of Gaussian synaptic noise, using two approaches. The first approach is based on the 2-dimensional Fokker-Planck equation that describes the (V,w)-probability distribution, which is solved using an expansion in the ratio between the time constants of the two variables. The second is based on the firing rate of the EIF model, which is averaged over the distribution of the w variable. These analytically derived closed-form expressions were tested on simulations from a large variety of model cells quantitatively fitted to in vitro electrophysiological recordings from pyramidal cells and interneurons. Theoretical predictions closely agreed with the firing rate of the simulated cells fed with in-vivo-like synaptic noise.

1. Introduction

In recent years there has been an increasing push toward neurobiologically highly realistic large-scale network models that incorporate a lot of anatomical and physiological detail (Traub et al., 1988; Whittington et al., 2000; Traub et al., 2005; Markram, 2006; Izhikevich and Edelman, 2008; Lansner, 2009; Lundqvist et al., 2010). One reason for this push is the growing interest in how various physiological parameters affect network dynamics, in particular in connection with pharmacologically and psychiatrically relevant questions (Markram, 2006, 2012; Kandel et al., 2013). Neural network dynamics has been identified as a crucial link between more basic genetic, molecular, and physiological factors on the one hand, and cognitive function and behavior on the other (e.g., Balaguer-Ballester et al., 2011; Mante et al., 2013, and citations therein), and has been described as a point of convergence for various pathophysiological and psychiatric mechanisms (Durstewitz and Seamans, 2012; Mitchell et al., 2012). A detailed understanding of physiological network dynamics is thus hoped to offer new insights into both how, mechanistically speaking, the healthy brain performs computations and produces behavior, and what exactly in functional and dynamical terms goes astray in psychiatric conditions. However, neurobiologically detailed models reach computational and mathematical limits very fast: Their large number of parameters, stiff non-linearities, and very high dimensionality make fitting to physiological data a very tedious ad-hoc process, numerical simulations very time-consuming, and prevent a deep understanding of the underlying dynamical mechanisms.

Partly for these reasons, mean-field theories (MFT) have been developed for networks of simpler single cell models such as the leaky integrate-and-fire neuron (LIF) (Abbott and van Vreeswijk, 1993; Amit and Brunel, 1997a,b; Brunel and Hakim, 1999; Fusi and Mattia, 1999; Brunel, 2000a,b; Brunel and Wang, 2001; Mattia and Del Giudice, 2002; Del Giudice et al., 2003; Renart et al., 2003, 2006; Brunel and Hansel, 2006). MFTs allow for a self-consistent mathematical description of the population dynamics on the basis of the probability density function for the membrane potential and/or other system variables (Tuckwell, 1988; Risken, 1989). Thanks to these methods, macroscopic quantities like firing rates can be derived, and various network states (e.g., asynchronous or synchronous states), together with bifurcations between these states, can be analyzed (Brunel and Hakim, 1999; Brunel, 2000a; Brunel and Hansel, 2006; Ledoux and Brunel, 2011). Furthermore, they provide a tool for systematically linking network dynamical phenomena to single cell and synaptic parameters on the one hand side, and to cognitive and behavioral observations on the other. A crucial ingredient of such MFTs is the static neuronal transfer function—how the firing rate of a single neuron depends on its mean inputs, in the presence of Gaussian noise. Such a relationship can be computed exactly for one variable such as the LIF (Siegert, 1951; Ricciardi, 1977; Amit and Tsodyks, 1991a), the exponential integrate-and-fire neuron (EIF) (Fourcaud–Trocmé et al., 2003) and the quadratic LIF (Brunel and Latham, 2003) models. Perturbative approaches have also been developed for two dimensional models, such as the LIF with colored noise (Brunel and Sergi, 1998; Moreno et al., 2002) or generalized integrate-and-fire neurons (Brunel et al., 2003).

Recent years have seen a major effort to develop very simple yet physiologically realistic single neuron models, in the sense that these can exhibit almost the full breadth of spiking patterns and bifurcations observed in real cells (Izhikevich, 2007; Naud et al., 2008; Durstewitz, 2009), and can be closely fitted in order to reproduce subthreshold voltage and spiking behavior of their empirical counterparts (Brette and Gerstner, 2005; Jolivet et al., 2006; Clopath et al., 2007; Badel et al., 2008a,b; Jolivet et al., 2008; Naud et al., 2008; Gerstner and Naud, 2009; Hertäg et al., 2012). One class of such models is the exponential integrate-and-fire neuron (Fourcaud–Trocmé et al., 2003) supplemented with an adaptation variable (AdEx, Brette and Gerstner, 2005) or a dynamic threshold variable leading to refractoriness (rEIF, Badel et al., 2008b), for which automatized, predictive and fast fitting procedures have been developed (Badel et al., 2008a,b; Hertäg et al., 2012), and which can exhibit a rich diversity of spiking dynamics due to the second variable representing spike-triggered and/or voltage-dependent adaptation (Naud et al., 2008; Touboul and Brette, 2008). Since adaptation is an almost universal feature of neuronal cells in diverse neural systems throughout the animal kingdom (Fuhrmann et al., 2002; O'Brien et al., 2002), and in cortical pyramidal neurons in particular (McCormick et al., 1985), its inclusion in spiking neuron models was an important step in reproducing a large variety of experimentally observed cell behaviors (see e.g., Izhikevich, 2007; Naud et al., 2008; Touboul and Brette, 2008; Augustin et al., 2013). Although these simple models contain a single adaptation time constant, they can often reproduce the spike adaptation behavior observed empirically (Benda and Herz, 2003; La Camera et al., 2004). While several groups have attempted to derive the firing rate of LIF-type models with an adaptation variable (La Camera et al., 2004; Gigante et al., 2007; Muller et al., 2007; Farkhooi et al., 2011), it is still unclear whether these approaches can be generalized successfully to the aEIF model, especially for parameter sets that fit electrophysiological data. Accurate analytical approximations to the firing rate of the aEIF model in such conditions would be highly desirable.

Here, we derive two approximations for the steady-state firing rate of the aEIF; first, by solving perturbatively the full 2-dimensional Fokker-Planck equation describing the aEIF with noisy inputs in the long adaptation time constant limit, and, second, by combining the 1-dimensional Fokker-Planck solution of the EIF model with distributional assumptions for the adaptation current. The theoretical ν-I (firing rate as a function of mean input current) curves are compared to single neuron simulations for a large number of parameter settings derived from in-vitro electrophysiological recordings of rodent prefrontal cortex pyramidal cells and interneurons probed with a wide range of inputs (see Hertäg et al., 2012). Furthermore, we investigated the influence of synaptic filtering, i.e., including realistic synaptic kinetics, through simple extensions of the Fokker-Planck formalism. These analytical expressions should provide an essential building block for the theoretical analysis of large networks composed of physiologically realistic elements, and thus improved tools for a mechanistic understanding of the relations between physiologically recorded network activity and behavior.

2. Results

In the following, we first derive the 2-dimensional Fokker-Planck equation for the EIF model with spike-triggered adaptation. This is a special case of the AdEx model, a simple 2-dimensional neuron model which combines the exponential integrate-and-fire (EIF) neuron (Fourcaud–Trocmé et al., 2003) with a second differential equation for an adaptation current (Benda and Herz, 2003; Izhikevich, 2003; Brette and Gerstner, 2005; Naud et al., 2008; Hertäg et al., 2012). The membrane potential V and the adaptation current w evolve according to the system of differential equations

Parameters C, gL, and EL correspond to the capacitance, the leak conductance and the leak reversal potential of a neuron. The membrane time constant is given by τm = C/gL. As the membrane potential approaches the threshold VT, the exponential term causes a very rapid increase of voltage, modeling the fast voltage-dependent upswing of an action potential. Parameter ΔT regulates the rise-time of the action potential. The downswing of a spike is replaced by a reset condition: When the upper threshold Vup is reached (which formally could be set to infinity), the membrane potential is reset to value Vr, and the adaptation current is increased by a fixed step b, reflecting spike-triggered adaptation. In addition to spike-triggered adaptation, the AdEx model also represents subthreshold adaptation by the parameter a. For the sake of simplicity, we set a = 0 (that is, w depends on time only), a simplification which we previously found does not limit the model's ability to reproduce a wide range of physiological recordings and spiking patterns (Hertäg et al., 2012). For this choice of parameters, the AdEx model becomes an EIF model with firing-rate adaptation.

The second Results Section develops an alternative approach which incorporates into the 1-dimensional Fokker-Planck equation for the EIF model distributional assumptions for the adaptation variable w. The subsequent two sections then validate and compare these approaches on 156 different parameter settings obtained from electrophysiological recordings of pyramidal cells (from layers 2/3 and 5) and interneurons (bitufted and fast-spiking) from rodent PFC under in-vivo-like input conditions. The final Results Section introduces a simple method for incorporating realistic synaptic kinetics.

2.1. Derivation of the 2-Dimensional Fokker-Planck Equation for the aEIF Model

The next step is to specify our model for the total synaptic input I into a cell. Cortical cells receive thousands of synaptic connections (Braitenberg and Schüz, 1991), at which transmitter release is highly probabilistic (Jahr and Stevens, 1990), and with each of them contributing only a small postsynaptic potential, on the order of 0.1–2 mV (Mason et al., 1991; Markram et al., 1997; Sjöström et al., 2001; Lefort et al., 2009; London et al., 2010). Under these conditions, the total synaptic input can be well approximated by a Gaussian stochastic process (Amit and Tsodyks, 1991a,b; Amit and Brunel, 1997a), known as the diffusion approximation limit. If one furthermore neglects the synaptic time constants, synaptic inputs take the simple form

where ξ(t) represents a Gaussian white noise term with zero mean and unit variance, 〈 ξ(t) ξ (t′) 〉 = δ(t − t′), and the parameters μI and σI are related to the mean and standard deviation of the input current. The dynamics of the neurons as specified by Equations (1–2) provide the link between input currents and spike output. Since these equations are stochastic, the central step in relating synaptic inputs to firing rates is the derivation of equations for the dynamics of the joint probability distribution P(V, w) for the two dynamical variables V and w of the single-cell model. This leads to a partial differential equation known as a Fokker-Planck equation, which can be derived from the Langevin equations, (1–3) (see Models and Methods)

with and .

This equation can be rewritten as a continuity equation,

where JV(V, w) and Jw(V, w) are probability fluxes in the V and w direction, respectively

The boundary conditions are P(Vup, w) = 0 for all w, and both fluxes should vanish sufficiently fast at V = −∞, and w = ± ∞. The steady-state firing rate ν is given by the integral of the probability flux in the V direction at (Vup, w), integrated over all possible values of w,

There is an additional boundary condition at reset, that expresses the fact that neurons that fire at (Vup, w) are reset at (Vr, w + b): This leads to an additional flux at Vr, such that

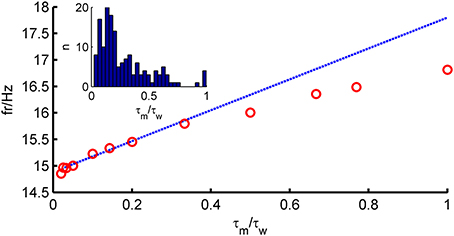

To solve Equation (4), we resort to a perturbative approach. In many neurons, adaptation is very slow compared to the membrane time constant (Womble and Moises, 1992; Powers et al., 1999; Benda and Herz, 2003; Stocker, 2004; Thomson and Lamy, 2007). Figure 1 (inset) shows that for most neurons in our data set, τw ≫ τm (〈 τm 〉 = 28 ms, 〈 τw 〉 = 196 ms). We therefore explore the limit τw ≫ τm and define a small parameter ϵ = . A simple calculation shows that the average of w is given by bτwν. Since ν is expected to be proportional to 1/τm, we see that b should scale as τm/τw for the average of w to stay finite in the limit ϵ → 0. We thus rescale ν → ν/τm and b → ϵ2bgL. In terms of these new variables, the fluctuations of w are expected to be proportional to ϵ b. We therefore define a new variable z, as

Figure 1. Comparison of theoretical firing rate ν with simulation results as a function of τm/τw. Model parameters for an average fast-spiking interneuron (see Table 1), 〈w〉 = bν0τw constant, σIsyn = 150 pA, μIsyn = rheobase + 10 pA. Blue, theoretical prediction; red, simulation.

which describes the fluctuations of w around its mean, in such a way as to remain of order 1 in the ϵ → 0 limit.

Performing the w → z change of variables, we find that the steady-state Fokker-Planck equation (cf. Equation 4, ∂t P(V, z) = 0) is given by

while the boundary conditions become

R(z) denotes the firing rate at z. Next, we expand the probability density function P(V, z) and the firing rate ν in powers of ϵ:

with νi and Pi are the i-th order terms in the perturbation series. By inserting these series expansions into the Fokker-Planck Equation (11), and replacing by its Taylor series (see Models and Methods), we derived a general solution for the ith order correction Pi(V, z):

with Si defined by the inhomogeneous terms of the FP equation. By normalizing P(V, z) properly such that the probability density integrates up to 1, and transforming parameters w and b, and variable ν back to their original definitions (i.e., pA and Hz respectively), we derived the zeroth order approximation for the steady-state firing rate as

This is basically the firing rate of the EIF model in the presence of white noise (Fourcaud–Trocmé et al., 2003), except that the mean input μ is reduced by an amount bν0τw (cf. La Camera et al., 2004; Muller et al., 2007; Farkhooi et al., 2011). Due to the symmetry of the Fokker-Planck equation in ϵ and z, all odd-order contributions to the firing rate must be zero, and thus the first non-vanishing term is the second order-correction (see Models and Methods for full derivation),

where Q0 = Q0(V) denotes the V-distribution at zeroth order, the operators

are evaluated at the upper threshold Vup, and

Hence, at second order, the steady-state firing rate is given by

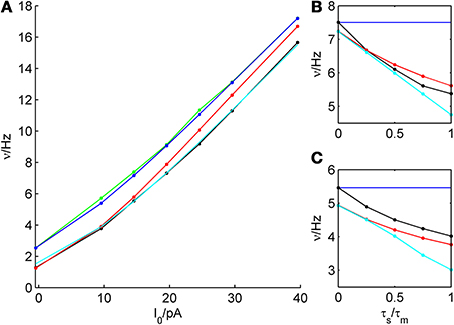

The assumption τm ≪ τw (long-correlation time limit) used in the derivations above plays an important role in the prediction capability of the full-FP approach. Figure 1 shows how the firing rate depends on the ratio τm/τw. It shows that the prediction is very good for ϵ < 0.5, but the agreement deteriorates for larger values of τm/τw. For the large pool of model cells generated from in-vitro recordings studied here, as will be shown below, this turned out not to be a major limitation.

2.2. Combining the Fokker-Planck Equation for the EIF with Distributional Assumptions on Adaptation

A simple approximation of the firing rate of the aEIF model is to replace adaptation w by its mean and plug this into the Fokker-Planck solution for the EIF model [the “zeroth order” approximation ν0, Equation (18)]. We found that this already yields quite reasonable results, but a significant improvement can be obtained by integrating the steady-state EIF firing rate across the marginal distribution F(w) of w:

Note that Equation (25) explicitly neglects the correlation between w and V, and thus cannot be exact.

In order to be able to use Equation (25), one has to compute the marginal distribution F(w). For a neuron firing as a Poisson process, the distribution could be computed exactly using the methods outlined in Gilbert and Pollak (1960). However, the aEIF spiking process is different from Poisson, especially in the suprathreshold regime. Simulation results suggested that a truncated Gamma-distribution, when inserted in Equation (25), captures the firing rate reasonably well:

where γ(k, x/θ) is the so-called lower incomplete gamma function, which is fully specified by the mean, 〈w〉, and variance, σ2w, of the adaptation current. These moments can be derived using the methods of Takács (1955) (see Models and Methods), yielding

where β1 is the Laplace transform of the interspike interval (ISI) distribution at 1/τw. Assuming again a Gamma-distribution for the ISIs parameterized by its mean and variance (see Models and Methods) one obtains for β1,

The truncation points wmin and wmax in the truncated gamma function, Equation (26), were, for simplicity, approximated by bounds of w in the case of a periodic spike train at frequency ν,

Assuming truncation points has computational advantages (considerable speed-up), and improves considerably the accuracy of the approximation in the suprathreshold range, where the spike trains get closer to periodic spike trains and therefore the distribution of w is close to being bounded by the values given in Equations (30)–(31).

2.3. Validation and Comparison of the MF Approaches on Experimentally Derived Parameter Sets

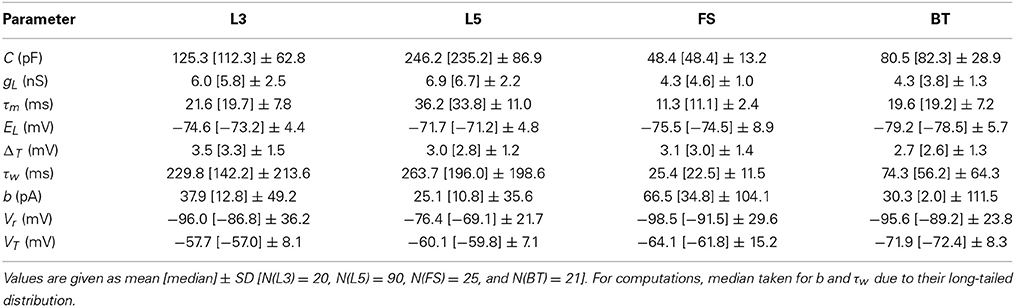

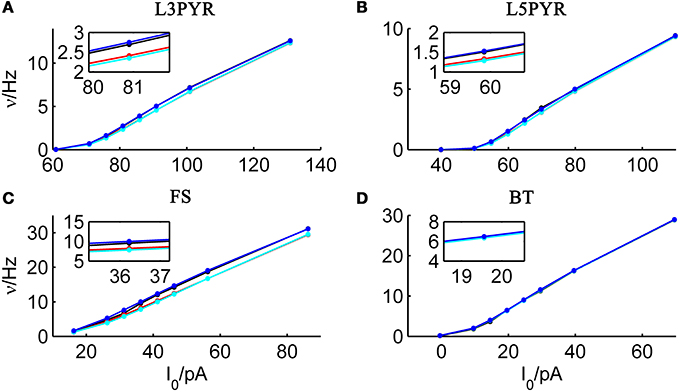

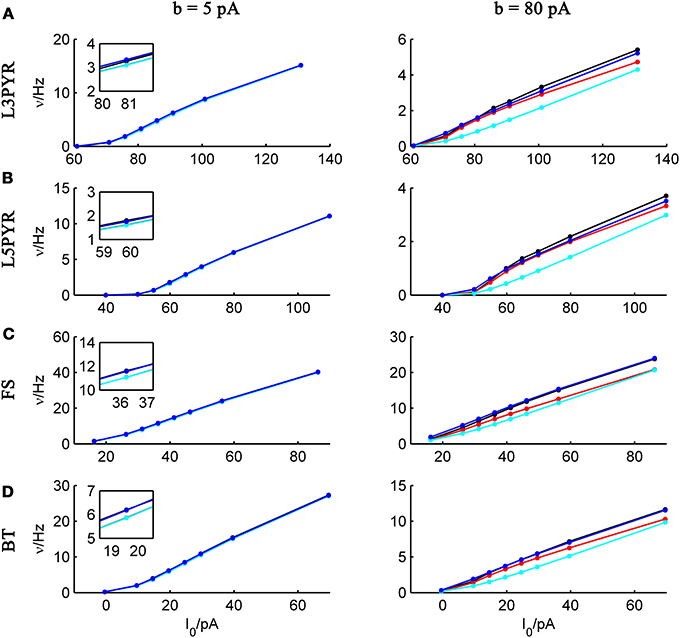

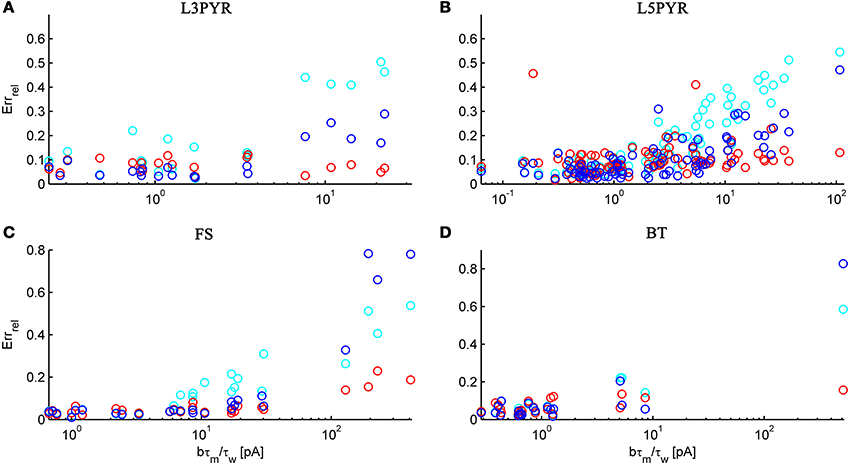

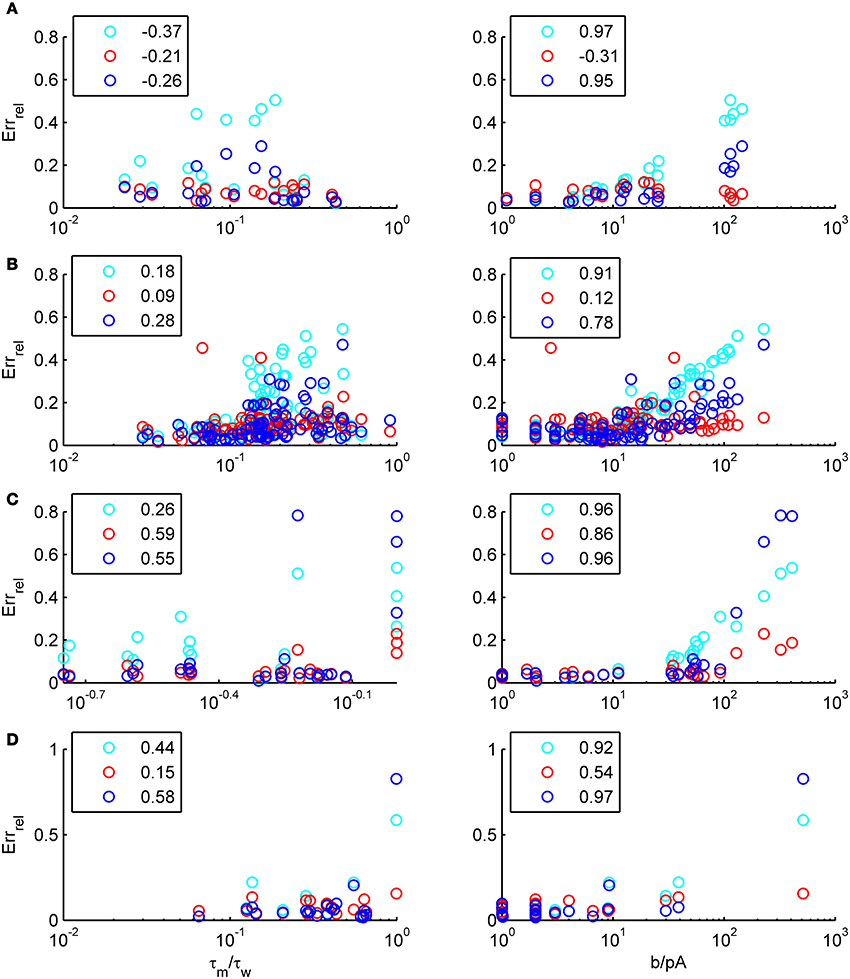

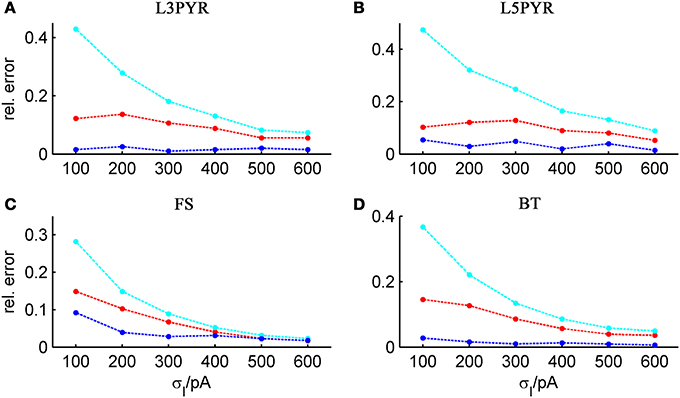

We tested the three different approximations derived above (EIF-〈 w〉 approach: ν0, full-FP approach: ν0 + ϵ2ν2, EIF-F(w) approach: 〈 νEIF〉w) on 4 “canonical” neocortical cell types (pyramidal cells of layers 2/3 and 5, fast-spiking, and bitufted interneurons). Parameters of these “canonical cells” were obtained by fitting a modified AdEx model (“simpAdEx,” see Hertäg et al., 2012) to a large sample from each of these 4 cell types recorded from rodent PFC slices using whole-cell patch-clamping, and taking the grand average across the estimated parameters for each cell type (averaged model parameters are given in Table 1). Figure 2 shows the input-output (ν-I) curves for the numerical simulations of the 4 model cells and the theoretical predictions from the three approximations (μIsyn ∈ [r − 20 pA, r + 50 pA] with r denoting the cell-specific rheobase, σIsyn = 150 pA). All theoretical predictions show a reasonable agreement with the simulation results. As can be seen, (1) the full-FP model tends to capture the simulation results best while the EIF-〈w〉 model performs worst, and (2) the largest deviation occurs for the fast-spiking interneuron, since it has the largest value of b, and the fastest adaptation time constant τw. The aEIF and the EIF model with added 〈w〉-input differ by the fact that the latter does not account for any adaptation dynamics. Hence, we investigated more closely how the parameters governing adaptation (b and τw) influence the theory-simulation agreement for the three approaches. While the adaptation time constant τw does not have a strong effect on the quality of the theoretical predictions, the parameter b which regulates the amplitude of spike-triggered adaptation plays a major role (see Figure 3). For low b (left panel) all three approaches do a similarly good job in predicting the simulated input-output curves, while for high b replacing w just by its average leads to serious discrepancies with the simulation results, in contrast to the other two approaches. More formally, this observation is confirmed by defining a relative goodness-of-fit measure as

and studying its dependence on b starting from the four average cell configurations given in Table 1, as shown in Figure 4 (results were averaged over σIsyn = {100, 200, 300, 400, 500, 600} pA for a mean input current at the rheobase). Note that the non-zero error at b = 0 pA is due to the finite simulation time and vanishes for sufficiently long simulations. As can be seen, the goodness-of-fit steadily decreases for the EIF-〈w〉 model, while the relative error stays below 10% for the EIF-F(w)-based approach and below 5% for the full-FP model when b < 100 pA. Thus, if b is sufficiently small, the simplest approach of just incorporating the mean 〈w〉 into the 1-dimensional EIF solution seems sufficient to capture the simulated input/output relationship. For larger values of b, the agreement between simulations and all approaches deteriorate (see Figures 4, 6, 7). Interestingly, for large enough b, the EIF-F(w) approach outperforms the full FP approach (see Figure 7).

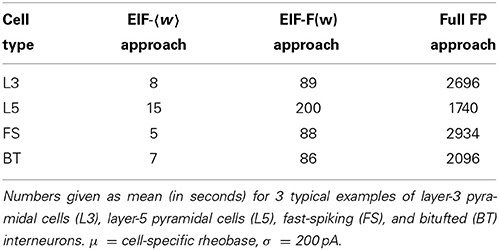

Table 1. Statistics of parameter estimates of the simplified AdEx for different PFC layer 3 (L3) and 5 (L5) pyramidal cells, fast-spiking (FS) and bitufted (BT) interneurons.

Figure 2. Comparison of theoretical and simulated ν-I curves of the aEIF for different canonical cell types. The ν-I curves for a simulated (A) layer-3 pyramidal cell, (B) layer-5 pyramidal neuron, (C) fast-spiking and (D) bitufted interneuron (averaged model parameters given in Table 1) are shown in black and compared to the theoretical predictions (ν0: in cyan, ν0 + ϵ2 ν2: in blue, 〈 νEIF 〉w: red). μIsyn ∈ {r − 20, r − 10, r − 5, r, r + 5, r + 10, r + 20, r + 50} pA with r denoting the cell-specific rheobase, σIsyn = 150 pA. Insets show zoom-ins on the firing rate at the rheobase.

Figure 3. Comparison of theoretical and simulated ν-I curves for small and large spike-triggered adaptation. The ν-I curves for a simulated (A) layer-3 pyramidal cell, (B) layer-5 pyramidal neuron, (C) fast-spiking, and (D) bitufted interneuron (averaged model parameters given in Table 1) are shown in black and compared to the theoretical predictions (ν0: in cyan, ν0 + ϵ2 ν2: in blue, 〈 νEIF 〉w: red) for small (left panel, b = 5 pA) and large b (right panel, b = 80 pA). μIsyn ∈ {r − 20, r − 10, r − 5, r, r + 5, r + 10, r + 20, r + 50} pA with r denoting the cell-specific rheobase, σIsyn = 150 pA. Insets show zoom-ins on the firing rate at the rheobase.

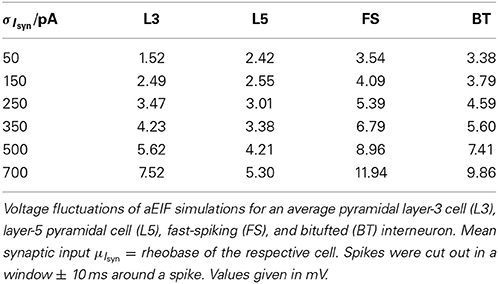

Figure 4. Average relative prediction error as a function of spike-triggered adaptation for different neuron types. For the 4 averaged neuron type parameters (A: layer-3 pyramidal cell, B: layer-5 pyramidal neuron, C: fast-spiking and D: bitufted interneuron), the average prediction error (Equation 32) is shown as a function of adaptation parameter b for the three theoretical approximations (ν0: cyan, ν0 + ϵ2 ν2: blue, 〈 νEIF 〉w: red). Relative prediction error was averaged across σIsyn ∈ {100, 200, 300, 400, 500, 600} pA and μIsyn = cell-specific rheobase.

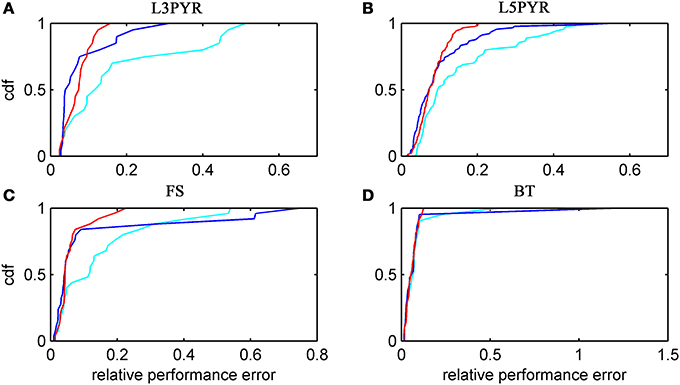

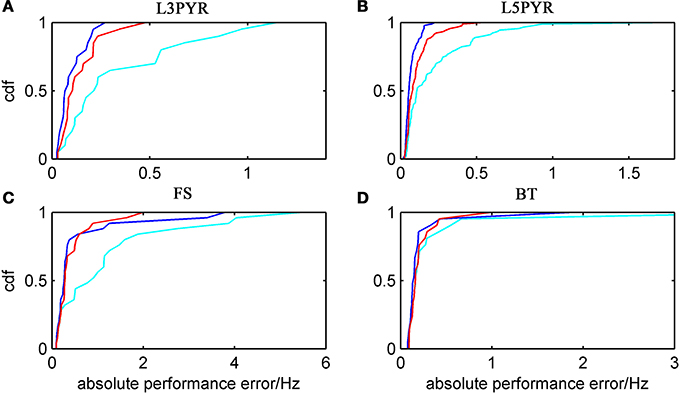

We have examined so far the accuracy of the approximations on a set of averaged model parameters representing “canonical” cortical cell types. However, even cells of the same type may strongly vary in their spiking statistics due to differences in morphological and biophysical properties, as reflected in the diversity of estimated model parameters for different cells of the same type (see Hertäg et al., 2012). To obtain a more complete picture of how the three approaches could cope with the full cellular diversity within our sample of 156 recorded rodent mPFC neurons (electrophysiological recordings and protocol were the same as in Hertäg et al., 2012, extended data set), the goodness-of-fit between simulation and theory was examined for this whole pool. Figure 5 shows the cumulative distribution of the relative prediction errors separately for each of the 4 cell classes, averaged over two input standard deviations σIsyn = [100, 300] pA and different mean input currents μIsyn = [r − 15, r − 10, r − 5, r, r + 5, r + 10, r + 15] pA with r = cell-specific rheobase. These parameters were chosen because they cover the expected range of firing rates and noise amplitudes in these cell types in vivo. In all four cases, the full-FP (ν0 + ϵ2ν2: blue) and the EIF-F(w) approximation (〈 νEIF〉w: red) clearly outperform the EIF-〈w〉 approach (ν0: cyan). Interestingly, while for the cells for which the error is lowest, the full FP approach typically outperforms the EIF-F(w)-based approximation, for the cells with the largest error, the opposite happens. Hence, the EIF-F(w) approach seems to be more robust to variations in parameters. Unsurprisingly, the cells that were less well described by the analytical approximations had an uncommonly large spike-triggered adaptation parameter b, and/or violated the assumption τw ≫ τm (see Figures 6, 7). Note that the large majority of the cells in our sample could be captured by both the full-FP and the EIF-F(w) model with less than 10% error (full FP: 76%, EIF-F(w): 77%) while in contrast, the EIF-〈w〉 approach had a similar accuracy only in 54% of the cells. Thus, these results demonstrate that the best of our three approaches yields accurate results across the vast majority of empirically derived parameter settings including more than 150 recorded cells. Figure 8 shows similar results in terms of the absolute prediction error, illustrating that it rarely exceeds 1 Hz for any of the approaches. Interestingly, the full-FP approximation shows the minimal absolute spike rate deviation for most cells, which indicates that the largest differences occur in the low firing rate regime for this approach.

Figure 5. Relative prediction error for a large physiological data pool from different neuron types recorded in-vitro. For the 4 neuron types (A: layer-3 pyramidal cell, B: layer-5 pyramidal neuron, C: fast-spiking and D: bitufted interneuron), the empirical cumulative distribution of the relative prediction error (Equation 32) is shown (ν0: cyan, ν0 + ϵ2 ν2: blue, 〈 νEIF 〉w: red). Relative prediction error averaged over σIsyn = (100, 300) pA and μIsyn = [r − 15, r − 10, r − 5, r, r + 5, r + 10, r + 15] pA with r denoting the cell-specific rheobase. N(L3) = 20, N(L5) = 90, N(FS) = 25, and N(BT) = 21.

Figure 6. The relative prediction error for the full FP, the EIF-〈w〉 and the EIF-F(w) approach as a function of b · τm/τw. The error is shown for layer-3 pyramidal cells (A), layer-5 pyramidal cells (B), fast-spiking (C) and bitufted (D). Cyan: EIF-〈w〉, blue: full FP, red: EIF-F(w). Pearson correlation (cyan, blue, red): (0.89, 0.86, −0.26) (A), (0.74, 0.74, 0.14) (B), (0.87, 0.94, 0.89) (C), (0.89, 0.97, 0.51) (D). Error averaged over σIsyn ∈ {100, 200, 300, 400, 500, 600} pA and μIsyn = cell-specific rheobase.

Figure 7. The relative prediction error for the full FP, the EIF-〈w〉 and the EIF-F(w) approach as function of model parameters. The error is shown as a function of τm/τw(left) and b (right) for layer-3 pyramidal cells (A), layer-5 pyramidal cells (B), fast-spiking (C) and bitufted (D). cyan: EIF-〈w〉, blue: full FP, red: EIF-F(w). Pearson correlations are given in the legends. Error averaged over σIsyn ∈ {100, 200, 300, 400, 500, 600} pA and μIsyn = cell-specific rheobase.

Figure 8. Absolute prediction error for a large physiological data pool from different neuron types recorded in-vitro. For the 4 neuron types (A: layer-3 pyramidal cell, B: layer-5 pyramidal neuron, C: fast-spiking and D: bitufted interneuron), the empirical cumulative distribution of the absolute prediction error is shown (ν0: cyan, ν0 + ϵ2 ν2: blue, d〈 νEIF 〉w: red). Absolute prediction error averaged across σIsyn = [100, 300] pA and μIsyn = [r − 15, r − 10, r − 5, r, r + 5, r + 10, r + 15] pA with r denoting the cell-specific rheobase, and given in Hz. N(L3) = 20, N(L5) = 90, N(FS) = 25, and N(BT) = 21.

Besides empirical accuracy, an important criterion for the choice of the approach is the computation time requirements. Especially with regards to applications to large pools of data and physiologically relevant issues, the computational cost may be a decisive factor. Clearly, the EIF-〈w〉 approach is the fastest, but at the same time the most inaccurate. Whether taking simply the average of w is nevertheless still sufficient for the scientific questions at hand thus depends on the dynamical regime considered (i.e., the input regime and the spike-triggered adaptation b). The full-FP approach, on the other hand, provides the best results as long as the assumption of long adaptation time limit is met. However, it comes at the price of high computation time costs (due to the multi-dimensional integrals to be evaluated numerically, see Table 2). Hence, in most cases, the EIF-F(w) approximation may provide the best compromise.

Table 2. Comparison of computation time requirements for the EIF-〈w〉, EIF-F(w), and full FP approximation.

2.4. Impact of Different Input Regimes on Theory-Simulation Agreement

It is widely thought that cortical cells operate in a “balanced” regime close to, but still below their spiking threshold (rheobase), with spikes caused by occasional excursions beyond the threshold driven by the fluctuations in the input (van Vreeswijk and Sompolinsky, 1996; Amit and Brunel, 1997b; Shadlen and Newsome, 1998; Brunel, 2000a; Burkitt et al., 2003; Destexhe et al., 2003; Haider et al., 2006; Renart et al., 2006; Miura et al., 2007). According to this scenario, input fluctuations play a prime role in driving cell spiking. We therefore systematically examined how the goodness-of-fit of the theoretical predictions depend on the standard deviation of the input current fluctuations. For σIsyn ∈ [50, 600] pA and a mean current that equals the rheobase of the cells, Table 3 shows that—depending on the examined cell class—subthreshold voltage SD's range from 2 to 12 mV, where the lower range 2–6 mV maps well onto the subthreshold voltage fluctuations observed in-vivo in whole cell patch-recordings as demonstrated in Hertäg et al. (2012) (see also Steriade et al., 2001; Timofeev et al., 2001; London et al., 2010). Figure 9 illustrates that the theory-simulation agreements generally improve for all three approaches as the input variance σ2Isyn increases (μIsyn = rheobase of the respective cell). However, this tendency is particularly pronounced for the EIF-〈w〉 approach, while the EIF-F(w)-based and the full-FP approximations show a more steady performance across the whole range of input fluctuations. The performance gains of these models with respect to the EIF-〈w〉-approach are particularly large in the physiologically most relevant, in-vivo-like regime (i.e., for standard deviations <300 pA causing voltage fluctuation within the range observed in vivo, see Table 3, Hertäg et al., 2012).

Table 3. Standard deviation of the subthreshold voltage fluctuations σV as a function of input variance σ2Isyn.

Figure 9. Average relative prediction error as a function of synaptic input standard deviation σIsyn for different neuron types. For the 4 averaged neuron type parameters (A: layer-3 pyramidal cell, B: layer-5 pyramidal neuron, C: fast-spiking and D: bitufted interneuron), the averaged prediction error (Equation 32) is shown in dependence on the input fluctuations σIsyn, evaluated at the cell-specific rheobase μIsyn, for the three derived firing rate approximations (ν0: cyan, ν0 + ϵ2 ν2: blue, 〈 νEIF 〉w: red). Relative prediction error averaged across b = [15, 30, 50, 80] pA.

2.5. Replacing the White-Noise Assumption by Realistic Synaptic Kinetics

In settling on Gaussian white noise for the input process we basically assumed synaptic inputs to be delta-like current pulses. While this may be a reasonable simplification for fast (compared to the membrane time constant) AMPA- and GABAA-mediated currents, it is certainly not met for longer lasting currents like NMDA- or GABAB-mediated currents. This means our white noise model should be replaced by a colored noise model. Following Brunel et al. (2003); Alijani and Richardson (2011), steady-state firing rate correction terms may be derived that take the non-zero synaptic time constant explicitly into account. However, this would mean studying a 3-D Fokker-Planck equation, performing an expansion in two small parameters (ratios of relevant time constants) and computing the first order terms in both expansions. While this approach is certainly feasible, we resorted to a simpler approach, which consists in replacing the variance in our analytical expressions by a rescaled variance that takes into account the synaptic time constant τs. Intuitively, this may be seen by noting that τs mainly influences the amplitude of the fluctuations. Furthermore, Alijani and Richardson (2011) showed that the firing rate is largely independent of the time scale of filtering as long as the amplitude of the fluctuations is adjusted such that the voltage distribution stays more or less the same. This observation can be exploited for deriving a simple approximation for the colored noise scenario. In brief, assuming that the second-order firing rate correction does not considerably change the general shape of the voltage distribution, one can derive the voltage-variance in the colored and the white noise case and deduce from their ratio a synaptic-time-constant-dependent reduction factor. For the sake of simplicity, we ignored the exponential term and went back to the LIF neuron, where the reduction of the variance is given by

with . Thus, we can proceed as before with the Gaussian white noise based theory, replacing the variance by Equation (33).

Figure 10 illustrates that the theoretically predicted and the simulated input/output curves indeed agree remarkably well when accounting for colored noise by the simple modification above, for small and large settings of b (Figures 10B,C). As one can see in panel (A), this agreement decreases in the supra-rheobase regime. Apparently, here the approach laid out above cannot fully account for the changes in P(V, w) brought about by the synaptic kinetics. The simple approach, Equation (33), was also compared to a more thorough theoretical derivation which explicitly corrects steady-state rates for the synaptic dynamics. For this purpose, the spike rate was expanded in powers of the ratio between the synaptic and membrane time constant k (see Alijani and Richardson, 2011, or Models and Methods), and in order to account for pronounced spike-triggered adaptation, the average over the theoretical w-distribution was taken:

with the second order correction ν2, CN given by Alijani and Richardson (2011)

where , and Q0 is the zeroth-order V-distribution. This method allows to reliably predict the firing rates in the sub- and supra-rheobase regime (see Figure 10, cyan line) as long as k ≪ 1. However, the computation of the integrals is very time-consuming such that the reduction of the variance (Equation 33) might be more suitable in terms of practicability.

Figure 10. Dependence of firing rate prediction performance on synaptic kinetics. For an average bitufted interneuron, the theoretical predictions in the white noise and colored noise case are shown and compared to simulations. (A): ν-I curve, parameters given in Table 1, σIsyn = 250 pA, τs/τm = 1/2. (B): σIsyn = 200 p, μIsyn = rheobase, b = 5 pA. (C): σIsyn = 200 pA, μIsyn = rheobase, b = 30 pA. Green: simulation, white noise; blue: theoretical prediction, white noise; black: simulation, colored noise; red: theoretical prediction (reduction of variance), colored noise. cyan: theoretical prediction by Equation (34), colored noise.

3. Discussion

Any hope for gaining a detailed understanding of the relations between the biophysical and physiological parameters of a neural system and its emerging dynamics is ultimately encased by the computational (in-)tractability of large complex non-linear dynamical systems. Especially in the context of psychiatric disease and pharmacological intervention, there is an increasing desire to harvest computational neuroscience toward obtaining mechanistic insight (Markram, 2012; Montague et al., 2012; Kandel et al., 2013; Spanagel et al., 2013), yet the current possibilities for this are limited: Either the models are simple enough to allow systematic formal treatment and analysis (e.g., Brunel, 2000a; Brunel and Latham, 2003), but then they are often too remote from physiological reality to enable an understanding of how specific biophysical or physiological factors contribute to the system's functional dynamics. Or the models are biophysically and anatomically sophisticated (Traub et al., 1988; Whittington et al., 2000; Traub et al., 2005; Markram, 2006; Durstewitz and Gabriel, 2007; Izhikevich and Edelman, 2008; Lansner, 2009; Lundqvist et al., 2010), but suffer from tedious and time-consuming parameter estimation, long simulation times, hence limited possibilities for scanning large parameter spaces, and little hope for a systematic investigation of the system dynamics. Recently developed single neuron models, like the AdEx model, start to fill in this important gap: On the one hand, they are simple enough (2 differential equations) to enable detailed investigation of their phase space and bifurcations, as well as fast and efficient parameter estimation procedures (Badel et al., 2008a,b; Hertäg et al., 2012). On the other hand, they are sufficiently powerful to model a wide range of physiologically observed spiking patterns (Naud et al., 2008; Touboul, 2008; Touboul and Brette, 2008; Durstewitz, 2009). In fact, they can be fitted to faithfully reproduce subthreshold voltage and spiking dynamics, and even predict spike times on test sets highly dissimilar from the training set within the bounds of physiological reliability (Hertäg et al., 2012). Thus, although these models, unlike Hodgkin-Huxley models, do not explicitly include the full range of biophysical-ionic mechanisms with which real cells are equipped, they may nevertheless implicitly account for them to the degree these are reflected in the probed subthreshold and spike output dynamics. In this sense, models like the AdEx still allow for a phenomenological access to, and study, of a cell's biophysical repertoire.

Here, we developed analytical approximations to the steady-state firing rate of a population of aEIF cells under in-vivo-like synaptic bombardment, characterized by its Gaussian mean and variance. This ‘static transfer function’ provides the central building block for mean-field descriptions of the firing rate of different neural populations in asynchronous network states. These descriptions yield a set of self-consistent, closed-form expressions for these population firing rates. They have been highly successful in gaining a deep understanding of neural system dynamics (e.g., Amit and Brunel, 1997a; Brunel and Hakim, 1999; Brunel, 2000b; Renart et al., 2003), and in efficiently determining suitable parameters for networks of spiking neurons (Deco et al., 2009). We have extended this already established framework to the static transfer function of a neuron model of intermediate mathematical complexity yet high physiological validity, the exponential integrate-and-fire neuron with spike-triggered adaptation (aEIF). Three approaches were followed toward this goal: First, we derived the 2-dimensional Fokker-Planck (FP) equation for the full aEIF system (“full-FP approach”) and solved it under the assumption of slow (compared to the membrane time constant) adaptation, a reasonable account for most cortical neurons. Second, we replaced the adaptation variable w simply by its mean and inserted this into the previously derived solution of the Fokker-Planck equation for the exponential integrate-and-fire neuron (“EIF-〈w〉-approach”). Third, we augmented the EIF solution by accounting for the marginal distribution of w (“EIF-F(w)-approach”) which was derived empirically from simulation studies. The validity of the theoretical predictions was then checked on a large range of physiological parameter sets derived from ~150 in-vitro recordings of prefrontal cortical pyramidal cells and interneurons. While all three theoretical approaches agreed reasonably well with the numerical results for most neurons from this set, the theoretical predictions from the EIF-〈w〉-approach were clearly inferior to the other two MF approaches when adaptation was strong or input fluctuations were relatively low, i.e., within a range inferred from in-vivo patch-clamp recordings. In practice, the computation of the second-order correction for the full-FP takes the longest time due to the multi-dimensional integrals to be evaluated numerically. Therefore, the EIF-F(w) approach presents a good balance between computation time requirements and the accuracy of the firing rate agreement. It yields theoretical predictions with at most 10% of error in our large sample, usually below 2%, and furthermore is amenable to an efficient computational implementation. Finally, realistic synaptic kinetics (“colored noise”) could be incorporated by simply reducing the input variance by a theoretically derived factor that depends on the ratio of the synaptic to the membrane time constant. Thus, we have developed an approach that provides (semi-)analytical access to the dynamics of large networks composed of physiologically realistic neuron models which can be tightly fitted to electrophysiological recordings.

3.1. Assumptions and Limitations of the Mean-Field Approaches

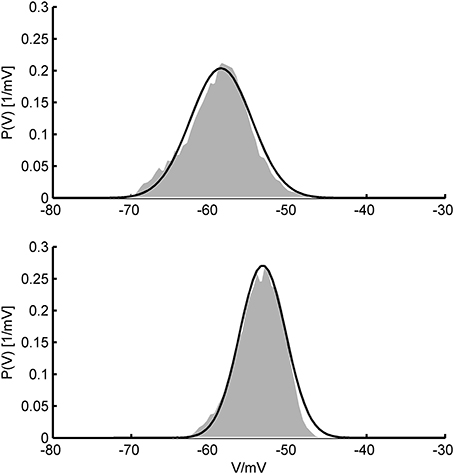

All of our three approaches were based on some assumptions that require discussion. A common rationale underlying the Fokker-Planck formalism is that the system's variables can be treated in the diffusion limit where the input statistics can be accurately described by their two first moments only. This ensues in the presence of a large number of relatively small synaptic inputs where the Central Limit Theorem ensures convergence to a Gaussian distribution which is fully specified by its first two moments. Physiologically, this is a very reasonable assumption given that the number of synaptic contacts a typical cortical pyramidal cell receives is indeed very high, in the range of 103–104 (Braitenberg and Schüz, 1991), each of them delivering a postsynaptic potential of less than 1 mV on average (Markram et al., 1997; Tsodyks and Markram, 1997; Sjöström et al., 2001; Lefort et al., 2009; London et al., 2010). Moreover, cortical spike statistics are often close to Poisson (Shadlen and Newsome, 1998), and synaptic release itself is highly probabilistic (Dobrunz and Stevens, 1997). These assumptions could, in principle, also be experimentally tested using in-vivo patch-clamping (London et al., 2010, see Figure 11).

Figure 11. In-vivo V-distributions and theoretical predictions. Two examples for the agreement of theoretically predicted membrane potential distributions (black curves) with ones obtained from in-vivo patch-clamp recordings (gray areas; data kindly provided by Dr. Thomas Hahn, Central Institute of Mental Health). In-vivo distributions were generated from voltage recordings during up-states in anesthetized rat prefrontal cortex. Theoretical fits were obtained by allowing the mean and standard deviation of the synaptic input to vary (and aligning distributions along the V-axis) while keeping single cell parameters fixed. Single cell parameters were drawn from the pool of AdEx model fits to in-vitro recordings (see text) and held constant in the process.

With regards to the adaption current (w), we assumed that (i) spike-triggered processes are the major drivers of spike train adaptation, and (ii) adaptation is relatively slow in comparison with the membrane time constant. Again, both these assumptions are physiologically highly reasonable as Ca2+-dependent K+ currents (Brown and Adams, 1980; Madison and Nicoll, 1984; Sah, 1996; Powers et al., 1999) and slowly inactivating (upon spiking, τ > 1–3 s) “persistent” Na+ currents (Fleidervish and Gutnick, 1996; Astman et al., 2006) are the major sources of adaptation in cortical cells. Ca2+ influx is a strongly spike-bound process governed mainly by high-voltage-activated Ca2+ and voltage-dependent NMDA currents (Schiller et al., 1995; Spruston et al., 1995; Stuart et al., 1997), and builds up almost linearly with spiking rate (Helmchen et al., 1996). It furthermore decays relatively slowly with time constants usually >100 ms (Helmchen et al., 1996; Vergara et al., 1998), and major Ca2+-dependent K+ currents like AHP also have relatively slow time constants. The EIF-〈w〉-approach, in addition, relies on the assumption that the amplitude b of adaptation is relatively small for the diffusion limit to be accurate. This is the case for some, but not all of the cortical cell types we have investigated. Thus, for a decent fraction of neurons a significant improvement is achieved by using either the full-FP equation or by integrating the EIF rate across the probability density of w (EIF-F(w) approach). This is especially true when input fluctuations are not large and thus fluctuations in w tend to have a larger impact on the neuron's spiking behavior.

If the neuron's spiking process was truly Poissonian, the w-density could be derived explicitly following (Gilbert and Pollak, 1960). However, because of the refractory period and the adaptation process (which causes non-vanishing auto-correlations) this assumption is not fully met for the aEIF model. This leads to strong deviations, especially in the supra-rheobase regime with its higher spiking rate, such that adaptation and refractory processes have a major influence. To circumvent this, we derived the w-distribution empirically from simulations and found that a truncated Gamma-distribution, parameterized by the mean and the variance of the adaptation current could predict rather well the steady-state firing rate of the aEIF. In order to compute the variance of w, we first derived the variance of the interspike intervals which were assumed to be Gamma-distributed, an approximation which is justified by our simulations, experimental data (Barbieri et al., 2001; Miura et al., 2007; Maimon and Assad, 2009), and theoretical studies (Ostojic, 2011). We furthermore observed that replacing the analytical, parameterized truncated Gamma distribution by the true empirical density function hardly improved the results, implying that everything can still be solved in closed-form (data not shown).

3.2. Comparison of the Analytical Approaches to Simulation Results

In general, all approaches agreed remarkably well with numerical simulations on model neuron parameters averaged across large sets of in-vitro recordings from four different major cortical cell types (pyramidal cells of layers 2/3 and 5, fast-spiking, and bitufted interneurons). For a considerable fraction of cases, the EIF-〈w〉-approach gave comparable results to the other two, more sophisticated approaches, due to the fact that the adaptation amplitude b, on average, is rather small in our pool of model cells derived from experimental recordings. If b is sufficiently small, fluctuations in the synaptic input dominate the spike-induced fluctuations in w, and hence in this case the other two approaches yield no significant improvements compared to accounting just for the mean in w. However, we are ultimately interested in extending the approach to physiologically realistic scenarios which capture the experimentally observed diversity in parameters, so that it is important that the approach cannot “only” capture a few “canonical” cell types, but in fact a broad range of experimentally derived input-output behaviors. Investigating prediction performance across a large pool of model cells parameterized by in-vitro recordings from different cell types, layers, and species (rat and mice), the full-FP and EIF-F(w) approaches not only clearly had an edge over the EIF-〈w〉-based MF approach (cf. Figure 5), but predicted the simulated input/output curves in almost all cases with less than 10% error, across a range of different adaptation constants b. The few deviations from this high prediction accuracy observed for the full-FP can be explained through the fact that the assumption of a long-adaptation time limit was violated in these instances. When the range of input standard deviations was chosen such that the evoked subthreshold membrane fluctuations are most consistent with in-vivo data, the EIF-F(w) approach started to clearly outperform the EIF-〈w〉 approach (Figure 9). Thus, to reproduce average spiking behavior under in-vivo-like conditions, neural adaptation implemented by w seems to play a significant role. We had already observed previously that the inclusion of an adaptation variable in the single cell model makes a quite pronounced difference for its prediction performance on spike times from real cell counterparts when driven with in-vivo-like currents (Hertäg et al., 2012). More generally, spike rate adaptation appears to be one of the most important properties in accounting for the rich diversity in real neuron's spiking patterns (see e.g., Izhikevich, 2007; Naud et al., 2008; Touboul and Brette, 2008). Omitting it, or approximating it by a constant mean, may hence profoundly alter the input/output and response behavior of the modeled neural population, and thereby stable state solutions and dynamical properties. Although this certainly has to be investigated in much more detail, these observations suggest that reverting to simpler cell models in mean-field approximations may come at a prize.

In terms of practicability, the EIF-〈w〉 method is much faster and easier to apply than the other two approaches. The EIF-F(w) approach may represent a reasonable balance between the fast, but least accurate EIF-〈w〉 approach, and the most accurate, but time-consuming full-FP method. The choice of the approach should therefore be driven by the purpose of the research, the dynamical regimes considered and/or the specific cell types studied.

3.4. Relation to Previous Work on Models with Adaptation

Several studies have investigated the influence of adaptation at the single neuron level (or for a population of unconnected neurons). La Camera et al. (2004) incorporated the effect of adaptation into the calculation of the mean firing rate by just taking its mean, as in our EIF-〈w〉-approach (see also Ladenbauer et al., 2014). While they reported a good agreement with data using this approach, we have shown here that a significant fraction of recorded cells is not well described. Muller et al. (2007) used a mixed analytical and numerical approach to study various variants of LIF neurons with firing rate adaptation (see also Chizhov and Graham, 2007; Farkhooi et al., 2011). They applied a master equation for the adaptation variable, driven by a hazard function describing the instantaneous firing probability, which was obtained from fitting simulation data. Naud and Gerstner (2012) investigated the dynamics of a spike response model with adaptation (see also Toyoizumi et al., 2009). Richardson (2009) used a numerical approach (the threshold integration method introduced in Richardson, 2007) to investigate the static and dynamical transfer functions of a generalized EIF model (GEM), that include a calcium-based adaptation variable. None of these studies addressed the scenario of LIF-type neurons with diffusive noise, beyond the mean adaptation case, as we have done here. However, some of the above mentioned approaches can be used for time-dependent inputs, a situation we have not considered.

Other authors have investigated the effects of adaptation at the network level using mean-field approaches (Treves, 1993; van Vreeswijk and Hansel, 2001; Fuhrmann et al., 2002; Gigante et al., 2007; Augustin et al., 2013; Nicola and Campbell, 2013a,b; Ladenbauer et al., 2014). These studies were either performed in the absence of noise, or replaced adaptation by its average. The calculations performed here should allow to generalize these studies to the more realistic case in which both synaptic inputs and adaptation variable exhibit fluctuations with finite variance.

3.4. Relation to Input Statistics in vivo

Assuming white noise input implies, by definition, that all frequencies are equally present in the power spectrum, or—put differently—that synaptic inputs are (essentially) delta-like current pulses that introduce no (or only little) filtering of the Poisson spiking input. While this may be a reasonable assumption for AMPA- and GABAA-mediated synaptic events with decay time constants on the order of 1–3 ms (Kandel et al., 2000), much shorter than the typical membrane time constant of 10–20 ms (Kandel et al., 2000), for NMDA- and GABAB currents it is certainly not met. Yet, NMDA receptor channels are major synaptic charge carriers in neocortex (Spruston et al., 1995), and other receptors with slower kinetics like GABAB or cholinergic ACh receptors may significantly contribute as well (Connors et al., 1988; Deisz et al., 1997; Xiang et al., 1998). Thus, we considered colored (filtered) noise to allow synaptic inputs to have arbitrary decay time constants. Following Alijani and Richardson (2011), this can be achieved simply by reducing the input variance by a factor depending on the ratio of the synaptic to the membrane time constant, since the firing rates depend only little on the synaptic filtering as long as the probability density function of the membrane potential is not altered too much. This, in turn, conveniently allows to apply all the equations based on Gaussian white noise. Testing these ideas by simulations, we found that this simple modification works remarkably well for a wide range of synaptic time constants (cf. Figure 10). Deviations occurred mainly in the supra-rheobase regime where the spiking dynamics is mean-driven. By following the lines of Alijani and Richardson (2011), we derived an expression for the firing rates which explicitly corrects for the synaptic dynamics. This allows to reliably predict the spike rates also in the supra-rheobase regime as long as the synaptic time constant is not too large. Figure 11 furthermore illustrates that the V-distributions predicted by the theoretical MF approaches are in good agreement with those from in vivo patch-clamp recordings from prefrontal cortex (courtesy of Thomas Hahn, Central Institute of Mental health) where NMDA receptor densities are particularly high (Monaghan and Cotman, 1985).

3.5. Extensions and Possible Improvements

For practical reasons, it might be interesting to see how one can improve the EIF-F(w) approximation, but at the same time keep the computation time requirements as low as possible. Taking into account the empirical probability density of w does not improve results beyond its parametric-analytical instantiation through the truncated gamma-function. These theoretical approximations could potentially be further improved by incorporating the implicit V-dependency of w explicitly. However, these steps are mathematically challenging due to the exponential term in the AdEx equations modeling the action potential upswing. In order to at least test the predictive power of these possible enhancements, one could return to piecewise defined linear models (Hertäg et al., 2012) which still capture most of the physiological features observed in real neurons.

These approaches could also be carried forward to other simple neuron models, as the refractory EIF (rEIF, Badel et al., 2008b), the adaptive LIF model (aLIF, Hertäg et al., 2012) or the AdEx with subthreshold adaptation (i.e., a ≠ 0 in Equation 2). In Brunel et al. (2003), the generalized integrate-and-fire neuron (GIF) was addressed which accounts for subthreshold, but not spike-triggered adaptation. The second-order steady-state firing rate corrections obtained from both these approaches could easily be combined (a ≠ 0 and b ≠ 0) which would allow to incorporate simultaneously both types of adaptation.

The calculations of the static transfer function of the aEIF developed here can potentially provide the basis for a mean-field analysis of network asynchronous states for large networks of model cells whose parameters are derived from fitting real cells, bridging an important gap in the literature. The true diversity of cortical cell types could be captured by such an approach as well, by defining different pools of neurons with different average parameter settings (see e.g., Brunel and Wang, 2001). The tools provided here should therefore help to gain a deeper understanding of the mechanistic relationships among the physiological/biophysical or anatomical properties of the network, its neural dynamics, and the cognitive and behavioral phenomena emerging from the latter.

4. Models and Methods

4.1. The Solution of the Fokker-Planck Equation for the aEIF in the Long-Correlation Time Limit

By assuming ϵ ≪ 1 (long correlation time limit), the probability distribution and the firing rate can be expanded in powers of ϵ

In order to derive equations for the i-th order correction Pi and νi, respectively, the time series are inserted into the time-independent Fokker-Planck equation (Equation 11) and sorted by the power of ϵ. Thereby, the expression has to be expressed by its Taylor series expansion,

Equation (38) can be re-written by

with the set A given by A = {1, 2, …, L}. The set operation Ai is defined as creating a new set of all ordered pairs where an element of Ai is a vector of i elements of A. Hence, X is an i-dimensional vector which meets the additional constraint that the sum of its elements is equal L. By inserting the time series into the steady-state Fokker-Planck equation (Equation 11), one can derive a general differential equation for the Pi(V, z),

with the operator  defined by

defined by  and si(V, z) determined order by order by the inhomogeneous terms (see Equation 11). Hence, the general solution Pi(V, z) is directly given by

and si(V, z) determined order by order by the inhomogeneous terms (see Equation 11). Hence, the general solution Pi(V, z) is directly given by

with Si the general antiderivative of si. A complete description of the problem and the identification of Si(V, z) requires the definition of the boundary conditions:

with V+r and V−r denoting the membrane potential immediately above and below the reset value. The second boundary condition incorporates the jump constraint (spike triggered adaptation defined by adding a constant b at each spike). The i-th order firing rate correction is then given by the integral of the i-th order probability current correction at the upper threshold Vup over the z-space. By explicitly using the above-defined boundary conditions, one can also write,

The determination of the firing rate corrections comes down to the derivation of the Ri(z). Since P(V, z) is a probability distribution, the integral over the entire space is one and has to be independent of ϵ (Brunel and Latham, 2003). Consequently, one has

Furthermore, because of the ϵ − z − symmetry of the Fokker-Planck equation and the fact that the firing rate is the integral over the z-space from −∞ to ∞, all odd-order contributions to the firing rate are zero (ν2n + 1 = 0 ∀ n ∈ ℕ0). With this in mind and defining the inverse operator  −1 = J · K by

−1 = J · K by

the zeroth and second-order firing rate corrections can be derived. Since  P0(V, z) = 0, one can directly write

P0(V, z) = 0, one can directly write

with Θ(x) the Heaviside step function. The unknown function R0(z) will be determined at higher orders. Note that Equation (49) fulfills all boundary conditions (cf. with Equations 42, 43). Since  , for the first order correction P1(V, z), one obtains by incorporating the boundary conditions

, for the first order correction P1(V, z), one obtains by incorporating the boundary conditions

Deriving P2(V, z) in the same manner yields

The second boundary condition (43) leads to

Hence, in order to comply with the boundary conditions for w and V, R0(z) needs to satisfy

whose solution is

with

The second order correction to the firing rate ν2 is now given by integrating P2 over the z − V − space and applying the condition (46):

The final step is to determine the integral by integrating

once from −∞ to Vup that yields

which in turn leads to a differential equation for the unknown function R1(z):

The solution of Equation (59) is given by R0 multiplied with a polynomial containing only odd-order contributions. The integral can be conveniently obtained by integrating (59) twice over z:

Equations (56) and (60) determine the second order correction ν2.

4.2. The Derivation of the Variance of w

Along the lines of Takács (1955), we introduce a random variable η(t) that denotes the sum of the values of w in the time interval (0, t),

with w(t − tn) defined as

In order to derive the moments of the stochastic process, we consider the value of the sum of the signals directly before the beginning of the n-th event,

with Δt the n-th interspike interval. Any moment can be derived directly from this relation by the recurrence formula (Takács, 1955),

Here, E{•} denotes the expectancy value, and G represents the cumulative distribution for the interspike intervals. If the limit exists for all k, then they can be obtained by Takács (1955)

The first two moments E1 and E2 are then given by

If a limiting distribution for w exists (see p. 369, Theorem 2 in Takács, 1955), its j-th moment E*j can be derived by

with Ej given by Equation (66) and ν the firing rate of the neuron considered. The variance of the stochastic process is defined by the first two moments and yields

with β1 the Laplace transform of the ISI-distribution at 1/τw, and E*1 the mean value of w,

We assume that the ISI distribution is well approximated by a Gamma-probability density function. In order to compute β1, the distributional properties have to be parameterized by its mean μISI directly given by the inverse of the firing rate ν and the variance σ2ISI. The moments can be theoretically derived by the following recurrence relation (see Tuckwell, 1988),

with x = Vr and f(x) defined by

with the effective potential . The variance σ2ISI is directly obtained by the 1st and 2nd moments which are determined by the solution of the differential Equation (72),

with the general antiderivative of f(x). By parameterizing the constants k and Θ of the Gamma-distribution P(x; k, θ) by

the Laplace transform of the ISI-distribution at 1/τw yields

4.3. The Derivation of the Second-Order Firing Rate Correction for Synaptic Kinetics

In order to compute the firing rate correction for the aEIF model under synaptic dynamics, we have to consider the EIF with averaged w-component and synaptic noise obeying the following differential equation

When V reaches “infinity,” the membrane potential is reset to Vr. The FP equation with the boundary conditions explicitly included, is given by Alijani and Richardson (2011)

with , (fP)th denoting fP evaluated in the limit V → ∞ and

By using the respective continuity equation and the FP-equation at the same time, one can derive the following relation for the firing rate (Alijani and Richardson, 2011)

where . By assuming k ≪ 1, the probability distribution and the firing rate can be expanded in powers of k. Consequently, the zeroth order which is already known by the Gaussian white noise case can be written by

with Φ0 directly given by the operator  z and the boundary conditions:

z and the boundary conditions:

By making use of the relations

and evaluating Equation (81) at first and second order, one obtains

The derivation of

allows to deduce a differential equation for ν2 [see Equation (83)] (Alijani and Richardson, 2011):

This can be rewritten into (Alijani and Richardson, 2011)

By making use of the derivative of Q0, one can simplify the previous relation by

Solving the differential equation for {z2} yields

Taking the integral over V from −∞ to ∞ yields an expression for the second-order firing rate correction

Hence, the firing rate in the colored noise case can be computed by νCN = ν0 + k2ν2, CN with ν2, CN given by (96). By assuming that the w-distribution in the case of synaptic dynamics can still be replaced by a Gamma function parametrized by mean and variance of the adaptation current, one obtains Equation (34).

4.4. Simulation and Analysis Details

All simulations were run on a 2.4 GHz Intel(R) Xeon(R) CPU E5620. The single-cell simulations for the purpose of evaluating the prediction power of the theoretical approaches were 50–100 s long, while the first 5 s were discarded and only the remaining part was applied to estimate the steady-state firing rates. The time increment was 0.05 ms. Simulations with smaller time steps did not change the results. For evaluating the subthreshold voltage fluctuations spikes were cut out in a window of ±10 ms around an action potential. All implementations were written in Matlab and C. Matlab-based code and the data sets used in this study can be obtained from the authors on request & and will be made publicly available at www.bccn-heidelberg-mannheim.de.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgment

This work was funded by grants from the German ministry for education and research (BMBF 01ZX1311A, BMBF 01GQ1003B) and the Deutsche Forschungsgemeinschaft to Daniel Durstewitz (Du 354/6-1 and 7-2). Nicolas Brunel was supported by the SI-CODE project of the Future and Emerging Technologies (FET) programme within the Seventh Framework Programme for Research of The European Commission, under FET-Open grant number: FP7284553. We are very grateful to Dr. Thomas Hahn (Central Institute of Mental Health, Theoretical Neuroscience, Medical Faculty Mannheim of Heidelberg University) for providing in vivo patch-clamp recordings from the rodent PFC for Figure 11.

References

Abbott, L. F., and van Vreeswijk, C. (1993). Asynchronous states in networks of pulse–coupled oscillators. Phys. Rev. E 48, 1483–1490. doi: 10.1103/PhysRevE.48.1483

Alijani, A. K., and Richardson, M. J. (2011). Rate response of neurons subject to fast or frozen noise: from stochastic and homogeneous to deterministic and heterogeneous populations. Phys. Rev. E 84:011919. doi: 10.1103/PhysRevE.84.011919

Amit, D. J., and Brunel, N. (1997a). Dynamics of a recurrent network of spiking neurons before and following learning. Network 8, 373–404. doi: 10.1088/0954-898X/8/4/003

Amit, D. J., and Brunel, N. (1997b). Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb. Cortex 7, 237–252. doi: 10.1093/cercor/7.3.237

Amit, D. J., and Tsodyks, M. V. (1991a). Quantitative study of attractor neural network retrieving at low spike rates: I. substrate–spikes, rates and neuronal gain. Netw. Comput. Neural Syst. 2, 259–273. doi: 10.1088/0954-898X/2/3/003

Amit, D. J., and Tsodyks, M. V. (1991b). Quantitative study of attractor neural networks retrieving at low spike rates: II. low-rate retrieval in symmetric networks. Netw. Comput. Neural Syst. 2, 275–294. doi: 10.1088/0954-898X/2/3/004

Astman, N., Gutnick, M. J., and Fleidervish, I. A. (2006). Persistent sodium current in layer 5 neocortical neurons is primarily generated in the proximal axon. J. Neurosci. 26, 3465–3473. doi: 10.1523/JNEUROSCI.4907-05.2006

Augustin, M., Ladenbauer, J., and Obermayer, K. (2013). How adaptation shapes spike rate oscillations in recurrent neuronal networks. Front. Comput. Neurosci. 7:9. doi: 10.3389/fncom.2013.00009

Badel, L., Lefort, S., Berger, T. K., Petersen, C. C. H., Gerstner, W., and Richardson, M. J. E. (2008a). Extracting non–linear integrate–and–fire models from experimental data using dynamic i–v curves. Biol. Cybern. 99, 361–370. doi: 10.1007/s00422-008-0259-4

Badel, L., Lefort, S., Brette, R., Petersen, C., Gerstner, W., and Richardson, M. (2008b). Dynamic IV curves are reliable predictors of naturalistic pyramidal–neuron voltage traces. J. Neurophysiol. 99, 656–666. doi: 10.1152/jn.01107.2007

Balaguer-Ballester, E., Lapish, C. C., Seamans, J. K., and Durstewitz, D. (2011). Attracting dynamics of frontal cortex ensembles during memory-guided decision-making. PLoS Comput. Biol. 7:e1002057. doi: 10.1371/journal.pcbi.1002057

Barbieri, R., Quirk, M. C., Frank, L. M., Wilson, M. A., and Brown, E. N. (2001). Construction and analysis of non–poisson stimulus–response models of neural spiking activity. J. Neurosci. Methods 105, 25–37. doi: 10.1016/S0165-0270(00)00344-7

Benda, J., and Herz, A. V. M. (2003). A universal model for Spike–Frequency adaptation. Neural Comput. 15, 2523–2564. doi: 10.1162/089976603322385063

Braitenberg, V., and Schüz, A. (1991). Anatomy of the Cortex: Statistics and Geometry. New York, NY: Springer-Verlag Publishing. doi: 10.1007/978-3-662-02728-8

Brette, R., and Gerstner, W. (2005). Adaptive exponential integrate–and–fire model as an effective description of neuronal activity. J. Neurophysiol. 94, 3637–3642. doi: 10.1152/jn.00686.2005

Brown, D. A., and Adams, P. R. (1980). Muscarinic suppression of a novel voltage-sensitive k+ current in a vertebrate neurone. Nature 283, 673–676. doi: 10.1038/283673a0

Brunel, N. (2000a). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. doi: 10.1023/A:1008925309027

Brunel, N. (2000b). Persistent activity and the single–cell frequency–current curve in a cortical network model. Network Comput. Neural Syst. 11, 261–280. doi: 10.1088/0954-898X/11/4/302

Brunel, N., and Hakim, V. (1999). Fast global oscillations in networks of integrate–and–fire neurons with low firing rates. Neural Comput. 11, 1621–1671. doi: 10.1162/089976699300016179

Brunel, N., Hakim, V., and Richardson, M. J. E. (2003). Firing–rate resonance in a generalized integrate–and–fire neuron with subthreshold resonance. Phys. Rev. E 67:051916. doi: 10.1103/PhysRevE.67.051916

Brunel, N., and Hansel, D. (2006). How noise affects the synchronization properties of recurrent networks of inhibitory neurons. Neural Comput. 18, 1066–1110. doi: 10.1162/neco.2006.18.5.1066

Brunel, N., and Latham, P. E. (2003). Firing rate of the noisy quadratic integrate–and–fire neuron. Neural Comput. 15, 2281–2306. doi: 10.1162/089976603322362365

Brunel, N., and Sergi, S. (1998). Firing frequency of leaky integrate–and–fire neurons with synaptic current dynamics. J. Theor. Biol. 195, 87–95. doi: 10.1006/jtbi.1998.0782

Brunel, N., and Wang, X.-J. (2001). Effects of neuromodulation in a cortical network model of object working memory dominated by recurrent inhibition. J. Comput. Neurosci. 11, 63–85. doi: 10.1023/A:1011204814320

Burkitt, A. N., Meffin, H., and Grayden, D. B. (2003). Study of neuronal gain in a conductance-based leaky integrate-and-fire neuron model with balanced excitatory and inhibitory synaptic input. Biol. cybern. 89, 119–125. doi: 10.1007/s00422-003-0408-8

Chizhov, A. V., and Graham, L. J. (2007). Population model of hippocampal pyramidal neurons, linking a refractory density approach to conductance-based neurons. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 75:011924. doi: 10.1103/PhysRevE.75.011924

Clopath, Jolivet, R., Rauch, A., Lüscher, H., and Gerstner, W. (2007). Predicting neuronal activity with simple models of the threshold type: adaptive exponential Integrate–and–Fire model with two compartments. Neurocomputing 70, 1668–1673. doi: 10.1016/j.neucom.2006.10.047

Connors, B., Malenka, R., and Silva, L. (1988). Two inhibitory postsynaptic potentials, and GABAA and GABAB receptor-mediated responses in neocortex of rat and cat. J. Physiol. 406, 443–468.

Deco, G., Mart, D., Ledberg, A., Reig, R., and Sanchez Vives, M. V. (2009). Effective reduced diffusion-models: a data driven approach to the analysis of neuronal dynamics. PLoS Comput. Biol. 5:e1000587. doi: 10.1371/journal.pcbi.1000587

Deisz, R. A., Billard, J.-M., and Zieglgänsberger, W. (1997). Presynaptic and postsynaptic GABAB receptors of neocortical neurons of the rat in vitro: differences in pharmacology and ionic mechanisms. Synapse 25, 62–72. doi: 10.1002/(SICI)1098-2396(199701)25:1<62::AID-SYN8>3.0.CO;2-D

Del Giudice, P., Fusi, S., and Mattia, M. (2003). Modelling the formation of working memory with networks of integrate-and-fire neurons connected by plastic synapses. J. Physiol. Paris 97, 659–681. doi: 10.1016/j.jphysparis.2004.01.021

Destexhe, A., Rudolph, M., and Paré, D. (2003). The high–conductance state of neocortical neurons in vivo. Nat. Rev. Neurosci. 4, 739–751. doi: 10.1038/nrn1198

Dobrunz, L. E., and Stevens, C. F. (1997). Heterogeneity of release probability, facilitation, and depletion at central synapses. Neuron 18, 995–1008. doi: 10.1016/S0896-6273(00)80338-4

Durstewitz, D. (2009). Implications of synaptic biophysics for recurrent network dynamics and active memory. Neural Netw. 22, 1189–1200. doi: 10.1016/j.neunet.2009.07.016

Durstewitz, D., and Gabriel, T. (2007). Dynamical basis of irregular spiking in NMDA–Driven prefrontal cortex neurons. Cereb. Cortex 17, 894–908. doi: 10.1093/cercor/bhk044

Durstewitz, D., and Seamans, J. K. (2012). “How can computational models be better utilized for understanding and treating schizophrenia?,” in Schizophrenia: Evolution and Synthesis, Chapter 12, eds S. M. Silverstein, B. Moghaddam, and T. Wykes (Cambridge, MA: MIT Press), 195–207.

Farkhooi, F., Muller, E., and Nawrot, M. P. (2011). Adaptation reduces variability of the neuronal population code. Phys. Rev. E 83:050905. doi: 10.1103/PhysRevE.83.050905

Fleidervish, I., and Gutnick, M. (1996). Kinetics of slow inactivation of persistent sodium current in layer v neurons of mouse neocortical slices. J. Neurophysiol. 76, 2125–2130.

Fourcaud–Trocmé, N., Hansel, D., van Vreeswijk, C., and Brunel, N. (2003). How spike generation mechanisms determine the neuronal response to fluctuating inputs. J. Neurosci. 23, 11628–11640.

Fuhrmann, G., Markram, H., and Tsodyks, M. (2002). Spike frequency adaptation and neocortical rhythms. J. Neurophysiol. 88, 761–770. doi: 10.1152/jn.00848.2001

Fusi, S., and Mattia, M. (1999). Collective behavior of networks with linear (VLSI) integrate–and–fire neurons. Neural Comput. 11, 633–652. doi: 10.1162/089976699300016601

Gerstner, W., and Naud, R. (2009). How good are neuron models? Science 326, 379–380. doi: 10.1126/science.1181936

Gigante, G., Mattia, M., and Del Giudice, P. (2007). Diverse population-bursting modes of adapting spiking neurons. Phys. Rev. Lett. 98:148101. doi: 10.1103/PhysRevLett.98.148101

Gilbert, E. N., and Pollak, H. O. (1960). Amplitude distribution of shot noise. Bell Syst. Tech. J. 39, 333–350. doi: 10.1002/j.1538-7305.1960.tb01603.x

Haider, B., Duque, A., Hasenstaub, A. R., and McCormick, D. A. (2006). Neocortical network activity in vivo is generated through a dynamic balance of excitation and inhibition. J. Neurosci. 26, 4535–4545. doi: 10.1523/JNEUROSCI.5297-05.2006

Helmchen, F., Imoto, K., and Sakmann, B. (1996). Ca2+ buffering and action potential-evoked Ca2+ signaling in dendrites of pyramidal neurons. Biophys. J. 70, 1069–1081. doi: 10.1016/S0006-3495(96)79653-4

Hertäg, L., Hass, J., Golovko, T., and Durstewitz, D. (2012). An approximation to the adaptive exponential integrate–and–fire neuron model allows fast and predictive fitting to physiological data. Front. Comput. Neurosci. 6:62. doi: 10.3389/fncom.2012.00062

Izhikevich, E. (2003). Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572. doi: 10.1109/TNN.2003.820440

Izhikevich, E. M., and Edelman, G. M. (2008). Large–scale model of mammalian thalamocortical systems. Proc. Natl. Acad. Sci. U.S.A. 105, 3593–3598. doi: 10.1073/pnas.0712231105

Jahr, C. E., and Stevens, C. F. (1990). Voltage dependence of NMDA–Activated macroscopic conductances predicted by Single–Channel kinetics. J. Neurosci. 10, 3178–3182.

Jolivet, R., Kobayashi, R., Rauch, A., Naud, R., Shinomoto, S., and Gerstner, W. (2008). A benchmark test for a quantitative assessment of simple neuron models. J. Neurosci. Methods 169, 417–424. doi: 10.1016/j.jneumeth.2007.11.006

Jolivet, R., Rauch, A., Lüscher, H., and Gerstner, W. (2006). Predicting spike timing of neocortical pyramidal neurons by simple threshold models. J. Comput. Neurosci. 21, 35–49. doi: 10.1007/s10827-006-7074-5

Kandel, E. R., Markram, H., Matthews, P. M., Yuste, R., and Koch, C. (2013). Neuroscience thinks big (and collaboratively). Nat. Rev. Neurosci. 14, 659–664. doi: 10.1038/nrn3578

Kandel, E. R., Schwartz, J. H., and Jessell, T. M. (2000). Principles of Neural Science, Vol. 4. New York, NY: McGraw-Hill.

La Camera, G., Rauch, A., Lüscher, H.-R., Senn, W., and Fusi, S. (2004). Minimal models of adapted neuronal response to in vivo–Like input currents. Neural Comput. 16, 2101–2124. doi: 10.1162/0899766041732468