A theory of power laws in human reaction times: insights from an information-processing approach

- 1Departamento de Óptica, Facultad de Ciencias, Universidad de Granada, Granada, Spain

- 2Department of Physics, Institute of Biomaterials and Biomedical Engineering, University of Toronto, Toronto, ON, Canada

Human reaction time (RT) can be defined as the time elapsed from stimulus presentation until a reaction or response occurs (e.g., manual, verbal, saccadic, etc.). RT has been a fundamental measure of sensory-motor latency at suprathreshold conditions for more than a century and is one of the hallmarks of human performance in everyday tasks (Luce, 1986; Meyer et al., 1988). Examples include the measurement of RTs in sports science, driving safety, and aging. Under repeated experimental conditions the RT is not constant but fluctuates irregularly over time. Stochastic fluctuations of RTs are considered a benchmark for modeling neural latency mechanisms at a macroscopic scale (Luce, 1986; Smith and Ratcliff, 2004). Power-law behavior has been reported in at least three major types of experiments. (1) RT distributions exhibit extreme values. The probability density function (pdf) is often heavy-tailed and can lead to an asymptotic power-law distribution in the right tail (Holden et al., 2009; Moscoso del Prado Martín, 2009; Sigman et al., 2010). (2) RT variability (e.g., variance) is not bounded and usually shows a power relation with the mean, with an exponent β close to unity (Luce, 1986; Wagenmakers and Brown, 2007; Holden et al., 2009; Medina and Díaz, 2011, 2012). This relationship is a manifestation of Taylor's law (also called “fluctuation scaling”) (Taylor, 1961; Eisler et al., 2008), although departures from a power law have been reported (Eisler et al., 2008; Schmiedek et al., 2009). (3) Mean RTs decay as the stimulus strength increases (Cattell, 1886), a relation that is well described by a truncated power function written in the form of Piéron's law (Piéron, 1914, 1920; Luce, 1986):

$$t_{n+1} = t_n + d\,S^{-p} \qquad (1)$$

where $t_{n+1}$ indicates the mean RT, $S$ is the stimulus strength (e.g., loudness intensity, odor concentration, etc.), $t_n$ represents the asymptotic component of the mean RT reached at very high stimulus strength, and $d$ and $p$ are two parameters (Luce, 1986). The sub-index $n$ denotes the time step or order and indicates a causal process: $t_{n+1}$ grows from the previous stage $t_n$ by an additive term that depends on the stimulus strength $S$ (Medina, 2009). The previous stage $t_n$ contains those processes at the threshold at an earlier time, and $t_{n+1}$ in Equation (1) describes those processes at suprathreshold conditions at a later time (Norwich et al., 1989; Medina, 2009). The origin of power-law behavior in RTs has been a long-standing issue. Considerable effort has been dedicated to modeling each power relation separately. While it is plausible that power laws in RTs share a limited number of mechanisms, a successful unifying theory is still lacking. The ubiquity of power laws in many biological and physical systems has revealed the existence of multiple generative mechanisms (Mitzenmacher, 2004; Newman, 2005; Sornette, 2007; Frank, 2009). Research on a unifying framework that links power laws in RTs is important for better understanding the emergent complex behavior of neural activity in simple decisions and in dysfunctional states.
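As a minimal numerical sketch of Piéron's law, assuming the standard form in which the mean RT equals the asymptotic term plus d times S raised to the power −p, the following Python snippet evaluates the mean RT over a range of stimulus strengths. The function name `pieron_mean_rt` and all parameter values are hypothetical and chosen only for illustration; they are not taken from the cited studies.

```python
import numpy as np

def pieron_mean_rt(S, t_n, d, p):
    """Mean RT under the assumed form of Pieron's law: t_n + d * S**(-p)."""
    S = np.asarray(S, dtype=float)
    if np.any(S <= 0):
        raise ValueError("stimulus strength S must be positive")
    return t_n + d * S ** (-p)

# Hypothetical values (seconds, arbitrary stimulus units), for illustration only.
strengths = np.array([1.0, 2.0, 4.0, 8.0, 1e6])
rts = pieron_mean_rt(strengths, t_n=0.2, d=0.3, p=1.0)

# Mean RT decreases with stimulus strength and approaches t_n for very strong stimuli.
for s, rt in zip(strengths, rts):
    print(f"S = {s:g}: mean RT = {rt:.4f}")
```

The last entry uses a very large stimulus strength to show the approach to the asymptotic term.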

We propose that type (3) power laws govern the threshold for RT; and it follows consequently that power laws govern suprathreshold fluctuations in RT. Piéron's law is valid for each sensory modality (Chocholle, 1940; Banks, 1973; Luce, 1986; Overbosch et al., 1989; Pins and Bonnet, 1996; Bonnet et al., 1999), and in both simple and choice reaction times (Schweickert et al., 1988; Pins and Bonnet, 1996). Instead of diffusion models (Luce, 1986; Smith and Ratcliff, 2004), we use elements from information theory and statistical physics as the principal conceptual tools. We also discuss random multiplicative processes as an important approach to Piéron's law and power laws in RTs.

In our information-theoretic formalism, the information entropy function H expresses a measure of uncertainty within a sensory neural network. High information entropy values indicate high uncertainty and vice versa. Information is related to the drop in uncertainty (measured, e.g., in bits). It is postulated that sensory perception is not an instantaneous act but always takes time (Norwich, 1993). Initially, for a given external input signal, the sensory system encodes the stimulus efficiently; it then adapts and transfers information over time. Therefore, the H-function depends explicitly on time to represent a continuous process of sensory adaptation (Norwich, 1993). The human RT can be re-defined as the time needed to accumulate ΔH bits of information after efficient encoding (Norwich et al., 1989; Norwich, 1993):

$$\Delta H = H(1/t_0) - H(1/t_{n+1}) \qquad (2)$$

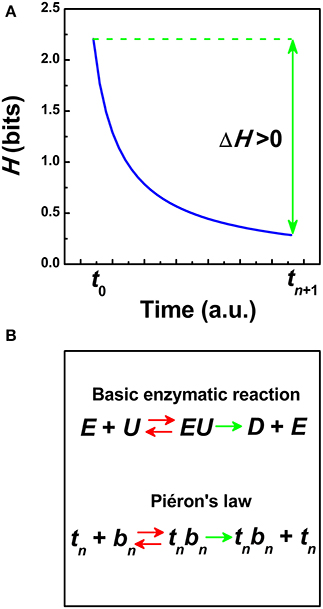

Figure 1A represents the entropy function H in Equation (2). At least two stages can be differentiated. The H-function evolves from a previous state of maximum uncertainty reached at the encoding time $t_0$, $H(1/t_0)$, to a final adapting stage with a lower uncertainty, $H(1/t_{n+1})$, where a reaction occurs ($t_{n+1} > t_0$). Maximum production of entropy followed by a reduction of uncertainty ΔH as a function of time are concepts introduced from statistical physics, the latter as expressed by Boltzmann (Norwich, 1993). Based on an analytical model of the H-function (Norwich, 1993), the gain of information ΔH is connected with the formation of an internal threshold in Equation (1) (Norwich et al., 1989; Medina, 2009):

Figure 1. (A) Schematic representation of the information entropy function $H(1/t)$ (in bits) as a function of the time $t$ (Norwich, 1993). The transfer of information ΔH is defined in Equation (2) from the encoding time $t_0$ until a reaction occurs at $t_{n+1}$. (a.u.) = arbitrary units. (B) Schematic representation of a model of hyperbolic growth in reaction times based on Piéron's law and analogous to Michaelis-Menten kinetics in biochemistry (i.e., the Hill equation) (Pins and Bonnet, 1996). In Michaelis-Menten kinetics, an enzyme E binds to a substrate U to form a complex EU that is converted into a product D and the enzyme E. In Piéron's law, those neurons tuned at the time $t_n$ are bound to those neurons that form the internal threshold $S_0$ in $b_n = (S_0/S)^p$, producing the term $t_n b_n$, which is converted into the product $t_n b_n$ plus the time $t_n$. Red double arrows indicate that the “reaction” is reversible, whereas green single arrows indicate that the “reaction” goes only one way.

Piéron's law can be written as follows:

$$t_{n+1} = t_n\,(1 + b_n) \qquad (4)$$

where $b_n = (S_0/S)^p$. The parameter $S_0$ represents an estimate of the internal threshold that controls the RT: an external incoming signal $S$ exceeding $S_0$ leads to an RT response (Norwich et al., 1989). Furthermore, $S_0$ varies with several factors and provides the sensitivity ($1/S_0$) of the sensory system (e.g., in vision, the human contrast sensitivity function) (Felipe et al., 1993; Murray and Plainis, 2003). The model of Piéron's law in Equation (4) has a number of important properties. First property, Equation (4) indicates the existence of multiplicative interactions in a cascade between different time scales: the mean RT is expressed in terms of the asymptotic time, $t_n$, and Piéron's law is written in multiples of the threshold $S_0$. That is, we work with dimensionless ratios $S_0/S$ (Norwich, 1993). Different interpretations of the exponent $p$ have been reported. $S_0^p$ could be interpreted as the transfer or transducer function between neurons (Copelli et al., 2002; Billock and Tsou, 2011) at the threshold. The exponent $p$ usually takes non-integer values and could indicate a signature of self-organized criticality in a phase transition (Kinouchi and Copelli, 2006). Here the concept of phase transition does not refer to the classical view of different states of matter in thermodynamics (e.g., liquid vs. gas), but to different states of connectivity between neurons as modeled by branching processes (Kinouchi and Copelli, 2006). Alternatively, power functions $S_0^p$ can be derived from Mackay transforms (Mackay, 1963), and the exponent $p$ could represent oscillatory synchronization states between neurons (Billock and Tsou, 2005, 2011). The model of Piéron's law in Equation (4) is a useful alternative approach in which optimal information transfer is related to the entropy function H (Norwich, 1993). Low values of $p$ will promote a minimum in ΔH after efficient encoding, i.e., an Infomin principle at the macroscopic scale (Medina, 2011, 2012).
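The link between the multiplicative form and the additive form of Piéron's law can be made explicit with a short worked derivation. This is a sketch that assumes the additive form is $t_{n+1} = t_n + d\,S^{-p}$ and the multiplicative form is $t_{n+1} = t_n(1 + b_n)$ with $b_n = (S_0/S)^p$; under those assumptions, matching terms fixes how $d$ depends on the threshold $S_0$.

```latex
\begin{align}
t_{n+1} &= t_n\,(1 + b_n), \qquad b_n = \left(\frac{S_0}{S}\right)^{p} \\
        &= t_n + t_n\, S_0^{\,p}\, S^{-p} \\
\text{compare with}\quad t_{n+1} &= t_n + d\, S^{-p}
\quad\Longrightarrow\quad d = t_n\, S_0^{\,p}.
\end{align}
```

In this reading, any fluctuation in $S_0$ enters the RT only through the dimensionless factor $b_n$, which is why the model is naturally multiplicative.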

Second property, the threshold barrier $S_0$ is not a fixed static value but is unstable and fluctuates over time due to the presence of endogenous or internal noise (Faisal et al., 2008). Consequently, RTs are influenced and modified by neural noise. Therefore, Equation (4) is not deterministic and belongs to a general class of discrete-time stochastic equations that has been used in many applications such as epidemics, finance, etc. (Levy and Solomon, 1996; Sornette and Cont, 1997; Takayasu et al., 1997; Newman, 2005; Sornette, 2006). The term $b_n$ is a random and positive multiplicative factor that depends on the temporal fluctuations of $S_0$ and thus on ΔH. It has been demonstrated that the model of Piéron's law in Equation (4) produces type (1) power laws. RT pdfs obey a transition from a log-normal distribution into a power law in the right tail (Medina, 2012). If RTs are longer than the asymptotic term, $t_n$, the RT pdf is distributed as a power law with an exponent γ that depends on the exponent $p$ of Piéron's law (Medina, 2012): $\gamma = 1 + (c/p)$, $c$ being a constant. Two different regimes are observed: for values $p > 0.6$ the central moments diverge, and for $p \leq 0.6$ they are finite (Medina, 2012). Therefore, long RTs compared to the asymptotic term $t_n$ are considered intermittent events over time. Their distribution is characterized by power-law pdfs that might have finite or infinite variance. A cautionary note should be mentioned here. The magnitude of $p$ could also depend on the metric selected for the stimulus strength $S$, and values different from the boundary $p \cong 0.6$ might be possible. For instance, this is important when testing power-law RT pdfs in color vision because an appropriate color contrast metric has not been established (Medina and Diaz, 2010).
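The effect of a fluctuating threshold on single-trial RTs can be illustrated with a small simulation. This is only a sketch under loudly stated assumptions: the RT on each trial is taken as the asymptotic term times one plus a random factor (S0/S)^p, and the threshold S0 is assumed to fluctuate log-normally from trial to trial. The log-normal choice, the function name `simulate_rts`, and all parameter values are hypothetical; this single-step sketch reproduces only a right-skewed, log-normal-like body and does not reproduce the power-law tail derived in Medina (2012).

```python
import numpy as np

def simulate_rts(S, t_n, p, s0_median, s0_sigma, n_trials, seed=0):
    """Draw single-trial RTs as t_n * (1 + b), with b = (S0 / S)**p.

    ASSUMPTION: the threshold S0 is log-normally distributed across trials.
    This is an illustrative noise model, not the one used in the cited work.
    """
    rng = np.random.default_rng(seed)
    s0 = s0_median * rng.lognormal(mean=0.0, sigma=s0_sigma, size=n_trials)
    b = (s0 / S) ** p          # random, strictly positive multiplicative factor
    return t_n * (1.0 + b)

# Hypothetical parameter values, for illustration only.
rts = simulate_rts(S=2.0, t_n=0.2, p=1.0, s0_median=1.0,
                   s0_sigma=0.5, n_trials=10_000)

# Every simulated RT exceeds the asymptotic term, and the distribution is right-skewed.
print("min RT:", rts.min())
print("mean RT:", rts.mean(), "median RT:", np.median(rts))
```

Because the factor b is strictly positive, no simulated RT can fall below the asymptotic term, and the mean exceeding the median reflects the right skew introduced by multiplicative noise.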

Third property, the reciprocal of Piéron's law is invariant under rescaling (Chater and Brown, 1999; Medina, 2009). Taking the reciprocal of the mean RT, $R = 1/t_{n+1}$, and the reciprocal of the irreducible asymptotic term, $R_{max} = 1/t_n$, in Equation (4) gives $R = R_{max}/[1 + (S_0/S)^p]$. Therefore, the reciprocal of Equation (4) defines an affine transformation over multiple time scales that can be mapped into the Naka-Rushton equation at the cellular level (Naka and Rushton, 1966) and the Michaelis-Menten equation in enzyme reactions at the sub-cellular level (Michaelis and Menten, 1913; Pins and Bonnet, 1996). This suggests that some general properties of RT patterns governed by Piéron's law could be mirrored in part in the dynamics of the Naka-Rushton equation and/or Michaelis-Menten kinetics (Medina, 2009, 2012). The Naka-Rushton equation represents a canonical form of non-linear gain control in neural responses before saturation (Albrecht and Hamilton, 1982; Billock and Tsou, 2011; Carandini and Heeger, 2012). Threshold normalization in the Naka-Rushton equation is often modeled as a pool of many neurons tuned to different stimulus properties (Heeger, 1992; Carandini and Heeger, 2012). In the Michaelis-Menten equation, the normalization factor is the Michaelis constant, which indicates the substrate concentration at a reference value. The Michaelis constant is related to the substrate's affinity for the enzyme and depends on many factors (Murray, 2002). Figure 1B represents a schematic model of RT growth based on Piéron's law and an analogy with enzyme kinetics.
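The mapping between the reciprocal of Piéron's law and the Naka-Rushton form can be checked numerically. The sketch below assumes the multiplicative form in which the mean RT equals the asymptotic term times 1 + (S0/S)^p, and the usual saturating Naka-Rushton expression Rmax·S^p/(S^p + S0^p). Function names and parameter values are hypothetical and serve only as an illustration.

```python
import numpy as np

def reciprocal_pieron(S, t_n, S0, p):
    """R = 1 / t_{n+1}, with t_{n+1} = t_n * (1 + (S0 / S)**p)."""
    return 1.0 / (t_n * (1.0 + (S0 / S) ** p))

def naka_rushton(S, R_max, S0, p):
    """Saturating gain-control form: R_max * S**p / (S**p + S0**p)."""
    return R_max * S ** p / (S ** p + S0 ** p)

# Hypothetical values, for illustration only.
S = np.logspace(-2, 2, 50)
t_n, S0, p = 0.2, 1.5, 0.8

R_a = reciprocal_pieron(S, t_n, S0, p)
R_b = naka_rushton(S, 1.0 / t_n, S0, p)

# The two expressions agree to floating-point precision over four decades of S.
print("max abs difference:", np.max(np.abs(R_a - R_b)))
```

At a stimulus strength equal to the threshold, both expressions give half of the maximum rate, which is the role the semi-saturation constant plays in the Naka-Rushton equation and the Michaelis constant plays in enzyme kinetics.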

The exponent $p$ of Piéron's law could be related to the scaling exponent β of the variance-mean relationship in the type (2) power law. A power-law relationship between the variance and the mean of the stimulus population has been proposed in the H-function (Norwich, 1993), and this relationship could be compatible with the RT variance-mean relationship in the regime around $p > 0.6$ (Medina, 2011, 2012). Alternative approaches have explored α-stable processes to relate type (1) power laws and long-range correlations (Ihlen, 2013). Tweedie exponential dispersion models are also able to describe type (2) power laws in many biological and physical processes (Eisler et al., 2008; Kendal and Jørgensen, 2011; Moshitch and Nelken, 2014). However, a connection between Piéron's law and α-stable and Tweedie models remains unknown.
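In practice, the scaling exponent β of a variance-mean power law is commonly estimated by a straight-line fit in log-log coordinates. The following sketch assumes the Taylor's-law form variance = a · mean^β and uses synthetic, noise-free data with hypothetical values; it is not an analysis of any data set cited here, and real RT data would call for more careful estimation.

```python
import numpy as np

def fit_fluctuation_scaling(means, variances):
    """Least-squares fit of log(variance) = log(a) + beta * log(mean).

    Returns (beta, a) for the assumed relation variance = a * mean**beta.
    """
    slope, intercept = np.polyfit(np.log(means), np.log(variances), 1)
    return slope, np.exp(intercept)

# Synthetic per-condition mean RTs (seconds) and variances, hypothetical values.
means = np.array([0.25, 0.30, 0.40, 0.55, 0.80])
variances = 0.05 * means ** 1.2

beta, a = fit_fluctuation_scaling(means, variances)
print(f"beta = {beta:.3f}, a = {a:.3f}")
```

With noise-free synthetic input the fit recovers the generating exponent and prefactor; with empirical data the estimate depends on the range of means sampled and on the fitting method.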

In summary, maximum entropy H followed by adaptation over time in Equation (2) leads to a type (3) power law, Piéron's law in Equation (1). The H-function also explains many empirical relations of sensory perception (Norwich, 1993). An important message of the entropy function H is that the term $d$ in Piéron's law depends explicitly on a sensory threshold $S_0$ through the power law in Equation (3). There is also experimental evidence that RTs and threshold-based sensitivities are mediated by common sensory processes (Felipe et al., 1993; Murray and Plainis, 2003). Therefore, temporal fluctuations at the sensory threshold $S_0$ affect RT fluctuations at suprathreshold conditions, and this can be described by means of a simple random multiplicative process in Equation (4). The same multiplicative process produces non-Gaussian RT distributions and type (1) power-law RT pdfs. The model of Piéron's law in Equation (4) also generates fractal-like behavior that extends to smaller time scales. The reciprocal of Equation (4) provides a direct link with neural gain control in single neurons, as exemplified by the Naka-Rushton equation, and a possible analogy with enzyme kinetics within neurons, as exemplified by Michaelis-Menten kinetics.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Albrecht, D. G., and Hamilton, D. B. (1982). Striate cortex of monkey and cat: contrast response function. J. Neurophysiol. 48, 217–237.

Banks, W. P. (1973). Reaction time as a measure of summation warmth. Percept. Psychophys. 13, 321–327. doi: 10.3758/BF03214147

Billock, V. A., and Tsou, B. H. (2005). Sensory recoding via neural synchronization: integrating hue and luminance into chromatic brightness and saturation. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 22, 2289–2298. doi: 10.1364/JOSAA.22.002289

Billock, V. A., and Tsou, B. H. (2011). To honor Fechner and obey Stevens: relationships between psychophysical and neural nonlinearities. Psychol. Bull. 137, 1–18. doi: 10.1037/a0021394

Bonnet, C., Zamora, M. C., Buratti, F., and Guirao, M. (1999). Group and individual gustatory reaction times and Pieron's law. Physiol. Behav. 66, 549–558. doi: 10.1016/S0031-9384(98)00209-1

Carandini, M., and Heeger, D. J. (2012). Normalization as a canonical neural computation. Nat. Rev. Neurosci. 13, 51–62. doi: 10.1038/nrn3136

Cattell, J. M. (1886). The influence of the intensity of the stimulus on the length of the reaction time. Brain 8, 512–515. doi: 10.1093/brain/8.4.512

Chater, N., and Brown, G. D. A. (1999). Scale-invariance as a unifying psychological principle. Cognition 69, B17–B24. doi: 10.1016/S0010-0277(98)00066-3

Chocholle, R. (1940). Variations des temps de réaction auditifs en fonction de l'intensité à diverses fréquences. Année Psychol. 41, 64–124. doi: 10.3406/psy.1940.5877

Copelli, M., Roque, A. C., Oliveira, R. F., and Kinouchi, O. (2002). Physics of psychophysics: Stevens and Weber-Fechner laws are transfer functions of excitable media. Phys. Rev. E 65:060901. doi: 10.1103/PhysRevE.65.060901

Eisler, Z., Bartos, I., and Kertész, J. (2008). Fluctuation scaling in complex systems: Taylor's law and beyond. Adv. Phys. 57, 89–142. doi: 10.1080/00018730801893043

Faisal, A. A., Selen, L. P. J., and Wolpert, D. M. (2008). Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303. doi: 10.1038/nrn2258

Felipe, A., Buades, M. J., and Artigas, J. M. (1993). Influence of the contrast sensitivity function on the reaction-time. Vision Res. 33, 2461–2466. doi: 10.1016/0042-6989(93)90126-H

Frank, S. A. (2009). The common patterns of nature. J. Evol. Biol. 22, 1563–1585. doi: 10.1111/j.1420-9101.2009.01775.x

Heeger, D. J. (1992). Normalization of cell responses in cat striate cortex. Visual Neurosci. 9, 181–197. doi: 10.1017/S0952523800009640

Holden, J. G., Van Orden, G. C., and Turvey, M. T. (2009). Dispersion of response times reveals cognitive dynamics. Psychol. Rev. 116, 318–342. doi: 10.1037/a0014849

Ihlen, E. A. F. (2013). The influence of power law distributions on long-range trial dependency of response times. J. Math. Psychol. 57, 215–224. doi: 10.1016/j.jmp.2013.07.001

Kendal, W. S., and Jørgensen, B. (2011). Tweedie convergence: a mathematical basis for Taylor's power law, 1/f noise, and multifractality. Phys. Rev. E 84:066120. doi: 10.1103/PhysRevE.84.066120

Kinouchi, O., and Copelli, M. (2006). Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–352. doi: 10.1038/nphys289

Levy, M., and Solomon, S. (1996). Power laws are logarithmic Boltzmann laws. Int. J. Mod. Phys. C-Phys. Comput. 7, 595–601. doi: 10.1142/S0129183196000491

Mackay, D. M. (1963). Psychophysics of perceived intensity: a theoretical basis for Fechner's and Stevens' laws. Science 139, 1213–1216. doi: 10.1126/science.139.3560.1213-a

Medina, J. M. (2009). 1/f (alpha) noise in reaction times: a proposed model based on Pieron's law and information processing. Phys. Rev. E 79:011902. doi: 10.1103/PhysRevE.79.011902

Medina, J. M. (2011). Effects of multiplicative power law neural noise in visual information processing. Neural Comput. 23, 1015–1046. doi: 10.1162/NECO_a_00102

Medina, J. M. (2012). Multiplicative processes and power laws in human reaction times derived from hyperbolic functions. Phys. Lett. A 376, 1617–1623. doi: 10.1016/j.physleta.2012.03.025

Medina, J. M., and Diaz, J. A. (2010). S-cone excitation ratios for reaction times to blue-yellow suprathreshold changes at isoluminance. Ophthalmic Physiol. Opt. 30, 511–517. doi: 10.1111/j.1475-1313.2010.00745.x

Medina, J. M., and Díaz, J. A. (2011). “Response variability of the red-green color vision system using reaction times,” in: Applications of Optics and Photonics, ed M. F. M. Costa (Braga: Proc. SPIE), 800131–800139.

Medina, J. M., and Díaz, J. A. (2012). 1/f noise in human color vision: the role of S-cone signals. J. Opt. Soc. Am. A-Opt. Image Sci. Vis. 29, A82–A95. doi: 10.1364/JOSAA.29.000A82

Meyer, D. E., Osman, A. M., Irwin, D. E., and Yantis, S. (1988). Modern mental chronometry. Biol. Psychol. 26, 3–67. doi: 10.1016/0301-0511(88)90013-0

Mitzenmacher, M. (2004). A brief history of generative models for power law and lognormal distributions. Internet Math. 1, 226–251. doi: 10.1080/15427951.2004.10129088

Moscoso del Prado Martín, F. (2009). “Scale invariance of human latencies,” in: 31st Annual Conference of the Cognitive Science Society, eds N. A. Taatgen and V. Rijn (Austin, TX: Proc. Cognitive Science Society), 1270–1275.

Moshitch, D., and Nelken, I. (2014). Using Tweedie distributions for fitting spike count data. J. Neurosci. Meth. 225, 13–28. doi: 10.1016/j.jneumeth.2014.01.004

Murray, I. J., and Plainis, S. (2003). Contrast coding and magno/parvo segregation revealed in reaction time studies. Vision Res. 43, 2707–2719. doi: 10.1016/S0042-6989(03)00408-5

Naka, K. I., and Rushton, W. A. H. (1966). S-potentials from luminosity units in retina of fish (cyprinidae). J. Physiol. Lond. 185, 587–599.

Newman, M. E. J. (2005). Power laws, Pareto distributions and Zipf's law. Contemp. Phys. 46, 323–351. doi: 10.1080/00107510500052444

Norwich, K. H., Seburn, C. N. L., and Axelrad, E. (1989). An informational approach to reaction times. Bull. Math. Biol. 51, 347–358. doi: 10.1007/BF02460113

Overbosch, P., Dewijk, R., Dejonge, T. J. R., and Koster, E. P. (1989). Temporal integration and reaction times in human smell. Physiol. Behav. 45, 615–626. doi: 10.1016/0031-9384(89)90082-6

Piéron, H. (1914). Recherches sur les lois de variation des temps de latence sensorielle en fonction des intensités excitatrices. Année Psychol. 20, 17–96. doi: 10.3406/psy.1913.4294

Piéron, H. (1920). Nouvelles recherches sur l'analyse du temps de latence sensorielle et sur la loi qui relie ce temps à l'intensité de l'excitation. Année Psychol. 22, 58–60. doi: 10.3406/psy.1920.4404

Pins, D., and Bonnet, C. (1996). On the relation between stimulus intensity and processing time: Pieron's law and choice reaction time. Percept. Psychophys. 58, 390–400. doi: 10.3758/bf03206815

Schmiedek, F., Lövdén, M., and Lindenberger, U. (2009). On the relation of mean reaction time and intraindividual reaction time variability. Psychol. Aging 24, 841–857. doi: 10.1037/a0017799

Schweickert, R., Dahn, C., and McGuigan, K. (1988). Intensity and number of alternatives in hue identification: Piéron's law and choice reaction time. Percept. Psychophys. 44, 383–389. doi: 10.3758/bf03210422

Sigman, M., Etchemendy, P., Fernandez Slezak, D., and Cecchi, G. A. (2010). Response time distributions in rapid chess: a large-scale decision making experiment. Front. Neurosci. 4:60. doi: 10.3389/fnins.2010.00060

Smith, P. L., and Ratcliff, R. (2004). Psychology and neurobiology of simple decisions. Trends Neurosci. 27, 161–168. doi: 10.1016/j.tins.2004.01.006

Sornette, D. (2007). “Probability distributions in complex systems,” in Encyclopedia of Complexity and System Science, ed R.A. Meyers (New York, NY: Springer), 7009–7024.

Sornette, D., and Cont, R. (1997). Convergent multiplicative processes repelled from zero: power laws and truncated power laws. J. Phys. I 7, 431–444. doi: 10.1051/jp1:1997169

Takayasu, H., Sato, A. H., and Takayasu, M. (1997). Stable infinite variance fluctuations in randomly amplified Langevin systems. Phys. Rev. Lett. 79, 966–969. doi: 10.1103/PhysRevLett.79.966

Taylor, L. R. (1961). Aggregation, variance and the mean. Nature 189, 732–735. doi: 10.1038/189732a0

Keywords: human reaction time, intrinsic variability, power laws, information transfer, Piéron's law

Citation: Medina JM, Díaz JA and Norwich KH (2014) A theory of power laws in human reaction times: insights from an information-processing approach. Front. Hum. Neurosci. 8:621. doi: 10.3389/fnhum.2014.00621

Received: 19 May 2014; Accepted: 24 July 2014;

Published online: 12 August 2014.

Edited by:

John J. Foxe, Albert Einstein College of Medicine, USA

Reviewed by:

Andreas Klaus, National Institute of Mental Health, USA

Copyright © 2014 Medina, Díaz and Norwich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: jmedinaru@cofis.es

José M. Medina, José A. Díaz and Kenneth H. Norwich