Association with emotional information alters subsequent processing of neutral faces

- 1Rotman Research Institute, Toronto, ON, Canada

- 2Department of Psychology, University of Toronto, Toronto, ON, Canada

- 3Department of Psychiatry, University of Toronto, Toronto, ON, Canada

The processing of emotional as compared to neutral information is associated with different patterns in eye movement and neural activity. However, the ‘emotionality’ of a stimulus can be conveyed not only by its physical properties, but also by the information that is presented with it. There is very limited work examining how emotional information may influence the immediate perceptual processing of otherwise neutral information. We examined how presenting an emotion label for a neutral face may influence subsequent processing by using eye movement monitoring (EMM) and magnetoencephalography (MEG) simultaneously. Participants viewed a series of faces with neutral expressions. Each face was followed by a unique negative or neutral sentence to describe that person, and then the same face was presented in isolation again. Viewing of faces paired with a negative sentence was associated with increased early viewing of the eye region and increased neural activity between 600 and 1200 ms in emotion processing regions such as the cingulate, medial prefrontal cortex, and amygdala, as well as posterior regions such as the precuneus and occipital cortex. Viewing of faces paired with a neutral sentence was associated with increased activity in the parahippocampal gyrus during the same time window. By monitoring behavior and neural activity within the same paradigm, these findings demonstrate that emotional information alters subsequent visual scanning and the neural systems that are presumably invoked to maintain a representation of the neutral information along with its emotional details.

Introduction

We are constantly involved in the interpretation of social and emotional cues from those around us. Such cues can be conveyed via facial expressions (Adolphs, 2003) and facial appearance (Bar et al., 2006; Willis and Todorov, 2006), as well as by biographical information (Todorov and Uleman, 2002, 2003, 2004). For example, imagine being at a party and seeing two different people who appear neutral and non-threatening. However, right before you meet them, you are told that one person has just gotten out of jail for murder and the other person is working on a Ph.D. Research from social psychology suggests that from this minimal information, you will then form a rapid, perhaps automatic, and very different impression of the two people (Todorov and Uleman, 2002, 2003, 2004). As a consequence of forming such rapid impressions, differences may occur in the way in which we perceive otherwise neutral information (i.e., the person’s face).

There are now a number of studies showing that the processing of emotional versus neutral stimuli is characterized by different patterns of eye movement behavior (e.g., Calvo and Lang, 2004, 2005; Nummenmaa et al., 2006; Riggs et al., 2010, 2011). However, the manner in which we perceive information is driven not only by stimulus-bound characteristics, but also by prior knowledge (for review see: Hannula et al., 2010). In a classic study by Loftus and Mackworth (1978), the researchers showed that prior semantic knowledge altered the way participants scanned scenes within the first few fixations. Given the influence of prior knowledge on viewing neutral stimuli, it is possible that emotional information may also influence subsequent viewing of neutral stimuli.

The goal of the present study was to examine whether presenting emotional information would influence subsequent viewing patterns and underlying neural activity of neutral faces, when this may occur and in what manner. Specifically, does presenting emotional information ‘prime’ us to actually perceive the neutral stimulus in a different way, and/or does it modulate post-perceptual processes such as elaboration and binding? Previous neuroimaging studies suggest that while perceptual processing occurs largely within the first 250 ms after stimulus onset in posterior sensory cortices, conceptual/semantic processes, and/or the retrieval of associated information are largely purported to occur during later time windows in frontal and medial temporal regions (e.g., 500–1500 ms; Donaldson and Rugg, 1999; Schweinberger et al., 2002). Therefore, in order to determine how and when emotion may influence visual processing of neutral information, it is important to use a neuroimaging technique with very fine temporal resolution such as magnetoencephalography (MEG).

Magnetoencephalography is a non-invasive neuroimaging technique that measures the magnetic field differences produced by populations of neurons (Hämäläinen et al., 1993; Hari et al., 2000), providing recording of neural activity with temporal resolution on the order of milliseconds and with good spatial resolution (Miller et al., 2007). Critically, through its precise timing information, MEG has been successfully utilized to study emotion processing (Cornwell et al., 2008; Hung et al., 2010), as well as how knowledge and/or prior experience can influence the manner by which visual processing occurs (Ryan et al., 2008; Riggs et al., 2009). In an MEG study by Morel et al. (2012), the researchers examined whether prior association with auditorily presented negative, positive, or neutral information changed the manner by which neutral faces are retrieved after a delay (1–7 min). The authors found that neural responses in the bilateral occipital–temporal and right anterior medial temporal regions differentiated neutral faces previously paired with positive versus negative and neutral information as early as 30–60 ms after face onset. In other words, prior association with positive information modulated the earliest stages of perceptual processing during retrieval. A similar ERP study by Smith et al. (2004) reported differences over the lateral temporal and frontal–temporal regions during retrieval of neutral items studied in an emotional (positive and negative) versus neutral context, albeit during a later time frame (300–1900 ms). However, it remains unclear whether emotional information has an immediate influence on the online visual processing of neutral information, prior to any contribution from memory consolidation (as may have occurred in Morel et al., 2012).

In the present study, participants were presented with a neutral face (Face 1) followed by either a negative or a neutral sentence about that person, and then the neutral face was presented again in isolation (Face 2)1. In order to examine whether the assignment of an emotional label to a neutral face would influence processing, it was critical to present them separately. Otherwise, it would be impossible to disentangle the effects of processing a neutral item that has been presented with the emotional information from the effects of processing the emotional stimulus itself. Visual scanning and neural activity were recorded simultaneously using eye movement monitoring (EMM) and MEG, respectively. This allowed us to directly link emotion-modulated differences in behavior with neural response. Herdman and Ryan (2007) first demonstrated the feasibility of recording eye movement behavior and neural activity simultaneously within a single paradigm; here, this unique combination of technologies was applied to the study of emotion. It was predicted that if emotion influenced visual processing of subsequent neutral information, then differences in eye movement patterns and neural activity should occur during viewing of Face 2. Specifically, viewing of faces presented after a negative versus neutral sentence should result in increased viewing to the internal features of the face (Calder et al., 2000; Green et al., 2003), especially the eye region (Adolphs et al., 2005; Itier and Batty, 2009; Gamer and Buchel, 2009). 
It should also elicit enhanced activation of regions implicated in emotion processing such as the amygdala, cingulate, and anterior insula (Adolphs, 2002; Haxby et al., 2002; Phelps, 2006; Chen et al., 2009), attention and facial processing such as the precuneus and fusiform gyrus (Cavanna and Trimble, 2006; Deffke et al., 2007), item binding such as the prefrontal cortices and medial temporal lobe (Cohen et al., 1999; Badgaiyan et al., 2002), and the processing of person identity and biographical information such as the superior temporal sulcus (STS; Haxby et al., 2002; Todorov et al., 2007). Further, if emotion changes perceptual processing of neutral information, then such eye movement and neural differences should manifest early during viewing (i.e., within the first fixation and <250 ms in the brain). However, if emotion influences post-perceptual processes such as retrieval and semantic/conceptual processing, then eye movement and neural differences should manifest later during viewing (i.e., after the first fixation and >500 ms in the brain). If differences are found between processing neutral faces presented following negative versus neutral information, this would suggest that emotion can influence the visual processing and the mental representation of subsequent neutral stimuli in a top–down manner that is independent of their physical properties. Thus, we may not only form different impressions of the ‘murderer’ versus ‘student,’ but the very manner in which we process those faces may also be different.

Materials and Methods

Participants

Twelve young adults (mean age = 21.6 years, SEM = 0.71, 5 males) from the Rotman Research Volunteer Pool participated for $10 per hour. No participant had a history of neurological or clinical disorders or of head trauma, and all had normal or corrected-to-normal vision. All participants were either native English speakers or had attended school in English for at least 12 years. All participants provided informed written consent in accordance with the guidelines of the Baycrest Hospital Research Ethics Board prior to the start of the experiment.

Stimuli and Design

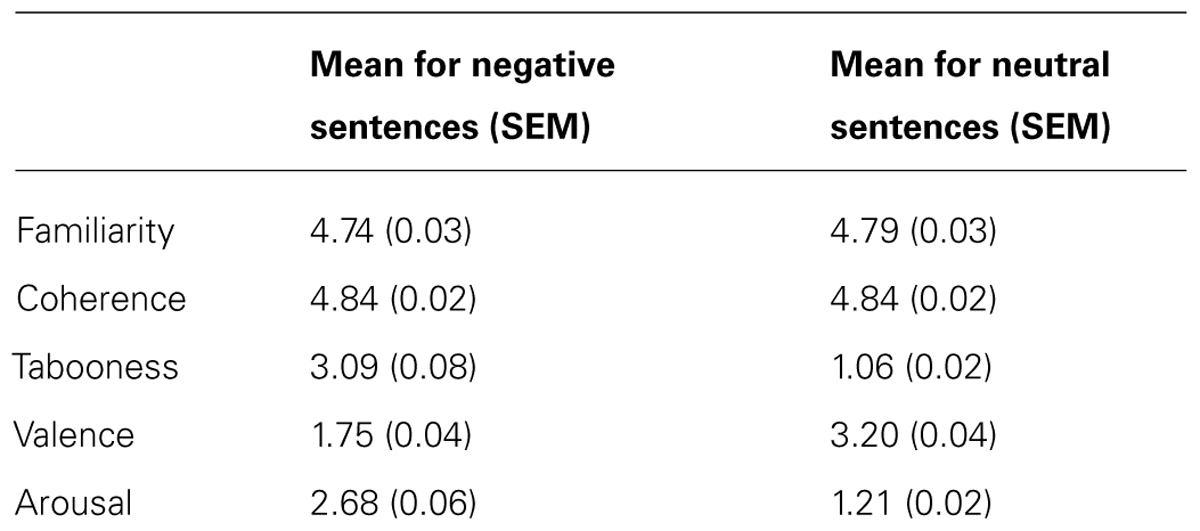

The stimuli used consisted of 300 black and white, non-famous male faces with a neutral expression selected from a database of face images as outlined by Schmitz et al. (2009). Briefly, the photographs showed front-view non-expressive faces. Faces were cropped and did not contain hair or other non-facial features. To prevent discrepancies in the spatial orientation and location of the face stimuli over trials, the eyes and philtrum of each image were aligned to a standard 3-point Cartesian space with the nose at the center. Similar correction procedures were also used to equate low-level visual perceptual differences such as luminance and shading (for more details see: Schmitz et al., 2009). The faces were placed against a uniform black background – the resulting image was 300 × 300 pixels. Each face was randomly paired with a negative (e.g., “This person is a rapist”) and a corresponding neutral sentence (e.g., “This person is a linguist”). Each pair of sentences differed only by one critical word, which was either negative or neutral, and was matched for the number of syllables. All of the sentences began with “This person…” in order to encourage participants to process the face and sentence as a pair. All of the sentences were previously rated by 10 participants (mean age = 21.3, SEM = 0.96, 4 males) on a 5-point Likert scale on dimensions of familiarity, coherence, tabooness, valence, and arousal. Negative and neutral sentences were matched for familiarity [t(99) = -1.30, p = 0.20] and coherence [t(99) = -0.03, p = 0.97]. Critically, negative sentences were judged to be more taboo [t(99) = 26.13, p < 0.0001], negative [t(99) = -25.12, p < 0.0001], and arousing [t(99) = 24.24, p < 0.0001]. The mean values can be found in Table 1.

Participants were shown 200 unique faces across five blocks (40 per block). Each face was presented with either a negative (100) or neutral (100) sentence. Faces were displayed in a pseudo random order such that no more than three negative or three neutral face-sentence-face pairings appeared in succession. Each study block contained 20 faces paired with a negative sentence, and 20 faces paired with a neutral sentence. Counterbalancing was complete such that each face appeared with a neutral and negative sentence equally often across participants.
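The ordering constraint described above (20 negative and 20 neutral trials per block, with no more than three of the same type in succession) can be illustrated with a short sketch. This is not the authors' randomization code; the function names and the shuffle-and-reject strategy are assumptions chosen for simplicity.

```python
import random


def max_run_length(labels):
    """Length of the longest run of identical consecutive labels."""
    longest = run = 0
    previous = None
    for label in labels:
        run = run + 1 if label == previous else 1
        longest = max(longest, run)
        previous = label
    return longest


def make_block_order(n_per_condition=20, max_run=3, seed=None):
    """Shuffle a balanced block until no condition repeats more than max_run times.

    Rejection sampling is an assumption made for illustration; the paper does
    not say how its pseudo-random orders were generated.
    """
    rng = random.Random(seed)
    order = ["negative"] * n_per_condition + ["neutral"] * n_per_condition
    while True:
        rng.shuffle(order)
        if max_run_length(order) <= max_run:
            return list(order)


block = make_block_order(seed=1)
```

Each call returns one block of 40 condition labels; five such blocks would cover the 200 trials of the experiment.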

Procedure

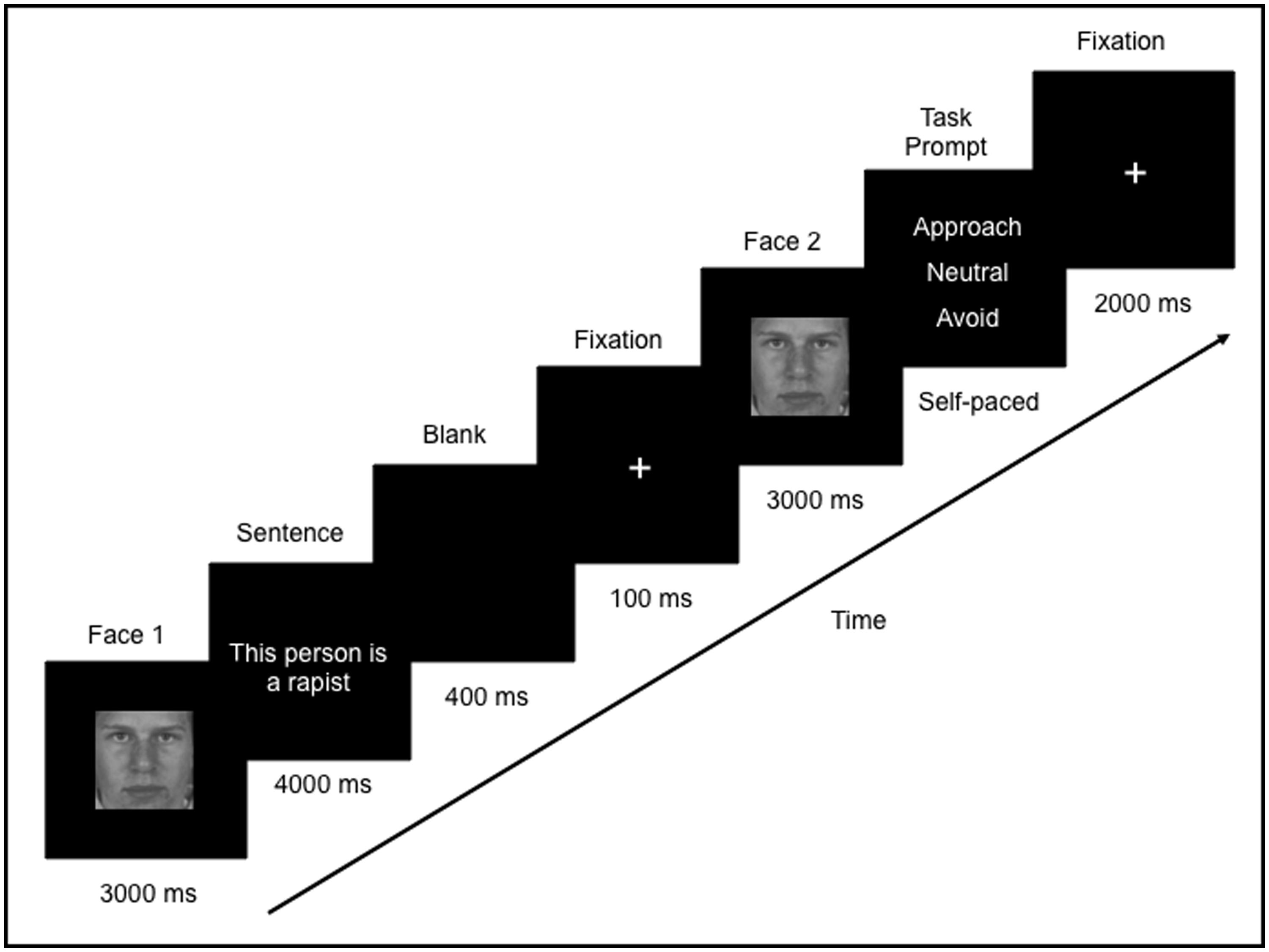

Eye movements and neural activity were recorded simultaneously throughout the experiment. Participants were told that they would see a face, followed by a sentence that describes that person, and then they would see the face again. During each trial, a face was presented for 3000 ms (Face 1), followed by a sentence which was presented for 4000 ms. Since the sentences varied in length, a blank screen (400 ms) followed by a fixation cross (100 ms) was presented in order to direct the participants’ eyes back to the center of the screen. Following this, the same face was presented again, in isolation, for 3000 ms (Face 2; Figure 1). Participants were then asked to indicate via a button press whether they would want to approach, avoid, or stay neutral to the person. The purpose of the task was to encourage participants to process the meaning of the face-sentence pairings. Throughout the trial, participants were instructed to freely view the faces and sentences presented. There was a 2000 ms inter-trial interval, which consisted of a fixation cross in the center of a blank screen. Participants were instructed to fixate on the central cross whenever it appeared. In this way, participants began each trial fixated on the center of the screen (i.e., over the nose region of the face).

FIGURE 1. Example of one trial in the study. Participants freely viewed a face (Face 1) followed by a sentence that was either negative or neutral. The same face was presented again (Face 2) and participants were asked to judge whether they would want to approach, avoid, or neither approach nor avoid (remain “neutral” to) that person.
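The trial timeline can be made explicit by accumulating the stated durations. The sketch below uses only the durations given in the Procedure; the event names are labels introduced here for illustration, and the untimed response period and the 2000 ms inter-trial interval are left out.

```python
# Durations in ms, in presentation order, taken from the Procedure.
TRIAL_EVENTS = [
    ("face1", 3000),
    ("sentence", 4000),
    ("blank", 400),
    ("fixation", 100),
    ("face2", 3000),
]


def event_onsets(events):
    """Return a dict of onset times (ms from trial start) and the total duration."""
    onsets = {}
    elapsed = 0
    for name, duration in events:
        onsets[name] = elapsed
        elapsed += duration
    return onsets, elapsed


onsets, total_ms = event_onsets(TRIAL_EVENTS)
# Face 2 begins 7500 ms into the trial and the stimulus sequence ends at 10500 ms.
```

The 10,500 ms total agrees with the upper bound of the trial window used for the covariance estimate in the MEG analysis.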

Data Acquisition

Eye movements were measured with an SR Research Ltd. Eyelink 1000 remote eyetracker, which recorded eye movements at a rate of 500 Hz with a spatial resolution of 0.1°. A 9-point calibration was performed at the start of each block followed by a 9-point calibration accuracy test. Calibration was repeated if the error at any point was more than 1°. Drift corrections were performed at the beginning of each trial if necessary. Poorly calibrated trials were deleted from the eye movement analyses; this resulted in a total of nine deleted trials across all participants.

Magnetoencephalography recordings were performed in a magnetically shielded room, using a 151-channel whole head first order gradiometer system (VSM-Med Tech Inc., Port-Coquitlam, BC, Canada) with detection coils uniformly spaced 31 mm apart on a helmet-shaped array. Participants sat in an upright position and viewed the stimuli on a back projection screen that subtended approximately 31° of visual angle at a viewing distance of 30 inches. The MEG collection included the signal of the stimulus onset by recording the luminance change of the screen. Each participant’s head position within the MEG was determined at the start and end of each recording block using indicator coils placed on nasion and bilateral preauricular points. These three fiducial points established a head-based Cartesian coordinate system for representation of the MEG data. In order to specify/constrain the sources of activation as measured by MEG and to co-register the brain activity with the individual anatomy, a structural T1 MRI was also obtained for each participant using standard clinical procedures with a 3T MRI system (Siemens Magnetom Trio whole-body scanner) located at Baycrest Hospital.

Eye Movement Analysis for Faces

Differences in the eye movement patterns made to faces that had been paired with negative versus neutral sentences were taken as evidence that the processing of faces may be changed via the emotional information that had preceded them. Therefore, we compared eye movement behavior during viewing of Face 2 following a negative sentence (Face2-Negative) versus that of Face 2 following a neutral sentence (Face2-Neutral). As a control condition, we also compared eye movement during viewing of Face 1 that preceded a negative (Face1-Negative) versus neutral sentence (Face1-Neutral). Since these faces had not yet been paired with either a negative or a neutral sentence, no differences were expected in measures of eye movement behavior. Viewing of regions corresponding to the location of features within the face (i.e., eyes, nose, and mouth) was examined.

Analyses were conducted for eye movement measures of early viewing and measures of overall viewing, that is, viewing behavior that occurs throughout stimulus presentation (for more details see: Hannula et al., 2010). Measures of early viewing included: the start time of the first fixation, duration of the first gaze, and number of fixations within the first gaze. A fixation was defined as the absence of any saccade (e.g., the velocity of two successive eye movement samples exceeds 22° per second over a distance of 0.1°) or blink (e.g., pupil is missing for three or more samples) activity. Start time of the first fixation is the time, from stimulus onset, at which a fixation was first directed to a particular region of interest. Duration of the first gaze is the total time spent during the first instance the viewer’s eye movements enter a particular region, prior to leaving it. Number of fixations within first gaze is the total number of fixations during the first instance the eyes enter a particular region before moving out of that region. Measures of overall viewing included: average duration of all eye fixations, number of fixations and number of transitions into a region of interest.
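The early-viewing and transition measures defined above can be sketched as operations on an ordered list of fixations that have already been assigned to regions of interest. The `Fixation` record, the function names, and the example values are hypothetical. Two choices in the sketch are assumptions rather than details from the paper: first-gaze duration is measured from the onset of the first fixation in the region to the offset of the last fixation before the eyes leave it, and a trial that starts inside a region counts as one entry into it.

```python
from typing import NamedTuple


class Fixation(NamedTuple):
    start_ms: float
    end_ms: float
    roi: str  # e.g. "eyes", "nose", "mouth", or "other"


def first_gaze_measures(fixations, roi):
    """Return (start time of first fixation, first-gaze duration, fixation count).

    Returns None if the region was never fixated.
    """
    first = next((i for i, f in enumerate(fixations) if f.roi == roi), None)
    if first is None:
        return None
    last = first
    # Extend the gaze over consecutive fixations that stay inside the region.
    while last + 1 < len(fixations) and fixations[last + 1].roi == roi:
        last += 1
    start = fixations[first].start_ms
    duration = fixations[last].end_ms - start
    return start, duration, last - first + 1


def transitions_into(fixations, roi):
    """Count how many times gaze enters the region from somewhere else."""
    count = 0
    previous = None
    for f in fixations:
        if f.roi == roi and previous != roi:
            count += 1
        previous = f.roi
    return count


# Made-up example trial, for illustration only.
trial = [
    Fixation(0, 380, "nose"),
    Fixation(400, 900, "eyes"),
    Fixation(920, 1400, "eyes"),
    Fixation(1420, 1800, "mouth"),
    Fixation(1820, 2300, "eyes"),
]
eye_measures = first_gaze_measures(trial, "eyes")   # (400, 1000, 2)
eye_entries = transitions_into(trial, "eyes")       # 2
```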

MEG Analysis for Face Processing

Similar to the eye movement analysis for faces, spatiotemporal differences in neural activity underlying viewing of faces that had been paired with negative versus neutral sentences were taken as evidence that the processing of faces may be changed via emotional details that had preceded them. We compared neural activity during viewing of Face2-Negative versus Face2-Neutral. A comparison of neural activity underlying viewing of Face1-Negative versus Face1-Neutral was also included as a control condition.

Source activity was estimated using the synthetic aperture magnetometry (SAM) minimum-variance beamformer (Van Veen et al., 1997; Robinson and Vrba, 1999) across the whole brain on a grid with regular spacing of 8 mm. The beamformer analysis, using the algorithm as implemented in the VSM software package, was based on individual multisphere models, for which single spheres were locally approximated for each of the 151 MEG sensors to the shape of the cortical surface as extracted from the MRI (Huang et al., 1999). The SAM beamformer minimizes the sensitivity for interfering sources as identified by analysis of covariance in the multichannel magnetic field signal while maintaining constant sensitivity for the source location of interest. The covariances were calculated based on the entire trial duration (-1000 ms to 10,500 ms) with a low-pass filter at 30 Hz. Thereafter, the resultant SAM weights were applied to the MEG sensor data separately for each epoch of interest based on an event-related spatial-filtering approach (ER-SAM; Robinson, 2004; Cheyne et al., 2006) as used in our previous studies (Moses et al., 2009; Fujioka et al., 2010). Using the MEG data of the entire segment of the trial to compute SAM separately for the epoch of interest is necessary because, as mentioned above, the spatial filter is dependent on the covariance of the MEG measures. Therefore, this procedure ensures that the resultant ER-SAM source activities for each condition reflect differences in the brain activity patterns and not differences in the spatial filters computed from different epochs of interest. Further, an advantage of using ER-SAM is that it removes differences between the experimental conditions that are not time-locked, for example, potential eye movement differences. Therefore, observed differences in neural activity reflect differences in cognitive processing that are time-locked to the onset of the neutral face.
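The logic of estimating one spatial filter from the whole-trial covariance and then applying it to each epoch can be illustrated with the standard unit-gain minimum-variance solution, w = C⁻¹l / (lᵀC⁻¹l), for a single source. This is a minimal NumPy sketch and not the VSM implementation of SAM, which involves further steps that are not reproduced here. The lead field vector, the sensor count, and the data are synthetic placeholders; in practice the lead field would come from the multisphere head model.

```python
import numpy as np


def min_variance_weights(cov, leadfield, reg=0.0):
    """Unit-gain minimum-variance weights w = C^-1 l / (l^T C^-1 l).

    cov: (n_sensors, n_sensors) sensor covariance.
    leadfield: (n_sensors,) forward field of one fixed-orientation source.
    reg: optional diagonal loading, as a fraction of the mean sensor variance.
    """
    n = cov.shape[0]
    loaded = cov + reg * np.trace(cov) / n * np.eye(n)
    c_inv_l = np.linalg.solve(loaded, leadfield)
    return c_inv_l / (leadfield @ c_inv_l)


rng = np.random.default_rng(0)
whole_trial = rng.standard_normal((8, 2000))  # synthetic stand-in: 8 sensors x samples
leadfield = rng.standard_normal(8)            # hypothetical forward field of one source

# Weights are estimated once from the whole-trial covariance ...
weights = min_variance_weights(np.cov(whole_trial), leadfield)

# ... and the same weights are then applied to any epoch of interest.
epoch = whole_trial[:, 500:900]
source_waveform = weights @ epoch
```

Because the weights are shared across epochs and conditions, any difference between the resulting source waveforms comes from the data rather than from the filter.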

The MEG data were epoched from 100 ms prior to stimulus onset to 2800 ms after stimulus onset for each condition (i.e., Face2-Negative, Face2-Neutral). Before applying the beamformer to each single epoch of magnetic field data, artifact rejection using a principal component analysis (PCA) was performed such that field components larger than 1.5 pT at any time were subtracted from the data at each epoch (Kobayashi and Kuriki, 1999). This procedure is effective in removing artifacts with amplitudes significantly larger than brain responses, such as those caused by eye blinks and saccades (Lins et al., 1993; Lagerlund et al., 1997). The PCA approach has been used extensively in both EEG and MEG studies as a means of identifying and removing eye movement artefact (e.g., Riggs et al., 2009; Ross and Tremblay, 2009; Fujioka et al., 2014). By low-pass filtering the MEG data at 30 Hz, we also reduced the influence of micro-saccades, which are low amplitude but occur at high frequency bands (>30 Hz, Carl et al., 2012).

Using the spatial filter, single-epoch source activity was first estimated as a pseudo-Z statistic for each participant and each condition. The time series of the source power within 0–30 Hz was then calculated for each single source waveform. Finally, the representation of the evoked response was obtained as a time series of the average power across trials normalized to the pooled variance across the duration of trials for each voxel. These individual functional maps were then spatially transformed to the standard Talairach space using AFNI (National Institute of Mental Health, Bethesda, MD, USA) and averaged across all participants. For each participant, functional data from the MEG were co-registered with that participant’s structural MRI by using the same fiducial points defined by three indicator coils placed on the nasion and bilateral preauricular points.

In order to capture the influence of emotion on early perceptual processing of neutral faces, we examined MEG data (0–250 ms) with sliding time windows of 100 ms, i.e., 0–100, 50–150, 100–200, and 150–250 ms (e.g., Walla et al., 2001). In order to capture the influence of emotion on both early and later processing, we also examined MEG data (0–1200 ms) with longer sliding time windows of 600 ms (i.e., 0–600, 300–900, 600–1200 ms; Moses et al., 2009; Fujioka et al., 2010). Spatiotemporal differences in the brain responses to viewing faces presented after negative versus neutral sentences were characterized using the partial least squares (PLS) multivariate approach (McIntosh and Lobaugh, 2004). The PLS approach has been successfully used for time-series neuroimaging data in MEG (Moses et al., 2009; Fujioka et al., 2010). Critically, PLS examines all voxels in the brain at once, thereby circumventing the need to correct for multiple comparisons (e.g., McIntosh et al., 2004). In order to accommodate computational demands, the Talairach-transformed individual functional maps for each participant were down-sampled to 78 Hz, which resulted in volumetric maps every 12.8 ms, and used as input for a mean-centered PLS analysis. Mean centering allowed values for the different conditions to be expressed relative to the overall mean. Using this type of analysis, activation patterns that are unique to a specific condition will be emphasized whereas activations that are consistent across all conditions, such as primary visual activation, will be diminished.
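Both sets of analysis windows follow the same rule, a fixed width advanced in steps of half that width. The helper below is an illustrative sketch (its name is introduced here) that reproduces the windows listed above, along with the sample spacing implied by the 78 Hz down-sampling.

```python
def sliding_windows(start_ms, stop_ms, width_ms, step_ms):
    """List (onset, offset) pairs of windows that fit entirely within the range."""
    windows = []
    onset = start_ms
    while onset + width_ms <= stop_ms:
        windows.append((onset, onset + width_ms))
        onset += step_ms
    return windows


early_windows = sliding_windows(0, 250, width_ms=100, step_ms=50)
# [(0, 100), (50, 150), (100, 200), (150, 250)]

late_windows = sliding_windows(0, 1200, width_ms=600, step_ms=300)
# [(0, 600), (300, 900), (600, 1200)]

sample_interval_ms = round(1000 / 78, 1)  # 12.8 ms between volumetric maps
```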

The input of PLS is a cross-block covariance matrix, which is obtained by multiplying the design matrix (an orthonormal set of vectors defining the degrees of freedom in the experimental conditions) and the data matrix (time series of brain activity at each location as columns and subjects within each experimental condition as rows). The output of PLS is a set of latent variables (LVs), obtained by singular value decomposition applied to the input matrix. Similar to eigenvectors in PCA, LVs account for the covariance of the matrix in decreasing order of magnitude determined by singular values. Each LV explains a certain pattern of experimental conditions (design score) as expressed by a cohesive spatial–temporal pattern of brain activity. The significance of each LV was determined by a permutation test using 500 permuted data sets, in which conditions were randomly reassigned and PLS was recomputed. This yielded the empirical probability of the permuted singular values exceeding the originally observed singular values. An LV was considered to be significant at p ≤ 0.05. For each significant LV, the reliability of the corresponding eigen-image of brain activity was assessed at each time point and each location by bootstrap estimation using 250 resampled data sets, in which subjects were resampled with replacement and PLS was recomputed. The bootstrap method is a measure of reliability across participants and ensures that the results are not being driven by only a few participants. A high bootstrap value indicates that an effect is observed across most, if not all, of the participants. Sources that were spatially distinct with a bootstrap ratio exceeding ±3 at the local maxima were examined.
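The three steps described above (singular value decomposition of the mean-centered condition averages, a permutation test on the singular value, and bootstrap ratios for the brain pattern) can be sketched in NumPy. This is a simplified illustration under stated assumptions and not the PLS software used in the study. The data layout, the function names, the within-subject shuffling of condition labels, and the sign alignment of bootstrap samples are all choices made here for the sketch.

```python
import numpy as np


def mean_centered_svd(data):
    """data: (n_conditions, n_subjects, n_features), features = voxels x time points.

    Returns design saliences, singular values, and brain saliences (rows of vt).
    """
    cond_means = data.mean(axis=1)                    # (n_conditions, n_features)
    centered = cond_means - cond_means.mean(axis=0)   # express relative to overall mean
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    return u, s, vt


def permutation_p(data, n_perm=500, seed=0):
    """Empirical probability that a permuted first singular value reaches the observed one."""
    rng = np.random.default_rng(seed)
    observed = mean_centered_svd(data)[1][0]
    n_cond, n_subj, _ = data.shape
    exceed = 0
    for _ in range(n_perm):
        permuted = np.empty_like(data)
        for subj in range(n_subj):
            # Assumption: condition labels are reassigned within each subject.
            permuted[:, subj] = data[rng.permutation(n_cond), subj]
        if mean_centered_svd(permuted)[1][0] >= observed:
            exceed += 1
    return exceed / n_perm


def bootstrap_ratio(data, n_boot=250, seed=0):
    """First-LV brain pattern divided by its bootstrap standard error, per feature."""
    rng = np.random.default_rng(seed)
    _, s, vt = mean_centered_svd(data)
    original = vt[0] * s[0]
    n_subj = data.shape[1]
    samples = np.empty((n_boot, original.size))
    for b in range(n_boot):
        idx = rng.integers(0, n_subj, size=n_subj)    # subjects drawn with replacement
        _, sb, vtb = mean_centered_svd(data[:, idx])
        lv = vtb[0] * sb[0]
        # The sign of a singular vector is arbitrary, so align it with the original.
        samples[b] = lv if lv @ original >= 0 else -lv
    return original / samples.std(axis=0, ddof=1)


# Synthetic example: 2 conditions, 12 subjects, 30 features, with an effect
# planted in the first 5 features of condition 0.
rng = np.random.default_rng(42)
demo = rng.standard_normal((2, 12, 30))
demo[0, :, :5] += 3.0
p_value = permutation_p(demo, n_perm=200)
ratios = bootstrap_ratio(demo, n_boot=100)
```

In the synthetic example, the planted features should show bootstrap ratios well beyond ±3, which mirrors the threshold used to select sources.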

Results

Participants responded “avoid” to 78.4% of faces which had been paired with negative sentences, and “neutral” or “approach” to 86.5% of faces which had been paired with neutral sentences. This suggests that faces presented with negative sentences were primarily interpreted as negative, whereas faces presented with neutral sentences were not interpreted as negative. In the current study, all of the faces were included in the eye movement and MEG analysis in order to increase signal-to-noise ratio.

Viewing of Faces

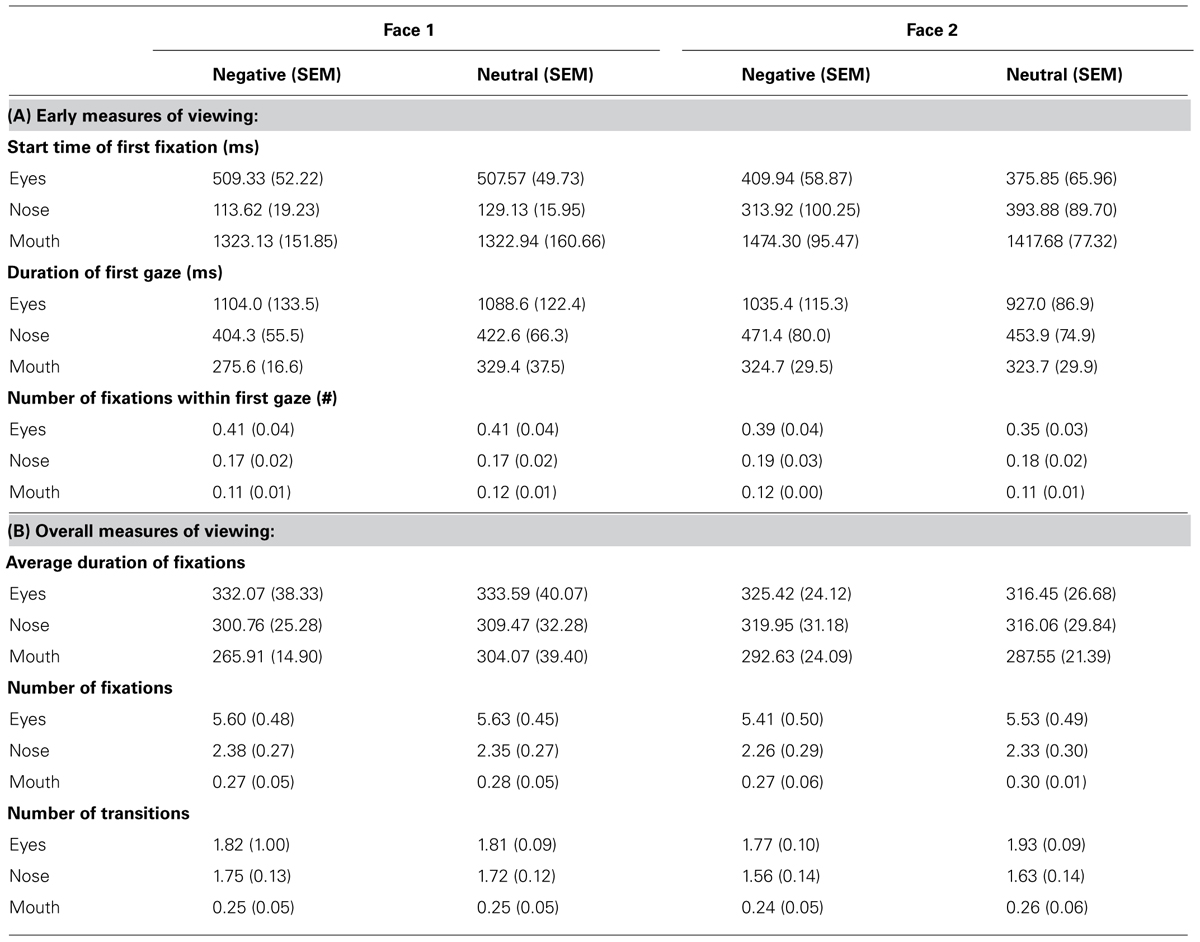

Analyses of variance (ANOVA) were conducted on measures of viewing to Face 2 using emotion (negative, neutral) and face feature (eyes, mouth, nose) as within-subject factors. As a control, the same analyses were also conducted for viewing to Face 1 and no significant effects were found (all p’s > 0.1), therefore, for brevity, we only describe viewing to Face 2. Mean values are found in Table 2.

TABLE 2. The mean and SEM for early (A) and overall (B) measures of viewing to different features within Face 1 and Face 2.

Of note, the very first fixation was typically in the nose region because this was at the center of the screen, or outside of the pre-specified ROIs because participants had not returned their gaze to the center of the screen yet. Participants spent significantly more time [F(1,11) = 4.76, p = 0.05, partial η² = 0.30] and directed marginally more fixations [F(1,11) = 3.62, p = 0.08, partial η² = 0.25] during the first gaze to the different features of faces paired with negative versus neutral sentences. There was also a marginal interaction between emotion and face feature for the duration of the first gaze [F(2,22) = 3.10, p = 0.07, partial η² = 0.22] and the number of fixations within first gaze [F(1,11) = 2.92, p = 0.08, partial η² = 0.21]. Follow-up t-tests revealed that during the first gaze, participants spent significantly more time [t(11) = 2.31, p < 0.05] and directed marginally more fixations [t(11) = 1.93, p = 0.08] to the eye region of faces paired with negative versus neutral sentences, but not to other regions of the face (p’s > 0.1). There were no significant effects of emotion for the start time of the first fixation (p’s > 0.1). Across both types of faces, the first fixation to the eye region occurred at around 400 ms after stimulus onset. Thus, emotion-modulated viewing differences found during the first gaze to the eye region occurred approximately between 400 and 1400 ms (the duration of the first gaze was approximately 1000 ms, see Table 2).

Viewing across the entire trial revealed fewer fixations [F(1,11) = 4.34, p = 0.06, partial η² = 0.28] and fewer transitions between face features [F(1,11) = 10.51, p < 0.01, partial η² = 0.49] for faces paired with negative versus neutral sentences. A significant interaction for the number of transitions [F(2,22) = 5.40, p < 0.05, partial η² = 0.33] revealed that participants made fewer transitions into the eye region of faces paired with negative versus neutral sentences [t(11) = -3.45, p < 0.01], whereas there was no difference in viewing of the other features (p’s > 0.1). No significant effects were found for the measure of average fixation duration (p’s > 0.1).
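The effect sizes reported alongside the F statistics are consistent with partial eta squared, which can be recovered from each F value and its degrees of freedom as (F × df_effect) / (F × df_effect + df_error). The short check below applies that standard formula to several of the reported values; the function name is introduced here for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


reported = [
    # (F, df_effect, df_error, reported effect size)
    (4.76, 1, 11, 0.30),   # duration of first gaze, main effect of emotion
    (3.10, 2, 22, 0.22),   # duration of first gaze, emotion x face feature
    (10.51, 1, 11, 0.49),  # transitions, main effect of emotion
    (5.40, 2, 22, 0.33),   # transitions, emotion x face feature
]

recomputed = [round(partial_eta_squared(f, d1, d2), 2) for f, d1, d2, _ in reported]
```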

In summary, emotion led to early changes in the subsequent viewing of neutral faces. Specifically, negative versus neutral sentences led to an initial increase in viewing of the eye region of neutral faces, and perhaps as a consequence, decreased overall sampling (i.e., fewer fixations and transitions) across the remainder of the trial.

Neural Activity to Faces

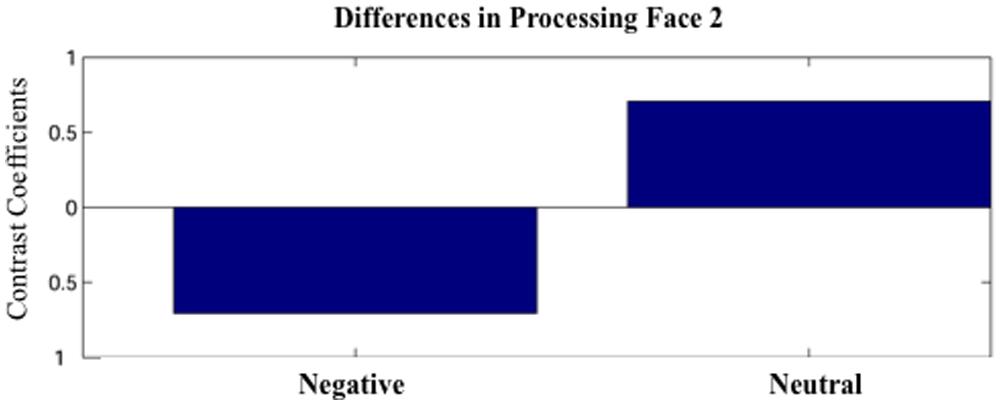

Partial least squares analysis did not reveal any differences between Face1-Negative and Face1-Neutral. For Face 2, PLS analysis did not yield any significant design LVs for the short 100 ms time windows (p’s > 0.05). However, one significant design LV (LV1) emerged for the time window 600–1200 ms (p < 0.05; Figure 2).

FIGURE 2. The first latent variable (LV1) obtained by the partial least squares (PLS) analysis. The LV1 contrast coefficient revealed that processing faces paired with negative versus neutral sentences yielded unique spatio-temporal patterns of brain activation during 600–1200 ms after face onset.

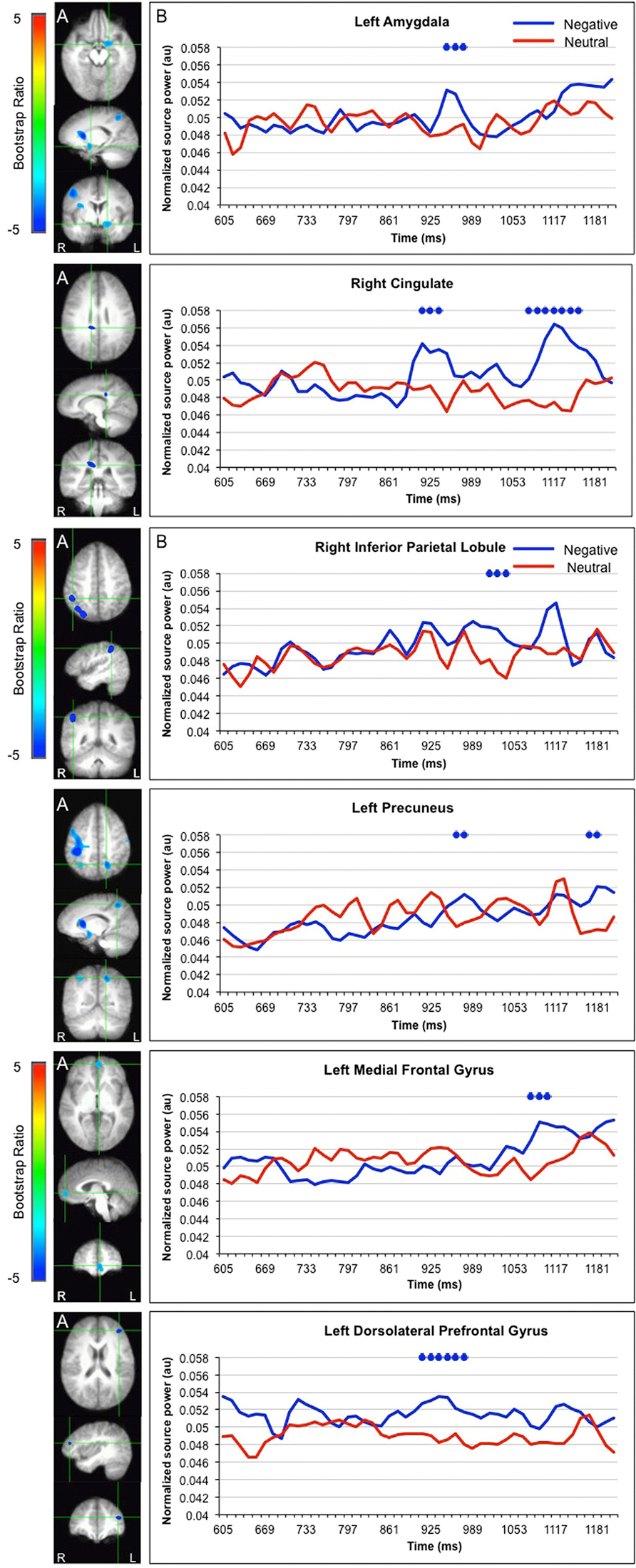

LV1 revealed greater activation for faces paired with negative versus neutral sentences in emotion processing regions such as the left amygdala (950–976 ms), right cingulate (925–938, 1091–1155 ms), and left medial frontal gyrus (1078–1117 ms), in posterior regions such as the left precuneus (989–1053 ms) and right inferior parietal lobule (1014–1040 ms), and in the dorsolateral prefrontal cortex (912–976 ms; Figure 3). These areas were represented by the local maxima/minima of the bootstrap ratio of the LV1.

FIGURE 3. Sources showing stronger activation for faces paired with negative as compared to neutral sentences. (A) The brain areas associated with the PLS bootstrap ratio less than –3 in the LV1. (B) Corresponding ER-SAM waveforms from sources identified in PLS bootstrap ratio maps. Blue dots denote bootstrap ratios <–3, and red dots denote bootstrap ratios >3. Since no significant differences were found for 0–600 ms, the x-axis begins at around 600 ms in order to fully visualize the emotion-modulated differences observed.

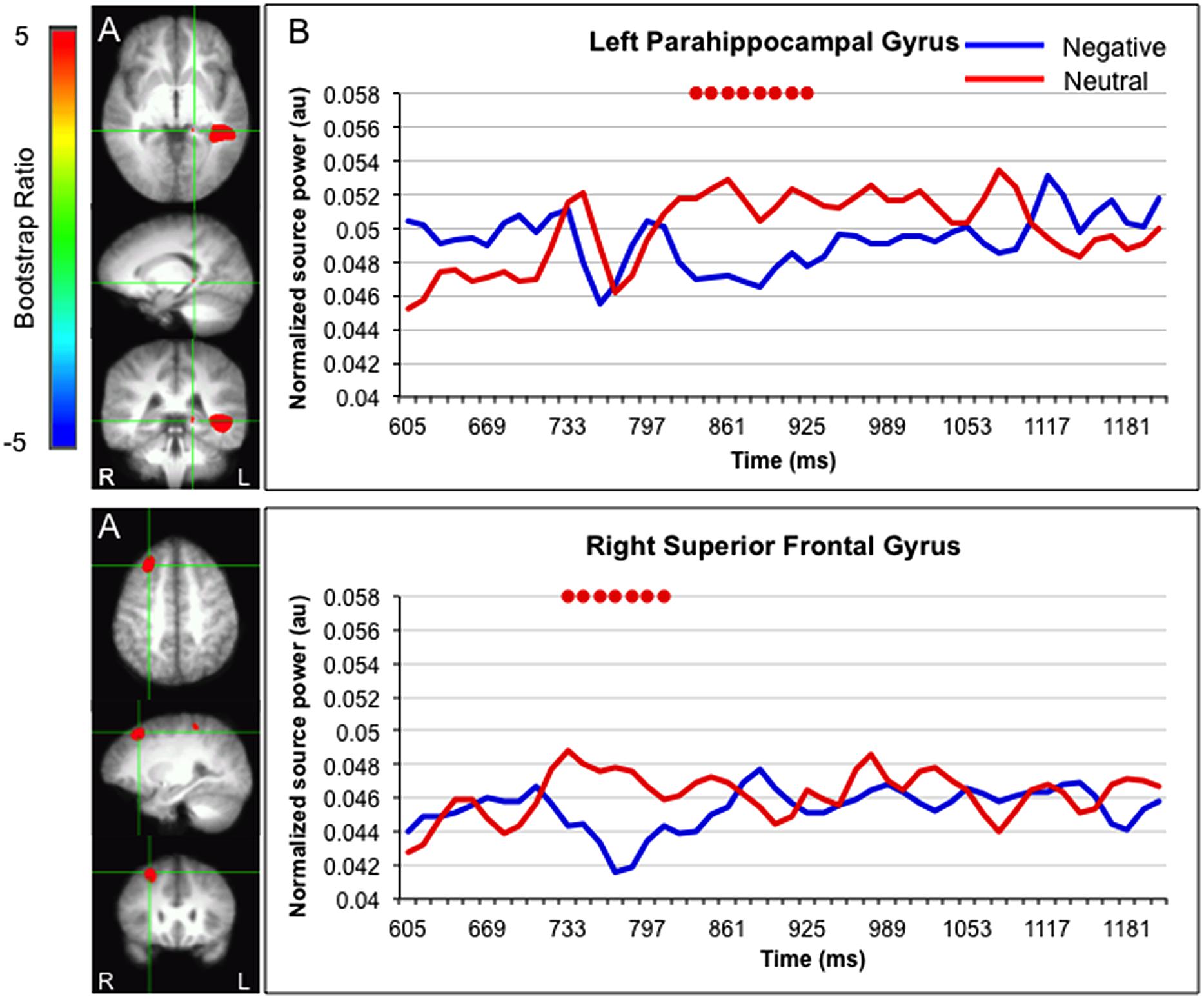

Greater activation for faces paired with neutral versus negative sentences was found in the left parahippocampal gyrus (835–925 ms) and right superior frontal gyrus (733–810 ms; Figure 4).

FIGURE 4. Sources showing stronger activation for faces paired with neutral as compared to negative sentences. (A) The brain areas associated with the PLS bootstrap ratio more than 3 in the LV1. (B) Corresponding ER-SAM waveforms from sources identified in PLS bootstrap ratio maps. Red dots denote bootstrap ratios >3. Since no significant differences were found for 0–600 ms, the x-axis begins at around 600 ms in order to fully visualize the emotion-modulated differences observed.

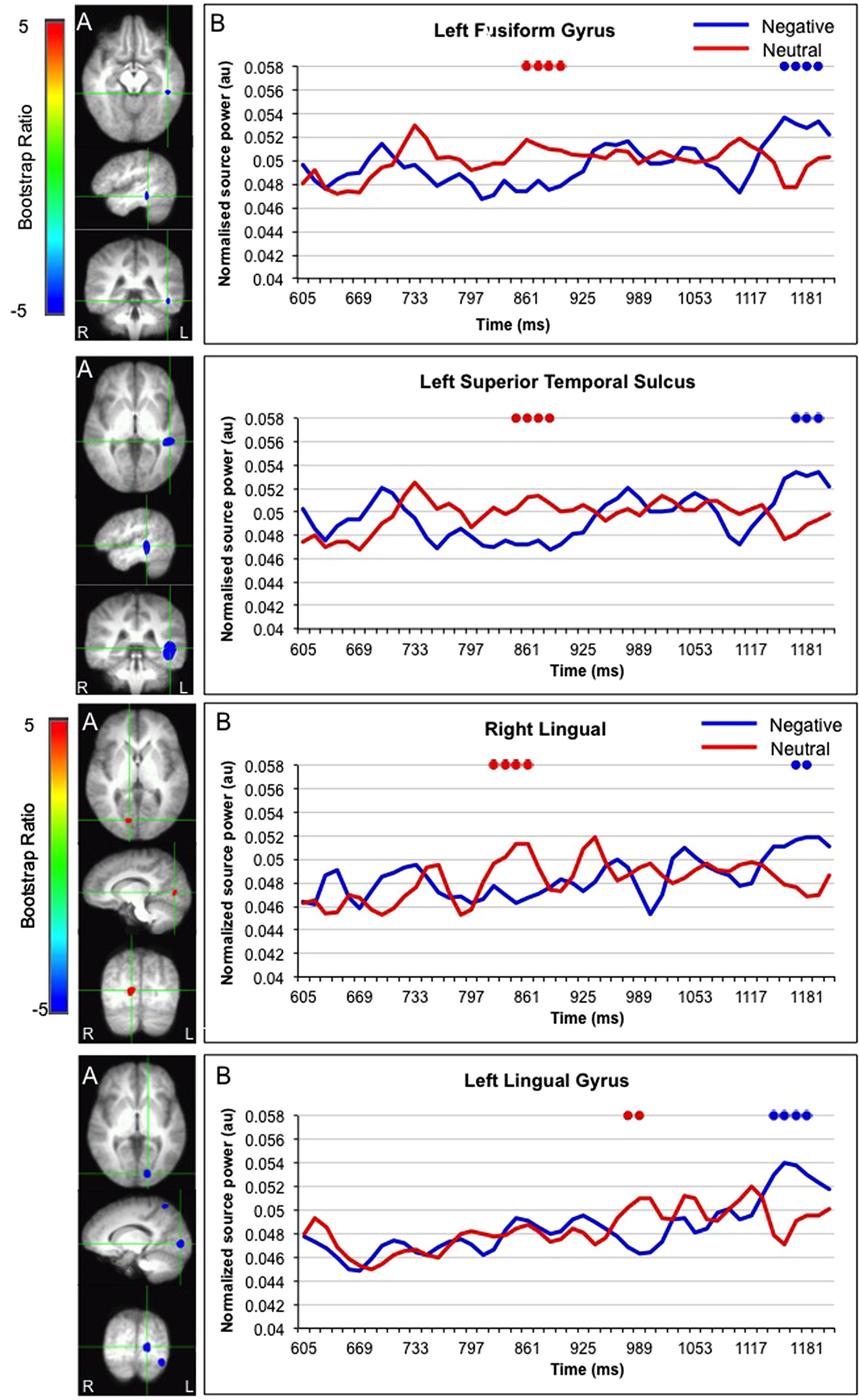

Interestingly, activation in the fusiform gyrus, left superior temporal gyrus, and bilateral lingual gyrus initially showed a larger response for faces paired with neutral versus negative sentences, but ultimately showed a larger response for faces paired with negative sentences (Figure 5).

FIGURE 5. Sources initially showing stronger activation for faces paired with neutral as compared to negative sentences, and subsequently stronger activation for faces paired with negative as compared to neutral sentences. (A) The brain areas associated with the PLS bootstrap ratio of less than –3 and more than 3 in the LV1. (B) Corresponding ER-SAM waveforms from sources identified in PLS bootstrap ratio maps. Blue dots denote bootstrap ratios <–3, and red dots denote bootstrap ratios >3. Since no significant differences were found for 0–600 ms, the x-axis begins at around 600 ms in order to fully visualize the emotion-modulated differences observed.

In summary, presentation of negative versus neutral information led to differences in the subsequent viewing and processing of neutral faces.
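The bootstrap ratio thresholds of ±3 used in Figures 3–5 can be illustrated with a minimal sketch. It assumes the usual PLS convention in which each salience is divided by the standard error of its bootstrap distribution; the function names, array shapes, and the use of the sample standard deviation as the standard error are illustrative assumptions, not a description of the software used in this study.

```python
import numpy as np


def bootstrap_ratios(saliences, boot_saliences):
    """Divide each salience by the standard error of its bootstrap distribution.

    saliences:      array of shape (n_elements,), e.g., one value per source/time point
    boot_saliences: array of shape (n_bootstraps, n_elements)
    Both names and shapes are hypothetical and chosen for illustration.
    """
    saliences = np.asarray(saliences, dtype=float)
    boot_saliences = np.asarray(boot_saliences, dtype=float)
    # Standard error estimated as the standard deviation across bootstrap resamples.
    standard_error = boot_saliences.std(axis=0, ddof=1)
    return saliences / standard_error


def threshold_masks(ratios, cutoff=3.0):
    """Return boolean masks for ratios below -cutoff and above +cutoff.

    In the figure captions, ratios < -3 mark negative > neutral (blue dots)
    and ratios > 3 mark neutral > negative (red dots).
    """
    ratios = np.asarray(ratios, dtype=float)
    return ratios < -cutoff, ratios > cutoff
```

Under this convention, a ratio beyond ±3 indicates that a salience is large relative to its variability across resamples, which is why only such sources are marked in the waveform plots.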

Eye Movement-Locked MEG Analysis

Given that differences in viewing neutral faces following negative versus neutral sentences were not observed until after participants’ gaze entered the eye region of the face (∼400 ms after stimulus onset), we also examined temporal differences in neural activity for the above regions, time-locked to the moment when eye movements first entered the eye region of the face (i.e., this moment was marked as time 0). ER-SAM waveforms were extracted for each participant and condition (0–1000 ms), source activity was averaged within time bins of 100 ms, and emotion-modulated differences were examined using paired-sample t-tests. After correcting for multiple comparisons, the only significant time period to emerge between Face2-Negative and Face2-Neutral was 700–800 ms in the precuneus [t(11) = 4.19, p < 0.001]. Specifically, following fixation of the eye region, activity in the precuneus was stronger for faces presented after a negative versus neutral sentence.
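The binning and testing steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the waveforms are taken to be arrays of shape (participants × samples) already time-locked to the first fixation on the eye region, and a Bonferroni correction across bins is assumed because the text does not specify the correction method. The function name and parameters are hypothetical.

```python
import numpy as np
from scipy import stats


def binned_paired_ttests(cond_a, cond_b, times_ms, bin_ms=100,
                         t_min=0, t_max=1000, alpha=0.05):
    """Average source activity within time bins and compare conditions per bin.

    cond_a, cond_b: arrays of shape (n_participants, n_samples)
    times_ms:       array of shape (n_samples,), time relative to fixation onset
    Bonferroni correction across bins is an assumption made for this sketch.
    """
    cond_a = np.asarray(cond_a, dtype=float)
    cond_b = np.asarray(cond_b, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)

    edges = np.arange(t_min, t_max + bin_ms, bin_ms)
    n_bins = len(edges) - 1
    results = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (times_ms >= lo) & (times_ms < hi)
        # One mean value per participant and condition for this bin.
        a = cond_a[:, in_bin].mean(axis=1)
        b = cond_b[:, in_bin].mean(axis=1)
        t_value, p_value = stats.ttest_rel(a, b)
        # Bonferroni: multiply each p-value by the number of bins tested.
        p_adjusted = min(float(p_value) * n_bins, 1.0)
        results.append({
            "bin_ms": (int(lo), int(hi)),
            "t": float(t_value),
            "df": cond_a.shape[0] - 1,
            "p_adjusted": p_adjusted,
            "significant": bool(p_adjusted < alpha),
        })
    return results
```

With 12 participants, each test has 11 degrees of freedom, matching the t(11) reported for the precuneus. A less conservative correction could be substituted without changing the binning logic.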

Discussion

The presentation of negative information changed the way in which neutral faces were subsequently viewed and the neural systems that were engaged following early perceptual processing. Further, the novel use of simultaneous eye movement and MEG recordings allowed us to explore the direct relationship between the influence of emotion on viewing behavior and neural activity. This was achieved by locking the MEG signal to specific eye movement behavior; here, when the eye movements first moved into the eye region of the neutral faces. In the next sections, we discuss our results in light of prior findings regarding how emotion may modulate processing of neutral information, and how the current work may inform theories regarding the influence of emotion on perception and memory.

Emotion-Modulated Viewing of Neutral Faces

Emotional details influenced the immediate viewing of neutral faces. Specifically, when faces were presented after a negative versus a neutral sentence, there was increased viewing of the eye region during the first gaze to that region. While the first gaze occurred between 400 and 1400 ms after the onset of Face 2, it was the very first instance in which the eye region was fixated. Given that direct fixation allows more information to be derived from the fovea than could otherwise be gleaned from the periphery, this may suggest that perceptual processing of the eyes themselves is still occurring at this point (even at 400 ms post-onset). Therefore, this change in duration of viewing may reflect a change in the manner by which perceptual processing is occurring (Aviezer et al., 2008). Previous studies have shown that the viewing of threat-related versus non-threat-related facial expressions is also characterized by increased viewing of the eye region (Adolphs et al., 2005; Gamer and Buchel, 2009; Itier and Batty, 2009). Within the current experiment, the neutral faces may have taken on emotional qualities and elicited viewing patterns similar to those reported for the viewing of faces that are actually expressing emotion. Further work is needed to directly compare viewing of faces expressing emotion and viewing of neutral faces presented with emotional details.

Alternatively, emotion-modulated differences in viewing neutral faces may reflect processing differences that occur after perceptual processing has been completed. Specifically, after building a visual percept of the neutral face (Face 1), participants may have bound the sentence information to the neutral face during subsequent viewing (Face 2). Faces paired with negative versus neutral sentences may have invoked a greater need to appraise or reappraise the face, leading to increased viewing of regions of the face that are most informative (i.e., the eyes). Consistent with the axiom that the eyes may be a “window to the soul,” previous research shows that viewing of the eye region is associated with the assessment of interest, threat, and intentions of other people (Haxby et al., 2002; Spezio et al., 2007).

Emotion-Modulated Processing of Neutral Faces

In addition to examining emotion-modulated differences in viewing, we also examined the extent to which presenting negative information immediately prior may influence underlying neural processing of the neutral face, and precisely when emotion may exert its influence. Specifically, if emotion-modulated processing of neutral faces occurred early, i.e., within the first 250 ms, then this would suggest that emotion changes perceptual processing of subsequent information. However, our analysis did not reveal any significant spatiotemporal differences in processing neutral faces presented after negative versus neutral sentences within the first 250 ms after stimulus onset. This is consistent with the MEG study by Morel et al. (2012), which also did not find any early neural differences between faces paired with negative versus neutral information. While Morel and colleagues only examined neural differences in the first 0–200 ms after stimulus onset, the current results showed that emotion-modulated neural differences emerged 600–1200 ms after stimulus onset. This time frame is consistent with, albeit a little later than, the EMM results, which revealed emotion-modulated viewing differences between 400 and 1400 ms.

The current pattern of results suggests that emotion may modulate later stages of processing such as binding the sentence to the face and/or the appraisal of the neutral face (at least immediately during encoding). The current results also suggest that the presentation of emotional information may drive participants to direct more viewing to informative regions of the face such as the eyes. In doing so, this may increase neural activity in regions implicated in emotion processing, resulting in the construction of a different type of internal representation as compared to faces paired with a neutral sentence. Interestingly, whereas the current study found that emotional information modulated the immediate processing of neutral faces between 600 and 1200 ms, Smith et al. (2004) found that emotion modulated subsequent retrieval of neutral items as early as 300 ms following stimulus onset. This suggests that not only does association with emotion influence one’s representation of neutral information, but that this may become stronger over time (resulting in faster retrieval) as the links between emotional and neutral items become consolidated or as the emotional information becomes a feature of (i.e., blended into) the neutral item.

In support of the notion that emotion may lead to the construction of a different type of representation, processing neutral faces following negative versus neutral sentences elicited stronger activity in neural regions implicated in emotion processing such as the amygdala, cingulate, and medial prefrontal cortex (Lane et al., 1997; Culham and Kanwisher, 2001; Anderson et al., 2003; Kensinger and Corkin, 2003; Adolphs et al., 2005). It is possible that increased activity in these regions drove subsequent changes in the posterior cortices, leading to increased appraisal or reappraisal of neutral faces following negative as compared to neutral sentences. Consistent with this, activation differences in emotion-related regions were followed by differences in neural activity in the precuneus and inferior parietal lobule, which have been linked to the maintenance of attention and the processing of salient information (Culham and Kanwisher, 2001; Singh-Curry and Husain, 2009). In the current results, activation peaks in these two parietal regions were followed by peaks in other emotion processing regions such as the cingulate and medial prefrontal cortex (Culham and Kanwisher, 2001; Kensinger and Corkin, 2003). This may reflect the retrieval and/or attachment of emotional information to a neutral face (Maratos et al., 2001; Smith et al., 2004; Fenker et al., 2005; Medford et al., 2005) and/or the appraisal and assessment of the neutral face in light of the negative sentence.

When we explored the precise relationship between eye movement behavior and neural activity further by locking the MEG results to the time at which participants’ eye movements first moved into the eye region of the neutral faces, activity in the precuneus was observed. Specifically, presentation of neutral faces following negative versus neutral sentences elicited increased activity in the precuneus 700–800 ms following fixation on the eye region (∼1100–1200 ms after stimulus onset). In addition to attention, the precuneus has also been implicated in episodic memory retrieval, mental imagery, self-referential activity, and theory of mind (ToM); more specifically, relative increases in responses arise in the precuneus when making judgments of others’ actions and intentions compared to making judgments of the self (Cavanna and Trimble, 2006). It has been suggested that judgment of others may elicit a particularly vivid representation of the self in order to “put oneself in another’s shoes.” Thus, the viewing of neutral faces following emotional versus neutral information may increase the likelihood of retrieving the specific information presented before the face, engaging in mental imagery (i.e., some of the sentences were quite graphic), and/or evaluating the face with regard to its potential congruence with the sentence, risk, and/or effect on the self.

A number of regions, namely the fusiform gyrus, STS and bilateral lingual gyrus, initially showed stronger responses for faces presented after neutral versus negative sentences, but then showed stronger responses for faces presented after negative versus neutral sentences. The STS has been shown to be involved in inferring the intentions and attributes of other people (Winston et al., 2002) and the representation of biographical information (Haxby et al., 2002; Todorov et al., 2007). In this way, emotional information may modulate and change encoding processes, possibly via enhanced activation of visual processing regions in the occipital cortex and specific face processing modules in the fusiform gyrus (Halgren et al., 2000; Prince et al., 2009). Critically, these processes may be delayed for neutral faces presented after negative versus neutral sentences because the emotional significance of the face and/or the congruence between the physical properties of the face and preceding biographical information must first be evaluated or considered. Taken together, results from MEG suggest that emotional information may influence the processing of neutral faces via a parietal-limbic-frontal network that may drive, as well as be modulated by, eye movement behavior.

Interestingly, processing of faces presented after neutral versus negative sentences elicited stronger activation in the superior frontal gyrus and within the medial temporal lobe, i.e., in the parahippocampal gyrus, which may reflect enhanced processing of the sentence and the blending/binding of that information to the neutral face. This was somewhat counterintuitive as it seemed that it would be more important and relevant to bind emotionally salient information to the neutral face rather than affectively neutral information. However, there is some suggestion in the literature that while processing and memory of associated neutral information is mediated by the medial temporal lobes, processing and memory of associated emotional information may be more dependent on regions such as the amygdala and temporal poles (Phelps and Sharot, 2008). For example, Todorov and Olson (2008) presented participants with neutral faces paired with positive or negative sentences and later asked them to rate each face on scales of likeability, trustworthiness, and competence, and to make a forced-choice judgment of preference. They found that while healthy controls and patients with hippocampal damage showed learning effects (i.e., preferring faces previously paired with positive versus negative behaviors), patients with damage to the amygdala and temporal poles did not. In light of this, it is possible that in the present experiment, participants built different types of representations for the face-sentence pairings depending on whether the sentence was negative or neutral. Specifically, participants may have built a parahippocampal-based representation of faces with neutral sentences, and an emotion system-based representation of faces with negative sentences. However, it is important to note that in the current study, differences in neural activity were relative rather than absolute. In this way, the evidence lends support to the notion that binding/blending of emotional versus neutral information may depend more on one system versus another, not that they rely only on one system versus another.

Considerations and Future Directions

There are a few considerations regarding the current study that should be noted. First, we preprocessed the MEG data using a PCA approach, which is widely accepted and commonly used in EEG and MEG studies to identify and reject potential large artifacts such as those related to eye blinks and movement. However, small residuals below our cutoff threshold of 1.5 pT, such as those related to saccades, may remain in the data and affect the quality of source localization, especially in anterior frontal regions. Previous studies have shown that eye artifacts can ‘leak’ into anterior frontal, inferior temporal, and occipital sources (Bardouille et al., 2006; Carl et al., 2012) and/or mask weaker activity from these regions (e.g., Fatima et al., 2013). This is an inherent problem in MEG studies, and various methods have been applied to reduce artifact noise, such as the PCA approach used in the present study, manual and automated rejection of trials containing artifacts (e.g., de Hoyos et al., 2014; Gonzalez-Moreno et al., 2014), dipole modeling (e.g., Berg and Scherg, 1991), and independent component analysis (ICA; e.g., Mantini et al., 2008; Fatima et al., 2013). To the best of our knowledge, no study has systematically reviewed the above-mentioned methods and compared their relative efficacy. However, no method is likely to be perfect, and MEG results need to be interpreted in the context of converging evidence. This becomes especially relevant in studies where emotional stimuli are used (e.g., faces expressing emotion and IAPS pictures) and differences in eye movements are elicited, even if this is not explicitly measured. One solution is to instruct participants to maintain a central fixation and not move their eyes. However, while this reduces potential eye movement artifacts, it also reduces ecological validity and results in different cognitive processes (e.g., inhibition of eye movements) as compared to when participants are allowed to freely explore. In the present study, it is important to note that although differences in eye movements were found between the experimental conditions, it is unlikely that they were time-locked to the presentation of the face (i.e., scanning each face in exactly the same manner). Further, the neural differences that we observed were consistent with those reported in other emotion and face processing studies, and not limited to anterior regions near the eyes. Therefore, differences in time-locked neural activity likely reflect underlying cognitive processes, and not merely differences in scanning.
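To make the limitation of threshold-based PCA artifact rejection concrete, the following is a minimal sketch of the general idea. It assumes an epoch stored as a channels × samples array in tesla, and it assumes that a principal component is rejected when the peak field it contributes at any sensor exceeds the 1.5 pT cutoff; this per-component criterion, the function name, and the data layout are illustrative assumptions rather than the exact procedure applied here.

```python
import numpy as np


def remove_large_components(epoch, threshold=1.5e-12):
    """Remove principal components whose peak amplitude exceeds a threshold.

    epoch:     array of shape (n_channels, n_samples), in tesla
    threshold: cutoff in tesla (1.5e-12 T = 1.5 pT)
    Returns the cleaned epoch and the number of components removed.
    The per-component peak criterion is an assumption made for this sketch.
    """
    epoch = np.asarray(epoch, dtype=float)
    # Singular value decomposition: epoch = u @ diag(s) @ vt.
    u, s, vt = np.linalg.svd(epoch, full_matrices=False)
    # Largest absolute field that each component contributes at any sensor and sample.
    peak = s * np.abs(u).max(axis=0) * np.abs(vt).max(axis=1)
    keep = peak <= threshold
    # Rebuild the epoch from the retained components only.
    cleaned = (u[:, keep] * s[keep]) @ vt[keep, :]
    return cleaned, int((~keep).sum())
```

Any component whose peak stays below the cutoff is retained by construction, which is how small saccade-related residuals could survive this kind of preprocessing.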

Second, in the present study, the delay between the sentence and second presentation of the face was short (500 ms). With such a short time window, one could argue that differences in viewing Face 2 represent a carryover effect from processing the preceding sentence, or that there are stimulus-general processing effects that occur in response to the presentation of the emotional information. However, if this were the case, then presumably emotion-modulated differences should have occurred very early during Face 2 and dissipated over time. That is, it is not clear why either a carryover effect or a stimulus-general effect of emotion would be initially offline and then re-invoked later during processing. The fact that neural differences were observed later during viewing suggests that it may be the retrieval and/or conceptual evaluation of the face that is influencing ongoing processing, rather than a change in the general state. Although we did not observe any differences in early time windows, it will be important for future studies to control for potential carryover effects.

Lastly, this study was limited to the examination of the influence of negative emotions on the immediate processing of subsequent neutral information. It will be important for future work to explore how valence affects processing of neutral information (i.e., positive versus negative), and how emotions may alter long-term memory of neutral information (e.g., Mather and Knight, 2008; Guillet and Arndt, 2009; Riggs et al., 2010; Madan et al., 2012; Sakaki et al., 2014).

Conclusion

This work demonstrates that emotions can alter processing of otherwise neutral information by changing overt viewing patterns. This adds to the considerable literature showing that visual processing is determined not only by bottom–up physical characteristics, but also by top–down influences such as prior knowledge, memory, and context (Aviezer et al., 2008; Ryan et al., 2008). Processing of faces paired with negative sentences relied more on a neural network mediated by regions involved in emotional processing, whereas processing of faces paired with neutral sentences relied more on regions involved in memory (i.e., the parahippocampal gyrus). This suggests that not only do emotional details influence subsequent online processing of the neutral information to which they pertain, but they may also alter the type of representation that is formed. This may have important implications for understanding clinical disorders such as anxiety, post-traumatic stress disorder, and depression, where seemingly neutral stimuli may trigger an emotional response. Further, while Herdman and Ryan (2007) demonstrated the feasibility of combining eye movement and neuroimaging data within a single paradigm, the current study represents the first application of this approach to the study of emotion and memory. More generally, the simultaneous recording of eye movements and neural activity can serve to elucidate the relationship between behavior, neural activity, and cognitive processing.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors wish to thank Helen Dykstra, Nathaniel So, and Amy Oziel for their assistance in data collection. The authors also wish to thank Sandra Moses, Bernhard Ross, Zainab Fatima, and Sam Doesburg for their advice and technical assistance. This work was funded by Natural Sciences and Engineering Research Council and Canada Research Chair grants awarded to Jennifer D. Ryan, and a research studentship from Ontario Mental Health Foundation to Lily Riggs.

Footnotes

- ^ Of note, although emotion includes both positive and negative valenced information, which may have different consequences when paired with neutral information (e.g., Morel et al., 2012), we focused on the effects of negative emotion. For simplicity, all references to ‘emotion’ in this paper concern negative emotion only.

References

Adolphs, R. (2002). Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12, 169–177. doi: 10.1016/S0959-4388(02)00301-X

Adolphs, R. (2003). Cognitive neuroscience of human social behaviour. Nat. Rev. Neurosci. 4, 165–178. doi: 10.1038/nrn1056

Adolphs, R., Gosselin, F., Buchanan, T. W., Tranel, D., Schyns, P., and Damasio, A. R. (2005). A mechanism for impaired fear recognition after amygdala damage. Nature 433, 68–72. doi: 10.1038/nature03086

Anderson, A. K., Christoff, K., Panitz, D., De Rosa, E., and Gabrieli, J. D. (2003). Neural correlates of the automatic processing of threat facial signals. J. Neurosci. 23, 5627–5633.

Aviezer, H., Hassin, R. R., Ryan, J., Grady, C., Susskind, J., Anderson, A., et al. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19, 724–732. doi: 10.1111/j.1467-9280.2008.02148.x

Badgaiyan, R. D., Schacter, D. L., and Alpert, N. M. (2002). Retrieval of relational information: a role for the left inferior prefrontal cortex. Neuroimage 17, 393–400. doi: 10.1006/nimg.2002.1219

Bar, M., Neta, M., and Linz, H. (2006). Very first impressions. Emotion 6, 269–278. doi: 10.1037/1528-3542.6.2.269

Bardouille, T., Picton, T. W., and Ross, B. (2006). Correlates of eye blinking as determined by synthetic aperture magnetometry. Clin. Neurophysiol. 117, 952–958. doi: 10.1016/j.clinph.2006.01.021

Berg, P., and Scherg, M. (1991). Dipole modelling of eye activity and its application to the removal of eye artefacts from the EEG and MEG. Clin. Phys. Physiol. Meas. 12(Suppl. A), 49–54. doi: 10.1088/0143-0815/12/A/010

Calder, A. J., Young, A. W., Keane, J., and Dean, M. (2000). Configural information in facial expression perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 527–551. doi: 10.1037/0096-1523.26.2.527

Calvo, M. G., and Lang, P. J. (2004). Gaze patterns when looking at emotional pictures: motivationally biased attention. Motiv. Emot. 28, 221–243. doi: 10.1023/B:MOEM.0000040153.26156.ed

Calvo, M. G., and Lang, P. J. (2005). Parafoveal semantic processing of emotional visual scenes. J. Exp. Psychol. Hum. Percept. Perform. 31, 502–519. doi: 10.1037/0096-1523.31.3.502

Carl, C., Açik, A., König, P., Engel, A. K., and Hipp, J. F. (2012). The saccadic spike artifact in MEG. Neuroimage 59, 1657–1667. doi: 10.1016/j.neuroimage.2011.09.020

Cavanna, A. E., and Trimble, M. R. (2006). The precuneus: a review of its functional anatomy and behavioural correlates. Brain 129, 564–583. doi: 10.1093/brain/awl004

Chen, Y. H., Dammers, J., Boers, F., Leiberg, S., Edgar, J. C., Roberts, T. P., et al. (2009). The temporal dynamics of insula activity to disgust and happy facial expressions: a magnetoencephalography study. Neuroimage 47, 1921–1928. doi: 10.1016/j.neuroimage.2009.04.093

Cheyne, D., Bakhtazad, L., and Gaetz, W. (2006). Spatiotemporal mapping of cortical activity accompanying voluntary movements using an event-related beamforming approach. Hum. Brain Mapp. 27, 213–229. doi: 10.1002/hbm.20178

Cohen, N. J., Ryan, J., Hunt, C., Romine, L., Wszalek, T., and Nash, C. (1999). Hippocampal system and declarative (relational) memory: summarizing the data from functional neuroimaging studies. Hippocampus 9, 83–98. doi: 10.1002/(SICI)1098-1063(1999)9:1<83::AID-HIPO9>3.0.CO;2-7

Cornwell, B. R., Carver, F. W., Coppola, R., Johnson, L., Alvarez, R., and Grillon, C. (2008). Evoked amygdala responses to negative faces revealed by adaptive MEG beamformers. Brain Res. 1244, 103–112. doi: 10.1016/j.brainres.2008.09.068

Culham, J. C., and Kanwisher, N. G. (2001). Neuroimaging of cognitive functions in human parietal cortex. Curr. Opin. Neurobiol. 11, 157–163. doi: 10.1016/S0959-4388(00)00191-4

Deffke, I., Sander, T., Heidenreich, J., Sommer, W., Curio, G., Trahms, L., et al. (2007). MEG/EEG sources of the 170-ms response to faces are co-localized in the fusiform gyrus. Neuroimage 35, 1495–1501. doi: 10.1016/j.neuroimage.2007.01.034

de Hoyos, A., Portillo, J., Marín, P., Maestú, F. M. J. L., and Hernando, A. (2014). Clustering strategies for optimal trial selection in multisensor environments. An eigenvector based approach. J. Neurosci. Methods 222, 1–14. doi: 10.1016/j.jneumeth.2013.10.008

Donaldson, D. I., and Rugg, M. D. (1999). Event-related potential studies of associative recognition and recall: electrophysiological evidence for context dependent retrieval processes. Brain Res. Cogn. Brain Res. 8, 1–16. doi: 10.1016/S0926-6410(98)00051-2

Fatima, Z., Quraan, M. A., Kovacevic, N., and McIntosh, A. R. (2013). ICA-based artifact correction improves spatial localization of adaptive spatial filters in MEG. Neuroimage 78, 284–294. doi: 10.1016/j.neuroimage.2013.04.033

Fenker, D. B., Schott, B. H., Richardson-Klavehn, A., Heinze, H. J., and Duzel, E. (2005). Recapitulating emotional context: activity of amygdala, hippocampus and fusiform cortex during recollection and familiarity. Eur. J. Neurosci. 21, 1993–1999. doi: 10.1111/j.1460-9568.2005.04033.x

Fujioka, T., Fidali, B. C., and Ross, B. (2014). Neural correlates of intentional switching from ternary to binary meter in a musical hemiola pattern. Front. Psychol. 5:1257. doi: 10.3389/fpsyg.2014.01257

Fujioka, T., Zendel, B. R., and Ross, B. (2010). Endogenous neuromagnetic activity for mental hierarchy of timing. J. Neurosci. 30, 3458–3466. doi: 10.1523/JNEUROSCI.3086-09.2010

Gamer, M., and Buchel, C. (2009). Amygdala activation predicts gaze toward fearful eyes. J. Neurosci. 29, 9123–9126. doi: 10.1523/JNEUROSCI.1883-09.2009

Gonzalez-Moreno, A., Aurtenetxe, S., Lopez-Garcia, M. E., del Pozo, F., Maestu, F., and Nevado, A. (2014). Signal-to-noise ratio of the MEG signal after preprocessing. J. Neurosci. Methods 222, 56–61. doi: 10.1016/j.jneumeth.2013.10.019

Green, M. J., Williams, L. M., and Davidson, D. (2003). Visual scanpaths to threat-related faces in deluded schizophrenia. Psychiatry Res. 119, 271–285. doi: 10.1016/S0165-1781(03)00129-X

Guillet, R., and Arndt, J. (2009). Taboo words: the effect of emotion on memory for peripheral information. Mem. Cogn. 37, 866–879. doi: 10.3758/MC.37.6.866

Halgren, E., Raij, T., Marinkovic, K., Jousmaki, V., and Hari, R. (2000). Cognitive response profile of the human fusiform face area as determined by MEG. Cereb. Cortex 10, 69–81. doi: 10.1093/cercor/10.1.69

Hämäläinen, M., Hari, R., Ilmoniemi, R. J., Knuutila, J., and Lounasmaa, O. V. (1993). Magnetoencephalography — theory, instrumentation, and applications to noninvasive studies of the working human brain. Rev. Mod. Phys. 65, 413–496. doi: 10.1103/RevModPhys.65.413

Hannula, D. E., Althoff, R. R., Warren, D. E., Riggs, L., Cohen, N. J., and Ryan, J. D. (2010). Worth a glance: using eye movements to investigate the cognitive neuroscience of memory. Front. Hum. Neurosci. 4:166. doi: 10.3389/fnhum.2010.00166

Hari, R., Levanen, S., and Raij, T. (2000). Timing of human cortical functions during cognition: role of MEG. Trends Cogn. Sci. (Regul. Ed.) 4, 455–462. doi: 10.1016/S1364-6613(00)01549-7

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67. doi: 10.1016/S0006-3223(01)01330-0

Herdman, A. T., and Ryan, J. D. (2007). Spatio-temporal brain dynamics underlying saccade execution, suppression, and error-related feedback. J. Cogn. Neurosci. 19, 420–432. doi: 10.1162/jocn.2007.19.3.420

Huang, M. X., Mosher, J. C., and Leahy, R. M. (1999). A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Phys. Med. Biol. 44, 423–440. doi: 10.1088/0031-9155/44/2/010

Hung, Y., Smith, M. L., Bayle, D. J., Mills, T., Cheyne, D., and Taylor, M. J. (2010). Unattended emotional faces elicit early lateralized amygdala-frontal and fusiform activations. Neuroimage 50, 727–733. doi: 10.1016/j.neuroimage.2009.12.093

Itier, R. J., and Batty, M. (2009). Neural bases of eye and gaze processing: the core of social cognition. Neurosci. Biobehav. Rev. 33, 843–863. doi: 10.1016/j.neubiorev.2009.02.004

Kensinger, E. A., and Corkin, S. (2003). Memory enhancement for emotional words: are emotional words more vividly remembered than neutral words? Mem. Cogn. 31, 1169–1180. doi: 10.3758/BF03195800

Kobayashi, T., and Kuriki, S. (1999). Principal component elimination method for the improvement of S/N in evoked neuromagnetic field measurements. IEEE Trans. Biomed. Eng. 46, 951–958. doi: 10.1109/10.775405

Lagerlund, T. D., Sharbrough, F. W., and Busacker, N. E. (1997). Spatial filtering of multichannel electroencephalographic recordings through principal component analysis by singular value decomposition. J. Clin. Neurophysiol. 14, 73–82. doi: 10.1097/00004691-199701000-00007

Lane, R. D., Reiman, E. M., Ahern, G. L., Schwartz, G. E., and Davidson, R. J. (1997). Neuroanatomical correlates of happiness, sadness, and disgust. Am. J. Psychiatry 154, 926–933.

Lins, O. G., Picton, T. W., Berg, P., and Scherg, M. (1993). Ocular artifacts in recording EEGs and event-related potentials. II. Source dipoles and source components. Brain Topogr. 6, 65–78. doi: 10.1007/BF01234128

Loftus, G. R., and Mackworth, N. H. (1978). Cognitive determinants of fixation location during picture viewing. J. Exp. Psychol. Hum. Percept. Perform. 4, 565–572. doi: 10.1037/0096-1523.4.4.565

Madan, C. R., Caplan, J. B., Lau, C. S. M., and Fujiwara, E. (2012). Emotional arousal does not enhance association-memory. J. Mem. Lang. 66, 695–716. doi: 10.1016/j.jml.2012.04.001

Mantini, D., Franciotti, R., Romani, G. L., and Pizzella, V. (2008). Improving MEG source localizations: an automated method for complete artifact removal based on independent component analysis. Neuroimage 40, 160–173. doi: 10.1016/j.neuroimage.2007.11.022

Maratos, E. J., Dolan, R. J., Morris, J. S., Henson, R. N., and Rugg, M. D. (2001). Neural activity associated with episodic memory for emotional context. Neuropsychologia 39, 910–920. doi: 10.1016/S0028-3932(01)00025-2

Mather, M., and Knight, M. (2008). The emotional harbinger effect: poor context memory for cues that previously predicted something arousing. Emotion 8, 850–860. doi: 10.1037/a0014087

McIntosh, A. R., Chau, W. K., and Protzner, A. B. (2004). Spatiotemporal analysis of event-related fMRI data using partial least squares. Neuroimage 23, 764–775. doi: 10.1016/j.neuroimage.2004.05.018

McIntosh, A. R., and Lobaugh, N. J. (2004). Partial least squares analysis of neuroimaging data: applications and advances. Neuroimage 23(Suppl. 1), S250–S263. doi: 10.1016/j.neuroimage.2004.07.020

Medford, N., Phillips, M. L., Brierley, B., Brammer, M., Bullmore, E. T., and David, A. S. (2005). Emotional memory: separating content and context. Psychiatry Res. 138, 247–258. doi: 10.1016/j.pscychresns.2004.10.004

Miller, G. A., Elbert, T., Sutton, B. P., and Heller, W. (2007). Innovative clinical assessment technologies: challenges and opportunities in neuroimaging. Psychol. Assess. 19, 58–73. doi: 10.1037/1040-3590.19.1.58

Morel, S., Beaucousin, V., Perrin, M., and George, N. (2012). Very early modulation of brain responses to neutral faces by a single prior association with an emotional context: evidence from MEG. Neuroimage 61, 1461–1470. doi: 10.1016/j.neuroimage.2012.04.016

Moses, S. N., Ryan, J. D., Bardouille, T., Kovacevic, N., Hanlon, F. M., and McIntosh, A. R. (2009). Semantic information alters neural activation during transverse patterning performance. Neuroimage 46, 863–873. doi: 10.1016/j.neuroimage.2009.02.042

Nummenmaa, L., Hyönä, J., and Calvo, M. G. (2006). Eye movement assessment of selective attentional capture by emotional pictures. Emotion 6, 257–268.

Phelps, E. A. (2006). Emotion and cognition: insights from studies of the human amygdala. Annu. Rev. Psychol. 57, 27–53. doi: 10.1146/annurev.psych.56.091103.070234

Phelps, E. A., and Sharot, T. (2008). How (and why) emotion enhances the subjective sense of recollection. Curr. Dir. Psychol. Sci. 17, 147–152. doi: 10.1111/j.1467-8721.2008.00565.x

Prince, S. E., Dennis, N. A., and Cabeza, R. (2009). Encoding and retrieving faces and places: distinguishing process- and stimulus-specific differences in brain activity. Neuropsychologia 47, 2282–2289. doi: 10.1016/j.neuropsychologia.2009.01.021

Riggs, L., McQuiggan, D. A., Anderson, A. K., and Ryan, J. D. (2010). Eye movement monitoring reveals differential influences of emotion on memory. Front. Psychol. 1:205. doi: 10.3389/fpsyg.2010.00205

Riggs, L., McQuiggan, D. A., Farb, N., Anderson, A. K., and Ryan, J. D. (2011). The role of overt attention in emotion-modulated memory. Emotion 11, 776–785. doi: 10.1037/a0022591

Riggs, L., Moses, S. N., Bardouille, T., Herdman, A. T., Ross, B., and Ryan, J. D. (2009). A complementary analytic approach to examining medial temporal lobe sources using magnetoencephalography. Neuroimage 45, 627–642. doi: 10.1016/j.neuroimage.2008.11.018

Robinson, S. E. (2004). Localization of event-related activity by SAM(erf). Neurol. Clin. Neurophysiol. 2004, 109.

Robinson, S. E., and Vrba, J. (1999). “Functional neuroimaging by synthetic aperture magnetometry,” in Recent Advances in Biomagnetism, eds T. Yoshimoto, M. Kotani, S. Kuriki, H. Karibe, and N. Nakasato (Sendai: Tohoku University Press), 302–305.

Ross, B., and Tremblay, K. (2009). Stimulus experience modifies auditory neuromagnetic responses in young and older listeners. Hear. Res. 248, 48–59. doi: 10.1016/j.heares.2008.11.012

Ryan, J. D., Moses, S. N., Ostreicher, M. L., Bardouille, T., Herdman, A. T., Riggs, L., et al. (2008). Seeing sounds and hearing sights: the influence of prior learning on current perception. J. Cogn. Neurosci. 20, 1030–1042. doi: 10.1162/jocn.2008.20075

Sakaki, M., Ycaza-Herrera, A. E., and Mather, M. (2014). Association learning for emotional harbinger cues: when do previous emotional associations impair and when do they facilitate subsequent learning of new associations? Emotion 14, 115–129. doi: 10.1037/a0034320

Schmitz, T. W., De Rosa, E., and Anderson, A. K. (2009). Opposing influences of affective state valence on visual cortical encoding. J. Neurosci. 29, 7199–7207. doi: 10.1523/JNEUROSCI.5387-08.2009

Schweinberger, S. R., Pickering, E. C., Burton, A. M., and Kaufmann, J. M. (2002). Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia 40, 2057–2073. doi: 10.1016/S0028-3932(02)00050-7

Singh-Curry, V., and Husain, M. (2009). The functional role of the inferior parietal lobe in the dorsal and ventral stream dichotomy. Neuropsychologia 47, 1434–1448. doi: 10.1016/j.neuropsychologia.2008.11.033

Smith, A. P., Dolan, R. J., and Rugg, M. D. (2004). Event-related potential correlates of the retrieval of emotional and nonemotional context. J. Cogn. Neurosci. 16, 760–775. doi: 10.1162/089892904970816

Spezio, M. L., Huang, P. Y., Castelli, F., and Adolphs, R. (2007). Amygdala damage impairs eye contact during conversations with real people. J. Neurosci. 27, 3994–3997. doi: 10.1523/JNEUROSCI.3789-06.2007

Todorov, A., Gobbini, M. I., Evans, K. K., and Haxby, J. V. (2007). Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia 45, 163–173. doi: 10.1016/j.neuropsychologia.2006.04.018

Todorov, A., and Olson, I. R. (2008). Robust learning of affective trait associations with faces when the hippocampus is damaged, but not when the amygdala and temporal pole are damaged. Soc. Cogn. Affect. Neurosci. 3, 195–203. doi: 10.1093/scan/nsn013

Todorov, A., and Uleman, J. S. (2002). Spontaneous trait inferences are bound to actors’ faces: evidence from a false recognition paradigm. J. Pers. Soc. Psychol. 83, 1051–1065. doi: 10.1037/0022-3514.83.5.1051

Todorov, A., and Uleman, J. S. (2003). The efficiency of binding spontaneous trait inferences to actors’ faces. J. Exp. Soc. Psychol. 39, 549–562. doi: 10.1016/S0022-1031(03)00059-3

Todorov, A., and Uleman, J. S. (2004). The person reference process in spontaneous trait inferences. J. Pers. Soc. Psychol. 87, 482–493. doi: 10.1037/0022-3514.87.4.482

Van Veen, B. D., van Drongelen, W., Yuchtman, M., and Suzuki, A. (1997). Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 44, 867–880. doi: 10.1109/10.623056

Walla, P., Hufnagl, B., Lindinger, G., Imhof, H., Deecke, L., and Lang, W. (2001). Left temporal and temporoparietal brain activity depends on depth of word encoding: a magnetoencephalographic study in healthy young subjects. Neuroimage 13, 402–409. doi: 10.1006/nimg.2000.0694

Willis, J., and Todorov, A. (2006). First impressions: making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598. doi: 10.1111/j.1467-9280.2006.01750.x

Winston, J. S., Strange, B. A., O’Doherty, J., and Dolan, R. J. (2002). Automatic and intentional brain responses during evaluation of trustworthiness of faces. Nat. Neurosci. 5, 277–283. doi: 10.1038/nn816

Keywords: emotion, magnetoencephalography (MEG), neuroimaging, eye movement monitoring, faces

Citation: Riggs L, Fujioka T, Chan J, McQuiggan DA, Anderson AK and Ryan JD (2014) Association with emotional information alters subsequent processing of neutral faces. Front. Hum. Neurosci. 8:1001. doi: 10.3389/fnhum.2014.01001

Received: 02 September 2014; Accepted: 25 November 2014;

Published online: 18 December 2014.

Edited by:

Sophie Molholm, Albert Einstein College of Medicine, USA

Reviewed by:

Peter König, University of Osnabrück, Germany

Christopher R. Madan, Boston College, USA

Copyright © 2014 Riggs, Fujioka, Chan, McQuiggan, Anderson and Ryan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lily Riggs, Holland Bloorview Kids Rehabilitation Hospital, 150 Kilgour Road, Toronto, ON M4G 1R8, Canada e-mail: lriggs@hollandbloorview.ca

†Present address: Lily Riggs, Holland Bloorview Kids Rehabilitation Hospital, Toronto, ON, Canada; Takako Fujioka, Stanford University, Stanford, CA, USA; Adam K. Anderson, Cornell University, Ithaca, NY, USA
