Brain-machine interfacing control of whole-body humanoid motion

- 1Computational Neuroscience Laboratories, Department of Brain Robot Interface, Advanced Telecommunications Research Institute International (ATR), Kyoto, Japan

- 2Laboratoire d'Informatique de Robotique et de Micro-électronique de Montpellier, CNRS-University of Montpellier 2, Montpellier, France

- 3CNRS-AIST Joint Robotics Laboratory, UMI3218/CRT, National Institute of Advanced Industrial Science and Technology, Tsukuba, Japan

- 4National Institute of Information and Communications Technology, Osaka, Japan

- 5Graduate School of Frontier Biosciences, Osaka University, Osaka, Japan

In this paper we tackle the problem of controlling whole-body humanoid robot behavior through non-invasive brain-machine interfacing (BMI), motivated by the perspective of mapping human motor control strategies to a human-like mechanical avatar. Our solution reduces the controllable dimensionality of a high-DOF humanoid motion to a level in line with the state-of-the-art possibilities of non-invasive BMI technologies, leaving the complementary subspace of the motion to be planned and executed by an autonomous humanoid whole-body motion planning and control framework. The results are shown in a full physics-based simulation of a 36-degree-of-freedom humanoid whose motion is controlled by a user through EEG-extracted brain signals generated with a motor imagery task.

1. Introduction

Humanoid robots are designed to be readily used in environments that were initially arranged to accommodate the human morphology, which also makes them more acceptable to users and easier to interact with. It is therefore generally admitted that they are an appropriate choice as living assistants for everyday tasks, for instance for elderly people and/or people with reduced mobility. The problem that naturally arises is that of controlling such an assistant and of communicating the will and intentions of the user to the robot. This problem is general, but it becomes more challenging for the above-mentioned category of users, whose communication capabilities can also be impaired (stroke patients, for example). This led to our initial idea of considering brain-machine interfaces (BMI) as a possible communication tool between the human and the humanoid assistant. This reflection brought along a more general question, not necessarily application-directed: can humans use their brain motor functions to control a human-like artificial body the same way they control their own body? This question is our main motivation and concern in the present work, since solving it would pave the way for the applications discussed above. We propose our solution to it in this paper.

The approach we choose to investigate deals with the following constraints of the problem. First, we only consider easy-and-ready-to-use non-invasive BMI technologies. Second, among this class of technologies, we aim more specifically at the one that aligns best and most intuitively with our expressed desire of mimicking human motor-control function, namely motor-imagery-based BMI. Ideally, the human user would imagine a movement of their own body for it to be replicated in the humanoid body. We do not reach that ideal vision here: for the sake of feasibility demonstration, we restrict our study to a generic motor-imagery task (imagining arm movement) that we re-target to the specific motion of the robot at hand (leg motion of the robot). Finally, the control paradigm we set as the objective of our study is low-level joint/link-level control of the humanoid robot, so that the user can replicate at the robot as general a class of movements as possible, without being restricted to the class of movements allowed by particular higher-level humanoid motion controllers.

We address the related work and existing proposed solutions for this problem, or for closely related ones, in the next section (Section 2). We then detail our own solution, based on the integration of, for the humanoid motion control part, an autonomous contact-based planning and control framework (Section 3), and for the BMI part, a motor-imagery-task-generated brain-signal classification method (Section 4). The integration of these two originally independent components is discussed in Section 5, and Section 6 presents an example proof-of-concept experiment with a fully physics-simulated humanoid robot. Section 7 concludes the paper with discussion and future work.

2. Related Work and Proposed Solution

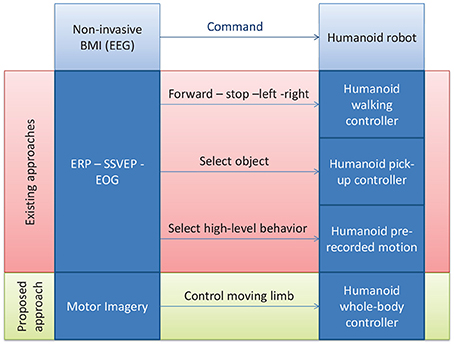

Various approaches have been proposed to solve the problem we stated in the introduction of controlling a humanoid robot with BMI (Bell et al., 2008; Bryan et al., 2011; Chung et al., 2011; Finke et al., 2011; Gergondet et al., 2011; Ma et al., 2013). All approaches, ours included, are based on the integration of a BMI technology with a humanoid controller, and can thus be categorized according to which strategy is followed for each of these two components. See Figure 1 for an overview.

Figure 1. A schematic illustration of the proposed approach vs. the existing ones for controlling a humanoid robot with non-invasive BMI.

From the BMI point of view, all these works abide by our posed constraint of using non-invasive BMIs that rely on electroencephalography (EEG). They generally utilize the well-established frameworks of visual-stimulation-based event-related potentials (ERP) such as P300 in Bell et al. (2008), evoked potentials (EP) such as the steady-state visually evoked potential (SSVEP) in Bryan et al. (2011); Chung et al. (2011); Gergondet et al. (2011), or hybrid approaches combining electrooculogram (EOG) with ERP such as in Ma et al. (2013), or P300 with motor-imagery-evoked event-related desynchronization (ERD) (Finke et al., 2011; Riechmann et al., 2011). None, however, investigated a solely motor-imagery-based BMI, which is what our motivation of replicating intuitive human motor-control strategies calls for. This is our first contribution in the integration initiative.

We adapt in this work a motor-imagery decoding scheme that we previously developed for the control of a one-degree-of-freedom robot and for sending standing-up/sitting-down commands to a wearable exoskeleton (Noda et al., 2012). It allows us to generate a three-valued discrete command that we propose to map to a one-dimensional subspace of the multi-dimensional whole-body configuration space motion of the humanoid, and more precisely the motion along a generalized notion of “vertical axis” of the moving end-limb, such as the foot of the swing leg in a biped motion for instance. As we detail in the course of the paper (Section 5), the motivation behind this strategy is to allow the user to assist the autonomous motion that might lead the moving limb to be “blocked” in potential field local minima while trying to avoid collision. The strategy can in future work be developed into a more sophisticated two-dimensional continuous command one as proven possible by recent and ongoing studies on motor-imagery control (Wolpaw and McFarland, 2004; Miller et al., 2010).

From the humanoid controller point of view, the most standard solution consists in using available high-level humanoid controllers. These can be either walking controllers with the commands “walk forward,” “stop,” “turn left,” and “turn right” sent to a walking humanoid, effectively reducing the problem of humanoid motion control to that of walk steering control (Bell et al., 2008; Chung et al., 2011; Finke et al., 2011; Gergondet et al., 2011), or an object selection/pick-up controller, where the user selects an object in the scene and then the arm reaching/grasping controller of the robot picks up the desired object (Bryan et al., 2011). Finally, Ma et al. (2013) use a hybrid control strategy where both walk steering and the selection of a high-level behavior among a finite library can be done by switching between EOG and ERP control. With these strategies, a humanoid can be seen as an arm-equipped mobile robot, with wheels instead of legs (as is actually the case in Bryan et al., 2011, where only the upper body is humanoid), and consequently the considerable amount of work done on BMI wheelchair control, for example, can be readily adapted. However, in doing so, the advantages of using a legged device over a wheeled one are partially lost. We can then no longer claim the need for the humanoid design, nor defend the argument that the robot can be used in everyday living environments, which present non-flat structures, such as stairs, that walking controllers do not handle efficiently.

We admit that these strategies relying on walking pattern generators can in the long term benefit from developments in these techniques that would allow them to autonomously cope with unstructured terrain (variable-height stairs, rough terrain) (Takanishi and Kato, 1994; Hashimoto et al., 2006; Herdt et al., 2010; Morisawa et al., 2011). They can also use the hierarchical architectures in which they are embedded, as is the case in Chung et al. (2011); Bryan et al. (2011); Ma et al. (2013), for switching, for example, to an appropriate stair-climbing controller when facing stairs. Nevertheless, we choose in this work to investigate an entirely different approach that does not incorporate any kind of walking or high-level controller. Instead, we propose to allow the user to perform lower-level joint/link-level control of the whole-body motion of the humanoid. This choice is driven again by the desire of replicating human low-level motor-control strategies in the humanoid, but also by the belief that a generic motion-generating approach will allow the robot assistant to deal more systematically with the unpredictable situations that inevitably occur in everyday living scenarios, and for which the discussed hierarchical architectures would not have exhaustively accounted. This is our second contribution. To achieve this goal, we rely on the contact planning paradigm that we previously proposed for fully autonomous robots (Bouyarmane and Kheddar, 2012), adapting it here to the instance of a BMI-controlled robot.

3. Humanoid Controller

Our humanoid controller is based on the multi-contact planning paradigm, introduced in Hauser et al. (2008); Bouyarmane and Kheddar (2012). This controller allows for autonomously planning and executing the complex high-degree-of-freedom motion of the humanoid from a high-level objective expressed in terms of a desired contact state to reach. The controller works in two stages: an off-line planning stage and an on-line execution stage.

At the planning stage (Bouyarmane and Kheddar, 2011a), a search algorithm explores all the possible contact transitions that would allow the robot to go from the initial contact state to the desired goal contact state. What we mean by contact transition is either removing one contact from the current contact state (e.g., removing the right foot from a double-support state to transition to a left-foot single-support one) or adding one contact to the current contact state (e.g., bringing the swing right foot in contact with the floor to transition from a left-foot single-support phase to a double-support phase). One must however note that a contact is defined as a pairing between any surface designated on the cover of the links of the robot and any surface of the environment, and is not restricted to be established between the soles of the feet and the floor surface. For instance, a contact can be defined between the forearm of the robot and the arm of an armchair, or between the palm of the hand of the robot and the top of a table. This strategy stems from the observation that all motions of humans can be broken down into such a succession of contact transitions, be it cyclic motions such as walking, where these transitions occur between the feet and the ground, or more complex maneuvers such as standing up from an armchair, where contact transitions occur between various parts of the body (hands, forearms, feet, thighs, etc.) and various parts of the environment (armchair, table, floor, etc.).

This feature makes our planning paradigm able to cope with situations that are broader than the ones classically tackled by humanoid motion planners, which either plan the motion assuming a given contact state (e.g., planning a reaching motion with the two feet fixed on the ground) (Kuffner et al., 2002; Yamane et al., 2004; Yoshida et al., 2006, 2008), or plan footprint placements assuming that a cyclic walking pattern will occur on these footprints (Kuffner et al., 2001; Chestnutt et al., 2003, 2005). This aligns well with our initially expressed objective of controlling whole-body motion of any kind without restriction to a subclass of taxonomically identified motions.

At the above-described contact-transition search stage, every contact state that is being explored is validated by running an inverse-kinematics solver, which finds an appropriate whole-body configuration (posture) of the robot that meets the desired contact state while at the same time satisfying physics constraints that make the posture physically realizable within the mechanical limits of the robot (Bouyarmane and Kheddar, 2010). At the end of the off-line contact planning stage, we are provided with a sequence of feasible contact transitions and associated transition postures that go from the initial contact state to the goal.

The second stage of the controller is an on-line real-time low-level controller (Bouyarmane and Kheddar, 2011b) that will successively track each of the intermediate postures fed by the off-line planning stage, until the last element of the planned sequence is reached. The controller is formulated as a multi-objective quadratic program optimization scheme, the objectives being expressed in terms of the moving link of the robot involved in the current contact transition being tracked along the sequence (e.g., the foot if the contact transition is a sole/floor one), the center of mass (CoM) of the robot to keep balance, and the whole configuration of the robot to solve for the redundancies of the high-DOF motion. These objectives are autonomously decided by a finite-state machine (FSM) that encodes the current type of transition among the following two types:

• Removing-contact transition: the motion of the robot is performed on the current contact state, and the step is completed when the contact forces applied on the contact we want to remove vanish. This is done by shifting the weight of the robot away from the being-removed contact, tracking the CoM position of the following configuration in the sequence. There is no end-link motion in this kind of step. The corresponding FSM state is labeled “Shift CoM.”

• Adding-contact transition: the motion of the robot is performed on the current contact state, and the motion of the link we want to add as a contact is guided to its desired contact location. There is thus an end-link motion (contact link) in this kind of step. Balance is ensured by also tracking the CoM position of the following configuration in the sequence. The corresponding FSM state is labeled “Move contact link.”

As an example, a cyclic walking FSM state transition sequence will look like: Move contact link (left foot) → Shift CoM (on the left foot) → Move contact link (right foot) → Shift CoM (on the right foot) → Move contact link → … Non-cyclic behaviors are also possible and allowed, for example when standing up from an armchair, where contacts between the hands of the robot and the arms of the chair can be added in succession and removed in succession.
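The correspondence between a planned contact sequence and the FSM state labels can be illustrated with a minimal Python sketch. The function name `fsm_states` and the contact labels are hypothetical and chosen for illustration only; they are not part of the framework described here. The sketch assumes that each contact state is a set of contacts and that consecutive states differ by exactly one contact.

```python
def fsm_states(contact_sequence):
    """Map a planned sequence of contact states to FSM state labels.

    Each contact state is a frozenset of contact names (hypothetical labels).
    Consecutive states must differ by exactly one added or removed contact.
    """
    states = []
    for current, following in zip(contact_sequence, contact_sequence[1:]):
        added = following - current
        removed = current - following
        if len(added) == 1 and not removed:
            # Adding-contact transition: an end-link is moved to its contact.
            states.append(("Move contact link", next(iter(added))))
        elif len(removed) == 1 and not added:
            # Removing-contact transition: weight is shifted off the contact.
            states.append(("Shift CoM", next(iter(removed))))
        else:
            raise ValueError("a transition must add or remove exactly one contact")
    return states


# Hypothetical plan for climbing one stair with both feet.
plan = [
    frozenset({"left_foot/floor", "right_foot/floor"}),
    frozenset({"right_foot/floor"}),
    frozenset({"right_foot/floor", "left_foot/stair"}),
    frozenset({"left_foot/stair"}),
    frozenset({"left_foot/stair", "right_foot/stair"}),
]
for label, contact in fsm_states(plan):
    print(label, "->", contact)
```

In this toy plan the labels alternate between “Shift CoM” and “Move contact link,” but nothing in the sketch requires a cyclic pattern: any sequence in which one contact is added or removed at a time is accepted.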

The final output of the quadratic program optimization scheme is a torque command that is sent to the robot at every control iteration, after the execution of which the state of the robot is fed back to the controller.

4. BMI Decoding

Our aim is for the humanoid system to be controlled through brain activity in regions similar to those used to control the user's own body. Therefore, we asked a subject to control the simulated humanoid system by using motor imagery of arm movements, so that brain activity in motor-related regions such as the primary motor cortex can be used.

As the non-invasive brain signal acquisition device, we use an electroencephalogram (EEG) system (64 channels, sampling rate of 2048 Hz). The brain signals are decoded and classified using the method that was applied and presented in our previous work (Noda et al., 2012), based on the spectral regularization matrix classifier described in Tomioka and Aihara (2007); Tomioka and Muller (2010). We recall the method here.

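The EEG signals, whose covariance matrices $C_t$ are taken as input, are classified into two classes, labeled with the variable $k_t \in \{-1, +1\}$, with the following output probabilities (at sampled time $t$):

$$P(k_t = +1 \mid C_t) = \frac{1}{1 + \exp(-a_t)}, \tag{1}$$

$$P(k_t = -1 \mid C_t) = \frac{1}{1 + \exp(a_t)}, \tag{2}$$

with the logit being modeled as a linear function of $C_t$

$$a_t = \mathrm{tr}\left[W^\top C_t\right] + b, \tag{3}$$

and where $W$ is the parameter matrix to be learned ($b$ is a constant-valued bias).

To learn $W$ the following minimization problem is solved over the $n$ training samples

$$\min_{W,\, b} \; \sum_{t=1}^{n} \ln\left(1 + \exp(-k_t a_t)\right) + \lambda \|W\|_1, \tag{4}$$

$\lambda$ being the regularization variable ($\lambda = 14$ in the application below) and

$$\|W\|_1 = \sum_{i=1}^{r} \sigma_i[W] \tag{5}$$

being the spectral $\ell_1$-norm of $W$ ($r$ is the rank of $W$ and $\sigma_i[W]$ its $i$-th singular value).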
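As an illustration of how the two class probabilities follow from a logit that is linear in the covariance matrix, here is a minimal pure-Python sketch. It assumes a standard logistic model with logit tr(WᵀC) + b; the function names and the toy 2×2 matrices are hypothetical, and the actual matrices would be 64×64 for a 64-channel recording.

```python
import math


def logit(W, C, b):
    """Return tr(W^T C) + b, i.e., the element-wise inner product of W and C plus a bias."""
    inner = sum(w * c for w_row, c_row in zip(W, C) for w, c in zip(w_row, c_row))
    return inner + b


def class_probabilities(W, C, b):
    """Return (P(k=+1|C), P(k=-1|C)) under the assumed logistic model."""
    a = logit(W, C, b)
    p_plus = 1.0 / (1.0 + math.exp(-a))
    return p_plus, 1.0 - p_plus


# Toy values for illustration only.
W = [[0.5, 0.0], [0.0, -0.5]]
C = [[2.0, 0.3], [0.3, 1.0]]
p_plus, p_minus = class_probabilities(W, C, b=0.0)
print(p_plus, p_minus)
```

The trace of WᵀC equals the sum of the element-wise products of W and C, which is why no explicit matrix product is needed in the sketch.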

Once the classifier is learned, the 7–30 Hz band-pass-filtered measured EEG signals are decoded online, by down-sampling them from 2048 to 128 Hz and applying Laplace filtering and common average subtraction to remove voltage bias. Their covariance matrix, initialized at $C_t = x_t^\top x_t$ for the first time step $t = 1$, where $x_t \in \mathbb{R}^{1 \times 64}$ denotes the filtered EEG signals, is updated at every time step as follows

and used to compute the probabilities in Equations (1) and (2).

Finally, the three-valued discrete command $c_t$ that is sent to the robot is selected from these probabilities through the following hysteresis

where the threshold is set at $P_\mathrm{thresh} = 0.6$.
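To make the role of the threshold concrete, the following Python sketch maps a decoded probability to a three-valued command. The dead-band rule used here is an assumption made for illustration and is not claimed to be the exact hysteresis rule of the decoder; only the threshold value 0.6 comes from the text, and the function name `command` is hypothetical.

```python
P_THRESH = 0.6  # threshold value reported in the text


def command(p_plus, p_thresh=P_THRESH):
    """Map P(k=+1|C) to a command in {-1, 0, +1}.

    Assumed dead-band rule: a non-zero command is issued only when one of
    the two class probabilities exceeds the threshold; otherwise 0 is sent.
    """
    p_minus = 1.0 - p_plus
    if p_plus > p_thresh:
        return 1
    if p_minus > p_thresh:
        return -1
    return 0


for p in (0.8, 0.55, 0.45, 0.1):
    print(p, command(p))
```

Under this assumed rule, probabilities between 0.4 and 0.6 produce no command, which prevents the way-point from being moved when the classifier is undecided.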

5. Component Integration

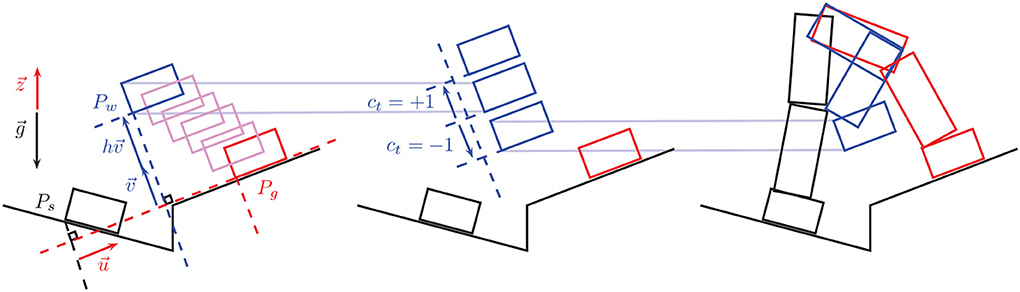

The command $c_t$ devised in Equation (7) is sent to the online humanoid whole-body controller via UDP at a frequency of 128 Hz, and is used to modify the planned and autonomously executed motion of the humanoid robot as described below and as schematically represented in Figure 2.

Figure 2. The way-point moving strategy. The rectangles in the left and middle figures represent positions of the moving foot (say the right foot, supposing the left foot is the support foot, which is fixed and not represented here). In the right figure the whole leg motion is reconstructed from the foot motion. In all three figures, the initial position of the foot/leg at the beginning of the step is in black, the controlled way-point position of the foot/leg at the middle of the step is in blue, and the planned final foot/leg position at the end of the step is in red. The left figure shows how a default position of the way-point is initialized autonomously by a translation of the final planned position: $\vec{g}$ is the gravity vector, $\vec{z}$ the vertical unit vector (opposite to $\vec{g}$), $\vec{u}$ the unit vector from the initial to the goal position along the goal planned-contact surface plane, $\vec{v}$ the generalized vertical direction unit vector, i.e., the unit vector normal to $\vec{u}$ and in the plane defined by $\vec{u}$ and $\vec{z}$, and $h$ a pre-set default height. The middle figure shows how the way-point position is controlled via the command $c_t$ sent through the motor imagery interface. Finally, the right figure shows what the resulting motion of the leg actually looks like, with the foot going through the desired way-point that was translated downwards via the command $c_t = -1$.

When the robot is executing a step that requires moving a link to a planned contact location (contact-adding step, executed by the state “Move contact link” of the FSM, see Section 3), then instead of tracking directly the goal contact location, we decompose the motion of the end-link (the contact link, for instance the foot) into two phases:

• Lift-off phase: The link first tracks an intermediate position located at a designated way-point.

• Touch-down phase: The link then tracks its goal location in the planned contact state sequence.

This two-phase decomposition allows the link to avoid unnecessary friction with the environment contact surface and to avoid colliding with environment features such as stairs.

Each of these two phases corresponds to a sub-state of the meta-state “Move contact link” of the FSM, namely:

• State “Move contact link to way-point”

• State “Move contact link to goal”

Additionally, in order to avoid stopping the motion of the contact link at the way-point and to ensure a smooth motion throughout the step, we implemented a strategy in which the transition from the former to the latter sub-state is triggered when the contact link crosses a designated threshold plane along the way, before reaching the tracked way-point.

A default position of the intermediate way-point is automatically pre-set by the autonomous framework using the following heuristic (see Figure 2, left): Let $P_s$ denote the start position of the contact link (at the beginning of the contact-adding step) and $P_g$ denote its goal position (its location in the following contact state along the planned sequence). Let $\vec{g}$ denote the gravity vector, $\vec{z}$ the unit vector opposite to $\vec{g}$, i.e., $\vec{z} = -\vec{g}/\|\vec{g}\|$, and $\vec{u}$ the unit vector from $P_{s,g}$ ($P_s$ projected on the goal-contact surface plane) to $P_g$, i.e., $\vec{u} = \overrightarrow{P_{s,g}P_g}/\|\overrightarrow{P_{s,g}P_g}\|$. Finally let $\vec{v}$ be the unit vector normal to $\vec{u}$ that lies in the plane defined by $\vec{u}$ and $\vec{z}$. The default way-point $P_w$ is defined as

$$P_w = P_g + h\,\vec{v}, \tag{8}$$

where $h$ is the hand-tuned user-defined parameter that specifies the height of the steps. The command $c_t$ in Equation (7) that comes from the BMI decoding system is finally used to modify this way-point position $P_w$ in real time by modifying its height $h$ (see Figure 2, middle). Let $\delta h$ denote a desired height control resolution; then the modified position of the way-point through the brain command $c_t$ becomes

$$\begin{aligned} P_w(1) &= P_g + h\,\vec{v}, \\ P_w(t+1) &= P_w(t) + c_t\,\delta h\,\vec{v}. \end{aligned} \tag{9}$$
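The way-point heuristic can be sketched in a few lines of Python. The sketch assumes that the default way-point is the goal position translated by the height h along the generalized vertical direction, and that each command shifts the way-point by one resolution step along that same direction. All function names and numerical values are hypothetical; the generalized vertical is obtained by removing from the vertical unit vector its component along the start-to-goal direction, choosing the sense that points away from gravity.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(s, a):
    return tuple(s * x for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(a):
    n = math.sqrt(dot(a, a))
    return scale(1.0 / n, a)


def generalized_vertical(p_start_proj, p_goal, gravity):
    """Unit vector normal to the start-to-goal direction, in the plane it spans with the vertical."""
    z = unit(scale(-1.0, gravity))       # conventional vertical, opposite to gravity
    u = unit(sub(p_goal, p_start_proj))  # direction from projected start to goal
    # Gram-Schmidt step; undefined if u is parallel to z (not handled here).
    return unit(sub(z, scale(dot(z, u), u)))


def default_waypoint(p_goal, v, h):
    """Assumed default: goal position translated by h along v."""
    return add(p_goal, scale(h, v))


def update_waypoint(p_w, v, c_t, delta_h):
    """Shift the way-point by c_t * delta_h along v, with c_t in {-1, 0, +1}."""
    return add(p_w, scale(c_t * delta_h, v))


# Hypothetical numbers: horizontal goal surface, step height 0.10 m, resolution 0.02 m.
v = generalized_vertical((0.0, 0.0, 0.2), (0.3, 0.0, 0.2), (0.0, 0.0, -9.81))
p_w = default_waypoint((0.3, 0.0, 0.2), v, h=0.10)
p_w_down = update_waypoint(p_w, v, c_t=-1, delta_h=0.02)
print(v, p_w, p_w_down)
```

With a horizontal goal surface, as in this toy example, the generalized vertical coincides with the conventional vertical, so the command only changes the z-coordinate of the way-point.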

The command $c_t$ could have been used in other ways; however, we identified two principles that should, in our view, hold in a BMI low-level control endeavor of humanoid motion such as ours:

• Principle 1: The full detailed motion, that cannot be designed joint-wise by the BMI user, should be autonomously planned and executed from high-level (task-level) command.

• Principle 2: The brain command can then be used to locally correct or bias the autonomously planned and executed motion, and help overcome shortcomings inherent to full autonomy.

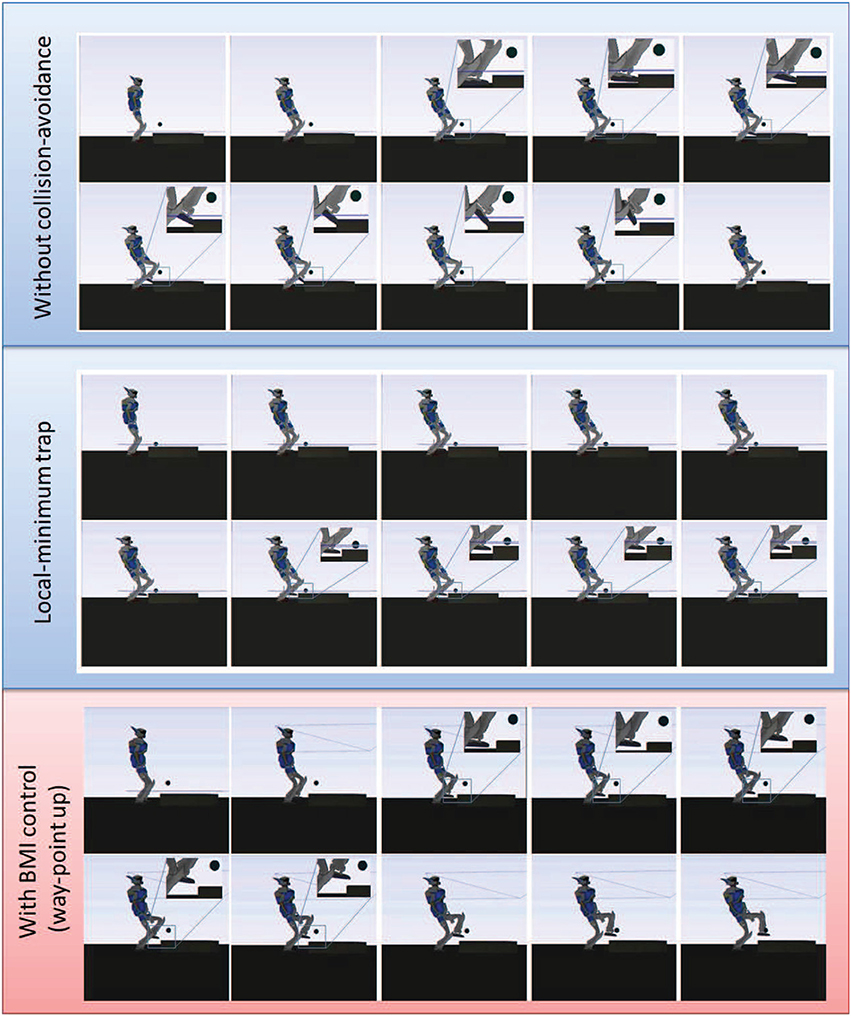

The way-point is a key feature to be controlled according to these two principles as it helps surmount the main limitation of the autonomous collision-avoidance constraint expressed in the on-line quadratic-program-formulated controller described in Section 3. This collision-avoidance constraint, that had to be formulated as a linear constraint in the joint acceleration vector of the robot in order to fit within the quadratic-program formulation [adapting to this end the velocity-damper formulation (Faverjon and Tournassoud, 1987)], acts as a repulsive field, with the tracked way-point acting as an attractive field, on the contact link. The resultant field (from the superposition of these two fields) can display local extrema corresponding to equilibrium situations in which the link stops moving though without having completed its tracking task (see Figure 9). Manual user intervention, here through the brain command, is then necessary to un-block the motion of the link by adequately moving the tracked way-point. The brain command is thus used here for low-level correction of a naturally limitation-affected full-autonomy strategy.
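The blocking phenomenon and its resolution by moving the way-point can be reproduced in a toy two-dimensional Python sketch. This is a deliberately simplified illustration and not the controller described here: it uses the velocity-level form of the velocity damper (the approach velocity toward the obstacle is bounded by ξ(d − d_s)/(d_i − d_s) once the distance d falls below the influence distance d_i), a point “foot,” and a single vertical wall standing for a stair riser. All names and numerical values are hypothetical.

```python
def damped_step(pos, waypoint, dt=0.01, gain=2.0,
                wall_x=0.20, wall_top=0.15, d_s=0.01, d_i=0.05, xi=0.5):
    """One Euler step of a point attracted to a way-point, with a velocity damper on a wall."""
    x, z = pos
    vx = gain * (waypoint[0] - x)  # attractive term toward the way-point
    vz = gain * (waypoint[1] - z)
    d = wall_x - x                 # horizontal distance to the riser
    if z < wall_top and d < d_i:
        # Velocity damper: bound the approach velocity; the bound vanishes at d = d_s.
        vx = min(vx, xi * (d - d_s) / (d_i - d_s))
    return (x + dt * vx, z + dt * vz)


def simulate(pos, waypoint, steps=2000):
    for _ in range(steps):
        pos = damped_step(pos, waypoint)
    return pos


# Way-point below the top of the riser: the point stalls at the security distance.
stuck = simulate((0.0, 0.0), waypoint=(0.30, 0.10))
# Way-point raised above the riser: the point clears the wall and reaches it.
freed = simulate(stuck, waypoint=(0.30, 0.25))
print(stuck, freed)
```

In the first run the attractive and repulsive terms cancel horizontally and the point stays idle in front of the wall; raising the way-point, which is what the brain command does in our approach, lets it escape that equilibrium.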

6. Proof-of-Concept Experiment

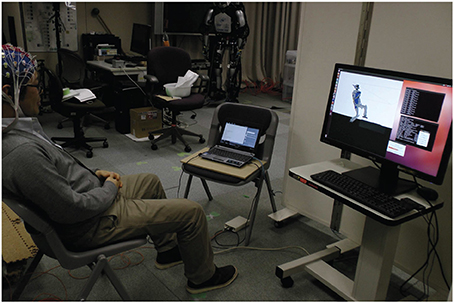

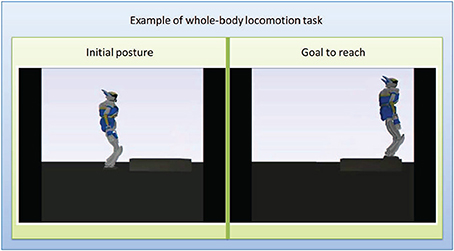

The experiment we designed (see Figure 3 and video that can be downloaded at http://www.cns.atr.jp/~xmorimo/videos/frontiers.wmv) to test the whole framework is described as follows.

Figure 3. Experiment setup. The user is wearing an EEG cap. The laptop on his left side is used for decoding the motor imagery task signal, the computer on his right runs the real-time physics simulation allowing him to control the position of the moving foot through the visual feedback he gets from the simulator window.

The initial and goal configurations (Figure 4) are pre-specified manually by the user among a finite number of locations in the environment. In this case the initial configuration is standing in front of a stair, and the goal task is to step up onto the stair. This selection is for now done manually, but it could later also be made through a brain command by embedding the strategy described in this work within a hierarchical framework such as the ones suggested in Chung et al. (2011); Bryan et al. (2011), which would switch between the behavior of selecting the high-level goal task and the low-level motion control.

Figure 4. Initial and goal positions for the experiment. Left: initial configuration with the robot standing in front of the stair. Right: goal configuration with the robot standing at the extremity up on the stair.

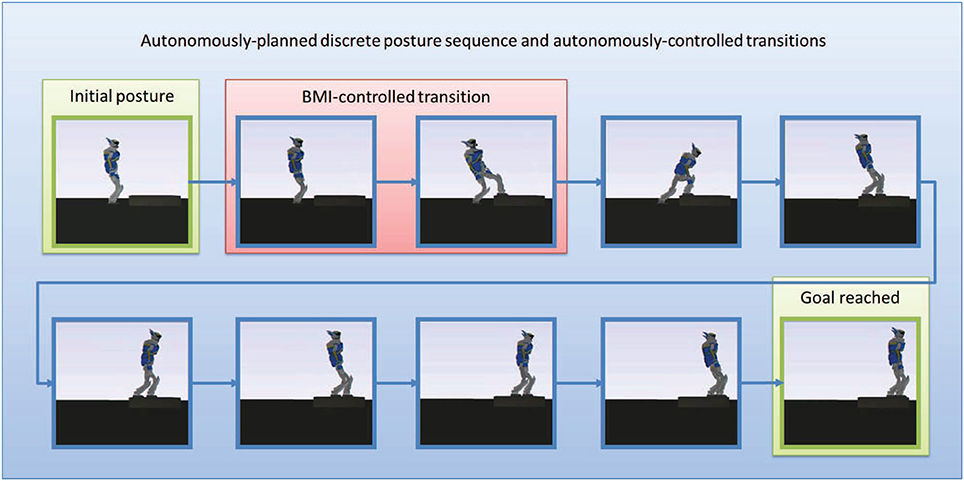

Off-line, the framework autonomously plans the sequence of contact transitions and associated intermediate static postures to reach that goal (Figure 5), then the on-line controller is executed.

Figure 5. The sequence of static postures planned autonomously. The first posture is the initial posture. The second posture, which looks like the first one, keeps both feet on the ground but puts all the weight of the robot on the right foot, so as to zero the contact forces on the left foot and release it for the next posture. The third posture moves the now free left foot and puts it on the stair, but still without any contact force applied on it (all the weight of the robot is still supported by the right foot). The fourth posture keeps both feet at their current locations but shifts all the weight of the robot away from the right foot to put it entirely on the left foot, so that the right foot becomes free of contact forces, and so on.

The user wears an EEG cap and goes through 3 training sessions of approximately 5 min each, from which the parameters of the classifier described in Section 4 are learned. The motor imagery task consists of imagining left-arm and right-arm circling movements for going up and down, respectively. This task is generic, and we retained it since it gave us better decoding performance in our experiment than some other tasks (e.g., leg movements). The user has visual feedback from the simulator on the desktop computer screen (on his right in Figure 3) and from a bar diagram representing in real time the decoded probability of the motor-imagery task classification on the laptop computer screen (on his left in Figure 3). The experiment was successfully completed on the first effective trial, which was the third trial overall (the first two trials were canceled after their respective training sessions, since we encountered and fixed some minor implementation bugs before starting the control phase). The subject had prior experience with the same motor-imagery classifier in our previously cited study (Noda et al., 2012). We only experimented with that one subject, as we considered that we had reached our aim of testing our framework and providing its proof-of-concept experiment.

The decoding of the BMI command is done in real-time and implemented in Matlab, and the brain command is then sent via UDP protocol to the physics simulator process implemented in C++.
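The inter-process link can be illustrated with a short Python sketch of a UDP sender and the matching decoding step. The wire format (one signed byte per command), the function names, and the address are assumptions made for illustration; the actual implementation uses Matlab on the sending side and C++ on the receiving side, and its message format is not specified here.

```python
import socket
import struct


def encode_command(c_t):
    """Pack a command in {-1, 0, +1} as one signed byte (assumed wire format)."""
    if c_t not in (-1, 0, 1):
        raise ValueError("command must be -1, 0, or +1")
    return struct.pack("b", c_t)


def decode_command(payload):
    """Inverse of encode_command, as the receiving process might apply it."""
    (c_t,) = struct.unpack("b", payload)
    return c_t


def send_command(sock, c_t, address):
    """Send one command datagram; UDP is connectionless, so no handshake is needed."""
    sock.sendto(encode_command(c_t), address)


if __name__ == "__main__":
    # Hypothetical address of the simulator process.
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_command(sender, 1, ("127.0.0.1", 9000))
    sender.close()
```

UDP suits this kind of periodic command stream because a lost datagram is simply superseded by the next one, at the cost of no delivery guarantee for any individual command.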

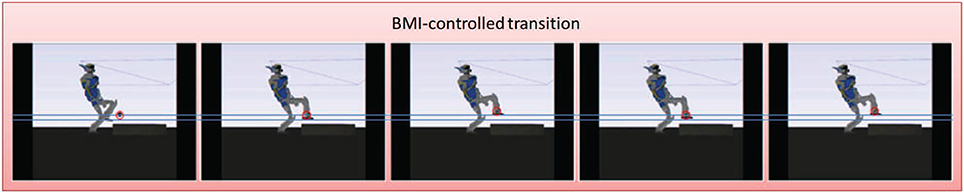

We tested the way-point control strategy in the second step of the motion (the first contact-adding step along the sequence, the highlighted transition in Figure 5). Figure 6 focuses on this controlled part of the motion. The user controlled the position of a black sphere that represents the position of the targeted way-point, which the foot of the robot tracks in real time while autonomously keeping balance and avoiding self-collisions, joint limits, and collisions with the environment. A total of 8 commands (“up”/“down”) were sent during this controlled transition phase, which we deliberately made last around 300 s (5 min) in order to allow the user to send several commands. We then externally (manually) triggered the FSM transition to the following step along the sequence and let the autonomous controller complete the motion without brain control. That autonomous part was completed in about 16 s. See the accompanying video.

Figure 6. The controlled motion. The figures represent successive snapshots from the real-time controlled motion in the physics simulator. The controlled position of the way-point appears in the simulator as a black sphere that we circle here in red for clarity. This position is tracked by the foot (more precisely, at the ankle joint) throughout the simulation. The two horizontal lines represent the level of the sole of the foot at the two positions sent as a command by the user through the BMI. These lines do not appear in the simulator; we add them here only as common visualization reference lines for all the snapshots. In the first two frames the robot tracks the default position of the way-point. In the third frame the user decides to move that position up, then down in the fourth frame, and finally up again in the fifth frame.

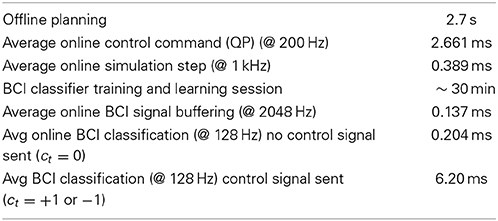

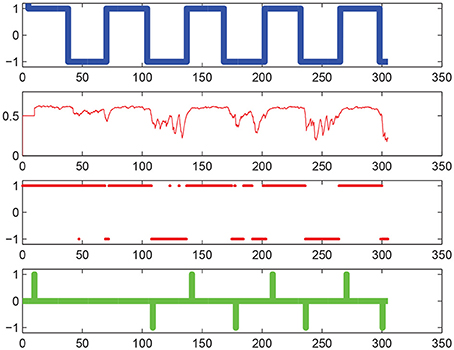

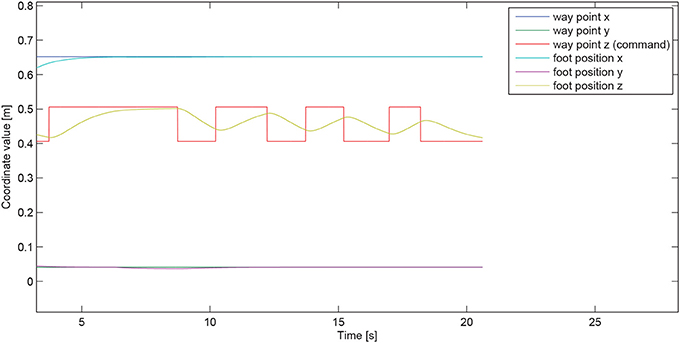

Figure 7 illustrates the decoding performances of the BMI system, while Figure 8 shows the tracking performance of the humanoid whole-body controller. The table below gives computation time figures executed on a Dell Precision T7600 Workstation equipped with a Xeon processor E5-2687W (3.1 GHz, 20 M). Full details on the physics simulator, including contact modeling and resolution, and collision detection, can be found in Chardonnet et al. (2006); Chardonnet (2012).

Figure 7. Motor imagery decoding performance. The horizontal axis is the iteration number. From top to bottom: the thick blue line represents the command cue given as an input to the user; the thin red line represents the decoded brain activity [the probability $P(k_t = +1 \mid C_t)$]; the thick red point markers represent the estimated classified label [$P(k_t = +1 \mid C_t) \geq 0.5$ or $< 0.5$]; finally, the thick green line represents the command $c_t$ sent to the robot (based on the threshold $P_\mathrm{thresh} = 0.6$). Note that this green command does not represent the position of the way-point but the instantaneous rate of change in this position between two successive time steps $t$ and $t + 1$, according to Equation (9), line 2 (i.e., the “derivative,” if we were dealing with a continuous and differentiable function rather than the time-discretized one at hand).

Figure 8. Way-point tracking performance. The user-controlled quantity, which in the particular case demonstrated here is the z-coordinate of the tracked way-point (the “generalized” vertical direction reduces in this case to the “conventional” vertical direction, i.e., the generalized vertical unit vector coincides with the vertical unit vector of Figure 2, since the goal-contact surface on the stair is horizontal), is represented by the piecewise-constant red curve. The corresponding motion of the foot, which tracks this command-induced way-point position, is shown by the yellow curve. The two other coordinates of the foot (x and y) are autonomously maintained by the controller at the corresponding ones of the way-point and stay at their desired values throughout the command phase.

Figure 9. Comparison of the controlled transition motion in three instances. Top: without collision-avoidance constraint, the foot of the robot collides with the stair while targeting its goal, and the simulation stops. Middle: with autonomous collision-avoidance constraint that happens to create in this case a local-minimum trap, the robot reaches an equilibrium situation and stays idle for as long as we let the simulation run (infinite time). Bottom: The autonomous collision-avoidance strategy combined with the proposed BMI-control approach helps reposition the way-point and overcome the local-minimum problem. The robot safely reaches the goal contact location and the motion along the sequence can be completed.

From this experiment, we confirmed that the autonomous framework can be coupled with the BMI decoding system in real-time in simulation and that the simulated robot can safely realize the task while receiving and executing the brain command.

7. Discussion and Future Work

This work demonstrated the technical possibility of real-time online low-level control of whole-body humanoid motion using motor-imagery-based BMI.

We achieved this by coupling an existing EEG decoder with an existing whole-body multi-contact acyclic planning and control framework. In particular, this coupling allowed us to control, in a discrete way, a one-dimensional feature of the high-DOF whole-body motion, designed as the generalized height of the moving link's way-point. Though the motor-imagery task used in our proof-of-concept experiment was a generic one (left-arm vs. right-arm circling movement), we plan in the future to investigate more specific motor-imagery tasks that are in tighter correspondence with the limb of the robot being controlled, in line with the longer-term user's-mind-into-robot's-body “full embodiment” quest that motivates our study, as expressed in our introductory section. Since previous studies reported that imagery of gait and actual gait execution recruit very similar cerebral networks (Miyai et al., 2001; La Fougère et al., 2010), we may expect that a human can control a humanoid through motor imagery the same way they control their own body.

We now also aim at continuous control of a two-dimensional feature of this whole-body motion, allowing control not only of the tracked way-point but also of a corresponding threshold plan that decides when to trigger the transition between the lift-off and touch-down phases. We believe this can be achieved by building on previous work, for example on motor-imagery two-dimensional cursor control (Wolpaw and McFarland, 2004). Other previous studies also discussed the possibility of using EEG for such continuous control (Yoshimura et al., 2012). In addition, continuous two-dimensional feature control may require explicit consideration of individual differences in cerebral recruitment during motor imagery (Meulen et al., 2014). In a future study, we may consider using transfer learning approaches (Samek et al., 2013) to cope with this individual-difference problem.

Finally, we aim at porting this framework from the simulation environment to real robot control, so that in a future study we may use the proposed framework in a rehabilitation training program to enhance recovery of the motor-related nervous system of stroke patients.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work is supported by a JSPS Postdoctoral Fellowship for Foreign Researchers, ID No. P12707. This study is the result of “Development of BMI Technologies for Clinical Application” carried out under the Strategic Research Program for Brain Sciences by the Ministry of Education, Culture, Sports, Science and Technology of Japan. Part of this research was supported by MIC-SCOPE and a contract with the Ministry of Internal Affairs and Communications entitled “Novel and innovative R&D making use of brain structures.” This research was also partially supported by MEXT KAKENHI grant Number 23120004, Strategic International Cooperative Program, Japan Science and Technology Agency (JST) and by JSPS and MIZS: Japan-Slovenia research Cooperative Program. Joris Vaillant and François Keith were partially supported by grants from the RoboHow.Cog FP7 www.robohow.eu, Contract N288533. The authors would like to thank Abderrahmane Kheddar for valuable use of the AMELIF dynamics simulation framework.

References

Bell, C. J., Shenoy, P., Chalodhorn, R., and Rao, R. P. N. (2008). Control of a humanoid robot by a noninvasive brain-computer interface in humans. J. Neural Eng. 5, 214–220. doi: 10.1088/1741-2560/5/2/012

Bouyarmane, K., and Kheddar, A. (2010). “Static multi-contact inverse problem for multiple humanoid robots and manipulated objects,” in 10th IEEE-RAS International Conference on Humanoid Robots (Nashville, TN), 8–13. doi: 10.1109/ICHR.2010.5686317

Bouyarmane, K., and Kheddar, A. (2011a). “Multi-contact stances planning for multiple agents,” in IEEE International Conference on Robotics and Automation (Shanghai), 5546–5353.

Bouyarmane, K., and Kheddar, A. (2011b). “Using a multi-objective controller to synthesize simulated humanoid robot motion with changing contact configurations,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (San Francisco, CA), 4414–4419.

Bouyarmane, K., and Kheddar, A. (2012). Humanoid robot locomotion and manipulation step planning. Adv. Robot. 26, 1099–1126. doi: 10.1080/01691864.2012.686345

Bryan, M., Green, J., Chung, M., Chang, L., Scherer, R., Smith, J., et al. (2011). “An adaptive brain-computer interface for humanoid robot control,” in 11th IEEE-RAS International Conference on Humanoid Robots (Bled), 199–204.

Chardonnet, J.-R. (2012). Interactive dynamic simulator for multibody systems. Int. J. Hum. Robot. 9, 1250021:1–1250021:24. doi: 10.1142/S0219843612500211

Chardonnet, J.-R., Miossec, S., Kheddar, A., Arisumi, H., Hirukawa, H., Pierrot, F., et al. (2006). “Dynamic simulator for humanoids using constraint-based method with static friction,” in Robotics and Biomimetics, 2006. ROBIO'06. IEEE International Conference on (Kunming), 1366–1371.

Chestnutt, J., Kuffner, J., Nishiwaki, K., and Kagami, S. (2003). “Planning biped navigation strategies in complex environments,” in IEEE-RAS International Conference on Humanoid Robots (Munich).

Chestnutt, J., Lau, M., Kuffner, J., Cheung, G., Hodgins, J., and Kanade, T. (2005). “Footstep planning for the ASIMO humanoid robot,” in IEEE International Conference on Robotics and Automation (Barcelona), 629–634.

Chung, M., Cheung, W., Scherer, R., and Rao, R. P. N. (2011). “A hierarchical architecture for adaptive brain-computer interfacing,” in Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence Vol. 2 (Barcelona), 1647–1652.

Faverjon, B., and Tournassoud, P. (1987). “Planning of manipulators with a high number of degrees of freedom,” in IEEE International Conference on Robotics and Automation (Raleigh, NC).

Finke, A., Knoblauch, A., Koesling, H., and Ritter, H. (2011). “A hybrid brain interface for a humanoid robot assistant,” in 33rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC2011) (Boston, MA).

Gergondet, P., Druon, S., Kheddar, A., Hintermuller, C., Guger, C., and Slater, M. (2011). “Using brain-computer interface to steer a humanoid robot,” in IEEE International Conference on Robotics and Biomimetics (Phuket), 192–197.

Hashimoto, K., Sugahara, Y., Kawase, M., Ohta, A., Tanaka, C., Hayashi, A., et al. (2006). “Landing pattern modification method with predictive attitude and compliance control to deal with uneven terrain,” in Proceedings of the IEEE-RSJ International Conference on Intelligent Robots and Systems (Beijing).

Hauser, K., Bretl, T., Latombe, J.-C., Harada, K., and Wilcox, B. (2008). Motion planning for legged robots on varied terrain. Int. J. Robot. Res. 27, 1325–1349. doi: 10.1177/0278364908098447

Herdt, A., Diedam, H., Wieber, P.-B., Dimitrov, D., Mombaur, K., and Diehl, M. (2010). Online walking motion generation with automatic footstep placement. Adv. Robot. 24, 719–737. doi: 10.1163/016918610X493552

Kuffner, J., Kagami, S., Nishiwaki, K., Inaba, M., and Inoue, H. (2002). Dynamically-stable motion planning for humanoid robots. Auton. Robots 12, 105–118. doi: 10.1023/A:1013219111657

Kuffner, J., Nishiwaki, K., Kagami, S., Inaba, M., and Inoue, H. (2001). “Footstep planning among obstacles for biped robots,” in IEEE/RSJ International Conference on Intelligent Robots and Systems - Vol. 1 (Maui, HI), 500–505.

La Fougère, C., Zwergal, A., Rominger, A., Förster, S., Fesl, G., Dieterich, M., et al. (2010). Real versus imagined locomotion: an intraindividual [18F]-FDG PET - fMRI comparison. Neuroimage 50, 1589–1598. doi: 10.1016/j.neuroimage.2009.12.060

Ma, J., Zhang, Y., Nam, Y., Cichocki, A., and Matsuno, F. (2013). “EOG/ERP hybrid human-machine interface for robot control,” in IEEE-RAS International Conference on Intelligent Robots and Systems (Tokyo), 859–864.

Meulen, M. V. D., Allali, G., Rieger, S., Assal, F., and Vuilleumier, P. (2014). The influence of individual motor imagery ability on cerebral recruitment during gait imagery. Hum. Brain Mapp. 35, 455–470. doi: 10.1002/hbm.22192

Miller, K. J., Schalk, G., Fetz, E. E., den Nijs, M., Ojemann, J., and Rao, R. (2010). Cortical activity during motor execution, motor imagery, and imagery-based online feedback. Proc. Natl. Acad. Sci. U.S.A. 107, 4430–4435. doi: 10.1073/pnas.0913697107

Miyai, I., Tanabe, H., Sase, I., Eda, H., Oda, I., Konishi, I., et al. (2001). Cortical mapping of gait in humans: a near-infrared spectroscopic topography study. Neuroimage 14, 1186–1192. doi: 10.1006/nimg.2001.0905

Morisawa, M., Kanehiro, F., Kaneko, K., Kajita, S., and Yokoi, K. (2011). “Reactive biped walking control for a collision of a swinging foot on uneven terrain,” in Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Bled).

Noda, T., Sugimoto, N., Furukawa, J., Sato, M., Hyon, S., and Morimoto, J. (2012). “Brain-controlled exoskeleton robot for BMI rehabilitation,” in 12th IEEE-RAS International Conference on Humanoid Robots (Osaka), 21–27.

Riechmann, H., Hachmeister, N., Ritter, H., and Finke, A. (2011). “Asynchronous, parallel on-line classification of P300 and ERD for an efficient hybrid BCI,” in 5th IEEE EMBS Conference on Neural Engineering (NER2011) (Cancun).

Samek, W., Meinecke, F. C., and Muller, K.-R. (2013). Transferring subspaces between subjects in brain-computer interfacing. IEEE Trans. Biomed. Eng. 60, 2289–2298. doi: 10.1109/TBME.2013.2253608

Takanishi, A., and Kato, I. (1994). “Development of a biped walking robot adapting to a horizontally uneven surface,” in Proceedings of the IEEE-RSJ International Conference on Intelligent Robots and Systems (Munich).

Tomioka, R., and Aihara, K. (2007). “Classifying matrices with a spectral regularization,” in 24th International Conference on Machine Learning (New York, NY), 895–902.

Tomioka, R., and Muller, K. (2010). A regularized discriminative framework for EEG analysis with application to brain-computer interface. Neuroimage 49, 415–432. doi: 10.1016/j.neuroimage.2009.07.045

Wolpaw, J. R., and McFarland, D. J. (2004). Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc. Natl. Acad. Sci. U.S.A. 101, 17849–17854. doi: 10.1073/pnas.0403504101

Yamane, K., Kuffner, J., and Hodgins, J. K. (2004). Synthesizing animations of human manipulation tasks. ACM Trans. Graph. 23, 532–539. doi: 10.1145/1015706.1015756

Yoshida, E., Kanoun, O., Esteves, C., and Laumond, J. P. (2006). “Task-driven support polygon reshaping for humanoids,” in 6th IEEE-RAS International Conference on Humanoid Robots (Genova), 208–213.

Yoshida, E., Laumond, J.-P., Esteves, C., Kanoun, O., Sakaguchi, T., and Yokoi, K. (2008). “Whole-body locomotion, manipulation and reaching for humanoids,” in Motion in Games, Volume 5277 of LNCS, eds A. Egges, A. Kamphuis, and M. Overmars (Berlin; Heidelberg: Springer), 210–221.

Keywords: humanoid whole-body control, brain-machine interfacing, motor imagery, motion planning, semi-autonomous humanoid, contact support planning

Citation: Bouyarmane K, Vaillant J, Sugimoto N, Keith F, Furukawa J and Morimoto J (2014) Brain-machine interfacing control of whole-body humanoid motion. Front. Syst. Neurosci. 8:138. doi: 10.3389/fnsys.2014.00138

Received: 28 May 2014; Accepted: 15 July 2014;

Published online: 05 August 2014.

Edited by:

Mikhail Lebedev, Duke University, USA

Reviewed by:

Randal A. Koene, Boston University, USA

M. Van Der Meulen, University of Luxembourg, Luxembourg

Copyright © 2014 Bouyarmane, Vaillant, Sugimoto, Keith, Furukawa and Morimoto. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Karim Bouyarmane, ATR Computational Neuroscience Laboratories, Department of BRI, 2-2-2 Hikaridai, Seika-cho Soraku-gun, Kyoto 619-0288, Japan e-mail: karim.bouyarmane@lirmm.fr
