- ¹Center for Lifespan Psychology, Max Planck Institute for Human Development, Berlin, Germany

- ²Department of Psychology, University of Florida, Gainesville, FL, USA

This article addresses four interrelated research questions: (1) Does experienced mood affect emotion perception in faces, and is this perception mood-congruent or mood-incongruent? (2) Are there age-group differences in the interplay between experienced mood and emotion perception? (3) Does emotion perception in faces change as a function of the temporal sequence of study sessions and stimulus presentation? (4) Does emotion perception in faces serve a mood-regulatory function? One hundred fifty-four adults from three age groups (younger: 20–31 years; middle-aged: 44–55 years; older: 70–81 years) were asked to provide multidimensional emotion ratings of a total of 1026 face pictures of younger, middle-aged, and older men and women, each picture displaying one of six prototypical (primary) emotional expressions. By analyzing the likelihood of ascribing an additional emotional expression to a face whose primary emotion had been correctly recognized, the multidimensional rating approach permits the study of emotion perception while controlling for emotion recognition. Following up on previous research on mood responses to recurring unpleasant situations using the same dataset (Voelkle et al., 2013), crossed random effects analyses supported a mood-congruent relationship between experienced mood and perceived emotions in faces. In particular, older adults were more likely to perceive happiness in faces when in a positive mood and less likely to do so when in a negative mood. This did not apply to younger adults. The temporal sequence of study sessions and stimulus presentation had a strong effect on the likelihood of ascribing an additional emotional expression. In contrast to previous findings, however, there was no evidence for either a change from mood-congruent to mood-incongruent responses over time or a mood-regulatory effect.

Introduction

How does the way we feel influence our perception of the world around us, and how does this perception in turn affect our own feelings? As innocuous as it may seem, this question constitutes one of the most fundamental research objectives in psychology, ranging from basic research on attention and perception (e.g., Becker and Leinenger, 2011; Hunter et al., 2011) to research in clinical psychology and psychiatry (e.g., Elliott et al., 2002; Eizenman et al., 2003; Rinck et al., 2003; Stuhrmann et al., 2013). For example, cognitive theories of anxiety and depression posit attentional and memory biases toward threatening and dysphoric stimuli in patients suffering from anxiety and depression, respectively, which in turn contribute to the maintenance or aggravation of the disorder (Clark et al., 1999; Beevers and Carver, 2003; Koster et al., 2010). In particular, the question of mood-congruent vs. mood-incongruent information processing, its determinants and consequences, has sparked much research. The present paper contributes to this literature by investigating the relationship between experienced mood and the perception of emotional expressions in faces, which has been shown to be important for individuals' social interactions (e.g., Baron-Cohen et al., 2000). Special emphasis is placed on age-related differences in mood-(in)congruent information processing, on the role of the temporal sequence of study sessions and stimulus presentation, and on the question of whether mood not only affects emotion perception but whether emotion perception may also serve a mood-regulatory function.

As outlined in the following, several theoretical models have been proposed to explain the partly conflicting empirical findings on mood-congruent vs. mood-incongruent information processing:

Mood-Congruent Information Processing

There is ample evidence that information is often processed in a mood-congruent manner. For example, people in a positive mood are more likely to recall positive memories (Bower, 1981, 1991; Mayer et al., 1995) and to report being more satisfied with their lives (Schwarz and Clore, 1983). Mood-congruency effects have also been observed for emotion perception in faces, although the pattern of findings is somewhat mixed. For example, Coupland et al. (2004) demonstrated that low positive affect (anhedonia) decreased the identification of happy expressions, while negative affect increased the identification of disgust. Contrary to expectations, however, negative affect was not related to increased anger identification. Furthermore, Suzuki et al. (2007) found partial support for a mood-congruency effect by observing a positive correlation between negative affect and recognition of sadness. However, this effect did not generalize to other negative emotions. In addition, an age-related decrease in sadness recognition was linked to an age-related decrease in negative affect. For similar findings on the relation between age-related decline in the perceived intensity of emotions in faces and age-related decreases in anxiety and depression, see Phillips and Allen (2004).

At a more general level, the mood-congruency effect has been explained in terms of Bower's (1981) associative network theory. This theory refers to the idea that emotions serve as memory units and that activation of such a unit not only “aids retrieval of events associated with it [but…] also primes emotional themata for use in free association, fantasies, and perceptual categorization” (Bower, 1981, p. 129). The effect has also been explained in terms of mood as information (Schwarz and Clore, 2003, p. 296), that is, the idea that mood may serve an informational function and may help in directing attention to possible sources of feelings (Wyer and Carlston, 1979; Schwarz and Clore, 1983, 2003). Although both approaches suggest mood-congruent information processing, the proposed mechanisms differ. According to the associative network theory, mood influences information processing indirectly by priming the encoding, retrieval, and use of information, for example by selectively attending to “activated” mood-congruent details in the environment, by selectively encoding information into a network of primed associations, or by selectively retrieving mood-congruent information. According to the mood-as-information account, mood influences information processing directly. By (implicitly) asking themselves “… how do I feel about this? [… people…] misread their current feelings as a response to the object of judgment, resulting in more favorable evaluations under positive rather than negative moods, unless their informational value is discredited” (Schwarz and Clore, 2003, p. 299).

In an attempt to integrate these seemingly contradictory explanations, Forgas (1995) proposed the affect infusion model (AIM), which specifies the more general preconditions for mood-congruency effects in judgmental processes. According to the AIM, affect priming, in the sense of Bower's (1981) associative network theory, is most likely to occur during substantive processing, that is, in situations with complex, atypical, and/or personally relevant targets. For example, when in a happy mood, people evaluate others more favorably than when in a sad mood, in particular when judging unusual, atypical persons (Forgas, 1992).

In contrast, mood-as-information (Schwarz and Clore, 1983) is the major affect infusion mechanism during heuristic processing, that is, in situations involving typical targets of low personal relevance and/or in situations with limited processing capacity (e.g., due to time pressure or information overload; Forgas, 1995).

Mood-Incongruent Information Processing as a Mood-Regulatory Function

In addition to the various findings on mood-congruency effects, a number of studies suggest that information may also be processed in a mood-incongruent manner (Morris and Reilly, 1987; Matt et al., 1992; Erber and Erber, 1994; Sedikides, 1994; Forgas and Ciarrochi, 2002; Isaacowitz et al., 2008, 2009b). For example, in two recent articles, Isaacowitz and colleagues (Isaacowitz et al., 2008, 2009b) showed that older adults gazed toward positively valenced facial stimuli when in a bad mood. In contrast, younger adults showed a mood-congruency effect: they were more likely to look at positively valenced faces when in a good mood and more likely to look at negatively valenced faces when in a bad mood. Based on the observed age-differential relationship between mood and gaze pattern, Isaacowitz et al. (2008) concluded that “in older adults, gaze does not reflect mood, but rather is used to regulate it” (p. 848). This interpretation is in line with socioemotional selectivity theory, which postulates that older adults, because of a shrinking time horizon, shift their motivational priorities toward emotion regulation (Carstensen et al., 1999; Carstensen, 2006). Furthermore, given that most studies on mood-(in)congruent information processing used college-student populations, this finding cautions against generalizing to other populations and underscores the importance of studying different age groups.

Building upon the “first congruency, then incongruency” hypothesis postulated by Sedikides (1994, p. 163), Forgas and Ciarrochi (2002) showed in a series of three experiments that, after an initial mood-congruency effect, people in a sad mood were more likely to generate positive person descriptions, positive personality trait adjectives, and positive self-descriptions. These findings were interpreted in terms of a spontaneous, homeostatic mood-management mechanism “that limit[s] affect congruence and thus allow[s] people to control and calibrate their mood states by selectively accessing more affect-incongruent responses over time” (Forgas and Ciarrochi, 2002, p. 337). Thus, the temporal sequence of information processing seems to play a crucial role in the interplay between experienced mood and information processing. This may also apply to the processing of emotional expressions in faces as investigated in the present study.

What the studies by Isaacowitz and colleagues and by Forgas and colleagues have in common is that differences between mood-congruent and mood-incongruent information processing are explained in terms of mood regulation. That is, by focusing on stimuli of a certain valence, people attempt to manage their mood (e.g., they up-regulate their mood when previously in a bad mood). In contrast to work on mood-congruent attentional and memory biases for negatively valenced material, the mechanisms underlying a mood-incongruent bias toward positively valenced stimuli have been less clearly spelled out. However, given the functional relevance of mood-congruent information processing for dysphoria and depression (e.g., Clark et al., 1999; Beevers and Carver, 2003; Koster et al., 2010), it seems reasonable to assume that focusing on positively valenced stimuli when in a bad mood may help to counteract this effect. The underlying mechanism may be either a rather spontaneous, homeostatic mood management (Forgas and Ciarrochi, 2002) or active mood regulation.

While Isaacowitz and colleagues explained the mood-congruency vs. mood-incongruency effect in terms of age (“mood-congruent gaze in younger adults, positive gaze in older adults”, Isaacowitz et al., 2008, p. 848), Forgas and Ciarrochi explained it in terms of elapsed processing time (“initially mood-congruent responses tend to be automatically corrected and reversed over time,” Forgas and Ciarrochi, 2002, p. 344; see also Sedikides, 1994). Such mood-incongruent information processing is also in line with the AIM, which postulates that mood-congruency effects will be eliminated, or reversed, if a person is influenced by a strong motivational component, such as the goal to improve one's mood when in a bad mood (i.e., motivated processing; Erber and Erber, 1994; Forgas, 1995). In the absence of a strong motivational component, the AIM proposes the direct access strategy as another low affect infusion strategy, that is, an information processing strategy that is unlikely to result in a mood-congruency effect. “Direct access processing is most likely when the target is well known or familiar and has highly prototypical features that cue an already-stored and available judgment, the judge is not personally involved, and there are no strong cognitive, affective, motivational, or situational forces mandating more elaborate processing” (Forgas, 1995, p. 46). We return to this strategy in the Discussion. For a more detailed description of the AIM and its four alternative processing strategies, related to low affect infusion (motivated processing and the direct access strategy) and high affect infusion (heuristic and substantive processing), we refer the reader to Forgas (1995).

To summarize, current research has provided ample support for mood-congruent, but also for mood-incongruent, information processing. The AIM provides a general framework that predicts the degree of mood-(in)congruency in information processing (Forgas, 1995). According to this model, mood-congruency effects are most likely under substantive or heuristic processing, in line with Bower's (1981) associative network theory and Schwarz and Clore's (1983) theory of mood-as-information, respectively. In contrast, mood congruency is least likely under motivated processing or the direct access strategy. Especially when in a bad mood, people may be motivated to change this state. As suggested by Isaacowitz et al. (2008), this motivation may be particularly strong in older adults. Furthermore, Forgas and Ciarrochi (2002) observed a shift from mood-congruent to mood-incongruent information processing, pointing to the role of homeostatic cognitive strategies in affect regulation. However, when mood-incongruent information processing is explained in terms of mood regulation, a crucial and often untested question is how effective it actually is in changing people's mood. In a recent eye-tracking study, Isaacowitz et al. (2009a,b) found that older adults with good cognitive functioning showed less mood decline throughout the study when gazing toward positively valenced faces. This provides initial support for the notion that, in some people, mood-incongruent information processing may serve a mood-regulatory function.

Overall, the research reviewed so far indicates that the effects of experienced mood on information processing are by no means simple but are influenced by multiple factors. With few exceptions (e.g., Mayer et al., 1995), most studies on mood-(in)congruent information processing involved active mood induction. Little is known about the extent to which these findings generalize to naturally occurring mood. Moreover, in real-life situations the various mechanisms underlying mood-(in)congruent information processing may operate simultaneously and are likely to influence each other. For example, being in a moderately gloomy mood may prime the associative network toward the perception of negatively valenced features in the environment. These features, in turn, may be perceived as more negative because of one's gloomy mood (mood-as-information). At the same time, the gloomy mood may increase the motivation to improve one's mood by selectively attending to positively valenced features in the environment, possibly eliminating or even reversing a mood-congruency effect. At present, it is unclear which of these processes will prevail under the less extreme conditions of natural, rather than experimentally induced, mood.

This Study

The purpose of the present study was to link naturally occurring fluctuations in mood to the perception of emotions in faces in order to provide new insights into mood-congruent vs. mood-incongruent information processing. To this end, we (1) examined whether natural mood affected emotion perception in faces and to what extent this perception was mood-congruent or mood-incongruent. We (2) tested for age-group differences in the interplay between emotion perception and experienced mood, and (3) investigated the role of temporal sequence in emotion processing. Finally, we (4) examined the extent to which emotion perception in faces may serve a mood-regulatory function.

Based on previous findings reviewed above, and independent of age, we expected mood-congruent processing of emotional expressions in faces—operationalized as a higher likelihood of perceiving a positively valenced emotional expression when in a good mood, and a negatively valenced emotional expression when in a bad mood (Hypothesis 1). In line with prior research, but competing with Hypothesis 1, we expected older adults to have a higher likelihood of perceiving positive emotions in facial expressions when in a bad mood (Hypothesis 2). In addition, we expected a shift from mood-congruent to mood-incongruent information processing as a function of processing time (Hypothesis 3). Based on prior research, we expected a positive relationship between the likelihood of perceiving positively valenced emotions in faces and subsequent improvements in mood (Hypothesis 4), supporting the notion of a mood-regulatory function of emotion perception.

To test these hypotheses, we asked younger, middle-aged, and older adults to indicate the amount of happiness, sadness, fear, disgust, anger, and neutrality they perceived in photographs of faces displaying prototypical happy, sad, fearful, disgusted, angry, or neutral facial expressions. Importantly, in this multidimensional rating approach, participants rated all six emotions for each prototypical facial expression. For example, although a face may have been correctly recognized as displaying anger (prototypical primary expression), participants could indicate that they also perceived some sadness, or any of the other emotions, in the same face. In terms of the AIM, recognizing a prototypical facial expression is likely the result of either a direct access or a motivated processing strategy and is thus unlikely to infuse affect (Forgas, 1995). In addition, individuals differ in their ability to recognize prototypical facial expressions, with age as an important predictor of these differences (see Ruffman et al., 2008, for an overview)¹. For these reasons, we were not interested in emotion recognition but in the likelihood of ascribing an additional emotional expression to a face whose primary emotion had already been correctly recognized. This procedure controls² for differences in emotion recognition and maximizes the likelihood of affect infusion (i.e., a mood-congruency or mood-incongruency effect). Throughout the remainder of this paper, the term emotion perception thus refers to the perception of additional emotional expressions in a face (other than the primary expression) once its primary emotional expression has been correctly recognized (see Methods section for details).

The present article follows up on previous work with the same dataset in which we investigated anticipatory and reactive mood changes throughout the course of the study (Voelkle et al., 2013), age-of-poser and age-of-rater effects on multidimensional emotion perception (Riediger et al., 2011), and accuracy and bias in age estimation (Voelkle et al., 2012). Until now, however, we have not linked participants' mood to the perception of emotions in others' faces, which is the primary aim of the present study.

Methods

Participants

One hundred fifty-four adults of three different age groups (n = 52 younger adults: 20–31 years; n = 51 middle-aged adults: 44–55 years; n = 51 older adults: 70–81 years) participated in the study. All of the 76 women and 78 men were Caucasian and German-speaking. Self-reported physical functioning was good, and visual-processing speed, as assessed by Wechsler's (1981) digit symbol substitution test, was comparable to typical performance levels in these age groups. For details about the demographic composition of the sample, see Ebner et al. (2010).

Procedure

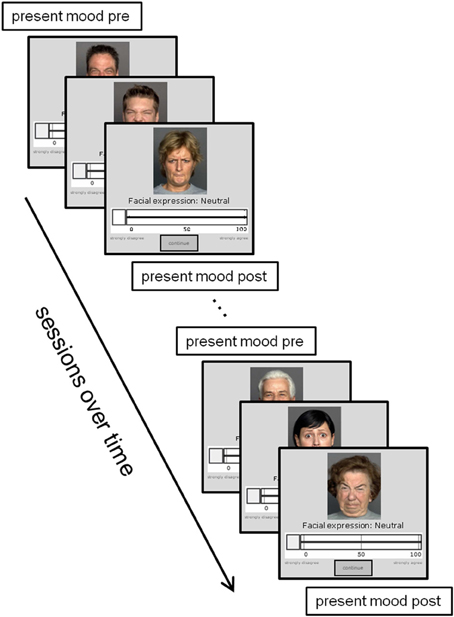

The study was approved by the MPI ethics review board. Figure 1 illustrates the study procedure. After giving informed consent, participants were randomly assigned to one of two parallel sets of face pictures, which were presented one at a time on a 19-inch monitor. For each picture, participants indicated at their own pace the degree of happiness, sadness, fear, disgust, anger, and neutrality they perceived in the face on a scale from 0 (does not apply at all) to 100 (applies completely). After these continuous ratings, participants indicated which of the six emotions was primarily displayed in the face (categorical rating). This was followed by additional face-specific questions that are not relevant for the present paper. Because of the large number of face stimuli, participants completed the ratings in several sessions. Each session lasted 100 min, and there was only one session per day. Although it took the slowest participant up to 24 sessions to complete all ratings, we restricted our analyses to the first 10 sessions to maintain comparability with previous research and to avoid increasingly sparse data. Participants' mood was assessed at the beginning and at the end of each session.

Figure 1. Illustration of the study procedure. Present mood was assessed at the beginning and the end of each session. During each session, participants rated the emotional expression of different face pictures by adjusting a slider. In addition, the primary emotional expression (happiness, sadness, anger, disgust, fear, or neutrality) was assessed via categorical rating.

Measures

Positive and negative mood were assessed using Hampel's (1977) Adjective Scales to Assess Mood. For positive mood, participants indicated on a scale from 1 to 5 the extent to which they currently felt happy, cheerful, elated, in high spirits, relaxed, mellow, and exuberant. Negative mood was assessed using the seven items insecure, sorrowful, disappointed, hopeless, melancholic, downhearted, and helpless. At the first measurement occasion, corrected item-scale correlations ranged between 0.51 and 0.79 for positive mood (Cronbach's α = 0.89) and between 0.41 and 0.63 for negative mood (Cronbach's α = 0.81; see Voelkle et al., 2013).

Stimuli

Face photographs of 58 younger (19–31 years), 56 middle-aged (39–55 years), and 57 older (69–80 years) adults were used as stimulus material. The photographs were taken from the FACES Lifespan Database of Facial Expressions (Ebner et al., 2010), which contains a total of 2052 pictures in two parallel sets, showing each of the 171 target persons with prototypical happy, angry, sad, disgusted, fearful, and neutral facial expressions (i.e., the pictures were taken at the peak of the emotional expression, following a standardized production and selection procedure in line with the Affect Program Theory of facial expressions by Ekman, 1993). In line with the race and ethnicity of study participants, and to reduce design complexity (e.g., by avoiding possible race-biased in-group vs. out-group effects; cf. Meissner and Brigham, 2001), all face models were Caucasian. For details on the construction of the FACES database, see Ebner et al. (2010).

Analysis

For each of the 1026 photographs (171 individuals × six emotions), N = 154 participants were asked to provide continuous ratings on six different emotions, resulting in a theoretical maximum of 1026 × 6 × 154 = 948,024 ratings (plus the categorical emotion ratings and other ratings mentioned above). Because the face pictures displayed maximally prototypical emotional expressions, the average recognition rate of the primary emotional expression was fairly high, at about 80%³. As noted before, we were not interested in emotion recognition but in the likelihood of ascribing an additional emotional expression to a face whose primary emotion had already been correctly recognized. Therefore, we controlled for interindividual differences in the accuracy of emotion recognition by including only ratings of those stimuli whose primary emotional expression had been correctly identified (as indicated by the categorical ratings). Furthermore, we decided to analyze the likelihood with which an additional emotional expression was perceived in a face rather than the intensity of this perception. There were two reasons for this decision. First, the ratings of the additional emotions attributed to a face followed a highly right-skewed distribution. Despite correctly recognizing the primary facial expressions, some individuals rated additional emotions at 100 (highest intensity of emotion expression) on the scale from 0 to 100. In addition, some people seemed to have used the middle of the rating scale as a reference point for some of their ratings. As a consequence, we could not find a meaningful transformation (such as a log transform) that would have resulted in homoscedastic and normally distributed residuals. Second, in about 74% of the ratings, no additional emotion rating above zero was provided, resulting in a preponderance of zeros.
Although models have been developed for data that combine a zero-inflated part with a continuous or count part (Hall, 2000; Olsen and Schafer, 2001), we are not aware of any readily available integration with the crossed random effects analyses employed in the present paper. Future research in this direction would be desirable.
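The trial selection and binary recoding described above can be sketched in Python as follows. This is a hypothetical illustration: the record layout (`Trial`, `posed`, `chosen`, `ratings`) is invented here, and treating any slider value above 0 as "perceived" is our reading of the binary outcome rather than a documented rule.

```python
from dataclasses import dataclass, field

EMOTIONS = ("happiness", "sadness", "fear", "disgust", "anger", "neutrality")


@dataclass
class Trial:
    """One face rated by one participant (hypothetical record layout)."""
    posed: str   # prototypical (primary) expression displayed by the face
    chosen: str  # emotion the participant picked in the categorical rating
    ratings: dict = field(default_factory=dict)  # emotion -> slider value 0-100


def additional_emotion_outcomes(trials):
    """Binary outcomes for non-primary emotions, correctly recognized faces only."""
    rows = []
    for trial in trials:
        if trial.chosen != trial.posed:
            continue  # misrecognized face: excluded to control for recognition accuracy
        for emotion in EMOTIONS:
            if emotion == trial.posed:
                continue  # the primary emotion is not an "additional" emotion
            # Assumption: any rating above 0 counts as perceiving the emotion.
            rows.append((emotion, int(trial.ratings.get(emotion, 0) > 0)))
    return rows


def share_of_zeros(rows):
    """Proportion of retained ratings in which the additional emotion was not perceived."""
    return sum(1 for _, perceived in rows if perceived == 0) / len(rows)


trials = [
    Trial("anger", "anger", {"anger": 90, "sadness": 20}),
    Trial("happiness", "sadness", {"happiness": 40, "anger": 50}),  # misrecognized
]
rows = additional_emotion_outcomes(trials)
print(rows, share_of_zeros(rows))
```

Only the first toy trial survives the recognition filter, and it contributes five binary outcomes, one per non-primary emotion.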

Differences in the likelihood of perceiving an emotion in addition to the primary emotion were modeled as a function of the type of emotion (happiness, sadness, fear, disgust, anger, neutrality; dummy coded with neutrality as baseline), mood at the beginning of the session (group-mean centered at the average mood level prior to each session), study session (1–10; grand-mean centered at 5.5), age group of perceiver (younger, middle-aged, older; effect coded), stimulus number (i.e., the relative position of a face photograph in the sequence of photographs presented to an individual participant, centered at the average number of stimuli rated by each individual in each session), and interactions between these factors⁴. Faces and participants were treated as two freely estimated crossed random effects in a generalized linear mixed-effects model with a logit link function, using the lme4 package, version 1.0.4 (Bates et al., 2013; see also Pinheiro and Bates, 2000), in R version 2.15.2 (R Core Team, 2012).
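The predictor coding just described can be made concrete with a small Python sketch that builds the fixed-effect columns for one rating. Several details are assumptions rather than statements from the text: the middle-aged group is taken to be the group coded −1 on both effect-coded columns (coefficients are reported for younger and older adults), the quadratic session term is the square of the centered session (a quadratic session effect is reported in the Results), and mood is passed in already centered. `design_row` is a hypothetical helper. In lme4 notation, crossed random intercepts for participants and faces would typically be written `(1 | participant) + (1 | face)` inside a `glmer(..., family = binomial)` call; whether the original model also included random slopes is not stated here.

```python
EMOTION_DUMMIES = ("happiness", "sadness", "fear", "disgust", "anger")  # neutrality = baseline

# Assumption: middle-aged adults are coded -1 on both effect-coded age columns.
AGE_EFFECT_CODES = {"younger": (1, 0), "middle-aged": (-1, -1), "older": (0, 1)}


def design_row(emotion, age_group, session, stimulus_pos, mean_stimulus_pos, mood_centered):
    """Hypothetical fixed-effect predictors for one binary rating outcome."""
    row = {f"emo_{e}": int(emotion == e) for e in EMOTION_DUMMIES}
    row["age_younger"], row["age_older"] = AGE_EFFECT_CODES[age_group]
    s = session - 5.5                  # grand-mean centering of sessions 1-10
    row["session"] = s
    row["session_sq"] = s * s          # assumed quadratic session trend
    row["stimulus"] = stimulus_pos - mean_stimulus_pos  # centered position in session
    row["mood"] = mood_centered        # assumed to be centered beforehand
    # Interactions are products of columns, e.g. mood x happiness:
    row["mood_x_happiness"] = mood_centered * row["emo_happiness"]
    return row


print(design_row("happiness", "older", session=1, stimulus_pos=12,
                 mean_stimulus_pos=20.0, mood_centered=0.5))
```

A neutral face rated by a middle-aged participant has all emotion dummies at 0 and both age codes at −1, which is why the intercept corresponds to the neutral baseline at the unweighted average across age groups.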

The possible mood-regulatory function of emotion perception in faces was investigated by predicting pre- to post-session changes in mood from how often a participant indicated perceiving a certain emotional expression relative to the total number of ratings of this participant (i.e., the individual percentage of emotion perception in each session). In previous work, we demonstrated that the pre- to post-session changes in mood decline significantly across the course of the entire study and that this change-in-change is almost perfectly captured by a logistic growth curve model (Voelkle et al., 2013). To control for this general trend, we used the same model as in Voelkle et al. (2013) and added the interaction between age group and percentage of perceived emotional expression as time-varying covariates with time-varying effects⁵. To control for overall trends in emotion perception, session-mean-centered scores were used.
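The session-level covariate can be sketched in Python as follows. Reading "session-mean centered" as subtracting, within each session, the mean percentage across participants is our assumption, the function names are hypothetical, and the logistic growth curve model from Voelkle et al. (2013) into which these covariates enter is not reproduced here.

```python
from collections import defaultdict
from statistics import mean


def session_percentages(rows):
    """rows: (participant, session, perceived) tuples for one emotion.

    Returns {(participant, session): % of that participant's ratings in that
    session in which the emotion was perceived}.
    """
    counts = defaultdict(lambda: [0, 0])  # [times perceived, number of ratings]
    for participant, session, perceived in rows:
        counts[(participant, session)][0] += perceived
        counts[(participant, session)][1] += 1
    return {key: 100 * hits / n for key, (hits, n) in counts.items()}


def session_mean_center(percentages):
    """Subtract, within each session, the mean percentage across participants."""
    by_session = defaultdict(list)
    for (_, session), pct in percentages.items():
        by_session[session].append(pct)
    session_means = {s: mean(v) for s, v in by_session.items()}
    return {(p, s): pct - session_means[s] for (p, s), pct in percentages.items()}


rows = [("p1", 1, 1), ("p1", 1, 0), ("p2", 1, 0), ("p2", 1, 0), ("p1", 2, 1)]
print(session_mean_center(session_percentages(rows)))
```

Centering within sessions removes any study-wide drift in how often emotions are perceived, so the covariate reflects whether a participant perceived an emotion more or less often than others did in the same session.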

Results

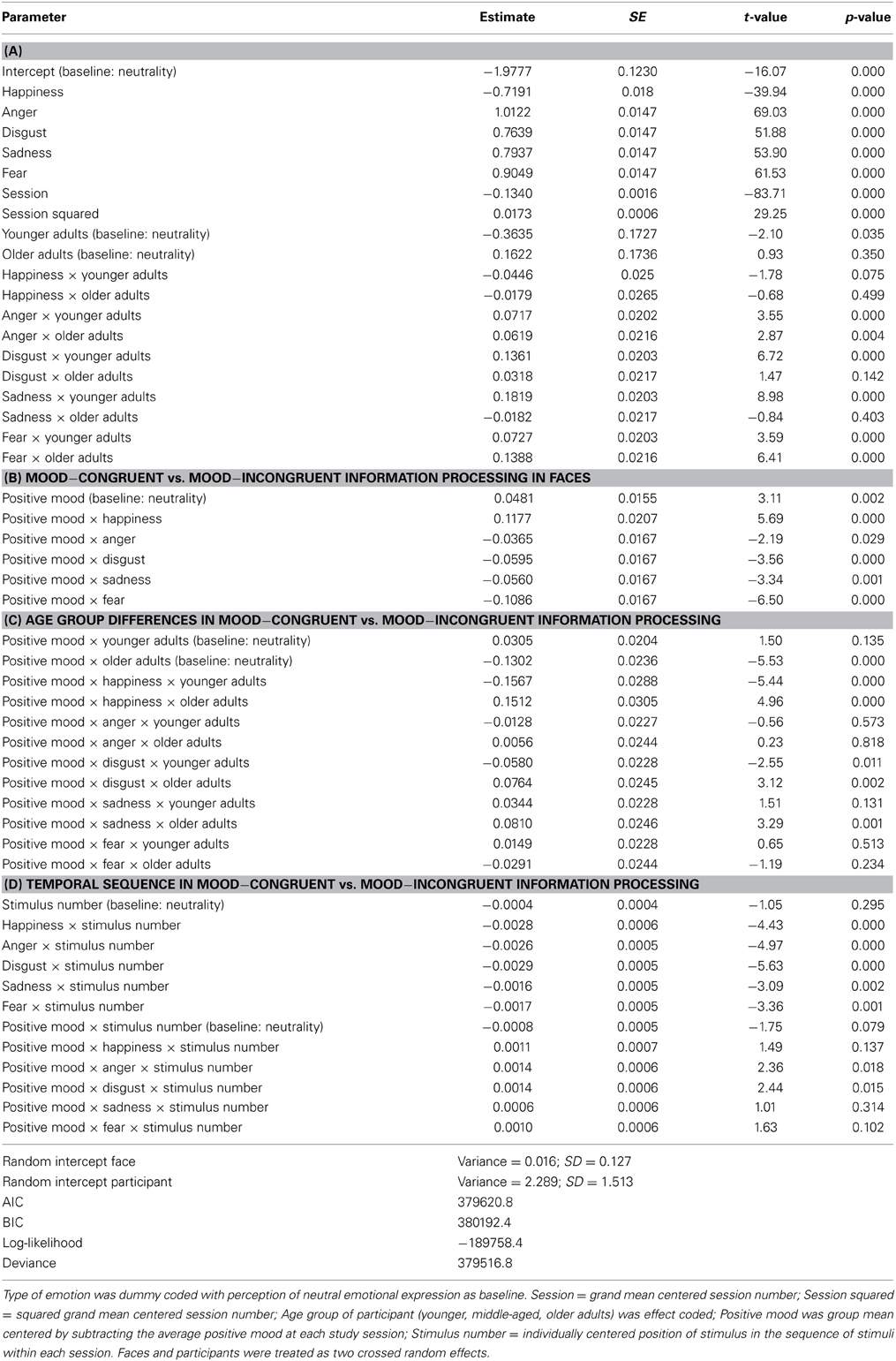

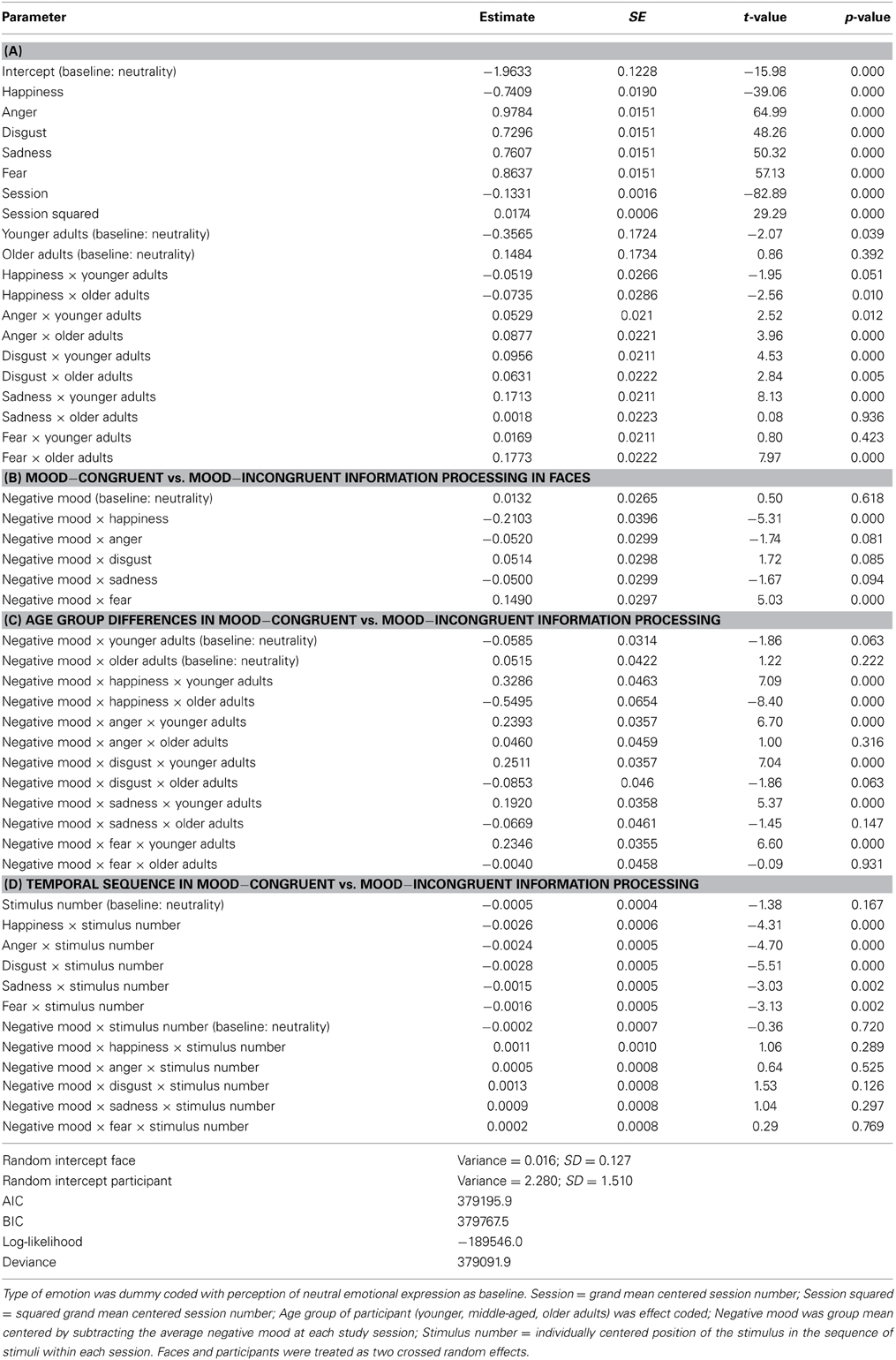

The Results section is organized as follows. Table 1 contains all parameter estimates of a single crossed random effects model as described above. In the following, we report results on the relationship between positive mood and emotion perception in faces; the corresponding results for negative mood are presented in Table 2. We begin by discussing differences between displayed emotions, changes in emotion perception across study sessions, and age-group differences in the perception of different emotions (Tables 1, 2, Part A). Next, we turn to the question of mood-congruent vs. mood-incongruent processing of faces by investigating the effect of present mood on the perception of different emotions in faces (Hypothesis 1; Tables 1, 2, Part B), as well as age-group differences in mood-congruent vs. mood-incongruent information processing (Hypothesis 2; Tables 1, 2, Part C). We then focus on the role of the temporal sequence in emotion processing by analyzing the effect of stimulus position on emotion perception across the six facial expressions, along with a short discussion of possible three-way interactions of mood × stimulus position × emotion (Hypothesis 3; Tables 1, 2, Part D). Finally, we investigate the effectiveness of emotion perception in faces as a mood-regulatory function (Hypothesis 4; Figure 4).

Table 1. Results of a crossed random effects analysis predicting the likelihood of perceiving an additional (i.e., non-primary) emotional expression in a face by type of emotion, positive mood, session number, stimulus number, and age group.

Table 2. Results of a crossed random effects analysis predicting the likelihood of perceiving an additional (i.e., non-primary) emotional expression in a face by type of emotion, negative mood, session number, stimulus number, and age group.

After the primary emotional expression had been recognized, the average probability of perceiving an additional neutral expression in a randomly selected face picture, presented after half of the stimuli had been rated within a given study session and after half of the sessions had been completed, was $100 \cdot \frac{1}{1 + e^{1.978}} = 12.16\%$ for a participant with an average within-session positive mood. However, there were large differences between the types of emotions: While the probability of perceiving an additional happy expression was significantly lower, $100 \cdot \frac{1}{1 + e^{1.978 + 0.719}} = 6.31\%$ (logit = −0.719; odds ratio = 0.487; p < 0.001), the probability of perceiving an additional angry expression was significantly higher (27.58%; logit = 1.012; odds ratio = 2.751; p < 0.001). The other emotions fell somewhere in between. Likewise, the likelihood of reporting an additional (neutral) emotional expression changed as a function of the number of sessions, with a significantly higher likelihood at the beginning of the study (20.82%) than after 10 sessions (6.79%). Note that the decline was not linear but leveled off toward the end of the study (i.e., a positive quadratic effect; $\text{logit}_{\text{Linear}} = -0.1340$, odds ratio = 0.875, p < 0.001; $\text{logit}_{\text{Quadratic}} = 0.017$, odds ratio = 1.017, p < 0.001). In contrast to the impact of session and type of emotion, age-group differences in the perception of different emotions were smaller and somewhat mixed. Compared with the average across age groups (age groups are effect coded⁶), the likelihood of perceiving anger was higher for both younger ($\text{logit}_{\text{Younger}} = 0.072$, odds ratio = 1.07, p < 0.001) and older ($\text{logit}_{\text{Older}} = 0.062$, odds ratio = 1.06, p < 0.001) adults. The same applied to fear ($\text{logit}_{\text{Younger}} = 0.073$, odds ratio = 1.08, p < 0.001; $\text{logit}_{\text{Older}} = 0.139$, odds ratio = 1.15, p < 0.001). In addition, the likelihood of reporting disgust and sadness was significantly higher in younger adults.
However, with an odds ratio of $e^{0.182} = 1.20$, even the largest effect was rather small. See Table 1, Part A, for details.
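Each percentage above is the inverse logit of the intercept plus the relevant emotion coefficient, and each odds ratio is the exponentiated coefficient. The short Python check below uses only the coefficients quoted in this paragraph, with all other (centered) predictors held at zero; it is a verification sketch, not the authors' code.

```python
import math


def percent_from_logit(logit):
    """Inverse logit, expressed as a percentage."""
    return 100 / (1 + math.exp(-logit))


INTERCEPT = -1.978  # neutral baseline at average mood, mid-session, mid-study
HAPPY = -0.719      # happiness vs. neutrality
ANGER = 1.012       # anger vs. neutrality

p_neutral = percent_from_logit(INTERCEPT)
p_happy = percent_from_logit(INTERCEPT + HAPPY)
p_anger = percent_from_logit(INTERCEPT + ANGER)
or_happy, or_anger = math.exp(HAPPY), math.exp(ANGER)

print(f"{p_neutral:.2f} {p_happy:.2f} {p_anger:.2f} {or_happy:.3f} {or_anger:.3f}")
```

Recomputed values can differ from the reported ones in the second decimal (e.g., about 12.15% rather than 12.16% for the neutral baseline), which is what rounding the published coefficients to three decimals would produce.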

Mood-Congruent vs. Mood-Incongruent Information Processing in Faces

Turning to Hypothesis 1, and putting age aside for the moment, there was clear evidence for a mood-congruency effect in emotion perception: The more positive participants' mood, the more likely they were to perceive happiness in the presented faces. For example, someone in a maximally positive mood would have a probability of $100 \cdot \frac{1}{1 + e^{1.978 + 0.719 - 0.048 \cdot 2 - 0.118 \cdot 2}} = 8.58\%$ of perceiving an additional happy expression, but only a probability of $100 \cdot \frac{1}{1 + e^{1.978 + 0.719 - 0.048 \cdot (-2) - 0.118 \cdot (-2)}} = 4.61\%$ if scoring at the lower end of the mood scale (logit = 0.118; odds ratio = 1.125; p < 0.001). Being in a positive mood also slightly increased the probability of perceiving an additional neutral expression (logit = 0.048; odds ratio = 1.049; p < 0.01). However, being in a positive mood consistently reduced the probability of perceiving an additional expression for all negatively valenced emotions (i.e., anger, disgust, sadness, and fear). See Table 1, Part B, for details.
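The two probabilities combine the neutral-baseline slope of positive mood (0.048) with the mood × happiness interaction (0.118), evaluated at centered mood values of +2 and −2 as in the formulas above. The Python sketch below reproduces them from the quoted coefficients only, holding all other predictors at their centered value of zero; `p_additional_happy` is a hypothetical helper name.

```python
import math


def percent_from_logit(logit):
    """Inverse logit, expressed as a percentage."""
    return 100 / (1 + math.exp(-logit))


INTERCEPT, HAPPY = -1.978, -0.719  # neutral baseline; happiness vs. neutrality
MOOD = 0.048          # positive-mood slope for the neutral baseline
MOOD_X_HAPPY = 0.118  # additional positive-mood slope for happiness


def p_additional_happy(mood_centered):
    """Predicted % of perceiving additional happiness at a given centered mood."""
    logit = INTERCEPT + HAPPY + (MOOD + MOOD_X_HAPPY) * mood_centered
    return percent_from_logit(logit)


high, low = p_additional_happy(2), p_additional_happy(-2)
print(f"{high:.2f} {low:.2f}")
```

Because the happiness-specific slope adds to the baseline slope, the total mood effect for happiness on the logit scale is 0.048 + 0.118 = 0.166 per scale point.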

As apparent from Table 2, negative mood showed a similar mood-congruent pattern. Higher levels of negative mood led to a lower probability of perceiving an additional happy expression (logit = −0.210; odds ratio = 0.810; p < 0.001). They also led to a higher likelihood of perceiving an additional fearful expression (logit = 0.149; odds ratio = 1.161; p < 0.001). The effects for all other emotions fell in between, and none of them was significant at the 0.05 alpha level.

Age Group Differences in Mood-Congruent vs. Mood-Incongruent Information Processing

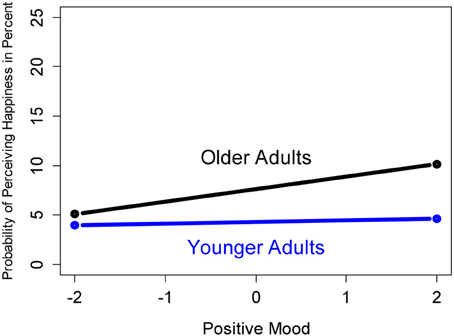

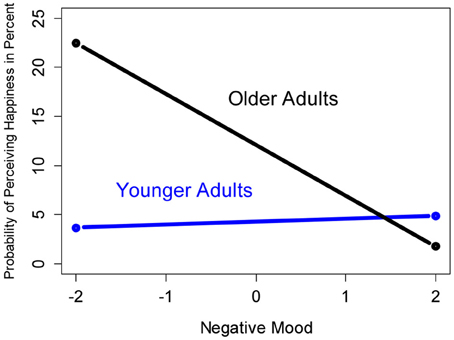

Hypothesis 2 predicted that older adults would have a higher likelihood of perceiving positive emotions in facial expressions when in a bad mood (i.e., mood incongruency). Contrary to this prediction, a mood-congruency effect was shown primarily by older adults. Unlike younger adults (logit = −0.157; odds ratio = 0.855; p < 0.001), older adults exhibited a significantly higher probability of perceiving happiness when in a good mood (logit = 0.151; odds ratio = 1.163; p < 0.001). Figure 2 illustrates the model-predicted probabilities of perceiving an additional happy emotional expression in faces for younger and older adults with maximally high vs. low levels of positive mood. Relative to the baseline, higher levels of positive mood in older adults also increased the likelihood of perceiving disgust (logit = 0.076; odds ratio = 1.079; p < 0.01) and sadness (logit = 0.081; odds ratio = 1.084; p < 0.01). In contrast, positive mood in older adults decreased the likelihood of perceiving neutrality (logit = −0.130; odds ratio = 0.878; p < 0.001). Although the results were somewhat mixed, the pronounced mood-congruency effect for older adults in the perception of happiness speaks against Hypothesis 2. See Table 1, Part C, for details. The effects for negative mood (Table 2) further support the rejection of Hypothesis 2. Higher levels of negative mood in older adults decreased the probability of perceiving happiness (logit = −0.550; odds ratio = 0.578; p < 0.001), whereas no such decrease was found for younger adults (logit = 0.329; odds ratio = 1.390; p < 0.001). These results are illustrated in Figure 3.
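The age-group logits reported in this section (e.g., −0.157 for younger and 0.151 for older adults) are effects coded (see Footnote 6). They are therefore deviations from the unweighted average across the three age groups, not contrasts with middle-aged adults, and the deviations of all three groups sum to zero. The Python sketch below illustrates this with hypothetical group-level logits. The coding layout (younger = (1, 0), older = (0, 1), middle-aged = (−1, −1)) is the standard effects-coding scheme and is assumed here rather than taken from the authors' scripts.

```python
# Effects coding for a three-level factor with middle-aged adults as base group.
CODES = {
    "younger": (1, 0),
    "middle": (-1, -1),
    "older": (0, 1),
}


def effects_coded_params(group_logits: dict) -> tuple:
    """Return (intercept, b_younger, b_older) of a saturated effects-coded model.

    The intercept is the unweighted mean of the group values, and each
    coefficient is that group's deviation from it.
    """
    grand_mean = sum(group_logits.values()) / len(group_logits)
    return (grand_mean,
            group_logits["younger"] - grand_mean,
            group_logits["older"] - grand_mean)


def predict(intercept: float, b_younger: float, b_older: float, group: str) -> float:
    """Reconstruct a group's logit from the effects-coded parameters."""
    x_younger, x_older = CODES[group]
    return intercept + b_younger * x_younger + b_older * x_older


# Hypothetical group-level logits, for illustration only.
logits = {"younger": -1.90, "middle": -2.10, "older": -1.85}
b0, b_y, b_o = effects_coded_params(logits)
b_middle = -(b_y + b_o)  # implied deviation of the base group

print(f"intercept = {b0:.3f}, younger = {b_y:+.3f}, older = {b_o:+.3f}, "
      f"middle (implied) = {b_middle:+.3f}")
for group in CODES:
    print(group, round(predict(b0, b_y, b_o, group), 3))
```

In the actual models, which include many other predictors, the coefficients come from the joint fit. Their interpretation as deviations from the across-group average is broadly the same.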

Figure 2. Predicted probability of perceiving an additional happy emotional expression in faces for younger and older adults with maximally high (right) vs. maximally low (left) levels of positive mood.

Figure 3. Predicted probability of perceiving an additional happy emotional expression in faces for younger and older adults with maximally high (right) vs. maximally low (left) levels of negative mood.

The Role of the Temporal Sequence in Emotion Processing

The probability of perceiving an additional emotional expression decreased not only across study sessions but also within each study session (i.e., with stimulus number; see Table 1, Part D). The decrease was smaller and non-significant for the perception of neutrality (logit = −0.0004; odds ratio = 0.999; p = 0.295), but significant for all other emotions (p < 0.01). At the descriptive level, the downward trend in the likelihood of reporting an emotional expression other than neutrality was slightly weaker for participants in a positive mood, but none of these effects was significant at the 0.01 alpha level. Likewise, there were no three-way interactions with negative mood (see Table 2). Neither positive mood nor negative mood interacted significantly with stimulus number, and the likelihood of perceiving an additional emotional expression declined uniformly. There was therefore little evidence in the present study for a shift from mood-congruent to mood-incongruent information processing as a function of elapsed processing time. Thus, Hypothesis 3 was rejected.

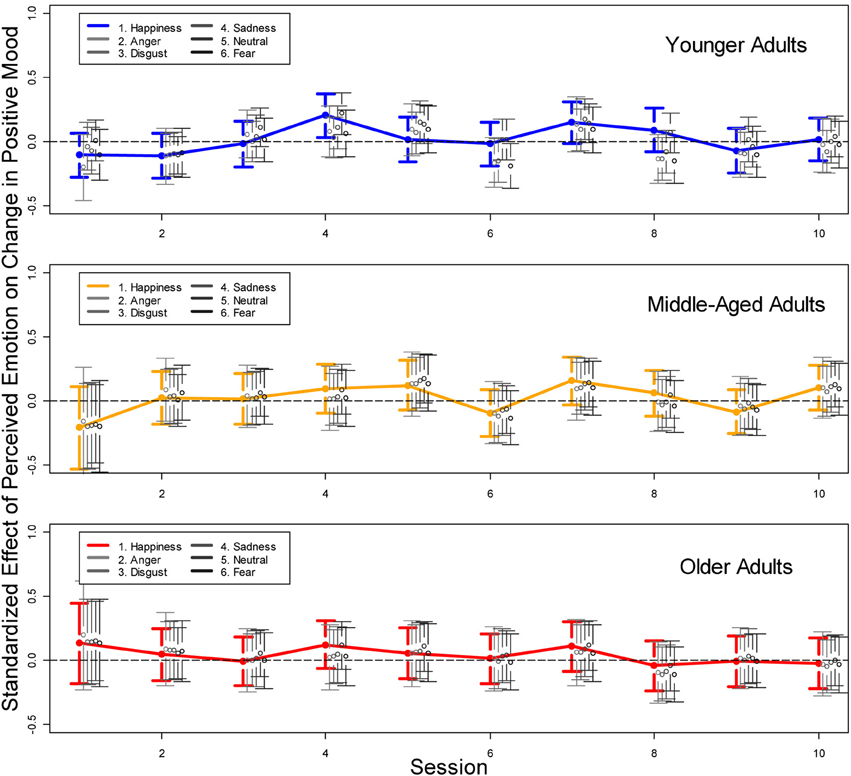

Effectiveness of Emotion Perception in Faces as a Mood-Regulatory Function

In Hypothesis 4, we postulated a positive relationship between the likelihood of perceiving positively valenced emotions in faces and subsequent improvements in mood. This hypothesis was based on prior research suggesting that mood-incongruent information processing in older adults may serve a mood-regulatory function, in particular if initial mood was bad. The same research suggested such a function for a shift from mood-congruent to mood-incongruent processing as processing time elapses. Given that we found no empirical support for Hypotheses 2 and 3, a mood-regulatory effect seemed unlikely. To explicitly test the effect of emotion perception during a study session on mood changes from the beginning to the end of that session, we extended a logistic growth curve model of mood changes, as described above. We added the interaction between age group and the percentage of perceived emotional expressions as time-varying covariates. The results are presented in Figure 4. It shows the standardized effects of emotion perception on pre- to post-session mood changes across the 10 study sessions, separately for younger, middle-aged, and older adults and for all six emotional expressions. As apparent from the 95% confidence intervals, almost none of the effects were significant, and there was no consistent pattern across time, emotions, or age groups. Rather, most effects varied around zero, providing no empirical support for Hypothesis 4.

Figure 4. Standardized effects of perceived happiness, anger, disgust, sadness, neutrality, and fear on pre- to post-session mood changes for younger, middle-aged, and older participants. Six separate analyses were run, one per emotion. In each, the interaction between perceived emotional expression and age group was entered as a time-varying covariate with time-varying effects into a logistic growth curve model of mood changes across the 10 study sessions (see Voelkle et al., 2013).

Discussion

We began this work by asking how the way we feel influences the perception of the world around us, and how this perception may affect our own feelings. We approached this question by studying the relationship between natural mood (as opposed to experimentally induced mood) and the attribution of emotions to faces for which the primary emotional expression had been correctly identified.

Consistent with our expectations in Hypothesis 1, more positive mood increased the likelihood of perceiving a happy facial expression. In particular, older adults were more likely to report perceiving happiness in faces when in a positive mood. They were increasingly less likely to do so the more negative their mood. Neither finding applied to younger adults. This contrasts with Hypothesis 2, which predicted that older adults would have a higher likelihood of perceiving positive emotions in facial expressions when in a bad mood. Contrary to previous reports in the literature (Forgas and Ciarrochi, 2002; Isaacowitz et al., 2008, 2009a), we found no evidence for a change from mood-congruent to mood-incongruent responses over time (Hypothesis 3). Nor did we find a mood-regulatory effect of emotion processing in faces (Hypothesis 4). In the remainder of the Discussion, we offer interpretations of our central findings.

Mood-Congruent Information Processing

Our results partly contrast with expectations derived from previous research using active mood induction in controlled laboratory settings, but they may be explained in terms of the AIM. The fact that older adults showed a mood-congruency effect in emotion perception suggests their use of a high affect infusion strategy such as heuristic or substantive processing. Unfortunately, the present study does not allow us to clearly disentangle the underlying mechanisms in terms of heuristic vs. substantive processing. This remains an important topic for future research. We believe, however, that the observed mood-congruent information processing in older adults may be due to a combination of the two processing strategies. On the one hand, and independent of present mood, older adults were more likely than younger adults to report an additional happy facial expression. They were also more likely to report an additional neutral one (see Tables 1, 2). This may reflect their higher personal involvement in the task, which suggests the use of a substantive processing strategy. On the other hand, declining cognitive resources may have led older adults to rely more strongly on other sources of information, such as their current mood, supporting the notion of a heuristic (mood-as-information) processing strategy.

For younger adults, in contrast, the increase in the perception of happiness with positive mood almost completely disappeared (see Figure 2), suggesting their use of a low affect infusion strategy such as motivated processing or direct access. The motivation to improve one's mood, in particular when in a bad mood, should not only eliminate a mood-congruency effect. It should also result in mood-incongruent information processing (i.e., an increased likelihood of perceiving happiness). The fact that we did not observe such an effect rather suggests the use of a direct access strategy in younger adults. As described in the introduction, this strategy is particularly likely when “the judge is not personally involved, and there are no strong cognitive, affective, motivational, or situational forces mandating more elaborate processing” (Forgas, 1995, p. 46).

Mood-Incongruent Information Processing as a Mood-Regulatory Function

As apparent from Figure 4, there was no empirical support for the notion that emotion perception in faces serves a mood-regulatory function under conditions of naturally occurring (rather than actively induced) mood. This contrasts with research using experimentally induced mood. That research showed that older adults in a bad mood who focus on positively valenced faces may thereby regulate their mood. One reason for these divergent findings may be that the naturally occurring fluctuations in positive mood assessed in the present context were not strong enough to reveal such an effect. Furthermore, ascribing an additional emotional expression to a face whose primary emotion had been correctly recognized is, of course, a much more subtle measure of mood-(in)congruent emotion processing than, for example, gaze patterns toward prototypical positively or negatively valenced facial expressions as used by Isaacowitz et al. (2008). In addition, the anticipatory (down)adjustment of positive mood may have further reduced the likelihood of detecting such an effect (Voelkle et al., 2013).

Limitations and Future Directions

We suspect that the general downward trend in the likelihood of reporting an emotional expression is due to participants' increasing fatigue and decreasing motivation. However, these factors were not assessed by self-report, which is a shortcoming of the present study, so this interpretation remains speculative. Although we statistically controlled for the downward trend, the remaining variability in mood and emotion perception may have been too small to allow for additional mood-regulation effects by means of emotion perception. After all, if an individual's mood is already perfectly adjusted to the situation at hand, or if fatigue effects are very strong, there is little room for additional regulation via mood-incongruent information processing.

This may be viewed as a shortcoming of the present study. However, it also shows that while natural mood (as opposed to experimentally induced mood) is likely to affect the perception of emotions in faces, any mood-regulatory effect of such perception seems negligible. In fact, we found that positive mood, particularly in older adults, may instead increase the likelihood of perceiving positively valenced facial expressions.

The somewhat undifferentiated treatment of emotions and mood may be considered another weakness of the study. Future research may profit from more fine-grained distinctions between different aspects of positive and negative mood, including different aspects of valence and arousal, and from a discrete emotion perspective as outlined by Kunzmann et al. (2014) in this issue. However, the primary purpose of the present paper was not to offer a comprehensive framework on age-related changes in the intricate interplay between specific aspects of affective experience and the perception (attribution) of additional emotions in faces whose primary emotion was correctly identified. Rather, the focus was to place research on emotion perception in faces into the broader context of research on mood-congruent vs. mood-incongruent information processing. To accomplish this goal, it was necessary to remain at a more general level, although we do report more detailed findings. We encourage future research to focus on more detailed aspects of experienced mood and the perception of emotional expressions. We equally encourage more integrative research linking these findings back to more general research on age-related changes in mood-(in)congruent information processing. We hope the present paper will contribute to both ends.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Dulce Erdt, Colin Bauer, Philipp Möller, Luara Santos-Ferreira, Giorgi Kobakhidze, and the COGITO-Team for their assistance in study implementation, data collection, and data management. We thank Janek Berger for editorial assistance.

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fpsyg.2014.00635/abstract

Footnotes

1. For an analysis of age differences in emotional expression identification as a function of perceiver's and face model's age and facial expression with the present data, we refer the reader to Ebner et al. (2010).

2. We disentangle the perception of additional emotional expressions from emotion recognition by analyzing only correctly recognized emotions. This does not preclude the possibility that people with higher recognition ability (and possible age differences therein) may be less likely to ascribe additional emotional expressions to a face after having identified the primary emotional expression. To control for this possibility, we repeated all analyses including person-specific recognition ability as an additional covariate. Indeed, higher recognition ability significantly decreased the likelihood of perceiving an additional emotional expression (logit = −10.750; p < 0.001). In addition, younger adults' slightly lower likelihood of perceiving an additional emotional expression (non-significant at the 0.01 alpha level) disappeared after controlling for recognition ability. All other results, however, remained virtually unaffected by controlling for person-specific recognition ability. The additional analyses are provided as online Supplementary Materials A and B.

3. The average recognition rate of emotional expressions differed across emotions and age groups. For a more detailed analysis, see Ebner et al. (2010).

4. In a separate analysis, we included sex of participant as an additional predictor. This resulted in a non-significant improvement in model fit ($\Delta(-2\,\mathrm{LL}) = 1.2$; $\Delta df = 1$) and did not change the pattern of results or any of the substantive conclusions. Because we had no prior expectations regarding sex effects, we report the analyses without sex.

5. Missing values on the predictor variables due to study dropout were handled via Expectation Maximization (EM) imputation.

6. Middle-aged adults were chosen as the base group. As pointed out by Cohen et al. (2003), when using effects coding the base group is usually selected to be the group “for which comparisons to the mean are of least interest” (p. 322). This is because the analysis informs us about this group only indirectly, not directly. All information, however, is considered in the analysis. See Cohen et al. (2003) for more detailed information.
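Footnote 4 summarizes a likelihood-ratio comparison. The difference in −2 log-likelihood (1.2, with one additional parameter) is referred to a chi-square distribution with 1 df. The minimal standard-library Python sketch below computes that p-value. It uses the identity that a 1-df chi-square variable is a squared standard normal variable. The helper name is hypothetical.

```python
import math


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with 1 df.

    Because X = Z**2 for a standard normal Z, P(X > x) = P(|Z| > sqrt(x))
    = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2.0))


delta_minus2ll = 1.2  # difference in -2 log-likelihood (footnote 4)
df_diff = 1           # one additional parameter (sex of participant)

p_value = chi2_sf_df1(delta_minus2ll)
print(f"chi2({df_diff}) = {delta_minus2ll}, p = {p_value:.3f}")
```

With p ≈ 0.27, this agrees with the footnote's statement that adding sex did not significantly improve model fit.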

References

Adolphs, R. (2003). Cognitive neuroscience of human social behaviour. Nat. Rev. Neurosci. 4, 165–178. doi: 10.1038/nrn1056

Baron-Cohen, S. E., Tager-Flusberg, H. E., and Cohen, D. J. (2000). Understanding Other Minds: Perspectives from Developmental Cognitive Neuroscience. 2nd Edn. Oxford: Oxford University Press.

Bates, D., Maechler, M., Bolker, B., and Walker, S. (2013). lme4: Linear Mixed-Effects Models using Eigen and S4 (Version 1.0-4) [R package]. Available online at: http://CRAN.R-project.org/package=lme4

Becker, M. W., and Leinenger, M. (2011). Attentional selection is biased toward mood-congruent stimuli. Emotion 11, 1248–1254. doi: 10.1037/a0023524

Beevers, C. G., and Carver, C. S. (2003). Attentional bias and mood persistence as prospective predictors of dysphoria. Cogn. Ther. Res. 27, 619–637. doi: 10.1023/A:1026347610928

Bower, G. H. (1991). “Mood congruity of social judgements,” in Emotion and Social Judgements, ed J. P. Forgas (New York, NY: Pergamon), 31–53.

Carstensen, L. L. (2006). The influence of a sense of time on human development. Science 312, 1913–1915. doi: 10.1126/science.1127488

Carstensen, L. L., Isaacowitz, D. M., and Charles, S. T. (1999). Taking time seriously: a theory of socioemotional selectivity. Am. Psychol. 54, 165–181. doi: 10.1037/0003-066X.54.3.165

Clark, D. A., Beck, A. T., and Alford, B. A. (1999). Scientific Foundations of Cognitive Theory and Therapy for Depression. New York, NY: John Wiley and Sons.

Cohen, J., Cohen, P., West, S. G., and Aiken, L. S. (2003). Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences. 3rd Edn. Hillsdale, NJ: Erlbaum.

Coupland, N. J., Sustrik, R. A., Ting, P., Li, D., Hartfeil, M., Singh, A. J., et al. (2004). Positive and negative affect differentially influence identification of facial emotions. Depress. Anxiety 19, 31–34. doi: 10.1002/da.10136

Ebner, N. C., Riediger, M., and Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362. doi: 10.3758/BRM.42.1.351

Eizenman, M., Yu, L. H., Grupp, L., Eizenman, E., Ellenbogen, M., Gemar, M., et al. (2003). A naturalistic visual scanning approach to assess selective attention in major depressive disorder. Psychiatry Res. 118, 117–128. doi: 10.1016/S0165-1781(03)00068-4

Ekman, P. (1993). Facial expression and emotion. Am. Psychol. 48, 384–392. doi: 10.1037/0003-066X.48.4.384

Elliott, R., Rubinsztein, J. S., Sahakian, B. J., and Dolan, R. J. (2002). The neural basis of mood-congruent processing biases in depression. Arch. Gen. Psychiatry 59, 597–604. doi: 10.1001/archpsyc.59.7.597

Erber, R., and Erber, M. W. (1994). Beyond mood and social judgment: mood incongruent recall and mood regulation. Eur. J. Soc. Psychol. 24, 79–88. doi: 10.1002/ejsp.2420240106

Forgas, J. P. (1992). Mood and the perception of unusual people: affective asymmetry in memory and social judgments. Eur. J. Soc. Psychol. 22, 531–547. doi: 10.1002/ejsp.2420220603

Forgas, J. P. (1995). Mood and judgment: the affect infusion model (AIM). Psychol. Bull. 117, 39–66. doi: 10.1037/0033-2909.117.1.39

Forgas, J. P., and Ciarrochi, J. V. (2002). On managing moods: evidence for the role of homeostatic cognitive strategies in affect regulation. Pers. Soc. Psychol. Bull. 28, 336–345. doi: 10.1177/0146167202286005

Hall, D. B. (2000). Zero-inflated Poisson and binomial regression with random effects: a case study. Biometrics 56, 1030–1039. doi: 10.1111/j.0006-341X.2000.01030.x

Hampel, R. (1977). Adjektiv-Skalen zur Einschätzung der Stimmung (SES) [Adjective scales to assess mood]. Diagnostica 23, 43–60.

Hunter, P. G., Schellenberg, E. G., and Griffith, A. T. (2011). Misery loves company: mood-congruent emotional responding to music. Emotion 11, 1068–1072. doi: 10.1037/a0023749

Isaacowitz, D. M., Allard, E. S., Murphy, N. A., and Schlangel, M. (2009a). The time course of age-related preferences toward positive and negative stimuli. J. Gerontol. B Psychol. Sci. Soc. Sci. 64B, 188–192. doi: 10.1093/geronb/gbn036

Isaacowitz, D. M., Toner, K., Goren, D., and Wilson, H. R. (2008). Looking while unhappy: mood-congruent gaze in young adults, positive gaze in older adults. Psychol. Sci. 19, 848–853. doi: 10.1111/j.1467-9280.2008.02167.x

Isaacowitz, D. M., Toner, K., and Neupert, S. D. (2009b). Use of gaze for real-time mood regulation: effects of age and attentional functioning. Psychol. Aging 24, 989–994. doi: 10.1037/a0017706

Koster, E. H. W., De Raedt, R., Leyman, L., and De Lissnyder, E. (2010). Mood-congruent attention and memory bias in dysphoria: exploring the coherence among information-processing biases. Behav. Res. Ther. 48, 219–225. doi: 10.1016/j.brat.2009.11.004

Kunzmann, U., Kappes, C., and Wrosch, C. (2014). Emotional aging: a discrete emotions perspective. Front. Psychol. 5:380. doi: 10.3389/fpsyg.2014.00380

Matt, G. E., Vázquez, C., and Campbell, W. K. (1992). Mood-congruent recall of affectively toned stimuli: a meta-analytic review. Clin. Psychol. Rev. 12, 227–255. doi: 10.1016/0272-7358(92)90116-P

Mayer, J. D., McCormick, L. J., and Strong, S. E. (1995). Mood-congruent memory and natural mood: new evidence. Pers. Soc. Psychol. Bull. 21, 736–746. doi: 10.1177/0146167295217008

Meissner, C. A., and Brigham, J. C. (2001). Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychol. Public Policy Law 7, 3–35. doi: 10.1037/1076-8971.7.1.3

Morris, W. N., and Reilly, N. P. (1987). Toward the self-regulation of mood: theory and research. Motiv. Emot. 11, 215–249. doi: 10.1007/BF01001412

Olsen, M. K., and Schafer, J. L. (2001). A two-part random-effects model for semicontinuous longitudinal data. J. Am. Stat. Assoc. 96, 730–745. doi: 10.2307/2670310

Phillips, L., and Allen, R. (2004). Adult aging and the perceived intensity of emotions in faces and stories. Aging Clin. Exp. Res. 16, 190–199. doi: 10.1007/BF03327383

Pinheiro, J. C., and Bates, D. M. (2000). Mixed-Effects Models in S and S-Plus. New York, NY: Springer. doi: 10.1007/978-1-4419-0318-1

R Core Team. (2012). R: a Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online at: http://www.R-project.org/

Riediger, M., Voelkle, M. C., Ebner, N. C., and Lindenberger, U. (2011). Beyond “happy, angry, or sad?”: age-of-poser and age-of-rater effects on multi-dimensional emotion perception. Cogn. Emotion 25, 968–982. doi: 10.1080/02699931.2010.540812

Rinck, M., Becker, E. S., Kellermann, J., and Roth, W. T. (2003). Selective attention in anxiety: distraction and enhancement in visual search. Depress. Anxiety 18, 18–28. doi: 10.1002/da.10105

Ruffman, T., Henry, J. D., Livingstone, V., and Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: implications for neuropsychological models of aging. Neurosci. Biobehav. Rev. 32, 863–881. doi: 10.1016/j.neubiorev.2008.01.001

Schwarz, N., and Clore, G. L. (1983). Mood, misattribution, and judgments of well-being: informative and directive functions of affective states. J. Pers. Soc. Psychol. 45, 513–523. doi: 10.1037/0022-3514.45.3.513

Schwarz, N., and Clore, G. L. (2003). Mood as information: 20 years later. Psychol. Inq. 14, 296–303. doi: 10.1080/1047840X.2003.9682896

Sedikides, C. (1994). Incongruent effects of sad mood on self-conception valence: it's a matter of time. Eur. J. Soc. Psychol. 24, 161–172. doi: 10.1002/ejsp.2420240112

Stuhrmann, A., Dohm, K., Kugel, H., Zwanzger, P., Redlich, R., Grotegerd, D., et al. (2013). Mood-congruent amygdala responses to subliminally presented facial expressions in major depression: associations with anhedonia. J. Psychiatry Neurosci. 38, 249–258. doi: 10.1503/jpn.120060

Suzuki, A., Hoshino, T., Shigemasu, K., and Kawamura, M. (2007). Decline or improvement? Age-related differences in facial expression recognition. Biol. Psychol. 74, 75–84. doi: 10.1016/j.biopsycho.2006.07.003

Voelkle, M. C., Ebner, N. C., Lindenberger, U., and Riediger, M. (2012). Let me guess how old you are: Effects of age, gender, and facial expression on perceptions of age. Psychol. Aging 27, 265–277. doi: 10.1037/a0025065

Voelkle, M. C., Ebner, N. C., Lindenberger, U., and Riediger, M. (2013). Here we go again: anticipatory and reactive mood responses to recurring unpleasant situations throughout adulthood. Emotion 13, 424–433. doi: 10.1037/a0031351

Keywords: emotion perception, mood-(in)congruent information processing, mood regulation, faces, crossed random effects analysis

Citation: Voelkle MC, Ebner NC, Lindenberger U and Riediger M (2014) A note on age differences in mood-congruent vs. mood-incongruent emotion processing in faces. Front. Psychol. 5:635. doi: 10.3389/fpsyg.2014.00635

Received: 13 January 2014; Accepted: 04 June 2014;

Published online: 26 June 2014.

Edited by:

Hakan Fischer, Stockholm University, Sweden

Reviewed by:

Hakan Fischer, Stockholm University, Sweden

Mara Fölster, Humboldt-Universität zu Berlin, Germany

Marie Hennecke, University of Zurich, Switzerland

Copyright © 2014 Voelkle, Ebner, Lindenberger and Riediger. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Manuel C. Voelkle, Center for Lifespan Psychology, Max Planck Institute for Human Development, Lentzeallee 94, Berlin 14195, Germany e-mail: voelkle@mpib-berlin.mpg.de
