Synchronization from second order network connectivity statistics

- 1 School of Mathematics, University of Minnesota, Minneapolis, MN, USA

- 2 School of Physics and Astronomy, University of Minnesota, Minneapolis, MN, USA

- 3 Department of Biomedical Engineering, University of Minnesota, Minneapolis, MN, USA

We investigate how network structure can influence the tendency for a neuronal network to synchronize, or its synchronizability, independent of the dynamical model for each neuron. The synchrony analysis takes advantage of the framework of second order networks, which defines four second order connectivity statistics based on the relative frequency of two-connection network motifs. The analysis identifies two of these statistics, convergent connections, and chain connections, as highly influencing the synchrony. Simulations verify that synchrony decreases with the frequency of convergent connections and increases with the frequency of chain connections. These trends persist with simulations of multiple models for the neuron dynamics and for different types of networks. Surprisingly, divergent connections, which determine the fraction of shared inputs, do not strongly influence the synchrony. The critical role of chains, rather than divergent connections, in influencing synchrony can be explained by their increasing the effective coupling strength. The decrease of synchrony with convergent connections is primarily due to the resulting heterogeneity in firing rates.

Introduction

The nervous system is highly organized to allow information to flow efficiently (Watts and Strogatz, 1998; Song et al., 2005) but is robust to pathological behaviors such as seizures. Neuronal synchrony is thought to play an important role in memory formation (Axmacher et al., 2006) while pathological amounts of synchrony are thought to be indicative of schizophrenia (Uhlhaas and Singer, 2010), Parkinson’s disease (Bergman et al., 1998), Alzheimer’s disease (Kramer et al., 2007), and epilepsy (Netoff and Schiff, 2002; Van Drongelen et al., 2003). In many diseases strong evidence suggests reorganization of the neuronal connections causing or caused by the disease (Cavazos et al., 1991; Parent et al., 1997; Zeng et al., 2007) may play a role in generating these pathological behaviors.

The synchronization of spiking activity in neuronal networks is due to a complex interplay among many factors; individual neuron dynamics, the types of synaptic response, external inputs to the network, as well as the network topology all play a role in determining the level of synchronization. The analysis of small networks of neurons has provided significant insight into the ways in which single neuron dynamics and synaptic response can influence the tendency of the network to synchronize (Ermentrout and Kopell, 1991; Strogatz and Mirollo, 1991). Previous studies have focused on synchronization of large networks with all-to-all connectivity (Hansel and Sompolinsky, 1992; Abbott and van Vreeswijk, 1993; Hansel et al., 1995) or independent random connectivity (Brunel, 2000). The focus of the present manuscript is to examine what types of network structures can decrease or increase the likelihood of a network to synchronize.

The study of the synchronization properties of networks has lead to notions of synchronizability (Arenas et al., 2008), whereby networks can be ranked by how well their structure facilitates synchrony. Clearly without specifying a neuron and synapse model, one cannot determine if a network will synchronize. Nonetheless, a network with high synchronizability would be expected to synchronize for a larger range of model choices and parameters than would a network with low synchronizability. To define the synchronizability of a network, we employ analyses designed to identify the effect of the network topology on perfect synchrony (Pecora and Carroll, 1998) and on asynchrony (Restrepo et al., 2006a).

We connect the synchronizability analysis directly to network structure using the recently developed framework of second order networks (SONETs), which is an approach to characterize and generate realistic network structure using key second order statistics of connectivity (Zhao et al., in preparation). SONETs, when combined with the synchronizability analysis, give insight into the types of local network structures that shape the influence of the network topology on synchrony. We demonstrate how characterizing neuronal networks using second order network statistics is useful for determining how changes in topology affect network synchrony.

Materials and Methods

Second Order Networks

A common practice in modeling sparsely connected neuronal networks is to randomly generate connections between neurons using a small probability of connection between neurons. The probability of connection can be a function of neuron identity or location. Implicit in these models is that each connection is generated independently. When the probability of each connection is a single constant p, the resulting special case of the independent random network model is often called the Erdős–Rényi random network model (Erdoős and Rényi, 1959), or the “random network” model. The independent random network model is the natural model that results from specifying only the connection probabilities, as it adds minimal additional structure beyond what is required to by the connection probabilities, i.e., the independent model maximizes entropy (Jaynes, 1957) subject to constraints set by the connection probabilities.

Evidence is emerging that structure in neuronal networks is not captured by the independent model. Some studies (Holmgren et al., 2003; Song et al., 2005; Oswald et al., 2009) but not all (Lefort et al., 2009) found reciprocal connections between pairs of neurons to be more likely than predicted by the independent model. Studies have also found that the frequencies of other patterns of connections differ from the independent model prediction (Song et al., 2005; Perin et al., 2011).

The independent random network model is based only on the first order statistics of the network connectivity, i.e., the marginal probability that any given connection exists. In the independent model, higher order statistics, such as the covariances among connections, are set to zero. We have developed a model framework extending the independent model to allow non-zero second order statistics, which determine the covariance among connections (Zhao et al., in preparation). We refer to these network models as SONETs. SONETs retain the principle from the independent model of adding minimal structure beyond what is required to match the specified constraints (in this case, matching both the first order connection probabilities and the second order connectivity statistics).

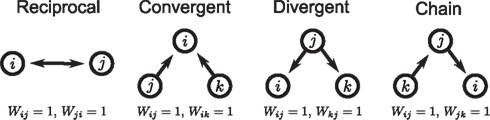

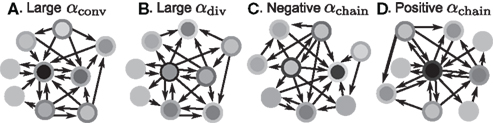

The first class of SONETs that we have developed are for the special case where the connection probability among neurons is fixed to a single constant p (Zhao et al., in preparation). In this way, these SONETs are a generalization of the Erdoős–Rényi random network model. Although the second order connectivity statistics are specified as covariances among connections, it is equivalent, but more intuitive, to determine them using the probability of observing patterns of two connections (or motifs). There are four possible motifs defining SONETs: reciprocal, convergent, divergent, and chains, as illustrated in Figure 1. A given SONET is determined by specifying the connection probability p and the probability of each of these motifs.

Figure 1. The four second order connection motifs of reciprocal, convergent, divergent, and chain connections.

To define the SONETs and the second order connectivity statistics more precisely, we introduce notation for the connectivity matrix W which defines the connectivity among N neurons. The component Wij = 1 indicates a connection from neuron j onto neuron i, and Wij = 0 if that connection is absent. In SONETs self coupling is not allowed; therefore Wii = 0.

In this paper we consider only homogeneous networks where the probability of any combination of connections is independent of the neurons’ labels. Thus, there is only one first order connectivity statistic: the connection probability p that determines the marginal probability of the existence of any connection Wij,

For the independent random network model the probability of any pair of connections would be p2. We introduce second order connectivity statistics that measure deviations from independence among pairs of connections that share a neuron. Since the network is homogeneous, we define a single second order connectivity statistic for each motif of Figure 1. The statistics αrecip, αconv, αdiv, and αchain specify how the probabilities of the reciprocal, convergent, divergence, and chain motifs, respectively, deviate from independence:

for any triplet of distinct neurons i, j, and k. In this way, a non-zero α yields a correlation between the connections of its corresponding motif.

The SONET model is a five-dimensional probability distribution (parametrized by p and the four α’s) for the network connectivity W that satisfies conditions (1) and (2). As the probability distribution adds minimal structure (i.e., higher order correlations) beyond these constraints, the SONET model reduces to the Erdoős–Rényi model when all α’s are zero.

For technical reasons, the SONET model only approximately satisfies the maximum entropy condition for minimal structure (Jaynes, 1957), but instead uses dichotomized Gaussian random variables analogous to those of Macke et al. (2009). The dichotomized Gaussian random variables satisfy the maximum entropy condition for continuous random variables, which are then dichotomized to be either zero or one, forming the Wij. Hence, to generate a SONET, one simply samples a N(N − 1)-dimensional vector of joint Gaussian random variables and thresholds them into the Bernoulli random variables Wij. The main technical challenge is calculating the covariance matrix of the joint Gaussian so that W satisfies the second order statistics (2). See (Zhao et al., in preparation) for details.

Estimating Connectivity Statistics

The above definitions of the first and second order connectivity statistics are based on a probability distribution for the connectivity matrix W. From a given network with connectivity matrix W, one can estimate these statistics directly from W. We use  and

and  to denote these descriptive statistics.

to denote these descriptive statistics.

The average connectivity  is simply the ratio of the number of connections Nconn present in W with the total possible:

is simply the ratio of the number of connections Nconn present in W with the total possible:  , where Nconn = ||W||1≡Σi,j|Wij|. The average second order connectivity statistics can likewise be determined by the total number of corresponding two-connection motifs that are present in the network, divided by the total possible:

, where Nconn = ||W||1≡Σi,j|Wij|. The average second order connectivity statistics can likewise be determined by the total number of corresponding two-connection motifs that are present in the network, divided by the total possible:

where Nk for k ∈ {recip, conv, div, chain} is the number of times motif k appears in the network. The Nk can be quickly calculated from W via Nrecip = Tr(W2)/2, Nconv = (||WTW||1−||W||1)/2, Ndiv = (||WWT||1−||W||1)/2, Nchain = (||W2||1−Tr(W2), where WT denotes matrix transpose and Tr(W) denotes trace.

In our network simulations, we compare the measured  to the network synchrony even when we know the underlying probability distribution and hence the parameters α from the definition (2). Because these networks are generated probabilistically, the actual will

to the network synchrony even when we know the underlying probability distribution and hence the parameters α from the definition (2). Because these networks are generated probabilistically, the actual will  vary slightly from α. We find that

vary slightly from α. We find that  predicts synchrony better than α.

predicts synchrony better than α.

Relating Second Order Connectivity Statistics to Degree Distribution

A common method to describe network structure is through the degree distribution (Newman, 2003; Boccaletti et al., 2006). In this section we derive a relationship between the second order connectivity statistics and degree distribution. This relation will be useful in understanding the effect of the connectivity statistics and synchrony.

The in-degree of a neuron is the total number of connections directed toward a neuron,  for neuron i, and the out-degree is the total number of connections directed away from the neuron,

for neuron i, and the out-degree is the total number of connections directed away from the neuron,  . The mean in-degree is equal to the mean out-degree, so we just refer to a mean degree.

. The mean in-degree is equal to the mean out-degree, so we just refer to a mean degree.

Using the definitions (2) of α, one can calculate that the variance of the in-degree depends on αconv, the variance of the out-degree depends on αdiv, and the covariance between in-degree and out-degree depends on αrecip and αchain:

where i is an arbitrary neuron index.

We can simplify the relationships between the α and the second order statistics of the degree distribution in the limit of large network size N. Neglecting terms that go to zero in this limit1 and rewriting in terms of the expected mean degree E(d) = (N − 1)p, we find the following relationships between the connectivity statistics and the degree distribution:

Hence, αconv and αdiv are nearly equal to the variance of the in-degree and out-degree distributions, normalized by the mean degree squared (with a small offset 1/E(d)). αchain is nearly equal to the covariance between in-degree and out-degree, normalized by the mean degree squared.

Generating Networks with Correlated Power Law Degree Distribution

We developed an algorithm based on the approach by Chung and Lu (2002) to generate directed networks with expected power law degree distributions with a range of second order connectivity statistics (3). We let pd(x, y) be this degree distribution, with x = din and y = dout. We set the marginal distributions of the in- and out-degrees to truncated power laws with exponentβi:

where the cutoff parameters satisfy 0 < ai < bi < N, and the normalization constants ci and di are chosen to make the marginal distributions continuous at ai and integrate to 1. Below the lower cutoff ai, we let the distribution increase smoothly with exponent γi.

For a given set of networks, we fixed the right cutoff parameters bi and the rising exponents γi. The left cutoff parameters ai and the exponent βi were then chosen for each network to match the desired average connectivity p as well as the second order connectivity statistics αconv (for i = in) or αdiv (for i = out) by numerically solving the equations [cf. (5)]

for (αin, βin) and analogous equations for (αout, βout) in terms of αdiv.

To vary the correlation between in- and out-degrees and thus αchain, we let the full degree distribution pd(x,y) be determined by the marginal distributions pin and pout and a Gaussian copula with correlation coefficient ρ. In this way, we can sample from pd(x,y) by a two step process. We generate a joint Gaussian with correlation coefficient ρ and compose each component with the cumulative distribution function of the Gaussian to obtain a sample (u, v) from the Gaussian copula. Next, we compose the result with the inverse of the cumulative distribution functions  to obtain the expected in-degree and out-degree

to obtain the expected in-degree and out-degree  as a sample from pd(x,y).

as a sample from pd(x,y).

Finally, to generate the network, we sample N points (xi,yi) from pd(x,y), which give the expected in-degree and out-degree, respectively, of each neuron i. We let the probability of each network connection Wij from neuron j to neuron i be proportional to xi,yj, where the proportionality constant is chosen so that the average connectivity is Pr(Wij = 1)=p. As described in (Chung and Lu, 2002), as long as maxi,j xi yj ≤N(N−1)p, this approach yields a network whose distribution of expected degrees is given by pd(x,y). This condition was not satisfied for some networks so that their degree distribution deviated from the prescribed pd(x,y). Since we measure the second order statistics directly from the generated connectivity matrix via (3), such deviations were not a concern.

Analysis of Complete Synchrony

To examine the influence of the second order connectivity statistics on network synchrony, we will analyze the stability of the two extreme cases of synchrony, the perfectly synchronous state and the completely asynchronous state. Through linearizations around these states, we derive conditions between the connectivity statistics and synchrony that are valid near these states. We begin by analyzing complete synchrony.

Stability of synchrony determined using master stability function

To determine the stability of the perfectly synchronous case, we linearize the equations for network dynamics around synchrony using the master stability function approach by Pecora and Carroll (1998). This approach is based on determining synchrony in a system of identical, noise-free, coupled oscillators:

where the state of oscillator i at time t is described by the vector x i(t). The dynamics of this system are determined by the scalar coupling strength S and two vector-valued functions of the same dimension as the interval variable vector x i: the internal dynamics function F and the coupling function H. We rewrite (8) in terms of the Laplacian matrix L = D − W, where D is the diagonal of row sums of the connectivity matrix W,

Using the master stability function approach, one can determine a critical stability region in the complex plane that is based solely on the neuron dynamics (given by F and H) and is independent of network connectivity. The influence of the network on the stability enters through the eigenvalues μi of L, as the eigenvalues scaled by the coupling strength Sμi must lie in the critical region for the synchronous state to be stable (Arenas et al., 2008).

If the neuron model is not known, it is not possible to know the critical region in the complex plane, reflecting the fact that the topology alone cannot determine whether a network will synchronize. However, a tighter spread of the eigenvalues will make it easier to scale them by S to fit within the critical region. Therefore, changes in network structure that decrease the spread of the eigenvalues will favor synchronizability. One measure of this eigenvalue spread for asymmetric matrices, as suggested by Nishikawa and Motter (2010), is the variance  of the eigenvalues normalized by the mean degree squared,

of the eigenvalues normalized by the mean degree squared,

where  is the mean of the eigenvalues and d is the mean degree (4). This variance ignores the zero eigenvalue μ1 = 0 present in every Laplacian matrix.

is the mean of the eigenvalues and d is the mean degree (4). This variance ignores the zero eigenvalue μ1 = 0 present in every Laplacian matrix.

Modifications for chemical synapses

The master stability function requires the subtraction of H(x i) in (8) to ensure that the synchronous state  exists and satisfies the equation

exists and satisfies the equation  . The H(x i) term is suitable for neuron models with electrical synapses but is not an appropriate model for chemical synapses because it implies that a connection from neuron j onto neuron i leads to an equivalent negative effect from neuron i onto itself. To model chemical synapses, we must remove the offending H(x i) term, yielding:

. The H(x i) term is suitable for neuron models with electrical synapses but is not an appropriate model for chemical synapses because it implies that a connection from neuron j onto neuron i leads to an equivalent negative effect from neuron i onto itself. To model chemical synapses, we must remove the offending H(x i) term, yielding:

The rows of the connectivity matrix W may not sum to the same value, in contrast to the Laplacian matrix L of (9) which must have rows that sum to zero. Hence, if we substitute the synchronous state  , we would get different, inconsistent equations that

, we would get different, inconsistent equations that  must satisfy:

must satisfy:

where the ith row sum of W is  , the incoming degree of neuron i. If all neurons do not have the same incoming degree, then we cannot solve for

, the incoming degree of neuron i. If all neurons do not have the same incoming degree, then we cannot solve for  independent of i and the synchronous state does not even exist.

independent of i and the synchronous state does not even exist.

Therefore, for chemical synaptic networks (11), rather than analyzing the stability of the synchronous state, we seek to determine how far the network is from having a synchronous solution. In the spirit of the master stability function analysis, we desire a measure of synchronizability analogous to (10) based only on network properties. We propose that network synchrony will decrease with the variance of the in-degrees, normalized by their mean squared

Connecting stability measures to network topology

Fortunately, we can merge the two normalized variances  and

and  into the same measure. It turns out that, for many large networks, the eigenvalues of the Laplacian matrix L = D − W are dominated by the diagonal entries D, which are the in-degrees

into the same measure. It turns out that, for many large networks, the eigenvalues of the Laplacian matrix L = D − W are dominated by the diagonal entries D, which are the in-degrees  If the connectivity matrix were normal (WWT = WTW), then this fact would follow from the Wielandt–Hoffman theorem (Hoffman and Wielandt, 1953), as this theorem shows that (with a suitable reordering of the eigenvalues μi)

If the connectivity matrix were normal (WWT = WTW), then this fact would follow from the Wielandt–Hoffman theorem (Hoffman and Wielandt, 1953), as this theorem shows that (with a suitable reordering of the eigenvalues μi)

Therefore, reproducing an argument from (Zhan et al., 2010), the relative average squared deviation is

which will tend to be small. The worst case would be when all in-degrees  are the same (and equal to d), in which case the bound would be 1/d. Variation in the degrees makes the bound smaller and the eigenvalues μi even closer to the in-degrees

are the same (and equal to d), in which case the bound would be 1/d. Variation in the degrees makes the bound smaller and the eigenvalues μi even closer to the in-degrees  .

.

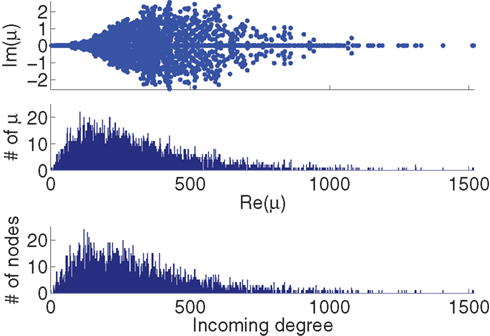

Although our matrices W are not necessarily normal, we have observed that the eigenvalues μi are indeed close to the in-degrees  . An example is shown in Figure 2. Thus, we infer that our two measures based on (8) and (11) are approximately equal,

. An example is shown in Figure 2. Thus, we infer that our two measures based on (8) and (11) are approximately equal,  , and will simply use

, and will simply use  .

.

Figure 2. Comparison between the eigenvalues μi of the Laplacian and the network in-degree  for a sample SONET. A scatter plot of the eigenvalues is shown in the top plot. Note that the real axis scale is much larger than the imaginary axis, so the imaginary parts of the eigenvalues are relatively small. The middle panel shows a histogram of the real parts of these eigenvalues. The histogram of the in-degrees shown at bottom closely matches that of the eigenvalues. Network statistics are: p = 0.1, N = 3000, αrecip = 0, αconv = αdiv = αchain = 0.5.

for a sample SONET. A scatter plot of the eigenvalues is shown in the top plot. Note that the real axis scale is much larger than the imaginary axis, so the imaginary parts of the eigenvalues are relatively small. The middle panel shows a histogram of the real parts of these eigenvalues. The histogram of the in-degrees shown at bottom closely matches that of the eigenvalues. Network statistics are: p = 0.1, N = 3000, αrecip = 0, αconv = αdiv = αchain = 0.5.

Given (6a), we can go one step further, and connect the synchronizability measure  directly with the network statistic αconv. Comparing (6a) to (13), we see that

directly with the network statistic αconv. Comparing (6a) to (13), we see that

where we have used the mean degree d of the network as a proxy for its expected value E(d). Hence, we expect a higher frequency of the convergent connection motif to decrease synchrony.

Stability of the Asynchronous State

The emergence of synchrony

An approach to perform a similar stability analysis for the completely asynchronous state of heterogeneous, noisy oscillators has been recently developed by Restrepo et al. (2006a,b). The asynchronous state is where the oscillators are as spread out evenly as though they were uncoupled (in a sense determined by the oscillator dynamics). The analysis begins with a coupled oscillator model similar to the one used in the master stability function approach (8):

where ξi(t) is a noise term and the model is specified by the functions F, H, and k. One notable difference in this model is that the oscillators can be heterogeneous since their dynamics depend on the parameter vectors ηi. Two important conditions of the analysis are that the parameters cannot be correlated with the network structure and that each neuron must receive many inputs.

For the asynchronous state to be an approximate solution of this system, the factor multiplying W must be approximately zero when the neurons are asynchronous. To satisfy this condition, the equation has an extra term 〈H(x)〉, which is the average of H(x) over the oscillators when they are spread over the asynchronous state. Since 〈H(x)〉 is just a constant vector, it is less problematic than the H(x i) of (8).

Restrepo et al. (2006a,b) analyze the coupling strength where synchrony just begins to emerge in the network (i.e., where the asynchronous state becomes unstable). Their key result is that this critical coupling strength depends on the network structure only through the largest eigenvalue λmax of the connectivity matrix W. Clearly, this coupling strength also depends on the oscillator models and the manner in which they are coupled, but these influences are a separate factor multiplied by λmax. Given two networks with different values of λmax but with neurons whose dynamics are governed by the same oscillator model, synchrony will emerge at a lower coupling strength for the network with the larger λmax. This separation of the oscillator dynamics from the network structure is in the same spirit as the master stability function analysis and allows us to incorporate λmax into our notion of the synchronizability of the network.

Linking emergence of synchrony to network topology

Restrepo et al. (2007) also derive approximate expressions for the largest eigenvalue λmax. If we ignore any correlation between the degrees of neighboring neurons, λmax is a simple function of the degree distribution  Given the relationship (6c) between αchain and the covariance of the degree distribution, the maximum eigenvalue can be written in terms of αchain:

Given the relationship (6c) between αchain and the covariance of the degree distribution, the maximum eigenvalue can be written in terms of αchain:

Hence, λmax will be nearly zero when αchain = −1 (its minimum value) and will increase linearly with αchain with slope equal to the mean degree.

Neuron Models

We simulated many neuron models to explore the extent to which our results were model-independent.

Kuramoto model

The Kuramoto model (Kuramoto, 1984), originally proposed by Yoshiki Kuramoto, is a network of continuously coupled oscillators with phase θi (modulo 2π),

where ω is the natural frequency and ξi(t) is white noise,

, which we scale by σ. We specify the connectivity strength S relative to the number of oscillators N and the connection probability p of the connectivity matrix W.

, which we scale by σ. We specify the connectivity strength S relative to the number of oscillators N and the connection probability p of the connectivity matrix W.

PRC model

The phase response curve networks are pulse-coupled networks, meaning that the effect of the pre-synaptic neuron on the post-synaptic neuron’s phase only occurs when the pre-synaptic neuron crosses the zero phase (modulo 2π), to simulate the effect of synaptic release at the time of an action potential. At the time of the action potential, the phases θi of the post-synaptic neurons are advanced according to their phase response curves fi(θi). If a neuron fires, it is unresponsive to any other inputs it receives at that same instant, but its phase remains at θ = 0. The PRC model

uses the same parameters as the Kuramoto model, except in some simulations we allow the natural frequency ωi to depend on neuron i.  is the time of kth spike of neuron j. The shape of the phase response curve fi is determined by choice of ai. c(ai) is chosen to make the maximum value of phase response curve fi be one. We make the average of ai be two to make PRCs that would promote synchrony in a network coupled through excitatory synapses.

is the time of kth spike of neuron j. The shape of the phase response curve fi is determined by choice of ai. c(ai) is chosen to make the maximum value of phase response curve fi be one. We make the average of ai be two to make PRCs that would promote synchrony in a network coupled through excitatory synapses.

Morris-Lecar model

The Morris-Lecar model is a reduced Hodgkin-Huxley like conductance based oscillator (Morris and Lecar, 1981) with two dynamic variables voltage V and channel inactivation n determined by the equations

where m∞(V)=[1+exp((V1/2,m−V)/km)]−1, n∞(V)=[1+exp((V1/2,m−V)/kn)]−1, and τn(V)=exp(−0.07V−3). The neurons are coupled by synapses that include a synaptic conductance waveform every time a pre-synaptic neuron fires, with the synaptic current Isyn,i(t)=gsyn(Si−fi)(Esyn−Vi) determined by the pair of dynamic equations  and

and  . Our model diverges from the original Morris-Lecar model, which used Ca++ rather than Na+, but essentially retains the same framework. We have also adjusted the model parameters as follows to make the resulting phase response curve look more realistic: C = 0.1 nF, gL = 800 pS, EL = −53.24 mV, gNa = 1.822 nS, ENa = 60 mV, gK = 400 pS, EK = −95.52 mV, V1/2,m = −7.37 mV, km = 11.97 mV, V1/2,n = −16.35 mV, kn = 4.21 mV, Esyn = 0 mV, τs = 0.5 ms, and σ = 20 pA. The synaptic conductance gsyn was varied in the individual simulations.

. Our model diverges from the original Morris-Lecar model, which used Ca++ rather than Na+, but essentially retains the same framework. We have also adjusted the model parameters as follows to make the resulting phase response curve look more realistic: C = 0.1 nF, gL = 800 pS, EL = −53.24 mV, gNa = 1.822 nS, ENa = 60 mV, gK = 400 pS, EK = −95.52 mV, V1/2,m = −7.37 mV, km = 11.97 mV, V1/2,n = −16.35 mV, kn = 4.21 mV, Esyn = 0 mV, τs = 0.5 ms, and σ = 20 pA. The synaptic conductance gsyn was varied in the individual simulations.

Spike Rate Normalization Using an Integral Controller

When neurons receive different numbers of synaptic inputs, the disparity in level of synaptic drive can cause neurons to fire at different rates. In some simulations, we desired to compensate for this effect to make the neurons have similar firing rates. Rather than attempt to numerically solve for the input currents to achieve a desired firing rate, we developed an auto-tuning network by implementing an integral controller that adjusts the input current for each neuron until it fires at the desired firing rate. The controller calculates the input current for each neuron at each firing from the error between the interspike interval and that of the desired rate: ek(j) = isik(j)−(desired rate)−1, where isik(j) is the kth neuron’s jth interspike interval. For all times t during the neuron’s j + 1st interspike interval, the current to neuron k is held at the constant  , where Ki is the integral feedback constant (Ogata, 2010). When neuron k spikes again, a new ek(j + 1) is calculated and added to the sum determining Ik(t). For our purposes, we set Ki = 1, which produced long transients, but results in stable network behaviors. Simulation were run to steady state with the integral controller on and then the current values were held constant (virtually identical results were obtained when controllers were left on through the entire simulation).

, where Ki is the integral feedback constant (Ogata, 2010). When neuron k spikes again, a new ek(j + 1) is calculated and added to the sum determining Ik(t). For our purposes, we set Ki = 1, which produced long transients, but results in stable network behaviors. Simulation were run to steady state with the integral controller on and then the current values were held constant (virtually identical results were obtained when controllers were left on through the entire simulation).

Measuring Synchrony with the Order Parameter

We use a simple quantification of network synchrony, the Kuramoto order parameter, to measure synchrony in the network simulations. The measure is based on defining a phase θj(t) of each neuron, which ranges from 0 to 2π to indicate the relative state of the neuron through its spiking cycle. For models that are not directly based on the phase, we define the phase as the time since the previous spike, normalized by the average period over the previous five spikes.

To calculate the order parameter, we represent the phase of each neuron by a vector on the unit circle at angle θj(t). The population vector is the average of these unit vectors. The order parameter is the length of the population vector (Strogatz, 2000)

where i =  and |·| represents the absolute value of the complex vector. The order parameter ranges between 0 and 1, with r = 1 representing perfect synchrony. The minimum value r = 0 occurs when the network is asynchronous and the phases are evenly distributed around the unit circle. However, r = 0 whenever the population is balanced around the circle, which could also occur when two or more equal clusters are evenly spread around the circle.

and |·| represents the absolute value of the complex vector. The order parameter ranges between 0 and 1, with r = 1 representing perfect synchrony. The minimum value r = 0 occurs when the network is asynchronous and the phases are evenly distributed around the unit circle. However, r = 0 whenever the population is balanced around the circle, which could also occur when two or more equal clusters are evenly spread around the circle.

Since we are looking at oscillating neurons, we obtain equivalent results if we measure spike count correlation rather than synchrony. As long as one counts spikes in bins sufficiently smaller than the oscillation period, the synchrony induces spike correlations.

Results

Overview

We examine the influence of network structure on the synchrony of neuronal networks. We generate networks using the framework of SONETs which allows us to systematically vary second order connectivity statistics, which are the frequency of reciprocal, convergent, divergent, and chain connections shown in Figure 1 (see Materials and Methods). SONETs extend the commonly used Erdoős–Rényi random network model (Erdoős and Rényi, 1959), retaining the feature of adding minimal structure beyond what is required to match the connectivity statistics.

The effect of the second order connectivity statistics underlying SONETs are illustrated by the small networks of Figure 3. The networks illustrate how, if one keeps the connection probability fixed, increased convergence or divergence arises from having some neurons with large in- or out-degree, respectively (Figures 3A,B). The networks have many chains when the large in-degree neurons also have large out-degree (Figure 3D), but they have few chains when neurons with large in-degree have small out-degree and neurons with large out-degree have small in-degree (Figure 3C).

Figure 3. Illustrations of networks with different connectivity statistics. All networks contain N = 9 neurons with Nconn = 22 connections, so that the connection probability is  . For each neuron, darker inner color indicates larger in-degree and darker outer color indicates larger out-degree. (A) Network with large convergence. There is large variance in in-degree. Nrecip = 4, Nconv = 42, Ndiv = 23, and Nchain = 45 so that

. For each neuron, darker inner color indicates larger in-degree and darker outer color indicates larger out-degree. (A) Network with large convergence. There is large variance in in-degree. Nrecip = 4, Nconv = 42, Ndiv = 23, and Nchain = 45 so that  ,

,  ,

,  , and

, and  . (B) Network with large divergence (the same network as in panel A, but with connections reversed). There is large variance in out-degree. Nrecip = 4, Nconv = 23, Ndiv = 42, and Nchain = 45 so that

. (B) Network with large divergence (the same network as in panel A, but with connections reversed). There is large variance in out-degree. Nrecip = 4, Nconv = 23, Ndiv = 42, and Nchain = 45 so that  ,

,  ,

,  , and

, and  . (C) Network with few chains. Neurons with large in-degree tend to have small out-degree, and neurons with large out-degree tend to have small in-degree. Nrecip = 2, Nconv = 33, Ndiv = 35, and Nchain = 29 so that

. (C) Network with few chains. Neurons with large in-degree tend to have small out-degree, and neurons with large out-degree tend to have small in-degree. Nrecip = 2, Nconv = 33, Ndiv = 35, and Nchain = 29 so that  ,

,  ,

,  , and

, and  . (D) Network with many chains. Neurons with large in-degree tend to have large out-degree. Nrecip = 6, Nconv = 33, Ndiv = 33, and Nchain = 65 so that

. (D) Network with many chains. Neurons with large in-degree tend to have large out-degree. Nrecip = 6, Nconv = 33, Ndiv = 33, and Nchain = 65 so that  ,

,  ,

,  , and

, and  .

.

The analyses of perfect synchrony and asynchrony (see Materials and Methods) predict that two second order statistics of connectivity should play key roles in determining synchrony. First, increasing the relative frequency of the convergent connection motif (increasing αconv) should decrease synchrony, as it pushes the network state further from complete synchrony. Second, increasing the relative frequency of the chain connection motif (increasing αchain) should increase synchrony, as it decreases the stability of the asynchronous state.

We demonstrate in simulations that the frequency of chains and convergent motifs can influence network synchrony even when a network is not at or near the extremes of perfect synchrony and asynchrony. We present the results in four parts. First, we generate SONETs with a range of second order connectivity statistics and test the predictions of the analysis through simulations of a simple neuron model. Second, to demonstrate that these results can be explained by the analysis, we confirm that critical eigenvalue quantities from the analysis do have the predicted relationships with the network statistics. Third, to assure that our findings are not model dependent, we test networks with several different single neuron models. Fourth, we test the results with a larger range of networks to evaluate the influence of higher order network statistics on the predictions based on second order statistics.

Synchrony Determined by Second Order Connectivity Statistics

To test the effects of the second order connectivity statistics on synchrony, we generate 186 SONETs with N = 3000 neurons and 10% connectivity (p = 0.1). In addition to using the standard Erdoős–Rényi network (all α’s zero), we randomly sample the second order connectivity statistics in the ranges αrecip ∈ [−1,4], αconv ∈ [0,1], αdiv ∈ [0,1], and αchain ∈ [−1,1]. These ranges are chosen to include the α’s used to reproduce the motif spectrum obtained from neuronal networks published by Song et al. (2005): (αrecip, αconv, αdiv, αchain)≈(3, 0.4, 0.3, 0.2). As hinted by (6c), the valid range of αchain depends on the αconv and αdiv (Zhao et al., in preparation). We maximize its range by sampling some networks where αchain is set to its maximum and minimum value conditioned on αconv and αdiv.

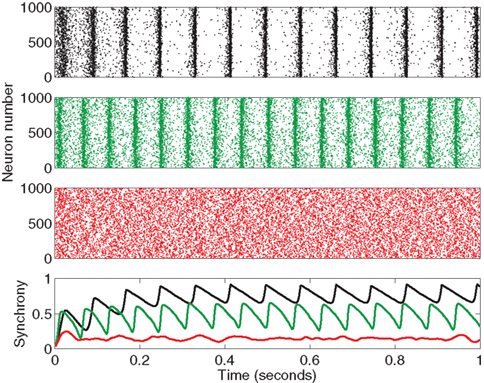

We measure synchrony in network simulations of 3000 pulse-coupled PRC neuron models (17) in these 186 SONETs. Three example networks are shown in Figure 4. For each network, we calculate synchrony measured by the Kuramoto order parameter (19) as a function of time, and calculate its average once the network reaches steady state.

Figure 4. Sample output from the beginning of three network simulations with the PRC model (17). Top three panels are rastergrams showing the spikes of 1000 neurons (out of 3000 in each network) for the first second of time. The bottom panel shows synchrony measured by the Kuramoto order parameter (19) calculated as a function of time, where colors correspond to the associated rastergrams. The networks were chosen to illustrate the range of observed synchrony. The top network (in black) is the Erdoős-Rényi random network (αrecip = αconv = αdiv = αchain = 0) which reached high synchrony (steady state r = 0.8). The middle network (in green) that reached moderate synchrony (r = 0.5) was generated from the second order connectivity statistics αrecip = −0.2, αconv = 0.7, αdiv = 0.6, αchain = 0.6. The bottom network (in red) that stayed fairly asynchronous (r = 0.1) was generated from αrecip = 0.1, αconv = 0.9, αdiv = 0.9, αchain = −0.6. For all networks, average connectivity was p = 0.1. PRC model parameters were S = 6, σ = 3, ai = 2, and ωi = 60 for all neurons i.

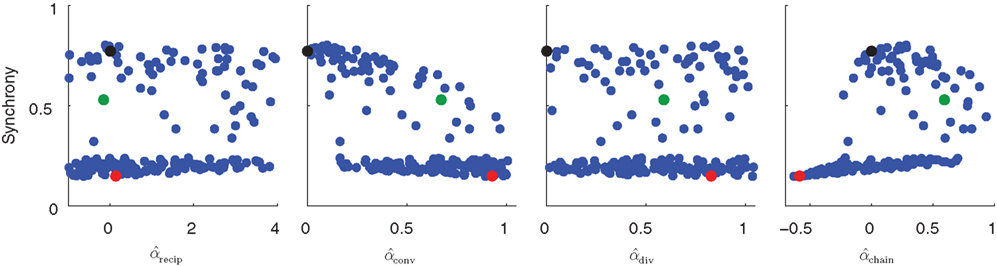

In Figure 5 the steady state synchrony is plotted against the measured second order statistics α calculated by (3). No simple relationship between any single connectivity statistic and synchrony is obvious, though it appears the Erdoős–Rényi network (black) is among the most synchronous. There appears to be a bimodal distribution of synchrony, suggesting that synchrony rapidly jumps up when some threshold is crossed.

Figure 5. Scatter plots of steady state synchrony measured by the order parameter (19) as a function of individual connectivity statistics for the 186 sampled SONETs. For each panel, each dot corresponds to one network. Black, green, and red dots correspond to the networks simulated in Figure 4. Estimates of second order connectivity statistics  are determined from each network connectivity matrix W via (3). PRC model parameters were S = 6, σ = 3, ai = 2 and ωi = 60 for all neurons i.

are determined from each network connectivity matrix W via (3). PRC model parameters were S = 6, σ = 3, ai = 2 and ωi = 60 for all neurons i.

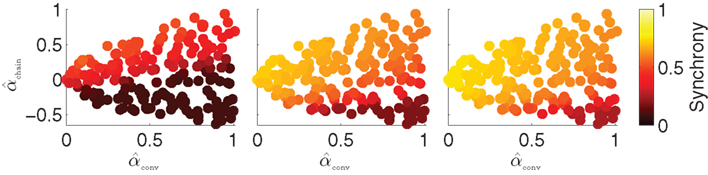

When synchrony is plotted against pairs of connectivity statistics, as shown in Figure 6A, the pattern of dependence on connectivity emerges. The synchrony seems to be a function of (αconv, αchain) alone, as the level of synchrony varies more or less smoothly in the (αconv, αchain) projection (lower left of Figure 6) despite the fact that αrecip and αdiv are varying widely across the whole plot. For a given value of αconv, there appears to be a threshold of αchain above which synchrony jumps up, supporting the trend of higher synchrony with αchain predicted by our analysis. Similarly, synchrony jumps down at a threshold value of αconv for a given value of αchain.

Figure 6. (A) Synchrony plotted as a function of pairs of connectivity statistics. The simulation results of the PRC model over the 186 SONETs (Figure 5) are replotted as a function of each pair of second connectivity statistics α estimated from the connectivity matrix using (3). Each dot corresponds to one network, and color indicates steady state synchrony measured by the order parameter (19). Synchrony varies smoothly in the graph with respect to αchain and αconv but not in the other graphs. (B) Average firing rate as a function of αchain and αconv for the same networks as in (A).

The connectivity statistics change the average firing rate of the network, as shown in Figure 6B. Little difference in synchrony is observed if we adjust the intrinsic frequency ω for each network to keep the average firing rate fixed (not shown). We explore below the effect of fixing not only the firing rate averaged across each network, but also making the firing rate of each neuron be the same.

Note the dependence of the valid range of αchain on the other statistics (bottom row of Figure 6). The relationship between αconv/αdiv/αchain and the variances/covariance of the in- and out-degree distribution shown in (6) implies that the valid range of αchain increases with αconv and αdiv (left two panels of last row of Figure 6). The range of αchain is also affected by αrecip (lower-right panel of Figure 6). The dependence of the range of αchain and the other statistics creates the appearance of spurious correlations between synchrony and αrecip/αdiv in the top two rows of Figure 6.

Eigenvalue Analysis

The influence of the connectivity statistics on synchrony was predicted based on analysis of eigenvalues of the network connectivity matrix W and Laplacian matrix L (see Materials and Methods). An analysis of the asynchronous state predicts synchrony will increase with the largest eigenvalue λmax of the connectivity matrix, which should increase linearly with αchain [see (15)]. An analysis of the synchronous state predicts synchrony will decrease as the normalized variance  (10) of the Laplacian eigenvalues increases, which should be nearly equal to αconv [see (14)].

(10) of the Laplacian eigenvalues increases, which should be nearly equal to αconv [see (14)].

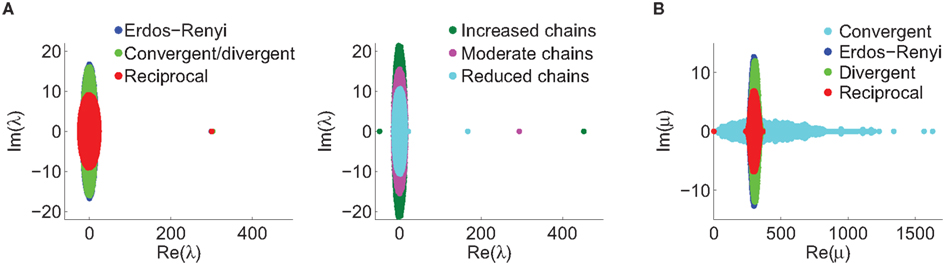

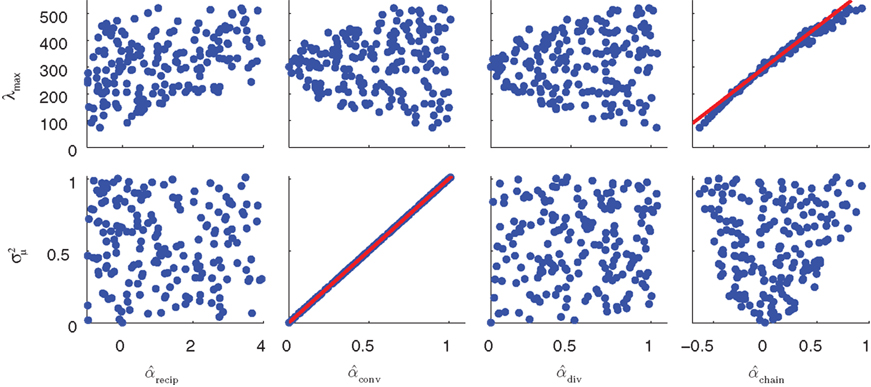

In Figure 7, sample spectrum from a few connectivity matrices demonstrate these trends: λmax increases with αchain, and only αconv modulates the variance  of the eigenvalues of the Laplacian L. These observations are confirmed by the plots of λmax and

of the eigenvalues of the Laplacian L. These observations are confirmed by the plots of λmax and  versus each connectivity statistic for the 186 generated networks (Figure 8). The right top panel shows how λmax is nearly completely determined by αchain according to the relationship (15) plotted in red. The second panel in the bottom row of Figure 8 shows that the normalized variance

versus each connectivity statistic for the 186 generated networks (Figure 8). The right top panel shows how λmax is nearly completely determined by αchain according to the relationship (15) plotted in red. The second panel in the bottom row of Figure 8 shows that the normalized variance  of the eigenvalues is nearly identical to the αconv and follows the relationship (14) plotted in red. The other relationships between the eigenvalues and connectivity statistics simply reflect how the range of sampled values of αchain depend on the other statistics, as seen in Figure 6.

of the eigenvalues is nearly identical to the αconv and follows the relationship (14) plotted in red. The other relationships between the eigenvalues and connectivity statistics simply reflect how the range of sampled values of αchain depend on the other statistics, as seen in Figure 6.

Figure 7. Spectra of connectivity matrices and Laplacian matrices for sample networks. All α’s not mentioned were zero. (A) Left: Eigenvalues for the Erdoős–Rényi network (blue) are mainly occluded by the spectra of the other matrices. Eigenvalues for convergent and divergent networks were identical. Non-zero parameters: αconv = 0.5 (convergent), αdiv = 0.5 (divergent), αrecip = 4 (reciprocal). Right: Largest eigenvalue λmax increases linearly with αchain. All three networks had substantial convergence and divergence (αconv = αdiv = 0.5) to allow large αchain. Parameters: αchain = 0.5 (increased chains), αchain = 0 (moderate chains), αchain = −0.4 (reduced chains). (B) Eigenvalues of the Laplacian for the networks from the left panel of A. The spread of the eigenvalues remains relatively unchanged with different frequencies of chains (not shown).

Figure 8. The distribution of key eigenvalue quantities as function of connectivity statistics for the 186 sampled SONETs. In each panel, each dot corresponds to one of the networks. Red line in top right panel is predicted dependence (15) of largest eigenvalue λmax on αchain. Red line in the second panel of the second row is relationship (14) between the normalized variance  of the eigenvalues of the Laplacian and αconv.

of the eigenvalues of the Laplacian and αconv.

The tight relationships between αchain and λmax and  between αconv and confirm that the influence of these two connectivity statistics on synchrony is indeed through their influence on the key spectral properties of the connectivity matrix W and Laplacian matrix L.

between αconv and confirm that the influence of these two connectivity statistics on synchrony is indeed through their influence on the key spectral properties of the connectivity matrix W and Laplacian matrix L.

Dependence of Synchrony on Single Neuron Model

The synchrony analysis, which was based around the completely synchronous and asynchronous states, predicts that the effect of topology on synchrony should be independent of the neuron model. Since these analyses were based on linearizations around those states, there is no guarantee those predictions will apply to other networks states, such as intermediate levels of synchrony. Non-linearities in neuron models could lead to model-dependent deviations from those predictions.

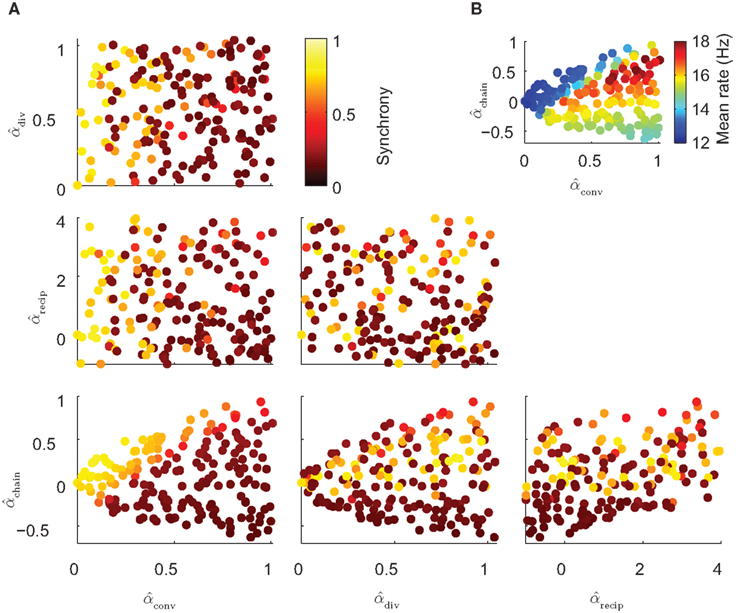

We test for model-dependence of the results using the three neuron models described in Methods. The simplest model is the Kuramoto oscillator (16). Second, we run additional simulations with pulse-coupled PRC oscillator models (17) under two conditions: one with noise as in Figure 6 and the other with heterogeneity in the model parameters. The third model is a simple conductance based neuronal model based on the Morris-Lecar model (18). We run all models on the above 186 SONETs and observe how synchrony is affected by changing the coupling strength.

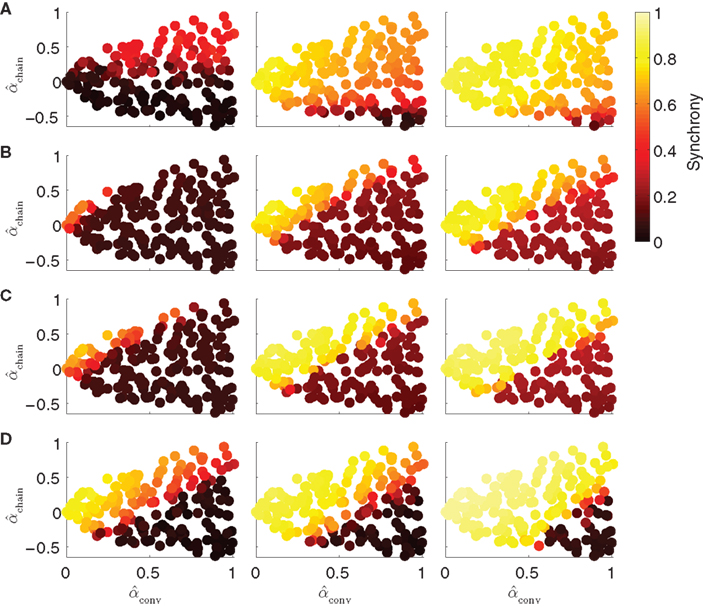

The results shown in Figure 9 demonstrate that for each model, the influence of the network structure on synchrony is indeed determined only by αconv and αchain, as in each case, synchrony appears to be a function of those parameters. The relative influence of αconv and αchain does vary across models. For the Kuramoto model, synchrony is more strongly influenced by αchain than αconv (Figure 9A). For the noisy homogeneous PRC model, the result from Figure 6 is maintained at different connectivity strengths, as both convergence and chains strongly modulated the synchrony (Figure 9B). The noise-free heterogeneous PRC model has a similar dependence on the connectivity parameters (Figure 9C); replacing noise with variability across the population in intrinsic frequency and PRC shape only alters the connectivity strength at which synchrony emerges. The Morris-Lecar model (Figure 9D) has a similar dependence on αchain and αconv as the PRC models.

Figure 9. Dependence of synchrony on model and coupling strength. Each row corresponds to a different neuron model, and coupling strength increases with column. Panels as in Figure 6A. (A) Kuramoto model (16) with coupling strengths S = 1, 2, and 3. (B) PRC model (17) with homogeneous parameters as in Figure 5. Coupling strengths were S = 3, 6, and 9, so that middle panel is the same as lower left of Figure 6. (C) Noise-free (σ = 0) PRC model (17) with heterogeneous parameters: ai was drawn from a Gaussian of mean 2 and SD 0.2; ωi was drawn from a Gaussian of mean 60 and SD 6. Coupling strengths were S = 3, 6, and 9. (D) Morris-Lecar model (18) with coupling strengths gsyn set to 20, 40, and 400 pS.

In all cases, increasing αchain or decreasing αconv tends to increase synchrony. The increase in synchrony appears to be concentrated at an effective threshold line through the (αconv, αchain) plane. Whether a particular network will synchronize or not depends on many factors, but relative changes in αconv or αchain affect synchrony in the same way. Since the effects of changing these two connectivity statistics combine in a model-dependent manner, we obtain a two-dimensional index (αconv, αchain) for the synchronizability of a network.

Synchrony with Other Networks

All the above simulations were based on SONETs of N = 3000 neurons where we fixed the first order connectivity statistics (p = 0.1) and varied the second order connectivity statistics α. We did not separately manipulate higher order connectivity statistics, as the definition of SONETs does not add additional structure into the connectivity beyond that determined by the second order statistics. To explore how well αconv and αchain determine synchrony under a wider range of networks, we simulate networks with different first order statistics and networks with different higher order statistics.

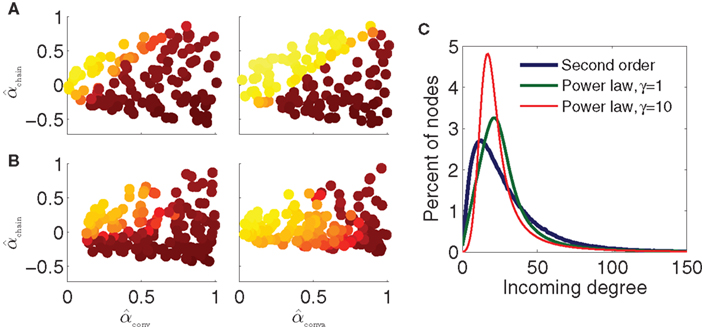

To test the effect of changing first order statistics, we decrease the connection probability by a factor of 10 to p = 0.01. We generate 130 SONETs over the same ranges of α as the original p = 0.1 networks and simulate the PRC model of Figure 9B. As shown in the left panel of Figure 10A, the results are similar to those with p = 0.1. We also increase the network size to N = 10000 and set p = 0.03 so that the average degree p(N − 1) is approximately the same as in the original networks. Simulations from 130 SONETs demonstrate that αconv and αchain determine synchrony in the same manner as before (Figure 10A, right).

Figure 10. Dependence of synchrony on first order connectivity statistics and higher order connectivity statistics. All simulations use the PRC model and coupling strengths S = 6 of the middle panel of Figure 9B. Note that changing p or N changes the effective coupling S/(pN) in the PRC model (17). Pseudocolor scale indicates synchrony as in Figure 9. (A) SONETs with different first order statistics. Left: sparse networks with N = 3000 neurons and connection probability p = 0.01. Right: large networks with N = 10000 neurons and connection probability p = 0.03. (B) Scale-free networks with N = 3000 neurons and connection probability p = 0.01. Rising exponents of degree distribution (7) γin/out were set to 1 (left) and 10 (right). For left panel, the minimum attainable value of αconv and αdiv was 0.1. Maximum degrees: bin = bout = 300. (C) Incoming degree distributions for networks with p = 0.01 and αconv = 0.7. The degree distributions are smoothed versions of the distribution of expected degree (7) as actual connections are generated randomly.

To manipulate higher order connectivity statistics of the network, we generate networks with power law degree distributions, i.e., with scale-free connectivity. The algorithm for generating the power law networks is described in Materials and Methods. The power law degree distribution has different higher order statistics from the degree distribution of SONETs, for fixed first (p) and second (α) order statistics. By comparing power law networks with SONETs that have the same first and second order statistics, we can evaluate the effect of the higher order statistics.

We exploit relationships (6) between the α’s and the degree distribution to generate networks across a range of second order connectivity statistics. We vary the power law exponents of the in-degree and out-degree distributions (7) independently to allow αconv and αdiv to range in (0,1). To maximize the range of αchain, we let the correlation between in-degree and out-degree range in (−1,1).

We generate power law networks with N = 3000 neurons and average connectivity p = 0.01. We first generate 160 such power law networks where we set the rising exponents of the degree distributions (7) to γin = γout = 1 so that the degree distributions initially increase linearly as shown by the green trace of Figure 10C. Synchrony for the PRC model and these power law networks is shown in Figure 10B, left. Even for these scale-free networks, synchrony does appear to be a function of just αconv and αchain, as the synchrony varies smoothly in the (αconv, αchain) projection as before. For the most part, the same qualitative trends persist that were predicted by the synchrony analysis and were observed in the SONETs; synchrony tends to increase with αchain and decrease with αconv. It is clear, though, that the higher order connectivity statistics are modifying the synchrony. The synchrony pattern as a function of second order connectivity statistics in the left panels of Figures 10A,B are not identical despite the model and first order connectivity statistics being the same.

If we increase the steepness of the rise of the degree distribution by setting γin = γout = 10 (red trace of Figure 10C), the dependence of synchrony on the α changes dramatically (Figure 10B, right). Synchrony is still a function of just (αconv, αchain), but for larger αconv, synchrony no longer increases monotonically with αchain. Although the eigenvalue λmax still has the same linear relationship (15) with αchain, this eigenvalue no longer accurately predicts the emergence of synchrony according to the analysis of the stability of the asynchronous state.

We found large values of γin also lead to a non-monotonic dependence of synchrony on αchain for other degree distribution parameters. We hypothesize that this breakdown of the asynchronous state analysis is caused by the presence of many neurons with small incoming degree. One assumption of the stability analysis of the asynchronous state is that all neurons receive many inputs. When γin is large, the number of neurons with small incoming degree (red trace of Figure 10C) is large enough to invalidate the analysis. The results indicate that the moderate number of neurons with even smaller incoming degree in the SONETs (blue trace of Figure 10C) does not invalidate the conclusions of the analysis.

Heterogeneity due to Convergence

Since increasing αconv increases the variance of the in-degree distribution, neurons in networks with high αconv will have large variability in the number of synaptic connections that they receive. This heterogeneity in the input strength will lead to heterogeneity in the firing rates across neurons in the network, thus decreasing synchrony (Chow, 1998; Denker et al., 2004). We investigate whether or not this heterogeneity is the only mechanism by which increasing αconv decreases synchrony.

We repeat the simulation of the noisy PRC model on the SONETs (Figure 9B), where we virtually eliminate the variance in firing rates across neurons by adjusting the intrinsic frequency ωi of each neuron to make its average firing rate be close to 10 Hz. Rather than attempting to solve for the 3000 ωi, we use an integral controller to creating an auto-tuning network that adjusts the ωi (see Materials and Methods, where we refer to ω more generically as an input current I). Using this approach, we can reduce the SD in the firing rate across neurons in each network to below 0.1 (compared to firing rate SD than ranged from 0.1 to upward of 10 for the high αconv networks of Figure 9B).

As shown in Figure 11, the effect of αconv is greatly reduced. Synchrony decreases only slightly with increased αconv. The threshold value of αchain where synchrony increases rapidly with αconv depends only weakly on αconv. The maximum value of synchrony for any αchain does still decrease with αconv. This residual effect of αconv cannot be explained by its effect on the heterogeneity of firing rates, as we have eliminated that heterogeneity.

Figure 11. Dependence of synchrony on αconv and αchain when firing rate rate heterogeneity is eliminated. Simulations of Figure 9B were repeated where the ωi were adjusted by an integral controller to fix the firing rate of each neuron at 10 Hz. The panels correspond to coupling strengths S=3, 6, and 9 of the PRC model (17).

Discussion

Linking Synchrony to Network Motifs

In this paper we present a framework to relate network connectivity to synchrony in homogeneous neuronal networks. We define network structure through the frequency of second order motifs, which form the basis for four second order statistics of connectivity. These second order connectivity statistics define conditional probabilities of connections between neurons, that is, how a connection from one neuron to a second neuron affects the the probability of (1) a synapse back onto the first neuron (reciprocal), (2) the second neuron receiving inputs from a third neuron (convergent), (3) the first neuron sending out connections to a third neuron (divergent), or (4) either the first neuron receiving inputs or the second neuron sending out connections (chain), as illustrated in Figure 1.

The main result of this paper is that the primary influence of second order network connectivity on synchrony is through the chain and convergent connection motifs. We obtain a two-dimensional index (αconv, αchain) of synchronizability that captures the effect of the network structure on the synchrony of the neuronal network. Synchrony tends to increase with the relative frequency of chains and decrease with the relative frequency of convergence, where the majority of this change in synchrony occurs abruptly along a line in (αconv, αchain) parameter space that depends on connectivity strength and the neuron model. There is little dependence of synchrony on divergence or reciprocal connections. The result was robust to changes in first order connectivity statistics or network size.

The value of the synchronizability index (αconv, αchain) stems from the fact that the influence of these network structures on synchrony was similar for a wide variety of neuron models. While there were model specific differences in the simulations, the qualitative dependency of synchrony on the proportion of chains and convergent connections in the networks was the same for most single neuron models and network models. Since the influence of chains and convergence on synchrony was predicted from linearizations around synchrony and asynchrony, it is surprising how well those statistics determined the level of synchrony over the whole range of synchrony. We expect that these linear predictions will not hold for very complicated neuron models, especially with strong coupling. The predictions did break down for networks where many neurons received few inputs, as we discovered parameter regimes where synchrony decreased with αchain.

One result of the two-dimensional synchronizability index is that one cannot arrange networks in order of increasing synchronizability, and hence it may be impossible to predict if some network changes will increase or decrease synchrony without knowing the neuron model. If one network has both a higher αchain and αconv than a second network, it may be that either network may elicit higher synchrony, depending on the neuron model. For example, the Erdoős–Rényi network with all α’s zero usually synchronized better than other networks, even those with higher αconv and αchain. However, this trend was not model-independent, as we observed some exceptions for low connectivity strength with the Kuramoto model (Figure 9A) or when we eliminated the firing rate heterogeneity caused by positive αconv (Figure 11).

The Decrease of Synchrony with Convergence

The decrease of synchrony with convergence is not surprising, given that the convergence is correlated with the variance of the in-degree distribution (6a) and therefore results in increased heterogeneity in firing rates. The effect of this in-degree heterogeneity can be reduced by making the synaptic strengths inversely proportional to the in-degree as done by Motter et al. (2005) or by adjusting the current to each cell so that the firing rates are the same, as we have done in our auto-tuned networks. Reducing the heterogeneity greatly reduced the effect of αconv on synchrony but some residual effect of αconv on synchrony remained indicating higher order effects of αconv on synchrony that cannot be accounted for by firing rate heterogeneity alone.

At first glance, the decrease of synchrony with αconv seems to stand in contradiction of recent results by Rosenbaum et al. (2010), where they find that the build up of synchrony in feedforward networks is primarily due to the level of convergence in the network. They show that when neurons receive many inputs (have high din), any small correlation among their inputs is amplified. This pooling of many inputs averages out independent fluctuations but not the correlations. Thus, increasing convergence increases correlations and synchrony. The apparent contradiction is resolved by observing that Rosenbaum et al. (2010) change the level of convergence by modifying the first order statistics of connectivity rather than the second order statistic αconv. They do not introduce the heterogeneity of increased in-degree variance.

Independence of Synchrony from Common Input

One surprising result is that the synchronizability of a network appears to be unaffected by the relative frequency of divergent connection motifs as captured by αdiv. Intuitively, one would imagine that the common input represented by the divergent connection motif would tend to synchronize neurons, as the common input leads to correlations in their inputs (Shadlen and Newsome, 1998). In feedforward networks, such correlations are amplified and can lead to synchrony in deeper layers of the network (Diesmann et al., 1999; van Rossum et al., 2002; Reyes, 2003; Tetzlaff et al., 2003; Liu and Nykamp, 2009).

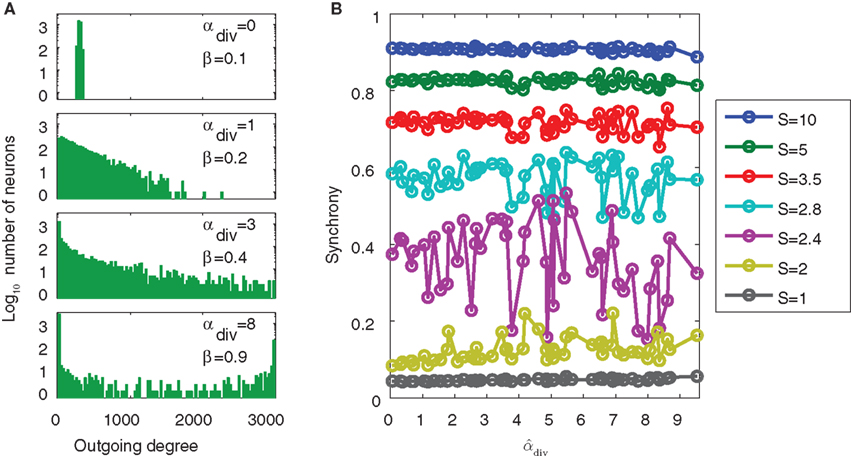

To see the relationship between αdiv and shared input, define β to be the fraction of shared input, i.e., the ratio between the expected number of shared connections between a pair of neurons and the expected number of connections to a single neuron. For SONETs, we can calculate from (2) how β depends on divergence

where the last approximation is in the limit of large N.

As an extreme test to see if the common input determined by αdiv would lead to increased synchrony, we generated SONETs with connection probability p = 0.1 where αdiv varied over its full range αdiv ∈ [0, 1/p − 1] = [0, 9], leading to shared input in the range β ∈ [0.1, 1]. Such large values of αdiv are not realistic, as shown by the out-degree distributions of Figure 12A. When αdiv is near its maximum, the out-degrees are nearly all zero or N − 1: most neurons do not have any projections to others neurons while a small fraction (around pN) project to nearly every other neuron. Nonetheless, we wanted to test to see if such extreme cases, where neurons share almost all of their pre-synaptic connections, would lead to higher synchrony. However, as shown in Figure 12B, even large values of αdiv had little influence on synchrony. An ensemble average over many networks does unmask a small increase of synchrony with αdiv (not shown), but this effect is dwarfed by increases in synchrony due to αchain.

Figure 12. Demonstration of the lack of influence of divergent connections on synchrony. Each network was a SONET of N = 3000 neurons with p = 0.1 and αrecip = αconv = αchain = 0. (A) Sample outgoing degree distributions demonstrate how large values of αdiv are unrealistic. For αdiv = 8, nearly 2500 neurons have no projections and over 140 neurons project to all other neurons. (B) For each of 50 networks with different values of αdiv, the PRC model (17) was simulated using 7 different coupling strengths S. Since the same networks are used, fluctuations due to the particular network structure are similar for each connectivity strength. Values of  can exceed the theoretical maximum of 1/p − 1 = 9 as they are estimates from the generated connectivity matrix using (3). PRC model parameters are the same as used in Figure 9B.

can exceed the theoretical maximum of 1/p − 1 = 9 as they are estimates from the generated connectivity matrix using (3). PRC model parameters are the same as used in Figure 9B.

For our networks, we obtain similar results if we measure spike correlations rather than synchrony (see Materials and Methods). Other researchers have also found that high common input may not necessarily lead to large spike correlations. Roxin (2011) found that changing common input by manipulating the outgoing degree distribution typically had little impact on correlations in networks of excitatory and inhibitory neurons. Renart et al. (2010) similarly showed that common input had little influence on spiking correlation, but since they used Erdoős–Rényi networks where β≈p, they could not isolate changes in common input β from changes in the first order connection probability p. Since our networks do not have inhibition, our results cannot be explained by the opposing effect of inhibition canceling out correlations in input currents (Renart et al., 2010). Instead, we are left to conclude that in these recurrent networks, as opposed to feedforward networks, common input due to divergence only weakly influences correlation, when it is not combined with convergence and chains.

The Increase of Synchrony with Chains

Instead of increasing with common input determined by αdiv, synchrony increases dramatically with chains in the network. This result is consistent with the recent work by LaMar and Smith (2010), who investigated how correlation between in-degree and out-degree decreases the time until complete synchrony in noiseless pulse-coupled PRC models.

We hypothesize that the primary influence of chains is that they increase the effective coupling strength of the network. This effect can be seen by the linear dependence of the largest eigenvalue in (15). Intuitively, one can think that signals are propagated along chains in the network, so that increasing the number of chains increases the effect of the connectivity. When αchain is large, neurons with large in-degree tend to have a large out-degree; since in this case, neurons that listen to more neurons tend to talk to more neurons, communication within the network is enhanced. Thus, synchrony emerges at a lower connectivity strength when αchain is increased.

The analysis of the influence of chains can be extended to networks that include inhibition in order to investigate interactions within and between inhibitory and excitatory populations. We plan to study such heterogeneous SONETs to examine the effect of chains involving different patterns of excitatory and inhibitory neurons. We hypothesize that these chains will play a critical role in determining how synchrony is amplified or suppressed.

The frequency of chains in the network may have additional effects beyond the effective coupling strength that contribute to synchrony. For example the influence of chains on loops might play an additional role, as increasing αchain increases the frequency of short loops in the network (Bianconi et al., 2008).

Higher Order Network Structure

In the SONETs, the synchronizability of the networks was determined by the second order connectivity statistics of convergence and chains. However, higher order network structure can also influence synchronizability. We introduced higher order connectivity statistics of one particular type: the additional structure contained in the power law in-degree and out-degree distributions of scale-free networks. Adding this higher order structure did alter the synchrony, even if we kept the second order connectivity statistics fixed. This simple manipulation demonstrated that knowing the second order connectivity statistics may not be sufficient to fully know the effect of the network on synchrony. Nonetheless, within a particular class of networks, we did see the same qualitative dependence of synchrony on the second order statistics, where only convergence and chains had an influence. As long as there weren’t too many neurons that received few inputs, synchrony tended to increase with chains and decrease with convergence as it did with the SONETs.

The differences observed from the in-degree distribution of the power law networks could likely be captured by adding one-third-order connectivity statistic: the probability of three connections onto a single neuron, which would presumably be linked to the skew of the in-degree distribution in analogy to (6). However, there are many more third-order connection motifs (12 in all) so that dipping into third-order statistics would greatly increase the complexity. We expect, though, that only a subset of those third-order statistics would influence synchrony.

Other higher order network statistics that have captured a lot of attention are the clustering coefficient and mean path length often examined in the context of small world networks Watts and Strogatz (1998). A number of studies have looked at the influence of network connectivity on neuronal activity and synchrony in the context of small world models (Netoff et al., 2004; Kitano and Fukai, 2007; Kriener et al., 2009). In the classical small world models, all the second order network statistics α are zero except αrecip, so one cannot observe the effect of αconv or αchain in these models. For the networks parameters we used, the influence of these second order statistics on synchrony was substantially larger than the influence of the small world rewiring parameter.

While the brain may have higher order connectivity statistics, there are several advantages to using second order statistics to describe a network. In general, lower order statistics require less data to estimate than do higher order statistics; it may be possible to estimate the five SONET parameters from experimental data but harder to estimate the higher order statistics. Furthermore, higher order statistics should be compared to the null hypothesis that they can be explained by the second order statistics observed. Finally, the low-dimensional framework of SONETs provides a mechanism for systematically exploring a wide range of network structures and quantifying their differences.

Conclusion

In the current study, SONETs facilitated our discovery that influence of network structure on the level of synchrony in a network of homogeneous excitatory neurons is primarily mediated through the frequency of convergent and chain connections. Given this analysis, one can predict how modification of specific connections between neurons in the network affects synchrony. By identifying the critical network structures of convergent and chain connections, one can focus efforts to identify structural changes that may play a role in enhancing or reducing susceptibility to different network states.

One application of this theory may be to understand how changes in neuronal wiring leads to pathological population activity such as abnormal synchrony. Second order statistics may provide a formalism by which to interpret changes in the network structure that may occur in a diseased state and how these changes affect network synchrony.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Michael Buice, Chin-Yueh Liu, and Ofer Zeitoni for helpful discussions on the developments of the theory. We thank Alex Roxin for a careful reading of the manuscript. This research was supported by the University of Minnesota’s Grant-in-aid (Theoden Netoff and Bryce Beverlin II) and National Science Foundation grants DMS-0719724, DMS-0847749 (Duane Q. Nykamp), and CBET-0954797 (Theoden Netoff).

Footnote

- ^If we want the expected value of the mean degree E(d)=(N−1)p to remain finite in this limit, then p must scale like 1/N, so terms such as p and Np2 go to zero as N increases.

References

Abbott, L. F., and van Vreeswijk, C. (1993). Asynchronous states in networks of pulse coupled oscillators. Phys. Rev. E Stat. Phys. Plasmas Fluids Relat. Interdiscip. Topics 48, 1483–1490.

Arenas, A., Díaz-Guilera, A., Kurths, J., Moreno, Y., and Zhou, C. (2008). Synchronization in complex networks. Phys. Rep. 469, 93–153.

Axmacher, N., Mormann, F., Fernández, G., Elger, C. E., and Fell, J. (2006). Memory formation by neuronal synchronization. Brain Res. Rev. 52, 170–182.

Bergman, H., Feingold, A., Nini, A., Raz, A., Slovin, H., Abeles, M., and Vaadia, E. (1998). Physiological aspects of information processing in the basal ganglia of normal and parkinsonian primates. Trends Neurosci. 21, 32–38.

Bianconi, G., Gulbahce, N., and Motter, A. E. (2008). Local structure of directed networks. Phys. Rev. Lett. 100, 118701.

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., and Hwang, D.-U. (2006). Complex networks: structure and dynamics. Phys. Rep. 424, 175–308.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comp. Neurosci. 8, 183–208.

Cavazos, J. E., Golarai, G., and Sutula, T. P. (1991). Mossy fiber synaptic reorganization induced by kindling: time course of development, progression, and permanence. J. Neurosci. 11, 2795–2803.

Chow, C. C. (1998). Phase-locking in weakly heterogeneous neuronal networks. Physica A 118, 343–370.

Chung, F., and Lu, L. (2002). Connected components in random graphs with given expected degree sequences. Ann. Comb. 6, 125–145.

Denker, M., Timme, M., Diesmann, M., Wolf, F., and Geisel, T. (2004). Breaking synchrony by heterogeneity in complex networks. Phys. Rev. Lett. 92, 074103.

Diesmann, M., Gewaltig, M. O., and Aertsen, A. (1999). Stable propagation of synchronous spiking in cortical neural networks. Nature 402, 529–533.

Ermentrout, G. B., and Kopell, N. (1991). Multiple pulse interactions and averaging in systems of coupled neural oscillators. J. Math. Biol. 29, 195–217.

Hansel, D., Mato, G., and Meunier, C. (1995). Synchrony in excitatory neural networks. Neural Comput. 7, 307–337.

Hansel, D., and Sompolinsky, H. (1992). Synchronization and computation in a chaotic neural network. Phys. Rev. Lett. 68, 718–721.

Hoffman, A. J., and Wielandt, H. W. (1953). The variation of the spectrum of a normal matrix. Duke Math. J. 20, 37–39.

Holmgren, C., Harkany, T., Svennenfors, B., and Zilberter, Y. (2003). Pyramidal cell communication within local networks in layer 2/3 of rat neocortex. J. Neurophysiol. 551, 139–153.