Network-based statistics for a community driven transparent publication process

- 1Faculty of Psychology and Neuroscience, Department of Cognitive Neuroscience, Maastricht University, Maastricht, Netherlands

- 2Maastricht Brain Imaging Center (M-BIC), Maastricht University, Maastricht, Netherlands

- 3Department of Neuroimaging and Neuromodeling, Netherlands Institute for Neuroscience, an Institute of the Royal Netherlands Academy of Arts and Sciences (KNAW), Amsterdam, Netherlands

The current publishing system, with its merits and pitfalls, is a recurring topic of debate among scientists of various disciplines. Editors and reviewers alike face difficult decisions when judging new scientific findings. Increasingly interdisciplinary themes and rapidly changing methods in each field make it difficult to be an “expert” on all issues of a given paper. Although unintended, it is likely that misunderstandings, human biases, and even outright mistakes play an unfortunate role in final verdicts. We propose a new community-driven publication process that is based on network statistics to make the review, publication, and scientific evaluation process more transparent.

From an idealistic point of view, scientists aim to publish their work in order to communicate relevant findings. If we could rely on our own individual judgment, review processes would not be needed. We obviously do not rely on our own judgment alone, since more eyes see more and hence relevance and validity can be assessed in a more objective way. Therefore, a system of peer review has been established as the method of choice to control for scientific relevance and methodological correctness/appropriateness. In effect, journal editors decide via the peer review process what is relevant and what in turn is communicated to other scientists via publication. Peer review has been the method of choice for many years, but scientists are concerned about the state of the current publishing system. Editorial as well as review decisions are not always fully transparent and vary between journals. The quality of a review depends on the expertise of the reviewer, and the editorial office sometimes selects reviewers somewhat arbitrarily. This arbitrary element is a natural consequence of the task of the office and of how it must be carried out in times of rapidly increasing submissions, more interdisciplinary topics, and a lack of individual review expertise sufficient to cover all issues of a modern science paper.

This discussion is not new at all. It has been stated before that the metrics by which the possible impact of an article is measured in the editorial handling phase are not well defined and leave a large degree of uncertainty about how decisions are made (Kreiman and Maunsell, 2011). The system is amenable to political as well as opportunistic biases playing a role in whether a paper is accepted or rejected (Akst, 2010). Public communication about an article and the review process to which it was subjected is very limited, if possible at all. In addition, there is growing pressure from grant agencies and local institutions to publish a high number of articles, thereby potentially compromising the scientific quality of submitted papers, while the review process itself might be compromised by increased load due to the increasing number of submissions. Hence, we fear that the large increase in the number of publications in the field of neuroscience and other fields may be accompanied by a decrease in overall quality. Moreover, the explosion in numbers of publications makes it difficult to follow the evolution of a specific topic even for experts of that field. In the light of increasing financial pressure and importance of external funds, the reform of the publishing system cannot be viewed in isolation but has to take into account other parameters, which interact with the publishing system. Here, we provide an alternative to the current review and publishing system, which is meant to be implemented in two steps. The idea we propose is inspired by the development of social media. In the first step it would function as an add-on to the existing scientific publishing system, but in the second step may evolve to completely replace it. It involves the quantification of interactions among scientists using Network-Based Statistics (NBS), as done in social media, in combination with search tools, as used by Google. 
The proposal laid out below should act as an inspiration to where the future of publishing might lead, and is not intended to be a fully detailed roadmap.

Current State of the Publication Process

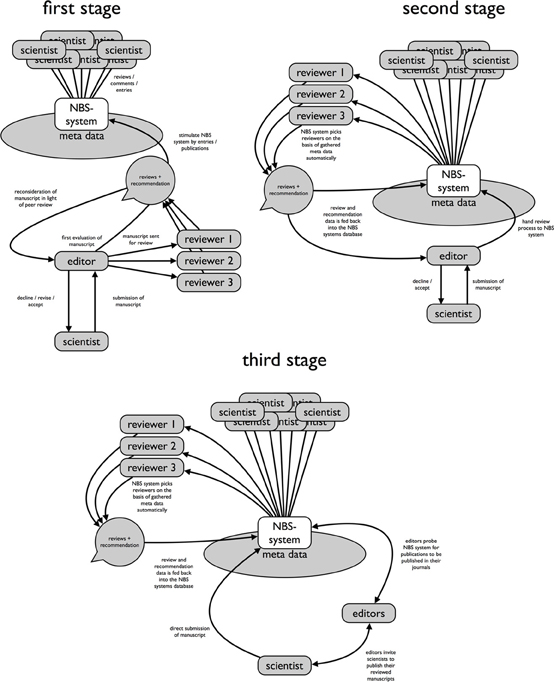

In general, scientists submit an article covering their latest results and findings to a specific journal of interest (Figure 1, first stage, bottom part). In most cases, a preliminary editorial decision is made as to whether the manuscript is of interest and of sufficient quality, after which the manuscript is either rejected or sent out for review to a small number of scientists (typically 2–3) who provide anonymous reviews of the submitted paper. The editor then faces a decision to accept the paper, to reject it, or to ask for revisions. This decision is guided by the editor's own understanding of the topic and by the evaluation of the reviewers. If an article is rejected, the scientist may use the reviewers' concerns as a guideline for revising the manuscript for future submission to a different journal. If an article is accepted, the final version goes into the publishing stream of the journal and can be accessed by the community. In summary, the editorial and review decisions and the platform on which an article is presented are tied to each individual journal and its publisher, and the process itself is usually entirely shielded from public scrutiny.

Figure 1. Illustration depicting the three stages of our proposed change in the current publishing system. In a first stage, the NBS system acts as an add-on to our current publishing system and starts collecting data. In a second stage, the NBS system takes over the peer review system by automatically suggesting and collecting reviews of articles submitted by the editorial offices. In a third stage, scientists directly submit articles to the NBS system independent of the journal in which the article might be published.

Proposed Future State of the Publication Process

We propose a new system that would initially accompany the existing one (Figure 1, first stage, top part), without generating excessive extra load for scientists and without increasing the already overwhelming number of published articles. The system would make use of modern technology to quantify the behavior of individuals in networks (NBS). The NBS system would initially function as an add-on to the existing system, but it might in a second stage lead to changes in the current system or to its replacement, by showing it is a superior system for all concerned. Evaluation of papers by NBS would be designed to be transparent and controlled by the scientific community. In short, the new system would quantify interactions among scientists pre- and post-publication, introduce new ways of determining an article's impact and, in a future stage, NBS would decouple the review process from individual journals and editors. The add-on NBS system will work similarly to current social networks and would be built up of two types of general information; one being a scientific expertise profile of individual experts and the second being a database of publications (“entries”) with extended additional data (discussed below). Instead of maintaining scattered institutional websites containing individual information about publications, interests, and affiliations, scientists would subscribe to a global network where most important information about them is gathered. This information will include institutional affiliations, publications, and relationships to other collaborating scientists, which can be derived from author lists on publications and from statistical information about the behavior of scientists toward others (see below). Moreover, publications associated with member scientists would deliver information on the expertise and interests of each individual. 
Thus, the information provided can be used to extract metadata related both to expertise and connections of each individual in the network of scientists, and this information should be anonymously accessible by fellow scientists, editors, and publishers.

NBS as a Parallel Add-on Existing Next to the Classical Publication Process

The proposed system can be used as an add-on to the current review system in the following way: when a new publication appears and is entered into the database (fed from existing databases such as Google Scholar, or entered directly by journals, and thus having undergone traditional peer review), an editor associated with the NBS system will forward invitations to other scientists, selected for their expertise and publication record, to write brief comments, longer evaluations, or even extensive blog-like entries. This editor (or network administrator) will make the selection based on parameters provided by the NBS system, though the ultimate goal will be to generate the selection of reviewers and commentators automatically (see below). The quality and objectivity of a comment can be evaluated immediately, based on the metadata present in the system. For example, the commenter's position in the network relative to the authors of the publication can be objectively quantified in terms of the number of common publications, overlap in (past and present) institutional affiliation, overlap in expertise, and content of previous comments (e.g., positive or negative), by algorithms accessing the metadata available in the system. Further statistical procedures could then be used (as in iTunes/Google) to find related comments, all entries from the same commenter, related entries from other commenters, etc. The combined results of such statistical data mining may greatly increase the transparency of evaluations and help scientists to weigh the importance of a paper against its associated comments. In this initial stage, the NBS system therefore acts completely independently of the existing publishing and review system and adds a layer of information to each publication listed. This additional information gives the reader an index of the relevance of a paper/topic within the community, based on the vividness of ongoing discussions about the paper. 
It is important to note that the additional data should not replace relevance, content, and substance as the foundations of a given paper, since those are not directly quantifiable. However, the additional data can help in navigating the complex scientific landscape of publications, where the final verdict on a paper should always be left to the critical scientific reader.
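To make the idea of quantifying a commenter's position relative to an author more concrete, the following Python sketch computes three of the measures named above: the number of common publications, and the overlap in affiliations and in expertise. The `Scientist` record, its field names, and the use of Jaccard overlap are assumptions chosen for illustration; the proposal itself does not prescribe a particular data model or metric.

```python
from dataclasses import dataclass, field


@dataclass
class Scientist:
    """Hypothetical profile record; field names are illustrative only."""
    name: str
    papers: set = field(default_factory=set)        # publication identifiers
    affiliations: set = field(default_factory=set)  # past and present institutions
    keywords: set = field(default_factory=set)      # expertise terms


def jaccard(a: set, b: set) -> float:
    """Overlap of two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def proximity(commenter: Scientist, author: Scientist) -> dict:
    """Describe how close a commenter sits to an author in the network."""
    return {
        "shared_papers": len(commenter.papers & author.papers),
        "affiliation_overlap": jaccard(commenter.affiliations, author.affiliations),
        "expertise_overlap": jaccard(commenter.keywords, author.keywords),
    }


# Made-up example profiles, not real data.
author = Scientist("A", {"p1", "p2"}, {"Maastricht"}, {"fMRI", "attention"})
commenter = Scientist("B", {"p2", "p3"}, {"Amsterdam"}, {"fMRI", "TMS"})
scores = proximity(commenter, author)
```

In such a scheme, a commenter with high expertise overlap but few shared papers and no shared affiliation would be a plausible candidate for an informed yet independent evaluation; how the three numbers should be weighted is left open here.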

In addition, once the NBS system starts working and has gathered a sufficient amount of information, it may facilitate information clustering and career development. With regard to clustering, smart computer-driven clustering of comments in the database can be carried out along several dimensions (e.g., quality, quantity, type of author). The resulting clusters can then be used to visualize the relevance of a given paper over time. In addition to the comments left for a certain publication, usage statistics such as views and downloads can be logged and taken into consideration when analyzing an article's history. This can serve as relevant orientation (and data reduction) for the scientific community and inherently contributes to scientific knowledge and quality. With regard to career development, the NBS can highlight competent and objective commentators on the basis of ratings and views. By doing so, NBS adds details to a scientist's career profile in terms of impact (are they heard by others) and vividness (quantity and quality of actions within NBS). NBS hence forms a tool to valorize scientific expertise via reviews as well as comments in general.

Taken together, the statistical information available can be used to provide measures that promote more objective views on an article's impact than its mere number of citations or the journal's impact factor (Skorka, 2003; Simons, 2008; Franceschet, 2010), and to provide a timeline of the importance it has for the scientific community. By having an ongoing assessment of a publication, clustering algorithms can be used to view a research topic and its related publications through the progression of time, independent of a single article's reference list, even indicating what contributions individual manuscripts made to a specific domain of science. While the substantive impact of a scientific idea is based on more than statistical data, the NBS system goes beyond the current standard metrics while making the process of judging impact more transparent. Proactive expertise contributions receive direct incentives as they are valued by the community. Since the NBS system relies on a large and valid amount of data, scientific institutions should support such proactive input by their scientists.
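As a rough illustration of how a timeline of a paper's reception might be derived from logged activity, the Python sketch below counts events per month and per type. The event labels ("comment", "view", "download") and the monthly binning are assumptions made for this example; they are not part of the proposal.

```python
from collections import Counter
from datetime import date


def activity_timeline(events):
    """Count logged events per (year, month, kind).

    `events` is an iterable of (date, kind) pairs, where kind is a label
    such as "comment", "view", or "download".
    """
    counts = Counter()
    for day, kind in events:
        counts[(day.year, day.month, kind)] += 1
    return dict(sorted(counts.items()))


# Made-up activity log for a single article.
log = [
    (date(2012, 3, 5), "comment"),
    (date(2012, 3, 9), "view"),
    (date(2012, 3, 20), "comment"),
    (date(2012, 4, 1), "download"),
]
timeline = activity_timeline(log)
```

Plotting such counts over time for one article, or for all articles in a cluster, would give one simple view of how attention to a topic evolves. It captures only the quantity of activity, not its quality.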

NBS as an Alternative that can Partly or Completely Replace the Existing Publication Process

Initially, the NBS system would be based on the submission of papers that were published in journals, as well as unpublished papers, on which authors can comment in various formats, similar to the working papers with which many disciplines are already familiar. However, the network statistics associated with submitted articles and comments provide a parallel process that can be more than a mere add-on (Figure 1, second stage); we expect that the proposed system will be used to improve the current journal-driven reviewing system. Importantly, the system we propose, in which scientists can comment on articles freely, is not intended to replace peer review in any way, only to restructure the process. Any manuscript submitted to the NBS system should undergo a form of peer review, directed either by journals and their editors or by the system itself. For example, the NBS system proposed here can be of immediate help to editors searching for relevant reviewers for a newly submitted article. A PageRank-style algorithm, such as that used by Google for retrieving information sorted by relevance to a keyword, could suggest a relevant and, most importantly, scientifically objective reviewer to an editor. Objectivity could be defined as independence from the submitting scientists' group, affiliations, or personal preferences, combined with overlapping expertise. Personal preferences and opportunistic behavior could be quantified based on an anonymous log of behavior among scientists. For example, scientists can be ranked by their tendency (quantified by appropriate metrics) to systematically reject papers of specific authors or institutions, and when this ranking index is too high, it should decrease their probability of being selected as a reviewer. By implementing such procedures, an editor using the proposed add-on system would enhance the review process by counteracting opportunistic behavior by individuals. 
While this system needs multiple occasions on which a reviewer is found to show this type of behavior, it is likely that its mere existence would reduce biases and make reviewers more aware of their claimed objectivity.
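The two ingredients described above, a relevance ranking and an index of systematic rejection, can be sketched in Python as follows. The first function is the textbook power-iteration form of PageRank, applied here to a hypothetical graph in which an edge from X to Y means "X cites Y"; the second is one possible, deliberately simple bias index. Both the graph definition and the index are assumptions for illustration, since the proposal leaves the "appropriate metrics" unspecified.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {node: [nodes it points to]}."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    rank = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        new = {n: (1.0 - damping) / len(nodes) for n in nodes}
        for node in nodes:
            # A node without outgoing links spreads its rank over all nodes.
            targets = links.get(node) or list(nodes)
            share = damping * rank[node] / len(targets)
            for t in targets:
                new[t] += share
        rank = new
    return rank


def rejection_bias(decisions, reviewer, target):
    """Reviewer's rejection rate for `target` minus their rate for all others.

    `decisions` is a list of (reviewer, author, rejected) tuples.
    Returns None when either group has no reviews to compare.
    """
    own = [rej for r, a, rej in decisions if r == reviewer and a == target]
    rest = [rej for r, a, rej in decisions if r == reviewer and a != target]
    if not own or not rest:
        return None
    return sum(own) / len(own) - sum(rest) / len(rest)


# Made-up citation graph and review log.
ranks = pagerank({"A": ["C"], "B": ["C"], "C": ["A"]})
log = [("R", "X", True), ("R", "X", True), ("R", "Y", False), ("R", "Z", True)]
bias = rejection_bias(log, "R", "X")
```

A selection procedure along these lines might rank candidate reviewers by relevance and then lower the selection probability of those whose bias index toward the submitting authors exceeds some threshold. With only a handful of reviews per reviewer the index is very noisy, which matches the observation that multiple occasions are needed before such behavior can be detected.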

Furthermore, editors and scientists might agree to enter not only their papers into the NBS system, but also the anonymous reviews of those papers. Initially, this can be done with reviewers selected by a journal editor, who might have used the proposed system to select the reviewers. Importantly, at the discretion of the scientists authoring the paper and with permission of its reviewers, this would be done as soon as a paper has been reviewed, even if it is rejected. Each entry would, therefore, carry a history of its own review process prior to its ultimate publication in a journal. Hence, even if an article has not been accepted by a certain journal and ends up being published by another, the attached reviews should document the entire review history. Having the entire review process available for each article will make it easier for readers to judge how the reported findings were received, as well as which problems (in terms of data acquisition, analysis methods, or hypotheses) fellow scientists tackled on the way to publication. Even for very good papers and positive reviews, an openly accessible review process might be enlightening, as complementary ideas and background information would be shared (like a review of a good book or movie).

We believe that when editors start using this add-on system, it can help journals and their editors to make better-informed decisions on how to select papers for publication. As our proposed NBS system would provide defined metrics of the success of an article, irrespective of where it gets published, or even whether or not it gets published, it would provide an alternative and more transparent measure of impact. We are convinced that NBS will provide more valuable measures of the appreciation of a publication in a research field than classical impact measures and the journal's name. When editors increasingly use NBS to select reviewers, and when the view develops within the scientific field that such a system is beneficial, then consensus may grow. As a consequence, the current review process could be partly or entirely replaced by NBS. Indeed, it is imaginable that in a third stage (Figure 1, third stage) a system based on NBS would select reviewers for articles automatically based on objective statistics, and that what initially would be comments would become the actual reviews of the submitted articles. In this way, a submitted article would generate its own review process that would be publicly available, in a way that is decoupled from specific journals. Scientific journals would then be able to use the output of an NBS-based review process to select articles for publication. This would create an inverse dynamic, in which journals will have to compete with each other to publish the best articles, as scientists might be contacted by several journals with requests for publication in print.

The scientific review and publication process we have sketched here will provide a context in which truly good publications will be labeled by favorable community-driven statistics and ranked high, while publications that were released prematurely or received poor ratings will also be recognized as such, and ranked low. We suggest that this will create a transparent and content-based competition among researchers and among institutions, so that quality of research may become emphasized more in evaluating an individual's productivity than numbers of publications. It can become a system that facilitates collaboration within the digitized social network. Moreover, we believe the proposed system will trigger a re-orientation of the effort of scientists from anonymous review processes that remain unpublished to interaction in a more open and public arena. We suggest that the more active and more publicly accessible communication style among scientists proposed here will lead to better knowledge of one another's work, and therefore, will be a catalytic factor in enhancing research quality.

Possible Caveats and Downfalls

Any given system will have its inevitable flaws and problems, and while we believe that our proposal directly addresses many of those present in the current system, it is important to note the possible problems our proposal could encounter. Scientific work, the content it entails, and the quality associated with it are by their very nature not entirely quantifiable by statistical metrics. Therefore, the proposed NBS system will never be independent of human evaluation; instead, we aim to make the system more transparent in that regard. It is clear that the system we propose could generate excessive workload for scientists if mechanisms are not in place to control endless discussion cycles. One serious problem with a more open system is the danger of lobbyist tendencies. While opportunism and lobbyism are problems already present in the current publishing system, and we hope to alleviate them by means of the NBS system discussed above, it is important that activism within the NBS system does not counteract these efforts.

Summary and Outlook for the Future

Although it is difficult to predict how the introduction of the NBS-based publishing system would be received and how it would develop, the minimal goal we wish to achieve is that publishers increase the objectivity and transparency of the current review and publication system by using NBS-based information. This can be achieved by using NBS-based information for selecting reviewers, and by scientists and editors agreeing to make the entire anonymized review history available on a publicly accessible site (for a discussion of the problems associated with public reviews see Anderson, 1994 and Kravitz and Baker, 2011). However, in the long term we suggest that a complete decoupling of the scientific review process from specific journals and from their different, idiosyncratic review systems would tremendously help the scientific objectivity of the review process. Indeed, scientific reviews should not be biased by the fact that a review is being handled for a high impact versus a lower impact journal, nor by implicit histories or affinities of an author with a specific journal or editor. Moreover, a review system that is independent of individual editorial decisions and, therefore, not directly related to a particular journal would base the review process on a broader consensus-based evaluation.

Starting our proposed add-on NBS-based system involves some, but minimal, additional work by scientists (for a critical view on electronic publications see Evans, 2008). It would involve an effort to make published articles accessible from a common webpage. Commenting/reviewing may be kicked off by asking leading scientists to submit a number of comments on a subset of papers related to a topic within their competence. These comments will attract the scientific community to visit the system and to add further comments. This initial phase is essential to the development of the system in its add-on phase, and will be highly dependent on the effort of senior scientists. However, the overall benefits and possibilities of the new system should outweigh these initial costs. We strongly believe that it is time to leave behind the sub-optimal reviewing and publication system that is currently in place, and to reform it into a more transparent and open system. Importantly, to make this transition effective, universities, research organizations, and grant agencies have to be part of the reform and support it.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Evans, J. A. (2008). Electronic publication and the narrowing of science and scholarship. Science 321, 395–399.

Kravitz, D. J., and Baker, C. I. (2011). Toward a new model of scientific publishing: discussion and a proposal. Front. Comput. Neurosci. 5:55. doi: 10.3389/fncom.2011.00055

Kreiman, G., and Maunsell, J. H. R. (2011). Nine criteria for a measure of scientific output. Front. Comput. Neurosci. 5:48. doi: 10.3389/fncom.2011.00048

Keywords: network-based statistics, publishing system, scientific evaluation, peer review

Citation: Zimmermann J, Roebroeck A, Uludag K, Sack AT, Formisano E, Jansma B, De Weerd P and Goebel R (2012) Network-based statistics for a community driven transparent publication process. Front. Comput. Neurosci. 6:11. doi: 10.3389/fncom.2012.00011

Received: 24 November 2011; Paper pending published: 27 December 2011;

Accepted: 17 February 2012; Published online: 05 March 2012.

Edited by:

Diana Deca, Technische Universität München, Germany

Reviewed by:

Talis Bachmann, University of Tartu, Estonia

Rogier Kievit, University of Amsterdam, Netherlands

Dwight Kravitz, National Institutes of Health, USA

Copyright: © 2012 Zimmermann, Roebroeck, Uludag, Sack, Formisano, Jansma, De Weerd and Goebel. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Jan Zimmermann and Rainer Goebel, Department of Cognitive Neuroscience, Maastricht University, Maastricht, Netherlands. e-mail: jan.zimmermann@maastrichtuniversity.nl; r.goebel@maastrichtuniversity.nl