Probabilistic inference of short-term synaptic plasticity in neocortical microcircuits

- 1Neuroinformatics Doctoral Training Centre, Institute for Adaptive and Neural Computation, School of Informatics, University of Edinburgh, Edinburgh, UK

- 2Department of Neurology and Neurosurgery, The Research Institute of the McGill University Health Centre, McGill University, Montreal, QC, Canada

- 3Institute for Adaptive and Neural Computation, School of Informatics, University of Edinburgh, Edinburgh, UK

Short-term synaptic plasticity is highly diverse across brain area, cortical layer, cell type, and developmental stage. Since short-term plasticity (STP) strongly shapes neural dynamics, this diversity suggests a specific and essential role in neural information processing. Therefore, a correct characterization of short-term synaptic plasticity is an important step towards understanding and modeling neural systems. Phenomenological models have been developed, but they are usually fitted to experimental data using least-mean-square methods. We demonstrate that for typical synaptic dynamics such fitting may give unreliable results. As a solution, we introduce a Bayesian formulation, which yields the posterior distribution over the model parameters given the data. First, we show that common STP protocols yield broad distributions over some model parameters. Using our result we propose a experimental protocol to more accurately determine synaptic dynamics parameters. Next, we infer the model parameters using experimental data from three different neocortical excitatory connection types. This reveals connection-specific distributions, which we use to classify synaptic dynamics. Our approach to demarcate connection-specific synaptic dynamics is an important improvement on the state of the art and reveals novel features from existing data.

1. Introduction

Synaptic plasticity is thought to underlie learning and information processing in the brain. Short-term plasticity (STP) refers to transient changes in synaptic efficacy, in the range of tens of milliseconds to several seconds or even minutes (Zucker and Regehr, 2002). It is highly heterogeneous and is correlated with developmental stage (Reyes and Sakmann, 1999), cortical layer (Reyes and Sakmann, 1999), brain area (Wang et al., 2006; Cheetham and Fox, 2010), and postsynaptic cell-type (Markram et al., 1998; Reyes et al., 1998; Scanziani et al., 1998; Tóth and McBain, 2000; Rozov et al., 2001; Sun and Dobrunz, 2006). For instance, short-term depression predominates in the juvenile brain, whereas more mature circuits have a preponderance for short-term facilitation (Pouzat and Hestrin, 1997; Reyes and Sakmann, 1999). Similarly, synapses from neocortical pyramidal cells (PCs) impinging on other PCs are depressing, whereas those onto specific interneurons can be strongly facilitating (Markram et al., 1998; Reyes et al., 1998).

STP has been proposed to shape information processing in neural networks in multiple ways (Abbott and Regehr, 2004; Fung et al., 2012), to enable cortical gain control (Abbott et al., 1997), pattern discrimination (Carvalho and Buonomano, 2011), input filtering (Markram et al., 1998), adaptation (van Rossum et al., 2008), spike burst detection (Maass and Zador, 1999), synchronization (Tsodyks et al., 2000), and to maintain the balance of excitation and inhibition in local circuits (Galarreta and Hestrin, 1998).

To model short-term depression, Tsodyks and Markram (1997) introduced a phenomenological model based on vesicle depletion, here referred to as the Tsodyks–Markram (TM) model. This model was later extended to include short-term facilitation (Markram et al., 1998; Tsodyks et al., 1998). Although several other STP models have been developed (Abbott et al., 1997; Varela et al., 1997; Dittman et al., 2000; Loebel et al., 2009; Pan and Zucker, 2009), the TM model has become particularly popular, probably because of its combination of appealing simplicity and biophysically relevant parameters (Markram et al., 1998; Richardson et al., 2005; Le Bé and Markram, 2006; Wang et al., 2006; Rinaldi et al., 2008; Ramaswamy et al., 2012; Testa-Silva et al., 2012; Romani et al., 2013).

Typically, STP models are numerically fitted to electrophysiological data by least-mean-square algorithms, which yield the parameter values that minimize the error between data and model. However, such fitting algorithms can get stuck in local optima and may provide little information about the certainty of the parameter values. As shown below, such fits may produce inaccurate results and may lead to unreliable clustering. Bayesian inference is a natural alternative, because it yields a distribution of parameter values rather than a single outcome. Bayesian inference has recently been applied to neurophysiological data analysis. McGuinness et al. (2010) used this to estimate large and small action potential-evoked Ca2+ events, while Bhumbra and Beato (2013) used a Bayesian framework of quantal analysis to estimate synaptic parameters, which required far fewer trials compared to traditional methods. Here, we introduce a Bayesian approach to obtain the posterior distribution of TM model parameters. This enabled us to take into account the uncertainty inherent to experimental data, which provided a more complete description of STP data.

Our approach has several advantages. First, it allowed us to infer the distribution of synaptic parameters for individual connections and propose a better protocol to extract these parameters. Second, we found that parameter distributions extracted from cortical data are specific to different connection types. Third, we showed that we can automatically cluster the parameters of synaptic dynamics to at least partially classify postsynaptic cell types. We also performed model selection to determine which variant of the TM model best captures the synaptic dynamics of the connection type at hand.

2. Materials and Methods

2.1. Short-Term Plasticity Phenomenological Model

The extended TM model (eTM) is a phenomenological model of short-term plasticity defined by the following ODEs (Markram et al., 1998; Tsodyks et al., 1998)

The first equation models the vesicle depletion process, where the number of vesicles R(t) is decreased with u(t)R(t) after release due to a presynaptic spike at time tAP, modeled by a Dirac delta distribution δ(t). Between spikes R(t) recovers to 1 with a depression timeconstant D. The second equation models the dynamics of the release probability u(t) which increases with f[1 − u(t)] after every presynaptic spike, decaying back to baseline release probability U with a facilitation timeconstant F. The notation t− indicates that these functions should be evaluated in the limit approaching the time of the action potential from below (as would be natural in forward Euler integration).

By varying the four parameters one can obtain depressing, combined facilitating-depressing and facilitating synaptic dynamics. We note that for some data a three parameter model [setting f = U, denoted the TM with facilitation model] or even a two parameter depression model with only Equation (1) [setting u(t) = U, denoted the TM model] is sufficient. This, however, is not generally the case, as shown below.

To speed up the numerical implementation we integrated the above equations between spikes n and n + 1, a time Δtn apart, yielding

As we assumed that at time zero the synapse has not been recently activated, we set R0 = 1 and u0 = U.

The postsynaptic potential PSPn is given by

where A is an amplitude factor that includes the number of release sites, the properties and number of postsynaptic receptors, and cable filtering.

The steady-state values R∞ and u∞ in response to prolonged periodic stimulation with rate ρ are

2.2. Simulated Data

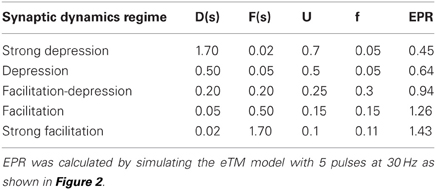

For the simulated data we used five sets of STP parameters, ranging from depression to facilitation, see Table 1.

As the commonly used paired-pulse ratio, PPR = PSP2/PSP1, only takes the first two pulses into account, we introduce the Every Pulse Ratio (EPR) as a more comprehensive measure of STP dynamics. It is defined as

This index measures the average amplitude change from the i to the i + 1 response normalized to the i response in the train. EPR is used in Table 1 and elsewhere to quantify the average degree of depression (EPR < 1) or facilitation (EPR > 1). Using these parameters we calculated the synaptic responses with Equations (3, 4) to a spike train of five pulses at 30 Hz (Figures 2, 4).

2.3. Bayesian Formulation

The posterior distribution of the synaptic parameters follows from Bayes' theorem as , where is a vector of mean postsynaptic potential peaks extracted from simulated or experimental data and is a vector encompassing the model parameters. Many factors contribute to variability in the measured EPSPs, including stochastic vesicle release and experimental noise. A typical noise model of synaptic transmission is a binomial distribution (Zucker and Regehr, 2002). However, we found that our data is well described by a Gaussian noise model (see below). Therefore, we write the likelihood of the data as

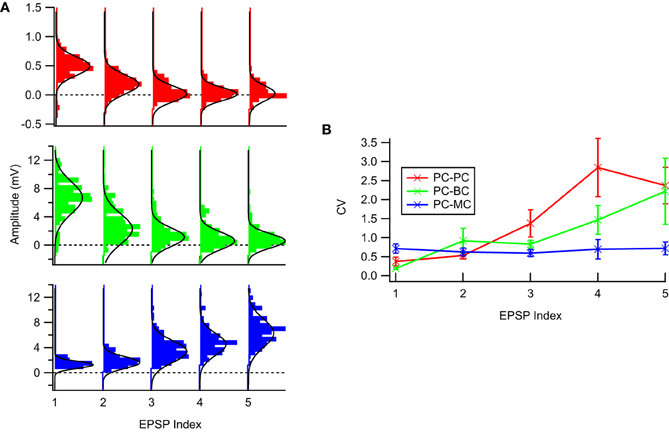

where is the voltage response from the eTM model for i = 1…N runs over the data points in the pulse train. We set the noise σi independently for each pulse. For the data we extracted the CV for each pulse, while for the simulated data a fixed coefficient of variation (CV = 0.5) was assumed, based on Figure 1. Note that we did not include a model of stochastic vesicle release. This would be a possible extension of our model. A stochastic release model also leads to correlations between subsequent events, and Equations (4, 3) would thus have to be extended to their history-dependent variances, which would complicate our model. We did confirm that parameters from a simulated stochastic release model, were inferred correctly using the above noise model, although the posterior distributions were somewhat widened.

Figure 1. The experimental STP data was well described by a Gaussian noise model. (A) Sample EPSP distributions for the three connection types: PC–PC (top, red), PC–BC (middle, green), and PC–MC (bottom, blue) with respective Gaussian fits (solid black line)—94% of the EPSP distributions were not statistically significant different from a Gaussian distribution (see main text for more details). (B) Coefficient of variation analysis. While for facilitating synapses (PC–MC) it was more or less constant, for depressing synapses (PC–PC and PC–BC) we observed an approximately linear increase with EPSP amplitude. Error bars represent standard error of the mean.

The priors were modeled as independent non-informative flat distributions over the model parameters

which limits the posterior distribution within reasonable values.

Bhumbra and Beato (2013) sampled their bidimensional posterior probability using a brute-force grid search. For higher dimensions this is computationally expensive. We therefore inferred the posterior distribution by sampling using the Slice Sampling Markov Chain Monte Carlo (MCMC) method (Neal, 2003). The width parameter w was set equal to the upper limit of the flat prior distributions (i.e., ) and each parameter is sampled sequentially in the four orthogonal directions. We discarded the first 2500 samples as burn-in and use the last 7500. For the numerical implementation we use the log-likelihood . The convergence of the Markov chain to the equilibrium distribution was assessed through the Gelman–Rubin statistical method (Brooks and Gelman, 1998). However, this diagnostic of convergence can indicate lack of convergence, but does not confirm it. Therefore, in order to ensure convergence, we used multiple chains (n = 3) starting at different initial conditions to ensure that the outcome was independent on the initial condition (Gelman and Shirley, 2011). The maximum a posteriori (MAP) estimator of the synaptic parameters is given by

The MAP estimate was obtained by keeping the most likely sample from multiple MCMC chains. In addition we also ran an optimizer to find the most precise MAP using the distribution peak as a starting point. As both approaches gave equally good fits for the sake of simplicity we decided to use the former.

We compared our estimation method with a standard stochastic optimization method, simulation annealing (SA). The SA method minimizes the RMSE

while trying to avoid getting stuck in local minima. We ran the SA algorithm 200 times and selected the estimate with lowest RMSE. Using an objective function scaled by the variance gave similar results when compared to the non-scaled version; thus for the sake of comparison with previous literature, we used the non-scaled version. To compare the goodness of fit of both MAP and SA solutions with the data, we used the coefficient of determination R2.

As the amplitude A is not relevant for the synaptic dynamics, we set A = AMAP,

where . We used this value to normalize the data. Its value does not affect the dynamics estimation, because A only scales the responses.

To estimate the posterior probability distributions, we used a kernel density estimation method (Ihler and Mandel, 2007). Unless otherwise stated, the code was implemented in Matlab (inference code is available online1).

2.3.1. Quantifying inference performance

To quantify which protocol allows for the most precise recovery of the true parameters of simulated STP data (Figure 3A), we computed the sample estimation error over N = 22,500 MCMC samples to the true parameters , as , where the average is over all the runs and all five parameter sets (Table 1). To achieve similar weighting, the parameters were normalized to the true parameters. Alternatively, we normalized the estimated parameters on the upper limit of their priors, or we omitted normalization altogether. This yielded similar results. Note that in probabilistic spirit, this error also quantifies the spread in the distribution. A smaller E gives more peaked distributions, which correspond to tighter parameter estimates. Note that, although similar, this error measure does not follow the standard bootstrap approach.

2.3.2. Model selection

For model selection, we used the Akaike Information Criterion (AIC), which is a information-theoretic measure of the goodness of fit of a given statistical model. It is defined as , where k is the number of estimable parameters in the model and the log-posterior of the MAP estimate on the normalized data. The AIC evaluates models according to their parsimonious description of the data, and is particularly suitable for probabilistic inference. We used the evidence ratio, which is a relative ranking of the Akaike weights, to find the least complex model that best describes the data (Turkheimer et al., 2003; Nakagawa and Hauber, 2011).

2.4. Electrophysiology

Quadruple whole-cell recordings and extracellular stimulation were performed in acute visual cortex slices of young mice (P12–P20) as previously described (Buchanan et al., 2012). The stimulating electrode was positioned in layer 5 (L5). L5 Pyramidal cells (PCs) were targeted based on their characteristic pyramidal soma and thick apical dendrite. Basket cells (BCs) were targeted in transgenic mice genetically tagged for parvalbumin, while Martinotti cells (MCs) were targeted in mice genetically labeled for somatostatin (Markram et al., 2004; Buchanan et al., 2012). Cell identities were verified by cell morphology and rheobase firing pattern. Five spikes were elicited at 30 Hz using 5 ms long current injections (0.7–1.4 nA) every 18 s in all neurons throughout the experiment. Excitatory postsynaptic potentials (EPSPs) were averaged from 20–40 sweeps.

For each connection, a histogram was built from the EPSP amplitudes extracted with 1–2-ms window fixed approximately on the peak depolarization. EPSP distributions were fit with a Gaussian (Equation 9). Recordings with mean EPSPs smaller than 0.015 mV were discarded. Electrophysiological data analysis was carried out in Igor Pro (WaveMetrics Inc., Lake Oswego, OR).

Figure 1 shows typical EPSP distributions for each of the three neocortical excitatory connection types that we studied, PC–PC, PC–BC, and PC–MC. We tested whether the Gaussian noise model was a valid description of the data using the Kolmogorov–Smirnov (KS) normality test, and we found that the null hypothesis that samples were drawn from a normal distribution could not be rejected for 160 out of 170 EPSP distributions, with no connection-specific bias. This suggests that EPSPs were typically normally distributed, consistent with previously published results [e.g., Figure 5B in Markram et al. (1997)]. Due to noise, apparently negative EPSPs (Figure 1) were occasionally recorded. These are consistent with our Gaussian noise model and require no special treatment.

2.5. Clustering and Classification

Distributional clustering was introduced by Pereira et al. (1993). Here we applied a similar information-theoretic approach to cluster . Instead of a “soft” clustering approach we used “hard” clustering, due to its simplicity, computation speed and comparison with standard clustering techniques. We used an agglomerative method [unweighted average distance method, Sokal (1958)] and an f-divergence metric. F-divergence metrics constitute a family of functions that measure the difference between two probability distributions. Consider two discrete probability distributions P and Q both discretized into N bins. To compare any given pair of distributions we used two f-divergence metrics: (i) the symmetrized Kullback–Leibler divergence

with

and the (ii) Hellinger distance

Due to the high dimensionality of our problem, we approximated these two measures first marginalizing over the d = 4 dimensions and then computing the sKL and HL over the d marginal probabilities. We compared our posterior-based clustering with clustering based on the SA estimates. Here, we used the Euclidian distance on the z-scored parameters found with SA.

To estimate the number of clusters we used the Pseudo-F statistic (Caliński and Harabasz, 1974). The Pseudo-F statistic captures the tightness of clusters as the ratio of the mean sum of squares between clusters to the mean sum of squares within cluster

where is the total sum of squares, is the within-cluster sum of squares, G is the number of clusters, and n the total number of items. A larger Pseudo-F usually indicates a better clustering solution. The Pseudo-F statistic has been found to give best performance in simulation studies when compared with 30 other methods (Milligan and Cooper, 1985).

To evaluate the clustering quality, we computed the dendrogram purity as described by Heller and Ghahramani (2005), where we considered two classes according to EPR: class 1 for EPR ≤ 1 and class 2 for EPR > 1. This threshold allows us to separate mostly depressing from mostly facilitating synaptic dynamics.

Finally, we also performed classification using the Naive Bayes Classifier: , where P(C) is the prior over the different synapse types C and the likelihood for a given class. Although information about connectivity rates could in principle be incorporated in the prior, we used a uniform prior over the classes. Our likelihood is given by the MCMC inference over the model parameters for a given training dataset dC and synapse type C, i.e., . As the Naive Bayes Classifier assumes independence between the different classes, we have one independent model per class with the maximum a posterior decision rule . We estimated the performance of our classifier with K-cross validation (K = 7, i.e., ~80% for PC–PC (n = 9) and PC–MC (n = 9), and ~60% for PC–BC (n = 12)), where we sampled over K data points (i.e., connections) for each synapse-type to obtain our likelihood model and then test the classifier with the remaining data points. This process was repeated until all possible different K partitions of the data have been used. Accuracy is defined as the percentage of correct classifications for a given connection type.

3. Results

3.1. Parameter Inference Certainty is Synaptic Dynamics Dependent

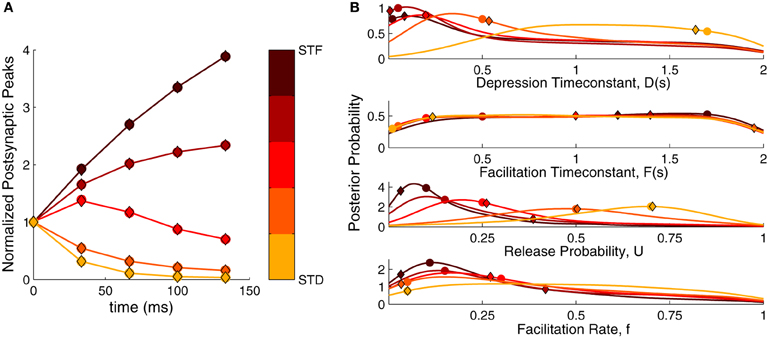

We first checked our method in extracting STP parameters from simulated data with a standard stimulus train of five spikes at 30 Hz (see Materials and Methods). We simulated data with predefined parameter sets ranging from strong depression to strong facilitation. This was achieved by decreasing the baseline release probability U and the depression timeconstant D, while increasing the facilitation rate f and the facilitation timeconstant F (see Materials and Methods, Table 1). The resulting dynamics are shown in Figure 2A.

Figure 2. Bayesian inference of short-term plasticity parameters using simulated data. (A) Simulated PSPs (filled circles in response to five pulses at 30 Hz) for five different synaptic parameter settings ranging from strong depression (yellow) to strong facilitation (dark red). The MAP solution of the inferred distribution is shown with diamonds. (B) Posterior marginalized distributions of the model parameters for the data in (A). The true parameters are shown as filled circles and the MAP solutions as diamonds.

Figure 2B shows the inferred parameter distributions for the various parameter settings. As the full posterior distribution is four dimensional, we plotted the marginals only. The inferred parameter distributions showed varying behavior: The distributions for U were well-tuned to values close to the true parameter values. For the D parameter the shifts in the distributions followed the changes in the true parameter, becoming broader for depressing dynamics. Both F and f were not narrowly tuned to the true parameter. Although f was tuned to small values for facilitating synapses, its distribution became broader for depressing synapses. The F parameter was not particularly tuned to any value, being close to an uniform distribution for both depressing and facilitating synapses. We explored the possibility that the broadness of F depended on the prior boundary by extending it to 5 s and 10 s. However, the distribution remained uniform and merely grew wider, suggesting that the broad distribution was not caused by an improper choice of prior. In summary, the inference procedure shows that—depending on the dynamics—the inferred parameter distributions can be narrow or broad and that some parameters are much more tightly constrained than others.

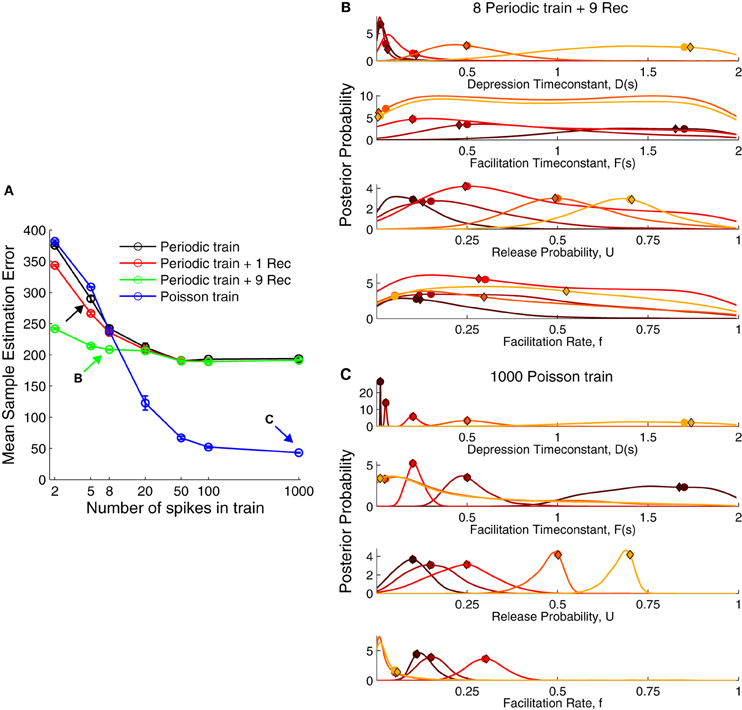

3.2. Improving Experimental Protocol for Parameter Inference

The fact that some of the inferred parameter distributions were broad suggested that the five pulse protocol did not yield enough information to reliably infer the true parameters. Therefore, we used our probabilistic formulation to find an experimental protocol that improves the inference quality (Figure 3). To this end, we compared the sample estimation error on the estimates (see Materials and Methods) for different spike trains: (1) a periodic train at 30 Hz, (2) a periodic train with recovery pulses, and (3) a Poisson train of 30 Hz (Figure 3A). We also varied the number of spikes in the train.

Figure 3. The performance of various stimulation protocols to infer short-term plasticity parameters. (A) Comparison of mean sample estimation error using different stimulation trains. Black arrow corresponds to the protocol used in Figure 2. A periodic spike train at 30 Hz with eight pulses and nine recovery pulses [green arrow, (B)] already provided a better estimate of the STP parameters. However, a Poisson train provided a much smaller error when using more than 20 spikes with a close to zero error for 1000 spikes [blue arrow, (C)]. (B) Posterior distribution for a periodic train with nine recovery pulses (cf. Figure 2B). (C) Posterior distribution for a Poisson train with 1000 pulses. The true parameters are shown as filled circles and the MAP solutions as diamonds. For visualization the marginal probabilities were scaled by their standard deviation.

The widely used paired-pulse protocol to probe synaptic dynamics gave poor estimates even when coupled with nine recovery pulses spaced exponentially across 4 s. Using five pulses in the spike train improved the performance only moderately. Some studies have inferred the TM model parameters with eight spikes and a single recovery pulse after 500 ms (e.g., Wang et al., 2006). This did not improve the recovery error when compared to a periodic spike train alone. A Poisson spike train, however, surpassed other protocols using only 20 spikes. Therefore, we propose a Poisson spike train with 20…100 spikes as a better protocol to obtain accurate estimates of the model parameters. However, also a spike train with eight periodic pulses and nine recovery pulses offers a good compromise, yielding a low recovery error in a reasonably short duration (≈4.23 s). The distributions for these two protocols were more narrowly tuned to the true parameters (Figures 3B,C) compared to a periodic spike train without a full recovery phase (Figure 3B). Contrary to our intuition, the distributions for D were more narrowly tuned for facilitation (darker colors) than for depression (lighter colors). Although for the sake of simplicity, we do not show the results for a short periodic train followed by a Poisson train, such an approach would combine the ability to compute standard STP measures and recover information across frequencies. The reason for the poor performance of periodic trains even with many pulses is that the synapse quickly reaches steady-state, given by Equations (6, 7). Hence additional pulses do not increase information and the estimation error quickly reaches a plateau. In contrast, a random Poisson train allows the inference process to converge to the true parameter distributions in the limit of large spike trains.

Note, that both in Figure 2B and Figures 3B,C, the MAP solution is not always at the peak of the marginal distributions. The reason is that when there are dependencies in the parameters, the peak in the full distribution does not need to coincide with the peaks of the marginals. Indeed, when we compared the log-posterior of the MAP estimate to the log-posterior of the estimate given by the maximum of each marginal probability alone, the MAP approach yielded a much better estimate: , compared to the maximum of the marginal probabilities, .

3.3. Probabilistic Inference of Neocortical Data Reveals Connection-Specific Distributions

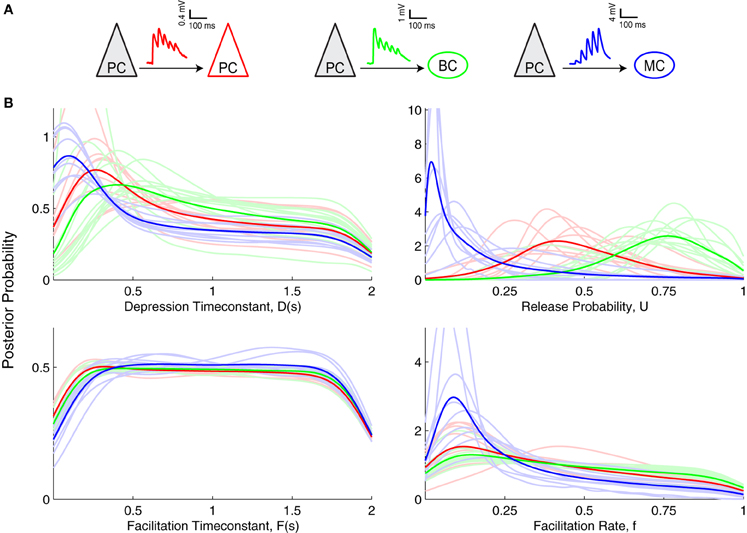

Next, we performed Bayesian inference of the eTM parameters on experimental data from visual cortex L5. These data was recorded earlier using a standard five-pulse protocol, instead of the improved protocols suggested above. This means that the parameters may not be optimally constrained, but the overall findings should still hold. We inferred the posterior distributions of the parameters U, D, F, and f from PC–PC, PC–MC, and PC–BC connections (Figure 4A).

Figure 4. Inference of short-term plasticity parameters from experimental data from visual cortex. (A) Sample experimental STP traces are shown for PC–PC (red), PC–BC (green), and for PC–MC (blue) connections. (B) Marginalized posterior distributions obtained using slice sampling from these three different excitatory connections suggest that PC–MC (n = 9) connections are quite different from PC–PC (n = 9) and PC–BC (n = 12) connections. Light colored lines show individual connections, while dark colored lines correspond to their average.

When comparing the Bayesian model inference of these three different synapse types (Figure 4B), the most salient difference was observed in the U parameter, i.e., the baseline probability of release. PC–MC connections had a small U, D and f. PC–PC connections had a medium U, medium to high D, a close to uniform F and a broad f with a preference for smaller values. PC–BC connections were similar to PC–PC connections, apart from a larger U (PC–BC: 0.72 ± 0.04, n = 12; PC–PC: 0.53 ± 0.05, n = 9; p < 0.01, Mann–Whitney test based on the MAP estimates). This higher value of U indicates that PC–BC synapses are generally more strongly depressing than PC–PC synapses. However, the EPRs for these two connection types were indistinguishable (PC–BC: 0.63 ± 0.04, n = 12; PC–PC: 0.69 ± 0.03, n = 9; p = 0.21, Mann–Whitney test), suggesting that the model inference is more sensitive than the EPR measure, and is therefore better suited for picking up connection-specific differences in STP.

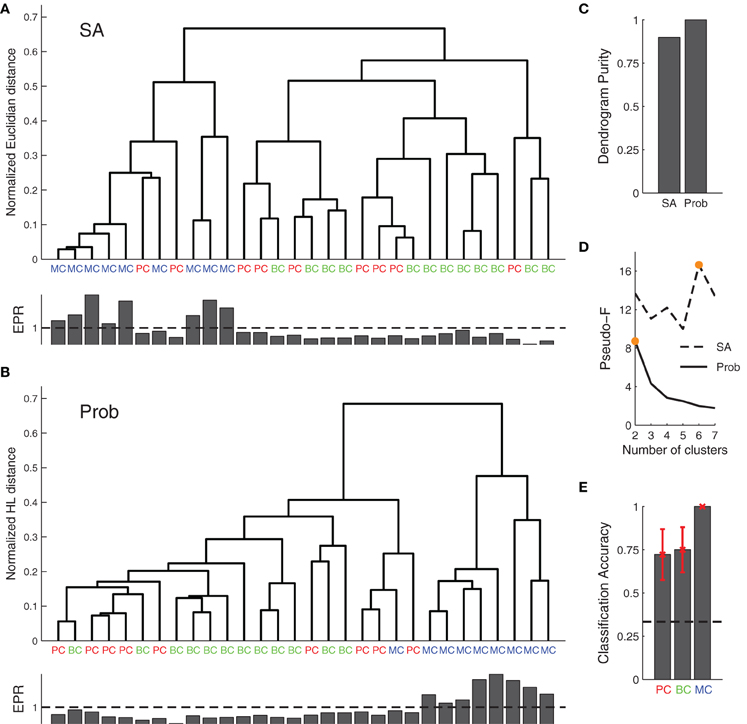

We next used our Bayesian approach for synapse classification. We first clustered the data of the various connections based on the model parameters found by SA, Figure 5A. We next clustered based on the marginalized posterior distributions, Figure 5B using the Hellinger distance (see Materials and Methods). Clustering analysis showed that the Bayesian approach improved the dendrogram purity (Figure 5C), as it split the data into two distinct clusters as assessed by the Pseudo-F statistic (Figures 5B,D).

Figure 5. Agglomerative clustering using posterior distributions improves synaptic dynamics clustering. (A) Clustering based on the synaptic parameters found by SA did not produce good clusters. (B) Clustering of posterior distributions using the probabilistic approach with the Hellinger distance gave rise to two clusters: one for short-term depression and the other for short-term facilitation (cf. EPR, inset bottom), with the first corresponding to both PC–PC and PC–BC connections, while the other roughly mapped onto PC–MC synapses. (C) EPR-based dendrogram purity with probability distribution-based clustering is higher than the purity from SA-based clustering. (D) Maximal Pseudo-F statistic suggests that the data contains two or six clusters when clustering the posterior distributions or SA-based clustering, respectively (orange filled circles). (E) A simple probabilistic classifier (Naive Bayes) achieved good performance for all the connection types, in particular for PC–MC connections (black dashed line represents chance level). Error bars represent standard error of the mean.

With SA-based clustering, the Pseudo-F statistic suggested six clusters (Figure 5D) with a lower dendrogram purity (Figure 5C, 0.89 purity level), which indicates that these six clusters are spurious. Furthermore, with the Bayesian approach, the clusters map better to the EPR measure (Figure 5B, inset bottom), indicating that our approach captures the synaptic properties better than the SA approach. The two clusters found by our approach correspond to synapses that are either chiefly depressing or facilitating. Still, the clusters did not correspond well to synapse type. In particular, PC–PC and PC–BC synapses were classified as the same type.

In an alternative approach, we also clustered the Bayesian posteriors using the symmetric KL-divergence (sKL). The sKL achieved 0.78 dendrogram purity and three clusters according to the Pseudo-F statistic; thus performing worse.

To determine how well the posterior distributions could be classified in keeping with the three connection types, we performed Naive Bayes classification with a 7-fold cross-validation (Figure 5E). We obtained 100% accuracy in PC–MC connection classification. Surprisingly, however, we also obtained a 72% and 75% classification accuracy for PC–PC and PC–BC connections, respectively. These results suggest that each synapse type can be to some extent separated from the other two types. The ability to separate the different connection types is likely to be mostly due to differences in the baseline release probability (cf. Figure 4B, parameter U).

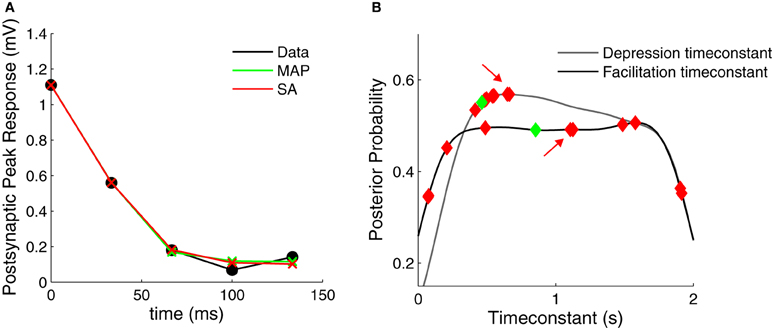

3.4. Comparison to Traditional Fitting Methods

Above we found that for both the simulated and the experimental data, the marginalized posterior of the F parameter resembles an uniform distribution (Figures 2B, 4B). This suggests that standard fitting techniques might not perform well and may become trapped in local minima, thus explaining why the SA-based clustering is not able to separate the different synaptic dynamics as well. To test this idea, we used SA on a depressing PC–PC connection and we found that this was indeed the case (Figure 6). Although the method found everytime good fits to the data (Figure 6A), the fit parameters were highly variable from one run to the next (Figure 6B). Although this variability could be used as a proxy for the parameter variance, there is no principled way in SA to estimate parameter variance. In contrast, with our Bayesian approach, the variability and exact distribution is captured in the posterior distribution. Similar observations were made by Varela et al. (1997), who occasionally found an elongated error valley when fitting their particular STP model.

Figure 6. Comparison of Bayesian approach and traditional fitting methods. (A) STP models using either MAP or SA solutions (green and red crosses, respectively) provide good fits to the experimental data (black filled circles). (B) Marginalized posterior distributions for the depression and facilitation timeconstants (gray and black line, respectively). When fitting the data in (A) 10 times, SA yields widely different parameter values (red diamonds, all solutions provided good fits to the data R2 > 0.99). The MAP solution is shown with green diamonds. The red arrows indicate the SA fit used in (A).

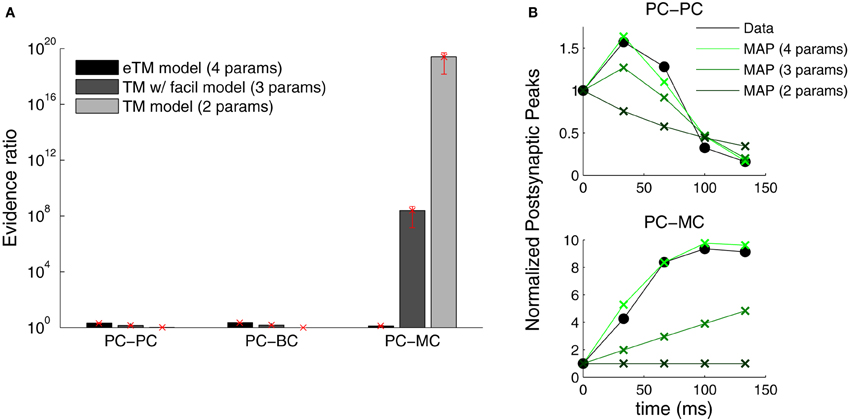

3.5. Finding the Best Model Using Probabilistic Inference

The Bayesian approach offers a natural way to examine which model describes the data most parsimoniously. We performed model selection to identify which formulation of the TM model better described the data (see Materials and Methods). We compared three formulations of the TM model: (1) with depression only—only Equation (1) with D and U (two parameters)—, (2) depression and facilitation—Equations (1, 2) with D, F and U (three parameters)—and, (3) the full extended model used above. Figure 7 shows that only the extended model is able to account for all the data from the three connection types. In contrast to Markram et al. (1998) and Richardson et al. (2005), we found that the TM-with-facilitation model does not fit the PC–MC connections well. Although for some recordings the three-parameter model was sufficient, it failed to fit other recordings (Figure 7B). This discrepancy might be due to experimental differences; our dataset was recorded in mice visual cortex L5 and included extracellular stimulation experiments, while Markram et al. (1998) and Richardson et al. (2005) recorded in the somatosensory cortex of the rat using paired recordings only.

Figure 7. Extended TM model improves the fitting of PC–MC synapses. (A) The evidence ratio based on the Akaike Information Criterion is plotted on a logarithmic scale for the three excitatory synaptic types. Three formulations of the Tsodyks–Markram model were compared—with only depression (TM, two parameters), with a degree of facilitation (TM with facilitation, three parameters) and the extended version with full facilitation (eTM, four parameters). Error bars represent standard error of the mean. (B) Examples of normalized postsynaptic peak responses that can only be accurately fitted by the eTM model. Top: PC–PC recording with combined depression and facilitation. Bottom: PC–MC recording with attenuating facilitation. The postsynaptic peak responses (black filled circles) are given together with the MAP solutions from the two, three, and four parameters model, from dark to light green, respectively.

4. Discussion

Past studies characterizing short-term synaptic dynamics have typically used traditional fitting methods. A Bayesian approach, however, turns out to be particularly advantageous for this problem, because accurate estimation of synaptic parameters is complicated. Here, we have shown that—depending on the synaptic dynamics and experimental protocol—some parameters are not narrowly tuned but broadly distributed. This insensitivity may cause traditional least-mean-square methods to get stuck in local minima.

When applied to experimental data, our method showed that different connections have different distributions. Such synapse-type specific plasticity supports the idea that different synapses perform different computations and subserve different functional roles in the local circuit. Our approach more robustly classifies synapses according to their synaptic dynamics than does clustering using simple point estimates of parameters obtained from standard optimization techniques. Our method might thus enable automatic and independent classification of synapses and cells taking into account the natural variability in the data. Future studies using larger datasets may better identify the synaptic properties that are specific to individual clusters. Furthermore, a model with a more detailed noise description could allow us to also infer the quantal parameters, which could in principle be combined with the Bayesian quantal analysis framework (Bhumbra and Beato, 2013).

We found that inference of the model parameters can be improved by having more pulses as well as by including a recovery phase. The data used here, however, was collected using a standard STP electrophysiology protocol with five pulses at 30 Hz, which still enabled connection-specific clustering. To improve parameter inference further, we propose a combination of a periodic spike train and a Poisson spike train. More pulses add more information, which has an unsurprising positive impact on inference. Poisson trains cover the frequency space better without requiring excessively long experimental recordings. Indeed, Poisson trains add a considerable improvement as compared to the more standard protocol of using fixed-frequency trains (Markram and Tsodyks, 1996; Sjöström et al., 2003).

Experimentally STP has been observed to change with development (Reyes and Sakmann, 1999), drug wash-in (Buchanan et al., 2012), temperature changes (Klyachko and Stevens, 2006), and plasticity (Markram and Tsodyks, 1996; Sjöström et al., 2003). In such situations, it often becomes important to ascertain the particular parameter changes that occur. The Bayesian framework introduced here can be extended to elucidate which components of STP are affected by integrating prior knowledge, through an informative prior. For instance, inferred distributions can be tracked across development.

Our work can also be applied in constructing computer network models with STP using posterior distributions inferred from actual biological data as a generative model. This would yield models with richer dynamics without resorting to simplistic and unrealistic ad-hoc approaches to generate synaptic variability that are poorly grounded in biological data.

Our Bayesian approach promises improved computer models as well as a better and more nuanced understanding of biological data. Yet, this approach is not computationally intense, nor is it difficult to implement. We therefore fully expect probabilistic inference of STP parameters to become a widespread practice in the immediate future.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Luigi Acerbi, Iain Murray, Matthias Hennig, and Máté Lengyel for useful comments and discussions. This work was supported by Fundação para a Ciência e a Tecnologia, Engineering and Physical Sciences Research Council, MRC Career Development Award G0700188, EU FP7 Future Emergent Technologies grant 243914 (“Brain-i-nets”), and Natural Sciences and Engineering Research Council of Canada Discovery Grant.

Footnotes

References

Abbott, L. F., and Regehr, W. (2004). Synaptic computation. Nature 431, 796–803. doi: 10.1038/nature03010

Abbott, L. F., Varela, J. A., Sen, K., and Nelson, S. B. (1997). Synaptic depression and cortical gain control. Science 275, 221–224. doi: 10.1126/science.275.5297.221

Bhumbra, G. S., and Beato, M. (2013). Reliable evaluation of the quantal determinants of synaptic efficacy using Bayesian analysis. J. Neurophysiol. 109, 603–620. doi: 10.1152/jn.00528.2012

Brooks, S., and Gelman, A. (1998). General methods for monitoring convergence of iterative simulations. J. Comput. Graph. Stat. 7, 434–455. doi: 10.1080/10618600.1998.10474787

Buchanan, K. A., Blackman, A. V., Moreau, A. W., Elgar, D., Costa, R. P., Lalanne, T., et al. (2012). Target-specific expression of presynaptic NMDA receptors in neocortical microcircuits. Neuron 75, 451–466. doi: 10.1016/j.neuron.2012.06.017

Caliński, T., and Harabasz, J. (1974). A dendrite method for cluster analysis. Commun. Stat. Theory Meth. 3, 1–27. doi: 10.1080/03610927408827101

Carvalho, T., and Buonomano, D. (2011). A novel learning rule for long-term plasticity of short-term synaptic plasticity enhances temporal processing. Front. Integr. Neurosci. 5:20. doi: 10.3389/fnint.2011.00020

Cheetham, C. E. J., and Fox, K. (2010). Presynaptic development at L4 to l2/3 excitatory synapses follows different time courses in visual and somatosensory cortex. J. Neurosci. 30, 12566–12571. doi: 10.1523/JNEUROSCI.2544-10.2010

Dittman, J. S., Kreitzer, A. C., and Regehr, W. G. (2000). Interplay between facilitation, depression, and residual calcium at three presynaptic terminals. J. Neurosci. 20, 1374–1385.

Fung, C. C. A., Wong, K. Y. M., Wang, H., and Wu, S. (2012). Dynamical synapses enhance neural information processing: gracefulness, accuracy, and mobility. Neural Comput. 24, 1147–1185. doi: 10.1162/NECO_a_00269

Galarreta, M., and Hestrin, S. (1998). Frequency-dependent synaptic depression and the balance of excitation and inhibition in the neocortex. Nat. Neurosci. 1, 587–594. doi: 10.1038/2882

Gelman, A., and Shirley, K. (2011). “Inference from simulations and monitoring convergence,” in Handbook of Markov Chain Monte Carlo, eds S. Brooks, A. Gelman, G. Jones, and X. L. Meng (Boca Raton, FL: CRC Press), 163–174.

Heller, K., and Ghahramani, Z. (2005). “Bayesian hierarchical clustering,” in Proceedings of the 22nd International Conference on Machine learning (Bonn), 297–304.

Ihler, A., and Mandel, M. (2007). Kernel density estimation toolbox for matlab. Available online at: http://ics.udi.edu/ihler/code/kde.html

Klyachko, V. A., and Stevens, C. F. (2006). Temperature-dependent shift of balance among the components of short-term plasticity in hippocampal synapses. J. Neurosci. 26, 6945–6957. doi: 10.1523/JNEUROSCI.1382-06.2006

Le Bé, J., and Markram, H. (2006). Spontaneous and evoked synaptic rewiring in the neonatal neocortex. Proc. Natl. Acad. Sci. U.S.A. 103, 13214. doi: 10.1073/pnas.0604691103

Loebel, A., Silberberg, G., Helbig, D., Markram, H., Tsodyks, M., and Richardson, M. J. E. (2009). Multiquantal release underlies the distribution of synaptic efficacies in the neocortex. Front. Comput. Neurosci. 3:27. doi: 10.3389/neuro.10.027.2009

Maass, W., and Zador, A. M. (1999). Dynamic stochastic synapses as computational units. Neural Comput. 11, 903–917. doi: 10.1162/089976699300016494

Markram, H., Lübke, J., Frotscher, M., Roth, A., and Sakmann, B. (1997). Physiology and anatomy of synaptic connections between thick tufted pyramidal neurones in the developing rat neocortex. J. Physiol. 500(Pt 2), 409–440.

Markram, H., Toledo-Rodriguez, M., Wang, Y., Gupta, A., Silberberg, G., and Wu, C. (2004). Interneurons of the neocortical inhibitory system. Nat. Rev. Neurosci. 5, 793–807. doi: 10.1038/nrn1519

Markram, H., and Tsodyks, M. (1996). Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature. 382, 807–810. doi: 10.1038/382807a0

Markram, H., Wang, Y., and Tsodyks, M. (1998). Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. U.S.A. 95, 5323–5328.

McGuinness, L., Taylor, C., Taylor, R. D. T., Yau, C., Langenhan, T., Hart, M. L., et al. (2010). Presynaptic NMDARs in the hippocampus facilitate transmitter release at theta frequency. Neuron 68, 1109–1127. doi: 10.1016/j.neuron.2010.11.023

Milligan, G., and Cooper, M. (1985). An examination of procedures for determining the number of clusters in a data set. Psychometrika 50, 159–179. doi: 10.1007/BF02294245

Nakagawa, S., and Hauber, M. (2011). Great challenges with few subjects: statistical strategies for neuroscientists. Neurosci. Biobeh. Rev. 35, 462–473. doi: 10.1016/j.neubiorev.2010.06.003

Pan, B., and Zucker, R. (2009). A general model of synaptic transmission and short-term plasticity. Neuron 62, 539–554. doi: 10.1016/j.neuron.2009.03.025

Pereira, F., Tishby, N., and Lee, L. (1993). “Distributional clustering of English words,” in Proceedings of the 31st Annual Meeting on Association for Computational Linguistics (Columbus, OH: USA Association for Computational Linguistics).

Pouzat, C., and Hestrin, S. (1997). Developmental regulation of basket/stellate cell – Purkinje cell synapses in the cerebellum. J. Neurosci. 17, 9104–9112.

Ramaswamy, S., Hill, S. L., King, J. G., Schürmann, F., Wang, Y., and Markram, H. (2012). Intrinsic morphological diversity of thick-tufted layer 5 pyramidal neurons ensures robust and invariant properties of in silico synaptic connections. J. Physiol. 590, 737–752. doi: 10.1113/jphysiol.2011.219576

Reyes, A., Lujan, R., Rozov, A., Burnashev, N., Somogyi, P., and Sakmann, B. (1998). Target-cell-specific facilitation and depression in neocortical circuits. Nat. Neurosci. 1, 279–285. doi: 10.1038/1092

Reyes, A., and Sakmann, B. (1999). Developmental switch in the short-term modification of unitary EPSPs evoked in layer 2/3 and layer 5 pyramidal neurons of rat neocortex. J. Neurosci. 19, 3827–3835.

Richardson, M. J. E., Melamed, O., Silberberg, G., Gerstner, W., and Markram, H. (2005). Short-term synaptic plasticity orchestrates the response of pyramidal cells and interneurons to population bursts. J. Comput. Neurosci. 18, 323–331. doi: 10.1007/s10827-005-0434-8

Rinaldi, T., Silberberg, G., and Markram, H. (2008). Hyperconnectivity of local neocortical microcircuitry induced by prenatal exposure to valproic acid. Cereb. Cortex 18, 763–770. doi: 10.1093/cercor/bhm117

Romani, A., Marchetti, C., Bianchi, D., Leinekugel, X., Poirazi, P., Migliore, M., et al. (2013). Computational modeling of the effects of amyloid-beta on release probability at hippocampal synapses. Front. Comput. Neurosci. 7:1. doi: 10.3389/fncom.2013.00001

Rozov, A., Burnashev, N., Sakmann, B., and NEHER, E. (2001). Transmitter release modulation by intracellular Ca2+ buffers in facilitating and depressing nerve terminals of pyramidal cells in layer 2/3 of the rat neocortex indicates a target cell-specific difference in presynaptic calcium dynamics. J. Physiol. 531, 807–826. doi: 10.1111/j.1469-7793.2001.0807h.x

Scanziani, M., Gähwiler, B., and Charpak, S. (1998). Target cell-specific modulation of transmitter release at terminals from a single axon. Proc. Natl. Acad. Sci. U.S.A. 95, 12004–12009. doi: 10.1073/pnas.95.20.12004

Sjöström, P. J., Turrigiano, G. G., and Nelson, S. B. (2003). Neocortical LTD via coincident activation of presynaptic NMDA and cannabinoid receptors. Neuron 39, 641–654. doi: 10.1016/S0896-6273(03)00476-8

Sokal, R. R. (1958). A statistical method for evaluating systematic relationships. Univ. Kans. Sci. Bull. 38, 1409–1438.

Sun, H., and Dobrunz, L. (2006). Presynaptic kainate receptor activation is a novel mechanism for target cell-specific short-term facilitation at Schaffer collateral synapses. J. Neurosci. 26, 10796. doi: 10.1523/JNEUROSCI.2746-06.2006

Testa-Silva, G., Loebel, A., Giugliano, M., de Kock, C. P. J., Mansvelder, H. D., and Meredith, R. M. (2012). Hyperconnectivity and slow synapses during early development of medial prefrontal cortex in a mouse model for mental retardation and autism. Cereb. Cortex 22, 1333–1342. doi: 10.1093/cercor/bhr224

Tóth, K., and McBain, C. J. (2000). Target-specific expression of pre- and postsynaptic mechanisms. J. Physiol. 525(Pt 1), 41–51. doi: 10.1111/j.1469-7793.2000.00041.x

Tsodyks, M., and Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. U.S.A. 94, 719–723.

Tsodyks, M., Pawelzik, K., and Markram, H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835. doi: 10.1162/089976698300017502

Tsodyks, M., Uziel, A., and Markram, H. (2000). Synchrony generation in recurrent networks with frequency-dependent synapses. J. Neurosci. 20, RC50.

Turkheimer, F. E., Hinz, R., and Cunningham, V. J. (2003). On the undecidability among kinetic models: from model selection to model averaging. J. Cereb. Blood Flow Metab. 23, 490–498.

van Rossum, M. C. W., van der Meer, M. A. A., Xiao, D., and Oram, M. W. (2008). Adaptive integration in the visual cortex by depressing recurrent cortical circuits. Neural Comput. 20, 1847–1872. doi: 10.1162/neco.2008.06-07-546

Varela, J., Sen, K., Gibson, J., Fost, J., Abbott, L. F., and Nelson, S. (1997). A quantitative description of short-term plasticity at excitatory synapses in layer 2/3 of rat primary visual cortex. J. Neurosci. 17, 7926.

Wang, Y., Markram, H., Goodman, P. H., Berger, T. K., Ma, J., and Goldman-Rakic, P. S. (2006). Heterogeneity in the pyramidal network of the medial prefrontal cortex. Nat. Neurosci. 9, 534–542. doi: 10.1038/nn1670

Keywords: short-term synaptic plasticity, probabilistic inference, neocortical circuits, experimental design, parameter estimation

Citation: Costa RP, Sjöström PJ and van Rossum MCW (2013) Probabilistic inference of short-term synaptic plasticity in neocortical microcircuits. Front. Comput. Neurosci. 7:75. doi: 10.3389/fncom.2013.00075

Received: 15 February 2013; Accepted: 17 May 2013;

Published online: 06 June 2013.

Edited by:

Si Wu, Beijing Normal University, ChinaReviewed by:

Magnus Richardson, University of Warwick, UKVictor Matveev, New Jersey Institute of Technology, USA

Copyright © 2013 Costa, Sjöström and van Rossum. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Rui P. Costa, Institute for Adaptive and Neural Computation, School of Informatics, University of Edinburgh, Rm 2.40, Informatics Forum, 10 Crichton Street, EH8 9AB, Edinburgh, UK e-mail: rui.costa@ed.ac.uk