The effect of mild-to-moderate hearing loss on auditory and emotion processing networks

- 1 Department of Speech and Hearing Science, University of Illinois at Urbana-Champaign, Champaign, IL, USA

- 2 The Neuroscience Program, University of Illinois at Urbana-Champaign, Champaign, IL, USA

- 3 Beckman Institute for Advanced Science and Technology, University of Illinois at Urbana-Champaign, Champaign, IL, USA

We investigated the impact of hearing loss (HL) on emotional processing using task- and rest-based functional magnetic resonance imaging. Two age-matched groups of middle-aged participants were recruited: one with bilateral high-frequency HL and a control group with normal hearing (NH). During the task-based portion of the experiment, participants were instructed to rate affective stimuli from the International Affective Digitized Sounds (IADS) database as pleasant, unpleasant, or neutral. In the resting state experiment, participants were told to fixate on a “+” sign on a screen for 5 min. The results of both the task-based and resting state studies suggest that listeners with NH and HL differ in their emotional response. Specifically, in the task-based study, the HL group responded more slowly than the NH group to affective, but not neutral, sounds. This difference was reflected in the brain activation patterns: compared to the HL group, the NH group engaged the expected limbic and auditory regions, including the left amygdala, left parahippocampus, right middle temporal gyrus and left superior temporal gyrus, to a greater extent when processing affective stimuli. In the resting state study, we observed no significant between-group differences in connectivity of the auditory network. In the dorsal attention network (DAN), HL patients exhibited decreased connectivity between seed regions and the left insula and left postcentral gyrus compared to controls. The default mode network (DMN) was also altered, showing increased connectivity between seeds and the left middle frontal gyrus in the HL group. Further targeted analysis revealed increased intrinsic connectivity between the right middle temporal gyrus and the right precentral gyrus in the HL group. The results from both studies suggest neuronal reorganization as a consequence of HL, most notably in networks responding to emotional sounds.

Introduction

Hearing loss (HL) remains one of the most common chronic conditions affecting older adults (Cruickshanks et al., 1998), with the prevalence rate increasing from between 25 and 40% in adults above 65 years of age to greater than 80% in people older than 85 years (Yueh et al., 2003). In general, mild-to-moderately severe sensorineural HL, which is often untreated, affects about 23–33% of the adult population in the world (Stevens et al., 2013). HL has a significant impact on quality of life and general well-being of an older adult (Mulrow et al., 1990; Carabellese et al., 1993) and may be associated with depression and isolation, especially in those younger than 70 years of age (Gopinath et al., 2009). However, little is known about the consequences of mild-to-moderately severe HL on the neural architecture and functionality of the brain.

The majority of brain imaging studies that have explored HL have done so when HL has occurred in conjunction with tinnitus (e.g., Weisz et al., 2004; Husain et al., 2011b), with other disorders (e.g., Yoneda et al., 2012), or in the context of sign language studies when the impairment has been profound (e.g., Petitto et al., 2000; Husain et al., 2009). A few brain imaging studies have investigated the impact of HL in the context of aging (Wong et al., 2010; Peelle et al., 2011); these remain the best sources for understanding the neural correlates of HL. These correlates include decreased gray matter in the frontal cortex (Wong et al., 2010; Peelle et al., 2011) and a decreased response in the superior temporal cortex, thalamus and brainstem during a speech comprehension task (Peelle et al., 2011). Our previous structural MRI study of HL in older adults (conducted as part of a larger study of the neural bases of tinnitus and HL) observed gray matter loss in the frontal cortices and disordered white matter tracts leading into and out of the auditory cortex (Husain et al., 2011a). A companion functional study noted increased responses in regions of the frontal and parietal cortices, possibly related to increased attention processing (Husain et al., 2011b). In the latter fMRI study, participants had mild-to-moderately severe HL with an average age in the mid-fifties and were asked to perform a discrimination task with simple tones and tonal sweeps. When compared to rest trials, task trials resulted in greater response in the temporal, frontal and parietal cortices in the HL group relative to the normal hearing (NH) controls.

In the present study, we investigated the neural correlates of mild-to-moderate sensorineural HL in middle-aged adults without tinnitus or any other chronic physical or mental condition and compared them to age-matched NH controls. We concentrated on extra-auditory networks, specifically the one concerned with emotional processing. The limbic system is typically the main network of emotion processing. It consists primarily of the amygdala, parahippocampus, ventral medial prefrontal cortex, nucleus accumbens, and insula. Recently, studies have begun to map out the regions and connectivity of the auditory emotional network in adults with NH (Blood and Zatorre, 2001; Koelsch et al., 2006; Kumar et al., 2012) and in those with tinnitus (Giraud et al., 1999; Mirz et al., 2000; Seydell-Greenwald et al., 2012; Golm et al., 2013). Using dynamic causal modeling, Kumar et al. (2012) found that the negative valence of a sound modulates the backward connections from the amygdala to the auditory cortex, and the acoustic features of a sound modulate the forward connections from the auditory cortex to the amygdala. It is likely that such acoustic features, processing nodes and their connectivity are susceptible to change following a sustained loss of hearing acuity when listening to affective sounds. This may result in delayed processing of affective sounds, their misclassification, or both. One of the goals of the present study was to investigate whether loss of hearing acuity affects the acoustic processing of affective sounds and whether this impacts their perception.

HL may also affect the perception of the valence of affective sounds, regardless of the processing of acoustic features. Tinnitus, in particular, has been established to have an altered auditory-limbic system link (Jastreboff, 1990; Rauschecker et al., 2010); behaviorally, there is a greater prevalence of depression and anxiety in the tinnitus patient group compared to the general population (Bartels et al., 2008). Not surprisingly, then, this disordered link is beginning to be studied in tinnitus. However, HL occurs in about 90% of individuals with tinnitus (Davis and Rafaie, 2000), and the unique contribution of tinnitus to any changes in emotional processing is unknown. There are other reasons to study emotional processing in HL. As previously noted, the prevalence of HL increases with age (Yueh et al., 2003), and HL may contribute to social isolation (Gopinath et al., 2009). This in turn may impact the limbic network that processes emotion, as has been shown to occur with aging and with tinnitus (Mather and Knight, 2005; Rauschecker et al., 2010; St Jacques et al., 2010; Anticevic et al., 2012). Nevertheless, no brain imaging study has explicitly investigated the emotion processing network in older adults with HL.

We conducted both a task-based and a resting state functional connectivity study of the emotion processing network in middle-aged adults with bilateral sensorineural HL. The task consisted of classifying sounds as “pleasant,” “unpleasant,” or “neutral.” Our working hypothesis was that a loss of hearing acuity affects behavior: sounds may seem more unpleasant (Franks, 1982; Feldmann and Kumpf, 1988; Leek et al., 2008; Rutledge, 2009; Uys et al., 2012), and reaction times may be longer due to effortful listening (Hicks and Tharpe, 2002; Tun et al., 2009). Likewise, the neural network subserving emotion processing may be affected, specifically in the response patterns of its nodes. To assess the impact of HL on a baseline resting state, without the distraction of any task, we measured the functional connectivity of a number of networks, including the network connected to the amygdala (a primary node of the limbic system).

Our main focus was on the auditory and limbic systems, but these systems interact with intrinsic networks devoted to attention processing and possibly with the default mode network (DMN). Intrinsic networks, or resting state networks, are defined as spontaneous, low-frequency oscillations in brain activity that can be organized into coherent networks. In many cases, intrinsic networks mirror task-related networks. For example, the auditory resting state network closely resembles an auditory task network. However, instead of correlations between activated regions in the task-based network, the intrinsic network shows correlations between deactivated brain regions. The DMN is the quintessential resting state network and is unique in that it is deactivated during tasks and active during rest (Raichle et al., 2001). The DMN exhibits a push-pull relationship with other networks in the brain (Fox et al., 2005). The dorsal attention network (DAN), for instance, shows activation while the DMN is deactivated (in a task-based state). Its relationship with the DAN and other intrinsic networks makes the DMN a natural target for study. Many disorders, including Alzheimer's disease, schizophrenia, and tinnitus, have been shown to affect the connectivity of the DMN (for reviews, see Greicius, 2008; Husain and Schmidt, 2013). Intrinsic connectivity may also differ in patients with HL, perhaps particularly for limbic areas. Alterations to resting state functional connectivity may result in decreased preparedness to perform a task. In particular, if limbic areas display irregular connectivity to other brain regions at rest, this may change how individuals process emotional stimuli.

Although we had provisional hypotheses, our study was exploratory because of a lack of brain imaging studies investigating the impact of HL on emotion processing and related extra-auditory networks. In the resting state portion of our study, general hypotheses were made regarding which networks may show altered connectivity, but we did not specify the nodes and the nature of these alterations. We had more constrained expectations about the role of amygdala and auditory processing areas in the emotion-task study, in that we expected reduced engagement of such regions in listeners with HL when processing affective stimuli. In sum, we conducted a comprehensive study, combining both task- and rest-based fMRI using multiple seed regions in order to establish a baseline for future studies.

Methods

Subjects

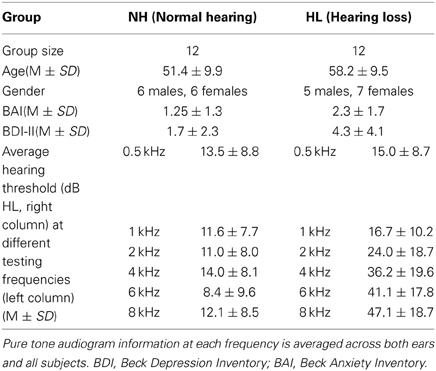

Participants were recruited from the Urbana-Champaign area, were scanned under the UIUC IRB 10144 protocol, gave written informed consent, and were monetarily compensated. Subjects belonged to one of two groups: middle-aged adults with bilateral high-frequency sensorineural HL (n = 12), or their age- and gender-matched controls with NH (n = 15). Three NH subjects were excluded due to excessive motion artifact, so data from only 12 NH participants were included in the final analysis. During recruitment, we excluded subjects who presented with tinnitus or with asymmetric HL at the time of their audiological examination. We defined asymmetric HL as a difference of more than 15 dB HL between the right and left ears at one or more frequencies, or a difference of 10 dB HL between the ears at two consecutive frequencies. The Beck Depression Inventory (BDI-II) and the Beck Anxiety Inventory (BAI) were used to assess depression and anxiety levels (Beck and Steer, 1984; Steer et al., 1993, 1999). All subjects scored in the minimal depression range and minimal anxiety range for the BDI-II and BAI, respectively. See Table 1 for information about subject demographics.
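The asymmetry exclusion rule above can be written as a small check. This is a minimal sketch, not the authors' screening code. It assumes thresholds are listed per ear at the seven test frequencies given under Audiometric Evaluation, and it reads "a difference of 10 dB HL" as a difference of at least 10 dB. The function name `is_asymmetric` is hypothetical.

```python
# Audiometric test frequencies in kHz, in the order listed under Audiometric Evaluation.
TEST_FREQS_KHZ = (0.25, 0.5, 1, 2, 4, 6, 8)


def is_asymmetric(right_db, left_db):
    """Return True if a pair of audiograms meets the asymmetric-HL exclusion rule.

    right_db, left_db: thresholds in dB HL, aligned with TEST_FREQS_KHZ.
    Assumption: "differed by 10 dB HL" means a difference of at least 10 dB.
    """
    if not (len(right_db) == len(left_db) == len(TEST_FREQS_KHZ)):
        raise ValueError("audiograms must cover all test frequencies")
    diffs = [abs(r - l) for r, l in zip(right_db, left_db)]
    # Rule 1: more than 15 dB interaural difference at any single frequency.
    if any(d > 15 for d in diffs):
        return True
    # Rule 2: at least 10 dB interaural difference at two adjacent test frequencies.
    return any(a >= 10 and b >= 10 for a, b in zip(diffs, diffs[1:]))
```

A 10 dB difference at 4 and 6 kHz would trigger exclusion. Isolated 10 dB differences at non-adjacent frequencies would not.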

Audiometric Evaluation

A comprehensive audiometric evaluation was performed for each subject. Audiological testing took place inside a sound-attenuating booth and included pure tone testing, word recognition testing, and bone conduction testing. Additional tests included distortion product otoacoustic emissions and tympanometry measurements to rule out any confounding peripheral hearing pathologies. For pure tone testing, the test frequencies were 0.25, 0.5, 1, 2, 4, 6, and 8 kHz. At all test frequencies, each subject in the NH group had pure-tone thresholds of 25 dB HL or lower. Participants in the HL group had pure-tone thresholds of 30 dB HL or lower at 0.25–2 kHz, with the exception of two HL subjects who had a slightly elevated threshold of 35 dB HL at 1 kHz. At 4–8 kHz, the HL subjects had pure-tone thresholds between 30 and 70 dB HL, ranging from mild to moderately severe HL. Table 1 contains information about the average hearing thresholds at the test frequencies for each subject group. None of the HL participants used hearing aids.
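The audiometric profiles described above can be expressed as simple checks on a dictionary of thresholds. This is an illustrative sketch. The text describes the thresholds the participants had rather than stating formal inclusion cut-offs, so the function names are hypothetical. The degree-of-loss bands follow one commonly used audiometric scale that the paper does not itself specify.

```python
# Thresholds are keyed by test frequency in kHz.
LOW_FREQS = (0.25, 0.5, 1, 2)
HIGH_FREQS = (4, 6, 8)

# Upper bound (dB HL) of each degree-of-loss band; an assumed, commonly used scale.
DEGREE_SCALE = ((25, "normal"), (40, "mild"), (55, "moderate"),
                (70, "moderately severe"), (90, "severe"))


def degree(threshold_db):
    """Map a single threshold to a degree-of-loss label."""
    for upper, label in DEGREE_SCALE:
        if threshold_db <= upper:
            return label
    return "profound"


def fits_nh_profile(thresholds):
    """NH group profile: 25 dB HL or better at every test frequency."""
    return all(t <= 25 for t in thresholds.values())


def fits_hl_profile(thresholds, low_limit_db=30, low_exceptions=None):
    """HL group profile: near-normal at 0.25-2 kHz, 30-70 dB HL at 4-8 kHz.

    low_exceptions maps a frequency to a relaxed limit, e.g. {1: 35} for the two
    HL listeners described with a 35 dB HL threshold at 1 kHz.
    """
    limits = {f: low_limit_db for f in LOW_FREQS}
    limits.update(low_exceptions or {})
    low_ok = all(thresholds[f] <= limits[f] for f in LOW_FREQS)
    high_ok = all(30 <= thresholds[f] <= 70 for f in HIGH_FREQS)
    return low_ok and high_ok
```

On this scale, 30 dB HL falls in the mild band and 70 dB HL at the upper edge of moderately severe. This matches the "mild to moderately severe" description of the high-frequency thresholds.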

Data Acquisition

A 3 Tesla Siemens Magnetom Allegra head-only scanner was used to acquire all MRI images. Two anatomical and two functional scans were acquired: one functional scan recorded the emotion task and the other the resting state, and the order of acquisition varied across subjects. Thirty-two low-resolution T2-weighted structural transverse slices (TR = 7260 ms, TE = 98 ms) were collected for each volume with a 4.0 mm slice thickness and a 0.9 × 0.9 × 4.0 mm³ voxel size [matrix size (per slice), 256 × 256; flip angle, 150°]. We obtained 160 high-resolution magnetization-prepared rapid acquisition with gradient echo (MPRAGE) sagittal slices for each volume, each 1.2 mm thick, with a 1.0 × 1.0 × 1.2 mm³ voxel size [TR = 2300 ms; TE = 2.83 ms; matrix size (per slice), 256 × 256; flip angle, 9°]. The functional images were acquired with the following parameters: slice thickness, 4 mm; inter-slice gap, 0.4 mm; 32 axial slices; distance factor, 10%; voxel size, 3.4 × 3.4 × 4.0 mm³; field of view (FoV) read, 220 mm; TR, 9000 ms with 2000 ms acquisition time; TE, 30 ms; matrix size (per slice), 64 × 64; flip angle, 90°. Functional images were acquired separately for (a) an emotion task and (b) a resting state study.
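A few lines of arithmetic confirm that the reported EPI parameters are consistent with one another. The sketch below derives three quantities. The in-plane voxel size comes from the FoV and matrix. The inter-slice gap comes from the 10% distance factor. The total slab extent assumes, as an illustrative convention, that coverage counts all slice thicknesses plus the gaps between them. The function name is hypothetical.

```python
def epi_geometry(fov_mm=220.0, matrix=64, slice_mm=4.0, distance_factor=0.10, n_slices=32):
    """Derive in-plane voxel size, slice gap and slab coverage from EPI parameters."""
    in_plane_mm = fov_mm / matrix              # 220 / 64 = 3.4375, reported as 3.4 mm
    gap_mm = slice_mm * distance_factor        # 10% of 4 mm = 0.4 mm inter-slice gap
    # Assumed convention: slab = every slice thickness plus the gaps between slices.
    coverage_mm = n_slices * slice_mm + (n_slices - 1) * gap_mm
    return in_plane_mm, gap_mm, coverage_mm


in_plane, gap, coverage = epi_geometry()
print(f"in-plane {in_plane:.4f} mm, gap {gap:.1f} mm, coverage {coverage:.1f} mm")
```

The same arithmetic gives 160 × 1.2 mm = 192 mm of sagittal coverage for the MPRAGE.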

(a) Emotion task

To prevent the loud acoustic noise generated by gradient switching during image acquisition from interfering with perception of the stimuli, we used clustered echo-planar imaging (EPI) (Hall et al., 1999; Gaab et al., 2003; Zaehle et al., 2004). Clustered EPI, or sparse sampling, was chosen particularly to improve the listening environment for the subjects with HL. Rather than acquiring images continuously, we presented each stimulus during a period of “relative quiet,” when the gradients were switched off and the only source of ambient noise was the scanner pump. We then collected one image volume (2 s acquisition time) after stimulus presentation. The repetition time was 9 s, and within each trial a 6 s stimulus was presented during a 7 s interval of reduced scanner noise. To optimize the scanning procedure for detecting neural responses within the limbic system, a custom MATLAB (http://www.mathworks.com/products/matlab/) toolbox was used prior to data acquisition to fine-tune the timing of stimulus presentation relative to image acquisition (Dolcos and McCarthy, 2006). Stimuli were selected from the International Affective Digitized Sounds (IADS) database and had normative scores for valence and arousal. Sounds were rated on a scale of 1–9: for valence, 9 = very pleasant and 1 = very unpleasant; for arousal, 9 = very arousing and 1 = not at all arousing (Bradley and Lang, 2007). Normative scores were as follows: pleasant (valence: 6.83 ± 0.54, arousal: 6.46 ± 0.56), unpleasant (valence: 2.78 ± 0.58, arousal: 6.9 ± 0.31) and neutral (valence: 4.81 ± 0.43, arousal: 4.85 ± 0.57). The normative valence ratings of the P and U sounds differed significantly (p < 0.00001), whereas their arousal scores did not. Supplementary Table 1 contains a complete list of the affective sounds used in the present study.
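The sparse-sampling timing can be laid out as a per-trial schedule. Each 9 s TR consists of a 7 s quiet interval containing the 6 s sound, followed by the 2 s volume acquisition. This is a minimal sketch. The offset between the start of the quiet interval and sound onset (`onset_delay_s`) is hypothetical: the exact timing was tuned with the custom toolbox and is not reported.

```python
from typing import List, NamedTuple

TR_S = 9.0    # repetition time (from the text)
TA_S = 2.0    # acquisition time of the single volume per trial (from the text)
STIM_S = 6.0  # stimulus duration (from the text)


class Trial(NamedTuple):
    stim_onset: float
    stim_offset: float
    acq_start: float
    acq_end: float


def sparse_schedule(n_trials, onset_delay_s=0.5, tr=TR_S, ta=TA_S, stim=STIM_S) -> List[Trial]:
    """Place each sound inside the quiet gap, followed by one volume acquisition.

    onset_delay_s is HYPOTHETICAL: the study's actual value is not reported.
    """
    quiet = tr - ta  # 7 s of reduced scanner noise per trial
    if onset_delay_s < 0 or onset_delay_s + stim > quiet:
        raise ValueError("stimulus must fit inside the quiet interval")
    trials = []
    for i in range(n_trials):
        start = i * tr
        onset = start + onset_delay_s
        # The volume is acquired after the quiet interval, i.e. after the sound ends.
        trials.append(Trial(onset, onset + stim, start + quiet, start + tr))
    return trials


schedule = sparse_schedule(90)
print(schedule[0])
```

Suppose the run contained only the 90 stimulus trials. The text does not mention additional null trials, so this is an assumption. Under it, the schedule would span 90 × 9 s = 810 s.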
We presented the sounds in the scanner at the maximum comfortable level indicated by each participant, during the relatively quiet intervals of image acquisition. Prior to data collection, subjects were given both written and verbal instructions. Additionally, to familiarize participants with the task, subjects were trained using IADS sounds that differed from the stimuli chosen for the experiment. During the experiment, Presentation 14.7 software (www.neurobs.com) running on a Windows XP computer in the fMRI control room delivered sounds and instructions via pneumatic headphones (Resonance Technology, Inc., Northridge, CA). To complete the task, subjects listened to 90 affective sounds [30 pleasant (P), 30 neutral (N), 30 unpleasant (U)], each 6 s in duration, and were instructed to rate each sound as P, N, or U as soon as they felt confident in their rating.

(b) Resting state

To acquire resting state data, continuous rather than clustered image acquisition was employed. During the resting scan, which lasted approximately 5 min, subjects were instructed to lie still and look at a fixation cross. One hundred and fifty volumes were collected for each subject. The first four volumes were discarded prior to preprocessing, leaving 146 volumes for analysis.

Preprocessing

Preprocessing was similar for both types of functional scans. Statistical Parametric Mapping 8 (SPM, Wellcome Trust Centre for Neuroimaging, http://www.fil.ion.ucl.ac.uk/spm/software/spm8/) software was used to analyze the functional imaging data. The images were first realigned using a rigid body transformation to control for head motion. Next, the low-resolution axial T2 (AxT2) image was registered to the mean fMRI image generated during the first step. The high-resolution MPRAGE image was then registered to the AxT2 image. To normalize the functional images to MNI space, the MPRAGE was normalized to a standard T1 MNI template, and the resulting normalization parameters were applied to the functional images. The normalized images were then smoothed with an 8 × 8 × 8 mm³ full-width at half-maximum Gaussian kernel. To account for artifacts created by head motion, data from three NH subjects who displayed excessive motion (defined as at or above ±1.5 mm translation and ±1.5° rotation in any direction) were excluded from further analysis. We also included motion regressors as covariates of no interest in the general linear models of the different statistical analyses, in order to (partially) remove motion-related artifacts. Further, t-tests of root-mean-square estimates of rotational and translational movement showed no statistically significant difference between the two groups (mean ± standard deviation; translational motion: NH, 0.63 ± 0.28; HL, 0.67 ± 0.23; rotational motion: NH, 0.01 ± 0.005; HL, 0.01 ± 0.004).
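The motion screening and the RMS comparison can be sketched from SPM's realignment output, which stores three translations (mm) and three rotations (radians) per volume. The sketch makes two assumptions that the paper does not spell out. First, a subject is flagged if either limit is reached. Second, "RMS" is computed over all volumes and axes. These choices are illustrative, not the authors' exact definitions, and `motion_summary` is a hypothetical helper.

```python
import math


def motion_summary(rp, trans_limit_mm=1.5, rot_limit_deg=1.5):
    """Summarize realignment parameters for one run.

    rp: rows of (tx, ty, tz, pitch, roll, yaw); translations in mm, rotations in radians.
    Assumptions: flag the run if EITHER limit is reached; RMS over all volumes and axes.
    Returns (exclude, rms_translation_mm, rms_rotation_rad).
    """
    trans = [v for row in rp for v in row[:3]]
    rots = [v for row in rp for v in row[3:6]]
    exclude = (max(abs(v) for v in trans) >= trans_limit_mm
               or max(abs(math.degrees(v)) for v in rots) >= rot_limit_deg)
    rms_trans_mm = math.sqrt(sum(v * v for v in trans) / len(trans))
    rms_rot_rad = math.sqrt(sum(v * v for v in rots) / len(rots))
    return exclude, rms_trans_mm, rms_rot_rad
```

Per-subject RMS values computed this way could then be compared between groups with a two-sample t-test, as described above.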

Data Analysis

(a) Emotion task

Behavioral data were obtained in the scanner during fMRI data acquisition. We collected each subject's rating of each sound as P, N, or U, along with reaction times. Subject ratings and reaction times were analyzed using separate two-way ANOVAs in SPSS version 20 (Statistical Package for the Social Sciences, IBM, http://www-01.ibm.com/software/analytics/spss/). Group (NH, HL) and condition (P, N, U) were set as independent fixed factors in a general linear model within SPSS, and significance was set at p < 0.05.
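The behavioral analysis is a two-way ANOVA with group and condition as fixed factors. Below is a minimal pure-Python version for a complete, balanced design. It is not SPSS's implementation, and it assumes equal numbers of observations per cell. Like the model described above, it treats condition as an independent factor. A repeated-measures or mixed model would additionally account for each subject contributing to all three conditions.

```python
from statistics import fmean


def two_way_anova(cells):
    """Balanced two-way fixed-effects ANOVA.

    cells: dict mapping (level_a, level_b) -> list of observations, with the same
    number of observations in every cell. Returns {effect: (F, df_effect, df_error)}.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(v) != n for v in cells.values()) or len(cells) != len(a_levels) * len(b_levels):
        raise ValueError("design must be complete and balanced")
    na, nb = len(a_levels), len(b_levels)
    grand = fmean(x for v in cells.values() for x in v)
    cell_mean = {k: fmean(v) for k, v in cells.items()}
    a_mean = {a: fmean(cell_mean[a, b] for b in b_levels) for a in a_levels}
    b_mean = {b: fmean(cell_mean[a, b] for a in a_levels) for b in b_levels}
    # Sums of squares for the main effects, the interaction and the error term.
    ss_a = nb * n * sum((m - grand) ** 2 for m in a_mean.values())
    ss_b = na * n * sum((m - grand) ** 2 for m in b_mean.values())
    ss_ab = n * sum((m - grand) ** 2 for m in cell_mean.values()) - ss_a - ss_b
    ss_err = sum((x - cell_mean[k]) ** 2 for k, v in cells.items() for x in v)
    df = {"A": na - 1, "B": nb - 1, "AxB": (na - 1) * (nb - 1)}
    df_err = na * nb * (n - 1)
    ms_err = ss_err / df_err
    ss = {"A": ss_a, "B": ss_b, "AxB": ss_ab}
    return {k: (ss[k] / df[k] / ms_err, df[k], df_err) for k in ss}
```

With two groups and three conditions, the group effect has 1 numerator degree of freedom, and the condition and interaction effects each have 2.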

For data analysis, trials were coded based on each individual's subjective rating of the affective sounds. We used the individual ratings, rather than the norms reported in IADS, to classify trials as “P,” “N,” or “U.” Both the IADS classification and individual ratings are valid ways to classify individual trials for further analysis. The trend in the affective neuroscience literature is to move away from normative classification toward individual classification. This is particularly true when examining special populations in which emotional responses are expected to be altered, e.g., older adults or patient populations (St Jacques et al., 2010). Note that the IADS ratings were obtained from younger adults with NH (Bradley and Lang, 2007). The present sample, by contrast, was older, with a mean age of 51.4 ± 9.9 years for NH adults and 58.2 ± 9.5 years for those with HL. Therefore, individual ratings rather than the norms were used to classify the trials obtained during fMRI scanning. First-level fixed-effects analysis was performed on each subject's smoothed images to generate P > N and U > N contrast images. Motion parameters were included in the first-level model as covariates of no interest. The contrast images were then entered into a flexible factorial analysis and post-hoc two-sample t-tests at the second level. The P + U > N contrast was computed by performing a t-test on the condition vectors for each group separately in the flexible factorial model. The three-factor design included group, subject and condition. Group was assumed to be independent with unequal variance. Subject was assumed to be independent with equal variance. Condition was assumed to be a dependent factor with equal variance. To directly compare the NH and HL groups, we conducted post-hoc two-sample t-tests within the flexible factorial model.
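Coding trials by each listener's own rating amounts to regrouping stimulus onsets before building the first-level model. At the level of condition regressors, (P − N) + (U − N) = P + U − 2N. The paper forms P + U > N at the second level from the P > N and U > N images, but the weight arithmetic is the same idea. The sketch assumes one regressor per condition ordered (P, N, U). The regressor order, the tuple layout of `trials`, and the skipping of unrated trials are hypothetical choices for illustration.

```python
CONDITIONS = ("P", "N", "U")

# Contrast weights over condition regressors ordered (P, N, U); the order is assumed.
CONTRASTS = {"P>N": (1, -1, 0), "U>N": (0, -1, 1)}
# (P - N) + (U - N) = P + U - 2N
CONTRASTS["P+U>N"] = tuple(p + u for p, u in zip(CONTRASTS["P>N"], CONTRASTS["U>N"]))


def onsets_by_rating(trials):
    """Group trial onsets by the listener's own rating rather than the IADS norm.

    trials: iterable of (onset_s, normative_label, own_rating). Trials without a
    valid rating are skipped; how missed responses were handled is not reported,
    so this is an assumption.
    """
    onsets = {c: [] for c in CONDITIONS}
    for onset, _normative, rating in trials:
        if rating in onsets:
            onsets[rating].append(onset)
    return onsets
```

For example, a sound normed as neutral but rated unpleasant by a listener contributes to that listener's U regressor.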
Additionally, we performed a region-of-interest (ROI) analysis using the Wake Forest University (WFU) PickAtlas toolbox (http://www.fmri.wfubmc.edu) within SPM8, with regions defined anatomically on the toolbox's human MNI atlas. Based on our a priori hypothesis about the involvement of auditory and limbic regions in affective sound processing, we created a single anatomically defined mask. It comprised the amygdala, insula, parahippocampus, nucleus accumbens, ventral medial prefrontal cortex, inferior colliculus, medial geniculate body and auditory cortex (Brodmann areas 41, 42, and 22). ROI analysis was performed on the NH (P + U > N), HL (P + U > N), and between-group contrasts, using small volume correction (SVC). All clusters identified in the results were reported at a significance level of p < 0.025 FWE at either the voxel or cluster level; the threshold was halved from the standard p < 0.05 to account for a two-tailed t-test.
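The halved threshold can be seen as running each direction of a group comparison (for example NH > HL and HL > NH) as its own one-sided t contrast. The conventional two-sided level is then split equally between them:

$$\alpha_{\text{per tail}} = \frac{\alpha_{\text{two-tailed}}}{2} = \frac{0.05}{2} = 0.025$$

By the union bound, the chance of a false positive in either direction is then at most 0.025 + 0.025 = 0.05.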

(b) Resting state

Preprocessing of the resting state data began with slice-timing correction for the interleaved, ascending acquisition. The same preprocessing steps used for the emotion task were then applied. The Functional Connectivity Toolbox (Conn) (Whitfield-Gabrieli and Nieto-Castanon, 2012) for MATLAB was used for data analysis. The smoothed fMRI data were band-pass filtered from 0.008 to 0.08 Hz. The average BOLD time series of the segmented white matter and cerebrospinal fluid were regressed out of the data, as were the realignment (motion) parameters generated during preprocessing. Seed-to-voxel analysis was then performed on the auditory resting state network, the DMN, and the DAN. Connectivity was assessed using pairs of seed regions for both the DMN and the auditory network; correlations between each seed and the whole brain were computed and averaged across seed pairs. Seed locations are listed in Table 2. For the auditory network, seeds were located in the bilateral primary auditory cortices. For the DMN, they were located in the medial prefrontal cortex and the posterior cingulate cortex. The DAN was examined using four seeds in the left and right posterior intraparietal sulci and the left and right frontal eye fields (Burton et al., 2012). All seeds were created using MarsBaR (Brett et al., 2002) and had a 5 mm radius. Seed coordinates were the same as those used by Schmidt et al. (2013). The resting state data used in the present study were partially described in Schmidt et al. (2013) but were re-analyzed for the purpose of this study. Whole-brain correlation maps were created for each seed and then averaged over all seeds of a given network for each subject. These correlations were then z-transformed, group averages were computed, and between-group comparisons were made via two-sample t-tests in the Conn toolbox (Whitfield-Gabrieli and Nieto-Castanon, 2012).
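The denoising and seed-to-voxel steps can be approximated with NumPy as below. This is a simplified sketch, not Conn's implementation, and it makes three choices of its own. It filters with a hard FFT mask. It filters the confounds the same way before regressing them out. It follows the text in averaging correlation maps over a network's seeds before applying Fisher's r-to-z transform (arctanh), which is assumed to be the z-transform meant here. The resting state TR is not reported; 150 volumes in about 5 min suggests roughly 2 s, which the test values assume.

```python
import numpy as np


def bandpass_fft(ts, tr_s, low_hz=0.008, high_hz=0.08):
    """Zero Fourier components outside [low_hz, high_hz]; ts is (time, columns)."""
    n = ts.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr_s)
    spectrum = np.fft.rfft(ts, axis=0)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0
    return np.fft.irfft(spectrum, n=n, axis=0)


def regress_out(ts, confounds):
    """Remove the least-squares fit of the confounds (plus an intercept) from ts."""
    design = np.column_stack([np.ones(ts.shape[0]), confounds])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta


def clean(ts, confounds, tr_s):
    """Filter data and confounds identically, then regress the confounds out."""
    return regress_out(bandpass_fft(ts, tr_s), bandpass_fft(confounds, tr_s))


def seed_network_z(ts, seed_masks):
    """Average seed-to-voxel Pearson r over a network's seeds, then Fisher z.

    ts: (time, voxels) cleaned data; seed_masks: boolean arrays over voxels.
    """
    zs = (ts - ts.mean(axis=0)) / ts.std(axis=0)
    r_maps = []
    for mask in seed_masks:
        seed = ts[:, mask].mean(axis=1)          # mean time series of the seed
        seed_z = (seed - seed.mean()) / seed.std()
        r_maps.append(zs.T @ seed_z / len(seed_z))  # Pearson r with every voxel
    r_mean = np.mean(r_maps, axis=0)
    # Clip so voxels inside a seed (r = 1) give a finite z value.
    return np.arctanh(np.clip(r_mean, -0.999999, 0.999999))
```

For one subject, `seed_network_z(clean(voxel_ts, confounds, tr_s=2.0), seed_masks)` would give one network map. Here `voxel_ts`, `confounds` (white matter, CSF and motion regressors) and `seed_masks` are hypothetical inputs.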
Results were then exported to SPM8 for display. Following whole-brain analysis at an uncorrected threshold of p < 0.001, we selected clusters that were significant at p < 0.025 FWE at either the voxel or cluster level, with the cluster extent set at 25 voxels. The halved threshold accounts for both tails of the t-test.
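The 25-voxel extent rule applied after the p < 0.001 uncorrected threshold can be illustrated with a connected-components pass over a voxelwise p-value map. This sketch covers only that extent filter. It does not reproduce the FWE correction itself, which SPM derives from random field theory. The 6-connected neighborhood is an assumption, since SPM's own cluster definition may use a different connectivity.

```python
from collections import deque

import numpy as np

# Face-adjacent (6-connected) neighbors; an assumed connectivity.
NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def cluster_extent_filter(p_map, p_thresh=0.001, min_voxels=25):
    """Keep voxels with p < p_thresh that belong to clusters of >= min_voxels voxels."""
    supra = p_map < p_thresh
    seen = np.zeros(p_map.shape, dtype=bool)
    kept = np.zeros(p_map.shape, dtype=bool)
    for start in zip(*np.nonzero(supra)):
        if seen[start]:
            continue
        # Breadth-first search collects every suprathreshold voxel connected to start.
        seen[start] = True
        queue, members = deque([start]), [start]
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, p_map.shape))
                if inside and supra[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
                    members.append(nb)
        if len(members) >= min_voxels:
            for v in members:
                kept[v] = True
    return kept
```

A 27-voxel cube of suprathreshold voxels survives this filter, whereas an isolated 8-voxel cube does not.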

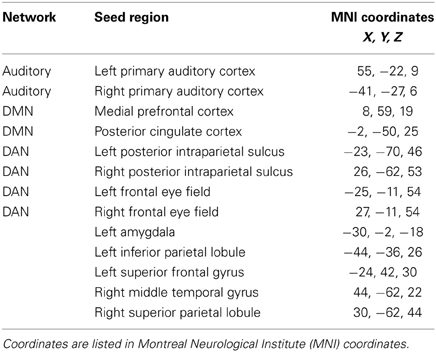

Table 2. Seed regions for the resting state functional connectivity analysis, consisting of seeds for canonical resting state networks and for networks based on local maxima from the results of the emotion task study.

A seeding analysis designed to examine resting state network connectivity was performed using ROIs determined from published studies as stated earlier, as well as using ROIs identified from the task-based study. The latter ROIs included the left amygdala, left inferior parietal lobule, left superior frontal gyrus, right middle temporal gyrus, and the right superior parietal lobule (Table 2). The left amygdala seed was created based on the NH > HL (P + U > N) contrast from the task ROI analysis. The right superior parietal lobule and left inferior parietal lobule were both based on the HL > NH (P + U > N) contrast, and the right middle temporal gyrus and left superior frontal gyrus seeds were based on the NH > HL (P + U > N) contrast from the emotion task results. All of these ROIs were determined from group-level contrasts. Peak maxima were used as the centers for the spherical ROIs, and the mean BOLD timeseries of the voxels in the ROI was generated. Seed specification, data generation and statistical analyses were performed in the manner described earlier for the standard seeds.
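Building a spherical seed around a peak and extracting its mean time series can be sketched as follows. The sketch assumes the normalized images come with a 4 × 4 voxel-to-MNI affine, for example as exposed by nibabel's `img.affine`. The 5 mm radius matches the one stated for the standard seeds. The toy affine and grid in the test are illustrative; the actual peak coordinates are those in Table 2. The function names are hypothetical.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm=5.0):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm.

    affine: 4x4 voxel-to-world (MNI mm) matrix of the normalized images.
    """
    idx = np.indices(shape).reshape(3, -1)            # voxel indices, one column each
    homog = np.vstack([idx, np.ones(idx.shape[1])])   # homogeneous coordinates
    world = (affine @ homog)[:3]                      # voxel centers in mm
    dist = np.linalg.norm(world.T - np.asarray(center_mm, dtype=float), axis=1)
    return (dist <= radius_mm).reshape(shape)


def roi_mean_timeseries(data4d, mask):
    """Mean BOLD time series over the voxels in mask; data4d is (x, y, z, time)."""
    return data4d[mask].mean(axis=0)
```

On a 2 mm grid, a 5 mm sphere contains 81 voxels, so the seed time series averages over a small neighborhood rather than a single peak voxel.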

Results

Behavioral Data

(a) Emotion task

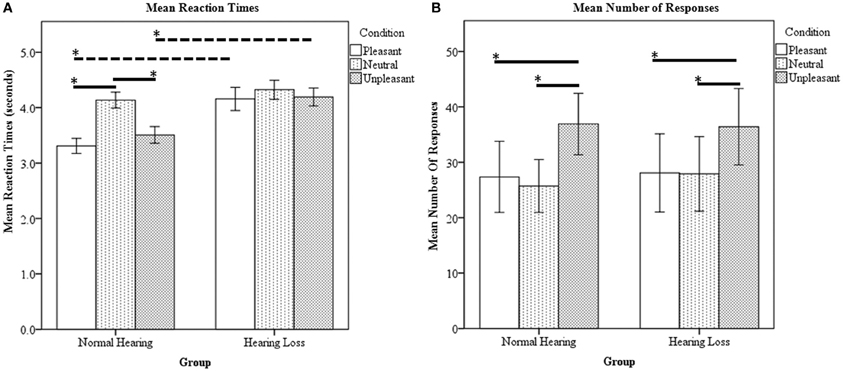

Ratings were obtained in the scanner simultaneously with fMRI data acquisition. For the reaction time data, there was a statistically significant main effect of group [F(1, 23) = 69.53, p < 0.000001], a main effect of condition [F(1, 23) = 17.59, p < 0.000001] and an interaction between group and condition [F(1, 23) = 7.79, p < 0.001]. The NH group responded significantly more slowly to the neutral sounds than to the pleasant and unpleasant sounds (Figure 1A). The HL group's reaction times for the three types of sounds did not differ significantly (Figure 1A). In between-group comparisons, the HL group was significantly slower than the NH group for both pleasant and unpleasant sounds (Figure 1A). For the response categories, there was a significant main effect of condition [F(1, 23) = 7.162, p < 0.01]; however, neither the main effect of group [F(1, 23) = 0.118, p = 0.733] nor the interaction [F(1, 23) = 0.109, p = 0.897] reached significance. Both groups rated significantly more stimuli as unpleasant than as pleasant or neutral, as determined by post-hoc within-group t-tests (Figure 1B). Note that the experimental design used an equal number of sounds classified as P, N, and U according to the normative IADS scores (Bradley and Lang, 2007). Because of the observed deviation from the normative ratings, we coded the trials during analysis according to each individual's rating.

Figure 1. Affective sound categorization task behavioral results. (A) Mean reaction time data. Within groups, the HL group's reaction times did not differ statistically between P, N, and U sounds, whereas the NH group responded significantly more slowly to N sounds than to P and U stimuli. Compared to the NH group, the HL group's reaction times were significantly slower for P and U sounds. (B) Mean number of responses. Both the NH and HL groups gave significantly more U responses than N or P responses. Statistical significance (p < 0.05) is indicated by *.

(b) Resting state

No behavioral data were obtained for the resting state study.

fMRI Data

(a) Emotion task

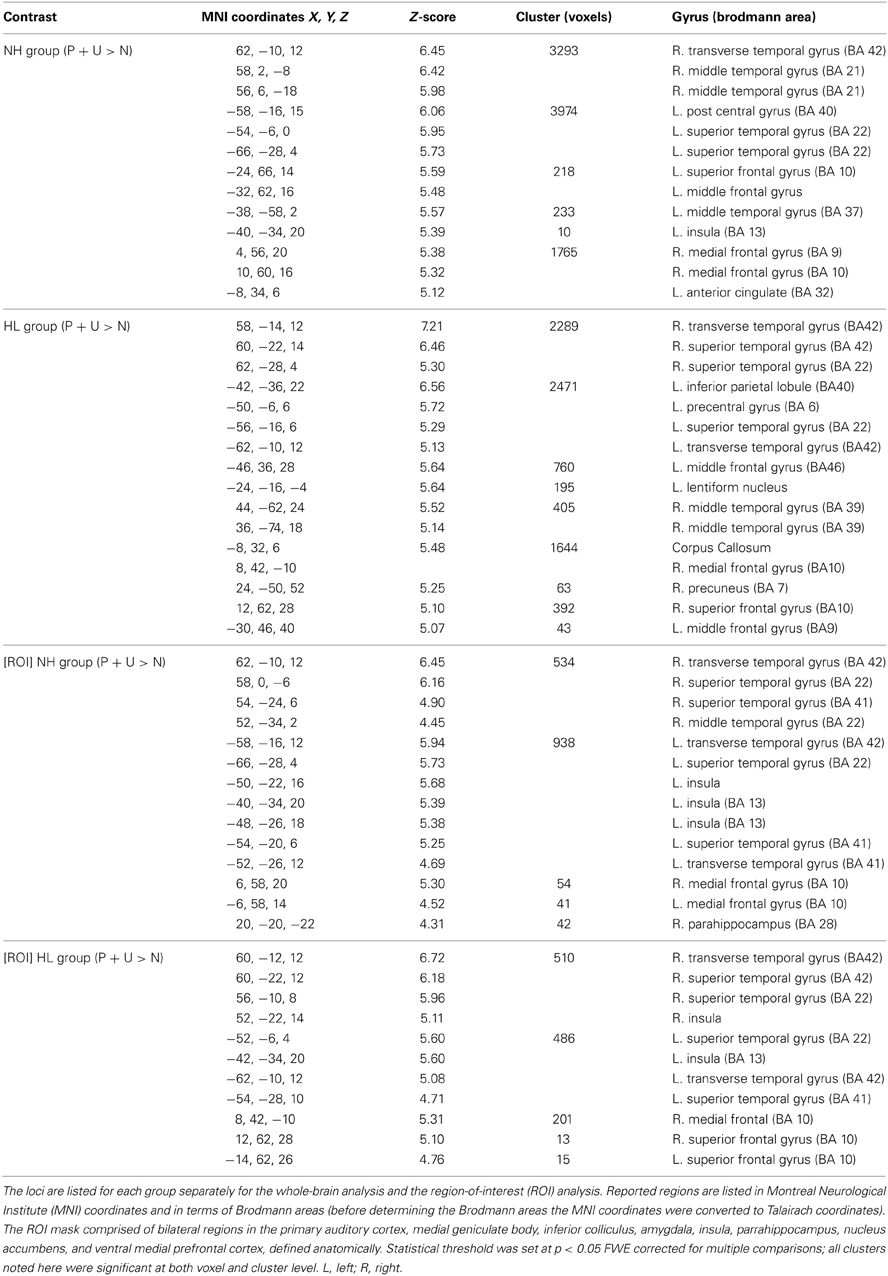

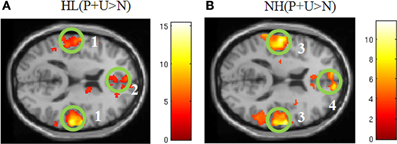

Within-group comparisons: In the NH group, areas of increased activation for the contrast P + U > N were observed in the bilateral middle temporal gyri, right transverse temporal gyrus, left superior temporal gyrus, left postcentral gyrus, right medial frontal gyrus, left superior frontal gyrus, left middle frontal gyrus, left anterior cingulate and the left insula (Figure 2, Table 3). In the HL group, affective sounds elicited increased responses compared to neutral sounds in the bilateral superior temporal gyri, bilateral transverse temporal gyri, right middle temporal gyrus, right superior frontal gyrus, left middle frontal gyrus, right medial frontal gyrus, right precuneus, left inferior parietal lobule, left precentral gyrus, left lentiform nucleus and corpus callosum (Figure 2, Table 3). ROI analysis revealed increased responses in the bilateral transverse temporal gyri, bilateral superior temporal gyri, bilateral medial frontal gyri, left insula, right middle temporal gyrus and right parahippocampus for the NH (P + U > N) contrast (Table 3). For the HL (P + U > N) contrast, increased responses were observed in the bilateral transverse temporal gyri, bilateral superior temporal gyri, bilateral superior frontal gyri, right medial frontal gyrus and left insula (Table 3).

Figure 2. Statistical parametric maps for the effect of affective stimuli (P + U > N) for each group separately (A,B). (A) HL (P + U > N) and (B) NH (P + U > N) images illustrate the similar whole brain response patterns from both groups (MNI coordinate z = +14). The maps are displayed at p < 0.001 uncorrected level for better visualization, but the clusters in the circles are corrected for multiple comparisons (p < 0.05 FWE). (1) bilateral middle temporal gyrus, (2) medial frontal gyrus, (3) bilateral middle temporal gyrus, (4) medial frontal gyrus.

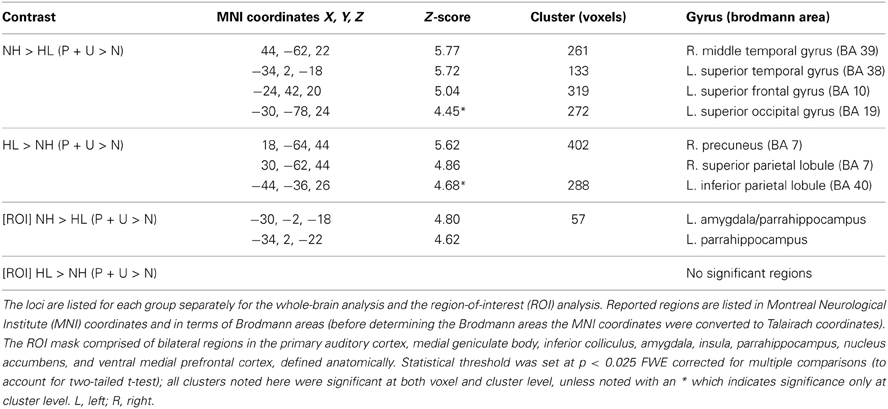

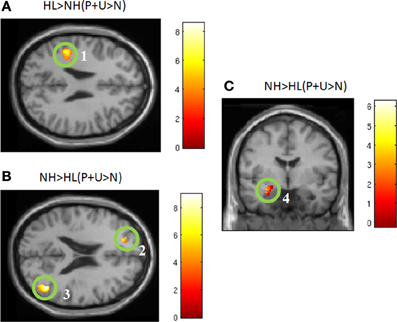

Between-group comparisons: For the NH > HL (P + U > N) comparison, we observed heightened response in the left superior frontal gyrus, right middle temporal gyrus, left superior temporal gyrus, and left superior occipital gyrus (Figure 3, Table 4). Concerning the HL > NH (P + U > N) comparison, elevated response was observed in the right superior parietal lobule, right precuneus, and left inferior parietal lobule (Figure 3, Table 4). For the ROI analysis, no suprathreshold voxels were obtained for the HL > NH (P + U > N) contrast. However, for the NH > HL (P + U > N) comparison, increased response was observed in the left amygdala and left parahippocampus (Figure 3, Table 4).

Figure 3. Statistical parametric maps for post-hoc two-tailed two sample t-tests and region-of-interest analysis (ROI). (A) HL > NH (P + U > N) and (B) NH > HL (P + U > N) illustrate the brain regions chosen from the post-hoc two sample t-tests for the seed analysis (MNI coordinate z = +26, z = +21, respectively). (C) Denotes increased amygdala activation observed for the NH > HL (P + U > N) comparison (MNI coordinate y = −4). The maps are displayed at p < 0.001 uncorrected level for better visualization, but the clusters in the circles are corrected for multiple comparisons (p < 0.025 FWE). (1) left inferior parietal lobule, (2) left superior frontal gyrus, (3) right middle temporal gyrus, (4) left amygdala.

(b) Resting state

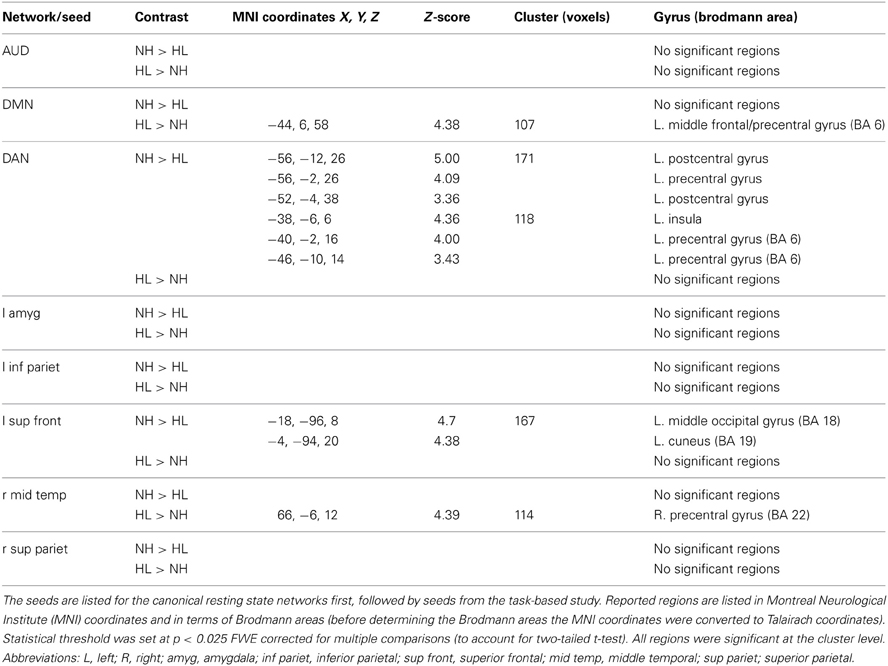

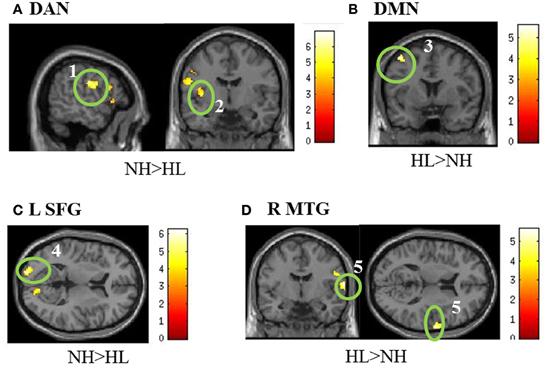

No significant differences were found between groups in the auditory resting state network. For the DMN, the HL > NH comparison revealed a significant difference in the left middle frontal/precentral gyrus. For the DAN, significant differences were found in the left postcentral/precentral gyrus and left insula, where the HL group showed decreased connectivity relative to the NH group. These results for the typical intrinsic networks are listed in Table 5 and displayed in Figure 4. In the connectivity analysis using task-based ROIs as seeds, no significant group differences were seen for the left amygdala seed or for the left inferior parietal lobule seed. With the seed in the left superior frontal gyrus, the NH > HL contrast showed significant differences in connectivity with the left middle occipital gyrus. The seed in the right middle temporal gyrus showed a stronger correlation with the right precentral gyrus in the HL group than in the NH group. Finally, no connectivity differences were seen with the seed in the right superior parietal lobule. The results of this analysis are also shown in Table 5 and Figure 4.
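The seed-based comparisons above rest on correlating a seed region's mean time series with every voxel. A minimal sketch, assuming data that have already been preprocessed and denoised (the nuisance regression, filtering, and toolbox settings used in the study are not described here), computes Pearson correlations and applies the Fisher r-to-z transform, which makes the per-subject maps better suited to group-level two-sample t-tests. Function and variable names are illustrative.

```python
import numpy as np


def seed_connectivity(seed_ts: np.ndarray, voxel_ts: np.ndarray) -> np.ndarray:
    """Fisher z-transformed Pearson correlation between a seed and each voxel.

    seed_ts: shape (T,), the mean time series of the seed region.
    voxel_ts: shape (T, V), one column per voxel.
    """
    # z-score with the population SD so that the mean product equals Pearson's r.
    seed_z = (seed_ts - seed_ts.mean()) / seed_ts.std()
    vox_z = (voxel_ts - voxel_ts.mean(axis=0)) / voxel_ts.std(axis=0)
    r = seed_z @ vox_z / seed_ts.shape[0]
    # Clip away from +/-1 so arctanh stays finite for perfectly correlated voxels.
    r = np.clip(r, -0.999999, 0.999999)
    return np.arctanh(r)
```

In a group analysis, each participant contributes one such z-map per seed, and the NH and HL maps are then compared voxelwise with a two-sample t-test.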

Figure 4. Statistical parametric maps of the two-tailed two-sample t-tests of the resting state functional connectivity results. (A,B) show the results of the seed-based analyses of the resting state networks, while (C,D) show connectivity with the task-based seeds. The maps are displayed at an uncorrected threshold of p < 0.001 for better visualization, but the circled clusters are corrected for multiple comparisons (p < 0.025 FWE). In (A), the seed regions were located in the bilateral posterior intraparietal sulci and the bilateral frontal eye fields to examine connectivity in the dorsal attention network (DAN). For the default mode network (DMN) in (B), seeds were located in the posterior cingulate and medial prefrontal cortices. The seed regions for (C,D) are labeled in the figure. (1) left postcentral gyrus, (2) left insula, (3) left middle frontal/precentral gyrus, (4) left middle occipital gyrus, (5) right precentral gyrus. MNI coordinates for the subfigures are: (A) x = −56, y = 6; (B) y = 6; (C) z = −18 left; (D) y = −6, z = 12. Abbreviations: DAN, dorsal attention network; DMN, default mode network; L SFG, left superior frontal gyrus; R MTG, right middle temporal gyrus.

Discussion

We used a combined task- and rest-based fMRI study to identify the influence of HL on auditory and emotion processing. The ratings collected in the scanner were similar in the two groups for the unpleasant, pleasant, and neutral sounds; both groups tended to rate more sounds as unpleasant than as pleasant or neutral. However, the HL group differed from the NH group in response times, which were significantly slower for the affective sounds. Overlapping patterns of fMRI activation were observed in both groups when processing affective compared with neutral sounds. The main finding for the emotion task, obtained via targeted ROI analysis, was increased activation in the left amygdala/parahippocampal gyrus complex for the NH > HL (P + U > N) comparison. The reverse contrast, HL > NH (P + U > N), did not show increased activation within the limbic system, but instead revealed heightened responses in the right superior parietal lobule, right precuneus, and left inferior parietal lobule. The resting-state functional connectivity analysis in the same participants focused on the canonical intrinsic networks and on seed regions obtained from the task-based activation patterns. Among the typical intrinsic networks, the default mode network and the DAN, but not the auditory network, revealed differences between the groups. Seeds placed at the local maxima of the task-based analysis revealed decreased connectivity between the frontal cortex and other brain regions in the HL group, with the exception of stronger connectivity between the right middle temporal gyrus and the right precentral gyrus in the HL group compared to the NH group. Our results suggest that HL may alter the emotional processing networks and lead to slower reaction times to affective stimuli.

Emotion Task

HL may reduce the engagement of the emotional processing system through disordered processing of either acoustic or valence features. Complex anatomical and functional connections exist between the auditory cortex and the limbic system, primarily the amygdala (Amaral and Price, 1984; Blood and Zatorre, 2001; Koelsch et al., 2006; Tschacher et al., 2010; Kumar et al., 2012). Forward projections from the auditory cortex to the amygdala have been shown to be modulated by acoustic features, whereas backward projections appear to be modulated by the valence of sound; the forward and backward projections work in concert to interpret incoming affective stimuli (Kumar et al., 2012). Sound deprivation may reduce the acoustic or valence information available to this network. Individuals with HL may therefore not receive all of the information necessary for a robust emotional response, resulting in a dampened response to affective stimuli. We investigated this hypothesis via a whole-brain analysis and a targeted ROI analysis of auditory and limbic areas.

In our study, both positively and negatively valent sounds elicited greater engagement of the limbic system and faster response times than neutral sounds in NH individuals. This pattern differed in the HL group, which, compared to the NH group, showed a decreased response in the temporal and frontal cortices (whole-brain analysis) and in the amygdala and parahippocampus (ROI analysis), and an increased response in the parietal cortices and precuneus when processing affective sounds (Table 4). Similarly, the reaction times for the P and U sounds were significantly slower in the HL group (Figure 1A). The behavioral data suggest that the faster processing of affective sounds found in the NH group does not occur in the HL group. The slower responses to pleasant and unpleasant sounds in the HL group may be due to a lack of fast, bottom-up engagement of the amygdala and other limbic structures during auditory processing. However, HL does not appear to affect the response to all sounds: reaction times for neutral sounds were nearly identical in the two groups. Instead, the highly valent sounds were most affected, suggesting that HL impairs the identification of valence information rather than of acoustic information. Another possible explanation for the differential processing of affective sounds by the HL group is that the affective sounds carry more energy or information at high frequencies than the neutral sounds; difficulty processing this high-frequency information would then lead to longer reaction times in the HL group. To maintain ecological validity, we chose not to low-pass filter the sounds to compensate for the hearing loss of the HL group. In a previous study with mild-to-moderate HL (Husain et al., 2011b), we filtered the sounds so that there was no energy at frequencies above 2 kHz. In that study, we found no difference in accuracy or reaction times between the HL and NH groups on a discrimination task; nevertheless, their fMRI activation patterns differed (Husain et al., 2011b). In sum, regardless of the actual mechanism, our results suggest that HL may reduce engagement of the amygdala and result in slower reactions to positively and negatively valent sounds.
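The earlier study's manipulation removed all energy above 2 kHz (Husain et al., 2011b), but the filter design is not described here. As a minimal sketch under that assumption, an ideal ("brick-wall") low-pass can be applied by zeroing every FFT bin above the cutoff. This is a swapped-in illustration, not the authors' procedure; practical stimulus preparation would more likely use a smoother filter to limit ringing. The function name and default cutoff are illustrative.

```python
import numpy as np


def brickwall_lowpass(signal: np.ndarray, fs: float, cutoff_hz: float = 2000.0) -> np.ndarray:
    """Remove all spectral energy above cutoff_hz from a mono signal sampled at fs Hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.shape[0], d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0  # ideal filter: zero every bin above the cutoff
    return np.fft.irfft(spectrum, n=signal.shape[0])


if __name__ == "__main__":
    fs = 16000.0
    t = np.arange(int(fs)) / fs  # 1 s of audio
    mixture = np.sin(2 * np.pi * 1000 * t) + np.sin(2 * np.pi * 4000 * t)
    filtered = brickwall_lowpass(mixture, fs)
    # The 4 kHz tone is removed; the 1 kHz tone passes essentially unchanged.
    print(np.max(np.abs(filtered - np.sin(2 * np.pi * 1000 * t))))
```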

Another interesting aspect of the behavioral results was that more sounds were classified as unpleasant than the published normative data would predict (Bradley and Lang, 2007). There are at least two possible explanations for this finding. First, the normative scores were obtained in a young, NH population; it is therefore not surprising that both groups of older, middle-aged participants in our study departed from these ratings, which points to an effect of age. Second, discomfort in the scanner may have led our participants to rate the sounds more negatively. To tease apart these explanations and better understand the effect of aging on emotional processing, we intend to conduct a follow-up study with both young and older participants using stimuli from the IADS database.

Resting State Data

Resting state functional connectivity demonstrated alterations in the frontal cortex in HL patients. Compared to NH controls, HL patients showed increased connectivity between the DMN seed regions and the left middle frontal/precentral gyrus, and decreased connectivity between the DAN seed regions and the left postcentral/precentral gyrus and left insula. A decreased correlation was also seen between the left superior frontal cortex and the left middle occipital gyrus in HL subjects compared to controls. The relationship between HL and altered frontal connectivity may not be confined to rest, as engagement of frontal regions also differed between the groups during the emotion task. The cause of these network alterations is not clear. The alterations could be purely attentional in nature, but they may also reflect interactions between emotional and attentional systems. A study including an attentional task without an emotional component may help to clarify this.

Except for activation of the left temporal pole, we did not find evidence to support our expectation that the response of the auditory cortex would differ between the two groups when processing affective sounds. A separate resting-state functional connectivity analysis with seeds in the primary auditory cortices also failed to find significant connectivity differences at rest between the two groups. The lack of significant findings may relate to the mild-to-moderate severity of HL in our sample. To our knowledge, there have been no previous studies of resting state functional connectivity in patients with this level of HL. Deafness, however, has been investigated in this context: intrinsic connectivity is affected by deafness both within and outside the temporal cortex (Li et al., 2013). The findings of Li et al. (2013) resemble our own. Deaf patients showed increased negative correlations between the middle superior temporal sulcus and the left middle occipital and right precentral gyri when compared to NH controls. In our study, the left middle occipital gyrus was less correlated with the left superior frontal gyrus in the HL group, whereas the right precentral gyrus was more correlated with the right middle temporal gyrus. The presence of altered connectivity in similar regions in both deaf patients and our patients with mild-to-moderate HL warrants further resting state connectivity studies examining varying degrees of HL.

It is important to note that inferences about the directionality of connections cannot be made from the present functional connectivity analysis. An effective connectivity analysis, perhaps implemented with structural equation modeling or dynamic causal modeling, would be needed to examine directionality (Horwitz, 2003). Our results indicate only a general alteration in connectivity between two regions; differences in correlation need not reflect specific coupling between the seed and a particular region, but may also arise from the influence of a third region or from changes in noise (Friston, 1994).
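The third-region caveat can be made concrete with a small simulation. The sketch below is illustrative only, using simulated signals and a hypothetical helper name: two regions that are both driven by a common third region correlate strongly even though neither influences the other, and regressing out the third region (a partial correlation) removes most of that correlation. Partial correlation is not an effective connectivity method and says nothing about direction; that would still require approaches such as structural equation modeling or dynamic causal modeling.

```python
import numpy as np


def partial_corr(x: np.ndarray, y: np.ndarray, z: np.ndarray) -> float:
    """Correlation between x and y after linearly regressing z (plus an intercept) out of both."""
    design = np.column_stack([np.ones_like(z), z])

    def residualize(v: np.ndarray) -> np.ndarray:
        beta, *_ = np.linalg.lstsq(design, v, rcond=None)
        return v - design @ beta

    return float(np.corrcoef(residualize(x), residualize(y))[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    n = 5000
    third = rng.standard_normal(n)                   # common driver (a "third region")
    region_a = third + 0.5 * rng.standard_normal(n)  # A and B never influence each other
    region_b = third + 0.5 * rng.standard_normal(n)
    print("full r:", np.corrcoef(region_a, region_b)[0, 1])      # close to the theoretical 0.8
    print("partial r:", partial_corr(region_a, region_b, third))  # near zero
```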

HL is positively correlated with age (Yueh et al., 2003); age is therefore a potential confound in our research. A study of resting state networks (Onoda et al., 2012) found that the correlation between the auditory resting state network and the salience network (which includes the insula, ventrolateral prefrontal cortex (VLPFC), thalamus, and cerebellum) decreased significantly with age. In addition, connections between regions of the salience network also weakened with age. The regions of the salience network relate to the processing of emotional stimuli. It is possible that HL within the older population of that study was partially responsible for its results. The decreased correlation between regions of the DAN and the insula seen in our study fits well with these aging results. In our own study, however, all participants were matched for age; therefore, we are unable to parse out the effects of age from those of HL. Further fMRI studies specifically addressing the effects of HL of varying severity, in different age groups, should be performed to clarify its impact on intrinsic and task-based functional networks.

Subject motion in the scanner is another notable confound, and resting state data are particularly sensitive to its influence. We excluded data from participants who exhibited excessive head motion, included motion parameters as covariates of no interest in our statistical models, and statistical tests showed no significant differences in motion between the groups. Even so, motion-related artifacts may continue to affect our results, as shown by recent publications (Kundu et al., 2012; Van Dijk et al., 2012; Kundu et al., 2013; Power et al., 2014). Future studies with more stringent data acquisition and more advanced analytical methods will be needed to fully account for the possibility of motion artifacts.
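One widely used motion summary from the work of Power and colleagues cited above is framewise displacement (FD): the sum of the absolute volume-to-volume changes in the six rigid-body realignment parameters, with rotations converted to millimeters of arc on a sphere, conventionally 50 mm in radius. The motion criterion actually applied in this study is not specified here, so the sketch below rests on assumptions: parameters ordered as three translations (mm) followed by three rotations (radians), as in SPM realignment output, and a hypothetical mean-FD exclusion threshold. Per-subject mean FD is also a convenient quantity for testing whether groups differ in motion.

```python
import numpy as np


def framewise_displacement(params: np.ndarray, radius_mm: float = 50.0) -> np.ndarray:
    """FD per volume from a (T, 6) array: 3 translations (mm), then 3 rotations (radians).

    The first volume has no predecessor, so its FD is set to 0.
    """
    deltas = np.abs(np.diff(np.asarray(params, dtype=float), axis=0))
    deltas[:, 3:] *= radius_mm  # radians -> mm of arc on a sphere of radius_mm
    return np.concatenate([[0.0], deltas.sum(axis=1)])


def exceeds_motion_limit(params: np.ndarray, max_mean_fd_mm: float = 0.5) -> bool:
    """Hypothetical exclusion rule: flag a run whose mean FD exceeds max_mean_fd_mm."""
    return bool(framewise_displacement(params).mean() > max_mean_fd_mm)
```

Comparing per-subject mean FD between the NH and HL groups, for example with a two-sample t-test, is one way to check that motion does not differ systematically between groups.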

Conclusion

Our results suggest that HL may affect emotional processing by decreasing amygdalar recruitment, resulting in slower reaction times to highly valent sounds. Although the HL group demonstrated slower response times to affective sounds, there was no difference in sound ratings between the HL and the NH group. Altered engagement of the frontal regions was also demonstrated in both emotion task-based subtraction and resting state functional connectivity analyses. HL is the third most common chronic condition in older adults and is highly comorbid with another hearing disorder, tinnitus. Our results in those with unaided, mild-to-moderately severe HL have implications for auditory rehabilitation for hearing impairment, reducing social isolation in the elderly, and management strategies for tinnitus.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to acknowledge the support of the Tinnitus Research Consortium to Fatima T. Husain and of the NeuroEngineering NSF IGERT (Integrative Graduate Education and Research Traineeship) to Jake R. Carpenter-Thompson and Sara A. Schmidt. We are grateful to Kwaku Akrofi and Jaclyn Utz for their assistance in data acquisition.

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fnsys.2014.00010/abstract

Supplementary Table 1 | Sounds included in the study. The 30 pleasant, 30 unpleasant, and 30 neutral sounds chosen from the IADS database are listed in separate columns.

References

Amaral, D. G., and Price, J. L. (1984). Amygdalo-cortical projections in the monkey (Macaca fascicularis). J. Comp. Neurol. 230, 465–496. doi: 10.1002/cne.902300402

Anticevic, A., Repovs, G., and Barch, D. M. (2012). Emotion effects on attention, amygdala activation, and functional connectivity in schizophrenia. Schizophr. Bull. 38, 967–980. doi: 10.1093/schbul/sbq168

Bartels, H., Middel, B. L., Van Der Laan, B. F., Staal, M. J., and Albers, F. W. (2008). The additive effect of co-occurring anxiety and depression on health status, quality of life and coping strategies in help-seeking tinnitus sufferers. Ear Hear. 29, 947–956. doi: 10.1097/AUD.0b013e3181888f83

Beck, A. T., and Steer, R. A. (1984). Internal consistencies of the original and revised Beck Depression Inventory. J. Clin. Psychol. 40, 1365–1367. doi: 10.1002/1097-4679(198411)40:6<1365::AID-JCLP2270400615>3.0.CO;2-D

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Bradley, M. M., and Lang, P. J. (2007). The International Affective Digitized Sounds (IADS-2): Affective Ratings of Sounds and Instruction Manual. Gainesville, FL: University of Florida, Technical Report: B-3.

Brett, M., Anton, J. L., Valabregue, R., and Poline, J. B. (2002). Region of interest analysis using an SPM toolbox. Neuroimage 16, 1140–1141.

Burton, H., Wineland, A., Bhattacharya, M., Nicklaus, J., Garcia, K. S., and Piccirillo, J. F. (2012). Altered networks in bothersome tinnitus: a functional connectivity study. BMC Neurosci. 13:3. doi: 10.1186/1471-2202-13-3

Carabellese, C., Appollonio, I., Rozzini, R., Bianchetti, A., Frisoni, G. B., Frattola, L., et al. (1993). Sensory impairment and quality of life in a community elderly population. J. Am. Geriatr. Soc. 41, 401–407.

Cruickshanks, K. J., Wiley, T. L., Tweed, T. S., Klein, B. E., Klein, R., Mares-Perlman, J. A., et al. (1998). Prevalence of hearing loss in older adults in Beaver Dam, Wisconsin. The Epidemiology of Hearing Loss Study. Am. J. Epidemiol. 148, 879–886. doi: 10.1093/oxfordjournals.aje.a009713

Davis, A., and Rafaie, E. A. (2000). “Epidemiology of tinnitus,” in Tinnitus Handbook, ed R. S. Tyler (San Diego, CA: Singular), 1–24.

Dolcos, F., and McCarthy, G. (2006). Brain systems mediating cognitive interference by emotional distraction. J. Neurosci. 26, 2072–2079. doi: 10.1523/JNEUROSCI.5042-05.2006

Feldmann, H., and Kumpf, W. (1988). [Listening to music in hearing loss with and without a hearing aid]. Laryngol. Rhinol. Otol. (Stuttg). 67, 489–497. doi: 10.1055/s-2007-998547

Fox, M. D., Snyder, A. Z., Vincent, J. L., Corbetta, M., Van Essen, D. C., and Raichle, M. E. (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc. Natl. Acad. Sci. U.S.A. 102, 9673–9678. doi: 10.1073/pnas.0504136102

Franks, J. R. (1982). Judgments of hearing aid processed music. Ear Hear. 3, 18–23. doi: 10.1097/00003446-198201000-00004

Friston, K. J. (1994). Functional and effective connectivity in neuroimaging: a synthesis. Hum. Brain Mapp. 2, 56–78. doi: 10.1002/hbm.460020107

Gaab, N., Gaser, C., Zaehle, T., Jancke, L., and Schlaug, G. (2003). Functional anatomy of pitch memory–an fMRI study with sparse temporal sampling. Neuroimage 19, 1417–1426. doi: 10.1016/S1053-8119(03)00224-6

Giraud, A. L., Chery-Croze, S., Fischer, G., Fischer, C., Vighetto, A., Gregoire, M. C., et al. (1999). A selective imaging of tinnitus. Neuroreport 10, 1–5. doi: 10.1097/00001756-199901180-00001

Golm, D., Schmidt-Samoa, C., Dechent, P., and Kroner-Herwig, B. (2013). Neural correlates of tinnitus related distress: an fMRI-study. Hear. Res. 295, 87–99. doi: 10.1016/j.heares.2012.03.003

Gopinath, B., Wang, J. J., Schneider, J., Burlutsky, G., Snowdon, J., McMahon, C. M., et al. (2009). Depressive symptoms in older adults with hearing impairments: the Blue Mountains Study. J. Am. Geriatr. Soc. 57, 1306–1308. doi: 10.1111/j.1532-5415.2009.02317.x

Greicius, M. (2008). Resting-state functional connectivity in neuropsychiatric disorders. Curr. Opin. Neurol. 21, 424–430. doi: 10.1097/WCO.0b013e328306f2c5

Hall, D. A., Haggard, M. P., Akeroyd, M. A., Palmer, A. R., Summerfield, A. Q., Elliott, M. R., et al. (1999). “Sparse” temporal sampling in auditory fMRI. Hum. Brain Mapp. 7, 213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N

Hicks, C. B., and Tharpe, A. M. (2002). Listening effort and fatigue in school-age children with and without hearing loss. J. Speech Lang. Hear. Res. 45, 573–584. doi: 10.1044/1092-4388(2002/046)

Horwitz, B. (2003). The elusive concept of brain connectivity. Neuroimage 19, 466–470. doi: 10.1016/S1053-8119(03)00112-5

Husain, F. T., Medina, R. E., Davis, C. W., Szymko-Bennett, Y., Simonyan, K., Pajor, N. M., et al. (2011a). Neuroanatomical changes due to hearing loss and chronic tinnitus: a combined VBM and DTI study. Brain Res. 1369, 74–88. doi: 10.1016/j.brainres.2010.10.095

Husain, F. T., Pajor, N. M., Smith, J. F., Kim, H. J., Rudy, S., Zalewski, C., et al. (2011b). Discrimination task reveals differences in neural bases of tinnitus and hearing impairment. PLoS ONE 6:e26639. doi: 10.1371/journal.pone.0026639

Husain, F. T., Patkin, D. J., Thai-Van, H., Braun, A. R., and Horwitz, B. (2009). Distinguishing the processing of gestures from signs in deaf individuals: An fMRI study. Brain Res. 1276, 140–150. doi: 10.1016/j.brainres.2009.04.034

Husain, F. T., and Schmidt, S. A. (2013). Using resting state functional connectivity to unravel networks of tinnitus. Hear. Res. 307, 154–162. doi: 10.1016/j.heares.2013.07.010

Jastreboff, P. J. (1990). Phantom auditory perception (tinnitus): mechanisms of generation and perception. Neurosci. Res. 8, 221–254. doi: 10.1016/0168-0102(90)90031-9

Koelsch, S., Fritz, T., Dy, V. C., Muller, K., and Friederici, A. D. (2006). Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 27, 239–250. doi: 10.1002/hbm.20180

Kumar, S., Von Kriegstein, K., Friston, K., and Griffiths, T. D. (2012). Features versus feelings: dissociable representations of the acoustic features and valence of aversive sounds. J. Neurosci. 32, 14184–14192. doi: 10.1523/JNEUROSCI.1759-12.2012

Kundu, P., Brenowitz, N. D., Voon, V., Worbe, Y., Vertes, P. E., Inati, S. J., et al. (2013). Integrated strategy for improving functional connectivity mapping using multiecho fMRI. Proc. Natl. Acad. Sci. U.S.A. 110, 16187–16192. doi: 10.1073/pnas.1301725110

Kundu, P., Inati, S. J., Evans, J. W., Luh, W. M., and Bandettini, P. A. (2012). Differentiating BOLD and non-BOLD signals in fMRI time series using multi-echo EPI. Neuroimage 60, 1759–1770. doi: 10.1016/j.neuroimage.2011.12.028

Leek, M. R., Molis, M. R., Kubli, L. R., and Tufts, J. B. (2008). Enjoyment of music by elderly hearing-impaired listeners. J. Am. Acad. Audiol. 19, 519–526. doi: 10.3766/jaaa.19.6.7

Li, Y., Booth, J. R., Peng, D., Zang, Y., Li, J., Yan, C., et al. (2013). Altered intra- and inter-regional synchronization of superior temporal cortex in deaf people. Cereb. Cortex 23, 1988–1996. doi: 10.1093/cercor/bhs185

Mather, M., and Knight, M. (2005). Goal-directed memory: the role of cognitive control in older adults' emotional memory. Psychol. Aging 20, 554–570. doi: 10.1037/0882-7974.20.4.554

Mirz, F., Gjedde, A., Ishizu, K., and Pedersen, C. B. (2000). Cortical networks subserving the perception of tinnitus–a PET study. Acta Otolaryngol. Suppl. 543, 241–243. doi: 10.1080/000164800454503

Mulrow, C. D., Aguilar, C., Endicott, J. E., Velez, R., Tuley, M. R., Charlip, W. S., et al. (1990). Association between hearing impairment and the quality of life of elderly individuals. J. Am. Geriatr. Soc. 38, 45–50.

Onoda, K., Ishihara, M., and Yamaguchi, S. (2012). Decreased functional connectivity by aging is associated with cognitive decline. J. Cogn. Neurosci. 24, 2186–2198. doi: 10.1162/jocn_a_00269

Peelle, J. E., Troiani, V., Grossman, M., and Wingfield, A. (2011). Hearing loss in older adults affects neural systems supporting speech comprehension. J. Neurosci. 31, 12638–12643. doi: 10.1523/JNEUROSCI.2559-11.2011

Petitto, L. A., Zatorre, R. J., Gauna, K., Nikelski, E. J., Dostie, D., and Evans, A. C. (2000). Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc. Natl. Acad. Sci. U.S.A. 97, 13961–13966. doi: 10.1073/pnas.97.25.13961

Power, J. D., Mitra, A., Laumann, T. O., Snyder, A. Z., Schlaggar, B. L., and Petersen, S. E. (2014). Methods to detect, characterize, and remove motion artifact in resting state fMRI. Neuroimage 84, 320–341. doi: 10.1016/j.neuroimage.2013.08.048

Raichle, M. E., Macleod, A. M., Snyder, A. Z., Powers, W. J., Gusnard, D. A., and Shulman, G. L. (2001). A default mode of brain function. Proc. Natl. Acad. Sci. U.S.A. 98, 676–682. doi: 10.1073/pnas.98.2.676

Rauschecker, J. P., Leaver, A. M., and Muhlau, M. (2010). Tuning out the noise: limbic-auditory interactions in tinnitus. Neuron 66, 819–826. doi: 10.1016/j.neuron.2010.04.032

Rutledge, K. L. (2009). A Music Listening Questionnaire for Hearing Aid Users. Unpublished Master's Thesis. University of Canterbury, Christchurch. Available online at: http://hdl.handle.net/10092/3194

Schmidt, S. A., Akrofi, K., Carpenter-Thompson, J. R., and Husain, F. T. (2013). Default mode, dorsal attention and auditory resting state networks exhibit differential functional connectivity in tinnitus and hearing loss. PLoS ONE 8:e76488. doi: 10.1371/journal.pone.0076488

Seydell-Greenwald, A., Leaver, A. M., Turesky, T. K., Morgan, S., Kim, H. J., and Rauschecker, J. P. (2012). Functional MRI evidence for a role of ventral prefrontal cortex in tinnitus. Brain Res. 1485, 22–39. doi: 10.1016/j.brainres.2012.08.052

Steer, R. A., Clark, D. A., Beck, A. T., and Ranieri, W. F. (1999). Common and specific dimensions of self-reported anxiety and depression: the BDI-II versus the BDI-IA. Behav. Res. Ther. 37, 183–190. doi: 10.1016/S0005-7967(98)00087-4

Steer, R. A., Ranieri, W. F., Beck, A. T., and Clark, D. A. (1993). Further evidence for the validity of the Beck Anxiety Inventory with psychiatric outpatients. J. Anxiety Disord. 7, 195–205. doi: 10.1016/0887-6185(93)90002-3

Stevens, G., Flaxman, S., Brunskill, E., Mascarenhas, M., Mathers, C. D., Finucane, M., et al. (2013). Global and regional hearing impairment prevalence: an analysis of 42 studies in 29 countries. Eur. J. Public Health 23, 146–152. doi: 10.1093/eurpub/ckr176

St Jacques, P., Dolcos, F., and Cabeza, R. (2010). Effects of aging on functional connectivity of the amygdala during negative evaluation: a network analysis of fMRI data. Neurobiol. Aging 31, 315–327. doi: 10.1016/j.neurobiolaging.2008.03.012

Tschacher, W., Schildt, M., and Sander, K. (2010). Brain connectivity in listening to affective stimuli: a functional magnetic resonance imaging (fMRI) study and implications for psychotherapy. Psychother. Res. 20, 576–588. doi: 10.1080/10503307.2010.493538

Tun, P. A., McCoy, S., and Wingfield, A. (2009). Aging, hearing acuity, and the attentional costs of effortful listening. Psychol. Aging 24, 761–766. doi: 10.1037/a0014802

Uys, M., Pottas, L., Vinck, B., and Van Dijk, C. (2012). The influence of non-linear frequency compression on the perception of music by adults with a moderate to severe hearing loss: subjective impressions. S. Afr. J. Commun. Disord. 59, 53–67. doi: 10.7196/sajcd.119

Van Dijk, K. R., Sabuncu, M. R., and Buckner, R. L. (2012). The influence of head motion on intrinsic functional connectivity MRI. Neuroimage 59, 431–438. doi: 10.1016/j.neuroimage.2011.07.044

Weisz, N., Voss, S., Berg, P., and Elbert, T. (2004). Abnormal auditory mismatch response in tinnitus sufferers with high-frequency hearing loss is associated with subjective distress level. BMC Neurosci. 5:8. doi: 10.1186/1471-2202-5-8

Whitfield-Gabrieli, S., and Nieto-Castanon, A. (2012). Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2, 125–141. doi: 10.1089/brain.2012.0073

Wong, P. C., Ettlinger, M., Sheppard, J. P., Gunasekera, G. M., and Dhar, S. (2010). Neuroanatomical characteristics and speech perception in noise in older adults. Ear Hear. 31, 471–479. doi: 10.1097/AUD.0b013e3181d709c2

Yoneda, M., Ikawa, M., Arakawa, K., Kudo, T., Kimura, H., Fujibayashi, Y., et al. (2012). In vivo functional brain imaging and a therapeutic trial of L-arginine in MELAS patients. Biochim. Biophys. Acta 1820, 615–618. doi: 10.1016/j.bbagen.2011.04.018

Yueh, B., Shapiro, N., Maclean, C. H., and Shekelle, P. G. (2003). Screening and management of adult hearing loss in primary care: scientific review. JAMA 289, 1976–1985. doi: 10.1001/jama.289.15.1976

Keywords: fMRI, hearing loss, resting-state fMRI, functional connectivity, emotion, IADS

Citation: Husain FT, Carpenter-Thompson JR and Schmidt SA (2014) The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Front. Syst. Neurosci. 8:10. doi: 10.3389/fnsys.2014.00010

Received: 15 August 2013; Accepted: 15 January 2014;

Published online: 04 February 2014.

Edited by:

Jonathan E. Peelle, Washington University in St. Louis, USA

Reviewed by:

Carolyn McGettigan, Royal Holloway University of London, UK

Conor J. Wild, Western University, Canada

Copyright © 2014 Husain, Carpenter-Thompson and Schmidt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fatima T. Husain, Department of Speech and Hearing Science, University of Illinois at Urbana-Champaign, 901 S. Sixth Street, Champaign, IL 61820, USA e-mail: husainf@illinois.edu
