Citations: Indicators of Quality? The Impact Fallacy

- 1Amsterdam School of Communication Research (ASCoR), University of Amsterdam, Amsterdam, Netherlands

- 2Division for Science and Innovation Studies, Administrative Headquarters of the Max Planck Society, Munich, Germany

- 3Center for Applied Information Science, Virginia Tech Applied Research Corporation, Arlington, VA, USA

- 4School of Informatics and Computing, Indiana University, Bloomington, IN, USA

We argue that citation is a composed indicator: short-term citations can be considered as currency at the research front, whereas long-term citations can contribute to the codification of knowledge claims into concept symbols. Knowledge claims at the research front are more likely to be transitory and are therefore problematic as indicators of quality. Citation impact studies focus on short-term citation, and therefore tend to measure not epistemic quality, but involvement in current discourses in which contributions are positioned by referencing. We explore this argument using three case studies: (1) citations of the journal Soziale Welt as an example of a venue that tends not to publish papers at a research front, unlike, for example, JACS; (2) Robert K. Merton as a concept symbol across theories of citation; and (3) the Multi-RPYS (“Multi-Referenced Publication Year Spectroscopy”) of the journals Scientometrics, Gene, and Soziale Welt. We show empirically that the measurement of “quality” in terms of citations can further be qualified: short-term citation currency at the research front can be distinguished from longer term processes of incorporation and codification of knowledge claims into bodies of knowledge. The recently introduced Multi-RPYS can be used to distinguish between short-term and long-term impacts.

Introduction

When asked about whether citations can be considered as an indicator of “quality,” scientometricians are inclined to withdraw to the position that citations measure “impact.” But how does “impact” differ from “quality”? Whereas Cole and Cole (1973, p. 35), e.g., argued that “the data available indicate that straight citation counts are highly correlated with virtually every refined measure of quality,” Martin and Irvine (1983) claimed that quality is indicated only in cases where several indicators converge (e.g., numbers of publications, citations, etc.), thus introducing the notion of partial indicators. In their view “the indicators based on citations are seen as reflecting the impact, rather than the quality or importance, of the research work” (Martin and Irvine, 1983, p. 61). Moed et al. (1985), on the other hand, framed the discussion of the relationship between “impact” and “quality” in the context of enabling science-policy decisions so as to distinguish research groups in terms of their visibility and their longer term “durability”; that is, their potential to make sustained contributions to a field of science in terms of short-term citation impacts during a longer period of time.

With the increasing use of quantitative indicators for the evaluation of individuals, groups, universities, and nations, revisiting the relations between “quality” and “impact” is both timely and important. The operationalization of quality in terms of impact leads first to the question of the definition of “impact.” “Impact” is a physical metaphor used by Garfield and Sher (1963, p. 200) when introducing the “journal impact factor” (JIF). Unlike size-dependent indicators of impact based on the total number of citations (Gross and Gross, 1927), the impact factor normalizes for the size effects of journals by using a (lagged) 2-year moving average.
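As a rough illustration of this size normalization, the following sketch computes a two-year impact factor as the citations received in a census year by items from the two preceding years, divided by the number of citable items published in those years. The function name and the toy numbers are hypothetical and are not taken from any real journal; what counts as a “citable item” is left to the data provider.

```python
def journal_impact_factor(citations_by_pub_year, items_by_pub_year,
                          census_year, window=2):
    """Citations received in `census_year` by items published in the
    preceding `window` years, divided by the number of those items."""
    years = range(census_year - window, census_year)
    cites = sum(citations_by_pub_year.get(y, 0) for y in years)
    items = sum(items_by_pub_year.get(y, 0) for y in years)
    if items == 0:
        raise ValueError("no citable items in the window")
    return cites / items


# Hypothetical toy data: citations received in 2014, by publication year.
citations_2014 = {2013: 30, 2012: 50, 2011: 80}
items_published = {2013: 20, 2012: 20, 2011: 25}

jif2 = journal_impact_factor(citations_2014, items_published, 2014)
total_citations = sum(citations_2014.values())
print(jif2, total_citations)
```

In this toy case the size-independent ratio is based on only the 80 citations to the two most recent years, whereas the size-dependent total also includes the citations to older volumes.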

Scientometricians distinguish between size-dependent and size-independent indicators. Analytically, one would expect “quality” – as against “quantity” – to be size independent, whereas “impact” is size dependent, since two collisions have more impact than a single one. Leydesdorff and Bornmann (2012) argued for an indicator based on integrating impact instead of averaging it in terms of ratios of citations per publication. Bensman and Wilder (1998) found that faculty judgments about the quality of journals in chemistry correlate empirically more with total citation rates than with (size-independent) impact factors. Bensman (2007, p. 118) added that Garfield had modeled the JIF on the basis of an early version of the SCI in the 1960s (Garfield, 1972, p. 476; Martyn and Gilchrist, 1968) in which bio-medical journals with rapid yearly citation turnover would have been dominant.

In the meantime, scientometricians have become thoroughly aware that (i) publication and citation practices differ among disciplines; and (ii) one should not use the average of sometimes extremely skewed distributions (Seglen, 1992), but should instead use non-parametric statistics (e.g., percentiles). In their recent guidelines for evaluation practices Hicks et al. (2015, p. 430), for example, conclude that “(n)ormalized indicators are required, and the most robust normalization method is based on percentiles … in the citation distribution of its field.” However, the definition of percentiles presumes reference sets or, in other words, the demarcation of “fields” of science. The top 10% can be very different from one reference set to another.
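The dependence of percentiles on the reference set can be made concrete with a small sketch. It assumes one simple definition of a percentile rank (the share of papers in the reference set with strictly fewer citations); other definitions are in use, and the two “fields” below are invented toy data.

```python
def percentile_rank(citations, reference_set):
    """Percentage of papers in `reference_set` that have strictly fewer
    citations than `citations` (one of several possible definitions)."""
    if not reference_set:
        raise ValueError("empty reference set")
    below = sum(1 for c in reference_set if c < citations)
    return 100 * below / len(reference_set)


# Hypothetical citation counts for two reference sets of ten papers each.
field_a = [0, 0, 1, 1, 2, 3, 5, 8, 12, 40]
field_b = [5, 10, 15, 20, 30, 40, 60, 80, 120, 300]

rank_a = percentile_rank(12, field_a)
rank_b = percentile_rank(12, field_b)
print(rank_a, rank_b)
```

The same paper with 12 citations ranks near the top of the first set and near the bottom of the second, which is why the delineation of the reference set matters.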

In evaluative bibliometrics, a “best practice” has been developed to delineate reference sets in terms of three criteria: cited publications should be (i) from the same year (so that they have had equal opportunity to gather citations); (ii) of the same document type (articles, reviews, or letters, so that documents of the same depth and structure can be compared); and (iii) from the same field of science, each of which has its own distinct citation patterns. The first two criteria are provided by the bibliographic databases,1 but the delineation of fields of science has remained a hitherto unresolved problem (van Eck et al., 2013; Leydesdorff and Bornmann, 2016). Although one can undoubtedly assume an epistemic structure of disciplines and specialties operating in the sciences, the texture of referencing can be considered as both woofs and warps: the woofs may refer, e.g., to disciplinary backgrounds and the warps to current relevance (Quine, 1960, p. 374). Decomposition of this texture using one clustering algorithm or another may be detrimental to the evaluation of units at the margins or between fields (e.g., Rafols et al., 2012), and the effects are also sensitive to the granularity of the decomposition (Zitt et al., 2005; Waltman and van Eck, 2012).

Perhaps, these can be considered as technical issues. More fundamentally, the question of normalization refers to differences in citation behavior among fields of science (Margolis, 1967). Whereas documents are cited, citation behavior is an attribute of authors. The “cited-ness” distribution can be used out of context (e.g., for rankings) and thus apart from the reasons for “citing” (Bornmann and Daniel, 2008). Wouters (1999) argued that the use of citations in evaluations is first based on the transformation of the citation distribution from “citing” to “cited”: the citation indexes collect cited references – which are provided by citing authors/texts – into aggregated citations. Such a transformation of one distribution (“citing”) into another (“cited”) is not neutral: papers may be cited in fields other than those they are citing from.

Does this abstraction entitle us to compare apples (e.g., excellently elaborated texts) with oranges (e.g., breakthrough ideas)? Normalization brings the citing practices back into the design because one tries to find reference sets of papers cited for similar reasons or in comparable sets. However, the reasons for citation may be very different even within a single text (Chubin and Moitra, 1975; Moravcsik and Murugesan, 1975; Amsterdamska and Leydesdorff, 1989). The assumption that journals, for example, contain documents that can be compared in terms of citation behavior abstracts from the reasons for and the content of citation by using the behavior of authors as the explanatory variable.

Citation counts may seem convenient for the evaluation because they allow us to make an inference prima facie from “quality” in the textual to the socio-cognitive dimensions of authors and ideas, or vice versa (Leydesdorff and Amsterdamska, 1990). However, the results of the bibliometric evaluation inform us about the qualities of document sets, and not immediately about authors, institutions (as aggregates of authors), or the quality of knowledge claims. Furthermore, the aggregation rules of texts, authors, or ideas (as units of analysis) are different. For example, a single text can be attributed as credit to all contributing authors or proportionally to the number of authors using so-called “fractional counting;” but can one also fractionate the knowledge claim? A citation may mean something different with reference to textual, social, or epistemic structures.
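The difference between the two attribution rules for texts can be sketched as follows. The author labels and the three papers are hypothetical; exact fractions are used so that the fractional credits add up to the number of papers.

```python
from collections import defaultdict
from fractions import Fraction


def credit_per_author(papers, fractional=True):
    """Attribute each paper to its authors, either as one full credit per
    author (whole counting) or as 1/n per author (fractional counting)."""
    credit = defaultdict(Fraction)
    for authors in papers:
        if not authors:
            continue  # skip records without author information
        share = Fraction(1, len(authors)) if fractional else Fraction(1)
        for author in authors:
            credit[author] += share
    return dict(credit)


# Hypothetical author lists of three papers.
papers = [["A", "B"], ["A"], ["A", "B", "C"]]

fractional = credit_per_author(papers, fractional=True)
whole = credit_per_author(papers, fractional=False)
print(fractional, whole)
```

Fractional credits sum to the number of papers (3), whereas whole counting distributes six credits over the same three papers. No comparable rule is available for dividing the knowledge claim itself.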

At the epistemic level, Small (1978) proposed to consider citations as “concept symbols.” Would one be able to use citations for measuring not only the impact of publications and the standing of authors but also the quality of ideas? Are ideas to be located within specific documents or between and among documents; i.e., in terms of distributions of links such as citations or changes in word distributions? One can then formulate a research agenda for theoretical scientometrics in relation to the history and philosophy of science, but at arm’s length from the research agenda of evaluative bibliometrics where the focus is on developing more refined indicators and solving problems of normalization.

In this study, we use empirical findings from a number of case studies to illustrate what we consider to be major issues at the intersection of theoretical and evaluative bibliometrics, and possible ways to move forward. We first focus on sociology as a case with an extremely long turn-over of citation. However, longer term citation is also important in other disciplines (van Raan, 2004; Ke et al., 2015): short-term citation at the research front can be considered as citation currency, whereas codification of citation into concept symbols is a long-term process. Historical processes tend to be path-dependent and therefore specific. Citation indicators such as the impact factor and SNIP (Moed, 2010), however, focus on citation currency or, in other words, participation at a research front. The extent to which short-term citation can be considered as a predictor of the long-term effects of quality can be expected to vary (Baumgartner and Leydesdorff, 2014; Bornmann and Leydesdorff, 2015).

German Sociology Journals

The journal Soziale Welt – subtitled “a journal for research and practice in the social sciences” – can be considered as twice disadvantaged in evaluation practices: the discipline (sociology) has a low status in the informal hierarchy among the disciplines2 and the journal publishes for a German-speaking audience. Special issues, however, are sometimes entirely in English. German sociology has a well-established tradition, and many of the ground-breaking debates in sociology have German origins (e.g., Adorno et al., 1969); but since the Second World War German sociology has mostly been read in English translation (e.g., Schutz, 1967). Merton (1973b) noted that bi-lingual journals serve niche markets in sociology. The special position of German sociology journals enables us to show the pronounced effect on citation patterns and scores of being outside main-stream science (Leydesdorff and Milojević, 2015).

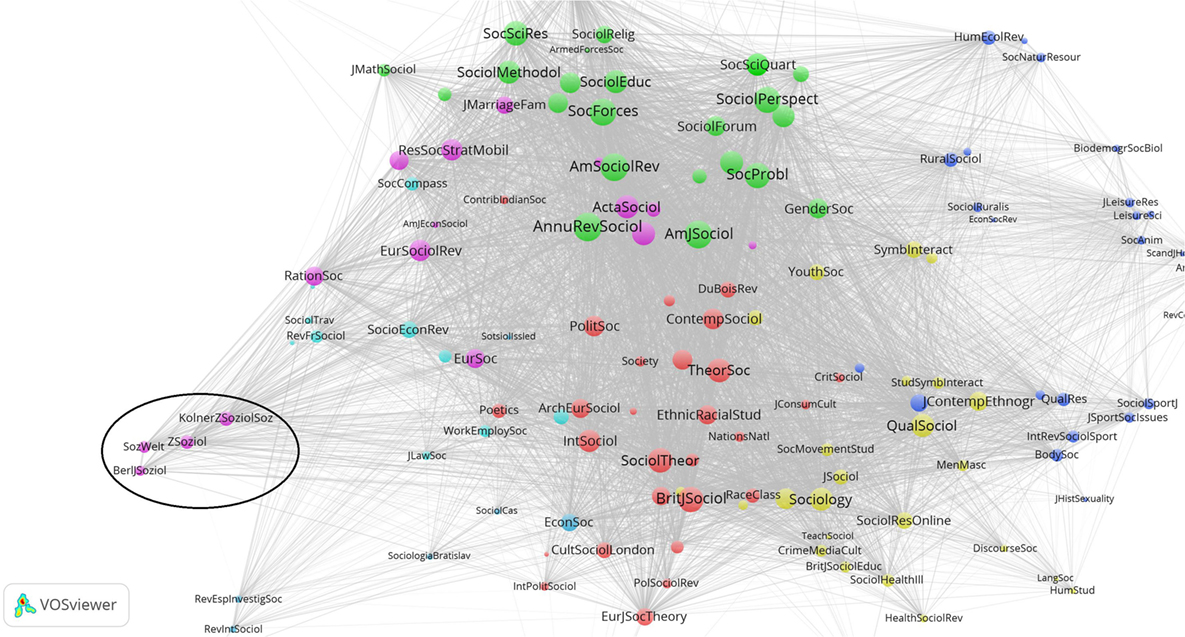

Figure 1 shows the four German sociology journals included in Thomson-Reuters’ Web-of-Science (WoS) when mapped in terms of their “cited” patterns in relations to other sociology journals. The “citing” patterns of these same journals, however, are very different: on the “citing” side the journals are deeply integrated in sociology, which provides the knowledge base for their references. In other words, the identity of these journals is sociological, but their audience is the German-language realm, including journals in political science, education, psychology, etc. Thus, journals can show very different patterns for being cited or citing, and the same asymmetry (cited/citing) holds for document sets other than journals. For example, the œuvre of an author or an institutionally delineated set of documents (e.g., a department) cannot be expected to match journal categories. One may be indebted (“citing”) to literatures other than those to which one contributes (e.g., Leydesdorff and Probst, 2009).

Figure 1. Four German sociology journals among 141 journals classified as “sociology” in the Web-of-Science (JCR 2014), mapped in terms of their (cosine-normalized) being cited patterns using VOSviewer for the mapping and the classification.

In the case of these German sociology journals, the border is mainly a language border, but disciplinary distinctions can have the same effect as language borders. The codification of languages (“jargons”) in the disciplines and specialties drives the further growth of the sciences because more complexity can be processed in restricted languages (Bernstein, 1971; Coser, 1975; Leydesdorff, 2006). “Translational research” in medicine – the largest granting program of the U.S. National Institutes of Health – deliberately strives to counteract these dynamics of differentiation by focusing on translation from the laboratory, with its language of molecular biology, to clinical practice in which one proceeds in terms of clinical trials and protocol development (e.g., Hoekman et al., 2012).

Translation between disciplines or between specialist and interdisciplinary contexts (e.g., Nature or Science) requires care. Interdisciplinary research is not based on a melting pot of discourses, but on the construction of codes of communication in which the more restricted semantics of specialisms can be embedded (Wagner et al., 2009). Asymmetries in the relations among the various discourses lead, among other things, to different citation rates. Which reference set (“field”) would one, for example, wish to choose in the case of Soziale Welt? The one of its citing identity, or the one in which it is cited?

A second disadvantage of Soziale Welt in terms of JIFs is the virtual absence of short-term citation that would contribute to its JIF-value. The JIF is based on the past 2 or 5 years (JIF-2 and JIF-5, respectively); Elsevier’s SNIP index for journals is based on the past 3 years. In 2014, however, Soziale Welt was cited 98 times in WoS, of which 63 (64.3%) were citations of papers published more than 10 years ago. However, this slow turn-over is not specific to German sociology journals.

The American Journal of Sociology (AJS) and American Sociological Review (ASR) – the two leading sociology journals – have cited and citing half-life times of more than 10 years. In other words, more than half of the citations of these journals are from issues published more than 10 years ago, and more than half of the references in the 2014 volumes were to publications older than 10 years. Unlike the German journals, the American journals also have short-term citation, which leads to a JIF of 3.54 for AJS and 4.39 for ASR. These impact factors are based on only 1.9 and 2.7%, respectively, of these journals’ total citations in 2014.3

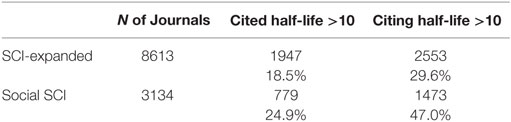

Table 1 shows that longer term citation is not marginal in disciplines other than sociology. Almost half of the journals included in the Social Science Citation Index (47%) have a citing half-life of more than 10 years. In the natural and life sciences, long-term citation is also substantial. The Journal of the American Chemical Society (JACS), for example, has a cited half-life time of 8.0 years and a citing half-life time of 6.5 years. However, this journal obtains 14.7% of its citations in the first 2 years after publication and 37.3% within 5 years.4
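The notion of a cited half-life can be sketched as the age at which the cumulative age distribution of the citations received in a given year reaches 50%. The version below is a simplified whole-year variant, without the fractional interpolation that yields values such as 8.0 or 6.5, and the age distribution is invented; only the Soziale Welt figures (63 of 98 citations) come from the text above.

```python
def cited_half_life(citations_by_age):
    """Smallest age (in whole years; age 0 = census year) such that items
    of that age or younger receive at least half of all citations."""
    total = sum(citations_by_age)
    if total == 0:
        raise ValueError("no citations to analyse")
    running = 0
    for age, count in enumerate(citations_by_age):
        running += count
        if 2 * running >= total:
            return age


def share_older_than(citations_by_age, years):
    """Fraction of citations going to items more than `years` years old."""
    total = sum(citations_by_age)
    return sum(citations_by_age[years + 1:]) / total


# Hypothetical distribution: index = age of the cited item in years;
# the last bin holds everything aged 6 or more.
toy = [2, 5, 8, 10, 10, 15, 50]
half_life = cited_half_life(toy)
old_share = share_older_than(toy, 5)

# Figures reported above for Soziale Welt in 2014.
soziale_welt_old = round(100 * 63 / 98, 1)
print(half_life, old_share, soziale_welt_old)
```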

Short-Term and Long-Term Citations: Citation Currency and Codification

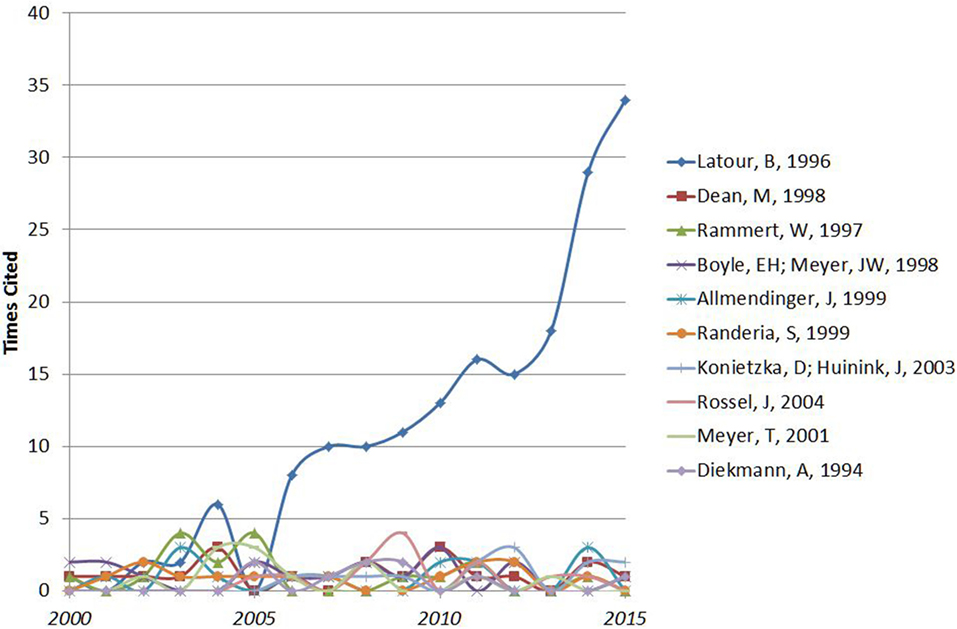

Let us disaggregate citations at the journal level and examine the long-term and accumulated citation rates of specific – highly cited – papers in greater detail. Figure 2 shows the 10 most highly cited articles in Soziale Welt. Nine of these papers were not cited more than four times in any given year. These incidental citations accumulate over time. The single exception to this pattern is Latour’s (1996) contribution to the journal (in English) entitled “On actor-network theory: a few clarifications.” Almost 10 years after its publication, this paper began to be cited at an increasing rate. From this perspective, all other citations to Soziale Welt can be considered as noise. In sum, after a considerable number of years Latour’s (1996) paper became a concept symbol (Small, 1978), whereas the other papers remained marginal in terms of their citation rates.

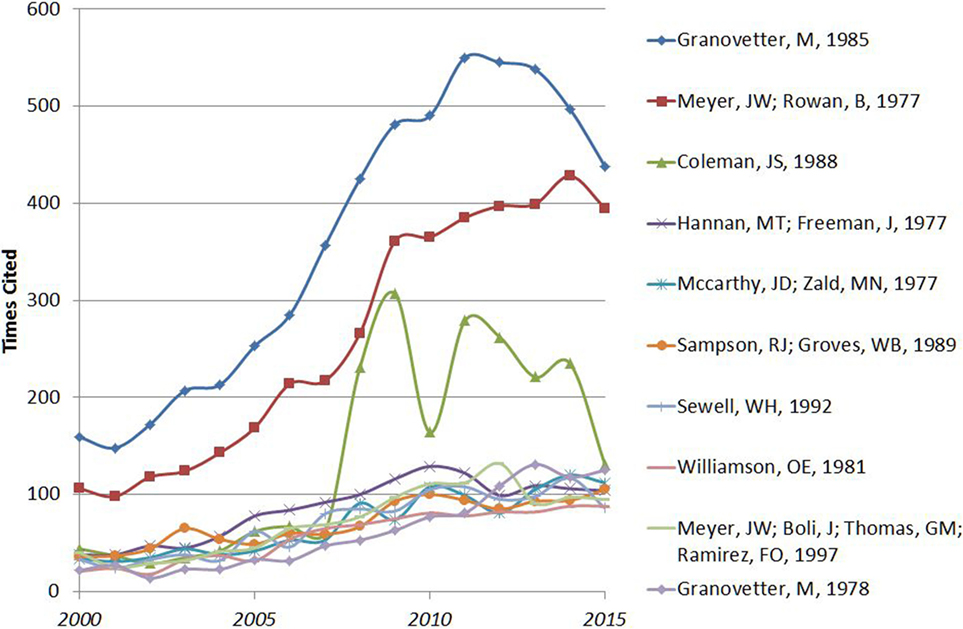

Let us repeat this analysis for the 10 most highly cited papers in the AJS, a core journal of this same field. Figure 3 shows the results: three papers show the deviant behavior that we saw for Latour (1996) in Figure 2. However, these 10 papers have all been cited more than 1,000 times. Whereas the seven at the bottom continue to increase in terms of yearly citation rates over the decades, the top three papers accelerate this pattern with almost twice the rate. Coleman’s (1988) study entitled “Social Capital in the Creation of Human-Capital” became a most highly cited paper after almost two decades (since 2008; cf. van Raan, 2004): it went from 61 citations in 2007 to 231 citations in 2008. Note that none of these top 10 papers is decaying in terms of the citation curve, as one would expect given the normal pattern after so many years.

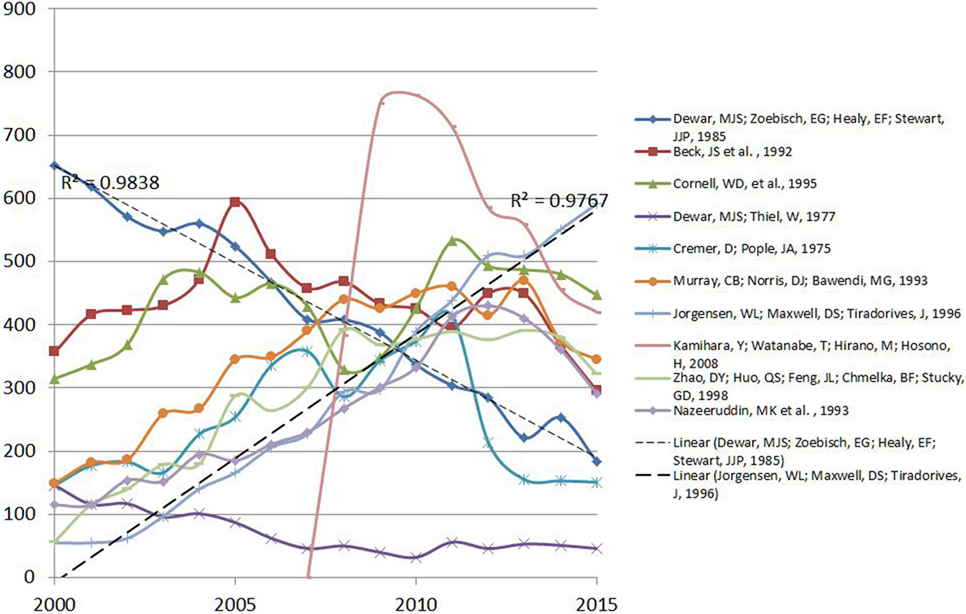

Using a similar format, Figure 4 shows the 10 most-highly cited papers in JACS. One of these is Kamihara et al.’s (2008) paper, entitled “Iron-Based Layered Superconductor La[O1–xFx]FeAs (x = 0.05–0.12) with Tc = 26 K.” Despite its empirical title, this paper is directly relevant for the theory of superconductivity, and was therefore cited immediately.5 The citation curve of this paper shows the standard pattern of a successful contribution: the paper was cited 353 times in the year of its publication, peaked in 2010 with 763 citations, and thereafter the curve decays. Using the IF-type systematic, one can say that it gathered 1,513 of its total of 4,647 citations (32.6%) in the first 2 years following its publication, and 3,369 (72.5%) in the first 5 years. This pattern is typical for a paper at a research front (de Solla Price, 1970).
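The shares for Kamihara et al. (2008) can be checked with a few lines of arithmetic. The counts are those reported above; the helper name is only illustrative.

```python
def window_share(citations_in_window, total_citations):
    """Percentage of all citations that fall within a citation window,
    rounded to one decimal."""
    return round(100 * citations_in_window / total_citations, 1)


total = 4647                           # total citations reported in the text
share_2y = window_share(1513, total)   # first 2 years after publication
share_5y = window_share(3369, total)   # first 5 years after publication
remaining = total - 3369               # citations received after the 5-year window
print(share_2y, share_5y, remaining)
```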

The other nine highly cited papers show different patterns. Some are increasing, while others decrease in terms of yearly citation rates. Jorgensen et al. (1996), for example, shows sustained linear growth in citation, whereas Dewar et al. (1985) has been decreasing since 1997 when it was cited 826 times (after 12 years!).

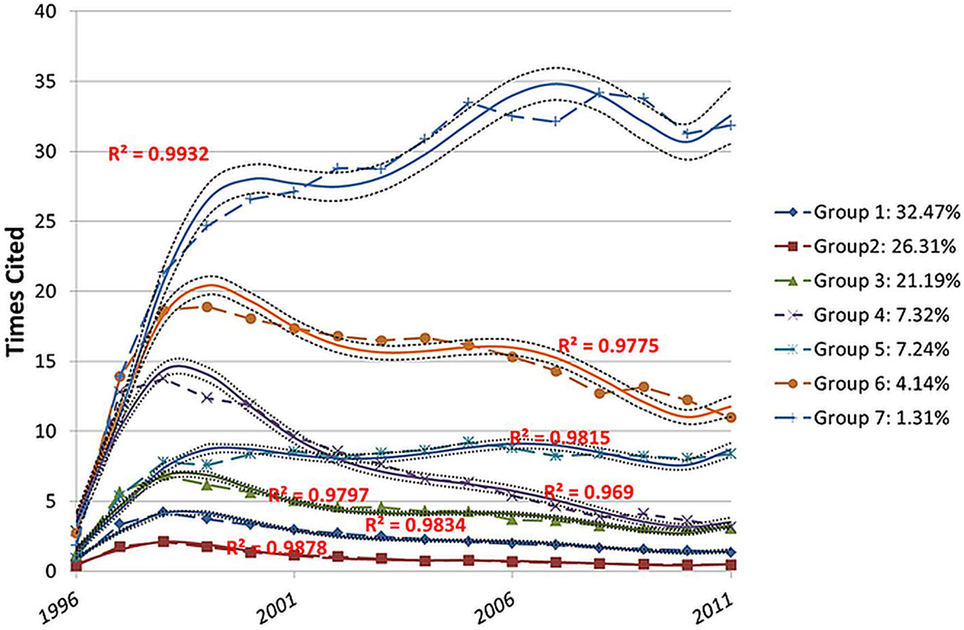

Using Group-Based Trajectory Modeling (Nagin, 2005), Baumgartner and Leydesdorff (2014) studied a number of journals, among them JACS, in terms of the citation patterns of all papers published in 1996. Figure 5 shows the seven trajectories distinguished among the citation patterns of 2,142 research articles published in JACS during 1996, using a 15-year citation window. Although a number of the (statistically significant) groups show typical citation patterns with an early peak and decay thereafter, groups 5 (7.24% of the papers) and 7 (1.31%) were still increasing their citation rates after 15 years. The authors consistently found that the decay phase was not continuous across journals and fields of science (see, e.g., group 6 in the middle).6

Figure 5. Seven trajectories of 2,142 research articles published in JACS in 1996, using fifth-order polynomials. Source: Baumgartner and Leydesdorff (2014, p. 802).

Baumgartner and Leydesdorff (2014) proposed to distinguish between “sticky” and “transitory” knowledge claims. Transitory knowledge claims are typical for the research front; the community of researchers informs one another about progress. Sticky knowledge claims need time to grow into a codified citation that can function as a concept symbol (Small, 1978). Evaluation in terms of citation analysis focuses on transitory knowledge claims at the research front. Comins and Leydesdorff (2016b) call this the citation currency of the empirical sciences.

The current discourse at a research front is provided by transitory knowledge claims with variations that contribute to shaping the research agenda at the above-individual level. The attribution of the results of this group effect to individual authors or texts is at risk of the ecological fallacy: part of the success is due to relations among individual contributions, and one cannot infer from quality at the group level to quality at the individual level (Robinson, 1950).7 The huge delays in citation that we found above in sociology may indicate that generational change is also needed in fields without a research front before a new concept symbol becomes highly cited. Let us focus on one such concept symbol, most central to our field: Robert K. Merton, who among many other things defined the “Matthew effect” – preferential attachment – in science and who is often cited for his “normative” theory of citation (e.g., Merton, 1965; Haustein et al., 2015; Wyatt et al., 2017).

“Merton” as a Concept Symbol Across Theories of Citation

A “citation debate” has raged in the sociology of science between the constructivist and the normative theories of citation (Edge, 1979; Woolgar, 1991; Luukkonen, 1997). The normative theory of citation (Kaplan, 1965) is grounded in Merton’s (1942) formulation of the CUDOS norms of science: Communalism, Universalism, Disinterestedness, and Organized Skepticism. From a Mertonian perspective, citation analysis can be considered as a methodology for the historical and sociological analysis of the sciences (e.g., de Solla Price, 1965; Cole and Cole, 1973; Elkana et al., 1978). Citation is then considered as a reward and thus an indicator of the credibility of a knowledge claim.

In a paper entitled “A different viewpoint,” Barnes and Dolby (1970) argued for shifting the attention in sociology from the professed (that is, Mertonian) norms of science to citation practices. Gilbert (1977), for example, studied referencing as a technique of rhetorical persuasion, whereas Edge (1979, p. 111) argued that one should “give pre-eminence to the account from the participant’s perspective, and it is the citation analysis which has to be ‘corrected’” (italics in the original). The field of science and technology studies (STS) thus became deeply divided between quantitative scientometrics mainly grounded in the Mertonian tradition and qualitative STS dominated by constructivist assumptions (Luukkonen, 1997). During the 1980s, however, the introduction of discourse analysis (Mulkay et al., 1983) and co-word maps (Callon et al., 1983) made it possible to build bridges from time to time (Wyatt et al., 2017; cf. Leydesdorff and van den Besselaar, 1997; van den Besselaar, 2001).

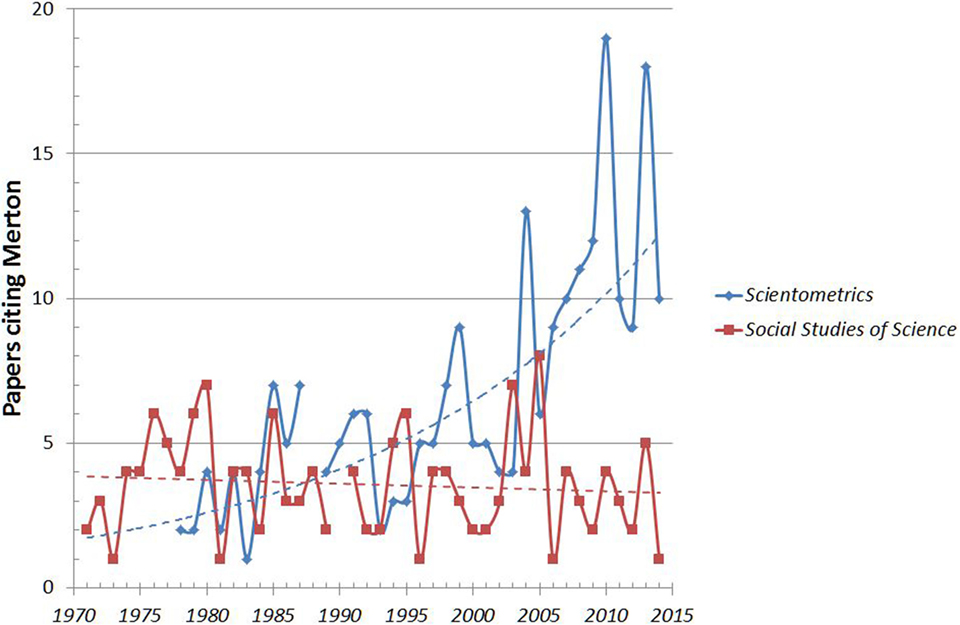

Let us use references to “Merton” as a concept symbol in the citation debate between these theories of citation. Merton can be expected to be cited across the entire set of this literature because proponents as well as opponents use and discuss his ideas. This analysis is based on the full sets of publications in Scientometrics (since 1978) and Social Studies of Science (since 1971) downloaded from WoS on October 6, 2014 in another context (Wyatt et al., 2017). These are 5,677 publications in total, of which 3,891 were published in Scientometrics, and 1,786 in Social Studies of Science.8 These 5,677 records in the document set contain 159,373 references. Among these are 595 references to Merton in 391 documents. In other words, Merton is cited (as a first author)9 in 6.9% of the documents.

Figure 6 shows that the number of references to Merton is declining steadily in Social Studies of Science, but increases in more recent years in Scientometrics. From the perspective of hindsight, Merton’s various contributions to institutional sociology can be considered as scientometrics avant la lettre. Price’s “cumulative advantages” (de Solla Price, 1976, p. 292), for example, operationalized Merton’s (1968) “Matthew effect” – the tendency for citation-rich authors and publications to attract further citations, in part because they are heavily cited (Crane, 1969, 1972; Cole and Cole, 1973; Bornmann et al., 2010).10 The theoretical notions of both Merton and Price thus anticipated the concept of “preferential attachment” in network studies by decades (Barabási and Albert, 1999; Barabási et al., 2002). The mechanism of preferential attachment, for example, enables scientometricians to understand the Matthew effect as a positive feedback at the network level that cannot be attributed to the original author (e.g., Scharnhorst and Garfield, 2011), the journal (Larivière and Gingras, 2010), or the country of origin (Bonitz et al., 1999).

Figure 6. Distribution of references to Merton in papers in Scientometrics and Social Studies of Science, respectively (n of documents = 391).

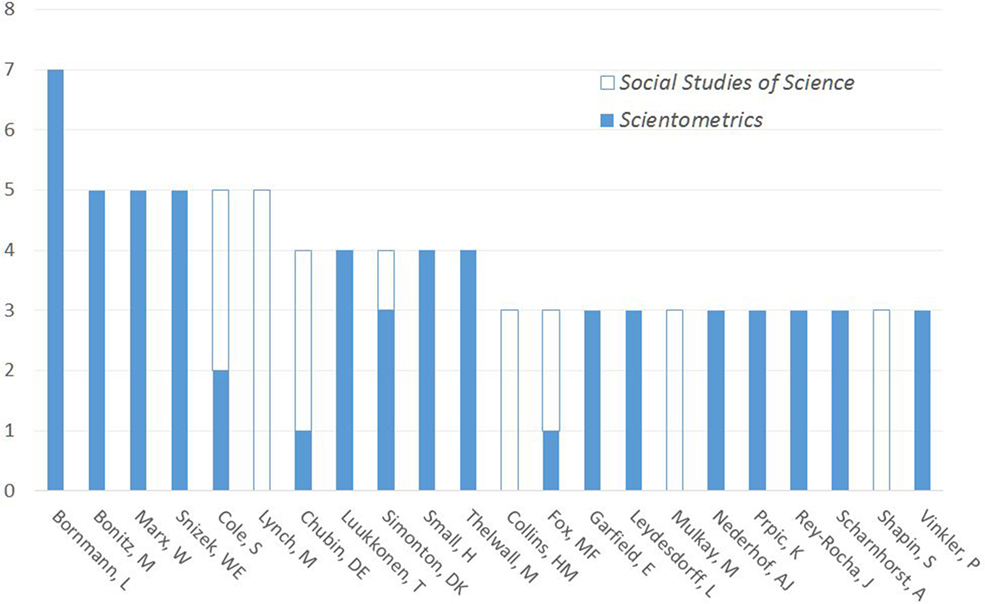

Figure 7 shows the authors who cited Merton more than twice in Scientometrics or Social Studies of Science. Whereas most of these authors published exclusively in one of the two journals, Stephen Cole, one of Merton’s students, has been prolific in both domains. Mary Frank Fox and Daryl Chubin also crossed the boundary. Other authors (e.g., Small, Garfield, and Leydesdorff) published in both journals, citing Merton when contributing to Scientometrics, but not when writing for Social Studies of Science.

Figure 7. Eighty-five publications of twenty-two authors citing Merton as a first author (more than twice) in Scientometrics and Social Studies of Science.

In summary: the name “Merton” as a concept symbol has obtained a different meaning in these two contexts of journals. On the sociological side, Merton has become a background figure who is cited incidentally. Garfield (1975) coined the term “obliteration by incorporation” (Cozzens, 1985): one no longer has to cite Merton explicitly and citation gradually decreases. McCain (2015) noted that “obliteration by incorporation” is discipline dependent. In Scientometrics, however, the call for more theoretical work in addition to the methodological character of the journal has made referencing to Merton convenient.

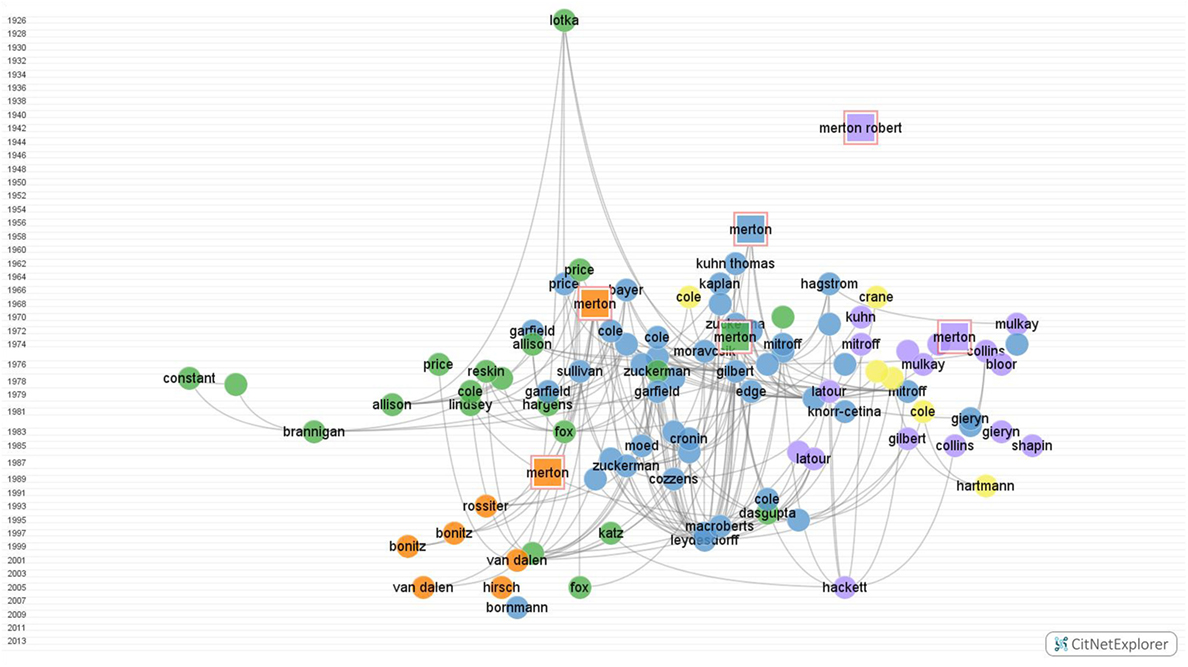

Figure 8 shows, among other things, the influence of Merton’s papers across the domains of quantitative and qualitative STS (Milojević et al., 2014). Merton’s (1973a) “Sociology-of-Science” book, for example, is positioned (on the right side) among the articles of qualitatively oriented sociologists like Harry Collins, Mike Mulkay, and David Bloor. Merton’s (1988) paper about “Cumulative advantage and the symbolism of intellectual property” (also known as “Matthew II”) is positioned on the other side among scientometricians, whereas several other references are to older work used in both traditions (e.g., Merton, 1957, 1968).

Figure 8. Citation network of 100 (of the 391) documents citing Merton in Social Studies of Science and Scientometrics. CitNetExplorer used for the visualization (van Eck and Waltman, 2014).

In summary, Figure 8 visualizes the interface between the two branches of STS in terms of co-citation patterns. The integration by “Merton” as a concept symbol bridges the historically deep divide in terms of journals and institutions (e.g., van den Besselaar, 2001). In other words, the figure illustrates the point that the different classifications of the two journals – Scientometrics as “library and information science”11 and Social Studies of Science as “history and philosophy of science” – may cut through important elements of the intellectual organization of a field.

Multi-RPYS

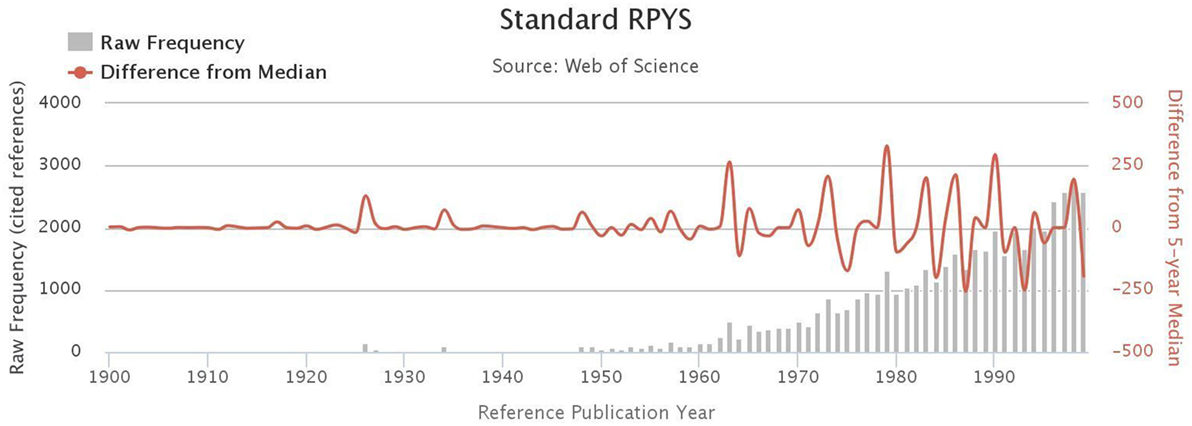

We can illustrate our thesis of the two different functions of citation, at a research front or as longer term codification, by using the Multi-Referenced Publication Years Spectroscopy (Multi-RPYS) recently introduced by Comins and Leydesdorff (2016a). Multi-RPYS is an extension of RPYS, first introduced by Marx and Bornmann (2014) and Marx et al. (2014). In conventional RPYS one plots the number of references against the time axis. Figure 9 shows the result for the case of the 3,777 articles published in Scientometrics between 1978 and 2015.12 The graph shows the numbers of yearly citations normalized as deviations from the 5-year moving median. In this figure, for example, a first peak is indicated for 1926, indicating Lotka’s (1926) Law as a citation classic in this field.13

Figure 9. RPYS of 3,777 articles published in Scientometrics, downloaded on January 2, 2016; curve generated using the interface at http://comins.leydesdorff.net
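A minimal sketch of the RPYS computation described above: cited references are counted per referenced publication year, and each count is expressed as its deviation from the median of a 5-year window. The window is assumed here to be centered on the year in question (two years before and two after), years without references count as zero, and the input list is invented toy data rather than the Scientometrics set.

```python
from collections import Counter
from statistics import median


def rpys(reference_years, window=5):
    """Return {referenced year: count minus the median count within a
    centered `window`-year neighbourhood}."""
    counts = Counter(reference_years)
    first, last = min(counts), max(counts)
    half = window // 2
    spectrum = {}
    for year in range(first, last + 1):
        neighbourhood = [counts.get(y, 0)
                         for y in range(year - half, year + half + 1)]
        spectrum[year] = counts.get(year, 0) - median(neighbourhood)
    return spectrum


# Hypothetical referenced publication years extracted from cited references.
toy_references = [1926] * 6 + [1924, 1925, 1927, 1928, 1928]

spectrum = rpys(toy_references)
peak_year = max(spectrum, key=spectrum.get)
print(spectrum, peak_year)
```

A pronounced positive deviation, such as the one for 1926 in this toy input, is what shows up as a peak in the spectrogram.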

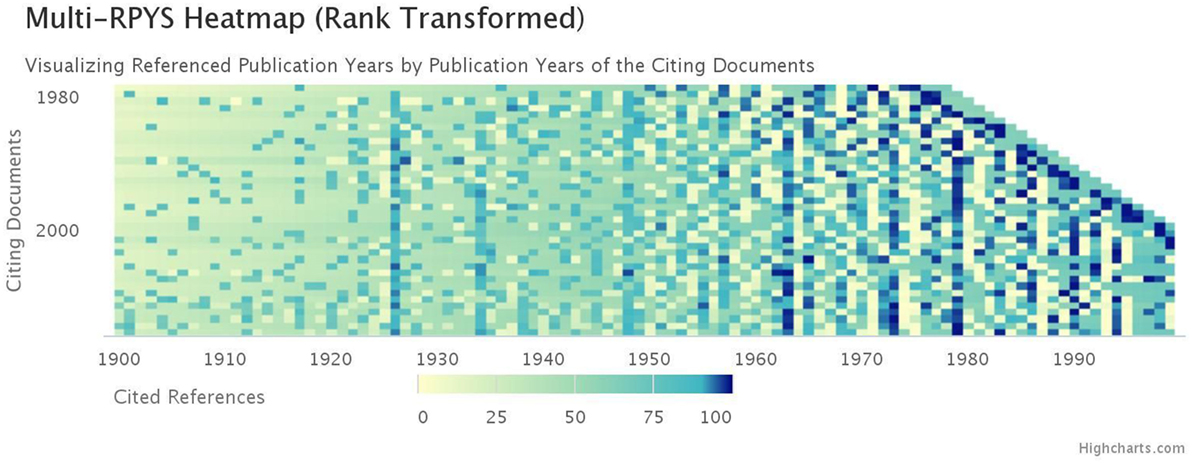

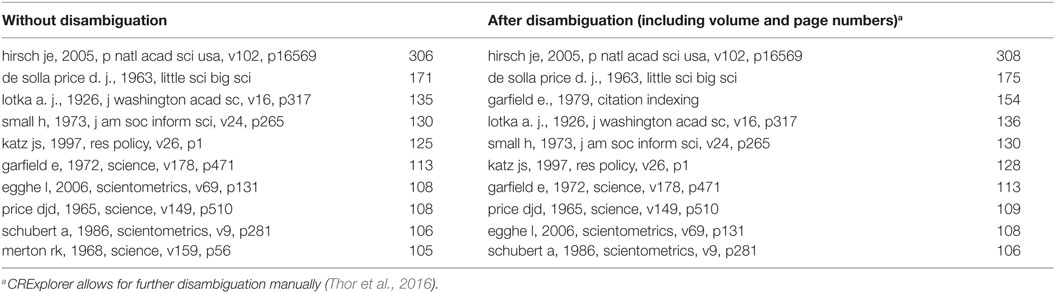

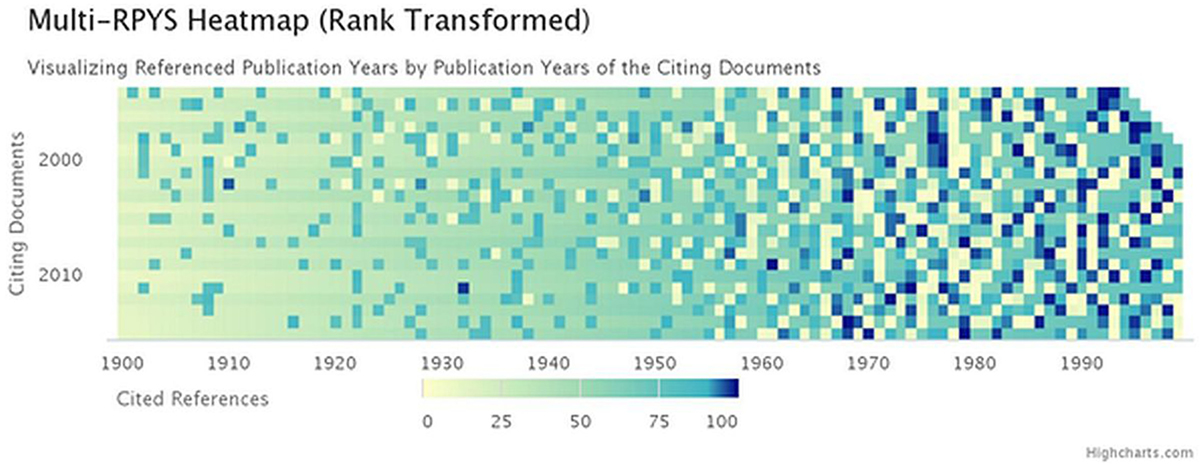

CRExplorer (at http://www.crexplorer.net) enables the user to refine Figure 9 by disambiguating the cited references (Thor et al., 2016). Elaborating on Comins and Hussey (2015), Comins and Leydesdorff (2016a) developed Multi-RPYS, which maps RPYS for a series of citing years as a heat map. Figure 10 provides the Multi-RPYS for the same set of 3,777 articles from Scientometrics.

Figure 10. 3,777 articles published in Scientometrics, downloaded on January 2, 2016. Source: http://comins.leydesdorff.net
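The construction of such a heat map can be sketched by computing one spectrum per citing year and stacking the spectra as rows of a matrix. This is only an outline under stated assumptions: it uses a centered 5-year median per row and raw deviations as cell values, and it does not reproduce any further normalization or the color mapping of the published tool. The (citing year, referenced year) pairs are invented.

```python
from collections import Counter, defaultdict
from statistics import median


def deviations(counts, cited_years, window=5):
    """Deviation of each year's count from its centered moving median."""
    half = window // 2
    row = []
    for year in cited_years:
        neighbourhood = [counts.get(year + d, 0)
                         for d in range(-half, half + 1)]
        row.append(counts.get(year, 0) - median(neighbourhood))
    return row


def multi_rpys(pairs, window=5):
    """Rows = citing years, columns = referenced publication years."""
    pairs = list(pairs)
    by_citing = defaultdict(Counter)
    for citing_year, cited_year in pairs:
        by_citing[citing_year][cited_year] += 1
    all_cited = [cited for _, cited in pairs]
    cited_years = list(range(min(all_cited), max(all_cited) + 1))
    citing_years = sorted(by_citing)
    matrix = [deviations(by_citing[c], cited_years, window)
              for c in citing_years]
    return citing_years, cited_years, matrix


# Hypothetical (citing year, referenced publication year) pairs.
toy_pairs = ([(2014, 1963)] * 4 + [(2014, 1962), (2014, 1964)]
             + [(2015, 1963)] * 5 + [(2015, 1965)])

citing_years, cited_years, matrix = multi_rpys(toy_pairs)
band_1963 = [row[cited_years.index(1963)] for row in matrix]
print(citing_years, cited_years, band_1963)
```

A referenced year that stands out in every row, like 1963 in this toy input, appears as a vertical band when the matrix is rendered as a heat map.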

Figure 10 shows the same bar in 1926, and similarly bars in 1963, 1973, 1979, etc. (Table 2). One can also see that 1963 as a referenced publication year was cited less intensively during the 1990s than in more recent years. Referencing to de Solla Price (1965), however, seems to have been obliterated by incorporation. On the top-right side of the figure, the progression of citing years generates an oblique cut-off. Two years behind this edge the dark blue blocks represent citation currency at the research front.

Table 2. Ten most-cited publications in Scientometrics (before and after machine disambiguation using CRExplorer); January 2, 2016.

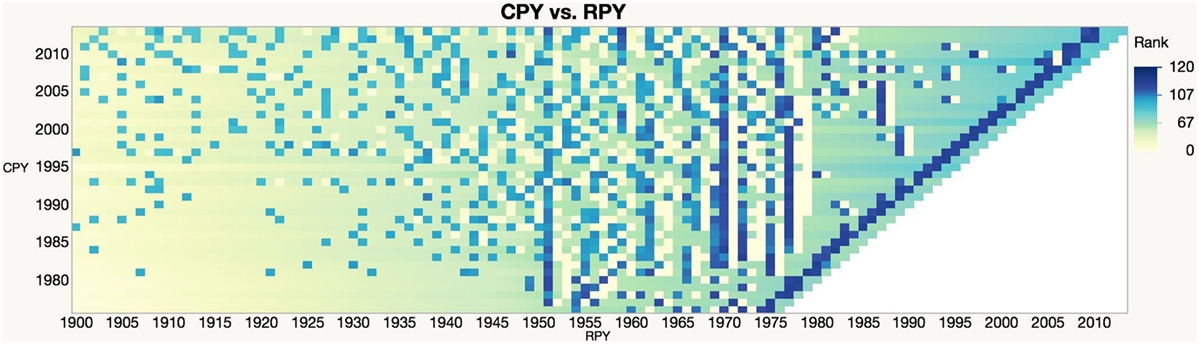

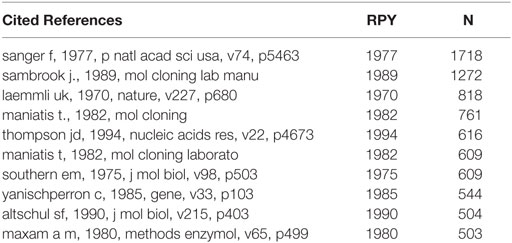

Using Gene as a biomedical journal with a focus on the research front (Baumgartner and Leydesdorff, 2014, pp. 802f.), Figure 11 shows the predominance of the research front over longer term citation in this case. However, the bars indicating longer term citation are far from absent. The top 10 most highly cited papers (Table 3) are all more than 10 years old.

Figure 11. 15,383 articles published in Gene 1996–2015, downloaded on February 14, 2016; visualized using the statistical software JMP.

Table 3. Ten most-cited publications in Gene (without disambiguation using CRExplorer); February 14, 2016.

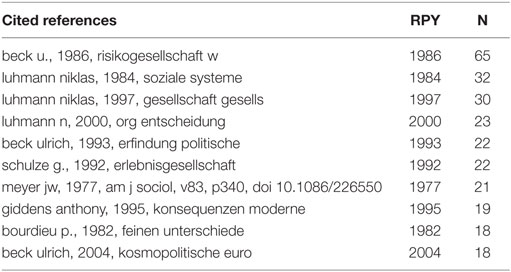

Figure 12 completes our argument by showing the results of Multi-RPYS for Soziale Welt. The research front is not present in all years, and also otherwise citation is not well organized in this journal. With a single exception, the top 10 most highly cited references are in German (Table 4).

Figure 12. 524 documents published in Soziale Welt since its first edition in 1949; downloaded on February 14, 2016. Source: http://comins.leydesdorff.net

Table 4. Ten most-cited publications in Soziale Welt (automatic disambiguation using CRExplorer); February 14, 2016.

Summary and Conclusion

We argue that the measurement of “quality” in terms of citations can further be qualified: one can, and probably should, distinguish between short-term citation currency at the research front and longer term processes of the incorporation and codification of knowledge claims into bodies of knowledge. The latter can be expected to operate selectively, whereas the former provide variation. Citation impact studies focus on short-term citation, and therefore tend to measure not epistemic quality but involvement in current discourses and sustained visibility (Moed et al., 1985).

Major sources of data and a majority of the indicators used for the evaluation of science and scientists are biased toward short-term impact. The use of JIFs, for example, can be expected to lead to a selection bias that skews the results of evaluations in favor of short-term impact. The assumption of the existence of a research front underlying JIF and many other policy-relevant indicators (de Solla Price, 1970) is backgrounded in evaluation studies. However, in this study, we have shown that even in fields with a research front (exemplified in terms of short-term citations), there is a significant presence of long-term citations. This calls for more studies (both theoretical and evaluative) examining the relationship between short-term and long-term impact. We have shown that the patterns emerging from Multi-RPYS visualizations make it possible to distinguish between short-term and long-term impact (e.g., trailing edges and vertical bands, respectively).
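The distinction between trailing edges and vertical bands can be illustrated with a minimal sketch. It assumes the usual RPYS convention of scoring each referenced publication year by the deviation of its count from the median of a five-year window, computed separately for each citing year. The function names (`rpys_deviations`, `multi_rpys`, `persistent_years`), the 80% threshold, and the toy data are hypothetical; this is not the implementation behind the figures above, and it omits any rank transformation or heat-map rendering.

```python
from collections import Counter
from statistics import median


def rpys_deviations(ref_years, window=2):
    """Deviation of each referenced-year count from the median count in the
    surrounding (2 * window + 1)-year window (assumed RPYS convention)."""
    counts = Counter(ref_years)
    first, last = min(counts), max(counts)
    deviations = {}
    for year in range(first, last + 1):
        neighborhood = [counts.get(year + d, 0) for d in range(-window, window + 1)]
        deviations[year] = counts.get(year, 0) - median(neighborhood)
    return deviations


def multi_rpys(pairs, window=2):
    """pairs: iterable of (citing_year, referenced_year).
    Returns {citing_year: {referenced_year: deviation}}, one RPYS per citing year."""
    by_citing = {}
    for citing, ref in pairs:
        by_citing.setdefault(citing, []).append(ref)
    return {c: rpys_deviations(refs, window) for c, refs in sorted(by_citing.items())}


def persistent_years(matrix, min_share=0.8):
    """Referenced years with a positive deviation in at least min_share of
    the citing years, i.e. candidates for a vertical band."""
    hits = Counter()
    for row in matrix.values():
        for year, dev in row.items():
            if dev > 0:
                hits[year] += 1
    return sorted(y for y, k in hits.items() if k / len(matrix) >= min_share)


# Toy data (invented): every citing year cites 1965 heavily (long-term),
# and also the two years just before itself (short-term, moving edge).
toy_pairs = []
for citing in (2010, 2011, 2012):
    toy_pairs += [(citing, 1965)] * 3 + [(citing, 1964), (citing, 1966)]
    toy_pairs += [(citing, citing - 1)] * 2 + [(citing, citing - 2)] * 2

bands = persistent_years(multi_rpys(toy_pairs))
print(bands)  # [1965]
```

In the toy data the recent referenced years also peak, but each of them does so in at most two of the three citing years, so they shift along the edge instead of forming a band; only 1965 persists across all citing years.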

The two processes of citation currency and citation as codification can be distinguished analytically, but they are coupled by feedback and feed-forward relations which evolve dynamically. At each moment of time, selection is structural; but the structures are also evolving, albeit at a slower pace. The dynamics of science and technology are continuously updated by variation at the research fronts. However, the relative weights of the processes of variation, selection, and retention can be expected to vary among the disciplines. There is no “one size fits all” formula. Research styles, disciplinary backgrounds, and methodological styles can be expected to vary within institutional units (e.g., departments, journals, etc.). A later concept symbol does not have to be prominently cited at the research front during the first few years (Ponomarev et al., 2014a,b; cf. Baumgartner and Leydesdorff, 2014; Ke et al., 2015). Using a sample of 40 Spanish researchers, however, Costas et al. (2011) found that such occurrences (coined “the Mendel syndrome” by these authors) are rare. Baumgartner and Leydesdorff (2014) estimated that between 5 and 10% of the citation patterns are atypical.

The transformation of the citing distribution into the cited one first generated an illusion of comparability (Wouters, 1999), but the normalization is based on assumptions about similarities in citing behavior without sociological reflection (Hicks et al., 2015). The citation distributions (“cited”) thus generated are made the subject of study in a political economy of research evaluations (Dahler-Larsen, 2014) with the argument that one follows “best practices.” We call this a political economy because the evaluations are initiated by and may have consequences for funding decisions; the production of indicators itself has become a quasi-industry. As we have shown in the case of a German-language sociology journal, Soziale Welt, studying both the citing and cited environments of the entity in focus (individuals, groups, or journals) is not only more informative for the study of science via citations, but also necessary for deciding on reference sets for evaluative purposes. This becomes especially important in evaluation exercises of research based outside the US (and in languages other than English).

Are there alternatives? First, the processes of codification of knowledge via long-term citations can be studied empirically by expanding the focus from references (currently the only way of measuring impact) to the full text of the published research as well. In the full texts, one can study the processes of obliteration by incorporation (e.g., McCain, 2015) and the different functions of referencing in arguments (e.g., Amsterdamska and Leydesdorff, 1989; Leydesdorff and Hellsten, 2005). The increased availability of full text, together with advances in textual analysis (e.g., Cabanac, 2014; Milojević, 2015) and citation-in-context studies (e.g., Small, 1982, 2011), offers promising avenues for further research.

Second, the dynamics of structure/agency contingencies are relevant to citation analysis (Giddens, 1979; Leydesdorff, 1995b). Citing can be considered as an action in which the author integrates cognitive, rhetorical, and social aspects or, in other words, reproduces epistemic, textual, and social dynamics. The structures of the sciences to which one contributes by reproducing them in instantiations (Fujigaki, 1998) are ideational and therefore latent; they are only partially reflected by individual scholars in specific texts. The texts make the different dynamics amenable to measurement (Callon et al., 1983; Leydesdorff, 1995a; Milojević, 2015).

From a structuralist perspective, the references in the texts can be modeled as variables contributing to the explanation of the dynamics of science and technology. Citations are then not reified as facts naturalistically found in and retrieved from databases. Whereas the indicators seem to be in need of an explanation (e.g., in a so-called “theory of citation”), considering these data as proxies of variables in a model turns the tables: it is not the citations that need to be explained, but the operationalization of the variables in terms of citations that has to be specified. From this perspective, issues such as normalization become part of the elaboration of a measurement theory (which is always needed). A scientometric research program can thus be formulated in relation to the history and philosophy of science (Leydesdorff, 1995a; Comins and Leydesdorff, 2016b). However, the research questions about “quality” on this research agenda differ in important respects from those raised in evaluation studies about short-term impact.

Author Contributions

LL designed the project and wrote the first version of the manuscript. LB, JC, and SM revised and rewrote parts. JC also contributed software development.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to Thomson-Reuters for providing us with JCR data.

Footnotes

- ^The distinction between review and research articles in the Web-of-Science (WoS) is based on citation statistics: “In the JCR system any article containing more than 100 references is coded as a review. Articles in ‘review’ sections of research or clinical journals are also coded as reviews, as are articles whose titles contain the word ‘review’ or ‘overview.’” See http://thomsonreuters.com/products_services/science/free/essays/impact_factor/ (retrieved February 22, 2016).

- ^On average, impact factors in sociology are an order of magnitude smaller than in psychology (Leydesdorff, 2008, p. 280).

- ^The 2012 and 2013 volumes of AJS were cited 63 and 171 times in 2014 out of a total citation count of 12,416. For ASR, the numbers are 101, 259, and 13,181, respectively. For Soziale Welt, the percentage of citations to publications from the last 2 years is 12.2%, that is, (3 + 9)/98.

- ^The numbers for 2014 are 489,761 total cites; 71,941 as the numerator of IF-2, and 182,760 for IF-5.

- ^The immediacy or Price index is the percentage of papers cited in the year of their publication (Moed, 1989; de Solla Price, 1970).

- ^A fifth order polynomial was needed for the modeling, indicating that the decay (third order) is disturbed by other processes of citation behavior.

- ^Another example of the ecological fallacy is the use of impact factors of journals as a proxy for the quality of individual papers in these journals (Alberts, 2013).

- ^The latter figure includes 1,689 published in Social Studies of Science (since 1975) and 97 in Science Studies (the previous title of the journal between 1971 and 1974).

- ^The cited references in WoS provide only the names and initials of first authors. Citations to Zuckerman with Merton as second author are therefore not included.

- ^The so-called Matthew Effect is based on the following passage from the Gospel: “For unto every one that hath shall be given, and he shall have abundance: but from him that hath not shall be taken away even that which he hath” (Matthew 25:29, King James version).

- ^WoS classifies Scientometrics additionally as “Computer Science, Interdisciplinary Applications.”

- ^Downloaded on January 2, 2016.

- ^This curve was further analyzed in considerable detail by Leydesdorff et al. (2014).
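The shares of citations going to the two most recent volumes, given in the footnote on AJS, ASR, and Soziale Welt above, follow from simple arithmetic. The sketch below reproduces the 12.2% reported for Soziale Welt and derives the corresponding values for AJS and ASR from the counts in that footnote; the helper name `recent_share` is hypothetical.

```python
def recent_share(recent_counts, total):
    """Percentage of all citations received in a year that go to the
    two most recent volumes."""
    return 100.0 * sum(recent_counts) / total


# Counts as reported in the footnote (citations received in 2014).
journals = {
    "AJS": ((63, 171), 12416),
    "ASR": ((101, 259), 13181),
    "Soziale Welt": ((3, 9), 98),
}

shares = {name: round(recent_share(recent, total), 1)
          for name, (recent, total) in journals.items()}
print(shares)  # {'AJS': 1.9, 'ASR': 2.7, 'Soziale Welt': 12.2}
```

The two American journals receive less than 3% of their 2014 citations from the two preceding volumes, against roughly 12% for Soziale Welt.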

References

Adorno, T. W., Albert, H., Dahrendorf, R., Habermas, J., Pilot, H., and Popper, K. R. (1969). Positivismusstreit in der deutschen Soziologie. Frankfurt am Main: Luchterhand.

Amsterdamska, O., and Leydesdorff, L. (1989). Citations: indicators of significance? Scientometrics 15, 449–471. doi:10.1007/BF02017065

Barnes, S. B., and Dolby, R. G. A. (1970). The scientific ethos: a deviant viewpoint. Eur. J. Sociol. 11, 3–25. doi:10.1017/S0003975600001934

Barabási, A. L., and Albert, R. (1999). Emergence of scaling in random networks. Science 286, 509–512.

Barabási, A.-L., Jeong, H., Néda, Z., Ravasz, E., Schubert, A., and Vicsek, T. (2002). Evolution of the social network of scientific collaborations. Physica A 311, 590–614.

Baumgartner, S., and Leydesdorff, L. (2014). Group-based trajectory modeling (GBTM) of citations in scholarly literature: dynamic qualities of “transient” and “sticky knowledge claims.” J. Am. Soc. Inf. Sci. Technol. 65, 797–811. doi:10.1002/asi.23009

Bensman, S. J. (2007). Garfield and the impact factor. Annu. Rev. Inform. Sci. Technol. 41, 93–155. doi:10.1002/aris.2007.1440410110

Bensman, S. J., and Wilder, S. J. (1998). Scientific and technical serials holdings optimization in an inefficient market: a LSU serials redesign project exercise. Library Resour. Tech. Serv. 42, 147–242. doi:10.5860/lrts.42n3.147

Bernstein, B. (1971). Class, Codes and Control, Vol. 1: Theoretical Studies in the Sociology of Language. London: Routledge & Kegan Paul.

Bonitz, M., Bruckner, E., and Scharnhorst, A. (1999). The Matthew index – concentration patterns and Matthew core journals. Scientometrics 44, 361–378. doi:10.1007/BF02458485

Bornmann, L., and Daniel, H.-D. (2008). What do citation counts measure? A review of studies on citing behavior. J. Doc. 64, 45–80. doi:10.1108/00220410810844150

Bornmann, L., de Moya Anegón, F., and Leydesdorff, L. (2010). Does scientific advancement lean on the shoulders of mediocre research? An investigation of the Ortega hypothesis. PLoS ONE 5:e13327. doi:10.1371/journal.pone.0013327

Bornmann, L., and Leydesdorff, L. (2015). Does quality and content matter for citedness? A comparison with para-textual factors and over time. J. Informetrics 9, 419–429. doi:10.1016/j.joi.2015.03.001

Cabanac, G. (2014). Extracting and quantifying eponyms in full-text articles. Scientometrics 98, 1631–1645. doi:10.1007/s11192-013-1091-8

Callon, M., Courtial, J.-P., Turner, W. A., and Bauin, S. (1983). From translations to problematic networks: an introduction to co-word analysis. Soc. Sci. Inform. 22, 191–235. doi:10.1177/053901883022002003

Chubin, D. E., and Moitra, S. D. (1975). Content analysis of references: adjunct or alternative to citation counting? Soc. Stud. Sci. 5, 423–441. doi:10.1177/030631277500500403

Cole, J. R., and Cole, S. (1973). Social Stratification in Science. Chicago/London: University of Chicago Press.

Coleman, J. S. (1988). Social capital in the creation of human capital. Am. J. Sociol. 94, S95–S120.

Comins, J. A., and Hussey, T. W. (2015). Detecting seminal research contributions to the development and use of the global positioning system by reference publication year spectroscopy. Scientometrics 104, 575–580.

Comins, J. A., and Leydesdorff, L. (2016a). RPYS i/o: software demonstration of a web-based tool for the historiography and visualization of citation classics, sleeping beauties and research fronts. Scientometrics 107, 1509–1517. doi:10.1007/s11192-016-1928-z

Comins, J. A., and Leydesdorff, L. (2016b). Identification of long-term concept-symbols among citations: Can documents be clustered in terms of common intellectual histories? J. Assoc. Inf. Sci. Technol. (in press).

Coser, R. L. (1975). “The complexity of roles as a seedbed of individual autonomy,” in The Idea of Social Structure. Papers in Honor of Robert K. Merton, ed. L. A. Coser (New York/Chicago: Harcourt Brace Jovanovich), 237–264.

Costas, R., van Leeuwen, T. N., and van Raan, A. F. J. (2011). The “Mendel syndrome” in science: durability of scientific literature and its effects on bibliometric analysis of individual scientists. Scientometrics 89, 177–205. doi:10.1007/s11192-011-0436-4

Cozzens, S. E. (1985). Using the archive: Derek Price’s theory of differences among the sciences. Scientometrics 7, 431–441. doi:10.1007/BF02017159

Crane, D. (1969). Social structure in a group of scientists. Am. Sociol. Rev. 36, 335–352. doi:10.2307/2092499

Dewar, M. J., Zoebisch, E. G., Healy, E. F., and Stewart, J. J. (1985). Development and use of quantum mechanical molecular models. 76. AM1: a new general purpose quantum mechanical molecular model. J. Am. Chem. Soc. 107, 3902–3909. doi:10.1021/ja00299a024

Dahler-Larsen, P. (2014). Constitutive effects of performance indicators: getting beyond unintended consequences. Public Manage. Rev. 16, 969–986. doi:10.1080/14719037.2013.770058

de Solla Price, D. J. (1965). Networks of scientific papers. Science 149, 510–515. doi:10.1126/science.149.3683.510

de Solla Price, D. J. (1970). “Citation measures of hard science, soft science, technology, and nonscience,” in Communication among Scientists and Engineers, eds C. E. Nelson and D. K. Pollock (Lexington, MA: Heath), 3–22.

de Solla Price, D. J. (1976). A general theory of bibliometric and other cumulative advantage processes. J. Am. Soc. Inform. Sci. 27, 292–306. doi:10.1002/asi.4630270505

Edge, D. (1979). Quantitative measures of communication in science: a critical overview. Hist. Sci. 17, 102–134. doi:10.1177/007327537901700202

Elkana, Y., Lederberg, J., Merton, R. K., Thackray, A., and Zuckerman, H. (1978). Toward a Metric of Science: The advent of science indicators. New York: Wiley.

Fujigaki, Y. (1998). Filling the gap between discussions on science and scientists’ everyday activities: applying the autopoiesis system theory to scientific knowledge. Soc. Sci. Inform. 37, 5–22. doi:10.1177/053901898037001001

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science 178, 471–479. doi:10.1126/science.178.4060.471

Garfield, E. (1975). The “obliteration phenomenon” in science – and the advantage of being obliterated. Curr. Contents #51/52, 396–398.

Garfield, E., and Sher, I. H. (1963). New factors in the evaluation of scientific literature through citation indexing. Am. Doc. 14, 195–201. doi:10.1002/asi.5090140304

Gilbert, G. N. (1977). Referencing as persuasion. Soc. Stud. Sci. 7, 113–122. doi:10.1177/030631277700700112

Gross, P. L. K., and Gross, E. M. (1927). College libraries and chemical education. Science 66, 385–389. doi:10.1126/science.66.1713.385

Haustein, S., Bowman, T. D., and Costas, R. (2015). Interpreting “altmetrics”: viewing acts on social media through the lens of citation and social theories. arXiv preprint arXiv:1502.05701.

Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., and Rafols, I. (2015). The Leiden Manifesto for research metrics. Nature 520, 429–431. doi:10.1038/520429a

Hoekman, J., Frenken, K., de Zeeuw, D., and Heerspink, H. L. (2012). The geographical distribution of leadership in globalized clinical trials. PLoS ONE 7:e45984.

Jorgensen, W. L., Maxwell, D. S., and Tirado-Rives, J. (1996). Development and testing of the OPLS all-atom force field on conformational energetics and properties of organic liquids. J. Am. Chem. Soc. 118, 11225–11236.

Kamihara, Y., Watanabe, T., Hirano, M., and Hosono, H. (2008). Iron-based layered superconductor La [O1–xFx] FeAs (x = 0.05–0.12) with Tc = 26 K. J. Am. Chem. Soc. 130, 3296–3297.

Kaplan, N. (1965). The norms of citation behavior: prolegomena to the footnote. Am. Doc. 16, 179–184. doi:10.1002/asi.5090160305

Ke, Q., Ferrara, E., Radicchi, F., and Flammini, A. (2015). Defining and identifying sleeping beauties in science. Proc. Natl. Acad. Sci. 112, 7426–7431. doi:10.1073/pnas.1424329112

Larivière, V., and Gingras, Y. (2010). The impact factor’s Matthew Effect: a natural experiment in bibliometrics. J. Am. Soc. Inf. Sci. Technol. 61, 424–427.

Leydesdorff, L. (1995a). The Challenge of Scientometrics: The Development, Measurement, and Self-Organization of Scientific Communications. Leiden: DSWO Press, Leiden University.

Leydesdorff, L. (1995b). The production of probabilistic entropy in structure/action contingency relations. J. Soc. Evol. Syst. 18, 339–356. doi:10.1016/1061-7361(95)90023-3

Leydesdorff, L. (2006). Can scientific journals be classified in terms of aggregated journal-journal citation relations using the journal citation reports? J. Am. Soc. Inform. Sci. Technol. 57, 601–613. doi:10.1002/asi.20322

Leydesdorff, L. (2008). Caveats for the use of citation indicators in research and journal evaluation. J. Am. Soc. Inf. Sci. Technol. 59, 278–287. doi:10.1002/asi.20743

Leydesdorff, L., and Amsterdamska, O. (1990). Dimensions of citation analysis. Sci. Technol. Human Values 15, 305–335. doi:10.1177/016224399001500303

Leydesdorff, L., and Bornmann, L. (2012). Percentile ranks and the integrated impact indicator (I3). J. Am. Soc. Inf. Sci. Technol. 63, 1901–1902. doi:10.1002/asi.22641

Leydesdorff, L., and Bornmann, L. (2016). The operationalization of “fields” as WoS subject categories (WCs) in evaluative bibliometrics: the cases of “library and information science” and “science & technology studies.” J. Assoc. Inform. Sci. Technol. 67, 707–714. doi:10.1002/asi.23408

Leydesdorff, L., Bornmann, L., Marx, W., and Milojević, S. (2014). Referenced publication years spectroscopy applied to iMetrics: scientometrics, journal of informetrics, and a relevant subset of JASIST. J. Inform. 8, 162–174. doi:10.1016/j.joi.2013.11.006

Leydesdorff, L., and Hellsten, I. (2005). Metaphors and diaphors in science communication: mapping the case of ‘stem-cell research’. Sci. Commun. 27, 64–99. doi:10.1177/1075547005278346

Leydesdorff, L., and Milojević, S. (2015). The citation impact of German sociology journals: some problems with the use of scientometric indicators in journal and research evaluations. Soziale Welt 66, 193–204. doi:10.5771/0038-6073-2015-2-193

Leydesdorff, L., and Probst, C. (2009). The delineation of an interdisciplinary specialty in terms of a journal set: the case of communication studies. J. Assoc. Inf. Sci. Technol. 60, 1709–1718.

Leydesdorff, L., and van den Besselaar, P. (1997). Scientometrics and communication theory: towards theoretically informed indicators. Scientometrics 38, 155–174. doi:10.1007/BF02461129

Lotka, A. J. (1926). The frequency distribution of scientific productivity. J. Wash. Acad. Sci. 16, 317–323.

Luukkonen, T. (1997). Why has Latour’s theory of citations been ignored by the bibliometric community? Discussion of sociological interpretations of citation analysis. Scientometrics 38, 27–37. doi:10.1007/BF02461121

Margolis, J. (1967). Citation indexing and evaluation of scientific papers. Science 155, 1213. doi:10.1126/science.155.3767.1213

Martin, B. R., and Irvine, J. (1983). Assessing basic research – some partial indicators of scientific progress in radio astronomy. Res. Policy 12, 61–90. doi:10.1016/0048-7333(83)90005-7

Marx, W., and Bornmann, L. (2014). Tracing the origin of a scientific legend by reference publication year spectroscopy (RPYS): the legend of the Darwin finches. Scientometrics 99, 839–844. doi:10.1007/s11192-013-1200-8

Marx, W., Bornmann, L., Barth, A., and Leydesdorff, L. (2014). Detecting the historical roots of research fields by reference publication year spectroscopy (RPYS). J. Assoc. Inform. Sci. Technol. 65, 751–764. doi:10.1002/asi.23089

McCain, K. W. (2015). Mining full-text journal articles to assess obliteration by incorporation: Herbert A. Simon’s concepts of bounded rationality and satisficing in economics, management, and psychology. J. Assoc. Inform. Sci. Technol. 66, 2187–2201. doi:10.1002/asi.23335

Merton, R. K. (1942). Science and technology in a democratic order. J. Legal Polit. Sociol. 1, 115–126.

Merton, R. K. (1957). Priorities in scientific discovery: a chapter in the sociology of science. Am. Sociol. Rev. 22, 635–659. doi:10.2307/2089193

Merton, R. K. (1968). The Matthew effect in science. Science 159, 56–63. doi:10.1126/science.159.3810.56

Merton, R. K. (1973a). The Sociology of Science: Theoretical and Empirical Investigations. Chicago/London: University of Chicago Press.

Merton, R. K. (1973b). “Social conflict over styles of sociological work,” in The Sociology of Science: Theoretical and Empirical Investigations, ed. N. W. Storer (Chicago/London: University of Chicago Press), 47–69.

Merton, R. K. (1988). The Matthew effect in science, II: cumulative advantage and the symbolism of intellectual property. Isis 79, 606–623. doi:10.1086/354848

Milojević, S. (2015). Quantifying the cognitive extent of science. J. Inform. 9, 962–973. doi:10.1016/j.joi.2015.10.005

Milojević, S., Sugimoto, C. R., Larivière, V., Thelwall, M., and Ding, Y. (2014). The role of handbooks in knowledge creation and diffusion: a case of science and technology studies. J. Inform. 8, 693–709. doi:10.1016/j.joi.2014.06.003

Moed, H. F. (1989). Bibliometric measurement of research performance and Price’s theory of differences among the sciences. Scientometrics 15, 473–483. doi:10.1007/BF02017066

Moed, H. F. (2010). Measuring contextual citation impact of scientific journals. J. Inform. 4, 265–277. doi:10.1016/j.joi.2010.01.002

Moed, H. F., Burger, W. J. M., Frankfort, J. G., and van Raan, A. F. J. (1985). The use of bibliometric data for the measurement of university research performance. Res. Policy 14, 131–149. doi:10.1016/0048-7333(85)90012-5

Moravcsik, M. J., and Murugesan, P. (1975). Some results on the function and quality of citations. Soc. Stud. Sci. 5, 86–92. doi:10.1177/030631277500500106

Mulkay, M., Potter, J., and Yearley, S. (1983). “Why an analysis of scientific discourse is needed,” in Science Observed: Perspectives on the Social Study of Science, eds K. D. Knorr and M. J. Mulkay (London: Sage), 171–204.

Ponomarev, I. V., Williams, D. E., Hackett, C. J., Schnell, J. D., and Haak, L. L. (2014a). Predicting highly cited papers: a method for early detection of candidate breakthroughs. Technol. Forecast. Soc. Change 81, 49–55. doi:10.1016/j.techfore.2012.09.017

Ponomarev, I. V., Lawton, B. K., Williams, D. E., and Schnell, J. D. (2014b). Breakthrough paper indicator 2.0: can geographical diversity and interdisciplinarity improve the accuracy of outstanding papers prediction? Scientometrics 100, 755–765. doi:10.1007/s11192-014-1320-9

Rafols, I., Leydesdorff, L., O’Hare, A., Nightingale, P., and Stirling, A. (2012). How journal rankings can suppress interdisciplinary research: a comparison between innovation studies and business & management. Res. Policy 41, 1262–1282. doi:10.1016/j.respol.2012.03.015

Robinson, W. S. (1950). Ecological correlations and the behavior of individuals. Am. Sociol. Rev. 15, 351–357. doi:10.2307/2087176

Scharnhorst, A., and Garfield, E. (2011). Tracing scientific influence. J. Dyn. Socio-Econ. Syst. 2, 1–33.

Schutz, A. (1967). The Phenomenology of the Social World. Evanston, IL: Northwestern University Press.

Seglen, P. O. (1992). The skewness of science. J. Am. Soc. Inform. Sci. 43, 628–638. doi:10.1002/(SICI)1097-4571(199210)43:9<628::AID-ASI5>3.0.CO;2-0

Small, H. (1978). Cited documents as concept symbols. Soc. Stud. Sci. 8, 113–122. doi:10.1177/030631277800800305

Small, H. (1982). “Citation context analysis,” in Progress in Communication Sciences, eds B. J. Dervin and M. J. Voight (Norwood, NJ: Ablex), 287–310.

Small, H. (2011). Interpreting maps of science using citation context sentiments: a preliminary investigation. Scientometrics 87, 373–388. doi:10.1007/s11192-011-0349-2

Thor, A., Marx, W., Leydesdorff, L., and Bornmann, L. (2016). Introducing CitedReferencesExplorer: a program for reference publication year spectroscopy with cited references disambiguation. J. Inform. 10, 503–515. doi:10.1016/j.joi.2016.02.005

Van den Besselaar, P. (2001). The cognitive and the social structure of STS. Scientometrics 51, 441–460. doi:10.1023/A:1012714020453

van Eck, N. J., and Waltman, L. (2014). CitNetExplorer: a new software tool for analyzing and visualizing citation networks. J. Inform. 8, 802–823. doi:10.1016/j.joi.2014.07.006

van Eck, N. J., Waltman, L., van Raan, A. F., Klautz, R. J., and Peul, W. C. (2013). Citation analysis may severely underestimate the impact of clinical research as compared to basic research. PLoS ONE 8:e62395. doi:10.1371/journal.pone.0062395

van Raan, A. F. J. (2004). Sleeping beauties in science. Scientometrics 59, 467–472. doi:10.1023/B:SCIE.0000018543.82441.f1

Wagner, C., Roessner, J. D., Bobb, K., Klein, J., Boyack, K., Keyton, J., et al. (2009). Evaluating the Output of Interdisciplinary Scientific Research: A Review of the Literature. Unpublished Report to National Science Foundation. Washington, DC: NSF.

Waltman, L., and van Eck, N. J. (2012). A new methodology for constructing a publication-level classification system of science. J. Am. Soc. Inf. Sci. Technol. 63, 2378–2392. doi:10.1002/asi.22748

Woolgar, S. (1991). Beyond the citation debate: towards a sociology of measurement technologies and their use in science policy. Sci. Public Policy 18, 319–326.

Wouters, P. (1999). The Citation Culture. Unpublished Ph.D. thesis, University of Amsterdam, Amsterdam.

Wyatt, S., Milojević, S., Park, H. W., and Leydesdorff, L. (2017). “The intellectual and practical contributions of scientometrics to STS,” in Handbook of Science and Technology Studies, 4th Edn, eds U. Felt, R. Fouché, C. Miller, and L. Smith-Doerr (Boston, MA: MIT Press), 87–112.

Keywords: citation, symbol, historiography, RPYS, obliteration by incorporation

Citation: Leydesdorff L, Bornmann L, Comins JA and Milojević S (2016) Citations: Indicators of Quality? The Impact Fallacy. Front. Res. Metr. Anal. 1:1. doi: 10.3389/frma.2016.00001

Received: 04 April 2016; Accepted: 07 July 2016;

Published: 02 August 2016

Edited by:

Chaomei Chen, Drexel University, USA

Reviewed by:

Zaida Chinchilla-Rodríguez, Spanish National Research Council, Spain

Henk F. Moed, Sapienza University of Rome, Italy

Copyright: © 2016 Leydesdorff, Bornmann, Comins and Milojević. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Loet Leydesdorff, loet@leydesdorff.net
