Conveying Environmental Information to Deafblind People: A Study of Tactile Sign Language Interpreting

- Sign Language Section, Department of Linguistics, Stockholm University, Stockholm, Sweden

Many deafblind people use tactile sign language and interpreters in their daily lives. Because of their users’ hearing and sight status, the role of interpreters involves not only translating the content expressed by other deaf or hearing people, but also conveying environmental information (i.e., multimodal communication regarding what is happening at a given moment to be able to understand the context). This paper aims to contribute to the field of tactile sign language interpreting by describing how two Tactile Swedish Sign Language interpreters convey environmental information to two deafblind women in a particular situation, that is, a visit to a cathedral guided by a hearing Norwegian speaker. We expect to find various strategies including the use of haptic signs (i.e., a system of signs articulated on the body of the deafblind person that aims to provide environmental and interactional information). After summarizing the small amount of existing research on the issue to date, we present our data and how they were annotated. Our analysis shows that a variety of strategies are used, including Tactile Swedish Sign Language, using locative points to show locations with some type of contact with the body of deafblind individuals, depicting shapes on the palm of the hand of deafblind individuals, using objects to depict shapes, touching elements of the cathedral, such as surfaces, with the hands or feet, and walking around. Some of these strategies are more frequent than others and some strategies are also used in combination, whereas others are used in isolation. We did not observe any use of haptic signs to convey environmental information in our data, which calls for further research on the criteria that determine when this strategy is used in a particular situation.

Introduction

Deafblind people may have lost hearing and sight to different degrees, and this may have occurred at different moments of their lives. That is, some people may have been born with congenital deafblindness while others may have acquired it later after an illness or an accident. Also, there are people who are deaf and later become blind, blind people who later become deaf or people who progressively lose both senses (Raanes and Slettebakk Berge, 2017). This combined hearing and sight status (which in some cases may also be associated with other physical or intellectual impairments) makes it harder for this population to participate in our society, to develop themselves, to have access to education, etc. As a matter of fact, the degree of integration into our society varies greatly depending on the place where the deafblind person lives (i.e., there are some countries or regions which are more advanced than others regarding integration policies for this population), on the existence of interpreting services (i.e., availability of interpreters and mediators), and on the person (e.g., preferred communication system, tactile sign language training, degree of autonomy, etc.), among other factors.

In Sweden (as in the other Nordic countries), the services available to deafblind people vary greatly depending on where the deafblind person lives. There are more interpreting, social and educational services available in big cities than in smaller and isolated villages. Deaf pupils who attend one of the five state deaf schools1 and start losing sight receive specific training in tactile sign language and orientation. Deaf signing adults who progressively lose sight receive information about their condition, rehabilitation and training in tactile sign language. Also, deafblind people can meet peers and have a sense of community in bigger cities, whereas this is not the case for deafblind people living in small villages, who may live in isolation from their peers.

This paper is organized as follows. In section “Specifics of Tactile Sign Language Interpreting,” we give an account of the specificities of tactile sign language interpreting, as compared to spoken and visual sign language interpreting. In section “Research on Tactile Sign Language Interpreting,” we summarize existing research in the field of tactile sign language interpreting. In section “Materials and Methods,” we describe the dataset, the conditions under which it was recorded, the participants of the study and our annotation methodology. In section “Results,” we present the results of our analysis concerning the different strategies used by tactile sign language interpreters to convey environmental information in this given setting. Finally, we discuss our findings and propose future avenues for research in section “Discussion and Further Research.”

Specifics of Tactile Sign Language Interpreting

Some deafblind people know the tactile sign language of their countries2 and use tactile sign language interpreters in their daily lives. What is expected from these professionals and the necessary conditions to develop their work is quite different from their colleagues working with spoken languages and visual sign languages. When it comes to the setting, spoken language interpreters (who practice unimodal interpreting in the terms of Nicodemus and Emmorey, 2015) may not be visible and users may hear their voices through headphones (e.g., in a conference). The contact between these professionals and their users may therefore be non-existent. Interpreters working with visual sign languages need to be placed in a position from which they are visible when they translate into a signed language, either from a spoken language [i.e., bimodal interpreting in Nicodemus and Emmorey’s (2015) terms], or a source sign language (i.e., unimodal interpreting). One interpreter at a time will suffice for the audience to access information, either signed or spoken. However, tactile sign language interpreting can be both bimodal within the same language (i.e., the interpreter adapts the visual sign language to its tactile form, or vice versa) and bimodal with different languages (i.e., the interpreter translates from a spoken language to a tactile sign language, or vice versa). The setting in which tactile sign language interpreters work is different from their colleagues working with spoken or signed languages because the former work one-to-one with deafblind people. The interpreter needs to be placed or seated close enough to the deafblind person so that their hands are in contact in order for information to be exchanged.

The amount and type of information transferred is also different across these three profiles. The job of spoken language interpreters is to transfer information from one language to the other, which is exclusively perceived by the auditory channel, whereas the role of visual sign language interpreters also involves conveying environmental information (or “multimodal communication”) which is exclusively perceived by the auditory channel, such as students grimacing and covering their ears with their hands because of the chalkboard screech in a classroom. In tactile sign language interpreting, the role of interpreters goes further than that as deafblind people will not perceive environmental information which is conveyed either by the visual or the auditory channel. In the previous example of a classroom, the interpreter working with a deafblind student will have to let him/her know that there has been this chalkboard screech and, as a result, students have covered their ears with their hands. Furthermore, the interpreter will have previously had to convey environmental information about the class setting, where the teacher and the students are placed, etc.

Because of the different profiles of deafblind people, the strategies used by interpreters vary greatly. If deafblind people have some residual vision and/or residual hearing, they may use it to gain access to the world and to a particular situation for capturing both linguistic and environmental information. For instance, some deafblind people may produce clear speech and perceive it to some extent, and they may perceive environmental information through the use of haptic signs (Lahtinen and Palmer, 2008) produced by interpreters (Raanes and Slettebakk Berge, 2017). However, when deafblind people have almost no sight or hearing, face-to-face communication is articulated through touch most of the time. In this case, the two strategies most frequently used by interpreters in the Nordic countries to convey both linguistic and environmental information are tactile sign language and haptic signs.

Tactile sign languages are an adaptation of the visual sign languages which are used by the different Deaf communities. These communication systems “[were] largely unknown prior to the 1980s” (Willoughby et al., 2020). The degree and type of adaptation “differs depending on both the individual deafblind signer and the communities in which they are embedded” (Willoughby et al., 2018: 238). For instance, visual sign languages convey some part of the syntax and pragmatic information using non-manual elements. Non-manuals can be perceived by some deafblind people, but not all of them. Therefore, these people need to receive this information through tactile means [e.g., changing word order, adding a lexical sign to indicate a question, etc.; see Collins and Petronio (1998), Mesch (2001)]. Tactile sign languages also require that individuals are close to one another as some signs may be articulated on the other person’s face, hands or body (Raanes, 2011). Furthermore, reception of tactile sign languages may be one-handed (e.g., in the United States, Sweden, and France) or two-handed (e.g., Norway and Australia), and this variation depends on different factors including the language itself or the community using it, among others (Willoughby et al., 2018).

Haptic signs are a system of signs, used in Nordic countries, which are articulated on the body of the deafblind person (e.g., on his/her back). They aim to provide environmental information (such as the layout of a room) and interactional information (such as feelings and emotional states of others) (Raanes and Slettebakk Berge, 2017). There are 225 conventional signs presented on the website of the Nationellt kunskapscenter för dövblindfrågor (Swedish Knowledge Center for Deafblind Issues, NKCDB)3. One of the advantages of haptic signs is that they can be used while other types of information (e.g., signed information) are being transferred. Another advantage is that they can be “used in crowded situations or other contexts where there is not the space or time to assume a normal tactile signing posture – for example, to quickly inform a deafblind person about what food or drink options are on offer at a function” (Willoughby et al., 2018: 253). In contrast to tactile sign languages, which are also used naturally by deafblind signers for communication, haptic signs are exclusively used by sighted tactile sign language interpreters or relatives.

Research on Tactile Sign Language Interpreting

Although there are many aspects of tactile sign languages which are still unresearched, this is an issue that is attracting more and more attention from scholars, especially with respect to tactile American Sign Language (ASL), tactile Swedish Sign Language (STS), tactile French Sign Language (LSF), tactile Norwegian Sign Language (NTS), tactile Japanese Sign Language (JSL), tactile Australian Sign Language (Auslan) (see Willoughby et al., 2018 for an overview), and tactile Italian Sign Language (LIS) (Checchetto et al., 2018). Furthermore, there are some research initiatives on the practice of tactile sign language interpreters (Frankel, 2002; Metzger et al., 2004; Edwards, 2012; Slettebakk Berge and Raanes, 2013; Raanes and Slettebakk Berge, 2017). The first three initiatives focus on tactile ASL, which remains the best-explored tactile sign language in the literature at present, and the last two initiatives deal with NTS. We summarize these five papers below.

Frankel (2002) investigates how negation was interpreted from visual ASL into tactile ASL by two deaf certified interpreters. She focuses on the “choices [...] made about negation as well as structural accuracy pertaining to message equivalence” (Frankel, 2002: 169), including three different negation signs. Her analysis shows that there is significant variation in the expression of negation, on the one hand, and between interpreters depending on their experience, on the other. Metzger et al. (2004) compare the interpreters’ (non-)rendition in three different modes (i.e., visual ASL, tactile ASL, and spoken English) and three discourse genres (i.e., a medical interview, a college classroom, and a panel). They find that in addition to the translation of utterances, there are interpreted self-generated utterances (i.e., not produced by one of the participants in the situation) that contribute to the interactional management between interlocutors (e.g., the interpreter identifies the person who takes the turn) and the relaying of some aspect of the exchange (e.g., the interpreter repeats what one interlocutor said to ensure that the message is transferred). Interpreted self-generated utterances occur in the three modes and the three discourse genres, but the form and the strategies employed are not the same.

Edwards’ (2012) paper is the only one which does not adopt a purely linguistic perspective; rather it adopts a linguistic anthropological one. From the framework of the practice approach to language (Hanks, 2005), Edwards studies the interaction between a deafblind woman and her interpreter in a visit to a park in Seattle. In order for the deafblind woman to “[build] continuity between the fading visual world she is relinquishing and the tactile world into which she is venturing” (Edwards, 2012: 61), the interpreter mainly uses classifier constructions4 to depict the different activities that are going on in the park. The author describes some challenges that occur in the process. For instance, there is a moment in which the deafblind person lacks some knowledge about a specific point and the interpreter needs to repeat the information to ensure comprehension. Also, the interpreter’s discourse may have some implicit information which may not be perceived by the deafblind person (e.g., the interpreter describes that a person is wearing expensive clothes from a specific brand, but s/he also implies that this person is posh). The interpretation described in Edwards’ paper, which is not related to linguistic information but to environmental information, is important for deafblind individuals to enhance their integration and participation in society.

Slettebakk Berge and Raanes (2013) investigate how seven tactile NTS interpreters coordinate and express turn-taking in an interpreter-mediated meeting with five deafblind persons, and Raanes and Slettebakk Berge (2017) describe the use of haptic signs using the same dataset. Because of their different degrees of hearing and sight (two people were completely deaf and blind, whereas the other three had some residual hearing), different interpreting strategies were used during the meeting. Spoken utterances were produced and perceived by the three deafblind participants who had some residual hearing, whereas the other two members communicated using tactile NTS. Moreover, embodied techniques (i.e., gestures articulated on the deafblind persons’ back) were used before the formal meeting to let participants know where the others were sitting. The analysis of the dataset showed that interpreters use three patterns of action to coordinate turn-taking including “identifying the addressee of the remarks; actively negotiating speaking turns; and exchanging mini-response signals” (Slettebakk Berge and Raanes, 2013: 358), and these patterns are mediated through the use of haptic signs (Raanes and Slettebakk Berge, 2017). Haptic signs were used to let the chairwoman know who wants to take the turn, to indicate that one participant must wait because somebody else is holding the turn, and to let the participant know that s/he has the attention of others or the other’s reaction to what s/he is saying. These two studies conclude by underlining the importance of building trusting relationships between interpreters, and between interpreters and deafblind individuals, to ensure the communication flow.

Our paper aims to contribute to the literature about tactile interpreters’ professional practice. We investigate the rendition by two Tactile Swedish Sign Language (TSTS) interpreters working with two deafblind women participating in a guided visit to a cathedral. Similar to Slettebakk Berge and Raanes (2013) and Raanes and Slettebakk Berge (2017), the two hearing and sighted interpreters translate spoken utterances (produced by the hearing guide) and convey environmental information about the cathedral. We focus on how this environmental information is transferred, as it not only gives the two deafblind women access to this particular situation, but also helps them move from their previous visual experience to the tactile world (Edwards, 2012). As far as we know, this is the first study which deals with this specific situation (i.e., a guided visit for hearing tourists adapted to deafblind people) and with TSTS interpreting. Our hypothesis is that because of the objective of our study (i.e., investigating how environmental information is conveyed) and the country of both interpreters and users, we will observe a frequent use of different haptic signs.

Materials and Methods

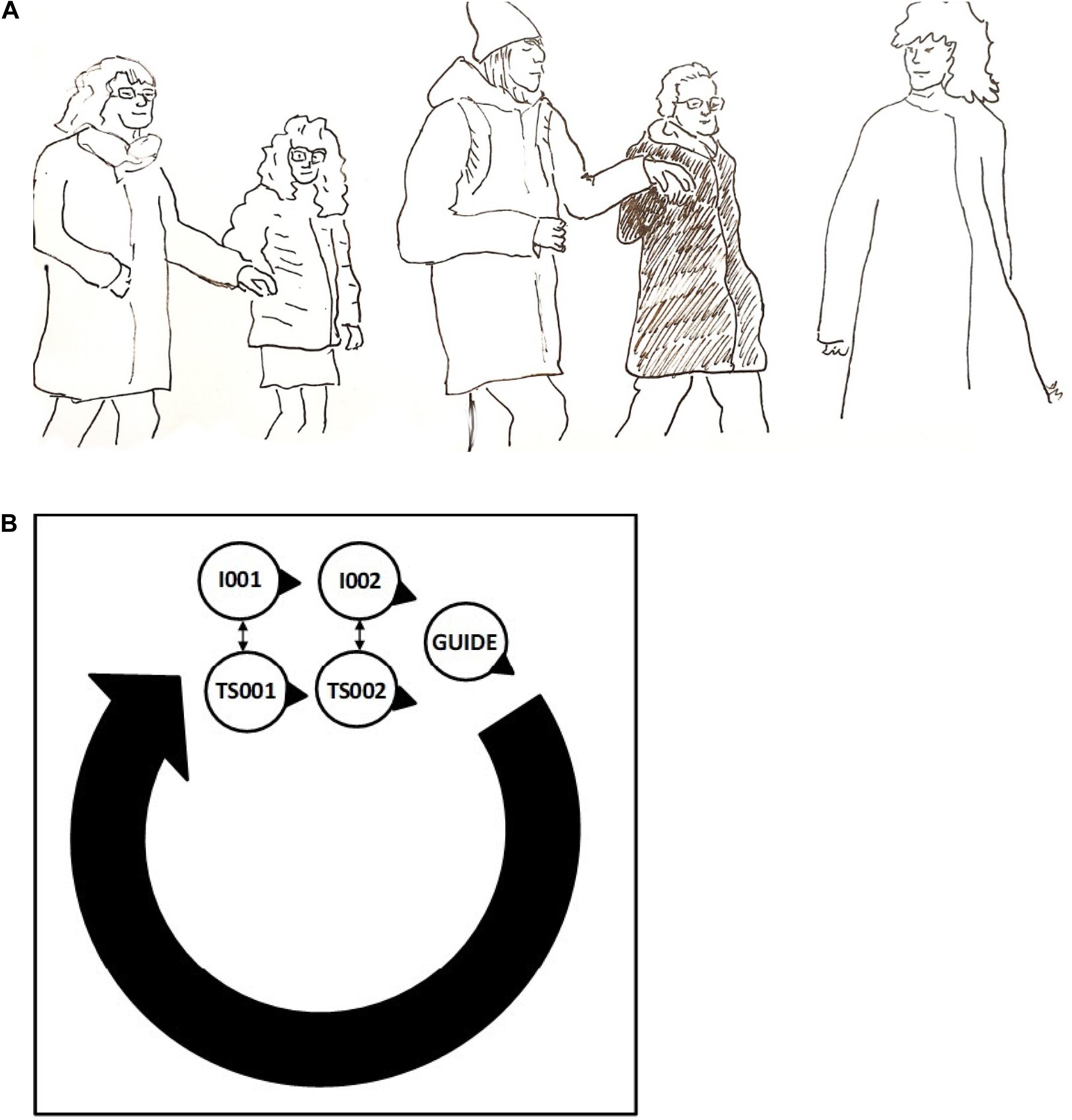

The data analyzed in this study were extracted from a cross-linguistic project on Tactile Norwegian Sign Language and Tactile Swedish Sign Language (Raanes and Mesch, 2019). Four deafblind individuals (two women from Sweden, and one woman and one man from Norway) and eight interpreters (four from Sweden and four from Norway) participated in the recordings. The four deafblind participants are aged above 50. They were deaf users of STS and NTS who started losing sight at different stages of their adulthood. The interpreters recorded for this study are certified professionals who usually work with these four people.

The data recorded consist of 26 h in total, and different aspects of deafblind communication and settings are covered. These aspects include communication between deafblind peers using the same tactile sign language, communication between deafblind peers using the two tactile sign languages of the project, communication between deafblind and sighted individuals using tactile sign language, and tactile sign language interpreting. Recordings took place both indoors and outdoors. One of the outdoor settings is a guided visit to Nidaros Cathedral in Trondheim (Norway), which lasts roughly 1 h. Since the communication modes of deafblind people show a high degree of variation and, to the best of our knowledge, tactile interpretation in a guided visit has not been studied before, we considered that it was interesting to examine how environmental information was naturally conveyed in this setting.

Nidaros Cathedral’s visitor services are concerned with accessibility, so this guided visit is adapted to the needs of deafblind visitors who come with their tactile sign language interpreters. The tour and the contents had been previously prepared. Eli Raanes from the Norwegian University of Science and Technology had meetings with the staff in which they discussed what should be included in the tour and how it could mix history, pilgrimage, church building, and architectural style. Two months before our recordings took place, the visit was tested as an exercise for the course “Practice in communication and interpretation for the deafblind” in which deafblind people, interpreting students and supervisors participated. After this test, some details were adjusted such as adding more information about stonemasons and concrete tactile experiences related to the construction of the cathedral and the architectural styles. The guide understood what was expected from the visit and suggested, among other things, that deafblind participants and their professional interpreters should start the visit outside the building, at the Western Front Square. Then the guide, who was a hearing-sighted Norwegian speaker, would welcome them there before going inside the cathedral.

The visit was recorded with four cameras, which were moved around by one of the authors of this paper and three research assistants, totaling 10 h of data. The cameras were mainly directed at the participants and sometimes at the environment. All the participants of the project were informed about the study and signed a consent form in which they gave their permission to one of the authors of this paper and to other researchers to use the video-recordings for research and teaching purposes. Because of the type of research which is being conducted, data cannot be anonymized (i.e., faces cannot be blurred because they convey additional information), which is why we are using codes to name the participants (see next paragraph). Metadata about each participant were also collected, but they are exclusively accessible for research purposes as they are sensitive information.

The participants were two deafblind women accompanied by their hearing-sighted tactile sign language interpreters, all from Sweden and using TSTS. The two deafblind women (hereafter TS001 and TS002) are aged 50 and 58. TS001 started to use tactile sign language 15 years ago, but everyday use has mostly been for the past 2 years (since she has limited vision she has been moving between STS and TSTS depending on the situation). She uses one hand or both hands for the perception of signs. TS002 has used TSTS for 10 years. She has a preference for one-handed perception of signs (see Mesch, 2013). The two TSTS interpreters (hereafter I001 and I002) are aged 49 and 58, roughly the same age as the two deafblind women. They are experienced tactile sign language interpreters and have worked in this field for approximately 30 years.

The research presented in this paper is corpus-driven: that is, we analyze the videos without any prior theoretical interpretation and we do not aim to prove any existing theory (McEnery et al., 2006). Our sample was annotated using ELAN5, which is the most frequently used software for the annotation of sign language data. It is free and open source, and continuously updated with improvements and new functionalities. It allows the researcher to create, edit, visualize and search annotations for video and audio data. We created five tiers for each deafblind – TSTS interpreter dyad:

• Environmental information: this tier is independent. It contains the type of environmental information being conveyed to and/or perceived by the deafblind individual. For instance, the description of the elements of a fresco painting using objects.

• Strategies: this tier is dependent on the Environmental information tier. It contains specific information on the means used to convey and/or perceive environmental information. In the fresco example, this tier records what type of objects are touched.

• Gloss-DH: this tier is independent. It is used to annotate the signs articulated by the TSTS interpreter using her dominant hand.

• Gloss-NonDH: this tier is independent. It is used to annotate the signs articulated by the TSTS interpreter using her non-dominant hand.

• Comments: this tier is independent. It is used to write comments about aspects in the video which were relevant for this study.
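
The tier layout above can be sketched as a small data structure. The following Python snippet is only an illustration, not the authors' ELAN template: the class name `Tier`, the function `tiers_for_dyad`, and the dyad label format are hypothetical, and only the five tier names and their dependency relations come from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Tier:
    """One annotation tier; `parent` is None for an independent tier."""
    name: str
    parent: Optional[str]
    content: str


def tiers_for_dyad(dyad: str) -> List[Tier]:
    """Return the five tiers described in the text for one dyad.

    `dyad` is a hypothetical label such as "TS001-I001"; it is only used
    to keep tier names unique across the two dyads.
    """
    def label(name: str) -> str:
        return f"{name}@{dyad}"

    env = label("Environmental information")
    return [
        Tier(env, None, "type of environmental information conveyed or perceived"),
        Tier(label("Strategies"), env, "means used to convey or perceive it"),
        Tier(label("Gloss-DH"), None, "signs of the interpreter's dominant hand"),
        Tier(label("Gloss-NonDH"), None, "signs of the interpreter's non-dominant hand"),
        Tier(label("Comments"), None, "remarks relevant for the study"),
    ]


if __name__ == "__main__":
    for tier in tiers_for_dyad("TS001-I001"):
        kind = "independent" if tier.parent is None else f"depends on {tier.parent}"
        print(f"{tier.name}: {kind}")
```

In this sketch the only dependent tier is Strategies, whose parent is the Environmental information tier of the same dyad, mirroring the description above.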

Results

The analysis of our data showed that the two TSTS interpreters employed six different strategies to convey environmental information to the two deafblind women (the number of occurrences in which each strategy was used is added in parentheses):

• using TSTS (100),

• using locative points (18),

• drawing shapes on the palm of the hand of the deafblind women (3),

• giving them objects provided by the guide (9),

• having them touch different elements of the environment (38), and

• walking around with them (31).
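
To make the relative frequency of these strategies easier to compare, the counts listed above can be turned into shares of all annotated occurrences. The short Python sketch below is an illustration added for the reader; the names `STRATEGY_COUNTS` and `strategy_shares` are hypothetical, and the only data used are the six counts reported in the list. Because strategies may be used in combination, the shares describe strategy occurrences, not stretches of interpreting time.

```python
from typing import Dict

# Occurrence counts copied from the list above; the dictionary keys are
# shortened labels chosen for this sketch.
STRATEGY_COUNTS: Dict[str, int] = {
    "using TSTS": 100,
    "locative points": 18,
    "drawing shapes on the palm": 3,
    "objects provided by the guide": 9,
    "touching elements of the environment": 38,
    "walking around": 31,
}


def strategy_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each strategy's share of all strategy occurrences."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no occurrences to compute shares from")
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    print(f"total occurrences: {sum(STRATEGY_COUNTS.values())}")
    shares = strategy_shares(STRATEGY_COUNTS)
    # List strategies from most to least frequent.
    for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
        print(f"{name}: {share:.1%}")
```

The six counts sum to 199 occurrences, so TSTS alone accounts for roughly half of them.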

These strategies can be used in isolation or in combination. These combinations are instances of multimodal interpreting, i.e., different strategies are used simultaneously by the same interpreter, which is a practice that has already been reported in other papers about tactile sign language interpreting (Slettebakk Berge and Raanes, 2013; Raanes and Slettebakk Berge, 2017). In what follows, we explain these strategies and exemplify them with excerpts from our dataset. The first three are related to manual activity (i.e., the hands produce signs or depict shapes, see sections “Using Tactile Swedish Sign Language,” “Using Locative Points,” and “Drawing Shapes on the Palm of the Hand of the Deafblind Women”), whereas the other three strategies rely on objects that copy elements of the cathedral (see section “Use of Objects”) or on the cathedral itself (see sections “Touching Elements of the Setting” and “Walking Around”).

Using Tactile Swedish Sign Language

TSTS is the most frequently used strategy across the dataset. TSTS interpreters employ this strategy to translate linguistic information (i.e., the explanations of the hearing guide and the answers to the questions asked by the deafblind women) and to convey environmental information. When the interpreters refer to different elements of the cathedral by pointing at them, there is always a short description in TSTS, either before or after the pointing (e.g., POINT.THERE POINT.THERE DIFFERENT COLOR6 “The windows on the left and on the right have different colors”). When the interpreters want to describe something, e.g., the shape of the cathedral windows, they use both lexical and depicting signs. Lexical signs are tokens that are fully conventionalized and could be listed as a dictionary entry (Johnston, 2019). Examples from our data include the signs WINDOW “window,” COLOR “color” and BLUE “blue.” Depicting signs are partly conventionalized, that is, they cannot be listed as dictionary entries as their meaning greatly depends on the context in which they are used (Johnston, 2019). In our data, these signs are used to depict columns or windows; but the same tokens could be used in a different context to depict other elements with a similar cylindrical or rounded shape.

Tactile Swedish Sign Language is also used to give commands to the deafblind women or to refer to the present situation (e.g., NOW “now,” COME “come along,” FOLLOW “follow me,” THIS-DIRECTION “go in this direction”). This type of information is very important for deafblind individuals to understand what is going on. For instance, the deafblind women needed to be told when the guide started moving as they could not see her. Sighted people would follow instinctively without any command, but deafblind people need to be warned that this movement or action has taken place so that they can react accordingly. In this situation, as in many others, deafblind people need to be informed in advance; they cannot just be pulled toward a place without previous notice or explanation.

In example 1, I002 describes the location of the windows using her (dominant) right hand. TS002 perceives the translation with her left hand. She asks for the exact location using her flat hand and pointing backward over her right shoulder. After pointing toward the windows, I002 continues to describe them (see first two interventions of I002 in example 1). However, TS002 asks again for clarification using the sign WHERE, turning around and moving her body toward the windows as she is relying on her residual sight to perceive their aspect. Meanwhile, I002 points toward the windows and afterward keeps on describing the shape and color (see the remainder of example 1).

Example 1.

| Participant | TSTS gloss | English translation |
| --- | --- | --- |
| I002 | AT-TOP DS-column POINT.THERE PRO1 SAY EARLIER | The column is located there, at the top, as I said before. |
| TS002 | POINT.THERE(flathand)—hold | There? |
| I002 | POINT.THERE | There. |
| TS002 | YES | Yes. |
| I002 | WINDOWS ROUND POINT(short round movement) | There are round windows. |
| TS002 | WHERE [deafblind moves her body toward the windows] | Where? |
| I002 | POINT.THERE POINT(short round movement) DIFFERENT COLOR BLUE RED BEAUTIFUL DS-windows | There, there. They have different colors like blue and red, and their shape is beautiful. |

In example 2, I001 is translating what the guide says about the size of the windows. I001 uses her right hand (which is her dominant hand) to articulate signs and TS001 uses her left hand to perceive them. I001 holds some of her signs in order to mark a pause when sentences are finished. The guide wants the visitors to understand how big the rose window is and asks them to follow her for a walk in a circle (see section “Walking Around” for further details). Both I001 and TS001 start following her. The interpreter’s right hand remains the point of attachment and guide for TS001 who keeps her left hand positioned on I001’s right hand. After some steps, I001 makes a short stop and describes the size of the rose window with some lexical signs. TS001 checks whether she understood by pointing at the window at the same time.

Example 2.

| Participant | TSTS gloss | English translation |
| --- | --- | --- |
| I001 | PRO1.FL WILL UP SEE HOW TRUE LARGE POINT.TRACK.ROUND SHOW HOW LARGE TO-BE FLOWER—hold WINDOWS PRO1 SHOW COME-ATTENTION FOLLOW [Both walk in a circle] [short stop] POINT.TRACK.ROUND SUCH LARGE SUCH WINDOWS POINT.THERE—hold | We will go around and see the real size of the rounded shape. We will see how big the flower shape of the windows is. I will show you. Come along! Follow me! [both walk in a circle]. This is how rounded and how big this rose window is. This window. |
| TS001 | POINT.THERE | There? |
| I001 | SHOW HOW LARGE | I will show how big it is. |
| TS001 | POINT.THERE | There? |
| I001 | [I001 asks the guide and points with her left flat hand toward the windows without hand contact with TS001’s hand] POINT.THERE(flathand) | There. |

Using Locative Points

The use of locative points was also a frequent strategy. For the purposes of this paper, we distinguish between pointing signs directed to entities (e.g., PRO1 “me” pointing at one’s own chest, POINT > person “you” pointing at the addressee or to some other entity) and locative points, which are used to refer to the exact location of objects such as windows, statues, etc. (Fenlon et al., 2019). Pointing signs belong to the syntactic structure of utterances, which consist of “a predicate core,” (obligatory) arguments assigned by the predicate, and a periphery (optional modifiers) (Börstell et al., 2016: 20); whereas locative points are used alone or appear in the periphery of utterances. Moreover, we observed two different types of locative points in the dataset: locative points with the deafblind person’s hand lying on the interpreter’s hand and locative points with the interpreter’s hand touching the deafblind person’s shoulder. Interestingly, both types of locative points were held for a longer time than pointing signs directed to entities. Holding certain signs or gestures for a longer time as compared to others is a feature that Willoughby et al. (2020) also found in their tactile Auslan data. Their explanation was that some signs (e.g., numbers) are held for a longer duration because their meaning is harder to infer from context. In our case, the motivation is different. TSTS interpreters held these locative points so that the deafblind person could rely on her residual sight to locate the element referred to in the setting.

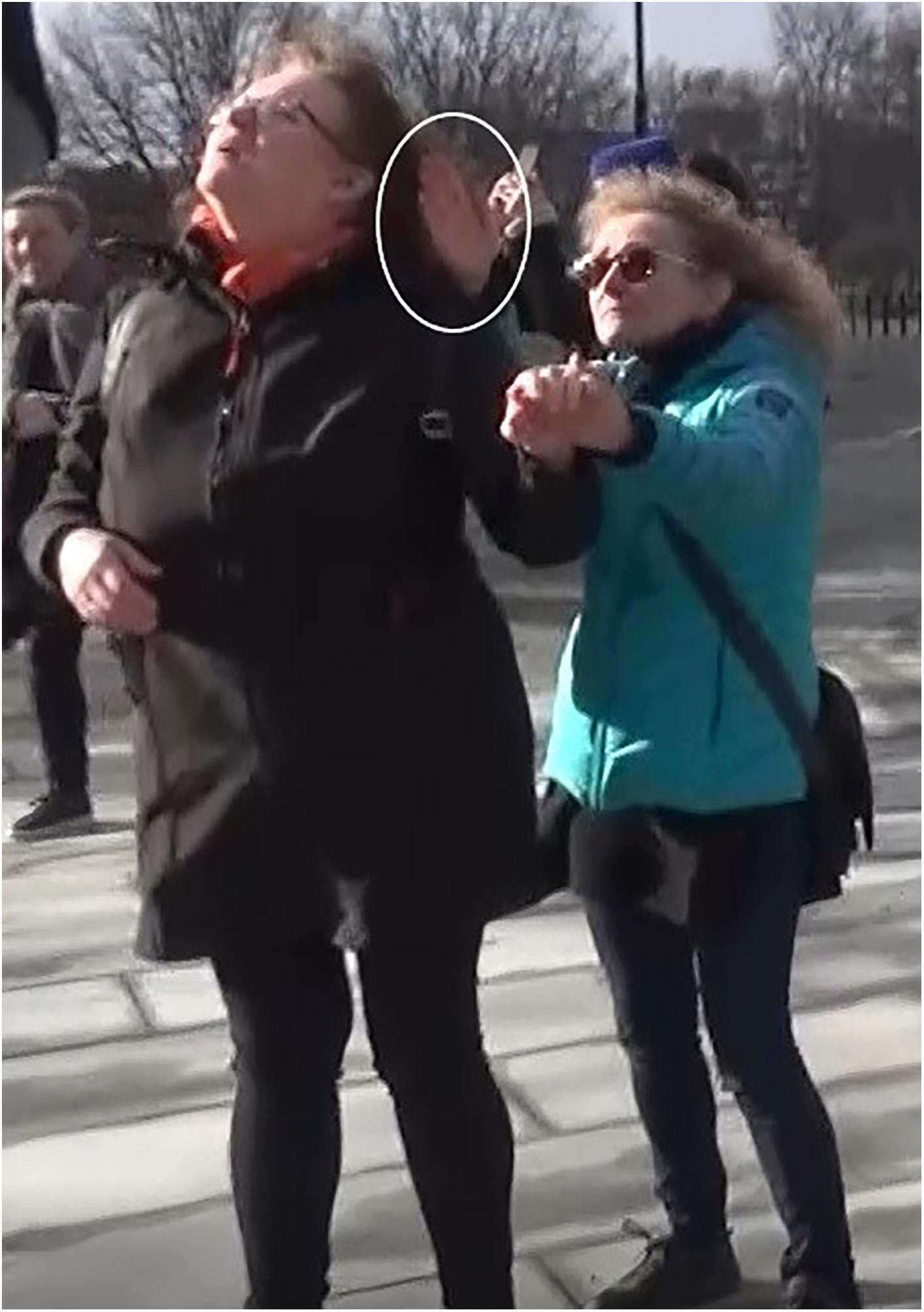

The first type of locative points was repeatedly found with two possible handshapes: an extended index finger (see Figure 1A) and an open flat hand with fingers together (see Figure 1B). We hypothesize that this second handshape is used for clarifying purposes as it is bigger than the index handshape and therefore easier to perceive with residual sight. Both handshapes could depict a straight or circular movement or be held still. They could also be articulated while standing in a static position or while walking. In Figures 1A,B, the two frames depict a static position. In both of them, I001 holds the locative point for some seconds while TS001 directs her eye gaze to the elements referred to, which are situated in an elevated position.

Figure 1. (A) Locative point with an extended index finger. (B) Locative point with an open flat hand with fingers together.

The second type of locative point (i.e., contacting the shoulder of the deafblind person) was only found once in the dataset. Both the deafblind person and the TSTS interpreter were standing still. The handshape was an open flat hand with fingers together that touched the deafblind person’s left shoulder with the side of the little finger, as shown in Figure 2. In this example, the deafblind women and the TSTS interpreters are outside the cathedral before the guide meets them. The two interpreters are explaining how the building looks from the outside. I001 is saying that on the left-hand side of the cathedral there is one tower which has a statue with wings on its top left corner. This information is conveyed with I001’s right hand and perceived with TS001’s left hand. TS001 faces the interpreter and turns her head toward the building from time to time. When the explanation is over, I001 moves backward. I001 then changes hands: she places her left hand as a support for TS001’s left hand and articulates the locative point with her right hand on TS001’s shoulder. As can be observed in Figure 2, TS001 looks in the direction pointed to, with her eye gaze and head aligned with the locative point. Afterward, TS001 moves her right hand up and depicts the shape of the top left corner of the tower in order to check that she understood correctly. Meanwhile, I001 changes hands again and acknowledges that TS001 got the right interpretation, articulating the sign YES “yes.”

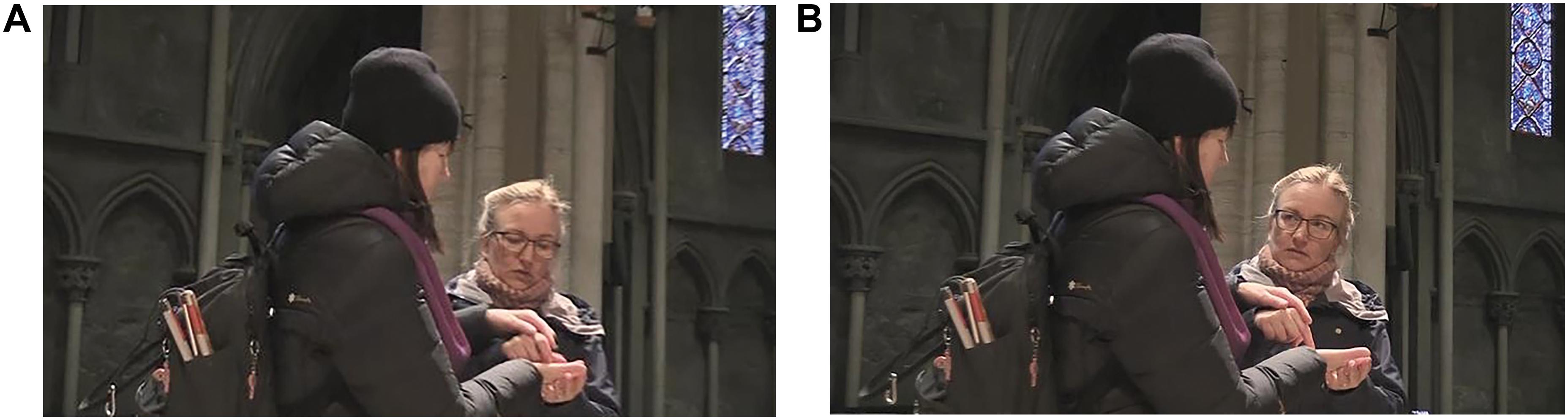

Drawing Shapes on the Palm of the Hand of the Deafblind Women

Another strategy which was observed in this particular situation was the use of the deafblind person’s palm of the hand to depict the shape of the cathedral and to tell the deafblind person where they were situated in a given moment. This strategy was only used by one of the dyads (I002 and TS002). I002 tells TS002 that the cathedral is shaped like a cross. I002 adds that TS001 is being given a cross to show her where they are standing. I002 is about to draw a cross “in the air” with her right hand closed and the index and middle fingers flexed. When she starts the movement, TS002 extends her right hand opened with fingers together and palm up so that I002 can draw the cross on it. I002 puts her left hand under TS002’s right hand and draws the cross with her right hand while TS002 places her left hand on top of it (see Figure 3A). Afterward, I002 uses her index finger to point at the palm of TS002’s hand, to show the place where they are standing (see Figure 3B). TS002 backchannels signing YES and OK, and I002 signs PRO1.FL STAND NOW “we are standing here now.”

This proprioceptive strategy is later employed by TS002 twice more. In the later of these two instances, the group has been walking around the cathedral and they stop to listen to the guide’s explanation. When the guide has finished, TS002 extends her right hand, draws a cross on it, and asks whether the style of the nave is Romanesque. I002 puts her left hand under TS002’s right hand and draws the cross with her index finger. TS002 repeats her question and I002 replies that the transept is the Romanesque part by retracing the shorter part of the cross on TS002’s palm. Then, TS002 draws the longer part of the cathedral above her palm (she does not touch it) and asks whether the style is Gothic. I002 has been translating this information into spoken Swedish (see footnote 7) for the guide, who is beside them. The guide says that this is right, I002 signs YES, and TS002 answers back OK. The earlier instance is quite similar. The group is visiting one of the chapels and TS002 wants to know exactly where they are. TS002 extends her right hand so that I002 can draw the cross on her hand and point to the place where the chapel is situated.

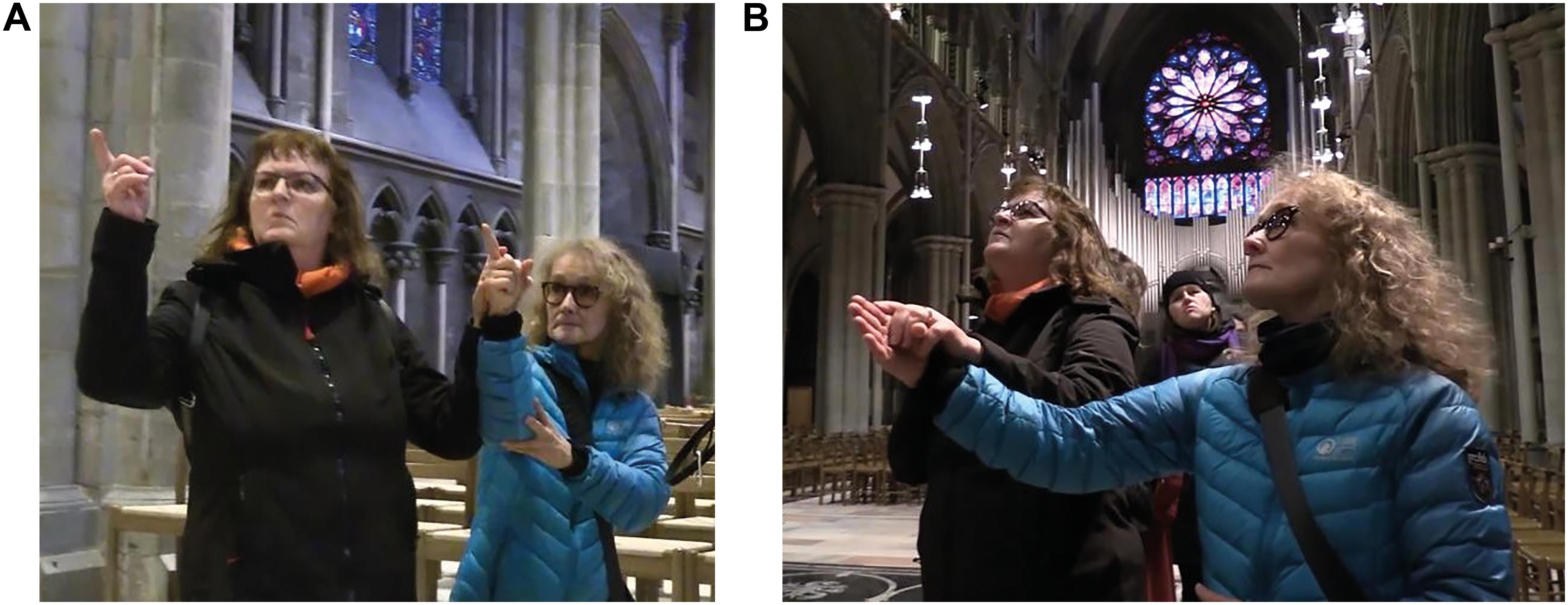

Use of Objects

The first strategy that involves different elements of the setting is the use of objects. This strategy is part of the Nidaros Cathedral accessibility project for deafblind visitors, which was presented in section “Materials and Methods.” In this visit, the guide provides three objects for the deafblind participants to perceive with their hands: a metal cross (see Figure 4A), a piece of plaster depicting an animal’s head (see Figure 4B), and a little statue of King Olav (see Figure 4C). The first object is used to help the deafblind visitors orient themselves as to where they are at any given moment. The other two are used to perceive the shape of two elements in the cathedral which are not perceptible by the deafblind participants, either because they are not reachable (the animal’s head made of plaster depicts a statue which is placed in a higher position) or because they are part of a painting (King Olav appears in a fresco painting in the altar of a little chapel).

Figure 4. (A) Proprioception using a metal cross. (B) Perceiving with touch a piece of plaster depicting an animal’s head. (C) Holding a little statue of King Olav while signing.

These objects are always introduced by the guide and her explanation is translated into TSTS. While the deafblind visitors are perceiving these objects, TSTS can be used at the same time. In Figure 4A, we can see that I002 is pointing to the position in which they are. The guide has previously given the cross to TS002 while saying “we are here” and pointing to one of the edges. In Figure 4C, TS002 is perceiving the statue and fingerspells the name “Olav” in order to check that what she has been given corresponds to the statue of the king. I002 acknowledges using a haptic sign, i.e., tapping on TS002’s arm.

Touching Elements of the Setting

Another strategy to convey and perceive environmental information which was observed in our data is touching elements of the setting. The deafblind women perceive different elements of the cathedral, including columns, walls, chairs, etc., by touching them with their hands. Touching can be done in a static position, that is, the deafblind women stand still and touch the elements in front of them. On occasion, interpreters guide their hands while touching to relate this sensory experience to previous discourse (see Figure 5A). Alternatively, this strategy can be used in movement, that is, the deafblind women walk with their interpreters while they touch elements in the setting (see Figure 5B).

Figure 5. (A) Perception with the hands of the holes of the column. (B) Perception with the hands of the walls while moving.

Regardless of whether there is body motion or not, touching is sometimes combined with TSTS, either to clarify or to add information. For instance, after TS001 has touched the holes in the column (see Figure 5A), I001 tells her in TSTS how they are placed. We also found one instance of touching in which perception was with the feet (see Figure 6). The guide explains the story of two basins that can be found on the floor of the cathedral and from which water poured at some point. I002 translates this information and tells TS002 to perceive one of the basins. TS002 moves her right foot toward it while I002 repeatedly signs YES to let her know that she is approaching the basin in the right direction. TS002 feels the surface with her right foot and withdraws it. I002 explains how the basin looks and tells TS002 to feel the basin. TS002 moves her left foot to do so, while I002 indicates that she should bend down in order to perceive the shape with her hand (see Figure 6). Afterward, TS002 bends down and touches the basin with her right hand.

Walking Around

The last strategy found in our data was walking around the cathedral. As mentioned earlier, walking around is usually combined with TSTS and with touching. Interpreters guide the deafblind visitors in this process and one of the deafblind person’s hands is always holding one of the interpreter’s hands or arms. Therefore, the interpreter can use the hand in contact to convey information to the deafblind person. The deafblind person’s other hand is free to touch the setting or to be used to talk to or answer the interpreter.

We do not consider walking around an interpreting strategy per se to convey environmental information, as it is the guide who decides where they are moving and the deafblind visitors together with their interpreters follow her. Furthermore, this is more or less the same path that the guide would probably follow with hearing and deaf-sighted visitors to show the different parts of the cathedral.

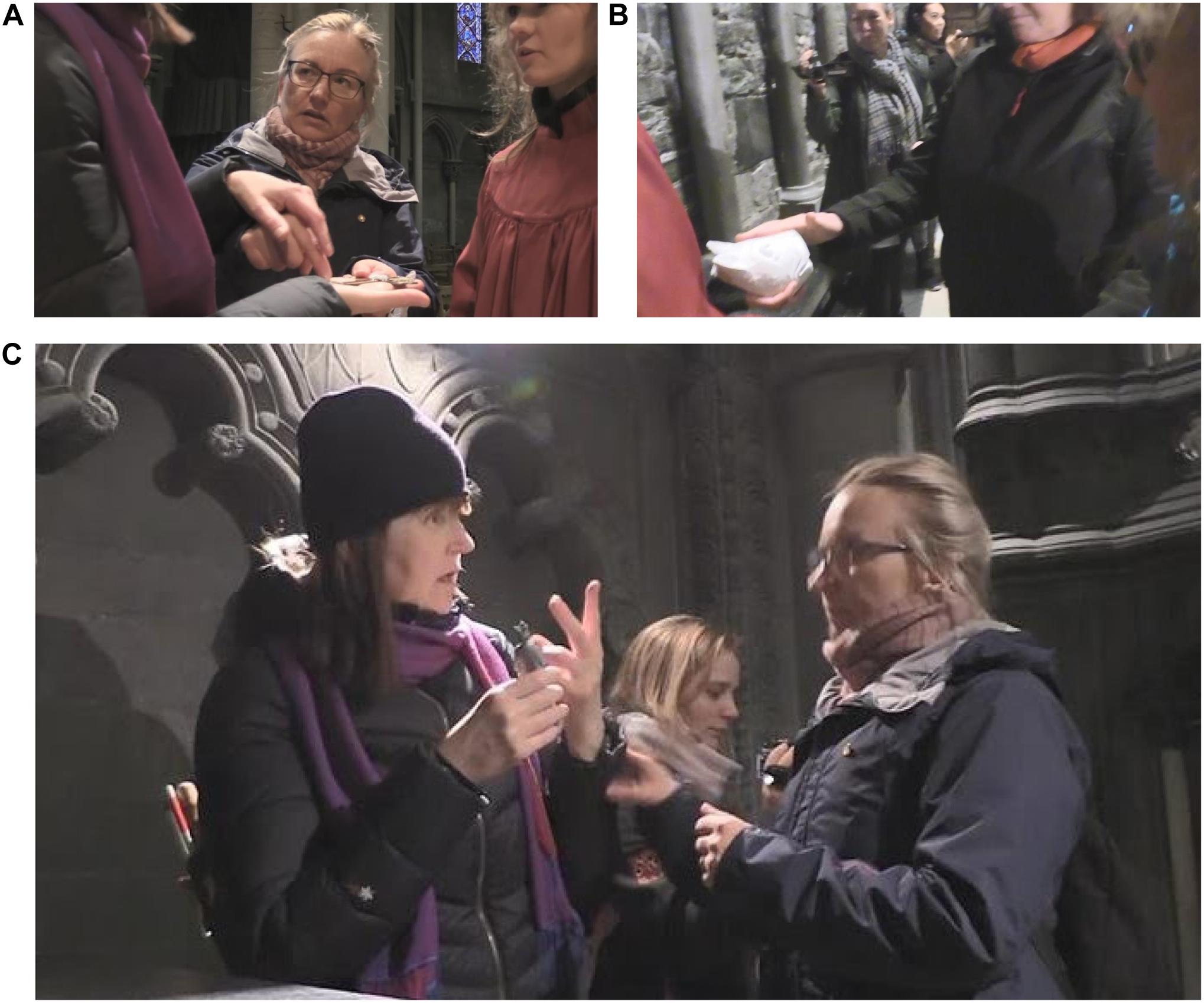

However, there is an interesting instance of walking around in order for the deafblind visitors to perceive the size of the rose window (see Example 2 in section “Using Tactile Swedish Sign Language”). This instance is not done on the interpreters’ initiative, but it is part of Nidaros Cathedral’s accessibility project for deafblind visitors. The guide walks in a circle in the crossing of the cathedral, in the square area just in front of the altar. She is followed by TS002 with I002, and TS001 with I001 behind (see Figure 7A). When they have finished the circle (see Figure 7B), the two interpreters say that this is the end of the circle and that the size corresponds to the rose window. Then, the deafblind women acknowledge that they have understood it.

Figure 7. (A) Picture depicting the position of the guide, deafblind individuals and interpreters while walking in a circle. (B) Graph depicting the movement of the participants for the deafblind women to perceive the size of the rose window.

Discussion and Further Research

In this paper, we have described how Tactile Swedish Sign Language (TSTS) interpreters convey environmental information to two deafblind women in a cathedral visit. As pointed out by Edwards (2012), conveying environmental information in daily activities enhances the integration and participation of deafblind people in society. TSTS interpreters need to continuously provide environmental information to the two deafblind women using different means, which underlines the difference between what is expected from these professionals and what is expected from other interpreters working with spoken and visual signed languages. Furthermore, the role of TSTS interpreters goes beyond merely translating linguistic information and conveying environmental information. These professionals may need to repeat information (Metzger et al., 2004), answer questions outside the framework of the interaction with other participants, act as guides for deafblind users, etc., for all of which it is important to build trusting relationships between interpreters and deafblind individuals (Slettebakk Berge and Raanes, 2013; Raanes and Slettebakk Berge, 2017).

To convey environmental information, we found that TSTS interpreters employ several strategies such as using TSTS, using locative points, drawing shapes on the palm of the hand of the deafblind person, giving them objects to perceive their shape, having them touch different elements in the setting, and walking to gain an idea about the size of some parts of the cathedral. Using TSTS is the most frequent strategy in our data to convey linguistic and environmental information. The use of objects and touching elements of the setting are mainly related to environmental information, but they can complement, to different extents, linguistic information provided earlier. However, locative points with some type of contact with the body of deafblind individuals, drawing on the palm of their hands, and walking are strategies which were only used to convey environmental information. We observed that these strategies can be combined, showing that multimodal interpreting is a flexible process which varies depending on the situation and from interpreter to interpreter.

When comparing these interpreted discourses with natural conversations between deafblind peers using TSTS extracted from the same corpus project (Raanes and Mesch, 2019), we can observe that locative points are more frequent in the former setting than in the latter. What may explain this variation is that this strategy is used to counterbalance (to some extent) the different sight status in this particular situation. The nature of the situation (a visit to a cathedral) and the fact that it is planned and guided by sighted individuals make the role of sight central to it, as is the use of strategies to compensate for it. However, this imbalance does not exist when deafblind peers communicate among themselves, as they have the same sensory status and do not need any device to compensate for it. Despite the difference in frequency, locative points proved to be a useful strategy in tactile sign language interpreting when used in cooperation with deafblind individuals. A similar pattern can be observed when deaf-sighted individuals convey environmental information to deafblind individuals (Edwards, 2012).

We hypothesized that haptic signs are a device that would be frequently used to convey environmental information. Although this system is mostly used in Nordic countries by tactile sign language interpreters, we did not find it used to convey environmental information about the cathedral. We mentioned a case in which a haptic sign had been used by one of the interpreters to provide a backchannel to one of the deafblind women while her hands were touching a little statue. Our findings call for further research on the use of haptic signs, which seem to be extensively used in other situations such as multi-party meetings between Norwegian deafblind individuals (Slettebakk Berge and Raanes, 2013; Raanes and Slettebakk Berge, 2017). Moreover, some participants in this Corpus of Tactile Norwegian Sign Language and Tactile Swedish Sign Language (Raanes and Mesch, 2019) reported in personal interviews that not all deafblind individuals feel comfortable with the use of this communication system. Therefore, there is some interpersonal variation which needs to be considered too. In order to further elaborate on this observation, we plan to study the use of haptic signs by the same two interpreters and these two deafblind women in other situations recorded in the same corpus project.

Different levels of linguistic analysis also deserve further attention, as they will allow us to describe how interpreted discourse is produced, perceived and understood. For instance, it would be interesting to investigate what types of repetitions are used by TSTS interpreters. In our data, we can observe that signs are frequently repeated in the same sentence [syntactic repetitions in the words of Notarrigo and Meurant (2019)] or that the same sentence or chunk of discourse is repeated many times (semantic and pragmatic repetitions according to the same authors). As a matter of fact, pragmatic repetitions also occur in cross-linguistic communication between Norwegian and Swedish deafblind individuals when they try to understand each other (Mesch and Raanes, submitted). Another interesting issue would be to study how interpreters contribute to building shared knowledge between participants. This issue has been examined in visual sign language interpreting (e.g., Janzen and Shaffer, 2013) when one or several participants have a different hearing status, but it has not been investigated in tactile sign language interpreting, in which at least one of the participants has both a hearing and a sight status different from the other participant(s).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation, and are also available at: https://snd.gu.se/en.

Ethics Statement

The studies involving human participants were reviewed and approved by the Norwegian Centre for Research Data, project number 192998. Written informed consent to participate in this study was provided by the participants.

Author Contributions

JM collected the data as part of a project with Eli Raanes from the Norwegian University of Science and Technology. JM and SG-L contributed to the conception and design of the study, annotated the data, and wrote different parts of the article. Both authors contributed to manuscript revision, read and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the participants of the project “Corpus of Tactile Norwegian Sign Language and Tactile Swedish Sign Language,” which is a collaboration between the Norwegian University of Science and Technology and Stockholm University.

Footnotes

- ^ These schools are based in Örebro, Stockholm, Härnösand, Vänersborg, and Lund.

- ^ In many countries (still) no or hardly any tactile sign language exists (e.g., New Zealand) as deafblind people live isolated lives and do not form a community sharing a communication system.

- ^ https://socialhaptisk.nkcdb.se/

- ^ Classifier constructions are an inventory of handshapes which are used to represent referents (see Cormier et al., 2012; Hodge et al., 2019 for recent accounts). They have received different names in the literature such as polymorphemic predicates (Wallin, 1990) or depicting signs (Liddell, 2003), among others. In the present paper, we prioritize the use of the latter denomination as it is the most frequently used in sign language corpora.

- ^ https://archive.mpi.nl/tla/elan

- ^ As is traditional in sign language linguistics, signs are represented by approximate translation glosses in small caps (see https://benjamins.com/series/sll/guidelines.pdf). The translation in English appears below each line of glosses in italics. Elements in parentheses after a gloss indicate the handshape of the token and elements in square brackets describe body movements. When a sign is held, the gloss is followed by —hold. Hyphens are used in glosses which contain more than one word (e.g., AT-TOP) and dots are used to specify the motion of a pointing (e.g., POINT.TRACK.ROUND). PRO1 stands for the first person singular pronoun, PRO1.FL refers to the first person plural pronoun and the glosses which start with DS indicate that the token is a depicting sign.

- ^ Swedish and Norwegian are typologically similar languages belonging to the same language family. For this reason, the guide can speak Norwegian and the interpreters Swedish, and they will understand one another.

References

Börstell, C., Wirén, M., Mesch, J., and Gärdenfors, M. (2016). “Towards an annotation of syntactic structure in the Swedish sign language corpus,” in Workshop Proceedings: 7th Workshop on the Representation and Processing of Sign Languages: Corpus Mining, eds E. Efthimiou, S.-E. Fotinea, T. Hanke, J. Hochgesang, J. Kristoffersen, and J. Mesch (Paris: ELRA), 19–24.

Checchetto, A., Geraci, C., Cecchetto, C., and Zucchi, S. (2018). The language instinct in extreme circumstances: the transition to tactile Italian sign language (LISt) by Deafblind signers. Glossa J. Gen. Linguist. 3, 1–28. doi: 10.5334/gjgl.357

Collins, S., and Petronio, K. (1998). “What happens in tactile ASL,” in Pinky Extension and Eye Gaze: Language use in Deaf Communities, ed. C. Lucas (Washington, DC: Gallaudet University Press), 18–37.

Cormier, K., Quinto-Pozos, D., Sevcikova, Z., and Schembri, A. (2012). Lexicalisation and de-lexicalisation processes in sign languages: comparing depicting constructions and viewpoint gestures. Lang. Commun. 32, 329–348. doi: 10.1016/j.langcom.2012.09.004

Edwards, T. (2012). Sensing the rhythms of everyday life: temporal integration and tactile translation in the Seattle Deaf-Blind community. Lang. Soc. 41, 29–71. doi: 10.1017/S004740451100090X

Fenlon, J., Cooperrider, K., Keane, J., Brentari, D., and Goldin-Meadow, S. (2019). Comparing sign language and gesture: insights from pointing. Glossa J. Gen. Linguist. 4:2. doi: 10.5334/gjgl.499

Frankel, M. A. (2002). Deaf-blind interpreting: interpreters’ use of negation in tactile American sign language. Sign Lang. Stud. 2, 169–181. doi: 10.1353/sls.2002.0004

Hanks, W. F. (2005). Explorations in the deictic field. Curr. Anthropol. 46, 191–220. doi: 10.1086/427120

Hodge, G., Ferrara, L. N., and Anible, B. D. (2019). The semiotic diversity of doing reference in a deaf signed language. J. Pragmat. 143, 33–53. doi: 10.1016/j.pragma.2019.01.025

Janzen, T., and Shaffer, B. (2013). “The interpreter’s stance in intersubjective discourse,” in Sign Language Research, Uses and Practices: Crossing Views on Theoretical and Applied Sign Language Linguistics, eds L. Meurant, A. Sinte, M. Van Herreweghe, and M. Vermeerbergen (Berlin: De Gruyter Mouton), 63–84. doi: 10.1515/9781614511472.63

Lahtinen, R., and Palmer, R. (2008). “Haptices and haptemes – Environmental information through touch,” in Proceedings of the 3rd International Haptic and Auditory Interaction Design Workshop, Vol. 8, Jyväskylä, 8–9.

Liddell, S. K. (2003). Grammar, Gesture, and Meaning in American Sign Language. Cambridge: Cambridge University Press.

McEnery, T., Xiao, R., and Tono, Y. (2006). Corpus-Based Language Studies: An Advanced Resource Book. London: Routledge.

Mesch, J. (2001). Tactile Sign Language - Turn Taking and Questions in Signed Conversations of Deaf-Blind People. Hamburg: Signum Verlag, 38.

Mesch, J. (2013). Tactile signing with one-handed perception. Sign Lang. Stud. 13, 238–263. doi: 10.1353/sls.2013.0005

Metzger, M., Fleetwood, E., and Collins, S. D. (2004). Discourse genre and linguistic mode: interpreter influences in visual and tactile interpreted interaction. Sign Lang. Stud. 4, 118–137. doi: 10.1353/sls.2004.0004

Nicodemus, B., and Emmorey, K. (2015). Directionality in ASL-English interpreting: accuracy and articulation quality in L1 and L2. Interpret. Int. J. Res. Pract. Interpret. 17, 145–166. doi: 10.1075/intp.17.2.01nic

Notarrigo, I., and Meurant, L. (2019). Conversations spontanées en langue des signes de Belgique francophone (LSFB) : fonctions et usages de la répétition [Spontaneous Conversations in French Belgian Sign Language (LSFB): functions and uses of repetitions]. Lidil

Raanes, E., and Mesch, J. (2019). Dataset. Parallel Corpus of Tactile Norwegian Sign Language and Tactile Swedish Sign Language. Trondheim: Norwegian University of Science and Technology.

Raanes, E., and Slettebakk Berge, S. (2017). Sign language interpreters’ use of haptic signs in interpreted meetings with deafblind persons. J. Pragmat. 107, 91–104. doi: 10.1016/j.pragma.2016.09.013

Slettebakk Berge, S., and Raanes, E. (2013). Coordinating the chain of utterances: an analysis of communicative flow and turn taking in an interpreted group dialogue for deaf-blind persons. Sign Lang. Stud. 13, 350–371. doi: 10.1353/sls.2013.0007

Wallin, L. (1990). “Polymorphemic predicates in Swedish sign language,” in Sign Language Research: Theoretical Issues, ed. C. Lucas (Washington, DC: Gallaudet University Press), 133–148.

Willoughby, L., Iwasaki, S., Bartlett, M., and Manns, H. (2018). “Tactile sign languages,” in Handbook of Pragmatics, eds J.-O. Östman and J. Verschueren (Amsterdam: John Benjamins), 239–258. doi: 10.1075/hop.21.tac1.

Keywords: deafblind people, tactile sign language, Tactile Swedish Sign Language interpreters, interpreting strategies, environmental information

Citation: Gabarró-López S and Mesch J (2020) Conveying Environmental Information to Deafblind People: A Study of Tactile Sign Language Interpreting. Front. Educ. 5:157. doi: 10.3389/feduc.2020.00157

Received: 20 March 2020; Accepted: 04 August 2020; Published: 28 August 2020.

Edited by:

Marleen J. Janssen, University of Groningen, Netherlands

Reviewed by:

Bencie Woll, University College London, United Kingdom

Beppie Van Den Bogaerde, University of Amsterdam, Netherlands

Copyright © 2020 Gabarró-López and Mesch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Johanna Mesch, johanna.mesch@ling.su.se
