A Commentary on: “Neural overlap in processing music and speech”

- ¹Neurobiology of Language, Max Planck Institute for Psycholinguistics, Nijmegen, Netherlands

- ²Neurobiology of Language, Donders Institute for Brain, Cognition and Behaviour, Radboud University Nijmegen, Nijmegen, Netherlands

- ³Language and Music Cognition Lab, Department of Psychology, University of Maryland, College Park, MD, USA

A commentary on

Neural overlap in processing music and speech

by Peretz, I., Vuvan, D., Lagrois, M.-É., and Armony, J. L. (2015). Philos. Trans. R. Soc. Lond. B Biol. Sci. 370:20140090. doi: 10.1098/rstb.2014.0090.

Evidence for Neural Overlap in Processing Music and Speech?

There is growing interest in whether the brain networks responsive to music and language are separate after basic sensory processing or whether they share neural resources. Peretz et al.'s (2015) review of the available brain imaging evidence provides a good opportunity to reflect on the field. We agree that “the question of overlap between music and speech processing must still be considered as an open question” (p. 16). However, even though their review was not intended to be exhaustive, Peretz et al. (2015) arguably focus too narrowly on neuroimaging results to give a fair assessment of current knowledge about music-language relationships.

First, though, it is worth reiterating the limitations of neuroimaging studies. The fact that music experiments and language experiments reveal common brain regions (e.g., Koelsch et al., 2002; Herdener et al., 2014) is insufficient evidence for shared neural circuitry, as domain-specific neural populations might be intermingled within the same brain regions (especially given the limited spatial resolution of noninvasive brain-imaging techniques). Similarly, different cognitive processes might underlie common activation sites, especially in prefrontal areas. As just one example, attending to music over scanner noise might draw particularly strongly on prefrontal mechanisms of focused attention, whereas language perception might be more robust to such noise (especially in non-musicians). Peretz et al. (2015) therefore propose more sophisticated methods such as multivariate pattern analysis (MVPA) and adaptation paradigms. However, even these methods yield equivocal results: different patterns of activation in common brain areas (as revealed by MVPA) might reflect separate music-specific and language-specific neural populations within the same region (Rogalsky et al., 2011), or they might indicate that the same neural population responds differently to music and language (Abrams et al., 2011), possibly due to changes in functional connectivity. And while fMRI adaptation paradigms hold promise, it remains to be seen how they can be applied to this question (for two very different attempts, see Steinbeis and Koelsch, 2008a; Sammler et al., 2010). Thus, the current brain imaging literature is indeed equivocal. However, looking beyond fMRI can be beneficial.

Beyond fMRI: The Interference Paradigm in Brain and Behavior

Although Peretz et al. (2015) nicely describe the current state and limitations of functional neuroimaging evidence on music-language overlap, they ignore a large body of behavioral and electrophysiological evidence for interactive processes¹. Much of this work relies on interference paradigms. For example, Slevc et al. (2009) asked participants to read garden path sentences like the following, segment by segment, while measuring reading time as a proxy for processing cost:

(a) After | the trial | the attorney | advised | the defendant | was | likely | to commit | more crimes.

(b) After | the trial | the attorney | advised that | the defendant | was | likely | to commit | more crimes.

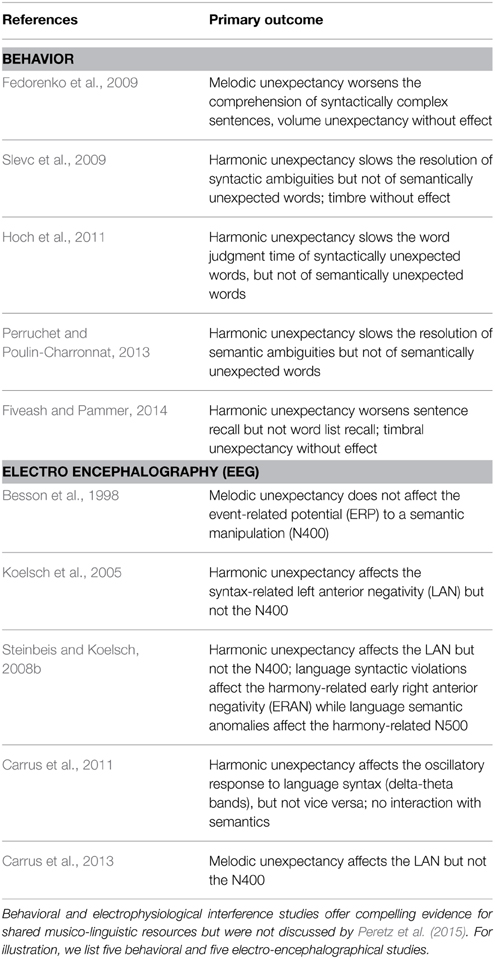

Resolving the temporary syntactic ambiguity in (a), where “defendant” is initially misinterpreted as a direct object, causes slower reading of “was” than in (b), where “that” signals the correct interpretation. This syntactic garden path effect was larger when participants heard a task-irrelevant, harmonically unexpected chord while reading “was” (compared to a harmonically expected chord). This is unlikely to be due to the chord's acoustic unexpectedness, since a timbrally unexpected chord (i.e., one played by a new instrument) had no such effect. Slevc et al. (2009) interpreted their result as evidence for shared music-language resources that process structural relations: when these resources are taxed by a harmonically unexpected chord, they are less able to process challenging syntactic relations such as those in (a). See Table 1 for similar studies.
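The logic of this test can be summarized as a difference of differences, i.e., the interaction in a 2 × 2 design crossing sentence type with chord type. The notation below is introduced here for illustration only and is not taken from Slevc et al. (2009): $\overline{RT}_{s,c}$ denotes the mean reading time of “was” for sentence type $s$ ($a$ = garden path, $b$ = unambiguous) and chord type $c$ (unexpected or expected).

$$
\Delta = \left(\overline{RT}_{a,\text{unexp}} - \overline{RT}_{b,\text{unexp}}\right) - \left(\overline{RT}_{a,\text{exp}} - \overline{RT}_{b,\text{exp}}\right)
$$

Each bracket is the garden path effect under one chord condition. On the shared-resource account, $\Delta > 0$ when “unexpected” refers to harmony. A purely acoustic-surprise account would predict $\Delta > 0$ for the timbral manipulation as well, so the absence of such an effect for timbrally unexpected chords is what favors the shared-resource interpretation.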

These interference effects are compelling evidence for shared resources. Whereas the aforementioned fMRI paradigms investigate whether shared neural circuitry is extensive enough to be visible in fMRI, studies like those in Table 1 investigate the functional relevance of shared resources (e.g., in terms of behavioral outcomes). Given the evidence for such functional relevance, an important debate has centered on the functional role of shared resources, such as involvement in structural processing (Patel, 2003), general attention (e.g., Perruchet and Poulin-Charronnat, 2013), or cognitive control (Slevc and Okada, 2015). This debate would surely benefit from a variety of approaches that reveal the time-course, oscillatory, and network dynamics of these processes (e.g., via electrophysiological measures of brain activity), as well as the causal role of the associated brain areas (e.g., via transcranial magnetic stimulation). Targeted fMRI studies informed by the entire neural and behavioral literature are needed to complement these approaches.

Toward an Inter-Disciplinary Science of Music and Language Processing

Peretz et al. (2015) are certainly right when they write that “converging evidence from several methodologies is needed.” We have tried to sketch the impressive extent of the evidence that is already available. However, there are still open questions. For example, the interference paradigm has so far not been used with linguistic processes beyond syntax and semantics (e.g., phonology, morphology, and prosody) or with musical processes beyond melody, harmony, and timbre (e.g., rhythm).

A better understanding of how music and language relate offers great potential, for example for clinical applications. Specifically, the syntactic processing problems found in Broca's aphasia (see Patel et al., 2008) and specific language impairment (Jentschke et al., 2008) might be alleviated by interventions based on melody and harmony, given the evidence for shared resources for syntax and harmony (see Table 1). Progress with such clinical applications first requires us to understand how music and language relate to each other. This understanding can only emerge if we go beyond a focus on any one method and instead view the field as an inter-disciplinary challenge.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by a PhD grant from the Max Planck Society to RK and a grant from the GRAMMY Foundation to LRS.

Footnotes

1. Peretz et al. (2015) focus on music and speech, not on language as we do here. However, the relationship between music and speech has also been investigated with the interference paradigm, using stimuli sung a cappella (Besson et al., 1998; Fedorenko et al., 2009).

References

Abrams, D. A., Bhatara, A., Ryali, S., Balaban, E., Levitin, D. J., and Menon, V. (2011). Decoding temporal structure in music and speech relies on shared brain resources but elicits different fine-scale spatial patterns. Cereb. Cortex 21, 1507–1518. doi: 10.1093/cercor/bhq198

Besson, M., Faïta, F., Peretz, I., Bonnel, A.-M., and Requin, J. (1998). Singing in the brain: independence of lyrics and tunes. Psychol. Sci. 9, 494–498. doi: 10.1111/1467-9280.00091

Carrus, E., Koelsch, S., and Bhattacharya, J. (2011). Shadows of music–language interaction on low frequency brain oscillatory patterns. Brain Lang. 119, 50–57. doi: 10.1016/j.bandl.2011.05.009

Carrus, E., Pearce, M. T., and Bhattacharya, J. (2013). Melodic pitch expectation interacts with neural responses to syntactic but not semantic violations. Cortex 49, 2186–2200. doi: 10.1016/j.cortex.2012.08.024

Fedorenko, E., Patel, A., Casasanto, D., Winawer, J., and Gibson, E. (2009). Structural integration in language and music: evidence for a shared system. Mem. Cogn. 37, 1–9. doi: 10.3758/MC.37.1.1

Fiveash, A., and Pammer, K. (2014). Music and language: do they draw on similar syntactic working memory resources? Psychol. Music 42, 190–209. doi: 10.1177/0305735612463949

Herdener, M., Humbel, T., Esposito, F., Habermeyer, B., Cattapan-Ludewig, K., and Seifritz, E. (2014). Jazz drummers recruit language-specific areas for the processing of rhythmic structure. Cereb. Cortex 24, 836–843. doi: 10.1093/cercor/bhs367

Hoch, L., Poulin-Charronnat, B., and Tillmann, B. (2011). The influence of task-irrelevant music on language processing: syntactic and semantic structures. Front. Psychol. 2:112. doi: 10.3389/fpsyg.2011.00112

Jentschke, S., Koelsch, S., Sallat, S., and Friederici, A. D. (2008). Children with specific language impairment also show impairment of music-syntactic processing. J. Cogn. Neurosci. 20, 1940–1951. doi: 10.1162/jocn.2008.20135

Koelsch, S., Gunter, T. C., von Cramon, D. Y., Zysset, S., Lohmann, G., and Friederici, A. D. (2002). Bach speaks: a cortical “language-network” serves the processing of music. Neuroimage 17, 956–966. doi: 10.1016/S1053-8119(02)91154-7

Koelsch, S., Gunter, T. C., Wittfoth, M., and Sammler, D. (2005). Interaction between syntax processing in language and in music: an ERP study. J. Cogn. Neurosci. 17, 1565–1577. doi: 10.1162/089892905774597290

Patel, A. D., Iversen, J. R., Wassenaar, M., and Hagoort, P. (2008). Musical syntactic processing in agrammatic Broca's aphasia. Aphasiology 22, 776–789. doi: 10.1080/02687030701803804

Peretz, I., Vuvan, D., Lagrois, M.-É., and Armony, J. L. (2015). Neural overlap in processing music and speech. Philos. Trans. R. Soc. Lond. B Biol. Sci. 370:20140090. doi: 10.1098/rstb.2014.0090

Perruchet, P., and Poulin-Charronnat, B. (2013). Challenging prior evidence for a shared syntactic processor for language and music. Psychon. Bull. Rev. 20, 310–317. doi: 10.3758/s13423-012-0344-5

Rogalsky, C., Rong, F., Saberi, K., and Hickok, G. (2011). Functional anatomy of language and music perception: temporal and structural factors investigated using functional magnetic resonance imaging. J. Neurosci. 31, 3843–3852. doi: 10.1523/JNEUROSCI.4515-10.2011

Sammler, D., Baird, A., Valabrègue, R., Clément, S., Dupont, S., Belin, P., et al. (2010). The relationship of lyrics and tunes in the processing of unfamiliar songs: a functional magnetic resonance adaptation study. J. Neurosci. 30, 3572–3578. doi: 10.1523/JNEUROSCI.2751-09.2010

Slevc, L. R., and Okada, B. M. (2015). Processing structure in language and music: a case for shared reliance on cognitive control. Psychon. Bull. Rev. 22, 637–652. doi: 10.3758/s13423-014-0712-4

Slevc, L. R., Rosenberg, J. C., and Patel, A. D. (2009). Making psycholinguistics musical: self-paced reading time evidence for shared processing of linguistic and musical syntax. Psychon. Bull. Rev. 16, 374–381. doi: 10.3758/PBR.16.2.374

Steinbeis, N., and Koelsch, S. (2008a). Comparing the processing of music and language meaning using EEG and fMRI provides evidence for similar and distinct neural representations. PLoS ONE 3:e2226. doi: 10.1371/journal.pone.0002226

Keywords: neural overlap, music, harmony, speech, language

Citation: Kunert R and Slevc LR (2015) A Commentary on: “Neural overlap in processing music and speech”. Front. Hum. Neurosci. 9:330. doi: 10.3389/fnhum.2015.00330

Received: 20 February 2015; Accepted: 22 May 2015;

Published: 03 June 2015.

Edited by:

Lutz Jäncke, University of Zurich, Switzerland

Reviewed by:

Eckart Altenmüller, Hannover University of Music, Drama and Media, Germany

Stefan Elmer, University of Zurich, Switzerland

Copyright © 2015 Kunert and Slevc. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Richard Kunert, richard.kunert@mpi.nl
