- Performance Management and Evaluation Unit, Alberta Innovates, Edmonton, AB, Canada

Publicly funded research and innovation (R&I) organizations around the world are facing increasing demands to demonstrate the impacts of their investments. In most cases, these demands are shifting from academically based outputs to impacts that benefit society. Funders and other organizations are struggling to understand and demonstrate how their investments and activities are achieving impact. This is compounded by challenges that are inherent to impact assessment, such as having an agreed understanding of impact, the time lag from research to impact, establishing attribution and contribution, and consideration of diverse stakeholder needs and values. In response, many organizations are implementing frameworks and using web-based tools to track and assess academic and societal impact. This conceptual analysis begins with an overview of international research impact frameworks and emerging tools that are used by an increasing number of public R&I funders to demonstrate the value of their investments. Moving from concept to real-world application, this paper illustrates how one organization, Alberta Innovates, used the Canadian Academy of Health Sciences (CAHS) impact framework to guide implementation of its fit-for-purpose impact framework with an agnostic international six-block protocol. The implementation of the impact framework at Alberta Innovates is also supported by the adoption of emerging web-based tools. Drawing on the lessons learned from this continuous organizational endeavor to assess and measure R&I impact, we present preliminary plans for developing an impact strategy for Alberta Innovates that can be applied across sectors, including energy, environment and agriculture, and that may be adopted by other international funders.

Introduction

Public funding of research and innovation (R&I) is considered a strong catalyst for overall economic growth, with returns on investment estimated to be around 20% (European Commission, 2017). Despite this benefit, there have been substantial, and in some cases, consistently declining public investments in R&I (Izsak et al., 2013; Veugelers, 2015; Mervis, 2017). Concurrently, there have been increasing demands globally for public R&I organizations to demonstrate accountability and evidence of “value for money” by measuring the impact of their investments (Gubbins and Rousseau, 2015).

The increasing demands for assessment of R&I returns have led organizations to incorporate good management practices to optimize the results of publicly funded research (Martin-Sardesai et al., 2017). Many mission-oriented public R&I funding organizations have also implemented impact frameworks and tools to assist with the assessment of impact (Donovan, 2011). A primary means of demonstrating the value generated through R&I investments, impact assessments synthesize and judge the evidence about the intended and unintended changes that can be linked to an intervention such as a project, program or policy (GAO, 2012; ISRIA, 2014a; Gertler et al., 2016). The use of impact assessments in R&I funding organizations reflects an explicit focus on, and response to, the need to demonstrate research impacts that benefit society through changes in the economy, public health and the environment (Bornmann, 2017).

The measurement and assessment of impact within an R&I ecosystem is not without inherent challenges. These challenges are often characterized by diverse stakeholder interests and a lack of agreement on a common approach for impact assessment. Deficiencies in standardized impact data and data quality issues are another common challenge. Additionally, the time lag from research investment to the achievement of impact with supporting evidence, estimated at 17 years on average (Morris et al., 2011), is not easily accommodated in situations requiring more responsive, rapid, and real-time reporting. Another important challenge is establishing how research attributes and contributes to impact. As impact takes time to achieve, funders need to consider the many contributors and contributions to achieving impact. Despite these challenges, many funders, including Alberta Innovates, have adopted frameworks and tools that can help to address or mitigate them. Impact frameworks provide a common language and approach to inform the systematic and standardized data collection needed to conduct impact assessment, and they provide a model of the pathways to impact that allows funders to convey progress toward longer-term and ultimate impacts. Adoption of impact frameworks is also advantageous in helping to identify the key elements (stakeholders and activities) that are needed to inform attribution and contribution analysis (Mayne, 2008).

Alberta Innovates is a publicly funded, not-for-profit organization in Canada with a long history of impact assessment. Over the course of its nearly 40-year history, Alberta's provincial health research funding organization has undergone changes to its name and most recently organizational mandate: originally the Alberta Heritage Foundation for Medical Research (AHFMR) from 1979-2010, to Alberta Innovates—Health Solutions (AIHS) from 2010-2016, to the current Alberta Innovates (effective November 1, 2016) with a focus on supporting cross-sector R&I. Mandated to improve the environment, health and socioeconomic well-being of Albertans through R&I, Alberta Innovates strives to optimize societal impact by better understanding how its investments in R&I make a difference toward a more prosperous economy, cleaner environment, and healthier citizens. By working with others, Alberta Innovates aims to improve “what we do and how we do it” by planning, measuring, and assessing impacts and communicating these results to stakeholders, including Albertans. Using an experiential learning approach, the organization developed, implemented, and revised an organizational conceptual R&I framework that serves as the foundation of its impact assessment activities. It also implemented diverse automated data collection tools to generate evidence along its pathways to impact (i.e., logic model). The framework and tools have been critical in addressing some of the inherent challenges encountered during impact assessments and for evidencing the “value for money” of Alberta Innovates investments.

This paper begins with an overview of international research impact frameworks and emerging tools that are used by an increasing number of public R&I funders to demonstrate the value of their investments. We then share how Alberta Innovates has used the concepts of the Canadian Academy of Health Sciences (CAHS) Making an Impact: Preferred Framework and Indicators to Measure Returns on Investment in Health Research (for brevity, referred to hereafter as the CAHS impact framework) to guide implementation of its fit-for-purpose impact framework using an agnostic international six-block protocol. Next, we discuss how Alberta Innovates assesses and communicates R&I impact by adopting web-based tools. Finally, drawing on lessons learned from the field, we present plans to develop an impact strategy for Alberta Innovates that can be adopted by other international funders and applied across sectors including energy, environment and agriculture.

Defining Research and Innovation Impact

There are differences in the R&I ecosystem and elsewhere concerning the definition of impact (Terama et al., 2016). The most widely used definition comes from the Organization for Economic Co-operation and Development (OECD) which defines impact as the “positive and negative, primary and secondary long-term effects produced by an intervention, directly or indirectly, intended or unintended” (OECD-DAC, 2002, p. 24). What is important about this definition is that it acknowledges that impact can be negative, and that unintended impact may occur. Others provide more specific definitions, defining impact as being beyond academia. For example, the Research Excellence Framework (REF) in the United Kingdom defines impact as “…an effect on, change or benefit to the economy, society, culture, public policy or services, health, the environment or quality of life, beyond academia” (REF, 2002, p. 26). In Canada, the CAHS impact framework uses a logic model to describe impact and includes proximal outputs and outcomes of advancing knowledge (e.g., traditional academic outputs such as number of publications) as well as distal impacts such as socio-economic prosperity (CAHS, 2009). At a conceptual level, the definition of impact has important implications for R&I impact assessments because it frames what should be considered as impact, for example whether to exclude academic impact, as the definition informs the selection of indicators and measures.

Research Impact Assessment Frameworks

Research impact has been aptly described as “an inherent and essential part of research and acts as an important way in which publicly funded research is accounted for” (Terama et al., 2016, p. 12). To this end, a multitude of impact assessment frameworks have been developed across the globe to assist public R&I funding organizations in demonstrating how their activities and investments benefit society (Guthrie et al., 2013). Frameworks help organizations articulate their impact story—and the stories of those they fund—by providing a tangible structure for organizing evidence about the progress to, and achievement of, results along various pathways to impact. Funders and other organizations are motivated to assess the impact of their investments for several reasons. A review of 25 research impact assessment frameworks by Deeming et al. (2017) found that frameworks are designed for different objectives:

• Accountability;

• Advocacy;

• Management and allocation;

• Prospective orientation;

• Speed of translation;

• Steering research for societal benefits;

• Transparency; and

• Value for money.

Accountability for research impact at the sector, state, and national level of governments was found to be the most frequent objective of the reviewed frameworks (Deeming et al., 2017). Closely aligned with and complementary to accountability was transparency because the collection of information about research activities, outputs and outcomes serves as a “bottom up” accountability measure along the pathways to impact. Another objective met by most frameworks was advocacy. This relates to public R&I organizations needing to showcase the benefits of supporting R&I and advocating for policy and practice change. Conversely, alignment of research agendas to specific targets was not always observed despite a common objective across the reviewed frameworks being the steering of research for societal benefits.

The importance of identifying and being explicit about the objective or purpose of the impact assessment is emphasized by the International School of Research Impact Assessment (ISRIA), of which Alberta Innovates was a co-founder. ISRIA builds on the work of RAND (Guthrie et al., 2013) that is reiterated in the 10-point guideline for an effective process of research impact assessment published by Adam et al. (2018). In practice, these purposes are commonly referred to as the “4As” of impact assessment and are used by Alberta Innovates as part of its six-block protocol for implementing fit-for-purpose frameworks:

• Accountability: to show that money has been managed effectively and to be accountable to taxpayers, donors and society;

• Advocacy: to demonstrate the benefits of R&I and make the case for investments and funding;

• Allocation: to determine where best to allocate funds in the future; and

• Analysis: to understand how and why R&I is effective and inform strategy and decision making.

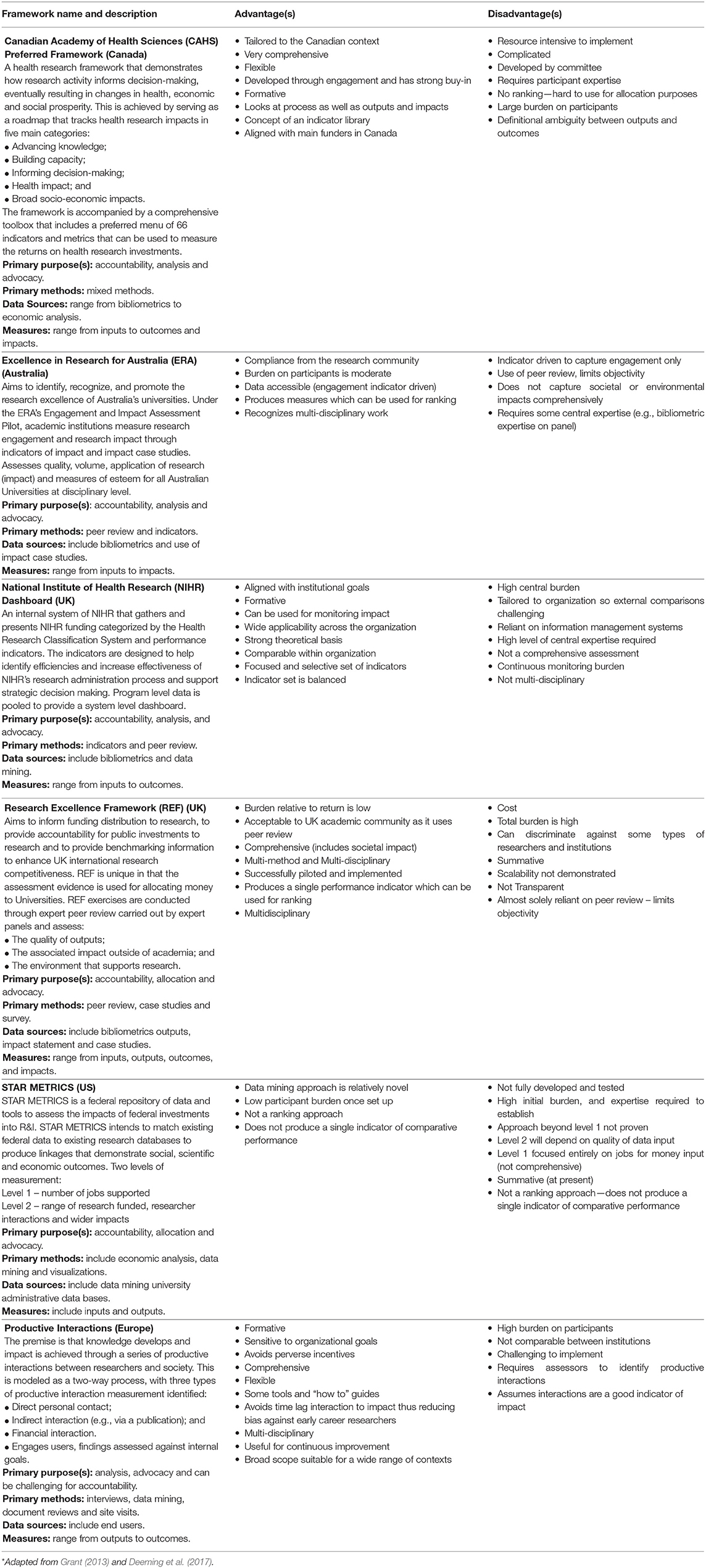

Integrating the work of Deeming et al. (2017) with the teachings of ISRIA, brief descriptions of a sample of six research impact assessment frameworks are provided in Table 1. Table 1 considers advantages and disadvantages of each framework and highlights the need to articulate the assessment purpose and consider the different types of methods and data collection tools that will be used to meet the purpose. A more detailed overview of these frameworks is available in Guthrie et al.'s (2013) guide to research evaluation frameworks and tools.

Evolution of the AIHS Health Research Impact Framework

Alberta Innovates has been an early adopter of research impact assessment frameworks to inform its assessment and evaluation activities. This began in 1999 at AHFMR, one of the predecessor organizations of Alberta Innovates, which used the Payback model to assess the returns of its clinical and biomedical research investments using a case study methodology (Buxton and Hanney, 1996; Buxton and Schneider, 1999). Ongoing evaluation activities in the organization were subsequently informed by the Payback model and, in 2007, the organization set out to implement a comprehensive impact framework systematically across its funding programs. The framework was designed to help the organization assess and ultimately realize the multi-dimensional and far-reaching impacts of its health research investments.

Identification of an existing framework that met business needs was challenged by a general lack of consensus in the published literature about how best to assess the returns on health research investments. This changed in 2009 when CAHS published its blue-ribbon panel report containing its impact framework and associated indicators. Specifically, the report offered a systematic approach for the assessment of health research impact that addressed several gaps and inconsistencies in assessment practices while providing a common language and theory about the benefits of health research.

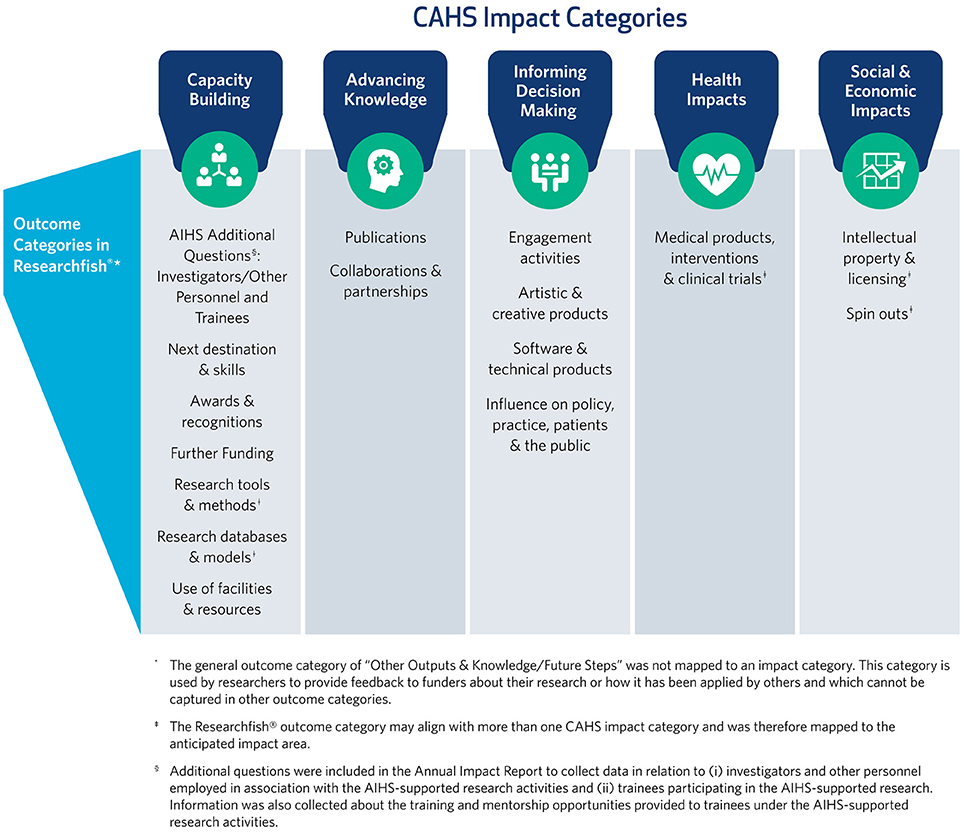

The CAHS impact framework was appealing because, unlike other conceptual frameworks, it was designed for the Canadian health research context and reflected the four pillars of health research described by the Canadian Institutes of Health Research (CIHR). This was an important feature as provincial health research organizations and post-secondary institutions throughout Canada frequently categorized research by the four CIHR pillars. It was also of interest that the CAHS impact framework was built upon the strengths of the Payback model (which the organization was already using), including its logic model and impacts approach, while attempting to address some of its limitations (Graham et al., 2012). The design of the framework as a roadmap to track health research impacts across the R&I system using five impact categories (i.e., advancing knowledge, capacity building, informing decision making, health impacts, and social and economic impacts) appeared advantageous for informing the collection and aggregation of data. This was because the impact categories provided a consistent basis for organizing the evidence obtained through metrics and for communicating the impact, using impact narratives tailored for diverse audiences, at different units of analysis (e.g., project, program, portfolio, and organizational levels). The sub-categorization of health impacts into health status, determinants of health and health-system changes was also unique relative to other frameworks, as was the inclusion of quality of life as an important component of improved health. This aligned with the organization's vision of improving the health and well-being of Albertans. Finally, the CAHS impact toolbox of definitions, impact categories, indicators, metrics and data sources was viewed as a useful means of accelerating implementation of the framework.
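To make the idea of organizing evidence by impact category at different units of analysis concrete, the following is a minimal sketch in Python. It is an illustration only and not the organization's actual system: the record layout, the program and project names, and the `roll_up` helper are all hypothetical, and only the five impact category names come from the framework as described above.

```python
from collections import defaultdict

# The five impact categories named in the CAHS impact framework.
IMPACT_CATEGORIES = (
    "advancing knowledge",
    "capacity building",
    "informing decision making",
    "health impacts",
    "social and economic impacts",
)

# Hypothetical indicator records: (program, project, impact category, count).
RECORDS = [
    ("Program A", "Project 1", "advancing knowledge", 4),
    ("Program A", "Project 1", "capacity building", 2),
    ("Program A", "Project 2", "advancing knowledge", 3),
    ("Program B", "Project 3", "informing decision making", 1),
    ("Program B", "Project 3", "health impacts", 1),
]


def roll_up(records, level):
    """Sum indicator counts per impact category at one unit of analysis.

    `level` is "project", "program", or "organization" (hypothetical names).
    """
    key_for = {
        "project": lambda program, project: (program, project),
        "program": lambda program, project: (program,),
        "organization": lambda program, project: (),
    }[level]
    totals = defaultdict(int)
    for program, project, category, count in records:
        if category not in IMPACT_CATEGORIES:
            raise ValueError(f"unknown impact category: {category}")
        totals[key_for(program, project) + (category,)] += count
    return dict(totals)


by_program = roll_up(RECORDS, "program")
by_organization = roll_up(RECORDS, "organization")
```

Because every record carries one of the shared category labels, the same data can be summarized for a project report, a program review, or an organization-wide narrative without re-coding the evidence.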

Despite its appeal, the face validity of the CAHS impact framework and the ability to implement it in a “real world” context had yet to be demonstrated. To test this, in 2010 AIHS conducted a series of retrospective and prospective studies to verify the applicability of the CAHS impact framework for the local context and determine its feasibility for implementation (Graham et al., 2012). The reviews found that while the framework was generally applicable to AIHS and feasible to implement, modifications were necessary to better meet business needs. This included the addition of indicators for reach (e.g., collaborations and partnerships) and organizational performance (i.e., balanced scorecard), the development of progress ladders for the impact categories of informing decision making and economic and social prosperity, and a new subcategory of capacity building that included measures for training and mentorship.

The insights gained through the reviews informed the evolution of the CAHS impact framework into the first iteration of the AIHS Health Research Impact Framework (Graham et al., 2012). The framework was customized to be fit-for-purpose with AIHS's mission and strategic planning process. The fit-for-purpose approach also contextualized the framework to Alberta's R&I system, more specifically with alignment to the Alberta Health Research Innovation Strategy (Government of Alberta, 2010).

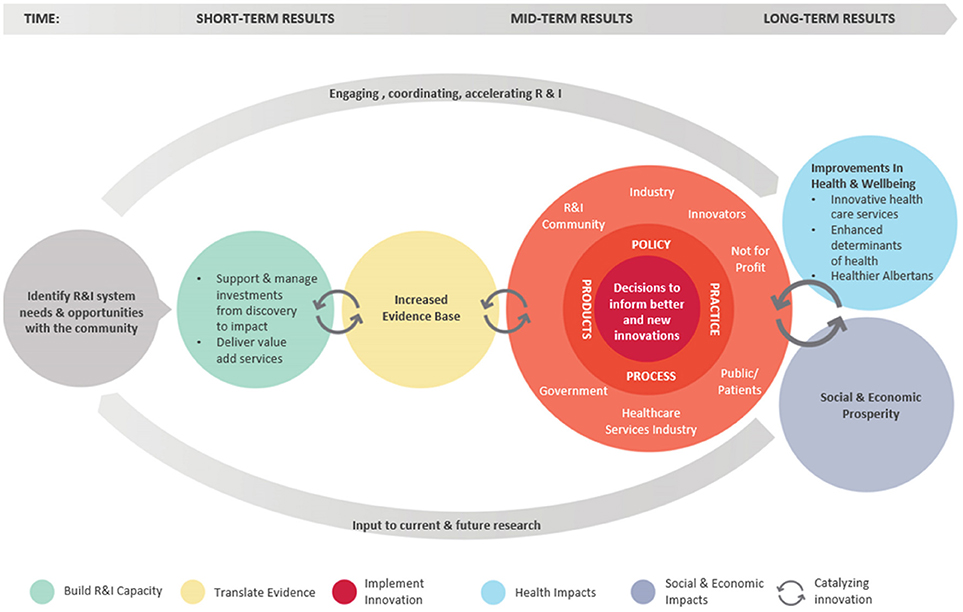

Whereas the first version of the AIHS impact framework was “boxy and linear” (Graham et al., 2012), the second version in Figure 1 was designed to be circular to better illustrate the system complexity and dynamic nature of R&I. Like the first version, the refined framework reads from left to right and demonstrates how research activity informs decision making for implementing innovation, eventually resulting in changes in health and economic prosperity. The framework also shows how research impacts feed back upstream, potentially influencing the diffusion and impacts of other research and creating inputs for current and future research.

A key enhancement of the AIHS impact framework (Figure 1) was the inclusion of three embedded red circles for the impact category of implement innovation. These circles highlight that knowledge and evidence inform decisions for innovation. This revision was informed by work with the Canadian Health Services Policy Research Alliance (CHSPRA) that is “unpacking” decision making impact from the CAHS impact framework (CHSPRA, 2018). The innermost circle refers to the type of decisions that inform better and new innovations. The Council of Canadian Academies defines innovation as “new or better ways of doing valued things,” (Council of Canadian Academies-Expert Panel on Business Innovation, 2009, p. 3) and the Advisory Panel on Healthcare Innovation defines it as activities that “generate value in terms of quality and safety of care, administrative efficiency, the patient experience, and patient outcomes” (Advisory Panel on Healthcare Innovation, 2015, p. 5). The middle circle references the four main types of innovations (i.e., the “4Ps”) in which decisions influenced by research can play an important role, namely:

• Policies: are a set of rules, directives and guidelines (e.g., legislation, regulation, public reporting) developed and implemented by a government, organization, agency, or institution;

• Practices: refer to providers' care practices (e.g., prevention and management, diagnosis, treatment, post-treatment) directly or, for example, through the development, revision, or implementation of clinical practice guidelines, competencies, standards, incentives, or other means;

• Processes: are the work flow processes of care production and/or delivery, including processes in service delivery models (e.g., integrated models of care), resource allocation processes (including the process of de-adoption, the reduction and/or elimination of unsafe, low quality, low value care, etc.), and more (e.g., involvement of new techniques, equipment or software in the care delivery process); and

• Products and services: include the development of new or improved products, services, devices or treatments. Examples include technologies (e.g., eHealth technologies), pharmaceuticals, personalized medicine, diagnostic equipment, and more.

The outermost circle of implement innovation in Figure 1 identifies the key stakeholder groups and end users with whom decision-making occurs. These decisions can influence the behavior (or change in behavior) of a policy/decision maker, healthcare provider, patient and/or others. As highlighted in this circle, the stakeholders identified in the CAHS impact framework were modified and expanded on to include the R&I community, health care services industry, other industries, innovators (new), not for profits (new separate category), public/patients (patients are new), and government.

The evolved AIHS Health Research Impact Framework continues to expand on the concept of the “who” in relation to who uses the R&I outputs, who needs to be engaged along the pathways to achieve impact, who contributes to the achievement of impact and who benefits (e.g., public and patients). This is an important consideration when identifying the key actors who are needed to effect change in translating research to impact. To provide extra emphasis, the feedback loops in the graphic were made to be more circular to stress that engagement with stakeholders needs to occur along the entire R&I pathway and not only at the point of implementing innovation. For optimization of impact, the model proposed that engagement starts early with the identification of needs and opportunities. Highlighting stakeholder engagement and innovation informed the selection of data collection methods and measures that move beyond bibliometrics to include broader metrics of use and innovation.

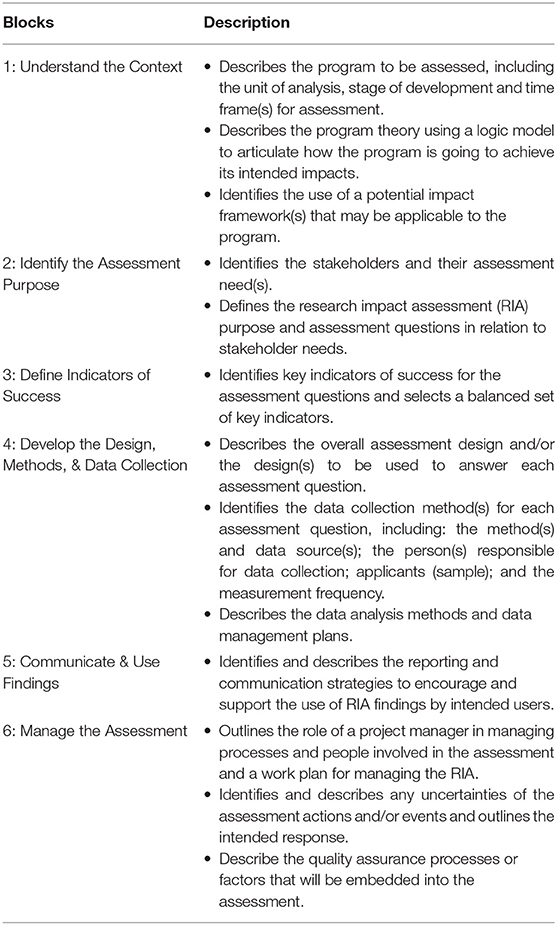

Another evolution of the framework was “how” to customize it when implementing it across the organization. AIHS used (and Alberta Innovates continues to use) the six-block protocol in implementing the impact framework for monitoring, evaluating, and assessing organizational performance and the impacts achieved through its investments. Specifically, the protocol guides the selection of indicators and measures used by the organization to answer organizational and stakeholder questions along the pathways to impact. Developed for ISRIA, the protocol is agnostic, and its component blocks serve as a foundational fit-for-purpose guide that requires users to work through each block prior to moving on to the next, with iterations to previous blocks being made when required (ISRIA, 2014b). Table 2 outlines the six-block protocol as well as a description of what is included in each block.

Alberta Innovates is not the only organization to customize and implement the CAHS impact framework to better meet organizational needs. At the Practise Making Perfect: The Canadian Academy of Health Sciences (CAHS) Impact Framework Forum hosted by AIHS in Edmonton, Canada in 2015, nearly 70 individuals representing health and non-health funders, post-secondary institutions, health service providers, not-for-profits and industry came together to share how they were using the framework in practice (NAPHRO, 2015). The forum provided an opportunity for the participants to learn about specific applications of and revisions to the framework, to explore how the framework could be used more effectively and broadly moving forward, and how the framework had assisted organizations in implementing data capture tools for better communicating impact results.

Use of Emerging Tools for Measuring and Assessing Research Impact

The R&I ecosystem has been described as a complex and unstable network of people and technologies (Kok and Schuit, 2012). The ability to track the impacts of research or innovation projects in such a system becomes increasingly difficult as the focus shifts from proximal attribution-based outputs and outcomes to more distal contributory impacts. This new reality requires many R&I organizations to find better and more efficient ways to measure and communicate the impact generated through their investments.

The challenges faced by many publicly funded R&I organizations in the systematic and routine collection, analysis, and reporting of comprehensive and quality impact data are only intensified with the shift in focus to more distal impact. This stems from trade-offs between the quality, completeness and timeliness of data used in R&I impact assessment and the cost and feasibility of collecting such data (Greenhalgh et al., 2016). A limited ability to more fully demonstrate the value of investments creates difficulties for R&I funders in advocating to stakeholders, including the public, about the “so what” of R&I (i.e., how R&I contributes to positive changes in the lives of end-users) and making a convincing case for future investments. It also hinders funders' abilities to make more evidence-informed investment decisions by learning what works, what doesn't and why.

Assessing the returns generated from R&I across the pathways from proximal outputs to distal impacts is best achieved through mixed method approaches using multiple data sources and collection systems that can store data over time. A key consideration is the use of automated data capture systems that have interoperability with other systems or enable data to be more easily integrated across systems. This functionality increases the analytical capability of funding organizations while concurrently reducing the administrative burden on researchers and funders.

The evolution of the Internet and social media has created a massive amount of data that traditional bibliometrics (i.e., the quantitative analysis of publications such as journal articles, book chapters, patents, and conference papers using indicators related to publication counts, citations, and collaboration patterns) are not suitable for measuring (Haustein and Larivière, 2015; Karanatsiou et al., 2017). This alternative data, called altmetrics, includes almost immediate information about the sharing of datasets, experimental designs and codes; semantic publishing such as nanopublications; and the use of social media for widespread self-publishing (Priem et al., 2010). Altmetrics data informs analysis about the volume and nature of attention that research receives online and provides insights into how research flows into and affects society (Priem et al., 2010; Waltman and Costas, 2014). In doing so, altmetrics data complements citation-based metrics by expanding the view of what R&I impact looks like, what's making the impact, and how distal and collective impact can be measured (Priem et al., 2010). Altmetrics data is therefore of growing interest to many R&I funding organizations as it represents a newer source of data through which policy and practice impacts can be measured. However, altmetrics should be used cautiously in assessments of research impact. Quality control is limited, which makes these metrics more amenable to gaming (Thelwall, 2014). While altmetrics may be an indicator of public response to research (Barnes, 2015), the association between altmetrics and citations appears weak (Thelwall, 2014; Barnes, 2015).

Research and innovation impact assessment is benefiting from emerging tools that utilize the digitization of information. However, regardless of the type of tool or data, mixed methods using multiple data sources remain a best practice approach for better evidencing the progress to, and impacts achieved through, R&I investments. The inherent limitations of any given tool or type of data can be offset when used in combination with other established and/or emerging tools, thereby providing a more complete picture of the full value being generated.

Aligning Tools to the Framework: Meeting Organizational Needs

Implementation of the first and second iterations of the AIHS Health Research Impact Framework was accompanied by changes in the tools used to collect annual outcome and impact data from funding recipients. Although this better aligned the tools to the framework and led to more focused and purposeful data collection, several challenges continued to hinder the organization's ability to report on the progress to impacts being achieved through its investments. Many of these challenges stemmed from a lack of automated and integrated data capture, analytic, and reporting tools. Fortunately, several tools emerged in the R&I ecosystem to help funders address data challenges through more automated approaches. These tools, which are driven by technological advances and economic pressures on the research community, are increasingly overtaking more labor-intensive practices (Greenhalgh et al., 2016). Digitalization of impact data is one technological advance that is making possible the unprecedented use of large datasets. It enables the use of artificial intelligence and machine learning to analyze and classify “big data” in significantly shorter periods of time, including the identification of patterns of investments from the program to the ecosystem level. International standards, e.g., ORCID (2018) and CASRAI (2018), are also drastically improving the ability to pool and compare data across borders, thereby enabling “big data” collections.

As illustrated in Figure 1, the AIHS impact framework begins with the collaborative identification of needs and opportunities in the province's R&I ecosystem and progresses to investments and services across the R&I continuum. To assess the impact of (or returns on) these investments, the minimum requirements of the organization were tools to systematically classify investments and collect impact information. As there are numerous commercial and non-commercial tools available in the market to collect impact data, this report is not intended to endorse any one tool but to illustrate how such tools have been used in practice to support organizational impact assessment. One tool used by AIHS to assess its investments dynamically is Dimensions for Funders, a cloud-based information tool that includes over 1.3 trillion US dollars in historical grants from over 200 funders (Digital Science, 2018). The tool enables R&I funders to rapidly complete environmental scans and analyses and compare their awards against the global funding landscape.

AIHS's consideration of a tool like Dimensions was a culmination of several factors. One was recognition within the organization that reproducible and meaningful classification of its R&I investments was a critical requirement for improved investment analysis. This included the consistent and systematic application of common standardized classifications that would enable the organization to compare its investments over time, across different research portfolios and/or across organizations and geographical locations. The organization also felt that this approach would create an objective, consistent and reproducible methodology and associated evidence base for data-driven strategic planning and reporting. Additionally, through standardized and customized classifications, this tool provided a means of linking investments to impact data, thereby enabling the value generated in specific R&I areas of organizational priorities to be demonstrated.
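The value of a common classification for comparing investments over time and for linking investments to impact data can be sketched with a small Python example. This is a hedged illustration only: it does not use or represent the Dimensions tool or its data, and the grant identifiers, classification codes, amounts, and function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical investments: (grant id, year, classification code, amount in dollars).
INVESTMENTS = [
    ("G1", 2014, "cardiovascular", 500_000),
    ("G2", 2014, "mental health", 250_000),
    ("G3", 2015, "cardiovascular", 300_000),
]

# Hypothetical impact records reported against grants: (grant id, impact category).
IMPACTS = [
    ("G1", "advancing knowledge"),
    ("G1", "informing decision making"),
    ("G3", "advancing knowledge"),
]


def invested_by_class_and_year(investments):
    """Total the amount invested per (classification code, year)."""
    totals = defaultdict(int)
    for _grant, year, code, amount in investments:
        totals[(code, year)] += amount
    return dict(totals)


def impacts_by_class(investments, impacts):
    """Count reported impacts per (classification code, impact category).

    The classification code is the shared key that links each grant's
    impact records back to the area in which the money was invested.
    """
    code_of = {grant: code for grant, _year, code, _amount in investments}
    counts = defaultdict(int)
    for grant, category in impacts:
        counts[(code_of[grant], category)] += 1
    return dict(counts)


spend = invested_by_class_and_year(INVESTMENTS)
linked = impacts_by_class(INVESTMENTS, IMPACTS)
```

Applying the same codes consistently is what makes both summaries reproducible: the spending view supports comparison across years and portfolios, and the linked view shows which priority areas have reported impacts.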

AIHS successfully implemented Dimensions with its health investment portfolio. Most importantly, this has provided the organization with a significantly improved ability to address internal and external stakeholder questions in a highly efficient and timely manner. It has also provided novel insights into the R&I investment landscape in Alberta that would not have been readily recognized, including investment trends relative to other jurisdictions and the types of research in Alberta that receive support from other R&I funders. The tool allows the organization to run dynamic searches quickly and thus can be used to identify emerging “hot topic” areas and experts in fields of study.

Canada has made significant progress toward the integration and harmonized classification of research investment data. For example, members of the National Alliance of Provincial Health Research Organizations (NAPHRO) are applying a common classification scheme across their individual datasets. With Dimensions, NAPHRO can efficiently apply this scheme to their data and those of others with the goal of generating a collective view of R&I investments in Canada. Currently NAPHRO members in western Canada are collaborating with Digital Science on a project to identify trends in grant and award patterns within that region and identify more distal impacts of their investments.

A second tool of interest to AIHS, used to classify impacts according to the AIHS impact framework, was Researchfish®. Researchfish is a web-based system used by funders to collect quantitative and qualitative information on the outputs, outcomes and impacts of the R&I projects that they fund (Researchfish, 2018). By 2014, the tool had been adopted by all the research councils in the UK and had been taken up by public funders and non-governmental organizations in North America and continental Europe. Over 110 research organizations were using the tool as of 2016, contributing to the collection of 2 million outputs and outcomes.

AIHS was the first funder in the western hemisphere to implement Researchfish. Prior to doing so, the organization had to determine whether the tool was sufficiently compatible with AIHS's impact data collection requirements. A mapping exercise indicated that the tool's common question set was suitable for collecting ~80–90% of the desired indicators across the AIHS framework (Figure 2); additional information needs could be addressed through the addition of custom questions (i.e., fit-for-purpose) tailored to the needs of the business. Researchfish was also of interest to AIHS because of its framework-agnostic nature and its capability of standardizing input. Finally, the tool helped AIHS overcome several challenges. Namely, it:

• Streamlined and standardized data collection between programs, thereby enhancing the ability to analyze and report impact data in a reproducible way;

• Offered a web-based data collection tool that was accessible 24/7 throughout the year and enabled users to report data once and re-use it multiple times;

• Provided the ability to internally benchmark on a year-by-year basis; and

• Incorporated data validation processes.
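The mapping exercise described above amounts to a coverage check between the indicators a framework calls for and the items a tool already collects. The sketch below illustrates that idea only; the indicator names and the function `indicator_coverage` are hypothetical and are not drawn from the AIHS framework or from Researchfish.

```python
def indicator_coverage(desired, available):
    """Return the share of desired indicators covered and the remaining gaps."""
    desired_set = set(desired)
    if not desired_set:
        return 0.0, []
    covered = desired_set & set(available)
    gaps = sorted(desired_set - covered)
    return len(covered) / len(desired_set), gaps


# Hypothetical indicator identifiers (assumption: ten desired indicators,
# eight of which are already captured by a tool's common question set).
desired_indicators = [f"indicator_{i:02d}" for i in range(1, 11)]
common_question_set = [f"indicator_{i:02d}" for i in range(1, 9)] + ["other_item"]

share, gaps = indicator_coverage(desired_indicators, common_question_set)
# The gaps are the candidates for custom, fit-for-purpose questions.
```

In this illustration, the uncovered indicators returned in `gaps` correspond to the information needs that would be met through custom questions.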

AIHS implemented Researchfish using a phased approach and several unique questions were added to improve the tool's fit with the organization's strategic priorities and focus areas. This included questions aligned to the framework's health impact sub-categories of health status, determinants of health, and health-system changes. Because AIHS had been collecting outcome data from its researchers and trainees previously, implementation of the tool was essentially a process change for those involved rather than a net new development. In addition, end user engagement activities were undertaken to help AIHS's research community better understand the importance and value of impact reporting in general. These engagement activities included orientation sessions with end users to highlight how the data collected through the tool aligned to the AIHS Health Research Impact Framework, and how the data would be used and communicated by the organization.

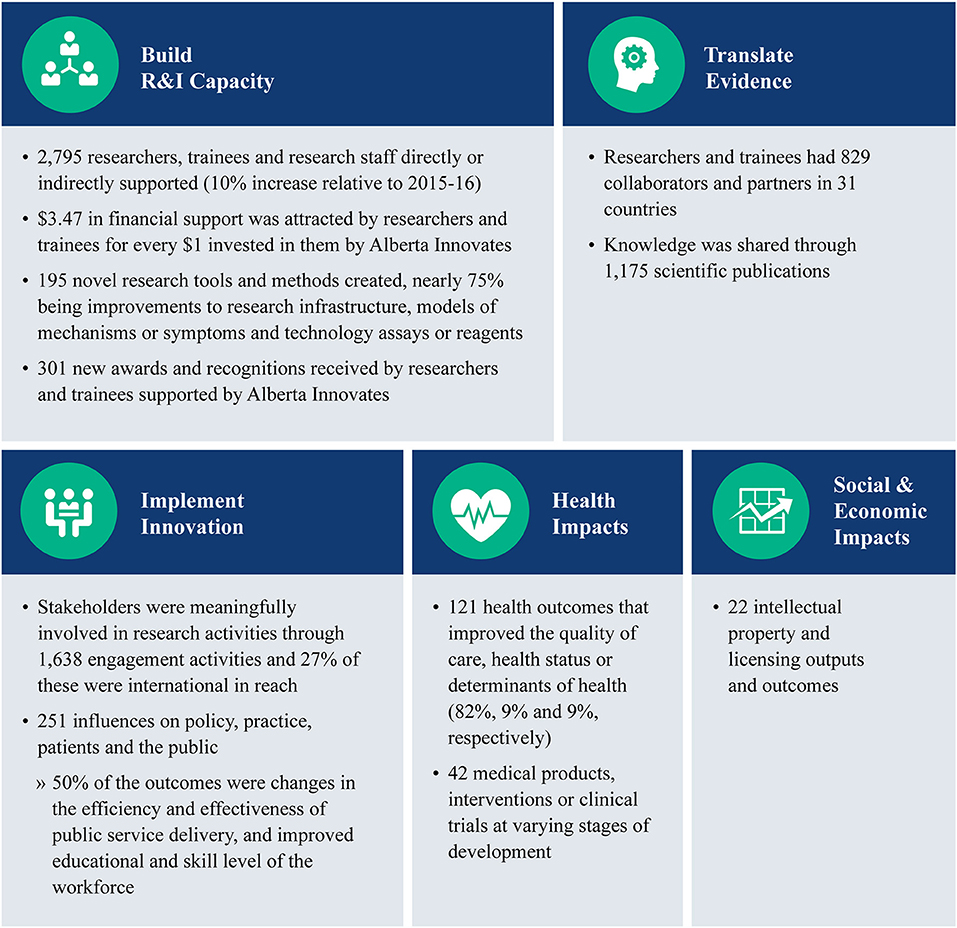

Implementation of Researchfish provides the organization with a more systematic and standardized way to annually collect impact data along the pathways from initial discoveries in the research laboratory to wide-scale adoption in the health system. It enables the organization to produce and make publicly available comprehensive annual impact reports about its health portfolio investments (AIHS, 2015; Alberta Innovates, 2016, 2017). The reports include outcomes and impacts being achieved across the impact framework, a sample of which is provided in Figure 3. Key outcomes and impacts from a strategic perspective are communicated on a one-page scorecard that is useful for informing both internal and external stakeholders (Figure 4). The ability to create cascading scorecards for different health portfolios and programs has increased the analytical and reporting capability of the organization. These impact assessment tools and products also inform other organizational activity including performance monitoring. Several impact exemplars are also included in the reports to showcase in more detail the achievements made by individual trainees or research teams.
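One way to picture cascading scorecards is as a roll-up of the same indicator counts from program level to portfolio level to the organization as a whole. The following is a simplified sketch under that assumption; the portfolio names, program names, indicators and counts are invented for illustration and are not taken from the AIHS reports.

```python
from collections import Counter

# Hypothetical program-level results keyed by (portfolio, program).
program_results = {
    ("Portfolio A", "Program 1"): {"publications": 12, "trainees": 4},
    ("Portfolio A", "Program 2"): {"publications": 5, "patents": 1},
    ("Portfolio B", "Program 3"): {"publications": 8, "trainees": 2},
}


def cascade(results):
    """Roll program-level indicator counts up to portfolio and organization level."""
    portfolio_cards = {}
    organization_card = Counter()
    for (portfolio, _program), indicators in results.items():
        # Counter.update adds counts rather than overwriting them.
        portfolio_cards.setdefault(portfolio, Counter()).update(indicators)
        organization_card.update(indicators)
    return portfolio_cards, organization_card


portfolio_cards, organization_card = cascade(program_results)
```

Because every level is derived from the same program-level data, the organizational scorecard and the portfolio scorecards remain consistent with one another.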

Clearly communicating impact to diverse stakeholders provides the opportunity to enhance the use and actioning of R&I evidence. A third tool, an impact narrative repository, is being developed by Alberta Innovates to communicate key research contributions that have occurred along the pathways to impact. The impact narratives to be collected in the repository range from 2 to 4 pages and are designed as communication products that use a narrative approach to “tell the story” of what impacts were generated and how. Rigor is addressed by using a guiding template (available through the repository), providing corroborating sources and integrating metrics into the narrative, as well as through document review and stakeholder interviews as needed. Because impact narratives are audience driven, they are written in plain English with a public audience in mind.

Lessons Learned Through Lived Experience

Alberta Innovates, primarily through the activities of the AHFMR and AIHS, has nearly 20 years of experience in implementing, using and refining research impact frameworks and tools to assess the returns on R&I investments. Over time and through lived experience, the evidence-based practice approach to research impact assessment within the organization has been increasingly augmented by practice-based evidence and experience. A key lesson learned is that conceptual frameworks and associated tools need to be flexible to accommodate new or additional metrics that address changes in business and/or stakeholder needs. This includes measures that will be used for reporting at an aggregate level (e.g., organizational scorecard) as well as those used at different levels of analysis (e.g., portfolio, program, project, etc.).

Implementing the framework and tools was a pragmatic approach to systematically collecting information at an organizational level and cascading it to the funding portfolio and program levels across the organization. Successful implementation required the additional development of new data management governance structures as well as integration of new processes into the business workflow.

Best practice guidance on communicating impact to diverse stakeholders recommends integrating quantitative impact information with narratives (Adam et al., 2018). The narratives provide additional qualitative information that helps contextualize the impact achieved by researchers. Creating different communication products for diverse stakeholders was required given different preferences for using the information; for example, the one-page scorecard was useful for policy makers, while the research community found the more comprehensive annual impact report useful for describing impacts across the continuum from basic discovery research to applied research.

Continuous learning was best achieved through collaboration within the organization as well as across organizations and borders. Collaboration initiatives and the sharing of better practices with international funders and other organizations have helped accelerate implementation and move the “science” of impact forward. Collaborations have also helped harmonize research funding mechanisms and have resulted in a greater understanding of how funders are using data to maintain effective funding systems and improve program design.

The biggest lesson learned to date for implementation has been to understand the culture of change in terms of both assessment (i.e., assessors) and receptor capacity (i.e., practitioners, decision makers) that is needed to action assessment findings. The change management efforts and steps necessary to effect change both internally and externally cannot be over-estimated.

Next Steps

Work is currently underway to further evolve the Alberta Innovates Research and Innovation Impact Framework by generalizing it to other sectors (e.g., Clean Energy). The result will be a cross-sectoral R&I impact framework. This continued evolution is driven by the recent consolidation (November 2016) of four individual sector-focused Alberta Innovates agencies (Bio Solutions, Energy and Environment Solutions, Health Solutions and Technology Futures) into a single organization, and by the Alberta Government's new Research and Innovation Framework (Government of Alberta, 2017). In keeping with its mandate to be outcomes focused, the forthcoming framework will be instrumental in guiding Alberta Innovates in tracking its progress and achievements across the pathways to impact and aligning them with the organization's strategic priorities.

To further enhance its utility and value, the forthcoming framework will serve as a policy instrument to realize economic and societal impacts and will be used prospectively within Alberta Innovates to assess the rollout of the organization's new strategic plan. A series of cascading balanced scorecards will also be developed and implemented to inform both strategic and program management decision-making. To allow sufficient time for impact to occur, Alberta Innovates will follow up post grant and continue to conduct impact assessments retrospectively. To communicate impact in a way that makes sense to Albertans, plans are underway to create an impact narrative repository tool that will provide public access and search functionality by research impact category, keyword, etc.
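As a rough illustration of the planned search functionality, the sketch below filters narratives by impact category and keyword. It is an assumption about how such a search could work, not a description of the repository's design; the class `ImpactNarrative`, the function `search`, and all titles, categories and keywords are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImpactNarrative:
    """A hypothetical repository entry; keywords are stored in lowercase."""
    title: str
    categories: frozenset
    keywords: frozenset


def search(narratives, category=None, keyword=None):
    """Return narratives matching the given category and/or keyword."""
    matches = []
    for narrative in narratives:
        if category is not None and category not in narrative.categories:
            continue
        if keyword is not None and keyword.lower() not in narrative.keywords:
            continue
        matches.append(narrative)
    return matches


# Illustrative entries only.
repository = [
    ImpactNarrative("Narrative 1", frozenset({"health status"}), frozenset({"screening"})),
    ImpactNarrative("Narrative 2", frozenset({"health-system changes"}), frozenset({"screening", "policy"})),
    ImpactNarrative("Narrative 3", frozenset({"health status"}), frozenset({"training"})),
]

by_category = search(repository, category="health status")
by_both = search(repository, category="health status", keyword="Screening")
```

Leaving either filter unset returns all narratives for the other criterion, which suits browsing by category as well as targeted keyword searches.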

Conclusion

Assessing and measuring impact is challenging given issues such as the many definitions of impact, time lags, and establishing attribution and contribution. However, implementing a framework to assess the impact of health R&I has proven to be useful in creating an impact culture within an organization that includes a shared understanding of impact and the use of a common language, a common approach and shared tools. As the focus shifts from proximal to distal impacts, it is increasingly important to use mixed methods and heterogeneous data sources given the complexity of measuring and assessing societal impact. The advancement of new web-based tools in the market will assist in meeting the challenges associated with measuring impact as they enable greater collection and integration of data and the ability to conduct more comprehensive analysis. The robustness of information that emerges from these tools will improve understanding of the collective efforts needed in the R&I ecosystem to achieve these distal impacts and realize the maximum benefits to society.

Moving forward at Alberta Innovates, the focus will be on incorporating impact prospectively through the identification of the ultimate intended impact(s) as well as the pathways for achieving them. The goal is to understand the diverse impact pathways so that the organization can better accelerate the translation of research and innovation to optimize societal impact. This will require further enhancement of the framework and tools used to assess impact.

Author Contributions

KG developed the overall strategy and approach for framework development and implementation. In addition to proposing the overall structure for the manuscript, she wrote the abstract, lessons learned and conclusion as well as the section describing the framework. DL-K contributed to framework development and implementation, created the first draft of the manuscript and coordinated feedback on the manuscript from all the authors. SA conducted the literature review, provided an overview of the international frameworks, and reviewed the draft documents. LC conducted the literature search, compiled all references, and provided feedback on the draft documents. HC contributed to framework development and implementation. All authors reviewed and approved the final manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to acknowledge our Performance Management and Evaluation colleagues: Susan Shaw, Shannon Cunningham, Dorothy Pinto, Reesa John, and Maxi Miciak (Cy Frank Fellow in Impact Assessment) who, in one way or another, were instrumental in the development and implementation of the impact framework. We would also like to acknowledge the support from Alberta Innovates. A special thank you goes to our funded researchers and Alberta Innovates staff who work tirelessly to collect and report on impact and have made implementation happen. As well, we would like to extend our gratitude to the reviewers and editors who helped further refine our manuscript.

References

Adam, P., Ovseiko, P. V., Grant, J., Graham, K. E. A., Boukhris, O. F., Dowd, A. M., et al. (2018). ISRIA statement: ten-point guidelines for an effective process of research impact assessment. Health Res. Policy Syst. 16:8. doi: 10.1186/s12961-018-0281-5

Advisory Panel on Healthcare Innovation (2015). Unleashing Innovation: Excellent Healthcare for Canada. Ottawa, ON: Health Canada. Available online at: http://healthycanadians.gc.ca/publications/health-system-systeme-sante/report-healthcare-innovation-rapport-soins/alt/report-healthcare-innovation-rapport-soins-eng.pdf

Alberta Innovates (2016). Annual Impact Report: Health 2015-16. Available online at: https://albertainnovates.ca/wp-content/uploads/2018/02/Alberta-Innovates-Health-Impact-Report.pdf

Alberta Innovates (2017). Annual Impact Report for Health Innovation 2016-17. Available online at: https://albertainnovates.ca/wp-content/uploads/2018/05/AI_health_impact_report_2017_march21-2.pdf

Alberta Innovates-Health Solutions (AIHS) (2015). Annual Impact Report 2014-15. Available online at: https://albertainnovates.ca/wp-content/uploads/2018/02/Annual-Impact-Report-2014-2015-1.pdf

Alberta Innovates–Health Solutions (AIHS) and the National Alliance of Provincial Health Research Organizations (NAPHRO) (2015). Practise Making Perfect: The Canadian Academy of Health Sciences Impact Framework-Forum Proceedings Report. Edmonton, AB: AIHS and NAPHRO. Available online at: https://albertainnovates.ca/wp-content/uploads/2018/02/AIHS-CES-SUMMARY-ONLINE-1.pdf

Barnes, C. (2015). The use of altmetrics as a tool for measuring research impact. Aust. Acad. Res. Libr. 46, 121–134. doi: 10.1080/00048623.2014.1003174

Bornmann, L. (2017). Measuring impact in research evaluations: a thorough discussion of methods for, effects of and problems with impact measurements. High. Educ. 73, 775–787. doi: 10.1007/s10734-016-9995-x

Buxton, M., and Hanney, S. (1996). How can payback from health services research be assessed? J. Health Serv. Res. Policy 1, 35–43. doi: 10.1177/135581969600100107

Buxton, M., and Schneider, W. (1999). Assessing the Payback From AHFMR-Funded Research. Edmonton, AB: Alberta Heritage Foundation for Medical Research. Available online at: https://archive.org/details/assessingpayback00buxt

Canadian Academy of Health Sciences-Panel on Return of Investment in Health Research (CAHS) (2009). Making an Impact: A Preferred Framework and Indicators to Measure Returns on Investments in Health Research. Ottawa, ON: CAHS. Available online at: http://www.cahs-acss.ca/wp-content/uploads/2011/09/ROI_FullReport.pdf

Canadian Health Services and Policy Research Alliance (CHSPRA) (2018). Making an Impact: A Shared Framework for Assessing the Impact of Health Services and Policy Research on Decision-Making.

CASRAI (2018). CASRAI Home Page. Available online at: https://casrai.org/

Council of Canadian Academies-Expert Panel on Business Innovation (2009). Innovation and Business Strategy: Why Canada Falls Short. Ottawa, ON: Council of Canadian Academies. Available online at: http://www.scienceadvice.ca/uploads/eng/assessments%20and%20publications%20and%20news%20releases/inno/(2009-06-11)%20innovation%20report.pdf

Deeming, S., Searles, A., Reeves, P., and Nilsson, M. (2017). Measuring research impact in Australia's medical research institutes: a scoping literature review of the objectives for and an assessment of the capabilities of research impact assessment frameworks. Health Res. Policy Syst. 15, 1–13. doi: 10.1186/s12961-017-0180-1

Digital Science (2018). Government/Funders and Dimensions. Available online at: https://www.dimensions.ai/info/government-funder/detail/

Donovan, C. (Ed.). (2011). Special issue on the state of the art in assessing research impact. Res. Eval. 20, 175–260. doi: 10.3152/095820211X13118583635918

European Commission (2017). The Economic Rationale for Public R&I Funding and Its Impact. Available online at: http://bookshop.europa.eu/uri?target=EUB:NOTICE:KI0117050:EN

Gertler, P. J., Martinez, S., Premand, P., Rawlings, L. B., and Vermeersch, C. M. J. (2016). Impact Evaluation in Practice, 2nd Edn. Washington, DC: The World Bank.

Government Accountability Office (GAO) (2012). Designing Evaluation. (GAO-12-208G). Available online at: http://www.gao.gov/assets/590/588146.pdf

Government of Alberta (2010). Alberta's Health Research and Innovation Strategy. Available online at: https://web.archive.org/web/20150326015932/http://eae.alberta.ca/media/277640/ahris_report_aug2010_web.pdf

Government of Alberta (2017). Alberta Research and Innovation Framework (ARIF). Available online at: https://www.alberta.ca/alberta-research-innovation-framework.aspx

Graham, K. E. R., Chorzempa, H. L., Valentine, P., and Magnan, J. (2012). Evaluating health research impact: development and implementation of the Alberta Innovates – Health Solutions impact framework. Res. Eval. 21, 354–367. doi: 10.1093/reseval/rvs027

Grant, J. (2013). Models and Frameworks. Presentation at the International School on Research Impact Assessment. Available online at: https://www.theinternationalschoolonria.com/uploads/resources/barcelona_2013/13_04_Frameworks.pdf

Greenhalgh, T., Raftery, J., Hanney, S., and Glover, M. (2016). Research impact: a narrative review. BMC Med. 14:78. doi: 10.1186/s12916-016-0620-8

Gubbins, C., and Rousseau, D. M. (2015). Embracing translational HRD research for evidence-based management: let's talk about how to bridge the research-practice gap. Hum. Resour. Dev. Q. 26, 109–125. doi: 10.1002/hrdq.21214

Guthrie, S., Wamae, W., Diepeveen, S., Wooding, S., and Grant, J. (2013). Measuring Research: A Guide to Research Evaluation Frameworks and Tools. Santa Monica, CA: RAND Corporation. Available online at: https://www.rand.org/pubs/monographs/MG1217.html

Haustein, S., and Larivière, V. (2015). “The use of bibliometrics for assessing research: possibilities, limitations and adverse effects,” in Incentives and Performance: Governance of Research Organizations, eds I. M. Welpe, J. Wollersheim, S. Ringelhan, and M. Osterloh (Cham: Springer International Publishing), 121–139.

International School of Research Impact Assessment (ISRIA) (2014a). Glossary: Terms, Definitions and Acronyms Commonly Used in the SCHOOL. Version 2.0. Available online at: https://www.theinternationalschoolonria.com/uploads/resources/banff_school_2014/14_20_Glossary.pdf

International School of Research Impact Assessment (ISRIA) (2014b). Resources: Banff School 2014. Available online at: https://www.theinternationalschoolonria.com/banff_school_2014.php

Izsak, K., Markianidou, P., Lukach, R., and Wastyn, A. (2013). The Impact of the Crisis on Research and Innovation Policies: Study for the European Commission DG Research, Directorate C – Research and Innovation. Available online at: https://ec.europa.eu/research/innovation-union/pdf/expert-groups/ERIAB_pb-Impact_of_financial_crisis.pdf

Karanatsiou, D., Misirlis, N., and Vlachopoulou, M. (2017). Bibliometrics and altmetrics literature review: performance indicators and comparison analysis. Perform. Meas. Metrics 18, 16–27. doi: 10.1108/PMM-08-2016-0036

Kok, M. O., and Schuit, A. J. (2012). Contribution mapping: a method for mapping the contribution of research to enhance its impact. Health Res. Policy Syst. 10:21. doi: 10.1186/1478-4505-10-21

Martin-Sardesai, A., Irvine, H., Tooley, S., and Guthrie, J. (2017). Organizational change in an Australian university: responses to a research assessment exercise. Br. Acc. Rev. 49, 399–412. doi: 10.1016/j.bar.2017.05.002

Mayne, J. (2008). Contribution Analysis: An Approach to Exploring Cause and Effect. ILAC Brief Number 16. Available online at: http://lib.icimod.org/record/13855/files/4919.pdf

Mervis, J. (2017). Data check: U.S. government share of basic research funding falls below 50%. Science 355. doi: 10.1126/science.aal0890

Morris, Z. S., Wooding, S., and Grant, J. (2011). The answer is 17 years, what is the question: understanding time lags in translational research. J. R. Soc. Med. 104, 510–520. doi: 10.1258/jrsm.2011.110180

ORCID (2018). ORCID Home Page. Available online at: https://orcid.org/

Organisation for Economic Co-operation and Development-Development Assistance Committee (OECD-DAC) (2002). Glossary of Key Terms in Evaluation and Results Based Management. Available online at: https://www.oecd.org/dac/evaluation/2754804.pdf

Priem, J., Taraborelli, D., Groth, P., and Neylon, C. (2010). Altmetrics: A Manifesto. Available online at: http://altmetrics.org/manifesto

Researchfish (2018). Researchfish Home Page. Available online at: https://www.researchfish.net/

Terama, E., Smallman, M., Lock, S. J., Johnson, C., and Austwick, M. Z. (2016). Beyond Academia-Interrogating research impact in the research excellence framework. PLoS ONE 11:e0168533. doi: 10.1371/journal.pone.0168533

Thelwall, M. (2014). Five Recommendations for Using Alternative Metrics in the Future UK Research Excellence Framework. LSE Impact Blog. Available online at: http://blogs.lse.ac.uk/impactofsocialsciences/2014/10/23/alternative-metrics-future-uk-research-excellence-framework-thelwall/

Veugelers, R. (2015). Is Europe Saving Away for its Future? European Public Funding for Research in the Era of Fiscal Consolidation. Policy brief by the Research, Innovation, and Science policy Experts (RISE) for the European Commission. Available online at: https://ec.europa.eu/research/openvision/pdf/rise/veugelers-saving_away.pdf

Keywords: research evaluation, research impact assessment, research and innovation, measurement, impact frameworks

Citation: Graham KER, Langlois-Klassen D, Adam SAM, Chan L and Chorzempa HL (2018) Assessing Health Research and Innovation Impact: Evolution of a Framework and Tools in Alberta, Canada. Front. Res. Metr. Anal. 3:25. doi: 10.3389/frma.2018.00025

Received: 16 May 2018; Accepted: 21 August 2018;

Published: 18 September 2018.

Edited by:

Staša Milojević, Indiana University Bloomington, United States

Reviewed by:

Marc J. J. Luwel, Leiden University, Netherlands

M. J. Cobo, University of Cádiz, Spain

Copyright © 2018 Graham, Langlois-Klassen, Adam, Chan and Chorzempa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kathryn E. R. Graham, kathryn.graham@albertainnovates.ca
