Random vector functional link networks for function approximation on manifolds

- 1Department of Mathematics, University of California, Los Angeles, Los Angeles, CA, United States

- 2Department of Mathematical Sciences, United States Air Force Academy, Colorado Springs, CO, United States

- 3Department of Mathematics and Halıcıoğlu Data Science Institute, University of California, San Diego, San Diego, CA, United States

- 4Mathematical Institute, Utrecht University, Utrecht, Netherlands

The learning speed of feed-forward neural networks is notoriously slow and has presented a bottleneck in deep learning applications for several decades. For instance, gradient-based learning algorithms, which are used extensively to train neural networks, tend to work slowly when all of the network parameters must be iteratively tuned. To counter this, both researchers and practitioners have tried introducing randomness to reduce the learning requirement. Based on the original construction of Igelnik and Pao, single layer neural-networks with random input-to-hidden layer weights and biases have seen success in practice, but the necessary theoretical justification is lacking. In this study, we begin to fill this theoretical gap. We then extend this result to the non-asymptotic setting using a concentration inequality for Monte-Carlo integral approximations. We provide a (corrected) rigorous proof that the Igelnik and Pao construction is a universal approximator for continuous functions on compact domains, with approximation error squared decaying asymptotically like O(1/n) for the number n of network nodes. We then extend this result to the non-asymptotic setting, proving that one can achieve any desired approximation error with high probability provided n is sufficiently large. We further adapt this randomized neural network architecture to approximate functions on smooth, compact submanifolds of Euclidean space, providing theoretical guarantees in both the asymptotic and non-asymptotic forms. Finally, we illustrate our results on manifolds with numerical experiments.

1 Introduction

In recent years, neural networks have once again triggered an increased interest among researchers in the machine learning community. So-called deep neural networks model functions using a composition of multiple hidden layers, each transforming (possibly non-linearly) the previous layer before building a final output representation [1–5]. In machine learning parlance, these layers are determined by sets of weights and biases that can be tuned so that the network mimics the action of a complex function. In particular, a single layer feed-forward neural network (SLFN) with n nodes may be regarded as a parametric function of the form

Here, the function ρ:ℝ → ℝ is called an activation function and is potentially non-linear. Some typical examples include the sigmoid function , ReLU ρ(z) = max{0, z}, and sign functions, among many others. The parameters of the SLFN are the number of nodes n ∈ ℕ in the the hidden layer, the input-to-hidden layer weights and biases and (resp.), and the hidden-to-output layer weights . In this way, neural networks are fundamentally parametric families of functions whose parameters may be chosen to approximate a given function.

It has been shown that any compactly supported continuous function can be approximated with any given precision by a single layer neural network with a suitably chosen number of nodes [6], and harmonic analysis techniques have been used to study stability of such approximations [7]. Other recent results that take a different approach directly analyze the capacity of neural networks from a combinatorial point of view [8, 9].

While these results ensure existence of a neural network approximating a function, practical applications require construction of such an approximation. The parameters of the neural network can be chosen using optimization techniques to minimize the difference between the network and the function f:ℝN → ℝ it is intended to model. In practice, the function f is usually not known, and we only have access to a set of values of the function at finitely many points sampled from its domain, called a training set. The approximation error can be measured by comparing the training data to the corresponding network outputs when evaluated on the same set of points, and the parameters of the neural network fn can be learned by minimizing a given loss function ; a typical loss function is the sum-of-squares error

The SLFN which approximates f is then determined using an optimization algorithm, such as back-propagation, to find the network parameters which minimize . It is known that there exist weights and biases which make the loss function vanish when the number of nodes n is at least m, provided the activation function is bounded, non-linear, and has at least one finite limit at either ±∞ [10].

Unfortunately, optimizing the parameters in SLFNs can be difficult. For instance, any non-linearity in the activation function can cause back-propagation to be very time-consuming or get caught in local minima of the loss function [11]. Moreover, deep neural networks can require massive amounts of training data, and so are typically unreliable for applications with very limited data availability, such as agriculture, healthcare, and ecology [12].

To address some of the difficulties associated with training deep neural networks, both researchers and practitioners have attempted to incorporate randomness in some way. Indeed, randomization-based neural networks that yield closed form solutions typically require less time to train and avoid some of the pitfalls of traditional neural networks trained using back-propagation [11, 13, 14]. One of the popular randomization-based neural network architectures is the Random Vector Functional Link (RVFL) network [15, 16], which is a single layer feed-forward neural network in which the input-to-hidden layer weights and biases are selected randomly and independently from a suitable domain and the remaining hidden-to-output layer weights are learned using training data.

By eliminating the need to optimize the input-to-hidden layer weights and biases, RVFL networks turn supervised learning into a purely linear problem. To see this, define ρ(X) ∈ ℝn×m to be the matrix whose jth column is and f(X) ∈ ℝm the vector whose jth entry is f(xj). Then, the vector v ∈ ℝn of hidden-to-output layer weights is the solution to the matrix-vector equation f(X) = ρ(X)Tv, which can be solved by computing the Moore-Penrose pseudoinverse of ρ(X)T. In fact, there exist weights and biases that make the loss function vanish when the number of nodes n is at least m, provided the activation function is smooth [17].

Although originally considered in the early- to mid-1990s [15, 16, 18, 19], RVFL networks have had much more recent success in several modern applications, including time-series data prediction [20], handwritten word recognition [21], visual tracking [22], signal classification [23, 24], regression [25], and forecasting [26, 27]. Deep neural network architectures based on RVFL networks have also made their way into more recent literature [28, 29], although traditional, single layer RVFL networks tend to perform just as well as, and with lower training costs than, their multi-layer counterparts [29].

Even though RVFL networks are proving their usefulness in practice, the supporting theoretical framework is currently lacking [see 30]. Most theoretical research into the approximation capabilities of deep neural networks centers around two main concepts: universal approximation of functions on compact domains and point-wise approximation on finite training sets [17]. For instance, in the early 1990s, it was shown that multi-layer feed-forward neural networks having activation functions that are continuous, bounded, and non-constant are universal approximators (in the Lp sense for 1 ≤ p < ∞) of continuous functions on compact domains [31, 32]. The most notable result in the existing literature regarding the universal approximation capability of RVFL networks is due to Igelnik and Pao [16] in the mid-1990s, who showed that such neural networks can universally approximate continuous functions on compact sets; the noticeable lack of results since has left a sizable gap between theory and practice. In this study, we begin to bridge this gap by further improving the Igelnik and Pao result, and bringing the mathematical theory behind RFVL networks into the modern spotlight. Below, we introduce the notation that will be used throughout this study, and describe our main contributions.

1.1 Notation

For a function f:ℝN → ℝ, the set supp(f)⊂ℝN denotes the support of f. We denote by and the classes of continuous functions mapping ℝN to ℝ whose support sets are compact and vanish at infinity, respectively. Given a set S⊂ℝN, we define its radius to be ; moreover, if dμ denotes the uniform volume measure on S, then we write to represent the volume of S. For any probability distribution P:ℝN → [0, 1], a random variable X distributed according to P is denoted by X~P, and we write its expectation as . The open ℓp ball of radius r > 0 centered at x ∈ ℝN is denoted by for all 1 ≤ p ≤ ∞; the ℓp unit-ball centered at the origin is abbreviated . Given a fixed δ > 0 and a set S⊂ℝN, a minimal δ-net for S, which we denote , is the smallest subset of S satisfying ; the δ-covering number of S is the cardinality of a minimal δ-net for S and is denoted .

1.2 Main results

In this study, we analyze the uniform approximation capabilities of RVFL networks. More specifically, we consider the problem of using RVFL networks to estimate a continuous, compactly supported function on N-dimensional Euclidean space.

The first theoretical result on approximating properties of RVFL networks, due to Igelnik and Pao [16], guarantees that continuous functions may be universally approximated on compact sets using RVFL networks, provided the number of nodes n ∈ ℕ in the network goes to infinity. Moreover, it shows that the mean square error of the approximation vanishes at a rate proportional to 1/n. At the time, this result was state-of-the-art and justified how RVFL networks were used in practice. However, the original theorem is not technically correct. In fact, several aspects of the proof technique are flawed. Some of the minor flaws are mentioned in Li et al. [33], but the subsequent revisions do not address the more significant issues which would make the statement of the result technically correct. We address these issues in this study, see Remark 1. Thus, our first contribution to the theory of RVFL networks is a corrected version of the original Igelnik and Pao theorem:

Theorem 1 ([16]). Let with K: = supp(f) and fix any activation function ρ, such that either ρ ∈ L1(ℝ)∩L∞(ℝ) with or ρ is differentiable with ρ′ ∈ L1(ℝ)∩L∞(ℝ) and . For any ε > 0, there exist distributions from which input weights and biases are drawn, and there exist hidden-to-output layer weights that depend on the realization of weights and biases, such that the sequence of RVFL networks is defined by

satisfies

as n → ∞.

For a more precise formulation of Theorem 1 and its proof, we refer the reader to Theorem 5 and Section 3.1.

Remark 1.

1. Even though in Theorem 1 we only claim existence of the distribution for input weights and biases , such a distribution is actually constructed in the proof. Namely, for any ε > 0, there exist constants α, Ω > 0 such that the random variables

are independently drawn from their associated distributions, and bk: = −〈wk, yk〉 −αuk.

2. We note that, unlike the original theorem statement in Igelnik and Pao [16], Theorem 1 does not show exact convergence of the sequence of constructed RVFL networks fn to the original function f. Indeed, it only ensures that the limit fn is ε-close to f. This should still be sufficient for practical applications since, given a desired accuracy level ε > 0, one can find values of α, Ω, andn such that this accuracy level is achieved on average. Exact convergence can be proved if one replaces α and Ω in the distribution described above by sequences and of positive numbers, both tending to infinity with n. In this setting, however, there is no guaranteed rate of convergence; moreover, as n increases, the ranges of the random variables and become increasingly larger, which may cause problems in practical applications.

3. The approach we take to construct the RVFL network approximating a function f allows one to compute the output weights exactly (once the realization of random parameters is fixed), in the case where the function f is known. For the details, we refer the reader to Equations 6, 8 in the proof of Theorem 1. If we only have access to a training set that is sufficiently large and uniformly distributed over the support of f, these formulas can be used to compute the output weights approximately, instead of solving the least squares problem.

4. Note that the normalization of the activation function can be replaced by the condition . Indeed, in the case when ρ ∈ L1(ℝ)∩L∞(ℝ) and one can simply use Theorem 1 to approximate by a sequence of RVFL network with the activation function . Mutatis mutandis in the case when More generally, this trick allows any of our theorems to be applied in the case

One of the drawbacks of Theorem 1 is that the mean square error guarantee is asymptotic in the number of nodes used in the neural network. This is clearly impractical for applications, and so it is desirable to have a more explicit error bound for each fixed number n of nodes used. To this end, we provide a new, non-asymptotic version of Theorem 1, which provides an error guarantee with high probability whenever the number of network nodes is large enough, albeit at the price of an additional Lipschitz requirement on the activation function:

Theorem 2 Let with K: = supp(f) and fix any activation function ρ ∈ L1(ℝ)∩L∞(ℝ) with Suppose further that ρ is κ-Lipschitz on ℝ for some κ > 0. For any ε > 0 and η ∈ (0, 1), suppose that n ≥ C(N, f, ρ)ε−1log(η−1/ε), where C(N, f, ρ) is independent of ε and η and depends on f, ρ, and superexponentially on N. Then, there exist distributions from which input weights and biases are drawn, and there exist hidden-to-output layer weights that depend on the realization of weights and biases, such that the RVFL network defined by

satisfies

with probability at least 1−η.

For simplicity, the bound on the number n of the nodes on the hidden layer here is rough. For a more precise formulation of this result that contains a bound with explicit constant, we refer the reader to Theorem 6 in Section 3.2. We also note that the distribution of the input weight and bias here can be selected as described in Remark 1.

The constructions of RVFL networks presented in Theorems 1, 2 depend heavily on the dimension of the ambient space ℝN. If N is small, this dependence does not present much of a problem. However, many modern applications require the ambient dimension to be large. Fortunately, a common assumption in practice is the support of the signals of interest lies on a lower-dimensional manifold embedded in ℝN. For instance, the landscape of cancer cell states can be modeled using non-linear, locally continuous “cellular manifolds;” indeed, while the ambient dimension of this state space is typically high (e.g., single-cell RNA sequencing must account for approximately 20,000 gene dimensions), cellular data actually occupies an intrinsically lower dimensional space [34]. Similarly, while the pattern space of neural population activity in the brain is described by an exponential number of parameters, the spatiotemporal dynamics of brain activity lie on a lower-dimensional subspace or “neural manifold” [35]. In this study, we propose a new RVFL network architecture for approximating continuous functions defined on smooth compact manifolds that allows to replace the dependence on the ambient dimension N with dependence on the manifold intrinsic dimension. We show that RVFL approximation results can be extended to this setting. More precisely, we prove the following analog of Theorem 2.

Theorem 3 Let be a smooth, compact d-dimensional manifold with finite atlas {(Uj, ϕj)}j ∈ J and . Fix any activation function ρ ∈ L1(ℝ)∩L∞(ℝ) with such that ρ is κ-Lipschitz on ℝ for some κ > 0. For any ε > 0 and η ∈ (0, 1), suppose n ≥ C(d, f, ρ)ε−1log(η−1/ε), where C(d, f, ρ) is independent of ε and η and depends on f, ρ, and superexponentially on d. Then, there exists an RVFL-like approximation fn of the function f with a parameter selection similar to the Theorem 1 construction that satisfies

with probability at least 1−η.

For a the construction of the RVFL-like approximation fn and a more precise formulation of this result and an analog of Theorem 1 applied to manifolds, we refer the reader to Section 3.3.1 and Theorems 7, 8. We note that the approximation fn here is not obtained as a single RVFL network construction, but rather as a combination of several RVFL networks in local manifold coordinates.

1.3 Organization

The remaining part of the article is organized as follows. In Section 2, we discuss some theoretical preliminaries on concentration bounds for Monte-Carlo integration and on smooth compact manifolds. Monte-Carlo integration is an essential ingredient in our construction of RVFL networks approximating a given function, and we use the results listed in this section to establish approximation error bounds. Theorem 1 is proven in Section 3.1, where we break down the proof into four main steps, constructing a limit-integral representation of the function to be approximated in Lemmas 3, 4, then using Monte-Carlo approximation of the obtained integral to construct an RVFL network in Lemma 5, and, finally, establishing approximation guarantees for the constructed RVFL network. The proofs of Lemmas 3, 4, and 5 can be found in Sections 3.5.1, 3.5.2, and 3.5.3, respectively. We further study properties of the constructed RVFL networks and prove the non-asymptotic approximation result of Theorem 2 in Section 3.2. In Section 3.3, we generalize our results and propose a new RVFL network architecture for approximating continuous functions defined on smooth compact manifolds. We show that RVFL approximation results can be extended to this setting by proving an analog of Theorem 1 in Section 3.3.2 and Theorem 3 in Section 3.5.5. Finally, in Section 3.4, we provide numerical evidence to illustrate the result of Theorem 3.

2 Materials and methods

In this section, we briefly introduce supporting material and theoretical results which we will need in later sections. This material is far from exhaustive, and is meant to be a survey of definitions, concepts, and key results.

2.1 A concentration bound for classic Monte-Carlo integration

A crucial piece of the proof technique employed in Igelnik and Pao [16], which we will use repeatedly, is the use of the Monte-Carlo method to approximate high-dimensional integrals. As such, we start with the background on Monte-Carlo integration. The following introduction is adapted from the material in Dick et al. [36].

Let f:ℝN → ℝ and S⊂ℝN a compact set. Suppose we want to estimate the integral , where μ is the uniform measure on S. The classic Monte Carlo method does this by an equal-weight cubature rule,

where are independent identically distributed uniform random samples from S and is the volume of S. In particular, note that 𝔼In(f, S) = I(f, S) and

Let us define the quantity

It follows that the random variable In(f) has mean I(f, S) and variance vol2(S)σ(f, S)2/n. Hence, by the Central Limit Theorem, provided that 0 < vol2(S)σ(f, S)2 < ∞, we have

for any constant C > 0, where ε(f, S): = vol(S)σ(f, S). This yields the following well-known result:

Theorem 4 For any f ∈ L2(S, μ), the mean-square error of the Monte Carlo approximation In(f, S) satisfies

where the expectation is taken with respect to the random variables and σ(f, S) is defined in Equation 1.

In particular, Theorem 4 implies as n → ∞.

In the non-asymptotic setting, we are interested in obtaining a useful bound on the probability ℙ(|In(f, S)−I(f, S)| ≥ t) for all t > 0. The following lemma follows from a generalization of Bennett's inequality (Theorem 7.6 in [37]; see also [38, 39]).

Lemma 1 For any f ∈ L2(S) and n ∈ ℕ, we have

for all t > 0 and a universal constant C > 0, provided |vol(S)f(x)| ≤ K for almost every x ∈ S.

2.2 Smooth, compact manifolds in Euclidean space

In this section, we review several concepts of smooth manifolds that will be useful to us later. Many of the definitions and results that follow can be found, for instance, in Shaham et al. [40]. Let be a smooth, compact d-dimensional manifold. A chart for is a pair (U, ϕ) such that is an open set and ϕ:U → ℝd is a homeomorphism. One way to interpret a chart is as a tangent space at some point x ∈ U; in this way, a chart defines a Euclidean coordinate system on U via the map ϕ. A collection {(Uj, ϕj)}j ∈ J of charts defines an atlas for if . We now define a special collection of functions on called a partition of unity.

Definition 1 Let be a smooth manifold. A partition of unity of with respect to an open cover {Uj}j ∈ J of is a family of non-negative smooth functions {ηj}j ∈ J such that for every , we have and, for every j ∈ J, supp(ηj)⊂Uj.

It is known that if is compact, there exists a partition of unity of such that supp(ηj) is compact for all j ∈ J [see 41]. In particular, such a partition of unity exists for any open cover of corresponding to an atlas.

Fix an atlas {(Uj, ϕj)}j ∈ J for , as well as the corresponding, compactly supported partition of unity {ηj}j ∈ J. Then, we have the following useful result [see 40, Lemma 4.8].

Lemma 2 Let be a smooth, compact manifold with atlas {(Uj, ϕj)}j ∈ J and compactly supported partition of unity {ηj}j ∈ J. For any , we have

for all , where

In later sections, we use the representation of Lemma 2 to integrate functions over . To this end, for each j ∈ J, let Dϕj(y) denote the differential of ϕj at y ∈ Uj, which is a map from the tangent space into ℝd. One may interpret Dϕj(y) as the matrix representation of a basis for the cotangent space at y ∈ Uj. As a result, Dϕj(y) is necessarily invertible for each y ∈ Uj, and so we know that |det(Dϕj(y))| > 0 for each y ∈ Uj. Hence, it follows by the change of variables theorem that

3 Results

In this section, we prove our main results formulated in Section 1.2 and also use numerical simulations to illustrate the RVFL approximation performance in a low-dimensional submanifold setup. To improve readability of this section, we postpone the proofs of technical lemmas till Section 3.5.

3.1 Proof of Theorem 1

We split the proof of the theorem into two parts, first handling the case ρ ∈ L1(ℝ)∩L∞(ℝ) and second, addressing the case ρ′ ∈ L1(ℝ)∩L∞(ℝ).

3.1.1 Proof of Theorem 1 when ρ ∈ L1(ℝ)∩L∞(ℝ)

We begin by restating the theorem in a form that explicitly includes the distributions that we draw our random variables from.

Theorem 5 ([16]) Let with K: = supp(f) and fix any activation function ρ ∈ L1(ℝ)∩L∞(ℝ) with . For any ε > 0, there exist constants α, Ω > 0 such that the following holds: If, for k ∈ ℕ, the random variables

are independently drawn from their associated distributions, and

then there exist hidden-to-output layer weights (that depend on the realization of the weights and biases ) such that the sequence of RVFL networks defined by

satisfies

as n → ∞.

Proof. Our proof technique is based on that introduced by Igelnik and Pao and can be divided into four steps. The first three steps essentially consist of Lemma 3, Lemma 4, and Lemma 5, and the final step combines them to obtain the desired result. First, the function f is approximated by a convolution, given in Lemma 3. The proof of this result can be found in Section 3.5.1.

Lemma 3 Let and h ∈ L1(ℝN) with For Ω > 0, define

Then, we have

uniformly for all x ∈ ℝN.

Next, we represent f as the limiting value of a multidimensional integral over the parameter space. In particular, we replace (f*hΩ)(x) in the convolution identity (Equation 4) with a function of the form , as this will introduce the RVFL structure we require. To achieve this, we first use a truncated cosine function in place of the activation function ρ and then switch back to a general activation function.

To that end, for each fixed Ω > 0, let and define cosΩ:ℝ → [−1, 1] by

Moreover, introduce the functions

where y, w ∈ ℝN, u ∈ ℝ, and ϕ = A*A for any even function A ∈ C∞(ℝ) supported on s.t. ∥A∥2 = 1. Then, we have the following lemma, a detailed proof of which can be found in Section 3.5.2.

Lemma 4 Let and ρ ∈ L1(ℝ) with K: = supp(f) and . Define Fα, Ω and bα as in Equation 6 for all α > 0. Then, for , we have

uniformly for every x ∈ K, where .

The next step in the proof of Theorem 5 is to approximate the integral in Equation 7 using the Monte-Carlo method. Define for k = 1, …, n, and the random variables by

Then, we have the following lemma that is proven in Section 3.5.3.

Lemma 5 Let and ρ ∈ L1(ℝ)∩L∞(ℝ) with K: = supp(f) and . Then, as n → ∞, we have

where and .

To complete the proof of Theorem 5, we combine the limit representation (Equation 7) with the Monte-Carlo error guarantee (Equation 9) and show that, given any ε > 0, there exist α, Ω > 0 such that

as n → ∞. To this end, let ε′ > 0 be arbitrary and consider the integral I(x; p) given by

for x ∈ K and p ∈ ℕ. By Equation 7, there exist α, Ω > 0 such that |f(x)−I(x; 1)| < ε′ holds for every x ∈ K, and so it follows that

for every x ∈ K. Jensen's inequality now yields that

By Equation 9, we know that the second term on the right-hand side of Equation 11 is O(1/n). Therefore, we have

and so the proof is completed by taking and choosing α, Ω > 0 accordingly.

3.1.2 Proof of Theorem 1 when ρ′ ∈ L1(ℝ)∩L∞(ℝ)

The full statement of the theorem is identical to that of Theorem 5 albeit now with ρ′ ∈ L1(ℝ)∩L∞(ℝ), so we omit it for brevity. Its proof is also similar to the proof of the case where ρ ∈ L1(ℝ)∩L∞(ℝ) with some key modifications. Namely, one uses an integration by parts argument to modify the part of the proof corresponding to Lemma 4. The details of this argument are presented in Section 3.5.4.

3.2 Proof of Theorem 2

In this section, we prove the non-asymptotic result for RVFL networks in ℝN, and we begin with a more precise statement of the theorem that makes all the dimensional dependencies explicit.

Theorem 6 Consider the hypotheses of Theorem 5 and suppose further that ρ is κ-Lipschitz on ℝ for some κ > 0. For any

suppose

where , c > 0 is a numerical constant, and Σ is a constant depending on f and ρ, and let parameters , , and be as in Theorem 5. Then, the RVFL network defined by

satisfies

with probability at least 1−η.

Proof. Let with K: = supp(f) and suppose ε > 0, η ∈ (0, 1) are fixed. Take an arbitrarily κ-Lipschitz activation function ρ ∈ L1(ℝ)∩L∞(ℝ). We wish to show that there exists an RVFL network defined on K that satisfies the

with probability at least 1−η when n is chosen sufficiently large. The proof is obtained by modifying the proof of Theorem 5 for the asymptotic case.

We begin by repeating the first two steps in the proof of Theorem 5 from Sections 3.5.1, 3.5.2. In particular, by Lemma 4 we have the representation given by Equation 4, namely,

holds uniformly for all x ∈ K. Hence, if we define the random variables fn and In from Section 3.5.3 as in Equations 8, 29, respectively, we seek a uniform bound on the quantity

over the compact set K, where I(x; 1) is given by Equation 10 for all x ∈ K. Since Equation 7 allows us to fix α, Ω > 0 such that

holds for every x ∈ K simultaneously, the result would follow if we show that, with high probability, uniformly for all x ∈ K. Indeed, this would yield

with high probability. To this end, for δ > 0, let denote a minimal δ-net for K, with cardinality . Now, fix x ∈ K and consider the inequality

where is such that ∥x−z∥2 < δ. We will obtain the desired bound on Equation 12 by bounding each of the terms (*), (**), and (***) separately.

First, we consider the term (*). Recalling the definition of In, observe that we have

where and we define

Now, since ρ is assumed to be κ-Lipschitz, we have

for any y ∈ K, w ∈ [−Ω, Ω]N, and Hence, an application of the Cauchy–Schwarz inequality yields for all x ∈ K, from which it follows that

holds for all x ∈ K.

Next, we bound (***) using a similar approach. Indeed, by the definition of I(·;1), we have

Using the fact that for al x ∈ K, it follows that

holds for all x ∈ K, just like Equation 13.

Notice that the Equations 13, 14 are deterministic. In fact, both can be controlled by choosing an appropriate value for δ in the net . To see this, fix ε′ > 0 arbitrarily and recall that vol(K(Ω)) = (2Ω)Nπ(2L + 1)vol(K). A simple computation then shows that (*) + (***) < ε′ whenever

We now bound (**) uniformly for x ∈ K. Unlike (*) and (***), we cannot bound this term deterministically. In this case, however, we may apply Lemma 1 to

for any . Indeed, because and ρ ∈ L∞(ℝ). Then, Lemma 1 yields the tail bound

for all t > 0, where c > 0 is a numerical constant and

By taking

we obtain B = Cα(Ω/π)N(π+2Nrad(K)Ω) and

If we choose the number of nodes such that

then a union bound yields (**) < t simultaneously for all with probability at least 1−η. Combined with the bounds from Equations 13, 14, it follows from Equation 12 that

simultaneously for all x ∈ K with probability at least 1−η, provided δ and n satisfy Equations 15, 16, respectively. Since we require , the proof is then completed by setting and choosing δ and n accordingly. In particular, it suffices to choose so that Equations 15, 16 become

as desired.

Remark 2 The implication of Theorem 6 is that, given a desired accuracy level ε > 0, one can construct a RVFL network fn that is ε-close to f with high probability, provided the number of nodes n in the neural network is sufficiently large. In fact, if we assume that the ambient dimension N is fixed here, then δ and n depend on the accuracy ε and probability η as

Using that log(1+x) = x+O(x2) for small values of x, the requirement on the number of nodes behaves like

whenever ε is sufficiently small. Using a simple bound on the covering number, this yields a coarse estimate of n≳ε−1log(η−1/ε).

Remark 3 If we instead assume that N is variable, then, under the assumption that f is Hölder continuous with exponent β, one should expect that n = ω(N2βN) as N → ∞ (in light of Remark 10 and in conjunction with Theorem 6 with log(1+1/x)≈1/x for large x). In other words, the number of nodes required in the hidden layer is superexponential in the dimension. This dependence of n on N may be improved by means of more refined proof techniques. As for α, if follows from Remark 12 that α = Θ(1) as N → ∞ provided

Remark 4 The κ-Lipschitz assumption on the activation function ρ may likely be removed. Indeed, since (***) in Equation 12 can be bounded instead by leveraging continuity of the L1 norm with respect to translation, the only term whose bound depends on the Lipschitz property of ρ is (*). However, the randomness in In (that we did not use to obtain the bound in Equation 13) may be enough to control (*) in most cases. Indeed, to bound (*), we require control over quantities of the form |ρ(α(〈wk, x−yk〉 −uk−ρα〈wk, z−yk〉−uk))|. For most practical realizations of ρ, this difference will be small with high probability (on the draws of yk, wk, uk), whenever ∥x−z∥2 is sufficiently small.

3.3 Results on sub-manifolds of Euclidean space

The constructions of RVFL networks presented in Theorems 5, 6 depend heavily on the dimension of the ambient space ℝN. Indeed, the random variables used to construct the input-to-hidden layer weights and biases for these neural networks are N-dimensional objects; moreover, it follows from Equations 15, 16 that the lower bound on the number n of nodes in the hidden layer depends superexponentially on the ambient dimension N. If the ambient dimension is small, these dependencies do not present much of a problem. However, many modern applications require the ambient dimension to be large. Fortunately, a common assumption in practice is that signals of interest have (e.g., manifold) structure that effectively reduces their complexity. Good theoretical results and algorithms in a number of settings typically depend on this induced smaller dimension rather than the ambient dimension. For this reason, it is desirable to obtain approximation results for RVFL networks that leverage the underlying structure of the signal class of interest, namely, the domain of .

One way to introduce lower-dimensional structure in the context of RVFL networks is to assume that supp(f) lies on a subspace of ℝN. More generally, and motivated by applications, we may consider the case where supp(f) is actually a submanifold of ℝN. To this end, for the remainder of this section, we assume to be a smooth, compact d-dimensional manifold and consider the problem of approximating functions using RVFL networks. As we are going to see, RVFL networks in this setting yield theoretical guarantees that replace the dependencies of Theorems 5, 6 on the ambient dimension N with dependencies on the manifold dimension d. Indeed, one should expect that the random variables , are essentially d-dimensional objects (rather than N-dimensional) and that the lower bound on the number of network nodes in Theorem 6 scales as a (superexponential) function of d rather than N.

3.3.1 Adapting RVFL networks to d-manifolds

As in Section 2.2, let {(Uj, ϕj)}j ∈ J be an atlas for the smooth, compact d-dimensional manifold with the corresponding compactly supported partition of unity {ηj}j ∈ J. Since is compact, we assume without loss of generality that |J| < ∞. Indeed, if we additionally assume that satisfies the property that there exists an r > 0 such that, for each , is diffeomorphic to an ℓ2 ball in ℝd with diffeomorphism close to the identity, then one can choose an atlas {(Uj, ϕj)}j ∈ J with by intersecting with ℓ2 balls in ℝN of radii r/2 [40]. Here, Td is the so-called thickness of the covering and there exist coverings such that Td≲dlog(d).

Now, for , Lemma 2 implies that

for all , where

As we will see, the fact that is smooth and compact implies for each j ∈ J, and so we may approximate each using RVFL networks on ℝd as in Theorems 5, 6. In this way, it is reasonable to expect that f can be approximated on using a linear combination of these low-dimensional RVFL networks. More precisely, we propose approximating f on via the following process:

1. For each j ∈ J, approximate uniformly on using a RVFL network as in Theorems 5, 6;

2. Approximate f uniformly on by summing these RVFL networks over J, i.e.,

for all .

3.3.2 Main results on d-manifolds

We now prove approximation results for the manifold RVFL network architecture described in Section 3.3.1. For notational clarity, from here onward, we use to denote the limit as each nj tends to infinity simultaneously. The first theorem that we prove is an asymptotic approximation result for continuous functions on manifolds using the RVFL network construction presented in Section 3.3.1. This theorem is the manifold-equivalent of Theorem 5.

Theorem 7 Let be a smooth, compact d-dimensional manifold with finite atlas {(Uj, ϕj)}j ∈ J and . Fix any activation function ρ ∈ L1(ℝ)∩L∞(ℝ) with . For any ε > 0, there exist constants αj, Ωj > 0 for each j ∈ J such that the following holds. If, for each j ∈ J and for k ∈ ℕ, the random variables

are independently drawn from their associated distributions, and

then there exist hidden-to-output layer weights such that the sequences of RVFL networks defined by

satisfy

as {nj}j ∈ J → ∞.

Proof. We wish to show that there exist sequences of RVFL networks defined on ϕj(Uj) for each j ∈ J, which together satisfy the asymptotic error bound

as {nj}j ∈ J → ∞. We will do so by leveraging the result of Theorem 5 on each .

To begin, recall that we may apply the representation given by Equation 17 for f on each chart (Uj, ϕj); the RVFL networks we seek are approximations of the functions in this expansion. Now, as supp(ηj)⊂Uj is compact for each j ∈ J, it follows that each set ϕj(supp(ηj)) is a compact subset of ℝd. Moreover, because if and only if z ∈ ϕj(Uj) and , we have that is supported on a compact set. Hence, for each j ∈ J, and so we may apply Lemma 4 to obtain the uniform limit representation given by Equation 7 on ϕj(Uj), that is,

where we define

In this way, the asymptotic error bound that is the final result of Theorem 5, namely

holds. With these results in hand, we may now continue with the main body of the proof.

Since the representation given by Equation 17 for f on each chart (Uj, ϕj) yields

for all , Jensen's inequality allows us to bound the mean square error of our RVFL approximation by

To bound (*), note that the change of variables given by Equation 2 implies

for each j ∈ J. Defining , which is necessarily bounded away from zero for each j ∈ J by compactness of , we therefore have

Hence, applying Equation 18 for each j ∈ J yields

because With the bound given by Equation 20 in hand, Equation 19 becomes

as {nj}j ∈ J → ∞, and so the proof is completed by taking each εj > 0 in such a way that

and choosing αj, Ωj > 0 accordingly for each j ∈ J.

Remark 5 Note that the neural-network architecture obtained in Theorem 7 has the following form in the case of a generic atlas. To obtain the estimate of f(x), the input x is first “pre-processed” by computing ϕj(x) for each j ∈ J such that x ∈ Uj, and then put through the corresponding RVFL network. However, using the Geometric Multi-Resolution Analysis approach from Allard et al. [42] (as we do in Section 3.4), one can construct an approximation (in an appropriate sense) of the atlas, with maps ϕj being linear. In this way, the pre-processing step can be replaced by the layer computing ϕj(x), followed by the RVFL layer fj. We refer the reader to Section 3.4 for the details.

The biggest takeaway from Theorem 7 is that the same asymptotic mean-square error behavior we saw in the RVFL network architecture of Theorem 5 holds for our RVFL-like construction on manifolds, with the added benefit that the input-to-hidden layer weights and biases are now d-dimensional random variables rather than N-dimensional. Provided the size of the atlas |J| is not too large, this significantly reduces the number of random variables that must be generated to produce a uniform approximation of .

One might expect to see a similar reduction in dimension dependence for the non-asymptotic case if the RVFL network construction of Section 3.3.1 is used. Indeed, our next theorem, which is the manifold-equivalent of Theorem 6, makes this explicit:

Theorem 8 Let be a smooth, compact d-dimensional manifold with finite atlas {(Uj, ϕj)}j ∈ J and . Fix any activation function ρ ∈ L1(ℝ)∩L∞(ℝ) such that ρ is κ-Lipschitz on ℝ for some κ > 0 and . For any ε > 0, there exist constants αj, Ωj > 0 for each j ∈ J such that the following holds. Suppose, for each j ∈ J and for k = 1, ..., nj, the random variables

are independently drawn from their associated distributions, and

Then, there exist hidden-to-output layer weights such that, for any

and

where , c > 0 is a numerical constant, and the sequences of RVFL networks defined by

satisfy

with probability at least 1−η.

Proof. See Section 3.5.5.

As alluded to earlier, an important implication of Theorems 7, 8 is that the random variables and are d-dimensional objects for each j ∈ J. Moreover, bounds for δj and nj now have superexponential dependence on the manifold dimension d instead of the ambient dimension N. Thus, introducing the manifold structure removes the dependencies on the ambient dimension, replacing them instead with the intrinsic dimension of and the complexity of the atlas {(Uj, ϕj)}j ∈ J.

Remark 6 The bounds on the covering radii δj and hidden layer nodes nj needed for each chart in Theorem 8 are not optimal. Indeed, these bounds may be further improved if one uses the local structure of the manifold, through quantities such as its curvature and reach. In particular, the appearance of |J| in both bounds may be significantly improved upon if the manifold is locally well-behaved.

3.4 Numerical simulations

In this section, we provide numerical evidence to support the result of Theorem 8. Let be a smooth, compact d-dimensional manifold. Since having access to an atlas for is not necessarily practical, we assume instead that we have a suitable approximation to . For our purposes, we will use a Geometric Multi-Resolution Analysis (GMRA) approximation of (see [42]; and also, e.g., [43] for a complete definition).

A GMRA approximation of provides a collection of centers and affine projections on ℝN such that, for each j ∈ {1, …, J}, the pairs define d-dimensional affine spaces that approximate with increasing accuracy in the following sense. For every , there exists and such that

holds whenever is sufficiently small.

In this way, a GMRA approximation of essentially provides a collection of approximate tangent spaces to . Hence, a GMRA approximation having fine enough resolution (i.e., large enough j) is a good substitution for an atlas. In practice, one must often first construct a GMRA from empirical data, assumed to be sampled from appropriate distributions on the manifold. Indeed, this is possible, and yields the so-called empirical GMRA, studied in Maggioni et al. [44], where finite-sample error bounds are provided. The main point is that given enough samples on the manifold, one can construct a good GMRA approximation of the manifold.

Let be a GMRA approximation of for refinement level j. Since the affine spaces defined by (cj,k, Pj,k) for each k ∈ {1, …, Kj} are d-dimensional, we will approximate f on by projecting it (in an appropriate sense) onto these affine spaces and approximating each projection using an RVFL network on ℝd. To make this more precise, observe that, since each affine projection acts on as Pj,kx = cj,k+Φj,k(x−cj,k) for some othogonal projection , for each k ∈ {1, …Kj}, we have

where is the compact singular value decomposition (SVD) of Φj,k (i.e., only the left and right singular vectors corresponding to non-zero singular values are computed). In particular, the matrix of right-singular vectors enables us to define a function , given by

which satisfies for all . By continuity of f and Equation 21, this means that for any ε > 0, there exists j ∈ ℕ such that for some k ∈ {1, …, Kj}. For such k ∈ {1, …, Kj}, we may therefore approximate f on the affine space associated with (cj,k, Pj,k) by approximating using a RFVL network of the form

where and are random input-to-hidden layer weights and biases (resp.) and the hidden-to-output layer weights are learned. Choosing the activation function ρ and random input-to-hidden layer weights and biases as in Theorem 8 then guarantees that is small with high probability whenever nj,k is sufficiently large.

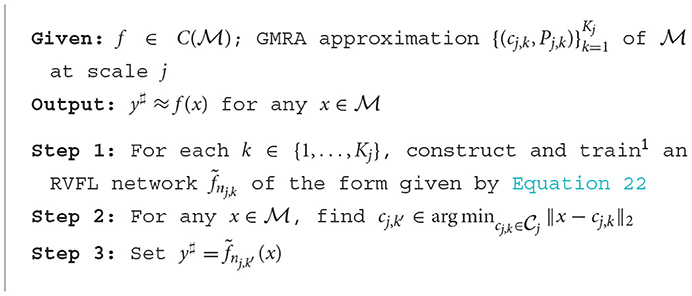

In light of the above discussion, we propose the following RVFL network construction for approximating functions : Given a GMRA approximation of with sufficiently high resolution j, construct and train RVFL networks of the form given by Equation 22 for each k ∈ {1, …, Kj}. Then, given and ε > 0, choose such that

and evaluate to approximate f(x). We summarize this algorithm in Algorithm 1. Since the structure of the GMRA approximation implies holds for our choice of [see 43], continuity of f and Lemma 5 imply that, for any ε > 0 and j large enough,

holds with high probability, provided satisfies the requirements of Theorem 8.

Remark 7 In the RVFL network construction proposed above, we require that the function f be defined in a sufficiently large region around the manifold. Essentially, we need to ensure that f is continuously defined on the set , where is the scale-j GMRA approximation

This ensures that f can be evaluated on the affine subspaces given by the GMRA.

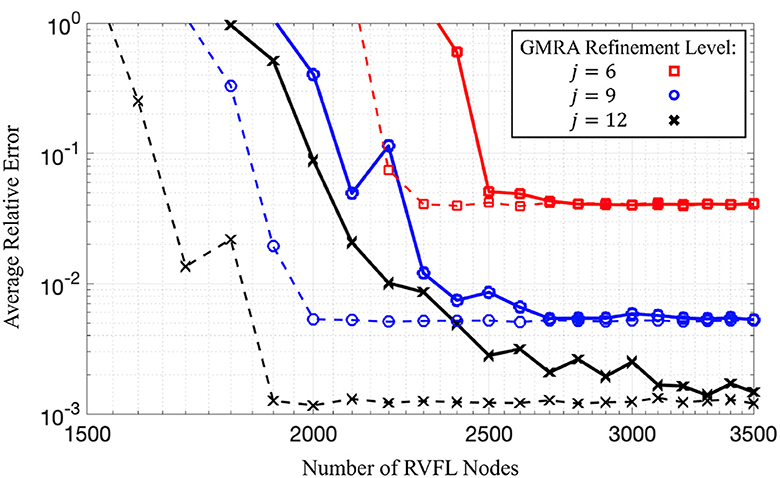

To simulate Algorithm 1, we take embedded in ℝ20 and construct a GMRA up to level jmax = 15 using 20,000 data points sampled uniformly from . Given j ≤ jmax, we generate RVFL networks as in Equation 22 and train them on using the training pairs , where Tk,j is the affine space generated by (cj,k, Pj,k). For simplicity, we fix nj,k = n to be constant for all k ∈ {1, …, Kj} and use a single, fixed pair of parameters α, Ω > 0 when constructing all RVFL networks. We then randomly select a test set of 200 points for use throughout all experiments. In each experiment (i.e., point in Figure 1), we use Algorithm 1 to produce an approximation of f(x). Figure 1 displays the mean relative error in these approximations for varying numbers of nodes n; to construct this plot, f is taken to be the exponential and ρ the hyperbolic secant function. Notice that for small numbers of nodes, the RVFL networks are not very good at approximating f, regardless of the choice of α, Ω > 0. However, the error decays as the number of nodes increases until reaching a floor due to error inherent in the GMRA approximation. Hence, as suggested by Theorem 3, to achieve a desired error bound of ε > 0, one needs to only choose a GMRA scale j such that the inherent error in the GMRA (which scales like 2−j) is less than ε, then adjust the parameters αj, Ωj, and nj,k accordingly.

Figure 1. Log-scale plot of average relative error for Algorithm 1 as a function of the number of nodes n in each RVFL network. Black (cross), blue (circle), and red (square) lines correspond to GMRA refinement levels j = 12, j = 9, and j = 6 (resp.). For each j, we fix αj = 2 and vary Ωj = 10, 15 (solid and dashed lines, resp.). Reconstruction error decays as a function of n until reaching a floor due to error in the GMRA approximation of . The code used to obtain these numerical results is available upon direct request sent to the corresponding author.

Remark 8 As we just mentioned, the error can only decay so far due to the resolution of the GMRA approximation. However, that is not the only floor in our simulation; indeed, the ε in Theorem 3 is determined by the αj's and Ωj's, which we kept fixed (see the caption of Figure 1). Consequently, the stagnating accuracy as n increases, as seen in Figure 1, is also predicted by Theorem 3. Since the solid and dashed lines seem to reach the same floor, the floor due to error inherent in the GMRA approximation seems to be the limiting error term for RVFL networks with large numbers of nodes.

Remark 9 Utilizing random inner weights and biases resulted in us needing to approximate the atlas to the manifold. To this end, knowing the computational complexity of the GMRA approximation would be useful in practice. As it turns out in Liao and Maggioni [45], calculating the GMRA approximation has computational complexity O(CdNmlog(m)), where m is the number of training data points and C > 0 is a numerical constant.

3.5 Proofs of technical lemmas

3.5.1 Proof of Lemma 3

Observe that hΩ defined in Equation 3 may be viewed as a multidimensional bump function; indeed, the parameter Ω > 0 controls the width of the bump. In particular, if Ω is allowed to grow very large, then hΩ becomes very localized near the origin. Objects that behave in this way are known in the functional analysis literature as approximate δ-functions:

Definition 2 A sequence of functions are called approximate (or nascent) δ-functions if

for all . For such functions, we write for all x ∈ ℝN, where δ0 denotes the N-dimensional Dirac δ-function centered at the origin.

Given φ ∈ L1(ℝN) with , one may construct approximate δ-functions for t > 0 by defining for all x ∈ ℝN [46]. Such sequences of approximate δ-functions are also called approximate identity sequences [47] since they satisfy a particularly nice identity with respect to convolution, namely, for all [see 47, Theorem 6.32]. In fact, such an identity holds much more generally.

Lemma 6 [46, Theorem 1.18] Let φ ∈ L1(ℝN) with and for t > 0 define for all x ∈ ℝN. If f ∈ Lp(ℝN) for 1 ≤ p < ∞ (or for p = ∞), then .

To prove Equation 4, it would suffice to have which is really just Lemma 6 in case p = ∞. Nonetheless, we present a proof by mimicking [46] for completeness. Moreover, we will use a part of proof in Remark 10 below.

Lemma 7 Let h ∈ L1(ℝn) with and define as in Equation 3 for all Ω > 0. Then, for all , we have

Proof. By symmetry of the convolution operator in its arguments, we have

Since a simple substitution yields it follows that

Finally, expanding the function hΩ, we obtain

where we have used the substitution z = Ωy. Taking limits on both sides of this expression and observing that

using the Dominated Convergence Theorem, we obtain

So, it suffices to show that, for all z ∈ ℝN,

To this end, let ε > 0 and z ∈ ℝN be arbitrary. Since , there exists r > 0 sufficiently large such that |f(x)| < ε/2 for all , where is the closed ball of radius r centered at the origin. Let so that for each we have both x and x−z/Ω in . Thus, both |f(x)| < ε/2 and |f(x−z/Ω)| < ε/2, implying that

Hence, we obtain

Now, as is a compact subset of ℝN, the continuous function f is uniformly continuous on , and so the remaining limit and supremum may be freely interchanged, whereby continuity of f yields

Since ε > 0 may be taken arbitrarily small, we have proved the result.

Remark 10 While Lemma 7 does the approximation we aim for, it gives no indication of how fast

decays in terms of Ω or the dimension N. Assuming h(z) = g(z(1))⋯g(z(N)) for some non-negative g (which is how we will choose h in Section 3.5.2) and f to be β-Hölder continuous for some fixed β ∈ (0, 1) yields that

where the third inequality follows from Jensen's inequality.

3.5.2 Proof of Lemma 4: the limit-integral representation

Let A ∈ C∞(ℝ) be any even function supported on s.t. ∥A∥2 = 1. Then, ϕ = A*A ∈ C∞(ℝ) is an even function supported on [−1, 1] s.t. ϕ(0) = 1. Lemma 3 implies that

uniformly in x ∈ K for any h ∈ L1(ℝN) satisfying We choose

which the reader may recognize as the (inverse) Fourier transform of . As we announced in Remark 10, h(z) = g(z(1))⋯g(z(N)), where (using the convolution theorem)

Moreover, since g is the Fourier transform of an even function, h is real-valued and also even. In addition, since ϕ is smooth, h decays faster than the reciprocal of any polynomial (as follows from repeated integration by parts and the Riemann–Lebesgue lemma), so h ∈ L1(ℝN). Thus, Fourier inversion yields

which justifies our application of Lemma 3. Expanding the right-hand side of Equation 23 (using the scaling property of the Fourier transform) yields that

because ϕ is even and supported on [−1, 1]. Since Equation 24 is an iterated integral of a continuous function over a compact set, Fubini's theorem readily applies, yielding

Since it follows that

where cosΩ is defined in Equation 5.

With the representation given by Equation 25 in hand, we now seek to reintroduce the general activation function ρ. To this end, since cosΩ ∈ Cc(ℝ)⊂C0(ℝ) we may apply the convolution identity given by Equation 4 with f replaced by cosΩ to obtain uniformly for all z ∈ ℝ, where hα(z) = αρ(αz). Using this representation of cosΩ in Equation 25, it follows that

holds uniformly for all x ∈ K. Since f is continuous and the convolution cosΩ*hα is uniformly continuous and uniformly bounded in α by ∥ρ∥1 (see below), the fact that the domain K×[−Ω, Ω]N is compact then allows us to bring the limit as α tends to infinity outside the integral in this expression via the Dominated Convergence Theorem, which gives us

uniformly for every x ∈ K. The uniform boundedness of the convolution follows from the fact that

where v = αu.

Remark 11 It should be noted that we are unable to swap the order of the limits in Equation 26, since cosΩ is not in C0(ℝ) when Ω is allowed to be infinite.

Remark 12 Complementing Remark 10, we will now elucidate how fast

decays in terms of α. Using the fact that Equation 27 and the triangle inequality allows us to bound the absolute difference above by

Since cosΩ is 1-Lipschitz, it follows that the above integral is bounded by

To complete this step of the proof, observe that the definition of cosΩ allows us to write

By substituting Equation 28 into Equation 26, we then obtain

uniformly for all x ∈ K, where . In this way, recalling that and bα(y, w, u): = −α(〈w, y〉+u) for y, w ∈ ℝN and u ∈ ℝ, we conclude the proof.

3.5.3 Proof of Lemma 5: Monte-Carlo integral approximation

The next step in the proof of Theorem 5 is to approximate the integral in Equation 7 using the Monte-Carlo method. To this end, let , , and be independent samples drawn uniformly from K, [−Ω, Ω]N, and , respectively, and consider the sequence of random variables defined by

for each x ∈ K, where we note that vol(K(Ω)) = (2Ω)Nπ(2L + 1)vol(K). If we also define

for x ∈ K and p ∈ ℕ, then we want to show that

as n → ∞, where the expectation is taken with respect to the joint distribution of the random samples , , and . For this, it suffices to find a constant C(f, ρ, α, Ω, N) < ∞ independent of n satisfying

Indeed, an application of Fubini's theorem would then yield

which implies Equation 30. To determine such a constant, we first observe by Theorem 4 that

where we define the variance term

for x ∈ K. Since ∥ϕ∥∞ = 1 (see Lemma 8 below), it follows that

for all y, w ∈ ℝN and u ∈ ℝ, where , we obtain the following simple bound on the variance term

Since we assume ρ ∈ L∞(ℝ), we then have

Substituting the value of vol(K(Ω)), we obtain

is a suitable choice for the desired constant.

Now that we have established Equation 30, we may rewrite the random variables In(x) in a more convenient form. To this end, we change the domain of the random samples to [−αΩ, αΩ]N and define the new random variables by bk: = −(〈wk, yk〉+αuk) for each k = 1, …, n. In this way, if we denote

for each k = 1, …, n, the random variables defined by

satisfy fn(x) = In(x) for every x ∈ K. Combining this with Equation 30, we have proved Lemma 5.

Lemma 8 ∥ϕ∥∞ = 1.

Proof. It suffices to prove that |ϕ(z)| ≤ 1 for all z ∈ ℝ because ϕ(0) = 1. By Cauchy–Schwarz,

because A is even.

3.5.4 Proof of Theorem 1 when ρ′ ∈ L1(ℝ)∩L∞(ℝ)

Let with K: = supp(f) and suppose ε > 0 is fixed. Take the activation function ρ:ℝ → ℝ to be differentiable with ρ′ ∈ L1(ℝ)∩L∞(ℝ). We wish to show that there exists a sequence of RVFL networks defined on K which satisfy the asymptotic error bound

as n → ∞. The proof of this result is a minor modification of second step in the proof of Theorem 5.

If we redefine hα(z) as αρ′(αz), then Equation 26 plainly still holds and Equation 28 reads

Recalling the definition of cosΩ in Equation 5 and integrating by parts, we obtain

for all z ∈ ℝ, where and sinΩ:ℝ → [−1, 1] is defined analogously to Equation 5. Substituting this representation of (cosΩ*hα)(z) into Equation 26 then yields

uniformly for every x ∈ K. Thus, if we replace the definition of Fα, Ω in Equation 6 by

for y, w ∈ ℝN and u ∈ ℝ, we again obtain the uniform representation given by Equation 7 for all x ∈ K. The remainder of the proof proceeds from this point exactly as in the proof of Theorem 5.

3.5.5 Proof of Theorem 8

We wish to show that there exist sequences of RVFL networks defined on ϕj(Uj) for each j ∈ J, which together satisfy the error bound

with probability at least 1−η for {nj}j ∈ J sufficiently large. The proof is obtained by showing that

holds uniformly for with high probability.

We begin as in the proof of Theorem 7 by applying the representation given by Equation 17 for (f on each chart (Uj, ϕj), which gives us

for all . Now, since we have already seen that for each j ∈ J, Theorem 6 implies that for any εj > 0, there exist constants αj, Ωj > 0 and hidden-to-output layer weights for each j ∈ J such that for any

we have

uniformly for all z ∈ ϕj(Uj) with probability at least 1−ηj, provided the number of nodes nj satisfies

where c > 0 is a numerical constant and Indeed, it suffices to choose

for each k = 1, …, nj, where

for each j ∈ J. Combined with Equation 32, choosing δj and nj satisfying Equations 33, 34, respectively, then yields

for all with probability at least . Since we require that Equation 31 holds for all with probability at least 1−η, the proof is then completed by choosing {εj}j ∈ J and {ηj}j ∈ J, such that

In particular, it suffices to choose

and ηj = η/|J| for each j ∈ J, so that Equations 33, 34 become

as desired.

4 Discussion

The central topic of this study is the study of the approximation properties of a randomized variation of shallow neural networks known as RVFL. In contrast with the classical single-layer neural networks, training of an RVFL involves only learning the output weights, while the input weights and biases of all the nodes are selected at random from an appropriate distribution and stay fixed throughout the training. The main motivation for studying the properties of such networks is as follows:

1. Random weights are often utilized as an initialization for a NN training procedure. Thus, establishing the properties of the RVFL networks is an important first step toward understanding how random weights are transformed during training.

2. Due to their much more computationally efficient training process, the RVFL networks proved to be a valuable alternative to the classical SLFNs. They were successfully used in several modern applications, especially those that require frequent re-training of a neural network [20, 26, 27].

Despite their practical and theoretical importance, results providing rigorous mathematical analysis of the properties of RVFLs are rare. The work of Igelnik and Pao [16] showed that RVFL networks are universal approximators for the class of continuous, compactly supported functions and established the asymptotic convergence rate of the expected approximation error as a function of the number of nodes in the hidden layer. While this result served as a theoretical justification for using RVFL networks in practice, a close examination led us to the conclusion that the proofs in Igelnik and Pao [16] contained several technical errors.

In this study, we offer a revision and a modification of the proof methods from Igelnik and Pao [16] that allow us to prove a corrected, slightly weaker version of the result announced by Igelnik and Pao. We further build upon their work and show a non-asymptotic probabilistic (instead of on average) approximation result, which gives an explicit bound on the number of hidden layer nodes that are required to achieve the desired approximation accuracy with the desired level of certainty (that is, with high enough probability). In addition to that, we extend the obtained result to the case when the function is supported on a compact, low-dimensional sub-manifold of the ambient space.

While our study closes some of the gaps in the study of the approximation properties of RVFL, we believe that it just starts the discussion and opens many directions for further research. We briefly outline some of them here.

In our results, the dependence of the required number n of the nodes in the hidden layer on the dimension N of the domain is superexponential, which is likely an artifact of the proof methods we use. We believe this dependence can be improved to be exponential by using a different, more refined approach to the construction of the limit-integral representation of a function. A related interesting direction for future research is to study how the bound on n changes for more restricted classes of (e.g., smooth) functions.

Another important direction that we did not discuss in this study is learning the output weights and studying the robustness of the RVFL approximation to the noise in the training data. Obtaining provable robustness guarantees for an RVFL training procedure would be a step toward the robustness analysis of neural networks.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

DN: Writing – original draft, Writing – review & editing. AN: Writing – original draft, Writing – review & editing. RS: Writing – original draft, Writing – review & editing. PS: Writing – original draft, Writing – review & editing. OS: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. DN was partially supported by NSF DMS 2108479 and NSF DMS 2011140. RS was partially supported by a UCSD senate research award and a Simons fellowship. PS was partially supported by NSF DMS 1909457.

Acknowledgments

The authors thank F. Krahmer, S. Krause-Solberg, and J. Maly for sharing their GMRA code, which they adapted from that provided by M. Maggioni.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

The views expressed in this article are those of the authors and do not reflect the official policy or position of the U.S. Air Force, Department of Defense, or U.S. Government.

Footnotes

1. ^The construction and training of RVFL networks is left as a “black box” procedure. How to best choose a specific activation function ρ(z) and train each RVFL network given by Equation 22 is outside of the scope of this study. The reader may, for instance, select from the range of methods available for training neural networks.

References

1. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems. (2012). p. 1097–105.

2. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. (2015). p. 1–9. doi: 10.1109/CVPR.2015.7298594

3. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision And Pattern Recognition. (2016). p. 770–778. doi: 10.1109/CVPR.2016.90

4. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. (2017). p. 4700–4708. doi: 10.1109/CVPR.2017.243

5. Yang Y, Zhong Z, Shen T, Lin Z. Convolutional neural networks with alternately updated clique. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. (2018). p. 2413–2422. doi: 10.1109/CVPR.2018.00256

6. Barron AR. Universal approximation bounds for superpositions of a sigmoidal function. IEEE Trans Inf Theory. (1993) 39:930–45. doi: 10.1109/18.256500

7. Candés EJ. Harmonic analysis of neural networks. Appl Comput Harm Analysis. (1999) 6:197–218. doi: 10.1006/acha.1998.0248

8. Vershynin R. Memory capacity of neural networks with threshold and ReLU activations. arXiv preprint arXiv:200106938. (2020).

9. Baldi P, Vershynin R. The capacity of feedforward neural networks. Neural Netw. (2019) 116:288–311. doi: 10.1016/j.neunet.2019.04.009

10. Huang GB, Babri HA. Upper bounds on the number of hidden neurons in feedforward networks with arbitrary bounded nonlinear activation functions. IEEE Trans Neural Netw. (1998) 9:224–9. doi: 10.1109/72.655045

11. Suganthan PN. Letter: On non-iterative learning algorithms with closed-form solution. Appl Soft Comput. (2018) 70:1078–82. doi: 10.1016/j.asoc.2018.07.013

12. Olson M, Wyner AJ, Berk R. Modern Neural Networks Generalize on Small Data Sets. In: Proceedings of the 32Nd International Conference on Neural Information Processing Systems. NIPS'18. Curran Associates Inc. (2018). p. 3623–3632.

13. Schmidt WF, Kraaijveld MA, Duin RPW. Feedforward neural networks with random weights. In: Proceedings 11th IAPR International Conference on Pattern Recognition. Vol. II. Conference B: Pattern Recognition Methodology and Systems. (1992). p. 1–4.

14. Te Braake HAB, Van Straten G. Random activation weight neural net (RAWN) for fast non-iterative training. Eng Appl Artif Intell. (1995) 8:71–80. doi: 10.1016/0952-1976(94)00056-S

15. Pao YH, Takefuji Y. Functional-link net computing: theory, system architecture, and functionalities. Computer. (1992) 25:76–9. doi: 10.1109/2.144401

16. Igelnik B, Pao YH. Stochastic choice of basis functions in adaptive function approximation and the functional-link net. IEEE Trans Neur Netw. (1995) 6:1320–9. doi: 10.1109/72.471375

17. Huang GB, Zhu QY, Siew CK. Extreme learning machine: theory and applications. Neurocomputing. (2006) 70:489–501. doi: 10.1016/j.neucom.2005.12.126

18. Pao YH, Park GH, Sobajic DJ. Learning and generalization characteristics of the random vector functional-link net. Neurocomputing. (1994) 6:163–80. doi: 10.1016/0925-2312(94)90053-1

19. Pao YH, Phillips SM. The functional link net and learning optimal control. Neurocomputing. (1995) 9:149–64. doi: 10.1016/0925-2312(95)00066-F

20. Chen CP, Wan JZ, A. rapid learning and dynamic stepwise updating algorithm for flat neural networks and the application to time-series prediction. IEEE Trans Syst Man Cybern B. (1999) 29:62–72. doi: 10.1109/3477.740166

21. Park GH, Pao YH. Unconstrained word-based approach for off-line script recognition using density-based random-vector functional-link net. Neurocomputing. (2000) 31:45–65. doi: 10.1016/S0925-2312(99)00149-6

22. Zhang L, Suganthan PN. Visual tracking with convolutional random vector functional link network. IEEE Trans Cybern. (2017) 47:3243–53. doi: 10.1109/TCYB.2016.2588526

23. Zhang L, Suganthan PN. Benchmarking ensemble classifiers with novel co-trained kernel ridge regression and random vector functional link ensembles [research frontier]. IEEE Comput Intell Mag. (2017) 12:61–72. doi: 10.1109/MCI.2017.2742867

24. Katuwal R, Suganthan PN, Zhang L. An ensemble of decision trees with random vector functional link networks for multi-class classification. Appl Soft Comput. (2018) 70:1146–53. doi: 10.1016/j.asoc.2017.09.020

25. Vuković N, Petrović M, Miljković Z. A comprehensive experimental evaluation of orthogonal polynomial expanded random vector functional link neural networks for regression. Appl Soft Comput. (2018) 70:1083–1096. doi: 10.1016/j.asoc.2017.10.010

26. Tang L, Wu Y, Yu L, A. non-iterative decomposition-ensemble learning paradigm using RVFL network for crude oil price forecasting. Appl Soft Comput. (2018) 70:1097–108. doi: 10.1016/j.asoc.2017.02.013

27. Dash Y, Mishra SK, Sahany S, Panigrahi BK. Indian summer monsoon rainfall prediction: A comparison of iterative and non-iterative approaches. Appl Soft Comput. (2018) 70:1122–34. doi: 10.1016/j.asoc.2017.08.055

28. Henríquez PA, Ruz G. Twitter sentiment classification based on deep random vector functional link. In: 2018 International Joint Conference on Neural Networks (IJCNN). IEEE (2018). p. 1–6. doi: 10.1109/IJCNN.2018.8489703

29. Katuwal R, Suganthan PN, Tanveer M. Random vector functional link neural network based ensemble deep learning. arXiv preprint arXiv:190700350. (2019).

30. Zhang Y, Wu J, Cai Z, Du B, Yu PS. An unsupervised parameter learning model for RVFL neural network. Neural Netw. (2019) 112:85–97. doi: 10.1016/j.neunet.2019.01.007

31. Hornik K. Approximation capabilities of multilayer feedforward networks. Neural Netw. (1991) 4:251–7. doi: 10.1016/0893-6080(91)90009-T

32. Leshno M, Lin VY, Pinkus A, Schocken S. Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw. (1993) 6:861–7. doi: 10.1016/S0893-6080(05)80131-5

33. Li JY, Chow WS, Igelnik B, Pao YH. Comments on “Stochastic choice of basis functions in adaptive function approximation and the functional-link net” [with reply]. IEEE Trans Neur Netw. (1997) 8:452–4. doi: 10.1109/72.557702

34. Burkhardt DB, San Juan BP, Lock JG, Krishnaswamy S, Chaffer CL. Mapping phenotypic plasticity upon the cancer cell state landscape using manifold learning. Cancer Discov. (2022) 12:1847–59. doi: 10.1158/2159-8290.CD-21-0282

35. Mitchell-Heggs R, Prado S, Gava GP, Go MA, Schultz SR. Neural manifold analysis of brain circuit dynamics in health and disease. J Comput Neurosci. (2023) 51:1–21. doi: 10.1007/s10827-022-00839-3

36. Dick J, Kuo FY, Sloan IH. High-dimensional integration: the quasi-Monte Carlo way. Acta Numerica. (2013) 22:133–288. doi: 10.1017/S0962492913000044

37. Ledoux M. The Concentration of Measure Phenomenon. Providence, RI: American Mathematical Soc. (2001).

38. Massart P. About the constants in Talagrand's deviation inequalities for empirical processes. Technical Report, Laboratoire de statistiques, Universite Paris Sud. (1998).

39. Talagrand M. New concentration inequalities in product spaces. Invent Mathem. (1996) 126:505–63. doi: 10.1007/s002220050108

40. Shaham U, Cloninger A, Coifman RR. Provable approximation properties for deep neural networks. Appl Comput Harmon Anal. (2018) 44:537–57. doi: 10.1016/j.acha.2016.04.003

41. Tu LW. An Introduction to Manifolds. New York: Springer. (2010). doi: 10.1007/978-1-4419-7400-6_3

42. Allard WK, Chen G, Maggioni M. Multi-scale geometric methods for data sets II: geometric multi-resolution analysis. Appl Comput Harmon Anal. (2012) 32:435–62. doi: 10.1016/j.acha.2011.08.001

43. Iwen MA, Krahmer F, Krause-Solberg S, Maly J. On recovery guarantees for one-bit compressed sensing on manifolds. Discr Comput Geom. (2018) 65:953–998. doi: 10.1109/SAMPTA.2017.8024465

44. Maggioni M, Minsker S, Strawn N. Multiscale dictionary learning: non-asymptotic bounds and robustness. J Mach Learn Res. (2016) 17:43–93.

45. Liao W, Maggioni M. Adaptive Geometric Multiscale Approximations for Intrinsically Low-dimensional Data. J Mach Learn Res. (2019) 20:1–63.

46. Stein EM, Weiss G. Introduction to Fourier Analysis on Euclidean Spaces. London: Princeton University Press (1971). doi: 10.1515/9781400883899

Keywords: machine learning, feed-forward neural networks, function approximation, smooth manifold, random vector functional link

Citation: Needell D, Nelson AA, Saab R, Salanevich P and Schavemaker O (2024) Random vector functional link networks for function approximation on manifolds. Front. Appl. Math. Stat. 10:1284706. doi: 10.3389/fams.2024.1284706

Received: 28 August 2023; Accepted: 21 March 2024;

Published: 17 April 2024.

Edited by:

HanQin Cai, University of Central Florida, United StatesReviewed by:

Jingyang Li, The University of Hong Kong, Hong Kong SAR, ChinaDong Xia, Hong Kong University of Science and Technology, Hong Kong SAR, China

Copyright © 2024 Needell, Nelson, Saab, Salanevich and Schavemaker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Deanna Needell, deanna@math.ucla.edu

Deanna Needell

Deanna Needell Aaron A. Nelson

Aaron A. Nelson Rayan Saab

Rayan Saab Palina Salanevich

Palina Salanevich Olov Schavemaker

Olov Schavemaker