Young and old persons' subjective feelings when facing with a non-human computer-graphics-based agent's emotional responses in consideration of differences in emotion perception

- 1Research & Development Group, Hitachi, Ltd., Kokubunji, Japan

- 2Faculty of Sociology, Toyo University, Tokyo, Japan

- 3Graduate School of Humanities and Sociology, The University of Tokyo, Tokyo, Japan

Virtual agents (computer-graphics-based agents) have been developed for many purposes, such as supporting the social life, mental care, education, and entertainment of both young and old individuals. Promoting affective communication between young/old users and agents requires clarifying the subjective feelings induced by an agent's expressions. However, an emotional response model for agents that induces positive feelings is not yet fully understood because emotion perception differs between young and old adults. We investigated the subjective feelings induced when facing a non-human computer-graphics-based agent's emotional responses, taking into account differences in emotion perception between young and old adults. To emphasize the differences in emotion perception, the agent's expressions were developed by adopting exaggerated human expressions. The differences between young and old participants in the perception of happiness, sadness, and anger were then identified through a preliminary experiment. Considering these differences, the feelings induced when facing the agent's expressions were analyzed for three emotion sources (the participant, the agent, and other), where the emotion source was defined as the subject of and responsibility for the induced emotion. The subjective feelings were evaluated using a subjective rating task with 139 young and 211 old participants. In both the young and old groups, the agent response that induced the most positive feelings was happy when participants felt happy and sad when participants felt sad, regardless of the emotion source. When participants felt angry, the response that induced the most positive feelings was sad when the emotion source was the participant or the agent, and angry when the emotion source was other. The emotion types of the responses that induced the most positive feelings were the same for the young and old participants, and the way to induce the most positive feelings was not always to mimic the user's emotional expression, although mimicry is a typical tendency in human responses. These findings suggest that a common agent response model can be developed for young and old people by combining an emotional mimicry model with a response model to induce positive feelings in users and promote natural and affective communication, considering age characteristics of emotion perception.

1 Introduction

As artificial intelligence and robotics technologies have advanced, various communicative robots and virtual agents (computer-graphics-based agents) have been developed in a variety of fields for a wide range of applications. Computer-graphics-based agents have the potential to be widely used by many people because their applications can be installed in common devices such as smartphones, tablets, and PCs. Such agents also have an advantage in making expressions without physical restriction including exaggerated emotional expressions. Non-human agents have been developed for many purposes traditionally handled by human agents, such as supporting the social life of individuals, mental care, education, and entertainment (Kidd et al., 2006; Miklósi and Gácsi, 2012). Most agents have been developed with human or non-human emotional expressions as a means to promote affective communication with various types of users such as young and old people. To achieve affective communication between users and agents, two essential factors need to be considered when choosing the expressions to be used by an agent to express emotion: the user's emotion-perception characteristics and current emotional state.

Understanding the characteristics of the user's emotion perception when observing an agent's expression would enable more natural expressions to be chosen for the user, leading to more affective communication with a wide range of users. In human-to-human communication, emotion perception varies depending on the characteristics of each person (Orgeta, 2010; Tu et al., 2018), which makes it difficult to communicate seamlessly with different people by using a single, uniform set of expressions. Age in particular has a significant effect on emotion perception from human facial expressions (Isaacowitz et al., 2007; Khawar and Buswell, 2014). Although the effect of aging on emotion perception is not a simple decline, in many cases, old people are worse than young people at identifying happiness, sadness, anger, fear, and surprise in human facial expressions (Isaacowitz et al., 2007; Ruffman et al., 2008; Sze et al., 2012; Khawar and Buswell, 2014; Goncalves et al., 2018; Hayes et al., 2020).

Previous studies have also explored emotion perception in robots and computer-graphics-based agents (Jung, 2017; Moltchanova and Bartneck, 2017; Hortensius et al., 2018), and they have demonstrated an effect of age on the perception of emotional expressions of robots and agents (Beer et al., 2009, 2015; Pavic et al., 2021). Beer et al. (2009, 2015) investigated the effect of age on emotion perception by using human-like facial expressions in an agent with a robotic head and found age-related differences in emotion perception for agent facial expressions as well as human facial expressions. They used agents that resembled a human face, and the agents' facial expressions of emotion were based on Ekman's facial expressions of emotion (Ekman et al., 1980). Although the effect of age on perceiving an agent's emotional expression is not fully understood, age should be considered when analyzing emotional expressions in human-to-agent communication, and for each agent it is necessary to identify the emotional expressions that are properly perceived, in consideration of differences in emotion perception.

In addition to the user's emotion perception, the effect of an agent's emotional expression on a user's subjective feelings depends on the user's emotional state when communicating with the agent. Therefore, understanding how this effect depends on the user's emotional state could help in the development of expressions that induce positive feelings in a user, which would lead to more positive communication. In human-to-human communication, mimicry of the partner's behavior such as facial expressions, postures, and body motions has been shown to elicit positive feelings (Lundqvist, 1995; Chartrand and Bargh, 1999; Catmur and Heyes, 2013; Numata et al., 2020). However, it is not clear whether mimicry of negative emotional expressions elicits positive or negative feelings in a person. On the one hand, mimicry has a positive effect on subjective feelings (Chartrand and Bargh, 1999; Catmur and Heyes, 2013), and people automatically tend to mimic emotional expressions even if they are negative emotional expressions (Lundqvist, 1995). On the other hand, expressions of negative emotions can induce negative emotions in the partner (Friedman et al., 2004; Kulesza et al., 2015). An imbalance in non-verbal communication can also be observed during conversations that are subjectively rated as positive (Numata et al., 2021). In fact, emotional mimicry is not merely an automatic response of facial expressions in humans; facial expressions are also used as a means to communicate social intentions. Emotional mimicry is modulated by several factors including emotional feelings and social intention (Seibt et al., 2015; Hess, 2020). Although many studies find anger mimicry, it is not found as consistently in the literature as mimicry of happy expressions (Seibt et al., 2015). This is because emotional mimicry occurs when a person has the social intention of affiliation, and mimicry of anger expressions often conflicts with that affiliative intention (Seibt et al., 2015; Hess, 2020). Given this contradiction, an emotional response model for agents that induces positive feelings is not yet fully understood, and we assumed that mimicry of emotional expressions by agents is not always the way to induce the most positive feelings in users.

The social context during interaction between a user and an agent plays an important role in determining which emotional responses of agents induce positive feelings (Ochs et al., 2008). A user's emotional state depends on the source of the emotion, even among users with the same emotion type (Imbir, 2013). The emotion source is defined based on self/other control, which is one of the appraisal dimensions in the cognitive appraisal theory (Roseman, 1984; Scherer, 1984; Smith and Ellsworth, 1985). The cognitive appraisal theory assumes that emotions are induced by the evaluation of events along appraisal dimensions. Based on this theory, we previously integrated the appraisal dimensions associated with emotional events empirically and extracted appraisal dimensions including self/other control (Mitani and Karasawa, 2005). In that study, self/other control was extracted as a factor related to the subject and responsibility of self and others. By extending the definition of self/other control, we define three emotion sources in this study: the user, the agent, and other. Thus, it can be assumed that a user's emotional state is affected not only by emotion type but also by emotion source. Development of an agent-response model that is based on the user's subjective feelings and takes into account emotion type and emotion source should be useful in achieving affective communication between users and agents. A versatile agent-response model is needed that uses emotional expressions in which most users recognize the same emotion type, considering the different characteristics of emotion perception.

The purpose of this study was to develop an agent response model to induce positive feelings that is based on the user's subjective feelings and takes into account the user's emotion type and emotion source in consideration of age differences in emotion perception. Various exaggerated emotional expressions for a non-human computer-graphics-based agent were developed to emphasize age differences in emotion perception, and then two experiments (a preliminary experiment and a main experiment) were conducted involving young and old participants. The preliminary experiment was conducted to investigate emotion perception by using the expressions and to identify the expressions that most young and most old participants recognized as the same emotion type. The main experiment was conducted to investigate how subjective feelings depend on the emotion type and emotion source by using the identified expressions. Although this study was not preregistered, we set hypotheses for the experiments. In the preliminary experiment, we hypothesized that the expressions that most participants recognized as the same emotion type would differ between the young and old participants. In the main experiment, we hypothesized that the emotion types of the responses that induce the most positive feelings would be the same for young and old participants once differences in emotion perception are taken into account, and that mimicry of emotional expressions by agents would not always be the way to induce the most positive feelings in users.

2 Material and methods

2.1 Computer-graphics-based agent

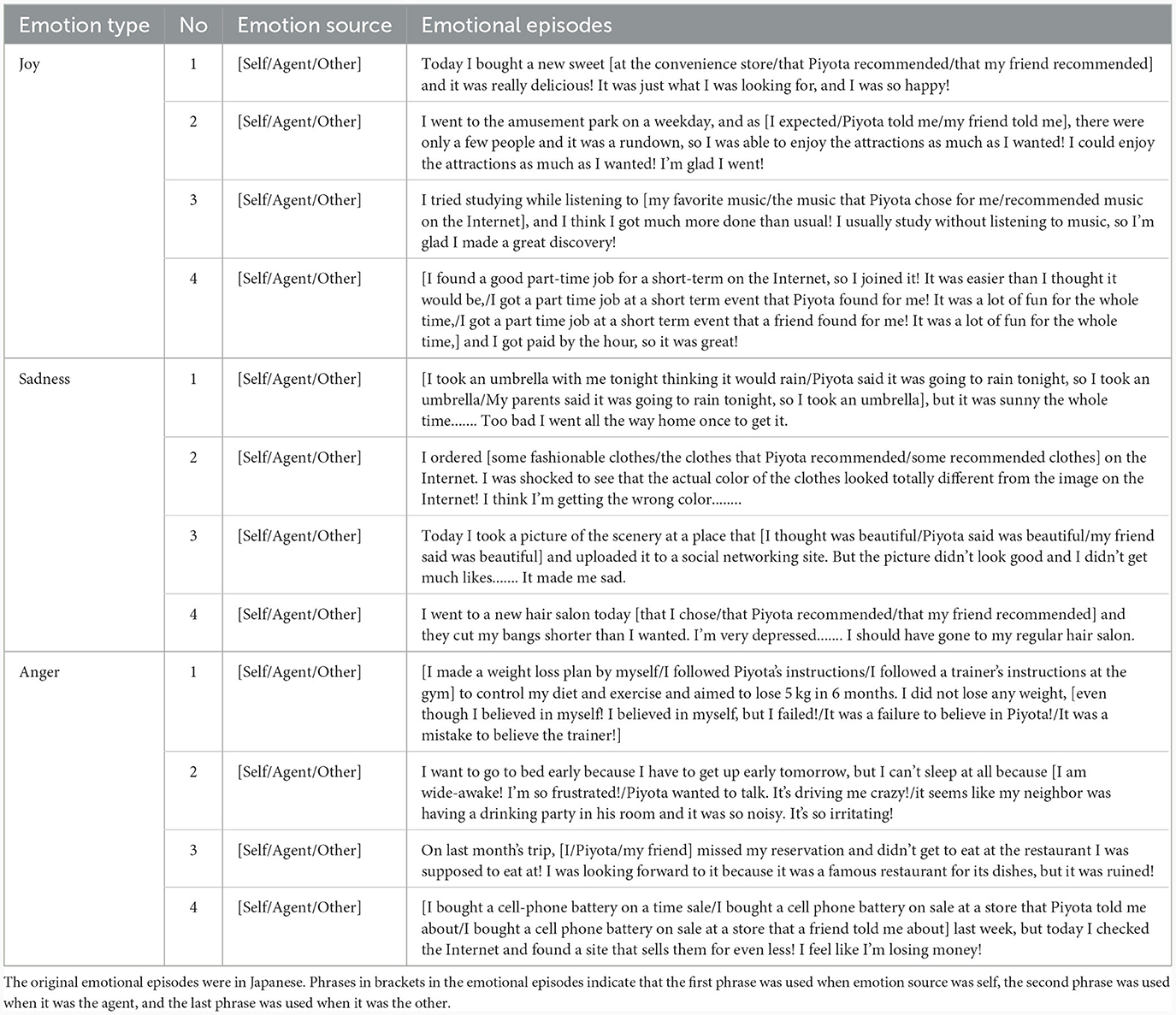

A computer-graphics-based agent called Piyota, which looks like a chick (baby chicken), was used in this study. Piyota has disproportionately large eyes, a quality known to induce affinity in humans (Heike et al., 2011). Since it should be effective to develop various emotional expressions to extract the different characteristics of emotion perception between young and old users, the expressions were developed by adopting exaggerated versions of various human emotional expressions. The expressions of Piyota were developed on the basis of Plutchik's wheel of emotions (Plutchik, 2001), which consists of eight primary emotions (joy, trust, fear, surprise, sadness, disgust, anger, and anticipation). Each emotion has three levels of intensity. Twenty-four emotional facial expressions (eight emotions with three levels of intensity each) were purpose-developed for Piyota, as shown in Figure 1. Since exaggerated expressions of a human agent induce a feeling of strangeness (Mäkäräinen et al., 2014), a non-human agent, such as Piyota, has an advantage in adopting exaggerated human expressions. We assumed that adopting exaggerated human expressions enabled the participants to easily recognize the agent's emotion depending on the characteristics of emotion perception. For instance, the agent's mouth did not open horizontally for the expression of joy whereas a person's mouth opens widely and horizontally in Ekman's expressions (Ekman et al., 1980). Although the agent's expressions were more human-like and less chick-like, they extended the diversity in agent expressions from previous studies (Beer et al., 2009, 2015).

Figure 1. Exaggerated expressions of Piyota computer-graphics-based agent used in this study. They are based on Plutchik's wheel of emotion.

Because these expressions were developed under the guidance of professional animation designers, it was expected that most people would easily recognize and understand them. However, subjectivity is inevitable when working with handmade expressions, so the expressions did not always express the target emotion. We extracted properly perceived expressions for each emotion in the preliminary experiment, which then enabled us to compare the subjective feelings between the young and old participants in the main experiment.

2.2 Preliminary experiment (emotion perception)

2.2.1 Design

The purpose of the preliminary experiment was to demonstrate the different characteristics of emotion perception between young and old participants and identify the agent expressions that most young and old participants recognized as the same emotion type. We assumed that the characteristics of emotion perception depended on the participant's age, as assumed in previous studies (Beer et al., 2009, 2015). We also assumed that the expressions that achieved the highest matching rates differed between the two groups. These assumptions were verified by comparing emotion perception between the young and old participants. The protocol was approved by the ethical committee of the authors' institution. The data were obtained in accordance with the standards of the internal review board of the authors' institution, following receipt of the participants' informed consent.

2.2.2 Procedure

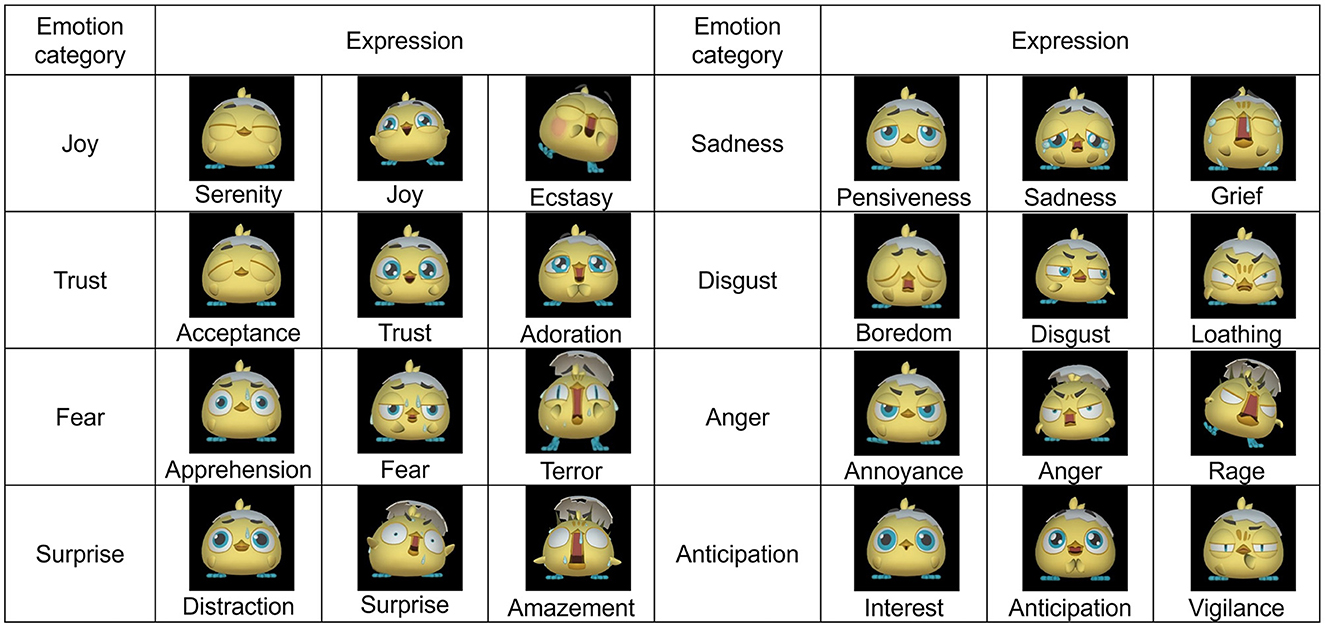

A form with 24 forced-choice tasks, each offering seven alternatives, was used to identify the agent expressions that most young and most old participants recognized as the same emotion type by using the 24 developed expressions of the computer-graphics-based agent. For each task, the participants were asked to identify the emotion conveyed in the agent's expression by choosing one of seven emotions (joy, sympathy, fear, surprise, sadness, disgust, and anger). They were instructed as follows: “You will be presented with character non-human agents with various expressions. For each expression, please choose from seven options what emotion you feel the agent is feeling. Please choose intuitively without thinking too much.” Figure 2 shows an example task. The task was performed by using a paper form; thus, the static images of agent expressions (approximately 24 mm × 24 mm) were presented on paper. Because the task-instruction text was approximately 4 mm (12 pt) in size and we confirmed that no participant gave uniform selections (i.e., answers of only one type), we considered the size unlikely to be a problem even for the old participants. There was no time limit. Since it was quite difficult to distinguish 24 emotion types on the basis of Plutchik's wheel of emotion, we set the choices by using consolidated emotion types. Selection of the emotions used was based on Ekman's facial action coding system (Ekman et al., 1980): the expressions of joy, fear, surprise, sadness, disgust, and anger were easy to understand. Sympathy was added because the effect of sympathy on human-agent communication has been verified in previous studies (Hasler et al., 2014; Macedonia et al., 2014), and expressing sympathy is useful for promoting affective communication in human-agent communication. Whereas it is difficult to recognize sympathy from facial expressions only (Keltner and Buswell, 1996), non-verbal behavior would improve recognition (Hertenstein et al., 2006). We thus anticipated that studying non-human exaggerated expressions of sympathy in an agent would contribute to the understanding of the recognition of sympathy and that expressions conveying sympathy might be found among the agent's expressions.

Figure 2. Example task, including instructions, for emotion-perception experiment. Actual instructions were in Japanese.

2.2.3 Participants

Sixty-two young Japanese (34 men, 28 women) and 39 old Japanese (13 men, 26 women) took part in the preliminary experiment. The average age ± standard deviation of the young participants was 21.5 ± 3.3 years (range: 20 to 30) and that of the old participants was 83.1 ± 8.5 years (range: 63 to 103). The old participants needed no or a low level of care and lived independently with no or light nursing care. The sample size was set to be no smaller than those in previous studies in the field of emotion perception (Goncalves et al., 2018), and the participants were recruited widely and simultaneously from various locations, including a university and elderly care facilities.

2.2.4 Data analysis

The number of times an emotion was selected for each agent expression (emotion-selection distribution) was derived using the emotion-perception data. The accuracy for matching pre-specified emotions on Plutchik's wheel of emotions and consistency for matching within group were then calculated and compared between the young and old participants.

The accuracy for matching pre-specified emotions was the rate at which the participants' emotion perception matched that in Plutchik's wheel of emotion. Although the agent expressions did not always convey the target emotion on Plutchik's wheel of emotions, comparing the accuracy between the young and old participants should nevertheless be useful to demonstrate different characteristics of emotion perception between them. The accuracy was calculated as follows. First, the emotion selection distribution for each expression was derived. Next, the numbers of expressions selected by the participants were grouped by emotion type on Plutchik's wheel of emotion. Finally, the ratios of successful matches were calculated. The consistency of matching within a group was also calculated for the agent expressions that most participants recognized as the same emotion type. That is, the agent expressions that represented the maximum consistency for each emotion type were identified for the young and old participants. If the rate of consistency for an emotion type was more than 75% for both the young and old participants, the identified agent expressions for that type were used in the main experiment.
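The selection rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names and the data layout are hypothetical, and all counts are made up for the example except the "joy" counts for the "ecstasy" expression, which follow from the rates reported in Section 3.1 (61 of 62 young and 13 of 39 old participants).

```python
from typing import Dict, Optional, Tuple

# counts[expression][emotion] = number of participants who chose `emotion`
# for that expression (hypothetical layout).
Counts = Dict[str, Dict[str, int]]


def max_consistency(counts: Counts, n: int, emotion: str) -> Tuple[str, float]:
    """Return the expression most often labeled `emotion` and its selection rate."""
    best = max(counts, key=lambda expr: counts[expr].get(emotion, 0))
    return best, counts[best].get(emotion, 0) / n


def select_expressions(young: Counts, n_young: int, old: Counts, n_old: int,
                       emotion: str, threshold: float = 0.75
                       ) -> Optional[Dict[str, str]]:
    """Keep an emotion type only if both groups exceed the consistency threshold."""
    y_expr, y_rate = max_consistency(young, n_young, emotion)
    o_expr, o_rate = max_consistency(old, n_old, emotion)
    if y_rate > threshold and o_rate > threshold:
        return {"young": y_expr, "old": o_expr}
    return None


# Illustrative counts: only the "joy" counts for "ecstasy" come from the paper;
# everything else is made up for the example.
young = {"ecstasy": {"joy": 61, "surprise": 1},
         "joy": {"joy": 55, "sympathy": 7}}
old = {"ecstasy": {"joy": 13, "surprise": 26},
       "joy": {"joy": 33, "sympathy": 6}}

print(select_expressions(young, 62, old, 39, "joy"))
print(select_expressions(young, 62, old, 39, "surprise"))
```

Note that the rule allows the selected expression to differ between the two groups, which is why the sketch returns one expression per group rather than a single shared one.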

Accuracy was used to compare the characteristics of emotion perception between young and old participants. To evaluate this accuracy, each emotion selection distribution for the expressions was statistically compared by emotion type by using Fisher's exact test. The statistical significance level was set at p < 0.05.
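To make the test concrete, the following sketch implements a two-sided Fisher's exact test for a 2 × 2 table using only the Python standard library. It is a simplification and an assumption on our part: the study compared full emotion-selection distributions (seven response options) by emotion type, whereas this example collapses the responses for a single expression into "chose joy" versus "chose another emotion". The function name is hypothetical; the counts follow from the rates reported for the "ecstasy" expression in Section 3.1.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for the table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell given the margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum every table that is no more likely than the observed one
    # (small tolerance guards against floating-point ties).
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))


# "Ecstasy" expression: 61 of 62 young and 13 of 39 old participants chose joy.
p = fisher_exact_2x2(61, 1, 13, 26)
print(p < 0.05)
```

For tables larger than 2 × 2, as in the actual analysis, a statistics package that supports r × c exact tests would be needed; this sketch does not cover that case.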

2.3 Main experiment (subjective feelings when facing an agent's responses)

2.3.1 Design

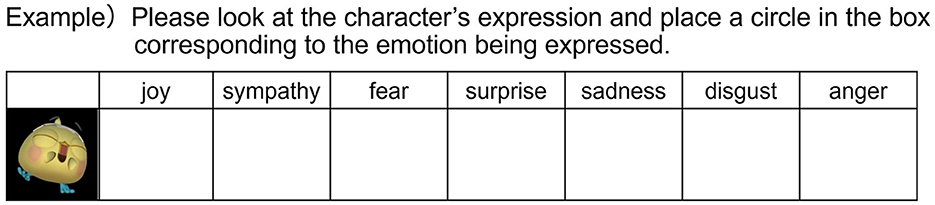

The purpose of the main experiment was to investigate how subjective feelings depend on the emotion type and emotion source and construct an agent-response model that is based on the emotion type and source to induce positive feelings for the young and old participants. In this experiment, the subjective feelings induced by the agent's expressions in the young and old participants were measured. We assumed that mimicry of emotional expressions by agents is not always the way to induce most positive feelings in both young and old people. We also assumed that the model was the same for young and old people in consideration of differences in emotion perception (i.e., expressions that achieved the highest consistency in the young and old participants in the preliminary study were used. Actual expressions are shown in Figure 3 from the preliminary experiment). The experimental protocol was approved by the ethical committee of the Faculty of Letters, the University of Tokyo. The data were obtained in accordance with the standards of the internal review board of the Research & Development Group, Hitachi, Ltd., following receipt of the participants' informed consent.

Figure 3. Emotional expressions with maximum consistency for matching within group for each emotion type. Consistency was calculated for agent expressions that most participants recognized as same emotion type.

2.3.2 Procedure

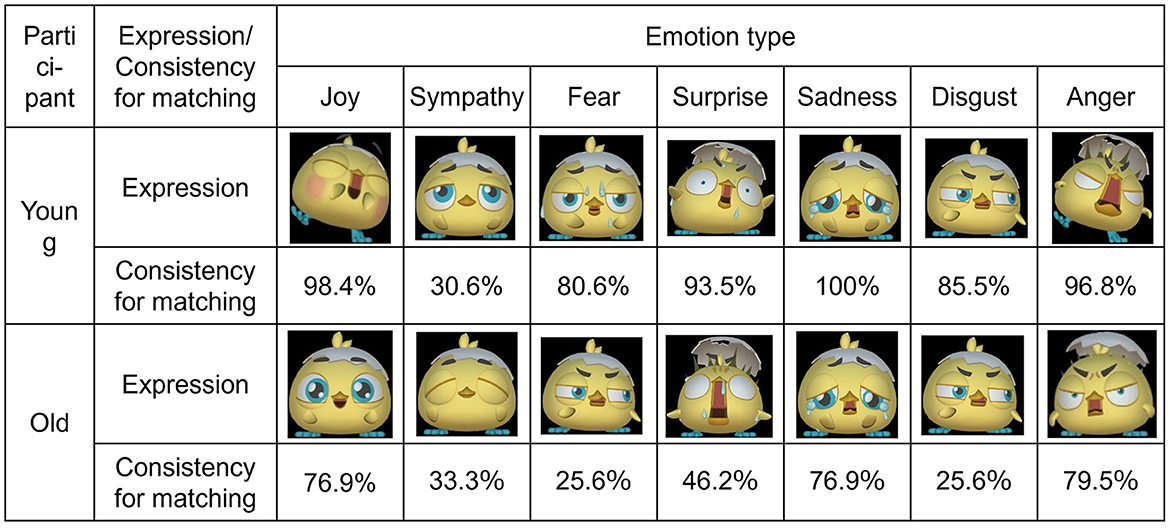

A paper instrument with statements expressing emotion was used to evaluate the subjective feelings of the participants induced by the agent's expressions. The experiment was conducted in a laboratory room. The instrument included emotional episodes that we created on the basis of the cognitive appraisal theory of emotion (Roseman, 1984; Scherer, 1984; Smith and Ellsworth, 1985). This theory assumes that emotions are induced by the evaluation of events along appraisal dimensions. On the basis of this theory, we had empirically investigated the appraisals associated with emotional events along appraisal dimensions such as pleasantness, motivational state, and coping potential, and found associations between each emotion and its appraisal profile (Mitani and Karasawa, 2005). By using the representative features of the associations between the emotions of joy, sadness, and anger and their appraisal profiles, we created the emotional episodes listed in Table 1. The validity of the instrument was reviewed by two social psychologists before the experiment. The participants were asked to read the statements as if they were speaking to an agent. They were instructed as follows: “The character agent (Piyota) understands the emotions you express and responds to you with expressions. The words and feelings you expressed to Piyota and Piyota's expressions in response to those words and feelings are presented. Please answer how you felt about the expression that Piyota gives you when you are in the emotional state as your expressed emotion.” Each statement expressed one emotion type and one emotion source. Static images of the agent's expressions, shown as reactions to the statements, were presented on the paper form. The agent's expressions extracted in the preliminary experiment for the young and old participants represented joy, sadness, and anger, and these three emotion types were expressed in the statements. We created four statements for each type, so there were 12 statements on the instrument.

We defined three emotion sources: the participant (hereafter, self), agent, and other (neither self nor agent). In previous studies, the emotion sources were classified into self and other (Bartneck, 2002; Mitani and Karasawa, 2005; Steunebrink et al., 2009). Since the agent should be distinguished from other in emotional episodes, we defined these three emotion sources. When the emotion source was self, the subject and responsibility in an emotional episode were wholly assigned to the user. When the emotion source was the agent or other, the subject and responsibility in the emotional episode were partly assigned to the agent or other, respectively. In the example statement “Today, I ate a new sweet that I bought. It was really delicious, and I was so glad to find such a wonderful sweet!”, joy is the emotion type and self is the emotion source. Using the same emotional episode, when the statement began with “Today, I ate a new sweet that you suggested,” the agent is the emotion source, and when the statement began with “Today, I ate a new sweet that my friends suggested,” other is the emotion source. Because the emotional episodes were identical across emotion sources except for the description of the source, we randomly divided the participants into three groups, each of which received one of three versions of the paper instrument. The participants were asked to read each statement and then observe the agent's expression in response to the statement. Both the emotional episodes and the agent's expressions were presented on the paper instrument, and the participants read the episodes and viewed the expressions at their own pace. Each emotional episode was printed at the top of the page, and the agent's expression was printed at the bottom of the page, apart from the text of the episode. Therefore, participants generally read the emotional episode first and then viewed the agent's expression as the reaction. Given the positions of the emotional episode and the agent's expression and the task instruction, most participants would have understood the agent's expression as the reaction. The participants' subjective feelings about the agent's expressions were recorded using a visual analog scale (VAS) (Torrance et al., 2001). The participants were told to record their subjective feeling after observing the agent's expression by drawing a vertical line at the point on a horizontal line that corresponded to their feeling, where the horizontal line had “Very good feeling” at the right end and “Very bad feeling” at the left end.

2.3.3 Participants

One hundred thirty-nine young Japanese (65 men, 74 women) and 211 old Japanese (105 men, 106 women) took part in the main experiment. The average age ± standard deviation of the young participants was 19.9 ± 1.1 years (range: 18 to 24) and that of the old participants was 68.1 ± 5.0 years (range: 60 to 86). The sample size was set to be no smaller than that of the preliminary experiment, and the participants were recruited widely and simultaneously from various locations, including a university.

2.3.4 Data analysis

The subjective feelings measured using the VAS ranged from −50 to 50; “Very good feeling” was calculated as 50, and “Very bad feeling” was calculated as −50. Each participant's subjective rating was averaged by emotion type and used as the participant's subjective feeling.
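The scoring described above can be sketched as follows. This is a minimal illustration, not the authors' scoring procedure: the function names are hypothetical, and the 100 mm line length is an assumption, since the physical length of the printed line is not stated. The left end ("Very bad feeling") maps to −50 and the right end ("Very good feeling") maps to 50.

```python
from statistics import mean
from typing import Dict, List


def vas_score(mark_mm: float, line_mm: float = 100.0) -> float:
    """Map a mark on the line (distance from the left end) to the range [-50, 50]."""
    if not 0.0 <= mark_mm <= line_mm:
        raise ValueError("mark must lie on the line")
    return mark_mm / line_mm * 100.0 - 50.0


def mean_by_emotion(marks: Dict[str, List[float]]) -> Dict[str, float]:
    """Average one participant's VAS scores within each emotion type."""
    return {emotion: mean(vas_score(m) for m in ms) for emotion, ms in marks.items()}


# Hypothetical marks (in mm from the left end) for one participant.
participant = {"joy": [80.0, 90.0], "anger": [20.0, 30.0]}
scores = mean_by_emotion(participant)
```

Because the mapping is linear, a mark at the midpoint of the line corresponds to a neutral score of 0 regardless of the assumed line length.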

The subjective feelings were compared among the agent's emotional expressions in response to the emotional statements for the young and old participants. The effect of the agent's emotional expression was compared by emotion source and agent expression using two-way analysis of variance (ANOVA). Age group was not included as a factor because the agent expressions used were not exactly the same for the young and old participants. Although the emotions perceived from the agent's expressions should be the same for the young and old participants, it is quite difficult to separate the effect of differences in the agent's expressions from the effect of age. Post-hoc tests for comparison of emotion sources were conducted using Student's t-test, and those for comparison of the agent's emotional expressions were conducted using a paired t-test. Multiple comparisons were corrected using the Bonferroni correction. The statistical significance level was set at p < 0.05.
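As a brief illustration of the Bonferroni correction, the sketch below adjusts a set of raw p-values by the number of comparisons. The p-values are hypothetical, and the size of the comparison family (three pairwise comparisons among the joy, sadness, and anger responses) is an assumption; the text does not specify how the comparisons were grouped for correction.

```python
from typing import List


def bonferroni(p_values: List[float]) -> List[float]:
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values for the three pairwise comparisons among
# joy, sadness, and anger responses within one emotion source.
adjusted = bonferroni([0.002, 0.020, 0.400])
significant = [p < 0.05 for p in adjusted]
```

In this example only the first comparison remains significant after correction, which shows how the adjustment makes the p < 0.05 criterion stricter for each individual test.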

3 Results

3.1 Preliminary experiment (emotion perception)

Figure 3 shows the emotional expressions with the maximum consistency for matching within group for each emotion type. On the basis of the threshold set for the maximum consistency, the emotional expressions for joy, sadness, and anger were extracted. Therefore, these emotions were used in the main experiment. The maximum consistency for the old participants was consistently lower, except for sympathy. There were also large differences in emotion perception between the two age groups for several expressions. For example, whereas the “ecstasy” expression had the highest consistency (98.4%) for joy for the young participants, it was recognized as joy by only 33.3% of the old participants.

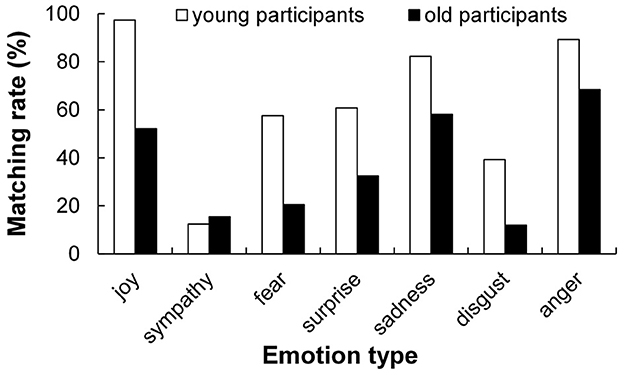

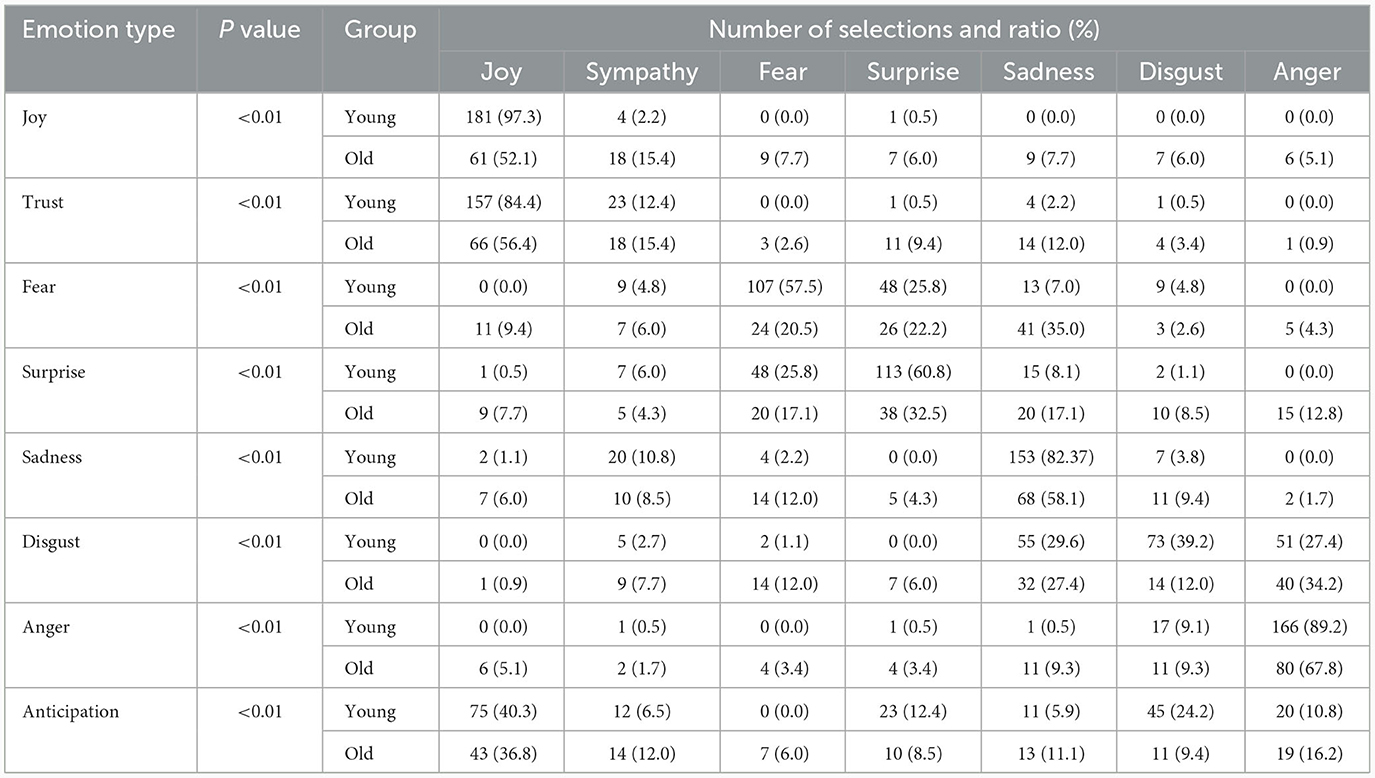

Figure 4 shows the average accuracies for matching of pre-specified emotions for the agent expressions. The accuracies for the old participants were consistently lower, except for sympathy. The accuracies for joy, sadness, and anger were relatively high (more than 50%) for both age groups while those for the other four emotions were relatively low. Table 2 shows a cross-tabulation of the emotion selection distributions for the two age groups of participants for each emotion type. There was a significant difference [p < 0.001, N (young): 186, N (old): 117] between the age groups for each emotion type. Focusing on the emotion selection distributions for the old participants, we found that unmatched emotion selection was not only biased to a different specific emotion but also spread to various emotions. The old participants had higher variability in emotion perception.

Figure 4. Average accuracy for matching pre-specified emotions for agent expressions. Accuracy was derived by calculating the ratios of successful matches with pre-specified emotions on the basis of Plutchik's wheel of emotions.

Table 2. Cross-tabulation of emotion selection distributions for young and old participants by emotion type.

3.2 Main experiment (subjective feelings when facing an agent's responses)

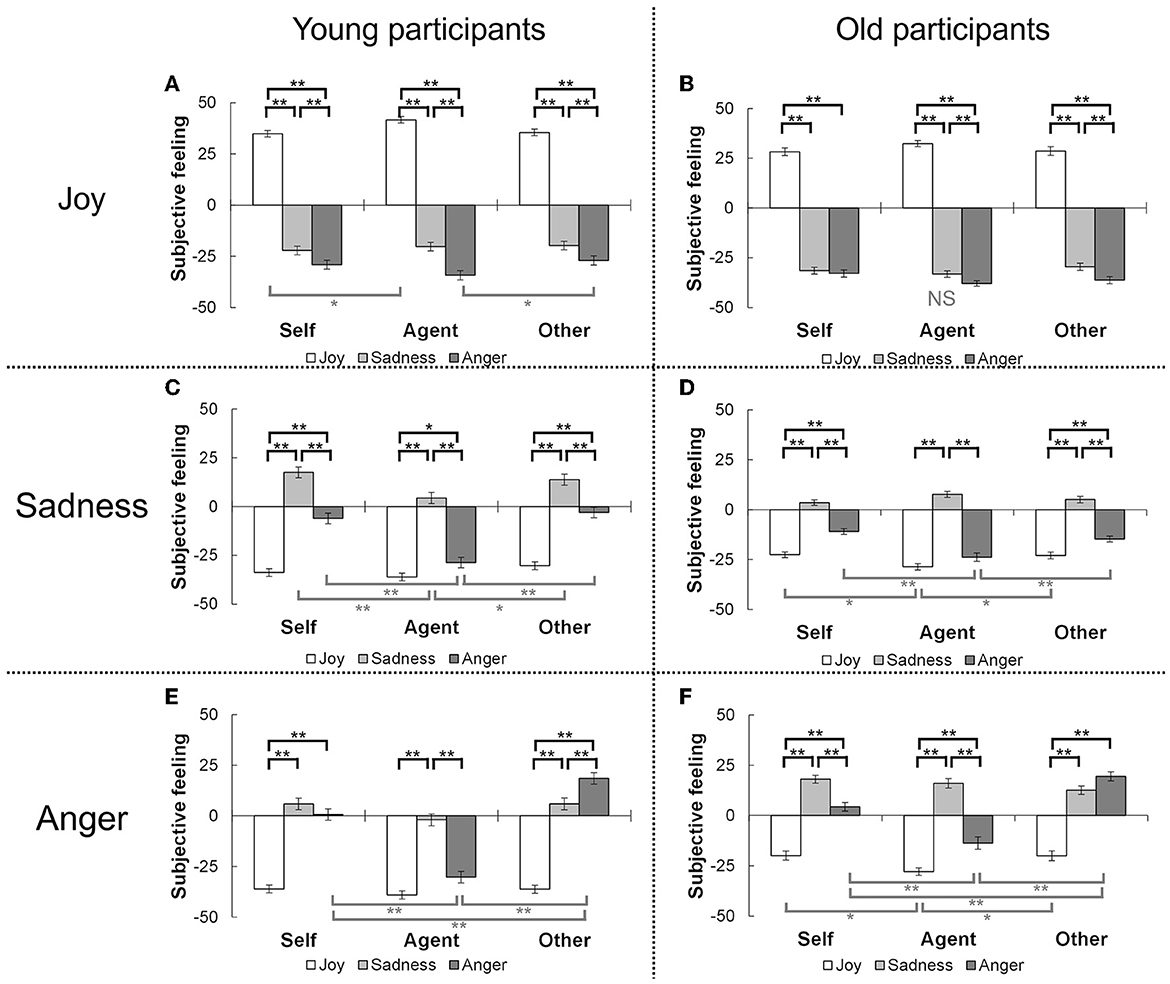

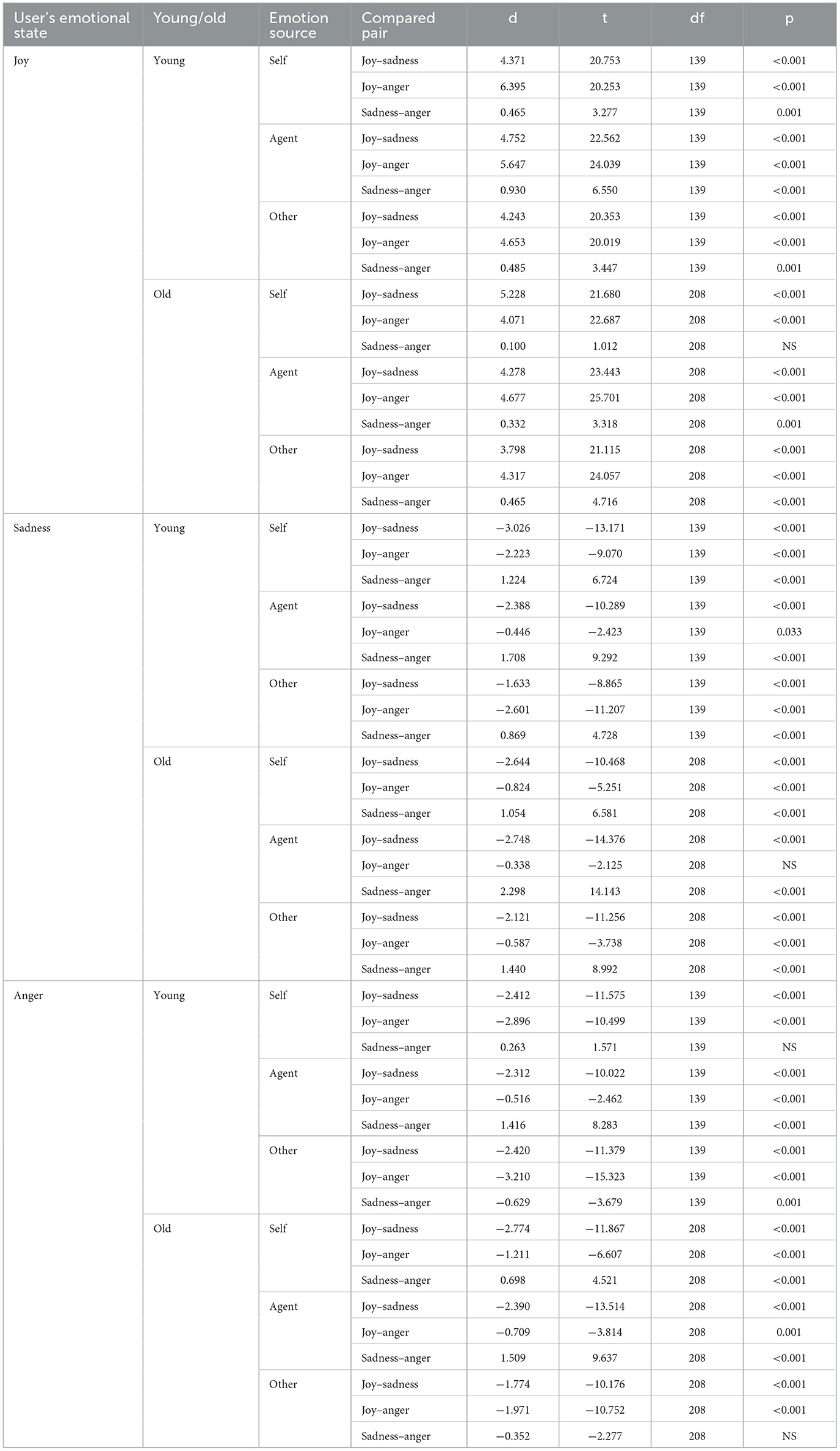

Figure 5 and Table 3 show the statistical results of the subjective feelings as measured using the VAS. Two-way ANOVAs revealed significant interactions between the agent's response and emotion source for both the young and old participants when the participant's emotional state was sadness or anger [young: F(4, 272) = 6.450, p < 0.001, η2 = 0.087; F(4, 272) = 25.549, p < 0.001, η2 = 0.273; old: F(4, 416) = 7.269, p < 0.001, η2 = 0.065; F(4, 416) = 15.893, p < 0.001, η2 = 0.133]. When the participant's emotional state was joy, the ANOVAs revealed a significant interaction for the young participants [F(4, 272) = 3.751, p = 0.009, η2 = 0.052] and not for the old participants [F(4, 416) = 2.518, p = 0.062, η2 = 0.024].
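The degrees of freedom reported above can be checked with a short calculation. The sketch assumes a mixed design in which emotion source is a between-participants factor (three groups) and agent expression is a within-participants factor (three levels); the text describes the analysis only as a two-way ANOVA, so this reading is an inference from the procedure, and the function name is hypothetical. The sample sizes are the 139 young and 211 old participants given in the abstract.

```python
def mixed_anova_df(n_participants: int, n_groups: int, n_levels: int):
    """Degrees of freedom for the group x within-factor interaction and its error term."""
    df_interaction = (n_groups - 1) * (n_levels - 1)
    df_error = (n_participants - n_groups) * (n_levels - 1)
    return df_interaction, df_error


print(mixed_anova_df(139, 3, 3))  # young participants -> (4, 272)
print(mixed_anova_df(211, 3, 3))  # old participants   -> (4, 416)
```

Both results match the reported F(4, 272) and F(4, 416), which supports the assumed design and the sample size of 211 old participants.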

Figure 5. Results for subjective feelings about agent's response. Charts (A, B) show results when emotional state was joy, (C, D) show results when emotional state was sadness, and (E, F) show results when emotional state was anger for young and old participants, respectively. Emotion sources are shown on horizontal axis. “Self” means that source was participant, “agent” means that source was computer-graphics-based agent, and “Other” means that source was neither participant nor agent. Subjective feelings were measured by using VAS. Bars represent participants' subjective feelings about agent's response. Error bars represent standard error. **p < 0.01, *p < 0.05, NS, not significant.

Table 3. Statistical results for subjective feelings about agent's response with statistics and effect sizes.

When the emotional state was joy (Figures 5A, B), the post-hoc test results revealed that most differences among the agent's responses were significant (p < 0.01) for both age groups regardless of the emotion source. That is, the agent's emotional expressions induced positive feelings in the order of joy, sadness, and anger, from most to least positive.

When the participant's emotional state was sadness (Figures 5C, D) and the emotion source was the self or other, the post-hoc test results revealed that all differences among the agent's responses were significant (all p < 0.01) for both the young and old participants. That is, the agent's emotional expressions induced positive feelings in the order of sadness, anger, and joy, from most to least positive. When the emotion source was the agent, the results revealed that the subjective feelings when the agent's response conveyed sadness were significantly better than those when it conveyed joy or anger for both age groups (both p < 0.01). The subjective feelings when the agent's response conveyed anger and the emotion source was the agent were significantly worse than those when the emotion source was the self or other for both groups (all p < 0.01). Thus, when the emotion source was the agent, the expression that induced the most positive feelings was one that conveyed sadness rather than joy or anger.

When the participant's emotional state was anger and the emotion source was self for the young participants (Figure 5E), the subjective feeling when the agent's emotional expression conveyed joy was significantly worse than when it conveyed sadness or anger (both p < 0.01). When the emotion source was the agent for the young participants, the subjective feeling when the agent's emotional expression conveyed sadness was significantly better than when it conveyed joy or anger (both p < 0.01). When the emotion source was other for the young participants, all differences among the agent's emotional expressions were significant (all p < 0.01). That is, the agent's emotional expressions induced positive feelings in the descending order of anger, sadness, and joy. When the emotion source was self or the agent for the old participants (Figure 5F), all differences among the agent's emotional expressions were significant (all p < 0.01). That is, the agent's emotional expressions induced positive feelings in the descending order of sadness, anger, and joy. When the emotion source was other, the subjective feeling when the agent's emotional expression conveyed joy was significantly worse than when it conveyed sadness or anger. For both age groups, when the agent's emotional expression conveyed anger, all differences among the emotion sources were significant (all p < 0.01). That is, when the agent's emotional expression conveyed anger, the subjective feelings were most positive when the emotion source was other, followed by self and then the agent.

4 Discussion

4.1 Preliminary experiment (emotion perception)

The emotional expressions for joy, sadness, and anger that most participants recognized as the same type were identified separately for the young and old participants, and they were used in the main experiment. The old participants exhibited a higher degree of variability in their emotion perception, and expressions for the other emotions could not be extracted. Such emotion perception characteristics indicate that the perception of emotions conveyed by the expressions of the non-human agent developed in this study is affected by the person's age, as shown in previous studies (Beer et al., 2009, 2015; Pavic et al., 2021). This means that it is important to clarify the individual characteristics of emotion perception to achieve affective communication between old users and non-human agents.

Although the effect of aging on the emotion perception is not a simple decline, an age-related decrement in identifying happiness, sadness, anger, fear, and surprise in human facial expressions has been demonstrated in previous studies (Isaacowitz et al., 2007; Ruffman et al., 2008; Sze et al., 2012; Khawar and Buswell, 2014; Goncalves et al., 2018; Hayes et al., 2020). The high degree of variability in the emotion perception of the old participants when observing the agent's expressions conveying joy, anger, sadness, fear, and surprise (Table 1) is consistent with the findings of previous research based on the emotion perception in Ekman's facial expressions of emotion (Ekman et al., 1980). The high degree of variability in the old participants' emotion perception of these emotion types was affected by age. A high degree of variability in the perception of disgust was not consistently observed in previous studies (Isaacowitz et al., 2007; Ruffman et al., 2008; Sze et al., 2012; Khawar and Buswell, 2014; Goncalves et al., 2018; Hayes et al., 2020). Given that the agent's expressions in this study did not always match the target emotion, the identification of disgust by old participants was unlikely to be worse than that of young participants although the high variability in disgust perception likely reflected differences in emotion perception characteristics between the young and old participants. Since younger adults may be used to interpreting emotions from a non-human agent, generally speaking, it is possible that the expressions of the agent in this study were especially easy for the young participants to understand and difficult for the old participants to understand. To clarify the general age-related characteristics of emotion perception and to widen the diversity of agent expressions, further experiments and analysis using various exaggerated and/or non-human-like expressions are needed.

The maximum percentages of successful matches for fear, sadness, and anger to the agent's expressions for the young participants (fear: 80.6%, sadness: 100.0%, anger: 96.8%) were higher than the identification accuracy reported in a previous study for an agent with a robot head whose expressions resembled those of a human face (fear: 41%, sadness: 94%, anger: 31%) (Beer et al., 2015). In addition, the maximum percentages of successful matches for the agent's expressions conveying fear or anger for the old participants (fear: 25.6%, anger: 79.5%) were higher than the identification accuracy for that human-face-like agent's expressions (fear: 22%, anger: 5%) (Beer et al., 2015). Although there were several differences in experimental conditions between the two studies, several of the non-human expressions displayed by the agent in our study were more easily recognized by both young and old participants.
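The comparison with Beer et al. (2015) can be made explicit with a little arithmetic. The following is a minimal sketch that only re-uses the percentages quoted in the paragraph above; the dictionary names and the choice of simple percentage-point differences are illustrative assumptions, not part of the original analysis, and the differences in experimental conditions between the two studies still apply.

```python
# Maximum match percentages from this study and identification accuracy (%)
# from Beer et al. (2015), both copied from the text above.
# Variable names are hypothetical and used only for illustration.
this_study = {
    ("young", "fear"): 80.6,
    ("young", "sadness"): 100.0,
    ("young", "anger"): 96.8,
    ("old", "fear"): 25.6,
    ("old", "anger"): 79.5,
}
beer_2015 = {
    ("young", "fear"): 41.0,
    ("young", "sadness"): 94.0,
    ("young", "anger"): 31.0,
    ("old", "fear"): 22.0,
    ("old", "anger"): 5.0,
}

# Difference in percentage points (this study minus previous study),
# rounded to one decimal place to avoid floating-point noise.
differences = {
    key: round(this_study[key] - beer_2015[key], 1) for key in this_study
}

for (group, emotion), diff in differences.items():
    print(f"{group:5s} {emotion:8s} {diff:+.1f} percentage points")
```

Under these figures, the differences range from 3.6 points (fear, old participants) to 74.5 points (anger, old participants). They are descriptive only and do not constitute a statistical test.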

The accuracy of emotion identification for human-face-like expressions of agents was found in a previous study to be worse than that for human facial expressions (Beer et al., 2015), and it was suggested that humanity could be an important factor in emotion identification. However, the expressions of the agent in this study had less humanity, and several of the non-human expressions displayed by the agent were more easily recognized. It is therefore thought that the level of humanity in emotion expression is not always important for successful emotion perception, meaning that exaggeration has the potential to be an important factor in developing understandable agent expressions for both young and old users.

Although it was expected that sympathy could be found in the expressions of a non-human agent, the maximum consistency for sympathy was low. Therefore, sympathy is difficult to recognize not only in human facial expressions (Keltner and Buswell, 1996) but also in agent expressions. Moreover, the maximum consistency for fear, surprise, and disgust in the agent expressions was low (< 50.0%) for the old participants. In previous studies, dynamic facial expressions were found to enhance the emotional response of various emotion types in human-human communications (Rymarczyk et al., 2016). The characteristics of emotion perception differ between faces and voices (Ruffman et al., 2008). Therefore, dynamic emotional expressions alone or in combination with emotional voice expressions should be useful for improving successful emotion perception.

4.2 Main experiment (subjective feelings when facing an agent's responses)

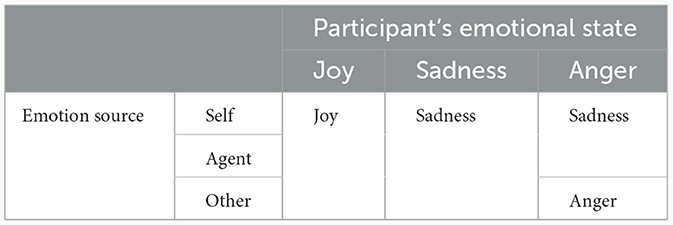

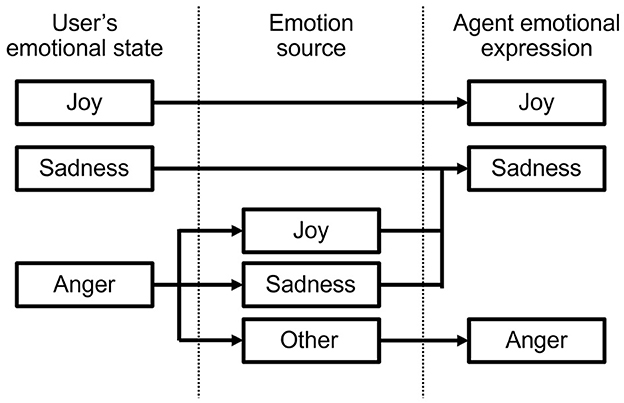

Table 4 lists the agent emotional expressions that most induced positive feelings depending on the participant's emotional state and emotion source, considering the differences in emotion perception between the young and old participants. Overall, the expressions were common between the two age groups. If the participant's emotional state was joy or sadness, the response should be an expression that conveyed that emotion, regardless of the emotion source. If the participant's emotional state was anger, the response should be selected depending on the emotion source. If the source was other, the expression should be one that reflected the participant's emotional state, i.e., anger. If the source was self or agent, the expression should be sadness, not one that reflected the participant's emotional state. Thus, a response model of the agent to induce positive feelings was constructed for both the young and old participants, as shown in Figure 6. By using this model, an agent's emotional expression for inducing a positive feeling can be selected from among expressions of joy, sadness, and anger, depending on the emotion source, when the user's emotional state is joy, sadness, or anger.

Table 4. Agent emotional expressions for inducing most positive feeling in participant depending on participant's emotional state and emotion source.

Figure 6. Model of agent emotional expression for inducing positive feeling depending on participant's emotional state and emotion source, derived from results of main experiment.
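The response model summarized in Table 4 and Figure 6 can be written as a small lookup rule. The following is a minimal sketch, assuming the user's emotional state and the emotion source are already known; the names `Emotion`, `Source`, and `select_agent_expression` are hypothetical and introduced only for illustration, and the sketch is not the implementation used in the study.

```python
from enum import Enum


class Emotion(Enum):
    JOY = "joy"
    SADNESS = "sadness"
    ANGER = "anger"


class Source(Enum):
    SELF = "self"
    AGENT = "agent"
    OTHER = "other"


def select_agent_expression(user_emotion: Emotion, source: Source) -> Emotion:
    """Return the agent expression that most induced positive feelings.

    Encodes the rule described in the text for Table 4 and Figure 6.
    """
    # Joy and sadness: reflect the user's emotional state for every source.
    if user_emotion in (Emotion.JOY, Emotion.SADNESS):
        return user_emotion
    # Anger caused by someone other than the user or agent: reflect the anger.
    if source is Source.OTHER:
        return Emotion.ANGER
    # Anger caused by the user or by the agent: respond with sadness.
    return Emotion.SADNESS


if __name__ == "__main__":
    for emotion in Emotion:
        for source in Source:
            chosen = select_agent_expression(emotion, source)
            print(f"{emotion.value:8s} {source.value:6s} -> {chosen.value}")
```

Only the anger state depends on the emotion source in this rule, which is why a single branch on the source is enough.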

Since the agent emotional expressions that represented the maximum consistency (joy, sadness, and anger) for both age groups were used in the experiment, most participants had the expected emotion perception of the agent's expressions. Overall, the expressions that most induced positive feelings in the participants were the same for both age groups. Although the subjective feelings under certain conditions did not represent a fully positive value when the emotion type was sadness or anger (e.g., when the participant's emotional state was anger and the emotion source was the agent for the young participants), it should be considered that the statements on the paper instrument negatively affected the participants' subjective feelings. In other words, we can assume that the subjective feelings when the participants read the sadness or anger statements were negative and that they were positively improved by the expression of the agent.

The expressions of the agent that most induced positive feelings depended on the emotion source, and reflecting the participant's simulated emotional state was not always the way to induce the most positive feelings in the participant. When the emotion source was self and the agent's response conveyed anger, the participants likely felt that they were being scolded by the agent. Therefore, it is reasonable that the agent expression that most induced positive feelings was sadness when the participant's emotional state was anger and the emotion source was self or agent. In other words, the agent should show sadness to show understanding that the participant was angry and to show sadness because anger is a negative emotion in this case. This differs from the typical human-like response in which people tend to mimic emotional expressions even if they are anger expressions (Lundqvist, 1995), which suggests that mimicry of emotional expressions is not always the way to induce the most positive feelings. In fact, emotional mimicry is not merely an automatic response in humans, and it is modulated by several factors including emotion and social affiliative intention (Seibt et al., 2015; Hess, 2020). Although many studies observed anger mimicry, it was not consistently found (Seibt et al., 2015). This is because, it has been argued, emotional mimicry occurs when a person has a social affiliative intention, and the mimicry of an anger expression often conflicts with this intention (Seibt et al., 2015; Hess, 2020). When applied to this study, on one hand, the agent expression should have been sadness when the participant's emotional state was anger and the emotion source was self or the agent, because the mimicry of an anger expression conflicted with the social affiliative intention.
On the other hand, the agent expression should have been anger when the emotion source was other, because the mimicry of anger is felt as a group emotion toward a common opponent (van der Schalk et al., 2011) and matched the social affiliative intention. Agents have the advantage of not having any natural tendency, which makes it feasible to take the emotion source into account, along with the social affiliative intention, when choosing the agent's response. The emotion source can be detected from the user's statements in a conversation between users and agents, as it could be from the statements in our experiment. This indicates that the combination of our agent-response model with a voice-recognition function should be useful in achieving more affective communication and in creating empathy between users and non-human agents.

It should be noted as a limitation of the main experiment that only three emotion types were used and that only the emotional expressions with the maximum consistency were used. To construct a better agent-response model for inducing positive feelings, it would be useful to clarify the personal characteristics of emotion perception and evaluate subjective feelings by using agents with various appearances and expressions for a wide range of users. It should also be noted that age group was not included as a factor in the ANOVA because the agent expressions used in the experiment were not exactly the same for the young and old participants. To include age group as a factor, it would be necessary to create a wider variety of agent expressions and identify expressions that young and old participants perceive as conveying the same emotion. Further, the paper instrument was newly created for this study. Although the emotional episodes were based on the representative features of associations between emotions and their appraisal profiles and were reviewed by social psychologists, it would be desirable to verify their validity empirically. Specifically, it should be verified that the intended emotional state was induced by reading the emotional episodes and that responsibility for the emotion was attributed to the intended emotion source. In addition, it should be validated that the scenarios were similarly meaningful and appropriate for young and old participants. Moreover, since both the emotional episodes and the agent's expressions were presented on paper, it is difficult to rule out the possibility that some participants looked at the agent's reaction first. In this study, we created and used a paper instrument for two reasons.
One was that the reading speed for the emotional episodes was expected to vary widely, especially among the old participants, so it was necessary to provide an instrument that enabled participants to read the emotional episodes at their own pace. The other was that using a PC or smartphone could impose an additional cognitive workload on the old participants, which could affect their subjective feelings. Although agency has been conventionally studied by using various materials including static pictures (Bock, 1986; Ruby and Decety, 2001), in future work it would be useful to apply such ICT tools after giving the old participants sufficient practice to get used to them. Finally, we focused only on subjective feelings in order to evaluate feelings independently of indicators of specific emotions. On the basis of subjective ratings alone, it is quite difficult to understand the factors and mechanisms related to the induced subjective feelings. For example, social desirability as well as participants' social beliefs about appropriateness could affect the subjective ratings. Therefore, objective measures should be useful for understanding such factors and mechanisms in future work. From the viewpoint of emotional mimicry, measurement of EMG (electromyography) would be important because, for mimicry to appear, one interaction partner first needs to show a facial expression, which is then mimicked by the other interaction partner (Kroczek and Mühlberger, 2022). For example, EMG measurements of the M. corrugator supercilii and the M. zygomaticus major should be useful for understanding the emotion in the participants' initial facial expression. In addition, since EMG responses have been reported not to track the subjective intensity of negative feelings (Golland et al., 2018), measurements of brain activity and autonomic nervous activity would also be useful (Ruby and Decety, 2001; Numata et al., 2019, 2020).
For example, measuring the activity of the anterior cingulate cortex would help clarify whether the actual experience of the agent's emotional expression was induced (Numata et al., 2020). Thus, combining subjective ratings with objective measures would be useful for understanding the factors and mechanisms underlying the subjective feelings in the experiment.

4.3 General discussion

The results of both experiments indicate that a common agent-response model could induce positive feelings in users, regardless of age, when the agent's emotional expressions are appropriately recognized. Although we did not evaluate subjective feelings by using the same expressions for both age groups, some of the expressions showed large differences in emotion perception between the groups. Therefore, the expressions to be used by an agent should be chosen with consideration of the differences in emotion perception between young and old users. Thus, for more seamless communication with non-human agents, extracting the age characteristics of emotion perception should be more important than extracting the age characteristics of the agent-response model.

Although the agent's expressions were developed from exaggerated human expressions, the way to induce the most positive feelings was not always to mimic the emotional expressions, which is a typical tendency of human emotional responses. This means that development of an agent-response model that is based on simple emotional mimicry may not be the best way to induce positive feelings. For natural and affective agents, it might be useful to combine an emotional-mimicry model with a response model to induce positive feelings. An alternative is to carefully choose between the two models, depending on the characteristics of the user and agent, such as the user's personality and the agent's appearance (similarity to a human face), the situation, and the purpose of the communication. Taking these factors into account should lead to more affective communication between people and non-human agents.

There were several limitations in this study. One is that the participants' characteristics were limited in terms of age and nationality. The participants were only young and old people (e.g., middle-aged people were not considered). The participants were also all Japanese, whereas emotion perception and emotion context are known to differ across cultures (Masuda et al., 2008). Therefore, investigation of emotion perception and subjective feelings with a wide range of age groups and various cultural groups is left for future work. Another limitation is that the stimuli we used were limited to static images on paper. Although static images can be displayed on both paper and a screen, the impressions they make on users are not necessarily the same. Therefore, it would be useful to understand the differences in the effects on subjective feelings depending on the presentation method. In previous studies, dynamic facial expressions were found to enhance the emotional response of various emotion types in human-human communications (Rymarczyk et al., 2016). Although using dynamic expressions is complex (e.g., the appropriate presentation timing must be explored in order to use dynamic expressions as reactions in an emotional episode), extracting differences in subjective feelings between static and dynamic expressions should be useful for improving emotion perception and subjective positive feelings in human-agent interaction. These further investigations, preferably as preregistered studies, would be desirable for understanding the subjective feelings induced in this study and for applying the agent-response model to induce positive feelings in real cases of human-agent communication.

5 Conclusion

We investigated the subjective feelings induced when facing a non-human computer-graphics-based agent's emotional responses by taking into account the user's emotion type and emotion source and considering differences in emotion perception between young and old adults. Twenty-four exaggerated emotional expressions were developed for the non-human agent to emphasize age differences in emotion perception and used in the experiments. Despite the differences in emotion perception characteristics between the young and old participants, the expressions that most induced positive feelings were common to both groups. When the participant's emotional state was joy or sadness, the response that most induced positive feelings was an expression that conveyed that emotion, regardless of the emotion source. When the participant's emotional state was anger, the response that most induced positive feelings depended on the emotion source: if the source was other, the expression was one that reflected the participant's emotional state, whereas if the source was self or agent, the expression that most induced positive feelings was sadness, not one that reflected the participant's emotional state. These findings suggest that a common agent-response model can be developed for both young and old people by combining an emotional mimicry model with a response model to induce positive feelings in users and promote natural and affective communication by taking into account age characteristics of emotion perception.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethical Committee of the Faculty of Letters, the University of Tokyo. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing—original draft. YA: Conceptualization, Funding acquisition, Investigation, Project administration, Resources, Software, Writing—review & editing. TH: Investigation, Methodology, Resources, Validation, Writing—review & editing. KK: Investigation, Methodology, Project administration, Resources, Validation, Writing—review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The authors declare that this study received funding from Hitachi, Ltd. The funder had no role in study design, data collection and analysis, or preparation of the manuscript.

Conflict of interest

TN and YA are employed by Hitachi Ltd. The authors declare that this study received funding from Hitachi, Ltd. The funder had no role in study design, data collection and analysis, or preparation of the manuscript.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bartneck, C. (2002). “Integrating the OCC model of emotions in embodied characters,” in The Workshop on Virtual Conversational Characters: Applications, Methods, and Research Challenges.

Beer, J. M., Fisk, A. D., and Rogers, W. A. (2009). Emotion recognition of virtual agents facial expressions: the effects of age and emotion intensity. Proc. Hum. Factors Ergon. Soc. Ann. Meeting 53, 131–135. doi: 10.1177/154193120905300205

Beer, J. M., Smarr, C.-A., Fisk, A. D., and Rogers, W. A. (2015). Younger and older users' recognition of virtual agent facial expressions. Int. J. Hum. Comput. Stud. 75, 1–20. doi: 10.1016/j.ijhcs.2014.11.005

Bock, J. K. (1986). Syntactic persistence in language production. Cogn. Psychol. 18, 355–387. doi: 10.1016/0010-0285(86)90004-6

Catmur, C., and Heyes, C. (2013). Is it what you do, or when you do it? The roles of contingency and similarity in pro-social effects on imitation. Cogn. Sci. 37, 1541–1552. doi: 10.1111/cogs.12071

Chartrand, T. L., and Bargh, J. A. (1999). The chameleon effect: the perception–behavior link and social interaction. J. Person. Soc. Psychol. 76, 893–910. doi: 10.1037/0022-3514.76.6.893

Ekman, P., Friesen, W. V., and Ancoli, S. (1980). Facial signs of emotional experience. J. Person. Soc. Psychol. 39, 1125–1134. doi: 10.1037/h0077722

Friedman, R., Anderson, C., Brett, J., Olekalns, M., Goates, N., and Lisco, C. C. (2004). The positive and negative effects of anger on dispute resolution: evidence from electronically mediated disputes. J. Appl. Psychol. 89, 369–376. doi: 10.1037/0021-9010.89.2.369

Golland, Y., Hakim, A., Aloni, T., Schaefer, S., and Levit-Binnun, N. (2018). Affect dynamics of facial EMG during continuous emotional experiences. Biol. Psychol. 139, 47–58. doi: 10.1016/j.biopsycho.2018.10.003

Goncalves, A. R., Fernandes, C., Pasion, R., Ferreira-Santos, F., Barbosa, F., and Marques-Teixeira, J. (2018). Effects of age on the identification of emotions in facial expressions: a meta-analysis. PeerJ 6:e5278. doi: 10.7717/peerj.5278

Hasler, B. S., Hirschberger, G., Shani-Sherman, T., and Friedman, D. A. (2014). Virtual peacemakers: mimicry increases empathy in simulated contact with virtual outgroup members. Cyberpsychol. Behav. Soc. Netw. 17, 766–771. doi: 10.1089/cyber.2014.0213

Hayes, G. S., McLennan, S. N., Henry, J. D., Phillips, L. H., Terrett, G., Rendell, P. G., et al. (2020). Task characteristics influence facial emotion recognition age-effects: a meta-analytic review. Psychol. Aging 35, 295–315. doi: 10.1037/pag0000441

Heike, M., Kawasaki, H., Tanaka, T., and Fujita, K. (2011). Study on deformation rule of personalized-avatar for producing sense of affinity. J. Hum. Inter. Soc. 13, 243–254.

Hertenstein, M. J., Keltner, D., App, B., Bulleit, B. A., and Jaskolka, A. R. (2006). Touch communicates distinct emotions. Emotion 6, 528–533. doi: 10.1037/1528-3542.6.3.528

Hess, U. (2020). Who to whom and why: The social nature of emotional mimicry. Psychophysiology 58:e13675. doi: 10.1111/psyp.13675

Hortensius, R., Heleke, F., and Cross, E. S. (2018). The perception of emotion in artificial agents. IEEE Trans. Cogn. Dev. Syst. 10, 852–864. doi: 10.1109/TCDS.2018.2826921

Imbir, K. (2013). Origin and source of emotion as factors that modulate the scope of attention. Roczniki Psychol. Ann. Psychol. 16, 287–310.

Isaacowitz, D. M., Löckenhoff, C. E., Lane, R. D., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol. Aging 22, 147–159. doi: 10.1037/0882-7974.22.1.147

Jung, M. F. (2017). “Affective grounding in human-robot interaction,” in Proceedings of the 12th ACM/IEEE International Conference on Human-Robot Interaction, 263–273. doi: 10.1145/2909824.3020224

Keltner, D., and Buswell, B. N. (1996). Evidence for the distinctness of embarrassment, shame, and guilt. A study of recalled antecedents and facial expressions of emotion. Cognit. Emot. 10, 155–171. doi: 10.1080/026999396380312

Khawar, D., and Buswell, B. N. (2014). Evidence for the distinctness of embarrassment, shame, and guilt: a study of recalled antecedents and facial expressions of emotion. Cogn. Emot. 10, 155–171. doi: 10.1080/026999396380312

Kidd, C. D., Taggart, W., and Turkle, S. (2006). “A sociable robot to encourage social interaction among elderly,” in Proceedings of the IEEE International Conference on Robotics and Automation, 3972–3976.

Kroczek, L. O. H., and Mühlberger, A. (2022). Returning a smile: Initiating a social interaction with a facial emotional expression influences the evaluation of the expression received in return. Biol. Psychol. 175:108453. doi: 10.1016/j.biopsycho.2022.108453

Kulesza, W. M., Cislak, A., Vallacher, R. R., Nowak, A., Czekiel, M., and Bedynska, S. (2015). The face of the chameleon: the experience of facial mimicry for the mimicker and the mimickee. J. Soc. Psychol. 155, 590–604. doi: 10.1080/00224545.2015.1032195

Lundqvist, L.-O. (1995). Facial EMG reactions to facial expressions: a case of facial emotional contagion? Scandinavian J. Psychol. 36, 130–141. doi: 10.1111/j.1467-9450.1995.tb00974.x

Macedonia, M., Groher, I., and Roithmayr, F. (2014). Intelligent virtual agent as language trainers facilitate multilingualism. Front. Psychol. 5:295. doi: 10.3389/fpsyg.2014.00295

Mäkäräinen, M., Kätsyri, J., and Takala, T. (2014). Exaggerating facial expressions: a way to intensify emotion or a way to the uncanny valley? Cogn. Comput. 6, 708–721. doi: 10.1007/s12559-014-9273-0

Masuda, T., Ellsworth, P. C., Mesquita, B., Leu, J., Tanida, S., Veerdonk, E. V., et al. (2008). Placing the face in context: cultural differences in the perception of facial emotion. J. Person. Soc. Psychol. 94, 365–381. doi: 10.1037/0022-3514.94.3.365

Miklósi, A., and Gácsi, M. (2012). On the utilization of social animals as a model for social robotics. Front. Psychol. 3:75. doi: 10.3389/fpsyg.2012.00075

Mitani, N., and Karasawa, K. (2005). Appraisal dimensions of emotions: an empirical integration of the previous findings. Japanese J. Psychol. 76, 26–34. doi: 10.4992/jjpsy.76.26

Moltchanova, E., and Bartneck, C. (2017). Individual differences are more important than the emotional category for the perception of emotional expressions. Inter. Stud. 18, 161–173. doi: 10.1075/is.18.2.01mol

Numata, T., Kiguchi, M., and Sato, H. (2019). Multiple-time-scale analysis of attention as revealed by EEG, NIRS, and pupil diameter signals during a free recall task: a multimodal measurement approach. Front. Neurosci. 13:1307. doi: 10.3389/fnins.2019.01307

Numata, T., Kotani, K., and Sato, H. (2021). Relationship between subjective ratings of answers and behavioral and autonomic nervous activities during creative problem-solving via online conversation. Front. Neurosci. 15:724679. doi: 10.3389/fnins.2021.724679

Numata, T., Sato, H., Asa, Y., Koike, T., Miyata, K., Nakagawa, E., et al. (2020). Achieving affective human–virtual agent communication by enabling virtual agents to imitate positive expression. Sci. Rep. 10:5977. doi: 10.1038/s41598-020-62870-7

Ochs, M., Sabouret, N., and Corruble, V. (2008). “Modeling the dynamics of virtual agent's social relations,” in 8th International Conference on Intelligent Virtual Agents, 524–525. doi: 10.1007/978-3-540-85483-8_71

Orgeta, V. (2010). Effects of age and task difficulty on recognition of facial affect. J. Gerontol. 65B, 323–327. doi: 10.1093/geronb/gbq007

Pavic, K., Oker, A., Chetouani, M., and Chabe, L. (2021). Age-related changes in gaze behaviour during social interaction: an eye-tracking study with an embodied conversational agent. Quart. J. Exper. Psychol. 74, 1128–1139. doi: 10.1177/1747021820982165

Plutchik, R. (2001). The nature of emotions: human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. Am. Sci. 89, 344–350. doi: 10.1511/2001.28.344

Roseman, I. J. (1984). “Cognitive determinants of emotion: a structural theory,” in Review of personality and social psychology: Vol. 5. Emotions, relationships and health, ed. P. Shaver (Beverly Hills, CA: Sage), 11–36.

Ruby, P., and Decety, J. (2001). Effect of subjective perspective taking during simulation of action: a PET investigation of agency. Nat. Neurosci. 4, 546–550. doi: 10.1038/87510

Ruffman, T., Henry, J. D., Livingstone, V., and Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: implications for neuropsychological models of aging. Neurosci. Biobehav. Rev. 32, 863–881. doi: 10.1016/j.neubiorev.2008.01.001

Rymarczyk, K., Zurawski, Ł., Jankowiak-Siuda, K., and Szatkowska, I. (2016). Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Front. Psychol. 7:1853. doi: 10.3389/fpsyg.2016.01853

Scherer, K. R. (1984). “On the nature and function of emotion: a component process approach,” in Approaches to Emotion, eds. K. R. Scherer and P. Ekman (Hillsdale, NJ: Lawrence Erlbaum Associates), 293–317.

Seibt, B., Mühlberger, A., Likowski, K. U., and Weyers, P. (2015). Facial mimicry in its social setting. Front. Psychol. 6:1122. doi: 10.3389/fpsyg.2015.01122

Smith, C. A., and Ellsworth, P. C. (1985). Patterns of cognitive appraisal in emotion. J. Person. Soc. Psychol. 48, 813–838. doi: 10.1037/0022-3514.48.4.813

Steunebrink, B. R., Dastani, M., and Meyer, J.-J. C. (2009). “The OCC model revisited,” in The 4th Workshop on Emotion and Computing.

Sze, J. A., Goodkind, M. S., Gyurak, A., and Levenson, R. W. (2012). Aging and emotion recognition: not just a losing matter. Psychol. Aging 27, 940–950. doi: 10.1037/a0029367

Torrance, G. W., Feeny, D., and Furlong, W. (2001). Visual analog scales: do they have a role in the measurement of preferences for health states? Med. Decis. Making 21, 329–334. doi: 10.1177/02729890122062622

Tu, Y.-Z., Lin, D.-W., Suzuki, A., and Goh, J. O. S. (2018). East Asian young and older adult perceptions of emotional faces from an age- and sex-fair east Asian facial expression database. Front. Psychol. 9:2358. doi: 10.3389/fpsyg.2018.02358

Keywords: social agent, emotion in human-computer interaction, adaptation to user state, influencing human emotional state, subjective perception, emotional expression

Citation: Numata T, Asa Y, Hashimoto T and Karasawa K (2024) Young and old persons' subjective feelings when facing with a non-human computer-graphics-based agent's emotional responses in consideration of differences in emotion perception. Front. Comput. Sci. 6:1321977. doi: 10.3389/fcomp.2024.1321977

Received: 15 October 2023; Accepted: 17 January 2024;

Published: 31 January 2024.

Edited by:

Pierre Raimbaud, École Nationale d'Ingénieurs de Saint-Etienne, France

Reviewed by:

Darragh Higgins, Trinity College Dublin, Ireland

Leon O. H. Kroczek, University of Regensburg, Germany

Copyright © 2024 Numata, Asa, Hashimoto and Karasawa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Takashi Numata, takashi.numata.rf@hitachi.com
