Abstract

We study p-Laplacians and spectral clustering for a recently proposed hypergraph model that incorporates edge-dependent vertex weights (EDVW). These weights can reflect different importance of vertices within a hyperedge, thus conferring the hypergraph model higher expressivity and flexibility. By constructing submodular EDVW-based splitting functions, we convert hypergraphs with EDVW into submodular hypergraphs for which the spectral theory is better developed. In this way, existing concepts and theorems such as p-Laplacians and Cheeger inequalities proposed under the submodular hypergraph setting can be directly extended to hypergraphs with EDVW. For submodular hypergraphs with EDVW-based splitting functions, we propose an efficient algorithm to compute the eigenvector associated with the second smallest eigenvalue of the hypergraph 1-Laplacian. We then utilize this eigenvector to cluster the vertices, achieving higher clustering accuracy than traditional spectral clustering based on the 2-Laplacian. More broadly, the proposed algorithm works for all submodular hypergraphs that are graph reducible. Numerical experiments using real-world data demonstrate the effectiveness of combining spectral clustering based on the 1-Laplacian and EDVW.

1. Introduction

Spectral clustering makes use of eigenvalues and eigenvectors of graph Laplacians to group vertices in a graph. It is one of the most popular clustering methods due to its generality, efficiency, and strong theoretical basis. Standard graph Laplacians were first adopted to obtain relaxations of balanced graph cut criteria (Von Luxburg, 2007). Later, these were generalized to p-Laplacians, which are able to provide better approximations of the Cheeger constant (Amghibech, 2003; Bühler and Hein, 2009). In particular, the second smallest eigenvalue of the 1-Laplacian is identical to the Cheeger constant, and the partition that achieves the optimal Cheeger cut can be obtained by thresholding the corresponding eigenvector (Szlam and Bresson, 2010).

Graphs are widely used to model pairwise interactions, but in many real-world applications the entities engage in higher-order relationships (Benson et al., 2016; Schaub et al., 2021). For instance, in co-authorship networks multiple authors may interact in writing an article together (Chitra and Raphael, 2019). In an e-commerce system, multiple customers can be associated if they once purchased the same product (Li et al., 2018). In text mining, multiple documents are related to each other if they contain the same keywords (Hayashi et al., 2020; Zhu et al., 2021). Such multi-way relations can be modeled by hypergraphs, where the notion of an edge is generalized to a hyperedge that can connect more than two vertices.

In graphs, there is only one way to cut an edge; thus, a scalar weight is enough to characterize the cut. In hypergraphs, however, there may exist multiple ways to split a hyperedge. Consequently, a splitting function $w_e$ is introduced for each hyperedge $e$ in the hypergraph, assigning a cost to every possible cut of $e$. For any $\mathcal{S} \subseteq e$, $w_e(\mathcal{S})$ indicates the penalty of partitioning $e$ into $\mathcal{S}$ and $e \setminus \mathcal{S}$. In particular, when $w_e$ is submodular for every hyperedge $e$, the corresponding model is termed a submodular hypergraph, which has desirable mathematical properties that make it convenient for theoretical analysis (Li and Milenkovic, 2017, 2018). A series of results in graph spectral theory including p-Laplacians, nodal domain theorems, and Cheeger inequalities have been generalized to submodular hypergraphs (Li and Milenkovic, 2018; Yoshida, 2019).

The choice of hyperedge splitting functions has a large practical effect on the hypergraph clustering performance. There are mainly two types of splitting functions in existing work. One is the so-called all-or-nothing splitting function in which an identical penalty is charged if the hyperedge is split regardless of how its vertices are separated (Hein et al., 2013). Another slightly more general type is the class of cardinality-based splitting functions where the splitting penalty depends only on the number of vertices placed on each side of the split (Veldt et al., 2020).

The limitation of existing splitting functions is that they treat all the vertices in a hyperedge equally while in practice these vertices may have different degrees of contribution to the hyperedge. Such information can be captured by edge-dependent vertex weights (EDVW): every vertex v is associated with a weight γe(v) for each incident hyperedge e that reflects the contribution or importance of v to e (Chitra and Raphael, 2019). Going back to the aforementioned examples, EDVW can be used to model the author positions in a co-authored article, the quantity of a product bought by a customer, as well as the frequency of a word in a document.

Spectral theory on the hypergraph model with EDVW is much less developed than on submodular hypergraphs. Existing works studying hypergraphs with EDVW have only focused on random walk-based Laplacian matrices (Chitra and Raphael, 2019; Hayashi et al., 2020; Zhu et al., 2021), thus raising the question: How to define non-linear p-Laplacians for the hypergraph model that incorporates EDVW? Our basic idea for solving this problem is to convert a hypergraph with EDVW into a submodular hypergraph, so that p-Laplacians and related theorems developed for submodular hypergraphs can be directly leveraged. Based on our earlier work (Zhu et al., 2022), the model conversion can be achieved by defining submodular EDVW-based splitting functions in the form $w_e(\mathcal{S}) = g_e\big(\sum_{v \in \mathcal{S}} \gamma_e(v)\big)$, where $g_e$ is a concave function and the splitting penalty depends only on the sum of EDVW in $\mathcal{S}$. Moreover, hypergraphs with such splitting functions are proved to be graph reducible, meaning that there exists some graph sharing the same cut properties (Zhu and Segarra, 2022).

Since the 1-Laplacian provides the tightest approximation of the Cheeger constant, a follow-up question is: How to apply 1-spectral clustering to submodular hypergraphs with EDVW-based splitting functions? To this end, we develop an algorithm to compute the second eigenvector of the 1-Laplacian for EDVW-based submodular hypergraphs based on the inverse power method (IPM). The IPM was initially proposed for undirected graphs (Hein and Bühler, 2010), then generalized to submodular hypergraphs with cardinality-based splitting functions (Li and Milenkovic, 2018). A key to the success of IPM is an efficient solution to the inner-loop optimization problem in it. In this paper, we derive an equivalent definition of graph reducibility based on which we further propose an efficient solution to the inner problem that works for all graph reducible submodular hypergraphs including those with EDVW. The proposed solution can also be used to solve submodular function minimization (SFM) problems (Bach, 2013) when the submodular function can be represented as sums of concave functions applied to modular functions.

The major contributions of this paper can be summarized as follows:

(1) We present an equivalent definition of graph reducibility in terms of the Lovász extension of the cut function (see Theorem 1), which is helpful for understanding the relations between graph Laplacians and hypergraph Laplacians.

(2) We propose an algorithm to compute the eigenvector of the 1-Laplacian associated with the second smallest eigenvalue for all graph reducible submodular hypergraphs including those with EDVW-based splitting functions, and use the eigenvector for 1-spectral clustering.

(3) We validate the effectiveness of the proposed algorithm, which leverages both EDVW and 1-spectral clustering, via numerical experiments on real-world datasets.

1.1. Paper outline

The rest of this paper is structured as follows. Preliminary mathematical concepts and submodular hypergraphs are reviewed in section 2. Section 3 introduces the hypergraph model with EDVW and shows how to convert it to graph reducible submodular hypergraphs by constructing EDVW-based splitting functions. The section also presents two equivalent definitions for graph reducibility. The proposed 1-spectral clustering algorithm is described in section 4. Section 5 presents experimental results. The relation between hypergraph Laplacians defined in different ways and the application of the proposed algorithm in SFM are discussed in section 6. Closing remarks are included in section 7.

1.2. Notation

For a vector x and a set , denotes the vector formed by the entries of x indexed by and . The operator projects every entry of x onto the range [a, b]. Throughout the paper we assume that the considered hypergraphs are connected.

2. Preliminaries

2.1. Mathematical preliminaries

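For a finite set $\mathcal{V}$, a set function $F: 2^{\mathcal{V}} \to \mathbb{R}$ is called submodular if $F(\mathcal{S}) + F(\mathcal{T}) \geq F(\mathcal{S} \cup \mathcal{T}) + F(\mathcal{S} \cap \mathcal{T})$ for every $\mathcal{S}, \mathcal{T} \subseteq \mathcal{V}$. Considering a set function $F: 2^{\mathcal{V}} \to \mathbb{R}$ such that $F(\emptyset) = 0$ where $\mathcal{V} = \{1, 2, \cdots, N\}$, its Lovász extension $f: \mathbb{R}^N \to \mathbb{R}$ is defined as follows. For any $\mathbf{x} \in \mathbb{R}^N$, sort its entries in non-increasing order $x_{i_1} \geq x_{i_2} \geq \cdots \geq x_{i_N}$, where $(i_1, i_2, \cdots, i_N)$ is a permutation of $(1, 2, \cdots, N)$, and set

$$f(\mathbf{x}) = \sum_{j=1}^{N-1} F(\mathcal{S}_j)\,(x_{i_j} - x_{i_{j+1}}) + F(\mathcal{V})\, x_{i_N}, \tag{1}$$

where $\mathcal{S}_j = \{i_1, \cdots, i_j\}$ for $1 \leq j < N$. A set function $F$ is submodular if and only if its Lovász extension $f$ is convex (Lovász, 1983). For any $\mathcal{S} \subseteq \mathcal{V}$, $f(\mathbf{1}_{\mathcal{S}}) = F(\mathcal{S})$, where $\mathbf{1}_{\mathcal{S}}$ is the indicator vector of $\mathcal{S}$. If $F(\mathcal{V}) = 0$, $f(a\mathbf{x} + b\mathbf{1}) = a f(\mathbf{x})$ for any $a \in \mathbb{R}_{\geq 0}$, $b \in \mathbb{R}$. More properties of submodular functions can be found in Appendix A.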
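As a minimal sketch of the sorting-based definition above, the following Python function evaluates the Lovász extension of an arbitrary set function by brute force. The names `lovasz_extension`, `path_cut`, and `EDGES` are illustrative and not taken from the paper, and vertices are indexed from 0 for convenience.

```python
def lovasz_extension(F, x):
    """Evaluate the Lovasz extension of a set function F (with F(empty) = 0) at x.

    F takes a frozenset of indices in {0, ..., N-1}; x is a sequence of N numbers.
    """
    n = len(x)
    order = sorted(range(n), key=lambda i: -x[i])  # non-increasing order of entries
    value = 0.0
    prefix = set()
    for j, i in enumerate(order):
        prefix.add(i)
        # The last term uses F(V) * x_{i_N}, i.e. a "next" entry equal to zero.
        nxt = x[order[j + 1]] if j + 1 < n else 0.0
        value += F(frozenset(prefix)) * (x[i] - nxt)
    return value


# Hypothetical example: cut function of the undirected path 0 - 1 - 2, unit weights.
EDGES = [(0, 1), (1, 2)]


def path_cut(S):
    """Number of path edges with exactly one endpoint in S."""
    return float(sum((u in S) != (v in S) for u, v in EDGES))
```

For this graph cut function the extension coincides with the total variation $\sum_{(u,v)} |x_u - x_v|$, and because the cut of the full vertex set is zero the value is unchanged by adding a constant and scales linearly under non-negative scaling.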

2.2. Submodular hypergraphs

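Let $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mu, \{w_e\})$ denote a submodular hypergraph (Li and Milenkovic, 2018) where $\mathcal{V} = \{1, 2, \cdots, N\}$ is the vertex set and $\mathcal{E}$ is the set of hyperedges. The function $\mu: \mathcal{V} \to \mathbb{R}_{+}$ assigns positive weights to vertices. Each hyperedge $e \in \mathcal{E}$ is associated with a submodular splitting function $w_e: 2^e \to \mathbb{R}_{\geq 0}$ that assigns non-negative penalties to every possible split of $e$. Moreover, $w_e$ is required to satisfy $w_e(\emptyset) = 0$ and be symmetric so that $w_e(\mathcal{S}) = w_e(e \setminus \mathcal{S})$ for any $\mathcal{S} \subseteq e$. The domain of $w_e$ can be extended from $2^e$ to $2^{\mathcal{V}}$ by setting $w_e(\mathcal{S}) = w_e(\mathcal{S} \cap e)$ for any $\mathcal{S} \subseteq \mathcal{V}$, guaranteeing that the submodularity is maintained.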

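A cut is a partition of the vertex set $\mathcal{V}$ into two disjoint, non-empty subsets denoted by $\mathcal{S}$ and its complement $\mathcal{V} \setminus \mathcal{S}$. The weight of the cut in the hypergraph $\mathcal{G}$ is defined as the sum of splitting penalties associated with each hyperedge (Li and Milenkovic, 2018; Veldt et al., 2020), i.e.,

$$\mathrm{cut}_{\mathcal{G}}(\mathcal{S}) = \sum_{e \in \mathcal{E}} w_e(\mathcal{S}). \tag{2}$$

The normalized Cheeger cut (NCC) is defined as

$$\mathrm{NCC}(\mathcal{S}) = \frac{\mathrm{cut}_{\mathcal{G}}(\mathcal{S})}{\min\{\mathrm{vol}(\mathcal{S}), \mathrm{vol}(\mathcal{V} \setminus \mathcal{S})\}}, \tag{3}$$

where $\mathrm{vol}(\mathcal{S}) = \sum_{v \in \mathcal{S}} \mu(v)$ denotes the volume of $\mathcal{S}$. The 2-way Cheeger constant is defined as the minimum NCC over all non-empty subsets of $\mathcal{V}$ except $\mathcal{V}$ itself, i.e.,

$$h_2 = \min_{\emptyset \subset \mathcal{S} \subset \mathcal{V}} \mathrm{NCC}(\mathcal{S}). \tag{4}$$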

The solution to (4) provides an optimal partitioning in the sense that we obtain two clusters which are balanced in terms of volume and loosely connected as captured by a small cut weight. In this paper, we adopt the minimization of NCC as our objective. There exist other clustering measures such as ratio cut, normalized cut and ratio Cheeger cut, which are closely related (Von Luxburg, 2007; Bühler and Hein, 2009).
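To make the objective concrete, the sketch below computes the cut weight, the NCC, and the Cheeger constant by exhaustive enumeration on a toy hypergraph. It is only feasible for a handful of vertices and is not the method proposed in this paper. The helper names and the example data (`all_or_nothing`, `VERTICES`, `MU`, `HYPEREDGES`) are hypothetical.

```python
from itertools import combinations


def all_or_nothing(e, penalty=1.0):
    """Splitting function charging a fixed penalty whenever hyperedge e is split."""
    e = frozenset(e)
    return lambda S: penalty if 0 < len(frozenset(S) & e) < len(e) else 0.0


def cut_weight(S, hyperedges, split_fns):
    """Sum of the splitting penalties w_e(S ∩ e) over all hyperedges."""
    return sum(w(frozenset(S) & frozenset(e)) for e, w in zip(hyperedges, split_fns))


def ncc(S, vertices, mu, hyperedges, split_fns):
    """Normalized Cheeger cut of the bipartition (S, V \\ S)."""
    S = frozenset(S)
    vol_S = sum(mu[v] for v in S)
    vol_rest = sum(mu[v] for v in vertices if v not in S)
    return cut_weight(S, hyperedges, split_fns) / min(vol_S, vol_rest)


def cheeger_brute_force(vertices, mu, hyperedges, split_fns):
    """Minimum NCC over all non-empty proper subsets (exponential time)."""
    best_val, best_set = float("inf"), None
    for k in range(1, len(vertices)):
        for S in combinations(vertices, k):
            val = ncc(S, vertices, mu, hyperedges, split_fns)
            if val < best_val:
                best_val, best_set = val, frozenset(S)
    return best_val, best_set


# Hypothetical toy data: a chain of three 2-vertex hyperedges with unit vertex weights.
VERTICES = [0, 1, 2, 3]
MU = {v: 1.0 for v in VERTICES}
HYPEREDGES = [{0, 1}, {1, 2}, {2, 3}]
SPLIT_FNS = [all_or_nothing(e) for e in HYPEREDGES]
```

On this toy example the balanced split {0, 1} versus {2, 3} cuts a single hyperedge and attains the minimum.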

Optimally solving (4) has been shown to be NP-hard for graphs (Wagner and Wagner, 1993), let alone hypergraphs. In spectral graph theory, Cheeger inequalities are derived to bound and approximate the Cheeger constant using graph p-Laplacians (Amghibech, 2003; Bühler and Hein, 2009; Szlam and Bresson, 2010; Chang, 2016; Chang et al., 2017; Tudisco and Hein, 2018). The results have been generalized to submodular hypergraphs (Li and Milenkovic, 2018; Yoshida, 2019). The p-Laplacian $\triangle_p$ of a submodular hypergraph is defined as an operator that, for all $\mathbf{x} \in \mathbb{R}^N$, induces

$$\langle \mathbf{x}, \triangle_p(\mathbf{x}) \rangle = Q_p(\mathbf{x}) = \sum_{e \in \mathcal{E}} \vartheta_e\, f_e(\mathbf{x})^p, \tag{5}$$

where $\vartheta_e = \max_{\mathcal{S} \subseteq e} w_e(\mathcal{S})$ and $f_e$ is the Lovász extension of the normalized splitting function $\vartheta_e^{-1} w_e$. Notice that $\triangle_p$ can be alternatively defined in terms of the subdifferential of $f_e$ (Li and Milenkovic, 2018), while we keep the definition (5) since it is more instrumental to our development. In particular, when $p = 1$, $Q_1(\mathbf{x})$ turns out to be the Lovász extension of the cut function defined in (2). It has been proved that the second smallest eigenvalue of the 1-Laplacian is identical to the Cheeger constant $h_2$ (Li and Milenkovic, 2018).

3. EDVW-based submodular hypergraphs

3.1. The hypergraph model with EDVW

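Let $\mathcal{H} = (\mathcal{V}, \mathcal{E}, \mu, \kappa, \{\gamma_e\})$ represent a hypergraph with EDVW (Chitra and Raphael, 2019) where $\mathcal{V}$, $\mathcal{E}$, and $\mu$ respectively denote the vertex set, the hyperedge set, and positive vertex weights. The function $\kappa: \mathcal{E} \to \mathbb{R}_{+}$ assigns positive weights to hyperedges, and those weights can reflect the strength of connection. Each hyperedge $e \in \mathcal{E}$ is associated with a function $\gamma_e: \mathcal{V} \to \mathbb{R}_{\geq 0}$ to assign edge-dependent vertex weights. For $v \in e$, $\gamma_e(v)$ is positive and measures the importance of the vertex $v$ to the hyperedge $e$; for $v \notin e$, $\gamma_e(v)$ is set to zero. For convenience, we define $\gamma_e(\mathcal{S}) = \sum_{v \in \mathcal{S}} \gamma_e(v)$ for $\mathcal{S} \subseteq \mathcal{V}$.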

The motivation for introducing EDVW is to enable the hypergraph model to describe cases in which the vertices in the same hyperedge contribute differently to this hyperedge. This information cannot be captured by hypergraphs adopting the all-or-nothing or cardinality-based splitting functions and is also hard to describe directly with submodular hypergraphs, but it can be conveniently represented via EDVW. For example, Chitra and Raphael (2019) study the application of ranking authors in an academic citation network where authors and papers are respectively modeled as vertices and hyperedges. For a paper e and any author v of the paper, the corresponding EDVW γe(v) is set to 2 if v is the first or last author; otherwise the weight is set to 1.

3.2. Building submodular hypergraphs from EDVW

In order to effectively handle EDVW while still leveraging existing results obtained for submodular hypergraphs, we consider the conversion from a hypergraph with EDVW $\mathcal{H} = (\mathcal{V}, \mathcal{E}, \mu, \kappa, \{\gamma_e\})$ to a submodular hypergraph $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mu, \{w_e\})$. The basic idea is to keep $\mathcal{V}$, $\mathcal{E}$, and $\mu$ unchanged, and construct submodular splitting functions $\{w_e\}$ from EDVW $\{\gamma_e\}$ and hyperedge weights $\kappa$. After such a transformation, we can directly extend concepts such as p-Laplacians and related theorems proposed for the submodular hypergraph model (Li and Milenkovic, 2018) to the EDVW-based hypergraph model.

In our preliminary works (Zhu and Segarra, 2022; Zhu et al., 2022), we have proposed a class of submodular EDVW-based splitting functions in the following form

$$w_e(\mathcal{S}) = h_e(\kappa(e)) \cdot g_e(\gamma_e(\mathcal{S})), \quad \mathcal{S} \subseteq e, \tag{6}$$

where $h_e: \mathbb{R}_{+} \to \mathbb{R}_{+}$ is an arbitrary function and $g_e: [0, \gamma_e(e)] \to \mathbb{R}_{\geq 0}$ is concave, symmetric with respect to $\gamma_e(e)/2$, and satisfies $g_e(0) = 0$. The resulting $w_e$ is a valid splitting function that is non-negative, submodular, symmetric, and satisfies $w_e(\emptyset) = 0$. In practice, it is reasonable to select a non-decreasing function for $h_e$ such as $h_e(x) = 1$ or $h_e(x) = x$, since a larger hyperedge weight $\kappa(e)$ is expected to lead to a larger splitting penalty for the same split of the hyperedge. Possible choices of $g_e$ include $g_e(x) = x \cdot (\gamma_e(e) - x)$, $g_e(x) = \min\{x, \gamma_e(e) - x\}$, and $g_e(x) = \min\{x, \gamma_e(e) - x, b\}$ where $b$ is a positive parameter. Also notice that for trivial EDVW, namely $\gamma_e(v) = 1$ for all $v \in e$, the splitting functions defined in (6) reduce to cardinality-based ones (Veldt et al., 2020).
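The construction above can be illustrated with a short Python sketch that builds a splitting function from EDVW and verifies symmetry and submodularity by enumerating all subsets of a small hyperedge. The function names and the example weights are hypothetical, and the exhaustive check is only meant as a sanity test on tiny instances, not as part of the proposed method.

```python
from itertools import combinations


def make_edvw_split(gamma, kappa, g, h=lambda k: k):
    """Return w_e(S) = h(kappa) * g(gamma_e(S), gamma_e(e)) for one hyperedge.

    gamma: dict mapping each vertex of the hyperedge to its positive EDVW.
    g: function of (x, total) playing the role of the concave function g_e.
    """
    total = sum(gamma.values())

    def w(S):
        return h(kappa) * g(sum(gamma[v] for v in S if v in gamma), total)

    return w


def g_min(x, total):
    """One of the concave choices mentioned in the text: min{x, gamma_e(e) - x}."""
    return min(x, total - x)


def subsets(items):
    items = list(items)
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            yield frozenset(combo)


def is_symmetric_submodular(w, e, tol=1e-12):
    """Brute-force check of w(S) = w(e \\ S) and of the submodular inequality."""
    e = frozenset(e)
    all_s = list(subsets(e))
    symmetric = all(abs(w(S) - w(e - S)) <= tol for S in all_s)
    submodular = all(
        w(S) + w(T) + tol >= w(S | T) + w(S & T) for S in all_s for T in all_s
    )
    return symmetric and submodular


# Hypothetical hyperedge in which vertex "a" is twice as important as "b" and "c".
GAMMA = {"a": 2.0, "b": 1.0, "c": 1.0}
w_e = make_edvw_split(GAMMA, kappa=1.5, g=g_min)
```

Here separating "a" from the rest costs 1.5 · min{2, 2} = 3.0, whereas separating "b" costs only 1.5 · min{1, 3} = 1.5, which is exactly the asymmetry that cardinality-based penalties cannot express.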

3.3. Hypergraph-to-graph reductions

Submodular hypergraphs with splitting functions defined in (6) have the desirable property that they are graph reducible. In other words, they can be projected onto a graph that shares identical cut properties. Following Veldt et al. (2020), we consider the reduction of a hypergraph to a (possibly directed) graph with a potentially augmented vertex set. For a directed graph (digraph) $\tilde{\mathcal{G}}$ with vertex set $\tilde{\mathcal{V}}$ and weighted adjacency matrix $\mathbf{A}$ whose entry $A_{uv}$ denotes the weight of the directed edge pointing from $u$ to $v$, its cut function is defined as

$$\mathrm{cut}_{\tilde{\mathcal{G}}}(\mathcal{S}) = \sum_{u \in \mathcal{S},\, v \in \tilde{\mathcal{V}} \setminus \mathcal{S}} A_{uv}. \tag{7}$$
In the following, we state a formal definition for graph reducibility in terms of cut weights, which is a variant of the definition in terms of hyperedge splitting functions stated in Veldt et al. (2020).

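Definition 1. For a submodular hypergraph $\mathcal{G}$ with vertex set $\mathcal{V}$, we say that its cut function $\mathrm{cut}_{\mathcal{G}}$ is reducible to the cut function $\mathrm{cut}_{\tilde{\mathcal{G}}}$ of a directed graph $\tilde{\mathcal{G}}$ with vertex set $\tilde{\mathcal{V}} = \mathcal{V} \cup \mathcal{V}'$, where $\mathcal{V}'$ is a set of auxiliary vertices, if the following equality holds

$$\mathrm{cut}_{\mathcal{G}}(\mathcal{S}) = \min_{\mathcal{T} \subseteq \mathcal{V}'} \mathrm{cut}_{\tilde{\mathcal{G}}}(\mathcal{S} \cup \mathcal{T}) \quad \text{for all } \mathcal{S} \subseteq \mathcal{V}. \tag{8}$$

In the following theorem, we show an equivalent condition for graph reducibility regarding the Lovász extension of the cut function, which is beneficial for our later development of the 1-spectral clustering algorithm. The Lovász extension of the graph cut function can be written as

$$\tilde{Q}_1(\mathbf{y}) = \sum_{u, v \in \tilde{\mathcal{V}}} A_{uv} \max\{y_u - y_v, 0\}, \tag{9}$$

where $\mathbf{y}$ is a vector of length $|\tilde{\mathcal{V}}|$.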

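Theorem 1. The equality presented in (8) is equivalent to

$$Q_1(\mathbf{x}) = \min_{\mathbf{x}' \in \mathbb{R}^M} \tilde{Q}_1(\mathbf{y}) \quad \text{for all } \mathbf{x} \in \mathbb{R}^N, \tag{10}$$

where $Q_1(\mathbf{x})$ and $\tilde{Q}_1(\mathbf{y})$ are respectively defined in (5) and (9), $N = |\mathcal{V}|$, $M = |\mathcal{V}'|$, and $\mathbf{y} \in \mathbb{R}^{N+M}$ is composed of $\mathbf{x}$ and $\mathbf{x}'$.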

Proof. The equivalence between (8) and (10) is proved in Appendix B.

It has been shown in Veldt et al. (2020) that all hypergraphs with submodular cardinality-based splitting functions are graph reducible. Our earlier work (Zhu and Segarra, 2022) has generalized the conclusion to hypergraphs with submodular EDVW-based splitting functions. In the following section, we propose a 1-spectral clustering algorithm for all submodular hypergraphs that are graph reducible including those EDVW-based ones, which are the focus of this paper.

4. Spectral clustering based on the 1-Laplacian

4.1. IPM-based 1-spectral clustering

We study spectral clustering algorithms for EDVW-based submodular hypergraphs leveraging the 1-Laplacian. As mentioned in section 2.2, for submodular hypergraphs the Cheeger constant $h_2$ is equal to the second smallest eigenvalue $\lambda_2$ of the 1-Laplacian $\triangle_1$. The corresponding optimal bipartition can be obtained by thresholding the eigenvector of $\triangle_1$ associated with $\lambda_2$ (Li and Milenkovic, 2018). This eigenvector can be computed by minimizing

$$R_1(\mathbf{x}) = \frac{Q_1(\mathbf{x})}{\min_{c \in \mathbb{R}} \lVert \mathbf{x} - c\mathbf{1} \rVert_{1,\mu}}, \tag{11}$$

where $\lVert \mathbf{x} \rVert_{1,\mu} = \sum_{v \in \mathcal{V}} \mu(v)\,\lvert x_v \rvert$. Given the eigenvector $\mathbf{x}$, a partitioning can be defined as $\mathcal{S} = \{v \in \mathcal{V} : x_v > t\}$ and its complement, where $t$ is a threshold value. The optimal $t$ can be determined as the one that minimizes the NCC in (3). The pipeline for the 1-spectral clustering algorithm is summarized in Algorithm 1.

Algorithm 1

| 1: Input: hypergraph $\mathcal{H}$ with EDVW |

| 2: Convert $\mathcal{H}$ to a submodular hypergraph $\mathcal{G}$ by constructing submodular splitting functions based on (6) |

| 3: Compute the second eigenvector of the hypergraph 1-Laplacian via the minimization of $R_1(\mathbf{x})$ in (11) |

| 4: Threshold the obtained eigenvector to get the bipartition of $\mathcal{V}$, where we choose the threshold value as the one that minimizes the NCC in (3) |

1-spectral clustering for hypergraphs with EDVW.
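The thresholding step of the pipeline can be sketched as a sweep over the distinct entries of the eigenvector. The function below is a minimal illustration that assumes vertices indexed 0, ..., N−1 and a user-supplied cut function; `best_threshold_cut`, `path_cut`, and the example vector are hypothetical names and data rather than part of the paper.

```python
def best_threshold_cut(x, mu, cut_fn):
    """Sweep thresholds t over the entries of x and minimise the NCC.

    x: candidate eigenvector (list of floats), mu: positive vertex weights,
    cut_fn: maps a frozenset S of vertex indices to the cut weight of S.
    Returns (ncc_value, S) with S = {v : x[v] > t}; (inf, None) if x is constant.
    """
    n = len(x)
    total = sum(mu)
    best_val, best_set = float("inf"), None
    # Dropping the largest entry keeps S non-empty; S is always a proper subset.
    for t in sorted(set(x))[:-1]:
        S = frozenset(v for v in range(n) if x[v] > t)
        vol = sum(mu[v] for v in S)
        val = cut_fn(S) / min(vol, total - vol)
        if val < best_val:
            best_val, best_set = val, S
    return best_val, best_set


# Hypothetical example: path graph 0 - 1 - 2 - 3 with degree vertex weights.
PATH_EDGES = [(0, 1), (1, 2), (2, 3)]


def path_cut(S):
    return float(sum((u in S) != (v in S) for u, v in PATH_EDGES))


ncc_value, cluster = best_threshold_cut([-0.9, -0.7, 0.6, 0.8], [1, 2, 2, 1], path_cut)
```

Only N − 1 candidate partitions are examined, so the sweep is cheap compared with the eigenvector computation itself.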

The minimization of $R_1(\mathbf{x})$ can be solved based on the inverse power method (Hein and Bühler, 2010; Li and Milenkovic, 2018), as outlined in Algorithm 2. Three functions are introduced: $\mu^{+}(\mathbf{x}) = \sum_{v: x_v > 0} \mu(v)$, $\mu^{-}(\mathbf{x}) = \sum_{v: x_v < 0} \mu(v)$, and $\mu^{0}(\mathbf{x}) = \sum_{v: x_v = 0} \mu(v)$. Although this algorithm cannot guarantee convergence to the second eigenvector, the objective $R_1(\mathbf{x})$ is guaranteed to decrease and converge in the iterative process. Moreover, if we start from some point $\mathbf{x} = \mathbf{1}_{\mathcal{S}}$ in Algorithm 2 where $(\mathcal{S}, \mathcal{V} \setminus \mathcal{S})$ is a given partition of $\mathcal{V}$, then each step of the IPM-based method gives a partition that has a smaller (or at least equal) NCC value (see theorem 4.2 in Hein and Setzer, 2011). This implies that we can leverage the partition obtained via other methods as initialization. The algorithm was first proposed for the undirected graph setting (Hein and Bühler, 2010), then generalized to submodular hypergraphs with cardinality-based splitting functions (Li and Milenkovic, 2018). It is a special case of a more general class of minimization algorithms called RatioDCA and generalized RatioDCA, proposed in Hein and Setzer (2011) and Tudisco et al. (2018) in order to handle more types of balanced graph cuts and modularity measures, respectively. The major difference between the graph setting and the hypergraph setting lies in how the inner-loop optimization problem (cf. line 5 in Algorithm 2) is solved.

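Algorithm 2

| 1: Input: submodular hypergraph $\mathcal{G}$ with $N$ vertices, accuracy $\epsilon$ |

| 2: Initialization: non-constant $\mathbf{x} \in \mathbb{R}^N$ subject to $0 \in \arg\min_c \lVert \mathbf{x} - c\mathbf{1} \rVert_{1,\mu}$, $\lambda \leftarrow R_1(\mathbf{x})$ |

| 3: repeat |

| 4: for every $v \in \mathcal{V}$: $g_v \leftarrow \mathrm{sign}(x_v)\,\mu(v)$ if $x_v \neq 0$, and $g_v \leftarrow -\frac{\mu^{+}(\mathbf{x}) - \mu^{-}(\mathbf{x})}{\mu^{0}(\mathbf{x})}\,\mu(v)$ if $x_v = 0$ |

| 5: $\mathbf{x} \leftarrow \arg\min_{\lVert \mathbf{x} \rVert \leq 1} Q_1(\mathbf{x}) - \lambda \langle \mathbf{x}, \mathbf{g} \rangle$ (inner problem) |

| 6: $c \leftarrow \arg\min_c \lVert \mathbf{x} - c\mathbf{1} \rVert_{1,\mu}$ |

| 7: $\mathbf{x} \leftarrow \mathbf{x} - c\mathbf{1}$ |

| 8: $\lambda' \leftarrow \lambda$, $\lambda \leftarrow R_1(\mathbf{x})$ |

| 9: until $\lvert \lambda - \lambda' \rvert / \lambda' < \epsilon$ |

| 10: Output: $\mathbf{x}$ |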
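Two ingredients of the outer loop are simple enough to sketch in isolation. The centering step minimizes a weighted sum of absolute deviations, which is attained at a weighted median, and the vector g can be read as a subgradient of the weighted 1-norm whose entries sum to zero. The Python sketch below rests on those two readings, which follow the IPM updates in the cited works as we understand them; the function names are hypothetical and the inner problem itself is not addressed here.

```python
def weighted_median(x, mu):
    """Return a minimiser c of sum_v mu[v] * |x[v] - c| (a weighted median of x)."""
    half = sum(mu) / 2.0
    acc = 0.0
    for value, weight in sorted(zip(x, mu)):
        acc += weight
        if acc >= half:
            return value


def l1_subgradient(x, mu):
    """Subgradient of the mu-weighted 1-norm at x, balanced on the zero entries.

    Assumed form: g_v = sign(x_v) * mu_v when x_v != 0; on zero entries the
    value -(mu_plus - mu_minus) / mu_zero * mu_v makes the entries of g sum to 0.
    """
    mu_plus = sum(m for xv, m in zip(x, mu) if xv > 0)
    mu_minus = sum(m for xv, m in zip(x, mu) if xv < 0)
    mu_zero = sum(m for xv, m in zip(x, mu) if xv == 0)
    g = []
    for xv, m in zip(x, mu):
        if xv > 0:
            g.append(m)
        elif xv < 0:
            g.append(-m)
        else:
            # mu_zero > 0 here because this entry itself is zero and mu is positive.
            g.append(-(mu_plus - mu_minus) / mu_zero * m)
    return g
```

When 0 is a weighted median of x, the balancing factor on the zero entries has magnitude at most one, so g is a valid subgradient.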

In Li and Milenkovic (2018), the authors solved the inner problem using a random coordinate descent method (Ene and Nguyen, 2015) together with a divide-and-conquer algorithm proposed in Jegelka et al. (2013). The computational complexity of the divide-and-conquer algorithm depends on the time of solving the problem $\min_{\mathcal{S} \subseteq e} w_e(\mathcal{S}) + z(\mathcal{S})$ for an arbitrary vector $\mathbf{z} \in \mathbb{R}^{|e|}$, where $z(\mathcal{S}) = \sum_{v \in \mathcal{S}} z_v$. For a cardinality-based splitting function, the solution to this problem can be found efficiently via a line search even when $|e|$ is large. In the line search, we create a series of sets $\{\mathcal{S}_i\}_{i=0}^{|e|}$, where $\mathcal{S}_i$ contains the $i$ vertices corresponding to the $i$ smallest entries in vector $\mathbf{z}$. We compare their objective values and identify the solution leading to the minimum objective value. However, such a solution does not work for EDVW-based splitting functions. In the following section, we study the inner problem considering the EDVW-based case.

4.2. Solution to the inner problem

We propose efficient solutions to the inner problem for EDVW-based submodular hypergraphs leveraging the property that they are graph reducible. More generally speaking, the proposed solutions work for all graph reducible submodular hypergraphs. We first show that the inner problem is equivalent to another optimization problem (12) defined on the digraph obtained via the reduction.

Theorem 2. For any submodular hypergraph with vertex set , if it is reducible to a digraph with vertex set and edge set , i.e., (8) or (10) holds, then the solution x to the inner problem in Algorithm 2 can be obtained (up to a scalar multiple) by setting and is the solution to

where is a vector composed of and .

Proof. The proof can be found in Appendix C where we have used the equivalent definition for graph reducibility proposed in Theorem 1.

We present two ways for solving problem (12) in sections 4.2.1 and 4.2.2, respectively.

4.2.1. Solving the inner problem via FISTA

Both the original inner problem and its equivalent problem (12) are convex but non-smooth. Inspired by Hein and Bühler (2010), we derive a dual formulation (13) of problem (12). Compared to the primal problem, the objective function Ψ of the dual problem is smooth. Moreover, problem (13) can be efficiently solved using a fast iterative shrinkage-thresholding algorithm (FISTA) (Nesterov, 1983; Beck and Teboulle, 2009), which has a guaranteed convergence rate of $O(1/k^2)$ where k is the number of iterations. FISTA requires an upper bound on the Lipschitz constant of the gradient of Ψ as the input, which is provided in (15). The steps of FISTA are summarized in Algorithm 3.

Algorithm 3

| 1: Input: A Lipschitz constant L of ∇Ψ |

| 2: Initialization:α = β ∈ ℝm, t = 1 |

| 3: repeat |

| 4: α′←α, |

| 5: |

| 6: t′←t, |

| 7: |

| 8: until convergence or a predefined maximum number of iterations is reached |

| 9: Output:α |

Solution of problem (13) with FISTA.

To make it clear, in the theorem below, the set (as well as the directed edge set ) contains ordered node pairs, meaning that (u, v) and (v, u) are different. The parameter m can be understood as the number of connected node pairs where the connection might be unidirectional or bidirectional.

Theorem 3. Define a set and set . The dual of problem (12) is

where α is a vector of length m collecting all αuv satisfying and u < v. For the function , the uth element of fA(α) is

The primal and dual variables are related as

The Lipschitz constant of the gradient of Ψ is upper bounded by

Proof. The proof is given in Appendix D.

In a nutshell, to solve the inner problem, we first compute the adjacency matrix of the digraph according to the graph reduction procedure proposed in Zhu and Segarra (2022). Then we solve the dual problem (13) using FISTA, and get the solution y to the primal problem (12) according to the relation between the primal and dual variables as shown in (14). Finally, we obtain the solution x to the original problem by taking the entries of y indexed by $\mathcal{V}$.

4.2.2. Solving the inner problem via PDHG

Problem (12) can also be solved using a primal-dual hybrid gradient (PDHG) algorithm (Chambolle and Pock, 2011, 2016a,b). Although both FISTA and PDHG ensure an $O(1/k^2)$ convergence rate, it has been observed that PDHG can outperform FISTA in practice for clustering applications (Hein and Setzer, 2011).

PDHG is able to solve problems in the following form:

whose dual problem is

where and are proper, convex, lower semicontinuous functions, is a bounded linear operator, and and are the corresponding conjugate functions of f1 and f2 (Boyd et al., 2004).

The solution to problem (12) can be obtained via normalizing the solution to problem (18) below.

We can fit (18) into the form (16) by setting , , , , and B is a matrix. For the row of B corresponding to edge u → v, the uth and vth elements in the row are respectively equal to Auv and −Auv, and the other elements are zero. We can show that , with the domain that 0 ≤ zi ≤ 1 for any 0 ≤ i ≤ N2. Since f1 is 1-strongly convex, we can leverage an accelerated variant of the PDHG algorithm. The algorithm tailored for problem (18) is given in Algorithm 4 (cf. algorithm 8 in Chambolle and Pock, 2016a).

Algorithm 4

| 1: Initialization:, , |

| 2: repeat |

| 3: |

| 4: y′←y, |

| 5: , τ←θτ, |

| 6: |

| 7: until convergence or a predefined maximum number of iterations is reached |

| 8: Output:y |

Solution of problem (18) with accelerated PDHG.

4.3. A special case: Reduction to an undirected graph

If the submodular hypergraph is reducible to an undirected graph (e.g., see theorem 3.3 in Zhu and Segarra, 2022), then there exists another dual formulation of problem (12) that bears a similar form to (13) while the gradient of its objective has a smaller Lipschitz constant (cf. lemma 4.3 in Hein and Bühler, 2010). In fact, for this case, the hypergraph 1-spectral clustering can be implemented via its graph counterpart.

Following Theorem 1, we further define a vertex weight function for the graph such that for and for each auxiliary vertex . Then it follows from (10) that for any x ∈ ℝN where and y is composed of and . Minimizing both sides of the equation over x ∈ ℝN leads to the same minimum NCC value. When is undirected, the second eigenvector of the graph 1-Laplacian can be computed by minimizing and then the second eigenvector of the hypergraph 1-Laplacian can be computed as . This can also be understood in terms of the cut function. Following (8), it is easy to show that the Cheeger constant of the graph is identical to that of the hypergraph . This coincides with theorem 3.3 in (Liu et al., 2021), which further proves that if is the set in leading to the minimum NCC for the vertex weight function , then is the minimum NCC set in .

5. Experiments

We evaluate the performance of the proposed 1-spectral clustering algorithm for EDVW-based submodular hypergraphs (termed EDVW-based hereafter) by focusing on the bipartition case. In particular, for hyperedge splitting functions as in (6), we select $h_e(x) = x$ and $g_e(x) = \min\{x, \gamma_e(e) - x, \beta\gamma_e(e)\}$ for every hyperedge $e \in \mathcal{E}$, namely

$$w_e(\mathcal{S}) = \kappa(e) \cdot \min\{\gamma_e(\mathcal{S}),\ \gamma_e(e \setminus \mathcal{S}),\ \beta\gamma_e(e)\}, \tag{19}$$

where β is tunable. Notice that we reuse the symbols α and β in this section, which are different from and not related to α and β used when describing FISTA. A hypergraph with splitting functions as in (19) can be reduced to a digraph that consists of $N + 2|\mathcal{E}|$ vertices and $\sum_{e \in \mathcal{E}} (2|e| + 1)$ edges. More precisely, we first project each hyperedge $e$ onto a small graph which contains $|e| + 2$ vertices including two auxiliary vertices denoted by $e'$ and $e''$. For every $v \in e$, there are two directed edges respectively from $v$ to $e'$ and from $e''$ to $v$, both of weight $\kappa(e)\gamma_e(v)$. There is also a directed edge of weight $\beta\kappa(e)\gamma_e(e)$ from $e'$ to $e''$. Then we concatenate these small graphs for all hyperedges together to form the final graph.
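The gadget described above can be checked numerically on a small instance. The sketch below builds the digraph and confirms by exhaustive enumeration that minimizing the directed cut over the placement of the auxiliary vertices recovers the penalty κ(e)·min{γe(S), γe(e∖S), βγe(e)} summed over hyperedges. All function names, the auxiliary labels `("in", i)` and `("out", i)` standing for e′ and e″, and the example data are hypothetical; vertices are assumed to be integers.

```python
from itertools import combinations, product


def reduce_to_digraph(hyperedges, kappa, gamma, beta):
    """Return {(u, v): weight} for the digraph built from the per-hyperedge gadgets."""
    arcs = {}
    for i, e in enumerate(hyperedges):
        e_in, e_out = ("in", i), ("out", i)
        for v in e:
            arcs[(v, e_in)] = kappa[i] * gamma[i][v]   # v -> e'
            arcs[(e_out, v)] = kappa[i] * gamma[i][v]  # e'' -> v
        arcs[(e_in, e_out)] = beta * kappa[i] * sum(gamma[i][v] for v in e)
    return arcs


def digraph_cut(arcs, source_side):
    """Total weight of arcs leaving source_side."""
    return sum(w for (u, v), w in arcs.items() if u in source_side and v not in source_side)


def hypergraph_cut(S, hyperedges, kappa, gamma, beta):
    total = 0.0
    for i, e in enumerate(hyperedges):
        g_e = sum(gamma[i][v] for v in e)
        g_s = sum(gamma[i][v] for v in e if v in S)
        total += kappa[i] * min(g_s, g_e - g_s, beta * g_e)
    return total


def reduction_is_exact(n, hyperedges, kappa, gamma, beta, tol=1e-9):
    """Compare both cut functions on every vertex subset (exponential time)."""
    arcs = reduce_to_digraph(hyperedges, kappa, gamma, beta)
    aux = [(tag, i) for i in range(len(hyperedges)) for tag in ("in", "out")]
    for k in range(n + 1):
        for S in combinations(range(n), k):
            best = min(
                digraph_cut(arcs, set(S) | {a for a, keep in zip(aux, mask) if keep})
                for mask in product([False, True], repeat=len(aux))
            )
            if abs(best - hypergraph_cut(set(S), hyperedges, kappa, gamma, beta)) > tol:
                return False
    return True


# Hypothetical example with two overlapping hyperedges.
EDGES = [[0, 1, 2], [1, 2, 3]]
KAPPA = [1.0, 2.0]
GAMMA = [{0: 2.0, 1: 1.0, 2: 1.0}, {1: 1.0, 2: 3.0, 3: 1.0}]
```

For this example the digraph has 4 + 2·2 = 8 vertices and (2·3 + 1) + (2·3 + 1) = 14 arcs, in line with the counts stated above.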

5.1. Datasets

We consider three widely used real-world datasets.

Reuters Corpus Volume 1 (RCV1): This dataset is a collection of manually categorized newswire stories (Lewis et al., 2004). We consider two categories C14 and C23. A few short documents containing fewer than 20 words are ignored. We select the 100 most frequent words in the corpus after removing stop words and words appearing in more than 10% or fewer than 0.2% of the documents. We then remove documents containing fewer than 5 selected words, leaving us with 7,446 documents. A document (vertex) belongs to a word (hyperedge) if the word appears in the document. The edge-dependent vertex weights are taken as the corresponding tf-idf (term frequency-inverse document frequency) values (Leskovec et al., 2020) to the power of α, where α is a tunable parameter.

20 Newsgroups¹: This is also a text dataset. For our two-partition case, we consider the documents in categories “rec.autos” and “rec.sport.hockey.” We preprocess the dataset following the same procedure as used in RCV1 above. We finally construct a hypergraph of 1,389 vertices and 100 hyperedges.

Covtype: This dataset contains patches of forest that are in different cover types. We consider two cover types (labeled as 4 and 5) and all numerical features. Each numerical feature is first quantized into 20 bins of equal range and then mapped to hyperedges. The resulting hypergraph has 12,240 vertices and 196 hyperedges. For each hyperedge (bin), we compute the distance between each feature value in this bin and their median, and then normalize these distances to the range [0, 1]. The edge-dependent vertex weights are computed as exp(−α·distance). Under this setting, larger edge-dependent vertex weights are assigned to vertices whose feature values are close to the typical feature value in the corresponding bin.
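The weighting scheme for a single numerical feature can be sketched as follows. The normalization divides by the largest distance within each bin, which is one plausible reading of "normalize these distances to the range [0, 1]" and should be treated as an assumption; the function name `bin_edvw` and its arguments are likewise hypothetical.

```python
import math
from statistics import median


def bin_edvw(values, n_bins=20, alpha=1.0):
    """Quantise one feature into equal-range bins and weight samples by closeness
    to the bin median. Returns {bin index: {sample index: EDVW}}."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature
    bins = {}
    for idx, val in enumerate(values):
        b = min(int((val - lo) / width), n_bins - 1)  # put the maximum in the last bin
        bins.setdefault(b, []).append(idx)
    weights = {}
    for b, members in bins.items():
        med = median(values[i] for i in members)
        dist = [abs(values[i] - med) for i in members]
        scale = max(dist) or 1.0  # assumption: divide by the largest distance in the bin
        weights[b] = {i: math.exp(-alpha * d / scale) for i, d in zip(members, dist)}
    return weights
```

Each non-empty bin plays the role of one hyperedge; a sample sitting exactly at the bin median receives weight 1, and the sample farthest from it receives exp(−α).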

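Following Hayashi et al. (2020), for all datasets we set the hyperedge weight $\kappa(e)$ to the standard deviation of the EDVW $\gamma_e(v)$ for all $v \in e$. Following Li and Milenkovic (2018), we set the vertex weight $\mu(v)$ to the vertex degree defined in the submodular hypergraph model, i.e., $\mu(v) = \sum_{e \in \mathcal{E}: v \in e} \vartheta_e$ with $\vartheta_e = \max_{\mathcal{S} \subseteq e} w_e(\mathcal{S})$.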

5.2. Baselines

We compare the proposed approach with three baseline methods.

Random walk-based: The paper Hayashi et al. (2020) defines a hypergraph Laplacian matrix based on random walks with EDVW. We compute the second eigenvector of the normalized hypergraph Laplacian [cf. (6) in Hayashi et al., 2020] and then threshold it to get the partitioning.

Cardinality-based: In the description of the datasets above, when α = 0, we get the trivial EDVW and the splitting functions reduce to cardinality-based ones.

All-or-nothing: The adopted splitting functions (19) reduce to the all-or-nothing case if β is small enough [i.e., $\beta \leq \min_{v \in e} \gamma_e(v)/\gamma_e(e)$ for every hyperedge $e$].

For the proposed method, in Algorithm 2 we adopt the eigenvector obtained in the random walk-based method described above as the starting point. We solve the inner problem using PDHG presented in Algorithm 4. Moreover, in Algorithm 4 we initialize and z = 0.

5.3. Results

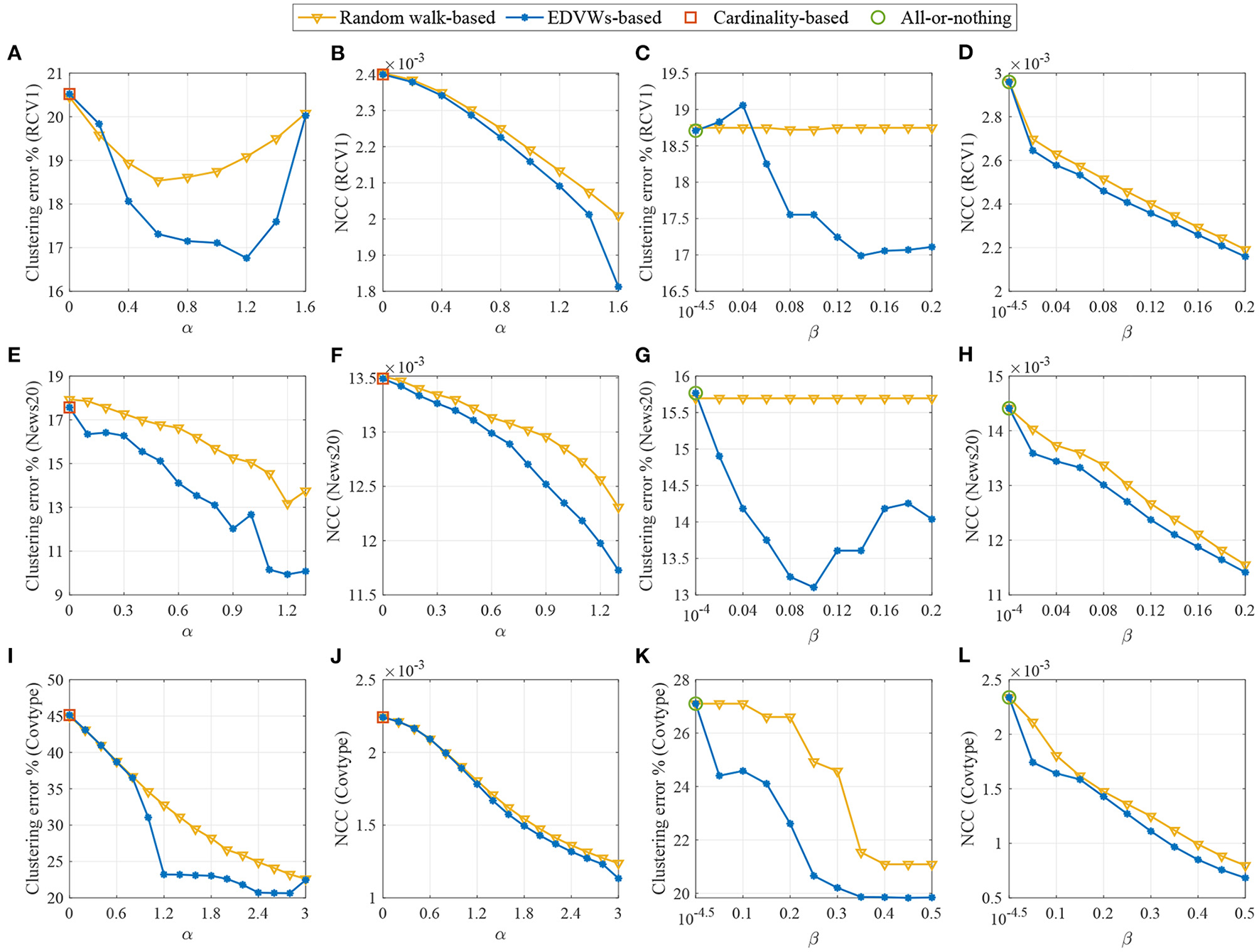

The results are shown in Figure 1. We present both the clustering error and the NCC, where the clustering error is computed as the fraction of incorrectly clustered samples. For the RCV1 dataset (A–D), we fix β = 0.2 to observe the influence of α in (A, B) and fix α = 1 to test β in (C, D). For the 20 Newsgroups dataset (E–H), we fix β = 0.1 in (E, F) and α = 0.8 in (G, H) to observe the effects of α and β, respectively. For the Covtype dataset (I–L), we fix β = 0.2 in (I, J) and α = 2 in (K, L). We can see that for all the considered datasets, when edge-dependent vertex weights are ignored (including α = 0 for cardinality-based splitting functions and β close to zero for the all-or-nothing splitting function), the clustering performance deteriorates severely, validating that it is necessary to incorporate EDVW for extra modeling flexibility. It can also be observed that, when appropriate values for α and β are selected (such as α = 1.2, β = 0.2 for RCV1, α = 1.2, β = 0.1 for 20 Newsgroups and α = 2.4, β = 0.2 for Covtype), the proposed method performs much better than the random walk-based method, which depends on the classical Laplacian. This highlights the value of using the non-linear 1-Laplacian in spectral clustering. To summarize, the use of both EDVW and the 1-Laplacian is beneficial for improving the spectral clustering performance.

Figure 1

Clustering performance for three real-world datasets displayed in pairs of figures depicting the clustering error and the NCC value as a function of the parameters α or β. For RCV1, we fix β = 0.2 in (A, B) and α = 1 in (C, D). For 20 Newsgroups, we fix β = 0.1 in (E, F) and α = 0.8 in (G, H). For Covtype, we fix β = 0.2 in (I, J) and α = 2 in (K, L). A proper choice of α and β helps significantly decrease the clustering error compared with existing methods. The performance improvement may benefit from both the use of EDVW and 1-Laplacian.

6. Related work

This section relates our work to prior results. We first show the relationship between hypergraph Laplacians introduced via random walks and those defined through submodular splitting functions. We then explain how the proposed solution to the inner problem contributes to SFM.

6.1. Random walk-based Laplacians

Here we expand the discussion about the relation between hypergraph Laplacians based on random walks with EDVW and those studied in this paper considering submodular EDVW-based splitting functions.

Considering the hypergraph model with EDVW (cf. section 3.1), the random walk incorporating EDVW is defined as follows (Chitra and Raphael, 2019). Starting at some vertex u, the walker selects a hyperedge e containing u with probability proportional to κ(e), then moves to a vertex v contained in e with probability proportional to γe(v). In this process, the probability of moving from u to v via e is

$$\frac{\kappa(e)}{\sum_{e' \ni u} \kappa(e')} \cdot \frac{\gamma_e(v)}{\sum_{v' \in e} \gamma_e(v')}.$$

Then one can define a transition matrix P whose (u, v)th entry denotes the transition probability from u to v and is computed by summing the above probability over all hyperedges that contain both u and v, namely $P_{uv} = \sum_{e \ni u, v} \frac{\kappa(e)}{\sum_{e' \ni u} \kappa(e')} \cdot \frac{\gamma_e(v)}{\sum_{v' \in e} \gamma_e(v')}$. When the hypergraph is connected, the random walk converges to a unique stationary distribution π, which is the all-positive dominant left eigenvector of P scaled to satisfy ||π||1 = 1. In Chitra and Raphael (2019) and Hayashi et al. (2020), hypergraph Laplacians based on such random walks are proposed. These Laplacians are in fact equal to the combinatorial and normalized graph Laplacian matrices (i.e., graph 2-Laplacians) of an undirected graph that is defined on the same vertex set as the hypergraph and has the following adjacency matrix

where Φ is a diagonal matrix whose (v, v)th entry is πv.
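The random walk described above can be sketched numerically as follows. The code builds the transition matrix P from the EDVW and hyperedge weights, computes the stationary distribution π, and forms a symmetric adjacency matrix. The function names, the toy hypergraph, and the convention that γe(v) > 0 exactly when v belongs to e are illustrative assumptions. In particular, the symmetrization (ΦP + PᵀΦ)/2 is the form commonly used for Laplacians of non-reversible random walks and is assumed here; the adjacency matrix referenced in the text may differ from it, for example by a constant factor.

```python
import numpy as np


def edvw_transition_matrix(gamma, kappa):
    """Transition matrix of the random walk with EDVW.

    gamma: (num_edges, num_vertices) array with gamma[e, v] = gamma_e(v),
           assumed positive if v is in e and zero otherwise.
    kappa: (num_edges,) array of positive hyperedge weights kappa(e).
    Assumes every vertex belongs to at least one hyperedge.
    """
    gamma = np.asarray(gamma, dtype=float)
    kappa = np.asarray(kappa, dtype=float)
    member = (gamma > 0).astype(float)
    # Step 1: from vertex u, pick hyperedge e with probability prop. to kappa(e).
    edge_weight = member.T * kappa                       # shape (n, m)
    pick_edge = edge_weight / edge_weight.sum(axis=1, keepdims=True)
    # Step 2: inside e, pick vertex v with probability prop. to gamma_e(v).
    pick_vertex = gamma / gamma.sum(axis=1, keepdims=True)  # shape (m, n)
    return pick_edge @ pick_vertex


def stationary_distribution(P):
    """Dominant left eigenvector of P, scaled to have unit 1-norm."""
    eigvals, eigvecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(eigvals - 1.0))
    pi = np.abs(np.real(eigvecs[:, k]))
    return pi / pi.sum()


def symmetrized_adjacency(P, pi):
    """Assumed symmetrization (Phi P + P^T Phi) / 2 with Phi = diag(pi)."""
    Phi = np.diag(pi)
    return 0.5 * (Phi @ P + P.T @ Phi)


# Hypothetical toy hypergraph: 4 vertices, 2 hyperedges.
gamma = np.array([[1.0, 2.0, 1.0, 0.0],
                  [0.0, 1.0, 1.0, 2.0]])
kappa = np.array([1.0, 2.0])
P = edvw_transition_matrix(gamma, kappa)
pi = stationary_distribution(P)
A = symmetrized_adjacency(P, pi)
```

Under this assumed symmetrization, each row of A sums to the corresponding entry of π, so the combinatorial Laplacian of the resulting graph is Φ − A.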

Consider a hypergraph with submodular splitting functions in the following form for each of its hyperedges:

It is easy to show that is reducible to the graph defined by the adjacency matrix (21) since they have the same cut function as . Notice that there are no auxiliary vertices introduced in the graph reduction. Following Theorem 1, we have , implying that the hypergraph 1-Laplacian of is identical to the graph 1-Laplacian of if we assume that they share the same vertex weight function μ.

6.2. Decomposable submodular function minimization

In the inner problem of Algorithm 2, if the norm of x is ||x||∞, the problem is equivalent to the following SFM problem

where the primal and dual variables are related as xv = 1 if and xv = −1 if (Li and Milenkovic, 2018). Hence, the proposed solution to the inner problem can also be used to solve problems of the form (23) when each function w_e can be represented as a concave function applied to a modular (additive) function [cf. (6)].
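The structural condition above, a concave function applied to a modular function, is a standard way of obtaining submodular functions when the modular weights are non-negative. The sketch below checks this by brute force on a small ground set. The weights, the concave function g(t) = min(t, total − t), and the helper names are hypothetical choices made for illustration and are not taken from (6).

```python
from itertools import combinations


def concave_of_modular(weights, g):
    """Return the set function S -> g(sum of weights[v] for v in S)."""
    def f(subset):
        return g(sum(weights[v] for v in subset))
    return f


def is_submodular(f, ground, tol=1e-12):
    """Exhaustively check f(A) + f(B) >= f(A | B) + f(A & B) for all A, B."""
    subsets = [frozenset(c)
               for r in range(len(ground) + 1)
               for c in combinations(ground, r)]
    return all(f(a) + f(b) + tol >= f(a | b) + f(a & b)
               for a in subsets for b in subsets)


# Hypothetical non-negative vertex weights within a single hyperedge.
weights = {0: 1.0, 1: 2.0, 2: 0.5, 3: 1.5}
total = sum(weights.values())
ground = list(weights)

# Concave g yields a submodular set function.
f_concave = concave_of_modular(weights, lambda t: min(t, total - t))
concave_ok = is_submodular(f_concave, ground)

# A convex g (here t squared) breaks submodularity, for contrast.
f_convex = concave_of_modular(weights, lambda t: t * t)
convex_ok = is_submodular(f_convex, ground)
```

The exhaustive check enumerates all pairs of subsets, so it is only meant for small ground sets such as a single hyperedge.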

7. Conclusion

We presented an equivalent definition of graph reducibility based on which we further proposed a 1-spectral clustering algorithm for submodular hypergraphs that are graph reducible, especially for those with EDVW-based splitting functions. Through experiments on real-world datasets, we showcased the value of combining the hypergraph 1-Laplacian and EDVW. Future research directions include: (1) Developing computation methods for the hypergraph 1-Laplacian's eigenvectors which can work efficiently for all submodular splitting functions, (2) Designing multi-way partitioning algorithms based on non-linear Laplacians (Bühler and Hein, 2009), and (3) Exploring applications of p-Laplacians for different values of p (Fu et al., 2022).

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

YZ developed the methodology, conducted the experiments, and wrote the initial manuscript. SS supervised the study, contributed to the discussions, and edited the manuscript. All authors read and approved the final manuscript.

Funding

This work was supported by NSF under award CCF 2008555.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdata.2023.1020173/full#supplementary-material

References

Amghibech, S. (2003). Eigenvalues of the discrete p-Laplacian for graphs. Ars Comb. 67, 283–302.

Bach, F. (2013). Learning with submodular functions: a convex optimization perspective. Found. Trends Mach. Learn. 6, 145–373. doi: 10.1561/2200000039

Beck, A., and Teboulle, M. (2009). A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202. doi: 10.1137/080716542

Benson, A. R., Gleich, D. F., and Leskovec, J. (2016). Higher-order organization of complex networks. Science 353, 163–166. doi: 10.1126/science.aad9029

Boyd, S. P., and Vandenberghe, L. (2004). Convex Optimization. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511804441

Bühler, T., and Hein, M. (2009). "Spectral clustering based on the graph p-Laplacian," in International Conference on Machine Learning (Montreal, Canada), 81–88. doi: 10.1145/1553374.1553385

Chambolle, A., and Pock, T. (2011). A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40, 120–145. doi: 10.1007/s10851-010-0251-1

Chambolle, A., and Pock, T. (2016a). An introduction to continuous optimization for imaging. Acta Numer. 25, 161–319. doi: 10.1017/S096249291600009X

Chambolle, A., and Pock, T. (2016b). On the ergodic convergence rates of a first-order primal-dual algorithm. Math. Program. 159, 253–287. doi: 10.1007/s10107-015-0957-3

Chang, K.-C. (2016). Spectrum of the 1-Laplacian and Cheeger's constant on graphs. J. Graph Theory 81, 167–207. doi: 10.1002/jgt.21871

Chang, K.-C., Shao, S., and Zhang, D. (2017). Nodal domains of eigenvectors for 1-Laplacian on graphs. Adv. Math. 308, 529–574. doi: 10.1016/j.aim.2016.12.020

Chitra, U., and Raphael, B. (2019). "Random walks on hypergraphs with edge-dependent vertex weights," in International Conference on Machine Learning, 1172–1181. Available online at: http://proceedings.mlr.press/v97/chitra19a.html (accessed February 9, 2023).

Ene, A., and Nguyen, H. (2015). "Random coordinate descent methods for minimizing decomposable submodular functions," in International Conference on Machine Learning, 787–795. Available online at: http://proceedings.mlr.press/v37/ene15.html (accessed February 9, 2023).

Fu, G., Zhao, P., and Bian, Y. (2022). "p-Laplacian based graph neural networks," in International Conference on Machine Learning, 6878–6917. Available online at: https://proceedings.mlr.press/v162/fu22e.html (accessed February 9, 2023).

Hayashi, K., Aksoy, S. G., Park, C. H., and Park, H. (2020). "Hypergraph random walks, Laplacians, and clustering," in Conference on Information and Knowledge Management (Galway), 495–504. doi: 10.1145/3340531.3412034

Hein, M., and Bühler, T. (2010). An inverse power method for nonlinear eigenproblems with applications in 1-spectral clustering and sparse PCA. Adv. Neural Inf. Process. Syst. 23.

Hein, M., and Setzer, S. (2011). Beyond spectral clustering - tight relaxations of balanced graph cuts. Adv. Neural Inf. Process. Syst. 24.

Hein, M., Setzer, S., Jost, L., and Rangapuram, S. S. (2013). The total variation on hypergraphs - learning on hypergraphs revisited. Adv. Neural Inf. Process. Syst. 26.

Jegelka, S., Bach, F., and Sra, S. (2013). Reflection methods for user-friendly submodular optimization. Adv. Neural Inf. Process. Syst. 26.

Leskovec, J., Rajaraman, A., and Ullman, J. D. (2020). Mining of Massive Data Sets. Cambridge: Cambridge University Press. doi: 10.1017/9781108684163

Lewis, D. D., Yang, Y., Russell-Rose, T., and Li, F. (2004). RCV1: a new benchmark collection for text categorization research. J. Mach. Learn. Res. 5, 361–397.

Li, J., He, J., and Zhu, Y. (2018). "E-tail product return prediction via hypergraph-based local graph cut," in International Conference on Knowledge Discovery and Data Mining (London), 519–527. doi: 10.1145/3219819.3219829

Li, P., and Milenkovic, O. (2017). Inhomogeneous hypergraph clustering with applications. Adv. Neural Inf. Process. Syst. 30. doi: 10.1007/978-3-319-70139-4

Li, P., and Milenkovic, O. (2018). "Submodular hypergraphs: p-Laplacians, Cheeger inequalities and spectral clustering," in International Conference on Machine Learning, 3014–3023. Available online at: http://proceedings.mlr.press/v80/li18e.html (accessed February 9, 2023).

Liu, M., Veldt, N., Song, H., Li, P., and Gleich, D. F. (2021). "Strongly local hypergraph diffusions for clustering and semi-supervised learning," in International World Wide Web Conference (Ljubljana), 2092–2103. doi: 10.1145/3442381.3449887

Lovász, L. (1983). "Submodular functions and convexity," in Mathematical Programming The State of the Art, eds A. Bachem, M. Grötschel, and B. Korte (New York, NY: Springer), 235–257. doi: 10.1007/978-3-642-68874-4_10

Nesterov, Y. E. (1983). A method for solving the convex programming problem with convergence rate O(1/k²). Dokl. Akad. Nauk SSSR 269, 543–547.

Schaub, M. T., Zhu, Y., Seby, J.-B., Roddenberry, T. M., and Segarra, S. (2021). Signal processing on higher-order networks: Livin' on the edge…, beyond. Signal Process. 187, 108149. doi: 10.1016/j.sigpro.2021.108149

Szlam, A., and Bresson, X. (2010). "Total variation and Cheeger cuts," in International Conference on Machine Learning (Haifa), 1039–1046.

Tudisco, F., and Hein, M. (2018). A nodal domain theorem and a higher-order Cheeger inequality for the graph p-Laplacian. J. Spectr. Theory 8, 883–908. doi: 10.4171/JST/216

Tudisco, F., Mercado, P., and Hein, M. (2018). Community detection in networks via nonlinear modularity eigenvectors. SIAM J. Appl. Math. 78, 2393–2419. doi: 10.1137/17M1144143

Veldt, N., Benson, A. R., and Kleinberg, J. (2020). Hypergraph cuts with general splitting functions. arXiv [preprint]. doi: 10.48550/arXiv.2001.02817

Von Luxburg, U. (2007). A tutorial on spectral clustering. Stat. Comput. 17, 395–416. doi: 10.1007/s11222-007-9033-z

Wagner, D., and Wagner, F. (1993). "Between min cut and graph bisection," in International Symposium on Mathematical Foundations of Computer Science (Berlin), 744–750. doi: 10.1007/3-540-57182-5_65

Yoshida, Y. (2019). "Cheeger inequalities for submodular transformations," in ACM-SIAM Symposium on Discrete Algorithms (Tokyo), 2582–2601. doi: 10.1137/1.9781611975482.160

Zhu, Y., Li, B., and Segarra, S. (2021). "Co-clustering vertices and hyperedges via spectral hypergraph partitioning," in European Signal Processing Conference (Dublin), 1416–1420. doi: 10.23919/EUSIPCO54536.2021.9616223

Zhu, Y., Li, B., and Segarra, S. (2022). "Hypergraphs with edge-dependent vertex weights: spectral clustering based on the 1-Laplacian," in International Conference on Acoustics, Speech and Signal Processing (Singapore), 8837–8841. doi: 10.1109/ICASSP43922.2022.9746363

Zhu, Y., and Segarra, S. (2022). Hypergraph cuts with edge-dependent vertex weights. Appl. Netw. Sci. 7:45. doi: 10.1007/s41109-022-00483-x

Keywords

submodular hypergraphs, p-Laplacian, spectral clustering, edge-dependent vertex weights, decomposable submodular function minimization

Citation

Zhu Y and Segarra S (2023) Hypergraphs with edge-dependent vertex weights: p-Laplacians and spectral clustering. Front. Big Data 6:1020173. doi: 10.3389/fdata.2023.1020173

Received

15 August 2022

Accepted

02 February 2023

Published

21 February 2023

Volume

6 - 2023

Edited by

Pan Li, Purdue University, United States

Reviewed by

Francesco Tudisco, Gran Sasso Science Institute, Italy; Uthsav Chitra, Princeton University, United States

Copyright

© 2023 Zhu and Segarra.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yu Zhu ✉ yz126@rice.edu

This article was submitted to Machine Learning and Artificial Intelligence, a section of the journal Frontiers in Big Data
