Civic Online Reasoning Among Adults: An Empirical Evaluation of a Prescriptive Theory and Its Correlates

- ¹Department of Psychology, Uppsala University, Uppsala, Sweden

- ²Department of Education, Uppsala University, Uppsala, Sweden

Today, the ability to read digital news constructively is a pivotal part of informed citizenship. Much of the research on digital literacy focuses on adolescents rather than adults; in this study, we address this research gap. We investigated the abilities of 1,222 Swedish adults to determine the credibility of false, biased, and credible digital news in relation to their background, education, attitudes, and self-reported skills. Their ability was operationalized through the three components of the prescriptive theory of civic online reasoning. Results from a combined survey and performance test showed that the ability to determine the credibility of digital news is associated with higher education, an educational orientation in humanities/arts, natural sciences, or technology, the frequency of sourcing at work, and appreciation of credible news. An SEM analysis confirmed that the items used to assess the different skills tapped into the theoretical constructs of civic online reasoning and that civic online reasoning was associated with a majority of the predictors in the analyses of the separate skills. The results provide unique evidence for a prescriptive theory of the skills needed to navigate online.

Introduction

Online propaganda and disinformation are major challenges to democracy in an era of information disorder and infodemics (Wardle and Derakhshan, 2017; Zarocostas, 2020). On this account, international organizations and researchers emphasize how citizens need skills to navigate credible and misleading information (EU, 2018; Kozyreva et al., 2020). The educational challenge is evident in research focusing on teenagers' abilities and attitudes, but research with a focus on adults' digital media literacy is more limited (Lee, 2018). Process research emphasizes the complexity of evaluating online news in a world where it is easy to make misleading information look credible. Even history professors and students at elite universities struggle to separate trustworthy digital information from false and biased information (Wineburg and McGrew, 2019). Similarly, young people, born in the digital era, have difficulties evaluating online information (McGrew et al., 2018; Breakstone et al., 2019; Ku et al., 2019; Nygren and Guath, 2019).

The ability to read and assess digital information in updated ways has been identified as central to citizens' ability to evaluate online news efficiently. McGrew et al. (2018, p. 1) proposed a prescriptive¹ theory of civic online reasoning, defined as “the ability to effectively search for, evaluate, and verify social and political information online.” Specifically, McGrew et al. (2017, 2018) state that, to evaluate a piece of online news, people should investigate who is behind the information, evaluate the evidence presented, and compare the information with other sources. However, to suggest better educational measures, it is important to delineate how civic online reasoning skills relate to individual differences. The goal of the present study was to (i) investigate, based on the prescriptive theory of civic online reasoning, how background, education, and attitudes are related to the ability to determine the credibility of different types of digital news, and (ii) evaluate civic online reasoning in relation to the measured constructs.

Previous research in the field of media and information literacy (MIL) has produced a number of definitions of digital literacies related to the ability to navigate digital media and information (Aufderheide, 1993; Livingstone, 2004; Hobbs, 2010; Leaning, 2019). One often-cited definition of digital literacy is “the ability to understand information and—more important—to evaluate and integrate information in multiple formats that the computer can deliver” (Gilster in Leaning, 2019, p. 5). Although very broad, the definition encompasses the components that later became part of a consensus understanding of the term, including (i) literacy per se, (ii) knowledge about information sources, (iii) central competencies (e.g., media and information literacy), and (iv) attitudes (Bawden, 2001).

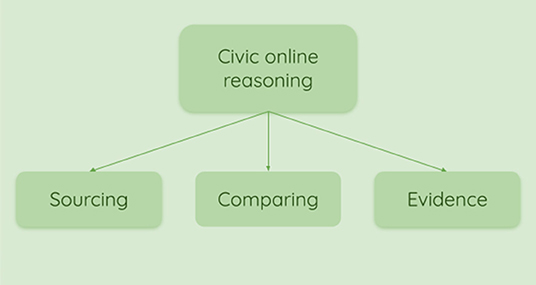

Media literacy, denoting the critical ability to assess information in mass media (Bawden, 2001), is intimately related to information literacy and is therefore often considered a component of it (McClure in Bawden, 2001). Information literacy has many definitions, but most researchers seem to agree that it includes the ability to “access, evaluate and use information from a variety of sources” (Doyle in Bawden, 2001, p. 231). Information literacy has been described as a “survival skill” for citizens in a digital world (Eshet, 2004). Further, the concept of information literacy has been shaped by its close relation to education, which is reflected in many operationalizations. Of specific interest is the one given by Breivik (Breivik in Bawden, 2001, pp. 242–243), suggesting that information-literate individuals ask a set of “fundamental questions […]: how do you know that—what evidence do you have for that—who says so—how can [you] find out.” These questions are almost identical to the operationalization of civic online reasoning (see Figure 1) by McGrew et al. (2017, 2018): Who is behind the information? What is the evidence? What do other sources say? In this view, civic online reasoning refers to a specific subset of information literacy skills, and information literacy, together with media literacy, is a subset of digital literacy.

Figure 1. Schematic figure of the skills Sourcing (Who is behind the information?), Evidence (What is the evidence?), and Comparing (What do other sources say?) that constitute civic online reasoning, a prescriptive theory of media and information literacy.

Productive ways of using digital media seem to mirror levels of education and societal inequalities. What previously was described as a digital divide between people having access to computers and the internet has become a digital divide characterized by usage differences between groups in society (van Dijk, 2020). Digital literacy, including more general internet skills, differs with regard to age, gender, and education (Van Deursen et al., 2011; van Deursen and van Dijk, 2015). Better abilities to navigate manipulative interfaces have, for instance, been connected to higher education (Luguri and Strahilevitz, 2019). Further, education, epistemic cognition, and self-regulated learning have been connected to the ability to assess the credibility of information (Zimmerman, 2000; Greene and Yu, 2016). There is also a gap between news-seekers and news-avoiders, consisting of a divide between politically interested people and those engaging very little in the democratic debate (Strömbäck et al., 2013).

While digital media literacy in schools has attracted considerable research interest, little is known about how adults navigate digital news (Lee, 2018). Previous research on digital literacy has shown that, in contrast to older adults, young people possess the operational and formal skills needed to navigate online (van Deursen and van Dijk, 2015). However, when it comes to civic issues online, older adults often excel due to higher levels of education and content knowledge (Van Deursen et al., 2011). Other research points in the opposite direction: older adults may share more fake news on Facebook than young people do (Guess et al., 2019).

Knowledge and attitudes toward information have been noted as important when people navigate and evaluate information (Kammerer et al., 2015; Brand-Gruwel et al., 2017; Flynn et al., 2017; Kahan, 2017). Still, to our knowledge, there are no existing studies that investigate the relationship between adults' abilities to evaluate digital news and their background and attitudes. Research on adolescents' ability to assess the credibility of online news has shown that confidence in one's skills to navigate online and higher trust in the reliability of digital news were associated with poorer performance, while attaching much importance to access to credible news was associated with better performance (Nygren and Guath, 2019).

Navigating and assessing the credibility of online information is difficult for several reasons. Users may be manipulated by the framing of the information and seduced by a site's design and functionality, which call for quick decision-making (e.g., Metzger et al., 2010). Assessment of information credibility is also affected by people's prior beliefs, cognitive abilities, and the coherence of the message (Lewandowsky et al., 2012; De Keersmaecker and Roets, 2017). In addition, people use cues and heuristics (Gigerenzer and Gaissmaier, 2011) as an “intellectual rule of thumb” to navigate information in online feeds. This strategy may be useful, but it often fails to separate credible from biased and false information (Metzger et al., 2010; Breakstone et al., 2019). Research on civic online reasoning has identified constructive strategies among professional fact-checkers, who benefit from their experience when evaluating online information (Wineburg and McGrew, 2019). This knowledge, in turn, seems to be part of a disciplinary literacy in which journalists scrutinize online information differently than historians and students do. Indeed, professional knowledge may be tacit knowledge derived from practical experience (Eraut, 2000). This is consistent with research on disciplinary literacy that identified implicit differences in information evaluation skills between experts (Shanahan et al., 2011) and with findings that experience with manipulated images can make people better at identifying processed images (Shen et al., 2019).

The Present Study

The present study (i) investigated how differences in background, education, attitudes, and self-reported abilities regarding digital information relate to civic online reasoning in a performance test and (ii) validated the prescriptive theory of civic online reasoning with structural equation modeling (SEM). Based on the definition of civic online reasoning, we measured performance as (i) sourcing: Who is behind the information? (ii) comparing: What do other sources say? and (iii) evidence: What is the evidence? (Wineburg et al., 2016; McGrew et al., 2017, 2018). In line with previous research, we hypothesized the following:

H1) Attitudes and self-rated skills will have the following effects:

H1a) higher ratings of the importance of access to credible news will be related to better performance on items measuring skills to evaluate evidence (Nygren and Guath, 2019)

H1b) higher ratings of internet information reliability will be related to poorer performance on all skills, especially on items measuring skills to evaluate evidence (Nygren and Guath, 2019; Vraga and Tully, 2019)

H1c) higher self-rated ability to evaluate information online will be related to poorer performance on items measuring skills to evaluate evidence (Nygren and Guath, 2019)

H1d) higher ratings of source criticism at work will be related to better performance on items measuring skills of sourcing, evaluating evidence, and comparing (Shen et al., 2019; Wineburg and McGrew, 2019).

H2) Educational level and educational orientation will have the following effects:

H2a) a higher educational level (Van Deursen et al., 2011) will be related to better performance on items measuring skills of sourcing, evaluating evidence, and comparing

H2b) educational orientation will affect performance; specifically, an education within humanities/arts will be associated with better performance on items measuring skills to evaluate evidence and to compare information (Nygren and Guath, 2019).

H3) Age will affect performance; specifically, older age will predict poorer performance in comparing and sourcing (Guess et al., 2019).

Materials and Methods

Participants

A sample of the Swedish adult population (N = 1,222), aged 19–99, completed an online survey and a performance test. The response rate was 83.4%, with an error margin of ±3.13% after screening. Participants, recruited through SurveyMonkey, agreed to take the survey and test anonymously for research purposes and participated for charity or gift cards. Ten participants were classified as outliers because their time on task deviated by more than 3 standard deviations from the mean (z < −3 or z > 3; M = 13.24 min, SD = 18.06 min), leaving 1,212 participants. The age categories were 19–29 years (N = 144), 30–44 years (N = 306), 45–60 years (N = 314), and >60 years (N = 241); 493 participants were women, 512 were men, and 207 did not want to reveal their gender.
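The exclusion rule can be illustrated with a minimal sketch. It assumes a two-sided cutoff of |z| > 3 on completion time, with z computed from the sample mean and sample standard deviation; the function name `exclude_time_outliers` and the completion times are hypothetical and are not the study's data.

```python
from statistics import mean, stdev

def exclude_time_outliers(minutes, cutoff=3.0):
    """Split completion times (in minutes) into kept and excluded lists.

    Hypothetical helper: a time is excluded when its z-score, based on the
    sample mean and sample SD, falls outside [-cutoff, cutoff].
    """
    m = mean(minutes)
    s = stdev(minutes)
    kept, excluded = [], []
    for t in minutes:
        z = (t - m) / s
        (excluded if abs(z) > cutoff else kept).append(t)
    return kept, excluded

# Hypothetical data: 30 plausible completion times and one extreme one.
times = [10.0 + (i % 7) for i in range(30)] + [400.0]
kept, excluded = exclude_time_outliers(times)
print(len(kept), excluded)
```

Note that a single extreme value inflates the SD it is judged against, so a z-score rule is conservative; with only 31 observations the maximum attainable |z| is about 5.4.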

Design

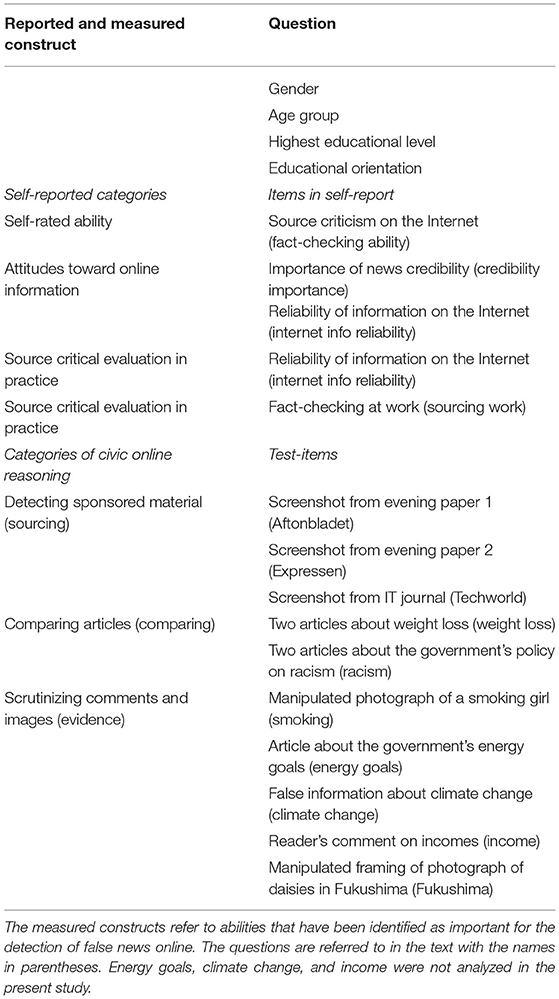

The survey and performance test consisted of 21 questions, whose contents and measured variables are presented in Table 1. To safeguard validity and reliability, we designed test items inspired by previous research on civic online reasoning (Wineburg et al., 2016; McGrew et al., 2017, 2018). The test items were developed in an iterative process that included multiple meetings with researchers in media, education, and psychology. The process also included two pilot tests with feedback from ~100 participants. In the pilot tests, participants were asked to fill out a number of items and to comment on whether the questions were clear. The feedback was used to construct the survey questions used in the present study.

Measures

The items reflect three basic skills for assessing the credibility of information on the internet: (i) sourcing, identifying where the news came from; (ii) comparing, examining what other sources say about the news; and (iii) evidence, evaluating the presented evidence. These basic skills are latent variables, and we used nine items (described below) to assess them. The three latent variables were subordinate to an overall latent variable, civic online reasoning.

To test sourcing abilities, participants were asked, in three different test items, to separate news from advertisements. Specifically, they were asked to identify the source of information on screenshots from popular online newspapers and to distinguish information designed to manipulate buyers (advertisements) from information designed to inform readers (news).

To test the ability to compare information, participants were asked to compare the credibility of an article based on current research about weight loss (~300 words) with an article about a surgeon working for a company selling cosmetic surgery (~200 words). The less credible weight-loss article used a picture of a doctor, which lends credibility to a text that selectively emphasizes certain facts to promote surgery. Participants were also asked to compare the credibility of a balanced public service text on the government's new policies regarding hate crime with a biased right-wing text on the same matter from an online newspaper described as junk news² (Hedman et al., 2018). We deleted information about the sources behind the texts to ensure that participants compared the texts, not just the sources. The right-wing text (Article A) referred to a credible source (Article B) in an attempt to borrow credibility and support a false narrative. Article B was published on the day of the event and contained direct quotes from the press conference, whereas Article A was published the following day without primary sources. Ideally, the survey would have linked to the articles on the internet; however, for technical reasons, we could not direct participants away from the survey platform. Consequently, participants were not encouraged to search online, which prevented us from measuring the more advanced skill of corroboration (i.e., mastery) as defined by McGrew et al. (2018); we therefore labeled this skill comparing. For further discussion of this limitation, see the Discussion section below.

The less credible text on racism selectively emphasizes certain facts and deliberately misrepresents the message and the source to support a false narrative regarding migration (e.g., Rapacioli, 2018). For instance, the right-wing text uses quotation marks when mentioning racism and calls the new legislation an attempt to punish more critics of immigration.

To test the ability to evaluate evidence, participants were asked to evaluate whether misplaced and manipulated images can be used as evidence. A manipulated image of a girl smoking, used to reinforce the text's claims about the health risks of smoking, served as misleading visual information. We also used a cropped photograph of daisies in a manipulative framing (the Fukushima item).³ The image of mutated flowers was intended to mislead the reader with a claim that radiation from the reactor in Fukushima caused the mutation. However, no information was provided about evidence of radiation or about where the photograph was taken.

Procedure

Participation was voluntary, and all participants were informed about the purpose of the study and their right to withdraw at any point. In line with ethical guidelines, no traceable data were collected.⁴ Participants were given a link to the survey, which they could complete wherever they typically access digital information.

Analysis of Results

The group with 9 years of compulsory schooling was merged with the group with 2 years of upper secondary schooling, and the group with PhDs was merged with the group with at least 3 years of university studies.

We summed the items measuring each of the three dimensions of civic online reasoning into three scores: sourcing (Techworld, Aftonbladet, and Expressen), comparing (weight loss and racism), and evidence (smoking and Fukushima). We then fitted a Poisson regression for each score, with the number of correct responses as the dependent variable and educational level, educational orientation, sourcing at work, credibility importance, internet information reliability, fact-checking ability, and age as predictor variables. The coefficients describe the expected log count of correct items: a positive coefficient indicates that the predictor increases the expected log count of correct items, and a negative coefficient indicates that it decreases the expected log count. The open-ended responses asking participants to justify their answers were not analyzed in the present study.
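To make the scoring and modeling steps concrete, the following is a minimal sketch of a Poisson regression with a log link fitted by Newton–Raphson. It is not the authors' code (the regressions here were not specified beyond being Poisson models); the function `fit_poisson`, the item responses, and the single education dummy are hypothetical, and a real analysis would include all predictors listed above.

```python
import numpy as np

def fit_poisson(X, y, max_iter=50, tol=1e-10):
    """Poisson regression (log link) fitted by Newton-Raphson.

    X: (n, p) design matrix that already includes an intercept column.
    y: (n,) vector of counts, here the number of correct items per person.
    Returns coefficients on the log-count scale.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        mu = np.exp(X @ beta)               # expected counts under current beta
        score = X.T @ (y - mu)              # gradient of the log-likelihood
        info = X.T @ (X * mu[:, None])      # Fisher information matrix
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:      # stop once updates are negligible
            break
    return beta

# Hypothetical 0/1 scores on the three sourcing items
# (columns: Techworld, Aftonbladet, Expressen), one row per respondent.
items = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 0],
                  [1, 1, 0], [1, 0, 1], [0, 1, 1], [1, 1, 1]])
sourcing = items.sum(axis=1)                     # summed score, 0..3
higher_edu = np.array([0, 0, 0, 0, 1, 1, 1, 1])  # hypothetical dummy; 0 = baseline
X = np.column_stack([np.ones(len(sourcing)), higher_edu])

b = fit_poisson(X, sourcing)
print(b)  # [intercept, education coefficient] on the log-count scale
```

With a single binary predictor the fit has a simple check: exp of the intercept equals the baseline group's mean score (0.5 here), and exp of the education coefficient equals the ratio of the two group means (2.25 / 0.5 = 4.5).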

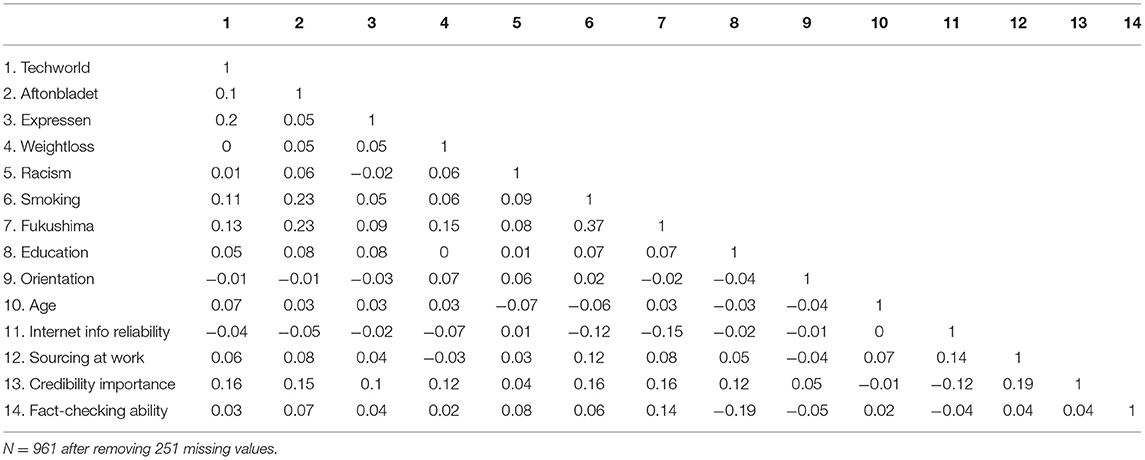

Next, we made an SEM analysis with the lavaan-package in R (Rosseel, 2012) in order to investigate (i) whether the measured variables tapped into the subordinate latent variables (sourcing, evidence, and comparing), (ii) whether the subordinate latent variables were related to the superordinate latent variable, civic online reasoning, and (iii) whether the observed relationships between the predictor variables in the regressions could be replicated on the superordinate latent variable, civic online reasoning. We only present significant results in the text. Complete results are represented in Tables 2–7. All data are available on the Open Science Framework project page: https://osf.io/ze53h/.

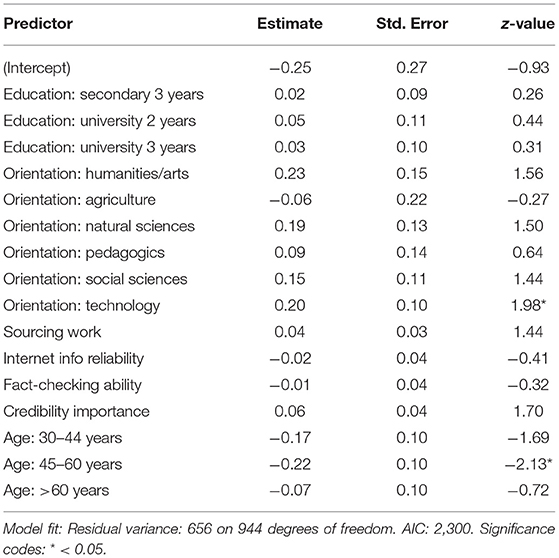

Table 2. Estimates of the best-fitting Poisson regression model of the number of correct responses on sourcing, with coefficients denoting the change in expected log count per unit increase of the ordinal predictors and, for the categorical predictors, the difference in expected log count between each category and the baseline category.

Results

There was a large spread in participants' experience of sourcing at work, rated on a 1–4 scale (M = 2.6, SD = 1.3). On a scale of 1 to 5, participants considered it important to consume credible news (M = 4.2, SD = 1.0), and they rated information on the internet as moderately reliable (M = 3.0, SD = 0.8). Finally, participants considered themselves quite skilled at source criticism on the internet (M = 3.9, SD = 0.8).

Participants' self-rated fact-checking ability was not well aligned with their objective abilities as measured in the performance test. On the multiple-choice items measuring sourcing, 14.8% answered Techworld correctly, 22.6% Aftonbladet, and 16.3% Expressen. On the items testing comparing, 57.7% answered weight loss correctly and 45.2% racism, while on the items assessing the ability to evaluate evidence, 65.0% answered Fukushima correctly and 56.3% smoking.

Performance

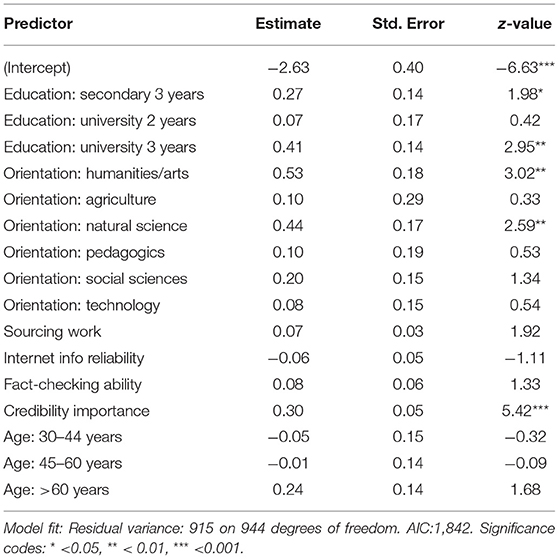

Sourcing

For sourcing (Table 2), we summed the number of correct responses on Techworld, Expressen, and Aftonbladet; there were three significant effects: educational level, educational orientation, and credibility importance. For educational level, the expected log count of correct answers was larger for those with 3 years of upper secondary school [b = 0.27, SE = 0.14, p = 0.048] and 3 years of university education [b = 0.41, SE = 0.14, p = 0.003] than for those with 2 years of upper secondary school (baseline). For educational orientation, the expected log count of correct answers was larger for natural sciences [b = 0.44, SE = 0.17, p = 0.010] than for health (baseline). For credibility importance, the expected log count of correct answers increased with each unit increase of credibility importance [b = 0.30, SE = 0.05, p < 0.001].
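Because Poisson coefficients are on the log-count scale, exponentiating them gives a rate ratio, which is often easier to read. The sketch below applies this to the reported estimate for 3 years of university education; the Wald-type 95% interval (exp of b ± 1.96·SE) is an added illustration computed from the rounded values in the text, not an interval reported by the study, and the helper `rate_ratio` is hypothetical.

```python
import math

def rate_ratio(b, se, z=1.96):
    """Turn a Poisson log-count coefficient into a rate ratio with a
    Wald-type 95% confidence interval (exp of b +/- z * SE)."""
    return math.exp(b), math.exp(b - z * se), math.exp(b + z * se)

# Sourcing, 3 years of university vs. baseline: b = 0.41, SE = 0.14 (Table 2).
irr, lower, upper = rate_ratio(0.41, 0.14)
print(f"rate ratio = {irr:.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```

Read this way, b = 0.41 corresponds to an expected number of correct sourcing items roughly 1.5 times that of the baseline group, holding the other predictors constant.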

Comparing

For comparing (Table 3), we summed the number of correct responses on weight loss and racism; there were two significant effects: educational orientation and age group. For educational orientation, the expected log count of correct answers was significantly larger for those with a technology- and production-oriented education (technology) [b = 0.20, SE = 0.10, p = 0.048] than for the baseline (health). For age group, the expected log count of correct answers was significantly smaller for those aged 45–60 years [b = −0.22, SE = 0.10, p = 0.033] than for the baseline (19–29 years).

Table 3. Estimates of the best-fitting Poisson regression model of the number of correct responses on comparing, with coefficients denoting the change in expected log count per unit increase of the ordinal predictors and, for the categorical predictors, the difference in expected log count between each category and the baseline category.

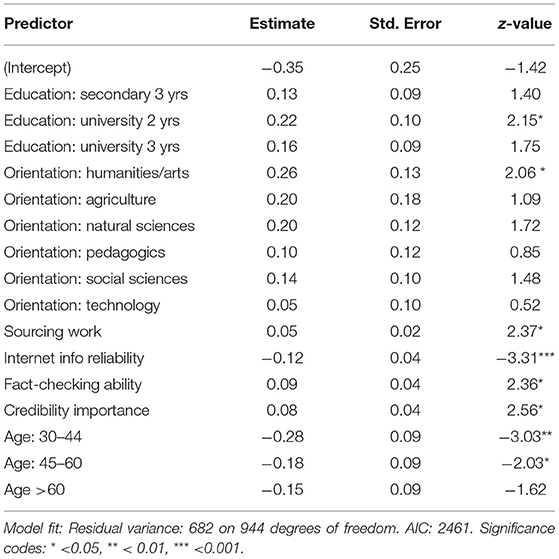

Evidence

For evidence (Table 4), we summed the number of correct responses on smoking and Fukushima; there were seven significant effects: educational level, educational orientation, sourcing at work, internet information reliability, fact-checking ability, credibility importance, and age group. For educational level, the expected log count of correct answers was larger for those with 2 years of university education [b = 0.22, SE = 0.10, p = 0.032] than for the baseline (2 years of upper secondary school). For educational orientation, it was larger for those with a humanities/arts orientation [b = 0.26, SE = 0.13, p = 0.039] than for the baseline (health). For sourcing at work, the expected log count of correct answers increased with a unit increase in the rating [b = 0.09, SE = 0.04, p = 0.018]. For internet information reliability, it decreased with a unit increase in the rating [b = −0.12, SE = 0.04, p < 0.001]. For fact-checking ability, it increased with a unit increase in the rating [b = 0.05, SE = 0.02, p = 0.018]. For credibility importance, it increased with a unit increase in the rating [b = 0.08, SE = 0.03, p = 0.010]. For age group, the expected log count of correct answers was smaller for those aged 30–44 years [b = −0.28, SE = 0.09, p = 0.002] and 45–60 years [b = −0.18, SE = 0.09, p = 0.042] than for the baseline (19–29 years).

Table 4. Estimates of the best-fitting Poisson regression model of the number of correct responses on evidence, with coefficients denoting the change in expected log count per unit increase of the ordinal predictors and, for the categorical predictors, the difference in expected log count between each category and the baseline category.

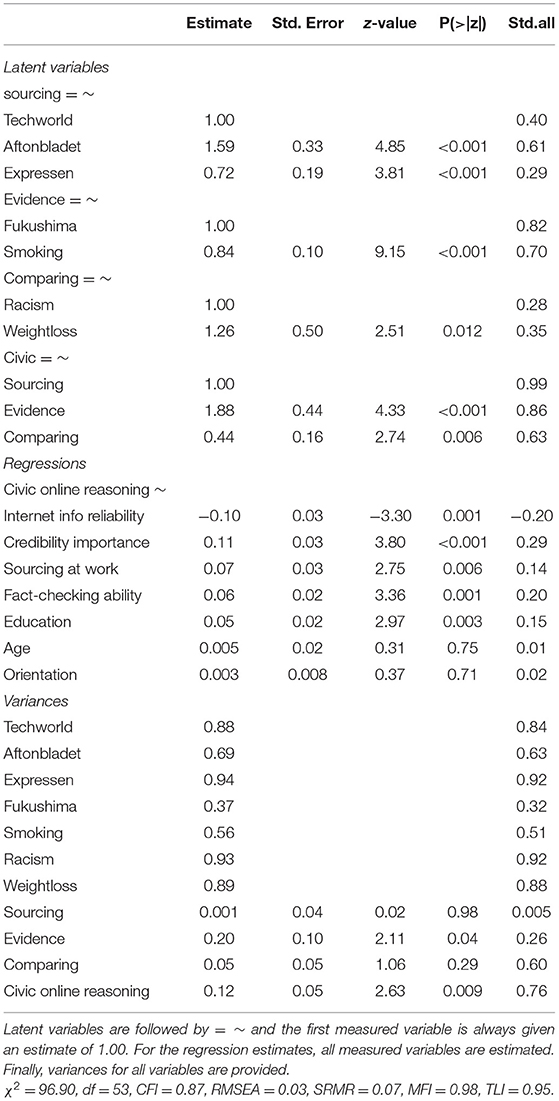

SEM Analysis

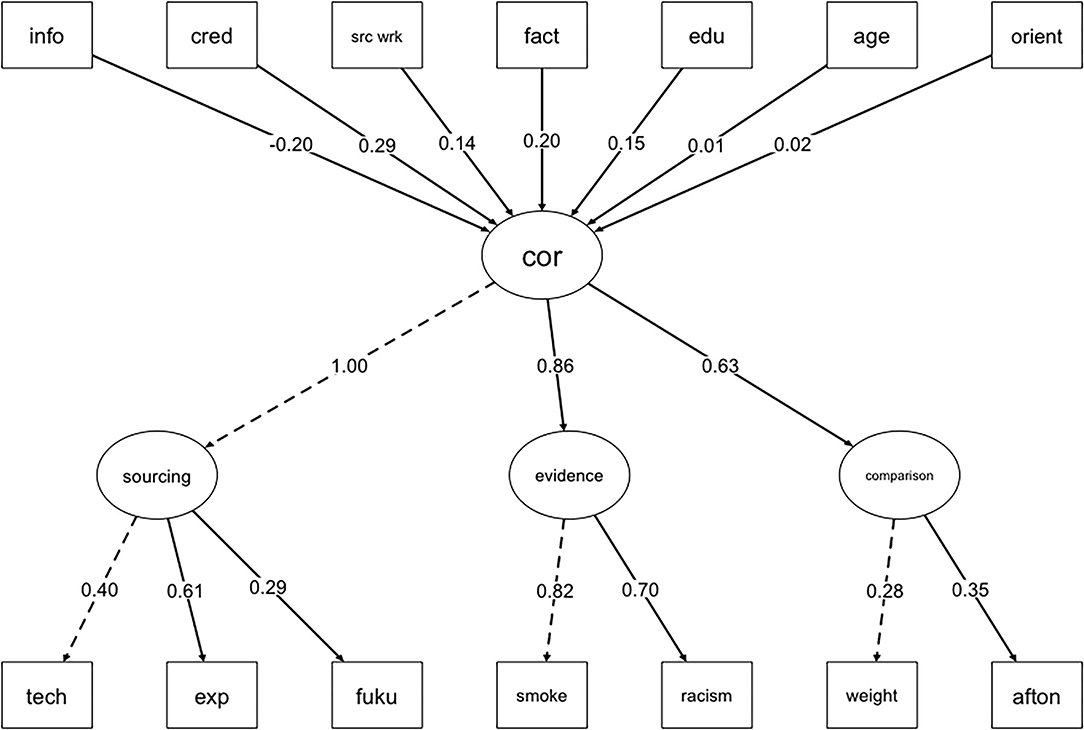

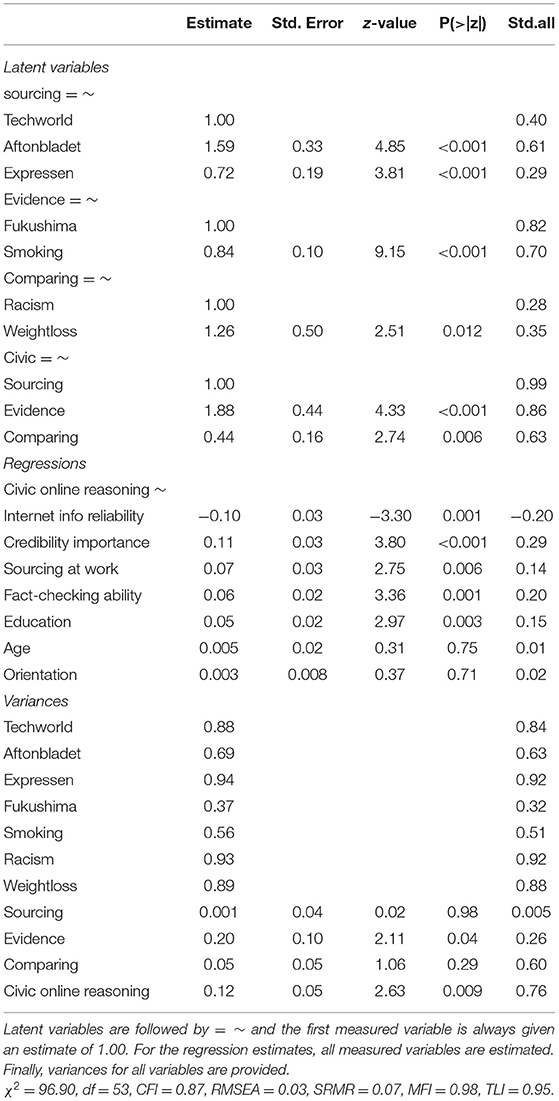

We undertook an SEM analysis based on data from 1,212 adults on the attitudes, background variables, and performance measures described in the Methods section. Figure 2 shows the initial model, in which circles represent latent variables and rectangles represent measured variables. The model was based on the assumptions of civic online reasoning. Specifically, it posited that (i) the latent skills sourcing, comparing, and evidence explained the items used to measure each skill, (ii) the latent skills were related to a common latent construct, civic online reasoning, and (iii) civic online reasoning was related to the attitudes, self-rated skills, and background variables used in the regression analyses. A correlation table of the measured variables is shown in Table 5. Listwise deletion of missing values removed 251 cases from the total.⁵ Since a majority of the variables were categorical (only the attitudes and self-rated abilities were on an ordinal scale), we used item factor analysis (IFA), which treats responses to dichotomous indicator variables as coarse representations of a continuous underlying variable (Beaujean, 2014, p. 96). Further, we used the diagonally weighted least squares (DWLS) estimator, which produces robust chi-square statistics. The hypothesized model (Figure 2) did not fit the data well (see Table 6 for the complete estimates).
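The idea behind IFA, that a 0/1 answer is a coarse cut of a continuous latent response, can be illustrated by the threshold each item implies. Assuming a standard-normal latent response and that an answer counts as correct when the response exceeds a threshold τ, τ equals the inverse normal CDF of one minus the proportion correct. The sketch below applies this to the full-sample proportions from the Results section; these marginal thresholds are illustrative only and are not the estimates from the fitted model, which used the listwise-deleted sample. The helper `probit_threshold` is hypothetical.

```python
from statistics import NormalDist

def probit_threshold(p_correct):
    """Threshold tau on a standard-normal latent response such that
    P(latent response > tau) equals the observed proportion correct."""
    return NormalDist().inv_cdf(1.0 - p_correct)

# Proportions correct reported in the Results section (full sample).
proportions = {
    "Techworld": 0.148, "Aftonbladet": 0.226, "Expressen": 0.163,
    "weight loss": 0.577, "racism": 0.452,
    "Fukushima": 0.650, "smoking": 0.563,
}
for item, p in proportions.items():
    print(f"{item:12s} p = {p:.3f}  tau = {probit_threshold(p):+.2f}")
```

High positive thresholds (about +1 for the sourcing items) show that the sourcing items were difficult. Negative thresholds mark items that most participants answered correctly.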

Figure 2. An initial hypothesized model with four latent variables: cor (civic online reasoning), comparison, evidence, and sourcing. Civic online reasoning is related to the underlying constructs comparison, evidence, and sourcing. The latter three latent variables are related to their respective indicator variables: weight (weight loss), racism; smoke (smoking), fuku (Fukushima); exp (Expressen), afton (Aftonbladet), tech (Techworld). In the upper part of the figure, the regression variables appear: info (internet info reliability), cred (credibility importance), src wrk (sourcing at work), fact (fact-checking ability), edu (education), age, and orient (orientation). The solid lines represent measured relationships. The dotted lines indicate that the loadings of the variables were fixed (in this case at 1).

Table 6. Standardized estimates from the hypothesized model (latent variables and regression), including z-values and p-values.

Modification

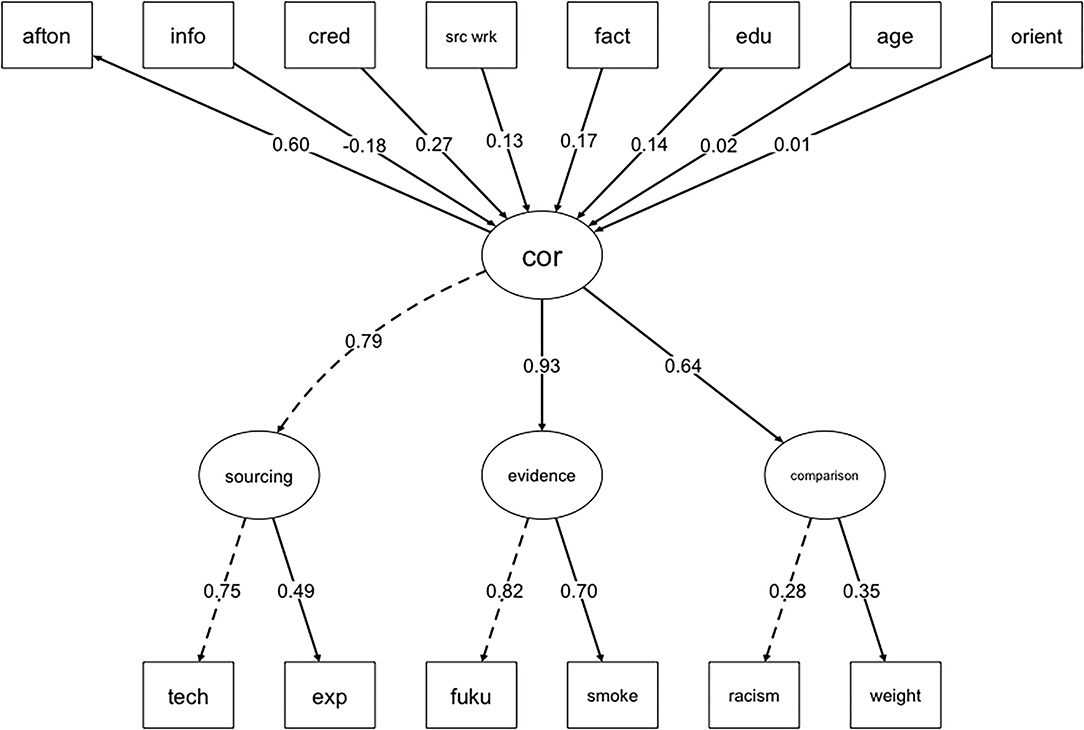

We therefore modified the model (Table 7) based on the modification indices and theoretical considerations. Briefly, the modification indices implied that Aftonbladet was directly related to the latent variable civic online reasoning. This was supported by the fact that Aftonbladet was related to both latent variables sourcing and evidence. Accordingly, we added a direct arrow between Aftonbladet and civic online reasoning (see Figure 3). The modified model provided a good fit to the data, χ² = 71.72 (p = 0.04), CFI = 0.94, TLI = 0.98, and RMSEA = 0.019.
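For reference, the two incremental and absolute fit indices reported above have the following standard textbook definitions, where M denotes the fitted model, B the baseline (independence) model, and N the sample size. Software implementations can differ from these forms (some use N instead of N − 1, and robust or scaled versions under DWLS adjust the χ² values), and the degrees of freedom are not reported in this section, so the formulas are shown for interpretation rather than to recompute the reported values.

```latex
\mathrm{RMSEA} = \sqrt{\frac{\max\left(\chi^2_M - df_M,\; 0\right)}{df_M\,(N - 1)}}
\qquad
\mathrm{CFI} = 1 - \frac{\max\left(\chi^2_M - df_M,\; 0\right)}
                       {\max\left(\chi^2_B - df_B,\; \chi^2_M - df_M,\; 0\right)}
```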

Table 7. Standardized estimates from the modified model (latent variables and regression), including z-values and p-values.

Figure 3. A modified model with four latent variables: cor (civic online reasoning), sourcing, evidence, and comparing. Civic online reasoning is related to the underlying constructs evidence, sourcing, comparison and the indicator variable afton (Aftonbladet). The three latent variables are related to the same indicator variables as in Figure 2. In the upper part of the figure the same regression variables as in Figure 2 appear: info (internet info reliability), cred (credibility importance), src wrk (sourcing at work), edu (education), fact (fact-checking ability), age, and orient (orientation). The solid lines represent measured relationships. The dotted lines indicate that the loadings of the variables were fixed (in this case at 1).

Direct Effects

Sourcing was positively related to correct judgments on Techworld (standardized coefficient = 0.75) and Expressen (standardized coefficient = 0.50), indicating that the items measure the same type of skill. Evidence was positively related to correct judgments on Fukushima (standardized coefficient = 0.82) and smoking (standardized coefficient = 0.70). Again, both items seem to measure a common skill. Comparing was positively related to correct judgments on racism (standardized coefficient = 0.28) and weight loss (standardized coefficient = 0.35). In the final model, Aftonbladet is not included as an indicator variable for sourcing; instead, it is directly related (standardized coefficient = 0.60) to the superordinate latent variable civic online reasoning. This is theoretically plausible, since the item was correlated with both comparing and evidence as well as with civic online reasoning in the hypothesized model. Finally, we calculated the reliability of each factor with Cronbach's alpha for ordinal and binary data (Cronbach, 1951): α = 0.54 for sourcing, α = 0.72 for evidence, and α = 0.17 for comparing.
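To show what the reliability coefficient measures, here is a minimal sketch of the classic (Pearson-based) Cronbach's alpha, which for 0/1 items coincides with KR-20. The study's "alpha for ordinal and binary data" may instead refer to an ordinal alpha computed from polychoric or tetrachoric correlations, so this sketch shows the general formula only. The helper `cronbach_alpha` and the two small data sets are hypothetical.

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """Classic Cronbach's alpha for a respondents-by-items matrix of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score).
    With 0/1 items and population variances this coincides with KR-20.
    """
    k = len(rows[0])
    item_vars = [pvariance([r[j] for r in rows]) for j in range(k)]
    total_var = pvariance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical two-item data (e.g. Fukushima and smoking, 1 = correct).
consistent = [[0, 0], [1, 1], [0, 0], [1, 1]]   # items always agree
unrelated = [[0, 0], [0, 1], [1, 0], [1, 1]]    # items independent
print(cronbach_alpha(consistent), cronbach_alpha(unrelated))
```

The two extremes bracket the reported values: items that always agree give α = 1, and unrelated items give α = 0. A two-item scale with a weak inter-item correlation, such as comparing, therefore yields a low α like the reported 0.17.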

Indirect Effects

There was also evidence of a hierarchical relationship between civic online reasoning and sourcing (standardized coefficient = 0.89), evidence (standardized coefficient = 0.95), and comparing (standardized coefficient = 0.62). Finally, the regression of civic online reasoning on the background variables and attitudes showed five significant associations: a negative relationship with internet information reliability [b = −0.18, SE = 0.04, p = 0.001] and positive relationships with credibility importance [b = 0.27, SE = 0.04, p < 0.001], sourcing at work [b = 0.13, SE = 0.04, p = 0.006], fact-checking ability [b = 0.17, SE = 0.02, p = 0.001], and educational level [b = 0.14, SE = 0.03, p = 0.003]. All associations except fact-checking ability, which was positive rather than negative as hypothesized in H1c, were in the hypothesized direction.

Discussion

In the present study, we investigated how the ability to determine the credibility of digital news was associated with background and self-rated abilities in a survey design based on the prescriptive theory of civic online reasoning (McGrew et al., 2017, 2018). We hypothesized that the ability to determine news credibility would be related to (i) self-rated abilities and attitudes; (ii) educational level and orientation; and (iii) age, with older age associated with poorer performance. The following results supported the hypotheses: appreciating access to credible news, higher education, lower ratings of internet information reliability, and an educational orientation in humanities/arts and natural sciences were associated with better performance. However, there was no general trend toward poorer performance in the oldest age group. Instead, the two middle-aged groups performed worse than the youngest group: both those aged 30–44 years and those aged 45–60 years on evidence, and those aged 45–60 years also on comparing. Further, a higher rating of fact-checking ability was not associated with poorer performance. An educational orientation in natural sciences was associated with better performance in sourcing, and an orientation in technology with better performance in comparing. Credibility importance was related to better performance not only in evidence but also in sourcing.

An SEM analysis confirmed that all items except one (tapping into sourcing) were explained by the latent constructs (sourcing, evidence, and comparing), and they were, in turn, related to the superordinate latent construct civic online reasoning. Finally, civic online reasoning was related to self-rated attitudes and skills as well as educational level, but not age and educational orientation.

The results provide evidence for a prescriptive theory of digital literacy and identify the abilities, attitudes, and background variables associated with both overall performance and the separate skills. Specifically, the results point to a digital divide between well-informed citizens with useful knowledge (education), skills (sourcing at work and fact-checking ability), and attitudes (credibility importance), and other citizens who struggle to navigate news in productive ways on the majority of the items. Below, we discuss the results in relation to the hypotheses and connect them to previous research.

Hypothesis 1: Self-Rated Abilities and Attitudes

Beginning with credibility importance, we hypothesized that performance on evidence would be positively related to a higher rating on credibility importance. Results showed that a higher rating on credibility importance was related to better performance on both evidence and sourcing, but not on comparing. The SEM analysis suggests one interpretation: the standardized coefficients for the items measuring comparing were distinctly lower than those for the items tapping into evidence and sourcing. The same was true for the path between comparing and civic online reasoning. This implies either that the items used to assess comparing were inadequate or that the relationship between comparing and civic online reasoning is genuinely weaker. We lean toward the former explanation. First, the correlation between the two comparing items (racism and weight loss) was weak, and second, we could not control whether participants double-checked the information online. It may therefore be that participants relied on the face validity of the articles, which led them to draw incorrect conclusions and resulted in inadequate performance assessments. A design including items that measure the mastery level (corroboration) might thus have yielded a significant relationship between comparing and credibility importance.

One reason why credibility importance was associated with good performance on both evidence and sourcing may be that it measures an attitude central to critically assessing online news. In the present study, credibility importance is a rather crude measure, and hence, it is not possible to give a detailed account of the mechanisms behind the association. One explanation is that the attitude is linked to a mindset of openness toward others' knowledge and an interest in the news. This, in turn, may indicate that people who actively search for news to become better informed, so-called news-seekers (Strömbäck et al., 2013), are better at navigating digital information. Another tentative explanation is that valuing the credibility of news is linked to actively open-minded thinking (AOT), which in turn has been shown to be highly correlated with the ability to identify misinformation (Roozenbeek et al., 2022). Similarly, credibility importance may be linked to a propensity to seek out evidence-based information and to science curiosity (Kahan et al., 2017). Future research should investigate the relationship between thinking dispositions and personality variables as well as educational level and orientation.

For internet information reliability, the results were in the hypothesized direction: a higher rating was associated with poor performance on evidence, and internet information reliability was negatively related to civic online reasoning in the SEM analysis. The findings replicate previous results (Nygren and Guath, 2019), and we speculate that the attitude may reflect a naïve view of the internet. This is consistent with research showing a relationship between news literacy and skepticism of information quality on social media (Vraga and Tully, 2019), as well as between critical ability and skepticism of news algorithms (Ku et al., 2019). However, we did not find a relationship between internet information reliability and sourcing or comparing. One interpretation is that the items measuring evidence were pictures with no or very little text, whereas the items measuring sourcing and comparing contained both pictures and text. Hence, the attitude may be primarily related to the skill of assessing the credibility of pictorial information.

For self-rated fact-checking ability, the results on evidence were opposite to the hypothesized direction: higher ratings on fact-checking ability were associated with better performance. This is consistent with the SEM analysis, in which fact-checking ability was positively related to civic online reasoning. The hypothesis on fact-checking was based on results from a study of adolescents' (age 16–19) abilities to evaluate information online (Nygren and Guath, 2019), and it is possible that adults have better self-knowledge and consequently suffer less from overconfidence (e.g., Kruger and Dunning, 1999). A tentative interpretation of the current result is that self-reported fact-checking ability is related to an ability to debunk visual disinformation, which is supported by the absence of an effect of fact-checking on other items where the image was less central.

Finally, for sourcing at work, the results were also in the hypothesized direction for evidence but not for the other skills, replicating the pattern seen for internet information reliability, where we observed a relationship only with evidence, which in turn was related to civic online reasoning. In the SEM analysis, there was also a positive relationship between sourcing at work and fact-checking ability. The results suggest that people who engage in sourcing at work and regard online information as unreliable are better at evaluating pictorial information. One interpretation is that the skill measured by the evidence items is connected to photo-visual literacy and to experience of manipulating digital media (Eshet, 2004; Shen et al., 2019). Further investigation is needed to establish which components of sourcing at work underlie its relationship with the ability to assess evidence in pictorial stimuli.

Hypothesis 2: Educational Level and Orientation

On educational level, the results were in the hypothesized direction: higher education was associated with better performance. Specifically, having 2 or 3 years of university education, compared with 2 years of secondary school, was associated with better performance on sourcing and evidence. In the SEM analysis, higher education was also associated with civic online reasoning. However, on sourcing, 3 years of upper secondary school was also associated with better performance. The results in line with the hypothesis are consistent not only with research showing an association with educational level (Van Deursen et al., 2011) but also with research linking the propensity to adjust one's confidence in a piece of news to cognitive ability (De Keersmaecker and Roets, 2017).

On educational orientation, the results supported the hypothesis that those with an educational orientation within humanities/arts would perform better, but only on evidence. In addition, participants with an orientation in natural science performed better on sourcing, and participants with an orientation in technology performed better on comparing. In the SEM analysis, however, there was no significant relationship between civic online reasoning and educational orientation. This may be because educational orientation was related to only a subset of the skills required for civic online reasoning, or because of how orientation was coded.6

The superior performance among participants with an orientation in humanities and arts may be linked to previous research indicating that experience from working with manipulated texts and images may support digital literacy (Shen et al., 2019). Another possibility is that people with education in arts have subject-specific knowledge and habits of mind (Sawyer, 2015) related to the test items. If this is true, disciplinary literacy may partly explain the association between performance and educational orientation. Domain expertise is constituted by a specific composition of knowledge, skills, and attitudes related to a specific domain (Shanahan et al., 2011), and one possibility is that high-performing participants have acquired disciplinary literacy during their education. Identifying advertisements disguised as medical news or information about natural resources designed like news may be less difficult for people with a background in natural science, leading to better performance on sourcing. The fact that those with an orientation in technology performed better on the comparing items is intriguing since they measured a different skill, namely that of comparing evidence in two articles. One possibility is that participants with an educational orientation in technology used technical resources to double-check the information to a larger extent than other participants.

Hypothesis 3: Older Adults Will Perform Worse

The results did not confirm the hypothesis that older age is predictive of poor performance on sourcing, or on any other skill. Results showed poor performance in the 45–60 years age category (compared with the baseline of 19–29 years) on two skills, comparing and evidence. For evidence, the 30–44 years age category also performed worse; again, this is not the oldest age category. The study by Guess et al. (2019), which found a relationship between older age and poor performance in evaluating information on the internet, targeted the age group 65+ and examined their sharing of false news. We looked at diverse skills in assessing the credibility of online news, and because our oldest category included everyone aged 60 years and above, our older participants were presumably younger. This is consistent with research (Hunsaker and Hargittai, 2018) showing that older adults are a heterogeneous group and that internet skills are associated with higher education and income, not age per se. It is also partly supported by the SEM analysis, which did not find a relationship between age category and civic online reasoning.
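The age comparisons above are made against a baseline category. As a minimal sketch of how such treatment (dummy) coding typically works, assuming the four age categories mentioned in the text with 19–29 years as the reference level, each non-baseline category receives its own 0/1 indicator, and its regression coefficient estimates the difference from the baseline group rather than from all other participants. The label strings and the helper `dummy_code` are hypothetical; the authors' exact coding is not reproduced here.

```python
# Hypothetical labels for the age categories mentioned in the text;
# the first entry is treated as the reference (baseline) level.
AGE_LEVELS = ["19-29", "30-44", "45-60", "60+"]


def dummy_code(value: str, levels: list[str], prefix: str = "age") -> dict[str, int]:
    """Treatment coding: one 0/1 indicator per non-baseline level.

    The baseline (levels[0]) is encoded as all zeros, so a regression
    coefficient on, e.g., 'age_45-60' estimates the difference between
    that group and the baseline group, holding other predictors constant.
    """
    if value not in levels:
        raise ValueError(f"unknown level: {value!r}")
    return {f"{prefix}_{level}": int(value == level) for level in levels[1:]}


if __name__ == "__main__":
    for level in AGE_LEVELS:
        print(level, dummy_code(level, AGE_LEVELS))
```

A negative coefficient on the 45–60 indicator therefore means "worse than 19–29 year-olds", which is why the absence of an effect for the oldest indicator does not by itself rule out differences among the non-baseline groups.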

SEM Analysis

The SEM analysis confirmed a majority of the results from the regressions on the specific skills that constitute civic online reasoning. More importantly, it provided the first evaluation of the prescriptive theory of civic online reasoning. Except for Aftonbladet (measuring sourcing), all items used to measure each skill tapped into their latent constructs: sourcing, evidence, and comparing. Further, the three skills were described by one latent construct, civic online reasoning, which in turn was related to all self-rated attitudes and skills and to the background variables except age and educational orientation. However, the internal consistency of comparing was unacceptably low, indicating that the items used to measure comparing (weight loss and racism) were not consistent. One explanation could be that the skills to compare information in the contexts of health and politics rarely co-occur in the same person. Future research should try to delineate the importance of the topic for people's ability to corroborate information. Nevertheless, there is evidence that the items tap into the latent skills they are intended to measure and that the superordinate construct of civic online reasoning is related to the attitudes and skills that have previously been associated with specific items.
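Internal consistency of this kind is commonly summarized with Cronbach's alpha (Cronbach, 1951): α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₓ), where k is the number of items, σ²ᵢ is the variance of item i, and σ²ₓ is the variance of the summed score. The sketch below is a minimal pure-Python implementation for illustration only; the toy 0/1 scores are hypothetical and are not the study's data. With only two items, alpha depends heavily on how much the items covary.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores for the same respondents.

    Population variances are used throughout; the choice cancels in the
    ratio, so sample variances would give the same alpha.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("all items must have the same number of respondents")
    totals = [sum(scores) for scores in zip(*items)]  # summed score per respondent
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("summed scores have zero variance")
    item_var_sum = sum(pvariance(item) for item in items)
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Hypothetical 0/1 scores for 8 respondents (NOT the study's data).
    identical = [[1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 1, 1, 0, 0, 1, 0]]
    uncorrelated = [[1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 1, 1, 0, 0]]
    print(cronbach_alpha(identical))     # 1.0
    print(cronbach_alpha(uncorrelated))  # 0.0
```

Two identical items give an alpha of 1.0, whereas two uncorrelated items give 0.0, which illustrates how two weakly related comparing items can produce an unacceptably low value.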

Limitations

The participants in the current study were recruited through an online survey company, and we therefore cannot rule out that they were more confident (and skilled) in using computers than the average Swedish citizen. Further, the survey platform did not allow the participants to follow links; hence, they could not compare and corroborate information “in the wild” as did the participants in the study by Wineburg and McGrew (2019). Because of this, we can only draw conclusions about what McGrew et al. (2018) denoted as “the beginning and emerging level of the development process of corroboration” (p. 173), which we label comparing. Assessing the mastery level would have required the participants to search online to corroborate the evidence; moreover, because of the online format, we do not know whether they sought help when answering the questions (e.g., by googling pictures or articles or by asking someone else). Another limitation is that we could not operationalize the site characteristics (Metzger and Flanagin, 2015) that may have contributed to the participants' credibility judgments. However, the survey design made it possible to reach a relatively large national sample, and despite the limitations, we are fairly confident in the validity of the results. Finally, the number of items used to measure each latent variable in the SEM analysis was small, which may affect the validity of the results and may have contributed to the low internal reliability of the comparing items. Although the final model fit the data quite well, we encourage researchers to validate the model with a larger number of items on a different sample of participants.
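To make the point about scale length concrete, the Spearman–Brown prophecy formula (a standard psychometric result, not used in the original analysis) projects the reliability ρ* of a scale lengthened by a factor k, assuming the added items are parallel to the existing ones. The starting value ρ = 0.40 below is purely hypothetical and is not the study's estimate for the comparing items.

```latex
\rho^{*} = \frac{k\,\rho}{1 + (k - 1)\,\rho},
\qquad
k = 2:\; \rho^{*} = \frac{2(0.40)}{1 + 0.40} = \frac{0.80}{1.40} \approx 0.57,
\qquad
k = 3:\; \rho^{*} = \frac{3(0.40)}{1 + 2(0.40)} = \frac{1.20}{1.80} \approx 0.67.
```

Under these assumptions, doubling a two-item scale to four parallel items would raise reliability from 0.40 to about 0.57, and tripling it to six items would raise it to about 0.67.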

Conclusions

The present study replicated previously observed relationships between civic online reasoning and mindsets and background variables, and it offers a unique evaluation of the prescriptive theory of civic online reasoning. Results showed that the items used to measure the separate skills were related to the latent constructs they were intended to measure, which in turn were related to the superordinate skill, civic online reasoning. Further, both the separate skills and the latent construct were related to higher education, appreciation of credible news, sourcing at work, and fact-checking ability. However, older age was not related to poor performance on any of the skills or on the latent construct of civic online reasoning. Instead, our results suggest that civic online reasoning is constituted by a diverse set of knowledge, skills, attitudes, and background characteristics that need to be carefully mapped to site characteristics to get a more complete picture of online credibility assessments and of how civic online reasoning skills may be supported.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/ze53h/.

Ethics Statement

The studies involving human participants were reviewed and approved by Regionala etikprövningsnämnden i Uppsala (the Regional Ethical Review Board in Uppsala; Dnr 2018/397, 2018-11-07). The participants provided their written informed consent to participate in this study.

Author Contributions

MG: conceptualization, formal analysis, and writing—original draft preparation. TN: resources, project administration, writing—reviewing, and editing. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by VINNOVA (Grant Number 2018-01279).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to direct a special thanks to Jenny Wiksten Folkeryd, Ebba Elwin, Fredrik Brounéus, Kerstin Ekholm, Maria Lindberg, and members of the Stanford History Education Group for their valuable input and support in the process.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.721731/full#supplementary-material

Footnotes

1. ^According to Ullrich (2008, p. 85), “[p]rescriptive learning theories are concerned with guidelines that describe what to do in order to achieve specific outcomes. They are often based on descriptive theories; sometimes they are derived from experience. Instructional design is the umbrella which assembles prescriptive theories”.

2. ^Test items inspired by Wineburg et al. (2016) and McGrew et al. (2017, 2018) asking students to compare articles.

3. ^Image in manipulative framing previously used in McGrew et al. (2018).

4. ^http://www.codex.vr.se/forskninghumsam.shtml

5. ^A closer inspection of the missing values revealed that they were missing not at random (MNAR). The literature on imputation of missing values does not recommend imputation for MNAR unless one has enough information to generate unbiased values (e.g., Mack et al., 2018).

6. ^Educational orientation was coded as a categorical variable consisting of seven categories, which we arranged in the following order: health, agriculture, pedagogics, social sciences, humanities and arts, technology, and natural sciences. The rationale was that previous results showed that the more theoretical educations were related to superior performance.

References

Aufderheide, P. (1993). Media Literacy. A Report of the National Leadership Conference on Media Literacy. ERIC.

Bawden, D. (2001). Information and digital literacies: a review of concepts. J. Document. 57, 218–259. doi: 10.1108/EUM0000000007083

Beaujean, A. A. (2014). Latent Variable Modeling Using R: A Step-by-Step Guide, 1st Edn. Routledge. doi: 10.4324/9781315869780

Brand-Gruwel, S., Kammerer, Y., van Meeuwen, L., and van Gog, T. (2017). Source evaluation of domain experts and novices during Web search. J. Comput. Assisted Learn. 33, 234–251. doi: 10.1111/jcal.12162

Breakstone, J., Smith, M., Wineburg, S., Rapaport, A., Carle, J., Garland, M., et al. (2019). Students' civic online reasoning: a national portrait. Available online at: https://purl.stanford.edu/gf151tb4868 (accessed December 21, 2020).

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/BF02310555

De Keersmaecker, J., and Roets, A. (2017). ‘Fake news’: incorrect, but hard to correct. The role of cognitive ability on the impact of false information on social impressions. Intelligence 65, 107–110. doi: 10.1016/j.intell.2017.10.005

Eraut, M. (2000). Non-formal learning and tacit knowledge in professional work. Br. J. Educ. Psychol. 70, 113–136. doi: 10.1348/000709900158001

Eshet, Y. (2004). Digital literacy: A conceptual framework for survival skills in the digital era. J. Educ. Multimed. Hypermed. 13, 93–106. Available online at: https://www.learntechlib.org/primary/p/4793/ (accessed June 20, 2022).

EU (2018). Action Plan against Disinformation. Joint Communication to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions. Available online at: https://ec.europa.eu/information_society/newsroom/image/document/2018-49/action_plan_against_disinformation_26A2EA85-DE63-03C0-25A096932DAB1F95_55952.pdf (accessed June 20, 2022).

Flynn, D. J., Nyhan, B., and Reifler, J. (2017). The nature and origins of misperceptions: understanding false and unsupported beliefs about politics. Polit. Psychol. 38, 127–150. doi: 10.1111/pops.12394

Gigerenzer, G., and Gaissmaier, W. (2011). Heuristic decision making. Annu. Rev. Psychol. 62, 451–482. doi: 10.1146/annurev-psych-120709-145346

Greene, J. A., and Yu, S. B. (2016). Educating critical thinkers: the role of epistemic cognition. Policy Insights Behav. Brain Sci. 3, 45–53. doi: 10.1177/2372732215622223

Guess, A., Nagler, J., and Tucker, J. (2019). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 5, eaau4586. doi: 10.1126/sciadv.aau4586

Hedman, F., Sivnert, F., Kollanyi, B., Narayanan, V., Neudert, L., and Howard, P. N. (2018). News and Political Information Consumption in Sweden: Mapping the 2018 Swedish General Election on Twitter. Oxford: Oxford University, United Kingdom.

Hobbs, R. (2010). Digital and Media Literacy: A Plan of Action. A White Paper on the Digital and Media Literacy Recommendations of the Knight Commission on the Information Needs of Communities in a Democracy. Washington, DC: The Aspen Institute.

Hunsaker, A., and Hargittai, E. (2018). A review of Internet use among older adults. New Media Soc. 20, 3937–3954. doi: 10.1177/1461444818787348

Kahan, D. (2017). Misconceptions, misinformation, and the logic of identity-protective cognition. SSRN Electron. J. doi: 10.2139/ssrn.2973067

Kahan, D., Landrum, A., Carpenter, K., Helft, L., and Hall Jamieson, K. (2017). Science curiosity and political information processing. Polit. Psychol. 38, 179–199. doi: 10.1111/pops.12396

Kammerer, Y., Amann, D. G., and Gerjets, P. (2015). When adults without university education search the Internet for health information: the roles of Internet-specific epistemic beliefs and a source evaluation intervention. Comput. Hum. Behav. 48, 297–309. doi: 10.1016/j.chb.2015.01.045

Kozyreva, A., Lewandowsky, S., and Hertwig, R. (2020). Citizens versus the internet: confronting digital challenges with cognitive tools. Psychol. Sci. Public Interest 21, 103–156. doi: 10.1177/1529100620946707

Kruger, J., and Dunning, D. (1999). Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J. Pers. Soc. Psychol. 77, 1121. doi: 10.1037/0022-3514.77.6.1121

Ku, K. Y. L., Kong, Q., Song, Y., Deng, L., Kang, Y., and Hu, A. (2019). What predicts adolescents' critical thinking about real-life news? The roles of social media news consumption and news media literacy. Think. Skills Creat. 33, 100570. doi: 10.1016/j.tsc.2019.05.004

Leaning, M. (2019). An approach to digital literacy through the integration of media and Information literacy. Media Commun. 7, 4–13. doi: 10.17645/mac.v7i2.1931

Lee, N. M. (2018). Fake news, phishing, and fraud: a call for research on digital media literacy education beyond the classroom. Commun. Educ. 67, 460–466. doi: 10.1080/03634523.2018.1503313

Lewandowsky, S., Ecker, U. K., Seifert, C. M., Schwarz, N., and Cook, J. (2012). Misinformation and its correction: continued influence and successful debiasing. Psychol. Sci. Public Interest 13, 106–131. doi: 10.1177/1529100612451018

Livingstone, S. (2004). Media literacy and the challenge of new information and communication technologies. Commun. Rev. 7, 3–14. doi: 10.1080/10714420490280152

Luguri, J., and Strahilevitz, L. (2019). Shining a Light on Dark Patterns. Chicago, IL: University of Chicago. doi: 10.2139/ssrn.3431205

Mack, C., Su, Z., and Westreich, D. (2018). Managing Missing Data in Patient Registries: Addendum to Registries for Evaluating Patient Outcomes: A User's Guide, 3rd Edn. Rockville, MD: Agency for Healthcare Research and Quality (US).

McGrew, S., Breakstone, J., Ortega, T., Smith, M., and Wineburg, S. (2018). Can students evaluate online sources? Learning from assessments of civic online reasoning. Theory Res. Soc. Educ. 46, 165–193. doi: 10.1080/00933104.2017.1416320

McGrew, S., Ortega, T., Breakstone, J., and Wineburg, S. (2017). The challenge that's bigger than fake news: Civic reasoning in a social media environment. Am. Educ. 41, 1–44. Available online at: https://www.aft.org/sites/default/files/ae_fall2017.pdf

Metzger, M. J., and Flanagin, A. J. (2015). Psychological approaches to credibility assessment online. Handb. Psychol. Commun. Technol. 32, 445. doi: 10.1002/9781118426456.ch20

Metzger, M. J., Flanagin, A. J., and Medders, R. B. (2010). Social and heuristic approaches to credibility evaluation online. J. Commun. 60, 413–439. doi: 10.1111/j.1460-2466.2010.01488.x

Nygren, T., and Guath, M. (2019). Swedish teenagers' difficulties and abilities to determine digital news credibility. Nordicom Rev. 40, 23–42. doi: 10.2478/nor-2019-0002

Rapacioli, P. (2018). Good Sweden, Bad Sweden: The Use and Abuse of Swedish Values in a Post-Truth World. Stockholm: Volante.

Roozenbeek, J., Maertens, R., Herzog, S. M., Geers, M., Kurvers, R., Sultan, M., et al. (2022). Susceptibility to misinformation is consistent across question framings and response modes and better explained by myside bias and partisanship than analytical thinking. Judg. Decis. Mak. 17, 547–573.

Rosseel, Y. (2012). lavaan: an R package for structural equation modeling. J. Statist. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Sawyer, K. (2015). A call to action: the challenges of creative teaching and learning. Teach. Coll. Rec. 117, 1–34. doi: 10.1177/016146811511701001

Shanahan, C., Shanahan, T., and Misischia, C. (2011). Analysis of expert readers in three disciplines. J. Literacy Res. 43, 393–429. doi: 10.1177/1086296X11424071

Shen, C., Kasra, M., Pan, W., Bassett, G. A., Malloch, Y., and O'Brien, J. F. (2019). Fake images: the effects of source, intermediary, and digital media literacy on contextual assessment of image credibility online. New Media Soc. 21, 438–463. doi: 10.1177/1461444818799526

Strömbäck, J., Djerf-Pierre, M., and Shehata, A. (2013). The dynamics of political interest and news media consumption: a longitudinal perspective. Int. J. Public Opin. Res. 25, 414–435. doi: 10.1093/ijpor/eds018

Ullrich, C. (2008). “Descriptive and prescriptive learning theories,” in Pedagogically Founded Courseware Generation for Web-Based Learning. Lecture Notes in Computer Science, vol. 5260 (Berlin: Springer). doi: 10.1007/978-3-540-88215-2_3

van Deursen, A. J., and van Dijk, J. A. (2015). Internet skill levels increase, but gaps widen: a longitudinal cross-sectional analysis (2010–2013) among the Dutch population. Inform. Commun. Soc. 18, 782–797. doi: 10.1080/1369118X.2014.994544

Van Deursen, A. J., van Dijk, J. A., and Peters, O. (2011). Rethinking Internet skills: the contribution of gender, age, education, Internet experience, and hours online to medium-and content-related Internet skills. Poetics 39, 125–144. doi: 10.1016/j.poetic.2011.02.001

Vraga, E. K., and Tully, M. (2019). News literacy, social media behaviors, and skepticism toward information on social media. Inform. Commun. Soc. 24, 1–17. doi: 10.1080/1369118X.2019.1637445

Wardle, C., and Derakhshan, H. (2017). Information Disorder: Toward an Interdisciplinary Framework for Research and Policymaking. Cambridge; Strasbourg: Council of Europe report DGI.

Wineburg, S., and McGrew, S. (2019). Lateral reading and the nature of expertise: reading less and learning more when evaluating digital information. Teach. Coll. Rec. 121, 1–40. doi: 10.1177/016146811912101102

Wineburg, S., McGrew, S., Breakstone, J., and Ortega, T. (2016). Evaluating Information: The Cornerstone of Civic Online Reasoning. Stanford Digital Repository. Available online at: http://purl.stanford.edu/fv751yt5934 (accessed December 20, 2016).

Zarocostas, J. (2020). How to fight an infodemic. Lancet 395, 676. doi: 10.1016/S0140-6736(20)30461-X

Keywords: media information literacy, critical thinking (skills), fact-checking behavior, disinformation, SEM

Citation: Guath M and Nygren T (2022) Civic Online Reasoning Among Adults: An Empirical Evaluation of a Prescriptive Theory and Its Correlates. Front. Educ. 7:721731. doi: 10.3389/feduc.2022.721731

Received: 07 June 2021; Accepted: 06 June 2022;

Published: 08 July 2022.

Edited by:

Tom Crick, Swansea University, United Kingdom

Reviewed by:

Julie Vaiopoulou, Aristotle University of Thessaloniki, Greece

Joshua Littenberg-Tobias, WGBH Educational Foundation, United States

Copyright © 2022 Guath and Nygren. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mona Guath, mona.guath@psyk.uu.se
