- Department of Educational Science, University of Freiburg, Freiburg, Germany

A goal of teacher education is to promote evidence-based teaching. Teacher beliefs are assumed to act as facilitators or barriers to evidence-based thinking and practices. In three sub-studies with a total of N = 346 German student teachers, the extent of student teachers’ beliefs about education science and their consequences and sources were investigated. First, the results of questionnaire data indicated that student teachers held skeptical beliefs about education science: On average, they perceived education science as less complex than their subject disciplines and as less important for successful teaching than their subject didactics. Additionally, they endorsed myths about learning and teaching. Second, the more skeptical the student teachers’ beliefs, the lower their engagement in education science courses within teacher education. Third, hypotheses about potential sources of these skeptical beliefs were experimentally tested as starting points for changing beliefs. The results showed that the “soft” research methods typical of education science and a general tendency to perceive research findings as trivial (hindsight bias) might contribute to this devaluation. Furthermore, students studying the natural sciences and students with little experience with education science held more skeptical beliefs.

Introduction

There are increasing demands for teaching to evolve into a more evidence-based profession (Slavin, 2002; Bauer and Prenzel, 2012; Ferguson, 2021). Such demands relate not only to educational policy but also to teachers’ professional thinking and behavior (Davies, 1999; Bauer and Prenzel, 2012; Niemi, 2016). Many countries require in their teacher education standards that teachers should be able to plan and design lessons based on scientific evidence (e.g., see Bauer and Prenzel, 2012, for an overview). Orientation toward the best available knowledge is standard in other professions, such as medicine (Sackett et al., 1996; Helmsley-Brown and Sharp, 2003). However, teachers rarely draw on evidence when planning, analyzing, and reflecting on job-related situations (Dagenais et al., 2012; Patry, 2019). A central reason for this lack of evidence-based thinking lies in teachers’ beliefs (e.g., Patry, 2019). There is evidence that people in general hold skeptical beliefs about disciplines such as psychology or education science (Lilienfeld, 2012). The content of such disciplines is commonly perceived as less complex than that of the natural sciences (Keil et al., 2010). In particular, the research methods in disciplines such as education science seem to be perceived as “soft” (Munro and Munro, 2014, p. 533), providing less valid results than “hard” (Munro and Munro, 2014, p. 533) methods in the natural sciences. Similarly, (prospective) teachers often believe that findings from education science are of little relevance to their teaching in the classroom (Broekkamp and Hout-Wolters, 2007). These beliefs contradict research findings highlighting the importance of teachers’ knowledge of empirical evidence from education science – for example, knowledge about effective teaching methods or effective classroom management strategies (e.g., König and Pflanzl, 2016; Ulferts, 2019; Voss et al., 2022) – for their professional success.

Consequently, in a series of three studies, I investigated whether student teachers held skeptical beliefs about education science and examined the consequences and sources of such skeptical beliefs. First, I implemented questionnaires capturing the extent of student teachers’ beliefs about the importance and complexity of education science compared to the subject disciplines and the subject didactics. Second, I examined the consequences of such skeptical beliefs for engagement in education science courses within the teacher education program by using correlational data. Third, I conducted experimental studies to test hypotheses about sources of student teachers’ skeptical beliefs.

Beliefs about evidence from education science

Conceptualizations of teachers’ professional knowledge typically distinguish three domains (Shulman, 1987): content knowledge (Krauss et al., 2008), pedagogical content knowledge (Krauss et al., 2008), and pedagogical-psychological knowledge (Voss et al., 2011). In accordance with this typology, in many countries, teacher education curricula (e.g., the curricula of the German teacher education system) require pre-service teachers to take courses in three disciplines (Bauer and Prenzel, 2012), namely in the subject disciplines to acquire content knowledge (i.e., the knowledge about the subject matter they will be teaching), subject didactics to acquire pedagogical content knowledge (i.e., how to make the subject matter accessible to their future students), and education science to acquire knowledge about pedagogical-psychological phenomena relevant for students’ learning in general (e.g., knowledge about learning strategies, students’ motivation, assessment).

Prior research has indicated that beliefs about education science as a discipline within teacher education are rather skeptical (Cramer, 2013; Siegel and Daumiller, 2021). Beliefs are personal views about the self and the world that are thought to be true (Richardson, 1996). Beliefs are organized in a complex mental network and often termed subjective theories (Patry, 2019). Analogously to scientific theories, people use such subjective theories or beliefs to describe, explain, and predict phenomena, but such beliefs have a different epistemic status. While scientific theories are based on objectifiable, justifiable bodies of knowledge, beliefs are based on experience. Thus, beliefs do not meet the criterion of being objectifiable through scientific evidence (Richardson, 1996). Therefore, systems of beliefs typically contain elements that are not based on scientific evidence and not consistent with scientific theories. The skeptical beliefs about education science primarily manifest themselves with respect to two aspects.

First, teachers often doubt that empirical evidence from education science is important for teachers (Beycioglu et al., 2010; Dagenais et al., 2012; Cain, 2016; Thomm et al., 2021a). Many teachers assume that such evidence is not applicable to their practice (e.g., Merk et al., 2017; Joram et al., 2020; review by van Schaik et al., 2018) and perceive a gap between education science research and daily challenges in the classroom (Broekkamp and Hout-Wolters, 2007; Merk et al., 2017). There is also some evidence that student teachers perceive education science as even less important than the subject disciplines (e.g., Cramer, 2013).

Second, research on epistemological beliefs has shown that student teachers often hold unsophisticated epistemological beliefs about education science topics (Guilfoyle et al., 2020; Moser et al., 2021). Epistemological beliefs are subjective theories about knowledge and knowing (Hofer, 2001). Existing conceptualizations identify various dimensions of epistemological beliefs. However, most conceptualizations include complexity of knowledge as one such dimension (e.g., Schommer, 1990; Hofer and Pintrich, 1997). Research results have indicated that student teachers hold rather unsophisticated beliefs (e.g., Brownlee et al., 2001) and, for instance, believe that education science content is not particularly complex (Lilienfeld, 2012). Even children rate psychological questions as easier to answer than questions from disciplines like chemistry or physics (Keil et al., 2010).

In addition to general beliefs about education science as a discipline of teacher education, student teachers often hold misconceptions about specific educational topics. Misconceptions are beliefs contradicted by established research findings in a discipline (Bensley and Lilienfeld, 2017). Research has shown that teachers and student teachers often endorse misconceptions (e.g., Dekker et al., 2012; Bensley and Lilienfeld, 2017; Pieschl et al., 2021). Examples of such misconceptions are that (1) instruction needs to be adapted to specific learning styles (Macdonald et al., 2017; Eitel et al., 2021), (2) it is primarily the teacher’s personality that matters for teaching success (Darling-Hammond, 2006), (3) having more experience automatically makes one a better teacher, and (4) smaller class sizes automatically lead to better student learning (Menz et al., 2021a). Existing research exposes these four statements as misconceptions: (1) There is no solid evidence of any benefit to adapting instruction to learning styles (e.g., Kirschner and van Merriënboer, 2013). (2) Several studies have shown that teachers’ profession-specific competencies (rather than general personality traits) are important for teaching success (e.g., Kunter et al., 2013). (3) Evidence also indicates that teachers with more experience are not automatically better teachers; rather, it depends on how teachers leverage their experiences (Friedrichsen et al., 2009; Kleickmann et al., 2013). (4) Several studies have indicated that a smaller class size is no guarantee of better learning (Hattie, 2009). Such misconceptions that receive widespread endorsement are also called myths (e.g., neuromyths, Dekker et al., 2012).

Consequences of student teachers’ beliefs for their learning

It is assumed that beliefs have consequences for people’s motivation and behavior (Fives and Buehl, 2012; Buehl and Beck, 2015), because they are thought to serve as a filter for interpreting new experiences (Pajares, 1992): Humans always perceive situations through the lens of their existing beliefs, which affect how they select and process information and how they make decisions in a given situation (Fives and Buehl, 2012; Patry, 2019).

This filtering effect of beliefs is assumed to be particularly important in the context of teaching and teacher education (Yadav et al., 2011; Brownlee et al., 2017). Beliefs are formed very early during our school careers (for an overview, see, for example, Pajares, 1992; for an empirical study, see, for example, Haney and McArthur, 2002). Accordingly, student teachers bring a set of fixed beliefs based on their experiences with them into their teacher education program. It is assumed that these beliefs shape what and how pre-service teachers learn during teacher education (Fives and Buehl, 2012; Stark, 2017; Ferguson, 2021). For instance, inadequate beliefs or misconceptions may lead to an oversimplification of complex information and result in poor learning outcomes (Schommer-Aikins, 2004; Moser et al., 2021). Accordingly, the results of interview studies with rather small samples (Holt-Reynolds, 1992; Bondy et al., 2007) have shown that students interpret situations in line with their beliefs. Furthermore, in a study with Norwegian student teachers, Bråten and Ferguson (2015) found evidence that more positive beliefs by student teachers about the importance of formalized sources of knowledge (such as research articles or textbooks) are associated with higher motivation to learn from formal teacher training courses (see also Chan, 2003 for a study with student teachers from Hong Kong and Siegel and Daumiller, 2021 for a mixed-method study with a relatively small sample).

Sources of student teachers’ beliefs

Because beliefs about teaching and learning are based on years of one’s own school experiences, these beliefs are thought to have multifarious sources (Lilienfeld, 2012).

(1) Disciplinary culture: People often assume that the impact of science on society strongly differs across disciplines (Janda et al., 1998; Richardson and Lacroix, 2021). Physics and mathematics typically have the highest prestige, whereas social sciences such as psychology, sociology, or education science have the lowest (e.g., Simonton, 2006; Klavans and Boyack, 2009). This low prestige may contribute to the devaluation of education science among student teachers. Furthermore, research on epistemological beliefs has revealed interindividual differences in students’ beliefs by disciplinary culture: Students studying “soft” disciplines (e.g., psychology, education science) held more sophisticated epistemological beliefs than students of “hard” sciences (e.g., mathematics, physics, biology; Paulsen and Wells, 1998; Karimi, 2014). However, there are also contradictory research results. For instance, Rosman et al. (2020) found hardly any differences in epistemological beliefs between biology and psychology students.

(2) Experience with the academic discipline: Based on experiences from their own school days, student teachers enter teacher education with a fixed set of beliefs about teaching and learning (Richardson, 1996; Fives and Buehl, 2012). These beliefs are often at odds with the scientific theories taught at universities (Joram, 2007; Fives and Buehl, 2012). As they gain more experience with the academic discipline of education science, student teachers should develop a more appropriate representation of it. Accordingly, (limited) research results indicate that students with more experience (e.g., students with more courses in the discipline or students in a master’s degree program vs. bachelor’s degree program) hold more positive beliefs about the discipline than students with less experience (Bartels et al., 2009, for psychology students; Moser et al., 2021, for pre-service teachers).

(3) “Soft” research methods of the discipline: Many people have an unfavorable opinion of psychology’s scientific quality (Lilienfeld, 2012). Notably, neuropsychological evidence is perceived as closer to the “hard” sciences than evidence from the other, “softer” subdisciplines of psychology (Keil et al., 2010). For instance, there is manifold evidence on the seductive allure effect (Weisberg et al., 2008): People judge explanations of psychology findings as better when those explanations contain logically irrelevant neuroscience information (e.g., Weisberg et al., 2008; Hopkins et al., 2016). Consequently, distrust in the reliability of evidence from soft sciences such as education science may also be rooted in the typical research methods of education science as opposed to “harder” sciences such as chemistry, physics, or neuropsychology (Lilienfeld, 2012). For instance, Munro and Munro (2014) found evidence in a scenario-based approach that students evaluated the quality of evidence generated with brain magnetic resonance imaging (MRI) more favorably than evidence from cognitive tests.

(4) Preference for information from anecdotal sources: Drawing upon their own experiences in school, teachers often prefer anecdotal information from practitioners to inform their practice compared to evidence from scientific sources (e.g., Ferguson, 2020; Kiemer and Kollar, 2021). Research results have demonstrated that the preference for such non-scientific (i.e., anecdotal) sources contributes to shaping student teachers’ misconceptions about topics from education science (Menz et al., 2021b).

(5) Hindsight bias: Many people believe that most knowledge from “soft” disciplines is obvious (Lilienfeld, 2012). This tendency to view outcomes as foreseeable once we know them is termed hindsight bias (the “I knew it all along” effect, Lilienfeld, 2012, p. 120). Research results have shown that this tendency is pronounced among human beings in general (e.g., in political elections, Blank et al., 2003), as well as among student teachers concerning topics from education science (Wong, 1995). Hindsight bias concerning evidence from education science may thus also contribute to student teachers’ skeptical beliefs about education science.

Thus, there is evidence for potential sources of the skeptical beliefs, but studies with actual samples of student teachers that systematically explore these different sources are lacking.

The present study

Data come from three sub-studies from a research program investigating German secondary school student teachers’ beliefs about education science. In Germany, secondary school teacher training programs are divided into a bachelor’s degree program (six semesters) and a master’s degree program (four semesters). Student teachers study at least two subjects and take courses in the subject didactics of these two subjects as well as in education science. Bachelor’s degree programs at most universities have a clear focus on the two subject disciplines, with the most credits awarded in the subject disciplines, while master’s degree programs have a stronger focus on education science and subject didactics. Although German universities are organized in a federal system with differences across the federal states, this overall structure is found in each state.

In the present study, I first compared student teachers’ beliefs about education science with their beliefs about their subject disciplines and subject didactics. I assumed a devaluation of education science in terms of beliefs about the importance of education science for teaching and the complexity of education science (Sub-Study 1). Furthermore, I expected that student teachers, on average, would endorse myths about educational topics (Sub-Study 3). Second, consequences of these beliefs were investigated with the assumption that skeptical beliefs about education science would be associated with a lower engagement with research from education science and a lower openness to scientific evidence (Sub-Study 2). Third, possible sources of the devaluation of education science were examined. Specifically, I investigated (a) the impact of the subjects students were studying as an indicator of the disciplinary culture [i.e., natural science subjects (STEM) vs. other subjects; Sub-Study 1] and (b) the impact of students’ level of experience with education science (i.e., students in the bachelor’s vs. master’s degree program; Sub-Study 1). As further possible sources, I investigated in two experimental studies (c) whether student teachers tend to devalue evidence from studies using soft research methods in comparison to hard research methods (Sub-Study 2), (d) whether student teachers prefer information from anecdotal (vs. scientific) sources (Sub-Study 2), and (e) whether student teachers tend to believe that evidence from education science is trivial (hindsight bias; Sub-Study 3).

Sub-Study 1

Hypotheses

I assumed that students would hold skeptical beliefs about the importance and complexity of education science. Furthermore, I expected moderating effects of the subject the student teachers were studying and their degree program (bachelor’s versus master’s level).

(1) Hypotheses on the importance of the disciplines for professional success:

• Student teachers believe that education science is less important for professional success than their subject disciplines and subject didactics (importance devaluation hypothesis).

• The tendency to devalue the importance of education science is more pronounced among student teachers studying a STEM subject than among students not studying a STEM subject (importance-by-subject hypothesis).

• The tendency to devalue the importance of education science is more pronounced among student teachers in the bachelor’s degree program than among student teachers in the master’s degree program (importance-by-degree hypothesis).

(2) Hypotheses on the complexity of the disciplines:

• Student teachers evaluate education science as less complex than their subject disciplines and subject didactics (complexity devaluation hypothesis).

• Student teachers studying a STEM subject devalue the complexity of education science more strongly than student teachers not studying a STEM subject (complexity-by-subject hypothesis).

• The tendency to devalue the complexity of education science is more pronounced among student teachers in the bachelor’s degree program than among student teachers in the master’s degree program (complexity-by-degree hypothesis).

I conducted an a priori power analysis in G*Power (Faul et al., 2009) for analyses of variance with the between-subject factors degree program (bachelor’s vs. master’s degree) and subject (STEM vs. non-STEM), with beliefs as the within-subject factor (three levels: beliefs about education science, subject disciplines, and subject didactics; expected medium-sized intercorrelations), and their interaction (α = 0.05, power 1 − β = 0.80). The results indicated that a sample size of N = 36 would be sufficient to detect the expected medium-sized main effects for the within-subject factor, and a sample size of N = 206 would be sufficient to detect the expected small interaction effects between the within-subject factor and the between-subject factors.
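G*Power expresses ANOVA effect sizes as Cohen's f, whereas ANOVA results are commonly reported as η². The following minimal sketch shows the standard conversion between the two and Cohen's conventional labels (f = 0.10 small, 0.25 medium, 0.40 large). The function names are illustrative assumptions; the sketch does not reproduce the G*Power computation itself.

```python
import math


def cohens_f(eta_squared: float) -> float:
    """Convert an (partial) eta-squared value to Cohen's f."""
    if not 0.0 <= eta_squared < 1.0:
        raise ValueError("eta_squared must lie in [0, 1)")
    return math.sqrt(eta_squared / (1.0 - eta_squared))


def eta_squared_from_f(f: float) -> float:
    """Convert Cohen's f back to eta-squared."""
    return f ** 2 / (1.0 + f ** 2)


def label_effect(f: float) -> str:
    """Label an effect using Cohen's conventional thresholds for f."""
    if f >= 0.40:
        return "large"
    if f >= 0.25:
        return "medium"
    if f >= 0.10:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Illustrative values only: a medium effect in f and its eta-squared equivalent.
    print(round(eta_squared_from_f(0.25), 4))
    print(label_effect(cohens_f(0.20)))
```

Under these conventions, a medium effect (f = 0.25) corresponds to η² of roughly 0.06 and a small effect (f = 0.10) to η² of roughly 0.01.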

Materials and methods

Sample

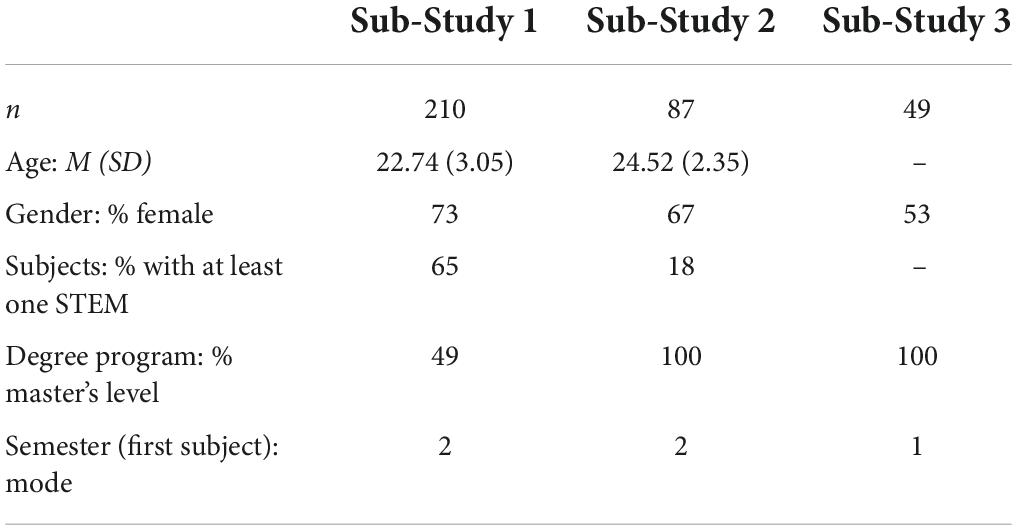

A total of N = 210 student teachers from the University of Freiburg participated in Sub-Study 1 (Table 1). About 50% of the participants were enrolled in a master’s degree program (i.e., Master of Education); the others were enrolled in a bachelor’s degree program with the option to subsequently pursue a Master of Education. Among participants, 65% were studying at least one STEM subject.

Instruments

Beliefs about the disciplines

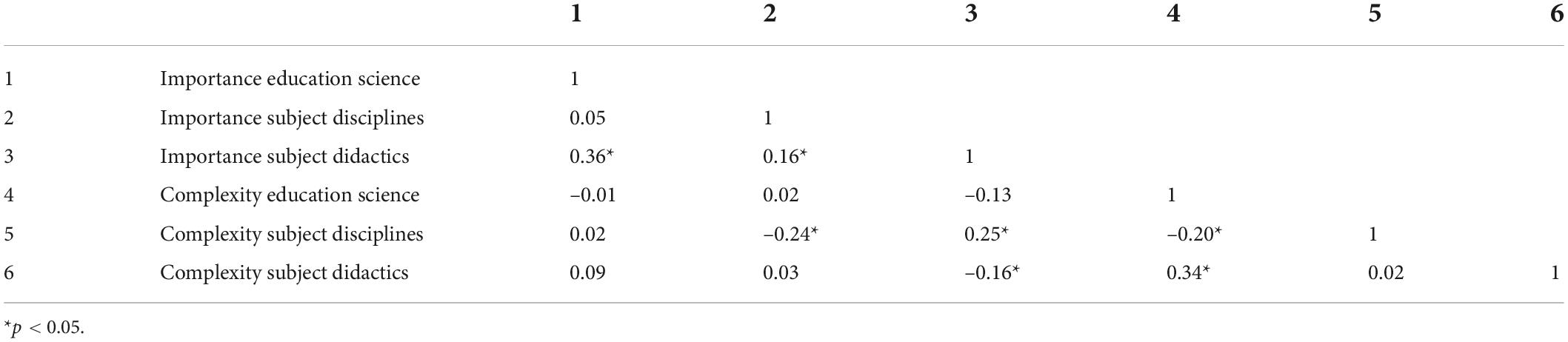

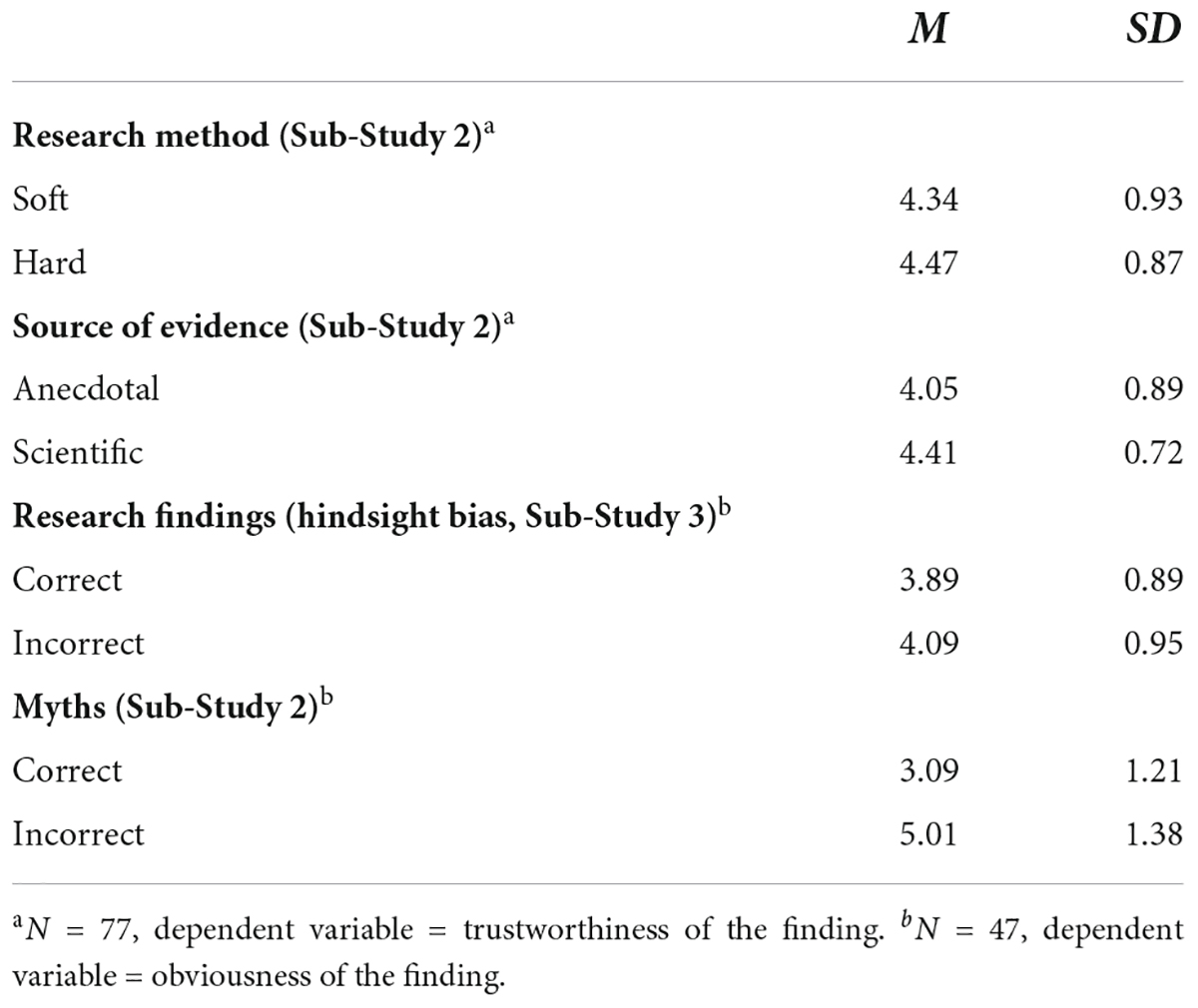

Student teachers answered questions about the importance of education science, the students’ subject disciplines, and subject didactics for professional success and the complexity of the topics covered in these disciplines. The item wording was parallel for the three disciplines. Student teachers indicated their agreement with statements about the importance and complexity of each discipline on 6-point Likert scales ranging from 1 (=completely disagree) to 6 (=completely agree). An example item measuring beliefs about the importance of the discipline for professional success is: Comprehensive knowledge of theories and concepts from education science/my subject disciplines/my subject didactics helps to cope with the daily challenges of being a teacher (7 items for each discipline). An example item measuring beliefs about the complexity of the topics in the discipline is: You have to think hard to understand the topics in education science/my subject disciplines/my subject didactics (4 items for each discipline; see Table 2 for descriptive statistics and Table 3 for the intercorrelations among the scales).

Table 2. Descriptive statistics for the instruments measuring student teachers’ beliefs about the three disciplines (Sub-Study 1 and Sub-Study 2).

Degree program and subjects

Additionally, student teachers indicated their degree program (master’s or bachelor’s degree program) and the subjects they were studying. The subjects were coded as STEM (students studying at least one STEM subject; i.e., mathematics, physics, chemistry, computer science, or geography) versus non-STEM (students studying two subjects in linguistics, the humanities, or the social sciences).
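The coding rule above can be sketched as follows. This is a minimal illustration, assuming subjects are recorded as free-text English labels; the function name and the normalization step are hypothetical and not taken from the study materials.

```python
# Subjects treated as STEM according to the coding rule described in the text.
STEM_SUBJECTS = {
    "mathematics",
    "physics",
    "chemistry",
    "computer science",
    "geography",
}


def code_subject_group(subjects):
    """Return "STEM" if at least one subject is a STEM subject, else "non-STEM"."""
    # Normalizing case and whitespace is an assumption about how labels were entered.
    normalized = {subject.strip().lower() for subject in subjects}
    return "STEM" if normalized & STEM_SUBJECTS else "non-STEM"


if __name__ == "__main__":
    print(code_subject_group(["Physics", "English"]))
    print(code_subject_group(["History", "German"]))
```

A student with one STEM and one non-STEM subject is therefore coded as STEM, which matches the "at least one STEM subject" criterion.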

Results

Do student teachers evaluate education science as less important than their subject disciplines and subject didactics?

I computed an analysis of variance with discipline as the within-subject factor (i.e., education science, subject disciplines, and subject didactics) and beliefs regarding their importance as the dependent variable. Furthermore, I included the students’ subjects (STEM vs. non-STEM) and degree program (master vs. bachelor) as between-subject factors to investigate the moderator hypotheses. The results showed a significant large main effect of discipline, F(2, 352) = 59.166, p < 0.001, η² = 0.25 [controlling for gender with no significant effect: F(1, 352) = 1.051, p = 0.351, η² = 0.00]. To follow up on the significant main effect of discipline, I computed a planned contrast following the importance devaluation hypothesis that students rated education science as less important than the subject disciplines and subject didactics. Consequently, I specified the contrast with the weights: subject disciplines = +1, subject didactics = +1, education science = –2. The contrast was statistically significant, F(1, 176) = 34.240, p < 0.001, η² = 0.16. Thus, the results supported the importance devaluation hypothesis: Student teachers evaluated subject didactics and subject disciplines as more important for professional success than education science. Descriptively, student teachers rated their subject didactics as particularly important compared to the other two disciplines (Table 2).
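To illustrate how a within-subject planned contrast with the weights +1, +1, and –2 works, the following minimal sketch computes one contrast score per participant and tests whether the mean score differs from zero (F = t²). The ratings are invented for illustration, and the sketch ignores the between-subject factors and the covariate of the reported model, so its degrees of freedom (1, n – 1) differ from those reported above.

```python
import math
from statistics import mean, stdev

# Contrast weights as specified in the text.
WEIGHTS = {
    "subject_disciplines": 1,
    "subject_didactics": 1,
    "education_science": -2,
}


def contrast_score(ratings):
    """Weighted sum of one participant's three scale scores."""
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


def contrast_f(scores):
    """One-sample test of the contrast scores against zero; returns (F, (df1, df2))."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)


if __name__ == "__main__":
    # Hypothetical scale scores on the 6-point scale (not data from the study).
    sample = [
        {"subject_disciplines": 5, "subject_didactics": 5, "education_science": 4},
        {"subject_disciplines": 4, "subject_didactics": 5, "education_science": 4},
        {"subject_disciplines": 5, "subject_didactics": 6, "education_science": 4},
        {"subject_disciplines": 4, "subject_didactics": 4, "education_science": 3},
    ]
    scores = [contrast_score(person) for person in sample]
    print(scores, contrast_f(scores))
```

A positive mean contrast score indicates that the two subject-related disciplines were, on average, rated higher than education science.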

The interaction with subject was not statistically significant [F(2, 352) = 1.822, p = 0.163, η² = 0.01]. Thus, the results did not support the importance-by-subject hypothesis that devaluation of education science is more pronounced among students studying a STEM subject.

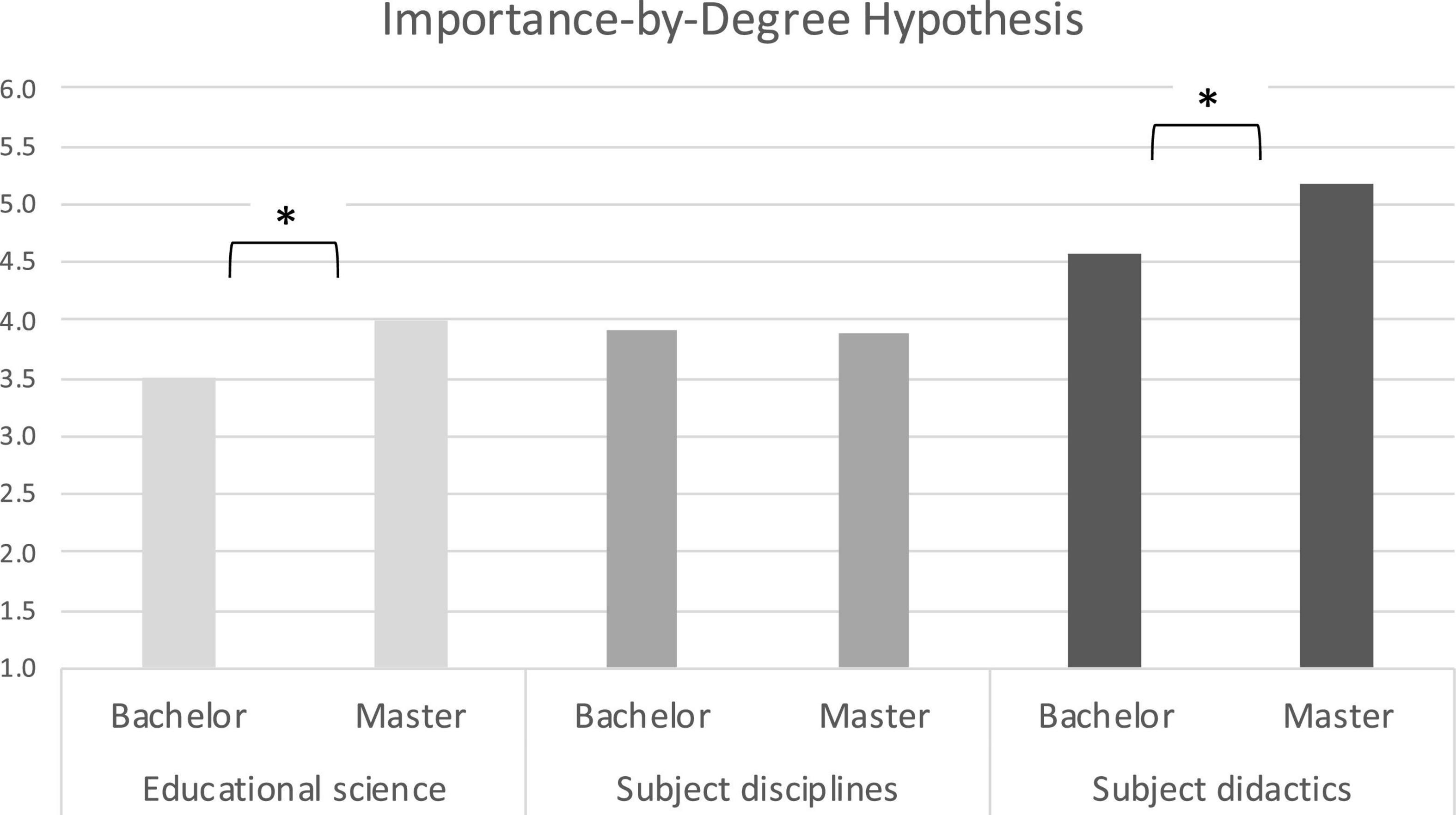

In contrast, the interaction effect with degree program was statistically significant [F(2, 352) = 5.173, p = 0.006, η² = 0.03; small effect, Figure 1]. A simple effects analysis with Bonferroni correction of the alpha level revealed that student teachers at the master’s level rated both education science (p = 0.001) and subject didactics (p < 0.001) as significantly more important than students at the bachelor’s level (Figure 1). In contrast, the difference for subject disciplines by degree program (p = 0.992) was not statistically significant. Hence, the results partially supported the importance-by-degree hypothesis that devaluation of education science is more pronounced among students at the bachelor’s compared to master’s level.

Figure 1. Interaction effects: Importance of the disciplines for professional success as a function of degree program (Sub-Study 1). The figure displays beliefs about the importance of the three disciplines for professional success among students at the bachelor’s vs. master’s level. *p < 0.05 in the simple effects analyses.

Do student teachers evaluate education science as less complex than their subject disciplines and subject didactics?

An analysis of variance with discipline as the within-subject factor (i.e., education science, subject disciplines, and subject didactics), subject and degree program as between-subject factors, and beliefs about complexity as the dependent variable revealed a significant, large main effect, F(2, 348) = 150.982, p < 0.001, η² = 0.47 [again controlling for gender with no significant effect: F(1, 348) = 0.170, p = 0.843, η² = 0.00]. To test the complexity devaluation hypothesis that student teachers rate education science as less complex than their subject disciplines and subject didactics, I computed a planned contrast with the weights: subject disciplines = +1, subject didactics = +1, education science = –2. The contrast was statistically significant, F(1, 174) = 78.269, p < 0.001, η² = 0.31. Hence, in line with the hypothesis, student teachers evaluated the complexity of education science significantly lower than the complexity of their subject disciplines and subject didactics.

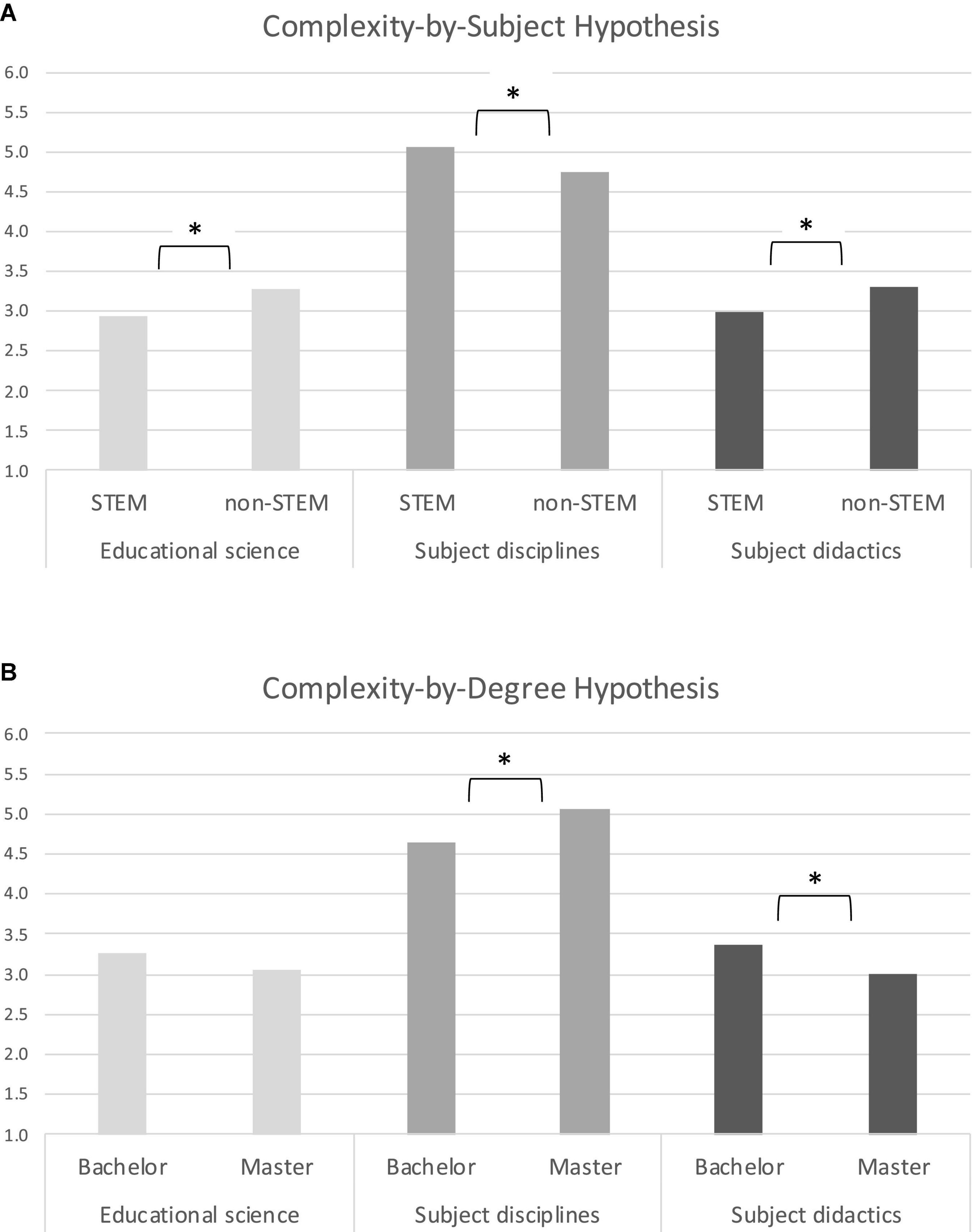

Furthermore, the results indicated a significant interaction effect for both moderators, that is, subject [F(2, 348) = 5.366, p = 0.005, η² = 0.03] and degree program [F(2, 348) = 7.235, p = 0.001, η² = 0.04; Figure 2]. A simple effects analysis with Bonferroni correction of the alpha level revealed that students with and without a STEM subject differed significantly on all three variables: In line with the complexity-by-subject hypothesis, students with a STEM subject rated the complexity of education science significantly lower (p = 0.021), the complexity of their subject disciplines significantly higher (p = 0.019), and the complexity of subject didactics lower (p = 0.028) than students without a STEM subject. Regarding the complexity-by-degree hypothesis, the simple effects analysis indicated that students at the bachelor’s level rated the complexity of their subject disciplines significantly lower (p = 0.003) and the complexity of subject didactics significantly higher (p = 0.009) than students at the master’s level. Contradicting the hypothesis, students from the two programs did not differ significantly with regard to their ratings of the complexity of education science (p = 0.160).

Figure 2. Interaction effects: Complexity of the disciplines as a function of subject and degree program (Sub-Study 1). The figure displays beliefs about the complexity of the three disciplines among students with a STEM subject (STEM) versus no STEM subjects (non-STEM; A) and for students at the bachelor’s versus master’s level (B). *p < 0.05 in the simple effects analyses.

Summary

In line with the assumptions, I found evidence for a devaluation of education science among student teachers: On average, they perceived education science as less important for professional success than subject didactics and as less complex than their subject disciplines. The results also yielded moderating effects: The tendency to devalue the importance of education science was more pronounced among students with less experience with education science (i.e., bachelor’s degree students) than among students with more experience with education science (i.e., master’s degree students). In addition, the tendency to devalue the complexity of education science was more pronounced among students with a STEM subject than among students without a STEM subject.

Sub-Study 2

Hypotheses

I investigated the consequences of the skeptical beliefs for engagement and possible sources (soft versus hard research methods and anecdotal versus scientific sources of evidence) with the following assumptions.

(1) I hypothesized that more skeptical beliefs about education science would be associated with lower engagement with research from education science:

• Students with more negative beliefs are less willing to exert effort in education science courses (beliefs engagement hypothesis).

• Students with more negative beliefs are less open to evidence-based practices (beliefs openness hypothesis).

(2) As potential sources for the devaluation of education science, I expected:

• Students consider research findings from studies using soft research methods (typical methods from education science, such as surveys, systematic observations, standardized tests) to be less trustworthy than findings from studies using hard research methods (typical research methods from the natural sciences, such as EEG, fMRI; research method hypothesis).

• Student teachers perceive information reported by colleagues (i.e., anecdotal source) as more trustworthy than information from empirical educational research (scientific source; source of evidence hypothesis).

An a priori power analysis in G*Power for a linear multiple regression analysis to test the hypotheses on the engagement (α = 0.05, power 1 − β = 0.80, 9 predictors) indicated that a sample size of N = 74 would be sufficient to detect a medium-sized effect. For the hypotheses on the sources, I conducted two experimental manipulations (soft versus hard research methods; anecdotal versus scientific source). The power analysis for an ANOVA with a within-subject factor with two levels indicated that a sample size of N = 46 would be sufficient to detect a medium-sized effect.

Materials and methods

Sample

In total, N = 87 student teachers participated in Sub-Study 2 (Table 1). All participants were in the first to fourth semester of the Master of Education.

Instruments

Beliefs about the disciplines

Student teachers completed the same instrument as in Sub-Study 1 on their beliefs about the importance of the three disciplines for professional success and the complexity of the disciplines (Table 2).

Engagement with education science

Two aspects of engagement with education science were measured using 15 items rated on 6-point Likert scales (completely disagree to completely agree). First, eight items captured student teachers’ willingness to make an effort in education science (Cronbach’s α = 0.88; adapted from Jonkmann et al., 2013). An example item is: I do my best in education science courses. Second, seven items were adapted from Aarons (2004) to measure openness to evidence-based practices (Cronbach’s α = 0.70). An example item is: I would use new methods that have been proven effective in research, even if they were very different from what I am used to doing.
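For readers unfamiliar with the reliability coefficient reported above, the following minimal sketch computes Cronbach's α from a participants-by-items matrix using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The data are invented for illustration; the function name is an assumption, and the sketch is not the software routine used in the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participants, each a list of k item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("at least two items are required")
    # Sample variance of each item across participants.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the participants' sum scores.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("sum scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical 6-point Likert responses from three participants on two items.
    print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))
```

Values closer to 1 indicate that the items of a scale covary strongly and can reasonably be averaged into a single score.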

Vignettes about research findings

A total of 11 short vignettes about research findings were constructed. For each vignette, participants indicated how trustworthy the research findings were (6-point Likert scales, very trustworthy to not at all trustworthy). They were instructed that trustworthiness means the extent to which they would rely on and trust these findings. The vignettes were included in two versions of the questionnaire with two experimental within-subject variations:

(1) The research method was experimentally varied for five research findings. Each finding existed in two versions: one based on a typical research method from neuroscience (research method = hard; e.g., the recording of brain activity/results from EEG/results from fMRI showed…) and one based on a typical research method from education science (research method = soft; e.g., the results of a standardized survey/of a systematic observation/of a standardized test showed…). The research findings were counterbalanced across the two questionnaire versions.

(2) The source of evidence was experimentally varied for the other six research findings. Again, each finding existed in two versions that were identical except that one was based on a report by a colleague (source = anecdotal; e.g., In my daily school life, I often observe that…) and one on a scientific source (source = science; e.g., Research results have shown that…). The vignettes were also counterbalanced across questionnaire versions, and each participant rated three findings from an anecdotal source and three findings from a scientific source.
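The counterbalancing logic described above can be sketched in Python as follows. This is a minimal illustration only: the alternating assignment rule, the version labels "A" and "B", and the finding identifiers are hypothetical, as the text states only that findings were counterbalanced across two questionnaire versions.

```python
def assign_conditions(finding_ids, version, labels):
    """Map each finding to one of two conditions for questionnaire version 'A' or 'B'.

    Version B flips every assignment made in version A, so that across the
    two versions each finding appears exactly once under each condition.
    """
    if version not in ("A", "B"):
        raise ValueError("version must be 'A' or 'B'")
    offset = 0 if version == "A" else 1
    # Alternate the two labels over the findings (hypothetical scheme).
    return {fid: labels[(i + offset) % 2] for i, fid in enumerate(finding_ids)}


# Six findings with varied source of evidence (identifiers are placeholders).
source_findings = ["S1", "S2", "S3", "S4", "S5", "S6"]
source_labels = ("anecdotal", "scientific")
version_a = assign_conditions(source_findings, "A", source_labels)
version_b = assign_conditions(source_findings, "B", source_labels)

# Five findings with varied research method (identifiers are placeholders).
method_findings = ["M1", "M2", "M3", "M4", "M5"]
method_a = assign_conditions(method_findings, "A", ("hard", "soft"))
method_b = assign_conditions(method_findings, "B", ("hard", "soft"))
```

With six findings, this scheme yields three anecdotal and three scientific vignettes per version, as reported. With five findings, an alternating scheme necessarily gives an uneven 3:2 split within each version; how the study handled this is not stated.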

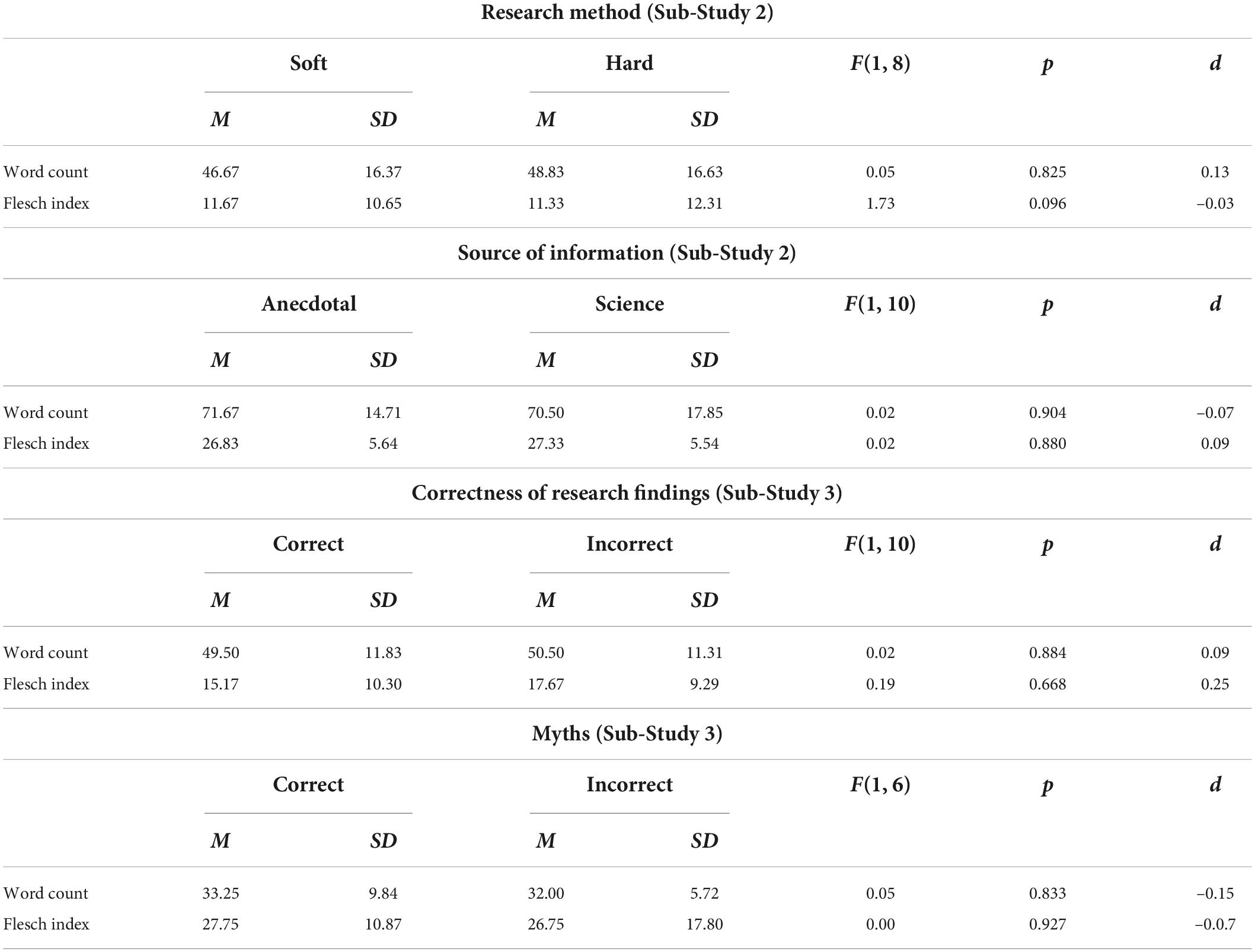

Other than these two experimental variations (source and research method), the vignettes were parallelized in terms of content (e.g., testing effect, self-regulated learning, homework), length (word count), and readability (Flesch, 1948). Neither the vignettes experimentally varying the research method nor those varying the source of information differed significantly from one another in terms of length and readability (Table 4).
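As an illustration of the readability index cited above, the following Python sketch implements the Flesch (1948) reading ease formula from word, sentence, and syllable counts. The constants are those of the original formula for English text; whether the study used these constants or a language-specific adaptation is not stated, and the function name and example counts are assumptions.

```python
def flesch_reading_ease(n_words, n_sentences, n_syllables):
    """Flesch reading ease score; higher values indicate easier text."""
    if n_words <= 0 or n_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = n_words / n_sentences
    syllables_per_word = n_syllables / n_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Hypothetical vignette: 100 words, 5 sentences, 150 syllables.
score = flesch_reading_ease(100, 5, 150)
print(round(score, 1))
```

Computing this score for both versions of each vignette gives the readability values that can then be compared across experimental conditions.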

Table 4. Means, standard deviations, and one-way analyses of variance in length and readability of the vignettes (Sub-Study 2 and Sub-Study 3).

Results

Are beliefs about education science related to engagement with education science?

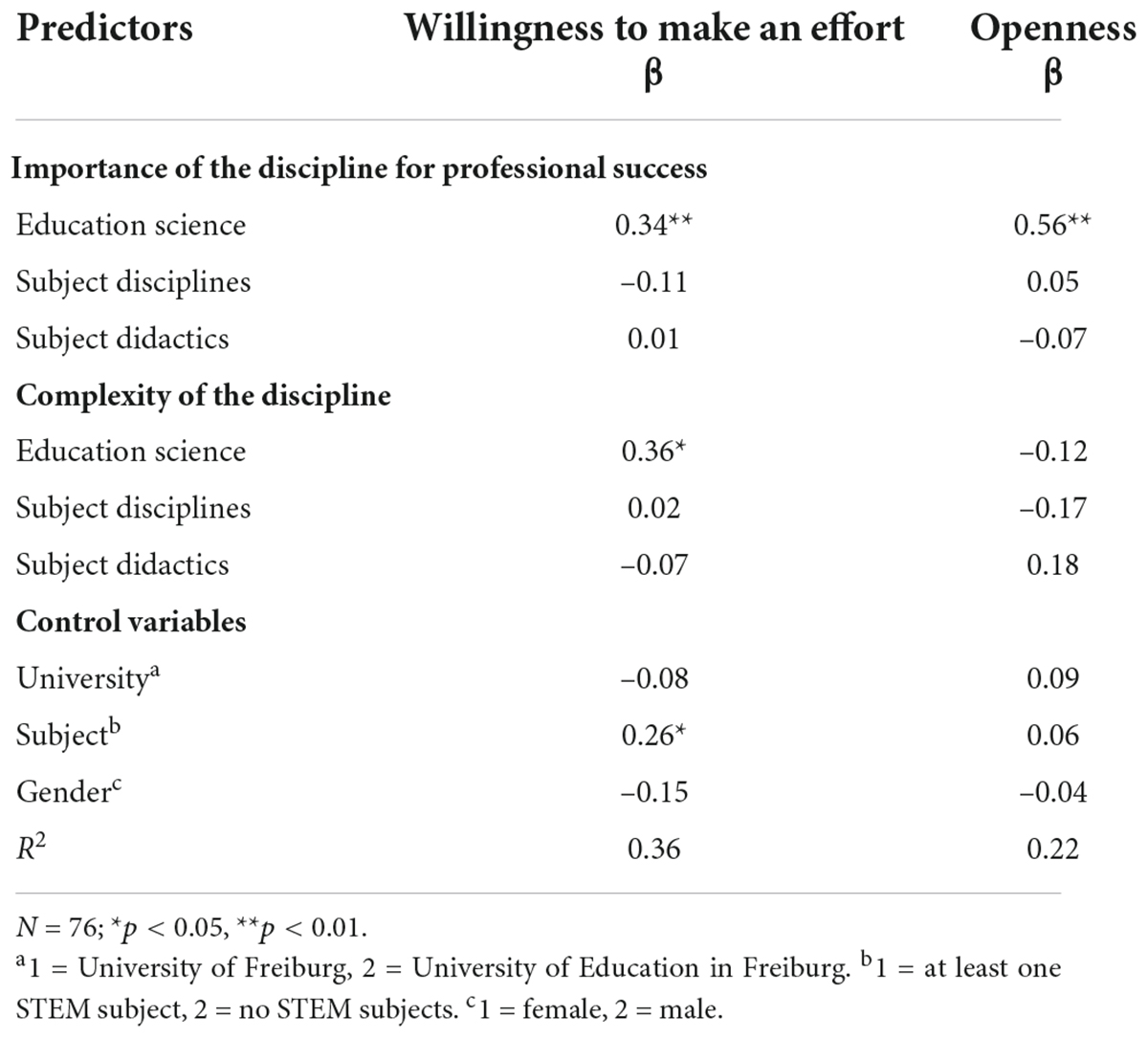

I conducted a regression analysis of willingness to make an effort in education science on beliefs about the importance and complexity of education science, the students’ subject disciplines, and subject didactics (controlling for gender, subject, and additionally for university, because students in this sub-study studied either at the University of Freiburg or the University of Education in Freiburg). Importance of education science and complexity of education science (and subject) were significant predictors of willingness to make an effort, whereas beliefs about the importance and complexity of the students’ subject disciplines and subject didactics did not explain differences in willingness to make an effort in education science courses (Table 5). Thus, in line with the beliefs engagement hypothesis, stronger beliefs that education science is important for professional success and that education science is complex were associated with students being more willing to make an effort in their education science courses.

Table 5. Results of the regression analysis predicting engagement with education science (Sub-Study 2).

An analogously computed regression analysis of openness to evidence-based practices on beliefs about the importance and complexity of education science, subject disciplines, and subject didactics (also controlling for gender, subject, and university) showed that importance was a significant predictor, but complexity was not. Thus, partly in line with the hypothesis, more strongly believing that education science is important was associated with students being more open to evidence-based practice.

Do soft research methods and students’ preference for anecdotal information contribute to the devaluation of education science?

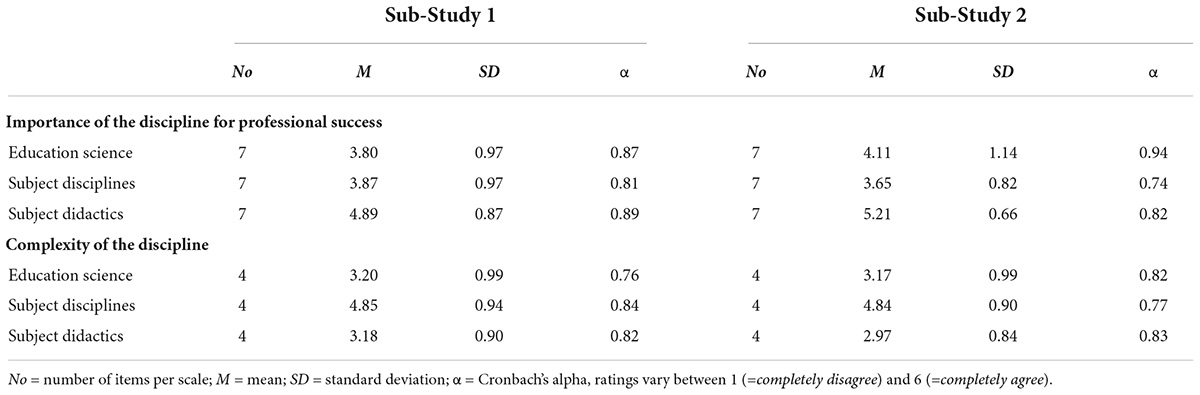

The analysis of variance with research method as the within-subject factor (i.e., soft vs. hard), trustworthiness of the findings as the dependent variable, and gender, subject, and university as control variables revealed a significant small to medium-sized main effect of research method [F(1, 69) = 7.127, p = 0.009, η² = 0.09]. This main effect supported the research method hypothesis: Student teachers rated findings obtained with hard research methods as more trustworthy than findings obtained with soft research methods. With the exception of university [F(1, 69) = 4.982, p = 0.029, η² = 0.06], none of the covariates showed a significant effect.

An analogous analysis of variance with source of evidence as the within-subject factor (i.e., anecdotal vs. scientific) also revealed a significant medium-sized main effect of source [F(1, 68) = 4.324, p = 0.041, η² = 0.06]; none of the covariates showed a significant effect. This result indicated that – contradicting the source of evidence hypothesis – student teachers rated findings from scientific sources as more trustworthy than findings from anecdotal sources.
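The reported effect sizes can be related to the F statistics through the standard identity partial η² = (F · df_effect) / (F · df_effect + df_error). The following Python sketch assumes that the reported values are partial η², which the text does not state explicitly; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    if df_effect <= 0 or df_error <= 0:
        raise ValueError("degrees of freedom must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of research method: F(1, 69) = 7.127
eta_method = partial_eta_squared(7.127, 1, 69)
# Main effect of source of evidence: F(1, 68) = 4.324
eta_source = partial_eta_squared(4.324, 1, 68)
print(round(eta_method, 2), round(eta_source, 2))
```

Under this assumption, the identity returns values that round to 0.09 and 0.06 for the two main effects, in agreement with the reported effect sizes.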

Summary

Regarding the consequences of student teachers’ beliefs, in line with the hypothesis, the results indicated that more skeptical beliefs about education science were related to lower engagement with research from education science and – at least for skeptical beliefs about the importance of education science – to lower openness to scientific evidence.

Furthermore, regarding possible sources of these skeptical beliefs, the experimental manipulation results indicated that student teachers, on average, evaluated empirical findings from studies with soft research methods as less trustworthy than equivalent empirical findings from studies with hard research methods – but with a rather small effect size. Thus, education science’s typical soft research methods might contribute to its devaluation. Against the expectation, findings from anecdotal sources were given lower trustworthiness ratings than equivalent findings from scientific sources. However, this effect was also small.

Sub-Study 3

Research questions

(1) Do student teachers tend to perceive evidence from education science as trivial, and (2) do they believe in myths regarding education science?

Materials and methods

Sample

A total of N = 49 student teachers participated in Sub-Study 3 (Table 1). All participants were in the first semester of their Master of Education program at the University of Freiburg.

Instruments: Vignettes about research findings and myths

The participants were also presented with short vignettes about research findings from education science, again with an experimental manipulation, but this time regarding the correctness of the research findings: Each of the six findings was presented in two versions, one as actually found in the research (e.g., research findings showed that performance improved), and one not in line with the research results (e.g., research findings showed that performance did not improve). The correctness of the findings was also counterbalanced across questionnaires and randomly assigned to participants. Participants had to indicate on a 6-point Likert scale how obvious these findings were to them (very obvious to not at all obvious). They were instructed to rate whether the findings were expected and not surprising (i.e., obvious) or surprising and contrary to what they would have expected (i.e., not obvious).

Furthermore, four similar vignettes on typical misconceptions (i.e., myths) about phenomena from education science were developed in two versions (incorrect = myth vs. correct) and randomly assigned to the students. The four myths were (1) the need to adapt instruction to students’ learning styles (need to adapt vs. do not need to adapt), (2) impact of teacher personality (it is primarily a teacher’s personality that matters for teaching success vs. a teacher’s personality is not the primary factor for teaching success), (3) impact of teaching experience (having more experience automatically makes one a better teacher vs. does not automatically make one a better teacher), (4) impact of class size on student learning (smaller class sizes automatically lead to better student learning vs. do not automatically lead to better learning). These vignettes were also parallelized and did not significantly differ in terms of length or readability (Flesch, 1948; Table 4).

Results

The a priori power analysis for an analysis of variance with a within-subject factor with two levels (incorrect vs. correct) indicated that a sample size of N = 46 would be sufficient to detect a medium-sized effect.

Do student teachers perceive evidence from education science as trivial?

In the analysis of variance with correctness as the within-subject factor (i.e., correct vs. incorrect) and obviousness as the dependent variable (controlling for gender), the main effect was not significant [F(1, 43) = 1.918, p = 0.173, η² = 0.04]. The means were above the theoretical midpoint of 3.5 (Table 6), indicating that, in line with the hindsight assumption, students on average tended to evaluate findings from education science as rather obvious – independent of whether the findings were correct or incorrect. Descriptively, the mean was higher for the incorrect than the correct research findings (Table 6).

Do student teachers endorse myths about education science?

The analogously computed analysis of variance for myths with the within-subject factor correctness and obviousness as the dependent variable (controlling for gender) revealed a large main effect for correctness [F(1, 43) = 40.434, p < 0.001, η² = 0.49]. This result suggested that students strongly believe in the myths: They rated the incorrect findings (i.e., the myths) as much more obvious and expected than the correct findings (see Table 6 for descriptive statistics).

Summary

The results of Sub-Study 3 suggest that, consistent with the hindsight bias, student teachers retrospectively evaluated research findings from education science as trivial (“I knew it all along”). Furthermore, on average, student teachers strongly believe in myths about learning and teaching.

Overall discussion

I examined whether student teachers devaluate education science compared to their subject disciplines and subject didactics. Additionally, I investigated the consequences and potential sources of the devaluation.

Do student teachers hold skeptical beliefs about education science?

The results of the three sub-studies indicated a pronounced devaluation of education science among student teachers in a German sample. I found evidence for this devaluation using different research approaches (quasi-experimental questionnaire data and data from experimental studies) and with respect to several aspects.

First, in the questionnaire Sub-Study 1 with a large sample, student teachers on average perceived education science as less complex than their subject disciplines.

Second, student teachers on average perceived education science as less important for teaching success than subject didactics.

These skeptical beliefs about the importance of education science for teaching success are not in line with the empirical evidence. Research results indicate that teachers’ knowledge about topics from education science is related to teaching success in terms of instructional quality and achievement: Teachers’ higher pedagogical-psychological knowledge is associated with greater learning support for students (Voss et al., 2014, 2022), more efficient classroom management (Voss et al., 2014, 2022), and higher student achievement (König and Pflanzl, 2016). This importance of teacher knowledge is not limited to pedagogical-psychological knowledge. It has also been shown that pedagogical content knowledge is related to teaching success (e.g., Baumert et al., 2010), and content knowledge has been shown to be an essential basis for developing pedagogical content knowledge (e.g., Friedrichsen et al., 2009; Kleickmann et al., 2017). Thus, all three domains of teacher knowledge are important: pedagogical-psychological knowledge, pedagogical content knowledge, and content knowledge. This is in line with the national standards or guidelines for teacher education of many countries. Such standards describe what teachers should know and be able to do and typically cover aspects of all three domains of teacher knowledge (Bauer and Prenzel, 2012). Consequently, in Germany, student teachers must take courses in the three disciplines – subject disciplines, subject didactics, and education science – to acquire pedagogical-psychological knowledge, pedagogical content knowledge, and content knowledge. Thus, the devaluation of education science’s importance for teaching found on average among student teachers in the present study is consistent with neither education policy standards nor empirical research. Therefore, it seems necessary to target such inappropriate beliefs for change during teacher education.
The devaluation tendency is also mirrored in motivational constructs: For instance, research indicates that teachers’ interest in their subject is higher than their interest in education science (e.g., Pozas and Letzel, 2021).

Third, in the experimental Sub-Study 3, student teachers turned out to strongly believe in myths about learning. Although plenty of research debunks these myths (e.g., Kirschner and van Merriënboer, 2013; Macdonald et al., 2017; Eitel et al., 2021), the student teachers in the present sample believed in them. Thus, the results suggest a need to break down student teachers’ misconceptions (Menz et al., 2021a; Prinz et al., 2021). In the present study, four such myths were examined as examples. However, other myths exist, such as that some students are information-savvy digital natives and that learners can multitask (Kirschner and De Bruyckere, 2017). Future research should address such other myths.

Are skeptical beliefs related to engagement in education science courses?

The devaluation of education science compared to subject disciplines and subject didactics is especially relevant in light of the assumed filtering effect of beliefs (e.g., Pajares, 1992; Patry, 2019) and thus the assumed importance of beliefs for future teachers’ professional thinking and learning (e.g., Fives and Buehl, 2012). The results of the present study indicate that these beliefs matter for student teachers’ motivation: More skeptical beliefs about the complexity and importance of education science were associated with lower engagement with research from education science and less openness to scientific evidence (the latter statistically significant only for skeptical beliefs about the importance of education science). Thus, the results suggest that the devaluation of education science is crucial, as it is related to the quality of students’ uptake of learning opportunities. This result is in line with the assumed importance of beliefs for learning and the uptake of learning opportunities during teacher education (Fives and Buehl, 2012; Stark, 2017; Ferguson, 2021). The questionnaire data of the present study thus complement the results of smaller interview studies (e.g., Holt-Reynolds, 1992; Bondy et al., 2007). However, further research is needed to examine other indicators of uptake of learning opportunities in teacher training. In the present study, self-report data on motivation and openness were used. An important next step would be to investigate the associations of student teachers’ beliefs with alternative measures of motivation (Fulmer and Frijters, 2009). Possible alternative approaches include the use of observational data (e.g., tasks chosen or persistence when engaging in tasks) or analyses of students’ authentic learning materials (e.g., learning protocols, lesson plans).

Furthermore, more research is needed to examine the assumed detrimental effect of skeptical beliefs about evidence from education science on teaching success as prior research is ambiguous. For instance, some studies found that teachers with more skeptical beliefs about evidence-based practices do not differ in the frequency of the use of evidence-based practices from teachers with less skeptical beliefs (e.g., McNeill, 2019, see also Krammer et al., 2021, for believing in neuromyths). At the same time, other studies showed that positive beliefs about evidence are related to more frequent use of evidence-based practices (Combes et al., 2016). Additionally, evidence on beliefs, in general, indicated congruencies between teacher beliefs and teaching practices (e.g., overview from Buehl and Beck, 2015).

What are potential sources of skeptical beliefs about education science?

Knowledge of the sources of student teachers’ skeptical beliefs may serve as a starting point for breaking down dysfunctional beliefs and misconceptions.

Therefore, first, in the questionnaire Sub-Study 1, I investigated whether the devaluation of education science depends on students’ selected subject disciplines and degree programs as potential sources of skeptical beliefs about education science. The results indicated that the tendency to devaluate the complexity of education science was more pronounced among students of STEM subjects than among students without STEM subjects. Thus, disciplinary culture appears to play a role in shaping the tendency to devaluate the complexity of education science compared to students’ subject disciplines. Furthermore, the devaluation of the importance of education science compared to subject didactics was moderated by student teachers’ experience with education science: Student teachers at the bachelor’s level devaluated the importance of education science for teaching success more strongly than student teachers at the master’s level. The participants of the present study were student teachers from Freiburg University. During the bachelor’s degree program at Freiburg University, student teachers have to complete only one module on education science, whereas in the master’s degree program, significantly more credit hours are devoted to education science. Thus, on average, bachelor’s students have less experience with education science. The results of the present study suggest they are more prone to dysfunctional beliefs about education science, whereas student teachers with more experience (i.e., in the master’s degree program) appear less prone to such devaluations. This might be because students in the bachelor’s degree program have little knowledge about education science as a professional discipline and thus might lack awareness of the importance of the discipline.
This explanation would also have parallels to the Dunning-Kruger effect (Kruger and Dunning, 1999), a prominent effect in metacognitive research indicating that people with little knowledge in a domain tend to be unaware of their deficient knowledge. As a consequence of the moderating effect of experience, it seems vital to create learning opportunities early in teacher training programs that support students in reflecting on their skeptical beliefs about education science and forming a more appropriate conception of the discipline and its importance for teaching success. In light of research on typical gender differences (e.g., women are less likely to choose STEM subjects than men; Roloff Henoch et al., 2015), it is interesting to note that I found no effect of the covariate gender.

Second, experimental evidence in the present study indicated a dysfunctional pattern in the reception of research findings from education science. This also sheds light on potential sources of the devaluation of education science. The results of the experimental Sub-Study 2 indicate that the soft research methods typical of education science might contribute to its devaluation: Student teachers on average evaluated empirical findings from studies with soft research methods as less trustworthy than equivalent empirical findings from studies with hard research methods. Thus, student teachers need more knowledge about research methods and their validity (Voss et al., 2020; Thomm et al., 2021b) to reduce this potentially biased perception of the quality of research findings based on different methods.

Contrary to the assumption, findings from anecdotal sources were given lower trustworthiness ratings than equivalent findings from scientific sources. Prior research indicates that student teachers prefer teachers as sources of information compared to researchers (Menz et al., 2021b), have more positive beliefs about the utility of anecdotal information compared to educational research, and use anecdotal sources more frequently than scientific evidence (Kiemer and Kollar, 2021). Together with the results of the present study, this may indicate that even though student teachers evaluate information from scientific sources as more trustworthy, they use scientific sources less frequently than anecdotal sources. Additionally, Hendriks et al. (2021) found that the perceived trustworthiness of teachers and researchers depends on students’ specific epistemic goal. Further research should shed light on this apparent contradiction by investigating beliefs about different sources together with concrete use of these sources in teaching and students’ goals.

Finally, student teachers in the experimental Sub-Study 3 tended to believe that evidence from education science is trivial. This hindsight bias is a general phenomenon that has also been investigated in areas other than education (e.g., Blank et al., 2003). In the present study, I found the same tendency among student teachers to perceive evidence about learning and teaching as common sense and foreseeable. Thus, this tendency may also contribute to the evolution of skeptical beliefs about education science.

Strengths and limitations

A main strength of this study was the combination of a quasi-experimental sub-study with experimental sub-studies. In doing so, evidence to describe phenomena was generated, such as the average level of student teachers’ beliefs about education science compared to their beliefs about their subject disciplines and subject didactics. In addition, evidence to explain phenomena was generated in the experimental sub-studies, such as why student teachers perceive research findings from education science as less trustworthy than research findings from STEM subjects.

The samples of the three sub-studies consisted of a total of 346 student teachers from two universities in Germany. They studied different subject disciplines and were enrolled in different degree programs (bachelor’s and master’s). These results might be generalized to countries with similar conditions (e.g., culture, teacher education system). However, the results cannot be generalized to differently structured teacher education systems. As the a priori power analyses indicated, the sample sizes were sufficient to detect the expected effects. Nevertheless, larger samples would be desirable in future research to examine the importance of further moderators, such as the amount of student teachers’ teaching experience, the length of time they have been in a teacher education program, or the prior knowledge about the disciplines.

Another limitation is the use of cross-sectional data. In the present study, the degree programs (bachelor’s and master’s) served as a proxy for experiences with education science. However, longitudinal data would be necessary to draw conclusions about the development of student teachers’ beliefs over the course of teacher training. For example, a longitudinal study with student teachers in Germany found a decline in beliefs about the importance of education science over time on average (Cramer, 2013). As these results contradict the results of the present cross-sectional study, with more positive beliefs among students with more experience with education science, further research is needed to elucidate this contradiction.

Another limitation is how the consequences of skeptical beliefs were measured in Sub-Study 2, as the participants provided self-reports on engagement with education science. The self-report instrument was adapted from validated instruments (e.g., Aarons, 2004; Jonkmann et al., 2013). However, further studies with alternative measures, such as observational data or analyses of authentic learning materials, are needed.

Furthermore, the experimental sub-studies provided evidence for potential sources of skeptical beliefs about education science. For instance, the results indicate that student teachers rate research findings based on hard research methods as more trustworthy than equivalent research findings based on soft research methods like those typical of education science. However, as a further limitation, the direct impact of these sources on the formation of student teachers’ beliefs was not examined and should be addressed in future research. Additionally, future research should investigate other potential influencing factors. For instance, student teachers’ professional roles might also affect their beliefs about education science. Similar to research on motives for teaching (e.g., Watt et al., 2012), some student teachers might see themselves primarily as experts on their subject matter. Those student teachers might be highly interested in the subject and in passing on the subject matter to students. In contrast, for other student teachers, educating students might be a much larger part of their professional role. As a result, those students might be more interested in education science and might also have more positive beliefs about the discipline.

Conclusion

Overall, the findings of the sub-studies indicate that student teachers, on average, held skeptical beliefs about education science. This poses a challenge to those involved in teacher education: Student teachers bring inappropriate beliefs and misconceptions into their teacher education program, and these beliefs are related to their engagement with the learning content. The findings support the assumption that beliefs are facilitators or barriers to the use of evidence in instructional situations (Fischer, 2021). Thus, it seems important to address beliefs early in teacher education programs (Stark, 2017), encourage students to reflect on their beliefs, and create specific learning opportunities to break down misconceptions and inappropriate beliefs.

The results of the present study on the potential sources of student teachers’ skeptical beliefs can provide information on where to start. They should be considered alongside theoretical models (e.g., Gregoire, 2003) and evidence on how to successfully change beliefs (Gill et al., 2004; Kleickmann et al., 2016; Prinz et al., 2021).

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TV contributed to resource, conceptualization, methodology, analysis, and writing.

Acknowledgments

Many thanks go to Jörg Wittwer for his feedback and to Keri Hartman for proofreading the article. Furthermore, the author acknowledges support by the Open Access Publication Fund of the University of Freiburg.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aarons, G. A. (2004). Mental health provider attitudes toward adoption of evidence-based practice: the evidence-based practice attitude scale (EBPAS). Ment. Health Serv. Res. 6, 61–74. doi: 10.1023/B:MHSR.0000024351.12294.65

Bartels, J. M., Hinds, R. M., Glass, L. A., and Ryan, J. J. (2009). Perceptions of psychology as a science among university students: the influence of psychology courses and major of study. Psychol. Rep. 105, 383–388. doi: 10.2466/PR0.105.2.383-388

Bauer, J., and Prenzel, M. (2012). European teacher training reforms. Science 336, 1642–1643. doi: 10.1126/science.1218387

Baumert, J., Kunter, M., Blum, W., Brunner, M., Voss, T., Jordan, A., et al. (2010). Teachers’ mathematical knowledge, cognitive activation in the classroom, and student progress. Am. Educ. Res. J. 47, 133–180. doi: 10.3102/0002831209345157

Bensley, D. A., and Lilienfeld, S. O. (2017). Psychological misconceptions: recent scientific advances and unresolved issues. Curr. Dir. Psychol. Sci. 26, 377–382. doi: 10.1177/0963721417699026

Beycioglu, K., Ozer, N., and Ugurlu, C. T. (2010). Teachers’ views on educational research. Teach. Teach. Educ. 26, 1088–1093. doi: 10.1016/j.tate.2009.11.004

Blank, H., Fischer, V., and Erdfelder, E. (2003). Hindsight bias in political elections. Memory 11, 491–504. doi: 10.1080/09658210244000513

Bondy, E., Ross, D., Adams, A., Nowak, R., Brownell, M., Hoppey, D., et al. (2007). Personal epistemologies and learning to teach. Teach. Educ. Spec. Educ. 30, 67–82. doi: 10.1177/088840640703000202

Bråten, I., and Ferguson, L. E. (2015). Beliefs about sources of knowledge predict motivation for learning in teacher education. Teach. Teach. Educ. 50, 13–23. doi: 10.1016/j.tate.2015.04.003

Broekkamp, H., and Hout-Wolters, B. v (2007). The gap between educational research and practice: a literature review, symposium, and questionnaire. Educ. Res. Eval. 13, 203–220. doi: 10.1080/13803610701626127

Brownlee, J. L., Ferguson, L. E., and Ryan, M. (2017). Changing teachers’ epistemic cognition: a new conceptual framework for epistemic reflexivity. Educ. Psychol. 52, 242–252. doi: 10.1080/00461520.2017.1333430

Brownlee, J., Purdie, N., and Boulton-Lewis, G. (2001). Changing epistemological beliefs in pre-service teacher education students. Teach. High. Educ. 6, 247–268. doi: 10.1080/13562510120045221

Buehl, M. M., and Beck, J. S. (2015). “The relationship between teachers’ beliefs and practices,” in International Handbook of Research on Teachers’ Beliefs, eds H. Fives and M. G. Gill (London: Routledge), 66–84.

Cain, T. (2016). Research utilisation and the struggle for the teacher’s soul: a narrative review. Eur. J. Teach. Educ. 39, 616–629. doi: 10.1080/02619768.2016.1252912

Chan, K. (2003). Hong Kong teacher education students’ epistemological beliefs and approaches to learning. Res. Educ. 69, 36–50. doi: 10.7227/RIE.69.4

Combes, B. H., Chang, M., Austin, J. E., and Hayes, D. (2016). The use of evidenced-based practices in the provision of social skills training for students with autism spectrum disorder among school psychologists. Psychol. Sch. 53, 548–563. doi: 10.1002/pits.21923

Cramer, C. (2013). Beurteilung des bildungswissenschaftlichen Studiums durch Lehramtsstudierende in der ersten Ausbildungsphase im Längsschnitt [Longitudinal assessment of educational science by student teachers in the first phase of their teacher education program]. Z. Pädagog. 59, 66–82. doi: 10.25656/01:11927

Dagenais, C., Lysenko, L., Abrami, P. C., Bernard, R. M., Ramde, J., and Janosz, M. (2012). Use of research-based information by school practitioners and determinants of use: a review of empirical research. Evid. Policy 8, 285–309. doi: 10.1332/174426412X654031

Davies, P. (1999). What is evidence-based education? Br. J. Educ. Stud. 47, 108–121. doi: 10.1111/1467-8527.00106

Dekker, S., Lee, N. C., Howard-Jones, P., and Jolles, J. (2012). Neuromyths in education: prevalence and predictors of misconceptions among teachers. Front. Psychol. 3:429. doi: 10.3389/fpsyg.2012.00429

Eitel, A., Prinz, A., Kollmer, J., Niessen, L., Russow, J., Ludäscher, M., et al. (2021). The misconceptions about multimedia learning questionnaire: an empirical evaluation study with teachers and student teachers. Psychol. Learn. Teach. 20, 420–444. doi: 10.1177/14757257211028723

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/brm.41.4.1149

Ferguson, L. E. (2021). Evidence-informed teaching and practice-informed research. Z. Pädagog. Psychol. 35, 199–208. doi: 10.1024/1010-0652/a000310

Fischer, F. (2021). Some reasons why evidence from educational research is not particularly popular among (pre-service) teachers: a discussion. Z. Pädagog. Psychol. 35, 209–214. doi: 10.1024/1010-0652/a000311

Fives, H., and Buehl, M. M. (2012). “Spring cleaning for the “messy” construct of teachers’ beliefs: what are they? Which have been examined? What can they tell us?,” in APA Educational Psychology Handbook, Vol 2: Individual Differences and Cultural and Contextual Factors, eds K. R. Harris, S. Graham, T. Urdan, S. Graham, J. M. Royer, and M. Zeidner (Washington, DC: American Psychological Association), 471–499. doi: 10.1037/13274-019

Flesch, R. (1948). A new readability yardstick. J. Appl. Psychol. 32, 221–233. doi: 10.1037/h0057532

Friedrichsen, P. J., Abell, S. K., Pareja, E. M., Brown, P. L., Lankford, D. M., and Volkmann, M. J. (2009). Does teaching experience matter? Examining biology teachers’ prior knowledge for teaching in an alternative certification program. J. Res. Sci. Teach. 46, 357–383. doi: 10.1002/tea.20283

Fulmer, S. M., and Frijters, J. C. (2009). A review of self-report and alternative approaches in the measurement of student motivation. Educ. Psychol. Rev. 21, 219–246. doi: 10.1007/s10648-009-9107-x

Gill, M. G., Ashton, P. T., and Algina, J. (2004). Changing preservice teachers’ epistemological beliefs about teaching and learning in mathematics: an intervention study. Contemp. Educ. Psychol. 29, 164–185. doi: 10.1016/j.cedpsych.2004.01.003

Gregoire, M. (2003). Is it a challenge or a threat? A dual-process model of teachers’ cognition and appraisal processes during conceptual change. Educ. Psychol. Rev. 15, 147–179. doi: 10.1023/A:1023477131081

Guilfoyle, L., McCormack, O., and Erduran, S. (2020). The “tipping point” for educational research: the role of pre-service science teachers’ epistemic beliefs in evaluating the professional utility of educational research. Teach. Teach. Educ. 90:103033. doi: 10.1016/j.tate.2020.103033

Haney, J. J., and McArthur, J. (2002). Four case studies of prospective science teachers’ beliefs concerning constructivist teaching practices. Sci. Educ. 86, 783–802. doi: 10.1002/sce.10038

Hattie, J. (2009). Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement. London: Routledge.

Helmsley-Brown, J., and Sharp, C. (2003). How do teachers use research findings to improve their professional practice. Oxf. Rev. Educ. 29, 449–471.

Hendriks, F., Seifried, E., and Menz, C. (2021). Unraveling the “smart but evil” stereotype: pre-service teachers’ evaluations of educational psychology researchers versus teachers as sources of information. Z. Pädagog. Psychol. 35, 157–171. doi: 10.1024/1010-0652/a000300

Hofer, B. K. (2001). Personal epistemology research: implications for learning and teaching. Educ. Psychol. Rev. 13, 353–383. doi: 10.1023/A:1011965830686

Hofer, B. K., and Pintrich, P. R. (1997). The development of epistemological theories: beliefs about knowledge and knowing and their relation to learning. Rev. Educ. Res. 67, 88–140. doi: 10.2307/1170620

Holt-Reynolds, D. (1992). Personal history-based beliefs as relevant prior knowledge in course work. Am. Educ. Res. J. 29, 325–349. doi: 10.2307/1163371

Hopkins, E. J., Weisberg, D. S., and Taylor, J. C. (2016). The seductive allure is a reductive allure: people prefer scientific explanations that contain logically irrelevant reductive information. Cognition 155, 67–76. doi: 10.1016/j.cognition.2016.06.011

Janda, L. H., England, K., Lovejoy, D., and Drury, K. (1998). Attitudes toward psychology relative to other disciplines. Prof. Psychol. 29, 140–143. doi: 10.1037/0735-7028.29.2.140

Jonkmann, K., Rose, N., and Trautwein, U. (2013). Tradition und Innovation: Entwicklungsverläufe an Haupt- und Realschulen in Baden-Württemberg und Mittelschulen in Sachsen [Tradition and Innovation: Developmental Trajectories at Hauptschulen and Realschulen in Baden-Württemberg and Mittelschulen in Saxony]. Tübingen: Projektbericht an die Kultusministerien der Länder.

Joram, E. (2007). Clashing epistemologies: aspiring teachers’, practicing teachers’, and professors’ beliefs about knowledge and research in education. Teach. Teach. Educ. 23, 123–135. doi: 10.1016/j.tate.2006.04.032

Joram, E., Gabriele, A. J., and Walton, K. (2020). What influences teachers’ “buy-in” of research? Teachers’ beliefs about the applicability of educational research to their practice. Teach. Teach. Educ. 88:102980. doi: 10.1016/j.tate.2019.102980

Karimi, M. N. (2014). Disciplinary variations in English domain-specific personal epistemology: insights from disciplines differing along Biglan’s dimensions of academic domains classification. System 44, 89–100. doi: 10.1016/j.system.2014.03.002

Keil, F. C., Lockhart, K. L., and Schlegel, E. (2010). A bump on a bump? Emerging intuitions concerning the relative difficulty of the sciences. J. Exp. Psychol. Gen. 139, 1–15. doi: 10.1037/a0018319

Kiemer, K., and Kollar, I. (2021). Source selection and source use as a basis for evidence-informed teaching. Z. Pädagog. Psychol. 35, 127–141. doi: 10.1024/1010-0652/a000302

Kirschner, P. A., and De Bruyckere, P. (2017). The myths of the digital native and the multitasker. Teach. Teach. Educ. 67, 135–142. doi: 10.1016/j.tate.2017.06.001

Kirschner, P. A., and van Merriënboer, J. J. (2013). Do learners really know best? Urban legends in education. Educ. Psychol. 48, 169–183. doi: 10.1080/00461520.2013.804395

Klavans, R., and Boyack, K. W. (2009). Toward a consensus map of science. J. Am. Soc. Inf. Sci. Technol. 60, 455–476. doi: 10.1002/asi.20991

Kleickmann, T., Richter, D., Kunter, M., Elsner, J., Besser, M., Krauss, S., et al. (2013). Teachers’ content knowledge and pedagogical content knowledge: the role of structural differences in teacher education. J. Teach. Educ. 64, 90–106. doi: 10.1177/0022487112460398

Kleickmann, T., Tröbst, S., Heinze, A., Anschütz, A., Rink, R., and Kunter, M. (2017). “Teacher knowledge experiment: conditions of the development of pedagogical content knowledge,” in Competence Assessment in Education: Research, Models and Instruments, eds D. Leutner, J. Fleischer, J. Grünkorn, and E. Klieme (Berlin: Springer), 111–130. doi: 10.1007/978-3-319-50030-0_8

Kleickmann, T., Tröbst, S., Jonen, A., Vehmeyer, J., and Möller, K. (2016). The effects of expert scaffolding in elementary science professional development on teachers’ beliefs and motivations, instructional practices, and student achievement. J. Educ. Psychol. 108, 21–42. doi: 10.1037/edu0000041

König, J., and Pflanzl, B. (2016). Is teacher knowledge associated with performance? On the relationship between teachers’ general pedagogical knowledge and instructional quality. Eur. J. Teach. Educ. 39, 419–436. doi: 10.1080/02619768.2016.1214128

Krammer, G., Vogel, S. E., and Grabner, R. H. (2021). Believing in neuromyths makes neither a bad nor good student-teacher: the relationship between neuromyths and academic achievement in teacher education. Mind Brain Educ. 15, 54–60. doi: 10.1111/mbe.12266

Krauss, S., Brunner, M., Kunter, M., Baumert, J., Blum, W., Neubrand, M., et al. (2008). Pedagogical content knowledge and content knowledge of secondary mathematics teachers. J. Educ. Psychol. 100, 716–725. doi: 10.1037/0022-0663.100.3.716

Kruger, J., and Dunning, D. (1999). Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J. Pers. Soc. Psychol. 77, 1121–1134. doi: 10.1037/0022-3514.77.6.1121

Kunter, M., Klusmann, U., Baumert, J., Richter, D., Voss, T., and Hachfeld, A. (2013). Professional competence of teachers: effects on instructional quality and student development. J. Educ. Psychol. 105, 805–820. doi: 10.1037/a0032583

Lilienfeld, S. O. (2012). Public skepticism of psychology: why many people perceive the study of human behavior as unscientific. Am. Psychol. 67, 111–129. doi: 10.1037/a0023963

Macdonald, K., Germine, L., Anderson, A., Christodoulou, J., and McGrath, L. M. (2017). Dispelling the myth: training in education or neuroscience decreases but does not eliminate beliefs in neuromyths. Front. Psychol. 8:1314. doi: 10.3389/fpsyg.2017.01314

McNeill, J. (2019). Social validity and teachers’ use of evidence-based practices for autism. J. Autism Dev. Disord. 49, 4585–4594. doi: 10.1007/s10803-019-04190-y

Menz, C., Spinath, B., and Seifried, E. (2021a). Misconceptions die hard: prevalence and reduction of wrong beliefs in topics from educational psychology among preservice teachers. Eur. J. Psychol. Educ. 36, 477–494. doi: 10.1007/s10212-020-00474-5

Menz, C., Spinath, B., and Seifried, E. (2021b). Where do pre-service teachers’ educational psychological misconceptions come from? Z. Pädagog. Psychol. 35, 143–156. doi: 10.1024/1010-0652/a000299

Merk, S., Rosman, T., Rueß, J., Syring, M., and Schneider, J. (2017). Pre-service teachers’ perceived value of general pedagogical knowledge for practice: relations with epistemic beliefs and source beliefs. PLoS One 12:e0184971. doi: 10.1371/journal.pone.0184971

Moser, S., Zumbach, J., Deibl, I., Geiger, V., and Martinek, D. (2021). Development and application of a scale for assessing pre-service teachers’ beliefs about the nature of educational psychology. Psychol. Learn. Teach. 20, 189–213. doi: 10.1177/1475725720974575

Munro, G. D., and Munro, C. A. (2014). “Soft” versus “hard” psychological science: biased evaluations of scientific evidence that threatens or supports a strongly held political identity. Basic Appl. Soc. Psychol. 36, 533–543. doi: 10.1080/01973533.2014.960080

Niemi, H. (2016). “Academic and practical: research-based teacher education in Finland,” in Do Universities have a Role in the Education and Training of Teachers? An International Analysis of Policy and Practice, ed. B. Moon (Cambridge: Cambridge University Press), 19–33.

Pajares, M. F. (1992). Teachers’ beliefs and educational research: cleaning up a messy construct. Rev. Educ. Res. 62, 307–332. doi: 10.3102/00346543062003307

Patry, J.-L. (2019). “Situation specificity of behavior: the triple relevance in research and practice of education,” in Progress in Education, ed. R. V. Nata (Hauppauge, NY: Nova Publishers), 29–144.

Paulsen, M. B., and Wells, C. T. (1998). Domain differences in the epistemological beliefs of college students. Res. High. Educ. 39, 365–384. doi: 10.1023/A:1018785219220

Pieschl, S., Budd, J., Thomm, E., and Archer, J. (2021). Effects of raising student teachers’ metacognitive awareness of their educational psychological misconceptions. Psychol. Learn. Teach. 20, 214–235. doi: 10.1177/1475725721996223

Pozas, M., and Letzel, V. (2021). Pedagogy, didactics, or subject matter? Exploring pre-service teachers’ interest profiles. Open Educ. Stud. 3, 163–175.

Prinz, A., Kollmer, J., Flick, L., Renkl, A., and Eitel, A. (2021). Refuting student teachers’ misconceptions about multimedia learning. Instr. Sci. 50, 89–110. doi: 10.1007/s11251-021-09568-z

Richardson, L., and Lacroix, G. (2021). What do students think when asked about psychology as a science? Teach. Psychol. 48, 80–89. doi: 10.1177/0098628320959924

Richardson, V. (1996). “The role of attitudes and beliefs in learning to teach,” in Handbook of Research on Teacher Education, 2nd Edn, eds J. Sikula, T. Buttery, and E. Guyton (New York, NY: Macmillan), 102–106.

Roloff Henoch, J., Klusmann, U., Lüdtke, O., and Trautwein, U. (2015). Who becomes a teacher? Challenging the “Negative Selection” hypothesis. Learn. Instr. 36, 46–56.

Rosman, T., Seifried, E., and Merk, S. (2020). Combining intra- and interindividual approaches in epistemic beliefs research. Front. Psychol. 11:570. doi: 10.3389/fpsyg.2020.00570

Sackett, D. L., Rosenberg, W. M. C., Gray, J. A. M., Haynes, R. B., and Richardson, W. S. (1996). Evidence-based medicine: what it is and what it is not. Br. Med. J. 312, 71–72. doi: 10.1136/bmj.312.7023.71

Schommer, M. (1990). Effects of beliefs about the nature of knowledge on comprehension. J. Educ. Psychol. 82, 498–504. doi: 10.1037/0022-0663.82.3.498

Schommer-Aikins, M. (2004). Explaining the epistemological belief system: introducing the embedded systemic model and coordinated research approach. Educ. Psychol. 39, 19–29. doi: 10.1207/s15326985ep3901_3

Shulman, L. S. (1987). Knowledge and teaching: foundations of the new reform. Harv. Educ. Rev. 57, 1–22. doi: 10.17763/haer.57.1.j463w79r56455411

Siegel, S. T., and Daumiller, M. (2021). Students’ and instructors’ understandings, attitudes and beliefs about educational theories: results of a mixed-methods study. Educ. Sci. 11:197. doi: 10.3390/educsci11050197

Simonton, D. K. (2006). Scientific status of disciplines, individuals, and ideas: empirical analyses of the potential impact of theory. Rev. Gen. Psychol. 10, 98–112. doi: 10.1037/1089-2680.10.2.98

Slavin, R. E. (2002). Evidence-based education policies: transforming educational practice and research. Educ. Res. 31, 15–21. doi: 10.3102/0013189X031007015

Stark, R. (2017). Probleme evidenzbasierter bzw. -orientierter Pädagogischer Praxis [Problems of evidence-based or evidence-oriented educational practice]. Z. Pädagog. Psychol. 31, 99–110.

Thomm, E., Gold, B., Betsch, T., and Bauer, J. (2021a). When preservice teachers’ prior beliefs contradict evidence from educational research. Br. J. Educ. Psychol. 91, 1055–1072. doi: 10.1111/bjep.12407

Thomm, E., Sälzer, C., Prenzel, M., and Bauer, J. (2021b). Predictors of teachers’ appreciation of evidence-based practice and educational research findings. Z. Pädagog. Psychol. 35, 173–184. doi: 10.1024/1010-0652/a000301