Information literacy skills of health professions students in assessing YouTube medical education content

- ¹College of Graduate Health Studies, A.T. Still University, Kirksville, MO, United States

- ²College of Business and Information Technology, Lawrence Technological University, Southfield, MI, United States

Introduction: YouTube is a popular social media video platform used by health professions students for medical education. YouTube videos vary in quality, and students need to be able to evaluate and select high-quality videos to supplement their learning. Evaluating the quality of YouTube videos is an essential information literacy skill, and the Association of College and Research Libraries updated the framework of information literacy to include collaborative platforms such as YouTube. Research is needed to understand and explore the information literacy proficiency of students in the health professions who are using YouTube videos as learning resources.

Methods: This exploratory expert-novice study investigated the information literacy proficiency of students in evaluating the quality of medical education YouTube videos. Students (n = 89) and experts (n = 23) evaluated three preselected medical education YouTube videos of varying quality using the Medical Quality Video Evaluation Tool (MQ-VET).

Results: A two-way mixed repeated-measures ANOVA showed that experts assigned significantly lower ratings to the low- and medium-quality videos than students did. In other words, students were less proficient in information literacy when evaluating videos: they overrated source credibility, educational quality, and production quality, had lower expectations, and found the videos more useful.

Discussion: The tendency of students to overrate lower-quality videos raises concerns about their selection of educational content outside structured learning environments. If students select videos to watch outside the classroom, they could unintentionally learn medical skills from low-quality videos. These insights suggest a need for teachers to select only high-quality videos for students and for more focused curricular strategies to enhance students’ abilities in critically assessing YouTube resources for medical education.

1 Introduction

Health professions students utilize YouTube for medical education, valuing its accessibility and free content for learning clinical skills (Mukhopadhyay et al., 2014; Barry et al., 2016; Khamis et al., 2018; Aldallal et al., 2019; O'Malley et al., 2019; Burns et al., 2020; Vizcaya-Moreno and Pérez-Cañaveras, 2020; Kauffman et al., 2022). Supporting the effective and efficient integration of YouTube into medical education is imperative because YouTube use is increasing rapidly among students in the health professions (Curran et al., 2020). The extent of this use is illustrated by a recent study by Alzoubi et al. (2023), in which 82.3% of 699 surveyed medical students used YouTube to supplement their learning. In a more recent study of 195 medical students, Pradhan et al. (2024) found that 91.8% of the men and 89.3% of the women used YouTube as a medical education resource. However, the sheer volume and unregulated nature of YouTube content make discerning quality challenging. These videos, while accessible, may lack reliability and educational value (Deangelis et al., 2019; Van den Eynde et al., 2019; Yoo et al., 2020; Jackson et al., 2021; Zengin and Onder, 2021; Garip and Sakallioğlu, 2022). Therefore, it is crucial for students to critically evaluate YouTube videos to select reliable, high-quality content (Lee et al., 2019; Van den Eynde et al., 2019; Curran et al., 2020; Helming et al., 2021; Zengin and Onder, 2021).

Evaluating the quality of YouTube videos is an essential information literacy skill (Kim et al., 2014). Information literacy is the set of abilities needed to discover, understand, and use information to create knowledge (Association of College and Research Libraries, 2015). The Association of College and Research Libraries (2015) has broadened its framework for information literacy to include the abilities needed for collaborative platforms. Students can benefit from learning information literacy skills specific to YouTube because the site differs from traditional resources, its videos may lack citations, and students may be less motivated to evaluate content on video-sharing sites than on other forms of social media (Kim et al., 2014).

Despite its potential utility in curricula, YouTube video evaluation is not typically a standard educational component in the health professions. As such, students may not have the information literacy skills to appraise online information (Theron et al., 2017; Zhu et al., 2021) and select suitable educational YouTube videos (Helming et al., 2021). Educators should teach critical assessment skills for YouTube, considering the risks of unsupervised navigation on this platform (Aldallal et al., 2019; O'Malley et al., 2019; Van den Eynde et al., 2019; Burns et al., 2020; Kauffman et al., 2022).

Recent studies have focused on experts evaluating medical YouTube videos (e.g., Deangelis et al., 2019; Jackson et al., 2021; Garip and Sakallioğlu, 2022). This study is, to our knowledge, the first where students and experts from multiple disciplines in the health professions have evaluated YouTube videos of varying quality for medical education. The purpose of this study was to examine the information literacy proficiency of health professions students in evaluating the quality of medical education YouTube videos using experts’ ratings as a benchmark for comparison.

2 Literature review

2.1 YouTube in medical education

Health professions students highly value YouTube as an educational tool and use the site to learn clinical skills (Mukhopadhyay et al., 2014; Barry et al., 2016; Khamis et al., 2018; Aldallal et al., 2019; O'Malley et al., 2019; Burns et al., 2020; Vizcaya-Moreno and Pérez-Cañaveras, 2020; Kauffman et al., 2022). For example, Barry et al. (2016) found that 78% of radiation therapy and second-year undergraduate medical students in their study used YouTube as a primary video source to learn anatomy. Rapp et al. (2016) surveyed general surgery medical students, residents, and faculty and found that 86% of the participants using videos for surgical preparation used YouTube. Students also use the videos because they are free, highly accessible, and can be used independently as an external resource (Mukhopadhyay et al., 2014; Barry et al., 2016; Rapp et al., 2016; Kauffman et al., 2022). Despite the benefits of YouTube, students may find identifying quality YouTube videos challenging.

Students may have difficulties finding high-quality videos on YouTube for several reasons. Anyone can post content on YouTube without a rating system or peer-review process, and copious videos are available from which to choose (Mukhopadhyay et al., 2014; Kauffman et al., 2022). YouTube videos may be unreliable, may lack quality, and may not be educationally useful (Deangelis et al., 2019; Van den Eynde et al., 2019; Yoo et al., 2020; Jackson et al., 2021; Zengin and Onder, 2021; Garip and Sakallioğlu, 2022). Students may also be unable to rely on viewer engagement parameters such as likes, dislikes, views, and comments to identify suitable videos (Van den Eynde et al., 2019; Garip and Sakallioğlu, 2022). Van den Eynde et al. (2019) examined immunology YouTube videos for medical students and found them to lack quality and references. Yoo et al. (2020) evaluated educational YouTube videos on knee stability tests and found only 126 of the 218 videos were suitable for education. Zengin and Onder (2021) found that 40% of the videos they evaluated about musculoskeletal ultrasound were of low quality. With all the low-quality videos on YouTube, students should evaluate videos during the selection process to identify suitable content.

Students can take different steps in the evaluation process to increase the chances of selecting reliable content. Students can use predefined search strategies to identify suitable videos (Lee et al., 2019; Van den Eynde et al., 2019; Zengin and Onder, 2021). Students can also assess viewer parameters, sources, keywords, and video duration (Lee et al., 2019; Van den Eynde et al., 2019; Zengin and Onder, 2021; Garip and Sakallioğlu, 2022). Search strategies may not always result in reliable video lists (Van den Eynde et al., 2019), especially if reliable videos on the topic are sparse (Yoo et al., 2020). Search strategies are also only as effective as the student’s critical evaluation skills used to guide the search (Van den Eynde et al., 2019), so students need to be taught the skills to complete effective evaluations. Students also need training on how to use social media in the educational realm and about social media rating tools (Brisson et al., 2015; El Bialy and Jalali, 2015; Theron et al., 2017), particularly as students use YouTube outside of the classroom as a supplemental learning tool without the guidance of educators (Kauffman et al., 2022). However, evaluating and watching YouTube videos may not currently be a standard curricular component in health professions education, despite students preferring to have more YouTube videos incorporated into the curricula (Barry et al., 2016; Burns et al., 2020).

2.2 Information literacy

Evaluating YouTube videos is an information literacy skill. Information literacy is the set of abilities needed to discover, understand, and use information to create knowledge, including the abilities needed for collaborative platforms (Association of College and Research Libraries, 2015). The Framework for Information Literacy for Higher Education (Association of College and Research Libraries, 2015) is organized around six concepts: authority is constructed and contextual, information creation as a process, information has value, research as inquiry, scholarship as conversation, and searching as strategic exploration. These concepts were designed to apply in an ever-changing information ecosystem that includes collaborative platforms such as social media (Association of College and Research Libraries, 2015). Information literacy instruction for social media may also cover information production, information-seeking skills, information-sharing skills, and information-verification skills (Khan and Idris, 2019). Such instruction can address the use of social media sites both in the classroom and as supplementary resources (Kim et al., 2014).

Information literacy skills are essential in the health professions. Information in the medical field changes continuously, and health professions students need information literacy skills to remain current (Bazrafkan et al., 2017; Sezer, 2020). These skills can help health professions students solve problems, implement evidence-based practice, care for patients, and use resources effectively (Bazrafkan et al., 2017; Sezer, 2020; Shamsaee et al., 2021). Given that the information literacy framework was amended to include collaborative platforms such as YouTube (Association of College and Research Libraries, 2015), research is needed to understand and explore the information literacy proficiency of students in the health professions who use these videos as learning resources. Therefore, our research question was as follows:

What is the information literacy proficiency of students relative to experts in evaluating the quality of YouTube videos?

2.3 Evaluation tools for YouTube videos

Many tools and methods exist for conducting YouTube evaluation studies (Drozd et al., 2018; Okagbue et al., 2020; Helming et al., 2021). Methods include determining search terms, identifying inclusion criteria for YouTube videos, determining the variables to evaluate, choosing an evaluation tool, and analyzing content (Drozd et al., 2018; Okagbue et al., 2020). Reviewers are selected to assess the videos (Drozd et al., 2018), and many studies use two reviewers (Okagbue et al., 2020). Evaluators assess variables such as reliability, accuracy, overall quality, technical quality, and educational quality (Drozd et al., 2018; Okagbue et al., 2020). Selecting an evaluation tool for a YouTube study can be complex because many rating tools are available (Drozd et al., 2018; Okagbue et al., 2020; Helming et al., 2021). The most commonly used rating tools include the DISCERN instrument, the HONcode, the Journal of the American Medical Association guidelines, and the Global Quality Score (Drozd et al., 2018; Okagbue et al., 2020), all of which are externally validated (Helming et al., 2021). The next most common are novel scoring systems that have been validated only internally (Drozd et al., 2018; Okagbue et al., 2020). The lack of consistency in YouTube evaluation methods and tools makes comparing and generalizing findings difficult (Drozd et al., 2018; Helming et al., 2021).

Additionally, some of the rating tools used in prior YouTube evaluation studies have shortcomings (Azer, 2020; Helming et al., 2021; Guler and Aydın, 2022). Many were not designed specifically for YouTube or video assessment (Azer, 2020; Guler and Aydın, 2022). Some tools are too generic and require complementary specialized evaluation criteria, and others have not been validated for online medical education information (Helming et al., 2021). For example, the Journal of the American Medical Association guidelines and the DISCERN instrument were validated for assessing written and online health information, but neither has been validated for medical videos (Azer, 2020; Guler and Aydın, 2022). The Journal of the American Medical Association guidelines are not comprehensive enough to assess video quality (Helming et al., 2021), and the second half of the DISCERN instrument relates specifically to treatment choices (Azer, 2020; Guler and Aydın, 2022). Because of these problems, authors have called for the development of a standardized rating tool (Azer, 2020; Helming et al., 2021; Guler and Aydın, 2022) that is valid and reliable for medical YouTube videos, generalizable (Azer, 2020; Guler and Aydın, 2022), and comprehensive (Helming et al., 2021) and that includes items about educational principles (Azer, 2020).

2.4 MQ-VET

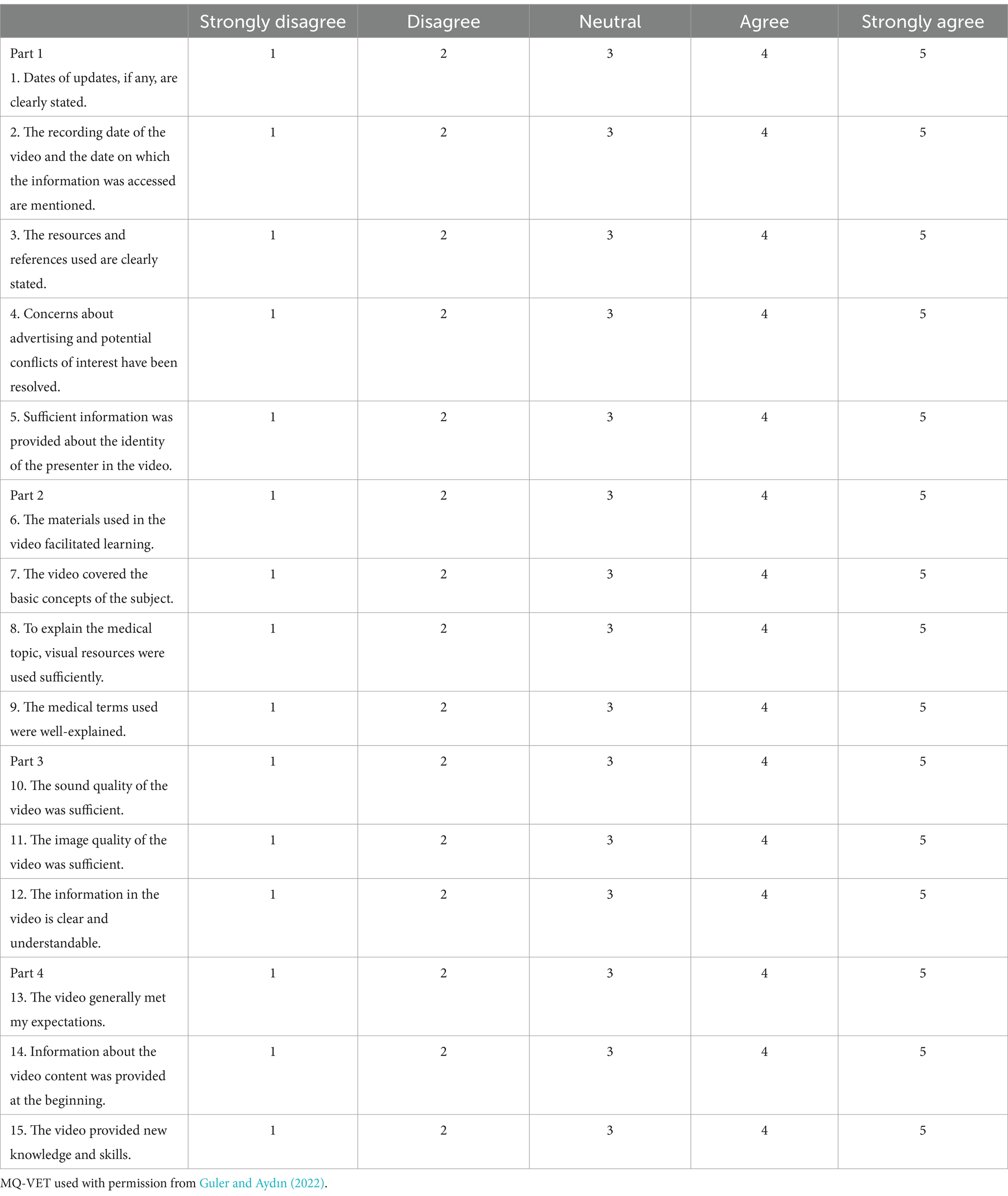

In response to the call for a standardized YouTube rating tool, Guler and Aydın (2022) created the Medical Quality Video Evaluation Tool (MQ-VET; see Table 1). The MQ-VET is designed to be simple enough for use by both medical professionals and the general population. It consists of 15 five-point Likert-scale questions, which Guler and Aydın organized into four unnamed parts: part 1 comprises questions 1–5, part 2 questions 6–9, part 3 questions 10–12, and part 4 questions 13–15. Guler and Aydın (2022) found the MQ-VET reliable and valid for evaluating YouTube videos on various medical topics, with a Cronbach’s alpha reliability coefficient of 0.72 across all items and alphas of 0.73–0.81 for the individual parts. Face and content validity were evaluated by 25 medical and 25 non-medical individuals who rated 10 videos on a variety of health topics (Guler and Aydın, 2022). The MQ-VET is described further in the Methods section, as this tool was used to assess information literacy proficiency in YouTube video evaluation in our study.

Table 1. Medical quality video evaluation tool (MQ-VET) by Guler and Aydın (2022).

3 Materials and methods

3.1 Participants

This exploratory study employed an expert-novice design, which is often used in education to establish baseline knowledge, structure curricula, and compare student and educator performance (Schunn and Nelson, 2009). The study design and participant recruitment were approved for human subjects research by the A.T. Still University Institutional Review Board on 16 February 2022 (IRB number OP20220216-001). Before participating, all participants were required to provide voluntary written consent. The consent form informed participants that the study investigated the information literacy proficiency of students in evaluating medical education YouTube videos, that they would evaluate three YouTube videos with the MQ-VET, and that all responses were entirely voluntary.

To recruit student participants, the researcher first approached a single university with colleges in multiple states. The researcher emailed the deans of the individual colleges to request permission to survey their students. The deans of two colleges provided student email addresses through enrollment services, and the researcher sent invitation emails directly to those students. Faculty and staff at the other two colleges sent the study invitations to their students. Students from two additional allied health programs at two other universities in two different states also participated; staff from these programs emailed the study invitations to their students. Overall, student participants came from diverse health professions programs across three universities in five US states.

The novice group comprised post-secondary health professions students of various ages and professional backgrounds. Students of any age or professional background were eligible if they were enrolled in post-secondary education; students whose highest credential was a high school diploma could participate if they were currently enrolled in a health professions undergraduate program. Students below the post-secondary education level were excluded.

Experts were defined as individuals with at least 5 years of field experience and at least 2 years of experience as health professions educators. Experts were initially identified through the researchers’ contacts. Those experts were asked, via email or orally, to provide leads to additional experts or students (snowball sampling). All potential experts meeting the inclusion criteria were welcome to participate in the study. Experts represented various health professions fields and institutions.

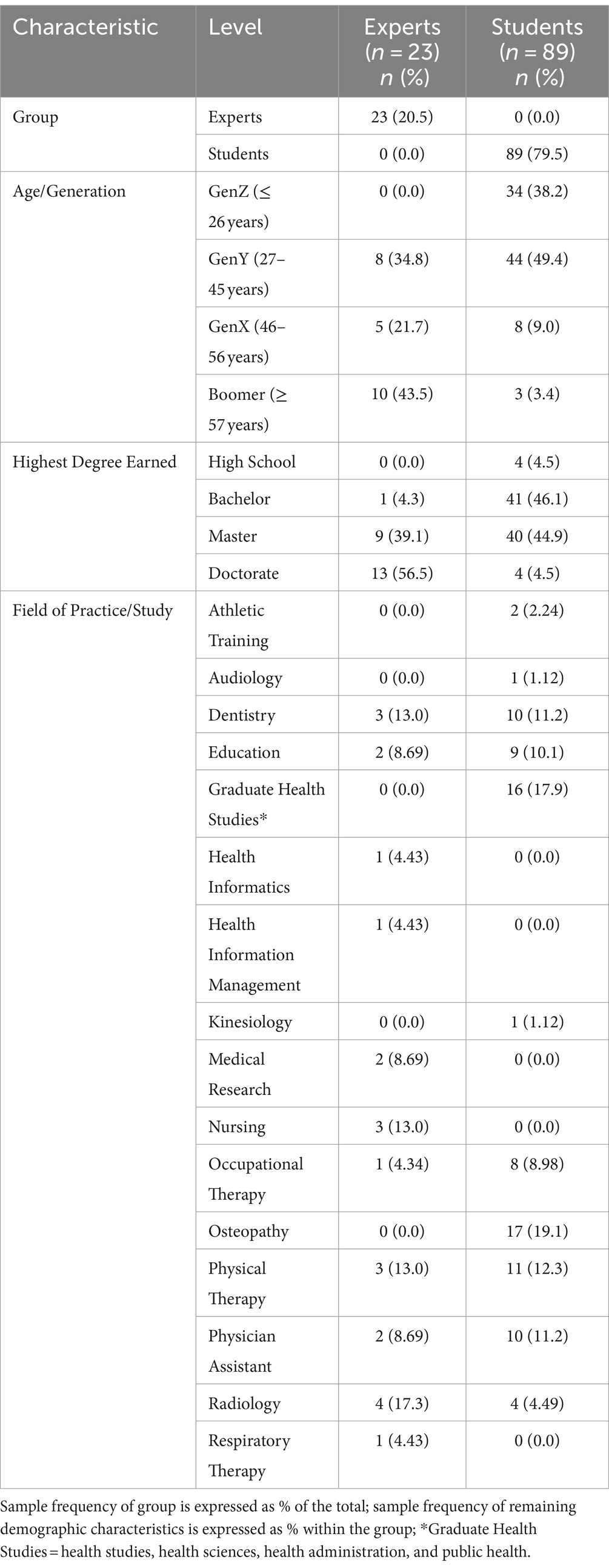

A power analysis in G*Power (Version 3.1.9.7; Faul et al., 2009), using an estimated medium effect size (d) of 0.6, alpha = 0.05, power = 0.8, and a 4:1 novice-to-expert allocation ratio, indicated required sample sizes of 88 for the novice group and 22 for the expert group. Recruitment yielded 94 student and 25 expert participants from different generations, educational levels, and healthcare fields of practice (see Table 2). Responses with more than 10% missing data were removed, leaving a final sample of 89 students and 23 experts.
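The text does not state which G*Power test family was used. The reported sizes of 88 and 22 are consistent with a one-tailed independent-samples t test with the allocation ratio set to 4, so the minimal sketch below assumes that setup. It also substitutes a large-sample normal approximation for G*Power’s exact noncentral t calculation; under our assumptions it returns the same 88/22 split, but for other inputs the two methods can differ by a participant or so. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def one_tailed_power(n_novice: int, n_expert: int, d: float, alpha: float) -> float:
    """Approximate power of a one-tailed two-sample t test (normal approximation)."""
    std_normal = NormalDist()
    # Noncentrality: Cohen's d scaled by the effective sample size n1*n2/(n1+n2).
    ncp = d * sqrt(n_novice * n_expert / (n_novice + n_expert))
    z_crit = std_normal.inv_cdf(1 - alpha)  # one-tailed critical value
    return 1 - std_normal.cdf(z_crit - ncp)


def required_sizes(d: float, alpha: float, power: float, ratio: int) -> tuple[int, int]:
    """Smallest (novice, expert) sizes, with novice = ratio * expert, reaching the target power."""
    n_expert = 2
    while one_tailed_power(ratio * n_expert, n_expert, d, alpha) < power:
        n_expert += 1
    return ratio * n_expert, n_expert


# Inputs reported in the text: d = 0.6, alpha = 0.05, power = 0.8, 4:1 allocation.
print(required_sizes(0.6, 0.05, 0.8, 4))  # (88, 22)
```

Searching over the expert count and deriving the novice count from the ratio keeps the 4:1 allocation exact at every step, which is how an allocation ratio constrains the solution.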

3.2 Study procedure

The study utilized the MQ-VET by Guler and Aydın (2022) to evaluate the quality of three YouTube videos. One of the researchers obtained permission to use the MQ-VET from the creators of the instrument. Three panelists, distinct from the study’s expert group, collaborated with one of the researchers to select the videos. The panelists were health professionals and/or educators.

The panelists and researchers aimed to select low-, medium-, and high-quality health professions videos freely available on YouTube. Selection criteria were a length under 4 min, English language, and relevance to the health professions. Videos were located by entering search queries on YouTube, such as “medical videos for students,” “osteopathic videos for students,” and “radiology videos for students,” and then applying the “Under 4 min” duration filter to the results. The panelists viewed many videos in each area across multiple pages of results, as well as videos suggested by YouTube. The videos were rated using the MQ-VET, which has been deemed valid and reliable for assessing YouTube videos (Guler and Aydın, 2022). The MQ-VET has 15 items, each answered on a 5-point Likert-style scale ranging from “strongly disagree” to “strongly agree,” so total scores range from a minimum of 15 to a maximum of 75. Videos were categorized based on their MQ-VET scores.

Although MQ-VET total scores range from 15 to 75, the creators of the instrument provided no scoring key for interpreting total scores and no cutoffs for what constitutes a low-, medium-, or high-rated video. For video selection in this study, the panelists split the 60-point range of the MQ-VET into three bins and agreed that a lower rated video scored 15–34, a medium-rated video 35–54, and a higher rated video 55–75. Each panelist’s total score across the 15 questions was computed, and the panelists’ totals were averaged for each video. The high-rated video was titled “OMT for patients with sacral somatic dysfunction” by the Journal of Osteopathic Medicine (2018). The medium-rated video was titled “Colchicine for preventing heart attack” by CardioGauge (2020). The low-rated video was titled “Chest x-ray || homogenous opacity || interpretation” by Learn MBBS (2021). All participants evaluated the same three preselected YouTube videos.
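The panel’s banding rule can be expressed as a small function. This is a sketch, assuming each panelist’s 15 item responses have already been summed to a total; the panelist totals in the usage line are hypothetical, and the handling of fractional averages that fall between bands (for example, 34.5) is our assumption, since the text does not say how such cases were treated.

```python
from statistics import mean


def classify_video(panelist_totals: list[float]) -> str:
    """Average panelists' MQ-VET totals (15-75) and map the average to the study's bands."""
    avg = mean(panelist_totals)
    if not 15 <= avg <= 75:
        raise ValueError(f"average {avg} is outside the MQ-VET range of 15-75")
    # Bands agreed by the panel: low 15-34, medium 35-54, high 55-75.
    # Assumption: a fractional average between bands (e.g., 34.5) goes to the lower band.
    if avg < 35:
        return "low"
    if avg < 55:
        return "medium"
    return "high"


# Hypothetical panelist totals for one video (not data from the study).
print(classify_video([60, 58, 62]))  # high
```

Comparing against the next band’s lower bound (35 and 55) rather than the upper bounds 34 and 54 ensures that every average in the 15–75 range maps to exactly one band.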

As noted previously, Guler and Aydın (2022) divided the 15 MQ-VET questions into four unnamed parts. For additional clarity during data analysis, the researchers and panelists in this study agreed to label the parts as follows: Credibility of Source (part 1, questions 1–5); Educational Quality (part 2, questions 6–9); Production Quality (part 3, questions 10–12); and Learning Experience (part 4, questions 13–15).
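To make the part labels concrete, the following sketch scores one completed MQ-VET as part means, a grand mean, and a total, in line with the mean-score reporting described in the Results. It assumes responses are coded 1 (“strongly disagree”) to 5 (“strongly agree”) and listed in question order; the dictionary and function names are hypothetical.

```python
from statistics import mean

# Part labels adopted in this study, mapped to 1-based MQ-VET question numbers.
MQVET_PARTS = {
    "Credibility of Source": range(1, 6),   # questions 1-5
    "Educational Quality": range(6, 10),    # questions 6-9
    "Production Quality": range(10, 13),    # questions 10-12
    "Learning Experience": range(13, 16),   # questions 13-15
}


def score_mqvet(responses: list[int]) -> dict[str, float]:
    """Score one completed MQ-VET: part means, grand mean, and total."""
    # Assumed coding: 1 = strongly disagree ... 5 = strongly agree, in question order.
    if len(responses) != 15 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected 15 responses coded 1-5")
    scores = {
        part: mean(responses[q - 1] for q in questions)
        for part, questions in MQVET_PARTS.items()
    }
    scores["Grand mean"] = mean(responses)  # equals total / 15, on the 1-5 scale
    scores["Total"] = sum(responses)        # possible range 15-75
    return scores
```

For example, `score_mqvet([3] * 15)` gives a mean of 3 for every part, a grand mean of 3, and a total of 45. Using part means rather than part totals keeps parts with three, four, or five questions on the same 1–5 scale.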

3.3 Data collection

Participants received an email containing a Qualtrics link to the survey. Upon clicking the link, they first encountered an online informed consent form, and they were required to give written consent before proceeding to the questionnaire. Participants watched the three preselected YouTube videos and completed the MQ-VET for each video. Participants could enter a drawing for a $20 prize. Data were collected from 8 March 2022 to 23 May 2022.

3.4 Data analysis

Raw data from the online survey were imported into Microsoft Excel and then into R and JASP v. 0.15 (JASP, 2021) for quantitative analysis and visualization. Descriptive statistics included frequencies for categorical variables and the mean, standard deviation, and skewness for continuous variables; variables were considered approximately normal when |skewness| < 2 (Kim, 2013). The internal consistency reliability of the MQ-VET items was assessed with Cronbach’s alpha, with α > 0.70 considered acceptable (Nunnally and Bernstein, 1993).
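The reliability and normality checks described above can be sketched in plain Python. Cronbach’s alpha uses the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the total score), where k is the number of items. For skewness, the sketch assumes the bias-adjusted sample coefficient (G1) used by many statistics packages; the text does not state which estimator JASP reported, so small differences are possible. The function names are hypothetical.

```python
from math import sqrt
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (one row per respondent)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) and of each respondent's total score.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def sample_skewness(x: list[float]) -> float:
    """Bias-adjusted sample skewness G1 (assumed estimator; see lead-in)."""
    n = len(x)
    if n < 3:
        raise ValueError("skewness needs at least three values")
    m = sum(x) / n
    m2 = sum((v - m) ** 2 for v in x) / n  # second central moment
    m3 = sum((v - m) ** 3 for v in x) / n  # third central moment
    g1 = m3 / m2 ** 1.5
    return sqrt(n * (n - 1)) / (n - 2) * g1
```

Under the study’s criteria, a part’s items would be treated as internally consistent when `cronbach_alpha(rows) > 0.70`, and an item as approximately normal when `abs(sample_skewness(column)) < 2`.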

To check the validity of the repeated-measures F tests, the assumption of sphericity was tested with Mauchly’s (1940) test. When sphericity was violated (Mauchly’s test p < 0.05), Greenhouse–Geisser-corrected F test results were reported (Greenhouse and Geisser, 1959). All inferential tests used a significance level of α = 0.05 (95% confidence).
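As background on what the Greenhouse–Geisser correction does, the sketch below computes the epsilon estimate from the covariance matrix of the repeated measures (here, the three videos). In a mixed design the covariance matrix is, to our understanding, pooled across the between-subjects groups, so a pooling helper is included. This is an illustrative sketch with hypothetical function names, not the JASP implementation.

```python
def pooled_within_cov(groups: list[list[list[float]]]) -> list[list[float]]:
    """Pooled within-group covariance of the k repeated measures across all groups."""
    k = len(groups[0][0])
    sums = [[0.0] * k for _ in range(k)]
    n_total = 0
    for subjects in groups:
        n = len(subjects)
        # Center each subject's scores on its own group's condition means.
        means = [sum(s[c] for s in subjects) / n for c in range(k)]
        for s in subjects:
            dev = [s[c] - means[c] for c in range(k)]
            for i in range(k):
                for j in range(k):
                    sums[i][j] += dev[i] * dev[j]
        n_total += n
    dof = n_total - len(groups)  # N minus number of groups
    return [[v / dof for v in row] for row in sums]


def greenhouse_geisser_epsilon(cov: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon from a symmetric k x k covariance matrix."""
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    grand = sum(row_means) / k
    # Double-center: S*_ij = S_ij - mean_i - mean_j + grand (column means equal row means).
    centered = [
        [cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
        for i in range(k)
    ]
    trace = sum(centered[i][i] for i in range(k))
    sum_sq = sum(v * v for row in centered for v in row)  # trace(S*^2) for symmetric S*
    return trace ** 2 / ((k - 1) * sum_sq)
```

Epsilon equals 1 when sphericity holds exactly and cannot fall below 1/(k − 1), which is 0.5 for three videos. The corrected test keeps the same F value but evaluates it against both degrees of freedom multiplied by epsilon.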

The study research question, “What is the information literacy proficiency of students relative to experts in evaluating the quality of YouTube videos?” was tested through hypothesis H1: Experts will have higher information literacy proficiency than students in evaluating the quality of YouTube videos. A two-way mixed repeated-measures ANOVA (between-subjects factor: group; within-subjects factor: video) was conducted to examine whether group membership (expert vs. student) significantly predicted MQ-VET scores (information literacy) across the three videos of differing quality (low vs. medium vs. high).
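The partition of variance behind this two-way mixed ANOVA can be sketched as follows. The sketch computes sums of squares and degrees of freedom for the between-subjects factor (group), the within-subjects factor (video), their interaction, and the two error terms. It uses sample-size-weighted means; with unequal groups (89 vs. 23), software that defaults to Type III sums of squares may report a slightly different video main effect, while, as far as we know, the group, interaction, and error terms are unaffected. The names are hypothetical, and no sphericity correction is applied.

```python
from statistics import fmean


def mixed_anova(groups: dict[str, list[list[float]]]) -> dict[str, tuple[float, int]]:
    """Sums of squares and df for a two-way mixed ANOVA.

    groups maps each between-subjects level (e.g., "expert", "student") to a list of
    subjects; each subject is a list of scores, one per within-subjects level (video).
    """
    subjects = [s for subs in groups.values() for s in subs]
    n, k, g = len(subjects), len(subjects[0]), len(groups)
    grand = fmean(v for s in subjects for v in s)
    ss_total = sum((v - grand) ** 2 for s in subjects for v in s)
    # Between-subjects variation: spread of each subject's mean around the grand mean.
    ss_subjects = k * sum((fmean(s) - grand) ** 2 for s in subjects)
    # Within-subjects main effect: spread of the video means (weighted by subject).
    video_means = [fmean(s[c] for s in subjects) for c in range(k)]
    ss_video = n * sum((m - grand) ** 2 for m in video_means)
    ss_group = ss_cells = 0.0
    for subs in groups.values():
        n_g = len(subs)
        ss_group += k * n_g * (fmean(v for s in subs for v in s) - grand) ** 2
        ss_cells += n_g * sum((fmean(s[c] for s in subs) - grand) ** 2 for c in range(k))
    ss_interaction = ss_cells - ss_group - ss_video
    return {
        "group": (ss_group, g - 1),
        "error_between": (ss_subjects - ss_group, n - g),
        "video": (ss_video, k - 1),
        "group_x_video": (ss_interaction, (g - 1) * (k - 1)),
        "error_within": (ss_total - ss_subjects - ss_video - ss_interaction, (n - g) * (k - 1)),
    }


def f_ratio(table: dict[str, tuple[float, int]], effect: str, error: str) -> float:
    """F = (SS_effect / df_effect) / (SS_error / df_error)."""
    ss_e, df_e = table[effect]
    ss_r, df_r = table[error]
    return (ss_e / df_e) / (ss_r / df_r)
```

The group effect is tested against `error_between` (for example, `f_ratio(table, "group", "error_between")`), while the video and interaction effects are tested against `error_within`. With 89 students and 23 experts rating three videos, the degrees of freedom are 1 and 110 for group and 2 and 220 for the video and interaction effects, matching the F(2, 220) reported in the Results.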

4 Results

4.1 Descriptive statistics of MQ-VET

The MQ-VET items in this sample were approximately normally distributed at all three video levels (low, medium, and high quality), with skewness between −2 and 2. Histograms of the MQ-VET items with normal curve overlays also indicated approximately normal distributions. Each of the four MQ-VET parts at each video level had acceptable internal consistency reliability (α = 0.73–0.87), except part 3 for the high-quality video (α = 0.64).

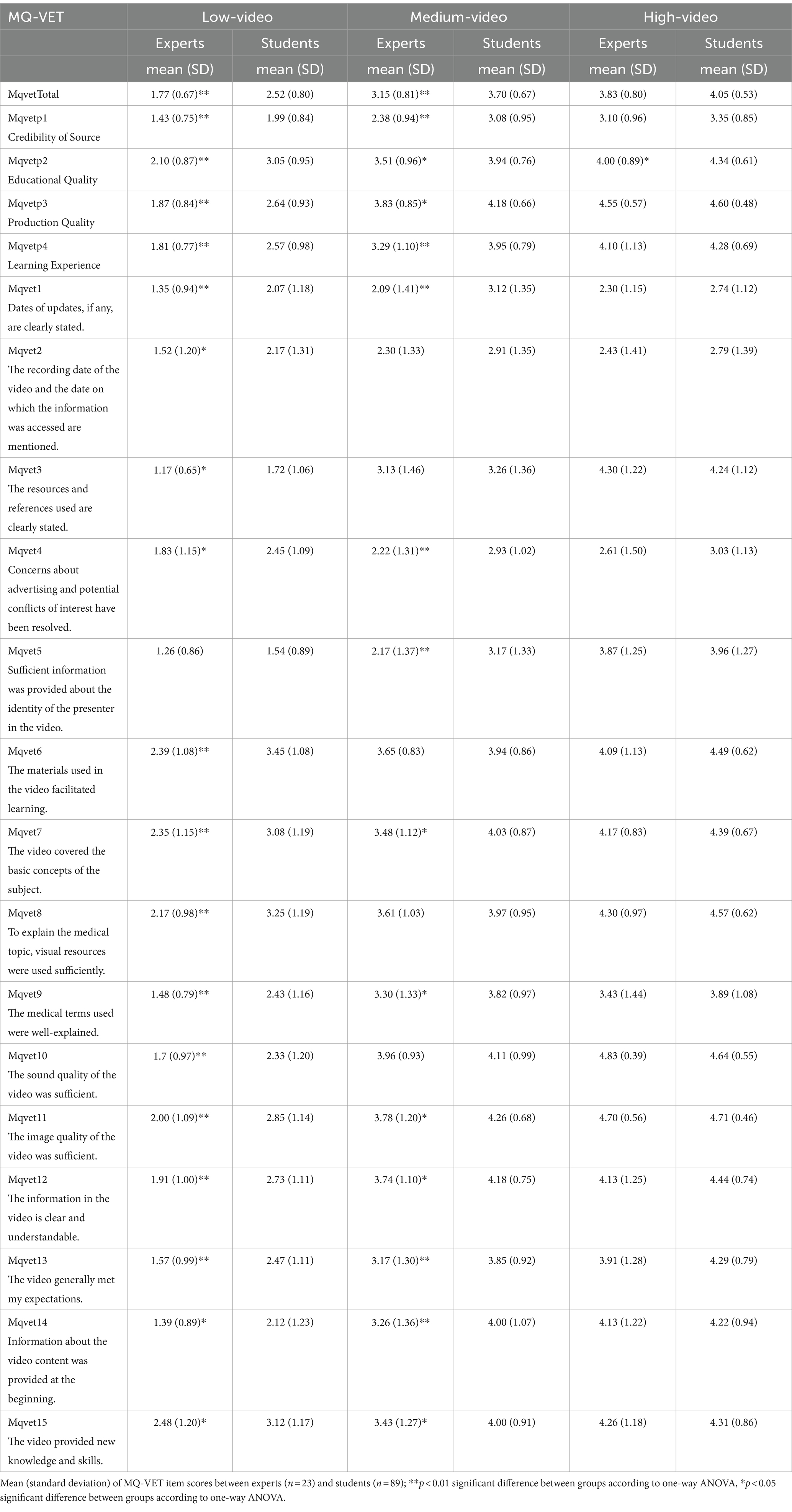

Table 3 presents the means and standard deviations of the MQ-VET items across the three video levels for experts and students. MQ-VET scores are reported as mean item scores rather than total scores so that items and parts with different numbers of questions can be compared directly. In both groups, mean MQ-VET scores increased consistently with video quality.

4.2 Differences between experts and students in evaluating the quality of YouTube videos

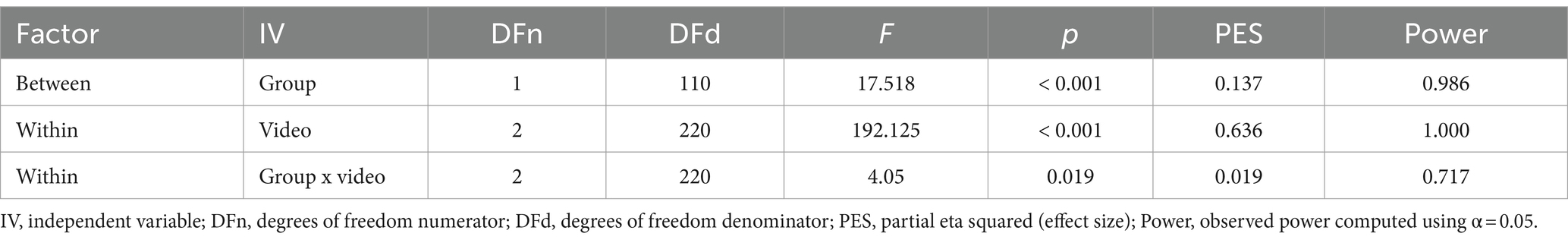

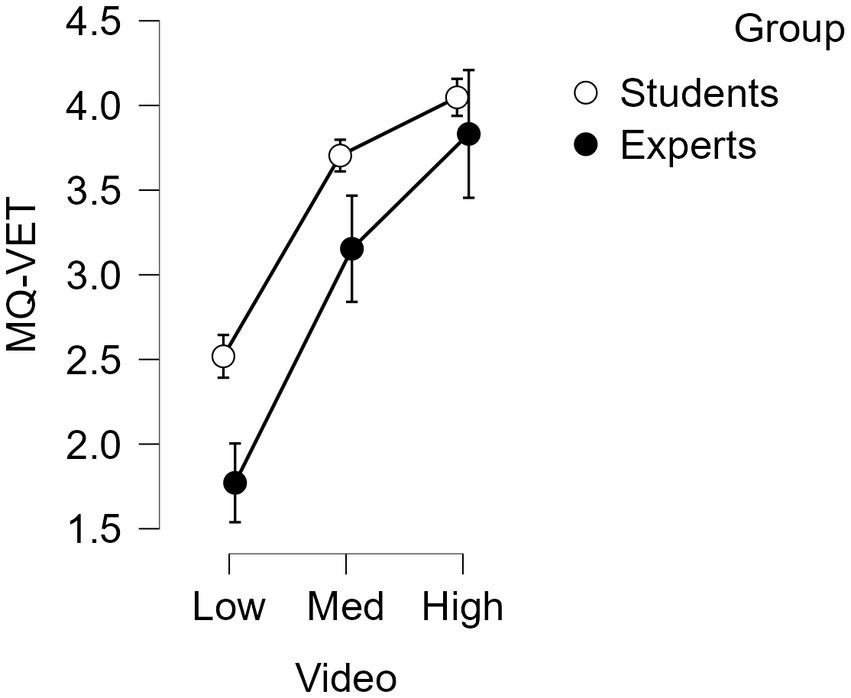

The ANOVA revealed significant main effects of group and video, as well as a significant group × video interaction, F(2, 220) = 4.05, p = 0.019 (see Table 4; Figure 1). Experts’ MQ-VET grand mean scores across all items were significantly lower than students’ for the low-quality video (mean = 1.77, SD = 0.67 vs. mean = 2.52, SD = 0.80) and the medium-quality video (mean = 3.15, SD = 0.81 vs. mean = 3.70, SD = 0.67), both p < 0.01. The means for the four MQ-VET parts showed a similar pattern (see Table 3). These results suggest that experts had higher information literacy proficiency than students in evaluating the quality of YouTube videos.

Figure 1. MQ-VET grand mean scores across all items (±95% confidence interval) for experts and students across the low-, medium-, and high-quality videos.

5 Discussion

Information literacy is the set of abilities needed to discover, understand, and use information to create knowledge, including the abilities needed for collaborative platforms (Association of College and Research Libraries, 2015). Information literacy abilities are needed to evaluate content on social media, including YouTube videos (Kim et al., 2014). Our study used the MQ-VET as an information literacy instrument to evaluate YouTube videos and answer the research question, “What is the information literacy proficiency of students relative to experts in evaluating the quality of YouTube videos?” Results of the repeated-measures ANOVA suggest that students, relative to experts, significantly overrated the quality of medical YouTube videos, especially the low- and medium-quality videos (low video: student mean = 2.52, expert mean = 1.77; medium video: student mean = 3.70, expert mean = 3.15; high video: student mean = 4.05, expert mean = 3.83). This general finding is consistent with prior studies. Deangelis et al. (2019) found that trainees overscored the proficiency of surgeons in laparoscopic YouTube videos compared with senior experts, and Jackson et al. (2021) found that junior residents and medical students overrated surgical YouTube videos compared with attending physicians. The students’ overrating of YouTube video quality in our study may indicate that they have lower information literacy proficiency than experts in evaluating YouTube videos. Examining student scores on the individual MQ-VET parts suggests several possible explanations for this difference.

For example, students’ mean scores on part 1 of the MQ-VET (questions 1–5) suggest that students judged the low- and medium-quality YouTube videos to come from more credible sources (low video mean = 1.99, medium video mean = 3.08) than the experts did (low video mean = 1.43, medium video mean = 2.38) in terms of transparency of updates (question 1), recording dates (question 2), references (question 3), conflicts of interest (question 4), and presenter identity (question 5). A key aspect of information literacy proficiency is information verification (Khan and Idris, 2019), which includes establishing the credibility of a source, an extension of the information literacy concept that authority is constructed and contextual (Association of College and Research Libraries, 2015). A learning resource reflects its creators’ credibility (Association of College and Research Libraries, 2015), and our findings suggest that students may be supplementing their learning with low-quality videos from sources that lack credibility. Educators may need to vet the credibility of video sources for students and also help students improve their proficiency in verifying information and evaluating the credibility of YouTube video sources.

Students in our study also rated the low-quality video higher than experts on educational quality and production quality, based on MQ-VET parts 2 (questions 6–9) and 3 (questions 10–12), respectively. For example, students rated the educational quality of the low-quality video higher (mean = 3.05) than experts did (mean = 2.1) with respect to materials facilitating learning (question 6), explanation of basic concepts (question 7), use of sufficient visual resources (question 8), and medical terms (question 9). For production quality (part 3), students rated the sound quality (question 10), image quality (question 11), and overall clarity (question 12) of the low-quality video (mean = 2.64) significantly higher than the experts did (mean = 1.87). Information literacy includes information production (Khan and Idris, 2019) and an understanding of information creation, that is, how content is created and conveyed so that it is educationally useful (Association of College and Research Libraries, 2015). These findings indicate that students may need to become more proficient in recognizing which approaches to content creation and production are likely to support their specific learning needs and what constitutes a high-quality educational resource among medical education YouTube videos.

Finally, students in our study found that the low-quality video provided a better learning experience (mean = 2.57) than experts did (mean = 1.81), based on part 4 of the MQ-VET (questions 13–15). More specifically, students appeared to have lower expectations: they gave higher ratings than experts to the low- and medium-quality videos (student: low mean = 2.47, medium mean = 3.85; expert: low mean = 1.57, medium mean = 3.17) on MQ-VET question 13, “The video generally met my expectations.” Students also reported that the low- and medium-quality videos provided more new knowledge and skills (student: low mean = 3.12, medium mean = 4.0; expert: low mean = 2.48, medium mean = 3.43), based on their higher ratings on MQ-VET question 15, “The video provided new knowledge and skills.” Deangelis et al. (2019) and Jackson et al. (2021) suggested that students may rate aspects of videos more highly because they have less experience and find specific videos more useful. Our findings suggest that when students are in the initial phases of learning a subject, they may have lower expectations of what constitutes an effective learning experience and may find videos more useful because they lack knowledge and experience. Educators may therefore need to take a more active role in selecting appropriate, high-quality videos, particularly when students are first learning about a topic, so that students do not unintentionally rely on low-quality videos. Educators may also need to help students understand what expectations they should have of YouTube learning resources.

5.1 Recommendations

The first recommendation is for educators to select high-quality videos for students, especially when students are first learning a topic. This recommendation aligns with other studies that recommended educators create channels with their own content and select videos for students (Barry et al., 2016; O'Malley et al., 2019; Burns et al., 2020; Helming et al., 2021).

The second recommendation is for educators to help students become more proficient in information literacy when evaluating medical education YouTube videos. Particular attention should go to source credibility and to information creation, including educational quality and production quality. Educators should also help students develop appropriate expectations for YouTube videos as learning resources. Curricular activities and opportunities to practice evaluating social media content can help students develop these evaluation skills (Shatto and Erwin, 2016; Theron et al., 2017). Such activities might include having students find appropriate videos, create their own YouTube videos, and critique existing ones, which may foster critical thinking about YouTube content (Shatto and Erwin, 2016).

5.2 Limitations and future research

One limitation of the study is that the MQ-VET was recently developed. The MQ-VET was published in 2022, and apart from the initial development of the tool, no prior studies using the MQ-VET are available for comparison. Although the MQ-VET was shown to be valid and reliable in its development study, the minimal research on the tool is a limitation. Another limitation was the small sample size, which may limit the generalizability of the results. The small sample size also prevented us from investigating possible moderating effects of demographic differences in the study sample (e.g., age, education, and field of study). A third limitation is that medical content on YouTube spans a wide range of topics, yet this study used only three videos on three different medical topics. A final limitation is that health professions students may use many types of social media platforms, but this study investigated only YouTube.

Future research should include a more extensive novice-expert study investigating health professions students’ information literacy proficiency in evaluating YouTube videos. Future research should also be conducted to determine what other social media platforms health professions students are using in conjunction with YouTube to supplement their learning.

6 Conclusion

Our findings indicate that health professions students are less proficient than experts in the information literacy skills needed to evaluate YouTube videos, and that they tend to overrate low- and medium-quality videos. Overrating videos may lead students to learn from substandard sources outside formal education. Teachers can select high-quality videos for their students. They can also enhance curricula with YouTube evaluation training to improve students' information literacy proficiency, helping students select high-quality educational videos that effectively augment their learning.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The requirement of ethics approval was waived by A.T. Still University Institutional Review Board on February 16, 2022 (OP20220216-001). Digital informed consent for participation in the study was obtained from the participants.

Author contributions

OP: Writing – original draft, Writing – review & editing. LK: Writing – original draft, Writing – review & editing. MC: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors would like to thank everyone who made this research possible, with a special thanks to all who assisted at A.T. Still University and the research participants.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1354827/full#supplementary-material

References

Aldallal, S. N., Yates, J. M., and Ajrash, M. (2019). Use of YouTube™ as a self-directed learning resource in oral surgery among undergraduate dental students: a cross-sectional descriptive study. Br. J. Oral Maxillofac. Surg. 57, 1049–1052. doi: 10.1016/j.bjoms.2019.09.010

Alzoubi, H., Karasneh, R., Irshaidat, S., Abuelhaija, Y., Abuorouq, S., Omeish, H., et al. (2023). Exploring the use of YouTube as a pathology learning tool and its relationship with pathology scores among medical students: cross-sectional study. JMIR Med. Educ. 9:e45372. doi: 10.2196/45372

Association of College and Research Libraries (2015). Framework for information literacy for higher education. American Library Association. Available at: https://www.ala.org/acrl/standards/ilframework [Accessed: May 2022].

Azer, S. A. (2020). Are DISCERN and JAMA suitable instruments for assessing YouTube videos on thyroid cancer? Methodological concerns. J. Cancer Educ. 35, 1267–1277. doi: 10.1007/s13187-020-01763-9

Barry, D. S., Marzouk, F., Chulak-Oglu, K., Bennett, D., Tierney, P., and O'Keeffe, G. W. (2016). Anatomy education for the YouTube generation: online video use in anatomy education. Anat. Sci. Educ. 9, 90–96. doi: 10.1002/ase.1550

Bazrafkan, L., Hayat, A. A., Abbasi, K., Bazrafkan, A., Rohalamini, A., and Fardid, M. (2017). Evaluation of information literacy status among medical students at Shiraz University of Medical Sciences. J. Adv. Med. Educ. Prof. 5, 42–48.

Brisson, G. E., Fisher, M. J., LaBelle, M. W., and Kozmic, S. E. (2015). Defining a mismatch: differences in usage of social networking sites between medical students and the faculty who teach them. Teach. Learn. Med. 27, 208–214. doi: 10.1080/10401334.2015.1011648

Burns, L. E., Abbassi, E., Qian, X., Mecham, A., Simeteys, P., and Mays, K. A. (2020). YouTube use among dental students for learning clinical procedures: a multi-institutional study. J. Dent. Educ. 84, 1151–1158. doi: 10.1002/jdd.12240

CardioGauge. (2020). Colchicine to Prevent Heart Attack? [Video]. Available from: https://youtu.be/XGFiLt6SUKQ [Accessed June 2022].

Curran, V., Simmons, K., Matthews, L., Fleet, L., Gustafson, D. L., Fairbridge, N. A., et al. (2020). YouTube as an educational resource in medical education: a scoping review. Med. Sci. Educ. 30, 1775–1782. doi: 10.1007/s40670-020-01016-w

Deangelis, N., Gavriilidis, P., and Martínez-Pérez, A. (2019). Educational value of surgical videos on YouTube: quality assessment of laparoscopic appendectomy videos by senior surgeons vs. novice trainees. World J. Emerg. Surg. 14:22. doi: 10.1186/s13017-019-0241-6

Drozd, B., Couvillon, E., and Suarez, A. (2018). Medical YouTube videos and methods of evaluation: literature review. JMIR Med. Educ. 4:e3. doi: 10.2196/mededu.8527

El Bialy, S., and Jalali, A. (2015). Go where the students are: a comparison of the use of social networking sites between medical students and medical educators. JMIR Med. Educ. 1:e7. doi: 10.2196/mededu.4908

Faul, F., Erdfelder, E., Buchner, A., and Lang, A. G. (2009). Statistical power analyses using G*power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Garip, R., and Sakallioğlu, A. K. (2022). Evaluation of the educational quality and reliability of YouTube videos addressing eyelid ptosis surgery. Orbit 41, 598–604. doi: 10.1080/01676830.2021.1989467

Greenhouse, S. W., and Geisser, S. (1959). On methods in the analysis of profile data. Psychometrika 24, 95–112. doi: 10.1007/BF02289823

Guler, M. A., and Aydın, E. O. (2022). Development and validation of a tool for evaluating YouTube-based medical videos. Ir. J. Med. Sci. 191, 1985–1990. doi: 10.1007/s11845-021-02864-0

Helming, A. G., Adler, D. S., Keltner, C., Igelman, A. D., and Woodworth, G. E. (2021). The content quality of YouTube videos for professional medical education: a systematic review. Acad. Med. 96, 1484–1493. doi: 10.1097/acm.0000000000004121

Jackson, H. T., Hung, C. S., and Potarazu, D. (2021). Attending guidance advised: educational quality of surgical videos on YouTube. Surg. Endosc. 36, 4189–4198. doi: 10.1007/s00464-021-08751-0

JASP. (2021). Jeffreys's Amazing Statistics Program (Version 0.15) [Statistical software]. Available at: https://jasp-stats.org

Journal of Osteopathic Medicine. (2018). OMT for patients with sacral somatic dysfunction [Video]. Available from: https://youtu.be/1pSF4Gzjslw [Accessed June 2022].

Kauffman, L., Weisberg, E. M., Eng, J., and Fishman, E. K. (2022). YouTube and radiology: the viability, pitfalls, and untapped potential of the premier social media video platform for image-based education. Acad. Radiol. 29, S1–S8. doi: 10.1016/j.acra.2020.12.018

Khamis, N., Aljumaiah, R., Alhumaid, A., Alraheem, H., Alkadi, D., Koppel, C., et al. (2018). Undergraduate medical students’ perspectives of skills, uses and preferences of information technology in medical education: a cross-sectional study in a Saudi medical college. Med. Teach. 40, S68–S76. doi: 10.1080/0142159X.2018.1465537

Khan, M. L., and Idris, I. K. (2019). Recognise misinformation and verify before sharing: a reasoned action and information literacy perspective. Behav. Inform. Technol. 38, 1194–1212. doi: 10.1080/0144929x.2019.1578828

Kim, H. Y. (2013). Statistical notes for clinical researchers: assessing normal distribution (2) using skewness and kurtosis. Restor. Dent. Endod. 38, 52–54. doi: 10.5395/rde.2013.38.1.52

Kim, K. S., Sin, S. C. J., and Yoo-Lee, E. Y. (2014). Undergraduates’ use of social media as information sources. Coll. Res. Libr. 75, 442–457. doi: 10.5860/crl.75.4.442

Learn MBBS. (2021). Chest xray || homogenous opacity || interpretation [Video]. Available from: https://youtu.be/7AsU5eyyT5g [Accessed June 2022].

Lee, H., Choi, A., Jang, Y., and Lee, J. I. (2019). YouTube as a learning tool for four shoulder tests. Prim. Health Care Res. Dev. 20:e70. doi: 10.1017/s1463423618000804

Mauchly, J. W. (1940). Significance test for sphericity of a normal n-variate distribution. Ann. Math. Stat. 11, 204–209. doi: 10.1214/aoms/1177731915

Mukhopadhyay, S., Kruger, E., and Tennant, M. (2014). YouTube: a new way of supplementing traditional methods in dental education. J. Dent. Educ. 78, 1568–1571. doi: 10.1002/j.0022-0337.2014.78.11.tb05833.x

Nunnally, J. C., and Bernstein, I. (1993). Psychometric theory. 3rd Edn. Maidenhead, England: McGraw Hill Higher Education.

Okagbue, H. I., Oguntunde, P. E., Bishop, S. A., Obasi, E. C. M., Opanuga, A. A., and Ogundile, O. P. (2020). Review on the reliability of medical contents on YouTube. Int. J. Online Biomed. Eng. 16:83. doi: 10.3991/ijoe.v16i01.11558

O'Malley, D., Barry, D. S., and Rae, M. G. (2019). How much do preclinical medical students utilize the internet to study physiology? Adv. Physiol. Educ. 43, 383–391. doi: 10.1152/advan.00070.2019

Pradhan, S., Das, C., Panda, D. K., and Mohanty, B. B. (2024). Assessing the utilization and effectiveness of YouTube in anatomy education among medical students: a survey-based study. Cureus 16:e55644. doi: 10.7759/cureus.55644

Rapp, A. K., Healy, M. G., Charlton, M. E., Keith, J. N., Rosenbaum, M. E., and Kapadia, M. R. (2016). YouTube is the most frequently used educational video source for surgical preparation. J. Surg. Educ. 73, 1072–1076. doi: 10.1016/j.jsurg.2016.04.024

Schunn, C. D., and Nelson, M. M. (2009). “Expert-novice studies: An educational perspective” in Psychology of classroom learning. ed. Anderman (US: Macmillan), 398–401.

Sezer, B. (2020). Implementing an information literacy course: impact on undergraduate medical students’ abilities and attitudes. J. Acad. Librariansh. 46:102248. doi: 10.1016/j.acalib.2020.102248

Shamsaee, M., Mangolian Shahrbabaki, P., Ahmadian, L., Farokhzadian, J., and Fatehi, F. (2021). Assessing the effect of virtual education on information literacy competency for evidence-based practice among the undergraduate nursing students. BMC Med. Inform. Decis. Mak. 21:48. doi: 10.1186/s12911-021-01418-9

Shatto, B., and Erwin, K. (2016). Moving on from millennials: preparing for generation Z. J. Contin. Educ. Nurs. 47, 253–254. doi: 10.3928/00220124-20160518-05

Theron, M., Redmond, A., and Borycki, E. M. (2017). Baccalaureate nursing students’ abilities in critically identifying and evaluating the quality of online health information. Stud. Health Technol. Inform. 234, 321–327. doi: 10.3233/978-1-61499-742-9-321

van den Eynde, J., Crauwels, A., Demaerel, P. G., van Eycken, L., Bullens, D., Schrijvers, R., et al. (2019). YouTube videos as a source of information about immunology for medical students: cross-sectional study. JMIR Med. Educ. 5:e12605. doi: 10.2196/12605

Vizcaya-Moreno, M. F., and Pérez-Cañaveras, R. M. (2020). Social media used and teaching methods preferred by Generation Z students in the nursing clinical learning environment: A cross-sectional research study. Int. J. Environ. Res. Public Health 17:8267. doi: 10.3390/ijerph17218267

Yoo, M., Hong, J., and Jang, C. W. (2020). Suitability of YouTube videos for learning knee stability tests: a cross-sectional review. Arch. Phys. Med. Rehabil. 101, 2087–2092. doi: 10.1016/j.apmr.2020.05.024

Zengin, O., and Onder, M. E. (2021). Educational quality of YouTube videos on musculoskeletal ultrasound. Clin. Rheumatol. 40, 4243–4251. doi: 10.1007/s10067-021-05793-6

Keywords: YouTube, videos, quality, health professions, students, medical education, information literacy, MQ-VET

Citation: Pearlman O, Konecny LT and Cole M (2024) Information literacy skills of health professions students in assessing YouTube medical education content. Front. Educ. 9:1354827. doi: 10.3389/feduc.2024.1354827

Edited by:

Mona Hmoud AlSheikh, Imam Abdulrahman Bin Faisal University, Saudi Arabia

Reviewed by:

Rebecca Romine, University of Hawaii–West Oahu, United States
Zongshui Wang, Beijing Information Science and Technology University, China

Copyright © 2024 Pearlman, Konecny and Cole. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Orianne Pearlman, opearlman@yahoo.com
