Deep Learning for Photonic Design and Analysis: Principles and Applications

- 1 State Key Laboratory of Information Photonics and Optical Communications, School of Information and Communication Engineering, Beijing University of Posts and Telecommunications, Beijing, China

- 2 Institute of Electromagnetics and Acoustics and Fujian Provincial Key Laboratory of Electromagnetic Wave Science and Detection Technology, Xiamen University, Xiamen, China

- 3 Shenzhen Research Institute of Xiamen University, Shenzhen, China

Innovative techniques play important roles in photonic structure design and complex optical data analysis. As a branch of machine learning, deep learning can automatically reveal the inherent connections behind data by using hierarchically structured layers, and it has found broad applications in photonics. In this paper, we review recent advances in deep learning for photonic structure design and optical data analysis, organized around the two major learning paradigms of supervised learning and unsupervised learning. In addition, optical neural networks with high parallelism and low energy consumption are highlighted as novel computing architectures. Finally, the challenges and perspectives of this flourishing research field are discussed.

1 Introduction

Over the past few decades, photonics, as an important field of fundamental research, has penetrated various domains, such as life science and information technology (Vukusic and Sambles, 2003; Bigio and Sergio, 2016; Ravì et al., 2016). In particular, advances in photonic devices, optical imaging, and spectroscopy techniques have further accelerated the wide application of photonics (Török and Kao, 2007; Ntziachristos, 2010; Dong et al., 2014; Jiang et al., 2021). For example, the creation of metasurfaces and metamaterials has promoted the development of holography and superlenses (Zhang and Liu, 2008; Yoon et al., 2018), while optical spectroscopy and imaging are highly useful in medical diagnosis (Chan and Siegel, 2019; Lundervold and Lundervold, 2019) and biological studies (Török and Kao, 2007). However, the initial design of sophisticated photonic devices relies on electromagnetic modeling, which is largely guided by human experience gained from physical intuition and prior knowledge (Ma W. et al., 2021). The specific structural parameters are determined by trial and error, and the explorable parameter space is limited by simulation power and time. Besides, the data generated by optical measurements are becoming increasingly complicated. For instance, when optical spectroscopy is applied to characterize analytes (e.g., malignant tumor tissue and bacterial pathogens) in complex biological environments, it is challenging to extract spectral fingerprints because the common chemical bonds in the analytes produce heavily overlapping spectra (Rickard et al., 2020; Fang et al., 2021). Traditional analysis methods are mainly based on physical intuition and prior experience, which makes them time-consuming and susceptible to human error.

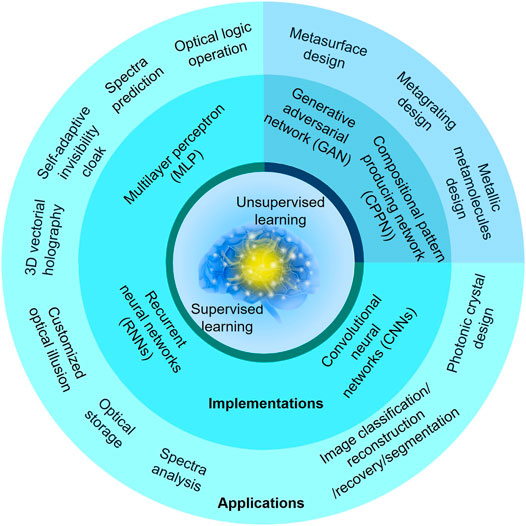

Recently, the booming field of artificial intelligence has accelerated the pace of technological progress (Goodfellow et al., 2016). In particular, deep learning, as a data-driven method, can automatically reveal the inherent connections behind data by using hierarchically structured layers. It has been widely exploited in computer vision (Luongo et al., 2021), image analysis (Barbastathis et al., 2019), robotic control (Abbeel et al., 2010), driverless cars (Karmakar et al., 2021) and language translation (Wu et al., 2016; Popel et al., 2020). In photonics, deep learning provides a new perspective on device design and optical data analysis (Anjit et al., 2021). It can capture nonlinear physical correlations, such as the relationship between photonic structures and their electromagnetic responses (Wiecha and Muskens, 2019; Li et al., 2020). The intersection of deep learning and photonics enables researchers to design photonic devices and decode optical data without explicitly modeling the underlying physical processes or manually tuning the models (Chen et al., 2020). Particular areas of success include materials and structure design (Malkiel et al., 2018; Ma et al., 2019), optical spectroscopy and image analysis (Ghosh et al., 2019; Moen et al., 2019), data storage (Rivenson et al., 2019; Liao et al., 2019), and optical communications (Khan et al., 2019), as shown in Figure 1. The deep neural networks used for these applications are mainly trained and tested on electronic computing systems. Compared with conventional electronic platforms, photonic systems have attracted increasing attention owing to their low energy consumption, multiple interconnections and high parallelism (Sui et al., 2020; Goi et al., 2021). 
Recently, various optical neural network (ONN) architectures have been used for high-speed data analysis, such as the optical interferometric neural network (Shen et al., 2017) and the diffractive optical neural network (Lin et al., 2018).

In this article, we focus on the convergence of photonics and deep learning for optical structure design and data analysis. In Section 2, we introduce typical deep learning algorithms, covering both supervised and unsupervised learning. In Section 3, we present deep learning-assisted photonic structure design and optical data analysis. Section 4 highlights optical neural networks as novel computing architectures. In Section 5, we discuss the outlook of this flourishing field and give a short conclusion.

2 Principles of Typical Neural Networks

In this section, we introduce several typical deep learning algorithms and explain their working principles for interdisciplinary optical applications. Broadly, these algorithms can be divided into supervised learning and unsupervised learning.

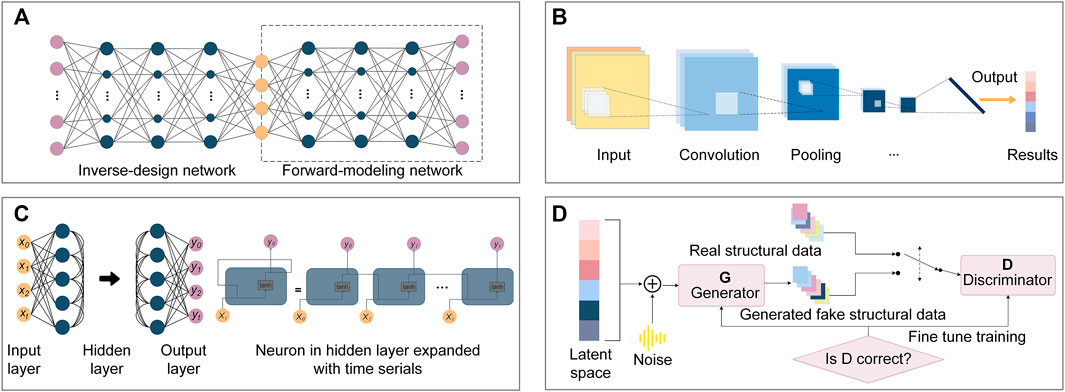

In supervised learning, the input training data are accompanied by “correct answer” labels. During training, the predicted results are compared with the ground-truth labels in the datasets, and the network is continually optimized to achieve the desired performance. Specifically, supervised learning can learn the correlations between photonic structures and optical properties, so that structures can be found that perform specific optical functions. Supervised learning is commonly implemented with artificial neural networks (LeCun et al., 2015), such as the multilayer perceptron (MLP), convolutional neural networks (CNNs) and recurrent neural networks (RNNs), as shown in Figures 2A–C.

FIGURE 2. Schematic illustration of typical deep learning models. (A) Multilayer perceptron, (B) Convolutional neural networks, (C) Recurrent neural networks, (D) Generative adversarial network.

2.1 Multilayer Perceptron

The MLP is a foundational model on which many later artificial neural networks build, so it is usually considered the starting point of deep learning. An MLP is composed of a series of hidden layers that link the inputs and outputs. Neurons in adjacent layers are connected through weighted connections, and each neuron applies a nonlinear activation function to its weighted input. This structure contains a large number of optimizable parameters, which gives it a high capability to learn the complex, nonlinear relationships in optical data. In a typical MLP training process, a cost function is predefined, such as the mean squared error or the cross entropy between the predicted and actual values. During optimization, the weights are adjusted by the backpropagation algorithm to minimize the cost function. After training, target optical functions such as scattering spectra can be fed into the network to obtain the predicted photonic structures (Wu et al., 2021). Intuitively, as the number of hidden layers increases, the network learns more features and achieves higher training accuracy at the cost of longer training time. Note that too many hidden layers tend to cause over-fitting, so an appropriate number of hidden layers is preferred. Inverse design suffers from a ubiquitous non-uniqueness problem: different structures can produce nearly identical optical responses. To address it, the tandem network model was proposed, which cascades an inverse-design network with a forward-modeling network (Liu et al., 2018).
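To make the training loop described above concrete, here is a minimal NumPy sketch of a one-hidden-layer MLP trained with a mean-squared-error cost and backpropagation. It is an illustration under stated assumptions, not the network of any cited work. The layer width, learning rate and epoch count are hypothetical. The toy Lorentzian "spectra" generated from two structural parameters are also hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def init_mlp(n_in, n_hidden, n_out, rng):
    """Random initial weights and zero biases for a one-hidden-layer MLP."""
    return [rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_hidden)), np.zeros(n_hidden),
            rng.normal(0.0, np.sqrt(1.0 / n_hidden), (n_hidden, n_out)), np.zeros(n_out)]

def predict(params, X):
    W1, b1, W2, b2 = params
    return relu(X @ W1 + b1) @ W2 + b2

def train_mlp(X, Y, n_hidden=32, lr=0.1, epochs=3000, seed=0):
    """Full-batch gradient descent on a mean-squared-error cost via backpropagation."""
    rng = np.random.default_rng(seed)
    W1, b1, W2, b2 = init_mlp(X.shape[1], n_hidden, Y.shape[1], rng)
    losses = []
    for _ in range(epochs):
        # Forward pass: affine -> ReLU -> affine
        Z = X @ W1 + b1
        H = relu(Z)
        err = H @ W2 + b2 - Y
        losses.append(np.mean(err ** 2))
        # Backward pass: chain rule from the cost back to every weight
        dY = 2.0 * err / err.size
        dW2, db2 = H.T @ dY, dY.sum(axis=0)
        dZ = (dY @ W2.T) * (Z > 0)        # ReLU passes gradient only where Z > 0
        dW1, db1 = X.T @ dZ, dZ.sum(axis=0)
        # Gradient-descent update
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return [W1, b1, W2, b2], losses

# Hypothetical forward-modeling task: two structural parameters -> a Lorentzian
# "spectrum" whose peak position and width depend on those parameters.
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, (200, 2))
wl = np.linspace(0.0, 1.0, 20)                    # normalized wavelength grid
Y = 1.0 / (1.0 + ((wl - X[:, :1]) / (0.1 + 0.2 * X[:, 1:])) ** 2)
params, losses = train_mlp(X, Y)
print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

This trained network is a forward model. A tandem design, as mentioned above, would freeze it and train a second, inverse network whose predicted structures are scored by the spectra this forward model produces.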

2.2 Convolutional Neural Networks

CNNs are specially designed for image classification (Li et al., 2014; Guo et al., 2017) and recognition (Hijazi et al., 2015; Fu et al., 2017), and on specific tasks such as image recognition their performance can even surpass that of humans (Lundervold and Lundervold, 2019). CNNs can effectively process high-dimensional data such as images because they automatically learn features from large-scale data and generalize them to unseen data of the same type. Generally, CNNs consist of four parts: 1) convolution layers extract features from the input images; 2) activation layers realize nonlinear mapping; 3) pooling layers aggregate features in different regions to reduce the data dimension; 4) a fully connected layer outputs the final classification results. The convolution layers usually contain several convolution kernels, also known as filters, which extract features of the input image layer by layer, loosely analogous to hierarchical processing in the human visual system. In the past years, various derivative networks have been developed from these basic components, such as LeNet (Lecun et al., 1998), AlexNet (Krizhevsky et al., 2012), ZFNet (Zeiler and Fergus, 2014), VGG (Simonyan and Zisserman, 2014), GoogLeNet (Szegedy et al., 2015), ResNet (He et al., 2016) and SENet (Hu et al., 2018). They improve accuracy by changing the number of layers and the connection patterns. CNNs exhibit two important characteristics. First, neurons in neighboring layers are connected locally, unlike the fully connected neurons in an MLP. Second, the weights of each kernel are shared across the whole input, which reduces the number of parameters and accelerates convergence. Because the model complexity is reduced, over-fitting is alleviated. CNNs are therefore well suited to image-related problems, such as customized optical illusions and super-resolution imaging. 
The network can automatically extract image features, including color, texture, shape and topology, which increases the robustness and efficiency of image processing. Recently, CNNs have been applied to photonic crystal design (Asano and Noda, 2018). By optimizing the positions of air holes in a base nanocavity with a CNN, an extremely high Q-factor of 1.58 × 10⁹ was obtained.
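The local connectivity, weight sharing, activation and pooling described above can be illustrated with a small NumPy sketch. Like most deep-learning libraries, it implements "convolution" as cross-correlation. The toy edge image and the hand-picked edge-detecting kernel are hypothetical; in a trained CNN the kernel weights would be learned.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, which deep-learning libraries call convolution."""
    kh, kw = kernel.shape
    out = np.empty((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # The same kernel weights are reused at every position (weight sharing),
            # and each output depends only on a local patch (local connectivity).
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """Non-overlapping 2x2 max pooling; an odd trailing row/column is dropped."""
    h, w = x.shape[0] // 2, x.shape[1] // 2
    return x[:2 * h, :2 * w].reshape(h, 2, w, 2).max(axis=(1, 3))

# Toy image: bright left half, dark right half (a vertical edge).
image = np.zeros((8, 8))
image[:, :4] = 1.0
edge_kernel = np.array([[1.0, 0.0, -1.0]] * 3)    # responds to bright-to-dark steps
feature_map = relu(conv2d(image, edge_kernel))    # shape (6, 6)
pooled = max_pool2x2(feature_map)                 # shape (3, 3)
print(pooled)

# Parameter count: one 3x3 kernel versus a fully connected layer mapping a
# 28x28 input to a 26x26 output.
print(edge_kernel.size, 28 * 28 * 26 * 26)
```

The pooled map keeps only the column where the edge was detected. The 3 × 3 kernel needs 9 weights, whereas a fully connected layer producing an output of the same size would need 529,984.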

2.3 Recurrent Neural Networks

Just as human beings understand the world better by virtue of memory, RNNs retain a form of memory of previously processed information: the output of an RNN depends not only on the current input but also on previous inputs. Thus, RNNs are widely used to model continuous sequential optical signals in the time domain. Because standard RNNs memorize all information in the same way, they usually occupy a lot of memory and reduce computational efficiency. The long short-term memory (LSTM) network is a derivative of RNNs. By controlling its gate states, it selectively memorizes important information and forgets unimportant information (Hochreiter and Schmidhuber, 1997). Moreover, it alleviates the vanishing- and exploding-gradient problems that arise when training on long sequences.
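The gating mechanism described above can be sketched as a single LSTM time step in NumPy. This is a minimal illustration, not code from the cited work. The stacked gate order (forget, input, candidate, output) and the random, untrained weights are assumptions. The sine-wave input is a hypothetical stand-in for a sampled time-domain optical signal.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, W, b):
    """One LSTM time step. W has shape (4*n_h, n_x + n_h); gate order: f, i, g, o."""
    n_h = h.shape[0]
    z = W @ np.concatenate([x, h]) + b
    f = sigmoid(z[0:n_h])                # forget gate: how much of c to keep
    i = sigmoid(z[n_h:2 * n_h])          # input gate: how much new content to write
    g = np.tanh(z[2 * n_h:3 * n_h])      # candidate cell content
    o = sigmoid(z[3 * n_h:4 * n_h])      # output gate
    c_new = f * c + i * g                # gated memory update
    h_new = o * np.tanh(c_new)
    return h_new, c_new

def run_lstm(xs, W, b, n_h):
    """Feed a sequence through the cell; the state carries information across steps."""
    h, c = np.zeros(n_h), np.zeros(n_h)
    hs = []
    for x in xs:
        h, c = lstm_step(x, h, c, W, b)
        hs.append(h)
    return np.array(hs), c

rng = np.random.default_rng(0)
n_x, n_h, T = 1, 8, 50
W = rng.normal(0.0, 0.3, (4 * n_h, n_x + n_h))   # untrained, hypothetical weights
b = np.zeros(4 * n_h)
xs = np.sin(np.linspace(0.0, 4 * np.pi, T))[:, None]   # toy sequence, 1 feature/step
hs, c_final = run_lstm(xs, W, b, n_h)
print(hs.shape)                          # one hidden state per time step
```

Setting the forget-gate bias strongly positive and the input-gate bias strongly negative makes the cell copy its memory forward almost unchanged. This is the selective memorization described above.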

Unsupervised learning is fed with unlabeled training data; that is, there are no reference answers during training. Consequently, unsupervised systems can discover new patterns in the training datasets, some of which may even go beyond prior knowledge and scientific intuition. Moreover, unsupervised learning focuses on extracting important features from data rather than directly predicting the optical response, so it does not require massive labeled datasets for training. This removes the burden of creating large amounts of labeled data.

2.4 Generative Adversarial Network

The generative adversarial network (GAN) was proposed by Goodfellow et al. (2014) to solve unsupervised learning problems. It contains two independent networks, shown in Figure 2D, which compete against each other in a zero-sum game. The discriminator network determines whether the input structure data are real or fake. The generator network produces fake structure data from random noise vectors sampled in a latent space. During training, the discriminator receives both real and fake structure data and judges which category each sample belongs to. The two networks are updated alternately. The discriminator is adjusted to avoid repeating its misclassifications, and the generator is adjusted to make its fake structure data realistic enough to deceive the discriminator. Continued training drives the system toward a balanced state, yielding a high-quality generator and a discriminator with strong judgment ability. After training, the generator can quickly produce target photonic structures. The discriminator can judge whether a new input structure is consistent with the training data, or, when the GAN is conditioned on the desired response, whether it matches the target optical response.
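The alternating updates described above can be sketched with a deliberately tiny one-dimensional GAN in NumPy, with gradients written out by hand. This is a hypothetical toy, not a photonic design model. The generator is assumed to be linear, G(z) = a·z + b, and the discriminator logistic, D(x) = sigmoid(w·x + c). Such a linear discriminator can only detect differences in the mean, so the generator can at best learn to match the real mean. The "real" data distribution and the learning rate are also assumptions.

```python
import numpy as np

def sigmoid(s):
    return 0.5 * (1.0 + np.tanh(0.5 * s))            # overflow-free logistic function

def d_loss_grads(w, c, x_real, x_fake):
    """Discriminator D(x) = sigmoid(w*x + c): cross-entropy loss and its gradients."""
    s_r, s_f = w * x_real + c, w * x_fake + c
    # -mean log D(real) - mean log(1 - D(fake)), written stably with logaddexp
    loss = np.mean(np.logaddexp(0.0, -s_r)) + np.mean(np.logaddexp(0.0, s_f))
    d_r, d_f = sigmoid(s_r), sigmoid(s_f)
    grad_w = np.mean((d_r - 1.0) * x_real) + np.mean(d_f * x_fake)
    grad_c = np.mean(d_r - 1.0) + np.mean(d_f)
    return loss, grad_w, grad_c

def g_loss_grads(a, b, w, c, z):
    """Generator G(z) = a*z + b with the non-saturating loss -mean log D(G(z))."""
    s_f = w * (a * z + b) + c
    loss = np.mean(np.logaddexp(0.0, -s_f))
    dloss_dx = (sigmoid(s_f) - 1.0) * w              # gradient w.r.t. each fake sample
    return loss, np.mean(dloss_dx * z), np.mean(dloss_dx)

rng = np.random.default_rng(0)
a, b = 1.0, 0.0        # generator parameters
w, c = 0.1, 0.0        # discriminator parameters
lr = 0.05
for step in range(2000):
    x_real = rng.normal(4.0, 1.0, 128)               # hypothetical "real" samples
    z = rng.normal(0.0, 1.0, 128)                    # latent noise
    # 1) Discriminator update: better separate real from fake
    _, gw, gc = d_loss_grads(w, c, x_real, a * z + b)
    w, c = w - lr * gw, c - lr * gc
    # 2) Generator update: produce fakes the updated discriminator labels "real"
    _, ga, gb = g_loss_grads(a, b, w, c, z)
    a, b = a - lr * ga, b - lr * gb
print(f"generated mean b = {b:.2f} (real mean 4.0)")
```

Plain alternating gradient descent on GANs can oscillate around the balanced state rather than settle exactly. Practical GANs for photonic structures use deep networks and stabilized training schemes.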

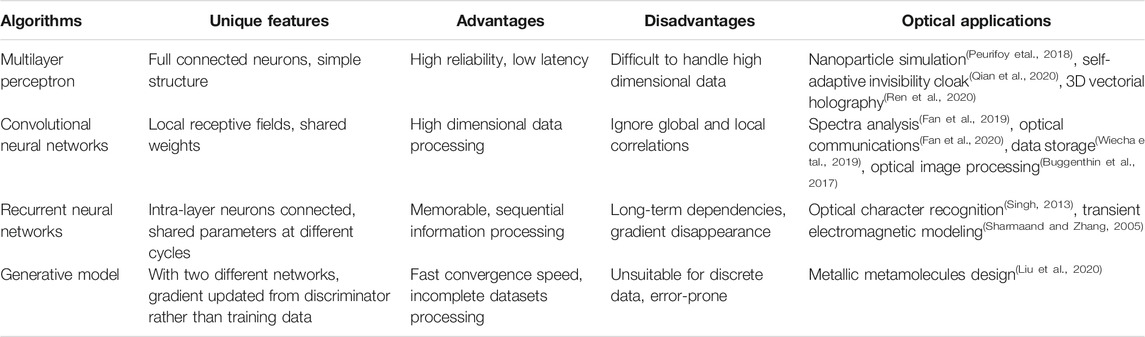

The typical characteristics of the deep learning algorithms discussed above, including MLP, CNNs, RNNs and GANs, are summarized in Table 1. Note that MLP and CNNs have been widely used in photonic device design and optical data analysis. RNNs and generative models, by contrast, remain less explored for photonics applications and merit further research.

Various optimization algorithms have been used to search efficiently for targets in the large design spaces of photonic inverse design. They include classic machine learning approaches (e.g., regularization algorithms, ensemble algorithms, or decision tree algorithms) and traditional optimization approaches (e.g., topology optimization, adjoint methods, or genetic algorithms). Deep learning can usually keep improving its accuracy as more training data become available, whereas additional data have almost no effect on the performance of conventional machine learning approaches. Moreover, transfer learning enables well-trained deep learning models to be applied to other scenarios, making them adaptable and easy to repurpose. In contrast, conventional machine learning models are usually tailored to a single scenario and transfer poorly. Traditional optimization approaches search for the optimal solution iteratively, modifying the search strategy according to intermediate results. This strategy consumes huge computational resources and is difficult to apply to complex designs. Interested readers may refer to a recent review for more information (Ma L. et al., 2021).

3 Photonic Applications of Deep Learning

In this section, we briefly introduce the deep learning-based applications from photonic structure design to data analysis.

3.1 Deep Learning for Photonic Structure Design

In the past decades, photonics has developed rapidly and has shown a strong capability to tailor light-matter interactions. Recently, this field has been revolutionized by data-driven deep learning methods. After training on large numbers of samples, these methods can capture the intricate relationship between photonic structures and their optical responses, circumventing time-consuming iterative numerical simulations in photonic structure design. Moreover, traditional optimization algorithms require a new iterative search for each design target, whereas for deep learning, data collection and network training are one-time costs. Such data-driven models can serve as powerful tools for the on-demand design of photonic devices.

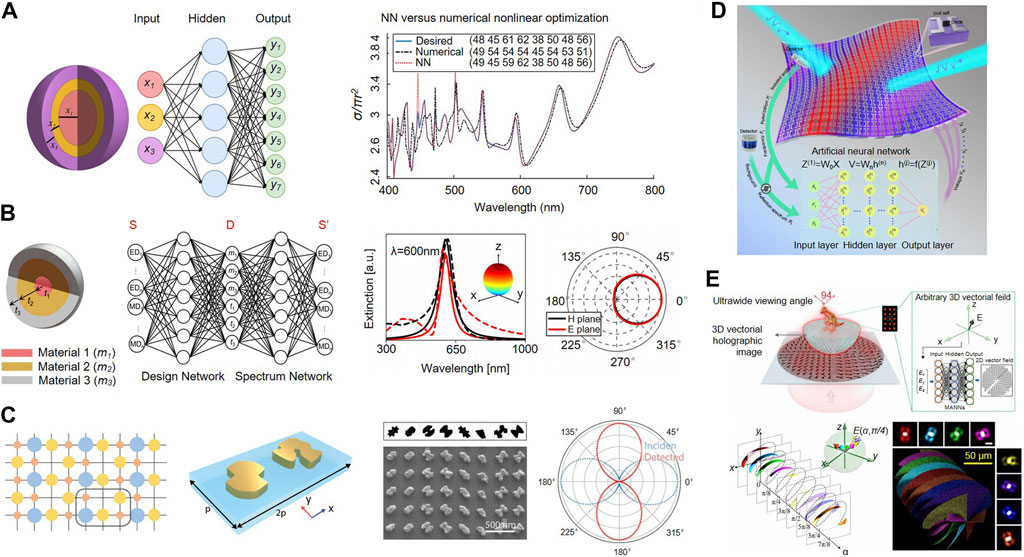

3.1.1 Inverse Design of Optical Nanoparticles

Core-shell nanoparticles can exhibit intriguing phenomena, such as multifrequency superscattering (Qin et al., 2021), directional scattering and Fano-like resonances, but their many degrees of freedom make them difficult to design. Peurifoy et al. (2018) applied an MLP to predict the scattering cross-section of a nanoparticle with silicon dioxide/titanium dioxide multilayered structures, as shown in Figure 3A. The MLP was trained on 50,000 scattering cross-section spectra computed by the transfer matrix method, and it achieved the dual functions of forward modeling and inverse design. Specifically, for forward modeling, the MLP approximated the scattering cross-section of the core-shell nanoparticle from the input layer parameters. For inverse design, given a target scattering spectrum, it rapidly output the corresponding structural parameters of the nanoparticle. The results show that the MLP calculates spectra accurately even when the input structure goes beyond the training data. This suggests that the MLP is not simply fitting the data but discovering underlying patterns in the input and output data. Note that this model architecture cannot perform the inverse design of materials, which restricts the design freedom. So et al. (2019) took a step forward, as shown in Figure 3B. By performing classification and regression at the same time, they inversely designed the optical materials and the structural thicknesses simultaneously. Classification determined which material was used for each layer, and regression predicted the thicknesses. The loss function was defined as a weighted average of the spectrum and design losses. The spectrum loss was the mean squared error between the target spectrum and the response predicted by deep learning, and the design loss was a weighted average of the material and structural losses. As a result, the materials and thicknesses of the core-shell nanoparticle were designed simultaneously and accurately.
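The composite loss described for So et al. (2019) can be written out as a short NumPy sketch. This is our illustration, not the authors' code. The weights `alpha` and `beta` are hypothetical, and so are the use of softmax cross-entropy for the per-layer material classification and the example numbers. The predicted spectrum would come from a forward simulator network, which is not shown.

```python
import numpy as np

def softmax(logits):
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def combined_loss(spec_pred, spec_target, mat_logits, mat_true, thick_pred, thick_true,
                  alpha=0.5, beta=0.5):
    """Weighted spectrum + design loss; alpha and beta are hypothetical weights."""
    # Spectrum loss: MSE between the predicted response and the target spectrum
    spectrum_loss = np.mean((spec_pred - spec_target) ** 2)
    # Material loss: cross-entropy of the per-layer material classification
    probs = softmax(mat_logits)                     # shape (n_layers, n_materials)
    material_loss = -np.mean(np.log(probs[np.arange(len(mat_true)), mat_true]))
    # Structural loss: MSE of the regressed layer thicknesses
    structural_loss = np.mean((thick_pred - thick_true) ** 2)
    design_loss = beta * material_loss + (1.0 - beta) * structural_loss
    return alpha * spectrum_loss + (1.0 - alpha) * design_loss

# Example with 2 layers and 3 candidate materials (all values hypothetical).
mat_true = np.array([0, 2])
loss = combined_loss(spec_pred=np.ones(50), spec_target=np.zeros(50),
                     mat_logits=np.zeros((2, 3)), mat_true=mat_true,
                     thick_pred=np.array([3.0, 5.0]), thick_true=np.array([1.0, 3.0]))
print(loss)   # equals 0.5*1 + 0.5*(0.5*ln(3) + 0.5*4)
```

Because every term is differentiable in the network outputs, one backward pass trains the classification head (materials) and the regression head (thicknesses) together.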

FIGURE 3. Photonic designs enabled by deep learning models. (A) Nanophotonic particle scattering simulation. Reproduced from Peurifoy et al. (2018) with permission from American Association for the Advancement of Science. (B) Simultaneous design of material and structure of nanosphere particles. Reproduced from So et al. (2019) with permission from American Chemical Society. (C) Inverse design of metallic metamolecules. Reproduced from Liu et al. (2020) with permission from the WILEY-VCH Verlag GmbH & Co. KGaA, Weinheim. (D) Self-adaptive invisibility cloak. Reproduced from Qian et al. (2020) with permission from Springer Nature. (E) Optical vectorial hologram design of a 3D-kangaroo projection. Reproduced from Ren et al. (2020) with permission from American Association for the Advancement of Science.

3.1.2 Inverse Design of Metasurfaces

Over the past two decades, the exploration of metasurfaces has led to the discovery of exotic light–matter interactions, such as anomalous deflection (Yu and Capasso, 2014; Wang et al., 2018), asymmetric polarization conversion (Schwanecke et al., 2008; Pfeiffer et al., 2014) and wavefront shaping (Pu et al., 2015; Zhang et al., 2017; Raeker and Grbic, 2019).

Moving from individual nanoparticles to metasurfaces made of collective meta-atoms, the structural degrees of freedom and design flexibility increase drastically. Liu et al. (2020) proposed a hybrid framework combining compositional pattern-producing networks (CPPN) and a cooperative coevolution (CC) algorithm to design metamolecules with significantly improved training efficiency, as shown in Figure 3C. The CPPN, as a generative network, composes high-quality nanostructure patterns, and CC divides the target metamolecule into independent meta-atoms. Metallic metamolecules for arbitrary manipulation of the polarization and wavefront of light were demonstrated with this hybrid framework. This work provides a promising way to automatically construct large-scale metasurfaces with high efficiency. Note that the framework assumes weakly coupled meta-atoms; strong coupling and nonlinear optical effects are expected to be incorporated in future developments.

The three-dimensional (3D) vector nature of optical fields is crucial to understanding light-matter interactions, and it plays a significant role in imaging, holographic optical trapping and high-capacity data storage. Hence, using deep learning to manipulate complex 3D vector optical fields in photonic structures is ripe for exploration, including spin and orbital angular momentum, topology and anisotropic vector fields. For instance, Ren et al. (2020) designed an optical vectorial hologram of a 3D kangaroo projection with an MLP, as shown in Figure 3E. The phase hologram and a 2D vector-field distribution served as the state vector and label vector, respectively, and were used to train the network to reconstruct a stereo optical image. This work achieves lensless reconstruction of a 3D image with an ultra-wide viewing angle of 94° and a high diffraction efficiency of 78%, showing great potential for multiplexed displays and encryption.

Following the pioneering works on static manipulation of optical fields, there is increasing interest in manipulating optical fields dynamically, for example in the design of invisibility cloaks. The invisibility cloak is an intriguing device with wide applications in various fields; however, conventional cloaks cannot adapt to an ever-changing environment. Qian et al. (2020) used an MLP to design a self-adaptive cloak that responds within milliseconds to dynamic incident waves and surrounding environments, as shown in Figure 3D. To this end, the optical response of each element in the metasurface was independently tuned by applying different bias voltages across a loaded varactor diode. With deep learning, the integrated system could capture the intricate relationship among the incident waves, reflection spectra and bias voltages for each individual meta-atom. As a result, an intelligent cloak operating over a bandwidth of 6.7–9.2 GHz was realized. The demonstrated concept could potentially be extended to visible wavelengths by using gate-tunable conducting oxides (e.g., indium tin oxide) (Huang et al., 2016) or phase-change materials (e.g., vanadium dioxide) (Cormier et al., 2017).

Deep learning shows great potential in photonic structure design, material optimization, and even the optimization of entire optical systems. Besides the aforementioned works, it has been used to design various intricate devices, such as multi-mode converters (Liu et al., 2019; Zheng et al., 2021), metagratings (Inampudi and Mosallaei, 2018; Jiang et al., 2019), chiral metamaterials (Ma et al., 2018) and photonic crystals (Hao et al., 2019).

3.2 Deep Learning for Optical Data Analysis

Optical techniques have been widely implemented in various fields. Large volumes of optical data are generated when optical spectroscopy and imaging are applied to medical diagnosis, information storage and optical communication. Conventional analysis of optical data is often based on prior experience and physical intuition, which is time-consuming and error-prone when processing huge amounts of complex optical data, such as optical spectra and images. To tackle this challenge, various deep neural networks have been exploited. In the following, representative works applying deep learning to optical data analysis are introduced.

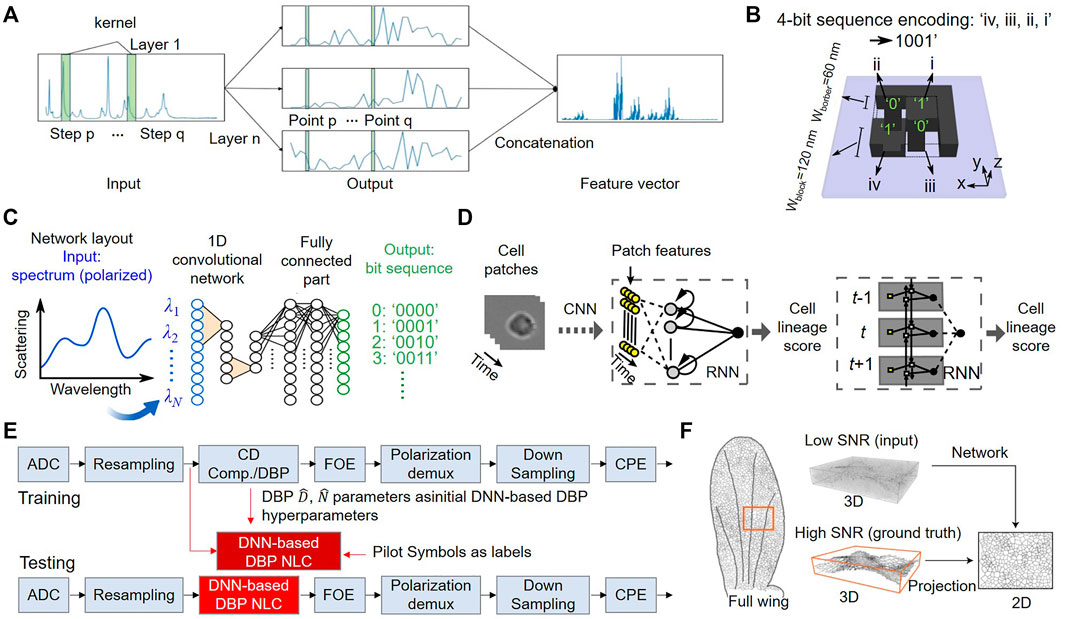

3.2.1 Complex Spectra Analysis

Optical spectroscopy studies the interaction between matter and light as a function of wavelength or frequency. Spectral analysis can reveal the chemical compositions and relative contents of target analytes. Deep learning provides an alternative way to extract the encoded information from massive and complex spectra. For example, Fan et al. (2019) implemented a CNN to analyze Raman spectra and identify the components of mixtures, as shown in Figure 4A. The training datasets contained the spectra of 94 ternary mixtures of methanol, acetonitrile and distilled water. The CNN reached an identification accuracy of up to 99.9% and detected methanol at volume percentages as low as 4%, outperforming conventional models such as k-nearest neighbors. The proposed component identification algorithm is suitable for sensing complex mixtures and has potential for rapid disease diagnosis.

FIGURE 4. Deep learning for optical data analysis. (A) Working principle of CNN used in spectral analysis. Reproduced from Fan et al. (2019) with permission from the Royal Society of Chemistry. (B) Schematic of 4-bit nanostructure geometry. (C) Illustration of CNN applied for information storage. Reproduced from Wiecha et al. (2019) with permission from Springer Nature. (D) Schematic of CNN used for classification of differentiated cells. Reproduced from Buggenthin et al. (2017) with permission from Springer Nature. (E) DNN-based DBP architecture. FOE: frequency-offset estimation, CPE: carrier-phase estimation. Reproduced from Fan et al. (2020) with permission from Springer Nature. (F) The proposed CARE for image restoration. Reproduced from Weigert et al. (2018) with permission from Springer Nature.

Optical memory offers an intriguing solution for “big data” owing to its high information capacity and longevity. However, the diffraction limit of light inevitably restricts the bit density in optical information storage. Wiecha et al. (2019) encoded multiple bits of information in subwavelength dielectric nanostructures and used a CNN and an MLP to read them out, as illustrated in Figure 4B. The bit sequence was extracted by identifying the scattering spectra. The spectral data propagated forward through the network, and the highly activated output neurons indicated the encoded bit sequence (Figure 4C). In this way, they encoded up to 9 bits with quasi-error-free readout accuracy, an information density 40% higher than that of Blu-ray discs. Furthermore, they simplified the readout process by probing the scattering of the nanostructures at only a few wavelengths, i.e., the scattered RGB values in dark-field microscopy images. This study provides a promising route to high-density optical information storage based on planar nanostructures.

3.2.2 Nonlinear Signal Processing

Long-haul optical communications face fundamental bottlenecks, such as fiber Kerr nonlinearity and chromatic dispersion. Deep learning, as a powerful tool, has been applied to fiber nonlinearity compensation in optical communications. Fan et al. (2020) used a deep learning-based digital back-propagation (DBP) architecture for nonlinear optical signal processing, as shown in Figure 4E. For a single-channel 28-GBaud 16-quadrature amplitude modulation (16-QAM) system, the method demonstrated a quality-factor gain of 0.9 dB. The architecture was further extended to polarization-division-multiplexed (PDM) and wavelength-division-multiplexed (WDM) systems. The modified DBP achieved quality-factor gains of 0.6 dB for the single-channel PDM system and 0.25 dB for the WDM system. This work shows that deep learning provides an effective tool for the theoretical understanding of nonlinear fiber transmission.
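The idea behind DBP can be sketched in NumPy with a lossless split-step Fourier model. The received field is propagated through a virtual fiber with negated dispersion and nonlinearity, undoing the steps in reverse order. Everything here is an assumption: normalized units, a toy random field, hypothetical fiber parameters, and one sign convention for the dispersion phase. Learned DBP schemes such as the one discussed above typically make the per-step filters and nonlinear coefficients trainable; that training is not shown.

```python
import numpy as np

def linear_step(A, beta2, dz, omega):
    """Chromatic dispersion applied in the frequency domain (one sign convention)."""
    return np.fft.ifft(np.fft.fft(A) * np.exp(0.5j * beta2 * omega ** 2 * dz))

def nonlinear_step(A, gamma, dz):
    """Kerr self-phase modulation: a power-dependent phase rotation."""
    return A * np.exp(1j * gamma * np.abs(A) ** 2 * dz)

def propagate(A, beta2, gamma, dz, n_steps, omega):
    """Lossless split-step model of the fiber: dispersion, then Kerr phase, per step."""
    for _ in range(n_steps):
        A = nonlinear_step(linear_step(A, beta2, dz, omega), gamma, dz)
    return A

def digital_back_propagation(A, beta2, gamma, dz, n_steps, omega):
    """DBP: undo each step in reverse order with negated beta2 and gamma."""
    for _ in range(n_steps):
        A = linear_step(nonlinear_step(A, -gamma, dz), -beta2, dz, omega)
    return A

rng = np.random.default_rng(0)
n = 256
A0 = (rng.normal(size=n) + 1j * rng.normal(size=n)) / np.sqrt(2)  # toy transmitted field
omega = 2 * np.pi * np.fft.fftfreq(n, d=1.0)      # angular-frequency grid (normalized)
beta2, gamma, dz, n_steps = -2.0, 1.3, 0.1, 50     # hypothetical fiber parameters
rx = propagate(A0, beta2, gamma, dz, n_steps, omega)
rec_dbp = digital_back_propagation(rx, beta2, gamma, dz, n_steps, omega)
rec_cd = digital_back_propagation(rx, beta2, 0.0, dz, n_steps, omega)  # dispersion only
print(np.linalg.norm(rec_dbp - A0), np.linalg.norm(rec_cd - A0))
```

Compensating dispersion alone leaves a large residual error, because the Kerr phase interacts with dispersion along the fiber. Full back-propagation removes it exactly in this noise-free, lossless toy model.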

In addition, deep learning has promoted the development of intelligent systems in fiber-optic communication, such as eye-diagram analyzers. Wang et al. (2017) proposed an intelligent eye-diagram analyzer based on CNNs to achieve modulation format recognition and optical signal-to-noise ratio (OSNR) estimation. Four commonly used optical signal formats were generated in simulation and then detected by photodetectors. They collected 6,400 eye-diagram images from the oscilloscope as the training set, and each image had a 20-bit label vector. During training, the CNN gradually extracted effective features from the input images, and the backpropagation algorithm was used to optimize the kernel parameters. As a result, the estimation accuracy approached 100%.

3.2.3 Optical Images Processing

Optical imaging technologies, such as fluorescence microscopy and super-resolution imaging, are powerful tools in various areas. For example, image classification has been widely used for medical image recognition. Buggenthin et al. (2017) built a classifier combining CNNs and RNNs to directly identify differentiated cells. Large numbers of bright-field images served as input. The convolutional layers extracted local features, and a concatenation layer combined the highest-level spatial features with the cell displacement during differentiation. The extracted features were fed into the RNN to exploit the temporal information of single-cell tracks for cell lineage prediction, as shown in Figure 4D. They achieved label-free identification of cells with differentially expressed lineage-specifying genes, and lineage choice could be detected up to three generations in advance. The model allows cell differentiation processes to be analyzed with high robustness and rapid prediction.

In fluorescence microscopy, the range of observable phenomena is limited by the chemistry of fluorophores and by the maximum photon exposure that the sample can withstand. The combination of deep learning and bio-imaging provides an opportunity to overcome these limitations. For instance, Weigert et al. (2018) proposed a content-aware image restoration (CARE) method to restore microscopy images with enhanced quality. As shown in Figure 4F, the CNN was trained on well-registered pairs of images: a low signal-to-noise ratio (SNR) image as input and a high-SNR image as the target. The CARE networks could restore high-SNR images even when the light dose was reduced 60-fold. Besides, isotropic resolution could be achieved with tenfold fewer axial slices. Impressively, CARE enabled imaging 20 times faster than state-of-the-art reconstruction methods. The proposed CARE networks can extend the range of biological phenomena observable by microscopy and can be adapted automatically to various image contents.

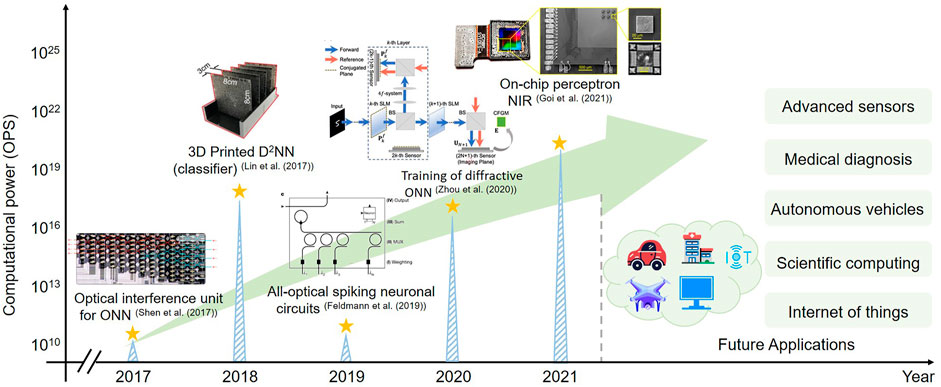

4 Optical Neural Networks

Integrated circuit chips, such as graphics processing units, central processing units and application-specific integrated circuits, are the mainstream hardware carriers for computation (Misra and Saha, 2010). However, conventional electronic computing systems based on the von Neumann architecture are insufficient for training and testing neural networks (Neumann, 2012). This is because they separate memory from processing, so large volumes of data must be shuttled back and forth between the computing unit and the memory. Photons offer the unique ability to realize multiple interconnections and massively parallel calculations at the speed of light (Xu et al., 2021). Thus, optical neural networks (ONNs) built from photonic devices open a new road toward orders-of-magnitude improvements in both computation speed and energy consumption over existing solutions (Cardenas et al., 2009; Yang et al., 2013). ONNs have shown potential for addressing the ever-growing demand for high-speed data analysis in complex applications, such as medical diagnosis, autonomous driving and high-performance computing, as shown in Figure 5. The main platforms for realizing ONNs, discussed below, are photonic integrated circuits and optical diffractive layers.

Recently, Shen et al. (2017) experimentally demonstrated an ONN using a cascaded array of 56 programmable Mach-Zehnder interferometers on an integrated chip. They estimated theoretically that the proposed ONN could perform 10¹¹ N-dimensional matrix-vector multiplications per second, two orders of magnitude faster than state-of-the-art electronic devices. To test its performance, they applied it to vowel recognition and measured an accuracy of 76.7%. They estimated that calibration to reduce thermal cross-talk would raise the accuracy to 90%, comparable to the 91.7% obtained on a conventional 64-bit computer. Note that the optical nonlinearity, a saturable absorber, was only modeled on a computer, and the power dissipated in data movement remains significant in current ONNs. Much work remains in developing optical interconnects and optical computing units before ONNs can realize their full advantages.
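The matrix-vector multiplication at the heart of such an ONN can be illustrated in NumPy. A lossless mesh of Mach-Zehnder interferometers (MZIs) implements a unitary matrix. A general weight matrix can be factored by singular value decomposition into two unitaries and a diagonal scaling. The sketch assumes ideal components, one common 50:50 coupler convention, and a rectangular mesh layout chosen for illustration. The layout is not claimed to match the cited chip. The phases are random rather than programmed to a target, and computing MZI phases for a given unitary is not shown.

```python
import numpy as np

BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)    # ideal 50:50 directional coupler

def mzi(theta, phi):
    """2x2 MZI transfer matrix: phase phi, coupler, internal phase theta, coupler."""
    return BS @ np.diag([np.exp(1j * theta), 1]) @ BS @ np.diag([np.exp(1j * phi), 1])

def embed(T, m, n_modes):
    """Place a 2x2 block acting on waveguides m and m+1 into an n_modes identity."""
    U = np.eye(n_modes, dtype=complex)
    U[m:m + 2, m:m + 2] = T
    return U

def rectangular_mesh(thetas, phis, n_modes):
    """Cascade n_modes*(n_modes-1)/2 MZIs in alternating columns (rectangular layout)."""
    U = np.eye(n_modes, dtype=complex)
    k = 0
    for col in range(n_modes):
        for m in range(col % 2, n_modes - 1, 2):
            U = embed(mzi(thetas[k], phis[k]), m, n_modes) @ U
            k += 1
    return U

rng = np.random.default_rng(0)
N = 4
n_mzi = N * (N - 1) // 2
U = rectangular_mesh(rng.uniform(0, 2 * np.pi, n_mzi), rng.uniform(0, 2 * np.pi, n_mzi), N)
print(np.allclose(U.conj().T @ U, np.eye(N)))     # a lossless mesh is unitary

# A general weight matrix W = U_w @ diag(s) @ Vh_w: two unitaries (MZI meshes)
# around a diagonal attenuation/gain stage. Here the SVD is computed numerically.
W = rng.normal(size=(N, N))                       # hypothetical layer weights
U_w, s, Vh_w = np.linalg.svd(W)
x = rng.normal(size=N)                            # input encoded in optical amplitudes
y = U_w @ (s * (Vh_w @ x))
print(np.allclose(y, W @ x))
```

Once the phases are set, the light performs the whole multiplication in a single pass. The nonlinear activation between layers must come from a separate element; in the cited experiment that element was modeled on a computer.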

In addition to photonic integrated circuits, physical diffractive layers also provide a way to implement neural network algorithms. Mathematically, diffraction from a planar structure is a convolution of the modulated input field with the free-space propagation function. Diffractive layers can therefore naturally serve as the layers of an ONN. Lin et al. (2018) pioneered the all-optical diffractive deep neural network (D2NN) architecture. Their framework consisted of multiple 3D-printed diffractive surfaces whose parameters were designed on a computer. They demonstrated that trained D2NNs could automatically classify handwritten digits (accuracy of 93.39%) and a more complex image dataset (Fashion MNIST, accuracy of 86.6%) with 10 diffractive layers and 0.4 million neurons. The D2NN operates at the speed of light and can be readily scaled up to billions of neurons and connections (Lin et al., 2018).
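The statement that diffraction is a convolution can be made concrete with a forward model. Propagation over a distance z multiplies the field's angular spectrum by exp(i k_z z), with k_z = 2π√(1/λ² − f_x² − f_y²), which is equivalent to convolving the field with the free-space impulse response; each diffractive layer then multiplies the field pixel by pixel by its transmission coefficient. Lin et al. (2018) formulated propagation with the Rayleigh-Sommerfeld diffraction integral; this sketch swaps in the closely related angular spectrum method, assumes phase-only layers, and uses hypothetical normalized parameters (wavelength, pixel pitch, layer spacing, input pattern and detector regions). Training of the phase masks, which Lin et al. carried out on a computer, is omitted: the masks here are random.

```python
import numpy as np

def angular_spectrum_propagate(u, wavelength, dx, z):
    """Propagate a sampled complex field u (N x N, pitch dx) over distance z.
    Multiplying the FFT by the transfer function H equals convolving u with the
    free-space impulse response (circular convolution, i.e. periodic boundaries)."""
    n = u.shape[0]
    fx = np.fft.fftfreq(n, d=dx)
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)   # evanescent components dropped
    return np.fft.ifft2(np.fft.fft2(u) * H)

def d2nn_forward(u_in, phase_masks, wavelength, dx, z):
    """Alternate free-space propagation and phase-only layers; return detector intensity."""
    u = u_in.astype(complex)
    for phase in phase_masks:
        u = angular_spectrum_propagate(u, wavelength, dx, z)
        u = u * np.exp(1j * phase)          # each pixel acts as a 'neuron' with a phase weight
    u = angular_spectrum_propagate(u, wavelength, dx, z)   # last layer -> detector plane
    return np.abs(u) ** 2

def classify(intensity, regions):
    """Predicted class = index of the detector region with the largest integrated intensity."""
    return int(np.argmax([intensity[r].sum() for r in regions]))

rng = np.random.default_rng(0)
N = 64
u_in = np.zeros((N, N))
u_in[24:40, 28:36] = 1.0                                # hypothetical input pattern
masks = [rng.uniform(0, 2 * np.pi, (N, N)) for _ in range(5)]   # untrained, random layers
I_out = d2nn_forward(u_in, masks, wavelength=1.0, dx=1.0, z=40.0)
regions = [(slice(8, 20), slice(8 + 16 * k, 20 + 16 * k)) for k in range(3)]
print(classify(I_out, regions))                         # some class index in {0, 1, 2}
```

Because the pixel pitch equals the wavelength in this example, every spatial frequency on the grid is propagating, so the phase-only model conserves optical power. A finer pitch would make some components evanescent, and a practical model would also zero-pad the grid to avoid the wrap-around implied by the FFT.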

5 Outlook and Conclusion

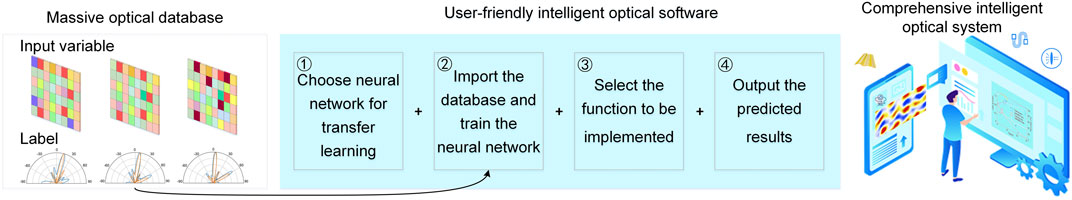

Deep learning usually requires large amounts of training data. However, collecting massive databases through either physical simulations or experimental measurements is often impractical. There are two main approaches to this problem. First, transfer learning migrates the knowledge that a neural network has learned from one physical process to other, similar cases (Torrey and Shavlik, 2010). Specifically, a neural network pre-trained on a high-quality dataset can generalize well and solve new problems with small datasets, which substantially reduces the data-collection effort. Second, the burden of massive data collection can be relieved by combining deep learning models with basic physical rules. For example, deep learning can be used as an intermediate step to efficiently solve Maxwell’s equations (So et al., 2020), rather than to directly map optical structures to their properties.
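The first approach, reusing a network pre-trained on a large dataset, can be sketched in its simplest "feature extraction" form: train a small network on a data-rich source task, freeze its hidden layer, and refit only the output layer on a few target samples. The two tasks below (a sine-shaped response curve and a phase-shifted version of it) and all function names and hyperparameters are hypothetical stand-ins for, e.g., simulated responses of related photonic structures; real applications would typically fine-tune deeper networks with a deep learning framework.

```python
import numpy as np

def features(x, W1, b1):
    """Hidden-layer (tanh) features of a one-hidden-layer MLP."""
    return np.tanh(x @ W1 + b1)

def pretrain(x, y, hidden=8, lr=0.05, steps=3000, seed=0):
    """Train the MLP on the data-rich source task by full-batch gradient descent
    on L = 0.5 * mean squared error."""
    r = np.random.default_rng(seed)
    W1 = r.normal(0, 1.0, (x.shape[1], hidden)); b1 = np.zeros(hidden)
    W2 = r.normal(0, 0.1, (hidden, y.shape[1])); b2 = np.zeros(y.shape[1])
    n = len(x)
    for _ in range(steps):
        h = features(x, W1, b1)
        err = h @ W2 + b2 - y                  # residuals
        gW2 = h.T @ err / n; gb2 = err.mean(axis=0)
        dh = (err @ W2.T) * (1 - h**2)         # backpropagate through tanh
        gW1 = x.T @ dh / n; gb1 = dh.mean(axis=0)
        W1 -= lr * gW1; b1 -= lr * gb1
        W2 -= lr * gW2; b2 -= lr * gb2
    return W1, b1, W2, b2

def fine_tune_head(x, y, W1, b1):
    """Freeze the pre-trained features and refit only the output layer by least squares."""
    h = features(x, W1, b1)
    H = np.hstack([h, np.ones((len(h), 1))])   # append a bias column
    coef, *_ = np.linalg.lstsq(H, y, rcond=None)
    return coef[:-1], coef[-1]

rng = np.random.default_rng(0)
# Hypothetical source task with many samples
x_src = rng.uniform(-1, 1, (500, 1)); y_src = np.sin(3 * x_src)
W1, b1, W2, b2 = pretrain(x_src, y_src)
# Hypothetical related target task with only 20 samples
x_tgt = rng.uniform(-1, 1, (20, 1)); y_tgt = np.sin(3 * x_tgt + 0.3)
W2_new, b2_new = fine_tune_head(x_tgt, y_tgt, W1, b1)

def mse(W2_, b2_):
    """Mean squared error on the small target dataset, using the frozen features."""
    return np.mean((features(x_tgt, W1, b1) @ W2_ + b2_ - y_tgt) ** 2)

print(mse(W2_new, b2_new) <= mse(W2, b2) + 1e-12)   # True
```

Only 9 parameters are refit from 20 target samples, which is what makes the small dataset sufficient; the least-squares head can never do worse on the target training data than the original head, since the latter is one of the candidates it minimizes over.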

In the past few years, intelligent photonics has made great progress, benefiting from interdisciplinary collaborations between researchers in computer science and physical optics. To relieve researchers from tedious and complex algorithm programming, a user-friendly system is in high demand. Such a system should contain two basic parts: open-source resources and a user-friendly interface, as shown in Figure 6. Inspired by the computer-science community, researchers are encouraged to share their datasets and neural networks to establish a comprehensive open-source optics community. Furthermore, abundant open-source networks enable transfer learning to be applied to various problems. The basic idea is to migrate data characteristics from related domains to improve learning on the target task. Then, when a deep learning network needs to be trained to solve a specific photonic problem, we can directly call the relevant databases and well-trained neural networks from the open-source resources, which avoids building data collections from scratch.

In the context of photonic structures, people are interested not only in specific designs and their performance, but also in the general mechanisms or principles that lead to their functionalities. Neural networks are considered black-box models, which fit the training sets to directly provide the expected results. Researchers have devoted relentless effort to studying the interpretability of neural networks. For instance, Zhou et al. (2016) showed that by using global average pooling, CNNs could retain a remarkable localization ability, exposing the implicit attention of CNNs trained on image-level labels. This localization ability could potentially be transferred to provide physical interpretability for photonic device design.
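Class activation mapping (CAM), the technique of Zhou et al. (2016), follows directly from the global-average-pooling design. If the score of class c is y_c = Σ_k w_k^c · mean_{x,y} f_k(x, y), where f_k are the last convolutional feature maps and w_k^c the classifier weights, then the map M_c(x, y) = Σ_k w_k^c f_k(x, y) shows how much each spatial location contributes to that score, and its spatial mean equals y_c. The NumPy sketch below computes CAM from given feature maps and weights; the random arrays are placeholders for the activations of a hypothetical CNN (for example, one taking images of photonic structures as input), and the classifier bias is omitted.

```python
import numpy as np

def class_activation_map(feature_maps, fc_weights, class_idx):
    """CAM_c(x, y) = sum_k w_k^c f_k(x, y).
    feature_maps: (K, H, W) last-conv activations; fc_weights: (C, K)."""
    return np.tensordot(fc_weights[class_idx], feature_maps, axes=([0], [0]))

def gap_scores(feature_maps, fc_weights):
    """Class scores without bias: global average pooling, then a linear layer."""
    pooled = feature_maps.mean(axis=(1, 2))    # shape (K,)
    return fc_weights @ pooled                 # shape (C,)

rng = np.random.default_rng(0)
feats = np.maximum(rng.normal(size=(8, 7, 7)), 0.0)   # placeholder ReLU activations, K = 8
weights = rng.normal(size=(3, 8))                     # placeholder classifier, C = 3
scores = gap_scores(feats, weights)
c = int(np.argmax(scores))
cam = class_activation_map(feats, weights, c)
print(cam.shape)                           # (7, 7)
print(np.isclose(cam.mean(), scores[c]))   # True
```

In practice the map is upsampled to the input resolution and overlaid on the input image to highlight the regions the network relies on.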

In this review, we have surveyed the recent development of deep learning in photonics, including photonic structure design and optical data analysis. Optical neural networks are also emerging as an alternative to conventional electronic-circuit architectures for deep learning, offering high computational power and low energy consumption. We have witnessed fruitful interactions between deep learning and photonics, and look forward to more exciting work in this interdisciplinary field.

Author Contributions

Conceptualization, D-QY and J-hC; methodology, BD and BW; original draft preparation, BD, BW and J-hC; writing-review and editing, D-QY, J-hC and HC. All authors have read and agreed to the submitted version of the manuscript.

Funding

The authors would like to thank the support from National Natural Science Foundation of China (11974058, 62005231); Beijing Nova Program (Z201100006820125) from Beijing Municipal Science and Technology Commission; Beijing Natural Science Foundation (Z210004); State Key Laboratory of Information Photonics and Optical Communications (IPOC2021ZT01), BUPT, China; Fundamental Research Funds for the Central Universities (20720200074, 20720210045); Guangdong Basic and Applied Basic Research Foundation (2021A1515012199).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbeel, P., Coates, A., and Ng, A. Y. (2010). Autonomous Helicopter Aerobatics through Apprenticeship Learning. Int. J. Robotics Res. 29, 1608–1639. doi:10.1177/0278364910371999

Anjit, T. A., Benny, R., Cherian, P., and Mythili, P. (2021). Non-iterative Microwave Imaging Solutions for Inverse Problems Using Deep Learning. Pier M 102, 53–63. doi:10.2528/pierm21021304

Asano, T., and Noda, S. (2018). Optimization of Photonic crystal Nanocavities Based on Deep Learning. Opt. Express 26, 32704–32717. doi:10.1364/OE.26.032704

Barbastathis, G., Ozcan, A., and Situ, G. (2019). On the Use of Deep Learning for Computational Imaging. Optica 6, 921–943. doi:10.1364/OPTICA.6.000921

Bigio, I., and Sergio, F. (2016). Quantitative Biomedical Optics: Theory, Methods, and Applications. Cambridge: Cambridge University Press.

Buggenthin, F., Buettner, F., Hoppe, P. S., Endele, M., Kroiss, M., Strasser, M., et al. (2017). Prospective Identification of Hematopoietic Lineage Choice by Deep Learning. Nat. Methods 14, 403–406. doi:10.1038/nmeth.4182

Cardenas, J., Poitras, C. B., Robinson, J. T., Preston, K., Chen, L., and Lipson, M. (2009). Low Loss Etchless Silicon Photonic Waveguides. Opt. Express 17, 4752–4757. doi:10.1364/OE.17.004752

Chan, S., and Siegel, E. L. (2019). Will Machine Learning End the Viability of Radiology as a Thriving Medical Specialty? Bjr 92, 20180416. doi:10.1259/bjr.20180416

Chen, X., Wei, Z., Li, M., and Rocca, P. (2020). A Review of Deep Learning Approaches for Inverse Scattering Problems (Invited Review). Pier 167, 67–81. doi:10.2528/PIER20030705

Cormier, P., Son, T. V., Thibodeau, J., Doucet, A., Truong, V.-V., and Haché, A. (2017). Vanadium Dioxide as a Material to Control Light Polarization in the Visible and Near Infrared. Opt. Commun. 382, 80–85. doi:10.1016/j.optcom.2016.07.070

Dong, P., Chen, Y.-K., Duan, G.-H., and Neilson, D. T. (2014). Silicon Photonic Devices and Integrated Circuits. Nanophotonics 3, 215–228. doi:10.1515/nanoph-2013-0023

Fan, Q., Zhou, G., Gui, T., Lu, C., and Lau, A. P. T. (2020). Advancing Theoretical Understanding and Practical Performance of Signal Processing for Nonlinear Optical Communications through Machine Learning. Nat. Commun. 11, 3694. doi:10.1038/s41467-020-17516-7

Fan, X., Ming, W., Zeng, H., Zhang, Z., and Lu, H. (2019). Deep Learning-Based Component Identification for the Raman Spectra of Mixtures. Analyst 144, 1789–1798. doi:10.1039/C8AN02212G

Fang, J., Swain, A., Unni, R., and Zheng, Y. (2021). Decoding Optical Data with Machine Learning. Laser Photon. Rev. 15, 2000422. doi:10.1002/lpor.202000422

Fu, J., Zheng, H., and Mei, T. (2017). “Look Closer to See Better: Recurrent Attention Convolutional Neural Network for fine-grained Image Recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, July 2017, 4438–4446.

Ghosh, K., Stuke, A., Todorović, M., Jørgensen, P. B., Schmidt, M. N., Vehtari, A., et al. (2019). Deep Learning Spectroscopy: Neural Networks for Molecular Excitation Spectra. Adv. Sci. 6, 1801367. doi:10.1002/advs.201801367

Goi, E., Chen, X., Zhang, Q., Cumming, B. P., Schoenhardt, S., Luan, H., et al. (2021). Nanoprinted High-Neuron-Density Optical Linear Perceptrons Performing Near-Infrared Inference on a CMOS Chip. Light Sci. Appl. 10, 1–11. doi:10.1038/s41377-021-00483-z

Goodfellow, I. J., Bengio, Y., and Courville, A. (2016). Deep Learning. Cambridge, Massachusetts: MIT Press.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2014). Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 27 [arXiv:1406.2661].

Guo, T., Dong, J., Li, H., and Gao, Y. (2017). “Simple Convolutional Neural Network on Image Classification,” in 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), Beijing, China, March 2017, 721–724.

Hao, J., Zheng, L., Yang, D., and Guo, Y. (2019). “Inverse Design of Photonic Crystal Nanobeam Cavity Structure via Deep Neural Network,” in Proceedings of the Asia Communications and Photonics Conference, paper M4A.296 (Optical Society of America), Chengdu, China, November 2019, 1597–1600.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep Residual Learning for Image Recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, June 2016, 770–778.

Hijazi, S., Kumar, R., and Rowen, C. (2015). Using Convolutional Neural Networks for Image Recognition. San Jose, CA, USA: Cadence Design Systems Inc., 1–12.

Hochreiter, S., and Schmidhuber, J. (1997). Long Short-Term Memory. Neural Comput. 9, 1735–1780. doi:10.1162/neco.1997.9.8.1735

Hu, J., Shen, L., and Sun, G. (2018). “Squeeze-and-excitation Networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, June 2018, 7132–7141.

Huang, Y.-W., Lee, H. W. H., Sokhoyan, R., Pala, R. A., Thyagarajan, K., Han, S., et al. (2016). Gate-tunable Conducting Oxide Metasurfaces. Nano Lett. 16, 5319–5325. doi:10.1021/acs.nanolett.6b00555

Inampudi, S., and Mosallaei, H. (2018). Neural Network Based Design of Metagratings. Appl. Phys. Lett. 112, 241102. doi:10.1063/1.5033327

Jiang, J., Chen, M., and Fan, J. A. (2021). Deep Neural Networks for the Evaluation and Design of Photonic Devices. Nat. Rev. Mater. 6, 679–700. doi:10.1038/s41578-020-00260-1

Jiang, J., Sell, D., Hoyer, S., Hickey, J., Yang, J., and Fan, J. A. (2019). Free-form Diffractive Metagrating Design Based on Generative Adversarial Networks. ACS Nano 13, 8872–8878. doi:10.1021/acsnano.9b02371

Karmakar, G., Chowdhury, A., Das, R., Kamruzzaman, J., and Islam, S. (2021). Assessing Trust Level of a Driverless Car Using Deep Learning. IEEE Trans. Intell. Transport. Syst. 22, 4457–4466. doi:10.1109/TITS.2021.3059261

Khan, F. N., Fan, Q., Lu, C., and Lau, A. P. T. (2019). An Optical Communication's Perspective on Machine Learning and its Applications. J. Lightwave Technol. 37, 493–516. doi:10.1109/JLT.2019.2897313

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). Imagenet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105. doi:10.1145/3065386

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep Learning. Nature 521, 436–444. doi:10.1038/nature14539

Lecun, Y., Bottou, L., Bengio, Y., and Haffner, P. (1998). Gradient-based Learning Applied to Document Recognition. Proc. IEEE 86, 2278–2324. doi:10.1109/5.726791

Li, J., Li, Y., Cen, Y., Zhang, C., Luo, T., and Yang, D. (2020). Applications of Neural Networks for Spectrum Prediction and Inverse Design in the Terahertz Band. IEEE Photon. J. 12, 1–9. doi:10.1109/JPHOT.2020.3022053

Li, Q., Cai, W., Wang, X., Zhou, Y., Feng, D. D., and Chen, M. (2014). “Medical Image Classification with Convolutional Neural Network,” in Proceedings of the 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, December 2014, 844–848.

Liao, Z., Zhang, R., He, S., Zeng, D., Wang, J., and Kim, H.-J. (2019). Deep Learning-Based Data Storage for Low Latency in Data center Networks. IEEE Access 7, 26411–26417. doi:10.1109/ACCESS.2019.2901742

Lin, X., Rivenson, Y., Yardimci, N. T., Veli, M., Luo, Y., Jarrahi, M., et al. (2018). All-optical Machine Learning Using Diffractive Deep Neural Networks. Science 361, 1004–1008. doi:10.1126/science.aat8084

Liu, D., Tan, Y., Khoram, E., and Yu, Z. (2018). Training Deep Neural Networks for the Inverse Design of Nanophotonic Structures. ACS Photon. 5, 1365–1369. doi:10.1021/acsphotonics.7b01377

Liu, Y., Xu, K., Wang, S., Shen, W., Xie, H., Wang, Y., et al. (2019). Arbitrarily Routed Mode-Division Multiplexed Photonic Circuits for Dense Integration. Nat. Commun. 10, 3263. doi:10.1038/s41467-019-11196-8

Liu, Z., Zhu, D., Lee, K. T., Kim, A. S., Raju, L., and Cai, W. (2020). Compounding Meta‐Atoms into Metamolecules with Hybrid Artificial Intelligence Techniques. Adv. Mater. 32, 1904790. doi:10.1002/adma.201904790

Lundervold, A. S., and Lundervold, A. (2019). An Overview of Deep Learning in Medical Imaging Focusing on MRI. Z. für Medizinische Physik 29, 102–127. doi:10.1016/j.zemedi.2018.11.002

Luongo, F., Hakim, R., Nguyen, J. H., Anandkumar, A., and Hung, A. J. (2021). Deep Learning-Based Computer Vision to Recognize and Classify Suturing Gestures in Robot-Assisted Surgery. Surgery 169, 1240–1244. doi:10.1016/j.surg.2020.08.016

Ma, L., Li, J., Liu, Z., Zhang, Y., Zhang, N., Zheng, S., et al. (2021a). Intelligent Algorithms: New Avenues for Designing Nanophotonic Devices. Chin. Opt. Lett. 19, 011301. doi:10.3788/COL202119.011301

Ma, W., Cheng, F., and Liu, Y. (2018). Deep-learning-enabled On-Demand Design of Chiral Metamaterials. ACS Nano 12, 6326–6334. doi:10.1021/acsnano.8b03569

Ma, W., Cheng, F., Xu, Y., Wen, Q., and Liu, Y. (2019). Probabilistic Representation and Inverse Design of Metamaterials Based on a Deep Generative Model with Semi‐Supervised Learning Strategy. Adv. Mater. 31, 1901111. doi:10.1002/adma.201901111

Ma, W., Liu, Z., Kudyshev, Z. A., Boltasseva, A., Cai, W., and Liu, Y. (2021b). Deep Learning for the Design of Photonic Structures. Nat. Photon. 15, 77–90. doi:10.1038/s41566-020-0685-y

Malkiel, I., Mrejen, M., Nagler, A., Arieli, U., Wolf, L., and Suchowski, H. (2018). Plasmonic Nanostructure Design and Characterization via Deep Learning. Light Sci. Appl. 7, 60. doi:10.1038/s41377-018-0060-7

Misra, J., and Saha, I. (2010). Artificial Neural Networks in Hardware: a Survey of Two Decades of Progress. Neurocomputing 74, 239–255. doi:10.1016/j.neucom.2010.03.021

Moen, E., Bannon, D., Kudo, T., Graf, W., Covert, M., and Van Valen, D. (2019). Deep Learning for Cellular Image Analysis. Nat. Methods 16, 1233–1246. doi:10.1038/s41592-019-0403-1

Ntziachristos, V. (2010). Going Deeper Than Microscopy: the Optical Imaging Frontier in Biology. Nat. Methods 7, 603–614. doi:10.1038/nmeth.1483

Peurifoy, J., Shen, Y., Jing, L., Yang, Y., Cano-Renteria, F., DeLacy, B. G., et al. (2018). Nanophotonic Particle Simulation and Inverse Design Using Artificial Neural Networks. Sci. Adv. 4, eaar4206. doi:10.1126/sciadv.aar4206

Pfeiffer, C., Zhang, C., Ray, V., Guo, L. J., and Grbic, A. (2014). High Performance Bianisotropic Metasurfaces: Asymmetric Transmission of Light. Phys. Rev. Lett. 113, 023902. doi:10.1103/PhysRevLett.113.023902

Popel, M., Tomkova, M., Tomek, J., Kaiser, Ł., Uszkoreit, J., Bojar, O., et al. (2020). Transforming Machine Translation: a Deep Learning System Reaches News Translation Quality Comparable to Human Professionals. Nat. Commun. 11, 4381. doi:10.1038/s41467-020-18073-9

Pu, M., Li, X., Ma, X., Wang, Y., Zhao, Z., Wang, C., et al. (2015). Catenary Optics for Achromatic Generation of Perfect Optical Angular Momentum. Sci. Adv. 1, e1500396. doi:10.1126/sciadv.1500396

Qian, C., Zheng, B., Shen, Y., Jing, L., Li, E., Shen, L., et al. (2020). Deep-learning-enabled Self-Adaptive Microwave Cloak without Human Intervention. Nat. Photon. 14, 383–390. doi:10.1038/s41566-020-0604-2

Qin, Y., Xu, J., Liu, Y., and Chen, H. (2021). Multifrequency Superscattering Pattern Shaping. Chin. Opt. Lett. 19, 123601. doi:10.3788/col202119.123601

Raeker, B. O., and Grbic, A. (2019). Compound Metaoptics for Amplitude and Phase Control of Wave Fronts. Phys. Rev. Lett. 122, 113901. doi:10.1103/PhysRevLett.122.113901

Ravì, D., Wong, C., Deligianni, F., Berthelot, M., Andreu-Perez, J., Lo, B., et al. (2017). Deep Learning for Health Informatics. IEEE J. Biomed. Health Inform. 21, 4–21. doi:10.1109/JBHI.2016.2636665

Ren, H., Shao, W., Li, Y., Salim, F., and Gu, M. (2020). Three-dimensional Vectorial Holography Based on Machine Learning Inverse Design. Sci. Adv. 6, eaaz4261. doi:10.1126/sciadv.aaz4261

Rickard, J. J. S., Di-Pietro, V., Smith, D. J., Davies, D. J., Belli, A., and Oppenheimer, P. G. (2020). Rapid Optofluidic Detection of Biomarkers for Traumatic Brain Injury via Surface-Enhanced Raman Spectroscopy. Nat. Biomed. Eng. 4, 610–623. doi:10.1038/s41551-019-0510-4

Rivenson, Y., Wang, H., Wei, Z., de Haan, K., Zhang, Y., Wu, Y., et al. (2019). Virtual Histological Staining of Unlabelled Tissue-Autofluorescence Images via Deep Learning. Nat. Biomed. Eng. 3, 466–477. doi:10.1038/s41551-019-0362-y

Schwanecke, A. S., Fedotov, V. A., Khardikov, V. V., Prosvirnin, S. L., Chen, Y., and Zheludev, N. I. (2008). Nanostructured Metal Film with Asymmetric Optical Transmission. Nano Lett. 8, 2940–2943. doi:10.1021/nl801794d

Sharma, H., and Zhang, Q. (2005). “Transient Electromagnetic Modeling Using Recurrent Neural Networks,” in Proceedings of the IEEE MTT-S International Microwave Symposium Digest, Long Beach, CA, USA, June 2005, 1597–1600.

Shen, Y., Harris, N. C., Skirlo, S., Prabhu, M., Baehr-Jones, T., Hochberg, M., et al. (2017). Deep Learning with Coherent Nanophotonic Circuits. Nat. Photon 11, 441–446. doi:10.1038/nphoton.2017.93

Simonyan, K., and Zisserman, A. (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv preprint arXiv:1409.1556.

Singh, S. (2013). Optical Character Recognition Techniques: a Survey. J. emerging Trends Comput. Inf. Sci. 4, 545–550. doi:10.1142/S0218001491000041

So, S., Badloe, T., Noh, J., Bravo-Abad, J., and Rho, J. (2020). Deep Learning Enabled Inverse Design in Nanophotonics. Nanophotonics 9, 1041–1057. doi:10.1515/nanoph-2019-0474

So, S., Mun, J., and Rho, J. (2019). Simultaneous Inverse Design of Materials and Structures via Deep Learning: Demonstration of Dipole Resonance Engineering Using Core-Shell Nanoparticles. ACS Appl. Mater. Inter. 11, 24264–24268. doi:10.1021/acsami.9b05857

Sui, X., Wu, Q., Liu, J., Chen, Q., and Gu, G. (2020). A Review of Optical Neural Networks. IEEE Access 8, 70773–70783. doi:10.1109/ACCESS.2020.2987333

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., et al. (2015). “Going Deeper with Convolutions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, June 2015, 1–9.

Török, P., and Kao, F. J. (2007). Optical Imaging and Microscopy: Techniques and Advanced Systems. New York: Springer.

Torrey, L., and Shavlik, J. (2010). “Transfer Learning,” in Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques (IGI Global). Editors E. S. Olivas, J. D. M. Guerrero, M. M. Sober, J. R. M. Benedito, and A. J. S. Lopez (Hershey, PA: IGI Publishing), 242–264. doi:10.4018/978-1-60566-766-9.ch011

Vukusic, P., and Sambles, J. R. (2003). Photonic Structures in Biology. Nature 424, 852–855. doi:10.1038/nature01941

Wang, D., Zhang, M., Li, Z., Li, J., Fu, M., Cui, Y., et al. (2017). Modulation Format Recognition and OSNR Estimation Using CNN-Based Deep Learning. IEEE Photon. Technol. Lett. 29, 1667–1670. doi:10.1109/LPT.2017.2742553

Wang, S., Wu, P. C., Su, V.-C., Lai, Y.-C., Chen, M.-K., Kuo, H. Y., et al. (2018). A Broadband Achromatic Metalens in the Visible. Nat. Nanotech 13, 227–232. doi:10.1038/s41565-017-0052-4

Weigert, M., Schmidt, U., Boothe, T., Müller, A., Dibrov, A., Jain, A., et al. (2018). Content-aware Image Restoration: Pushing the Limits of Fluorescence Microscopy. Nat. Methods 15, 1090–1097. doi:10.1038/s41592-018-0216-7

Wiecha, P. R., Lecestre, A., Mallet, N., and Larrieu, G. (2019). Pushing the Limits of Optical Information Storage Using Deep Learning. Nat. Nanotechnol. 14, 237–244. doi:10.1038/s41565-018-0346-1

Wiecha, P. R., and Muskens, O. L. (2019). Deep Learning Meets Nanophotonics: a Generalized Accurate Predictor for Near fields and Far fields of Arbitrary 3D Nanostructures. Nano Lett. 20, 329–338. doi:10.1021/acs.nanolett.9b03971

Wu, B., Hao, Z.-L., Chen, J.-H., Bao, Q.-L., Liu, Y.-N., and Chen, H.-Y. (2022). Total Transmission from Deep Learning Designs. J. Electron. Sci. Technol. 20, 100146. doi:10.1016/j.jnlest.2021.100146

Wu, Y., Schuster, M., Chen, Z., Le, Q. V., Norouzi, M., Macherey, W., et al. (2016). Google’s Neural Machine Translation System: Bridging the gap between Human and Machine Translation. arXiv. preprint arXiv:1609.08144.

Xu, X., Tan, M., Corcoran, B., Wu, J., Boes, A., Nguyen, T. G., et al. (2021). 11 TOPS Photonic Convolutional Accelerator for Optical Neural Networks. Nature 589, 44–51. doi:10.1038/s41586-020-03063-0

Yang, L., Zhang, L., and Ji, R. (2013). On-chip Optical Matrix-Vector Multiplier. Proc. SPIE 8855. doi:10.1117/12.2028585

Yoon, G., Lee, D., Nam, K. T., and Rho, J. (2018). Pragmatic Metasurface Hologram at Visible Wavelength: the Balance between Diffraction Efficiency and Fabrication Compatibility. ACS Photon. 5, 1643–1647. doi:10.1021/acsphotonics.7b01044

Yu, N., and Capasso, F. (2014). Flat Optics with Designer Metasurfaces. Nat. Mater 13, 139–150. doi:10.1038/nmat3839

Zeiler, M. D., and Fergus, R. (2014). “Visualizing and Understanding Convolutional Networks,” in European Conference on Computer Vision (Springer), 818–833. doi:10.1007/978-3-319-10590-1_53

Zhang, F., Pu, M., Li, X., Gao, P., Ma, X., Luo, J., et al. (2017). All-dielectric Metasurfaces for Simultaneous Giant Circular Asymmetric Transmission and Wavefront Shaping Based on Asymmetric Photonic Spin-Orbit Interactions. Adv. Funct. Mater. 27, 1704295. doi:10.1002/adfm.201704295

Zhang, X., and Liu, Z. (2008). Superlenses to Overcome the Diffraction Limit. Nat. Mater 7, 435–441. doi:10.1038/nmat2141

Zheng, Z.-h., Chen, Y., Chen, H.-y., and Chen, J.-H. (2021). Ultra-compact Reconfigurable Device for Mode Conversion and Dual-Mode DPSK Demodulation via Inverse Design. Opt. Express 29, 17718–17725. doi:10.1364/OE.420874

Keywords: optics and photonics, deep learning, photonic structure design, optical data analysis, optical neural networks

Citation: Duan B, Wu B, Chen J-h, Chen H and Yang D-Q (2022) Deep Learning for Photonic Design and Analysis: Principles and Applications. Front. Mater. 8:791296. doi: 10.3389/fmats.2021.791296

Received: 08 October 2021; Accepted: 08 December 2021;

Published: 06 January 2022.

Edited by:

Lingling Huang, Beijing Institute of Technology, China

Copyright © 2022 Duan, Wu, Chen, Chen and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Da-Quan Yang, ydq@bupt.edu.cn; Jin-hui Chen, jimchen@xmu.edu.cn

†These authors have contributed equally to this work

Bing Duan

Bei Wu2†

Jin-hui Chen

Huanyang Chen