Welding robot automation technology based on digital twin

- School of Mechanical Engineering, Henan Institute of Technology, Xinxiang, China

In the era of intelligence and automation, robots play a significant role in automated welding, improving both efficiency and precision. However, challenges persist in complex, high-precision scenarios, such as low welding planning efficiency and inaccurate weld seam defect detection. Therefore, a welding tracking model based on digital twin technology and the kernel correlation filter (KCF) algorithm is proposed. The KCF algorithm first trains a filter on the first frame of the collected images: it locates the target region, extracts histogram of oriented gradients features from image blocks, and trains the filter by ridge regression, which enables the welding trajectory to be tracked. In addition, an intelligent weld seam detection model is introduced that extracts features with a backbone network, fuses them through a feature pyramid, and assesses weld seam quality with a classification head. In tracking tests, the proposed model achieves a maximum tracking error of −0.232 mm and a mean absolute tracking error of 0.08 mm, outperforming the comparison models. In tracking accuracy, the proposed model converges fastest and reaches a precision of 0.845, again surpassing the other models. In weld seam detection, the proposed model achieves a detection accuracy of 97.35% with the lowest converged loss, 0.023. In weld penetration depth error detection, the proposed model keeps its error within −0.06 mm, outperforming the other two models. These results highlight the strong detection capability of the proposed model. The findings can serve as a technical reference for the development of automated welding robots and welding quality inspection.

1 Introduction

With the advent of industrial intelligence, robots play an increasingly vital role in welding. Robotic welding not only greatly improves welding efficiency and precision but also reduces workers' labor intensity and occupational risk. However, in complex and high-precision welding scenarios, traditional welding robots suffer from low planning efficiency and cannot adapt well to the demands of such environments (Lyons, 2022). Digital twin technology, which precisely correlates and maps physical objects or processes to virtual models, offers a solution. Previous studies indicate that digital twins can serve multiple stakeholders, but matching a twin's scope and scale to its application remains difficult. With the development of Industry 4.0, digital twin technology has been widely applied to robotic welding, and solving the problems of insufficient path planning and degraded weld quality in welding robots is crucial (Li et al., 2022). To address these challenges, this research proposes a welding tracking model based on the Kernel Correlation Filter (KCF) algorithm and digital twin technology. The KCF model extracts features from the first frame, and accurate tracking of the welding trajectory is achieved through filter training. A weld seam detection model based on deep learning is then proposed, which assesses weld seam quality by extracting and evaluating target features. The innovation of this research lies in a digital twin based welding tracking model that achieves efficient and accurate trajectory tracking through filter training and feature extraction.
Additionally, for weld seam quality detection, an intelligent detection model is presented that uses deep learning and feature fusion to judge weld seam quality precisely. The study helps improve the controllability and stability of welding processes, reduce welding defects, and promote the further development and application of welding robots in automated manufacturing.

The remainder of the paper is organized in four parts. The first part reviews state-of-the-art digital twin technologies and applications, including their latest applications in automated welding. The second part analyzes the current challenges faced by welding robots and constructs tracking and weld seam detection models based on digital twin technology to improve their automation capability. The third part applies these models to specific scenarios to validate their effectiveness in actual welding. The fourth part concludes the paper and outlines directions for further research.

2 Related work

Digital twin technology digitizes physical entities or processes in the real world. It is used in manufacturing, energy, healthcare, and other fields to optimize design, improve operations, and enable predictive maintenance (Jin et al., 2022). Franciosa et al. developed a digital twin framework for flexible parts in assembly systems. They introduced the concept of a “process capability space”, which simulates the dimensions, geometry, and welding quality of parts and components to identify the root causes of quality defects, and they improved quality during the development of assembly systems through automated defect mitigation. Their results showed that the model substantially improved the effectiveness of digital welding processes in aluminum door welding (Franciosa et al., 2020). Kliestik et al. studied the construction of digital twin cities. Through a comprehensive literature analysis, they identified the need for data visualization tools, virtual modeling techniques, and IoT-based decision support systems in digital twin cities, while noting that the literature on the topic was still scarce at the time. They suggested that future research focus on applying twin technology to urban development to make urban digitalization more effective (Kliestik et al., 2022). Khan et al. studied Internet of Things (IoT) applications on sixth-generation (6G) wireless systems. They argued that enabling IoT applications on 6G systems requires a new framework capable of managing, operating, and optimizing the wireless system and its underlying IoT services.
The research proposed the use of a communication services framework based on digital twins, leveraging virtual representations of physical systems, associated algorithms, communication technologies, and relevant communication security techniques. Applying these technologies to specific scenarios, the results demonstrated that the system exhibited reliable service performance and security, surpassing related technologies (Khan et al., 2022).

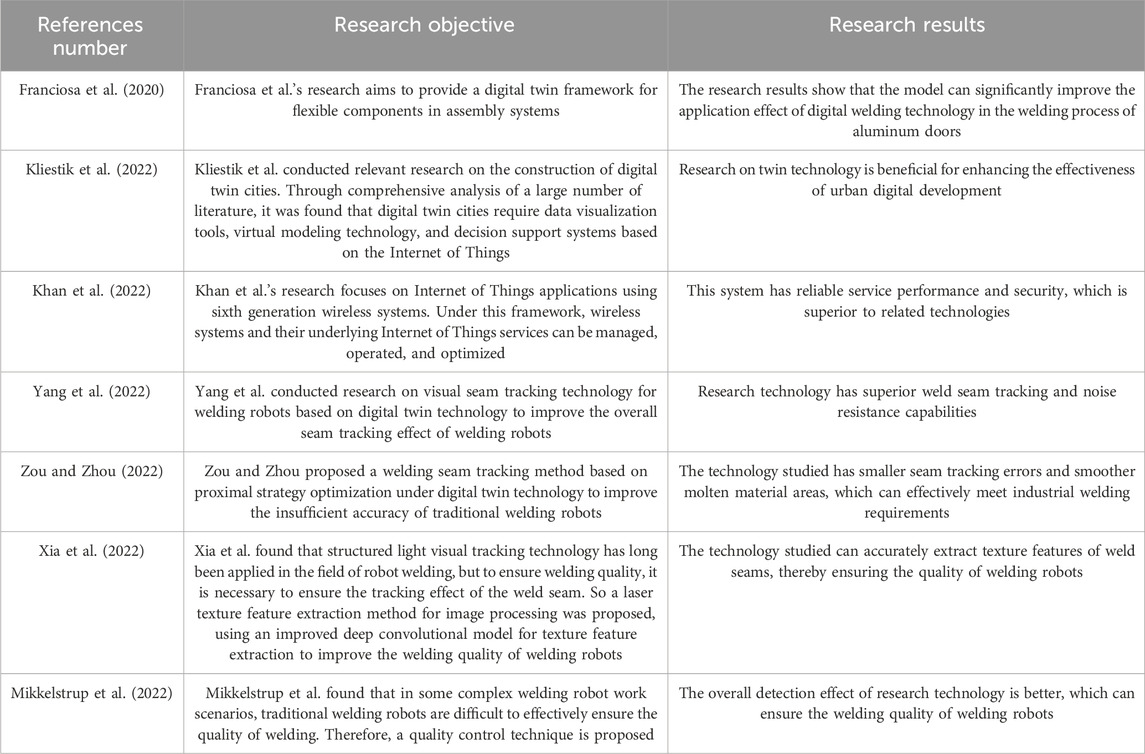

Digital twin technology has important applications in welding automation, where it can substantially improve the trajectory tracking and welding quality of welding robots. Yang et al. studied visual weld seam tracking for welding robots based on digital twin technology. Visual weld seam tracking can extract weld seam texture features and improve the overall tracking performance of welding robots, but traditional tracking methods based on conventional image processing lack flexibility and robustness. The study therefore proposed a deep convolutional neural network feature extraction model that extracts laser patterns more reliably. To counter the loss of detail during feature extraction, several residual bidirectional blocks were introduced to better capture local features, and a weighted loss function was used during training to address class imbalance. In application tests, the method achieved better weld seam tracking and noise resistance (Yang et al., 2022). Zou and Zhou proposed a weld seam tracking method based on proximal policy optimization under a digital twin framework to address the insufficient tracking accuracy of traditional welding robots. The method feeds a reference image block and a target image block to the network as a dual-channel input, predicts the translation between the two images, and corrects the positions of feature points in the weld seam image. A positioning accuracy estimation network is also built to update the reference image block during welding.
Compared with similar weld seam tracking techniques, this method produced smaller tracking errors and smoother molten regions, effectively meeting industrial welding requirements (Zou and Zhou, 2022). Xia et al. noted that structured-light visual tracking has long been used in robotic welding and that welding quality depends on how well the weld seam is tracked. They therefore proposed a laser texture feature extraction method based on an improved deep convolutional model, together with attention-based dense convolutional blocks to suppress image noise. Applied to specific robotic welding scenarios, the method extracted weld texture features accurately and thereby helped ensure welding quality (Xia et al., 2022). Mikkelstrup et al. found that in some complex working scenarios, traditional welding robots struggle to ensure welding quality. Building on digital twin technology, their study focused on high-frequency mechanical impact treatment. To ensure welding quality, they proposed a three-dimensional method for weld treatment and quality inspection that determines the curvature and gradient of the local weld surface. The overall weld seam geometry and quality are assessed through 3D scanning, a robot tracking trajectory is generated from the weld toe position, and the robot's welding position is adjusted adaptively through trajectory calculation to ensure overall welding quality.
Compared with similar detection techniques, this method achieved better overall detection performance and can help ensure the welding quality of welding robots (Mikkelstrup et al., 2022). Related work is summarized in Table 1.

In summary, the studies above demonstrate the potential of digital twin technology across various fields and its effectiveness in automated welding, where it can improve the stability and quality of machine welding. However, research on digital twin technology in robotic welding remains limited. Research on digital twins in robotic welding is therefore important for advancing industrial automation.

3 Robot automatic control technology based on digital twin

This section focuses on addressing the challenges faced by welding robots. Two models, namely, the welding tracking model and the weld seam detection model, are proposed based on digital twin technology to solve traditional welding robot issues.

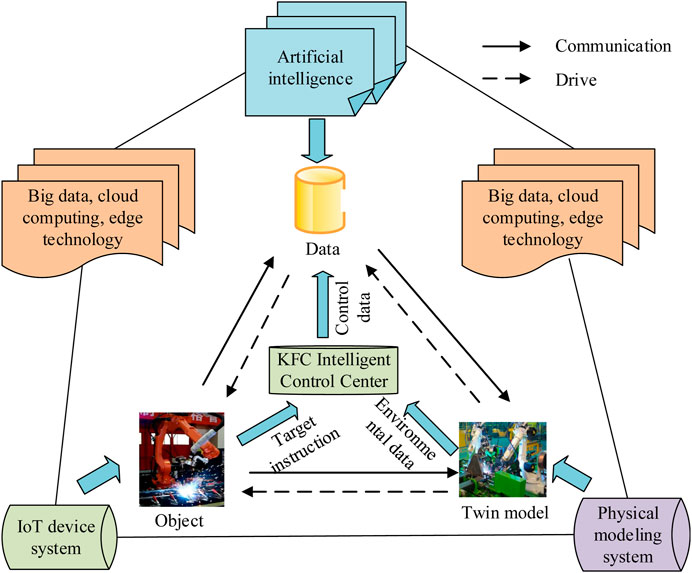

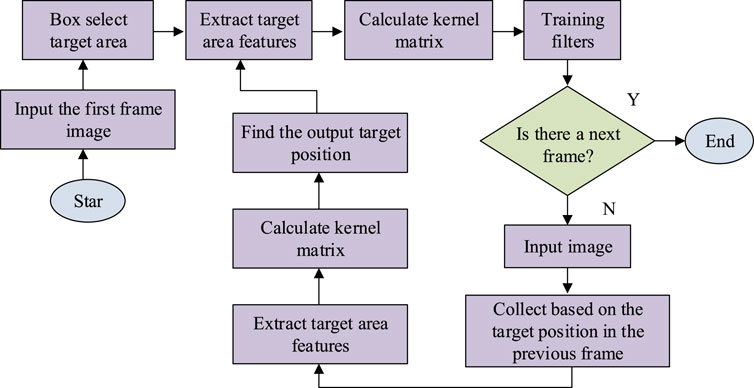

3.1 Construction of welding robot automatic tracking model based on improved KCF

In recent years, welding robots have played a crucial role in industrial production, relieving enterprises of significant labor and cost burdens. However, traditional welding robots still face challenges in complex and high-precision scenarios, such as inaccurate welding planning and poor welding stability (Zhou et al., 2021). This study therefore constructs a robotic welding tracking model based on digital twin technology, which addresses the planning problems of traditional welding robots by digitally modeling the welding process. The framework of the digital twin based welding robot system is illustrated in Figure 1.

During robotic welding, limitations in the system's own recognition capability often prevent the robot from tracking the target effectively. This is especially true in scenarios with occlusion by obstacles, interference from similar features, and light interference, where similar-feature interference can directly degrade welding quality. To counter light interference, the laser wavelength is chosen within a characteristic wavelength range and combined with matching optical filters, which effectively reduces the interference of arc light on laser imaging. For occlusion and similar-feature interference, KCF is used for feature extraction. To reduce the computational complexity of the tracking model while preserving feature quality, only part of the Histogram of Oriented Gradient (HOG) features are collected (Zheng et al., 2021). First, a filter is trained on the first collected frame: image blocks are gathered from the specified target region, and HOG features are extracted from them. The KCF model trains the filter with ridge regression, which finds the function that minimizes the squared error between its outputs and the regression targets plus a regularization term, as shown in Eq. 1.

In Eq. 1,

In Eq. 2,

In Eq. 3,

In Eq. 4,
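The two first-frame steps described above, extracting HOG-style features from an image block and fitting a filter by ridge regression, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the cell histogram is a simplified HOG (nearest-bin votes, no block normalization), the function names are hypothetical, and the ridge objective is assumed to take the standard linear form min_w ‖Xw − y‖² + λ‖w‖², with one sample per row of X.

```python
import numpy as np


def cell_orientation_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations for one cell.

    Simplified HOG-style descriptor (hypothetical helper): each pixel votes for its
    nearest orientation bin only, and no block normalization is applied.
    """
    gy, gx = np.gradient(cell.astype(float))  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    bins = np.minimum((angle // (180.0 / n_bins)).astype(int), n_bins - 1)
    return np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=n_bins)


def ridge_regression(X, y, lam):
    """Closed-form minimizer of ||X w - y||^2 + lam * ||w||^2 (rows of X are samples)."""
    A = X.T @ X + lam * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ y)


# A vertical edge has purely horizontal gradients, so every vote lands in bin 0.
edge = np.tile([0.0, 0.0, 1.0, 1.0], (4, 1))
hist = cell_orientation_histogram(edge)

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 9))  # 20 hypothetical feature vectors of length 9
y = rng.standard_normal(20)       # hypothetical regression targets
w = ridge_regression(X, y, lam=0.1)
```

The closed form solves the normal equations (XᵀX + λI)w = Xᵀy directly; the cyclic-shift construction that follows is what lets KCF avoid this cubic-cost solve.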

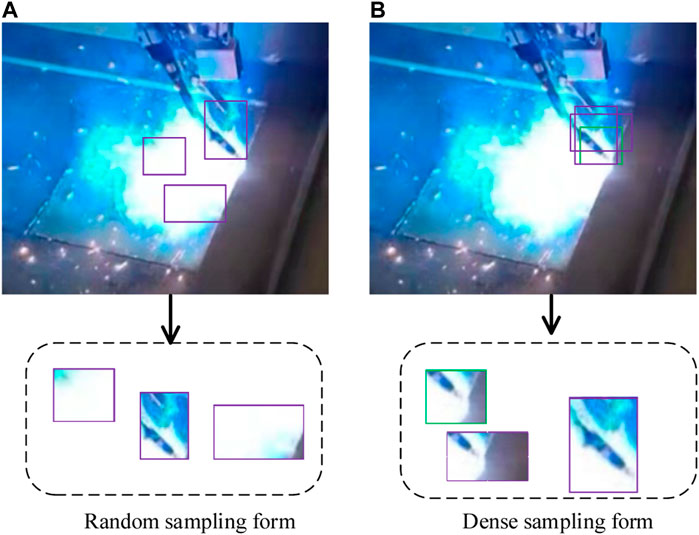

Figure 2. Comparison of sample data collection forms. (A) Random sampling form, (B) Dense sampling form.

Random sampling yields relatively few samples, which lowers the accuracy of the computed target position. KCF therefore uses dense sampling. Because exhaustive dense sampling would greatly increase the computational load of the tracking model, the samples are instead generated by cyclic shifts of a base sample. The shifted samples form a circulant matrix, which is diagonalized by the discrete Fourier transform, so the computation can be carried out element-wise in the frequency domain at much lower cost (Wang, 2020). Treating the samples collected by the welding robot as a one-dimensional dataset, the dataset is represented as in Eq. 5.

In Eq. 5,

In Eq. 6,

In Eq. 7,
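The cyclic-shift argument can be checked numerically. The sketch below assumes a 1-D base sample and NumPy's DFT convention: it builds the data matrix whose rows are cyclic shifts of x, shows that the DFT matrix diagonalizes it, and solves linear ridge regression over all shifts element-wise in the frequency domain. The function names are hypothetical. Where the complex conjugate appears depends on how the shifts are ordered and on the DFT sign convention, so published formulations may differ in that detail; the version here is derived for `np.roll` ordering and agrees with the direct solve.

```python
import numpy as np


def cyclic_shift_samples(x):
    """Data matrix whose row i is the base sample x cyclically shifted by i."""
    return np.stack([np.roll(x, i) for i in range(len(x))])


def ridge_fourier(x, y, lam):
    """Ridge regression over all cyclic shifts of x, solved element-wise via the FFT.

    With rows np.roll(x, i), the normal equations (X^T X + lam I) w = X^T y
    become (|x_hat|^2 + lam) * w_hat = x_hat * y_hat in the Fourier domain.
    """
    x_hat = np.fft.fft(x)
    y_hat = np.fft.fft(y)
    w_hat = x_hat * y_hat / (np.abs(x_hat) ** 2 + lam)
    return np.real(np.fft.ifft(w_hat))


rng = np.random.default_rng(0)
n, lam = 16, 0.1
x = rng.standard_normal(n)  # hypothetical base sample
y = rng.standard_normal(n)  # hypothetical regression targets
X = cyclic_shift_samples(x)

# The DFT matrix diagonalizes the circulant data matrix: F X F^-1 = diag(conj(x_hat)).
F = np.fft.fft(np.eye(n))
D = F @ X @ np.linalg.inv(F)

# O(n log n) Fourier solution versus the O(n^3) direct solve of the normal equations.
w_fft = ridge_fourier(x, y, lam)
w_direct = np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)
```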

Transforming the solving of

In Eq. 9,

In Eq. 10,
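Combining the circulant structure with a kernel gives the train-and-detect loop of KCF. The sketch below is a 1-D illustration under stated assumptions, not the authors' code: it uses a Gaussian kernel, normalizes the squared distance by the number of elements (a common choice in KCF implementations), and uses hypothetical function names and parameter values (`sigma`, `lam`, the label width). Training solves kernel ridge regression over all cyclic shifts of the template as `alpha_hat = y_hat / (kxx_hat + lam)`; detection evaluates the regressor on every cyclic shift of a new patch with one more FFT, and the peak of the response gives the displacement.

```python
import numpy as np


def gaussian_correlation(x, z, sigma):
    """k[m] = exp(-||z - roll(x, m)||^2 / (sigma^2 * n)) for all cyclic shifts m, via the FFT."""
    n = x.size
    cross = np.real(np.fft.ifft(np.conj(np.fft.fft(x)) * np.fft.fft(z)))  # cross[m] = <z, roll(x, m)>
    d2 = np.maximum(x @ x + z @ z - 2.0 * cross, 0.0)  # clip tiny negative round-off
    return np.exp(-d2 / (sigma ** 2 * n))


def gaussian_labels(n, width):
    """Regression targets peaked at shift 0, using wrap-around distance."""
    m = np.arange(n)
    dist = np.minimum(m, n - m)
    return np.exp(-dist ** 2 / (2.0 * width ** 2))


def kcf_train(x, y, sigma, lam):
    """Fourier-domain dual coefficients of kernel ridge regression over all shifts of x."""
    kxx = gaussian_correlation(x, x, sigma)
    return np.fft.fft(y) / (np.fft.fft(kxx) + lam)


def kcf_response(alpha_hat, x, z, sigma):
    """response[i] is the regressor's output for the candidate patch np.roll(z, i)."""
    kxz = gaussian_correlation(x, z, sigma)
    return np.real(np.fft.ifft(np.conj(np.fft.fft(kxz)) * alpha_hat))


def estimate_shift(response):
    """A patch displaced by d peaks at i = -d (mod n); return d as a signed shift."""
    n = response.size
    d = (-int(np.argmax(response))) % n
    return d - n if d > n // 2 else d


rng = np.random.default_rng(0)
n, sigma, lam = 64, 0.5, 1e-4
template = rng.standard_normal(n)  # hypothetical 1-D feature signal of the target
alpha_hat = kcf_train(template, gaussian_labels(n, 2.0), sigma, lam)

moved = np.roll(template, 5)  # the same target displaced by 5 samples
shift = estimate_shift(kcf_response(alpha_hat, template, moved, sigma))
```

Each FFT step replaces an n × n kernel solve or kernel matrix product, which is the efficiency argument made for cyclic sampling.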

3.2 Construction of weld seam defect detection model based on YOLOX-s

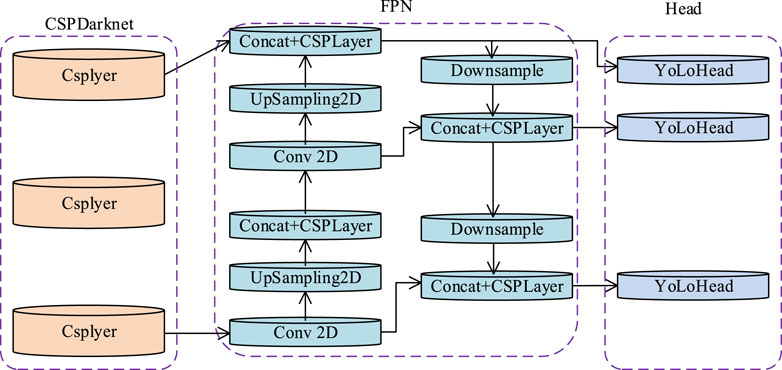

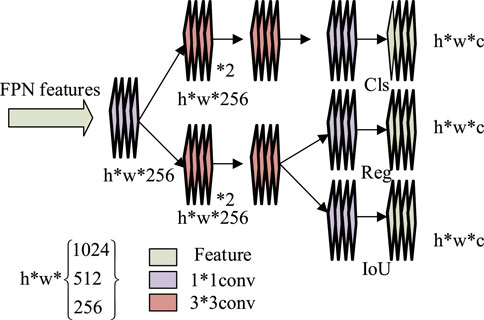

In complex industrial manufacturing scenarios such as vehicle manufacturing and shipbuilding, welding robots face high demands, and weld seams must meet the relevant quality requirements. In actual production, however, traditional welding robots cannot accurately identify weld seam quality. This study therefore proposes a weld seam defect detection model based on YOLOX-s, the small variant of the YOLOX object detector from the You Only Look Once (YOLO) family, which improves the quality of the robot's work by marking weld seams with quality problems (Suresh et al., 2020). YOLOX-s consists of three parts: the Cross Stage Partial Darknet (CSPDarknet) backbone, the Feature Pyramid Network (FPN), and the detection head (YOLO Head). The structure of the complete YOLOX-s model is shown in Figure 4.

In the YOLOX-s based weld seam defect detection model, the CSPDarknet network extracts feature maps from the input image, and its main features serve as the model's feature layers. These feature layers are used to build the FPN: as the enhanced feature extraction network of YOLOX, the FPN takes the three feature layers extracted by CSPDarknet as input (Suresh et al., 2020). The FPN further processes these features, merging them through upsampling and downsampling to obtain enhanced effective features. Each enhanced feature layer, described by its height, width, and channel depth, is fed to the YOLO Head, which classifies and regresses each feature point with a classifier and a regressor to determine whether it contains an object and of which type, thereby detecting weld seam defects.

CSPDarknet is the core part of the YOLOX-s model, responsible for extracting target features. Based on the Darknet architecture, CSPDarknet introduces residual connections and cross-stage partial connections (CSP) to improve model performance. Assuming the input image is I, the feature map sequence obtained after processing through CSPDarknet is denoted as

In Eq. 11,

The FPN is a crucial component in the YOLOX-s model, responsible for merging feature maps of different scales to enhance the model’s multi-scale detection capabilities. In FPN, different scale feature maps are fused together through upsampling and downsampling operations (Preethi and Mamatha, 2023). Let the scale factor for the upsampling operation be denoted as S; then the upsampled feature map can be represented as shown in Eq. 13.

The downsampling operation is depicted in Eq. 14.

In Eq. 14,
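The up- and downsampling operations of the FPN can be illustrated with parameter-free NumPy stand-ins. This is a sketch under assumptions rather than YOLOX-s itself: YOLOX uses learned convolutions (for example, strided convolutions for downsampling and 1 × 1 convolutions to adjust channels), whereas here upsampling is nearest-neighbour repetition by the scale factor S, downsampling is S × S average pooling, and fusion is channel concatenation. The channel counts 128, 256, and 512 at strides 8, 16, and 32 for a 640 × 640 input are assumed values for illustration, and the function names are hypothetical.

```python
import numpy as np


def upsample_nearest(feature, s):
    """Nearest-neighbour upsampling of a (C, H, W) map by an integer factor s."""
    return feature.repeat(s, axis=1).repeat(s, axis=2)


def downsample_avg(feature, s):
    """s x s average pooling with stride s; H and W must be divisible by s."""
    c, h, w = feature.shape
    return feature.reshape(c, h // s, s, w // s, s).mean(axis=(2, 4))


def fuse(a, b):
    """Fuse two maps with the same spatial size by concatenating their channels."""
    return np.concatenate([a, b], axis=0)


# Hypothetical backbone outputs for a 640 x 640 input at strides 8, 16 and 32.
rng = np.random.default_rng(0)
c3 = rng.standard_normal((128, 80, 80))
c4 = rng.standard_normal((256, 40, 40))
c5 = rng.standard_normal((512, 20, 20))

# Top-down path: bring the coarsest map up to 40 x 40 and merge it with c4.
p4 = fuse(upsample_nearest(c5, 2), c4)
# Bottom-up path: bring the finest map down to 40 x 40 and merge it with the result.
n4 = fuse(downsample_avg(c3, 2), p4)
```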

Finally, the fused feature map

The classifier part maps features to class probabilities through a series of fully connected layers and activation functions. The regressor part predicts the coordinates

Here,

Finally, the outputs of the classifier and regressor are combined to produce the final weld seam defect detection result. During YOLOX-s training, the simplified optimal transport assignment strategy (SimOTA) is used to assign positive and negative samples, which simplifies the loss computation. SimOTA assigns each ground-truth bounding box to the anchor points with the lowest matching cost, so minimizing the total assignment cost improves training effectiveness and, in turn, detection accuracy.
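A simplified version of the SimOTA assignment can be sketched as follows, assuming the pairwise classification cost and the IoUs between ground-truth boxes and each anchor's predicted box have already been computed. Each ground-truth box receives a dynamic number k of anchors, estimated as the integer part of the sum of its top-q IoUs (at least 1) and chosen by lowest cost; an anchor claimed by several ground-truth boxes keeps only the cheapest one. The regression-cost weight of 3.0 and q = 10 are assumed defaults, the function names are hypothetical, and the center-prior filtering used in full YOLOX is omitted.

```python
import numpy as np


def simota_cost(cls_cost, ious, reg_weight=3.0):
    """Pairwise matching cost: classification cost plus weighted -log(IoU) regression cost."""
    return cls_cost + reg_weight * -np.log(ious + 1e-8)


def simota_assign(cost, ious, top_q=10):
    """Return, for each anchor, the index of its assigned ground-truth box or -1 (negative).

    cost, ious: arrays of shape (num_gt, num_anchors).
    """
    num_gt, num_anchors = cost.shape
    matching = np.zeros((num_gt, num_anchors), dtype=bool)
    q = min(top_q, num_anchors)
    for g in range(num_gt):
        # Dynamic k: integer part of the sum of the q largest IoUs, at least 1.
        k = max(int(np.sort(ious[g])[-q:].sum()), 1)
        matching[g, np.argsort(cost[g], kind="stable")[:k]] = True
    # Resolve anchors claimed by several ground-truth boxes in favour of the lowest cost.
    multi = np.flatnonzero(matching.sum(axis=0) > 1)
    if multi.size:
        best = np.argmin(cost[:, multi], axis=0)
        matching[:, multi] = False
        matching[best, multi] = True
    assigned = np.full(num_anchors, -1)
    positive = matching.any(axis=0)
    assigned[positive] = np.argmax(matching[:, positive], axis=0)
    return assigned


# Two ground-truth boxes both prefer anchor 0; the cheaper pairing (box 1) keeps it.
cost = np.array([[1.0, 2.0, 9.0], [0.5, 9.0, 3.0]])
ious = np.array([[0.9, 0.9, 0.0], [0.9, 0.0, 0.9]])
assignment = simota_assign(cost, ious)
```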

4 Algorithm model simulation testing

This section primarily focuses on the simulation performance testing of the two proposed models, examining their practical application effects in real-world scenarios. Performance evaluation metrics include tracking error, success rate, precision, detection accuracy, among others.

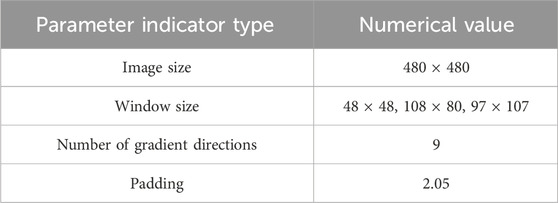

4.1 Welding robot tracking model performance testing

To verify the performance of the proposed welding robot tracking model, a self-built dataset is used. Weld seam images were collected with HY Vision Studio equipment; the image data include the position, angle, width, height, and depth of the weld seam, and Adobe Photoshop was used for general noise and detail processing. The dataset comprises 156,545 continuous welding trajectory images collected on site with the HY Vision Studio equipment and 235,422 continuous weld seam images collected from the Internet and the welding site. The collected data were cleaned, organized, and split into training and testing sets at a 7:3 ratio. The model outputs tracking coordinate values. Windows 7 was used as the software test platform. Because dense sampling places high demands on hardware, the experiments used an Intel Core i9 series processor and an NVIDIA RTX 3080 Ti graphics card, and the simulations were implemented in Python. Precision (P), success rate, weld seam tracking error, recall (R), and mean average precision (mAP) are used as evaluation metrics, and the classic Minimum Output Sum of Squared Error (MOSSE) and Discriminative Scale Space Tracker (DSST) tracking algorithms serve as baselines. The initialization parameters of the experimental models are presented in Table 2.
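The 7:3 split can be made reproducible with a fixed random seed. The sketch below is a minimal frame-level split; the seed, the function name, and the choice to split each image subset separately rather than the pooled data are assumptions. Because the images are continuous sequences, splitting by whole sequence instead would keep near-identical neighbouring frames from landing in both sets.

```python
import numpy as np


def split_indices(n_samples, train_ratio=0.7, seed=0):
    """Shuffle sample indices with a fixed seed and split them into train and test sets."""
    order = np.random.default_rng(seed).permutation(n_samples)
    n_train = int(round(train_ratio * n_samples))
    return order[:n_train], order[n_train:]


# Image counts reported for the self-built dataset, split subset by subset.
subset_sizes = {"trajectory": 156_545, "seam": 235_422}
splits = {name: split_indices(count) for name, count in subset_sizes.items()}
```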

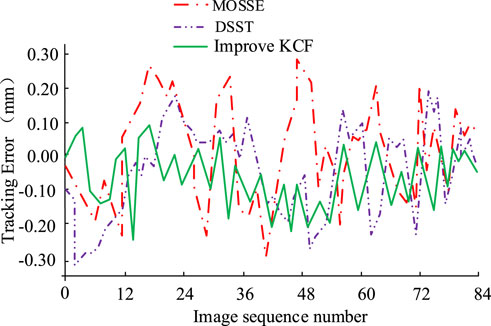

First, the robot's target tracking parameters are input into the digital twin system, and the three tracking models perform welding along the target trajectory. The tracking errors of the three models on different image sequences are shown in Figure 6.

The results in Figure 6 show that tracking performance varies across image sequences. The proposed model performs best, with a maximum tracking error of −0.232 mm and a mean absolute tracking error of 0.08 mm. The DSST model follows, with a maximum tracking error of −0.312 mm and a mean absolute tracking error of 0.155 mm. The MOSSE model has the largest mean absolute tracking error, 0.163 mm, although its maximum tracking error of −0.296 mm is smaller in magnitude than that of DSST. The tracking accuracy and success rate of the different models are compared in Figure 7.
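The two error statistics quoted here can be computed from per-frame tracking errors as sketched below. Reading the signed "maximum tracking error" as the error with the largest magnitude is an assumption, as are the function name and the toy values in millimetres.

```python
import numpy as np


def tracking_error_stats(tracked_mm, reference_mm):
    """Return (signed error with the largest magnitude, mean absolute error)."""
    errors = np.asarray(tracked_mm, dtype=float) - np.asarray(reference_mm, dtype=float)
    max_error = errors[np.argmax(np.abs(errors))]
    mean_abs_error = np.mean(np.abs(errors))
    return float(max_error), float(mean_abs_error)


# Toy per-frame seam positions in mm, not data from the paper.
max_err, mae = tracking_error_stats([1.10, 0.768, 2.05], [1.00, 1.00, 2.00])
```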

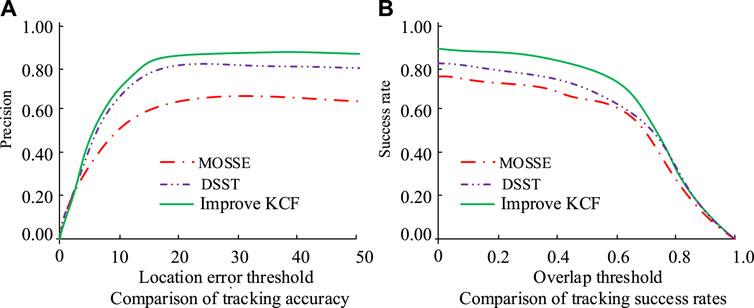

Figure 7. Comparison of tracking accuracy and tracking success rate. (A) Comparison of tracking accuracy (location error threshold), (B) Comparison of tracking success rates (overlap threshold).

Figures 7A, B compare the tracking accuracy and tracking success rate of the different models. The overlap threshold is applied to the overlap rate between the predicted and ground-truth bounding boxes, and the location error threshold is the largest center localization error at which the bounding box is still considered to capture the target. The proposed model converges fastest, with a peak precision of 0.845, while the DSST and MOSSE models reach peak precisions of 0.809 and 0.605, respectively. For all three models, the tracking success rate decreases steadily as the overlap threshold increases. Overall, the proposed model achieves the highest tracking success rate, an improvement of 13.65% and 21.35% over the DSST and MOSSE models, respectively. A comparison of tracking quality in different welding scenarios is shown in Figure 8.
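The precision and success curves in Figure 7 follow the usual single-object tracking protocol, sketched below for boxes given as (x, y, w, h). Precision at a location error threshold is the fraction of frames whose center error is at most that threshold, and the success rate at an overlap threshold is the fraction of frames whose IoU exceeds it, which is why success can only fall as the overlap threshold rises. The paper does not state its exact protocol or thresholds, so this is an assumed, conventional definition with hypothetical function names.

```python
import numpy as np


def iou_xywh(a, b):
    """IoU between corresponding boxes in (N, 4) arrays of (x, y, w, h)."""
    ax2, ay2 = a[:, 0] + a[:, 2], a[:, 1] + a[:, 3]
    bx2, by2 = b[:, 0] + b[:, 2], b[:, 1] + b[:, 3]
    iw = np.clip(np.minimum(ax2, bx2) - np.maximum(a[:, 0], b[:, 0]), 0, None)
    ih = np.clip(np.minimum(ay2, by2) - np.maximum(a[:, 1], b[:, 1]), 0, None)
    inter = iw * ih
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    return inter / union


def center_error(a, b):
    """Euclidean distance between box centers, per frame."""
    return np.linalg.norm((a[:, :2] + a[:, 2:] / 2.0) - (b[:, :2] + b[:, 2:] / 2.0), axis=1)


def precision_curve(pred, gt, thresholds):
    """Fraction of frames whose center error is at most each location error threshold."""
    err = center_error(pred, gt)
    return np.array([(err <= t).mean() for t in thresholds])


def success_curve(pred, gt, thresholds):
    """Fraction of frames whose IoU exceeds each overlap threshold."""
    overlap = iou_xywh(pred, gt)
    return np.array([(overlap > t).mean() for t in thresholds])


# Two toy frames: a perfect box and a box shifted 5 px to the right.
gt = np.array([[0, 0, 10, 10], [0, 0, 10, 10]], dtype=float)
pred = np.array([[0, 0, 10, 10], [5, 0, 10, 10]], dtype=float)
prec = precision_curve(pred, gt, thresholds=[0, 5, 20])
succ = success_curve(pred, gt, thresholds=np.linspace(0, 1, 11))
```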

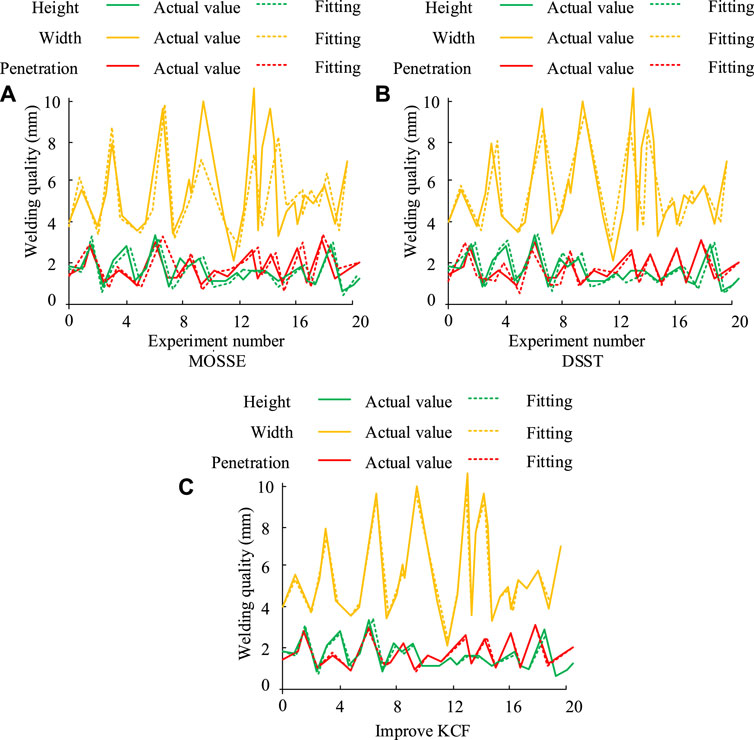

Figure 8. Comparison of tracking quality between different models. (A) MOSSE, (B) DSST, (C) Improved KCF.

Figures 8A–C show the tracking quality of the MOSSE, DSST, and proposed models, respectively. The proposed model tracks weld height, width, and penetration depth best, with a mean fitting rate of 96.65%, significantly higher than the other two models. The MOSSE model has a mean fitting rate of 80.35%, indicating poorer tracking quality, while the DSST model reaches 86.67%, better than MOSSE but below the proposed model.

4.2 Weld seam detection model performance testing

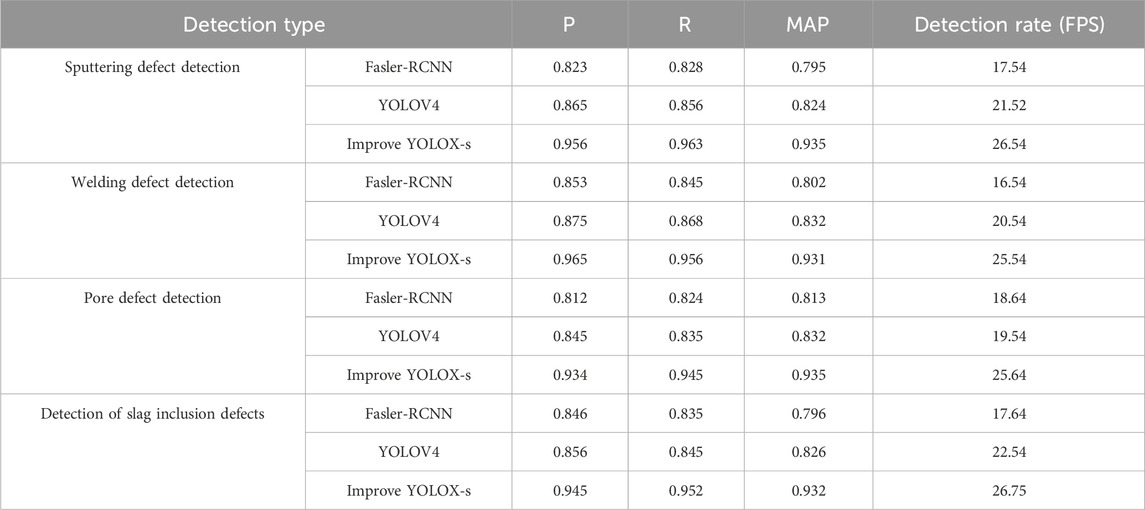

The performance of the proposed weld seam detection model was also tested. The input image resolution was set to 640 × 640, and the experiments used the PyTorch 1.2 framework. The learning rate was set to 0.01, and the model was trained for 500 epochs. YOLOv4 and Faster-RCNN were used as baselines. The detection results of the different models are compared in Figure 9.

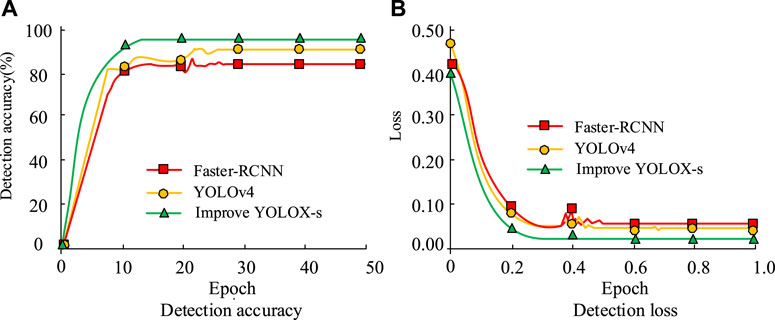

Figure 9. Comparison of detection accuracy of different models. (A) Detection accuracy, (B) Detection loss.

Figures 9A, B show the detection accuracy and detection loss, respectively. Comparing the best converged results, the proposed model achieves a detection accuracy of 97.35%, while YOLOv4 and Faster-RCNN reach 90.65% and 83.21%, respectively. The converged losses of the proposed model, YOLOv4, and Faster-RCNN are 0.023, 0.045, and 0.076, respectively. The errors of the different models in detecting penetration depth and reinforcement height are compared in Figure 10.

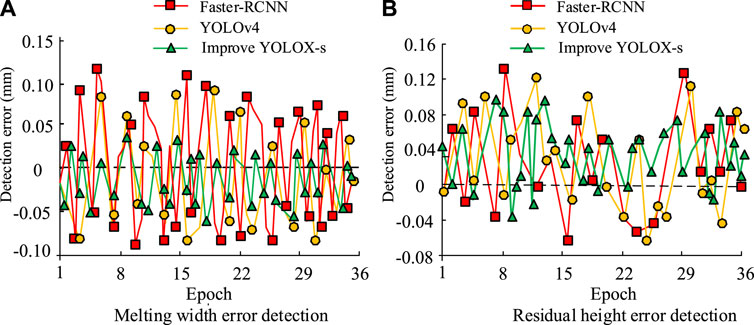

Figure 10. Comparison of weld seam detection errors among different models. (A) Melting width error detection, (B) Residual height error detection.

Figures 10A, B show the results of weld penetration depth and reinforcement height detection, respectively. For penetration depth, the proposed model's detection error stays within −0.06 mm, whereas YOLOv4 and Faster-RCNN show errors of 0.11 mm and 0.13 mm, respectively. For reinforcement height, the three models perform comparably, but the proposed model is more stable overall, keeping the detection error within 0.09 mm; compared with YOLOv4 and Faster-RCNN, its detection error is reduced by 9.63% and 12.35%, respectively. Finally, a comprehensive comparison of the weld detection performance of the three models is presented in Table 3.

Table 3 summarizes the comprehensive weld quality comparison, covering four common weld defects: spatter, weld bead, porosity, and slag inclusion. The proposed model outperforms the others in precision, recall, and detection speed. For example, in spatter defect detection, Faster-RCNN, YOLOv4, and the proposed model run at 17.54 FPS, 21.52 FPS, and 26.54 FPS, respectively, with the proposed model performing best overall.
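Precision, recall, and average precision (AP) for one defect class can be computed from ranked detections as sketched below; averaging AP over the four defect classes gives mAP. This uses the all-point interpolation common in object detection benchmarks and assumes detections have already been matched to ground truth (for example, at an IoU threshold of 0.5). The paper does not state its exact protocol, and the function name is hypothetical.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP from detection scores and true/false-positive flags."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, np.finfo(float).eps)
    # Add sentinels, then make precision non-increasing from right to left.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the precision envelope over the recall steps.
    idx = np.flatnonzero(mrec[1:] != mrec[:-1])
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


# Three ranked detections for one class with two ground-truth defects.
ap = average_precision(scores=[0.9, 0.8, 0.7], is_tp=[1, 0, 1], num_gt=2)
```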

5 Conclusion

Welding robots are widely used in industrial manufacturing, yet in high-precision and complex scenarios they suffer from low welding accuracy and inadequate weld quality inspection. To address these issues, an intelligent welding robot tracking technology based on digital twin technology is proposed. The approach uses the KCF model to extract features from the first welding frame, collects local HOG features to enrich feature detail, and achieves target tracking through filter training. In addition, a weld quality detection model based on the YOLOX-s algorithm is proposed, which extracts features with the CSPDarknet network, merges them in the FPN layer, and then performs detection. In the tracking tests, the proposed model's mean absolute tracking error was 0.08 mm, compared with 0.155 mm for DSST and 0.163 mm for MOSSE. In tracking quality, the proposed model achieved a mean fitting rate of 96.65%, compared with 80.35% for MOSSE and 86.67% for DSST. In weld detection, the proposed model, YOLOv4, and Faster-RCNN achieved detection accuracies of 97.35%, 90.65%, and 83.21%, respectively. In weld penetration depth detection, the proposed model's error stayed within −0.06 mm, while YOLOv4 and Faster-RCNN showed errors of 0.11 mm and 0.13 mm, respectively, giving the proposed model the lowest weld detection error. These results show that the proposed model performs well and meets the requirements of welding robot automation, and the research provides technical support for the development of Industry 4.0. However, although the technology performs well on common weld quality defects, detecting defects such as weld beads and abrasions remains challenging.
Future work should focus on gathering more feature data to improve the model’s detection effectiveness.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

YK: Conceptualization, Methodology, Project administration, Validation, Writing–original draft. RC: Data curation, Formal Analysis, Supervision, Visualization, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ashtari Talkhestani, B., and Weyrich, M. (2020). Digital Twin of manufacturing systems: a case study on increasing the efficiency of reconfiguration. At-Automatisierungstechnik 68 (6), 435–444. doi:10.1515/auto-2020-0003

Dong, Q., He, B., Qi, Q., and Xu, G. (2021). Real-time prediction method of fatigue life of bridge crane structure based on digital twin. Fatigue Fract. Eng. Mater. Struct. 44 (9), 2280–2306. doi:10.1111/ffe.13489

Franciosa, P., Sokolov, M., Sinha, S., Sun, T., and Ceglarek, D. (2020). Deep learning enhanced digital twin for Closed-Loop In-Process quality improvement. CIRP Ann. 69 (1), 369–372. doi:10.1016/j.cirp.2020.04.110

Hongfei, G., Zhu, Y., Zhang, Y., Ren, Y., Chen, M., and Zhang, R. (2021). A digital twin-based layout optimization method for discrete manufacturing workshop. Int. J. Adv. Manuf. Technol. 112 (6), 1307–1318. doi:10.1007/s00170-020-06568-0

Jin, X., Dong, H., and Yao, X. (2022). Research on the integrated application of digital twin technology in the field of automobile welding. International Conference on Advanced Manufacturing Technology and Manufacturing Systems (ICAMTMS 2022). SPIE 12 (9), 38–42. doi:10.1117/1.2645556

Khan, L. U., Saad, W., Niyato, D., Han, Z., and Hong, C. S. (2022). Digital-twin-enabled 6G: vision, architectural trends, and future directions. IEEE Commun. Mag. 60 (1), 74–80. doi:10.1109/mcom.001.21143

Kliestik, T., Poliak, M., and Popescu, G. H. (2022). Digital twin simulation and modeling tools, computer vision algorithms, and urban sensing technologies in immersive 3D environments. Geopolit. Hist. Int. Relat. 14 (1), 9–25. doi:10.22381/HIR14120221

Li, H., Li, B., Liu, G., Wen, X., Wang, H., Zhang, S., et al. (2022). A detection and configuration method for welding completeness in the automotive body-in-white panel based on digital twin. Sci. Rep. 12 (1), 7929. doi:10.1038/s41598-022-11440-0

Lyons, N. (2022). Deep learning-based computer vision algorithms, immersive analytics and simulation software, and virtual reality modeling tools in digital twin-driven smart manufacturing. Econ. Manag. Financial Mark. 17 (2), 67–81. doi:10.22381/emfm17220224

Mikkelstrup, A. F., Kristiansen, M., and Kristiansen, E. (2022). Development of an automated system for adaptive post-weld treatment and quality inspection of linear welds. Int. J. Adv. Manuf. Technol. 119 (6), 3675–3693. doi:10.1007/s00170-021-08344-0

Mokayed, H., Quan, T. Z., Alkhaled, L., and Sivakumar, V. (2023). Real-time human detection and counting system using deep learning computer vision techniques. Artif. Intell. Appl. 1 (4), 221–229. doi:10.47852/bonviewaia2202391

Preethi, P., and Mamatha, H. R. (2023). Region-based convolutional neural network for segmenting text in epigraphical images. Artif. Intell. Appl. 1 (2), 119–127. doi:10.47852/bonviewaia2202293

Suresh, S., Elango, N., Venkatesan, K., Lim, W. H., Palanikumar, K., and Rajesh, S. (2020). Sustainable friction stir spot welding of 6061-T6 aluminium alloy using improved non-dominated sorting teaching learning algorithm. J. Mater. Res. Technol. 9 (5), 11650–11674. doi:10.1016/j.jmrt.2020.08.043

Wang, L. (2020). Application and development prospect of digital twin technology in aerospace. IFAC-PapersOnLine 53 (5), 732–737. doi:10.1016/j.ifacol.2021.04.165

Xia, S., Khiang Pang, C., Al Mamun, A., Seng Wong, F., and Meng Chew, C. (2022). Design of adaptive weld quality monitoring for multiple-conditioned robotic welding tasks. Asian J. Control 24 (4), 1528–1541. doi:10.1002/asjc.2574

Yang, L., Fan, J., Huo, B., and Liu, Y. (2022). Image denoising of seam images with deep learning for laser vision seam tracking. IEEE Sensors J. 22 (6), 6098–6107. doi:10.1109/jsen.2022.3147489

Zheng, Y., Chen, L., Lu, X., Sen, Y., and Cheng, H. (2021). Digital twin for geometric feature online inspection system of car body-in-white. Int. J. Comput. Integr. Manuf. 34 (7-8), 752–763. doi:10.1080/0951192x.2020.1736637

Zhou, C., Zhang, F., Wei, B., Lin, Y., He, K., and Du, R. (2021). Digital twin–based stamping system for incremental bending. Int. J. Adv. Manuf. Technol. 116 (1-2), 389–401. doi:10.1007/s00170-021-07422-7

Keywords: kernel correlation filter, digital twin, welding robot, trajectory tracking, weld seam detection

Citation: Kang Y and Chen R (2024) Welding robot automation technology based on digital twin. Front. Mech. Eng 10:1367690. doi: 10.3389/fmech.2024.1367690

Received: 09 January 2024; Accepted: 12 March 2024;

Published: 02 April 2024.

Edited by:

Zhibin Zhao, Xi’an Jiaotong University, China

Reviewed by:

Xueliang Zhou, Hubei University of Automotive Technology, China

Shohin Aheleroff, SUEZ Smart Solutions, New Zealand

Copyright © 2024 Kang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuhui Kang, kevin7kang@163.com
