Exponential Time Differencing Algorithm for Pulse-Coupled Hodgkin-Huxley Neural Networks

- School of Mathematical Sciences, MOE-LSC, Institute of Natural Sciences, Shanghai Jiao Tong University, Shanghai, China

The exponential time differencing (ETD) method allows using a large time step to efficiently evolve stiff systems such as Hodgkin-Huxley (HH) neural networks. For pulse-coupled HH networks, the synaptic spike times cannot be predetermined and are convoluted with neuron's trajectory itself. This presents a challenging issue for the design of an efficient numerical simulation algorithm. The stiffness in the HH equations are quite different, for example, between the spike and non-spike regions. Here, we design a second-order adaptive exponential time differencing algorithm (AETD2) for the numerical evolution of HH neural networks. Compared with the regular second-order Runge-Kutta method (RK2), our AETD2 method can use time steps one order of magnitude larger and improve computational efficiency more than ten times while excellently capturing accurate traces of membrane potentials of HH neurons. This high accuracy and efficiency can be robustly obtained and do not depend on the dynamical regimes, connectivity structure or the network size.

1. Introduction

The Hodgkin-Huxley (HH) model (Hodgkin and Huxley, 1952; Hassard, 1978; Dayan and Abbott, 2003) is a classical neuron model, originally proposed to describe the behaviors of action potentials of the squid's giant axon. It provides a useful mechanism that accounts for the detailed generation of action potentials and the existence of the absolute refractory periods. It also serves as the foundation for other neuron models such as the one that can describe the behaviors of bursting and adaption (Pospischil et al., 2008). However, the HH equations are so complicated that it is difficult to study its properties analytically such as the Hopf bifurcation and chaotic dynamics (Aihara, 1986; Hansel and Sompolinsky, 1996; Guckenheimer and Oliva, 2002; Lin, 2006). Therefore, its investigation often relies on numerical simulations, for example, by the Runge-Kutta (RK) methods.

There are several difficulties to design an efficient and accurate numerical algorithm for the HH neural network, especially when the network size is large. First, when an HH neuron driven by external input generates an action potential (the interval of action potential is called spike period in this work), the HH neuron equations become stiff. Regular RK methods have to use very small time step to satisfy the requirement of numerical stability (Guckenheimer and Oliva, 2002; Börgers et al., 2005; Kassam and Trefethen, 2005; Börgers and Nectow, 2013). This small time step will significantly increase the computational cost when studying long time behavior of large-scale HH networks such as chaotic attractor dynamics or collecting reliable statistical information of HH neurons such as the distribution of inter-spike-intervals.

For more realistic situations, the neurons are generally driven by stochastic spike input and the interaction term is usually modeled by a Dirac delta function (pulse-coupled), while the spike-induced conductance dynamics are modeled by an alpha function (Somers et al., 1995; Hansel et al., 1998; Sun et al., 2009). These make the system become non-smooth and event-driven, while providing challenges for the design of efficient numerical simulation algorithms. For instance, it is impossible to predetermine the synaptic spike times since they are convoluted with neurons' trajectories themselves. As a result, one has to evolve the HH network by ignoring the spike interactions among neurons and then use spike-spike interaction to amend the neurons' trajectories at the end of the time step (Hansel et al., 1998; Brette et al., 2007). Without a careful recalibration for the neuronal spikes, the numerical algorithm often suffers from the issue of instability or relatively low numerical accuracy.

The exponential time differencing (ETD) method (Hochbruck et al., 1998; Cox and Matthews, 2002; Kassam and Trefethen, 2005; de la Hoz and Vadillo, 2008; Nie et al., 2008; Hochbruck and Ostermann, 2010) is proposed for efficient simulation of stiff ordinary differential equations (ODEs). The basic idea is to decompose the ODEs into a linear stiff part and a nonlinear non-stiff part. Then, the linear stiff part can be solved by using the integrating factor method, while the nonlinear non-stiff part can be approximated by numerical quadrature (Cox and Matthews, 2002). A second-order ETD (ETD2) method for HH neural networks has been proposed in a recent work (Börgers and Nectow, 2013), which allows using a large time step to raise computational efficiency. In Börgers and Nectow (2013), the HH equations are linearly approximated in each time step, and then solved analytically over the time step. The ETD2 method proposed in Börgers and Nectow (2013) is a reduced situation of that in Cox and Matthews (2002), but it is difficult to generalize to higher-order cases, e.g., the fourth-order ETD method. Besides, although the ETD2 method proposed in Börgers and Nectow (2013) is proven to be unconditionally stable for HH system, it will be inaccurate using a large time step (Börgers and Nectow, 2013).

In this work, we first provide an ETD2 method following the idea proposed in Cox and Matthews (2002) to evolve a pulse-coupled HH neural network driven by stochastic spike input. Note that the stiffness of HH equations are quite different, especially between the spike and non-spike periods, and we find that the ETD2 method may introduce a relatively large error in the membrane potentials in the non-stiff period if using the same time step as that in the stiff period. We then design an adaptive ETD2 method (AETD2) that using different decompositions of the linear and non-linear parts in stiff and non-stiff periods. In addition, for the situation where neurons generate spikes in the time step, the effects of the spikes are carefully recalibrated in our AETD2 method to achieve a second-order numerical accuracy. Our AETD2 method is capable of using a large time step, while achieving the same high accurate traces of membrane potential of each neuron as the second-order RK (RK2) method using a very small time step. It can improve computational efficiency more than one order of magnitude compared with the RK2 method. This high numerical accuracy and computational efficiency can be achieved over a wide range of dynamical regimes and does not depend on the network connectivity or size.

2. Materials and Methods

2.1. The Model

The dynamics of the ith neuron of an HH neural network is governed by

where C is the cell membrane capacitance, Vi is the membrane potential, mi, hi, and ni are gating variables for sodium and potassium currents, respectively (Dayan and Abbott, 2001). The parameters VNa, VK, and VL are the reversal potentials for the sodium, potassium, and leak currents, respectively, GNa, GK, and GL are the corresponding maximum conductances. The form of αz and βz are set as (Dayan and Abbott, 2001): αm(V) = (0.1V+4)/(1−exp(−0.1V−4)), βm(V) = 4exp(−(V+65)/18), αh(V) = 0.07exp(−(V+65)/20), βh(V) = 1/(1+exp(−3.5−0.1V)), αn(V) = (0.01V+0.55)/(1−exp(−0.1V−5.5)), and βn(V) = 0.125exp(−(V+65)/80).

The input current is given by

where and are excitatory and inhibitory conductances, respectively, and are the corresponding reversal potentials. The dynamics of conductance , Q = E, I, is governed by

where is an auxiliary dynamical variable to make the conductance as a continuous function, δ(·) is the Dirac delta function, sil is the spike time of the feedforward Poisson input with strength FQ and rate ν, τjl is the lth spike time of the jth neuron, and and are slow decay and fast rise time scale, respectively. Each neuron is either excitatory or inhibitory and its coupling strength is labeled by its type E or I, respectively. For example, () is the coupling strength from the jth excitatory (inhibitory) neuron to its postsynaptic ith neuron. By analytically solving Equations (4) and (5), the spike-induced conductance change can be explicitly expressed as

where Θ(·) is the Heaviside function. For all neurons, we take FE = f and FI = 0. The model parameters are C = 1μF·cm−2, VNa = 50 mV, VK = −77 mV, VL = −54.387 mV, , , , mV, mV, ms, ms, ms, and ms (Dayan and Abbott, 2001).

The voltage Vi evolves continuously according to Equations (1) and (2). When it reaches the firing threshold Vth = −50 mV (Sun et al., 2009), we say the ith neuron generates a spike at this time, say τil. Then it will trigger its postsynaptic jth neuron's conductance change in the form of , Q = E, I. For the ease of discussion about our algorithm design, we consider an all-to-all connected network with , where Q = E, I, S is the coupling strength, and N is the total number of neurons in the network. Note that our algorithm can be easily extended to networks with more complicated connectivity structure.

2.2. Runge-Kutta Method

Without loss of generality, we consider the RK2 method as the benchmark and compare it with the ETD methods. We first introduce the RK2 method to evolve the HH neural network with a fixed time step Δt, for example, to evolve the system from time t = tk = kΔt to t = tk+1 = (k+1)Δt. Since the synaptic spike times in [tk, tk+1] can not be predetermined, one has to evolve the network without considering synaptic spike interactions and reconsider their effects by using spike-spike interactions at the end of time step (Hansel et al., 1998; Brette et al., 2007).

Due to the pulse-coupled dynamics in Equation (5), the numerical accuracy may be very low if the spike timing is not well estimated. For example, suppose that a presynaptic spike fired at between tk and tk+1. If one simply assigns it to be the end of time step tk+1, then the error of the spike-induced conductance change is

Therefore, the error with the magnitude of Δt will be introduced when the system evolves to t = tk+1.

We now solve the above issue arising from the pulse-coupled dynamics to achieve a second-order numerical accuracy. First, we evolve the HH neural network without considering the feedforward and synaptic spikes during the time interval [tk, tk+1]. Then, at time t = tk+1, some neuron's voltage may be above the threshold, i.e., generating a spike, say neuron i, if Vi, k<Vth and Vi, k+1≥Vth where Vi, k and Vi, k+1 represent Vi(tk) and Vi(tk+1), respectively. The spike time, say τil, can be estimated following the idea proposed in Hansel et al. (1998) and Shelley and Tao (2001). The neuron's membrane potential during the time interval can be approximated by a linear interpolation:

and the spike time τil can be estimated by solving the equation:

Since there may be some neurons firing and some feedforward spikes emitting during the time interval and they will induce the conductance change, the conductance should be then recalibrated. When the neuron firing and the feedforward spikes are not considered, the conductance variables in such cases, denoted by and , Q = E, I, are

and the conductance variables are then recalibrated by taking into account the neuron firing and the feedforward spikes as

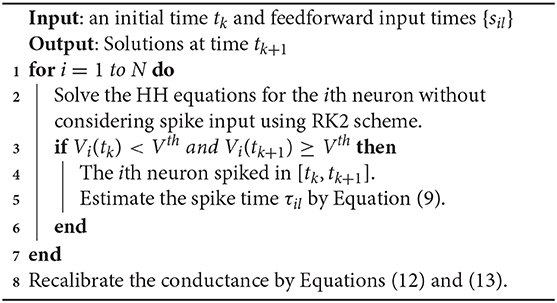

for j = 1, 2, …, N. A detailed algorithm of the RK2 method is given in Algorithm 1.

We show that the above algorithm can indeed achieve a second-order numerical accuracy as follows. If there are no feedforward or synaptic spikes, then all the dependent variables are infinitely differentiable and the RK2 method can achieve an error of order O(Δt2). For the time step that contains feedforward or synaptic spikes, an error of order O(Δt) is introduced in the conductance with the form of . Nevertheless, the dependent variables of V, m, h, and n can have an error of order O(Δt2). The synaptic spike times are estimated by a linear interpolation and also have an error of order O(Δt2). After recalibration shown in Equations (12) and (13), the conductance variables GQ and HQ, Q = E, I can achieve numerical accuracy of second-order at the end of the time step. Therefore, all the dependent variables V, m, h, n, GE, GI, HE, and HI that are numerically solved in each time step have an error of order O(Δt2) (see below for verification of numerical results).

2.3. Exponential Time Differencing Method

Exponential time differencing method is proposed to solve the stiff problem in differential equations by decomposing the system into a linear stiff term and a nonlinear non-stiff term (Hochbruck et al., 1998; Cox and Matthews, 2002; Kassam and Trefethen, 2005; Nie et al., 2008). Following this idea, we propose the ETD schemes for HH Equations (1) and (2) below. As illustrated in Algorithm 1, each neuron in the HH network is evolved independently and their conductances are recalibrated at the end of time step. Thus, one can first derive an ETD scheme for a single HH neuron and then consider the spike interactions among neurons, and obtain an ETD scheme for the numerical evolution of an HH neural network.

Consider the evolution of a single HH neuron from tk to tk+1. The first step of the ETD method is to rewrite Equations (1) and (2) as

where

and

where zk represents z(tk) for z = V, m, h, n of this neuron. Here, Fz(t, V, m, h, n) is actually a function of t, V, and z for z = m, h, n, but we write in this way for ease of illustration. Note that the linear coefficient cz in Equation (14) is a constant value in the kth time step [tk, tk+1] and is updated with respect to k. Multiplying Equation (14) by an integrating factor and taking integral from tk to tk+1, we obtain

for z = V, m, h, and n.

The next step of the ETD method is to derive proper approximations to the above integration. We take a second-order ETD formula with RK time stepping which was proposed as ETD2RK method in Cox and Matthews (2002). Let,

and approximate Fz during the time interval [tk, tk+1] by

for z = V, m, h, and n, where Fz, k represents Fz(tk, Vk, mk, hk, nk). Substituting the above approximation into Equation (19) yields the ETD2 scheme (the ETD method which has second-order numerical accuracy) which is given by

for z = V, m, h, and n. The procedure of the ETD2 algorithm for an HH neural network is similar to that of the RK2 algorithm given in Algorithm 1, but the RK2 scheme is replaced by the ETD2 scheme in Equation (22).

2.4. Adaptive Exponential Time Differencing Method

The ETD2 method can indeed use a large time step to improve computational efficiency, but we find that it will introduce relatively large error in the trajectories of neurons' membrane potentials and even lead to the missing of action potentials (see below for numerical results). In addition, the number of the missed action potentials in the ETD2 method can grow with the increase of time steps compared with the RK2 method using a small time step. Thus, it is important to design an efficient but also reliable ETD method to solve this issue.

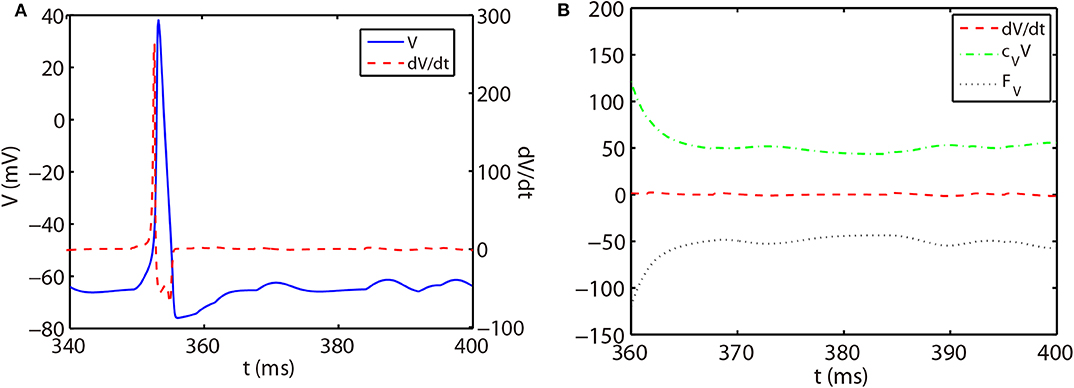

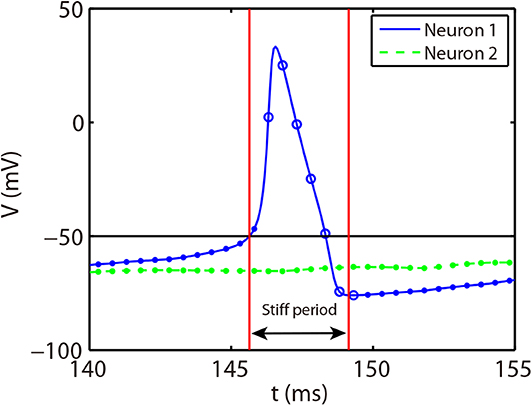

As shown in Figure 1A, the slope of voltage has a very large value when the neuron generates an action potential (spike period) and quickly reduces to a value around zero in the non-spike period until the next spike time. Therefore, the stiffness of HH equations is quite different between spike and non-spike periods. In the non-spike period, the slope of voltage is almost zero, while the linear and nonlinear parts in Equation (14) have a much larger absolute value and nearly cancel each other out as shown in Figure 1B. Therefore, the decomposition in Equation (14) may not be appropriate in the non-spike period since both the linear and nonlinear parts become stiff while the summation of them is indeed non-stiff. Based on this, we propose a different decomposition in the non-stiff period from that in the stiff period: taking cz = 0 and the whole right hand side of Equation (14) as the nonlinear part. For such a decomposition, the ETD2 scheme reduces to the RK2 scheme in the non-stiff period.

Figure 1. (A) Trajectory of voltage (blue solid curve) and the slope of voltage (red dashed curve) for a single HH neuron. (B) Trajectory of the slope of voltage (red dashed curve), linear part cVV (green dash-dotted curve), and nonlinear part FV (black dotted curve) in Equation (14) for the non-spike period. The time interval of (B) zooms into the later part of (A).

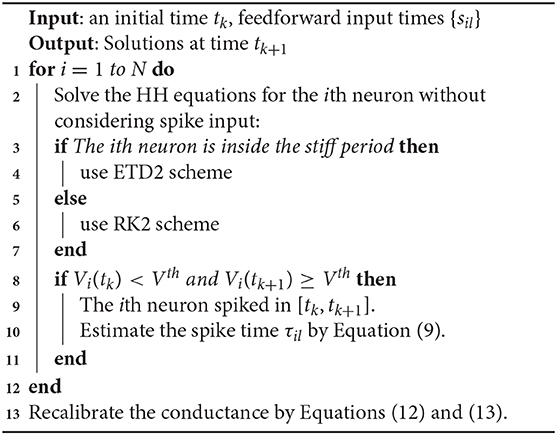

The stiff period of HH equations can be clearly identified as shown in Figure 1A and is defined as follows. For each spike event, the starting point of the stiff period is determined as the spike time when the voltage reaches the firing threshold Vth = −50 mV and the interval of stiff period is chosen as 3.5 ms which is sufficient long to cover the highly stiff region of the spike. Based on the above observation, we give our AETD2 method for HH neural network as following: each neuron is evolved using ETD2 scheme if it is in the stiff period and use the reduced ETD2 scheme, the RK2 scheme, otherwise, as shown in Figure 2. Detailed AETD2 algorithm is given in Algorithm 2.

Figure 2. Illustration of the AETD2 method. After neuron 1 fires a spike, we use the ETD2 scheme to evolve the HH equations for neuron 1 during the stiff period indicated by the red vertical lines, while the HH equations for neuron 2 is evolved using RK2 scheme since neuron 2 is in the non-stiff period. The starting point of the stiff period is determined as the spike time and it lasts for the following about 3.5 ms. The circles and dots indicate the time nodes where we use the ETD2 and RK2 schemes, respectively.

3. Results

We consider an all-to-all connected network of 80 excitatory and 20 inhibitory neurons driven by Poisson feedforward input. For the ease of illustration, we choose the Poisson input strength f = 0.06 mS·cm−2 and input rate ν = 300 Hz, and the coupling strength between neurons are chosen as S = 0.2 mS·cm−2 throughout this work, unless indicated otherwise. However, our algorithm can be applied to HH neural networks under a variety of dynamical regimes.

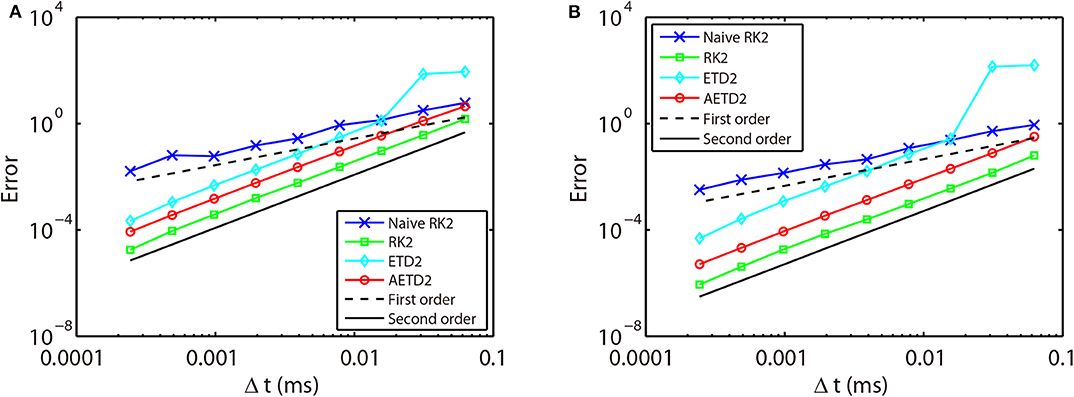

First, we verify the second-order numerical accuracy by performing convergence tests. A high precision solution is obtained by using RK2 method with a sufficiently small time step Δt = 1 × 10−6 ms and is denoted by a superscript “high.” It is compared with the solutions computed by the RK2, ETD2, and AETD2 methods with various values of larger time steps Δt = 2−4, 2−5, …, 2−12 ms which is denoted by a superscript “Δt.” Errors of membrane potentials at final run time T = 2, 000 ms and the last spike time of each neuron are computed:

where indicates the last spike time of the ith neuron during the run time interval. As shown in Figure 3, if one naively assigns the end of time step as the spike times in the RK2 method (naive RK2), the numerical accuracy of the membrane potentials and spike times can only be of the first-order. In contrast, if one determines the spike times by linear interpolation and recalibrate the conductances accordingly, all the RK2, ETD2, and AETD2 methods can achieve a second-order numerical accuracy. In addition, we find that the ETD2 method has much larger error compared with the RK2 and AETD2 methods using the same time step as shown in Figure 3. When using a time step larger than Δt = 2−6 = 0.0156 ms, the ETD2 method performs even worse than the naive RK2 method. The underlying reason is that the HH equations are almost non-stiff in the non-spike period, but the decomposition in Equation (14) induces a relatively large stiffness for the nonlinear term as discussed previously.

Figure 3. Errors of membrane potentials (A) and the last spike time of each neuron (B) in the all-to-all connected network when it is evolved using various time steps. Blue crosses are naive RK2 method without performing the linear interpolation for the estimate of the spike times. Green squares are RK2 method, cyan diamonds are ETD2 method, and red circles are AETD2 method. The last three methods all perform the linear interpolation to estimate the spike times. The dashed line and the solid line indicate the numerical convergence of the first-order and the second-order, respectively. The total run time T = 2, 000 ms.

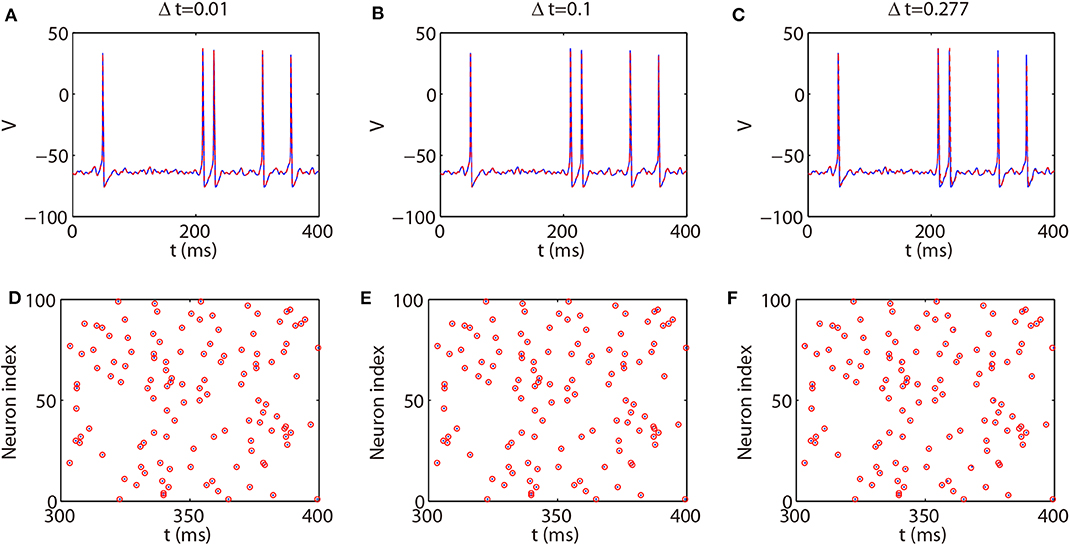

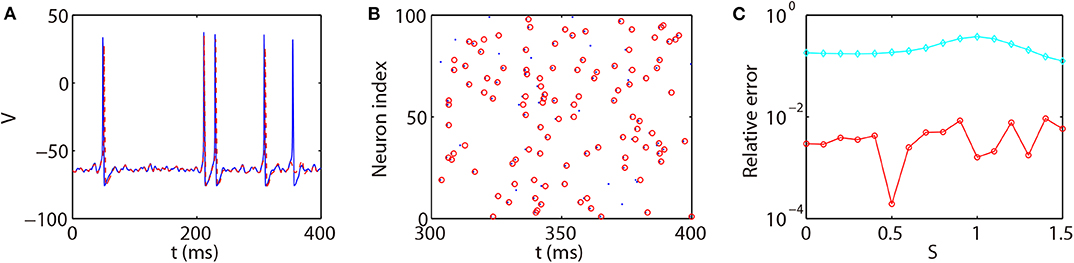

We next discuss the numerical performance of our AETD2 method and compare it with other different numerical methods. As shown in the top panel of Figure 4, the AETD2 method with large time steps (maximum time step Δt = 0.277 ms) can obtain the same high accuracy in membrane potentials as the RK2 method using a very small time step Δt = 0.01 ms. The bottom panel of Figure 4 shows the raster plots (neuron index vs. its spike time) of the spike events in the network. It can be seen that the spike times are well-captured by the AETD2 method with large time steps. In contrast, as shown in Figures 5A,B, the ETD2 method is highly inaccurate in terms of voltage traces and raster plots when the time step Δt = 0.277 ms is used (the maximum time step in AETD2 method). Figure 5C shows the relative error in the mean firing rate (the average number of synaptic spikes per unit time) between the ETD2 and RK2 methods, and that between the AETD2 and RK2 methods over different values of coupling strength. It can be seen that the ETD2 method can achieve only one digit of numerical accuracy while the AETD2 method can robustly achieve more than two digits of numerical accuracy when the time step Δt = 0.277 ms is used in both methods. Therefore, the ETD2 method has worse numerical performance compared with the AETD2 method.

Figure 4. Comparing the AETD2 method with the RK2 method. (Top) Traces of membrane potential of an HH neuron in the all-to-all connected network. (Bottom): Raster plots of the network spikes. The blue solid curves and dots indicate the results by the RK2 method with time step Δt = 0.01 ms, while the red dashed curves and circles indicate the results by the AETD2 method. The time steps for the AETD2 method are Δt = 0.01, 0.1, 0.277 ms for (A,D), (B,E), and (C,F), respectively.

Figure 5. Comparing the ETD2 and AETD2 methods with the RK2 method. (A) Voltage trace of the same HH neuron used in Figure 4. (B) Raster plot of the network spikes. The blue solid curve and dots indicate the results by the RK2 method with time step Δt = 0.01 ms while the red dashed curve and circles indicate the results by the ETD2 method with time step Δt = 0.277 ms. The coupling strength is S = 0.2 mS·cm−2. (C) Relative error in the mean firing rates between the ETD2 and RK2 methods (cyan diamonds), and that between the AETD2 and RK2 methods (red circles) for different choice of the coupling strength. Both the ETD2 and AETD2 methods use time step Δt = 0.277 ms. The benchmark mean firing rate is computed by the RK2 method with a very small time step Δt = 1 × 10−6 ms.

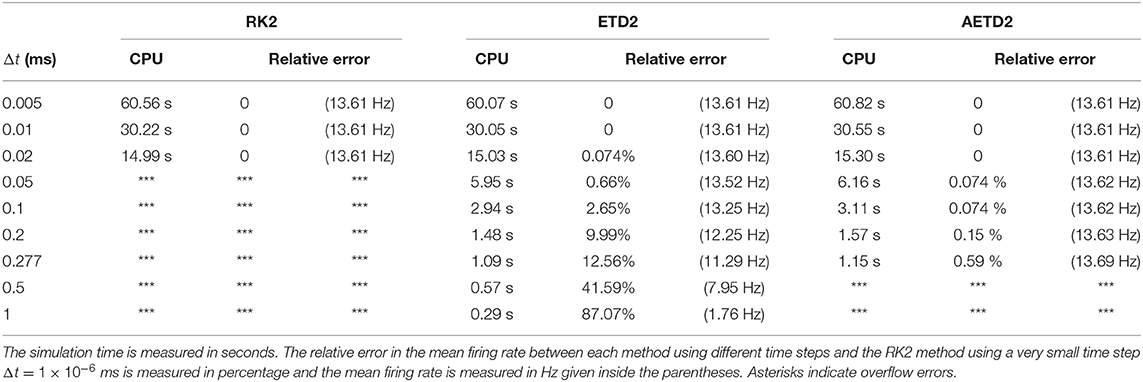

To demonstrate the efficiency of our AETD2 method, we compare the simulation time that RK2, ETD2, and AETD2 methods take for a common total run time. We simulate the all-to-all connected network by the RK2, ETD2, and AETD2 methods on a Windows platform using an Intel i7 2.6 GHz processor (the weblink of the source codes is given in section 4), and the simulation time and numerical accuracy of mean firing rate are given in Table 1. The AETD2 method can achieve over an order of magnitude of speedup compared with the RK2 method while achieving the same high accuracy in terms of the mean firing rate.

In addition, we define the efficiency ratio of the AETD2 method over the RK2 method as

where TRK2 and TAETD2 indicate the simulation times of the RK2 and AETD2 methods, respectively, for the HH neural network to evolve the run time T. Note that the RK2 and ETD2 methods take almost the same simulation time when using the same small time step as shown in Table 1. Thus, the above efficiency ratio can be approximated by the ratio of the total number of time steps each method requires as

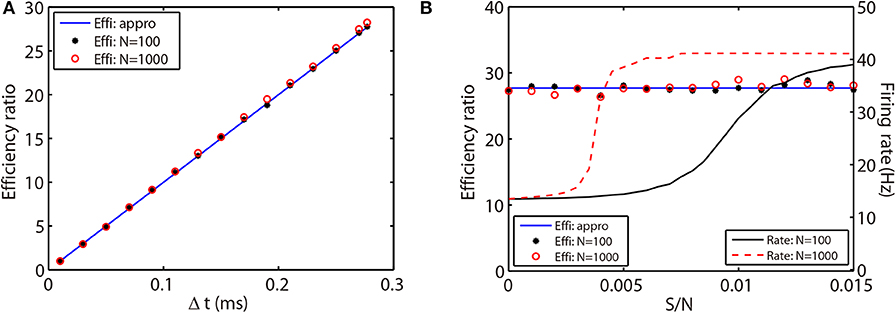

where ΔtRK2 and ΔtAETD2 indicate the time steps used in the RK2 and AETD2 methods, respectively. To demonstrate that the above efficiency ratio is independent of the network connectivity, size, and dynamical regimes, we evolve the all-to-all connected network of 80 excitatory and 20 inhibitory neurons and a randomly connected network of 800 excitatory and 200 inhibitory neurons with a variety choice of coupling strength. Not surprisingly, the efficiency ratio approximated by Equation (26) agrees well with the one measured by the ratio of simulation time between the RK2 and AETD2 methods in both two networks as shown in Figure 6. Hence, the efficiency ratio of the AETD2 method relies on only the size of evolved time steps.

Figure 6. Efficiency ratio of the AETD2 method when evolving the HH neural network using various time steps (A) and coupling strength (B). In (A,B), the blue lines are efficiency ratio measured by the approximation in Equation (26), while the black stars and red circles are the efficiency ratio measured by the ratio of simulation times between the RK2 and AETD2 methods. The black stars represent the results for the all-to-all connected HH neural network of 80 excitatory and 20 inhibitory neurons, while the red circles represent the results for an HH neural network of 800 excitatory and 200 inhibitory neurons randomly connected with probability 25%. The black solid and red dashed curves in (B) are the mean firing rates in the smaller network of 100 neurons and larger network of 1,000 neurons, respectively. The coupling strength in (A) is S/N = 0.002 mS·cm−2 and the time step for AETD2 method in (B) is Δt = 0.277 ms. The time step for RK2 method is 0.01 ms and total run time is T = 50 seconds in both (A,B).

4. Discussion

We have presented an adaptive second-order ETD method to evolve the pulse-coupled HH neural network. Our AETD2 method can solve the stiff problem in the HH equations when an HH neuron generates an action potential (spike period). It can use a large time step to raise computational efficiency while accurately capturing dynamical properties of HH neurons such as the trace of membrane potentials, spike times of each neuron, and the mean firing rate. We point out that our AETD2 method can robustly enlarge time steps and raise computational efficiency over one order of magnitude compared with the RK2 method. This high efficiency seems to be independent of parameter choice of connectivity structure, dynamical regimes, or network size.

Our adaptive ideas of ETD methods can be applied to dynamical systems with stiff and non-stiff periods. In addition, we point out that the ETD scheme in our AETD2 algorithm can be chosen in a variety of forms according to the properties of dynamical systems. Here, we use the ETD2 scheme derived by approximating the integration in Equation (19) with RK time stepping. Other forms of numerical schemes can also be used to approximate the integration. For example, one can use a liner interpolation to approximate the nonlinear part in Equation (14) to obtain another form of ETD2 scheme. Besides, one can derive an ETD scheme following the idea proposed in Börgers and Nectow (2013) by linearly approximating the HH equations. The derived ETD scheme is proven to be unconditionally stable for HH system in Börgers and Nectow (2013). All these different ETD schemes can be easily embedded into our AETD2 method in the same way as given in Algorithm 2. For example, we can embed the ETD formula proposed in Börgers and Nectow (2013) into the AETD2 method to evolve the reduced Traub Miles (RTM) neural networks (Ermentrout and Kopell, 1998; Olufsen et al., 2003; Börgers and Nectow, 2013). The dynamical equations for an RTM neuron is almost the same as that for an HH neuron except that the gating variable m is described by mi = αm(Vi)/(αm(Vi)+βm(Vi)). The forms of α and β for the RTM neurons are set as: αm(Vi) = 0.32(Vi+54)/(1−exp(−(Vi+54)/4)), βm(Vi) = 0.28(Vi+27)/(exp((Vi+27)/5)−1), αh(Vi) = 0.128exp(−(Vi+50)/18), βh(Vi) = 4/(1+exp(−(Vi+27)/5)), αn(Vi) = 0.032(Vi+52)/(1−exp(−(Vi+52)/5)), and βn(Vi) = 0.5exp(−(Vi+57)/40).

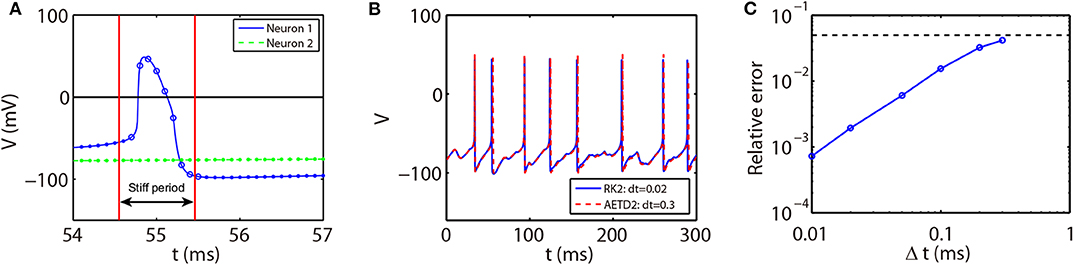

Note that the rising phase of action potentials for the RTM neurons is extremely short, around 0.03 ms as shown in Figure 7A. In such a situation, it may not be appropriate to choose the spike time (when the voltage reaches the firing threshold) as the starting point of the stiff period in the AETD2 method as shown in Figure 7A since large numerical error will be introduced, especially when a large time step is used, e.g., time step Δt = 0.3 ms. This is because the system is evolved by the RK2 scheme during the time step that contains the rapid rising region of the neuron's action potential. Therefore, to achieve high numerical accuracy, the interval of the stiff period should cover the rapid rising region. To achieve this, we then define the stiff period of the RTM neurons as the region where the magnitude of the slope of voltage is over a proper threshold as shown in Figure 7A. We point out that our AETD2 method using a large time step can still accurately capture the membrane potential traces and the mean firing rates compared with the RK2 method using a small time step as shown in Figures 7B,C. Therefore, the definition of the stiff period in our AETD2 method can be flexibly determined based on dynamical properties of studied systems.

Figure 7. The AETD2 method for the RTM neural network. (A) Illustration of the AETD2 method. We take a relatively high firing threshold Vth = 0 mV for the RTM neurons indicated by the solid horizontal line. The stiff period is defined as the region where |dV/dt|≥20. The circles and dots indicate the time nodes where we use the ETD2 and RK2 schemes, respectively. (B) Traces of membrane potential of an RTM neuron. The blue solid and red dashed curves indicate the results by the RK2 method with time step Δt = 0.02 ms and the AETD2 method with time step Δt = 0.3 ms, respectively. (C) Relative error in the mean firing rates between the AETD2 and RK2 methods for different choice of time steps used in the AETD2 method for an all-to-all connected RTM network of 80 excitatory and 20 inhibitory neurons with Poisson input. The dashed horizontal line indicates 5% error, relative error = 0.05. The benchmark mean firing rate is computed by the RK2 method with a very small time step Δt = 1 × 10−6. The parameters for RTM model is C = 1μF·cm−2, VNa = 50 mV, VK = −100 mV, VL = −67 mV, GNa = 100 mS·cm−2, GK = 80 mS·cm−2, and GL = 0.1 mS·cm−2.

In this work, the numerical accuracy of our AETD2 method is second-order. In some situations, high accurate traces of membrane potentials may be required, especially the accurate shapes of action potentials (Traub et al., 2001; Kopell and Ermentrout, 2004). Therefore, one future work may be the design of the fourth-order ETD method. As illustrated above, due to the discontinuity arising from the pulse-coupled dynamics, an even more careful recalibration needs to be designed to achieve fourth-order numerical accuracy.

Finally, we point out that our AETD2 method can be easily extended to networks of other HH type neurons (Pospischil et al., 2008). And our AETD2 method can also robustly achieve high numerical accuracy and efficiency. In addition, our method is naturally a parallel algorithm which can be applied to simulations of large-scale neural network dynamics. For reproducibility of our results by other researchers, all the source codes written in C++ can be accessed at http://github.com/KyleZhongqi/ETD2_HH.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Author Contributions

ZT and DZ designed the research, wrote and code, ran the simulations, analyzed the results, and wrote the paper.

Funding

This work was supported by National Science Foundation of China with Grant Nos. 11671259, 11722107, and SJTU-UM Collaborative Research Program, and by the Student Innovation Center at Shanghai Jiao Tong University (DZ).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This manuscript has been released as a Pre-Print at arXiv repository (Tian and Zhou, 2019). We dedicate this paper to our late professor David Cai.

References

Aihara, K. (1986). Chaotic oscillations and bifurcations in squid giant axons. Chaos 257–269. doi: 10.1515/9781400858156.257

Börgers, C., Epstein, S., and Kopell, N. J. (2005). Background gamma rhythmicity and attention in cortical local circuits: a computational study. Proc. Natl. Acad. Sci. U.S.A. 102, 7002–7007. doi: 10.1073/pnas.0502366102

Börgers, C., and Nectow, A. R. (2013). Exponential time differencing for Hodgkin-Huxley-like odes. SIAM J. Sci. Comput. 35, B623–B643. doi: 10.1137/120883657

Brette, R., Rudolph, M., Carnevale, T., Hines, M., Beeman, D., Bower, J. M., et al. (2007). Simulation of networks of spiking neurons: a review of tools and strategies. J. Comput. Neurosci. 23, 349–398. doi: 10.1007/s10827-007-0038-6

Cox, S. M., and Matthews, P. C. (2002). Exponential time differencing for stiff systems. J. Comput. Phys. 176, 430–455. doi: 10.1006/jcph.2002.6995

Dayan, P., and Abbott, L. (2003). Theoretical neuroscience: computational and mathematical modeling of neural systems. J. Cogn. Neurosci. 15, 154–155. doi: 10.1162/089892903321107891

de la Hoz, F., and Vadillo, F. (2008). An exponential time differencing method for the nonlinear schrödinger equation. Comput. Phys. Commun. 179, 449–456. doi: 10.1016/j.cpc.2008.04.013

Ermentrout, G. B., and Kopell, N. (1998). Fine structure of neural spiking and synchronization in the presence of conduction delays. Proc. Natl. Acad. Sci. U.S.A. 95, 1259–1264. doi: 10.1073/pnas.95.3.1259

Guckenheimer, J., and Oliva, R. A. (2002). Chaos in the Hodgkin-Huxley model. SIAM J. Appl. Dyn. Syst. 1, 105–114. doi: 10.1137/S1111111101394040

Hansel, D., Mato, G., Meunier, C., and Neltner, L. (1998). On numerical simulations of integrate-and-fire neural networks. Neural Comput. 10, 467–483. doi: 10.1162/089976698300017845

Hansel, D., and Sompolinsky, H. (1996). Chaos and synchrony in a model of a hypercolumn in visual cortex. J. Comput. Neurosci. 3, 7–34. doi: 10.1007/BF00158335

Hassard, B. (1978). Bifurcation of periodic solutions of the Hodgkin-Huxley model for the squid giant axon. J. Theor. Biol. 71, 401–420. doi: 10.1016/0022-5193(78)90168-6

Hochbruck, M., Lubich, C., and Selhofer, H. (1998). Exponential integrators for large systems of differential equations. SIAM J. Sci. Comput. 19, 1552–1574. doi: 10.1137/S1064827595295337

Hochbruck, M., and Ostermann, A. (2010). Exponential integrators. Acta Numer. 19, 209–286. doi: 10.1017/S0962492910000048

Hodgkin, A. L., and Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117:500. doi: 10.1113/jphysiol.1952.sp004764

Kassam, A.-K., and Trefethen, L. N. (2005). Fourth-order time-stepping for stiff pdes. SIAM J. Sci. Comput. 26, 1214–1233. doi: 10.1137/S1064827502410633

Kopell, N., and Ermentrout, B. (2004). Chemical and electrical synapses perform complementary roles in the synchronization of interneuronal networks. Proc. Natl. Acad. Sci. U.S.A. 101, 15482–15487. doi: 10.1073/pnas.0406343101

Lin, K. K. (2006). Entrainment and chaos in a pulse-driven Hodgkin-Huxley oscillator. SIAM J. Appl. Dyn. Syst. 5, 179–204. doi: 10.1137/050631677

Nie, Q., Wan, F. Y., Zhang, Y.-T., and Liu, X.-F. (2008). Compact integration factor methods in high spatial dimensions. J. Comput. Phys. 227, 5238–5255. doi: 10.1016/j.jcp.2008.01.050

Olufsen, M. S., Whittington, M. A., Camperi, M., and Kopell, N. (2003). New roles for the gamma rhythm: population tuning and preprocessing for the beta rhythm. J. Comput. Neurosci. 14, 33–54. doi: 10.1023/A:1021124317706

Pospischil, M., Toledo-Rodriguez, M., Monier, C., Piwkowska, Z., Bal, T., Frégnac, Y., et al. (2008). Minimal Hodgkin-Huxley type models for different classes of cortical and thalamic neurons. Biol. Cybern. 99, 427–441. doi: 10.1007/s00422-008-0263-8

Shelley, M. J., and Tao, L. (2001). Efficient and accurate time-stepping schemes for integrate-and-fire neuronal networks. J. Comput. Neurosci. 11, 111–119. doi: 10.1023/A:1012885314187

Somers, D. C., Nelson, S. B., and Sur, M. (1995). An emergent model of orientation selectivity in cat visual cortical simple cells. J. Neurosci. 15, 5448–5465. doi: 10.1523/JNEUROSCI.15-08-05448.1995

Sun, Y., Zhou, D., Rangan, A. V., and Cai, D. (2009). Library-based numerical reduction of the Hodgkin-Huxley neuron for network simulation. J. Comput. Neurosci. 27, 369–390. doi: 10.1007/s10827-009-0151-9

Tian, Z.-Q. K., and Zhou, D. (2019). Exponential time differencing algorithm for pulse-coupled Hodgkin-Huxley neuronal networks. arXiv [preprint] arXiv:1910.08724.

Keywords: Hodgkin-Huxley, exponential time differencing method, efficiency, pulse-coupled, second-order

Citation: Tian ZK and Zhou D (2020) Exponential Time Differencing Algorithm for Pulse-Coupled Hodgkin-Huxley Neural Networks. Front. Comput. Neurosci. 14:40. doi: 10.3389/fncom.2020.00040

Received: 20 November 2019; Accepted: 16 April 2020;

Published: 08 May 2020.

Edited by:

Thomas Nowotny, University of Sussex, United KingdomReviewed by:

Lianchun Yu, Lanzhou University, ChinaMarcel Stimberg, INSERM U968 Institut de la Vision, Université de la Sorbonne, France

Copyright © 2020 Tian and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Douglas Zhou, zdz@sjtu.edu.cn

Zhong-qi Kyle Tian

Zhong-qi Kyle Tian Douglas Zhou

Douglas Zhou