Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds

- 1 Cognitive Brain Research Unit, Institute of Behavioural Sciences, Cognitive Science, University of Helsinki, Helsinki, Finland

- 2 Center of Excellence in Interdisciplinary Music Research, University of Jyväskylä, Jyväskylä, Finland

- 3 Department of Psychology, University of Jyväskylä, Jyväskylä, Finland

- 4 Institute of Behavioral Sciences, University of Helsinki, Helsinki, Finland

Neurocognitive studies have demonstrated that long-term music training enhances the processing of unattended sounds. It is not clear, however, whether music training also modulates rapid (within tens of minutes) neural plasticity for sound encoding. To study this phenomenon, we examined whether adult musicians display enhanced rapid neural plasticity compared to non-musicians. More specifically, we compared the modulation of P1, N1, and P2 responses to standard sounds between four unattended passive blocks. Infrequent deviant sounds were interspersed among the standard sounds (the so-called oddball paradigm). In the middle of the experiment (after two blocks), an active task was presented. Source analysis for event-related potentials (ERPs) showed that N1 and P2 source activation was selectively decreased in musicians after 15 min of passive exposure to sounds and that P2 source activation was re-enhanced after the active task in musicians. Additionally, ERP analysis revealed that in both musicians and non-musicians, P2 ERP amplitude was enhanced after 15 min of passive exposure but only at the frontal electrodes. Furthermore, in musicians, the N1 ERP was enhanced after the active discrimination task but only at the parietal electrodes. Musical training thus modulates the rapid neural plasticity reflected in N1 and P2 source activation for unattended regular standard sounds. Enhanced rapid plasticity of N1 and P2 is likely to reflect faster auditory perceptual learning in musicians.

Introduction

Several neurocognitive studies have demonstrated that long-term musical training enhances sound processing (for reviews, see Münte et al., 2002; Tervaniemi, 2009). Enhanced auditory perception in musicians is likely to result from auditory perceptual learning during several years of intensive, daily music playing. Auditory perceptual learning refers to an improved ability of the senses to discriminate differences in the attributes of stimuli. Neurally, the improved discrimination can be observed as rapid plastic changes in the responses to the learned stimuli. Auditory perceptual learning can occur either through goal-oriented active practice with feedback and repetition or through passive exposure by encoding event probabilities inherent in the environment (Goldstone, 1998; Gilbert et al., 2001) such as statistical rules inherent in speech and in music.

Rapid plasticity refers to the capacity of the neural system to change its functional properties within tens of minutes to optimize the responsiveness for processing demands. Auditory event-related potential (ERP) studies on rapid plasticity can provide an objective evaluation of the effectiveness of learning and rehabilitation on auditory neurocognition. Typical learning-related plastic changes are enhanced ERP responses (i.e., facilitation) or diminished responses with or without the capacity to recover for the auditory stimuli (i.e., habituation and adaptation, respectively).

Auditory ERP components, such as P1, N1, and P2, are ideal for studying rapid plasticity because, although they occur automatically after the presentation of any sound, they are also sensitive to training and various top-down effects, such as active attention and reinforcement (for reviews, see Purdy et al., 2001; Seitz and Watanabe, 2005). For example, the auditory evoked P1 response, which occurs 50–80 ms after sound onset and reflects thalamo-cortical processing and a non-specific gating mechanism, is modulated by the level of attention (Boop et al., 1994). Although no rapid plasticity has been reported for P1, long-term musical training modulates P1 (see next paragraph). The N1 response, peaking at 80–110 ms after sound onset, reflects acoustic feature detection and change detection (Näätänen and Picton, 1987). For sounds, N1 is enhanced during selective attention tasks (e.g., Hillyard et al., 1973; Woldorff and Hillyard, 1991) and demonstrates rapid plasticity after 15–40 min of intensive training (Brattico et al., 2003; Ross and Tremblay, 2009). The P2 response, which is elicited at 160–200 ms after sound onset, reflects further stimulus evaluation and classification, and is typically enhanced after prolonged training (Reinke et al., 2003; Bosnyak et al., 2004; Tremblay et al., 2010). Rapid plasticity in the auditory system can occur without behavioral improvements in discrimination accuracy or even precede them (e.g., Ross and Tremblay, 2009). These findings reflect the automaticity of the neural system in extracting auditory events even without active attention to sounds.

Although the effects of long-term music training on neural auditory processing have been extensively studied (Münte et al., 2002; Tervaniemi, 2009), little is known about rapid (short-term) plasticity in musicians. However, it is possible that the rapid plasticity for sounds (as described previously for N1 and P2 in non-musicians) could be enhanced in musicians. Interestingly, the findings related to the impact of musical training on the automatic processing of sounds, as indicated by the P1, N1, and P2 ERP components (based on traditional ERP analysis and ERP source estimates), are not entirely clear. For instance, P1 has been reported to show larger (P50m: Schneider et al., 2005) and smaller amplitudes (Nikjeh et al., 2009) as well as different lateralizations (P1m: Kuriki et al., 2006) in musicians compared to non-musicians. Evidence about N1 has also been discrepant. In some studies, the N1 response was larger or faster in musicians (Baumann et al., 2008; N1m: Kuriki et al., 2006; omission-related N1: Jongsma et al., 2005) but not in others (N1m: Schneider et al., 2002; Lütkenhöner et al., 2006). Furthermore, the P2 response was larger in musicians than in non-musicians during passive listening (Shahin et al., 2003, 2005) and active discrimination (Jongsma et al., 2005; P2m: Kuriki et al., 2006, see Baumann et al., 2008). Taken together, it is not generally agreed upon whether the rapid plasticity of the P1, N1, and P2 responses in musicians is enhanced compared to non-musicians and, most importantly, whether passive exposure to sounds is sufficient to produce rapid plasticity or whether active discrimination training is necessary.

The main objective of the present study was to investigate the interplay between the short- and long-term effects of auditory perceptual learning. The effects of long-term musical training on the rapid (short-term) plasticity of unattended standard sounds during one session were evaluated. Standard (i.e., non-target) sounds were regularly presented among irregularly presented deviant sounds (so-called oddball paradigm). In our previous report based on the current data set, we found that the rapid plasticity for unattended, infrequently deviating sounds occurred faster in musicians than in non-musicians (Seppänen et al., submitted). More specifically, only musicians showed a significant habituation of MMN source activation for deviant sounds before the active discrimination. We concluded that music training enhances the build-up of preattentive prediction coding for deviating auditory events. However, another parallel possibility is that musical expertise enhances the rapid plasticity also for frequently presented (non-deviating) standard sounds. Unattended processing of standard sounds might have a crucial role for predicting auditory events since the standard sounds help the auditory system to build rules also for irregular events (e.g., Haenschel et al., 2005; Baldeweg, 2007; Bendixen et al., 2007). To explore this alternative, we examined whether the rapid plasticity of the P1, N1, and P2 responses for the standard sounds differed between professional musicians and non-musicians. This question was studied by comparing the neural changes in P1, N1, and P2 elicitation for standard sounds between experimental blocks when participants ignored auditory stimuli during 1 h of passive exposure to sounds. Based on previous literature on musicians, our hypothesis was that the rapid plasticity would be most pronounced for N1 and P2 specifically in musicians. Further, generally enhanced sound processing in musicians could be linked to an enhanced rapid plasticity for sounds, such as greater N1 decrease and P2 enhancement between blocks compared with non-musicians.

Our second question was whether active attention to sounds is required to induce the rapid plasticity of the P1, N1, and P2 responses. This question was studied by comparing the neural responses to standard sounds presented before and after the active auditory discrimination task. Based on the previous findings, we hypothesized that both musical training and active attention (during the active task) modulate the rapid plasticity for standard sounds. We found that musical training modulated the rapid plasticity of N1 and P2 source activation but not that of P1. Active attention also modulated the plasticity of N1 and P2.

Materials and Methods

Ethics Statement

Before the experiment, participants gave written informed consent. The experiment was approved by the Ethics Committee of the Department of Psychology, University of Helsinki, and conducted in accordance with the Declaration of Helsinki.

Participants

The participants were musicians (n = 14, nine women, five men, age range = 21–39, mean age = 25 ± 5 SD) and non-musicians (n = 16, nine women, seven men, age range = 19–31, mean age = 24 ± 3 SD). The musicians had started to play a musical instrument at the age of 8 years on average (±3 SD) and had played for a total of 17 years on average. None of the non-musicians had any professional music training. However, most (12 out of 16 non-musicians) had played a musical instrument for over 1 year as a hobby during their first school years. Four non-musicians reported currently playing 0.5–1 h per week. Age did not differ significantly between groups (t28 = 0.377, P = 0.71). The musicians had graduated from Finnish universities that provide professional musical education or were active students in those institutions. The non-musicians were students from the University of Helsinki, Finland. The participants were recruited through announcements on student email lists and information boards. All participants had normal hearing and normal or corrected-to-normal vision. None of the participants reported a history of neurological or psychiatric disorders. All participants were Finnish speaking.

Procedure

Experimental sessions were conducted on two separate days. Here, we report the results for the EEG recording from the first day. Participants were compensated for their voluntary participation with movie tickets.

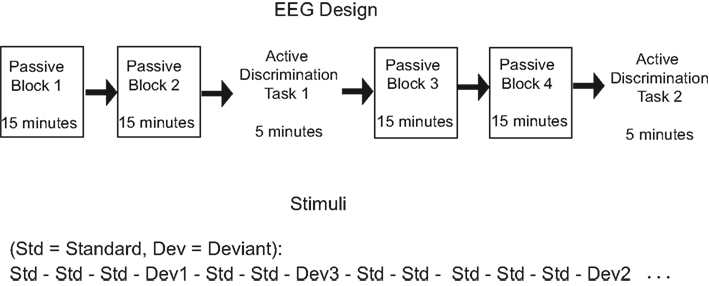

The individual hearing threshold was determined before the recording by presenting a short excerpt of the experimental stimuli binaurally through headphones. During the recordings, the stimuli were presented binaurally at 50 dB above the individual threshold while the participant sat in a comfortable chair in an electrically shielded chamber. Two passive listening blocks (Blocks 1 and 2, 15 min each) were followed by Active Task 1 (5 min), then by two further passive listening blocks (Blocks 3 and 4), and finally by Active Task 2 (5 min) (see Figure 1). This design allowed us to examine the effects of passive exposure to sounds (i.e., neural changes between Blocks 1 and 2 before the active task) and the effects of active attention on ERP responses to unattended sounds (i.e., neural changes between blocks presented before and after the active tasks). In the passive blocks, participants were instructed to ignore the sounds and concentrate on a self-selected muted movie with subtitles while hearing the stimuli. In the active conditions, participants were instructed to press a button whenever they noticed a deviating sound among the standard sounds. Before the first active task, both groups had a short self-paced practice (not analyzed) with examples of each deviant. During the active tasks, half of the participants in the musician and the non-musician groups were told that they would receive visual feedback after each correct answer. There was no visual feedback for incorrect or missed answers. The other halves of the groups were instructed to look at the fixation cross on the screen, but the sound stimuli and the task were the same as for the feedback group. Because only responses to standard (and not deviant) sounds are examined in the current analysis, the feedback manipulation was not analyzed.

Stimuli

During both the passive and active conditions, an oddball paradigm was used. In the oddball paradigm, deviant sounds were presented randomly among frequently presented standard sounds. The frequency level of the stimuli (based on the standard) was varied between the blocks to avoid neural fatigue and frequency-specific adaptation. Thus, in different blocks, standard sounds were harmonically rich tones with a fundamental frequency of 466.16, 493.88, or 523.25 Hz. Each sound was composed of the fundamental and two additional harmonic partials in proportions of 60, 30, and 15%. Sounds were 150 ms in duration (with 10 ms rise and fall times). Pitch, duration, and sound location (interaural time and decibel difference) deviants of three difficulty levels were presented infrequently among the standard sounds (not reported here; for a detailed description, see Seppänen et al., submitted). Stimulus-onset asynchrony (SOA) was 400 ms in both passive and attentive conditions. Only the passive condition and standard stimuli were analyzed in this study.

All blocks started with five standard tones that were not analyzed because of the rapidly decaying orientation response at the beginning of the stimulation. At least one standard tone was presented after each deviant sound. In each passive block, all of the deviant types and their difficulty levels were presented. Seventy percent of all stimuli were standard sounds. Each deviant type (pitch, duration, and location) was presented 10% of the time. Each 10% was further divided among three difficulty levels (easy, medium, and difficult). In each passive block, 1,575 standard sounds were presented. In each active task, the stimuli and SOA were the same as in the passive conditions, but the number of trials for each deviant differed between individuals because the number of deviant stimuli presented depended on how many correct answers the participant gave. The better the participant discriminated the deviating sounds, the more difficult the active task gradually became. Approximately 525 standard sounds were presented in each active task. The number of standard sounds in the active tasks did not differ significantly between musicians and non-musicians.
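The stimulus counts above can be cross-checked against the stated block length. The following is a minimal sketch of that arithmetic, assuming the 70/10/10/10% proportions hold exactly within a passive block; the function and constant names are illustrative and not taken from the study.

```python
# Back-of-the-envelope check of the passive-block design described in the text.
# Assumption: the 70% / 10% / 10% / 10% proportions hold exactly within a block.
SOA_MS = 400              # stimulus-onset asynchrony (from the text)
STANDARD_SHARE = 0.70     # proportion of standard sounds (from the text)
N_STANDARDS = 1575        # standards per passive block (from the text)
N_DEVIANT_TYPES = 3       # pitch, duration, location
N_DIFFICULTY_LEVELS = 3   # easy, medium, difficult


def block_summary(n_standards=N_STANDARDS):
    """Return the stimulus counts and duration implied by the design figures."""
    total = round(n_standards / STANDARD_SHARE)
    deviants = total - n_standards
    per_type = deviants // N_DEVIANT_TYPES
    per_level = per_type // N_DIFFICULTY_LEVELS
    duration_min = total * SOA_MS / 60000
    return {
        "total_stimuli": total,
        "deviants": deviants,
        "deviants_per_type": per_type,
        "deviants_per_type_and_level": per_level,
        "duration_min": duration_min,
    }


summary = block_summary()
print(summary)
```

Under these assumptions, a passive block contains 2,250 stimuli and lasts 15 min, which agrees with the block duration given in the Procedure.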

Sound files were created in Adobe Audition (Adobe Systems, Inc., USA) with a sample rate of 44 kHz and 16-bit samples. Passive blocks with semi-randomized stimuli were generated with Seqma (developed by Tuomas Teinonen, University of Helsinki). Presentation (Neurobehavioral Systems, Inc., USA) software was used for the stimulus presentation.

EEG Acquisition and Signal Processing

The BioSemi ActiveTwo measurement system (BioSemi, The Netherlands) with a 64-channel cap with active electrodes was used. Additional electrodes were used to record the signal from the mastoids behind the auricles, electro-oculography (EOG) below the lower eyelid of the right eye, and EEG reference on the nose.

EEG data were down-sampled offline to 512 Hz from the online sampling rate of 2,048 Hz before further processing in the BESA v5.2 software (MEGIS Software GmbH, Germany). Large muscular artifacts were first visually checked and removed manually, and channels with relatively large high-frequency noise compared with the neighboring channels were interpolated. Automatic adaptive artifact correction was conducted for the continuous data using criteria of 150 μV for horizontal EOG and 250 μV for vertical EOG (Berg and Scherg, 1994; Ille et al., 2002). Automatic correction uses a principal component analysis (PCA) to separate spatial (topography) components for the artifact and brain signal. Then, the EEG is reconstructed by subtracting the artifact component from the original EEG. Further artifacts (such as blinks) that were not corrected were excluded based on individual amplitude thresholds (determined by the interactive BESA Artifact scanning tool) after epoching. The data were divided into epochs of 500 ms, starting 100 ms before sound onset (the pre-stimulus baseline) and ending 400 ms after sound onset. Thereafter, the EEG was high-pass filtered at 0.5 Hz and then low-pass filtered at 35 Hz. On average, 3–7% of all standard sounds were rejected (musicians: 3–6%, 40–93 trials on average; non-musicians: 3–9%, 43–149 trials on average) in the passive blocks. The number of rejected ERP trials for the standard sounds was higher for non-musicians than for musicians in Passive Block 4 (P = 0.009), but in the other blocks, there were no significant differences. After preprocessing, responses to deviant and standard stimuli were averaged separately for each participant, block, and stimulus. ERPs to deviant tones will be reported elsewhere. Averaged files were converted into ASCII multiplexed format for further analysis in Matlab R2008a (The MathWorks, Inc., USA).
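The epoching and averaging steps can be illustrated with a short single-channel sketch. This is not the BESA pipeline: it assumes a list of samples at 512 Hz, subtracts the mean of the 100 ms pre-stimulus interval as a baseline, and uses a single hypothetical absolute-amplitude threshold (`reject_abs_uv`) in place of the individually determined thresholds. Filtering and the PCA-based artifact correction are omitted.

```python
FS_HZ = 512  # sampling rate after down-sampling (from the text)


def ms_to_samples(ms, fs_hz=FS_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * fs_hz / 1000.0))


def extract_epoch(signal, onset_idx, pre_ms=100, post_ms=400, fs_hz=FS_HZ):
    """Cut one epoch around a sound onset and subtract the pre-stimulus mean.

    Returns None when the epoch would run past either end of the recording.
    """
    n_pre = ms_to_samples(pre_ms, fs_hz)
    n_post = ms_to_samples(post_ms, fs_hz)
    start, stop = onset_idx - n_pre, onset_idx + n_post
    if start < 0 or stop > len(signal):
        return None
    segment = signal[start:stop]
    baseline = sum(segment[:n_pre]) / n_pre
    return [v - baseline for v in segment]


def average_epochs(signal, onsets, reject_abs_uv=100.0):
    """Average baseline-corrected epochs, skipping those above the threshold.

    reject_abs_uv is a hypothetical fixed threshold used for illustration only.
    Returns (erp, n_kept); erp is None when no epoch survives.
    """
    kept = []
    for onset in onsets:
        epoch = extract_epoch(signal, onset)
        if epoch is None:
            continue
        if max(abs(v) for v in epoch) > reject_abs_uv:
            continue
        kept.append(epoch)
    if not kept:
        return None, 0
    n = len(kept)
    erp = [sum(column) / n for column in zip(*kept)]
    return erp, n
```

At 512 Hz, the 500 ms epoch corresponds to 256 samples, of which the first 51 form the baseline in this sketch.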

Using custom Matlab scripts, peak latencies for each standard stimulus in each block were determined from grand average waves for each group by visual inspection from Fz (P1) and Cz (N1, P2). Peak latencies were determined between 40 and 90 ms for P1 (maximum) from Fz, 80–140 ms for N1 (minimum) from Cz, and 120–200 ms for P2 (maximum) from Cz. Mean amplitudes for the standard were computed ±20 ms around the peak latency of the grand average for each participant, block, and stimulus.
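The amplitude measure described above can be sketched as follows. The original analysis used custom Matlab scripts and visual inspection of the grand averages; the Python version below swaps in an automated extremum search within the stated windows, so it is an approximation of the procedure rather than a reproduction of it. It assumes a single-channel averaged waveform sampled at 512 Hz with a 100 ms pre-stimulus interval, and all function names are illustrative.

```python
FS_HZ = 512   # sampling rate of the averaged data (from the text)
PRE_MS = 100  # pre-stimulus interval included in each epoch (from the text)

# Search windows in ms after sound onset and expected polarity (from the text).
WINDOWS = {
    "P1": ((40, 90), +1),    # maximum, measured at Fz
    "N1": ((80, 140), -1),   # minimum, measured at Cz
    "P2": ((120, 200), +1),  # maximum, measured at Cz
}


def time_to_index(t_ms, fs_hz=FS_HZ, pre_ms=PRE_MS):
    """Map a latency relative to sound onset onto a sample index."""
    return int(round((t_ms + pre_ms) * fs_hz / 1000.0))


def peak_latency_ms(grand_average, window_ms, polarity, fs_hz=FS_HZ, pre_ms=PRE_MS):
    """Latency of the largest (polarity > 0) or smallest value in the window."""
    lo = time_to_index(window_ms[0], fs_hz, pre_ms)
    hi = time_to_index(window_ms[1], fs_hz, pre_ms)
    pick = max if polarity > 0 else min
    peak_idx = pick(range(lo, hi + 1), key=lambda i: grand_average[i])
    return peak_idx * 1000.0 / fs_hz - pre_ms


def mean_amplitude(wave, peak_ms, half_width_ms=20, fs_hz=FS_HZ, pre_ms=PRE_MS):
    """Mean of an individual waveform within +/- half_width_ms of the peak."""
    lo = time_to_index(peak_ms - half_width_ms, fs_hz, pre_ms)
    hi = time_to_index(peak_ms + half_width_ms, fs_hz, pre_ms)
    segment = wave[lo:hi + 1]
    return sum(segment) / len(segment)
```

In use, the peak latency would be taken once from the group grand average (for example, `peak_latency_ms(ga_cz, *WINDOWS["N1"])`), and `mean_amplitude` would then be applied to each participant's waveform at that fixed latency.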

EEG Source Analysis

BESA Research v5.3 (BESA GmbH.) was used for source analysis with preprocessed grand average data without further filtering. The BESA realistic head model for adults was used. Regional sources with three orientations were used to model a single source. Regional sources are more realistic for source modeling because they assume multiple active sites in the cortex instead of a single dipole. However, computationally, they may give redundant information in the case of basic sensory activation, which typically requires only one orientation for accurate estimation of the generator. The four passive experimental blocks (Passive 1, 2, 3, and 4) were collapsed together to make robust models for the standard stimuli. Separate seed models were used for musicians and non-musicians. Seed models were calculated for a 40-ms interval, ±20 ms around the local maximum in the global field power (for the fitting intervals, see Table 1).
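The choice of fitting interval can be illustrated with a small sketch. It assumes the common definition of global field power as the standard deviation across electrodes at each time point; BESA's internal computation may differ. It also treats the local maximum as the largest value within a user-supplied search window, and the data layout (`data[channel][sample]`) and function names are illustrative.

```python
import math

FS_HZ = 512   # sampling rate (from the text)
PRE_MS = 100  # pre-stimulus interval (from the text)


def global_field_power(data):
    """Spatial standard deviation across channels for every sample.

    data is a list of channels, each a list of samples of equal length.
    """
    n_channels = len(data)
    gfp = []
    for sample in zip(*data):
        mean_v = sum(sample) / n_channels
        variance = sum((v - mean_v) ** 2 for v in sample) / n_channels
        gfp.append(math.sqrt(variance))
    return gfp


def fitting_interval(gfp, search_ms, half_width_ms=20, fs_hz=FS_HZ, pre_ms=PRE_MS):
    """Return (start_ms, end_ms) of the interval centred on the GFP maximum
    found inside search_ms, a (start, end) window relative to sound onset."""
    def to_index(t_ms):
        return int(round((t_ms + pre_ms) * fs_hz / 1000.0))

    lo, hi = to_index(search_ms[0]), to_index(search_ms[1])
    peak_idx = max(range(lo, hi + 1), key=lambda i: gfp[i])
    peak_ms = peak_idx * 1000.0 / fs_hz - pre_ms
    return peak_ms - half_width_ms, peak_ms + half_width_ms
```

The returned interval is 40 ms wide by construction, matching the ±20 ms window described for the seed models.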

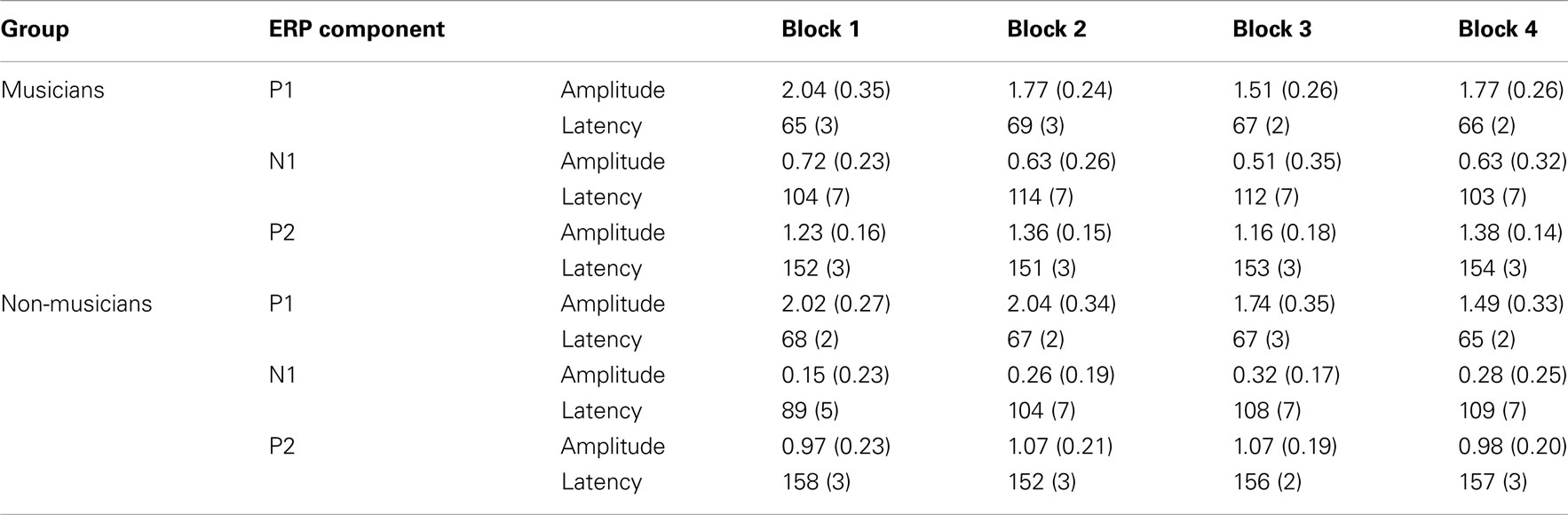

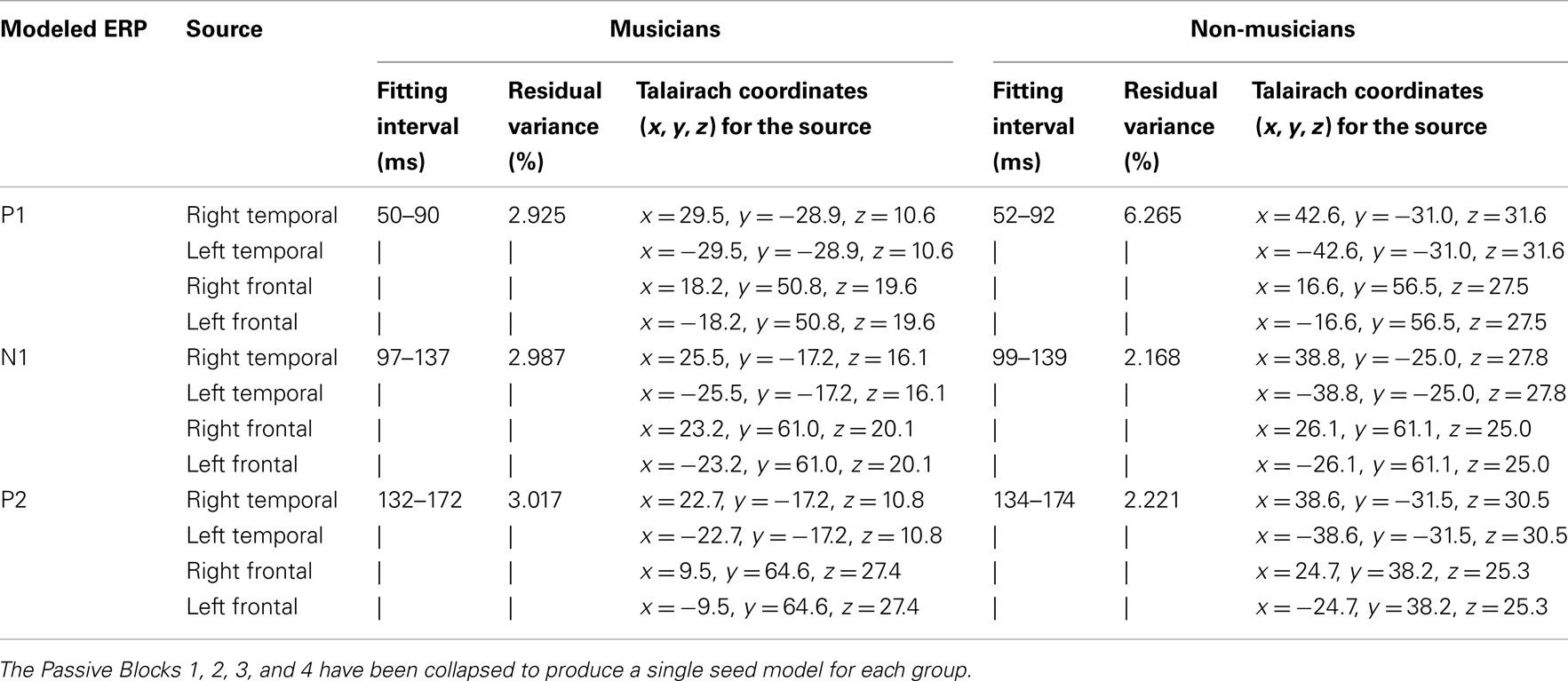

Table 1. Fitting intervals (ms), residual variance (%), and Talairach coordinates for grand average seed source models for musicians and non-musicians.

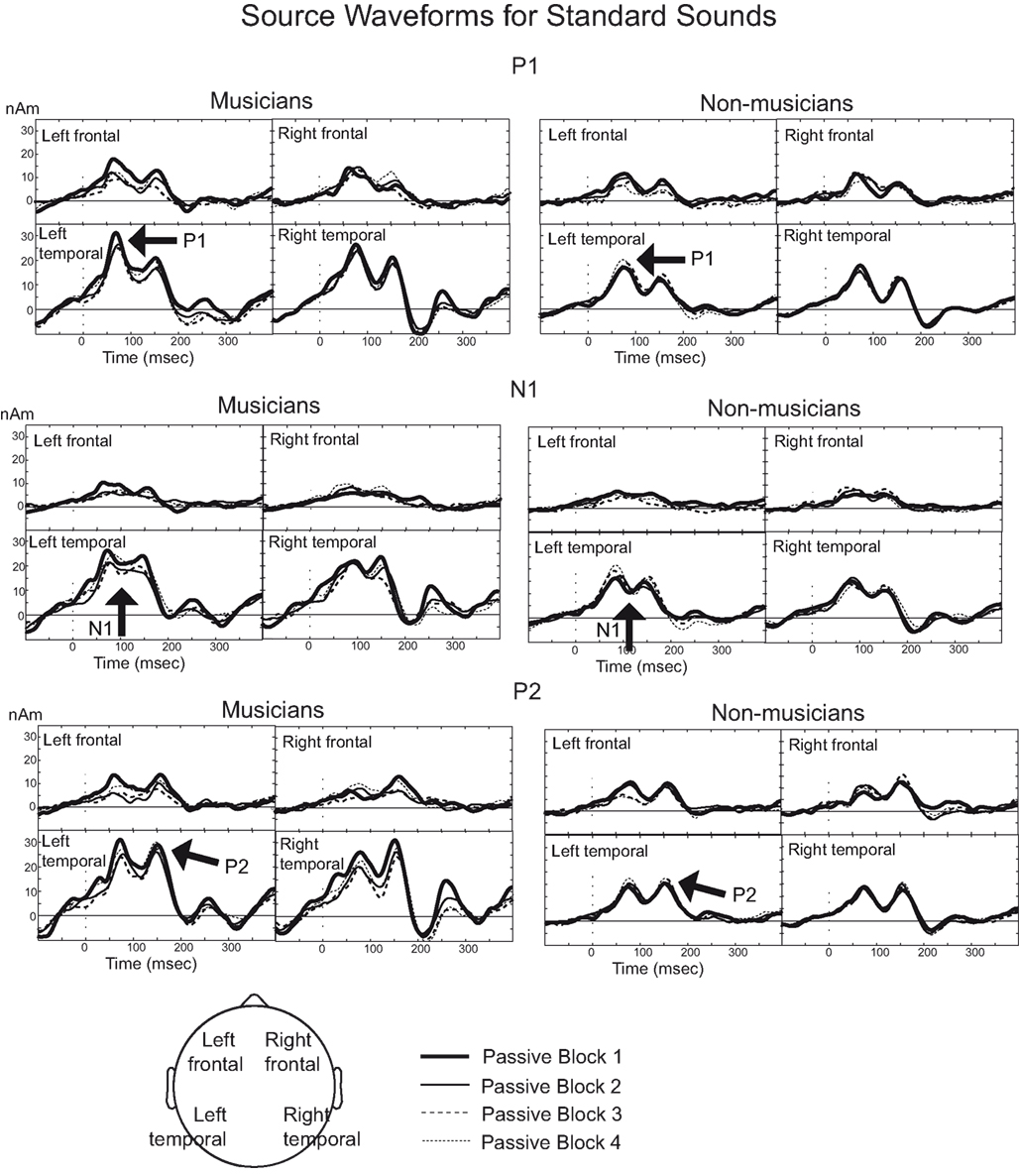

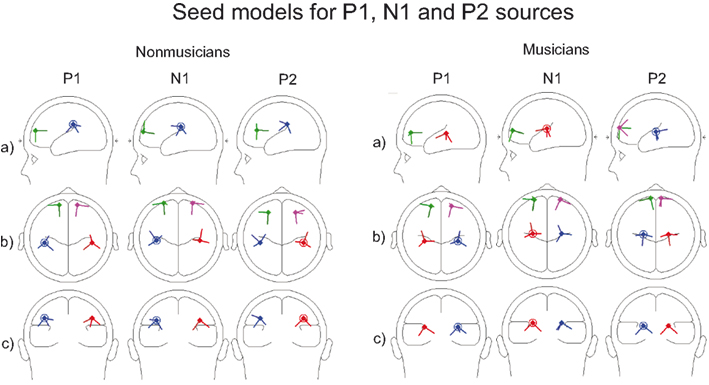

Each seed model consisted of four sources based on the previous literature on P1, N1, and P2: one source in each of the left and right temporal areas (near the auditory cortices) with a symmetry constraint, and one in each of the left and right frontal cortices with a symmetry constraint (e.g., Picton et al., 1999; for P1: Weisser et al., 2001; Korzyukov et al., 2007) (for Talairach coordinates, see Table 1; for the locations of the sources, see Figure 2). Frontal sources were analyzed because we wanted to examine the effects of active attention on sound processing, which typically activates the frontal generators (Picton et al., 1999). As Figure 2 and Table 1 demonstrate, the separate seed models for P1, N1, and P2 were relatively similar (cf. Yvert et al., 2005). We created separate models for these components because previous studies have shown different localizations and functionality for them (Hari et al., 1987; Godey et al., 2001; Ross and Tremblay, 2009). The exact localization of the brain activity was not the main goal here, and, thus, regional sources were used to capture the brain activity originating from a relatively wide area in the range of centimeters.

Figure 2. Seed models of musicians and non-musicians for P1, N1, and P2 BESA source analysis. Sources are presented from the (A) sagittal, (B) vertical, and (C) coronal viewpoints.

After calculating the seed models, individual source waveforms (with peak latency and mean amplitude) and orientations (with the first orientation set at the maximum) were computed with a Simplex algorithm provided by BESA. This was done separately for each ERP component and each passive block, using the fixed source locations of the corresponding seed model and individually adjusting the latency window to the maximum when the maximum fell in a different time interval.

Statistical Data Analysis

Repeated measures ANOVAs were used to analyze the ERP changes for the standard sound. Amplitudes and latencies were analyzed separately for each component (P1, N1, and P2) using block (Passive Blocks 1, 2, 3, and 4), frontality (frontal: F3, Fz, F4; frontocentral: FC3, FCz, FC4; central: C3, Cz, C4; parietal: P3, Pz, P4), and laterality (left hemisphere: F3, FC3, C3, P3; middle row: Fz, FCz, Cz, Pz; right hemisphere: F4, FC4, C4, P4) as repeated measures and music training (musician, non-musician) as the between-subjects factor. Statistical analysis of the source waveforms (mean amplitudes in nAm and peak latencies) was conducted with the same factors as for the ERPs, except that frontality and laterality were replaced by the factor source (left frontal, right frontal, left temporal, right temporal). We used only the maximum orientation for the statistical analysis of all sources.
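As a reading aid for the F statistics reported in the Results, the uncorrected degrees of freedom implied by this design can be derived from the factor levels (block: 4, frontality: 4, laterality: 3, source: 4) and the sample (30 participants in 2 groups). The helper below is an illustrative sketch, not part of the original SPSS analysis, and its name is hypothetical.

```python
def mixed_anova_df(within_levels, n_subjects, n_groups, with_group=False):
    """Uncorrected degrees of freedom for an effect in a mixed-design ANOVA.

    within_levels: number of levels of each within-subject factor in the effect
    with_group:    True if the effect also includes the between-subjects factor
    Returns (df_effect, df_error).
    """
    df_within = 1
    for k in within_levels:
        df_within *= (k - 1)
    df_effect = df_within * ((n_groups - 1) if with_group else 1)
    df_error = df_within * (n_subjects - n_groups)
    return df_effect, df_error


N_SUBJECTS = 14 + 16  # musicians + non-musicians (from the text)
N_GROUPS = 2

# Block x Music training
print(mixed_anova_df([4], N_SUBJECTS, N_GROUPS, with_group=True))
# Block x Frontality x Laterality x Music training
print(mixed_anova_df([4, 4, 3], N_SUBJECTS, N_GROUPS, with_group=True))
```

With these inputs, the Block × Music training effect has 3 and 84 degrees of freedom and the four-way interaction has 18 and 504, which matches the values reported with the F statistics.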

To determine whether rapid plasticity between blocks was related to the length of musical training or the onset age of playing in musicians, Pearson correlations were computed. All statistical tests are reported with an alpha level of 0.05 as the significance criterion. Post hoc tests for repeated measures ANOVAs are reported with Bonferroni-adjusted P-values for multiple comparisons unless otherwise stated. All P-values for ANOVAs are Greenhouse–Geisser corrected and are reported with uncorrected F-values. All statistics were computed with SPSS v16 (SPSS Inc., USA).
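Two of the procedures named above are simple enough to sketch directly. The code below assumes the usual form of the Bonferroni adjustment (each P-value multiplied by the number of comparisons and capped at 1) and the standard product-moment formula for Pearson's r. It is an illustration in plain Python rather than the SPSS routines used in the study, and the function names are hypothetical.

```python
import math


def bonferroni_adjust(p_values):
    """Multiply each P-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)
```

For the correlations described here, `x` would hold each musician's years of training (or onset age) and `y` the corresponding change in amplitude between two blocks.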

Results

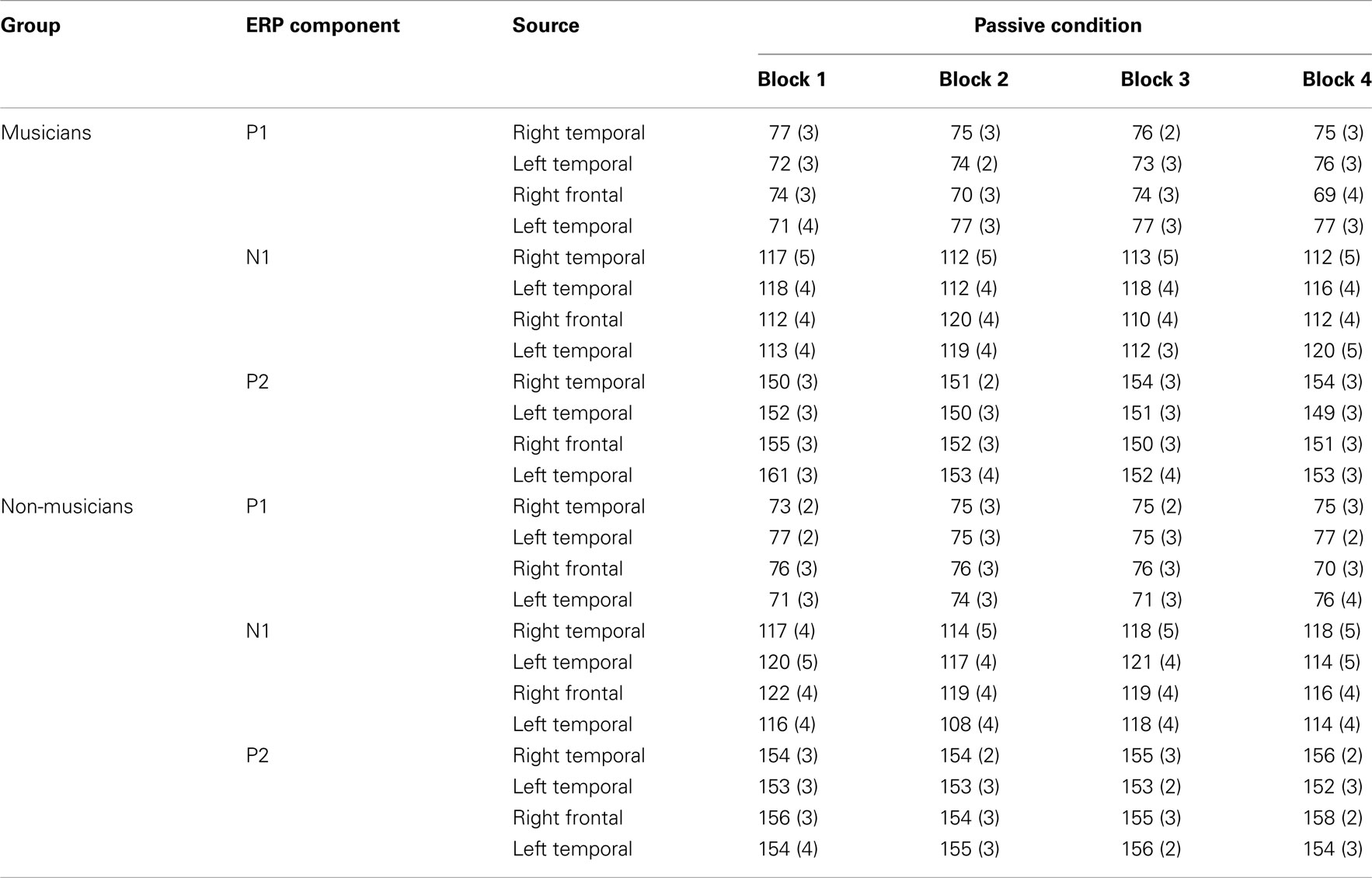

Mean amplitudes and peak latencies for P1, N1, and P2 ERPs and generators for the standard sounds between the four passive blocks (see Tables A1–A3 in Appendix for supporting information) were tested in separate repeated measures ANOVAs. Of specific interest was the interaction between Block and Music training, which would indicate enhanced rapid plasticity between experimental blocks as a function of long-term musical training. We found that music training modulated rapid neural plasticity in N1 and P2 generators and N1 ERPs.

P1, N1, and P2 Source Analysis

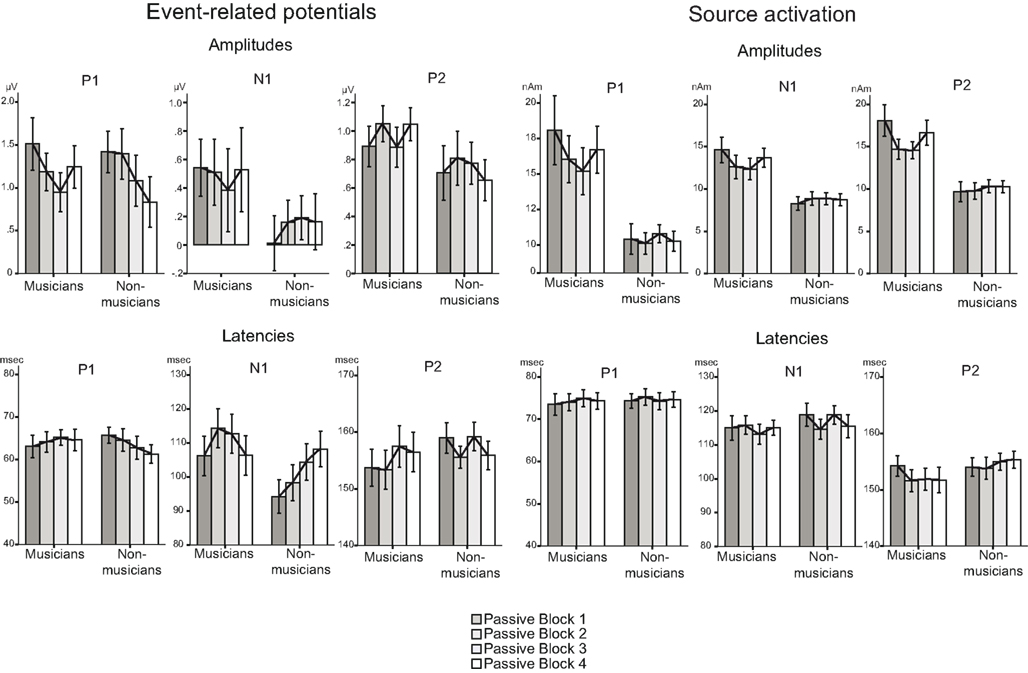

Rapid plasticity in source activation was observed in musicians for N1 and P2 components but not for P1 (Figure 3, for a summary of amplitudes and latencies, see Figure 5). Only in the musicians, the N1 source activation decreased from Block 1 to 2 (P = 0.041) (Block × Music training, F3,84 = 4.41, P = 0.012). No other significant differences between blocks were found. Additionally, only in the musicians, the P2 source activation decreased from Passive Block 1 to Block 2 (P = 0.024) but increased again from Block 2 to 4 (P = 0.032) (Block × Music training, F3,84 = 3.93, P = 0.027). There were no significant differences from Block 2 to 3 or from Block 3 to 4. Thus, passive exposure to sounds produced rapid plasticity of the N1 and P2 sources for standard sounds even before the active task, but the P2 source activation recovered after the active auditory discrimination task in musicians. No plastic effects were observed for source latencies.

In addition to these findings, we found that musicians had stronger P1 source activation than non-musicians at both temporal sources (right temporal P = 0.001, left temporal P = 0.003) but not at the frontal sources (Music training, F1,28 = 11.31, P = 0.002; Source × Music training, F3,84 = 4.30, P = 0.022). In addition, in musicians, both P1 temporal sources were significantly stronger than both frontal sources (all sources P < 0.001), whereas in non-musicians temporal sources were stronger (right temporal P = 0.008, left temporal P = 0.007) than the left frontal sources (not the right frontal). Further, musicians had stronger N1 source activation at temporal sources (right temporal P = 0.001, left temporal P = 0.004) but not at the frontal sources compared to non-musicians (Music training, F1,28 = 11.46, P = 0.002; Source × Music training, F3,84 = 9.48, P < 0.001). Musicians also had stronger P2 activation than non-musicians, with significantly stronger P2 source activations at both temporal sources (both sources P < 0.001) but not for either frontal source (Music training, F1,28 = 17.26, P < 0.001; Source × Music training, F3,84 = 24.60, P < 0.001).

P1, N1, and P2 ERPs

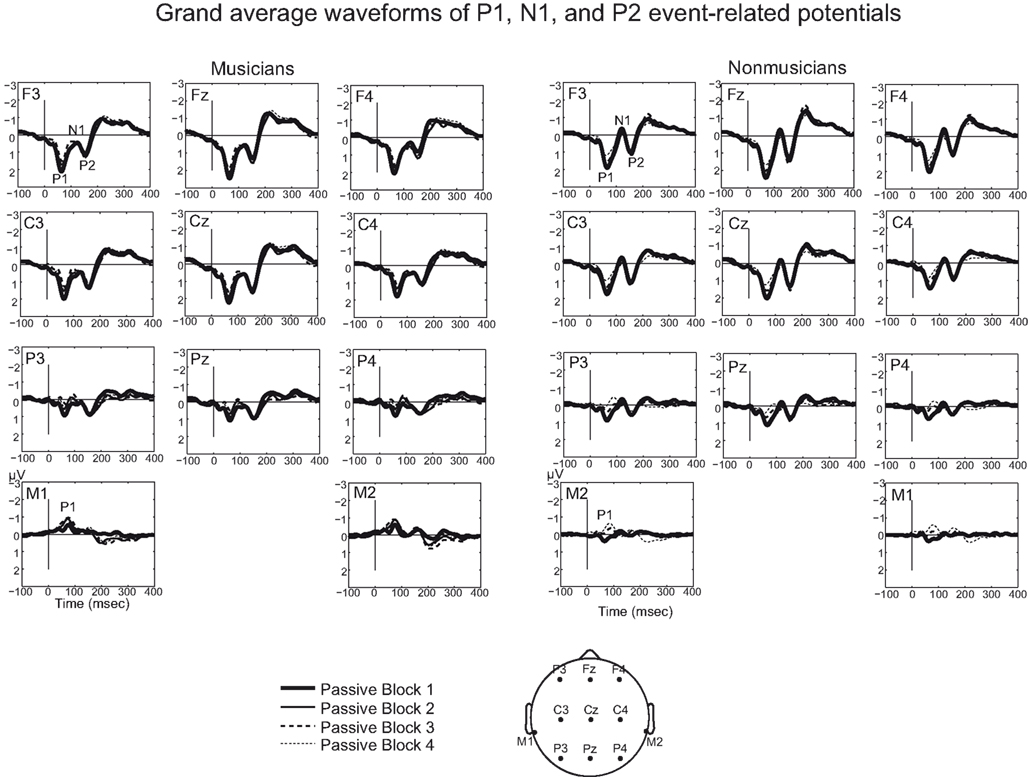

Figure 4 illustrates the grand average waves for the ERPs (see also Figure 5). With respect to the frontal electrodes, the opposite polarity of the responses at the mastoid electrodes suggests that the responses were generated in or near the primary auditory cortex. This conclusion was supported by the source analyses (Figure 3). No plastic effects were observed for the P1 ERP. However, N1 changes between the passive blocks were modulated by musical training (Block × Frontality × Laterality × Music training, F18,504 = 2.28, P = 0.022). Whereas musicians had smaller (more positive) N1 in the left frontal (P = 0.047) and central (P = 0.044) electrodes than non-musicians in the first block, only in musicians was N1 enhanced (i.e., became more negative) from Passive Block 1 to 3 but only in the parietal left (P = 0.054) and right (P = 0.021) hemisphere electrodes. The P2 amplitude was found to be enhanced in both groups from Block 1 to 2 in most frontal electrodes (Block × Frontality, F9,252 = 3.11, P = 0.016; post hoc tests ns.). The P2 amplitude changes also showed a quadratic pattern (enhancement between successive passive blocks and decrease after the active task) in both groups in a lateral comparison (Block × Laterality, F6,168 = 2.87, P = 0.024; post hoc tests ns.). Unlike the P1, N1, and P2 source estimates, there were no main effects of music training for any ERP component.

Figure 4. Grand average waveforms for standard sounds for musicians and non-musicians. Grand averages are presented for nose-referenced Fz, Cz, Pz (and their right and left hemisphere electrodes) and right and left mastoids. Mastoid electrodes show a negative polarity in the P1 and P2 time window.

Figure 5. Summary of the P1, N1, and P2 results (with SEs of the means) for amplitudes (left) and latencies (right) of standard sounds.

Discussion

In this study, we compared the rapid plasticity of P1, N1, and P2 ERP responses and source activation of musicians and non-musicians. Specifically, we examined the neural modulation for regularly presented standard sounds among oddball stimuli during 1 h of passive exposure to sounds. We found that professionally trained musicians had enhanced rapid (within 15–30 min) plasticity of N1 and P2 source activation to unattended standard sounds during passive exposure. Since the effect was already observed between the first two blocks before the active task, active attention to or discrimination of the sounds was not necessary for these effects to emerge. However, in musicians, N1 ERPs and P2 source activation were also modulated by active attention, which enhanced these responses after the active task (from blocks before the active task to blocks after the active task). No rapid plasticity was found for P1.

Our first aim was to determine whether rapid plasticity of P1, N1, and P2 responses differentiated musicians and non-musicians. We found that musical training enhanced rapid plasticity of N1 and P2 responses. Source waveform analysis showed that N1 and P2 source activation was decreased in the early phase of passive auditory stimulation (i.e., between the first two 15 min blocks before the active task) only in musicians. The decreasing N1 and P2 source activation in musicians may indicate a fast learning capacity in the auditory system to extract both sound features and the rules for differentiating the standard sounds from deviant sounds, as well as to predict future auditory events even without active attention. A previous study showed a rapid (within tens of minutes) decrease of N1m and an increase of P2m for the same repeated speech-sound stimuli in non-musicians (Ross and Tremblay, 2009). The reason why we did not find similar rapid plasticity in non-musicians in this study might be that the oddball stimuli contain unattended standard sounds that have a particular functional role as a comparison template against the deviating sounds (e.g., Bendixen et al., 2007). Compared with processing the same repeated sound stimuli, the oddball stimuli may require more processing resources from the auditory system because they require the passive extraction of simple rules (e.g., probability, deviancy) within an oddball sequence (Korzyukov et al., 2003; Winkler et al., 2003). Repetition effects for the standard sounds may well indicate prediction coding for the deviating sounds as well as perceptual learning (e.g., Haenschel et al., 2005; Baldeweg, 2007; Bendixen et al., 2007). Our findings suggest that this extraction process between standard sounds and deviant sounds was pronounced in musicians.

Whereas our findings showed rapid plasticity of P2 sources only in musicians (with the temporal and frontal sources statistically collapsed), in the ERP analysis both musicians and non-musicians showed P2 amplitude enhancement at the frontal electrodes between the first two blocks. Additionally, we found that P2 source activation (as well as P1 and N1 source activation) was overall significantly stronger in the temporal sources in musicians compared with non-musicians. By contrast, a main effect of musical training was not found for ERP responses, except at the beginning of the experiment, when musicians showed a differently lateralized and smaller (more positive) N1 than non-musicians. P2 enhancement for ERP responses (and not for source waveforms) may be caused by the summated scalp response from various other P2 sources that were not modeled here (see Godey et al., 2001). That is, the ERP responses reflect the combined activity of all cortical sources owing to volume conduction through the head tissues, and even though the unexplained variance in the source model was within acceptable limits, some of the smaller sources might have been omitted from the source model. The separate effects of musical expertise on the temporal and frontal sources could also be one explanation (other than the different stimuli and paradigms used) for the partly contradictory findings on the strength of the basic ERP components in musicians reported in previous studies (see Introduction).
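The summation argument can be made concrete with a toy linear forward model, in which the amplitude at one scalp electrode is a weighted sum of all source strengths. The following is only an illustrative sketch, not the source model used in this study: the weights in `LEAD_FIELD`, the source strengths, and the `unmodeled` source are all hypothetical values chosen to show that a scalp amplitude can increase between blocks even when every modeled source decreases.

```python
# Toy linear forward model for a single frontal electrode.
# All numbers are hypothetical and in arbitrary units; none come from the study.
LEAD_FIELD = {"temporal": 0.4, "frontal": 0.6, "unmodeled": 0.8}


def scalp_amplitude(sources):
    """Return the weighted sum of source strengths seen at the electrode."""
    return sum(LEAD_FIELD[name] * strength for name, strength in sources.items())


# Hypothetical source strengths in two consecutive passive blocks.
block1 = {"temporal": 10.0, "frontal": 5.0, "unmodeled": 2.0}
block2 = {"temporal": 8.0, "frontal": 4.0, "unmodeled": 6.0}

# Change in the sources that a two-source model would capture.
modeled_change = (block2["temporal"] + block2["frontal"]) - (
    block1["temporal"] + block1["frontal"]
)

# Change in the summed scalp response, which also includes the unmodeled source.
scalp_change = scalp_amplitude(block2) - scalp_amplitude(block1)

print(f"modeled source change: {modeled_change:+.1f}")
print(f"scalp amplitude change: {scalp_change:+.1f}")
```

With these assumed values, the modeled sources decrease while the summed scalp response increases, because the growth of the unmodeled source outweighs the decrease of the modeled ones. Whether such a source contributed to the present data cannot be determined from this sketch.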

Our second aim was to investigate whether active attention would differentially modulate the rapid neural plasticity of P1, N1, or P2 for standard sounds in musicians and non-musicians. When comparing the blocks before and after the active auditory discrimination task, we found that N1 ERP amplitude was enhanced (i.e., became more negative) at the parietal electrodes after the active task only in musicians. In previous ERP studies, N1 has been shown to decrease for non-attended sounds during single-session training (Brattico et al., 2003; Alain and Snyder, 2008; Ross and Tremblay, 2009), to remain unchanged (Atienza et al., 2002), or to increase when no deviating sounds were present (Clapp et al., 2005). Lateralized parietal enhancement is not typical of the N1 response (which is maximal at the vertex), but, in general, changes in the parietal areas may indicate the automatization of processing due to active attention (Pugh et al., 1996).

The active attention task between the passive blocks also influenced the P2 responses. After the initial decrement during the passive blocks, the P2 source activation was enhanced after the active task for unattended standard sounds in musicians. Our results partly contrast with those of Sheehan et al. (2005), in which P2 increased in both the training and control groups (both non-musicians), although behavioral discrimination improved only with training. They suggested that P2 does not reflect perceptual learning but, instead, the reinforcement of inhibitory processes for non-relevant standard sounds. Additionally, in Alain and Snyder (2008), where only non-musicians were studied, the P2 ERP was enhanced between blocks within one session. In contrast to these and our findings, Clapp et al. (2005) did not find P2 changes after exposure to repeated sounds without deviating sounds (and without a requirement of inhibiting irrelevant sounds) nor in the oddball condition. Yet, it is possible that an increased inhibition for non-target, standard sounds could indeed represent a mechanism for auditory perceptual learning during the oddball paradigm and not just repetition effects, as suggested by Sheehan et al. (2005). Our finding of the recovery of P2 might reflect an increased inhibition of unattended standard sounds. The suppression of unattended regular standard sounds is important for the auditory system, which optimizes the processing demands by constant active prediction. This predictive coding is implemented by rapidly extracting the rules for incoming auditory events, including whether they are different or familiar and relevant or irrelevant. Moreover, simple repetition effects cannot explain the fact that neural changes often precede or coincide with the behavioral improvement in the discrimination of sounds (e.g., Ross and Tremblay, 2009).

Another explanation for the neural enhancements for the standard sounds in musicians after the active task could be more frequent exposure (i.e., having more trials) to the most difficult deviant target trials during the active task. The active tasks were adaptive; that is, the better a participant performed, the more difficult the deviants that were presented. In a previous study, we found that musicians discriminated deviating sounds in active tasks better than non-musicians (Seppänen et al., 2012). There was, however, no significant difference in the number of standard or deviant trials in Active task 1, which was used as a probe for active attention. Furthermore, only non-musicians demonstrated an improvement in the behavioral discrimination of difficult deviations, whereas in musicians, the discrimination remained at the maximum level (Seppänen et al., 2012). Based on these arguments, it is unlikely that the present finding of standard sound enhancement in musicians was caused by more frequent exposure to deviating sounds during the active task.

Taken together, the previous and current findings show that P2 is prone to the effects of long-term auditory (musical) training and rapid plasticity. This is further supported by the fact that the P2 generators were located in the secondary auditory cortices where plasticity is considered high (Crowley and Colrain, 2004; Jääskeläinen et al., 2007). Additionally, our findings of enhanced P2 plasticity were obtained for relatively simple sine tone complexes, whereas previous studies have proposed that enhanced P2 in musicians is especially pronounced for musical timbre (P2m for deviants during active discrimination: Kuriki et al., 2006; P2 and P2m: Shahin et al., 2005). Enhanced P2 for unattended sounds in musicians might reflect not only the timbre but also enhanced attentional mechanisms, with better inhibition occurring with standard sounds during the oddball paradigm.

In addition to music training, it is possible that other factors, such as genetic predispositions and musically enriched homes in childhood, enhance cognitive skills and auditory processing. However, in a previous study, no evidence of pre-existing cognitive, music, motor, or structural brain differences was found between children starting instrumental training and control groups at the pre-training phase (Norton et al., 2005). Furthermore, the length of music training and the strength of neural processing for sounds correlate positively in several neurocognitive studies on musicians (Jäncke, 2009). Finally, in our previous study, we did not find significant differences between musicians and non-musicians (with a slightly larger sample) in standardized attention capacity tests, although musicians had more variability in their attention task performance (Seppänen et al., 2012).

Conclusion

In the present study, we have shown that professionally trained musicians had enhanced rapid plasticity of N1 and P2 for unattended standard sounds that were presented regularly among irregularly presented deviant sounds. Furthermore, we found that the rapid neural plasticity of N1 and P2 in the auditory system did not require active attention or reinforcement but had already occurred during unattended, passive exposure to sounds. Enhanced rapid plasticity for unattended standard sounds may indicate a faster capacity in the auditory system to improve perceptual discrimination accuracy for both regular and irregular auditory events in musicians. Thus, intensive and long-term musical training seems to enhance encoding of the comparison templates (i.e., the standard sounds) rather than the deviating sounds, even when the sounds are not attended. The present findings suggest that N1 and P2 could be used as indicators of rapid neural plasticity, auditory perceptual learning, and long-term auditory training. Future studies should investigate whether rapid plasticity in musicians extends beyond musically relevant sounds, for example, to faster learning of foreign language phonemes.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The study was supported by the Research Foundation of the University of Helsinki and the National Doctoral Programme of Psychology, Finland. We thank Mr. Pentti Henttonen and Ms. Eeva Pihlaja, M.A., for their help in data collection and Ms. Veerle Simoens, M.Sc., for her comments on an earlier version of the manuscript. We are also grateful to the technical personnel of the Cognitive Brain Research Unit, Institute of Behavioural Sciences, University of Helsinki.

References

Alain, C., and Snyder, J. (2008). Age-related differences in auditory evoked responses during rapid perceptual learning. Clin. Neurophysiol. 119, 356–366.

Atienza, M., Cantero, J., and Dominguez-Marin, E. (2002). The time course of neural changes underlying auditory perceptual learning. Learn. Mem. 9, 138–150.

Baldeweg, T. (2007). ERP repetition effects and mismatch negativity generation – a predictive coding perspective. J. Psychophysiol. 21, 204–213.

Baumann, S., Meyer, M., and Jäncke, L. (2008). Enhancement of auditory-evoked potentials in musicians reflects an influence of expertise but not selective attention. J. Cogn. Neurosci. 20, 2238–2249.

Bendixen, A., Roeber, U., and Schröger, E. (2007). Regularity extraction and application in dynamic auditory stimulus sequences. J. Cogn. Neurosci. 19, 1664–1677.

Berg, P., and Scherg, M. (1994). A multiple source approach to the correction of eye artifacts. Electroencephalogr. Clin. Neurophysiol. 90, 229–241.

Boop, F., Garcia-Rill, E., Dykman, R., and Skinner, R. (1994). The P1: insights into attention and arousal. Pediatr. Neurosurg. 20, 57–62.

Bosnyak, D., Eaton, R., and Roberts, L. (2004). Distributed auditory cortical representations are modified when non-musicians are trained at pitch discrimination with 40 Hz amplitude modulated tones. Cereb. Cortex 14, 1088–1099.

Brattico, E., Tervaniemi, M., and Picton, T. (2003). Effects of brief discrimination-training on the auditory N1 wave. Neuroreport 14, 2489–2492.

Clapp, W., Kirk, I., Hamm, J., Shepherd, D., and Teyler, T. (2005). Induction of LTP in the human auditory cortex by sensory stimulation. Eur. J. Neurosci. 22, 1135–1140.

Crowley, K., and Colrain, I. (2004). A review of the evidence for P2 being an independent component process: age, sleep and modality. Clin. Neurophysiol. 115, 732–744.

Gilbert, C., Sigman, M., and Crist, R. (2001). The neural basis of perceptual learning. Neuron 31, 681–697.

Godey, B., Schwartz, D., de Graaf, J. B., Chauvel, P., and Liégeois-Chauvel, C. (2001). Neuromagnetic source localization of auditory evoked fields and intracerebral evoked potentials: a comparison of data in the same patients. Clin. Neurophysiol. 112, 1850–1859.

Haenschel, C., Vernon, D. J., Dwivedi, P., Gruzelier, J. H., and Baldeweg, T. (2005). Event-related brain potential correlates of human auditory sensory memory-trace formation. J. Neurosci. 25, 10494–10501.

Hari, R., Pelizzone, M., Mäkelä, J. P., Hällstrom, J., Leinonen, L., and Lounasmaa, O. V. (1987). Neuromagnetic responses of the human auditory cortex to on and offsets of noise bursts. Audiology 26, 31–43.

Hillyard, S., Hink, R., Schwent, V., and Picton, T. (1973). Electrical signs of selective attention in the human brain. Science 182, 177–180.

Ille, N., Berg, P., and Scherg, M. (2002). Artifact correction of the ongoing EEG using spatial filters based on artifact and brain signal topographies. J. Clin. Neurophysiol. 19, 13–24.

Jääskeläinen, I., Ahveninen, J., Belliveau, J., Raij, T., and Sams, M. (2007). Short-term plasticity in auditory cognition. Trends Neurosci. 30, 653–661.

Jongsma, M., Eichele, T., Quian Quiroga, R., Jenks, K., Desain, P., Honing, H., and Van Rijn, C. (2005). Expectancy effects on omission evoked potentials in musicians and non-musicians. Psychophysiology 42, 191–201.

Korzyukov, O., Pflieger, M., Wagner, M., Bowyer, S., Rosburg, T., Sundaresan, K., Elger, C., and Boutros, N. (2007). Generators of the intracranial P50 response in auditory sensory gating. Neuroimage 35, 814–826.

Korzyukov, O., Winkler, I., Gumenyuk, V., and Alho, K. (2003). Processing abstract auditory features in the human auditory cortex. Neuroimage 20, 2245–2258.

Kuriki, A., Kanda, S., and Hirata, Y. (2006). Effects of musical experience on different components of MEG responses elicited by sequential piano-tones and chords. J. Neurosci. 26, 4046–4053.

Lütkenhöner, B., Seither-Preisler, A., and Seither, S. (2006). Piano tones evoke stronger magnetic fields than pure tones or noise, both in musicians and non-musicians. Neuroimage 30, 927–937.

Münte, T., Altenmüller, E., and Jäncke, L. (2002). The musician’s brain as a model of neuroplasticity. Nat. Rev. Neurosci. 3, 473–478.

Näätänen, R., and Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology 24, 375–425.

Nikjeh, D., Lister, J., and Frisch, S. (2009). Preattentive cortical-evoked responses to pure tones, harmonic tones, and speech: influence of music training. Ear Hear. 30, 432–446.

Norton, A., Winner, E., Cronin, K., Overy, K., Lee, D., and Schlaug, G. (2005). Are there pre-existing neural, cognitive, or motoric markers for musical ability? Brain Cogn. 59, 124–134.

Picton, T. W., Alain, C., Woods, D., John, M., Scherg, M., Valdes-Sosa, P., Bosch-Bayard, J., and Trujillo, N. J. (1999). Intracerebral sources of human auditory-evoked potentials. Audiol. Neurootol. 4, 64–79.

Pugh, K., Shaywitz, B., Shaywitz, S., Fulbright, R., Byrd, D., Skudlarski, P., Shankweiler, D., Katz, L., Constable, R. T., Fletcher, J., Lacadie, C., Marchione, K., and Gore, J. (1996). Auditory selective attention: an fMRI investigation. Neuroimage 4, 159–173.

Purdy, S., Kelly, A., and Thorne, P. (2001). Auditory evoked potentials as measures of plasticity in humans. Audiol. Neurootol. 6, 211–215.

Reinke, K., He, Y., Wang, C., and Alain, C. (2003). Perceptual learning modulates sensory evoked response during vowel segregation. Brain Res. Cogn. Brain Res. 17, 781–791.

Ross, B., and Tremblay, K. (2009). Stimulus experience modifies auditory neuromagnetic responses in young and older listeners. Hear. Res. 248, 48–59.

Schneider, P., Scherg, M., Dosch, H. G., Specht, H. J., Gutschalk, A., and Rupp, A. (2002). Morphology of Heschl’s gyrus reflects enhanced activation in the auditory cortex of musicians. Nat. Neurosci. 5, 688–694.

Schneider, P., Sluming, V., Roberts, N., Scherg, M., Goebel, R., Specht, H., Dosch, H., Bleeck, S., Stippich, C., and Rupp, A. (2005). Structural and functional asymmetry of lateral Heschl’s gyrus reflects pitch perception preference. Nat. Neurosci. 8, 1241–1247.

Seitz, A., and Watanabe, T. (2005). A unified model for perceptual learning. Trends Cogn. Sci. (Regul. Ed.) 9, 329–334.

Seppänen, M., Pesonen, A.-K., and Tervaniemi, M. (2012). Music training enhances the rapid plasticity of P3a/P3b event-related brain potentials for unattended and attended target sounds. Atten. Percept. Psychophys. 74, 600–612.

Shahin, A., Bosnyak, D., Trainor, L., and Roberts, L. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 23, 5545–5552.

Shahin, A., Roberts, L., Pantev, C., Trainor, L., and Ross, B. (2005). Modulation of P2 auditory-evoked responses by the spectral complexity of musical sounds. Neuroreport 16, 1781–1785.

Sheehan, K., McArthur, G., and Bishop, D. (2005). Is discrimination training necessary to cause changes in the P2 auditory event-related brain potential to speech sounds? Brain Res. Cogn. Brain Res. 25, 547–553.

Tremblay, K., Inoue, K., McClannahan, K., and Ross, B. (2010). Repeated stimulus exposure alters the way sound is encoded in the human brain. PLoS ONE 5, e10283.

Weisser, R., Weisbrod, M., Roehrig, M., Rupp, A., Schroeder, J., and Scherg, M. (2001). Is frontal lobe involved in the generation of auditory evoked P50? Neuroreport 12, 3303–3307.

Winkler, I., Teder-Sälejärvi, W., Horvath, J., Näätänen, R., and Sussman, E. (2003). Human auditory cortex tracks task-irrelevant sound sources. Neuroreport 14, 2053–2056.

Woldorff, M., and Hillyard, S. (1991). Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr. Clin. Neurophysiol. 79, 170–191.

Yvert, B., Fischer, C., Bertrand, O., and Pernier, J. (2005). Localization of human supratemporal auditory areas from intracerebral auditory evoked potentials using distributed source models. Neuroimage 28, 140–153.

Appendix

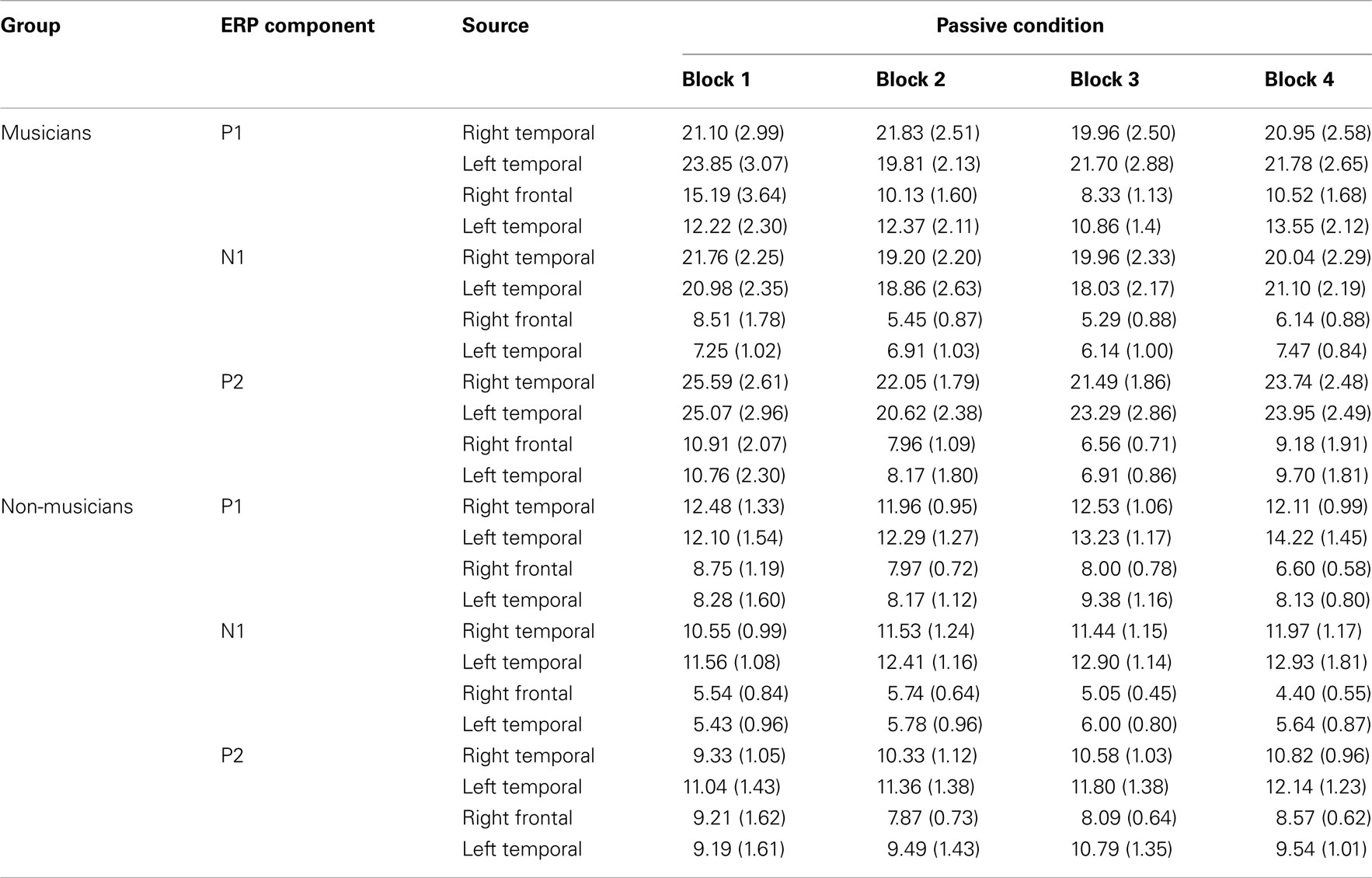

Table A1. P1, N1, and P2 source magnitude mean amplitudes (nAm) and SEs of the means (in parentheses) for the standard stimuli from nose-referenced grand averages.

Table A2. P1, N1, and P2 source peak latencies (ms) and SEs of the means (in parentheses) for the standard stimuli from nose-referenced grand averages.

Keywords: musical expertise, music training, EEG, rapid plasticity, auditory perceptual learning, P1, N1, P2

Citation: Seppänen M, Hämäläinen J, Pesonen A-K and Tervaniemi M (2012) Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds. Front. Hum. Neurosci. 6:43. doi: 10.3389/fnhum.2012.00043

Received: 03 November 2011; Accepted: 21 February 2012; Published online: 14 March 2012.

Edited by:

Alvaro Pascual-Leone, Beth Israel Deaconess Medical Center/Harvard Medical School, USA

Reviewed by:

Erich Schröger, University of Leipzig, Germany

Nina Kraus, Northwestern University, USA

Copyright: © 2012 Seppänen, Hämäläinen, Pesonen and Tervaniemi. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Miia Seppänen and Mari Tervaniemi, Cognitive Brain Research Unit, Institute of Behavioural Sciences, University of Helsinki, P.O. BOX 9 (Siltavuorenpenger 1 B), Helsinki FI-00014, Finland. e-mail: miia.seppanen@helsinki.fi