A comprehensive dataset for home appliance control using ERP-based BCIs with the application of inter-subject transfer learning

- 1Department of Biomedical Engineering, Ulsan National Institute of Science and Technology, Ulsan, Republic of Korea

- 2The Institute of Healthcare Convergence, College of Medicine, Catholic Kwandong University, Gangneung-si, Republic of Korea

- 3Center for Bionics, Korea Institute of Science and Technology, Seoul, Republic of Korea

Brain-computer interfaces (BCIs) have the potential to revolutionize human-computer interaction by enabling direct links between the brain and computer systems. Recent studies have increasingly focused on practical applications of BCIs, e.g., controlling home appliances by thought alone. One class of non-invasive BCIs based on electroencephalography (EEG) capitalizes on event-related potentials (ERPs) elicited by target stimuli and has shown promise for home appliance control. In this paper, we present a comprehensive dataset of online ERP-based BCIs for controlling various home appliances in diverse stimulus presentation environments. We collected online BCI data from a total of 84 subjects: 60 subjects controlled three types of appliances (TV: 30, door lock: 15, and electric light: 15) with four stimuli per appliance, 14 subjects controlled a Bluetooth speaker with 6 functions via an LCD monitor, and 10 subjects controlled an air conditioner with 4 functions via augmented reality (AR). Using the dataset, we addressed inter-subject variability in ERPs by applying transfer learning in two ways. The first approach, “within-paradigm transfer learning,” generalized the model across subjects within the same stimulus presentation paradigm. The second approach, “cross-paradigm transfer learning,” extended a model built in the 4-class LCD environment to the other paradigms. The results demonstrated that transfer learning can enhance the generalizability of ERP-based BCIs across subjects within the same paradigm, whereas transfer across different paradigms remained limited.

1 Introduction

Brain-Computer Interfaces (BCIs) have become a promising technology that establishes a direct communication channel between the human brain and external computational devices (Gao et al., 2021). BCIs have gained attention for their diverse applications across multiple disciplines. Initially developed for medical and rehabilitative purposes, BCIs have expanded to practical areas such as virtual reality, gaming, and robotics (Ahn et al., 2014). One of the primary neural signals used for BCIs is electroencephalography (EEG). EEG-based BCIs can be divided into active, reactive, and passive BCIs according to how the desired EEG patterns are generated (Amiri et al., 2013). Most reactive BCIs rely on one of two major EEG features: event-related potentials (ERPs) or steady-state visually evoked potentials (SSVEPs) (Fazel-Rezai et al., 2012). ERP-based BCIs in particular can be driven through different sensory modalities and require less user training than other approaches, making them suitable for real-time interaction with various computer systems (Krol et al., 2018).

The speller is one of the most representative examples of ERP-based BCIs. Over the past 20 years, the BCI speller has served as a communication tool for individuals with a range of neuromuscular disorders, including ALS, brainstem stroke, brain or spinal cord injury, cerebral palsy, muscular dystrophies, multiple sclerosis, and other conditions (Sosa et al., 2011). ERP-based BCIs have also demonstrated their utility beyond medical and rehabilitative applications, e.g., in game control and lie detection (Marshall et al., 2013; Anwar et al., 2019; Mane et al., 2020). Additionally, they are increasingly being integrated into everyday technologies, providing a hands-free and intuitive means of interacting with computer systems. One emerging area of interest is home automation, where ERP-based BCIs can be employed to control a wide range of household appliances (Bentabet and Berrached, 2016). Beyond commonly explored applications such as televisions and lighting systems, they could potentially regulate thermostats, window blinds, and even robotic vacuum cleaners (Kim et al., 2019). For example, a user could adjust the room temperature or open a window simply by focusing on specific visual cues presented on a screen. This application not only enhances user convenience but also holds promise for individuals with limited mobility, granting them greater independence in their home environment.

Despite advancements, ERP-based BCIs face challenges that hinder their widespread adoption and reliability. One of the most pressing issues is inter-subject variability, which refers to variations in ERP responses across different individuals (Pérez-Velasco et al., 2022). Inter-subject variability can be attributed to factors such as age, cognitive abilities, emotional states, and the quality of EEG equipment used (Li et al., 2020). Additionally, ERP responses are sensitive to internal and external conditions, such as the user’s level of focus or environmental noise. Inter-subject variability affects the generalizability and practical utility of ERP-based BCIs, often leading to reduced performance when a BCI model built on pre-existing data is applied to a new user. The complexity of these factors necessitates research into methodologies that can account for inter-subject variability (Maswanganyi et al., 2022). While some progress has been made, such as the development of new signal processing techniques to alleviate inter-subject variability, further development is required (Dolzhikova et al., 2022).

Several studies have attempted to address inter-subject variability in ERP-based BCIs, predominantly through transfer learning, which encompasses a spectrum of methodologies. Among these, Riemannian geometry stands out as a promising mathematical framework for enhancing BCI performance across subjects; it has been used for BCI decoding, feature representation, classifier design, calibration time reduction, and transfer learning, demonstrating its versatility (Congedo et al., 2017). Other methods include domain adaptation techniques that adjust classifiers to new subjects' data (Jayaram et al., 2016) and deep learning models that learn representations transferable across subjects (Kindermans et al., 2014). Moreover, few-shot learning has been applied to adapt BCI systems with minimal subject-specific data (An et al., 2023). Combining these transfer learning strategies with Riemannian approaches could help overcome the generalizability issues posed by inter-subject variability. Nonetheless, for these methods to be truly effective, larger and more diverse datasets are essential. Small sample sizes and heterogeneous stimuli across experiments call for comprehensive datasets that employ uniform stimuli to ensure consistency and support more generalizable outcomes (Barachant et al., 2013; Rodrigues et al., 2019).

In this study, we develop a comprehensive dataset to address the challenges associated with inter-subject variability in ERP-based BCIs. The dataset was collected from a large number of subjects under diverse stimulus presentation environments, ranging from liquid crystal display (LCD) screens to augmented reality (AR). Based on this dataset, we introduce two transfer learning approaches. The first, termed ‘within-paradigm transfer learning,’ generalizes the BCI model across subjects within the same stimulus presentation paradigm. The second, termed ‘cross-paradigm transfer learning,’ adapts a BCI model built in one paradigm to data from other, distinct paradigms. Both approaches aim to improve the adaptability and efficiency of BCIs across subjects and environments.

The dataset introduced in this study provides a rich resource for exploring novel transfer learning methods and investigating the nuances of ERP responses across different conditions. By making this dataset publicly available, we aim to stimulate further research on ERP-based BCIs and enable the development of more effective and generalizable BCI systems. This initiative aligns with the emphasis on open science and data sharing, which is crucial for accelerating advances in multidisciplinary fields such as BCI research. In summary, this study contributes an open dataset that can facilitate the development of algorithms and methodologies for effectively addressing inter-subject variability.

2 Materials and methods

2.1 Subjects

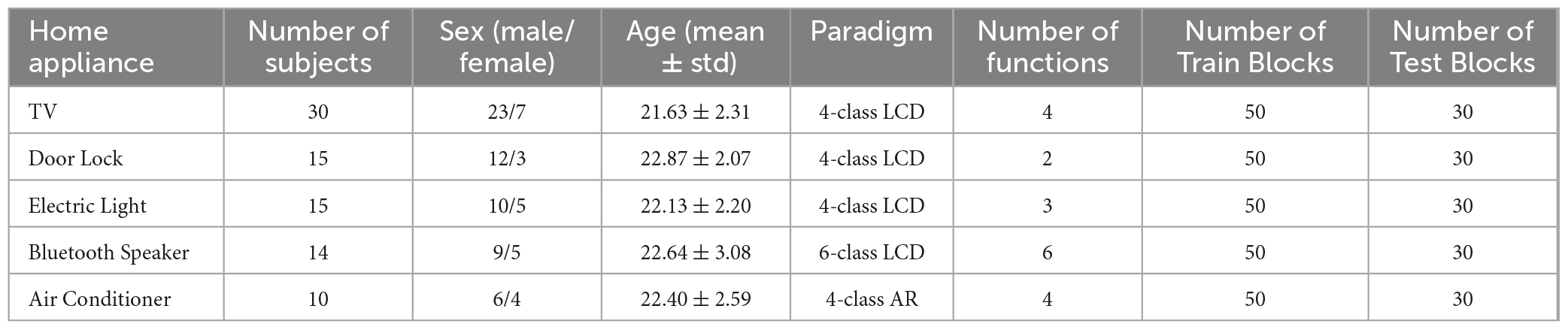

In this study, a total of 84 healthy volunteers participated, each in only one experiment, so that no subject overlapped between experiments. The demographic information of subjects is listed in Supplementary Table 1. The subjects were distributed across five online BCI experiments as follows: 30 subjects in the TV control experiment, 15 in the door lock (DL) control experiment, and 15 in the electric light (EL) control experiment. Additionally, 14 subjects participated in the Bluetooth speaker (BS) control experiment and 10 in the air conditioner (AC) control experiment. Because each experiment had a distinct participant group, transfer learning applied within or between paradigms necessarily involved transfer between subjects. Prior studies used subject pools ranging from 5 to 18 individuals, and our study maintained a similar scale for each experiment (Serby et al., 2005; Townsend et al., 2010). Ethical approval for this study was granted by the Institutional Review Board at the Ulsan National Institute of Science and Technology (IRB: UNISTIRB-18-08-A), and all subjects provided informed consent before participating.

2.2 EEG data acquisition

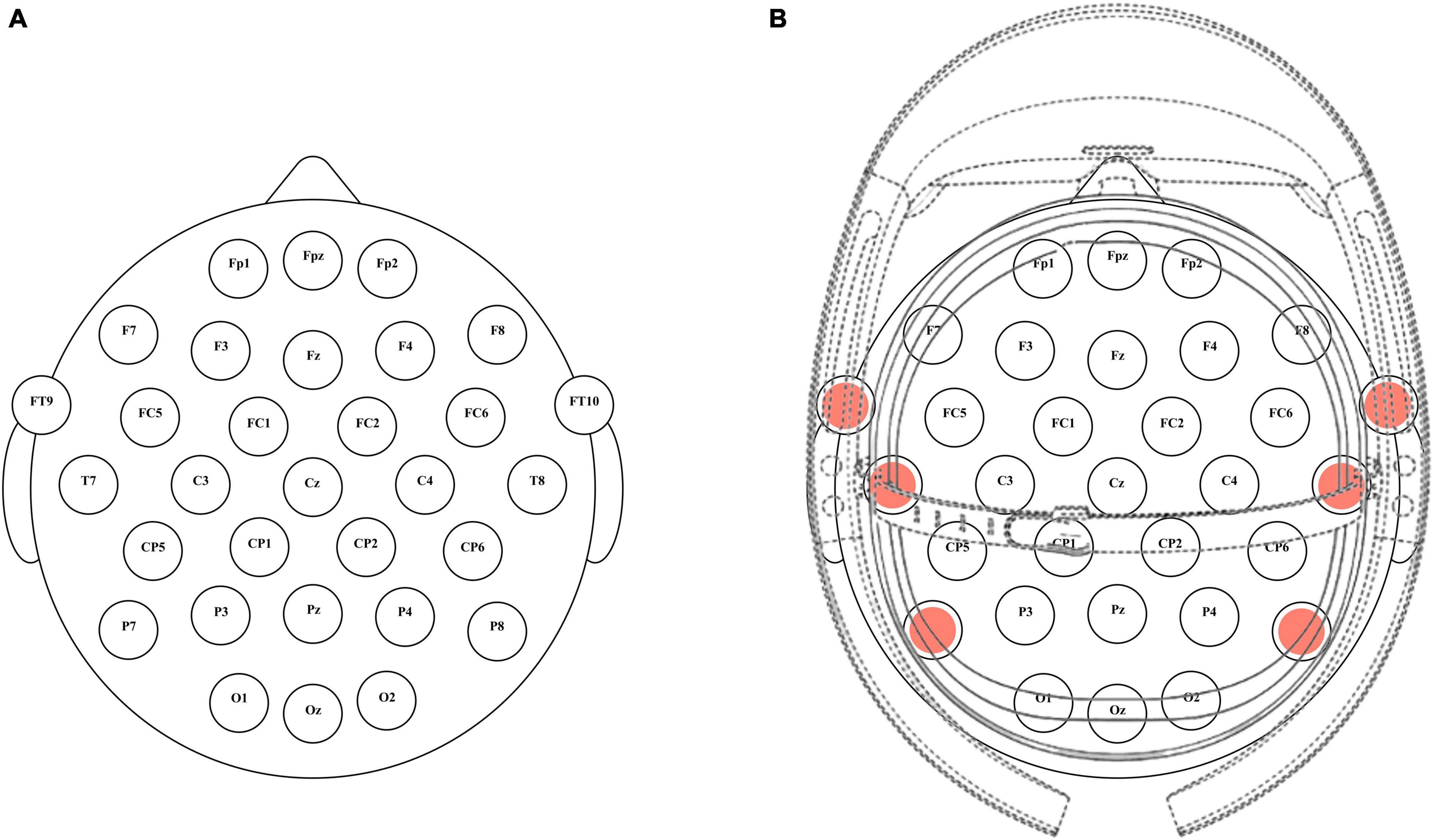

Scalp EEG data were collected using a commercially available EEG acquisition system (actiCHamp, Brain Products GmbH, Germany) following the electrode placement guidelines of the American Clinical Neurophysiology Society’s 10–20 system. In the LCD experimental environment, data were acquired from 31 active wet electrodes (FP1, FPz, FP2, F7, F3, Fz, F4, F8, FT9, FC5, FC1, FC2, FC6, FT10, T7, C3, Cz, C4, T8, CP5, CP1, CP2, CP6, P7, P3, Pz, P4, P8, O1, Oz, and O2) (Figure 1A). For the augmented reality (AR) environment, presented with Microsoft’s HoloLens 1, six channels (FT9, FT10, T7, T8, P7, and P8) were omitted, leaving 25 active electrodes (FP1, FPz, FP2, F7, F3, Fz, F4, F8, FC5, FC1, FC2, FC6, C3, Cz, C4, CP5, CP1, CP2, CP6, P3, Pz, P4, O1, Oz, and O2) (Figure 1B). In both settings, the reference and ground electrodes were placed on the left and right mastoids, respectively. Electrode impedance was kept below 5 kΩ. The EEG signals were digitized at a sampling rate of 500 Hz and band-pass filtered between 0.01 and 50 Hz.

Figure 1. The placement of electrodes and the experimental settings for two different environments. (A) Electrode configuration for the LCD environment using 31 electrodes based on the 10–20 system. (B) Electrode configuration for the AR environment using 25 active electrodes. The omitted 6 channels (FT9, FT10, T7, T8, P7, P8) are represented by red dots.
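The two montages differ only by the six omitted channels, so transferring a model from the LCD montage to the AR montage requires restricting LCD data to the 25 shared channels. The sketch below derives the AR channel list from the LCD list and returns the row indices needed for that alignment. It assumes that the rows of Data.signal follow the channel order listed above; that ordering is an assumption to check against the released files.

```python
# Channel lists as given in Section 2.2 (LCD: 31 channels).
LCD_CHANNELS = [
    "FP1", "FPz", "FP2", "F7", "F3", "Fz", "F4", "F8",
    "FT9", "FC5", "FC1", "FC2", "FC6", "FT10",
    "T7", "C3", "Cz", "C4", "T8",
    "CP5", "CP1", "CP2", "CP6",
    "P7", "P3", "Pz", "P4", "P8",
    "O1", "Oz", "O2",
]
OMITTED_IN_AR = {"FT9", "FT10", "T7", "T8", "P7", "P8"}

# The AR montage is the LCD montage minus the six omitted channels,
# keeping the original channel order (25 channels).
AR_CHANNELS = [ch for ch in LCD_CHANNELS if ch not in OMITTED_IN_AR]

def shared_channel_indices(source, target):
    """Return row indices into `source` for the channels of `target`,
    ordered as in `target` (e.g., to reduce LCD data to the AR montage)."""
    missing = [ch for ch in target if ch not in source]
    if missing:
        raise ValueError(f"channels not in source montage: {missing}")
    return [source.index(ch) for ch in target]

idx = shared_channel_indices(LCD_CHANNELS, AR_CHANNELS)
```

With NumPy, `signal[idx, :]` would then select the 25 shared rows of an LCD-montage recording.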

2.3 Experimental design

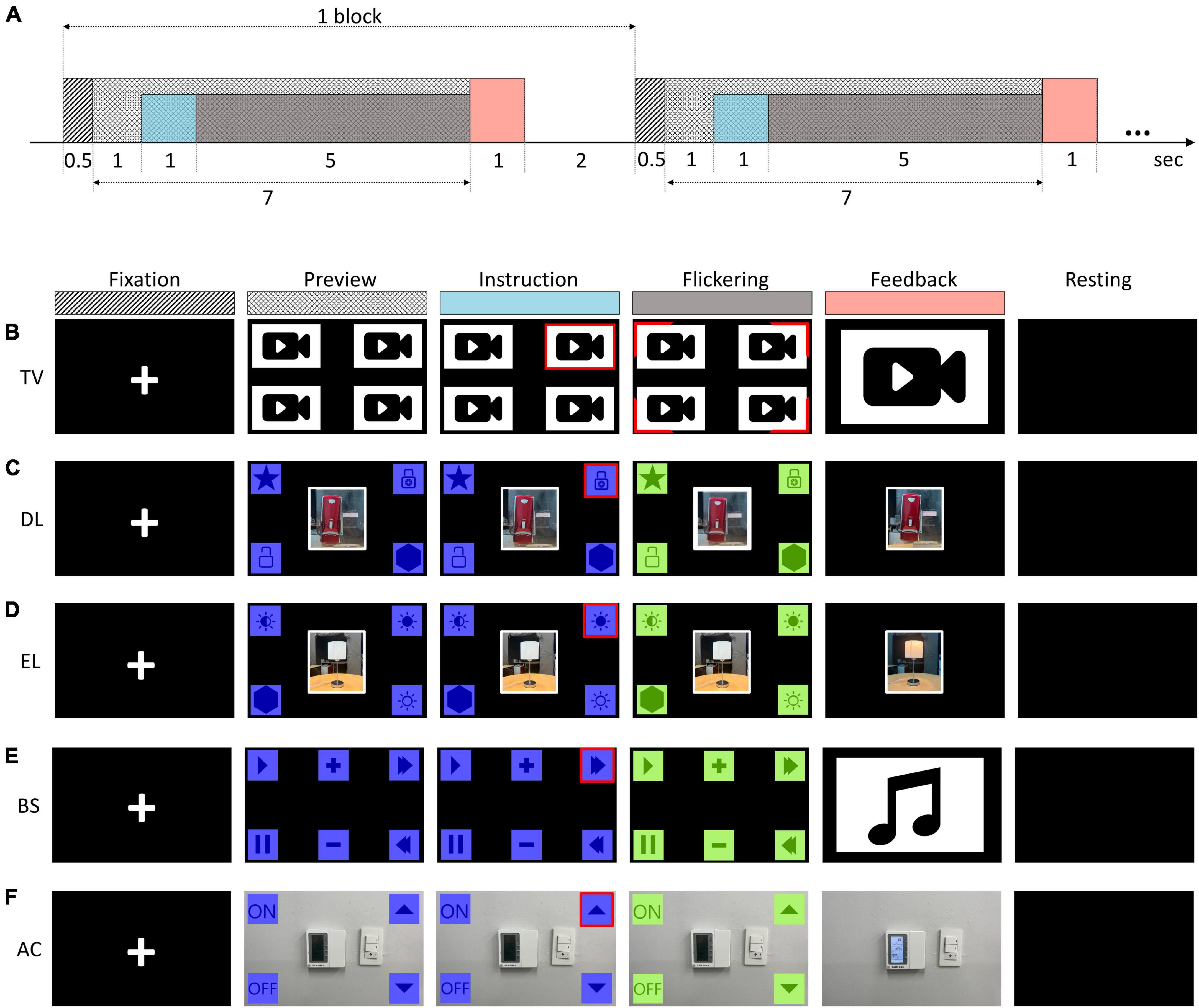

All BCI experiments for home appliance control shared an identical procedure consisting of a series of oddball-task blocks (Figure 2A). Each block began with a 0.5-s fixation period in which a white cross appeared at the center of the screen. A 1-s preview of all stimuli followed, allowing subjects to perceive them. The target stimulus was then indicated by turning its border red for 1 s. Next, the stimuli flickered in a random order for a total of 5 s. Afterward, feedback of the BCI control was shown for 1 s, and subjects rested for 2 s before the next block. The training phase comprised 50 blocks and the testing phase 30 blocks. The specific paradigm for each home appliance is described below (see also Figures 2B–F).
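As a quick check on the block timeline, the sketch below lays out the nominal segment onsets of one block from the durations given above. It assumes no additional gaps between segments or blocks, so the derived phase durations (about 8.75 min for 50 training blocks and 5.25 min for 30 testing blocks) are lower bounds rather than measured session lengths.

```python
# Nominal segment durations (s) of one block, in presentation order.
BLOCK_SEGMENTS = [
    ("fixation", 0.5),
    ("preview", 1.0),
    ("target_cue", 1.0),
    ("stimulation", 5.0),
    ("feedback", 1.0),
    ("rest", 2.0),
]

def block_timeline(segments=BLOCK_SEGMENTS):
    """Return (name, onset_s, offset_s) for each segment, relative to block start."""
    timeline, t = [], 0.0
    for name, duration in segments:
        timeline.append((name, t, t + duration))
        t += duration
    return timeline

tl = block_timeline()
block_len = tl[-1][2]                 # end of the rest period: 10.5 s
train_minutes = 50 * block_len / 60   # 50 training blocks
test_minutes = 30 * block_len / 60    # 30 testing blocks
```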

Figure 2. Various BCI paradigms used for controlling different home appliances: (A) Temporal sequence of the experiment paradigm, (B) TV, (C) Door Locks (DL), (D) Electric Lights (EL), (E) Bluetooth Speakers (BS), and (F) Air Conditioners (AC).

To develop a BCI for controlling TV channels, we created an emulated multiview TV platform that displayed four preview channels simultaneously in the four quadrants of the screen (Figure 2B). The video clips in each channel served as both channel previews and visual distractors. They were presented on a 50-inch Ultra High Definition (UHD) TV at a resolution of 1,920 × 1,080 and a refresh rate of 60 Hz. Red stimuli flickering at 8 Hz surrounded the corners of the video clip windows. Each block began with the subject fixating on the center of the screen, followed by an instruction indicating the target channel by turning its boundary red. Subsequently, the four video clips and their surrounding stimuli were displayed, each stimulus flickering ten times in a random order. Subjects, seated comfortably 2.5 m from the TV screen, were instructed to gaze at the stimulus surrounding the target channel. The video clip of the selected channel was then displayed for 1 s as feedback.

The BCI experiments for controlling door locks (DL) and electric lights (EL) presented the stimuli on a tablet PC screen (Figures 2C, D). Subjects were instructed to select one of two control icons for door lock control (lock/unlock) or one of three icons for electric light control (on/off/dim). Although the number of commands differed among the TV, DL, and EL experiments, dummy stimuli were added to the DL and EL tasks so that every experiment presented four stimuli, maintaining a target-to-non-target stimulus ratio of 1:3 and allowing the classifier to discriminate among four targets. Because all three experiments also presented the stimuli in an LCD environment, they were grouped under a unified paradigm referred to as the 4-class LCD paradigm. In the BCI experiment for controlling Bluetooth speakers (BS), subjects selected one of six functions (on/off/play/pause/next track/previous track), giving a target-to-non-target stimulus ratio of 1:5 (Figure 2E). Another BCI experiment controlled air conditioners (AC) through an AR environment. The AR stimuli were displayed on Microsoft’s HoloLens 1, whose display uses two HD 16:9 light engines with a holographic resolution of 2.3 million total light points. The BCI for AC control had four functions (on/off/temperature up/temperature down), giving a target-to-non-target stimulus ratio of 1:3 (Figure 2F).
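The dummy-stimulus scheme can be sketched as padding each command set to a fixed number of stimulus slots. The placeholder names (`dummy1`, `dummy2`, ...) are hypothetical and only illustrate how a 2- or 3-command interface becomes a 4-stimulus oddball task with a 1:3 target-to-non-target ratio.

```python
def pad_with_dummies(commands, n_slots=4):
    """Pad a command list with hypothetical placeholder stimuli so that
    every paradigm presents exactly `n_slots` stimuli."""
    if len(commands) > n_slots:
        raise ValueError("more commands than stimulus slots")
    n_dummy = n_slots - len(commands)
    return list(commands) + [f"dummy{i + 1}" for i in range(n_dummy)]

def target_to_nontarget_ratio(n_stimuli):
    """One target among n_stimuli gives a 1:(n_stimuli - 1) ratio."""
    return (1, n_stimuli - 1)

dl = pad_with_dummies(["lock", "unlock"])     # 2 commands + 2 dummies
el = pad_with_dummies(["on", "off", "dim"])   # 3 commands + 1 dummy
```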

In the four experiments (DL, EL, BS, and AC), the stimuli were icons representing control functions. All stimuli were initially displayed in blue. During the stimulus flickering period, each stimulus flickered in a random order by briefly changing its color to light green for 0.625 s. The inter-stimulus interval was 0.125 s. Each stimulus flickered 10 times in the stimulus flickering period (McFarland et al., 2011). The home appliance to be controlled was displayed in the background of the screen in the DL, EL, and AC experiments, whereas no device was displayed in the BS experiment (Figure 2).
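One way to generate the flash sequence for a stimulation period is sketched below. The text states only that each stimulus flickered 10 times in random order; grouping the flashes into 10 rounds, each a random permutation of all stimuli, is our assumption. The sketch also treats the 0.125-s interval as the onset-to-onset spacing of successive flashes, the reading that reproduces the 5-s stimulation period of the 4-class paradigms (40 flashes × 0.125 s).

```python
import random

def flash_schedule(n_stimuli, n_repeats=10, soa=0.125, seed=None):
    """Return (onset_s, stimulus_code) pairs for one stimulation period.

    Assumptions: flashes come in `n_repeats` rounds, each a random
    permutation of stimulus codes 1..n_stimuli, and consecutive flash
    onsets are `soa` seconds apart.
    """
    rng = random.Random(seed)
    schedule, k = [], 0
    for _ in range(n_repeats):
        order = list(range(1, n_stimuli + 1))
        rng.shuffle(order)                 # random order within this round
        for code in order:
            schedule.append((k * soa, code))
            k += 1
    return schedule

sched = flash_schedule(4, seed=0)
period = len(sched) * 0.125   # 40 flashes x 0.125 s
```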

2.4 Dataset structure

The data acquired from the experiments are organized hierarchically in three levels. A summary of the dataset information is provided in Table 1.

• First level, home appliance type: the data are grouped by the type of home appliance controlled through the BCI.

• Second level, subject: within each home appliance category, the data are divided by subject. Each subject is anonymized and identified only by a unique ID.

• Third level, block-specific data: the block-based data for each subject. The data files follow a naming convention for easy identification: SubX_training refers to the training data for subject X, and SubX_test_tr_Y refers to the test data for the Y-th block of subject X.

Each data file at the third level is composed of two main components:

• Data.signal: EEG signals stored as a matrix with dimensions [channels × time points].

• Data.trigger: event triggers stored as a row vector with dimensions [1 × time points].

The trigger types are coded as follows:

• 11: Block start

• 12: Stimulation start

• 13: Block end

• 1 to 6: Types of stimuli

• Stimulus code (1 to 6) between 11 and 12: indicates the target stimulus of the block.
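Given the trigger coding above, each block can be decoded by locating the block-start (11), stimulation-start (12), and block-end (13) markers. This sketch assumes that Data.trigger is zero except at event samples, that each marker occupies a single sample, and that exactly one stimulus code appears between 11 and 12; these assumptions should be verified against the released files.

```python
import numpy as np

BLOCK_START, STIM_START, BLOCK_END = 11, 12, 13

def parse_block_triggers(trigger):
    """Decode one block of a Data.trigger row vector.

    Returns (target_code, flash_onsets, flash_codes): the stimulus code (1-6)
    found between the 11 and 12 markers, plus the sample indices and codes
    of the stimuli between the 12 and 13 markers.
    """
    trig = np.asarray(trigger).ravel()      # accept [1 x T] or flat input
    events = np.flatnonzero(trig)           # sample indices of non-zero markers
    codes = trig[events]
    start = events[codes == BLOCK_START][0]
    stim = events[codes == STIM_START][0]
    end = events[codes == BLOCK_END][0]
    is_stim = (codes >= 1) & (codes <= 6)
    cue = is_stim & (events > start) & (events < stim)
    flashes = is_stim & (events > stim) & (events < end)
    target_code = int(codes[cue][0])
    return target_code, events[flashes], codes[flashes].astype(int)
```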

2.5 EEG preprocessing

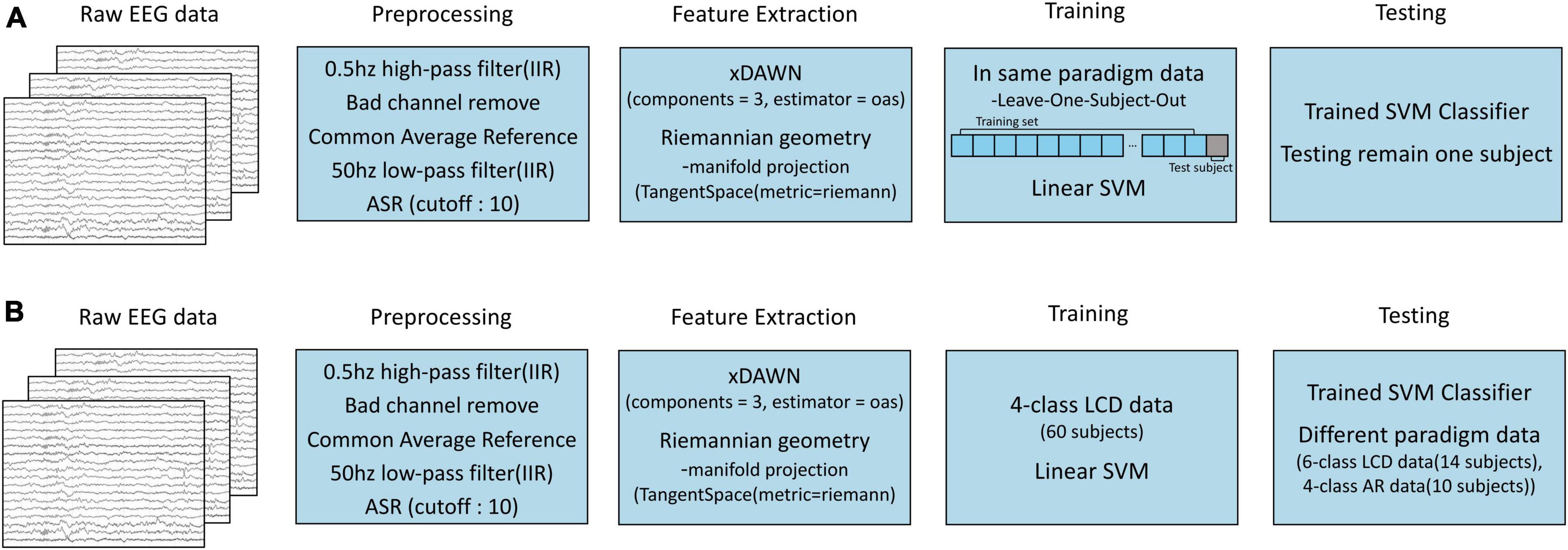

In our analysis, EEG preprocessing involved several steps. First, the EEG signals were high-pass filtered at 0.5 Hz with an infinite impulse response (IIR) filter (4th-order Butterworth) to eliminate slow drifts. Next, each channel was evaluated for signal quality: after 2-Hz low-pass filtering (2nd-order Butterworth), a channel was considered ‘bad’ and removed if its Pearson correlation coefficient with more than 30% of the other channels was lower than 0.4 (Bigdely-Shamlo et al., 2015). The remaining channels were then re-referenced using the common average reference (CAR) (Mullen et al., 2013). Following this, a 50-Hz low-pass IIR filter (4th-order Butterworth) was applied to reduce line noise. Lastly, artifact subspace reconstruction (ASR) with a cutoff value of 10 was employed for artifact removal (Mullen et al., 2013; Figure 3).
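The bad-channel criterion and the re-referencing step can be sketched with NumPy alone. The sketch covers only the correlation test and CAR; the Butterworth filters and ASR are not reproduced, so the input to `find_bad_channels` is assumed to be already low-pass filtered at 2 Hz (for example with `scipy.signal`). The function names are illustrative, not taken from the authors' code.

```python
import numpy as np

def find_bad_channels(x_lowpassed, corr_thresh=0.4, frac_thresh=0.3):
    """Return a boolean mask of 'bad' channels.

    A channel is flagged when its Pearson correlation with more than
    `frac_thresh` of the other channels is below `corr_thresh`.
    `x_lowpassed` is a [channels x samples] array, assumed to be
    low-pass filtered (2 Hz in the paper) before calling this function.
    """
    r = np.corrcoef(x_lowpassed)            # channel-by-channel correlations
    n = r.shape[0]
    low = r < corr_thresh
    np.fill_diagonal(low, False)            # ignore each channel's self-correlation
    frac_low = low.sum(axis=1) / (n - 1)    # fraction of the other channels
    return frac_low > frac_thresh

def common_average_reference(x):
    """Re-reference [channels x samples] data to the mean across channels."""
    return x - x.mean(axis=0, keepdims=True)
```

In use, the mask would be computed on the 2-Hz low-passed copy and then applied to the high-pass filtered data, e.g. `common_average_reference(x[~bad])`.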

Figure 3. Overall process of transfer learning. (A) Process of within-paradigm transfer learning. (B) Process of cross-paradigm transfer learning.

Event-related potentials were extracted by segmenting and averaging EEG signals in epochs time-locked to the stimuli. Specifically, an epoch was defined as 0.2 s before and 0.6 s after the onset of each stimulus.
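At the 500-Hz sampling rate, this window yields 100 pre-stimulus and 300 post-stimulus samples per epoch (400 samples in total). The sketch below cuts fixed-length epochs around onset sample indices (such as those decoded from Data.trigger) and averages epochs by stimulus code. Baseline correction is not mentioned in the text and is therefore not applied here.

```python
import numpy as np

FS = 500                  # sampling rate (Hz), Section 2.2
TMIN, TMAX = -0.2, 0.6    # epoch window relative to stimulus onset (s)

def extract_epochs(x, onsets, fs=FS, tmin=TMIN, tmax=TMAX):
    """Cut [channels x samples] data into epochs around stimulus onsets.

    Returns an array [n_epochs x channels x samples_per_epoch].
    """
    pre = int(round(-tmin * fs))     # 100 samples before onset
    post = int(round(tmax * fs))     # 300 samples after onset
    onsets = np.asarray(onsets)
    if onsets.min() - pre < 0 or onsets.max() + post > x.shape[1]:
        raise ValueError("epoch window exceeds data bounds")
    return np.stack([x[:, o - pre:o + post] for o in onsets])

def average_erp(epochs, codes, code):
    """Average the epochs whose stimulus code equals `code`."""
    codes = np.asarray(codes)
    return epochs[codes == code].mean(axis=0)
```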

2.6 Online BCI

The online BCI system was trained and tested over separate blocks. The training phase consisted of 50 blocks, during which subjects were instructed to gaze at a randomly displayed target. Feedback was provided based on the desired target stimulus, not the decoded one. From the training data, ERP amplitude features distinguishing target from non-target ERPs were selected using a two-sample t-test (p < 0.01). Dimensionality reduction was performed using Principal Component Analysis (PCA), retaining components that explained more than 90% of the feature variance. A Support Vector Machine (SVM) classifier with a linear kernel was then constructed to identify the target based on ERP features (Kim et al., 2019).
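The feature selection and dimensionality reduction steps of the online decoder can be sketched in NumPy as below. Two simplifications are assumptions rather than the authors' implementation: the p < 0.01 criterion is approximated by the two-sided large-sample critical value |t| > 2.576 (an exact threshold would use the t distribution, e.g., `scipy.stats.ttest_ind`), and PCA is computed directly by singular value decomposition. The linear SVM trained on the retained components is not reproduced.

```python
import numpy as np

def t_statistic(a, b):
    """Two-sample Student's t (pooled variance) for each feature column."""
    na, nb = len(a), len(b)
    va, vb = a.var(axis=0, ddof=1), b.var(axis=0, ddof=1)
    sp2 = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(sp2 * (1 / na + 1 / nb))

def select_features(target, nontarget, t_crit=2.576):
    """Mask of features with |t| > t_crit; 2.576 is the two-sided normal
    critical value for p = 0.01, a large-sample stand-in for the t test."""
    return np.abs(t_statistic(target, nontarget)) > t_crit

def fit_pca(x, var_ratio=0.90):
    """Return (mean, components) keeping the fewest principal components
    whose cumulative explained variance exceeds `var_ratio`."""
    mu = x.mean(axis=0)
    _, s, vt = np.linalg.svd(x - mu, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    k = int(np.searchsorted(np.cumsum(explained), var_ratio, side="right")) + 1
    k = min(k, len(s))
    return mu, vt[:k]
```

Projected features for the classifier would then be `(x - mu) @ components.T`.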

During the testing phase, which consisted of 30 blocks, subjects used the BCI system to control the specified home appliance according to the target instruction. The classifier trained on the 50 training blocks predicted the target command from the ERP features, and the prediction was provided as feedback.
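The text does not state how the binary target/non-target classifier's outputs are turned into a single command. A common rule, assumed in this sketch, is to score the averaged ERP features of each candidate stimulus with the linear decision function w·f + b and select the highest-scoring stimulus; `w` and `b` stand for a trained linear SVM's weights and bias.

```python
import numpy as np

def decode_target(candidate_features, w, b=0.0):
    """Pick the stimulus with the largest linear decision value w.f + b.

    `candidate_features` is [n_candidates x n_features]; row i holds the
    features of the ERP averaged over the flashes of stimulus i + 1.
    Returns (1-based stimulus code, decision values).
    """
    scores = np.asarray(candidate_features, dtype=float) @ np.asarray(w) + b
    return int(np.argmax(scores)) + 1, scores
```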

2.7 Transfer learning

Transfer learning was employed in this study to investigate its feasibility and effectiveness within and across different BCI paradigms (Wang et al., 2015). Among the various types of transfer learning, this study focused on transductive transfer learning, in which the task (classification) is identical across domains but the training and testing domains differ (Pan and Yang, 2010). For transfer learning, we used only the 30 testing blocks of each subject and excluded the 50 training blocks from the analysis. The dataset was divided into three subsets by experimental paradigm: (1) 4-class LCD (TV, DL, EL), (2) 6-class LCD (BS), and (3) 4-class AR (AC).

Transfer learning was first performed within each paradigm. For feature extraction, we used the Riemannian geometry approach implemented in the PyRiemann library (Barachant et al., 2023). ERP signals were spatially filtered using xDAWN, configured with five components (n_components = 5) and the oracle approximating shrinkage (OAS) estimator for covariance estimation (estimator = ‘oas’). Following xDAWN filtering, the resulting covariance matrices, which lie on a Riemannian manifold, were mapped to its tangent space [TangentSpace(metric = ‘riemann’)] (Barachant et al., 2013; Figure 3). Tangent space mapping linearizes the manifold at a reference point, transforming the covariance matrices into tangent vectors in a Euclidean space while approximately preserving the structure of the manifold around that point. These tangent vectors were used as features for classification. A support vector machine (SVM) classifier with a linear kernel was constructed to identify the target based on the extracted features.
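The tangent space step can be illustrated without PyRiemann. The NumPy sketch below computes the affine-invariant Riemannian mean of a set of covariance matrices by fixed-point iteration, whitens each matrix by that mean, takes the matrix logarithm, and vectorizes the upper triangle with off-diagonal entries weighted by √2, so that the Euclidean norm of each tangent vector equals its Riemannian distance to the reference. To our understanding this mirrors what `TangentSpace(metric='riemann')` computes, but the xDAWN covariance estimation that precedes it is not reproduced; any symmetric positive-definite matrices can be used as input.

```python
import numpy as np

def _eig_fn(c, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, v = np.linalg.eigh(c)
    return (v * fn(w)) @ v.T

def riemann_mean(covs, tol=1e-9, max_iter=100):
    """Affine-invariant Riemannian (Karcher) mean of SPD matrices,
    computed by the standard fixed-point iteration."""
    covs = np.asarray(covs)
    m = covs.mean(axis=0)                         # start from the arithmetic mean
    for _ in range(max_iter):
        m_half = _eig_fn(m, np.sqrt)
        m_ihalf = _eig_fn(m, lambda w: 1.0 / np.sqrt(w))
        # Average of the log-mapped matrices in the tangent space at m
        t = np.mean([_eig_fn(m_ihalf @ c @ m_ihalf, np.log) for c in covs], axis=0)
        m = m_half @ _eig_fn(t, np.exp) @ m_half  # map back to the manifold
        m = (m + m.T) / 2                         # remove round-off asymmetry
        if np.linalg.norm(t) < tol:
            break
    return m

def tangent_space(covs, ref):
    """Map SPD matrices to tangent vectors at the reference point `ref`."""
    n = ref.shape[0]
    r_ihalf = _eig_fn(ref, lambda w: 1.0 / np.sqrt(w))
    iu = np.triu_indices(n)
    # sqrt(2) on off-diagonal entries preserves the Frobenius norm of the log-map
    coef = np.where(iu[0] == iu[1], 1.0, np.sqrt(2.0))
    return np.array([coef * _eig_fn(r_ihalf @ c @ r_ihalf, np.log)[iu]
                     for c in np.asarray(covs)])
```

In a cross-subject setting, the reference would be estimated from the training subjects' covariances only, and the resulting vectors fed to a linear classifier.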

The accuracy of within-paradigm transfer learning was evaluated with ‘leave-one-subject-out’ cross-validation within each subset. In each fold, the 30 blocks from all subjects but one were used for training, and the 30 blocks of the excluded subject were used for testing (e.g., in the 4-class LCD subset, the training data consisted of 30 × 59 = 1,770 blocks, and the test data consisted of the 30 blocks from the excluded subject) (Figure 3A).
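Leave-one-subject-out cross-validation reduces to a simple fold generator over subjects. In the sketch below, blocks are opaque items grouped by a hypothetical subject ID; with 60 subjects of 30 blocks each, every fold trains on 59 × 30 = 1,770 blocks and tests on the held-out subject's 30 blocks.

```python
def loso_folds(blocks_by_subject):
    """Yield (held_out_subject, train_blocks, test_blocks) for
    leave-one-subject-out cross-validation.

    `blocks_by_subject` maps a subject ID to that subject's list of
    testing-phase blocks (30 per subject in this dataset).
    """
    for held_out in blocks_by_subject:
        train = [blk for subj, blks in blocks_by_subject.items()
                 if subj != held_out for blk in blks]
        yield held_out, train, list(blocks_by_subject[held_out])
```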

Next, transfer learning was applied across paradigms, using the same feature extraction and classification methods as in within-paradigm transfer learning. Because a larger training set offers the opportunity to build a more robust model that generalizes well to other tasks, the largest subset, the 60 subjects of the 4-class LCD paradigm (TV, DL, EL), served as the training data for cross-paradigm transfer learning. The resulting model was then applied to the remaining subsets: the 6-class LCD paradigm with 14 subjects (BS) and the 4-class AR paradigm with 10 subjects (AC). Classification accuracy was evaluated for each subject (Figure 3B).
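The cross-paradigm design can be summarized from the subject counts in Section 2.1. The sketch below tallies, for each target paradigm, the number of training subjects and testing blocks and the number of evaluated subjects; the dictionary keys are illustrative labels. If the classifier scores each stimulus as target versus non-target, the same model can in principle be applied to 4- and 6-stimulus paradigms, although AR data would presumably also need to be restricted to the 25 channels shared with the LCD montage.

```python
# Subjects per experiment (Section 2.1), grouped into the three paradigms.
PARADIGMS = {
    "4-class LCD": {"TV": 30, "DL": 15, "EL": 15},
    "6-class LCD": {"BS": 14},
    "4-class AR": {"AC": 10},
}

def n_subjects(paradigm):
    return sum(PARADIGMS[paradigm].values())

def cross_paradigm_plan(source="4-class LCD", blocks_per_subject=30):
    """Train on all subjects of `source`; evaluate per subject elsewhere."""
    n_src = n_subjects(source)
    return {target: {"train_subjects": n_src,
                     "train_blocks": n_src * blocks_per_subject,
                     "test_subjects": n_subjects(target)}
            for target in PARADIGMS if target != source}
```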

3 Results

3.1 BCI performance

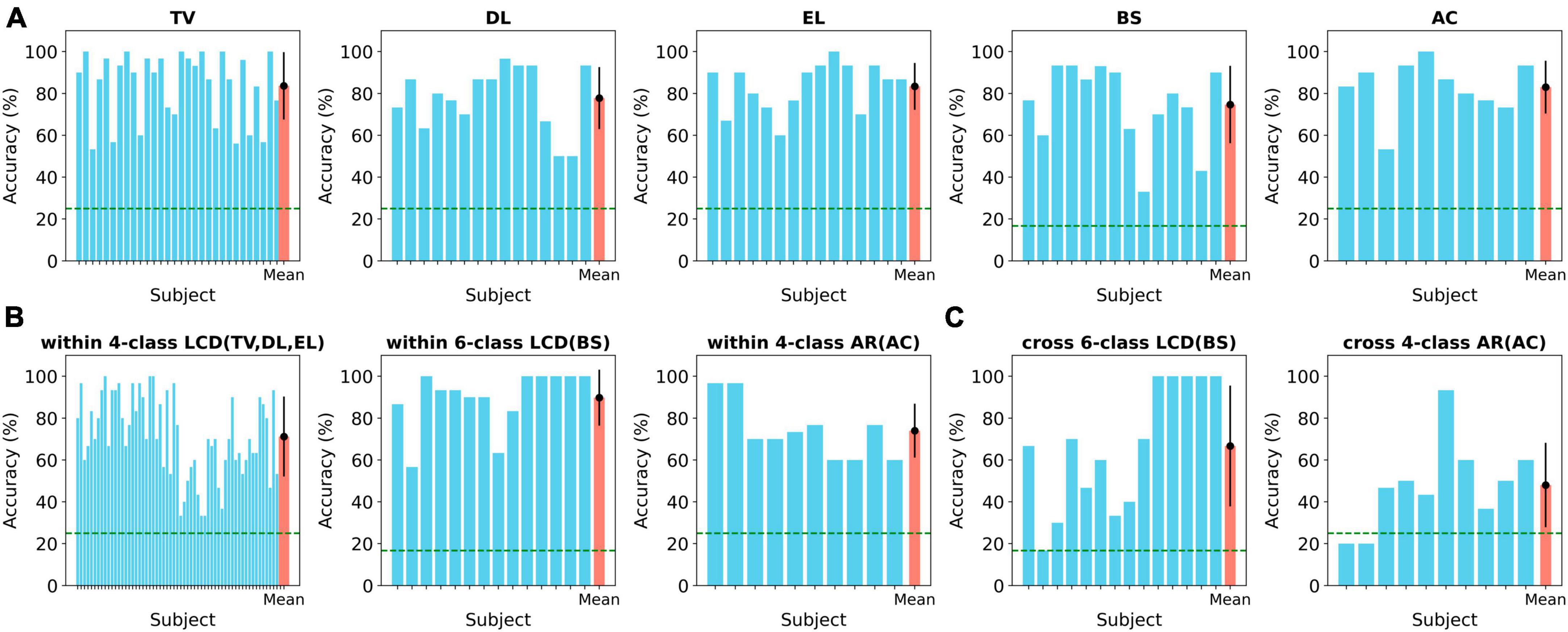

The performance of the BCI system was evaluated in three paradigms: a 4-class LCD environment (TV, DL, EL), a 6-class LCD environment (BS), and a 4-class AR environment (AC). The primary performance metric was classification accuracy, the ratio of correctly classified commands to the total number of commands. Figure 4A shows the accuracy of online control for each appliance. In the 4-class LCD paradigm, the system was used to control three home appliances: TV, DL, and EL. The average classification accuracy for TV control (N = 30) was 83.62 ± 16.38% (mean ± SD), ranging from 53% to 100%. For DL control (N = 15), the average accuracy was 77.78 ± 15.3% (range: 50–96.6%). For EL control (N = 15), it was 83.35 ± 11.56% (range: 60–100%). The chance level for the 4-class paradigms was 25%. In the 6-class LCD paradigm, which involved controlling a Bluetooth speaker (BS), the average classification accuracy (N = 14) was 74.67 ± 19.2% (range: 33.33–93.33%). In the 4-class AR paradigm, which involved controlling an air conditioner (AC), the average classification accuracy (N = 10) was 86.3 ± 13.28% (range: 53.3–100%).

Figure 4. Accuracy across different paradigms and transfer learning approaches. (A) Online classification accuracy for controlling various home appliances in the 4-class and 6-class LCD and 4-class AR environments. (B) Classification accuracy with within-paradigm transfer learning for different appliances. (C) Classification accuracy with cross-paradigm transfer learning from the 4-class LCD environment to the 6-class LCD and 4-class AR environments.
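Accuracy is computed per subject over the 30 testing blocks, so each block shifts a subject's accuracy by 1/30 (about 3.33 percentage points), which is why values such as 33.33% and 93.33% appear in the results. The chance level is simply 1/N for N equiprobable targets: 25% for the 4-class paradigms and about 16.67% for the 6-class paradigm. A minimal sketch of both quantities:

```python
def accuracy(predicted, actual):
    """Fraction of blocks whose decoded command matches the instructed target."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equally long, non-empty sequences")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

def chance_level(n_classes):
    """Expected accuracy of uniform random guessing among n_classes targets."""
    return 1.0 / n_classes
```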

There was no significant difference in accuracy among home appliances within the 4-class LCD paradigm (Kruskal–Wallis test, p > 0.05). When comparing accuracy between the different paradigms, the 6-class LCD paradigm (BS) and 4-class AR paradigm (AC) showed no significant difference (Kruskal–Wallis test, p > 0.05).

3.2 Within-paradigm transfer learning

We investigated transfer learning within the same paradigm, as described in the Methods section. Figure 4B shows the accuracy for each appliance with within-paradigm transfer learning. In the 4-class LCD environment, the average classification accuracy was 71.17 ± 19.26% (range: 33.33–100%). In the 6-class LCD environment, it was 89.76 ± 13.87% (range: 56.67–100%). Lastly, in the 4-class AR environment, it was 74.00 ± 13.59% (range: 60–96.67%). There was no significant difference in accuracy with and without transfer learning in the 4-class LCD and 4-class AR environments (paired t-test, p > 0.05), whereas accuracy increased significantly with transfer learning in the 6-class LCD environment (paired t-test, p < 0.05). These results indicate that a BCI system built from other users’ data could be applied to a new user without calibrating the system for that user.

3.3 Cross-paradigm transfer learning

Furthermore, we explored the feasibility of cross-paradigm transfer learning, using the same feature extraction and classification methods as in within-paradigm transfer learning. Figure 4C shows the accuracy for each appliance with cross-paradigm transfer learning. When transferring from the 4-class LCD to the 6-class LCD environment, the average classification accuracy was 66.67 ± 29.99% (range: 16.67–100%). When transferring from the 4-class LCD to the 4-class AR environment, it was 48.00 ± 21.27% (range: 20–93.33%). Accuracy decreased significantly in both cases (paired t-test, p < 0.01): relative to the BCI without transfer learning, accuracy dropped by 8% for the 6-class LCD paradigm and by 35% for the 4-class AR paradigm. The accuracy of individual subjects, as well as the mean accuracies and standard deviations across all paradigms, can be found in Supplementary Table 2. We also observed an increase in classification accuracy when combining the Riemannian geometry approach with xDAWN for feature extraction compared with using xDAWN alone; the improvement was statistically significant in three out of five cases (Supplementary Table 3).

4 Discussion

One of the most pressing challenges in the field of BCIs is inter-subject variability. Our study was explicitly designed to address this problem by constructing a comprehensive dataset that covers various stimulus presentation paradigms for ERP-based BCIs. The dataset includes ERP-based BCI data from 84 subjects performing the oddball task to control real-world home appliances online. The variety of stimulus presentation paradigms offers opportunities to explore similarities and differences in ERP patterns, as well as in BCI operation, across paradigms. The improved classification accuracy achieved by within-paradigm transfer learning, particularly in the 6-class LCD paradigm (BS), demonstrates the possibility of mitigating inter-subject variability. It suggests that BCIs can be generalized across different individuals without a substantial loss in performance.

Transfer learning has become a noteworthy approach in the field of BCIs, especially for tackling inter-subject variability. Our within-paradigm transfer learning results are promising, with reasonable accuracies for the different paradigms. This indicates that once a BCI model is built in one paradigm, it might be applied to new users of that paradigm with little or no additional training (Lee et al., 2020). This could reduce the time and resources needed for BCI deployment, potentially easing the move from research labs to practical use (Ko et al., 2021).

However, our results show that inter-subject variability remains a challenge between different paradigms: cross-paradigm transfer learning was not as successful as within-paradigm transfer learning. Although the oddball task was consistent across paradigms, BCI performance decreased when we applied the BCI system built from LCD-based stimulus presentation to data with AR-based stimulus presentation, even with the same number of classes. In contrast, there was no significant change in BCI performance when the BCI system was built using data from the same AR-based stimulus presentation paradigm. Future research is warranted to identify factors that contribute to this variability induced by differences in the stimulus presentation environment, such as attention, cognitive load, and visual distraction (Souza and Naves, 2021; Gibson et al., 2022). Also, more advanced machine learning methods that can handle complex relationships in the data (Alzahab et al., 2021; Zhang et al., 2021) may improve cross-paradigm transfer learning.

5 Conclusion

We constructed a comprehensive dataset of ERP-based BCIs with a relatively large number of subjects (N = 84). The stimulus paradigms used to elicit ERPs in the oddball task were diverse, with varied numbers of stimuli (4 and 6) and display types (LCD monitor and AR). The dataset includes both training and testing data to build and assess BCIs; in particular, the testing data contain online BCI control data, each set associated with a different home appliance. We showcased the utility of the data by applying transfer learning to mitigate inter-subject variability. The results showed that transfer learning of BCIs was successful across subjects within the same stimulus presentation paradigm but limited across different paradigms. This demonstrates that, for the design of ERP-based BCIs, it is important to understand how different visual stimulation affects ERPs even when the identical oddball task is performed. The feasibility of transfer learning when the visual stimulus presentation paradigm is unchanged, as verified in this study, may help reduce subject-dependent calibration for practical use of ERP-based BCIs. We also believe that the dataset presented in this study offers researchers opportunities to develop and test new BCI algorithms, helping make ERP-based BCIs more accessible and user-friendly.

Data availability statement

The datasets presented in this study are available in an online repository. The data can be accessed at https://github.com/jml226/Home-Appliance-Control-Dataset.

Ethics statement

The studies involving humans were approved by the Institutional Review Board at the Ulsan National Institute of Science and Technology. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JL: Data curation, Formal analysis, Methodology, Writing—original draft. MK: Data curation, Methodology, Writing—original draft. DH: Data curation, Methodology, Writing—original draft. JK: Data curation, Methodology, Writing—original draft. M-KK: Data curation, Methodology, Writing—original draft. TL: Data curation, Methodology, Writing—original draft. JP: Data curation, Methodology, Writing—original draft. HK: Data curation, Methodology, Writing—original draft. MH: Data curation, Methodology, Writing—original draft. LK: Funding acquisition, Project administration, Writing—review and editing. S-PK: Funding acquisition, Project administration, Writing—original draft, Writing—review and editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the Alchemist Project (grant number 20012355, Fully implantable closed loop Brain to X for voice communication) funded by the Ministry of Trade, Industry and Energy (MOTIE, Korea), the Institute of Information and Communications Technology Planning and Evaluation (IITP) Grant funded by the Korea Government (MSIT) (grant number 2017-0-00432, Development of non-invasive integrated BCI SW platform to Control Home Appliances and External Devices by user’s thought via AR/VR interface), and the Brain Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (grant number 2022M3C7A1015112).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2024.1320457/full#supplementary-material

References

Ahn, M., Lee, M., Choi, J., and Jun, S. C. (2014). A review of brain-computer interface games and an opinion survey from researchers, developers and users. Sensors (Basel) 14, 14601–14633.

Alzahab, N. A., Apollonio, L., Di Iorio, A., Alshalak, M., Iarlori, S., Ferracuti, F., et al. (2021). Hybrid deep learning (hDL)-based brain-computer interface (BCI) systems: a systematic review. Brain Sci. 11:75.

Amiri, S., Fazel-Rezai, R., and Asadpour, V. (2013). A review of hybrid brain-computer interface systems. Adv. Hum. Comput. Interact. 2013:18702.

An, S., Kim, S., Chikontwe, P., and Park, S. H. (2023). “Dual attention relation network with fine-tuning for few-shot EEG motor imagery classification,” in Proceedings of the IEEE transactions on neural networks and learning systems, (Piscataway, NJ: IEEE), 1–15.

Anwar, S., Batool, T., and Majid, M. (2019). “Event related potential (ERP) based lie detection using a wearable EEG headset,” in Proceedings of the 16th IEEE international Bhurban conference on applied sciences and technology (IBCAST), (Piscataway, NJ: IEEE), 543–547.

Barachant, A., Barthélemy, Q., King, J.-R., Gramfort, A., Chevallier, S., Rodrigues, P. L. C., et al. (2023). pyRiemann. Available online at: https://github.com/pyRiemann/pyRiemann (accessed June, 2023).

Barachant, A., Bonnet, S., Congedo, M., and Jutten, C. (2013). Classification of covariance matrices using a Riemannian-based kernel for BCI applications. Neurocomputing 112, 172–178.

Bentabet, N., and Berrached, N.-E. (2016). “Synchronous P300 based BCI to control home appliances,” in Proceedings of the 8th IEEE international conference on modelling, identification and control (ICMIC), (Piscataway, NJ: IEEE), 835–838.

Bigdely-Shamlo, N., Mullen, T., Kothe, C., Su, K. M., and Robbins, K. A. (2015). The PREP pipeline: standardized preprocessing for large-scale EEG analysis. Front. Neuroinform. 9:16. doi: 10.3389/fninf.2015.00016

Congedo, M., Barachant, A., and Bhatia, R. (2017). Riemannian geometry for EEG-based brain-computer interfaces; a primer and a review. Brain Comput. Interfaces 4, 155–174.

Dolzhikova, I., Abibullaev, B., and Zollanvari, A. (2022). “An ensemble of convolutional neural networks for zero-calibration ERP-based BCIs,” in Proceedings of the 10th IEEE international winter conference on brain-computer interface (BCI), (Piscataway, NJ: IEEE), 1–4.

Fazel-Rezai, R., Allison, B., Guger, C., Sellers, E. W., Kleih, S. C., and Kübler, A. (2012). P300 brain computer interface: current challenges and emerging trends. Front. Neuroeng. 5:14. doi: 10.3389/fneng.2012.00014

Gao, X., Wang, Y., Chen, X., and Gao, S. (2021). Interface, interaction, and intelligence in generalized brain-computer interfaces. Trends Cogn. Sci. 25, 671–684.

Gibson, E., Lobaugh, N. J., Joordens, S., and McIntosh, A. R. (2022). EEG variability: task-driven or subject-driven signal of interest? Neuroimage 252:119034.

Jayaram, V., Alamgir, M., Altun, Y., Schölkopf, B., and Grosse-Wentrup, M. (2016). Transfer learning in brain-computer interfaces. IEEE Comput. Intell. Mag. 11, 20–31.

Kim, M., Kim, M.-K., Hwang, M., Kim, H.-Y., Cho, J., and Kim, S.-P. (2019). Online home appliance control using EEG-based brain–computer interfaces. Electronics 8:1101.

Kindermans, P. J., Schreuder, M., Schrauwen, B., Müller, K. R., and Tangermann, M. (2014). True zero-training brain-computer interfacing – An online study. PLoS One 9:e102504. doi: 10.1371/journal.pone.0102504

Ko, W., Jeon, E., Jeong, S., Phyo, J., and Suk, H. I. (2021). A survey on deep learning-based short/zero-calibration approaches for EEG-based brain–computer interfaces. Front. Hum. Neurosci. 15:643386. doi: 10.3389/fnhum.2021.643386

Krol, L. R., Andreessen, L. M., and Zander, T. O. (2018). “Passive brain–computer interfaces: a perspective on increased interactivity,” in Brain–computer interfaces handbook, eds C. S. Nam, A. Nijholt, and F. Lotte (Boca Raton, FL: CRC Press), 69–86.

Lee, J., Won, K., Kwon, M., Jun, S. C., and Ahn, M. (2020). CNN with large data achieves true zero-training in online P300 brain-computer interface. IEEE Access 8, 74385–74400.

Li, F., Tao, Q., Peng, W., Zhang, T., Si, Y., Zhang, Y., et al. (2020). Inter-subject P300 variability relates to the efficiency of brain networks reconfigured from resting-to task-state: evidence from a simultaneous event-related EEG-fMRI study. Neuroimage 205:116285.

Mane, R., Chouhan, T., and Guan, C. (2020). BCI for stroke rehabilitation: motor and beyond. J. Neural Eng. 17:041001.

Marshall, D., Coyle, D., Wilson, S., and Callaghan, M. (2013). Games, gameplay, and BCI: the state of the art. IEEE Trans. Comput. Intell. AI Games 5, 82–99.

Maswanganyi, R. C., Tu, C., Owolawi, P. A., and Du, S. (2022). Statistical evaluation of factors influencing inter-session and inter-subject variability in EEG-based brain computer interface. IEEE Access 10, 96821–96839.

McFarland, D. J., Sarnacki, W. A., Townsend, G., Vaughan, T., and Wolpaw, J. R. (2011). The P300-based brain–computer interface (BCI): effects of stimulus rate. Clin. Neurophysiol. 122, 731–737.

Mullen, T., Kothe, C., Chi, Y. M., Ojeda, A., Kerth, T., Makeig, S., et al. (2013). “Real-time modeling and 3D visualization of source dynamics and connectivity using wearable EEG,” in Proceedings of the 35th annual international conference of the IEEE engineering in medicine and biology society (EMBC), (Piscataway, NJ: IEEE), 2184–2187.

Pan, S. J., and Yang, Q. (2010). A survey on transfer learning. IEEE Trans. Knowledge Data Eng. 22, 1345–1359.

Pérez-Velasco, S., Santamaría-Vázquez, E., Martínez-Cagigal, V., Marcos-Martinez, D., and Hornero, R. (2022). EEGSym: overcoming inter-subject variability in motor imagery based BCIs with deep learning. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 1766–1775.

Rodrigues, P. L. C., Jutten, C., and Congedo, M. (2019). Riemannian procrustes analysis: transfer learning for brain–computer interfaces. IEEE Trans. Biomed. Eng. 66, 2390–2401.

Serby, H., Yom-Tov, E., and Inbar, G. F. (2005). An improved P300-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 13, 89–98.

Sosa, O. P., Quijano, Y., Doniz, M., and Chong-Quero, J. (2011). “BCI: a historical analysis and technology comparison,” in Proceedings of the Pan American health care exchanges, (Piscataway, NJ: IEEE), 205–209.

Souza, R., and Naves, E. L. M. (2021). Attention detection in virtual environments using EEG signals: a scoping review. Front. Physiol. 12:727840. doi: 10.3389/fphys.2021.727840

Townsend, G., Lapallo, B. K., Boulay, C. B., Krusienski, D. J., Frye, G. E., Hauser, C. K., et al. (2010). A novel P300-based brain–computer interface stimulus presentation paradigm: moving beyond rows and columns. Clin. Neurophysiol. 121, 1109–1120.

Wang, P., Lu, J., Zhang, B., and Tang, Z. (2015). “A review on transfer learning for brain-computer interface classification,” in Proceedings of the 5th international conference on information science and technology (ICIST), (Piscataway, NJ: IEEE), 315–322.

Keywords: ERP-based BCI, EEG, transfer learning, BCI dataset, home appliance

Citation: Lee J, Kim M, Heo D, Kim J, Kim M-K, Lee T, Park J, Kim H, Hwang M, Kim L and Kim S-P (2024) A comprehensive dataset for home appliance control using ERP-based BCIs with the application of inter-subject transfer learning. Front. Hum. Neurosci. 18:1320457. doi: 10.3389/fnhum.2024.1320457

Received: 12 October 2023; Accepted: 08 January 2024;

Published: 01 February 2024.

Edited by:

Ana Matran-Fernandez, University of Essex, United Kingdom

Reviewed by:

Qingshan She, Hangzhou Dianzi University, China

Hendrik Achim Woehrle, German Research Center for Artificial Intelligence (DFKI), Germany

Copyright © 2024 Lee, Kim, Heo, Kim, Kim, Lee, Park, Kim, Hwang, Kim and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laehyun Kim, laehyunk@kist.re.kr; Sung-Phil Kim, spkim@unist.ac.kr

Jongmin Lee, Minju Kim, Dojin Heo, Jongsu Kim, Min-Ki Kim, Taejun Lee, Jongwoo Park, Minho Hwang, Laehyun Kim, Sung-Phil Kim