- ¹Faculty of Information Technology, Beijing Artificial Intelligence Institute, Beijing University of Technology, Beijing, China

- ²Tianjin Key Laboratory of Cognitive Computing and Application, School of Computer Science and Technology, Tianjin University, Tianjin, China

- ³School of Computer and Communication Engineering, University of Science and Technology Beijing, Beijing, China

- ⁴State Key Laboratory of Intelligent Technology and Systems, National Laboratory for Information Science and Technology, Tsinghua University, Beijing, China

- ⁵Medical Imaging Research Institute, Binzhou Medical University, Yantai, China

Emotions can be perceived from both facial and bodily expressions. Our previous study demonstrated that facial expressions can be successfully decoded from functional connectivity (FC) patterns. However, the role of FC patterns in the recognition of bodily expressions remains unclear, and no neuroimaging study has adequately addressed whether emotions perceived from facial and bodily expressions are processed by common or distinct neural networks. To address this, the present study collected functional magnetic resonance imaging (fMRI) data in a block-design experiment using videos of facial and bodily expressions as stimuli (three emotions: anger, fear, and joy) and conducted multivariate pattern classification analysis based on the estimated FC patterns. We found that, in addition to facial expressions, bodily expressions could also be successfully decoded from large-scale FC patterns. Emotion classification accuracies were higher for facial than for bodily expressions. Further analysis of the contributive FCs showed that the emotion-discriminative networks were widely distributed across both hemispheres, comprising regions ranging from primary visual areas to higher-level cognitive areas. Moreover, for a given emotion, the discriminative FCs for facial and bodily expressions were distinct. Together, our findings highlight the key role of FC patterns in emotion processing, indicate how large-scale FC patterns reconfigure during the processing of facial and bodily expressions, and support a distributed neural representation for emotion recognition. Our results also suggest that the human brain employs separate network representations for facial and bodily expressions of the same emotion. This study provides new evidence for network representations in emotion perception and may further our understanding of the mechanisms underlying emotion recognition from body language.

Introduction

Humans can readily recognize others’ emotions and respond accordingly. In daily communication, emotions can be perceived from both facial and bodily expressions, and the ability to decode emotions from different perceptual cues is a crucial skill of the human brain. In recent years, the neural representation of facial and bodily expressions has been explored intensively, deepening our understanding of the neural basis of this brain–behavior relationship.

Using functional magnetic resonance imaging (fMRI), neuroimaging studies have identified a number of brain regions that show preferential activation to facial and bodily expressions. An early model of face perception, proposed by Haxby et al. (2000) and Gobbini and Haxby (2007), consists of a “core” and an “extended” system. The face-selective areas of the core system, namely the occipital face area (OFA), the fusiform face area (FFA), and the posterior superior temporal sulcus (pSTS), are considered key regions for processing the identity and emotional features of faces (Grill-Spector et al., 2004; Ishai et al., 2005; Lee et al., 2010; Gobbini et al., 2011). Bodies and body parts have been found to be represented in the extrastriate body area (EBA) and the fusiform body area (FBA) (Downing and Peelen, 2016). Notably, the FBA partially overlaps with the FFA, and some similarities have been found between the processing of bodies and faces (Minnebusch and Daum, 2009; de Gelder et al., 2010). Because the fusiform gyrus (FG) contains both the FFA and the FBA, this area is thought to represent characteristics of the whole person (Kim and McCarthy, 2016). Studies in macaques and humans have found that the STS, a crucial node for processing social information, is sensitive to both faces and bodies (Pinsk et al., 2009). Recent studies have also proposed that the STS participates in processing facial and bodily motions, postures, and emotions (Candidi et al., 2011; Zhu et al., 2013).

As a data-driven technique, multivariate pattern analysis (MVPA) provides a promising way to infer the functional roles of cortical areas and networks from distributed patterns in fMRI data (Mahmoudi et al., 2012). Recently, a growing number of studies have used MVPA to decode emotions from activation patterns. Said et al. (2010) and Harry et al. (2013) reported successful decoding of facial emotions in the STS and FFA, and Wegrzyn et al. (2015) directly compared emotion classification rates across the face-processing areas of Haxby’s model (Haxby et al., 2000). Our previous studies identified the face- and body-selective areas as well as motion-sensitive regions and used activation-based MVPA to explore their roles in decoding facial and bodily expressions (Liang et al., 2017; Yang et al., 2018). However, these studies mainly focused on decoding emotions from the activation patterns of specific brain regions. Because distinct cortical regions are expected to interact, the potential contribution of connectivity patterns to the processing of emotional information needs further exploration. In contrast to studies of specific brain regions, functional connectivity (FC) analysis takes into account the functional interactions between distinct brain regions and can therefore provide new insights into how large-scale neuronal communication and information integration relate to human cognition and behavior. FC is commonly measured by correlation analysis, which characterizes the temporal correlations of fMRI activity between different cortical regions (Smith, 2012). Interest in FC analysis has grown in recent years, and studies on the recognition of various objects have commonly observed intrinsic interconnections between distinct brain regions (He et al., 2013; Zhen et al., 2013; Hutchison et al., 2014).
In addition to analyzing several predefined regions of interest (ROIs) or networks, whole-brain FC analysis makes fuller use of the wealth of information present in fMRI data (Zeng et al., 2012). Combining whole-brain FC analysis with MVPA, recent fMRI studies have successfully decoded neurological disorders and various object categories from FC patterns (Zeng et al., 2012; Liu et al., 2015; Wang et al., 2016). Inspired by these studies, our recent study further demonstrated successful decoding of facial expressions from FC patterns (Liang et al., 2018). However, the potential contribution of FC patterns to decoding bodily expressions remains unclear, and no neuroimaging study has resolved whether emotions perceived from facial and bodily expressions are processed by common or distinct neural networks.

This study aimed to explore the network representations of facial and bodily expressions. To this end, we collected fMRI data in a block-design, multi-category emotion classification experiment in which participants viewed videos of facial and bodily expressions of three emotions (anger, fear, and joy). Dynamic stimuli were used because there is evidence that they have greater ecological validity than their static counterparts and may be more appropriate for investigating the “authentic” mechanisms of the brain (Johnston et al., 2013). We conducted whole-brain FC analysis to estimate the FC pattern for each emotion in each stimulus type and performed multivariate connectivity pattern classification analysis (fcMVPA). We calculated the classification accuracies for facial and bodily expressions based on the FC patterns and constructed emotion-preferring networks by identifying the discriminative FCs.

Materials and Methods

Participants

Twenty-four healthy, right-handed subjects (12 females, aged 19–25 years) participated in this study. All subjects had normal or corrected-to-normal vision and no history of neurological or psychiatric disorders. Informed consent was obtained from all individual participants included in the study. The experiment was conducted in accordance with the requirements of the local ethics committee and was approved by the Research Ethics Committee of Yantai Affiliated Hospital of Binzhou Medical University. Four subjects were excluded because of excessive head motion during scanning (translation >2 mm, rotation >2°; Liu et al., 2015). Therefore, the final connectivity analysis included 20 subjects.

fMRI Data Acquisition

Functional and structural images were acquired on a 3.0-T Siemens scanner with an eight-channel head coil at Yantai Affiliated Hospital of Binzhou Medical University. Foam pads and earplugs were used to reduce head motion and scanner noise. Functional images were acquired using a gradient echo-planar imaging (EPI) sequence (TR = 2000 ms, TE = 30 ms, voxel size = 3.1 × 3.1 × 4.0 mm³, matrix size = 64 × 64, slices = 33, slice thickness = 4 mm, slice gap = 0.6 mm). In addition, T1-weighted structural images were acquired using a three-dimensional magnetization-prepared rapid-acquisition gradient echo (3D MPRAGE) sequence (TR = 1900 ms, TE = 2.52 ms, TI = 1100 ms, voxel size = 1 × 1 × 1 mm³, matrix size = 256 × 256). In the scanner, participants viewed the stimuli through the high-resolution stereo 3D glasses of the VisuaStim Digital MRI-compatible fMRI system.

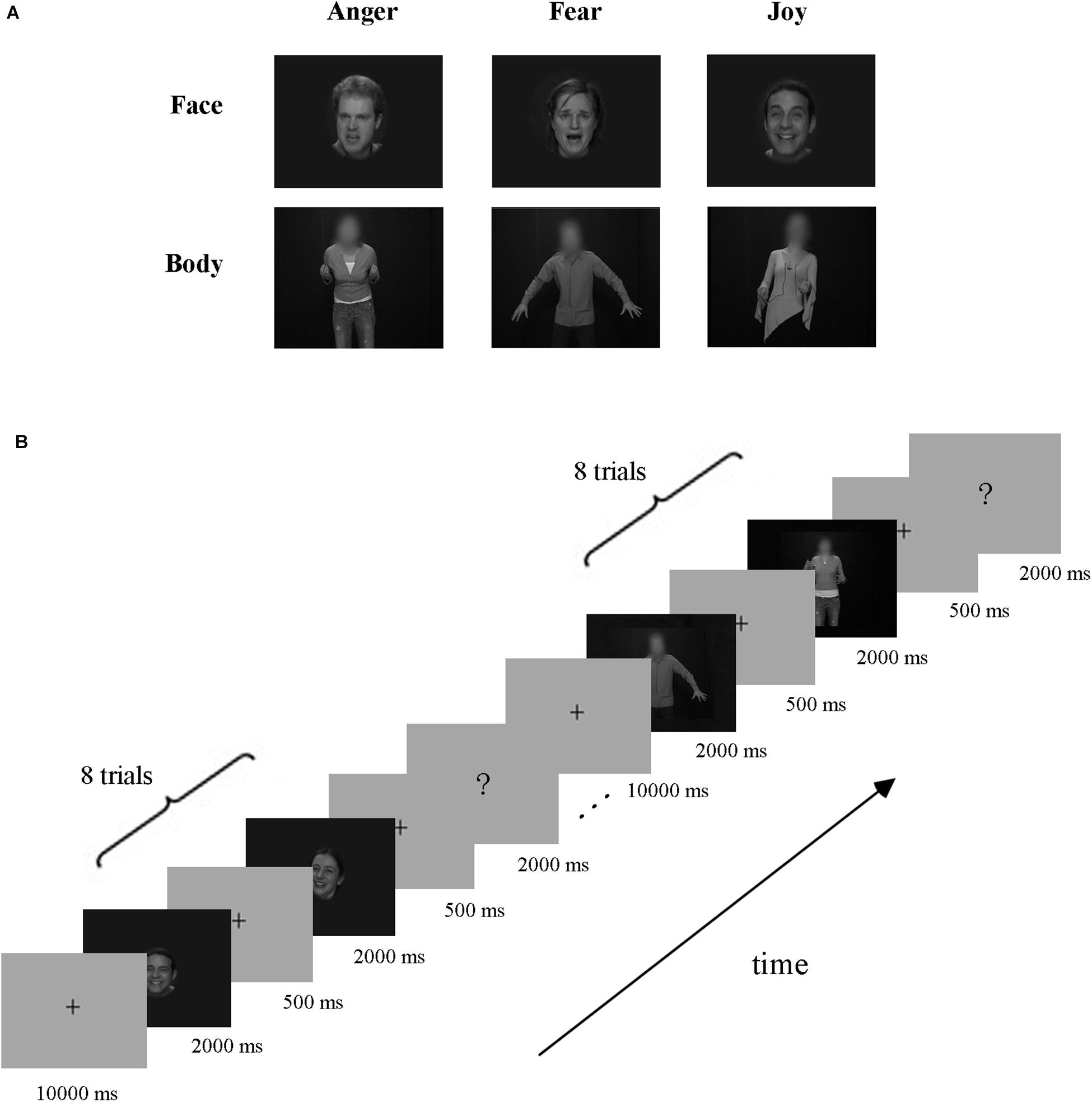

Facial and Bodily Expression Stimuli

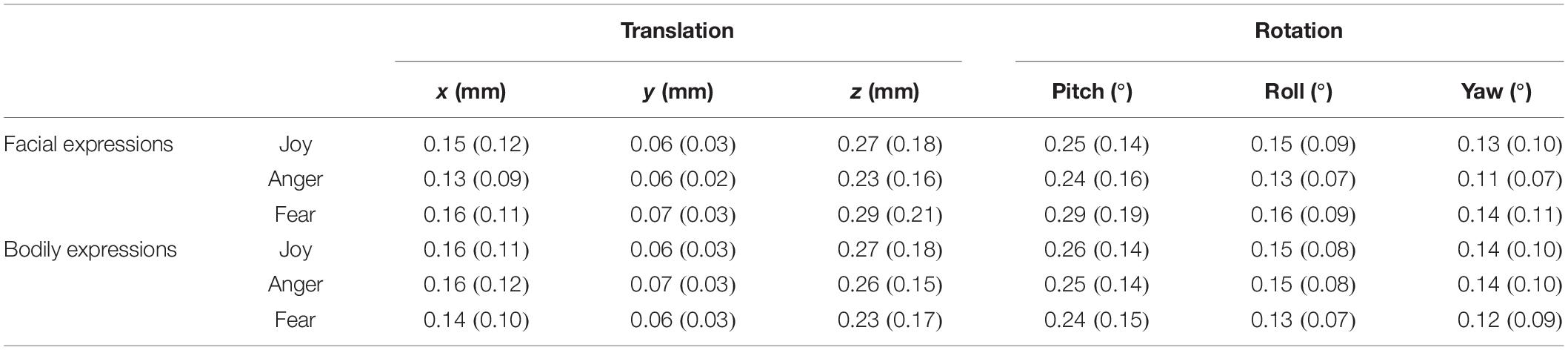

Video clips of eight different individuals (four males and four females) displaying anger, fear, and joy (Grezes et al., 2007; de Gelder et al., 2012, 2015) were chosen from the Geneva Multimodal Emotion Portrayals (GEMEP) corpus (Banziger et al., 2012). Facial and bodily expression stimuli were created by cropping the whole-person videos and obscuring the irrelevant parts with Gaussian blur masks in Adobe Premiere Pro CC (Kret et al., 2011), and the resulting face clips were enlarged appropriately. All videos were trimmed to 2000 ms (25 frames/s) and converted to grayscale using MATLAB (Furl et al., 2012, 2013, 2015; Kaiser et al., 2014; Soria Bauser and Suchan, 2015). Finally, the video clips were resized to 720 × 576 pixels and presented at the center of the screen. Before scanning, all stimuli were validated in a recognition test with a separate group of participants. Exemplar stimuli are shown in Figure 1A.

Figure 1. Exemplar stimuli and schematic representation of the experimental paradigm. (A) Exemplar facial and bodily expression stimuli. All emotion stimuli were taken from the GEMEP database. Videos of faces and bodies displaying three emotions (anger, fear, and joy) were used in the experiment. (B) Experimental design. A fixation cross was presented for 10 s before each block, followed by eight emotion stimuli. Participants then completed a button task to indicate the emotion category they had seen in the preceding block.

Experiment Paradigm

The experiment employed a block design with three runs. Three emotions (joy, anger, and fear) were expressed by three stimulus types: facial expressions, bodily expressions, and whole-person expressions. Blocks of whole-person expressions were included for another study (Yang et al., 2018) and were not analyzed here. The experimental paradigm is shown schematically in Figure 1B. Each run contained 18 blocks (3 emotions × 3 types × 2 repetitions), presented in a pseudo-random order so that the same emotion or stimulus type never appeared in consecutive blocks (Axelrod and Yovel, 2012; Furl et al., 2013, 2015). Each run began with a 10 s fixation cross, followed by a stimulus block of eight trials and then a 2 s button task; successive stimulus blocks were separated by a 10 s fixation interval. Each trial consisted of a 2000 ms stimulus video and a 500 ms interstimulus interval (ISI). During the button task, participants pressed a button to indicate the emotion category they had seen in the preceding block. Stimuli were presented using E-Prime 2.0 Professional (Psychology Software Tools, Pittsburgh, PA, United States), and behavioral responses were collected with a response pad in the scanner.
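The ordering constraint above (no two consecutive blocks sharing an emotion or a stimulus type) can be satisfied in several ways, and the paper does not state how its order was generated. The sketch below assumes a randomized greedy construction that restarts whenever it reaches a dead end; the function name `pseudo_random_order` and its parameters are hypothetical.

```python
import random


def pseudo_random_order(emotions, types, reps=2, seed=None, max_restarts=100_000):
    """Randomized greedy block order in which consecutive blocks never share
    an emotion or a stimulus type; restarts whenever the greedy walk dead-ends."""
    rng = random.Random(seed)
    pool = [(e, t) for e in emotions for t in types] * reps
    for _ in range(max_restarts):
        remaining, order = pool.copy(), []
        while remaining:
            prev = order[-1] if order else None
            allowed = [b for b in remaining
                       if prev is None or (b[0] != prev[0] and b[1] != prev[1])]
            if not allowed:
                break  # dead end: start a fresh attempt
            pick = rng.choice(allowed)
            order.append(pick)
            remaining.remove(pick)
        else:
            return order  # the while loop finished without hitting a dead end
    raise RuntimeError("no valid order found")


# 3 emotions x 3 stimulus types x 2 repetitions = 18 blocks per run (hypothetical labels).
order = pseudo_random_order(("joy", "anger", "fear"),
                            ("face", "body", "whole person"), seed=1)
```

Restarting is cheap here because an attempt is only 18 picks; a backtracking search would also work but is longer to write.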

Network Node Definitions

Regions of interest were defined according to the Brainnetome Atlas (Fan et al., 2016). The Brainnetome Atlas was generated with a connectivity-based parcellation framework and provides fine-grained information on both anatomical and functional connections. We used this atlas to define the network nodes for the FC analysis because it provides a stable starting point for exploring the complex relationships between structure, function, and connectivity. The atlas comprises 246 regions covering the entire brain: 210 cortical and 36 subcortical subregions. The label and MNI coordinates of each node are available at http://atlas.brainnetome.org/.

Data Preprocessing

The fMRI data were first preprocessed using SPM8 software¹. For each run, the first five volumes were discarded to allow for T1 equilibration effects (Wang et al., 2016; Liang et al., 2018; Zhang et al., 2018). The remaining functional images were corrected for slice acquisition timing and head motion. Each participant’s structural image was co-registered to the functional images and then segmented into gray matter, white matter (WM), and cerebrospinal fluid (CSF). The parameters generated by unified segmentation were then used to normalize the functional images into standard Montreal Neurological Institute (MNI) space, with voxels resampled to 3 × 3 × 3 mm³. Finally, the functional data were spatially smoothed with a 4 mm full-width at half-maximum (FWHM) isotropic Gaussian kernel.

Estimation of the FC Patterns for Facial and Bodily Expressions

Task-related whole-brain FC patterns were estimated in MATLAB using the CONN toolbox² (Whitfield-Gabrieli and Nieto-Castanon, 2012). CONN provides a common framework for a large suite of connectivity analyses of both resting-state and task fMRI data. For each subject, the normalized structural volume and the preprocessed functional images were submitted to CONN, and 246 network nodes were defined according to the Brainnetome Atlas. In FC analysis, noise must be addressed appropriately to avoid confounding effects. CONN employs a component-based noise correction (CompCor) strategy (Behzadi et al., 2007), which is particularly useful for reducing non-neural confounders in FC analysis and improves not only the validity but also the sensitivity and specificity of the analysis. Before FC calculation, standard denoising with the default settings of the CONN toolbox was applied to the fMRI time series to further remove unwanted motion (Power et al., 2012), physiological, and other artifactual effects from the BOLD signals. The confound regressors comprised the six realignment parameters of head motion, the principal components of the WM and CSF signals, and a first-order linear trend. The modeled task effects (a box-car task design function convolved with the canonical hemodynamic response function) were also included as covariates, so that temporal correlations reflected FC rather than task-related co-activation (Cole et al., 2019). All of these confounds were regressed out of the BOLD time series, and the residual time series were temporally filtered with a 0.01–0.1 Hz band-pass filter (Wang et al., 2016; Liang et al., 2018). FC was computed on these residual BOLD time series.
After these preprocessing steps, the BOLD time series were divided into the scans associated with each block. All scans with nonzero effects in the resulting time series were concatenated for each condition (each emotion category in each stimulus type) across all runs. The mean time series of each ROI was obtained by averaging the time series of all voxels within it, and an ROI-to-ROI analysis was conducted to calculate the pairwise correlations between the mean ROI time series. The correlation coefficients were then Fisher z-transformed, producing one connectivity map per emotion and stimulus type for each participant; these maps served as features in the subsequent multivariate connectivity pattern classification analysis (fcMVPA).
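The denoising and ROI-to-ROI steps above can be approximated as follows. This is a simplified sketch, not CONN's implementation: it assumes ROI-mean time series are already extracted, uses ordinary least squares for confound regression, an ideal FFT band-pass filter for the 0.01–0.1 Hz band, and plain concatenation of one condition's scans before computing Pearson correlations and their Fisher z transform (`arctanh`). All function names are hypothetical, and the data are random stand-ins.

```python
import numpy as np


def regress_out(ts, confounds):
    """Residuals of ROI time series after OLS regression on confounds (+ intercept).

    ts: (n_scans, n_rois) ROI-mean BOLD signals; confounds: (n_scans, n_confounds).
    """
    X = np.column_stack([np.ones(ts.shape[0]), confounds])
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X @ beta


def bandpass_fft(ts, tr=2.0, low=0.01, high=0.1):
    """Ideal band-pass filter: zero every FFT bin outside [low, high] Hz."""
    freqs = np.fft.rfftfreq(ts.shape[0], d=tr)
    spec = np.fft.rfft(ts, axis=0)
    spec[(freqs < low) | (freqs > high)] = 0
    return np.fft.irfft(spec, n=ts.shape[0], axis=0)


def condition_fc(ts, condition_mask):
    """Fisher z-transformed ROI-to-ROI correlation over one condition's scans."""
    r = np.corrcoef(ts[condition_mask], rowvar=False)
    np.fill_diagonal(r, 0.0)  # r = 1 on the diagonal would map to +inf
    return np.arctanh(r)


# Hypothetical usage with random stand-in data (TR = 2 s, 246 Brainnetome ROIs).
rng = np.random.default_rng(0)
n_scans, n_rois = 288, 246
ts = rng.standard_normal((n_scans, n_rois))
confounds = rng.standard_normal((n_scans, 17))  # e.g. motion, WM/CSF components, trend
clean = bandpass_fft(regress_out(ts, confounds))
in_condition = (np.arange(n_scans) // 16) % 2 == 1  # alternate 16-scan "blocks"
fc = condition_fc(clean, in_condition)
```

Regressing first and filtering second mirrors the order described in the text; the ideal filter is chosen only for brevity.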

fcMVPA Classification Implementation

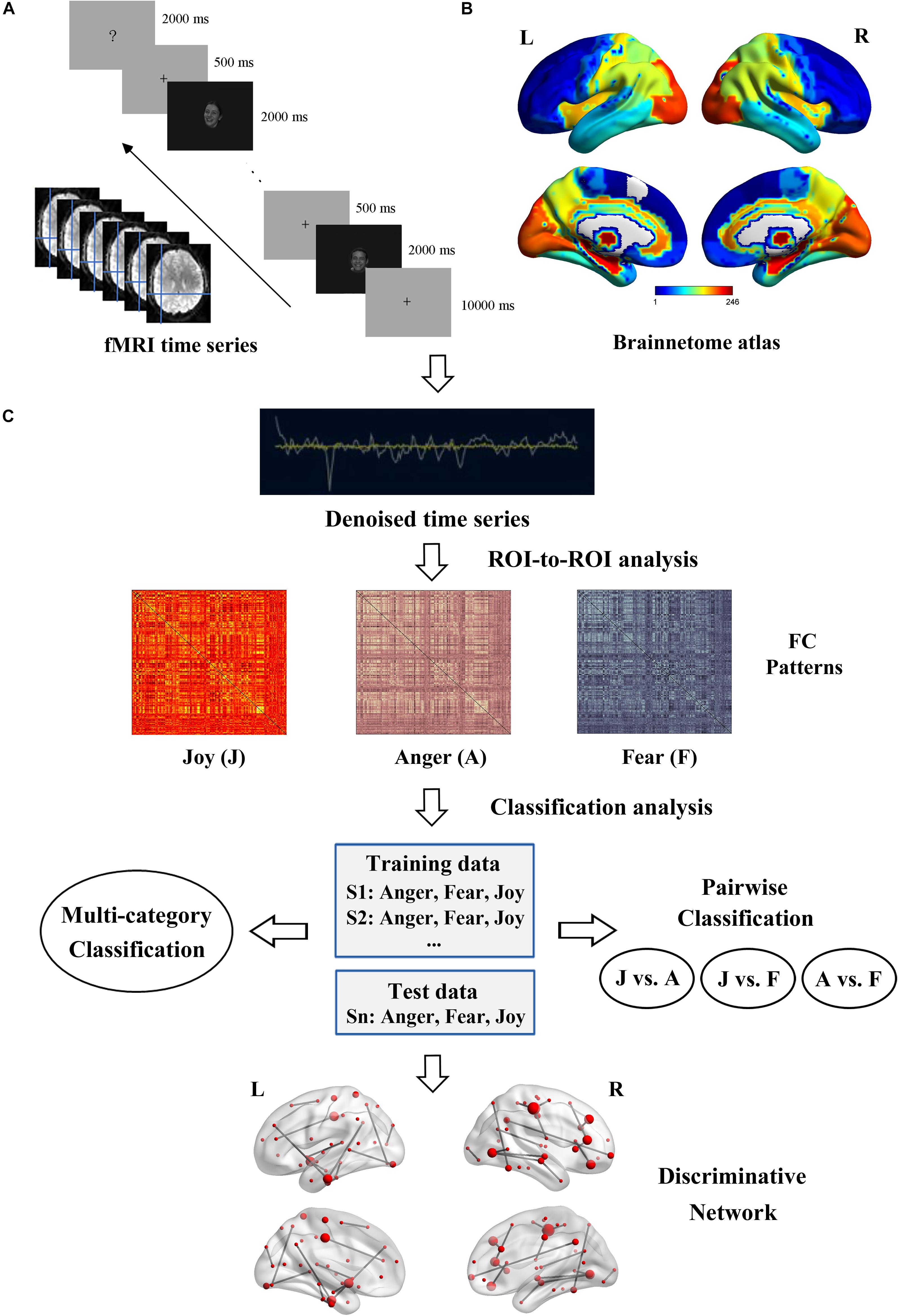

Multivariate connectivity pattern classification was conducted on the estimated FC patterns to explore their roles in decoding facial and bodily expressions. Figure 2 illustrates the experimental and analysis procedures: Figures 2A,B show the fMRI data acquisition and the network node definition, respectively, and Figure 2C shows the overall framework of the fcMVPA. Because the FC matrices are symmetric, we extracted the lower-triangle values to generate the initial FC features, yielding 30,135 [(246 × 245)/2] features in total. Because the interpretation of negative FCs remains controversial (Fox et al., 2009; Weissenbacher et al., 2009; Wang et al., 2016; Parente et al., 2018), we used one-sample t tests to focus on positive FCs and explored their roles in decoding facial and bodily expressions (Wang et al., 2016; Zhang et al., 2018). That is, on the training data, we conducted a one-sample t test across participants for each of the 30,135 connections in each emotion category and retained the FCs with values significantly greater than zero [p values corrected for multiple comparisons using a false discovery rate (FDR) of q = 0.01]. Then, for each stimulus type (facial and bodily expressions), we pooled the positive FCs of the three emotions, so that the classification features were the FCs significantly positive for at least one emotion category (Wang et al., 2016; Liang et al., 2018; Zhang et al., 2018). A linear support vector machine implemented in LIBSVM³ was used as the classifier, and classification performance was evaluated with a leave-one-subject-out cross-validation (LOOCV) scheme (Liu et al., 2015; Wang et al., 2016; Zhang et al., 2018). Within each LOOCV fold, feature selection was performed on the training set only, to avoid peeking (information leakage from the test subject).
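The feature construction above can be sketched as follows, assuming the Fisher z maps of the training subjects are already vectorized. It uses SciPy's `ttest_1samp` with a one-sided alternative (SciPy 1.6 or later) and a hand-written Benjamini–Hochberg procedure as the FDR correction; the paper does not name its FDR variant, so Benjamini–Hochberg is an assumption, as are the helper names. Per the text, this selection would be run inside each LOOCV fold on the training subjects only.

```python
import numpy as np
from scipy import stats


def lower_triangle(fc):
    """Strictly-lower-triangle entries of a symmetric FC matrix as a 1-D vector."""
    rows, cols = np.tril_indices(fc.shape[0], k=-1)
    return fc[rows, cols]


def bh_fdr(pvals, q=0.01):
    """Benjamini-Hochberg step-up procedure: True where p is significant at FDR q."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.flatnonzero(passed).max()  # largest rank that meets its threshold
        mask[order[: k + 1]] = True       # reject every hypothesis up to that rank
    return mask


def positive_fc_features(train_by_emotion, q=0.01):
    """Union over emotions of FCs significantly > 0 across training subjects.

    train_by_emotion: dict emotion -> (n_train_subjects, n_features) Fisher z values.
    """
    keep = None
    for feats in train_by_emotion.values():
        _, p = stats.ttest_1samp(feats, 0.0, axis=0, alternative="greater")
        sig = bh_fdr(p, q)
        keep = sig if keep is None else keep | sig
    return keep


# Number of lower-triangle features for the 246-node parcellation.
n_features = lower_triangle(np.zeros((246, 246))).size  # 30135 = 246 * 245 / 2

# Hypothetical toy training fold: 19 subjects, 5 connections, 3 emotions.
rng = np.random.default_rng(1)
train = {e: rng.normal(0.0, 0.1, size=(19, 5)) for e in ("anger", "fear", "joy")}
train["fear"][:, 0] += 0.5  # connection 0 is strongly positive for fear only
keep = positive_fc_features(train, q=0.01)
```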
We conducted both multi-category and pairwise emotion classifications for facial and bodily expressions. Multi-category classification was implemented with a one-against-one voting strategy. In each LOOCV fold, the classifier was trained on all but one subject and tested on the remaining subject. This procedure was repeated 20 times so that every subject served as test data once, and decoding performance was computed as the average accuracy across all iterations.
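The classification scheme can be illustrated with the sketch below. To stay dependency-light, it swaps LIBSVM's linear SVM for a ridge least-squares linear classifier (a different linear model), while keeping the one-against-one voting and the leave-one-subject-out loop with fold-internal feature selection. The helper names, the regularization `lam`, and the synthetic data are hypothetical.

```python
import numpy as np
from itertools import combinations


def fit_linear(X, y, lam=1.0):
    """Ridge least-squares linear classifier (stand-in for a linear SVM).
    y must be in {-1, +1}; solved in dual form since n_features >> n_samples."""
    mu = X.mean(axis=0)
    Xc = X - mu
    alpha = np.linalg.solve(Xc @ Xc.T + lam * np.eye(len(X)), y - y.mean())
    w = Xc.T @ alpha
    return w, y.mean() - mu @ w


def ovo_fit(X, y, lam=1.0):
    """One-against-one: one binary classifier per pair of classes."""
    models = {}
    for a, b in combinations(np.unique(y), 2):
        idx = (y == a) | (y == b)
        models[(a, b)] = fit_linear(X[idx], np.where(y[idx] == a, 1.0, -1.0), lam)
    return models


def ovo_predict(models, X):
    """Each pairwise classifier casts one vote; the class with most votes wins."""
    classes = sorted({c for pair in models for c in pair})
    votes = np.zeros((len(X), len(classes)))
    for (a, b), (w, bias) in models.items():
        pos = X @ w + bias > 0
        votes[pos, classes.index(a)] += 1
        votes[~pos, classes.index(b)] += 1
    return np.array(classes)[votes.argmax(axis=1)]


def loso_accuracy(X, y, subjects, select=None, lam=1.0):
    """Leave-one-subject-out accuracy averaged over folds. `select`, if given,
    maps (X_train, y_train) to a boolean feature mask using training data only."""
    accs = []
    for s in np.unique(subjects):
        test = subjects == s
        train = ~test
        mask = np.ones(X.shape[1], bool) if select is None else select(X[train], y[train])
        models = ovo_fit(X[train][:, mask], y[train], lam)
        pred = ovo_predict(models, X[test][:, mask])
        accs.append(np.mean(pred == y[test]))
    return float(np.mean(accs))


# Hypothetical data: 20 subjects x 3 emotions (one FC vector each), 50 features.
rng = np.random.default_rng(0)
n_sub, n_feat = 20, 50
y = np.tile([0, 1, 2], n_sub)
subjects = np.repeat(np.arange(n_sub), 3)
centers = 2.0 * rng.standard_normal((3, n_feat))
X = centers[y] + rng.standard_normal((3 * n_sub, n_feat))
acc = loso_accuracy(X, y, subjects)
```

Passing a `select` callback keeps the feature selection inside each fold, which is the property that prevents peeking.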

Figure 2. Flowchart of the experiment and data analysis procedure. (A) Experiment and fMRI data acquisition. (B) Brainnetome atlas for network node definition. (C) Framework overview of the fcMVPA. FC patterns were estimated using the CONN toolbox. Before FC computation, the BOLD time series were denoised to further remove unwanted motion, physiological, and other artifactual effects. The whole-brain FC pattern for each emotion was then constructed using ROI-to-ROI analysis. Emotion classification was performed with an SVM classifier in a leave-one-subject-out cross-validation scheme. Multi-category and pairwise emotion classifications were conducted for facial and bodily expressions. Emotion-preferring networks were constructed from the discriminative FCs.

To evaluate decoding performance, the statistical significance of the classification accuracy was assessed with a permutation test (Liu et al., 2015; Cui et al., 2016; Wang et al., 2016; Fernandes et al., 2017). A permutation test is a non-parametric approach (Golland and Fischl, 2003) for testing the null hypothesis that the observed result was obtained by chance (Zhu et al., 2008). Specifically, the same cross-validation procedure was repeated for 1000 random shuffles of the class labels, yielding a null distribution of accuracies. If fewer than 5% of the permutation accuracies exceeded the actual accuracy (obtained with the correct labels), the result was considered significant (p < 0.05).
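A minimal sketch of the permutation test, assuming `score_fn` reruns the whole cross-validation (including feature selection) for a given label vector. Shuffling labels within each subject is an assumption of this sketch that keeps one map per emotion per subject; the paper only states that class labels were shuffled. The usage example replaces the full pipeline with a hypothetical fixed prediction vector to keep it fast.

```python
import numpy as np


def permutation_test(score_fn, y, subjects, n_perm=1000, seed=0):
    """Permutation test for a cross-validated accuracy.

    score_fn(labels) -> accuracy must rerun the entire cross-validation with the
    given labels. Labels are shuffled within each subject (an assumption)."""
    rng = np.random.default_rng(seed)
    observed = score_fn(y)
    null = np.empty(n_perm)
    for i in range(n_perm):
        y_perm = y.copy()
        for s in np.unique(subjects):
            idx = np.flatnonzero(subjects == s)
            y_perm[idx] = rng.permutation(y[idx])
        null[i] = score_fn(y_perm)
    p_value = np.mean(null >= observed)  # fraction of shuffles doing at least as well
    return observed, p_value, null


# Hypothetical stand-in for the pipeline: a "classifier" whose predictions are fixed.
y = np.tile([0, 1, 2], 20)               # 20 subjects x 3 emotions
subjects = np.repeat(np.arange(20), 3)
fixed_pred = y.copy()
acc, p, null = permutation_test(lambda labels: np.mean(fixed_pred == labels), y, subjects)
```

Counting ties (`>=`) makes the test slightly more conservative than counting only strict exceedances.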

Constructing Emotion-Preferring Networks for Facial and Bodily Expressions From Discriminative FCs

In this section, we identified the most contributive FCs in the emotion-discriminative networks for facial and bodily expressions. The classifier weight of each FC was used to reflect its contribution to classification (Liu et al., 2015; Cui et al., 2016). Because feature selection was based on a slightly different subset of subjects in each LOOCV fold, only the consensus FCs, i.e., those selected in all folds, were defined as discriminative features. The discriminative weight of each feature was defined as the average of its absolute weights across all LOOCV folds, and FCs with higher discriminative weights were considered more contributive to emotion classification (Ecker et al., 2010; Dai et al., 2012; Cui et al., 2016). We then defined an emotion-preferring network for each emotion category, consisting of the FCs that showed reliable discriminative power when that emotion was classified against each of the other two emotions.
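The consensus and weighting rules above translate directly into array operations. The sketch assumes per-fold selection masks and per-fold weights already mapped back to the full feature space (zero for unselected features), and it interprets an emotion-preferring network as the intersection of the top FCs of the two pairwise classifications involving that emotion. Function names are hypothetical.

```python
import numpy as np


def consensus_weights(fold_masks, fold_weights):
    """fold_masks: (n_folds, n_features) features selected in each LOOCV fold.
    fold_weights: (n_folds, n_features) weights in the full feature space.
    Returns the consensus mask and mean |w| across folds (0 outside consensus)."""
    masks = np.asarray(fold_masks, dtype=bool)
    W = np.asarray(fold_weights, dtype=float)
    consensus = masks.all(axis=0)
    disc = np.where(consensus, np.abs(W).mean(axis=0), 0.0)
    return consensus, disc


def top_k(disc, consensus, k=50):
    """Indices of the k consensus features with the largest discriminative weight."""
    idx = np.flatnonzero(consensus)
    return idx[np.argsort(disc[idx])[::-1][:k]]


def preferring_network(emotion, top_sets):
    """FCs in the top set of every pairwise classification involving `emotion`.
    top_sets: dict frozenset({e1, e2}) -> set of feature indices."""
    return set.intersection(*(s for pair, s in top_sets.items() if emotion in pair))


def feature_to_roi_pair(feature_idx, n_rois=246):
    """Map a lower-triangle feature index back to its (row, col) ROI pair."""
    rows, cols = np.tril_indices(n_rois, k=-1)
    return int(rows[feature_idx]), int(cols[feature_idx])


# Toy example: 3 folds, 4 features.
masks = [[1, 1, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
weights = [[0.5, 1.0, 0.0, -2.0], [-0.3, 0.0, 0.0, -1.0], [0.1, 2.0, 3.0, -3.0]]
consensus, disc = consensus_weights(masks, weights)
top = top_k(disc, consensus, k=2)  # array([3, 0])
```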

Results

Behavioral Results

We collected behavioral data on the recognition of facial and bodily expressions during fMRI scanning. The average recognition accuracy was 98.06% (SD = 3.73%) for facial expressions (Joy: mean = 100%, SD = 0; Anger: mean = 97.5%, SD = 6.11%; Fear: mean = 96.67%, SD = 6.84%) and 97.22% (SD = 4.6%) for bodily expressions (Joy: mean = 97.5%, SD = 6.11%; Anger: mean = 97.5%, SD = 6.11%; Fear: mean = 96.67%, SD = 6.84%). These results confirm the validity of our stimuli: both facial and bodily expressions were recognized with high accuracy. A repeated-measures analysis of variance (ANOVA) on accuracy with the factors Condition (Facial and Bodily) × Emotion (Joy, Anger, and Fear) revealed no significant main effect of Condition [F(1,19) = 0.588, p = 0.453] or Emotion [F(2,38) = 1.498, p = 0.237] and no significant Condition × Emotion interaction [F(2,38) = 1, p = 0.357]. The recognition accuracies and corresponding reaction times are detailed in Table 1.

Table 1. Behavioral accuracies and reaction times for facial and bodily expressions [mean% and standard deviation (SD)].

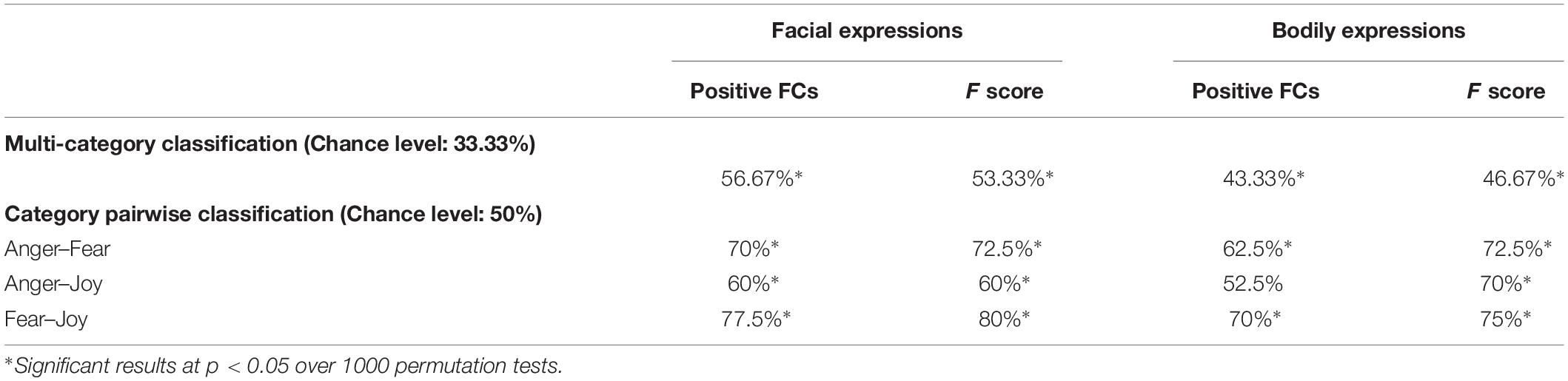

Emotion Classification Performance Based on fcMVPA

In this section, we conducted fcMVPA to classify facial and bodily expressions based on the constructed FC patterns. Network nodes were defined by the Brainnetome atlas, and the fMRI time series were denoised using CONN to further remove unwanted motion (Van Dijk et al., 2012; Zeng et al., 2014), physiological, and other artifactual effects from the BOLD signals. Table 2 summarizes the head motion parameters for the different emotion categories. ROI-to-ROI analysis was performed to generate the connectivity map for each emotion in each stimulus type (facial and bodily expressions). In the main fcMVPA classification, we used positive FCs (one-sample t test, FDR q = 0.01) as features, because the interpretation of negative FCs remains controversial (Fox et al., 2009; Weissenbacher et al., 2009; Wang et al., 2016; Parente et al., 2018). Table 3 shows the multi-category and pairwise classification results for facial and bodily expressions based on the positive FCs. We also performed an additional analysis using F-score feature selection (Liu et al., 2015); these results are listed in Table 3 as the F score results. Using the positive FCs, both facial and bodily expressions were successfully decoded in the multi-category emotion classification (chance level: 33.33%, p < 0.05, 1000 permutations). In the pairwise emotion classification (chance level: 50%), all pairs of facial expressions (anger vs. fear, anger vs. joy, fear vs. joy) and two pairs of bodily expressions (anger vs. fear, fear vs. joy) were decoded significantly (p < 0.05, 1000 permutations). In addition, we verified our analysis with two other parcellation schemes that have frequently been used in previous fcMVPA studies (Wang et al., 2016; Liang et al., 2018; Zhang et al., 2018): the Harvard–Oxford atlas and the 200-region parcellation of Craddock et al. (2012).
The resulting classification accuracies were generally similar to those obtained with the Brainnetome atlas and significantly higher than chance, with decoding accuracies again higher for facial than for bodily expressions, indicating the robustness of our results (Supplementary Table S1).

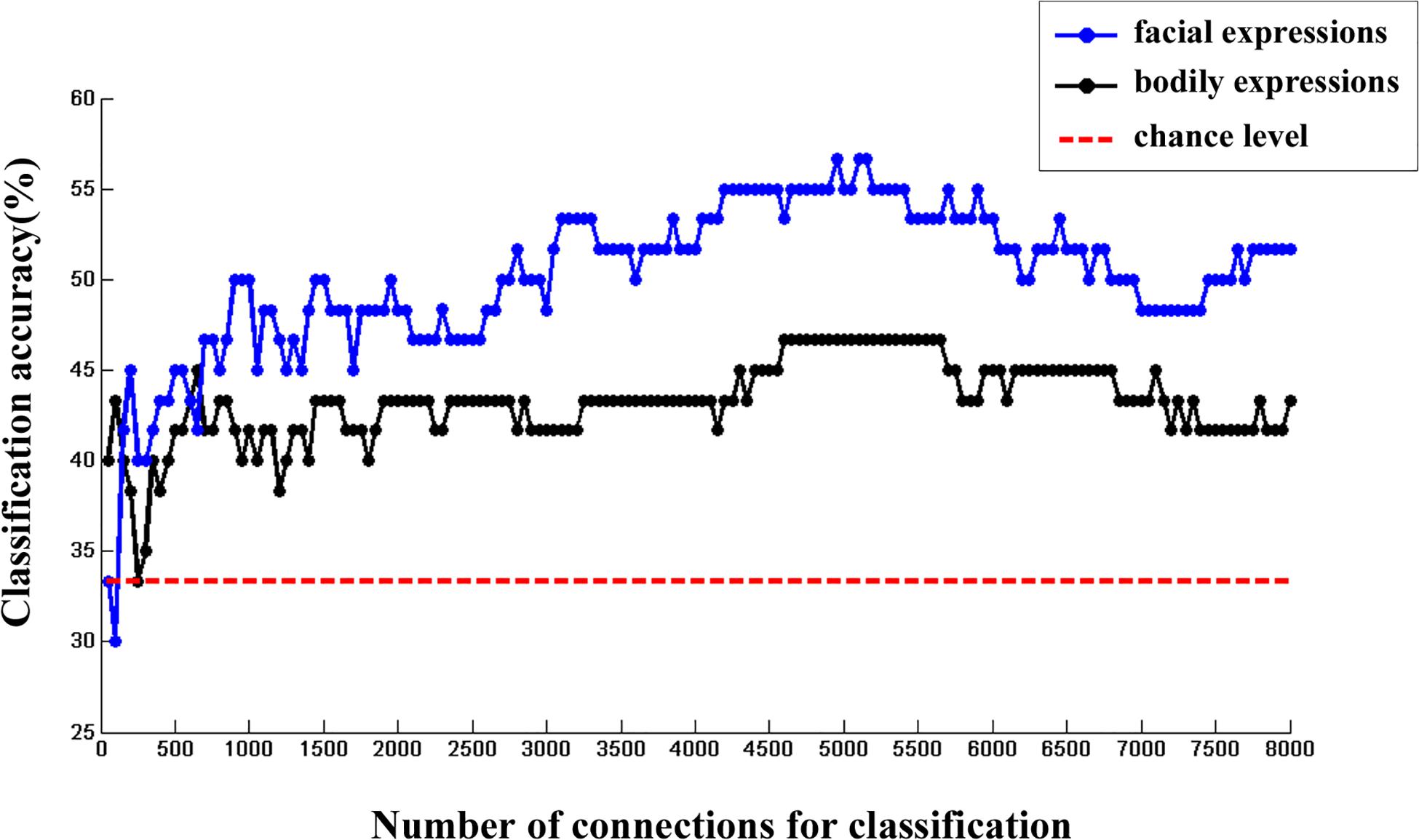

We also calculated the multi-category classification accuracy as a function of the number of FC features used in the classification. For this analysis, FC features were ranked by the p values of their one-sample t tests in ascending order. The results are shown in Figure 3: both facial and bodily expressions were consistently decoded from the large-scale FC patterns, and decoding accuracies were higher for facial than for bodily expressions.
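The feature-count analysis can be sketched as a loop over nested top-n subsets of features ranked by ascending p value. `evaluate` is a hypothetical callback (for instance, a leave-one-subject-out run restricted to the given feature indices); in a leakage-free version, the p values would be recomputed on the training subjects of each fold.

```python
import numpy as np


def accuracy_curve(pvals, n_grid, evaluate):
    """Rank features by ascending p value and score the top-n subset for each n.

    evaluate(feature_indices) -> accuracy is a hypothetical callback."""
    order = np.argsort(pvals, kind="stable")
    return [(n, evaluate(order[:n])) for n in n_grid]


# Toy call: the callback simply reports which features it was given.
curve = accuracy_curve([0.5, 0.01, 0.2, 0.001], [1, 2], lambda idx: sorted(idx.tolist()))
```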

Figure 3. Multi-category classification accuracies for facial and bodily expressions as a function of the number of FC features used.

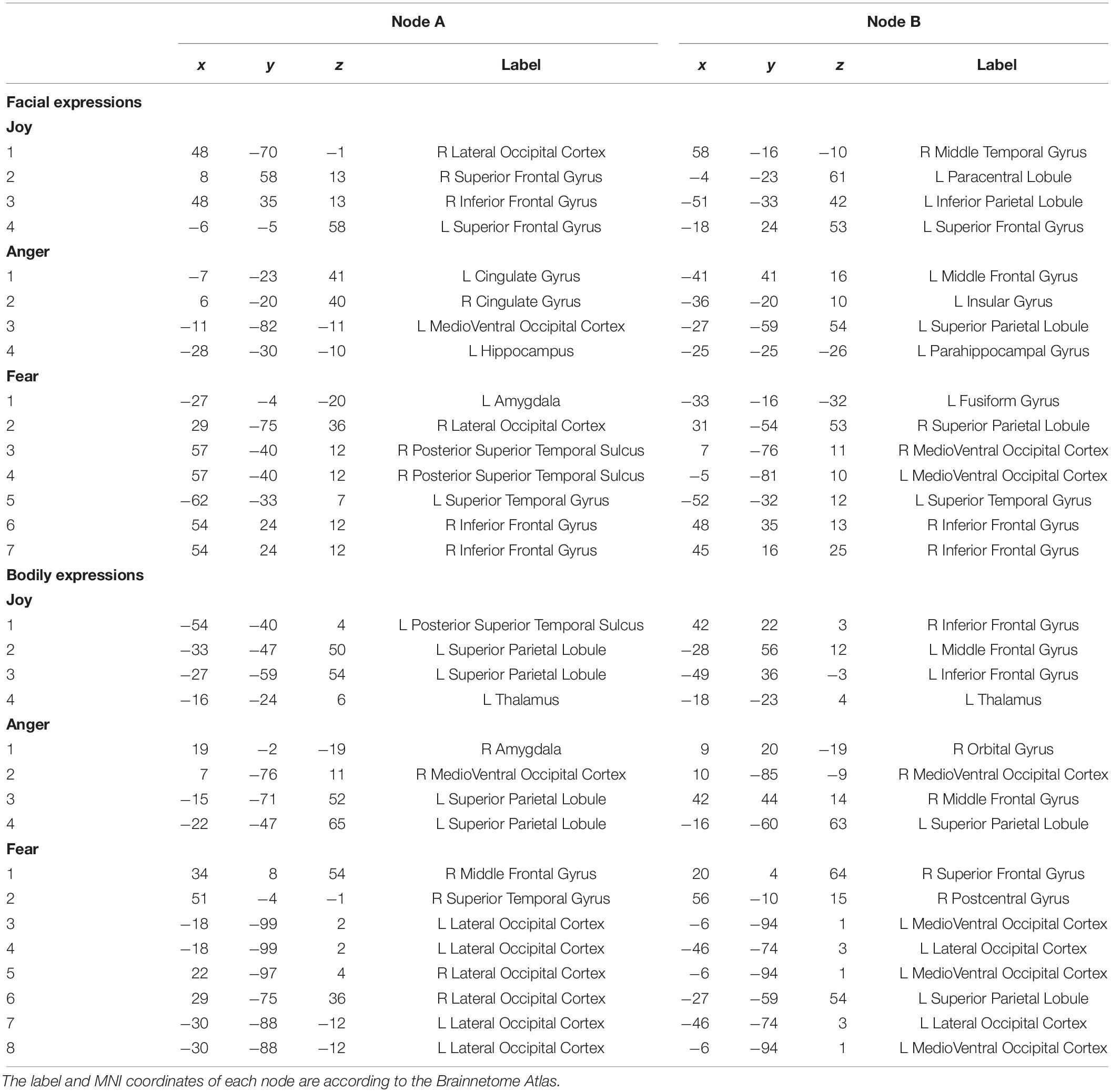

Discriminative Networks for Facial and Bodily Expressions

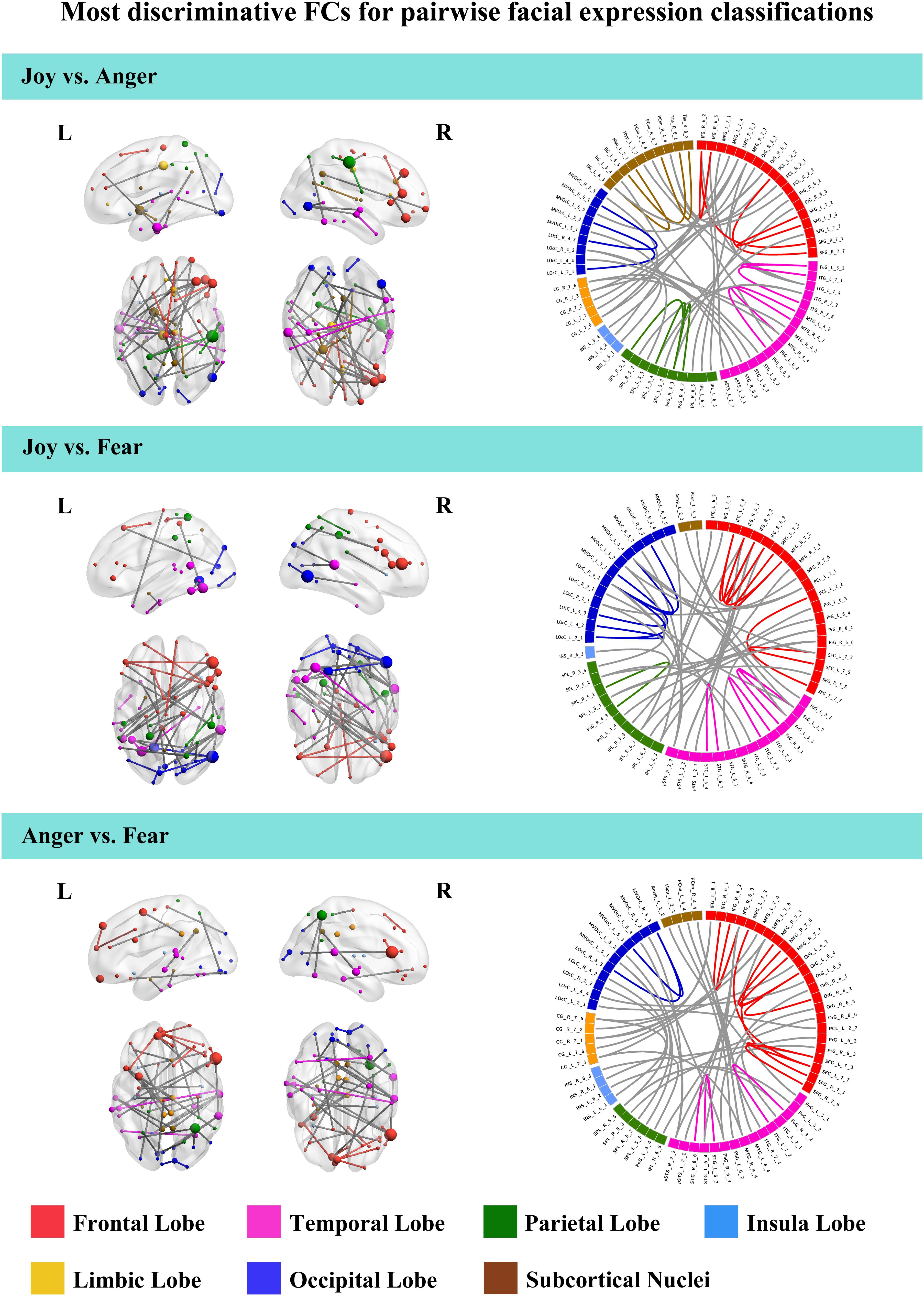

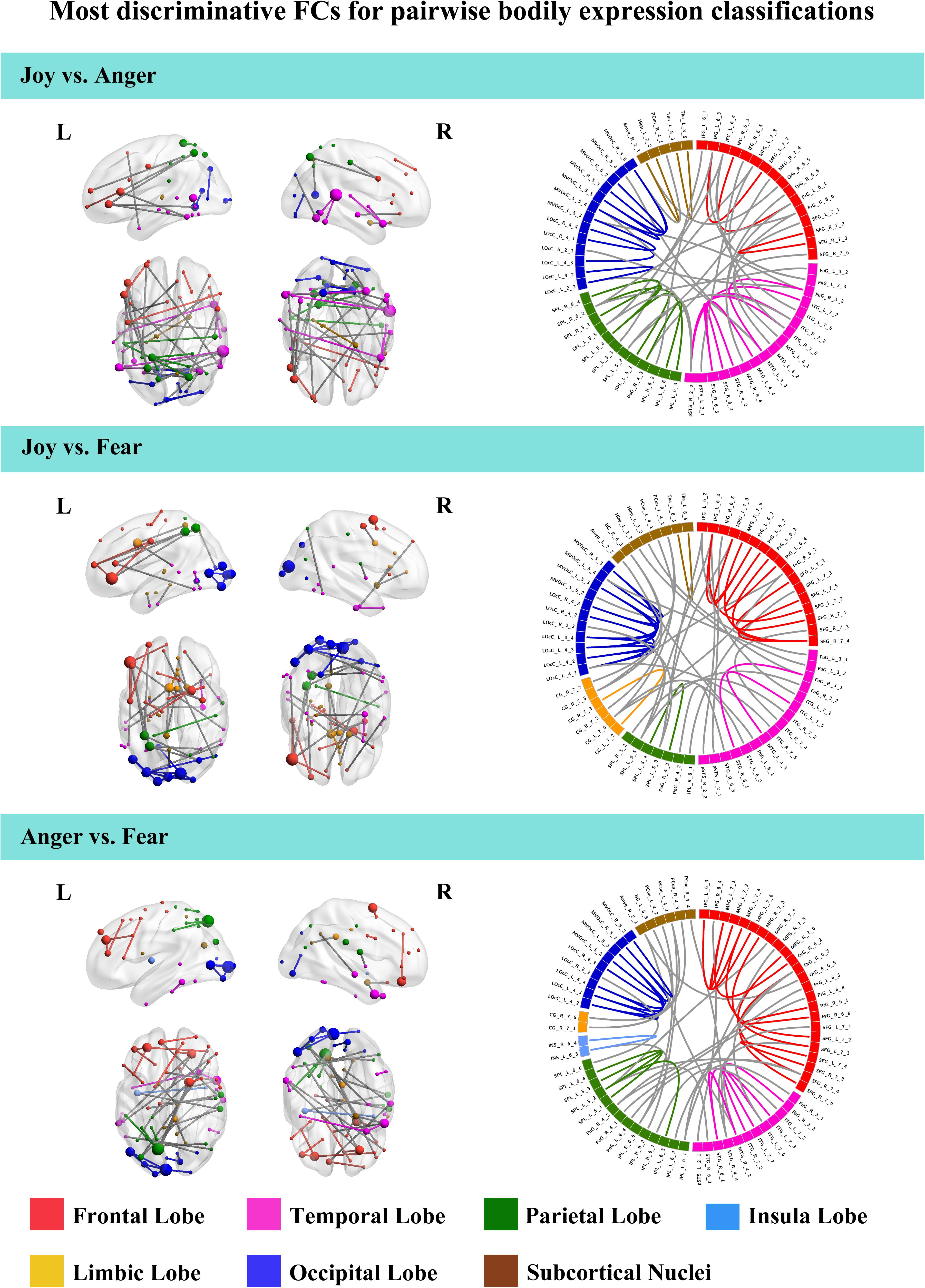

To further characterize the emotion-discriminative networks for facial and bodily expressions, we identified the most contributive FCs based on the classifier weights. Consensus FCs were first selected across all LOOCV folds, and the discriminative weight of each feature was defined as the average of its absolute weights across all folds (Ecker et al., 2010; Dai et al., 2012; Cui et al., 2016). FCs with higher discriminative weights were considered more contributive to emotion classification. Figures 4, 5 show the 50 most contributive FCs, ranked by discriminative weight, for the pairwise emotion classifications (joy vs. anger, joy vs. fear, anger vs. fear); the FCs are mapped onto cortical surfaces using BrainNet Viewer (Xia et al., 2013), and the connectograms were created using Circos⁴. Different colors indicate different modules (the frontal, temporal, parietal, insula, limbic, and occipital lobes as well as the subcortical nuclei) according to the Brainnetome atlas. Intra-module connections are drawn in the color of their module, while inter-module connections are drawn in gray. These emotion-discriminative networks involved widespread brain regions in both hemispheres, ranging from primary visual regions to higher-level cognitive regions. Furthermore, we identified an emotion-preferring network for each emotion category from these contributive FCs, consisting of the FCs that showed reliable discriminative power when that emotion was classified against each of the other two emotions (the discriminative FCs for each emotion are summarized in Table 4). For both facial and bodily expressions, fear engaged more discriminative FCs than anger and joy. Moreover, we compared the emotion-preferring networks between facial and bodily expressions.
We found that, for a given emotion, the discriminative FCs for facial and bodily expressions were distinct, suggesting that emotions perceived from faces and from bodies rely on different networks.

Figure 4. Most discriminative FCs for pairwise facial expression classifications. Results are mapped onto the cortical surfaces using BrainNet Viewer. The coordinates of each node are according to the Brainnetome atlas, and the brain regions are scaled by the number of their connections. The connectogram is created using Circos. Different colors are used to indicate different modules (the frontal, temporal, parietal, insula, limbic and occipital lobes as well as the subcortical nuclei) according to the Brainnetome atlas. Lines of the intra-module connections are represented by the same color as the located module, while the inter-module connections are represented by gray lines.

Figure 5. Most discriminative FCs for pairwise bodily expression classifications. Results are mapped onto the cortical surfaces using BrainNet Viewer. The coordinates of each node are according to the Brainnetome atlas and the brain regions are scaled by the number of their connections. The connectogram is created using Circos. Different colors are used to indicate different modules (the frontal, temporal, parietal, insula, limbic, and occipital lobes as well as the subcortical nuclei) according to the Brainnetome atlas. Lines of the intra-module connections are represented by the same color as the located module, while the inter-module connections are represented by gray lines.

Discussion

In the present study, we explored network representation mechanisms for facial and bodily expressions based on the FC analysis. We employed a continuous multi-category emotion task paradigm wherein participants viewed emotions (joy, anger, and fear) from facial and bodily expressions. We constructed the FC patterns for each emotion in each stimulus type and conducted multivariate connectivity pattern classification analysis (fcMVPA). Results showed that the FC patterns made successful predictions of emotion categories for both facial and bodily expressions, and the decoding accuracies were higher for the facial than for the bodily expressions. Further discriminative FC analysis showed the involvement of a wide range of brain areas in the emotion processing, and the emotion-preferring networks for facial and bodily expressions were different.

Successful Decoding of Facial and Bodily Expressions Based on the Large-Scale FC Patterns

Using FC-based MVPA, we showed that emotions perceived from facial and bodily expressions can be successfully decoded from large-scale FC patterns.

Regarding the neural basis of emotion perception, most previous neuroimaging studies used activation-based univariate analysis to identify brain regions showing significant responses to facial or bodily expressions (Kanwisher and Yovel, 2006; de Gelder et al., 2010; Kret et al., 2011; Pitcher, 2014; Henriksson et al., 2015; Downing and Peelen, 2016; Tippett et al., 2018). Although some recent studies applied machine learning algorithms to fMRI analysis, they mainly focused on activation-based decoding of facial emotions in several predefined ROIs (Said et al., 2010; Harry et al., 2013; Wegrzyn et al., 2015; Liang et al., 2017). Because distinct cortical regions are expected to interact, FC analysis has recently attracted increasing interest. A growing body of evidence suggests that distinct cortical regions are intrinsically interconnected during high-level cognitive processing (Cole et al., 2013; Wang et al., 2016; Zhang et al., 2018). One of our recent studies used FC-based analysis and showed that facial expressions could be successfully decoded from large-scale FC patterns (Liang et al., 2018). To date, however, bodily expressions have received relatively little attention compared with facial expressions, and no fMRI study has adequately addressed the potential role of FC patterns in decoding bodily expressions. In the present study, using whole-brain FC analysis and fcMVPA classification, we found that, in addition to facial expressions, bodily expressions could also be successfully decoded from large-scale FC patterns.
These results add to a growing number of studies suggesting that a substantial amount of information is also represented in FC patterns. Such patterns have been used to distinguish patients with social anxiety disorder and major depression from healthy controls (Zeng et al., 2012; Liu et al., 2015) and to differentiate among object categories (Wang et al., 2016), tasks (Cole et al., 2013), mental states (Dosenbach et al., 2010; Pantazatos et al., 2012; Shirer et al., 2012), and sound categories (Zhang et al., 2018). Moreover, our results are not only in line with previous findings on facial expressions but also suggest that large-scale FC patterns may contribute to the processing of bodily expressions.

Taken together, our results highlight the potential role of FC patterns in the neural processing of emotions, suggesting that large-scale FC patterns may contain emotional information rich enough to accurately decode both facial and bodily expressions. Our study provides new evidence for distributed neural representations of emotions in large-scale FC patterns and further supports the view that interactions between distributed brain regions may contribute effectively to the decoding of human emotions.

Network Representations for Facial and Bodily Expressions

In this study, we identified the FCs that contributed most to emotion discrimination based on the classifier weights. Figures 4 and 5 show the top 50 most discriminative FCs for the pairwise emotion classifications (joy vs. anger, joy vs. fear, and anger vs. fear), mapped onto the cortical surface using BrainNet Viewer (Xia et al., 2013) and displayed as connectograms created using Circos (see text footnote 4). Different colors indicate different brain modules (the frontal, temporal, parietal, insular, limbic, and occipital lobes, as well as the subcortical nuclei) as defined by the Brainnetome atlas. Intra-module connections are drawn in the color of their module, whereas inter-module connections are drawn in gray. These emotion-discriminative networks were widely distributed across both hemispheres and comprised FCs among regions of the occipital, parietal, temporal, and frontal lobes, ranging from primary visual areas to higher-level cognitive areas. In particular, they included classical face- and body-selective areas, such as the fusiform gyrus (FG) and the posterior superior temporal sulcus (pSTS). Regions not classically considered sensitive to facial or bodily expressions in activation-based analyses, such as the postcentral gyrus and the middle frontal gyrus, were also included in the discriminative networks. To some extent, these results are compatible with recent fcMVPA studies on decoding object categories, sounds, and facial expressions, suggesting that areas that appear neutral in activation-based analyses may nonetheless contribute to high-level cognition (Wang et al., 2016; Liang et al., 2018; Zhang et al., 2018). Together, the discriminative network analysis indicates how large-scale FC patterns reconfigure during the processing of facial and bodily expressions and further corroborates a distributed neural representation of emotion recognition.
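The ranking and color-coding described above can be sketched as follows. The sketch assumes a linear classifier that assigns one weight per FC feature (edge) and ranks edges by absolute weight; it then labels each selected edge as intra-module (taking its module's color) or inter-module (gray). The weights, region labels, and module assignments in the example are made up for illustration, and `top_k_edges` and `edge_color` are hypothetical helpers, not functions of BrainNet Viewer or Circos.

```python
from collections import Counter


def top_k_edges(weights, k=50):
    """Edges with the k largest absolute classifier weights.

    weights: dict mapping an edge (roi_a, roi_b) to its linear-classifier weight.
    """
    return sorted(weights, key=lambda edge: abs(weights[edge]), reverse=True)[:k]


def edge_color(edge, module_of):
    """Intra-module edges take their module's color; inter-module edges are gray."""
    a, b = edge
    return module_of[a] if module_of[a] == module_of[b] else "gray"


# Illustrative values only (not results from this study).
weights = {("FG_L", "FG_R"): 0.9, ("FG_L", "MFG_L"): -1.2, ("pSTS_R", "PoG_L"): 0.1}
module_of = {"FG_L": "temporal", "FG_R": "temporal", "MFG_L": "frontal",
             "pSTS_R": "temporal", "PoG_L": "parietal"}

top = top_k_edges(weights, k=2)
print(top)  # [('FG_L', 'MFG_L'), ('FG_L', 'FG_R')]
print(Counter(edge_color(e, module_of) for e in top))
```

Ranking by absolute weight treats strongly negative and strongly positive weights alike, since both push the decision toward one of the two emotions.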

Furthermore, we constructed an emotion-preferring network for each emotion category, composed of the FCs that contributed significantly to the classifications between that emotion and the other two emotion categories (Table 4). For facial expressions, joy evoked FCs across the occipital, frontal, temporal, and parietal lobes; fear evoked more FCs than joy and anger, mainly across the occipital, temporal, frontal, and parietal lobes as well as the subcortical nuclei; and anger evoked FCs across all seven modules. These results are compatible with previous studies on facial expression perception, which demonstrated the involvement of regions such as the visual areas, the FG, the STS, the amygdala, the insula, the middle temporal gyrus, and the inferior frontal areas in processing, analyzing, and evaluating emotional facial stimuli (Trantmann et al., 2009; Kret et al., 2011; Harry et al., 2013; Furl et al., 2015; Henriksson et al., 2015; Wegrzyn et al., 2015; Liang et al., 2017). Moreover, the emotion-preferring networks may provide new evidence for the preference of specific regions for particular emotions. For instance, the amygdala was included in the discriminative network for fear, consistent with dynamic causal modeling studies showing that the amygdala can enhance the encoding of fearful facial expressions (Furl et al., 2013, 2015).
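One way to read this construction is as a set intersection: an edge belongs to, for example, the fear-preferring network if it is among the discriminative FCs of both the joy vs. fear and the anger vs. fear classifiers. The sketch below encodes that reading, which is an assumption made for illustration and not necessarily the exact criterion used in the study, and also lists the modules a network spans (as in "anger evoked FCs across all seven modules"). Edge sets and names are hypothetical.

```python
def preferring_network(discriminative, emotion):
    """Edges discriminative in every pairwise classifier that involves `emotion`.

    discriminative: dict mapping frozenset({emotion_1, emotion_2}) to the set of
    edges found discriminative for that pairwise classification.
    """
    pair_sets = [edges for pair, edges in discriminative.items() if emotion in pair]
    return set.intersection(*pair_sets)


def modules_spanned(edges, module_of):
    """Set of modules touched by at least one endpoint of the given edges."""
    return {module_of[roi] for edge in edges for roi in edge}


# Hypothetical discriminative edge sets for the three pairwise classifiers.
discriminative = {
    frozenset({"joy", "anger"}): {("a", "b"), ("b", "c")},
    frozenset({"joy", "fear"}): {("a", "b"), ("c", "d")},
    frozenset({"anger", "fear"}): {("b", "c"), ("c", "d")},
}
print(preferring_network(discriminative, "fear"))  # {('c', 'd')}
```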

For bodily expressions, however, the emotion-preferring network for each emotion differed from that for facial expressions. When emotions were perceived from bodily stimuli, joy mainly evoked FCs across the temporal, parietal, and frontal lobes as well as the subcortical nuclei; anger evoked FCs across the subcortical nuclei and the occipital, parietal, and frontal lobes; and fear mainly evoked FCs across the frontal, temporal, occipital, and parietal lobes. For fear, the bilateral lateral occipital cortex served as the hub region (the most densely connected region). Previous activation-based studies have found preferential activations in the STS, the superior parietal lobule, the superior temporal gyrus, and the thalamus during bodily expression perception (Kret et al., 2011; Yang et al., 2018). Our results are consistent with these findings and may further our understanding of the neural basis of bodily expression decoding. Moreover, for a given emotion, the discriminative FCs for facial and bodily expressions were distinct, suggesting that the human brain employs separate network representations for facial and bodily expressions of the same emotion. In sum, our results provide new evidence for network representations of emotions and suggest that emotions perceived from different body cues may rely on different networks.
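The two network-level notions used here, a hub as the most densely connected region and the distinctness of facial and bodily networks, can be made concrete with a short sketch. It assumes each network is given as a list of undirected edges between region labels; the hub is taken as the node(s) of maximal degree, and distinctness is quantified with the Jaccard index of two edge sets. Both choices, the helper names, and the region labels are illustrative assumptions rather than the study's reported method.

```python
from collections import Counter


def canonical(edges):
    """Treat edges as undirected: (a, b) and (b, a) become the same edge."""
    return {tuple(sorted(edge)) for edge in edges}


def hub_regions(edges):
    """Region(s) with the highest degree, i.e. the most connections."""
    degree = Counter(roi for edge in canonical(edges) for roi in edge)
    top = max(degree.values())
    return sorted(roi for roi, d in degree.items() if d == top)


def jaccard(edges_a, edges_b):
    """|A ∩ B| / |A ∪ B| of two edge sets: 0 means no shared FCs, 1 identical."""
    a, b = canonical(edges_a), canonical(edges_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical toy network (labels are illustrative only).
toy_net = [("LOC_L", "LOC_R"), ("LOC_L", "MFG_L"), ("LOC_L", "SPL_R"),
           ("LOC_R", "STG_R"), ("MFG_L", "LOC_R")]
print(hub_regions(toy_net))  # ['LOC_L', 'LOC_R']
```

Under this reading, a Jaccard index near 0 between, say, the facial and bodily fear networks would correspond to distinct discriminative FCs.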

The present study used a sample size similar to those reported in previous fMRI studies of facial emotion perception and fcMVPA-based decoding (Furl et al., 2013; Wegrzyn et al., 2015; Wang et al., 2016; Zhang et al., 2018). Including additional participants would increase statistical power, might improve classification accuracy, and would better establish the robustness of our findings; it is therefore important to confirm these findings with a larger sample in future studies. Additionally, emotions can also be perceived from sounds and other cues. Future studies of other perceptual cues would help to understand the neural basis of emotion processing more fully.

Conclusion

Taken together, using fcMVPA-based classification analyses, we show that rich emotional information is represented in large-scale FC patterns, which can accurately decode not only facial but also bodily expressions. These findings further corroborate the importance of FC patterns in emotion perception. In addition, we show that the emotion-discriminative networks are widely distributed in both hemispheres, suggesting that interactions among distributed brain areas underlie the neural representations of emotions. Furthermore, our results provide new evidence for the network representations of facial and bodily expressions and suggest that emotions perceived from different body cues may rely on different networks. This study extends previous fcMVPA studies and may improve our understanding of the mechanisms that enable the human brain to recognize emotions efficiently from body language in daily life.

Data Availability Statement

The datasets generated for this study are available on reasonable request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Research Ethics Committee of Yantai Affiliated Hospital of Binzhou Medical University. The participants provided their written informed consent to participate in this study.

Author Contributions

BL designed the study. YL and XL performed the experiments. YL analyzed the results and wrote the manuscript. YL and JJ contributed to the manuscript revision. All authors have approved the final manuscript.

Funding

This work was supported by the National Key Research and Development Program of China (No. 2018YFB0204304), the National Natural Science Foundation of China (Nos. U1736219, 61571327, and 61906006), the China Postdoctoral Science Foundation funded project (No. 2018M641135), the Beijing Postdoctoral Research Foundation (No. zz2019-74), and the Chaoyang District Postdoctoral Research Foundation.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Prof. Irene Rotondi (Campus Biotech, University of Geneva, Switzerland) for supplying the GEMEP Corpus. We also thank the Medical Imaging Research Institute of Binzhou Medical University, Yantai Affiliated Hospital of Binzhou Medical University, as well as the volunteers for the assistance in the data acquisition.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2019.01111/full#supplementary-material

Footnotes

- ^ http://www.fil.ion.ucl.ac.uk/spm/software/spm8/

- ^ http://www.nitrc.org/projects/conn

- ^ https://www.csie.ntu.edu.tw/~cjlin/libsvm/

- ^ http://circos.ca/

References

Axelrod, V., and Yovel, G. (2012). Hierarchical processing of face viewpoint in human visual cortex. J. Neurosci. 32, 2442–2452. doi: 10.1523/JNEUROSCI.4770-11.2012

Banziger, T., Mortillaro, M., and Scherer, K. R. (2012). Introducing the Geneva multimodal expression corpus for experimental research on emotion perception. Emotion 12, 1161–1179. doi: 10.1037/a0025827

Behzadi, Y., Restom, K., Liau, J., and Liu, T. T. (2007). A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. Neuroimage 37, 90–101. doi: 10.1016/j.neuroimage.2007.04.042

Candidi, M., Stienen, B. M., Aglioti, S. M., and De Gelder, B. (2011). Event-related repetitive transcranial magnetic stimulation of posterior superior temporal sulcus improves the detection of threatening postural changes in human bodies. J. Neurosci. 31, 17547–17554. doi: 10.1523/JNEUROSCI.0697-11.2011

Cole, M. W., Ito, T., Schultz, D., Mill, R., Chen, R., and Cocuzza, C. (2019). Task activations produce spurious but systematic inflation of task functional connectivity estimates. Neuroimage 189, 1–18. doi: 10.1016/j.neuroimage.2018.12.054

Cole, M. W., Reynolds, J. R., Power, J. D., Repovs, G., Anticevic, A., and Braver, T. S. (2013). Multi-task connectivity reveals flexible hubs for adaptive task control. Nat. Neurosci. 16, 1348–1355. doi: 10.1038/nn.3470

Craddock, R. C., James, G. A., Holtzheimer, P. E., Hu, X. P., and Mayberg, H. S. (2012). A whole brain fMRI atlas generated via spatially constrained spectral clustering. Hum. Brain Mapp. 33, 1914–1928. doi: 10.1002/hbm.21333

Cui, Z., Xia, Z., Su, M., Shu, H., and Gong, G. (2016). Disrupted white matter connectivity underlying developmental dyslexia: a machine learning approach. Hum. Brain Mapp. 37, 1443–1458. doi: 10.1002/hbm.23112

Dai, Z., Yan, C., Wang, Z., Wang, J., Xia, M., Li, K., et al. (2012). Discriminative analysis of early Alzheimer’s disease using multi-modal imaging and multi-level characterization with multi-classifier (M3). Neuroimage 59, 2187–2195. doi: 10.1016/j.neuroimage.2011.10.003

de Gelder, B., De Borst, A. W., and Watson, R. (2015). The perception of emotion in body expressions. Wiley Interdiscip. Rev. Cogn. Sci. 6, 149–158. doi: 10.1002/wcs.1335

de Gelder, B., Hortensius, R., and Tamietto, M. (2012). Attention and awareness each influence amygdala activity for dynamic bodily expressions-a short review. Front. Integr. Neurosci. 6:54. doi: 10.3389/fnint.2012.00054

de Gelder, B., Van Den Stock, J., Meeren, H. K., Sinke, C. B., Kret, M. E., and Tamietto, M. (2010). Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci. Biobehav. Rev. 34, 513–527. doi: 10.1016/j.neubiorev.2009.10.008

Dosenbach, N. U. F., Nardos, B., Cohen, A. L., Fair, D. A., Power, J. D., Church, J. A., et al. (2010). Prediction of individual brain maturity using fMRI. Science 329, 1358–1361. doi: 10.1126/science.1194144

Downing, P. E., and Peelen, M. V. (2016). Body selectivity in occipitotemporal cortex: causal evidence. Neuropsychologia 83, 138–148. doi: 10.1016/j.neuropsychologia.2015.05.033

Ecker, C., Marquand, A., Mourão-Miranda, J., Johnston, P., Daly, E. M., Brammer, M. J., et al. (2010). Describing the brain in autism in five dimensions-magnetic resonance imaging-assisted diagnosis of autism spectrum disorder using a multiparameter classification approach. J. Neurosci. 30, 10612–10623. doi: 10.1523/JNEUROSCI.5413-09.2010

Fan, L., Li, H., Zhuo, J., Zhang, Y., Wang, J., Chen, L., et al. (2016). The human brainnetome atlas: a new brain atlas based on connectional architecture. Cereb. Cortex 26, 3508–3526. doi: 10.1093/cercor/bhw157

Fernandes, O., Portugal, L. C. L., Alves, R. C. S., Arruda-Sanchez, T., Rao, A., and Volchan, E. (2017). Decoding negative affect personality trait from patterns of brain activation to threat stimuli. Neuroimage 145, 337–345. doi: 10.1016/j.neuroimage.2015.12.050

Fox, M. D., Zhang, D., Snyder, A. Z., and Raichle, M. E. (2009). The global signal and observed anticorrelated resting state brain networks. J. Neurophysiol. 101, 3270–3283. doi: 10.1152/jn.90777.2008

Furl, N., Hadj-Bouziane, F., Liu, N., Averbeck, B. B., and Ungerleider, L. G. (2012). Dynamic and static facial expressions decoded from motion-sensitive areas in the macaque monkey. J. Neurosci. 32, 15952–15962. doi: 10.1523/JNEUROSCI.1992-12.2012

Furl, N., Henson, R. N., Friston, K. J., and Calder, A. J. (2013). Top-down control of visual responses to fear by the amygdala. J. Neurosci. 33, 17435–17443. doi: 10.1523/JNEUROSCI.2992-13.2013

Furl, N., Henson, R. N., Friston, K. J., and Calder, A. J. (2015). Network interactions explain sensitivity to dynamic faces in the superior temporal sulcus. Cereb. Cortex 25, 2876–2882. doi: 10.1093/cercor/bhu083

Gobbini, M. I., Gentili, C., Ricciardi, E., Bellucci, C., Salvini, P., Laschi, C., et al. (2011). Distinct neural systems involved in agency and animacy detection. J. Cogn. Neurosci. 23, 1911–1920. doi: 10.1162/jocn.2010.21574

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Golland, P., and Fischl, B. (2003). Permutation tests for classification: towards statistical significance in image-based studies. Inf. Process. Med. Imaging 18, 330–341. doi: 10.1007/978-3-540-45087-0_28

Grezes, J., Pichon, S., and De Gelder, B. (2007). Perceiving fear in dynamic body expressions. Neuroimage 35, 959–967. doi: 10.1016/j.neuroimage.2006.11.030

Grill-Spector, K., Knouf, N., and Kanwisher, N. (2004). The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562. doi: 10.1038/nn1224

Harry, B., Williams, M. A., Davis, C., and Kim, J. (2013). Emotional expressions evoke a differential response in the fusiform face area. Front. Hum. Neurosci. 7:692. doi: 10.3389/fnhum.2013.00692

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/s1364-6613(00)01482-0

He, C., Peelen, M. V., Han, Z., Lin, N., Caramazza, A., and Bi, Y. (2013). Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage 79, 1–9. doi: 10.1016/j.neuroimage.2013.04.051

Henriksson, L., Mur, M., and Kriegeskorte, N. (2015). Faciotopy-A face-feature map with face-like topology in the human occipital face area. Cortex 72, 156–167. doi: 10.1016/j.cortex.2015.06.030

Hutchison, R. M., Culham, J. C., Everling, S., Flanagan, J. R., and Gallivan, J. P. (2014). Distinct and distributed functional connectivity patterns across cortex reflect the domain-specific constraints of object, face, scene, body, and tool category-selective modules in the ventral visual pathway. Neuroimage 96, 216–236. doi: 10.1016/j.neuroimage.2014.03.068

Ishai, A., Schmidt, C. F., and Boesiger, P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93. doi: 10.1016/j.brainresbull.2005.05.027

Johnston, P., Mayes, A., Hughes, M., and Young, A. W. (2013). Brain networks subserving the evaluation of static and dynamic facial expressions. Cortex 49, 2462–2472. doi: 10.1016/j.cortex.2013.01.002

Kaiser, D., Strnad, L., Seidl, K. N., Kastner, S., and Peelen, M. V. (2014). Whole person-evoked fMRI activity patterns in human fusiform gyrus are accurately modeled by a linear combination of face- and body-evoked activity patterns. J. Neurophysiol. 111, 82–90. doi: 10.1152/jn.00371.2013

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Kim, N. Y., and McCarthy, G. (2016). Task influences pattern discriminability for faces and bodies in ventral occipitotemporal cortex. Soc. Neurosci. 11, 627–636. doi: 10.1080/17470919.2015.1131194

Kret, M. E., Pichon, S., Grezes, J., and De Gelder, B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762. doi: 10.1016/j.neuroimage.2010.08.012

Lee, L. C., Andrews, T. J., Johnson, S. J., Woods, W., Gouws, A., Green, G. G., et al. (2010). Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48, 477–490. doi: 10.1016/j.neuropsychologia.2009.10.005

Liang, Y., Liu, B. L., Li, X. L., and Wang, P. Y. (2018). Multivariate pattern classification of facial expressions based on large-scale functional connectivity. Front. Hum. Neurosci. 12:94. doi: 10.3389/fnhum.2018.00094

Liang, Y., Liu, B. L., Xu, J. H., Zhang, G. Y., Li, X. L., Wang, P. Y., et al. (2017). Decoding facial expressions based on face-selective and motion-sensitive areas. Hum. Brain Mapp. 38, 3113–3125. doi: 10.1002/hbm.23578

Liu, F., Guo, W., Fouche, J. P., Wang, Y., Wang, W., Ding, J., et al. (2015). Multivariate classification of social anxiety disorder using whole brain functional connectivity. Brain Struct. Funct. 220, 101–115. doi: 10.1007/s00429-013-0641-4

Mahmoudi, A., Takerkart, S., Regragui, F., Boussaoud, D., and Brovelli, A. (2012). Multivoxel pattern analysis for fMRI data: a review. Comput. Math. Methods Med. 2012:961257. doi: 10.1155/2012/961257

Minnebusch, D. A., and Daum, I. (2009). Neuropsychological mechanisms of visual face and body perception. Neurosci. Biobehav. Rev. 33, 1133–1144. doi: 10.1016/j.neubiorev.2009.05.008

Pantazatos, S. P., Talati, A., Pavlidis, P., and Hirsch, J. (2012). Decoding unattended fearful faces with whole-brain correlations: an approach to identify condition-dependent large-scale functional connectivity. PLoS Comput. Biol. 8:e1002441. doi: 10.1371/journal.pcbi.1002441

Parente, F., Frascarelli, M., Mirigliani, A., Di Fabio, F., Biondi, M., and Colosimo, A. (2018). Negative functional brain networks. Brain Imaging Behav. 12, 467–476. doi: 10.1007/s11682-017-9715-x

Pinsk, M. A., Arcaro, M., Weiner, K. S., Kalkus, J. F., Inati, S. J., Gross, C. G., et al. (2009). Neural representations of faces and body parts in macaque and human cortex: a comparative FMRI study. J. Neurophysiol. 101, 2581–2600. doi: 10.1152/jn.91198.2008

Pitcher, D. (2014). Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. J. Neurosci. 34, 9173–9177. doi: 10.1523/JNEUROSCI.5038-13.2014

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L., and Petersen, S. E. (2012). Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59, 2142–2154. doi: 10.1016/j.neuroimage.2011.10.018

Said, C. P., Moore, C. D., Engell, A. D., Todorov, A., and Haxby, J. V. (2010). Distributed representations of dynamic facial expressions in the superior temporal sulcus. J. Vis. 10, 71–76.

Shirer, W. R., Ryali, S., Rykhlevskaia, E., Menon, V., and Greicius, M. D. (2012). Decoding subject-driven cognitive states with whole-brain connectivity patterns. Cereb. Cortex 22, 158–165. doi: 10.1093/cercor/bhr099

Smith, S. M. (2012). The future of FMRI connectivity. Neuroimage 62, 1257–1266. doi: 10.1016/j.neuroimage.2012.01.022

Soria Bauser, D., and Suchan, B. (2015). Is the whole the sum of its parts? Configural processing of headless bodies in the right fusiform gyrus. Behav. Brain Res. 281, 102–110. doi: 10.1016/j.bbr.2014.12.015

Tippett, D. C., Godin, B. R., Oishi, K., Oishi, K., Davis, C., Gomez, Y., et al. (2018). Impaired recognition of emotional faces after stroke involving right amygdala or insula. Semin. Speech Lang. 39, 87–100. doi: 10.1055/s-0037-1608859

Trantmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284, 100–115. doi: 10.1016/j.brainres.2009.05.075

Van Dijk, K. R. A., Sabuncu, M. R., and Buckner, R. L. (2012). The influence of head motion on intrinsic functional connectivity MRI. Neuroimage 59, 431–438. doi: 10.1016/j.neuroimage.2011.07.044

Wang, X. S., Fang, Y. X., Cui, Z. X., Xu, Y. W., He, Y., Guo, Q. H., et al. (2016). Representing object categories by connections: evidence from a multivariate connectivity pattern classification approach. Hum. Brain Mapp. 37, 3685–3697. doi: 10.1002/hbm.23268

Wegrzyn, M., Riehle, M., Labudda, K., Woermann, F., Baumgartner, F., Pollmann, S., et al. (2015). Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex 69, 131–140. doi: 10.1016/j.cortex.2015.05.003

Weissenbacher, A., Kasess, C., Gerstl, F., Lanzenberger, R., Moser, E., and Windischberger, C. (2009). Correlations and anticorrelations in resting-state functional connectivity MRI: a quantitative comparison of preprocessing strategies. Neuroimage 47, 1408–1416. doi: 10.1016/j.neuroimage.2009.05.005

Whitfield-Gabrieli, S., and Nieto-Castanon, A. (2012). Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2, 125–141. doi: 10.1089/brain.2012.0073

Xia, M., Wang, J., and He, Y. (2013). BrainNet viewer: a network visualization tool for human brain connectomics. PLoS One 8:e68910. doi: 10.1371/journal.pone.0068910

Yang, X. L., Xu, J. H., Cao, L. J., Li, X. L., Wang, P. Y., Wang, B., et al. (2018). Linear representation of emotions in whole persons by combining facial and bodily expressions in the extrastriate body area. Front. Hum. Neurosci. 11:653. doi: 10.3389/fnhum.2017.00653

Zeng, L. L., Shen, H., Liu, L., Wang, L., Li, B., Fang, P., et al. (2012). Identifying major depression using whole-brain functional connectivity: a multivariate pattern analysis. Brain 135, 1498–1507. doi: 10.1093/brain/aws059

Zeng, L. L., Wang, D., Fox, M. D., Sabuncu, M., Hu, D., Ge, M., et al. (2014). Neurobiological basis of head motion in brain imaging. Proc. Natl. Acad. Sci. U.S.A. 111, 6058–6062. doi: 10.1073/pnas.1317424111

Zhang, J., Zhang, G., Li, X., Wang, P., Wang, B., and Liu, B. (2018). Decoding sound categories based on whole-brain functional connectivity patterns. Brain Imaging Behav. doi: 10.1007/s11682-018-9976-z [Epub ahead of print].

Zhen, Z., Fang, H., and Liu, J. (2013). The hierarchical brain network for face recognition. PLoS One 8:e59886. doi: 10.1371/journal.pone.0059886

Zhu, C. Z., Zang, Y. F., Cao, Q. J., Yan, C. G., He, Y., Jiang, T. Z., et al. (2008). Fisher discriminative analysis of resting-state brain function for attention-deficit/hyperactivity disorder. Neuroimage 40, 110–120. doi: 10.1016/j.neuroimage.2007.11.029

Keywords: facial expressions, bodily expressions, functional magnetic resonance imaging, functional connectivity, multivariate pattern classification

Citation: Liang Y, Liu B, Ji J and Li X (2019) Network Representations of Facial and Bodily Expressions: Evidence From Multivariate Connectivity Pattern Classification. Front. Neurosci. 13:1111. doi: 10.3389/fnins.2019.01111

Received: 25 July 2019; Accepted: 02 October 2019;

Published: 29 October 2019.

Edited by:

Sheng Zhang, Yale University, United States

Reviewed by:

Doug Schultz, University of Nebraska-Lincoln, United States

Ling-Li Zeng, National University of Defense Technology, China

Copyright © 2019 Liang, Liu, Ji and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Baolin Liu, liubaolin@tsinghua.edu.cn; Junzhong Ji, jjz01@bjut.edu.cn

Yin Liang, Baolin Liu2,3,4*