- 1School of Mechanical Engineering, Xi’an Jiaotong University, Xi’an, Shaanxi, China

- 2Shaanxi Key Laboratory of Intelligent Robots, Xi’an Jiaotong University, Xi’an, Shaanxi, China

- 3Wearable Human Enhancement Technology Innovation Center, Xi’an Jiaotong University, Xi’an, Shaanxi, China

The electroencephalogram (EEG) and surface electromyogram (sEMG) fusion has been widely used in the detection of human movement intention for human–robot interaction, but the internal relationship of EEG and sEMG signals is not clear, so their fusion still has some shortcomings. A precise fusion method of EEG and sEMG using the CNN-LSTM model was investigated to detect lower limb voluntary movement in this study. At first, the EEG and sEMG signal processing of each stage was analyzed so that the response time difference between EEG and sEMG can be estimated to detect lower limb voluntary movement, and it can be calculated by the symbolic transfer entropy. Second, the data fusion and feature of EEG and sEMG were both used for obtaining a data matrix of the model, and a hybrid CNN-LSTM model was established for the EEG and sEMG-based decoding model of lower limb voluntary movement so that the estimated value of time difference was about 24 ∼ 26 ms, and the calculated value was between 25 and 45 ms. Finally, the offline experimental results showed that the accuracy of data fusion was significantly higher than feature fusion-based accuracy in 5-fold cross-validation, and the average accuracy of EEG and sEMG data fusion was more than 95%; the improved average accuracy for eliminating the response time difference between EEG and sEMG was about 0.7 ± 0.26% in data fusion. In the meantime, the online average accuracy of data fusion-based CNN-LSTM was more than 87% in all subjects. These results demonstrated that the time difference had an influence on the EEG and sEMG fusion to detect lower limb voluntary movement, and the proposed CNN-LSTM model can achieve high performance. This work provides a stable and reliable basis for human–robot interaction of the lower limb exoskeleton.

Introduction

The exoskeleton robot is used as an assistive device for disabled people, rehabilitation for paraplegics, and power augmentation for military or manual workers (Hamza et al., 2020). This robot can augment or restore a measure of their motor function to enable them to regain or enhance the partial function of their limbs (Sawicki et al., 2020). For the exoskeleton robot in the application of early rehabilitation training, the prespecified targeted task such as trajectory tracking control is the common control strategy to help patients recover muscle strength passively. The middle or later rehabilitation training and power-assisted exoskeleton robot need to follow the user’s movement intention (Ajayi et al., 2020). The detection of human movement intention is an indispensable part of the robot, and this ability is based on large quantities of human or interaction information for precise tracking control of the exoskeleton robot because this robot is a typical human–machine coupling system.

As early as 2007, the lower extremity powered exoskeleton (LOPES) robot detected the human movement intention by the human–robot interaction (HRI) through bundle connectors. The EMG-based evaluation result showed that the limb orientations of the LOPES robot and the walking subject agree well (Veneman et al., 2007). The HIT load-carrying exoskeleton (HIT-LEX) measured the human–machine interactive forces at the kinematic terminals for human movement identification and exoskeleton control; the load-bearing walk experimental result showed this method was feasible (Chao et al., 2016). Yuxiang et al. (2019) developed a newly split embedded intrinsic sensor, which can accurately measure the HRI force applied to extract the human movement intention without being affected by differences in the wearing status; the proposed method enhanced the identification accuracy from 96.2 to 99.7%. The advantage of the HRI-based method is the simplicity of signal measurement, and it is also the most stable and mature method in exoskeleton robot control. However, there are some problems too difficult to overcome. The most important problem is related to response delay in the robot control loop, which is caused by the HRI generated after human movement, as well as the information processing time of the robot system. This response delay will reduce the system’s performance (Fleischer et al., 2006).

Electrophysiological signals are electrical signals generated during human physiological activities, which can reflect the relevant information about the human body. The typical electroencephalogram (EEG) and electromyogram (EMG) signals have been widely studied and applied in medicine and human–computer interaction. Fleischer et al. calculated the knee torque from EMG signals and applied it to the control of the exoskeleton robot, and there was a remarkable performance without complicated dynamic models (Fleischer et al., 2006). Palayil Baby et al. used the support vector machine (SVM) classifier to identify the intended motion patterns based on three-channel sEMG signals, developed nonlinear mathematical models for joint torque estimation, and utilized swarm techniques to identify model parameters for each movement pattern of the ankle (Palayil Baby et al., 2020). Longbin et al. estimated ankle joint torques by using an EMG-driven neuromusculoskeletal (NMS) model and an artificial neural network (ANN), which includes fast walking, slow walking, and self-selected speed walking. The ANN-predicted torque had a lower root mean square error (RMSE), and it contributes to compensate the assistive torque for the user’s remaining muscle within exoskeleton control (Longbin et al., 2021). Dezhen et al. employed principle component analysis (PCA), factor analysis (FA), and nonnegative matrix factorization (NMF) to extract muscle synergy from EMG signals, and a bidirectional gated recurrent unit (BGRU)-based deep regression neural network has been established for gait tracking at different walking speed. The results showed that the proposed methods can reach R2var scores of 0.83–0.88, and it demonstrated that muscle synergy of EMG has a good correlation with gait tracking (Dezhen et al., 2021). Chao-Hung et al. proposed an EMG-based single-joint exoskeleton system by merging a differentiable continuous system with a dynamic musculoskeletal model. It can solve the problem that the process of transforming the input of biomedical signals into the output of adjusting the torque and angle of the exoskeleton is limited by a finite time lag and precision of trajectory prediction, which results in a mismatch between the subject and exoskeleton. Its results revealed accurate torque and angle prediction for the knee exoskeleton and good performance of assistance during movement (Chao-Hung et al., 2022).

The EEG-based brain–computer interface (BCI) provides a direct connection path to detect human movement intention. BCI-based control technology has achieved considerable progress. The steady-state visual evoked potential (SSVEP) EEG signals were decoded into columns of instructions to drive the exoskeleton tracking the intended trajectories in the human operator’s mind, and the experimental result verifies the validity of BCI-based control method (Shiyuan et al., 2016). Kwak et al. (2015) decoded the five movements of the subject based on the SSVEP paradigm while wearing the exoskeleton and achieved average accuracies of 91.3% and an information transfer rate of 32.9 bits/min. This work indicated that an SSVEP-based lower limb exoskeleton for gait assistance is becoming feasible (Kwak et al., 2015). Choi et al. developed an motor imagery (MI)-based hybrid BCI controller for the lower limb exoskeleton operation; it can control the exoskeleton to stand up, gait start/stop, and sit down without any steer or button press using the real-time EEG decoder, but the online experimental results showed that the proposed method spent 145% of the control time compared with the conventional smartwatch controller (Junhyuk et al., 2020). The real-time performance of the BCI using a specific paradigm in exoskeleton control is not satisfactory. Continuous decoding of voluntary movement is desirable for closed-loop, natural control of the neuro-robot (Valeria et al., 2020).

The EEG or sEMG-based control method has some shortcomings: the decoding accuracy and stability of EEG are not good enough, and the performance of sEMG decoding has no advantage in movement prediction. Therefore, EEG and sEMG signals are combined or fused to further improve the decoding performance. It forms EEG and EMG-based hybrid and EEG and sEMG fusion methods. The hybrid method combines different control commands in series or in parallel to improve the performance of system; it expands a conventional “simple” BCI or human–machine interface (HMI) in different ways (Pfurtscheller et al., 2010). The fusion of EEG and sEMG fuses the two signals in the data level, feature level, or decision level to improve classification accuracy, allowing each to compensate for the weakness of others (Tryon et al., 2019).

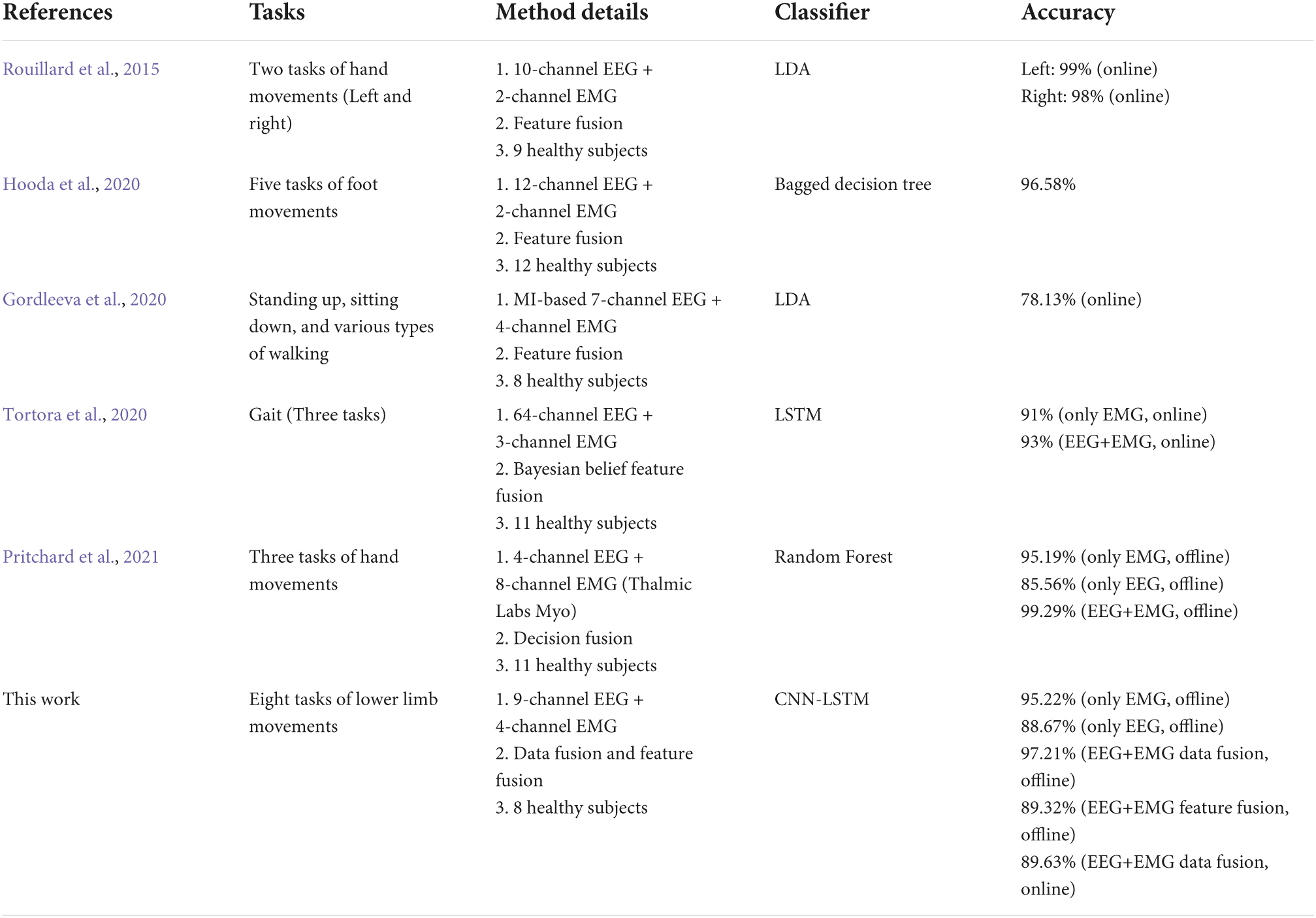

Rouillard et al. proposed a hybrid BCI coupling EEG and EMG for severe motor disabilities using virtual reality; a 5-fold cross-validation test was used to assess performances of the left hand (0.99) and right hand (0.98) classifiers (Rouillard et al., 2015). Hooda et al. explored the fusion of EEG and sEMG to identify unilateral lower limb movements. Those signals were analyzed for parallel and cascaded classification of different movement tasks, and high performance was achieved (Hooda et al., 2020). Gordleeva et al. presented a rehabilitation technique based on a lower limb exoskeleton integrated with the HMI, and EMG + EEG classification based on the CSP feature with subsequent linear discriminant analysis (LDA) classification showed an accuracy of 80%, which was less than EMG-based accuracies (89%). However, it can be significantly more informative of patients (trauma, stroke, etc.) (Gordleeva et al., 2020). Tortora et al. proposed and evaluated a hybrid HMI to decode walking phases of both legs from the Bayesian fusion of EEG and EMG signals; the results showed that the fusion of EEG and EMG information helps keep a stable recognition rate of each gait phase of more than 80% independently on the permanent level of EMG degradation (Tortora et al., 2020). Using low-cost sEMG and EEG devices in tandem can achieve high accuracy with decision-level fusion, which reported accuracies of up to 99% (Pritchard et al., 2021). So, the fusion of EEG and sEMG can keep a stable recognition rate of tasks with high accuracy.

Nevertheless, our previous work showed a response time difference between EEG and sEMG of limb movement (Xiaodong et al., 2021). Using simulated data, Yuhange et al. demonstrated that under certain conditions, the time lag between EEG and EMG segments at points of the local maxima of corticomuscular coherence with time lag (CMCTL) corresponds to the average delay along the involved corticomuscular conduction pathways. The result showed that all delays estimated were in the region of 19.5 ± 3.9 ms (Yuhang et al., 2017). Jinbiao et al. proposed a rate of voxel change (RVC) to estimate the time lag between EEG and EMG, and the results showed that the time lag was 22.8 ms for healthy subjects with 30% maximum voluntary contraction (MVC), and 34.5 ms for patients with cognitive difficulties (Jinbiao et al., 2022).

Therefore, the direct fusion of EEG and sEMG has a problem: the current time information of EEG and sEMG is inconsistent. So, eliminating the time difference between those signals can improve recognition performance. This work aims at detecting human lower limb movement intention for the exoskeleton robot, and EEG and sEMG fusion will be employed in this work. Starting with the EEG and sEMG generation mechanism of lower limb movement, this work will estimate and calculate the response time difference between EEG and sEMG signals according to physiological parameters and symbolic transfer entropy, respectively. Eliminating this time difference between EEG and sEMG signals will ensure the consistency of information of the two signals at the same time, to further improve the recognition accuracy. The hybrid CNN-LSTM model will be established for EEG and sEMG fusion-based decoding of lower limb movement intention. This work will provide a stable and reliable basis for human–computer interaction control of the lower limb exoskeleton.

Methodology

Estimation of electroencephalogram and surface electromyogram response time difference based on physiological parameters

The lower limb movement of humans is produced by the response of skeletal muscles; under the control of the brain, the activities of brain neurons and skeletal muscles generate corresponding bioelectric signals (EEG and sEMG), and these signals contain important information about lower limb movement.

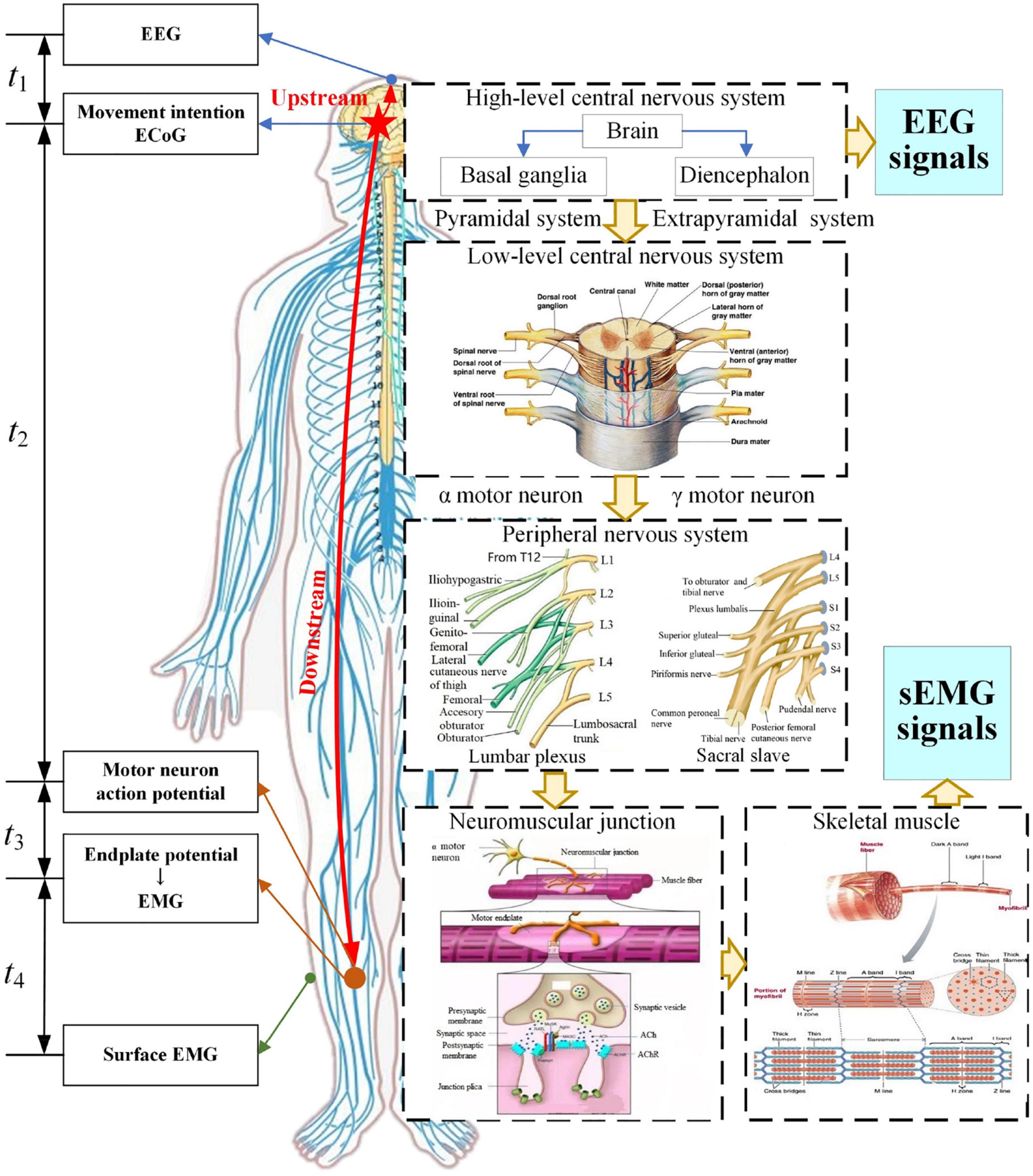

The mechanism of EEG and sEMG generation and transmission in the process of lower limb movement is shown in Figure 1.

The high-level central nervous system with the brain as the center performs information integration, processing, and decision-making, and controls the skeletal muscles of the lower limb to achieve a purpose of movement. In this processing, the low-level central nervous system and peripheral nervous system transmit these nerve signals. The neuromuscular junction, as the bridge connecting nerves and skeletal muscles, will translate nerve signals into endplate potential that can drive skeletal muscles. Then, the skeletal muscle fibers will contract or expand to produce lower limb movements.

Analysis of the generation and transmission process of EEG and sEMG signals can be divided into an upstream and downstream pathway. The upstream pathway is a process from electrocorticogram (ECoG) to EEG, and the cost time for this process was defined as t1. The downstream pathway is a process from ECoG to sEMG, and it can be divided into three stages. The first stage is the transmission from ECoG to the neuromuscular junction, and the cost time was defined as t2. The second stage is the conversion of the neuromuscular junction, and the cost time was defined as t3. The third stage is the process from an endplate potential to EMG or intramuscular EMG (iEMG) and then to surface EMG, and the cost time was defined as t4. The cost time at each stage was shown in the left side of Figure 1.

Based on the reference time of ECoG, the response time difference (ΔT) between EEG and sEMG was estimated as follows:

First, the research shows an apparent phase lag and signal amplitude attenuation in the transmission from ECoG to EEG. The simulation result showed that the time delay from ECoG to EEG was a few microseconds (μs) (Yi, 2021). It meant that t2 was in microseconds (μs).

Second, nerves perform the transmission function as a wire. t2 can be calculated based on the motor nerve conduction velocity and transmission distance as follows:

where v is the motor nerve conduction velocity and Dn is the conduction distance of the nerve.

The l was estimated by the human height based on the structural characteristics of the spinal cord and peripheral nervous system. The research report showed that the average height of Chinese men and women aged 18∼44 years was 169.7 and 158 cm, respectively (Yang, 2020). In this work, the distance from the brain to the tibialis anterior is about 1.4∼1.5 m. Previous research showed that the motor nerve conduction velocity is about 60 m/s (Bano et al., 2020; Nobue et al., 2020). t2 was obtained based on those data, so t2≈23∼25 ms.

Regarding the cost time t3 of neuromuscular junction conversion, our previous simulation result of the neuromuscular junction showed the peak offset of the model response was delayed by about 1 ms compared to the peak of the activation function. So, the cost time of neuromuscular junction conversion was 1 ms (t3 = 1 ms) (Xiaodong et al., 2021). Medical research indicated that the cost time of the chemical conversion of the neuromuscular junction is about 0.5∼1.0 ms (Yudong and Jianhong, 2007). Hence, t3 = 0.5∼1.0 ms in this work.

For the cost time t4 from EMG to sEMG, there was no published article on the response time difference between EMG to sEMG. However, the performance of EMG- and sEMG-based decoding had a good consistency; there were small differences in decoding stability and some statistical indicators (Crouch et al., 2018; Hofste et al., 2020; Knox et al., 2021). The transmission process from EMG to sEMG goes through the fat and the skin, that is, filtering and spatial superposition of signals. Referring to the simulation of EEG signals, the cost time of these volume conductors is about microseconds (μs). Therefore, t4 was also in microseconds (μs).

The aforementioned results indicated that t1 and t4 were in microseconds, and t2 and t3 were in milliseconds. Therefore, t1 and t4 were ignored in this work, and response time difference (ΔT) between EEG and sEMG was as follows:

Calculation of electroencephalogram and surface electromyogram response time difference based on symbolic transfer entropy

Fusing EEG and sEMG for human movement detection, EEG and sEMG signals need to be synchronized to ensure the consistency of information contained at the same time and improve the accuracy of recognition. The coherence analysis and the Granger causality analysis are often used to determine the homologous coupling relationship between EEG and sEMG signals. However, these methods ignore some nonlinear information. Transfer entropy analyses nonlinear information without relying on the established model. In this work, the transfer entropy method was used to calculate the coupling characteristics between EEG and sEMG signals and quantify the response time difference between EEG and sEMG signals.

The EEG and sEMG signals are typical dynamic signals with some noise. For extracting features of the EEG and sEMG signals, symbolization of signals is needed. The traditional symbolic methods cannot meet the requirements of EEG and sEMG because of the positive and negative transitions. At the same time, the scale parameters of symbolism are an essential factor affecting the performance, and the improved multiscale symbolic method is as follows (Yunyuan et al., 2017):

where x(•) is the signal sequence, S(•) is the symbolic signal sequence, δ is the increment per interval (δ = (max(x)-min(x))/(Ps+1)), and min(•) and max(•) are their minimum and maximum values, respectively. Ps is the scale parameters of symbolic, the value of Ps proportional to the fine level of symbolic, the larger the finer (Ps = 30 in this work).

For two signal sequences X = {x1, x2,…, xN,} and Y = {y1, y2,…, yN,}, N is the length of signals. The transfer entropy (TE) from x to y is defined as follows (Staniek and Lehnertz, 2008):

where prob(•) denotes the transition probability density and u is the time step.

The larger the value of TE, the stronger the coupling between EEG and sEMG, and vice versa. The combination of symbolic and transfer entropy is to replace the signal sequence with the symbolic signal sequence, and the multiscale transfer entropy of symbolic EEG and sEMG signals is expressed as TEPsEEG→EMG. It denotes the transfer entropy under the symbolic scale parameter Ps.

Electroencephalogram and surface electromyogram fusion-based detection method

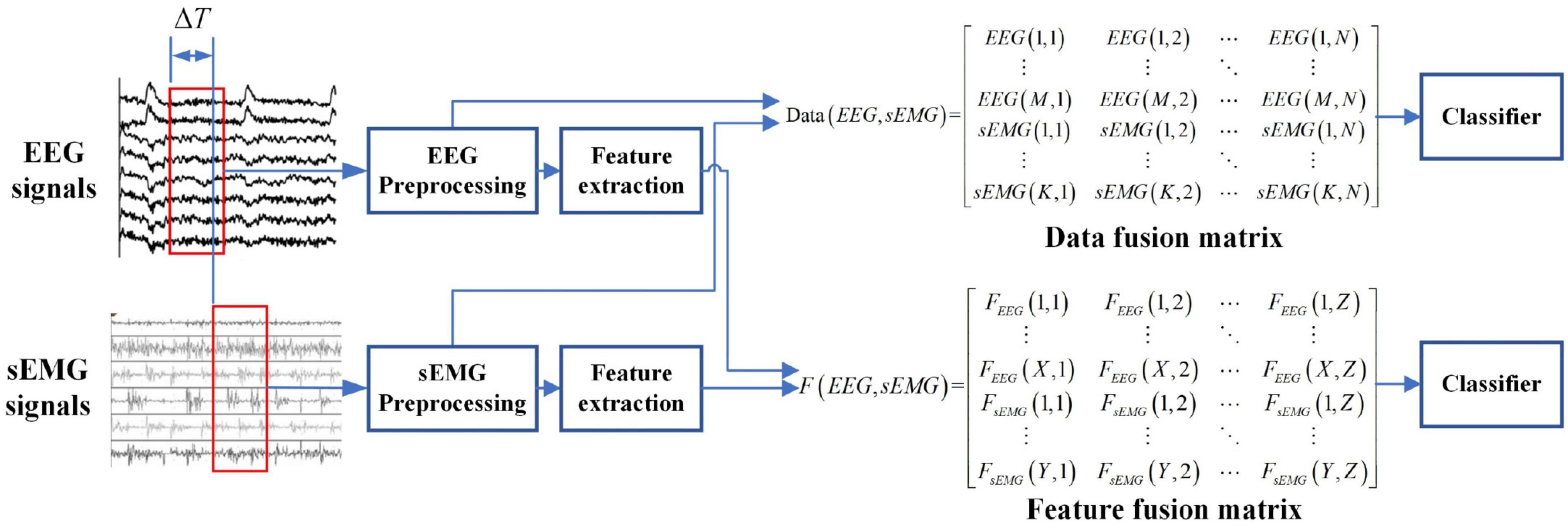

There are three common information fusion methods: data fusion, feature fusion, and decision fusion. Aiming at the detection of lower limb movement intention based on EEG and sEMG fusion, this work will use data and feature fusion to use the advantages of EEG and sEMG signals fully. The framework of EEG and sEMG fusion-based is shown in Figure 2.

Data fusion of electroencephalogram and surface electromyogram

There were three steps in the data fusion of EEG and sEMG signals. The first step was the data selection of EEG and sEMG, which was to eliminate the response time difference (ΔT) between EEG and sEMG by ensuring the consistency of information in the same time window. The second step was the preprocessing of EEG and sEMG. The preprocessing is mainly band-pass filtering and baseline calibration. The spatial filter and Butterworth filter are commonly used. Typical representatives of spatial filters are common average reference (CAR), Laplacian filters, and bipolar manners. A spatial filter can effectively improve the signal-to-noise ratio of signal (Delisle-Rodriguez et al., 2017; Jochumsen et al., 2018; Lipeng et al., 2020; Tsuchimoto et al., 2021). The Butterworth filter can remove part artifact and keep the original information (Ranran et al., 2018); it is also widely used in EEG and sEMG preprocessing (Ioana Sburlea et al., 2015a,b; Romero-Laiseca et al., 2020). So, the EEG data were filtered by a 0.5- to 64-Hz band-pass filter and a 50-Hz notch filter using the Butterworth filter. The sEMG data were filtered by a 20- to 200-Hz band-pass filter and a 50-Hz notch filter. The EEG and sEMG signals were normalized separately. The normalization calculation was given as follows:

where x(n) is the signals; N is the length of signals; xmax(n) and xmin(n) are the maximum value and minimum value of x(n), respectively; and X(n) is normalized value of x(n).

The last step was combing the EEG and sEMG data to obtain the data fusion matrix. The data fusion matrix will be used to detect lower limb movement intention.

Feature fusion of electroencephalogram and surface electromyogram

Considering the nonlinear characteristics of EEG signals, wavelet packet transformation (WPT) was employed for feature extraction in this work. WPT is a time–frequency analysis method, and low- and high-frequency information can be obtained at the same time (Liwei et al., 2019). According to the decomposition of the wavelet packet spatial structure, the rth wavelet packet decomposition coefficients of the (j+1) layer and the g point are obtained by the following recurrence formula:

where j,k∈Z, r = 1,2,…,2j-1, j is the layer of WPT, g is the translation factor, m is the scale parameter, r is the frequency band, and h and l are the high-pass filter and low-pass filter as a set of mutually orthogonal filters, respectively.

To obtain the EEG signals of delta (0–4 Hz), theta (4–7 Hz), alpha (8–12 Hz), and beta (13–30 Hz) (Hashimoto and Ushiba, 2013; Kline et al., 2021), the five-layer wavelet packet transformation was conducted after downsampling to 128 Hz. Variance and energy of decomposition coefficients were calculated as the EEG feature:

where NWPT is the total number of wavelet packet decomposition nodes and Df are each nodes of layer.

The feature matrix of EEG signals of M channels is defined as follows:

where fi is the EEG feature vector of the ith channel, i∈M.

The time domain and frequency domain characteristics of sEMG signals are significant, and its indexes in the time domain, frequency domain, and time–frequency domain are often used as features (Phinyomark et al., 2012). In this work, the variance (VAR), slope sign change (SSC), zero crossing (ZC), root mean square (RMS), mean power frequency (MPF), and Fourier cepstrum coefficient (FCC) of sEMG were selected for feature extraction.

(1) Variance (VAR)

where xk is the sEMG signals, k = 1, 2, …, N, N is the length of signals, and xm is the mean value of signals.

(2) Slope sign change (SSC)

where ε is the threshold factor.

(3) Zero crossing (ZC)

(4) Root mean square (RMS)

(5) Mean power frequency (MPF)

where f is the frequency of signals and P() is the power of the signal.

(6) Fourier cepstrum coefficient (FCC)

where X[k] is the Fourier transform of signals x[k], Yk = f(| X[k]|) is the amplitude logarithm of X[k], and i is the number of the Fourier cepstrum coefficient.

The feature matrix of sEMG signals of K channels is defined as follows:

where gi is the sEMG feature vector of the ith channel, i∈K.

Due to the dimensionality characteristic of the EEG and sEMG feature matrix, the cascaded fusion feature matrix was obtained, as follows:

Hybrid convolution neural network-long short-term memory model

Deep learning has been applied widely in pattern recognition and regression fitting. According to the structure and functional characteristics of deep learning, it includes typical convolution neural network (CNN), deep brief network (DBN), recurrent neural network (RNN), etc. The CNN involves representation learning, and it can conduct shift-invariant classification based on its hierarchical structure. The CNN is mainly composed of an input layer, a convolutional layer, a pooling layer, a fully connected layer, and an output layer. These layers render the CNN with the characteristics of local sensing field and downsampling, which can improve the ability of feature extraction and decrease network complexity. In the process of network construction, the network framework, convolution kernel, activation function, and pooling parameter are the key points and difficulties. The convolution kernel is the key to automatic feature extraction. The activation function is used to overcome the gradient disappearance problem. The pooling parameter is downsampling the feature matrix from the convolutional layer; and it can reduce the amount of data (Yaqing et al., 2020).

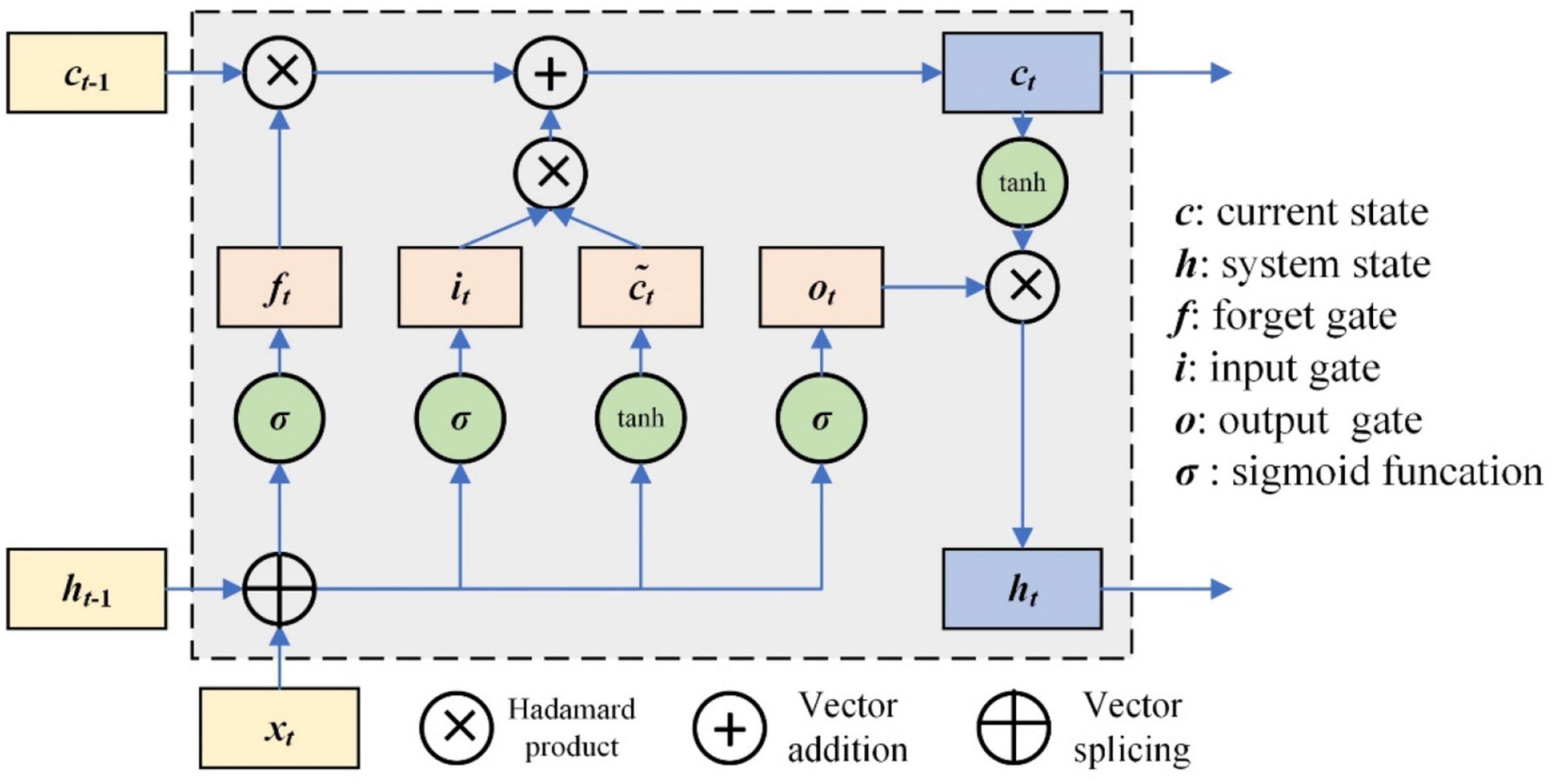

The RNN has the characteristics of memory and parameter sharing, and its typical representative is long short-term memory (LSTM) networks. The LSTM unit controls three gates, which are the input gate, forget gate, and output gate. The framework of the LSTM unit is shown in Figure 3.

The working mechanism of LSTM can be simply described as three stages: forgetting stage, selective memory stage, and output stage (Xipeng, 2022). The forgetting stage involves selectively forgetting the information from the previous neuron node, remembering the important, and forgetting the unimportant. The forget gate is ft, which selectively forgets the previous state ct–1. The selective memory stage is the selective memory of the input information xt, and the current state ct can be obtained by the input data and previous system state gate. The output stage is to determine which information will be regarded as the current output; it is controlled by ot. The output is ht. The update of those gates is as follows.

where Wi, Wf, and Wo are the weight matrix of the input gate, forget gate, and output gate, respectively, corresponding to input at the current time. Ui, Uf, and Uo are the weight matrix of the input gate, forget gate, and output gate, respectively, corresponding to the external state of the cyclic unit at a previous time; bi, bf, and bo are the offset vector of the input gate, forget gate, and output gate, respectively.

The current state output of the LSTM unit ht is as follows (Jiangjiang et al., 2017; Khataei Maragheh et al., 2022):

where Wc and Uc are the corresponding weight matrixes, respectively, and bc is the offset vector.

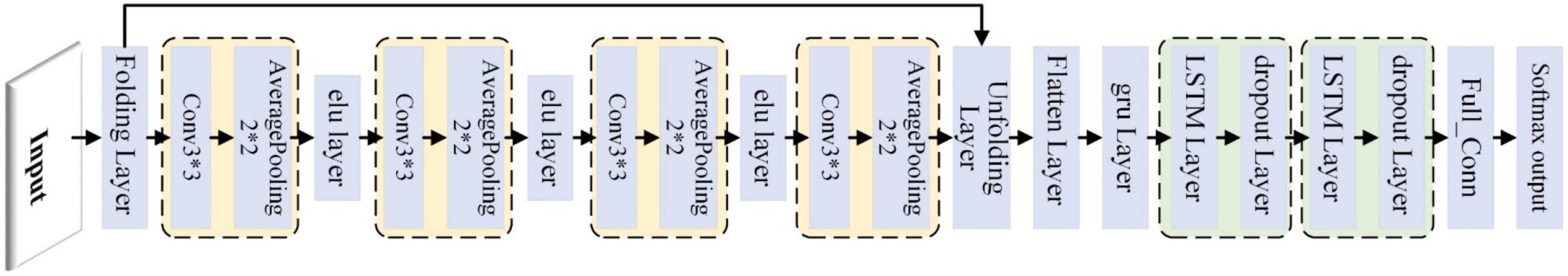

The CNN performs well in feature extraction, and LSTM can capture the long-term dependence relationship in sequence data. So, the combination of the CNN and LSTM can make full use of the advantages of the two networks, and it has been proven a good performance in pattern recognition (Jianfeng et al., 2019).

The schematic diagram of the hybrid CNN-LSTM network is shown in Figure 4. The CNN has four convolution—average pooling blocks, and the exponential linear unit layer was used to connect those blocks. There were two LSTM-dropout blocks, and the dropout layer was commonly used to solve over-fitting problems. The folding layer and unfolding layer were used to connect the CNN and LSTM, and finally to the fully connected layer and softmax classification layer.

Experimental system and setup

Experimental system

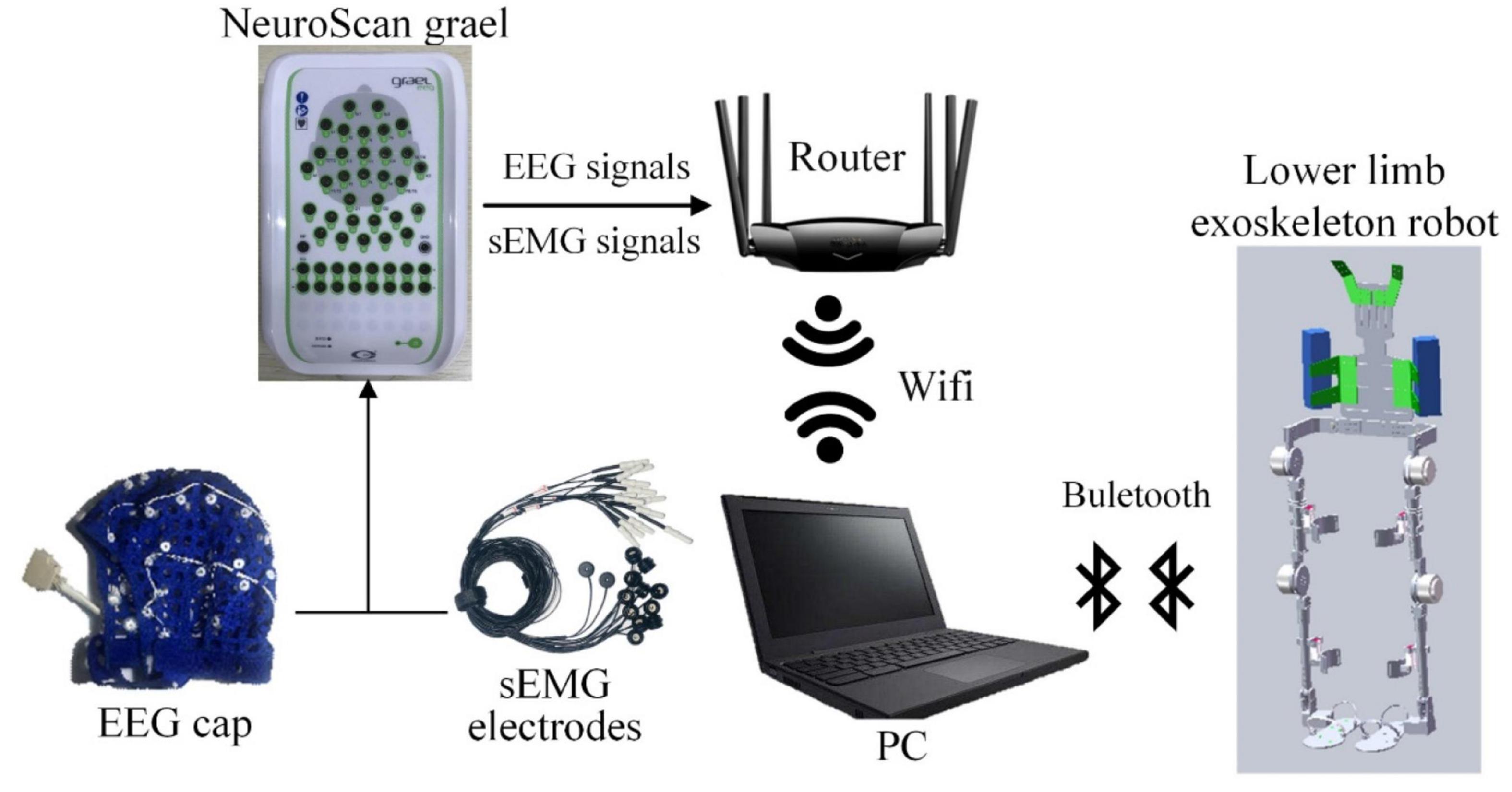

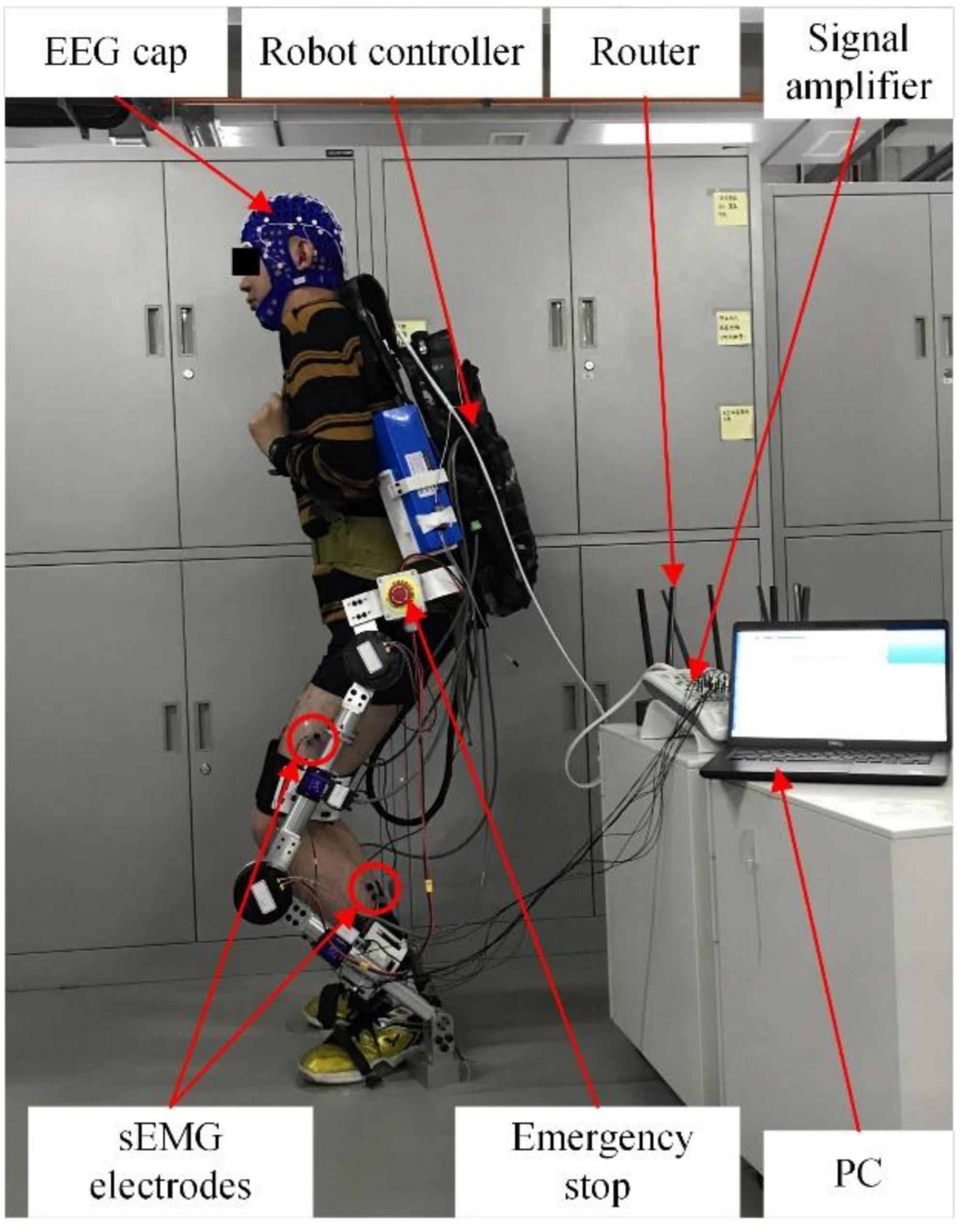

The experimental system mainly includes three parts: EEG/sEMG acquisition device, computer, and lower limb exoskeleton robot. The EEG/sEMG acquisition device adopted the NeuroScan-grael, and it can collect 32-channel EEG signals and eight-channel sEMG signals synchronously. The computer with Intel i5 was used for signal processing. The exoskeleton robot was developed by our team at Xi’an Jiaotong University. The scheme of EEG and sEMG fusion-based detection for a lower limb exoskeleton robot is shown in Figure 5. The NeuroScan-grael sent the EEG and sEMG data to the router by cable. All the recorded data were simultaneously transferred to a computer by Wifi, and the computer and robot communicated via Bluetooth.

Subjects and electroencephalogram/electromyogram data recording

A total of eight healthy (seven men and one woman) subjects were invited to participate in the experiment; they were 25 ± 1.9 years of age and without limb dysfunction and any known cognitive deficits. All the subjects gave informed consent after the nature and possible consequences of the experiment were explained. The Institutional Review Board of Xi’an Jiaotong University approved the proposed experiments, and all the experiments were conducted in accordance with the Declaration of Helsinki.

The EEG and sEMG data were collected using NeuroScan-grael at a sampling rate of 1,024 Hz. The EEG channel distribution was based on the international 10/20 system. FC1, FC2, FC5, FC6, Cz, C3, C4, CP1, and CP2 were selected for EEG data; the AFz channel was used as ground, and the CPz channel was used as reference. The vastus medialis, the biceps femoris, the lateral gastrocnemius, and the tibialis anterior were selected to collect sEMG data (Gordleeva et al., 2020; Tortora et al., 2020). Differential electrodes were used for sEMG signal collecting. The distance between differential electrodes was about 1.4 cm, and it shared the reference electrode with EEG signals.

Experimental procedure setup

The experiments were conducted in a quiet room with electromagnetic interference being controlled, to verify the effectiveness of the proposed method.

The lower movement task of the experiment included eight kinds of lower limb movement intentions, such as going upstairs on right and left legs, going downstairs on right and left legs, crouching, stepping forward on the left leg, stepping forward on the right leg, and standing. The subjects were asked to avoid unnecessary movement in the experiment and perform voluntary movement of the lower limb without any stimulation.

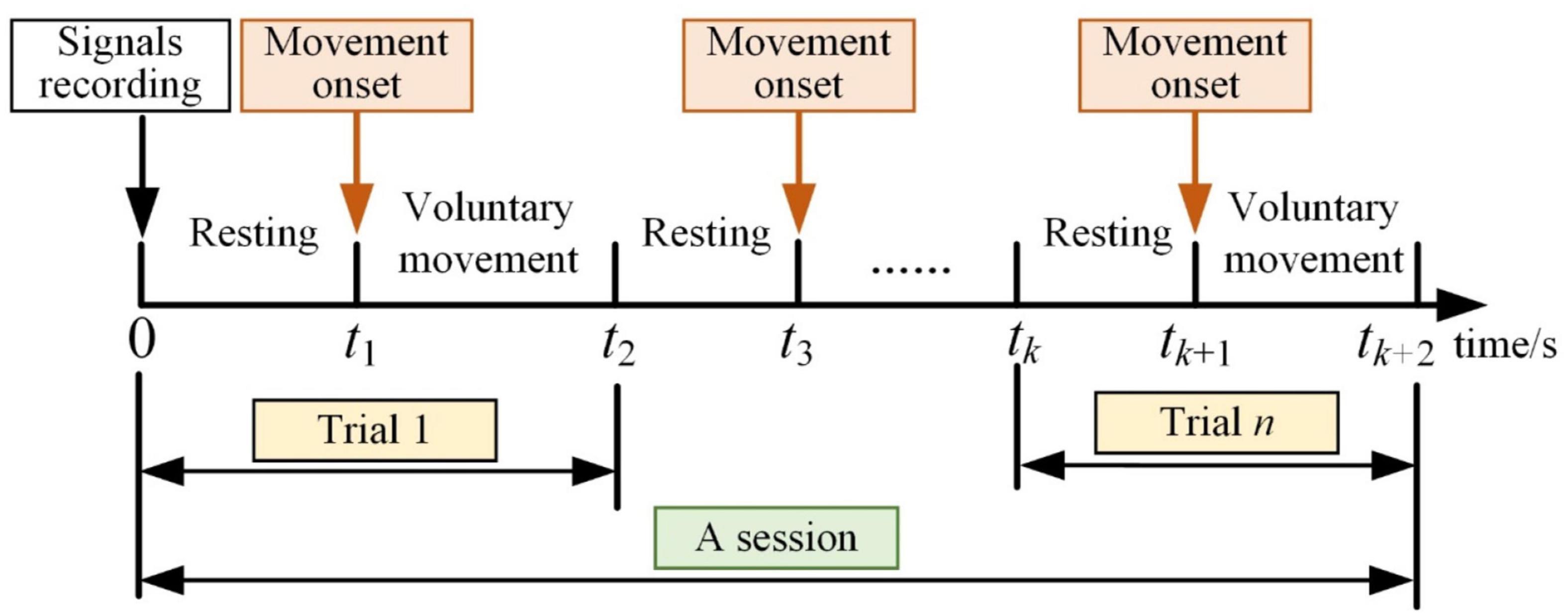

These experiments included eight movement tasks, and each movement task consisted of several sessions. The subject repeated the same voluntary movement tasks five times (five trials) in each session, and the time scheme of each session is shown in Figure 6. Each movement task contained 10 sessions.

After recording the EEG and sEMG signals, there were more than 10 s for the subjects to return to the resting stage. The subjects performed lower limb voluntary movement at any time, and this movement was consistent for about 3 s. There were also more than 10 s for resting between each trial, and about a 5-min intermission after a session of the experiment. The movement onset was detected by sEMG signals, and it was used for data partition (Xiaodong et al., 2021).

In online experiments, the lower limb movement tasks were the same as offline experiments. Reciprocal exoskeleton robot motion and lower limb movements served as the control targets, and this robot was controlled based on the finite state machine (FSM). It took standing as the intermediate state to switch the movement state. Once the lower limb movement was detected, the result was sent to the robot controller and displayed on the PC for the experimenter, and the experimenter recorded this result and the actual movement dictated by the subjects.

Experimental results

The response time difference between electroencephalogram and surface electromyogram

After preprocessing the EEG and sEMG data from the experiments, the movement onset of the lower limb was detected by the tibialis anterior sEMG signals. Taking this movement onset as the data center and zero in the time domain, 2 s of EEG and sEMG data pre- and post-onset time were segmented for processing, so the time range of data was from −2.0 to 2.0 s (Ioana Sburlea et al., 2015a,b; Delisle-Rodriguez et al., 2017; Dong et al., 2018).

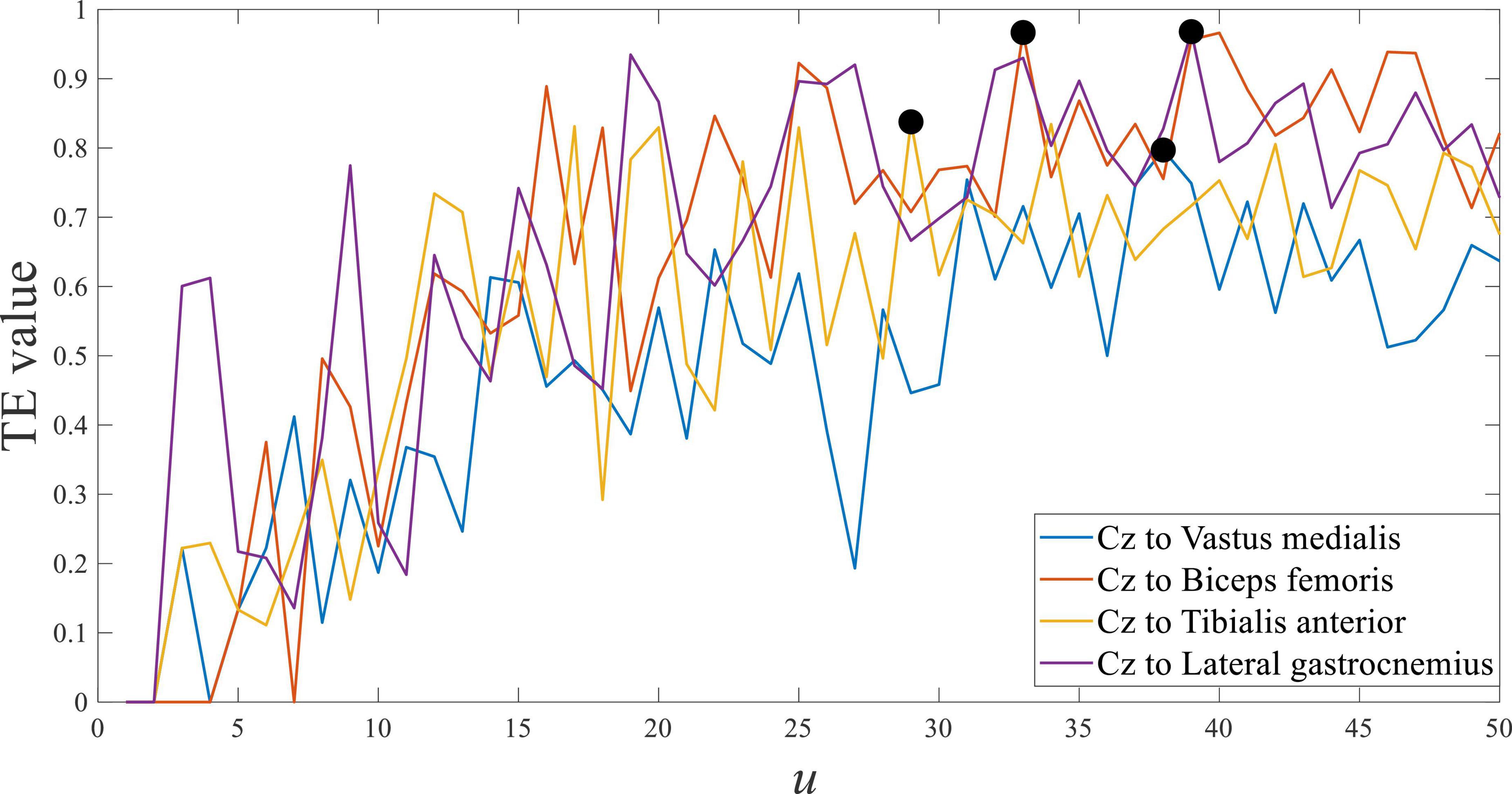

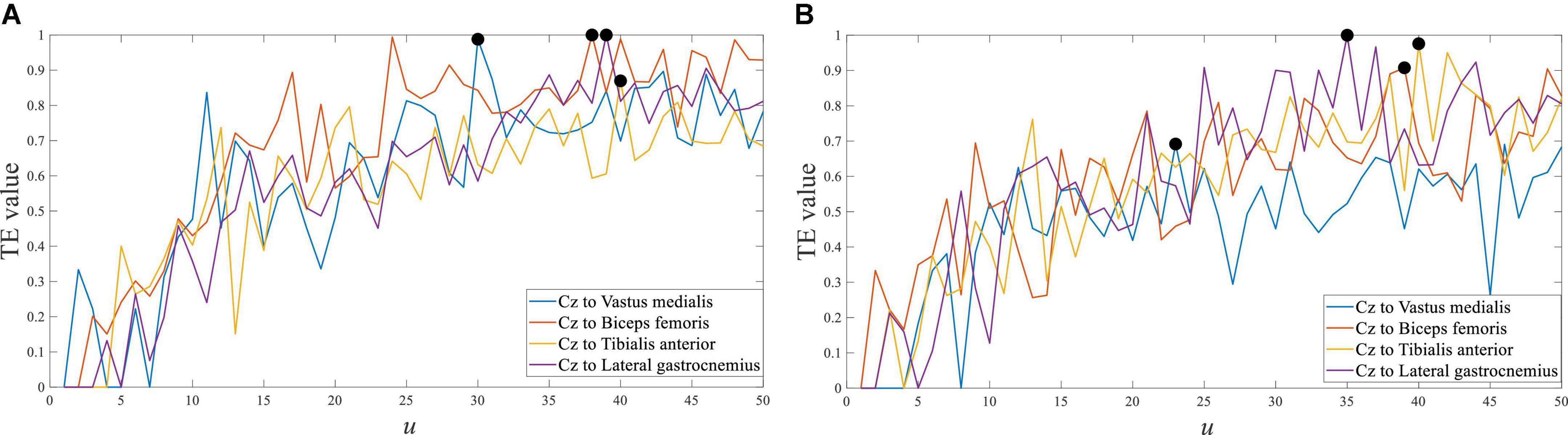

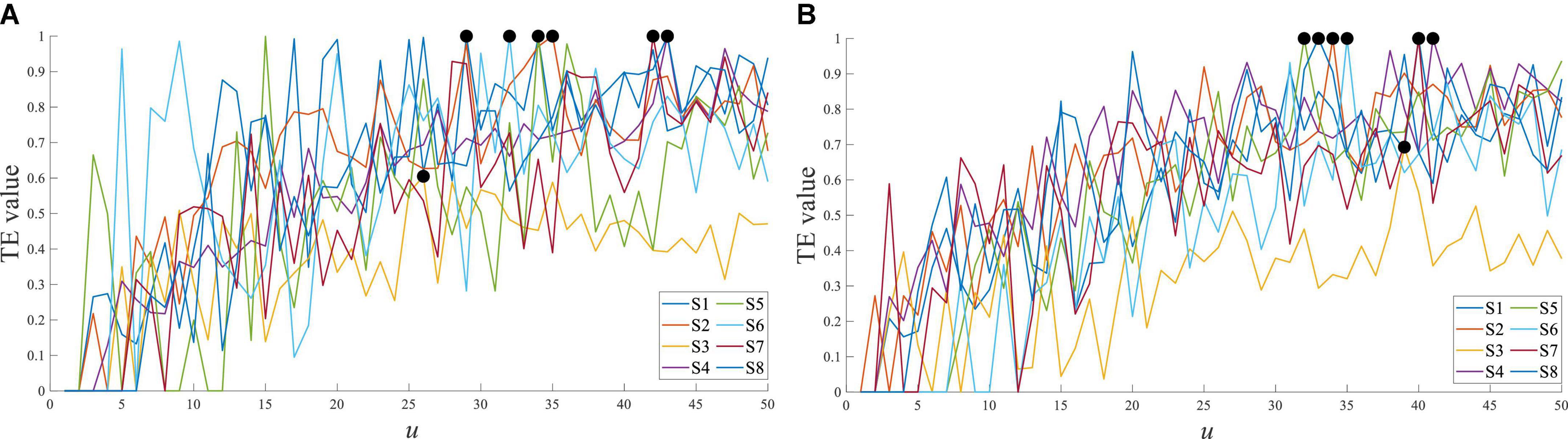

Using the symbolic transfer entropy to calculate the response time difference between EEG and sEMG, the time step u was set as 50. The result of subject 1 is shown in Figure 7, and it was the average of multiple trials.

Figure 7 shows the transfer entropy of Cz EEG to all sEMG at stepping forward of the left leg, and the marked black point was the maximum value of each curve. The maximum value of transfer entropy was distributed in the u range of 27 to 38, and it meant that the response time difference between EEG and sEMG was equal to 27∼38 ms. The response time difference between EEG and sEMG was greater than the estimated value of 25∼26 ms. Comparing the estimated value and calculated value, they were consistent in the order of magnitude but different in value. It suggested that the physiological parameter-based estimation method was correct. In addition, the generation and transmission of EEG and sEMG were more complex in human movement.

Taking the representative channel Cz in the central cortex as an example, the transfer entropy results of EEG to each sEMG channel signal from crouching are shown in Figure 8. The response time difference between EEG and sEMG at crouching of the left leg was equal to 30∼40 ms, and it was equal to 24∼39 ms in crouching of the right leg. The maximum value of the transfer entropy of the Cz channel EEG signal to different sEMG signals of the left and right legs was consistent in the same movement. It indicated that they had almost the same performance in left and right leg movements (Hashimoto and Ushiba, 2013; Kline et al., 2021).

Figure 8. Transfer entropy of Cz EEG to all sEMG at crouching from S1. (A) Left leg and (B) right leg.

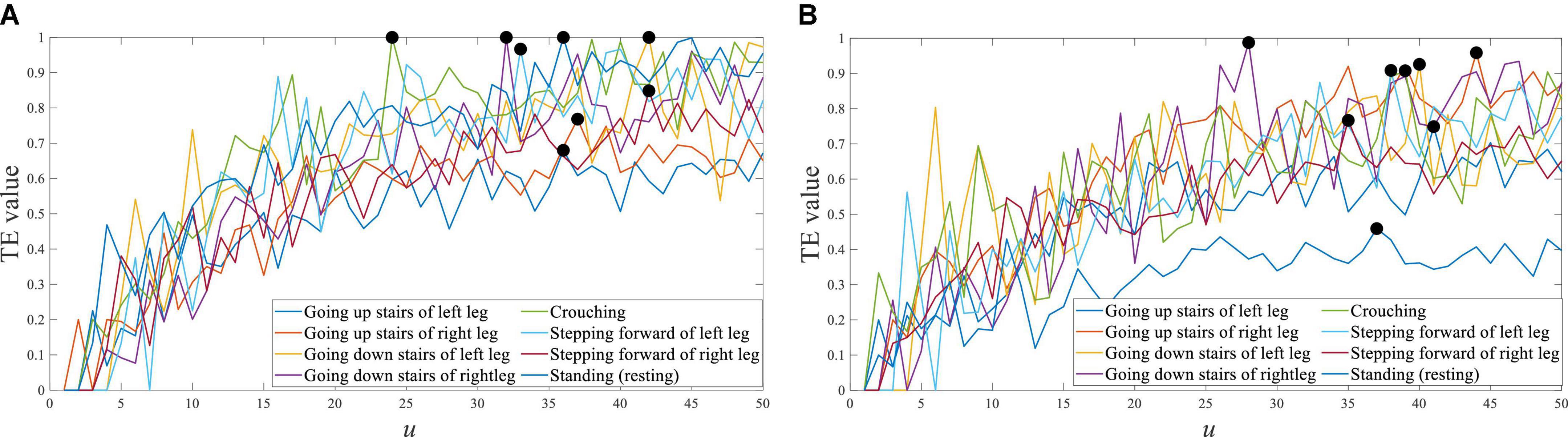

For the different movement tasks of the lower limb, the transfer entropy of Cz EEG to tibialis anterior sEMG is shown in Figure 9. So, the response time difference between EEG and sEMG was equal to 24∼42 ms of the left leg, and equal to 27∼45 ms of the right leg. Those maximum distributions were mainly concentrated near 35 ms. This result indicated a slight difference in response time between different lower limb movement tasks, which can be equal to the same value.

Figure 9. Transfer entropy of Cz EEG to tibialis anterior sEMG of all movement tasks from S1. (A) Left leg and (B) right leg.

For different subjects, the transfer entropy of Cz EEG to tibialis anterior sEMG at stepping forward is shown in Figure 10. the response time difference between EEG and sEMG was equal to 26∼43 ms at stepping forward of the left leg, and there was a little difference between the subjects. The response time difference was 32∼42 ms at stepping forward of the right leg. The results demonstrated that each subject’s results had a good consistency and verified the universality of the results.

Figure 10. Transfer entropy of Cz EEG to tibialis anterior sEMG at stepping forward from all subjects. (A) Stepping forward of the left leg and (B) stepping forward of the right leg.

The maximum value of transfer entropy between EEG and sEMG signals was different in different EEG and sEMG channels and different lower limb movement tasks of the same subject. However, the distribution of the maximum value was still concentrated near a specific value (u = 35). For different subjects, the distribution of the maximum value also had an aggregated distribution with an insignificant difference. This result proved that the response time difference between EEG and sEMG was insignificant. To simplify the procedure of eliminating this time difference for EEG and sEMG fusion, the response time difference between all EEG and all sEMG of all subjects was set to a constant (ΔT = 35 ms).

Performance of lower limb voluntary movement intention detection

Compared with EEG and sEMG fusion-based lower limb movement intention detection, this lower limb movement intention decoding was based on only EEG and only EMG signals.

After the data segment was conducted for each trial from −2.0 to 2.0 s, a preprocessing of EEG and sEMG data was conducted with window length L, respectively, and the length of the sliding window was set as 0.5*L. The feature of EEG and sEMG was also extracted with window length L, respectively. The data fusion dataset of EEG and sEMG and feature fusion dataset of EEG and sEMG were constructed for each subject, respectively. The time window length of signals has an impact on classification accuracy. The time window length L was 200, considering the information contained and the cost time of processing.

Detecting the robustness of the proposed CNN-LSTM model and preventing an over-fitting problem, 5-fold cross-validation was employed. The dataset was randomly divided into five equal-sized subsets. That can avoid the contingency of a single dataset partition through multiple random partitions of the dataset. It can ensure the consistency of distribution of the training set and testing set to avoid over-fitting to a certain extent and reduce the possibility of falling into the local optimum (Rui et al., 2018), four of which were used for training, and one was used for testing. Each subject’s data were used to train the subject’s own classifier.

Performance of electroencephalogram- and surface electromyogram-based movement intention detection

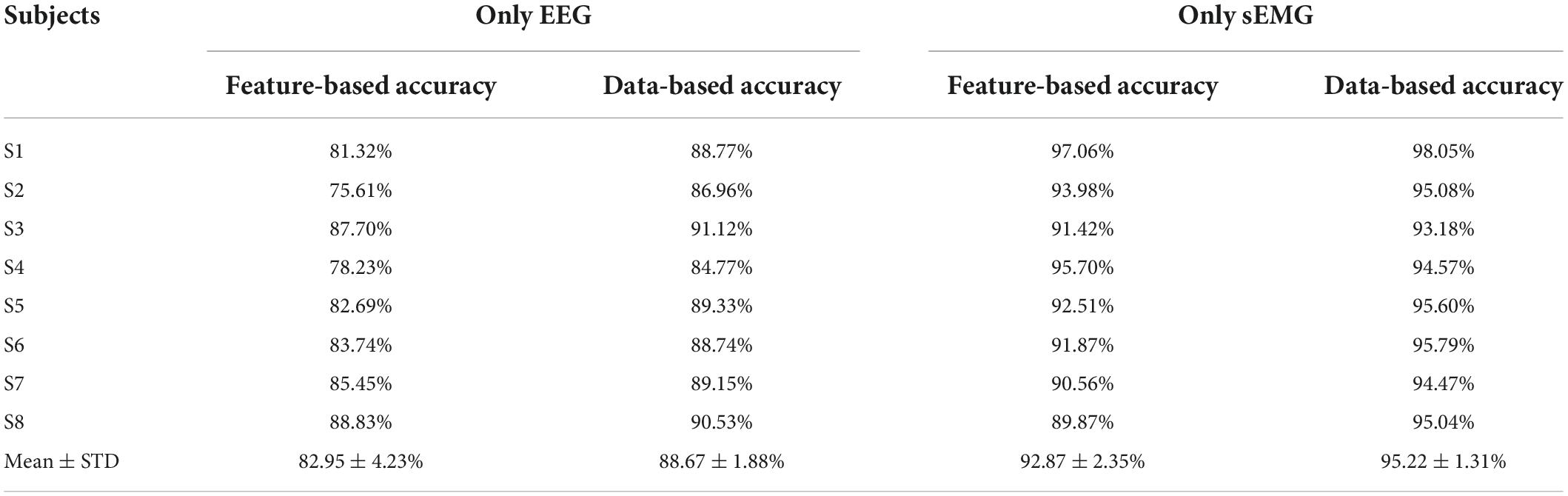

For the recognition of lower limb movement intention based on only EEG or sEMG signals, this work also used the data and feature of EEG or EMG to test. The classifier was the established CNN-LSTM model, and the data window length was equal to 200. The performance of EEG- and sEMG-based lower limb movement intention detection is shown in Table 1.

Table 1. Performance of electroencephalogram (EEG)- and sEMG-based lower limb movement intention detection.

The standard deviation (STD) of the average accuracy of EEG feature-based lower limb movement intention detection was 82.95 ± 4.23%, and the average accuracy of EEG data-based detection was 88.67 ± 1.88%. These corresponded to normal distribution (p = 0.90 and p = 0.42, respectively, Shapiro–Wilk test). There was a significant difference between EEG feature-based and EEG data-based detection (t = −5.43, p = 0.001 with the paired t-test). The average accuracy of sEMG feature-based lower limb movement intention detection was 92.87 ± 2.35%, and the average accuracy of sEMG data-based detection was 95.22 ± 1.31%. These corresponded to normal distribution (p = 0.62 and p = 0.53, respectively). There was a significant difference between EEG feature-based and EEG data-based detection (t = −3.39, p = 0.01).

The accuracy of EEG or sEMG data-based detection had a better performance by using the CNN-LSTM model, and sEMG data- or feature-based detection achieved a good classification accuracy compared with EEG data- or feature-based detection (t = −6.18, p = 0.00 and t = −4.25, p = 0.00, respectively). It indicated that the sEMG-based method had a distinct advantage in detecting lower limb movement.

Performance of electroencephalogram and surface electromyogram fusion-based movement intention detection

The response time difference (ΔT) between EEG and sEMG was obtained by the previous work, and it can be used to improve classification performance. Eliminating this time difference, this work took the current time t of EEG signals as the time benchmark. The current time of time window for sEMG signals was t+ΔT, which meant that the sEMG data lagged EEG data.

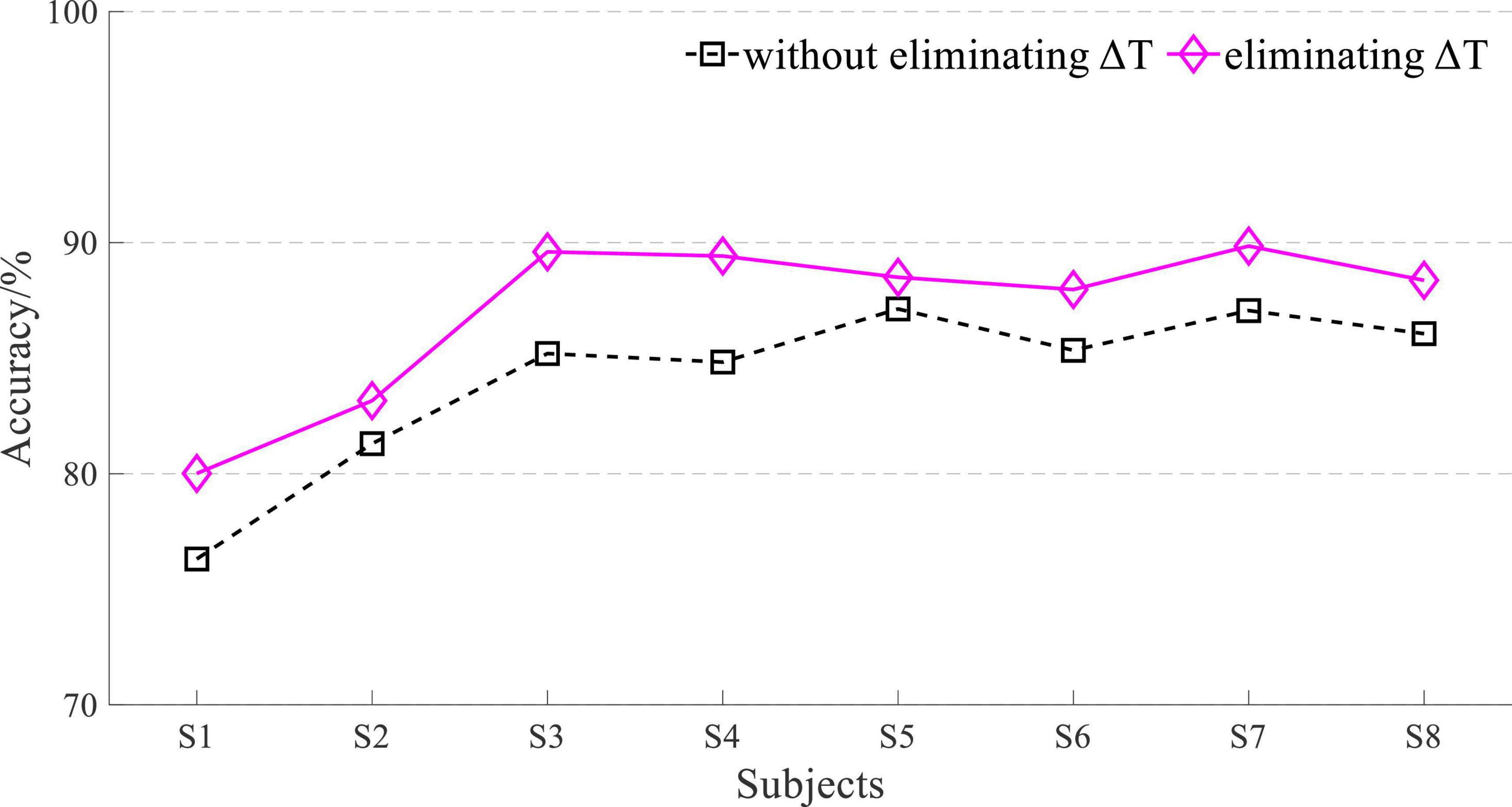

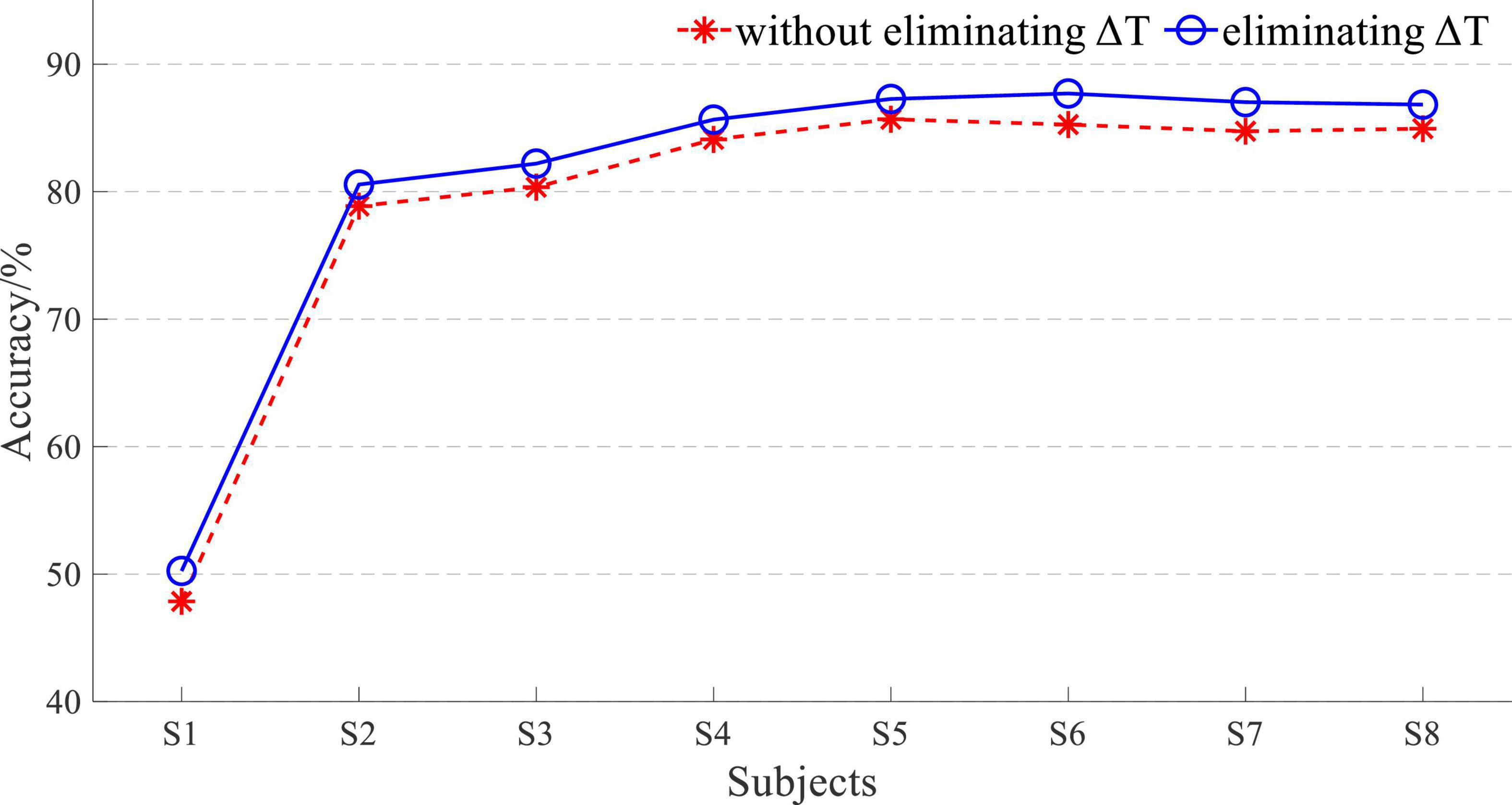

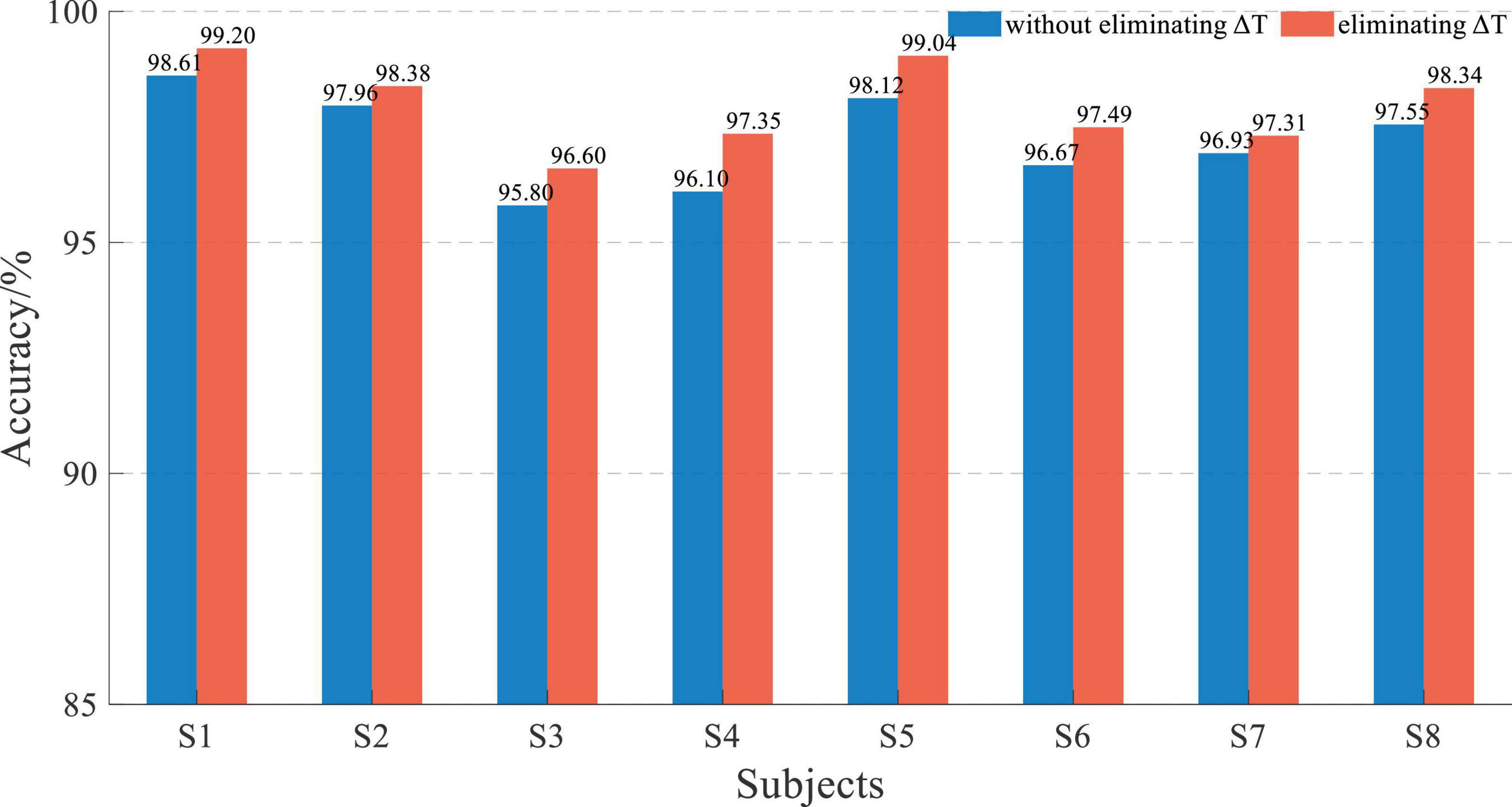

It is well known that the signal sequence of longer length contains more information, and a higher accuracy of pattern recognition will be obtained to a certain extent. So, the signal length greatly influences the performance of detection. The response time difference ΔT between EEG and sEMG was set as 35 ms. If the time window length L was set as 200, the proportion of ΔT in L is less than 20%. Therefore, L was set to 50 to increase the ratio of ΔT to reflect its importance. The length of the sliding window was also set as 50 in this part. With an L of 50, the 5-fold cross-validation-based average accuracy of EEG and sEMG data fusion is shown in Figure 11.

The average accuracy of EEG and sEMG data fusion-based movement detection without eliminating ΔT was 84.15 ± 3.42% at L of 50 and did not correspond to normal distribution (p = 0.02). When using EEG and sEMG data fusion to classify by eliminating ΔT, the average accuracy rate was 87.11 ± 3.34% and did not correspond to a normal distribution (p = 0.01). There was a significant difference between whether eliminating ΔT in data fusion (t = −7.15 and p = 0.00). It verified the effectiveness of eliminating this time difference ΔT. The average improvement accuracy was 2.96 ± 1.09%, corresponding to a normal distribution (p = 0.64).

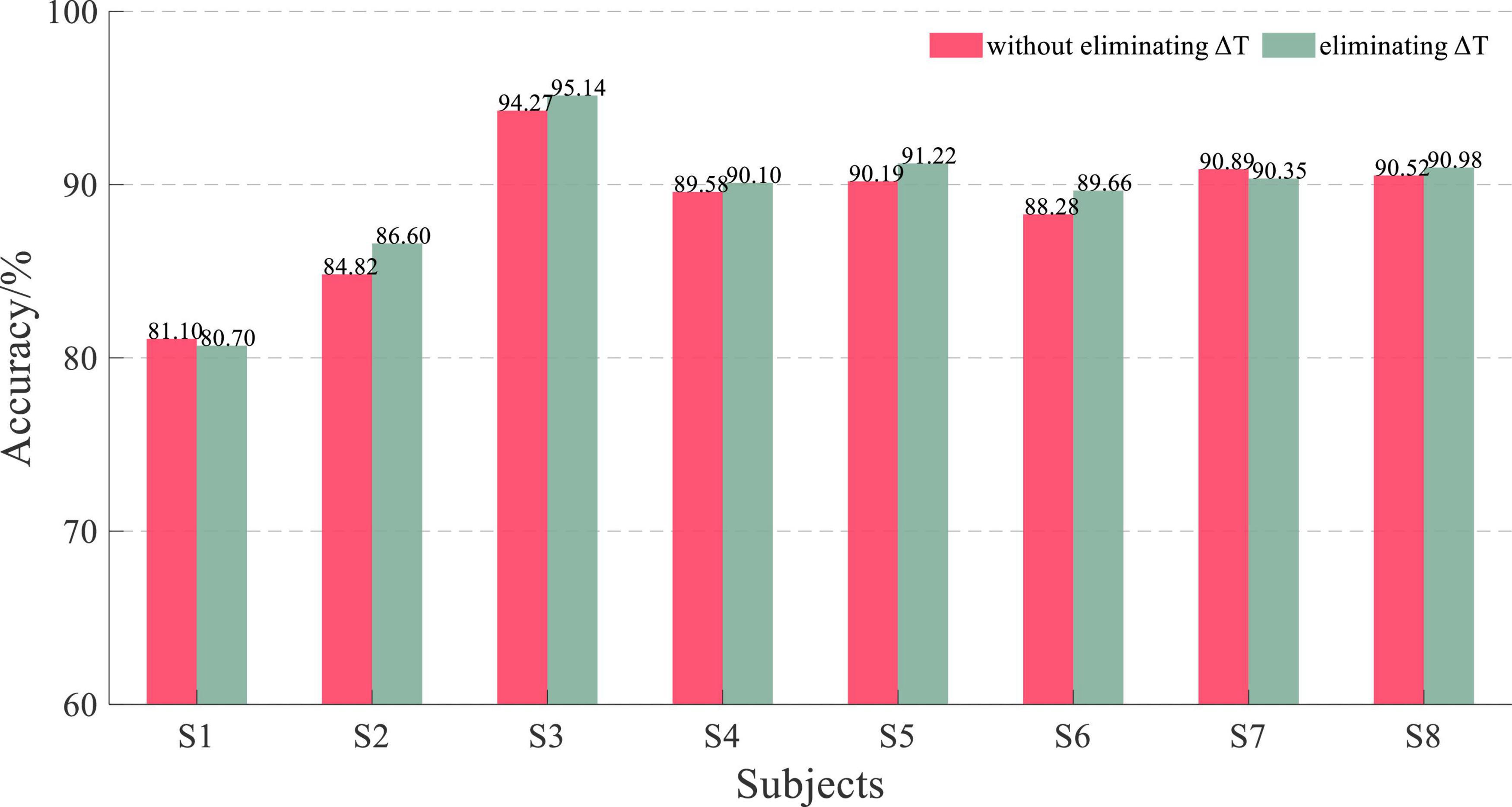

With an L of 50, the 5-fold cross-validation-based average accuracy of EEG and sEMG feature fusion is shown in Figure 12, and the average accuracy without eliminating ΔT was 78.98 ± 11.99% and did not correspond to a normal distribution (p = 0.00). When eliminating ΔT, the average accuracy was 80.94 ± 11.85% and did not correspond to a normal distribution (p = 0.00). There also was a significant difference between whether eliminating ΔT in feature fusion (t = −15.35 and p = 0.00). It also verified the effectiveness of eliminating this time difference ΔT. The average improvement accuracy was 1.96 ± 0.34%, corresponding to a normal distribution (p = 0.20).

When the L was equal to 50, although the classification accuracy was not good enough, eliminating ΔT had a significant impact on the result. It suggested that eliminating the time difference ΔT can improve the EEG and sEMG fusion-based detection of lower limb movement intention.

The result of L = 50 was not good, and the 5-fold cross-validation-based average accuracy of whether eliminating ΔT based on EEG and sEMG data fusion with an L of 200 is shown in Figure 13.

Figure 13. Performance comparison histogram of whether eliminating ΔT based on EEG and sEMG data fusion with an L of 200.

The average accuracy of EEG and sEMG data fusion without eliminating ΔT was 97.21 ± 0.94%, corresponding to a normal distribution (p = 0.79). When using EEG and sEMG data fusion to classify by eliminating ΔT, the average accuracy rate was 97.96 ± 0.86%, corresponding to normal distribution (p = 0.58). With paired t-test on those results, it showed that there was a significant difference between whether eliminating ΔT of EEG and sEMG to detect the movement. It demonstrated that eliminating the time difference between EEG and sEMG can improve accuracy. The average accuracy of improvement was 0.75 ± 0.26%, corresponding to a normal distribution (p = 0.62).

The 5-fold cross-validation-based average classification accuracy of EEG and sEMG feature fusion is shown in Figure 14. The average accuracy of EEG and sEMG feature fusion without eliminating ΔT was 88.71 ± 3.79%, corresponding to a normal distribution (p = 0.47). When using EEG and sEMG feature fusion to classify by eliminating ΔT, the average accuracy rate was 89.32 ± 3.93%, corresponding to a normal distribution (p = 0.20). The paired t-test on those results showed no significant difference between the two methods (t = −2.23 and p = 0.06). It also verified the effectiveness of eliminating this time difference ΔT. The average improvement accuracy was 0.64 ± 0.76%, corresponding to a normal distribution (p = 0.73).

Figure 14. Performance comparison histogram of whether eliminating ΔT based on EEG and sEMG feature fusion with an L of 200.

The eliminating time difference ΔT can improve the accuracy by about 0.7%. Although this increased accuracy was not considerable, it also proved the impact of the time difference. With the increase in the window length L, the proportion of ΔT in the data window decreased. So, the accuracy improvement with an L of 200 was not significant, but there was still a statistical improvement.

The experimental results showed that the accuracy based on the data fusion method was higher than the feature fusion-based method in all offline testing. Feature extraction of EEG and sEMG signals significantly reduced input data dimension, and the cost time of single classification was 10∼20 ms. The cost time of a single classification using data fusion was 70∼80 ms. However, because there were many channels of EEG and sEMG signals for classification, it took 200∼250 ms to extract features. The data fusion method only needed to preprocess the EEG and sEMG data, which only took about 100 ms in the offline experiment. The data fusion method was more appropriate in performance and cost time than the CNN-LSTM model. This was the reason for establishing a hybrid CNN-LSTM model, which can not only make full use of feature extraction of the CNN but also make full use of the memory and classification ability of LSTM.

Therefore, EEG and sEMG data fusion-based method was used for testing in the online experiment. In an online application, the signal processing algorithm reads the real-time EEG and sEMG data from the data buffer of the EEG/sEMG acquisition device. According to our research results, sEMG after ΔT of the current time should be used for processing and fusion, but this part of sEMG data is not available in the data buffer at the current time. Solving this problem took the sEMG signal as the time benchmark. So, the time window of EEG signals was [t-ΔT, t+L-ΔT], and the time window of sEMG signals was [t, t+L]. The t+L was the end of the data buffer. The online experimental scene is shown in Figure 15.

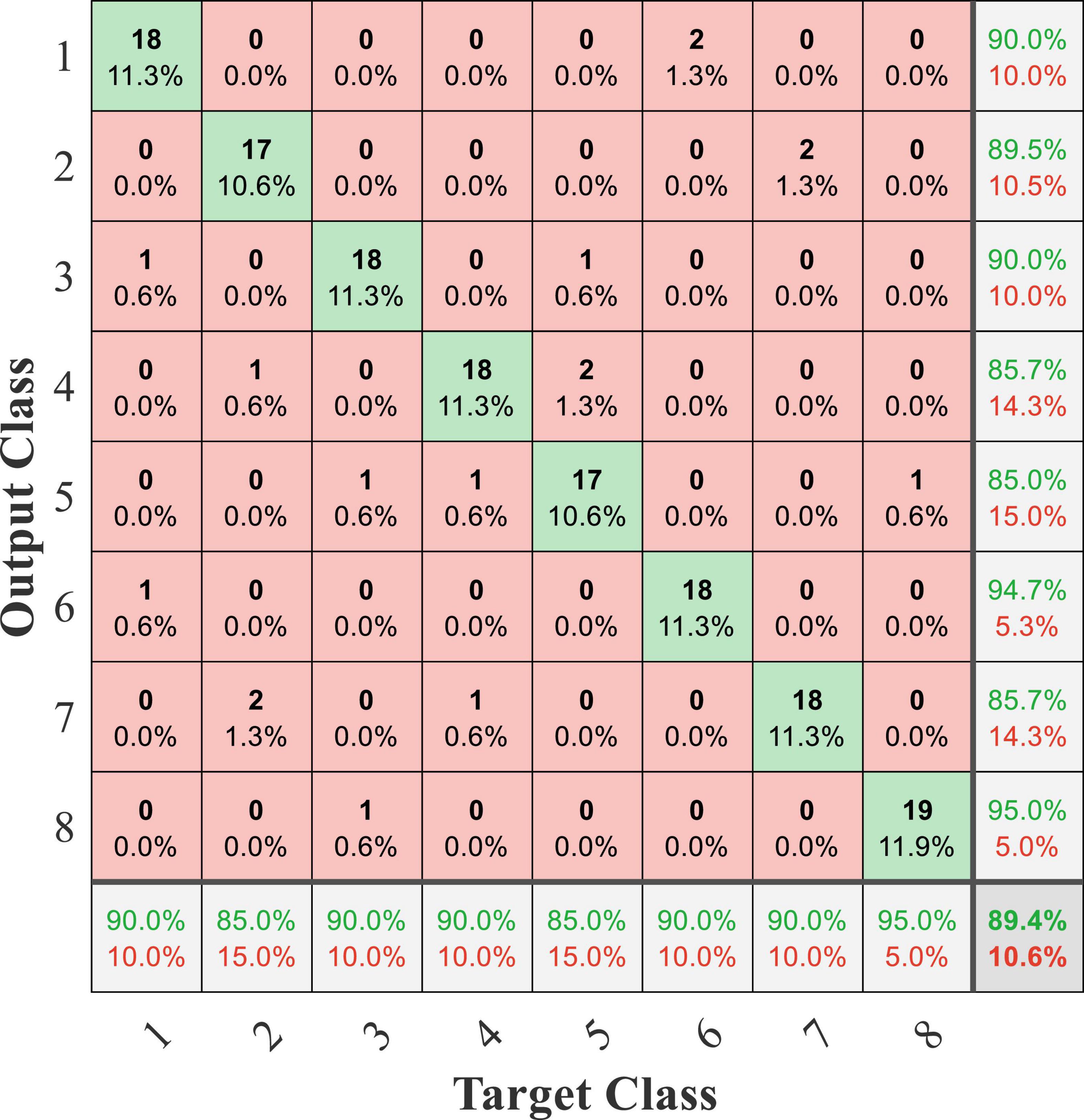

In this online experiment, the experimenter recorded the detection result displayed by the PC and the actual movement dictated by the subject and judged whether the detection result was correct based on the actual movement dictated by the subject. The exoskeleton robot still performed the predetermined action according to the detection result. The confusion matrix of online testing from subject 1 is shown in Figure 16, and the average accuracy was 89.4%. Compared with the accuracy of the offline experiment, the online accuracy was significantly reduced. The online experiment was conducted on several separate days. This phenomenon was caused by the time variability of EEG and sEMG signals. Furthermore, the computational cost of processing in online testing was about 220 ms, and a classification result was transmitted from the PC to the robot controller in a cost time of 45 ms.

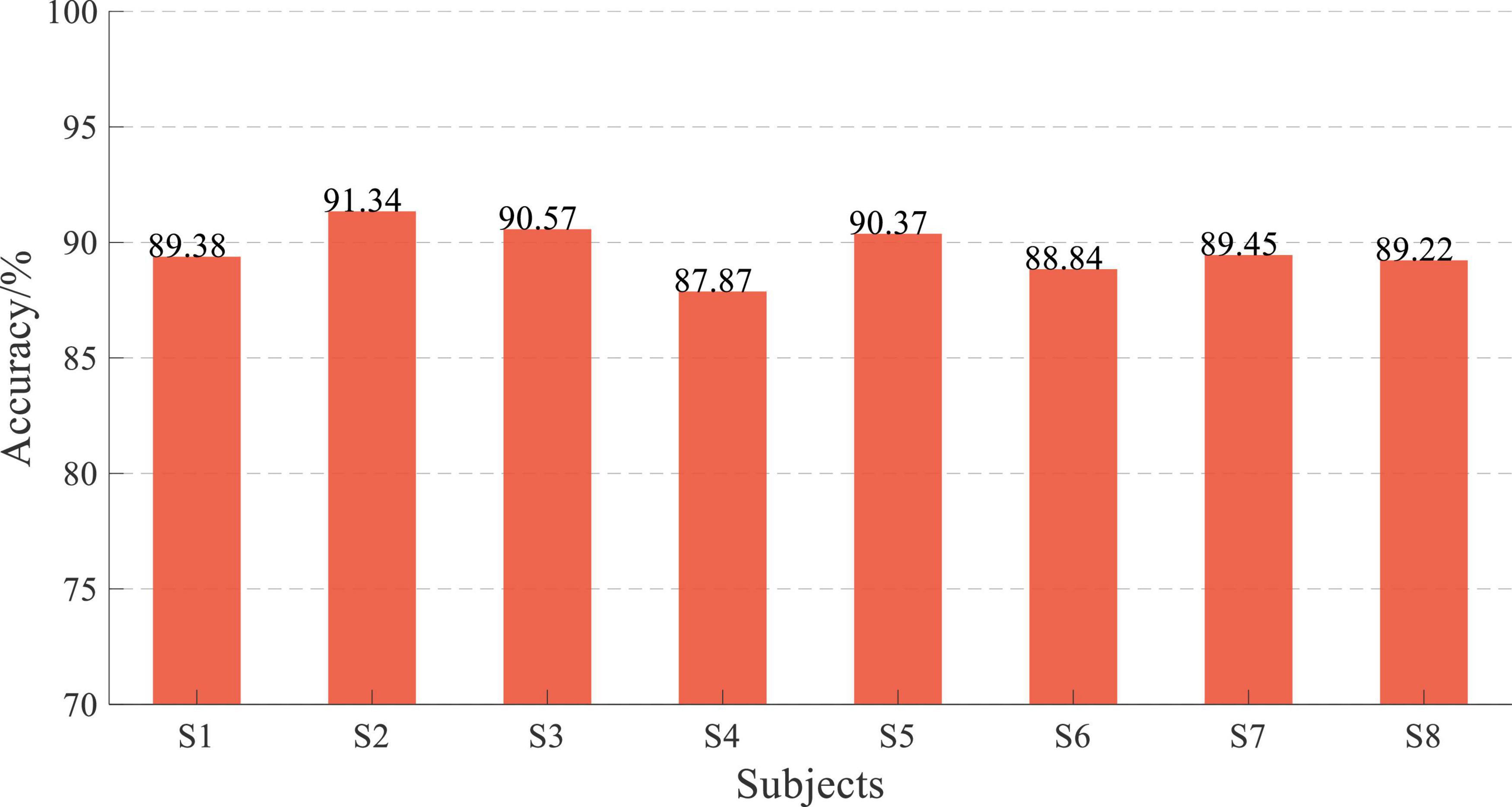

The online performance of subjects is shown in Figure 17, and the average accuracy of EEG and sEMG data feature fusion was 89.63 ± 1.02%, corresponding to a normal distribution (p = 0.94). Similarly, the online average accuracy of all subjects was significantly reduced by about 10% compared with offline performance. However, the online classification accuracy of all subjects remained at a high level.

Discussion

The synchronization of electroencephalogram and surface electromyogram signals

Electroencephalogram and sEMG signals were the expression of human movement, and they were related to each other. These two signals have been widely used in decoding human movement intention, especially in EEG and sEMG fusion. It is known that sEMG signals are controlled by EEG signals, but the generation of those two signals is different. The characteristics of those two signals should be fully considered in EEG and sEMG fusion-based decoding. Through theoretical analysis, the significant difference in EEG and sEMG signals was reflected in the signal response characteristics, especially the difference in response time. This time difference will affect the information consistency of those two signals at the current time in data fusion. Therefore, obtaining the response time difference between EEG and sEMG signals is the key to further improving the performance of fusion decoding. After analyzing the generation and transmission mechanism of EEG and sEMG signals, this work concentrated on the signal information processing of each stage. The response time difference between EEG and sEMG was estimated based on the physiological parameters of the subjects, and the result was about 24 ∼ 26ms. At the same time, the symbolic transfer entropy was used to calculate this response time difference during lower limb voluntary movement. The result of the response time difference was between 24 and 44 ms, and the data center was 35 ms. Therefore, obtaining the response time difference between EEG and sEMG signals is the key to further improving the performance of fusion decoding.

The research on the coupling characteristics of EEG and sEMG is also called corticomuscular coherence (CMC), proving their relevance. Ping Xie et al. analyzed the response time difference between EEG and upper limb sEMG based on the multiscale transfer entropy, which was about 20 ∼ 25 ms (Ping et al., 2016). Qinyi Sun et al. calculated the time difference between EEG and lower limb sEMG based on coherence, and the average time difference was about 23 ms (Qinyi et al., 2022). These experiments both controlled the maximum volumetric contraction (MVC) of the human limb, collecting the EEG and sEMG signals at the subjects’ stable state. In this work, the movement task was lower limb voluntary movement, and no variable control was performed in the experiment. Therefore, the calculated time difference was more remarkable than others, but it benefits EEG and sEMG fusion decoding.

Performance of electroencephalogram and surface electromyogram fusion

The EEG and sEMG fusion-based detection of lower limb movement intention had a good performance. Starting with the response time difference between EEG and sEMG, this work synchronized those two signals to ensure the consistency of those two signals in fusion. The data fusion and feature of EEG and sEMG were both employed for testing, and a hybrid CNN-LSTM was established for the decoding model.

Compared with the performance of EEG- and sEMG-based movement intention detection, EEG and sEMG fusion-based detection had a higher classification accuracy. The average accuracy of sEMG data-based detection was 95.22%, close to the accuracy of EEG and sEMG fusion-based detection. It indicated that the sEMG-based method had a massive advantage in lower limb movement intention decoding. The accuracy of EEG data fusion was the highest, which was 97.96%. That suggested that EEG and sEMG fusion is a better method for detecting lower limb movement.

With different time window length L, the results of eliminating ΔT were significantly different. When the L was 50, the average accuracy of improvement was 2.96 ± 1.09% in EEG and sEMG data fusion-based decoding and was 0.75 ± 0.26% with an L of 200. These results proved that this time difference had an influence on the EEG and sEMG fusion. Qinyi sun et al. also tested the impact of response time difference with different time window lengths, and the results of LDA showed that the improvement was from 0.96 to 1.42% (Qinyi et al., 2022).

All offline experimental results showed that the accuracy of data fusion was significantly higher than feature fusion-based accuracy, and the average accuracy of EEG and sEMG data fusion was more than 95%. This result proved the effectiveness of the proposed CNN-LSTM model. Considering the performance and real-time performance of those two fusion methods, the EEG and sEMG data fusion method was used for online testing. The online experimental results showed that the average accuracy was more than 89.63 ± 1.02%. It has been reduced by about 10% compared with offline performance.

A performance comparison of previous work is shown in Table 2. It shows that the proposed method reaches state-of-the-art performance, which verifies the effectiveness of our approach. The proposed method and results can achieve a good classification effect performance for lower limb movement detection. The proposed method and results of this work have an advantage in the number of categories. In offline and online performance, this work realizes a high classification accuracy. These related studies used the EEG and sEMG feature fusion-based method to detect movement tasks, and the EEG and sEMG data fusion-based method was used to recognize the movement in this work. The advantage of the data fusion method is that it omits the steps of feature extraction, saves computational cost, and has significant advantages in the online application.

The average computational cost of processing and classification result transmission in online testing was 265 ms, and it took more computational cost than the offline experiment. Compared with other results, this result was due to the short data length and simple data processing, which hid complex processing in the process of CNN-LSTM (Romero-Laiseca et al., 2020). This experimental result indicated that the proposed method could detect lower limb voluntary movement with EEG and sEMG data fusion. But it also reflects that the generalization ability of the proposed CNN-LSTM model still needs to be further improved.

The limitations of the study and further work

Despite the advantages of EEG and sEMG fusion-based detection, two limitations should be considered. One is that only eight subjects participated in this experiment. Even though the experimental group size was not large enough, the number of subjects was sufficient to demonstrate the effectiveness of the proposed method. The average accuracy of those subjects was more than 97%, which can prove the effectiveness of the proposed method. Another limitation of this study is a gender imbalance among subjects, and only one female subject participated in the experiment. No significant differences were found between male and female subjects. So, a large sample size and a balance between male and female subjects are desired to fully evaluate the robustness of the proposed method.

Furthermore, it is essential to have asynchronous detection in human–computer interaction and robot control. Asynchronous detection or control is the core of making this technology from laboratory to application. In future work, another detection method will be added to build a hybrid detection system for the application.

Conclusion

This work focused on the fusion of EEG and sEMG to detect lower limb voluntary movement. Analyzing the generation and transmission mechanism of EEG and sEMG signals, this work concentrated on the signal information processing of each stage. The response time difference between EEG and sEMG was estimated based on human physiological parameters, the estimated value was about 24 ∼ 26 ms, and the time difference was calculated using symbolic transfer entropy. A hybrid CNN-LSTM was established as the decoding model, which can fully use the advantages of feature extraction and classification of those two networks. The experiments also validated the feasibility of the decoding method. The calculated value of the response time difference between EEG and sEMG was 25–45 ms, and it was set to a constant (ΔT = 35 ms) to simplify the procedure of EEG and sEMG fusion.

The offline experimental results showed that the CNN-LSTM eliminating ΔT could achieve an average accuracy of more than 95.00 and 78.00% in data fusion and feature fusion, respectively. When the L was equal to 50, the improved accuracy of eliminating ΔT was 2.96% in EEG and sEMG data fusion-based decoding. However, the accuracy of improvement decreased to 0.75% with an L of 200. The reason is the proportion of time difference ΔT in time window length L. It is undeniable that eliminating time difference ΔT can improve performance. This offline experiment demonstrated that data fusion of EEG and sEMG had a better performance by using CNN-LSTM and eliminating the time difference between those two signals can effectively improve the accuracy. The online results demonstrated that the detection accuracy was higher than 87% for all subjects. But it has been reduced by about 10% compared with offline performance.

In general, a method to improve the detection of lower limb voluntary movement intention in the fusion of EEG and sEMG patterns was proposed, which is significant for the interactive control of the lower limb exoskeleton robot.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Institutional Review Board of Xi’an Jiaotong University. The patients/participants provided their written informed consent to participate in this study. All the experiments were conducted in accordance with the Declaration of Helsinki.

Author contributions

XZ supervised the work and revised the manuscript. HL did the research and wrote the manuscript. RD and ZL organized the experiments and analyzed the data. CL carried out the experiments. All authors contributed to the article and approved the submitted version.

Funding

This research work was supported by the National Natural Science Foundation of China (No. U1813212), the Key R&D Project of Shaanxi (No. 2019ZDLGY14-09), and the Fundamental Research Funds for the Central Universities (No. sxjh032021055).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ajayi, M. O., Djouani, K., and Hamam, Y. (2020). Interaction Control for Human-Exoskeletons. J. Control Sci. Eng. 2020:8472510. doi: 10.1155/2020/8472510

Bano, H., Gul, S., and Kazi, U. (2020). Nerve conduction velocity and magnitude of action potential in motor peripheral nerves of upper and lower limbs in normal subjects. Rawal Med. J. 45, 981–984.

Chao-Hung, K., Jia-Wei, C., Yi, Y., Yu-Hao, L., Shao-Wei, L., Ching-Fu, W., et al. (2022). A Differentiable Dynamic Model for Musculoskeletal Simulation and Exoskeleton Control. Biosens. Basel 12:312. doi: 10.3390/bios12050312

Chao, Z., Xizhe, Z., Zhengfei, L., Hongying, Y., Jie, Z., and Yanhe, Z. (2016). Human-machine force interaction design and control for the HIT load-carrying exoskeleton. Adv. Mechanical Eng. 8, 1–14. doi: 10.1177/1687814016645068

Delisle-Rodriguez, D., Villa-Parra, A. C., Bastos, T., Lopez-Delis, A., Neto, A. F., Krishnan, S., et al. (2017). Adaptive Spatial Filter Based on Similarity Indices to Preserve the Neural Information on EEG Signals during On-Line Processing. Sensors 17: 2725. doi: 10.3390/s17122725

Dezhen, X., Daohui, Z., Xingang, Z., Yaqi, C., and Yiwen, Z. (2021). Synergy-Based Neural Interface for Human Gait Tracking With Deep Learning. IEEE Trans. Neural. Syst. Rehabil. Eng. 29, 2271–2280. doi: 10.1109/TNSRE.2021.3123630

Dong, L., Weihai, C., Kyuhwa, L., Ricardo, C., Fumiaki, I., Mohamed, B., et al. (2018). EEG-Based Lower-Limb Movement Onset Decoding: Continuous Classification and Asynchronous Detection. IEEE Trans. Neural. Syst. Rehabil. Eng. 26, 1626–1635. doi: 10.1109/tnsre.2018.2855053

Crouch, D. L., Pan, L., Filer, W., Stallings, J. W., and Huang, H. (2018). Comparing Surface and Intramuscular Electromyography for Simultaneous and Proportional Control Based on a Musculoskeletal Model: A Pilot Study. IEEE Trans. Neural. Syst. Rehabil. Eng. 26, 1735–1744. doi: 10.1109/tnsre.2018.2859833

Fleischer, C., Wege, A., Kondak, K., and Hommel, G. (2006). Application of EMG signals for controlling exoskeleton robots. Biomed. Tech. 51, 314–319. doi: 10.1515/bmt.2006.063

Gordleeva, S. Y., Lobov, S. A., Grigorev, N. A., Savosenkov, A. O., Shamshin, M. O., Lukoyanov, M. V., et al. (2020). Real-Time EEG–EMG Human–Machine Interface-Based Control System for a Lower-Limb Exoskeleton. IEEE Access 8, 84070–84081. doi: 10.1109/access.2020.2991812

Hamza, M. F., Ghazilla, R. A. R., Muhammad, B. B., and Yap, H. J. (2020). Balance and stability issues in lower extremity exoskeletons: A systematic review. Biocybern. Biomed. Eng. 40, 1666–1679. doi: 10.1016/j.bbe.2020.09.004

Hashimoto, Y., and Ushiba, J. (2013). EEG-based classification of imaginary left and right foot movements using beta rebound. Clin. Neurophysiol. 124, 2153–2160. doi: 10.1016/j.clinph.2013.05.006

Hofste, A., Soer, R., Salomons, E., Peuscher, J., Wolff, A., Van Der Hoeven, H., et al. (2020). Intramuscular EMG Versus Surface EMG of Lumbar Multifidus and Erector Spinae in Healthy Participants. Spine 45, 1319–1325. doi: 10.1097/brs.0000000000003624

Hooda, N., Das, R., and Kumar, N. (2020). Fusion of EEG and EMG signals for classification of unilateral foot movements. Biomed. Signal Proc. Control 60: 101990. doi: 10.1016/j.bspc.2020.101990

Ioana Sburlea, A., Montesano, L., Cano De La Cuerda, R., Alguacil Diego, I. M., Carlos Miangolarra-Page, J., and Minguez, J. (2015a). Detecting intention to walk in stroke patients from pre-movement EEG correlates. J. Neuroeng. Rehabil. 12:113. doi: 10.1186/s12984-015-0087-4

Ioana Sburlea, A., Montesano, L., and Minguez, J. (2015b). Continuous detection of the self-initiated walking pre-movement state from EEG correlates without session-to-session recalibration. J. Neural. Eng. 12:036007. doi: 10.1088/1741-2560/12/3/036007

Jianfeng, Z., Xia, M., and Lijiang, C. (2019). Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Proc. Control 47, 312–323. doi: 10.1016/j.bspc.2018.08.035

Jiangjiang, L., Biao, L., Pengfei, Y., Ding, W., and Derong, L. (2017). “Long Short-term Memory based on a Reward/punishment Strategy for Recurrent Neural Networks,” in Proceedings of the 32ND Youth Academic Annual Conference of Chinese Association of Automation (YAC), (Hefei: IEEE).

Jinbiao, L., Gansheng, T., Yixuan, S., Yina, W., and Honghai, L. (2022). A Novel Delay Estimation Method for Improving Corticomuscular Coherence in Continuous Synchronization Events. IEEE Trans. Biomed. Eng. 69, 1328–1339. doi: 10.1109/TBME.2021.3115386

Jochumsen, M., Shafique, M., Hassan, A., and Niazi, I. K. (2018). Movement intention detection in adolescents with cerebral palsy from single-trial EEG. J. Neural. Eng. 15:066030. doi: 10.1088/1741-2552/aae4b8

Junhyuk, C., Tae, K. K., Hyeok, J. J., Laehyun, K., Joo, L. S., and Hyungmin, K. (2020). Developing a Motor Imagery-Based Real-Time Asynchronous Hybrid BCI Controller for a Lower-Limb Exoskeleton. Sensors 20:7309. doi: 10.3390/s20247309

Khataei Maragheh, H., Gharehchopogh, F. S., Majidzadeh, K., and Sangar, A. B. (2022). A New Hybrid Based on Long Short-Term Memory Network with Spotted Hyena Optimization Algorithm for Multi-Label Text Classification. Mathematics 10:488. doi: 10.3390/math10030488

Kline, A., Ghiroaga, C. G., Pittman, D., Goodyear, B., and Ronsky, J. (2021). EEG differentiates left and right imagined Lower Limb movement. Gait Post. 84, 148–154. doi: 10.1016/j.gaitpost.2020.11.014

Knox, J., Gupta, A., Banwell, H. A., Matricciani, L., and Turner, D. (2021). Comparison of EMG signal of the flexor hallucis longus recorded using surface and intramuscular electrodes during walking. J. Electromyogr. Kinesiol. 60:102574. doi: 10.1016/j.jelekin.2021.102574

Kwak, N.-S., Mueller, K.-R., and Lee, S.-W. (2015). A lower limb exoskeleton control system based on steady state visual evoked potentials. J. Neural. Eng. 12:056009. doi: 10.1088/1741-2560/12/5/056009

Lipeng, Z., Peng, W., Rui, Z., Mingming, C., Li, S., Jinfeng, G., et al. (2020). The Influence of Different EEG References on Scalp EEG Functional Network Analysis During Hand Movement Tasks. Front. Hum. Neurosci. 14:367. doi: 10.3389/fnhum.2020.00367

Liwei, C., Duanling, L., Xiang, L., and Shuyue, Y. (2019). The Optimal Wavelet Basis Function Selection in Feature Extraction of Motor Imagery Electroencephalogram Based on Wavelet Packet Transformation. IEEE Access 7, 174465–174481. doi: 10.1109/access.2019.2953972

Longbin, Z., Zhijun, L., Yingbai, H., Christian, S., Gutierrez, F. E. M., and Ruoli, W. (2021). Ankle Joint Torque Estimation Using an EMG-Driven Neuromusculoskeletal Model and an Artificial Neural Network Model. IEEE Trans. Auto. Sci. Eng. 18, 564–573. doi: 10.1109/tase.2020.3033664

Nobue, A., Kunimasa, Y., Tsuneishi, H., Sano, K., Oda, H., and Ishikawa, M. (2020). Limb-Specific Features and Asymmetry of Nerve Conduction Velocity and Nerve Trunk Size in Human. Front. Physiol. 11: 609006. doi: 10.3389/fphys.2020.609006

Palayil Baby, J., Paras, A., Lian, X., Kairui, G., Hairong, Y., Mark, W., et al. (2020). “Estimation of Ankle Joint Torque and Angle Based on S-EMG Signal for Assistive Rehabilitation Robots,” in Biomedical Signal Processing: Advances in Theory, Algorithms and Applications, ed. G. Naik (Singapore: Springer), 31–47.

Pfurtscheller, G., Allison, B. Z., Brunner, C., Bauernfeind, G., Solis-Escalante, T., Scherer, R., et al. (2010). The hybrid BCI. Front. Neurosci. 4:42. doi: 10.3389/fnpro.2010.00003

Phinyomark, A., Phukpattaranont, P., and Limsakul, C. (2012). Feature reduction and selection for EMG signal classification. Exp. Syst. Appl. 39, 7420–7431. doi: 10.1016/j.eswa.2012.01.102

Ping, X., Fang-Mei, Y., Xiao-Ling, C., Yi-Hao, D., and Xiao-Guang, W. (2016). Functional coupling analyses of electroencephalogram and electromyogram based on multiscale transfer entropy. Acta Physica Sinica 64:248702. doi: 10.7498/aps.64.248702

Pritchard, M., Weinberg, A. I., Williams, J. A. R., Campelo, F., Goldingay, H., and Faria, D. R. (2021). Dynamic Fusion of Electromyographic and Electroencephalographic Data towards Use in Robotic Prosthesis Control. J. Physics 1828:012056. doi: 10.1088/1742-6596/1828/1/012056

Qinyi, S., Xiaodong, Z., Hanzhe, L., and Cunxin, L. (2022). Research on Coherent EEG-EMG Synchronization Method for Driving Human Lower Limb Movements. J. Xi’an Jiaotong Univ. 56, 149–158. doi: 10.7652/xjtuxb202202016

Ranran, Z., Xiaoyan, X., Zhi, L., Wei, J., Jianwen, L., Yankun, C., et al. (2018). A New Motor Imagery EEG Classification Method FB-TRCSP plus RF Based on CSP and Random Forest. IEEE Access 6, 44944–44950. doi: 10.1109/access.2018.2860633

Romero-Laiseca, M. A., Delisle-Rodriguez, D., Cardoso, V., Gurve, D., Loterio, F., Nascimento, J. H. P., et al. (2020). A Low-Cost Lower-Limb Brain-Machine Interface Triggered by Pedaling Motor Imagery for Post-Stroke Patients Rehabilitation. IEEE Trans. Neural. Syst. Rehabil. Eng. 28, 988–996. doi: 10.1109/tnsre.2020.2974056

Rouillard, J., Duprès, A., Cabestaing, F., Leclercq, S., Bekaert, M.-H., Piau, C., et al. (2015). “Hybrid BCI Coupling EEG and EMG for Severe Motor Disabilities,” in Proceedings of the 6th International Conference on Applied Human Factors and Ergonomics and the Affiliated Conferences, (Las Vegas, NV).

Rui, L., Xiaodong, Z., Zhufeng, L., Chang, L., Hanzhe, L., Weihua, S., et al. (2018). An Approach for Brain-Controlled Prostheses Based on a Facial Expression Paradigm. Front. Neurosci. 12: 943. doi: 10.3389/fnins.2018.00943

Sawicki, G. S., Beck, O. N., Kang, I., and Young, A. J. (2020). The exoskeleton expansion: Improving walking and running economy. J. Neuroeng. Rehabil. 17:25. doi: 10.1186/s12984-020-00663-9

Shiyuan, Q., Zhijun, L., Wei, H., Longbin, Z., Chenguang, Y., and Chun-Yi, S. (2016). Brain–Machine Interface and Visual Compressive Sensing-Based Teleoperation Control of an Exoskeleton Robot. IEEE Trans. Fuzzy Syst. 25, 58–69. doi: 10.1109/tfuzz.2016.2566676

Staniek, M., and Lehnertz, K. (2008). Symbolic transfer entropy. Physical. Rev. Lett. 100:158101. doi: 10.1103/PhysRevLett.100.158101

Tortora, S., Tonin, L., Chisari, C., Micera, S., Menegatti, E., and Artoni, F. (2020). Hybrid Human-Machine Interface for Gait Decoding Through Bayesian Fusion of EEG and EMG Classifiers. Front. Neurorobot. 14:582728. doi: 10.3389/fnbot.2020.582728

Tryon, J., Friedman, E., and Trejos, A. L. (2019). “Performance Evaluation of EEG/EMG Fusion Methods for Motion Classification,” in Proceedings of the 16th IEEE International Conference on Rehabilitation Robotics. (Toronto, ON: IEEE) doi: 10.1109/ICORR.2019.8779465

Tsuchimoto, S., Shibusawa, S., Iwama, S., Hayashi, M., Okuyama, K., Mizuguchi, N., et al. (2021). e of common average reference and large-Laplacian spatial-filters enhances EEG signal-to-noise ratios in intrinsic sensorimotor activity. J. Neurosci. Methods 353: 109089. doi: 10.1016/j.jneumeth.2021.109089

Valeria, M., Reinmar, J. K., Andreea, I. S., and Gernot, R. (2020). Continuous low-frequency EEG decoding of arm movement for closed-loop, natural control of a robotic arm. J. Neural Eng. 17:046031. doi: 10.1088/1741-2552/aba6f7

Veneman, J. F., Kruidhof, R., Hekman, E. E. G., Ekkelenkamp, R., Van Asseldonk, E. H. F., and Van Der Kooij, H. (2007). Design and evaluation of the LOPES exoskeleton robot for interactive gait rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 15, 379–386. doi: 10.1109/tnsre.2007.903919

Xiaodong, Z., Hanzhe, L., Zhufengn, L., and Gui, Y. (2021). Homology Characteristic of EEG and EMG for Lower Limb Voluntary Movement Intention. Front. Neurorobot. 15:642607. doi: 10.3389/fnbot.2021.642607

Yang, W. (2020). Report on Nutrition and Chronic Diseases of Chinese Residents (2020) [Online]. Available online at: http://www.gov.cn/xinwen/2020-12/24/content_5572983.htm (accessed April 25, 2022).

Yaqing, Z., Jinling, C., Hong, T. J., Yuxuan, C., Yunyi, C., Dihan, L., et al. (2020). An Investigation of Deep Learning Models for EEG-Based Emotion Recognition. Front. Neurosci. 14:622759. doi: 10.3389/fnins.2020.622759

Yi, X. (2021). Study on the electrical model of reconstruction of cortical EEG signal by scalp EEG, Ph.D thesis Zhenjiang: Jiangsu University of Science and Technology.

Yudong, X., and Jianhong, W. (2007). Human Anatomy and Physiology. Beijing: People’s medical publishing house Co.,LTD.

Yuhang, X., McClelland, M. V., Zoran, C., and Mills, K. R. (2017). Corticomuscular Coherence With Time Lag With Application to Delay Estimation. IEEE Trans. Biomed. Eng. 64, 588–600. doi: 10.1109/TBME.2016.2569492

Yunyuan, G., Leilei, R., Rihui, L., and Yingchun, Z. (2017). Electroencephalogram-Electromyography Coupling Analysis in Stroke Based on Symbolic Transfer Entropy. Front. Neurol. 8:716. doi: 10.3389/fneur.2017.00716

Keywords: lower limb voluntary movement, precise detection, EEG and sEMG fusion, response time difference, CNN-LSTM

Citation: Zhang X, Li H, Dong R, Lu Z and Li C (2022) Electroencephalogram and surface electromyogram fusion-based precise detection of lower limb voluntary movement using convolution neural network-long short-term memory model. Front. Neurosci. 16:954387. doi: 10.3389/fnins.2022.954387

Received: 27 May 2022; Accepted: 26 August 2022;

Published: 23 September 2022.

Edited by:

Surjo R. Soekadar, Charité Universitätsmedizin Berlin, GermanyReviewed by:

Denis Delisle Rodriguez, Edmond and Lily Safra International Institute of Neuroscience of Natal (ELS-IINN), BrazilYaqi Chu, Shenyang Institute of Automation (CAS), China

Copyright © 2022 Zhang, Li, Dong, Lu and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hanzhe Li, lihanzhe@stu.xjtu.edu.cn

Xiaodong Zhang1,2,3

Xiaodong Zhang1,2,3 Hanzhe Li

Hanzhe Li Zhufeng Lu

Zhufeng Lu