- 1 School of Optoelectronic and Communication Engineering, Xiamen University of Technology, Xiamen, Fujian, China

- 2 Department of Radiology, Fujian Maternity and Child Health Hospital, Fuzhou, Fujian, China

Early treatment increases the 5-year survival rate of patients with endometrial cancer (EC). Deep learning (DL), a new computer-aided diagnosis method, has been widely used in medical image processing and can reduce misdiagnosis by radiologists. An automatic DL-based staging method for the early diagnosis of EC would benefit both radiologists and patients. To develop an effective and automatic prediction model for early EC diagnosis on magnetic resonance imaging (MRI) images, we retrospectively enrolled 117 patients (73 with stage IA, 44 with stage IB) with a pathological diagnosis of early EC confirmed by postoperative biopsy at our institution from 1 January 2018 to 31 December 2020. Axial T2-weighted images (T2WI), axial diffusion-weighted images (DWI), and sagittal T2WI images from the 117 patients were classified into stage IA and stage IB according to each patient’s pathological diagnosis. First, a semantic segmentation model based on the U-net network was trained to segment the uterine region and the tumor region on the MRI images. Then, the area ratio of the tumor region to the uterine region (TUR) in the segmentation map was calculated. Finally, receiver operating characteristic curves (ROCs) were plotted from the TUR and the pathological diagnoses of the patients in the test set to find the optimal threshold for separating stage IA from stage IB. In the test set, the trained semantic segmentation model yielded average Dice similarity coefficients for the uterus and tumor of 0.958 and 0.917 on axial T2WI, 0.956 and 0.941 on axial DWI, and 0.972 and 0.910 on sagittal T2WI, respectively. With the pathological diagnosis as the gold standard, the classification model yielded an area under the curve (AUC) of 0.86, 0.85, and 0.94 on axial T2WI, axial DWI, and sagittal T2WI, respectively. In this study, an automatic DL-based segmentation model combined with ROC analysis of the TUR on MRI images provides an effective method for early EC staging.

Introduction

Endometrial cancer (EC) is one of the most common malignant diseases worldwide. Its incidence rate increases with the gradual aging of the population and the rise in obesity (Amant et al., 2005). Cancer of the uterine corpus is often referred to as EC because more than 90% of cases occur in the endometrium (the lining of the uterus) (American Cancer Society, 2021). The prognosis of patients with early-stage EC is relatively good. In contrast, the prognosis of advanced-stage EC is extremely poor because the cancer cells have metastasized (Amant et al., 2005; Guo et al., 2020). According to the 2020 global cancer statistics (Sung et al., 2021), uterine corpus cancer is the sixth most commonly diagnosed cancer in women, with 417,000 new cases and 97,000 deaths in 2020. According to the American Cancer Society’s 2021 annual report and the Cancer Statistics, 2021 report (American Cancer Society, 2021; Siegel et al., 2021), an estimated 66,570 cases of uterine corpus cancer will be diagnosed and 12,940 women will die from the disease in the United States. The EC incidence rate has increased by about 1% per year since the mid-2000s.

According to the International Federation of Gynecology and Obstetrics (FIGO) staging system, stage IA EC is defined by tumor invasion of less than 50% of the myometrium, while stage IB EC is defined by tumor invasion of 50% or more of the myometrium (Pecorelli, 2009). The 5-year survival rate after surgery is 90–96% for stage IA patients and 78–87% for stage IB patients (Haldorsen and Salvesen, 2016; Rezaee et al., 2018; Boggess et al., 2020; Mirza, 2020). In another report, the 5-year survival rate was about 92.6% when the myometrial invasion was less than 50% and only about 66.0% when it was greater than 50% (Pinar, 2017). Low-risk patients are discouraged from adjuvant radiation therapy and require only a simple hysterectomy, while high-risk patients usually require adjuvant radiation therapy and are recommended pelvic and para-aortic lymphadenectomy (Amant et al., 2018). Therefore, an efficient and automatic prediction model for early EC staging, applied before cancer cells invade and spread, can both improve diagnostic efficiency and provide valuable information for clinicians when recommending treatment.

Magnetic resonance imaging (MRI) and contrast-enhanced dynamic MRI are very accurate in the local staging of EC (Manfredi et al., 2004). In 2009, the European Society of Urogenital Radiology (ESUR) issued guidelines for the staging of EC. These guidelines regard MRI as the preferred imaging modality for assessing disease severity in newly diagnosed EC patients (Kinkel et al., 2009). MRI is now widely accepted as the first choice for the initial staging of EC (Nougaret et al., 2019). However, two radiologists evaluating the same MRI images can reach substantially different results (Haldorsen and Salvesen, 2012). The main reason is that the pathological evaluation obtained from MRI depends largely on the experience of the radiologist (Woo et al., 2017).

In recent years, deep learning (DL) as a new computer-aided diagnosis method has been widely used in the field of image recognition (LeCun et al., 2015; Shin et al., 2016; Chan H. et al., 2020). This method can automatically capture the target area after training on a large number of data sets (Litjens et al., 2017; Chan H. P. et al., 2020). Therefore, DL is widely used in medical image processing such as classification between tumor epithelium and stroma (Du et al., 2018), providing new prognostic biomarkers for cancer recurrence prediction (Wang et al., 2016), computer-aided diagnosis (CAD) of prostate cancer and lung cancer (Serj et al., 2018; Song et al., 2018), automatic segmentation of the left ventricle in echocardiographic images (Kim et al., 2021), and classification of benign and malignant breast tumor and quality inspection in mammography images (Borges Sampaio et al., 2011; Rouhi et al., 2015).

Some DL-based studies have been carried out to assess EC. Y. Kurata et al. established a U-net model for uterine segmentation on images with uterine diseases (Kurata et al., 2019). The average Dice similarity coefficient (DSC) of the model for uterine segmentation was 0.82, which shows that the U-net model performs well in uterus segmentation. In addition, its segmentation performance was not affected by uterine diseases. However, the model only segmented the uterus on sagittal T2WI images and was not further applied to the automatic segmentation of early EC. X. Chen et al. presented a DL model to locate the EC lesion area and evaluate the depth of myometrial invasion (MI) (Chen et al., 2020). However, the AUC of their model on the test set was 0.78, which indicates that its classification performance is relatively unsatisfactory. The main reason is that only a box was used to locate the suspected lesion and the surrounding normal anatomical structure. E. Hodneland et al. used a three-dimensional CNN to segment the tumor in preoperative pelvic MRI images of EC patients (Hodneland et al., 2021). The median DSCs between the segmentation results of their model and two raters were 0.84 and 0.77, respectively. Nevertheless, they did not segment the uterus or evaluate the invasion depth. The previous work was relatively unsatisfactory in terms of segmentation performance and did not analyze the TUR in relation to early EC staging. Therefore, the purpose of this study is twofold: first, to establish a DL-based semantic segmentation model to automatically segment the tumor and uterus on MRI images; second, to analyze the performance and potential of using the TUR as a reference for early EC staging.

Materials and methods

The Institutional Review Board (IRB) of Fujian Maternity and Child Health Hospital in China approved our retrospective study, and the requirement for informed consent was waived.

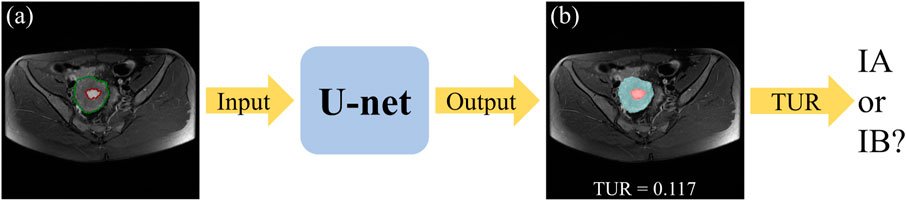

The flowchart of the automatic staging model based on DL is shown in Figure 1. First, both the tumor and the uterus in the input MRI images are labelled by an experienced radiologist, and the images are randomly divided into a training set, a validation set, and a test set. Second, the U-net segmentation model is trained on the training set. Third, the trained segmentation model produces the segmentation of the tumor and the uterus in the test set. Finally, stage IA or IB is determined for each EC patient from the TUR.

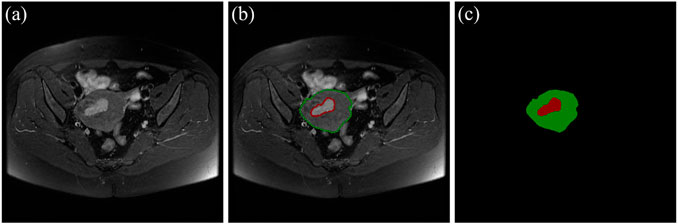

FIGURE 1. The flowchart of the automatic staging model based on DL. The original MRI image (A), where the red outline is the ground-truth of the tumor and the green outline is the ground-truth of the uterus. A blend of the predicted segmentation map of the U-net and the original MRI image (B), where the red area is the tumor and the blue area is the uterus.

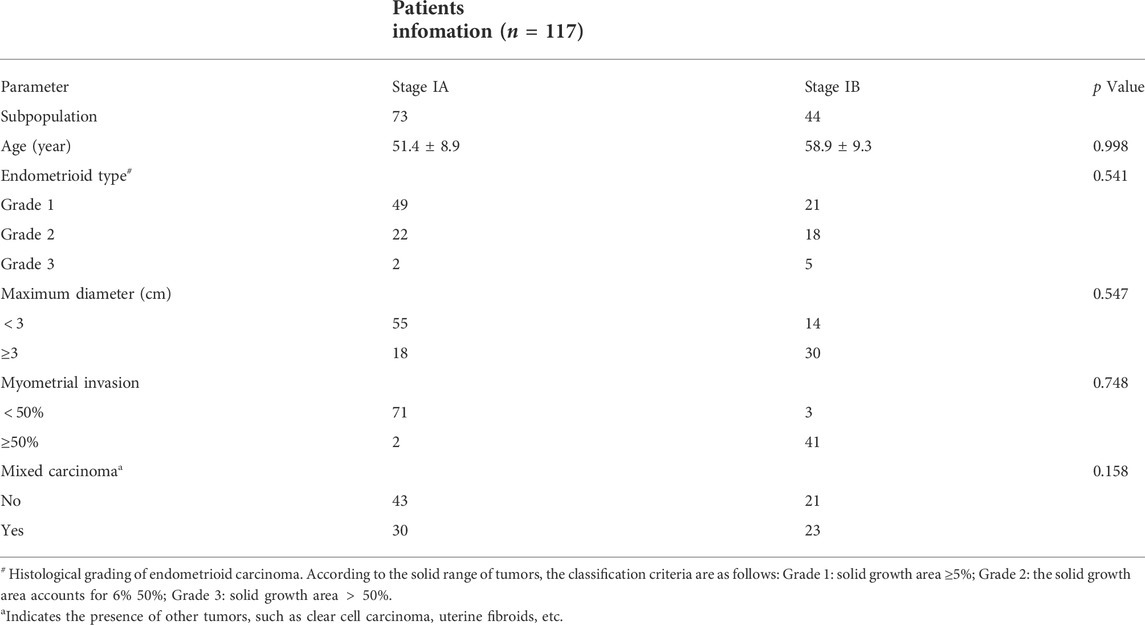

Patient population

We retrospectively enrolled 117 patients (73 patients in stage IA, 44 patients in stage IB) with a pathological diagnosis of early EC confirmed by postoperative biopsy at our institution (Fujian Maternity and Child Health Hospital) from 1 January 2018 to 31 December 2020. All 117 female patients underwent preoperative pelvic MRI and were diagnosed with stage I EC (mean age: 54.8 years, standard deviation (SD): 9.7 years) on postoperative pathology. A summary of the clinical and pathological data of these patients is shown in Table 1.

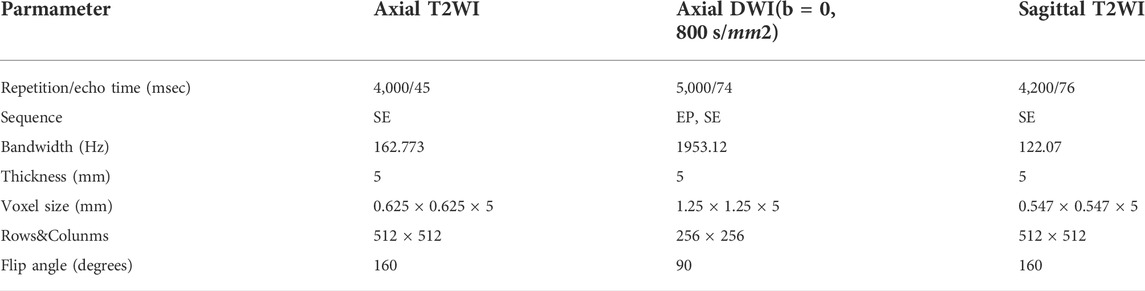

MRI protocol

All MRI examinations were performed on a 1.5 T MRI scanner (Optima MR360, GE Healthcare) with the patients lying supine on the table with their arms along their bodies. Before the examination, patients received no intramuscular injection, used a glycerin enema (Glycerini Enema) to empty the bowel, and held their urine. The MRI protocol was as follows: relative to the longest axis of the endometrial cavity, high-resolution T2WI images were acquired along three orthogonal planes (para-axial, para-sagittal) and DWI images were acquired on two planes only (para-axial). All T2WI images were acquired with fast spin-echo and shimming, and the DWI images were acquired with b-values of 0 and 800 s/mm². The detailed MRI acquisition parameters are listed in Table 2.

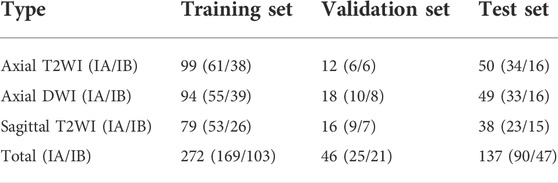

Data preparation and processing

The data set contains 117 patients with early EC, including 73 cases of stage IA and 44 cases of stage IB. An experienced radiologist selected the slices that clearly visualize the uterus and tumor from each patient’s three MRI sequences. As a result, 455 MRI images (161 axial T2WI images, 161 axial DWI images, 133 sagittal T2WI images) were obtained. Following the convention of deep learning model training, the data set was divided in the ratio of 6:1:3 (Andrew, 2018; Jayapandian et al., 2021). The patients were randomly separated into 70 cases (44 IA/26 IB) with 272 images as the training set, 12 cases (7 IA/5 IB) with 46 images as the validation set, and 35 cases (22 IA/13 IB) with 137 images as the test set. The details of the data sets are shown in Table 3. The uterus and tumor on these MRI images were manually segmented by the experienced radiologist using the software LabelMe (Russell et al., 2008). These segmented areas were used as the ground truth for uterus and tumor segmentation. An original MRI image and the corresponding segmented image are shown in Figure 2.
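The 6:1:3 division can be illustrated with a patient-level split, so that all slices of one patient land in the same subset. The following is a minimal sketch only: the function name `split_patients`, the seed, and the integer patient IDs are hypothetical, and the source does not state whether the authors stratified by stage or how they shuffled.

```python
import random


def split_patients(patient_ids, ratios=(6, 1, 3), seed=0):
    """Shuffle patient IDs and split them into train/validation/test by ratio.

    Hypothetical helper: splitting by patient (not by image) keeps every
    slice of a patient inside a single subset.
    """
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)  # reproducible shuffle
    total = sum(ratios)
    n_train = round(len(ids) * ratios[0] / total)
    n_val = round(len(ids) * ratios[1] / total)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# 117 patients, as in the study; the IDs 0..116 are placeholders.
train, val, test = split_patients(range(117))
print(len(train), len(val), len(test))
```

With 117 patients this rounding gives 70, 12, and 35 cases, which matches the subset sizes reported above.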

FIGURE 2. (A) The original MRI image. (B) The original MRI image with ground-truth contours of the uterus and tumor, where the red outline is the ground-truth of the tumor and the green outline is the ground-truth of the uterus. (C) The label image that LabelMe transforms from the ground-truth contours in (B) which is used for DL model training.

Training the DL networks

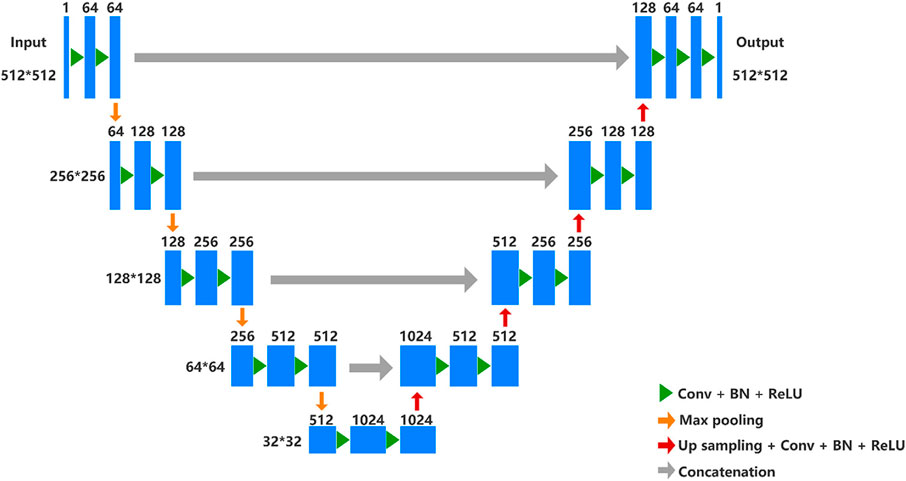

In this work, an automatic DL-based segmentation model was implemented with a U-net architecture to segment the uterus and tumor on the MRI images. U-net is a semantic segmentation network based on fully convolutional networks (FCN) and is well suited to medical image segmentation. The network architecture is shown in Figure 3. It has a contracting path to capture context information and a symmetric expanding path to allow accurate localization, which lets the network propagate context information to higher-resolution layers (Ronneberger et al., 2015). The Adam algorithm with an initial learning rate of 0.0003 was used to minimize the cross-entropy loss. The model was implemented in TensorFlow (version 2.5.0) and trained for up to 300 epochs with an early-stopping strategy to avoid overfitting. The experiments were conducted on a workstation equipped with a high-performance graphics processing unit (NVIDIA RTX 2080 Ti, Gigabyte Ltd.). Training the semantic segmentation model took about 3 hours.

FIGURE 3. U-net architecture. In the contracting path, every step consists of the repeated application of two 3 × 3 convolutions (unpadded convolutions), each followed by a rectified linear unit (ReLU), and a 2 × 2 max pooling operation with stride two for downsampling; each downsampling step doubles the number of feature channels. In the expansive path, every step consists of an upsampling of the feature map followed by a 2 × 2 up-convolution that halves the number of feature channels, a concatenation with the correspondingly cropped feature map from the contracting path, and two 3 × 3 convolutions, each followed by a ReLU.

Quantitative evaluation of the uterus and tumor segmentation

The DSC measures the overlap of two samples. In this study, the DSC was used to quantitatively evaluate the segmentation overlap of the uterus and the tumor in the test set. The DSC is calculated as follows:

$$\mathrm{DSC} = \frac{2\,|X \cap Y|}{|X| + |Y|}$$

where X is the manually segmented (ground-truth) map and Y is the predicted segmentation map. DSC values range from 0 to 1. The DSC is 0 when the two regions do not overlap at all and 1 when they overlap completely.
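As an illustration of the DSC definition, the following minimal sketch computes the coefficient for two small binary masks. The function name `dice`, the nested-list mask format, and the toy masks are assumptions made for the example and are not taken from the authors' repository.

```python
def dice(mask_x, mask_y):
    """Dice similarity coefficient between two binary masks.

    Masks are nested lists of 0/1 with identical shape (an assumed format).
    """
    x = [v for row in mask_x for v in row]
    y = [v for row in mask_y for v in row]
    if len(x) != len(y):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for a, b in zip(x, y) if a and b)
    size_sum = sum(1 for a in x if a) + sum(1 for b in y if b)
    if size_sum == 0:
        return 1.0  # convention chosen here: two empty masks agree fully
    return 2.0 * intersection / size_sum


# Toy ground-truth and predicted masks (illustrative values only).
truth = [[0, 1, 1],
         [0, 1, 1],
         [0, 0, 0]]
pred = [[0, 1, 1],
        [0, 1, 0],
        [0, 0, 0]]
print(dice(truth, pred))  # 2 * 3 / (4 + 3)
```

Here the masks share 3 pixels and contain 4 and 3 foreground pixels, so the DSC is 6/7, or about 0.857.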

Calculation of TUR

An area-based approach is used to calculate the TUR on the segmentation maps predicted by the DL model for the test set. Area is measured as a number of pixels. As shown in Figure 1, the tumor and uterus areas are obtained and the TUR is calculated as the ratio of the number of pixels in the red area to the number of pixels in the blue and red areas combined. The TUR is calculated in the same way for MRI slices acquired with different scanning parameters.
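The pixel-count definition of the TUR can be sketched as follows. The label encoding (0 for background, 1 for uterus, 2 for tumor), the function name `tumor_uterus_ratio`, and the toy label map are hypothetical; the source only specifies that red (tumor) pixels are divided by red plus blue (uterus) pixels.

```python
BACKGROUND, UTERUS, TUMOR = 0, 1, 2  # hypothetical label encoding


def tumor_uterus_ratio(label_map):
    """TUR = tumor pixels / (tumor pixels + uterus pixels)."""
    flat = [v for row in label_map for v in row]
    tumor = flat.count(TUMOR)
    uterus = flat.count(UTERUS)
    if tumor + uterus == 0:
        raise ValueError("no uterus or tumor pixels in the segmentation map")
    return tumor / (tumor + uterus)


# Toy predicted segmentation map (illustrative values only).
example = [
    [0, 1, 1, 1, 0],
    [1, 1, 2, 1, 1],
    [1, 2, 2, 1, 1],
    [0, 1, 1, 1, 0],
]
print(tumor_uterus_ratio(example))  # 3 tumor pixels / 16 labelled pixels
```

Because the ratio is dimensionless, it does not depend on pixel spacing, which is consistent with applying the same calculation to slices acquired with different scanning parameters.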

Validation and statistics

The test set of 35 randomly selected patients (22 IA/13 IB), comprising 137 images (50 axial T2WI, 49 axial DWI, 38 sagittal T2WI), is used to validate the performance of the semantic segmentation model. For the TURs obtained from the predicted segmentation maps on the three MRI sequences, the ROCs were plotted and the AUCs were calculated. For each patient in the test set, the corresponding slices from the three MRI sequences are segmented by the model and the TURs of the segmentation maps are calculated; the final classification is then determined by the threshold values obtained from the corresponding ROCs. Statistical analyses were performed in SPSS (version 26.0, SPSS Inc.), and p-values were obtained by t-test. The test data sets and the code implementation presented in this study can be found in an online repository (https://github.com/mw1998/Segmentation-Area-Ratio).
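The ROC analysis described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' SPSS procedure: stage IB is treated as the positive class, a case is called positive when its TUR is at or above the threshold, and the "optimal" threshold is chosen with Youden's J statistic (sensitivity + specificity − 1), which the source does not specify. The scores and labels are made-up toy values.

```python
def roc_points(scores, labels):
    """Return (threshold, sensitivity, specificity) per candidate threshold.

    A case is called positive (assumed: stage IB) when score >= threshold.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for t in sorted(set(scores)):
        tp = sum(1 for s, l in zip(scores, labels) if l == 1 and s >= t)
        tn = sum(1 for s, l in zip(scores, labels) if l == 0 and s < t)
        points.append((t, tp / n_pos, tn / n_neg))
    return points


def auc(scores, labels):
    """AUC as the probability that a positive case outscores a negative one."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_threshold(scores, labels):
    """Pick the ROC point that maximizes sensitivity + specificity - 1."""
    return max(roc_points(scores, labels), key=lambda p: p[1] + p[2] - 1)


# Toy TUR values and stages (1 = IB, 0 = IA); not data from the study.
turs = [0.10, 0.15, 0.18, 0.22, 0.25, 0.30, 0.35, 0.40]
stages = [0, 0, 0, 0, 1, 0, 1, 1]
threshold, sensitivity, specificity = youden_threshold(turs, stages)
print(threshold, sensitivity, specificity, auc(turs, stages))
```

Other criteria, such as the point closest to the top-left corner of the ROC plot, can select a different threshold on the same data, so the criterion should be reported alongside the threshold.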

Results

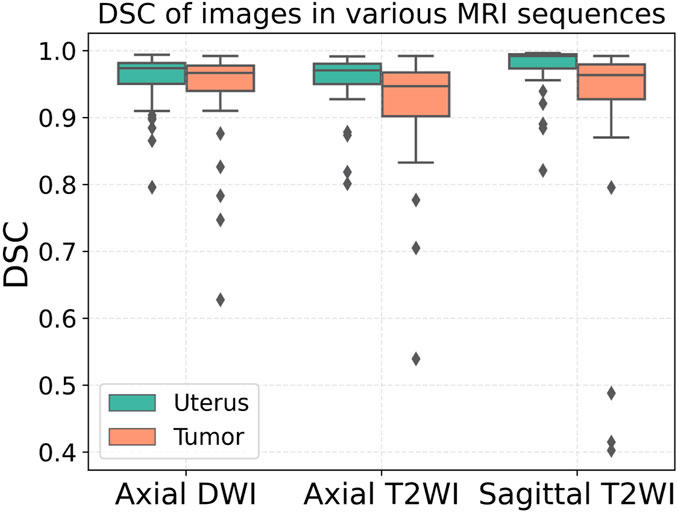

Performance of the automatic uterus and tumor segmentation model

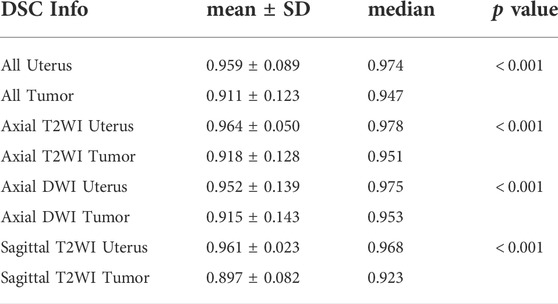

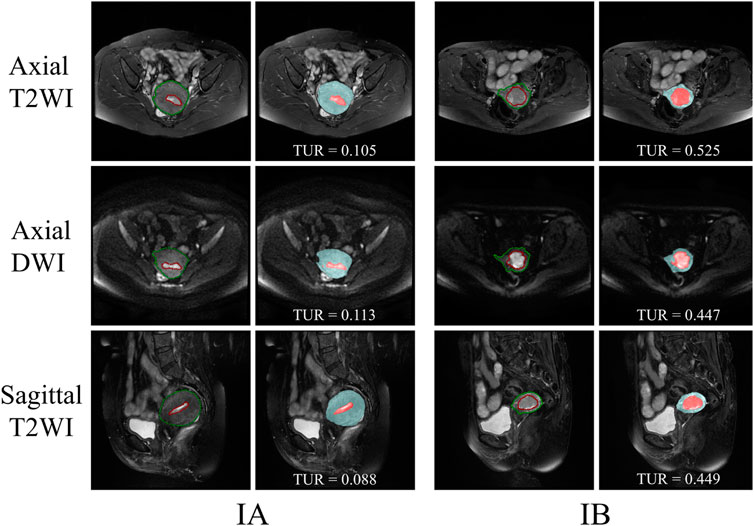

The segmentation results for a patient with stage IA EC (left) and a patient with stage IB EC (right) on axial T2WI, axial DWI, and sagittal T2WI images are shown in Figure 5. Both the uterus and the tumor were well segmented. The average DSC values of the uterus and tumor over the 137 MRI images in the test set are 0.959 and 0.911, respectively. A box plot of the DSC values is shown in Figure 4. The DSCs for all three MRI sequences are above 0.9. As shown in Figure 4, the sagittal T2WI and axial DWI images show the better DSCs among the three MRI sequences, and the DSC of the uterus is clearly higher than that of the tumor. The DSC variances of the uterus and tumor in the three MRI sequences are less than 0.15, indicating that the data fluctuate little and that the segmentation model is stable. The DSCs of the segmented uterus and tumor on MRI images are shown in Table 4. In all three MRI sequences, the DSCs of the segmented tumor and the segmented uterus differ significantly (p < 0.001).

TUR findings

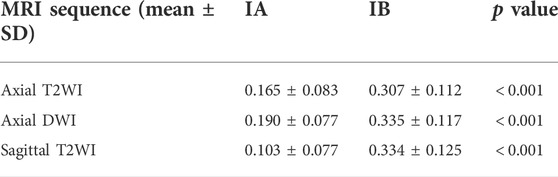

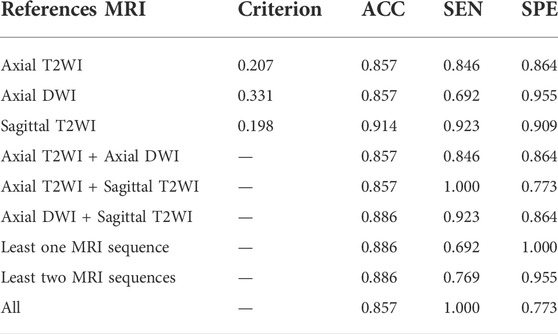

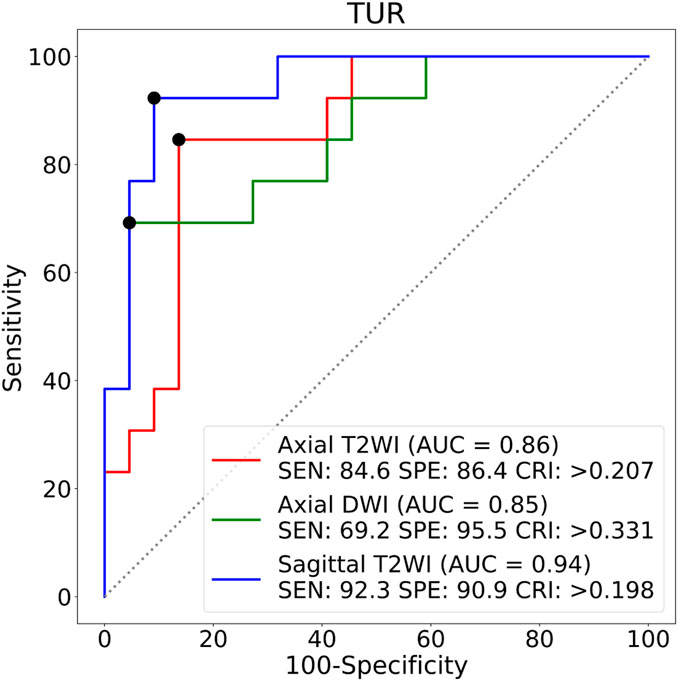

The TURs of a patient with stage IA and a patient with stage IB on axial T2WI, axial DWI, and sagittal T2WI images are shown in Figure 5. Compared with the stage IB patient, the stage IA patient has smaller TURs on all three MRI sequences. Moreover, the small differences in TUR among patients of the same stage suggest that early EC classification using the TUR is effective. The mean TURs of all stage IA patients and all stage IB patients on the three MRI sequences in the test set are shown in Table 5. For axial T2WI images, the mean TUR is 0.165 for stage IA and 0.307 for stage IB. For axial DWI images, the mean TUR is 0.190 for stage IA and 0.335 for stage IB. For sagittal T2WI images, the mean TUR is 0.103 for stage IA and 0.334 for stage IB. For all three MRI sequences, the difference between the stage IA group and the stage IB group is statistically significant. ROCs for the three MRI sequences are shown in Figure 6. For axial T2WI images, a threshold of 0.207 distinguishes stage IA from stage IB with 84.6% sensitivity, 86.4% specificity, and an AUC of 0.86. For axial DWI images, a threshold of 0.331 distinguishes stage IA from stage IB with 69.2% sensitivity, 95.5% specificity, and an AUC of 0.85. For sagittal T2WI images, a threshold of 0.198 distinguishes stage IA from stage IB with 92.3% sensitivity, 90.9% specificity, and an AUC of 0.94.
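The reported percentages can be checked against patient counts. The sketch below assumes that stage IB is the positive class and that the rates are computed per patient over the 13 IB and 22 IA test patients; the confusion-matrix counts are inferred from the reported percentages and are not stated in the source.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# (tp, fn, tn, fp) inferred from the reported rates with 13 IB and 22 IA
# test patients; these counts are an assumption, not data from the source.
inferred_counts = {
    "axial T2WI": (11, 2, 19, 3),
    "axial DWI": (9, 4, 21, 1),
    "sagittal T2WI": (12, 1, 20, 2),
}

results = {}
for name, counts in inferred_counts.items():
    results[name] = sensitivity_specificity(*counts)
    sens, spec, acc = results[name]
    print(f"{name}: sensitivity={sens:.3f}, "
          f"specificity={spec:.3f}, accuracy={acc:.3f}")
```

Under these assumed counts, 11/13, 9/13, and 12/13 reproduce the three reported sensitivities, and 19/22, 21/22, and 20/22 reproduce the three reported specificities.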

FIGURE 5. The segmentation results of a patient with EC stage IA (left) and a patient with stage IB (right) on axial T2WI image, axial DWI and sagittal T2WI image. The red outline is the ground-truth of the tumor and the green outline is the ground-truth of the uterus. The red area is the tumor and the blue area is the uterus.

FIGURE 6. ROCs on three different MRI sequence slices. SEN: sensitivity; SPE: specificity; CRI: criterion.

Comparisons between three different MRI sequence slices

A radiologist reaches a decision by viewing more than one MRI sequence of an EC patient. Table 6 shows the classification performance of the TUR for a patient with early EC on a single MRI sequence and on combinations of MRI sequences. The first three rows give the classification performance with one MRI sequence only. The sagittal T2WI image has the best TUR classification performance, with an accuracy of 0.914, a sensitivity of 0.923, and a specificity of 0.909. The middle three rows give the classification performance with two MRI sequences. The best accuracy, 0.886, is obtained with the axial DWI and sagittal T2WI images, while a sensitivity of 1.000 is obtained with the axial T2WI and sagittal T2WI images. The last three rows give the performance of the TUR when a fuzzy logic approach is applied to at least one MRI sequence, at least two MRI sequences, and all three MRI sequences. The optimal specificity of 1.000 is obtained with at least one MRI sequence, while the optimal sensitivity of 1.000 is obtained with all three MRI sequences.
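One possible reading of the "at least one", "at least two", and "all three" rules is a k-of-3 vote over the per-sequence threshold decisions. The sketch below illustrates only that reading: the source does not define its fuzzy logic approach in detail or state which stage a positive vote stands for, and the function name `combine_votes` and the example calls are hypothetical.

```python
def combine_votes(per_sequence_calls, min_positive):
    """Return True when at least `min_positive` sequences vote positive."""
    return sum(bool(call) for call in per_sequence_calls) >= min_positive


# Hypothetical per-sequence decisions for one patient (True = positive vote).
calls = {"axial T2WI": True, "axial DWI": False, "sagittal T2WI": True}
votes = list(calls.values())

at_least_one = combine_votes(votes, 1)
at_least_two = combine_votes(votes, 2)
all_three = combine_votes(votes, 3)
print(at_least_one, at_least_two, all_three)
```

Raising the number of required positive votes can only keep or reduce the number of positive calls, so it moves the combined classifier along the usual sensitivity and specificity trade-off.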

Discussion

In this study, a DL-based semantic segmentation model was used to segment the tumor and uterus on three types of MRI images, and the TUR was then calculated to classify early EC as stage IA or stage IB. With the patient’s pathological diagnosis as the gold standard, TUR-based staging of early EC performs differently on different MRI sequences. The proposed method performs best when classifying early EC on sagittal T2WI images alone, yielding an accuracy of 0.914, a sensitivity of 0.923, and a specificity of 0.909. Combining multiple MRI sequences gives a higher sensitivity or specificity. Its main clinical values are: 1. Accurate segmentation of the uterus and tumor can help the clinician to better observe the invasion trend of the tumor. 2. Using the TUR to analyze tumor invasion can help clinicians to develop more appropriate treatment strategies. For example, when the TUR is small, the clinician may recommend a hysterectomy; when the TUR is large, the clinician may recommend pelvic and para-aortic lymphadenectomy. 3. The model is automatic and efficient, which can reduce the clinician’s workload.

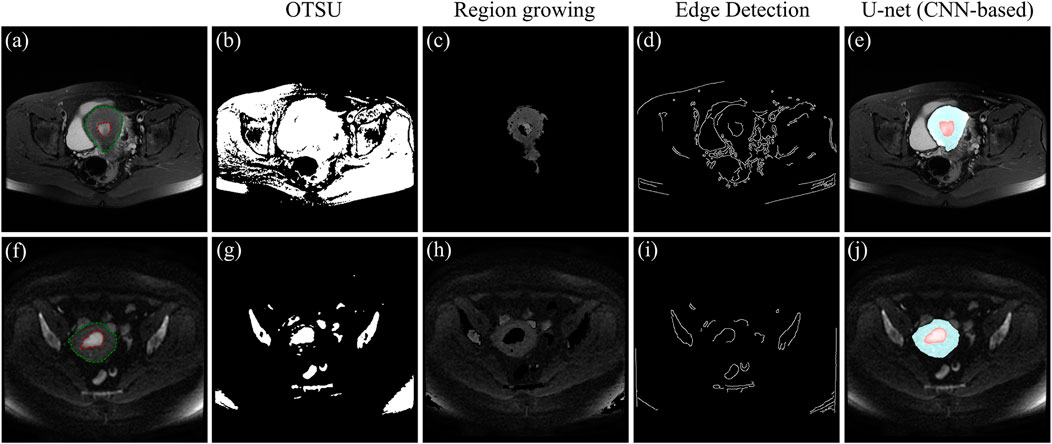

As shown in Figure 7, three traditional segmentation algorithms are compared with the CNN-based U-net segmentation algorithm on two MRI images from the test set. Otsu’s method (OTSU) is a threshold-based image segmentation algorithm, and its segmentation results are shown in Figures 7B,G. MRI images are grayscale maps, and because the grayscale values of the uterus and tumor are not significantly different from those of other pelvic tissue, the OTSU algorithm was unable to find a threshold that separates both the uterus and the tumor from the rest of the pelvic MRI image. The region growing algorithm is a region-based image segmentation algorithm; its segmentation results are shown in Figures 7C,H. It requires the initial seed and the growth criterion to be set manually according to the image conditions. Because the location and size of the uterus and tumor differ in each MRI image, the region growing algorithm is difficult to apply to uterus and tumor segmentation. Figures 7D,I show the preliminary results of the segmentation algorithm based on edge detection. Because the tissues and organs in the pelvis are complex and densely distributed, the MRI images contain many edge features, so it is difficult for an edge-detection-based algorithm to segment the uterus and tumor. Figures 7E,J show the segmentation results of the CNN-based U-net on MRI images. U-net segmented the uterus and tumor well, and the segmented regions were very close to the regions inside the ground-truth contours. Compared with the traditional methods, the CNN-based method can automatically and accurately segment both the uterus and tumor regions from pelvic MRI images.
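To make the threshold-based baseline concrete, the following minimal sketch implements Otsu's method, which picks the gray level that maximizes the between-class variance of the histogram. The function name `otsu_threshold` and the toy pixel values are assumptions for illustration; this is not the implementation used to produce Figure 7.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t form one class and the rest form the other.
    `pixels` is a flat list of integers in [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # sum of gray levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # lower class still empty
        if w1 == 0:
            break     # upper class empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


# Toy bimodal image: a dark cluster and a bright cluster (illustrative only).
toy_pixels = [10] * 50 + [12] * 50 + [200] * 30 + [205] * 20
print(otsu_threshold(toy_pixels))
```

The toy histogram is cleanly bimodal, so a single threshold separates the two clusters. The pelvic MRI images discussed above do not have this property for the uterus and tumor, which is the reason the text gives for the failure of the threshold-based method.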

FIGURE 7. (A) and (F) MRI images of the test set, where the red outline is the ground-truth of the tumor and the green outline is the ground-truth of the uterus. (B) and (G) Segmentation results of the OTSU algorithm. (C) and (H) Segmentation results of the region growth algorithm. (D) and (I) Segmentation results based on edge detection. (E) and (J) Segmentation results of the U-net, where the blue area is the uterus region and the red area is the tumor region.

Bonatti et al. (2018) found that a tumor/uterus volume ratio greater than 0.13 was significantly associated with high-grade EC, and the cut-off value of 0.13 distinguished low-grade EC from high-grade EC with 50% sensitivity and 89% specificity. However, volume calculation is time-consuming and has poor utility in daily clinical practice. In addition, estimating tumor volume with ellipsoidal formulas rather than segmentation introduces error. Hodneland et al. (2021) proposed the use of 3D convolutional neural networks for the segmentation of tumors in EC patients on MRI images. The method achieved high segmentation accuracy and accurate volume calculation, both close to the results of radiologists’ manual segmentation. However, accurate manual tumor labelling of 3D image data is highly labor-intensive, and they did not investigate the relationship between the tumor-to-uterus volume ratio and the grade of EC. Chen et al. (2020) proposed a DL-based two-stage CAD method for assessing the depth of myometrial invasion of EC on MRI images. The classification method yielded a sensitivity of 0.67, a specificity of 0.88, and an accuracy of 0.85. However, only T2WI data were used for training the DL network, and, owing to the black-box nature of DL, the method could not provide an interpretable reference. This study differs from previous studies as follows: 1. It avoids the use of labor-intensive 3D data sets. 2. It analyzes the classification performance of the TUR on different MRI sequences for early EC. 3. Compared with using the DL model exclusively, combining the DL model with the TUR analysis provides a more interpretable reference for the staging of early EC.

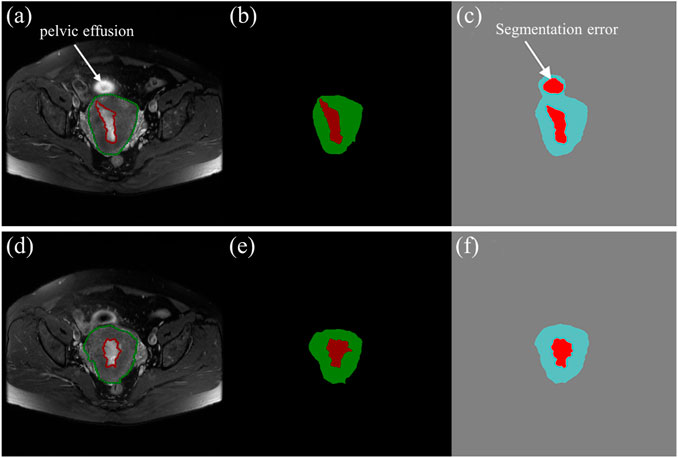

The mean DSC of the uterus is higher than that of the tumor, as shown in Figure 4. This indicates that the model segments the uterus better than the tumor. The main reasons are as follows. The shape of the uterus is relatively fixed geometrically, so the model can learn the characteristic parameters of the uterus region better. Tumors appear in various shapes, which makes them harder to learn. There are also some interference factors inside or outside the uterus, such as pelvic effusion, hematocele, uterine fibroids, and cervical cancer, which complicate the model’s selection of tumor characteristic parameters. An example of a segmentation error is shown in Figure 8. Figure 8A is the original axial T2WI image with a pelvic effusion (indicated by the arrow). The pelvic effusion is falsely segmented as a tumor by the DL model, as shown in Figure 8C. The reason could be the similar brightness of the pelvic effusion and the tumor. Figure 8D is the adjacent image, which does not contain the bright pelvic effusion seen in Figure 8A. Here the tumor is segmented correctly, as shown in Figure 8F. Errors of this kind will be addressed in future work.

FIGURE 8. (A), (D) The two axial T2WI images from the continuous sequences of a patient. (B), (E) The tumor (red) and uterus (green) images labelled by experienced radiologists. (C), (F) The predictive tumor (red) and uterus (blue) images by the DL model.

The automatic staging method also has some limitations. First, the number of patient cases used in the study is small, and no data from healthy individuals are included. We will improve the model by collecting more patient cases, including MRI images of healthy individuals. Second, although we have preliminarily demonstrated the feasibility of distinguishing stage IA from stage IB using the TUR, the accuracy could be improved by other methods. Further research will focus on developing a computer-aided diagnosis method that can imitate radiologists’ behavior in early EC staging. Last but not least, picking the optimal slice to be segmented from an MRI sequence is time-consuming. An automatic slice selection method will be studied in the future to make the model more practical.

In summary, the results show that using a DL-based semantic segmentation model to segment the tumor and uterus on MRI images and then performing TUR analysis is effective for early EC staging. This method is an automatic and time-saving solution and has the potential to be used for early EC staging in clinical practice.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://github.com/mw1998/Segmentation-Area-Ratio.

Ethics statement

The studies involving human participants were reviewed and approved by Fujian Maternity and Child Health Hospital. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

WM, CC, and YL contributed to conception and design of the study. CC and LX organized the database. WM and HG performed the statistical analysis. WM wrote the first draft of the manuscript. YL and WM wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported by the Natural Science Foundation of Fujian Province (2021J011216, 2020J02048).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amant F., Mirza M. R., Koskas M., Creutzberg C. L. (2018). Cancer of the corpus uteri. Int. J. Gynaecol. Obstet. 143, 37–50. doi:10.1002/ijgo.12612

Amant F., Moerman P., Neven P., Timmerman D., Van Limbergen E., Vergote I. (2005). Endometrial cancer. Lancet 366, 491–505. doi:10.1016/S0140-6736(05)67063-8

American Cancer Society (2021). American cancer society: Cancer facts and figures 2021. Atlanta, Ga: American Cancer Society, 13–15.

Boggess J. F., Kilgore J. E., Tran A.-Q. (2020). “Uterine cancer,” in Abeloff’s clinical oncology (Elsevier), 1508–1524. doi:10.1016/B978-0-323-47674-4.00085-2

Bonatti M., Pedrinolla B., Cybulski A. J., Lombardo F., Negri G., Messini S., et al. (2018). Prediction of histological grade of endometrial cancer by means of MRI. Eur. J. Radiol. 103, 44–50. doi:10.1016/j.ejrad.2018.04.008

Borges Sampaio W., Moraes Diniz E., Corrêa Silva A., Cardoso de Paiva A., Gattass M. (2011). Detection of masses in mammogram images using CNN, geostatistic functions and SVM. Comput. Biol. Med. 41, 653–664. doi:10.1016/j.compbiomed.2011.05.017

Chan H., Hadjiiski L. M., Samala R. K. (2020a). Computer-aided diagnosis in the era of deep learning. Med. Phys. 47, e218–e227. doi:10.1002/mp.13764

Chan H. P., Samala R. K., Hadjiiski L. M., Zhou C. (2020b). Deep learning in medical image analysis. Adv. Exp. Med. Biol. 1213, 3–21. doi:10.1007/978-3-030-33128-3_1

Chen X., Wang Y., Shen M., Yang B., Zhou Q., Yi Y., et al. (2020). Deep learning for the determination of myometrial invasion depth and automatic lesion identification in endometrial cancer MR imaging: A preliminary study in a single institution. Eur. Radiol. 30, 4985–4994. doi:10.1007/s00330-020-06870-1

Du Y., Zhang R., Zargari A., Thai T. C., Gunderson C. C., Moxley K. M., et al. (2018). Classification of tumor epithelium and stroma by exploiting image features learned by deep convolutional neural networks. Ann. Biomed. Eng. 46, 1988–1999. doi:10.1007/s10439-018-2095-6

Guo J., Cui X., Zhang X., Qian H., Duan H., Zhang Y. (2020). The clinical characteristics of endometrial cancer with extraperitoneal metastasis and the value of surgery in treatment. Technol. Cancer Res. Treat. 19, 1533033820945784. doi:10.1177/1533033820945784

Haldorsen I. S., Salvesen H. B. (2012). Staging of endometrial carcinomas with MRI using traditional and novel MRI techniques. Clin. Radiol. 67, 2–12. doi:10.1016/j.crad.2011.02.018

Haldorsen I. S., Salvesen H. B. (2016). What is the best preoperative imaging for endometrial cancer? Curr. Oncol. Rep. 18, 25. doi:10.1007/s11912-016-0506-0

Hodneland E., Dybvik J. A., Wagner-Larsen K. S., Šoltészová V., Munthe-Kaas A. Z., Fasmer K. E., et al. (2021). Automated segmentation of endometrial cancer on MR images using deep learning. Sci. Rep. 11, 179. doi:10.1038/s41598-020-80068-9

Jayapandian C. P., Chen Y., Janowczyk A. R., Palmer M. B., Cassol C. A., Sekulic M., et al. (2021). Development and evaluation of deep learning–based segmentation of histologic structures in the kidney cortex with multiple histologic stains. Kidney Int. 99, 86–101. doi:10.1016/j.kint.2020.07.044

Kim T., Hedayat M., Vaitkus V. V., Belohlavek M., Krishnamurthy V., Borazjani I. (2021). Automatic segmentation of the left ventricle in echocardiographic images using convolutional neural networks. Quant. Imaging Med. Surg. 11, 1763–1781. doi:10.21037/qims-20-745

Kinkel K., Forstner R., Danza F. M., Oleaga L., Cunha T. M., Bergman A., et al. (2009). Staging of endometrial cancer with MRI: Guidelines of the European Society of Urogenital Imaging. Eur. Radiol. 19, 1565–1574. doi:10.1007/s00330-009-1309-6

Kurata Y., Nishio M., Kido A., Fujimoto K., Yakami M., Isoda H., et al. (2019). Automatic segmentation of the uterus on MRI using a convolutional neural network. Comput. Biol. Med. 114, 103438. doi:10.1016/j.compbiomed.2019.103438

Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., et al. (2017). A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88. doi:10.1016/j.media.2017.07.005

Manfredi R., Mirk P., Maresca G., Margariti P. A., Testa A., Zannoni G. F., et al. (2004). Local-regional staging of endometrial carcinoma: Role of MR imaging in surgical planning. Radiology 231, 372–378. doi:10.1148/radiol.2312021184

Mirza M. R. (2020). Management of endometrial cancer. Cham: Springer International Publishing. doi:10.1007/978-3-319-64513-1

Nougaret S., Horta M., Sala E., Lakhman Y., Thomassin-Naggara I., Kido A., et al. (2019). Endometrial cancer MRI staging: Updated guidelines of the European Society of Urogenital Radiology. Eur. Radiol. 29, 792–805. doi:10.1007/s00330-018-5515-y

Pecorelli S. (2009). Revised FIGO staging for carcinoma of the vulva, cervix, and endometrium. Int. J. Gynaecol. Obstet. 105, 103–104. doi:10.1016/j.ijgo.2009.02.012

Pinar G. (2017). Survival determinants in endometrial cancer patients: 5-Years experience. Arch. Nurs. Pract. Care 3, 012–015. doi:10.17352/anpc.000019

Rezaee A., Schäfer N., Avril N., Hefler L., Langsteger W., Beheshti M. (2018). Gynecologic cancers. Elsevier. doi:10.1016/B978-0-323-48567-8.00009-2

Ronneberger O., Fischer P., Brox T. (2015). U-Net: Convolutional networks for biomedical image segmentation. Med. Image Comput. Computer-Assisted Intervention (MICCAI) 9351, 234–241.

Rouhi R., Jafari M., Kasaei S., Keshavarzian P. (2015). Benign and malignant breast tumors classification based on region growing and CNN segmentation. Expert Syst. Appl. 42, 990–1002. doi:10.1016/j.eswa.2014.09.020

Russell B. C., Torralba A., Murphy K. P., Freeman W. T. (2008). LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vis. 77, 157–173. doi:10.1007/s11263-007-0090-8

Serj M. F., Lavi B., Hoff G., Valls D. P. (2018). A deep convolutional neural network for lung cancer diagnostic. arXiv, 1–10.

Shin H. C., Roth H. R., Gao M., Lu L., Xu Z., Nogues I., et al. (2016). Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 35, 1285–1298. doi:10.1109/TMI.2016.2528162

Siegel R. L., Miller K. D., Fuchs H. E., Jemal A. (2021). Cancer statistics, 2021. CA Cancer J. Clin. 71, 7–33. doi:10.3322/caac.21654

Song Y., Zhang Y. D., Yan X., Liu H., Zhou M., Hu B., et al. (2018). Computer-aided diagnosis of prostate cancer using a deep convolutional neural network from multiparametric MRI. J. Magn. Reson. Imaging. 48, 1570–1577. doi:10.1002/jmri.26047

Sung H., Ferlay J., Siegel R. L., Laversanne M., Soerjomataram I., Jemal A., et al. (2021). Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 71, 209–249. doi:10.3322/caac.21660

Wang S., Liu Z., Rong Y., Zhou B., Bai Y., Wei W., et al. (2016). Deep learning provides a new computed tomography-based prognostic biomarker for recurrence prediction in high-grade serous ovarian cancer. Radiother. Oncol. 132, 171–177. doi:10.1016/j.radonc.2018.10.019

Keywords: automatic staging method, tumor segmentation, early endometrial cancer, deep learning, medical image processing

Citation: Mao W, Chen C, Gao H, Xiong L and Lin Y (2022) A deep learning-based automatic staging method for early endometrial cancer on MRI images. Front. Physiol. 13:974245. doi: 10.3389/fphys.2022.974245

Received: 21 June 2022; Accepted: 09 August 2022;

Published: 30 August 2022.

Edited by:

Yu Gan, Stevens Institute of Technology, United States

Copyright © 2022 Mao, Chen, Gao, Xiong and Lin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongping Lin, yplin@t.xmut.edu.cn

†These authors have contributed equally to this work
