- Curry School of Education and Human Development, University of Virginia, Charlottesville, VA, United States

Although online courses are becoming increasingly popular in higher education, evidence is inconclusive regarding whether online students are likely to be as academically successful and motivated as students in face-to-face courses. In this study, we documented online and face-to-face students’ academic motivation and outcomes in community college mathematics courses, and whether differences might vary based on student characteristics (i.e., gender, underrepresented ethnic/racial minority status, first-generation college status, and adult learner status). Over 2,400 developmental mathematics students reported on their math motivation at the beginning (Week 1) and middle (Weeks 3, 5) of the semester. Findings indicated that online students received lower grades and were less likely to pass their courses than face-to-face students, with online adult learners receiving particularly low final course grades and pass rates. In contrast, online and face-to-face students did not differ on incoming motivation, with subgroup analyses suggesting largely similar patterns of motivation across student groups. Together, findings suggest that online and face-to-face students may differ overall in academic outcomes but not in their motivation or differentially based on student characteristics. Small but significant differences on academic outcomes across modalities (Cohen’s ds = 0.17–0.28) have implications for community college students’ success in online learning environments, particularly for adult learners who are most likely to be faced with competing demands.

Introduction

Online courses are increasingly popular in higher education, with over 3 million students nationwide having participated in at least one online course (Bennett and Monds, 2008; Green and Wagner, 2011). Growing access to online learning holds promise for Science, Technology, Engineering, and Mathematics (STEM) education, as online math and science courses augment both the number and diversity of students entering into STEM majors (Drew et al., 2015). Online courses also offer increased flexibility for non-traditional learners, such as adult learners, in terms of scheduling and transportation (Hung et al., 2010; Yoo and Huang, 2013). However, there is contradictory evidence on whether students fare equally well in online courses as they do in face-to-face courses. Some studies indicate no differences in performance by modality (e.g., online versus face-to-face courses, Bernard et al., 2004; O’Dwyer et al., 2007; Driscoll et al., 2012). Other studies, by contrast, suggest that online students drop out more often than their face-to-face counterparts (Patterson and McFadden, 2009; Boston and Ice, 2011). Low achieving students may face particular difficulties in online courses (Harrington, 1999), raising the question of whether online courses are benefitting the students who are most likely to enroll in them. Given the increasing popularity and contradictory evidence associated with online learning, it is critical to understand who is likely to be successful in online courses and the underlying mechanisms that may explain differential success.

Students’ academic motivation, or reasons for engaging in a task, is an important predictor of academic success that has been under-investigated in the online learning space (Jones and Issroff, 2005; Ortiz-Rodríguez et al., 2005; Yoo and Huang, 2013). In the current study, we examined whether community college students enrolled in online and face-to-face math courses received different academic outcomes, and whether any differences might be a function of students’ incoming motivation and changes in motivation. We focused on developmental mathematics courses, which are designed for students who place below college-level math, because they are characterized by notoriously low pass rates and serve as a significant barrier to degree completion (Bailey et al., 2010; Hughes and Scott-Clayton, 2011).

Learning Context: Community College Developmental Mathematics Courses

We chose to conduct this study in developmental mathematics courses at a community college given the high-stakes nature of these courses, the dearth of evidence on online learning in community college settings, and the exponential growth of online courses in community colleges (Johnson and Mejia, 2014; Allen and Seaman, 2016). Community colleges serve more than one-third of degree-seeking U.S. citizens, and include proportionally more students from underrepresented groups (Levin, 2007; Ma and Baum, 2016). Over half of community college students are placed into at least one developmental course, a type of course designed for students who place below the college-ready level. Developmental math courses serve as a prerequisite to credit-bearing mathematics classes, are characterized by notoriously low pass rates, and can serve as a barrier to degree completion (Bailey et al., 2010; Hughes and Scott-Clayton, 2011). For instance, Silva and White (2013) reported that over 75% of students in developmental courses fail to achieve college-level readiness even after several semesters of remediation. As such, community college developmental mathematics courses serve as a high-stakes area to understand the effect of online learning on academic motivation and outcomes.

Online Learning Environments

Over the past two decades, online learning enrollment has consistently outpaced traditional student enrollment (Oblinger and Hawkins, 2005; Allen and Seaman, 2016), with the number of students enrolled in at least one online course increasing by 32% from 2006 to 2008 (Bennett et al., 2010). These trends are most pronounced in community colleges, higher education institutions that offer 2-year associate’s degrees (Allen and Seaman, 2016). Community colleges accounted for more than one-half of all online enrollments from 2002 to 2007, with one in five students at such institutions taking at least one online course between 2011 and 2012 (Johnson and Mejia, 2014). Given the impressive growth in online course enrollment, it is necessary to gain a better understanding of whether online learning is beneficial or detrimental to student success. Some researchers suggest that students fare better when engaging in online courses. For instance, Feintuch (2010) concluded from a review of more than 1000 articles that students in online learning environments spent more time engaged with course materials. She also argued that online students could benefit from personalized instruction and real-time feedback. Similarly, other researchers have lauded the potential for online course offerings to support enrollment among traditionally underserved students (e.g., adult learners, underrepresented ethnic/racial minority students) due to increased flexibility (Hung et al., 2010; Yoo and Huang, 2013), particularly in fields with a lack of diversity such as STEM (Drew et al., 2015). By contrast, other researchers have argued that students enrolled in online courses perform worse than they would in face-to-face courses. For example, researchers cite that dropout rates can be as much as 10–20% higher in online courses than in comparable face-to-face courses (Harris and Parrish, 2006; Xu and Jaggars, 2013). Still other researchers report no difference between academic outcomes in face-to-face and online learning environments (e.g., Bernard et al., 2004; Steinweg et al., 2005; Zhao et al., 2005).

To complicate matters further, researchers have called into question the academic rigor of existing studies. For example, Phipps and Merisotis (1999) noted that many studies failed to use valid and reliable measures or account for students’ attitudes. Other scholars assert that conclusions depend on how academic success is operationalized. For example, findings from the Public Policy Institute of California indicated that online learning was correlated with negative short-term outcomes, including course pass rates that were 11–14% lower than face-to-face courses even when controlling for overall grade point average and school characteristics. However, the same report suggested that participating in an online course may have long-term benefits for community college students, including greater likelihood to attain an associate’s degree and enroll in a 4-year institution (Johnson and Mejia, 2014).

Differences by Student Characteristics

Researchers are increasingly attending to the fact that online learning may be more beneficial for some types of students than for others. One group that has received substantial attention is adult learners (i.e., individuals who are 24 or older; Yoo and Huang, 2013). On the one hand, online courses provide increased access for adult learners, who are more likely to have work and family obligations to balance alongside attaining a degree (Hung et al., 2010; Yoo and Huang, 2013). Adult learners may be expected to perform better in online courses because they tend to be more self-directed and autonomous (Arghode et al., 2017). On the other hand, they may be less familiar with navigating online learning environments, which can affect their performance (Chang, 2015; Lai, 2018).

Results comparing success rates for adult and traditional-aged learners are inconsistent. A number of studies found that adult learners perform more poorly in online learning environments than in face-to-face learning environments (Richardson and Newby, 2006; Park and Choi, 2009; Yoo and Huang, 2013). For example, one study found that online adult learners enrolled in an MBA program were four times more likely to drop out than in face-to-face courses (Patterson and McFadden, 2009). Other studies have found that adult learners participate more often in course activities than traditional-aged learners (Kilgore and Rice, 2003; Hoskins and Van Hooff, 2005; Chyung, 2007) or found that age was not a significant predictor of outcomes (Hargis, 2001; Ke and Xie, 2009). Taken together, findings related to age and success in online courses are inconsistent. The current study not only considered academic outcomes for adult learners compared to traditional-aged students, but also characterized their incoming motivation levels.

Other student characteristics that have been examined with respect to online learning are gender (e.g., Park, 2007; Cercone, 2008) and prior achievement (e.g., Harrington, 1999; Summers et al., 2005). With respect to gender, findings suggest that females may be more actively engaged in online learning (e.g., Chyung, 2007), but this does not translate to lower dropout rates (Park and Choi, 2009). With respect to prior achievement, findings suggest that lower achieving students may be less satisfied with online compared to face-to-face courses (e.g., Summers et al., 2005) and may perform more poorly in online than in face-to-face courses or than their higher achieving classmates. Relevant to the current study, which was conducted in developmental mathematics courses, Harrington (1999) examined students’ performance in online and face-to-face versions of an introductory statistics course. She found that students with low grade point averages performed more poorly in the online course than low-performing students in the face-to-face course, or than high-achieving students in either version of the course. Overall, evidence suggests some potential differences in how successful students may be in online courses based on their individual characteristics. However, the evidence is generally mixed and several important student characteristics have yet to be systematically examined (e.g., underrepresented ethnic/racial minority status, first generation college status).

Academic Motivation in Online Learning Contexts

The research on non-cognitive factors in online education has focused heavily on students’ behaviors, such as self-regulated learning strategies, as predictors of success in online learning environments (Broadbent and Poon, 2015). However, differences between online learning and face-to-face environments may also affect students’ attitudes in online courses (Rovai, 2002; Baker, 2004; Mullen and Tallent-Runnels, 2006). Online students may require adaptive motivation to stay engaged (Karimi, 2016; Park and Yun, 2018) and enrolled in their courses (Aragon and Johnson, 2008) more so than face-to-face students, and online courses may attract students with lower initial motivation. This study documents online learners’ motivation in comparison to face-to-face students in the same courses.

To operationalize motivation, we adopted an expectancy-value-cost framework (Eccles, 1983; Barron and Hulleman, 2015) because it is well established, describes student motivation broadly, and aligns with constructs from popular conceptualizations of motivation in the online learning space (e.g., Keller and Suzuki, 2004). Expectancy-value-cost theory posits that the most proximal determinants of student motivation are expectancy (i.e., belief that one can complete a task successfully), value (i.e., belief that there is a worthwhile reason for engaging in a task), and cost (i.e., belief that there are obstacles preventing one from engaging in a task). Because we were interested in capturing a rich description of students’ motivation, we assessed a number of related motivational constructs (for a similar approach, see Hulleman et al., 2016). Related to expectancy, we assessed growth mindset (i.e., belief that intelligence is malleable and can be improved; Dweck and Leggett, 1988; Dweck, 2006). Related to value, we assessed interest (i.e., engaging in a task due to interest or enjoyment; Eccles, 1983) and social belonging (i.e., belief that one fits into the learning context and is respected by others in that environment; Cohen and Garcia, 2008).

A growing number of studies have assessed online learners’ expectancy or value. Consistent with the broader literature, expectancy-related constructs – particularly self-efficacy, or students’ perception that they can successfully complete a task – were positively associated with online students’ course satisfaction (Brinkerhoff and Koroghlanian, 2007), performance (Joo et al., 2000; Wang and Newlin, 2002; Lynch and Dembo, 2004; Bell and Akroyd, 2006), persistence (Holder, 2007), and likelihood of enrolling in future online courses (Lim, 2001; Artino, 2007). Overall perceived value of course content was also associated with course satisfaction (Xie et al., 2006), performance (Yoo and Huang, 2013), and future enrollment choices (Artino, 2007), although some articles reported no associations between value and final grade (Chen and Jang, 2010). Studies suggested that value was a particularly important predictor for adult learners (Kim and Frick, 2011). There is less empirical evidence suggesting that perceived cost is associated with poorer academic outcomes. However, theory suggests that cost may be lower in online courses since they do not require students to be in a particular location at a particular time. Cost may be a critical predictor for certain groups of online learners, such as adult learners who may be more likely to be balancing competing demands on their time from work, family, and school (Hung et al., 2010).

Current Study

We sought to document academic outcomes, incoming motivation, and changes in motivation for students enrolled in online and face-to-face math courses. We were interested in two primary research questions. First, do students enrolled in online courses receive lower academic outcomes (i.e., final grades, pass rates, withdraw rates) than students enrolled in face-to-face courses? We hypothesized that online students would receive lower final grades and pass rates, but higher withdraw rates, than face-to-face students (Patterson and McFadden, 2009) based on evidence that lower achieving students struggle in online learning environments (Harrington, 1999; Coldwell et al., 2008) and community college students reported negative short-term outcomes in online courses (Johnson and Mejia, 2014).

Second, do online students report lower incoming motivation than face-to-face students, and does that vary as a function of student characteristics (i.e., gender, adult learner status, underrepresented ethnic/racial minority status, and generation status)? Given the lack of evidence on online courses in general, and on community college developmental math courses in particular, we tentatively hypothesized that online students would report (1) lower perceived cost than face-to-face students, given the argument that online courses offer increased flexibility (Yoo and Huang, 2013); and (2) lower belonging, given that online courses tend to involve less interaction and fewer synchronous learning opportunities.

Materials and Methods

Participants

The sample included 2,411 students (Mage = 20.7 years, SDage = 5.2 years) from a community college in the Southeastern United States. Participants were enrolled in 310 individual courses of two different developmental mathematics topics – Intermediate Algebra and College Math – taught by 63 instructors over six semesters. Participants were drawn from the control condition of a larger randomized controlled trial assessing the effects of a utility-value and growth mindset intervention on students’ math achievement. Participants were primarily female (60%), with 70% having applied for financial aid, 50% identifying as first-generation students (i.e., neither parent received a degree from a 4-year institution), 45% identifying as part-time students, and 13% adult learners. Approximately 31% self-reported as White, 38% Hispanic/Latino, 21% Black/African American, 2.1% Asian, and 7.9% reporting another ethnicity. The current sample is representative of the overall population of the community college (31% White, 32% Hispanic/Latino, 18% Black/African American, 6% Asian, and 13% reporting some other ethnicity). Out of the total sample, 2,036 students (84.45%) were enrolled in face-to-face courses and 375 (15.55%) were enrolled in online courses. Students in online courses were more likely to be women (66%), identify as an underrepresented ethnic/racial minority group (53%; i.e., identifying as Hispanic/Latino, Black/African American, or Pacific Islander at this institution), adult learners (i.e., 25 or older; 26%) and enrolled part time (52%) than students in face-to-face courses [59% female, χ2(1, N = 2,384) = 6.18, p = 0.013; 43% underrepresented ethnic/racial minority, χ2(1, N = 2,227) = 17.17, p < 0.001; 11% adult learner, χ2(1, N = 2,411) = 65.41, p < 0.001; 43% part time learner, χ2(1, N = 2,411) = 9.52, p = 0.002]. Students in online and face-to-face courses did not differ by generation status.

Measures

Academic Motivation

Student motivation was assessed via self-report survey measures for four constructs from Expectancy-Value-Cost Theory – expectancy, value, cost, and interest – at four points throughout the semester, along with growth mindset and social belonging at the beginning of the semester. Four expectancy items (α = 0.90; e.g., “How confident are you that you can learn the material in the class?”), six value items (α = 0.91; e.g., “How important is this class to you?”), five cost items (α = 0.78; e.g., “How stressed out are you by your math class?”), and three interest items (α = 0.88; e.g., “How interested are you in learning more about math?”) were adapted from the Expectancy-Value-Cost Scale (Kosovich et al., 2015; Hulleman et al., 2017) to make them specific to math courses. Responses ranged from 1 (Not at All) to 6 (Extremely). Students also completed a three-item measure of growth mindset from Good et al. (2012), (α = 0.85; e.g., “I have a certain amount of math ability, and I can’t really do much to change it”; reverse-scored) on a 6-point Likert-type scale (1 = Strongly Disagree; 6 = Strongly Agree) and a three-item measure of social belonging (Manai et al., 2016; α = 0.75; e.g., “In this class, how much do you feel as though you belong?”) on a 6-point Likert-type scale (1 = Not at All; 6 = Extremely). Confirmatory factor analysis indicated that the motivation variables fit the data well (χ2(231, N = 1,676) = 1220.54, p < 0.001; CFI: 0.96; TLI: 0.95; RMSEA: [0.047, 0.053]; SRMR: 0.043).

Academic Outcomes

Administrative data were collected from the office of institutional research at the end of the semester for pass rates, withdraw rates, and numeric grade. Pass rates were calculated such that students who earned an A, B, or C in the course were coded a “1” while students who earned a D, F, W, or I were coded as “0.” Withdraw rates were calculated such that students who earned a Withdraw (W) in the course were coded as a “1” while students who earned an A, B, C, D, or F were coded as a “0.” Students who earned an incomplete (I, n = 4 students) could not be categorized. Numeric grades were coded by converting letter grades to a normal GPA scale (0–4) such that students who received an A were coded as a “4,” students who received a B were coded as a “3,” students who received a C were coded as a “2,” students who received a D were coded as a “1,” students who received an F or a W were coded as a “0.”
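The coding rules above can be illustrated with a short sketch. This is not the authors' code; the function name `code_outcomes` and the dictionary keys are hypothetical, and treating an incomplete (I) as missing for the withdraw indicator and the numeric grade is an assumption, since the text only states that incompletes could not be categorized.

```python
# Hypothetical sketch of the outcome coding described in the text.
GRADE_POINTS = {"A": 4, "B": 3, "C": 2, "D": 1, "F": 0, "W": 0}
VALID_GRADES = {"A", "B", "C", "D", "F", "W", "I"}


def code_outcomes(letter):
    """Map a letter grade to pass, withdraw, and numeric-grade codes."""
    letter = letter.strip().upper()
    if letter not in VALID_GRADES:
        raise ValueError(f"Unexpected grade: {letter!r}")

    # Pass: A, B, or C is 1; D, F, W, or I is 0.
    passed = 1 if letter in {"A", "B", "C"} else 0

    # Withdraw: W is 1; A, B, C, D, or F is 0.
    # Assumption: an incomplete is left missing (None).
    if letter == "I":
        withdrew = None
    else:
        withdrew = 1 if letter == "W" else 0

    # Numeric grade on the 0-4 scale; F and W both map to 0.
    # Assumption: no value is assigned for an incomplete.
    numeric_grade = GRADE_POINTS.get(letter)

    return {"passed": passed, "withdrew": withdrew, "numeric_grade": numeric_grade}


if __name__ == "__main__":
    for grade in ["A", "D", "W", "I"]:
        print(grade, code_outcomes(grade))
```

Note that under this scheme a withdrawal counts against both the pass rate and the numeric grade, so the three outcomes are not independent of one another.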

Procedure

All students enrolled in participating developmental mathematics courses were invited to participate in the current study. Materials were administered online through the Qualtrics platform during the lab portion of students’ developmental mathematics class for the face-to-face courses, and as part of an assigned homework activity on the course management platform for online courses. Overall, 91% of students enrolled in participating courses completed at least one of the 4 activities. Participants reported on their motivation during the first class period of the semester in Week 1 (Time 1, 70% response rate), as well as during Week 3 (Time 2, 77% response rate), Week 5 (Time 3, 70% response rate), and Week 12 (Time 4, 45% response rate). Given the low response rate, we did not consider motivation at Time 4 in analyses for the current study. After responding to survey items, participants provided information about their self-identified gender, race, parental education, and previous academic achievement before being thanked for their time. Instructors incentivized students to complete activities with course credit, but were given autonomy over what kind of course credit they offered (e.g., extra credit, participation grade).

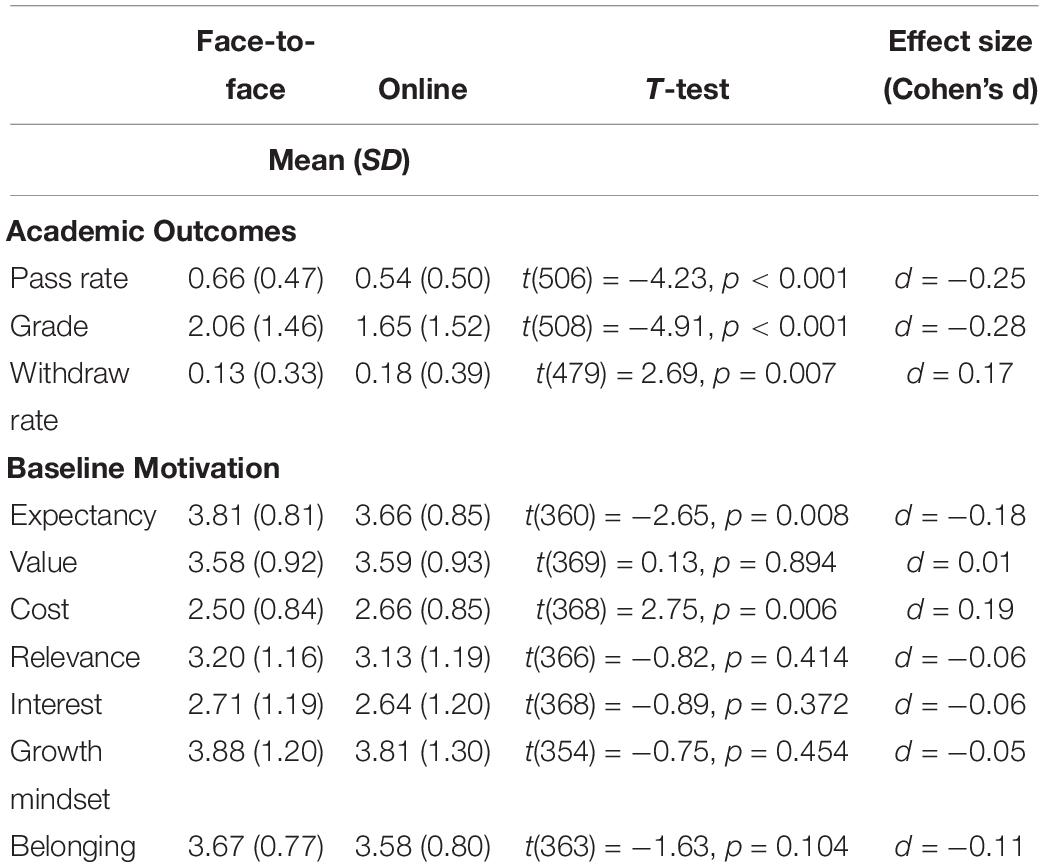

Analytic Plan

Descriptive differences in student achievement, demographics, and baseline motivations by course modality were examined by conducting t-tests and ANOVAs. Because modality comparisons were exploratory, we employed a Bonferroni adjustment for them and reduced our threshold for significance to α = 0.0056 (see Table 1). Additionally, we calculated effect sizes of differences (i.e., Cohen’s d) to consider practical significance. See the Supplementary Materials for the tables displaying descriptive differences in student achievement, demographics, and baseline motivation by course modality and student characteristics (gender, underrepresented ethnic/racial minority status, generation status, and adult learner status).
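As a minimal sketch of the two quantities named above, the following shows a Bonferroni-adjusted threshold and a pooled-standard-deviation Cohen's d. The function names are hypothetical, the scores are made-up illustrative values rather than study data, and the count of nine comparisons is an assumption: the text reports only the resulting threshold, which a base α of 0.05 divided by nine would reproduce.

```python
import math
from statistics import mean, variance


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni adjustment."""
    return alpha / n_comparisons


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


if __name__ == "__main__":
    # Assumption: nine comparisons (not stated in the text).
    print(round(bonferroni_alpha(0.05, 9), 4))

    # Illustrative values only, not data from the study.
    face_to_face = [2.0, 3.0, 4.0]
    online = [1.0, 2.0, 3.0]
    print(cohens_d(face_to_face, online))
```

Reporting d alongside the adjusted p-value threshold separates the question of whether a difference is detectable from the question of whether it is large enough to matter in practice.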

To determine whether students in online and face-to-face courses received different academic outcomes, we tested the influence of course modality, the interaction of course modality and student characteristics, and the latent interaction of baseline motivation and course modality on student academic achievement in the course. To determine whether students in online courses were less motivated initially than students in face-to-face courses, we tested the influence of course modality and the interaction of course modality and student characteristics on latent student baseline motivation. To do this, we fit two structural equation models – one predicting the three academic outcomes (i.e., pass rates, withdraw rates, and numeric grade) and one predicting the six latent student motivation scores (i.e., expectancy, value, cost, interest, growth mindset, and belonging). Models included course modality, course modality and student demographic interactions, and course modality and latent incoming motivation interactions (only when predicting academic achievement) as predictors. Models were estimated in the statistical program R using the “lavaan” package (Rosseel, 2012).

For all analyses predicting latent baseline student motivation, we controlled for student gender (i.e., male versus female), student underrepresented minority status (i.e., Hispanic/Latino or Black/African American versus White or Asian), student generation status (i.e., first-generation status versus continuing-generation status), adult learner status, and prior achievement (i.e., high school GPA). For all analyses predicting academic outcomes (i.e., pass rate, numeric grade, withdraw rate), we controlled for the same student covariates as well as the six latent student motivation scores.

To determine whether students in face-to-face and online courses differed in how their motivation changed over the course of the semester, we tested the influence of course modality on Time 3 student motivation while accounting for Time 1 student motivation. These models could not be estimated in an SEM framework because too few online students completed the surveys at both Time 1 and Time 3. To answer this question, models were estimated in the statistical program R using the “lme4” package (Bates et al., 2014). This package is appropriate for cross-classified levels in data structures, which was necessary for the current study given that instructors taught courses across multiple semesters. Prior to analyses, all continuous predictor variables (e.g., Time 1 motivation composites, student’s reported high school GPA) were grand-mean centered. For all analyses predicting changes in motivation over the semester (i.e., Time 3 expectancy, value, cost, and interest), we controlled for the aforementioned student covariates along with students’ Time 1 composite score for the motivational construct being predicted.
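Grand-mean centering, as mentioned above, subtracts the overall sample mean from each student's score so that a value of zero represents the average student. The sketch below is illustrative only: the analyses themselves were run in R, the function name is hypothetical, and leaving missing values (represented here as `None`) out of the mean is an assumption about missing-data handling that the text does not describe.

```python
from statistics import mean


def grand_mean_center(values):
    """Subtract the mean of all observed values from each observed value.

    Assumption: missing values (None) are excluded from the mean and
    passed through unchanged.
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("No observed values to center.")
    grand_mean = mean(observed)
    return [None if v is None else v - grand_mean for v in values]


if __name__ == "__main__":
    # Illustrative Time 1 composite scores on the 1-6 response scale.
    time1_scores = [2.0, None, 3.0, 4.0]
    print(grand_mean_center(time1_scores))
```

With centered predictors, the model intercept can be read as the expected Time 3 score for a student with average Time 1 motivation and average high school GPA.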

Results

Predicting Academic Outcomes by Course Modality

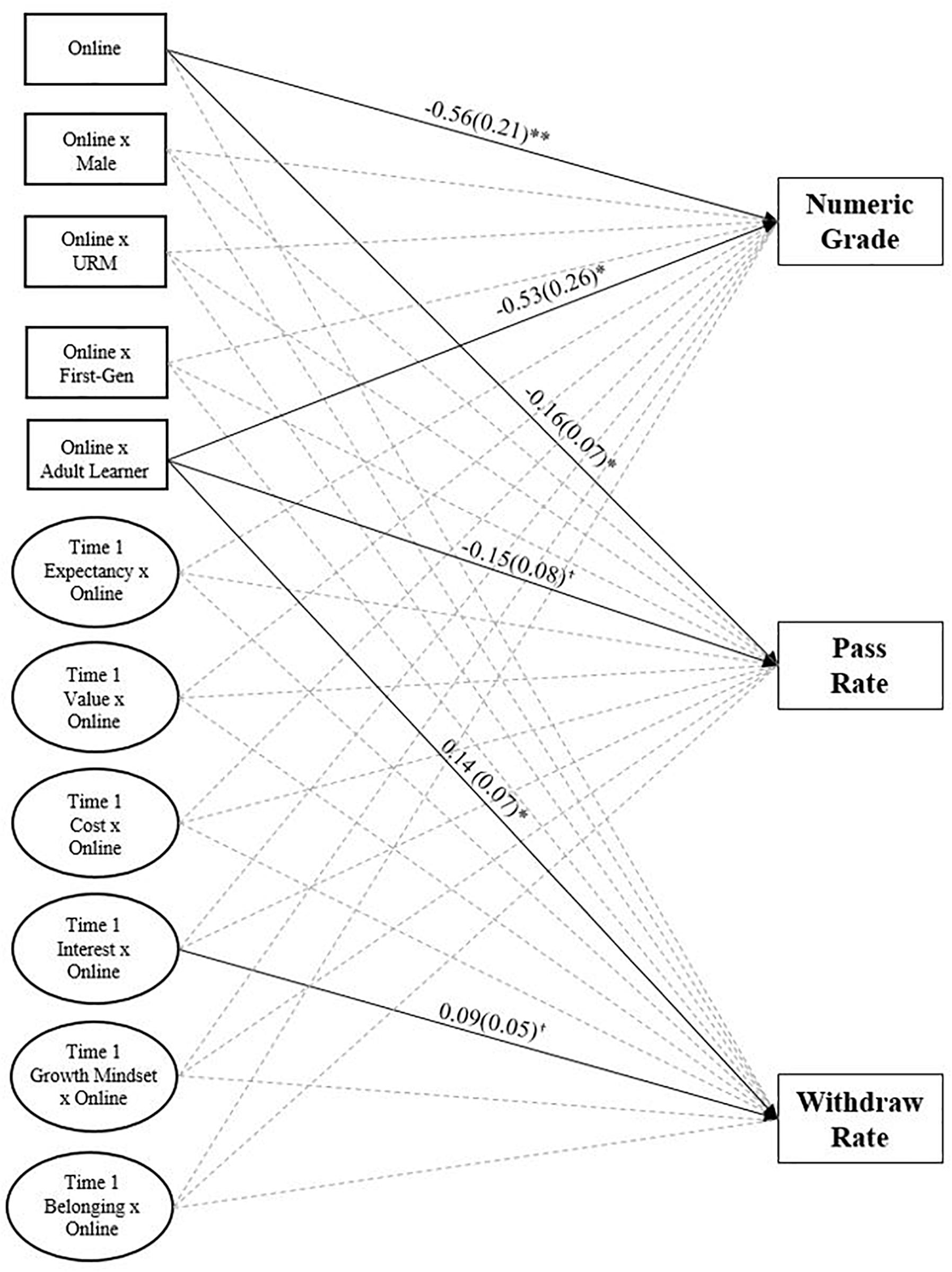

First, we tested whether course modality predicted students’ course performance (i.e., whether students in online courses performed better, worse, or the same as students in face-to-face courses). Descriptive statistics for course performance by course modality can be seen in Table 1. A structural equation model was estimated in which pass rate, withdraw rate, and numeric grade were predicted by course modality (0 = face-to-face; 1 = online). The model controlled for latent baseline student motivation scores, student gender, student underrepresented ethnic/racial minority status, student generation status, and student prior achievement. The model fit the data well (χ2(1,480, N = 1,456) = 5048.12, p < 0.001; CFI: 0.92; TLI: 0.91; RMSEA: [0.039, 0.042]; SRMR: 0.041). As shown in Figure 1, being enrolled in an online course was significantly negatively associated with pass rate and numeric grade (β = −0.56, p = 0.007; β = −0.16, p = 0.021) but did not predict withdraw rate.

Figure 1. Course modality and interactions predicting academic outcomes. †p < 0.10, ∗p < 0.05, and ∗∗p < 0.01.

This same model also included interactions of course modality and student characteristics to determine if the effect of course modality on academic achievement was differential by gender, student underrepresented racial/ethnic minority status, generation status, or adult learner status. As shown in Figure 1, being an adult learner in an online course was significantly negatively associated with numeric grade (β = −0.53, p = 0.043), marginally negatively associated with pass rate (β = −0.15, p = 0.064), and significantly predicted withdraw rate (β = 0.14, p = 0.043). These results suggest that students in online courses tend to pass less often, withdraw more often, and earn lower numeric grades than students in face-to-face courses. Further, this effect is not a function of student gender, underrepresented ethnic/racial minority status, or generation status but may be a function of adult learner status.

Modality Effects on Academic Outcomes Based on Incoming Motivation

One hypothesis was that differences in online and face-to-face students’ performance are a function of their incoming motivation. To assess this possibility, we included latent interactions of baseline motivation and course modality for each motivation construct (i.e., expectancy, value, cost, interest, growth mindset, and belonging) predicting academic achievement. As shown in Figure 1, there were no significant interactions of course modality and Time 1 motivation predicting academic outcomes, with the exception that being an online student scoring higher on interest in the course was marginally associated with withdraw rate (β = 0.09, p = 0.080). These results suggest that the differences in online and face-to-face students’ academic performance are not a function of their baseline motivation coming into the course, with the marginal exception of perceived interest in the course when considering withdraw rate.

Baseline Motivational Differences by Course Modality

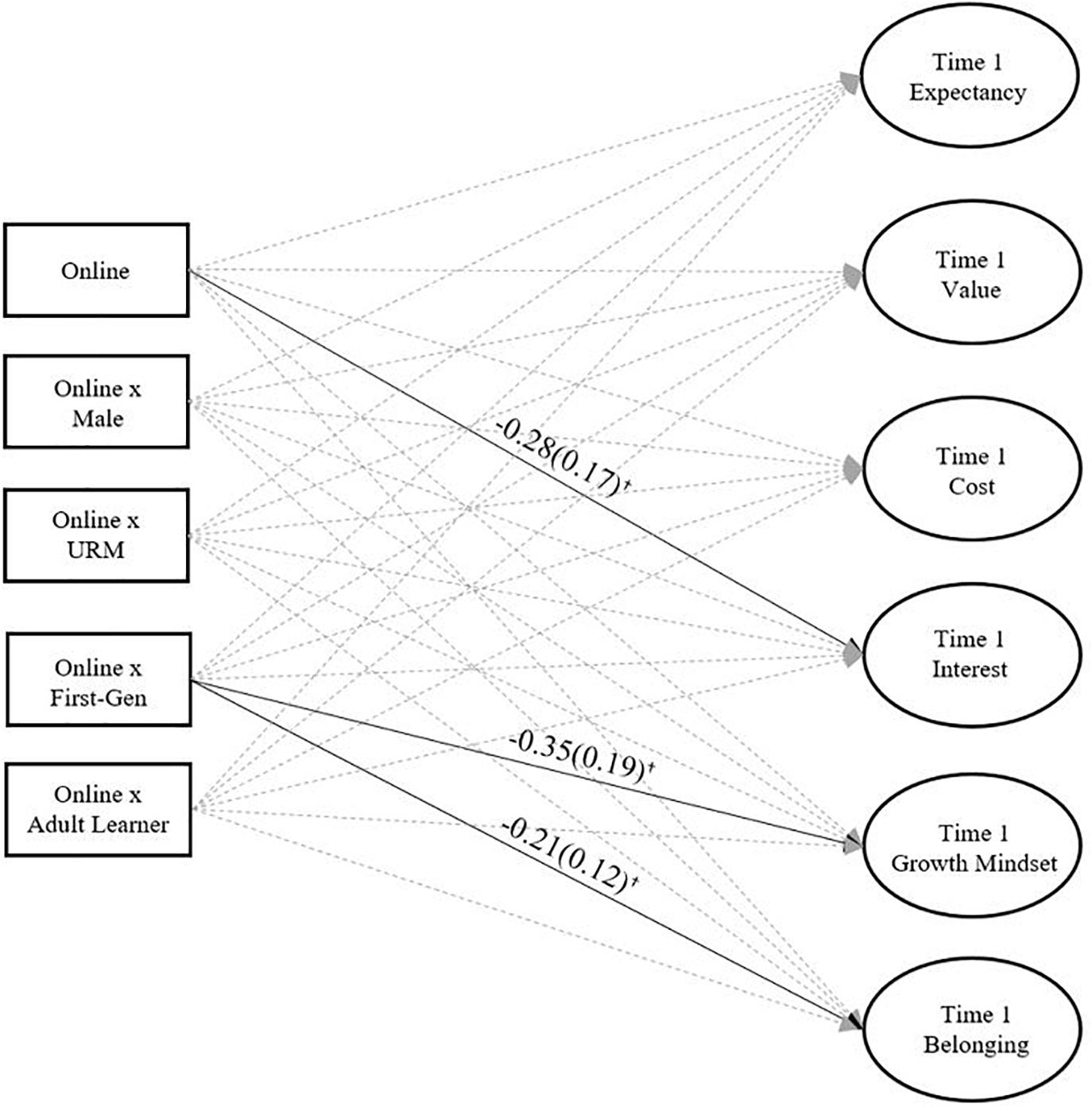

Next, we examined whether students’ incoming motivation significantly differed based on course modality (i.e., whether students in online courses were more, less, or equally motivated at the beginning of the semester as students in face-to-face courses). As displayed in Table 1, students in online courses did not differ significantly from students in face-to-face courses in any of the Time 1 motivational constructs. In terms of practical significance, effect sizes indicated that any differences were below what would be considered a small effect (i.e., Cohen’s ds < 0.30). Results suggested that face-to-face and online students did not differ in their incoming motivation. This was further supported by the SEM model predicting latent incoming student motivation scores, which fit the data well (χ2(414, N = 1,456) = 1393.28, p < 0.001; CFI: 0.95; TLI: 0.94; RMSEA: [0.038, 0.043]; SRMR: 0.033). With the exception of online course enrollment being marginally negatively associated with latent incoming interest scores (β = −0.28, p = 0.098), there were no differences between online and face-to-face students in academic motivation.

We were also interested in determining whether the effect of course modality on latent baseline student motivation was moderated by student characteristics. To assess this possibility, we included interactions of course modality and student characteristics (gender, underrepresented racial/ethnic minority status, generation status, and adult learner status) predicting latent motivation scores. As shown in Figure 2, there were no significant interactions of course modality and student characteristics predicting latent baseline expectancy, value, cost, or interest. However, the interaction of course modality and generation status was marginally negatively associated with latent incoming growth mindset and social belonging, such that first-generation students enrolled in online courses tended to report less growth mindset and less social belonging at their institution. These results suggest that course modality is unrelated to latent Time 1 student motivation (with the marginal exception of interest), and that, generally, student gender, underrepresented racial/ethnic minority status, and adult learner status do not moderate the relationship between course modality and latent student motivation. The interaction of course modality and generation status, however, is marginally negatively associated with growth mindset and social belonging.

Figure 2. Course modality and interactions of course modality and student characteristics predicting latent baseline student motivation. †p < 0.10.

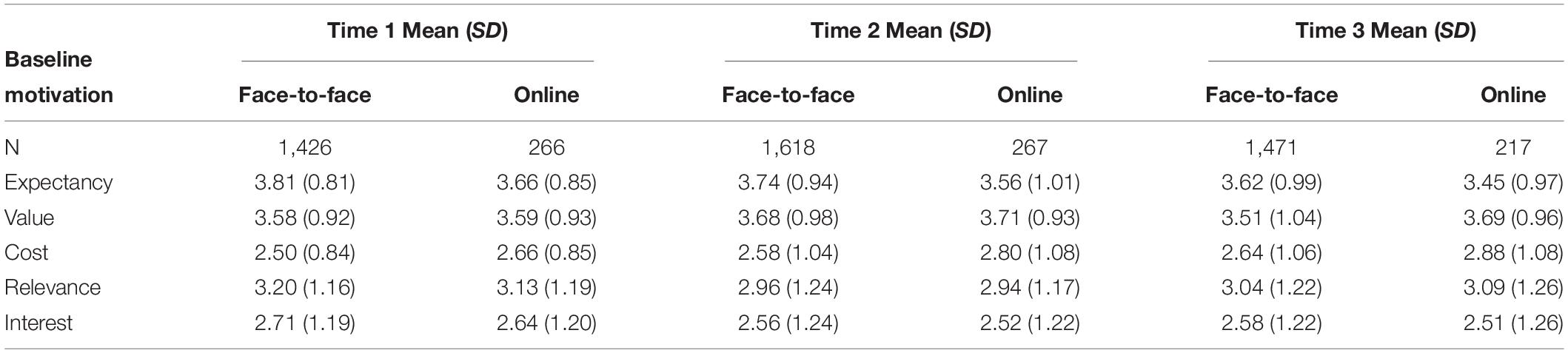

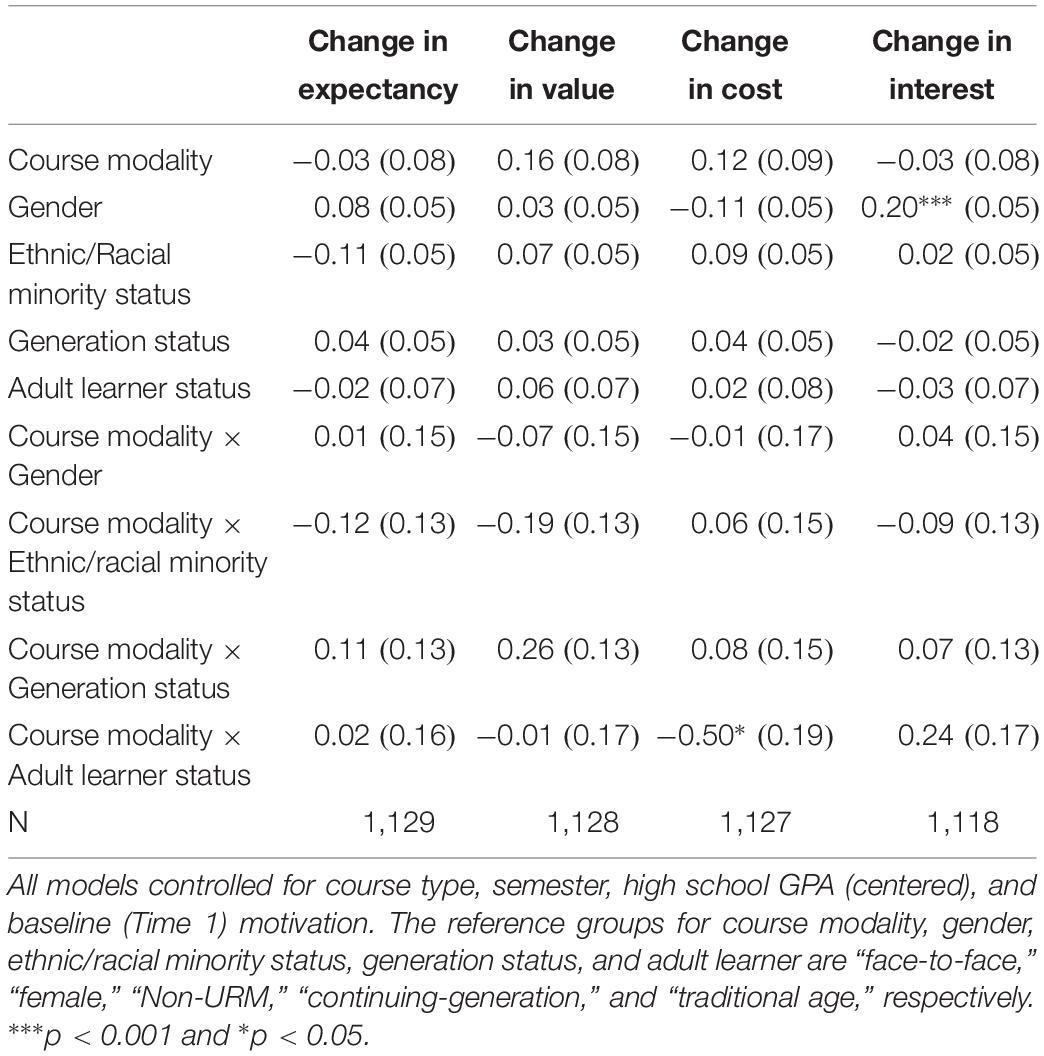

Predicting Change in Motivation Over Time by Course Modality

Next, we examined whether course modality predicted change in motivation over the course of the semester (i.e., whether students in online courses reported greater, lesser, or equal changes in motivation across the semester as students in face-to-face courses). We conducted these analyses for expectancy, value, cost, interest, and relevance because these variables were assessed at multiple points throughout the semester. In analyses considering change in motivation over time, we operationalized change as the difference in motivation from Time 1 (Week 1) to Time 3 (Week 5). Descriptive statistics for motivation composites across Time 1–Time 3 by course modality can be seen in Table 2. In order to determine whether course modality predicted change in motivation over time, we fit linear multilevel models in which Time 3 motivation was regressed on course modality for each motivational construct (i.e., expectancy, value, cost, interest, relevance). All models controlled for course type, semester, student gender, student underrepresented ethnic/racial minority status, student generation status, and student high school GPA as well as the Time 1 motivation composite for the motivation being predicted. As shown in Table 3, being enrolled in an online course was not a significant predictor of change in motivation over the semester after employing a Bonferroni correction (α = 0.01). Findings suggest that changes in motivation over the course of the semester were not a function of course modality.
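To make the corrected threshold concrete, a Bonferroni correction divides the family-wise alpha by the number of tests in the family. The sketch below assumes a nominal alpha of 0.05 spread across the five motivational constructs, which reproduces the α = 0.01 threshold reported above. The p-values are hypothetical placeholders, not the values in Table 3, and `bonferroni_alpha` is an illustrative helper name.

```python
def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return family_alpha / n_tests


constructs = ["expectancy", "value", "cost", "interest", "relevance"]

# Assumption: a nominal family-wise alpha of 0.05 across the five constructs.
alpha_per_test = bonferroni_alpha(0.05, len(constructs))

# Hypothetical p-values for the course modality coefficient (NOT from Table 3).
p_values = {
    "expectancy": 0.20,
    "value": 0.03,
    "cost": 0.45,
    "interest": 0.012,
    "relevance": 0.60,
}

# A coefficient counts as significant only if p falls below the corrected threshold.
significant = {name: p < alpha_per_test for name, p in p_values.items()}

for name in constructs:
    print(f"{name}: p = {p_values[name]:.3f}, significant = {significant[name]}")
```

In this illustration, a p-value of 0.03 would pass an uncorrected 0.05 threshold but not the corrected one, which is the situation the correction is designed to guard against.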

We further investigated whether student demographics interacting with course modality predicted change in motivation over the course of the semester (i.e., whether students in online courses reported greater, lesser, or equal changes in motivation based on demographic characteristics compared to students in face-to-face courses). In order to do this, we fit linear models in which Time 3 motivation was regressed on the interaction of course modality and each student demographic characteristic (gender, underrepresented ethnic/racial minority status, generation status, and adult learner status). All models controlled for course type, semester, student gender, student underrepresented ethnic/racial minority status, student generation status, and student high school GPA as well as the Time 1 motivation composite for the motivation being predicted. As shown in Table 3, there were no significant interactions of course modality and student demographics predicting change in motivation after employing Bonferroni corrections (α = 0.013), with the exception of the interaction of course modality and adult learner status on change in cost [β = −0.50, t(1,125) = −2.71, p = 0.007]. Adult learners in online courses tended to experience less of an increase in cost over the course of the semester than adult learners in face-to-face courses and traditional-aged learners in general. These results indicate that changes in motivation over the course of the semester were not a function of course modality and student demographics, with the exception of adult learners in online courses and their experience of cost over time.
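One way to read an interaction between two binary indicators is as a difference in differences: the online versus face-to-face gap among adult learners minus the same gap among traditional-aged learners. The sketch below illustrates this with hypothetical cell values. It ignores the covariates and Time 1 adjustment used in the models above, so it is a simplified illustration rather than a reproduction of the reported coefficient, and `interaction_contrast` is an illustrative name.

```python
from statistics import mean


def interaction_contrast(cells):
    """Difference-in-differences for a 2x2 design.

    `cells` maps (online, adult) indicator pairs to lists of outcome scores.
    """
    m = {key: mean(values) for key, values in cells.items()}
    online_gap_adult = m[(1, 1)] - m[(0, 1)]        # online minus face-to-face, adult learners
    online_gap_traditional = m[(1, 0)] - m[(0, 0)]  # same gap, traditional-aged learners
    return online_gap_adult - online_gap_traditional


# Hypothetical change-in-cost scores (NOT the study's data).
cells = {
    (0, 0): [0.5, 0.7, 0.6],  # face-to-face, traditional-aged
    (1, 0): [0.6, 0.6, 0.6],  # online, traditional-aged
    (0, 1): [0.6, 0.8, 0.7],  # face-to-face, adult learner
    (1, 1): [0.2, 0.4, 0.3],  # online, adult learner
}

contrast = interaction_contrast(cells)
print(f"Interaction contrast: {contrast:.2f}")
```

In a regression containing only the two indicators and their product, the coefficient on the product term equals this contrast. A negative value corresponds to a smaller increase in cost for online adult learners relative to what the two main effects alone would predict.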

Discussion

Online courses have become increasingly available and popular in higher education, particularly in community college (Bennett et al., 2010; Allen and Seaman, 2016). While some have lauded online courses as an opportunity to increase access for non-traditional and historically underrepresented learners (Yoo and Huang, 2013; Drew et al., 2015), others cite poor performance and high dropout rates as significant drawbacks (Patterson and McFadden, 2009; Boston and Ice, 2011). Evidence is inconsistent on whether students who engage in online learning are as motivated and successful as students who engage in traditional face-to-face learning, and for whom online courses may be most beneficial or detrimental (Phipps and Merisotis, 1999; Bernard et al., 2004; Johnson and Mejia, 2014). This study documented the motivational experiences of students in online and face-to-face courses, along with their academic performance. We focused on developmental mathematics courses, which serve academically underprepared students and are notorious barriers to graduation. Taken together, our findings suggest that there are few differences in online and face-to-face students’ incoming motivation and motivational change over time, and that motivational experiences do not differ systematically based on students’ gender, generation status, underrepresented ethnic/racial minority status, or age.

Do Face-to-Face Students Outperform Online Students?

One of the primary arguments against online learning is that online students perform worse and drop out at higher rates than face-to-face students (Harris and Parrish, 2006). However, multiple syntheses concluded that there are no significant differences between the two modalities (e.g., Russell and Russell, 1999; Bernard et al., 2004; Zhao et al., 2005) and that negative effects are only present for certain subgroups of students. Findings from the current study indicated that online learners received lower course grades, had lower pass rates, and withdrew at higher rates than their peers in face-to-face courses. Although these differences were significant, it is important to note that the effects were small (Cohen’s ds = 0.17–0.28). When interpreting these findings, we are also mindful of Johnson and Mejia’s (2014) work with community college students in California, which concluded that online students displayed negative short-term effects (i.e., course-level performance and persistence) but positive long-term outcomes (i.e., degree attainment, enrollment in a 4-year institution). Future analyses with this sample will assess participants’ longer-term outcomes, such as how many math courses they pursue and whether they are successful in future higher education or employment contexts.

When considering findings by subgroup, results suggested that the only significant interaction between student characteristics and course modality was for adult learner status. Online adult learners received significantly lower course grades and had lower pass rates than face-to-face adult learners or traditional-aged learners in either online or face-to-face courses, with a consistent marginal finding for withdrawal rates. This finding is of interest because serving adult learners is one of the most commonly cited reasons for providing online education options, and adult learners comprise a sizable percentage of the online learner population (Yoo and Huang, 2013).

Do Online Students Report Lower Motivation Than Face-to-Face Students?

Motivation is a critical predictor of academic success (Wigfield and Cambria, 2010), and has been identified as a theoretically-meaningful component of online learners’ success (Hartley and Bendixen, 2001; Hu and Kuh, 2002; Keller and Suzuki, 2004). We documented students’ motivation using an expectancy-value-cost framework (Wigfield and Eccles, 2000; Barron and Hulleman, 2015) and additional key constructs such as growth mindset and belonging. Results indicated that incoming students reported comparable expectations that they could be successful in the course, value for the course, and perceived cost of being involved in the course regardless of whether they enrolled in an online or face-to-face version of the class. This lack of difference counters any hypothesis that students may be differentially selecting to enroll in online courses because they are more or less motivated to take the course, at least among the current sample.

We were also interested in how students’ motivation changed over time, and documented students’ motivation at the beginning (Week 1) and middle (Weeks 3, 5) of the semester. We focused on this time period because it aligned with the add/drop period for the course, and consequently could be an important predictor of course drop out. Similar to findings for incoming motivational levels, descriptive results indicated that face-to-face and online students did not show differential patterns of motivational change. This suggests that, at least for the first half of the semester, online students’ changes in expectancy, value, and cost are not meaningfully different from those of face-to-face students. However, we were not able to meaningfully assess motivational change from the beginning to end of the semester given a low response rate (45%) to our survey administered in Week 12. Future research may wish to collect data on longer-term changes in motivation. Future studies could also assess motivational change at a more fine-grained level by collecting data more frequently to determine when – if ever – online and face-to-face students’ motivational trajectories diverge.

We were also interested in whether incoming academic motivation could account for differences in online and face-to-face students’ academic outcomes. Findings from our structural equation model (Figure 1) indicated no significant interaction between any of the incoming motivational variables and online versus face-to-face courses. The fact that this finding applied across a sample of students drawn from six semesters provides some assurance that these findings are replicable in the current sample of community college developmental mathematics students. Future research, however, could help determine whether this lack of relation replicates in other learning contexts, which would suggest that academic motivation is not a meaningful explanatory factor accounting for differences between online and face-to-face students’ academic success, or is unique to the community college or developmental mathematics setting.

Limitations and Future Directions

The current study provides a broad description of online students’ motivational experiences in an important setting in higher education. It also provides preliminary evidence suggesting that the small but significant differences in academic performance between online and face-to-face courses do not appear to be a function of students’ incoming motivational beliefs. Although this information contributes to our understanding of online students’ affective experiences, there are a number of additional potential explanatory mechanisms that were not assessed. Future research may wish to consider constructs such as self-regulated learning strategies (e.g., time management; Broadbent and Poon, 2015) as reasons why online and face-to-face learners may receive different academic outcomes. Students’ prior experiences in online courses may also be an important factor to consider. Like motivation, the extant literature on prior experience is mixed, with some studies finding no relation between prior online experience and course performance (e.g., Arbaugh, 2005) and others finding effects of prior online experience on retention and completion rates (e.g., Dupin-Bryant, 2004). Similarly, students’ reasons for enrolling in online courses and the percentage of courses that students take online versus face-to-face may also affect students’ academic outcomes and course motivation. Future studies should assess these background variables and account for them in subsequent analyses.

The current study was also limited to assessing short-term motivation (i.e., from the beginning to middle of the semester) and outcomes (i.e., course grade, pass rate, and withdrawal rate). However, prior research suggests that there are benefits to measuring longer-term change in motivation (Kosovich et al., 2017) and that the pattern of effects of taking online courses for short-term and long-term outcomes can vary substantially (Johnson and Mejia, 2014). Future research may wish to collect longer-term data from online and face-to-face students in terms of their motivation, perceptions of instructors, and academic outcomes. Finally, the current study was correlational. Because students chose to enroll in online or face-to-face versions of their courses, we cannot make claims regarding causal effects of online course enrollment on academic outcomes or motivational change. To enable such claims, future studies may wish to randomly assign students to complete online or face-to-face versions of courses, then assess their academic outcomes. Causal evidence from a randomized controlled trial could augment the current evidentiary basis by providing more definitive evidence on the effect of online course enrollment on student motivation and success.

Data Availability

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of Virginia Institutional Review Board and the Valencia College Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

MF contributed to the conceptual framing of the study, helped determine and execute the analytic plan, helped conduct a literature review, and was the primary author of the methods and results sections. SW contributed to the conceptual framing of the study, provided the theoretical framework from which the study was based, helped conduct a literature review, helped determine an analytic plan, was the primary author of the introduction and discussion sections, and provided substantive direction and feedback on the methods and results sections. CH contributed to the conceptual framing of the current study and the larger project from which it was drawn, oversaw all efforts to collect data, and provided substantive feedback on the manuscript.

Funding

This work was supported by the National Science Foundation (Award No. 1534835) – sole funder of this project.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.02054/full#supplementary-material

References

Allen, I. E., and Seaman, J. (2016). Online Report Card: Tracking Online Education in the United States. Babson Park, MA: Babson Survey Research Group.

Aragon, S. R., and Johnson, E. S. (2008). Factors influencing completion and non-completion of community college online courses. Am. J. Dist. Educ. 22, 146–158. doi: 10.1080/08923640802239962

Arbaugh, J. B. (2005). Is there an optimal design for on-line MBA courses? Acad. Manag. Learn. Educ. 4, 135–149. doi: 10.5465/AMLE.2005.17268561

Arghode, V., Brieger, E. W., and McLean, G. N. (2017). Adult learning theories: implications for online instruction. Eur. J. Train. Dev. 41, 593–609. doi: 10.1108/EJTD-02-2017-0014

Artino, A. R. (2007). Self-regulated learning in online education. Int. J. Instruct. Technol. Dist. Learn. 4, 3–18.

Bailey, T., Jeong, D. W., and Cho, S. W. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Econ. Educ. Rev. 29, 255–270. doi: 10.1016/j.econedurev.2009.09.002

Baker, J. D. (2004). An investigation of relationships among instructor immediacy and affective and cognitive learning in the online classroom. Internet High. Educ. 7, 1–13. doi: 10.1016/j.iheduc.2003.11.006

Barron, K. E., and Hulleman, C. S. (2015). Expectancy-value-cost model of motivation. Psychology 84, 261–271. doi: 10.1016/B978-0-08-097086-8.26099-6

Bates, D. M., Machler, M., Bolker, B. M., and Walker, S. C. (2014). Fitting Linear Mixed-Effects Models Using lme4. Available at: http://CRAN.R-project.org/package=lme4 (accessed May 22, 2019).

Bell, P. D., and Akroyd, D. (2006). Can factors related to self-regulated learning predict learning achievement in undergraduate asynchronous Web-based courses. Int. J. Instruct. Technol. Dist. Learn. 3, 5–16.

Bennett, C. F., and Monds, K. E. (2008). Online courses: the real challenge is “motivation.”. Coll. Teach. Methods Styles J. 4, 1–6. doi: 10.19030/ctms.v4i6.5553

Bennett, D. L., Lucchesi, A. R., and Vedder, R. K. (2010). For-Profit Higher Education: Growth, Innovation and Regulation. Washington, DC: Center for College Affordability and Productivity.

Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., et al. (2004). How does distance education compare with classroom instruction? A meta-analysis of the empirical literature. Rev. Educ. Res. 74, 379–439. doi: 10.3102/00346543074003379

Boston, W. E., and Ice, P. (2011). Assessing retention in online learning: an administrative perspective. Online J. Dist. Learn. Administ. 14:2.

Brinkerhoff, J., and Koroghlanian, C. M. (2007). Online students’ expectations: enhancing the fit between online students and course design. J. Educ. Comput. Res. 36, 383–393. doi: 10.2190/r728-28w1-332k-u115

Broadbent, J., and Poon, W. L. (2015). Self-regulated learning strategies & academic achievement in online higher education learning environments: a systematic review. Internet High. Educ. 27, 1–13. doi: 10.1016/j.iheduc.2015.04.007

Cercone, K. (2008). Characteristics of adult learners with implications for online learning design. AACE J. 16, 137–159. doi: 10.1016/j.apergo.2018.08.002

Chang, C. C. (2015). Alteration of influencing factors of continued intentions to use e-learning for different degrees of adult online participation. Int. Rev. Res. Open Distrib. Learn. 16, 33–61. doi: 10.19173/irrodl.v16i4.2084

Chen, K. C., and Jang, S. J. (2010). Motivation in online learning: testing a model of self-determination theory. Comput. Hum. Behav. 26, 741–752. doi: 10.1016/j.chb.2010.01.011

Chyung, S. Y. (2007). Invisible motivation of online adult learners during contract learning. J. Educ. Online 4, 1–22.

Cohen, G. L., and Garcia, J. (2008). Identity, belonging, and achievement: a model, interventions, implications. Curr. Direct. Psychol. Sci. 17, 365–369. doi: 10.1111/j.1467-8721.2008.00607.x

Coldwell, J., Craig, A., Paterson, T., and Mustard, J. (2008). Online students: relationships between participation, demographics and academic performance. Elect. J. E Learn. 6, 19–30.

Drew, J. C., Oli, M. W., Rice, K. C., Ardissone, A. N., Galindo-Gonzalez, S., Sacasa, P. R., et al. (2015). Development of a distance education program by a land-grant university augments the 2-year to 4-year STEM pipeline and increases diversity in STEM. PLoS One 10:e0119548. doi: 10.1371/journal.pone.0119548

Driscoll, A., Jicha, K., Hunt, A. N., Tichavsky, L., and Thompson, G. (2012). Can online courses deliver in-class results? A comparison of student performance and satisfaction in an online versus a face-to-face introductory sociology course. Teach. Sociol. 40, 312–331. doi: 10.1177/0092055x12446624

Dupin-Bryant, P. A. (2004). Pre-entry variables related to retention in online distance education. Am. J. Dist. Educ. 18, 199–206. doi: 10.1207/s15389286ajde1804_2

Dweck, C. S., and Leggett, E. L. (1988). A social-cognitive approach to motivation and personality. Psychol. Rev. 95, 256–273. doi: 10.1037/0033-295X.95.2.256

Eccles, J. (1983). “Expectancies, values, and academic behaviors,” in Achievement and achievement motives: Psychological and sociological approaches, ed. J. T. Spence, (San Francisco, CA: W. H. Freeman), 75–146.

Good, C., Rattan, A., and Dweck, C. S. (2012). Why do women opt out? Sense of belonging and women’s representation in mathematics. J. Pers. Soc. Psychol. 102:700. doi: 10.1037/a0026659

Green, K. C., and Wagner, E. (2011). Online education: Where is it going? What should boards know. Trusteeship 19, 24–29.

Hargis, J. (2001). Can students learn science using the internet? J. Res. Comput. Educ. 33, 475–487. doi: 10.1080/08886504.2001.10782328

Harrington, D. (1999). Teaching statistics: a comparison of traditional classroom and programmed instruction/distance learning approaches. J. Soc. Work Educ. 35, 343–352. doi: 10.1080/10437797.1999.10778973

Harris, D. M., and Parrish, D. E. (2006). The art of online teaching: online instruction versus in-class instruction. J. Technol. Hum. Serv. 24, 105–117. doi: 10.1300/J017v24n02_06

Hartley, K., and Bendixen, L. D. (2001). Educational research in the internet age: examining the role of individual characteristics. Educ. Res. 30, 22–26. doi: 10.3102/0013189x030009022

Holder, B. (2007). An investigation of hope, academics, environment, and motivation as predictors of persistence in higher education online programs. Internet High. Educ. 10, 245–260. doi: 10.1016/j.iheduc.2007.08.002

Hoskins, S. L., and Van Hooff, J. C. (2005). Motivation and ability: which students use online learning and what influence does it have on their achievement? Br. J. Educ. Technol. 36, 177–192. doi: 10.1111/j.1467-8535.2005.00451.x

Hu, S., and Kuh, G. D. (2002). Being (dis)engaged in educationally purposeful activities: the influences of student and institutional characteristics. Res. High. Educ. 43, 555–575. doi: 10.1023/A:1020114231387

Hughes, K. L., and Scott-Clayton, J. (2011). Assessing developmental assessment in community colleges. Commun. Coll. Rev. 39, 327–351. doi: 10.1097/ACM.0000000000001544

Hulleman, C. S., Barron, K. E., Kosovich, J. J., and Lazowski, R. A. (2016). “Student motivation: Current theories, constructs, and interventions within an expectancy-value framework,” in Psychosocial skills and school systems in the 21st century: Theory, research, and applications, eds A. A. Lipnevich, F. Preckel, and R. D. Roberts, (Cham: Springer), 241–278. doi: 10.1007/978-3-319-28606-8_10

Hulleman, C. S., Kosovich, J. J., Barron, K. E., and Daniel, D. B. (2017). Making connections: Replicating and extending the utility value intervention in the classroom. J. Educ. Psychol. 109, 387–404. doi: 10.1037/edu0000146

Hung, M. L., Chou, C., Chen, C. H., and Own, Z. Y. (2010). Learner readiness for online learning: Scale development and student perceptions. Comput. Educ. 55, 1080–1090. doi: 10.1016/j.compedu.2010.05.004

Johnson, H. P., and Mejia, M. C. (2014). Online learning and student outcomes in California’s community colleges. Washington, D.C: Public Policy Institute.

Jones, A., and Issroff, K. (2005). Learning technologies: affective and social issues in computer-supported collaborative learning. Comput. Educ. 44, 395–408. doi: 10.1016/j.compedu.2004.04.004

Joo, Y. J., Bong, M., and Choi, H. J. (2000). Self-efficacy for self-regulated learning, academic self-efficacy, and internet self-efficacy in Web-based instruction. Educ. Technol. Res. Dev. 48, 5–17. doi: 10.1016/j.chb.2016.06.014

Karimi, S. (2016). Do learners’ characteristics matter? An exploration of mobile-learning adoption in self-directed learning. Comput. Hum. Behav. 63, 769–776. doi: 10.1016/j.chb.2016.06.014

Ke, F., and Xie, K. (2009). Toward deep learning for adult students in online courses. Internet High. Educ. 12, 136–145. doi: 10.1016/j.iheduc.2009.08.001

Keller, J., and Suzuki, K. (2004). Learner motivation and e-learning design: a multinationally validated process. J. Educ. Media 29, 229–239. doi: 10.1080/1358165042000283084

Kilgore, D., and Rice, P. J. (2003). Meeting the special needs of adult students (No. 102). San Francisco, CA: Jossey-Bass.

Kim, K. J., and Frick, T. W. (2011). Changes in student motivation during online learning. J. Educ. Comput. Res. 44, 1–23. doi: 10.2190/ec.44.1.a

Kosovich, J. J., Flake, J. K., and Hulleman, C. S. (2017). Understanding short-term motivation trajectories: a parallel process model of expectancy-value motivation. Contemp. Educ. Psychol. 49, 130–139. doi: 10.1016/j.cedpsych.2017.01.004

Kosovich, J. J., Hulleman, C. S., Barron, K. E., and Getty, S. (2015). A practical measure of student motivation: establishing validity evidence for the expectancy-value-cost scale in middle school. J. Early Adolesc. 35, 790–816. doi: 10.1177/0272431614556890

Lai, H. J. (2018). Investigating older adults’ decisions to use mobile devices for learning, based on the unified theory of acceptance and use of technology. Interact. Learn. Environ. 26, 1–12. doi: 10.1080/10494820.2018.1546748

Levin, J. (2007). Nontraditional Students and Community Colleges: The Conflict of Justice and Neoliberalism. New York, NY: Palgrave Macmillan.

Lim, C. K. (2001). Computer self-efficacy, academic self-concept, and other predictors of satisfaction and future participation of adult distance learners. Am. J. Dist. Educ. 15, 41–51. doi: 10.1080/08923640109527083

Lynch, R., and Dembo, M. (2004). The relationship between self-regulation and online learning in a blended learning context. Int. Rev. Res. Open Distrib. Learn. 5, 1–16. doi: 10.19173/irrodl.v5i2.189

Ma, J., and Baum, S. (2016). Trends in community colleges: enrollment, prices, student debt, and completion. Coll. Board Res. Brief 4, 1–23.

Manai, O., Yamada, H., and Thorn, C. (2016). “Real-time indicators and targeted supports: Using online platform data to accelerate student learning,” in Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, (Edinburgh), 183–187.

Mullen, G. E., and Tallent-Runnels, M. K. (2006). Student outcomes and perceptions of instructors’ demands and support in online and traditional classrooms. Internet High. Educ. 9, 257–266. doi: 10.1016/j.iheduc.2006.08.005

O’Dwyer, L. M., Carey, R., and Kleiman, G. (2007). A study of the effectiveness of the Louisiana Algebra I online course. J. Res. Technol. Educ. 39, 289–306. doi: 10.1080/15391523.2007.10782484

Ortiz-Rodríguez, M., Telg, R. W., Irani, T., Roberts, T. G., and Rhoades, E. (2005). College students’ perceptions of quality in distance education: the importance of communication. Q. Rev. Dist. Educ. 6, 97–105.

Park, J. (2007). “Factors related to learner dropout in online learning,” in Proceedings of the 2007 Academy of Human Resource Development Annual Conference, eds F. M. Nafukho, T. H. Chermack, and C. M. Graham, (Indianapolis, IN: AHRD), 251–258.

Park, J. H., and Choi, H. J. (2009). Factors influencing adult learners’ decision to drop out or persist in online learning. J. Educ. Technol. Soc. 12, 207–217.

Park, S., and Yun, H. (2018). The influence of motivational regulation strategies on online students’ behavioral, emotional, and cognitive engagement. Am. J. Dist. Educ. 32, 43–56. doi: 10.1080/08923647.2018.1412738

Patterson, B., and McFadden, C. (2009). Attrition in online and campus degree programs. Online J. Dist. Learn. Administ. 12, 1–8.

Phipps, R., and Merisotis, J. (1999). What’s the Difference? A Review of Contemporary Research on the Effectiveness of Distance Learning in Higher Education. Washington, DC: Institute for Higher Education Policy (accessed May 11, 2019).

Richardson, J. C., and Newby, T. (2006). The role of students’ cognitive engagement in online learning. Am. J. Dist. Educ. 20, 23–37. doi: 10.1207/s15389286ajde2001_3

Rosseel, Y. (2012). lavaan: an R package for structural equation modeling. J. Stat. Softw. 48, 1–36. doi: 10.3389/fpsyg.2014.01521

Rovai, A. P. (2002). A preliminary look at the structural differences of higher education classroom communities in traditional and ALN courses. J. Asynchron. Learn. Networks 6, 41–56.

Russell, G., and Russell, N. (1999). Cyberspace and school education. Westminster Stud. Educ. 22, 7–17. doi: 10.1080/0140672990220102

Silva, E., and White, T. (2013). Pathways to Improvement: Using Psychological Strategies to Help College Students Master Developmental Math. Stanford, CA: Carnegie Foundation for the Advancement of Teaching.

Steinweg, S. B., Davis, M. L., and Thomson, W. S. (2005). A comparison of traditional and online instruction in an introduction to special education course. Teach. Educ. Spec. Educ. 28, 62–73. doi: 10.1177/088840640502800107

Summers, J. J., Waigandt, A., and Whittaker, T. A. (2005). A comparison of student achievement and satisfaction in an online versus a traditional face-to-face statistics class. Innovat. High. Educ. 29, 233–250. doi: 10.1007/s10755-005-1938-x

Wang, A. Y., and Newlin, M. H. (2002). Predictors of web-student performance: the role of self-efficacy and reasons for taking an on-line class. Comput. Hum. Behav. 18, 151–163. doi: 10.1016/S0747-5632(01)00042-5

Wigfield, A., and Cambria, J. (2010). Students’ achievement values, goal orientations, and interest: definitions, development, and relations to achievement outcomes. Dev. Rev. 30, 1–35. doi: 10.1016/j.dr.2009.12.001

Wigfield, A., and Eccles, J. S. (2000). Expectancy–value theory of achievement motivation. Contemp. Educ. Psychol. 25, 68–81. doi: 10.1006/ceps.1999.1015

Xie, K. U. I., Debacker, T. K., and Ferguson, C. (2006). Extending the traditional classroom through online discussion: the role of student motivation. J. Educ. Comput. Res. 34, 67–89. doi: 10.2190/7bak-egah-3mh1-k7c6

Xu, D., and Jaggars, S. S. (2013). The impact of online learning on students’ course outcomes: evidence from a large community and technical college system. Econ. Educ. Rev. 37, 46–57. doi: 10.1016/j.econedurev.2013.08.001

Yoo, S. J., and Huang, W. D. (2013). Engaging online adult learners in higher education: motivational factors impacted by gender, age, and prior experiences. J. Contin. High. Educ. 61, 151–164. doi: 10.1080/07377363.2013.836823

Keywords: developmental mathematics, community college, online learning, academic motivation, adult learners

Citation: Francis MK, Wormington SV and Hulleman C (2019) The Costs of Online Learning: Examining Differences in Motivation and Academic Outcomes in Online and Face-to-Face Community College Developmental Mathematics Courses. Front. Psychol. 10:2054. doi: 10.3389/fpsyg.2019.02054

Received: 04 June 2019; Accepted: 23 August 2019;

Published: 10 September 2019.

Edited by:

Sanna Järvelä, University of Oulu, Finland

Reviewed by:

Jesús-Nicasio García-Sánchez, Universidad de León, Spain

Gina L. Peyton, Nova Southeastern University, United States

Copyright © 2019 Francis, Wormington and Hulleman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michelle K. Francis, michellefrancis@virginia.edu; Stephanie V. Wormington, svw3f@virginia.edu
