- 1 Department of Epidemiology, School of Medicine, University of California Irvine, Irvine, CA, USA

- 2 Department of Epidemiology and Biostatistics, School of Public Health, Texas A&M Health Science Center, College Station, TX, USA

- 3 Department of Health Promotion and Behavior, College of Public Health, University of Georgia, Athens, GA, USA

- 4 Department of Health Policy and Management, School of Public Health, Texas A&M Health Science Center, College Station, TX, USA

- 5 Division of Immunology and Rheumatology, Department of Medicine, Stanford University, Stanford, CA, USA

- 6 CVS Health, Baltimore, MD, USA

- 7 Center for Healthy Aging, National Council on Aging, Washington, DC, USA

- 8 Department of Health Promotion and Community Health Sciences, School of Public Health, Texas A&M Health Science Center, College Station, TX, USA

Objectives: To evaluate the concordance between self-reported data and variables obtained from Medicare administrative data in terms of chronic conditions and health care utilization.

Design: Retrospective observational study.

Participants: We analyzed data from a sample of Medicare beneficiaries who were part of the National Study of Chronic Disease Self-Management Program (CDSMP) and were eligible for the Centers for Medicare and Medicaid Services (CMS) pilot evaluation of CDSMP (n = 119).

Methods: Self-reported and Medicare claims-based chronic conditions and health care utilization were examined. Percent of consistent numbers, kappa statistic (κ), and Pearson’s correlation coefficient were used to evaluate concordance.

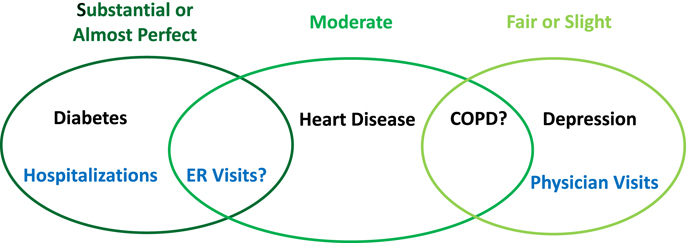

Results: The two data sources had substantial agreement for diabetes and chronic obstructive pulmonary disease (COPD) (κ = 0.75 and κ = 0.60, respectively), moderate agreement for cancer and heart disease (κ = 0.50 and κ = 0.47, respectively), and fair agreement for depression (κ = 0.26). With respect to health care utilization, the two data sources had almost perfect or substantial concordance for number of hospitalizations (κ = 0.69–0.79), moderate concordance for ED care utilization (κ = 0.45–0.61), and generally low agreement for number of physician visits (κ ≤ 0.31).

Conclusion: Either self-reports or claim-based administrative data for diabetes, COPD, and hospitalizations can be used to analyze Medicare beneficiaries in the US. Yet, caution must be taken when only one data source is available for other types of chronic conditions and health care utilization.

Introduction

Chronic conditions and health care utilization are important measurements in health interventions and other health-related studies. These measures may be captured either by patient self-reports or through some type of administrative data. Both types of data have advantages and limitations. Self-report data are cost efficient and inclusive of all sources of health care, but suffer from recall bias and inaccuracy. Conversely, claim-based administrative data may be more objective and accurate, but they are limited by coding errors and by their inability to capture out-of-plan use.

Although investigated by multiple research groups in the past (1–19), the concordance between self-reports and administrative data is not well established. The accuracy of self-reported chronic conditions has been found to vary across conditions (1, 4, 9, 11–16). Diabetes usually has substantial to almost perfect agreement between self-reported status and diagnosis from medical records or claim-based data (4, 9, 11–16). Yet, the validity of self-reports for other chronic conditions was not as high. For example, fair to moderate agreement between self-reports and claim-based data has been reported for heart disease in both US veterans (11) and older adults (13) as well as a national sample of Taiwanese (12). The concordance of the two data sources for mental disorders such as depression is usually fair or slight in various settings (4, 11, 12, 15). For chronic obstructive pulmonary disease (COPD), previous reports have been inconsistent. Some studies found moderate to substantial concordance between self-reported and administrative data among older emergency department patients (5) and hospitalized patients (16). But other studies found only fair agreement in a large sample of the general population in Ontario, Canada (9), in the US Veterans Affairs health care setting (11), and among participants of the 2005 Taiwan National Health Interview Survey (12).

With respect to health care utilization, some researchers reported that self-reports and administrative claims match better when the health event is more salient to the individual (2, 3, 6, 12, 13). For instance, most previous studies reported substantial agreement between self-reported hospitalizations and inpatient stays identified from administrative data (2, 3, 6, 12, 13, 17). A few studies investigated the concordance for emergency room visits between the two data sources and found generally good agreement, but the degree of agreement was lower than that for hospitalizations in the same study (3, 12). Conversely, the agreement for outpatient physician visits was usually low (2, 3, 6, 13). A recent study reported that age and aging had an important effect on the concordance: older participants tended to have a lower rate of concordant reporting (13). We have summarized the existing evidence and our hypotheses for the concordance of different chronic conditions and health care utilization as a conceptual framework shown in Figure 1.

In this study, we aim to investigate concordance among Medicare beneficiaries participating in an evidence-based chronic disease management program whose participants were older adults with at least one common chronic condition. Specifically, the pilot evaluation of the Chronic Disease Self-Management Program (CDSMP) examined the impact of the CDSMP on health care utilization and costs in a sample of Medicare beneficiaries who are part of the National Study of CDSMP (20, 21). This pilot evaluation was also designed to inform the Centers for Medicare and Medicaid Services (CMS) about the concordance between self-reported data collected by the study questionnaires and corresponding measurements identified using Medicare administrative data.

Materials and Methods

Participants

The CMS pilot evaluation of CDSMP was designed as a retrospective observational study of adults enrolled in an evidence-based self-management program for chronic disease. The Stanford CDSMP aids individuals with chronic diseases to develop self-management skills that improve health status through an evidence-based disease prevention model in community-based settings. CDSMP workshops were delivered throughout the US by 22 licensed sites in 17 states that enrolled respondents between August 2010 and April 2011 (21). Written informed consent was obtained from 1,170 individuals to collect and use survey data about health status, health care utilization, and other self-reported health care measures relevant to a participant’s chronic conditions. Part of the survey data included the participant’s name, mailing address, state, ZIP code, birth date, and gender.

The pilot evaluation study was based on a subset of CDSMP respondents who (1) were at least age 65.5 years at the beginning of the National Study of CDSMP; (2) reported having Medicare in their CDSMP survey responses; (3) actively consented to the CMS study (i.e., agreed to have their self-reported survey data linked to Medicare Administrative Data); and (4) did not have Health Maintenance Organization (HMO) enrollment in the 18 months before the CDSMP class start date. HMO enrollees were excluded because most Medicare Advantage payments would not be captured in the study datasets. Among the 1,170 CDSMP participants, 676 of them were 65.5 years or older at the beginning of the program and reported having Medicare. However, only 267 of them consented to participate in the CMS pilot evaluation.
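The four inclusion criteria above can be read as a single filter over survey respondents. The following is a minimal Python sketch of that reading, not the study's actual selection code; the `Respondent` record and its field names are hypothetical, and the HMO criterion is simplified to a count of enrolled months.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    # All field names are hypothetical, chosen only for this illustration.
    age_at_study_start: float   # age in years when the National Study began
    reports_medicare: bool      # Medicare coverage reported in the survey
    consented_to_cms: bool      # actively consented to data linkage
    hmo_months_prior_18: int    # months of HMO enrollment in the prior 18 months


def is_eligible(r: Respondent) -> bool:
    """Apply the four inclusion criteria described in the text."""
    return (
        r.age_at_study_start >= 65.5
        and r.reports_medicare
        and r.consented_to_cms
        and r.hmo_months_prior_18 == 0
    )
```

In the study itself the HMO exclusion was applied after linkage to Medicare data, so in practice the last condition would be evaluated at a later stage than the first three.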

Only those ZIP Codes that housed either a CDSMP workshop or a consented participant’s residence were identified for Medicare administrative data extracts. The details of the linking process are described elsewhere (22). Briefly, the Medicare Vital Status File with names and addresses was used to identify the correct beneficiary identification number (BIN) for each consented participant. The variables in the Vital Status File relevant to linking CDSMP participants to their Medicare utilization data included beneficiary name, beneficiary date of birth, beneficiary gender, beneficiary mailing address, beneficiary state, and beneficiary ZIP code. Following a block-based fuzzy matching algorithm, we successfully linked 208 CDSMP participants to their Medicare administrative data, representing a 78% linkage rate (out of 267 consented participants). Among these 208 linked individuals, 89 of them were excluded because they had HMO enrollment in the 18 months before the CDSMP class start date. Thus, the final sample size of the current study is 119.
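The linkage algorithm itself is documented in reference 22 and is not reproduced here. As a rough illustration of what block-based fuzzy matching means in general, the Python sketch below groups beneficiary records into blocks on exact fields and then compares names approximately within each block. The choice of blocking fields, the record keys (`id`, `bin`, `name`, `zip`, `dob`, `gender`), the use of `difflib.SequenceMatcher`, and the 0.85 threshold are all assumptions made for this example.

```python
from collections import defaultdict
from difflib import SequenceMatcher


def block_key(record):
    # Assumption: block on fields expected to match exactly.
    return (record["zip"], record["dob"], record["gender"])


def name_similarity(a, b):
    """Similarity in [0, 1] between two names, ignoring case and outer spaces."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def link(participants, beneficiaries, threshold=0.85):
    """Map each participant id to the best-matching beneficiary number, if any."""
    blocks = defaultdict(list)
    for bene in beneficiaries:
        blocks[block_key(bene)].append(bene)

    links = {}
    for person in participants:
        # Only records in the same block are compared, which keeps the
        # number of fuzzy comparisons small.
        scored = [
            (name_similarity(person["name"], bene["name"]), bene["bin"])
            for bene in blocks.get(block_key(person), [])
        ]
        if scored:
            score, bene_number = max(scored)
            if score >= threshold:
                links[person["id"]] = bene_number
    return links
```

Blocking trades a small risk of missed matches (for example, a mistyped ZIP code puts a person in the wrong block) for far fewer pairwise comparisons, which may partly explain why linkage rates in such designs fall below 100%.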

The pilot evaluation was carried out in accordance with the recommendations by the IRBs of Stanford University and Texas A&M University with written informed consent from all subjects.

Data Sources

Self-Reported Data

Participants of the National Study of CDSMP completed questionnaires at three time points: baseline, 6 months, and 12 months after program enrollment. Self-reported questionnaires collected information about participants’ demographic characteristics, chronic conditions, health-related behaviors, and health care utilization.

At baseline, the CDSMP participants were asked to report the number and type of chronic conditions with which they had been diagnosed. The survey question was “Please indicate below which chronic condition(s) you have (check all that apply).” The response options included type 2 diabetes; type 1 diabetes; asthma; chronic bronchitis, emphysema, or COPD; other lung disease; high blood pressure; heart disease; arthritis or other rheumatic disease; cancer; depression; anxiety or other emotional/mental health condition; and other chronic condition. Depression was also measured using the PHQ-8, where a PHQ-8 score of 10 or higher is defined as depression (23). Health care utilization was measured by a series of self-reported items, asking respondents to indicate the number of non-emergency physician visits they had (physician visits), number of emergency room visits (ER visits), and number of times hospitalized for one night or longer (hospital stays) in the past 6 months.
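The PHQ-8 classification mentioned above amounts to summing eight item scores and applying the cutoff of 10. A minimal Python sketch follows, assuming the standard PHQ-8 item scoring of 0 to 3 per item; the function names are illustrative only.

```python
def phq8_total(item_scores):
    """Sum the eight PHQ-8 items, each scored 0-3 (total range 0-24)."""
    if len(item_scores) != 8 or any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("PHQ-8 needs eight item scores, each between 0 and 3")
    return sum(item_scores)


def phq8_depression(item_scores, cutoff=10):
    """Classify as depressed when the total reaches the cutoff used in the text."""
    return phq8_total(item_scores) >= cutoff
```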

Claims-Based Data

Variables from several Medicare administrative data files were requested. These included the Vital Status File, Beneficiary Annual Summary Files (BASF), Medicare fee-for-service institutional claim summary and revenue line data files, Medicare fee-for-service non-institutional claim summary and claim line data files, the hierarchical condition category (HCC) concurrent risk scores and indicators, and MedPAR data. Beneficiary unique identifiers were then linked across the other datasets to select the relevant data for the concordance analysis of the current study.

Claims-based chronic conditions were identified through HCC chronic condition indicators (24). Specifically, variables hcc_15_cd to hcc_19_cd and hirchcl_15_cd to hirchcl_18_cd were used to identify those with diabetes; hcc_108_cd was used to identify those with COPD; hcc_80_cd to hcc_83_cd, hcc_92_cd, and hirchcl_81_cd, hirchcl_82_cd were used for heart disease identification; and hcc_55_cd was used to identify depression. To identify physician visits, the non-institutional physician/supplier data file was used to find all claims by the following combinations of BETOS codes and HCPCS codes: M1A: 99201–99205 or M1B: 99211–99215. The number of physician office visits was then calculated by counting the number of unique claim-from-date values from the claims identified. Outpatient events were identified from institutional claims data by counting the number of unique claim-from-date values for each beneficiary among the claims with a NCH claim type code of 40, excluding outpatient ER utilization. ER utilization was calculated by adding the number of unique claim-from-date values from institutional claims line files using the revenue center code values of 0450–0459 and 0981. Inpatient stays were identified by counting the number of unique claim-from-date values among the claims with a NCH claim type code of 60 or 61 in institutional claims data. Claims for those beneficiaries seen in the ER and admitted to the hospital were counted as inpatient stays only, not ER care utilization.
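The counting rules in this paragraph share one pattern: filter claims by code, then count distinct claim-from dates per beneficiary. The Python sketch below illustrates that pattern with the code values given in the text. The dictionary keys (`bene_id`, `from_date`, `betos`, `hcpcs`, `claim_type`, `revenue_code`) are hypothetical stand-ins for the actual file layout, and the way ER visits followed by admission are excluded is an assumption, since the text does not describe the mechanism.

```python
from collections import defaultdict

# BETOS category -> HCPCS office-visit codes, as listed in the text.
OFFICE_VISIT_CODES = {
    "M1A": {str(c) for c in range(99201, 99206)},  # 99201-99205
    "M1B": {str(c) for c in range(99211, 99216)},  # 99211-99215
}
ER_REVENUE_CODES = {f"{c:04d}" for c in range(450, 460)} | {"0981"}
INPATIENT_CLAIM_TYPES = {"60", "61"}


def unique_date_counts(claims, keep):
    """Count distinct claim-from dates per beneficiary among claims passing `keep`."""
    dates = defaultdict(set)
    for claim in claims:
        if keep(claim):
            dates[claim["bene_id"]].add(claim["from_date"])
    return {bene: len(d) for bene, d in dates.items()}


def office_visits(non_institutional_claims):
    return unique_date_counts(
        non_institutional_claims,
        lambda c: c["hcpcs"] in OFFICE_VISIT_CODES.get(c["betos"], set()),
    )


def inpatient_stays(institutional_claims):
    return unique_date_counts(
        institutional_claims, lambda c: c["claim_type"] in INPATIENT_CLAIM_TYPES
    )


def er_visits(institutional_lines):
    # Assumption: an ER revenue line on an inpatient claim marks an admission
    # through the ER, so it is left to the inpatient count.
    return unique_date_counts(
        institutional_lines,
        lambda c: c["revenue_code"] in ER_REVENUE_CODES
        and c["claim_type"] not in INPATIENT_CLAIM_TYPES,
    )
```

Counting distinct dates rather than claims means that several claims on the same day collapse into one event, which is one plausible source of disagreement with self-reported visit counts.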

Statistical Analyses

Concordance for various chronic conditions was evaluated using the kappa statistic (κ). In addition, because the magnitude of the kappa statistic is highly influenced by the prevalence of the condition as well as the bias between the two data sources, we also reported several other values, including the bias index (BI), prevalence index (PI), and the prevalence-adjusted bias-adjusted kappa (PABAK). The BI ranges from 0 to 1, with 0 indicating no bias and 1 implying that one data source never identifies the condition while the other source always does. The PI also ranges from 0 to 1, with 0 indicating that the prevalence of the condition is 50% and 1 indicating that the prevalence is 0 or 100%. PABAK reflects the concordance under a hypothetically ideal situation, where no prevalence or bias effects exist. On its own, PABAK is uninformative. It should always be presented along with the kappa statistic, to inform readers about the possible effects of prevalence and bias (25, 26). For health care utilization variables, the following steps were taken to assess the concordance between self-reports and claims data: (1) calculate the percent of participants with the same number of health care utilization events in the same time period from the two data sources; (2) calculate rates of concordance by considering a discrepancy of ±1 visit as concordance; (3) calculate the Pearson’s correlation coefficient between the numbers of utilization events from the two sources; (4) dichotomize each utilization variable to a yes/no variable for any utilization in the past 6 months and then estimate the kappa statistic, BI, PI, and PABAK to assess the degree of agreement between the dichotomized utilization variables.
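For readers less familiar with these indices, the Python sketch below computes them from a 2×2 agreement table using their standard definitions. The cell labels are an assumption of this example: `a` is the number of participants flagged by both sources, `b` and `c` are the two kinds of disagreement, and `d` is the number flagged by neither.

```python
def agreement_indices(a, b, c, d):
    """Kappa, bias index, prevalence index, and PABAK for a 2x2 table.

    a: yes in both sources     b: yes in source 1 only
    c: yes in source 2 only    d: no in both sources
    """
    n = a + b + c + d
    po = (a + d) / n                                       # observed agreement
    pe = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2  # chance agreement
    if pe == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return {
        "kappa": (po - pe) / (1 - pe),
        "BI": abs(b - c) / n,     # difference in prevalence between sources
        "PI": abs(a - d) / n,     # imbalance between yes-yes and no-no cells
        "PABAK": 2 * po - 1,      # kappa with prevalence and bias effects removed
    }
```

With the made-up table (a, b, c, d) = (10, 5, 5, 80), this gives κ ≈ 0.61 and PABAK = 0.80 with PI = 0.70 and BI = 0, the kind of gap between κ and PABAK that a high PI produces. The four quantities are tied together by the algebraic identity κ = (PABAK − PI² + BI²) / (1 − PI² + BI²), which makes explicit why κ falls as PI rises.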

Results

Sample Characteristics

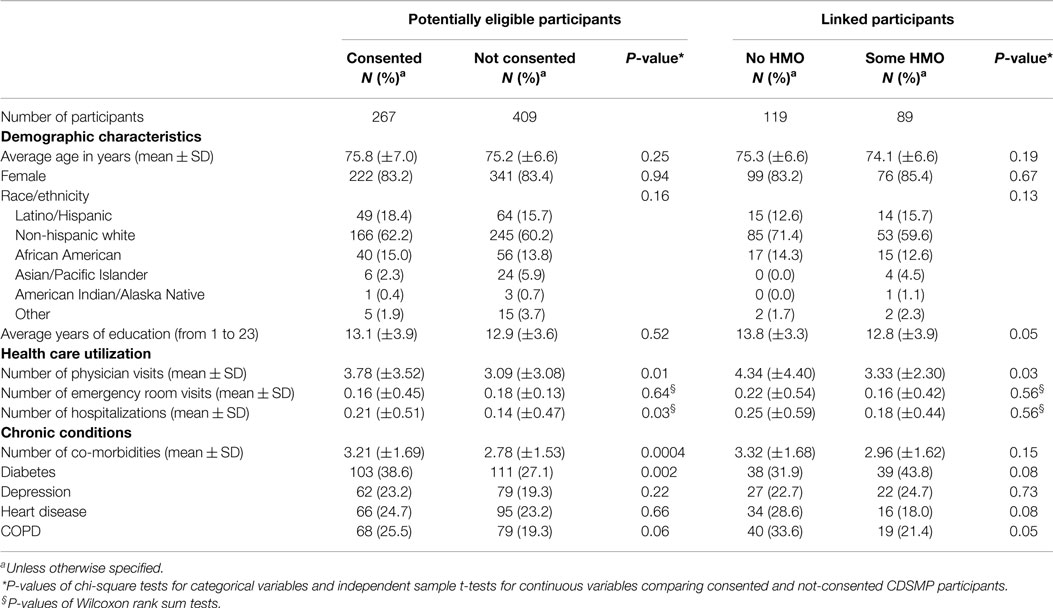

In total, 267 CDSMP participants (39% of all potentially eligible participants) consented to the pilot evaluation. Table 1 shows that the average age, gender composition, race distribution, and years of education were not significantly different between those who consented and those who did not consent. However, the consented respondents had significantly more physician visits (3.78 vs. 3.09, P = 0.01) and more hospitalizations (0.21 vs. 0.14, P = 0.03) at baseline than the respondents who did not consent. Furthermore, the consented respondents had significantly more co-morbidities (3.21 vs. 2.78, P = 0.0004) and a higher rate of diabetes (38.6 vs. 27.1%, P = 0.002).

Among the 208 CDSMP participants who were linked to their available Medicare administrative data, 119 had no HMO coverage and were eligible for study analyses. The linked participants with and without HMO coverage did not differ significantly for most of the characteristics. Yet, the participants without HMO coverage had more years of education (13.8 vs. 12.8, p = 0.05) and had more baseline physician visits (4.34 vs. 3.33, p = 0.03) than those who had some HMO coverage.

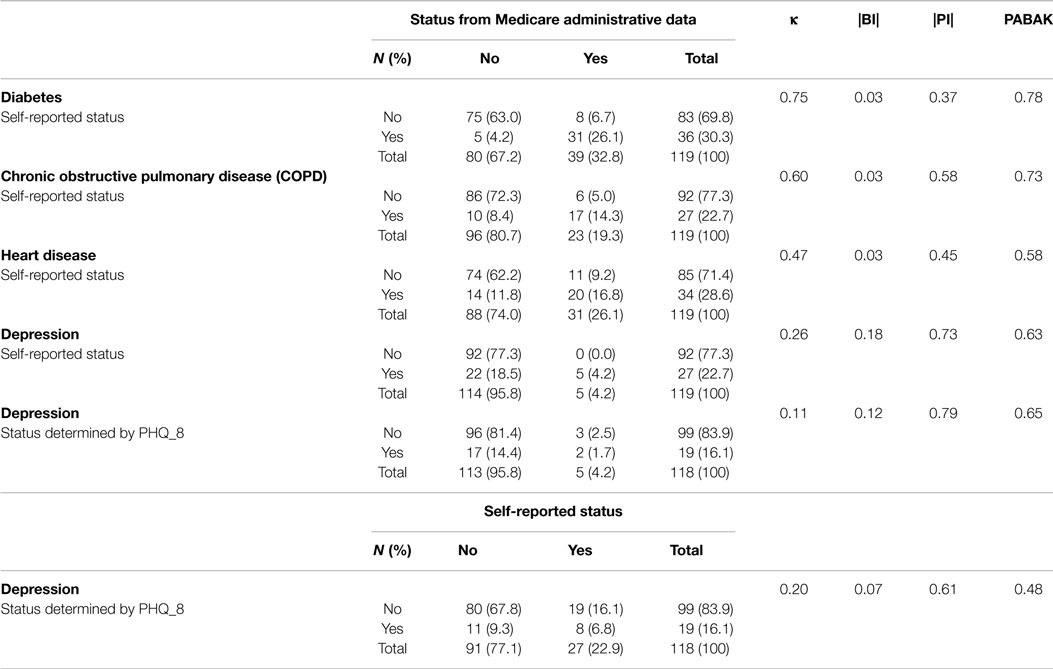

Chronic Disease Status

Table 2 presents the concordance analysis results for various chronic conditions. At baseline, the two data sources had substantial agreement for diabetes and COPD status. The kappa statistics for the agreement between the two data sources for these two conditions were 0.75 for diabetes and 0.60 for COPD. Self-reports and Medicare administrative data had moderate agreement for heart disease, with a kappa statistic of 0.47. All these conditions had very small BI (<0.03), suggesting similar prevalence rates in the two data sources. Although they all had relatively high PI, the values of PABAK were similar to or slightly higher than the corresponding kappa statistics.

The two data sources had fair agreement for depression. All of the 22 inconsistent participants self-reported having depression at baseline, but no depression or bi-polar disorder-related claim was identified in the Medicare administrative data in 2009 or 2010. The kappa statistic for this variable was 0.26. Additionally, the depression status determined by PHQ-8 had only slight agreement with either self-reported depression (κ = 0.20) or Medicare administrative data (κ = 0.11). The BI for depression was between 0.11 and 0.26, whereas the PI for this condition ranged from 0.61 to 0.79. In this case, the PABAK values (0.48–0.65) were substantially higher than the corresponding kappa statistics (0.11–0.26).

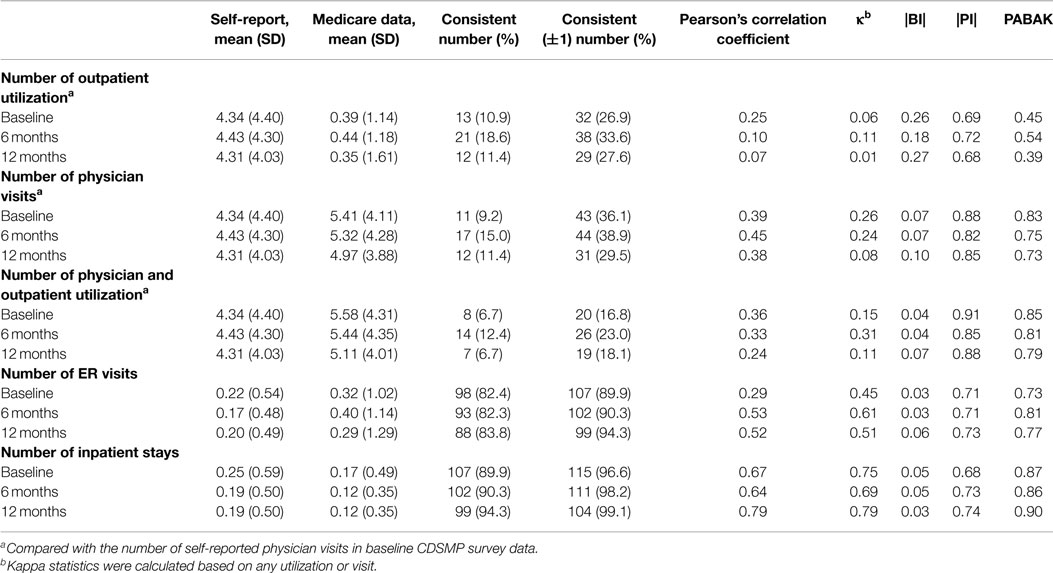

Health Care Utilization

From the Medicare administrative data, two variables related to physician encounters were identified: (1) the number of outpatient visits from the institutional claims files and (2) the number of physician office visits from the non-institutional claims files. As shown in Table 3, neither of these two variables had good concordance with self-reported physician visits, even when a difference of ±1 between two variables is considered concordant. With the exception of the number of outpatient visits, the other two measures had very low BI (≤0.1). All three measures had very high PI, which led to substantially higher PABAK than the corresponding kappa statistics.

Self-reports and Medicare administrative data had moderate agreement with respect to ER utilization. Specifically, 82% or more of the linked CDSMP participants had the same number of ER visits in both data sources at each time point. When comparing the dichotomized status of ER visits (yes/no for any ER visits), the kappa statistic for the agreement of the two data sources was 0.45 at baseline, 0.61 at 6-month, and 0.51 at 12-month follow-up. A high percentage (i.e., 89.9, 90.3, and 94.3%) of the linked participants had the same number of hospitalizations in both data sources at the three time points. When comparing the dichotomized status of inpatient visits (yes/no for any hospitalization), the kappa statistic for the agreement of the two data sources was 0.75, 0.69, and 0.79 at baseline, 6-month, and 12-month follow-up, respectively, indicating substantial agreement.

The BI for ER visits and hospitalizations was small (0.03–0.06), but the PI for them was relatively large (0.68–0.74). The PABAKs for both ER utilizations and inpatient visits were substantially higher than their corresponding kappa statistics.
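The utilization results in this section rest on the four steps listed under Statistical Analyses: exact agreement, agreement within ±1 visit, Pearson’s correlation, and kappa on the dichotomized any-use indicator. A minimal Python sketch of those steps follows; the function names are illustrative, and any counts passed to them in an example would be made up rather than study data.

```python
from math import sqrt


def percent_agree(self_counts, claim_counts, tolerance=0):
    """Percent of participants whose two counts differ by at most `tolerance`."""
    pairs = list(zip(self_counts, claim_counts))
    hits = sum(abs(s - c) <= tolerance for s, c in pairs)
    return 100.0 * hits / len(pairs)


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def any_use_table(self_counts, claim_counts):
    """2x2 table (a, b, c, d) after dichotomizing counts to any use vs none."""
    a = b = c = d = 0
    for s, k in zip(self_counts, claim_counts):
        if s > 0 and k > 0:
            a += 1      # any use in both sources
        elif s > 0:
            b += 1      # self-report only
        elif k > 0:
            c += 1      # claims only
        else:
            d += 1      # no use in either source
    return a, b, c, d


def kappa(a, b, c, d):
    """Cohen's kappa from the 2x2 table."""
    n = a + b + c + d
    po = (a + d) / n
    pe = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    return (po - pe) / (1 - pe)
```

Dichotomizing discards information about how many visits occurred, so a measure can show high kappa on any use while still showing poor exact agreement on counts; reporting all four quantities guards against reading too much into any one of them.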

Discussion

The results of this study are consistent with previous reports in finding substantial agreement for diabetes, moderate agreement for heart disease, and fair agreement for depression (1, 4, 9, 11–16). The results for depression may reflect the under-diagnosis of mental illness among Medicare beneficiaries, which is partially caused by the beneficiaries’ unwillingness to seek treatment (27). Neither the self-reported status nor the claim-based depression diagnosis had good agreement with the PHQ-8-based depression diagnosis, suggesting that investigators must be cautious in depression-related analyses when only one data source for depression is available. The concordance for COPD between self-reports and medical record/administrative data has been inconsistent in the literature. Some studies found moderate to substantial concordance (5, 16), but others found only fair agreement (9, 11, 12). The results of the current study add to the body of evidence about the potential accuracy of using self-reports or Medicare claims data to identify this condition among Medicare beneficiaries interested in evidence-based chronic disease management programs.

Regarding health care utilization, the two data sources had almost perfect or substantial concordance for number of hospitalizations, and moderate concordance for ER utilization. Yet, the self-reported number of physician visits had generally low agreement with the potentially corresponding physician visits variables available in the Medicare administrative data. These findings are also consistent with previous studies (2, 3, 6, 12, 13, 17). In an earlier study of 216 CDSMP participants who received care through Kaiser Permanente HMO, the participants were found to have a tendency of “over-reporting” the number of ER visits, which might be caused by outside system usage (3). Yet, among the CDSMP participants without HMO included in the current study, the average number of self-reported ER visits was lower than that from CMS administrative data at all three time points. This may imply outside system ER visits happened less frequently among CMS beneficiaries without HMO who were 65 years or older.

This is one of the first studies to investigate the agreement between self-reported and claim-based administrative data for both chronic conditions and health care utilization variables. It provides an opportunity to investigate the correlation between reporting errors for these two types of measures. The agreement between the two data sources for health care utilization and that for chronic conditions were not significantly associated with each other (data not shown). This suggests that the reporting or coding errors were generally distributed randomly rather than concentrated in a particular subgroup of the study participants. Neither of the two previous studies that reported the concordance between different data sources for both chronic disease diagnosis and health care utilization examined whether the discordant cases were common to chronic conditions and health care utilization (12, 13).

The results of this study are an important contribution to understanding concordance between self-reported and claims-based chronic conditions and utilization of services for older Americans, but need to be interpreted in light of a few limitations. First, the retrospective active consenting process limited the number of CDSMP participants available for linkage to Medicare administrative claims records and for analysis in this study. The consenting process also implies that the specific population studied cannot be assumed to be representative of the general population. In particular, Table 1 revealed that the consented participants had a significantly higher number of co-morbidities, physician visits, and hospitalizations at baseline. In addition, those without HMO were better educated and had more physician visits at baseline than those who were enrolled in HMO. This potentially biased final sample further limits the generalizability of our results to the general population. Future studies using more broad-based samples with larger sample sizes are needed to further inform the debate on the advantages and disadvantages of different data sources.

Second, neither Medicare administrative data nor patient self-reported information is a gold standard. Therefore, for the measures with low concordance, it remains unclear which data source is more accurate. Recall bias is likely a large source of the discordance observed for those measures. Additionally, inaccuracies in self-reported data might be caused by participants’ misunderstanding of the condition or service asked about in the questionnaire. On the other hand, coding errors and inaccurate mapping of the diagnostic and service utilization codes might also be a source of discordance.

Lastly, while evaluating the concordance between two data sources using kappa statistic, although the BI was very or relatively small in most cases, the PI was large for depression and all health care utilization variables. Adjusting for low prevalence of those variables resulted in substantially higher agreement coefficients as measured by PABAK. However, previous methodology research suggested that PABAK values should be interpreted with caution, especially for conditions with low prevalence (28). In those situations, the evaluation and conclusion for the strength of agreement should be judged from multiple aspects, as we did in this study.

In conclusion, the findings of this study expand the existing literature on the concordance between self-reported and medical administrative data for chronic conditions and health care utilization. The findings confirmed the substitutability between self-reports and CMS administrative data for diabetes and hospitalizations in the older US population. They also suggest potential substitutability between the two data sources for COPD and ER visits. Finally, they call for future research on the accuracy of depression and physician visit measures from the two data sources.

Conflict of Interest Statement

Kate Lorig receives royalties from the book used by participants in the CDSMP program. There are no other potential conflicts of interest. Sponsor’s role: Nancy Whitelaw from NCOA contributed to the discussion of this study, reviewed, and edited the manuscript.

Acknowledgments

Funding source: This work was supported in part by the National Council on Aging (NCOA) through contracts to Texas A&M Health Science Center (Principal Investigator: MO) and Stanford University (Principal Investigator: KL). We thank the 22 delivery sites and the participants who enrolled in the National Study of Chronic Disease Self-Management Program from 2010 to 2011.

References

1. Fowles JB, Fowler EJ, Craft C. Validation of claims diagnoses and self-reported conditions compared with medical records for selected chronic diseases. J Ambul Care Manage (1998) 21:24–34. doi:10.1097/00004479-199801000-00004

2. Raina P, Torrance-Rynard V, Wong M, Woodward C. Agreement between self-reported and routinely collected health-care utilization data among seniors. Health Serv Res (2002) 37:751–74. doi:10.1111/1475-6773.00047

3. Ritter PL, Stewart AL, Kaymaz H, Sobel DS, Block DA, Lorig KR. Self-reports of health care utilization compared to provider records. J Clin Epidemiol (2001) 54:136–41. doi:10.1016/S0895-4356(00)00261-4

4. Tisnado DM, Adams JL, Liu H, Damberg CL, Chen WP, Hu FA, et al. What is the concordance between the medical record and patient self-report as data sources for ambulatory care? Med Care (2006) 44:132–40. doi:10.1097/01.mlr.0000196952.15921.bf

5. Susser SR, McCusker J, Belzile E. Comorbidity information in older patients at an emergency visit: self-report vs. administrative data had poor agreement but similar predictive validity. J Clin Epidemiol (2008) 61:511–5. doi:10.1016/j.jclinepi.2007.07.009

6. Wolinsky FD, Miller TR, An H, Geweke JF, Wallace RB, Wright KB, et al. Hospital episodes and physician visits: the concordance between self-reports and Medicare claims. Med Care (2007) 45:300–7. doi:10.1097/01.mlr.0000254576.26353.09

7. Pinto D, Robertson MC, Hansen P, Abbott JH. Good agreement between questionnaire and administrative databases for health care use and costs in patients with osteoarthritis. BMC Med Res Methodol (2011) 11:45. doi:10.1186/1471-2288-11-45

8. Zaslavsky AM, Ayanian JZ, Zaborski LB. The validity of race and ethnicity in enrollment data for Medicare beneficiaries. Health Serv Res (2012) 47:1300–21. doi:10.1111/j.1475-6773.2012.01411.x

9. Muggah E, Graves E, Bennett C, Manuel DG. Ascertainment of chronic diseases using population health data: a comparison of health administrative data and patient self-report. BMC Public Health (2013) 13:16. doi:10.1186/1471-2458-13-16

10. Coleman EA, Wagner EH, Grothaus LC, Hecht J, Savarino J, Buchner DM. Predicting hospitalization and functional decline in older health plan enrollees: are administrative data as accurate as self-report? J Am Geriatr Soc (1998) 46:419–25. doi:10.1111/j.1532-5415.1998.tb02460.x

11. Singh JA. Accuracy of Veterans Affairs databases for diagnoses of chronic diseases. Prev Chronic Dis (2009) 6:A126.

12. Wu CS, Lai MS, Gau SS, Wang SC, Tsai HJ. Concordance between patient self-reports and claims data on clinical diagnoses, medication use, and health system utilization in Taiwan. PLoS One (2014) 9(12):e112257. doi:10.1371/journal.pone.0112257

13. Wolinsky FD, Jones MP, Ullrich F, Lou Y, Wehby GL. The concordance of survey reports and Medicare claims in a nationally representative longitudinal cohort of older adults. Med Care (2014) 52(5):462–8. doi:10.1097/MLR.0000000000000120

14. Simpson CF, Boyd CM, Carlson MC, Griswold ME, Guralnik JM, Fried LP. Agreement between self-report of disease diagnoses and medical record validation in disabled older women: factors that modify agreement. J Am Geriatr Soc (2004) 52:123–7. doi:10.1111/j.1532-5415.2004.52021.x

15. Leikauf J, Federman AD. Comparisons of self-reported and chart-identified chronic diseases in inner-city seniors. J Am Geriatr Soc (2009) 57:1219–25. doi:10.1111/j.1532-5415.2009.02313.x

16. Chaudhry S, Jin L, Meltzer D. Use of a self-report-generated Charlson comorbidity index for predicting mortality. Med Care (2005) 43:607–15. doi:10.1097/01.mlr.0000163658.65008.ec

17. Roberts RO, Bergstralh EJ, Schmidt L, Jacobsen SJ. Comparison of self-reported and medical record health care utilization measures. J Clin Epidemiol (1996) 49:989–95. doi:10.1016/0895-4356(96)00143-6

18. Wallihan DB, Stump TE, Callahan CM. Accuracy of self-reported health services use and patterns of care among urban older adults. Med Care (1999) 37:662–70. doi:10.1097/00005650-199907000-00006

19. Glandon GL, Counte MA, Tancredi D. An analysis of physician utilization by elderly persons: systematic differences between self-report and archival information. J Gerontol (1992) 47:S245–52. doi:10.1093/geronj/47.5.S245

20. Ory MG, Ahn S, Jiang L, Lorig K, Ritter P, Laurent DD, et al. National study of chronic disease self-management: six-month outcome findings. J Aging Health (2013) 25:1258–74. doi:10.1177/0898264313502531

21. Ory MG, Ahn S, Jiang L, Smith ML, Ritter PL, Whitelaw N, et al. Successes of a national study of the chronic disease self-management program: meeting the triple aim of health care reform. Med Care (2013) 51:992–8. doi:10.1097/MLR.0b013e3182a95dd1

22. Lorden AL, Radcliff TA, Jiang L, Horel SA, Smith ML, Lorig K, et al. Leveraging administrative data for program evaluations: a method for linking datasets without unique identifiers. Eval Health Prof (2014). doi:10.1177/0163278714547568

23. Kroenke K, Strine TW, Spitzer RL, Williams JB, Berry JT, Mokdad AH. The PHQ-8 as a measure of current depression in the general population. J Affect Disord (2009) 114:163–73. doi:10.1016/j.jad.2008.06.026

24. Pope GC, Kautter J, Ellis RP, Ash AS, Ayanian JZ, Iezzoni LI, et al. Risk adjustment of Medicare capitation payments using the CMS-HCC model. Health Care Financ Rev (2004) 25:119–41.

25. Sim J, Wright CC. The kappa statistic in reliability studies: use, interpretation, and sample size requirements. Phys Ther (2005) 85:257–68.

26. Kottner J, Audigé L, Brorson S, Donner A, Gajewski BJ, Hróbjartsson A, et al. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. J Clin Epidemiol (2011) 64:99–106. doi:10.1016/j.jclinepi.2010.03.002

27. Baumann AE. Stigmatization, social distance and exclusion because of mental illness: the individual with mental illness as a ‘stranger’. Int Rev Psychiatry (2007) 19:131–5. doi:10.1080/09540260701278739

Keywords: aging, chronic disease, claims data, disease management, health services

Citation: Jiang L, Zhang B, Smith ML, Lorden AL, Radcliff TA, Lorig K, Howell BL, Whitelaw N and Ory MG (2015) Concordance between self-reports and Medicare claims among participants in a national study of chronic disease self-management program. Front. Public Health 3:222. doi: 10.3389/fpubh.2015.00222

Received: 09 June 2015; Accepted: 18 September 2015;

Published: 08 October 2015

Edited by:

Roger A. Harrison, University of Manchester, UK

Reviewed by:

William Augustine Toscano, University of Minnesota School of Public Health, USA

Jason Scott Turner, Saint Louis University, USA

Negar Golchin, University of Washington, USA

Copyright: © 2015 Jiang, Zhang, Smith, Lorden, Radcliff, Lorig, Howell, Whitelaw and Ory. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luohua Jiang, Department of Epidemiology, University of California Irvine, 205B Irvine Hall, Irvine, CA 92697-7550, USA, lhjiang@uci.edu

Luohua Jiang, Ben Zhang, Matthew Lee Smith, Andrea L. Lorden, Tiffany A. Radcliff, Kate Lorig, Benjamin L. Howell, Nancy Whitelaw, Marcia G. Ory