The Value of AI in the Diagnosis, Treatment, and Prognosis of Malignant Lung Cancer

- 1 Department of PET/CT Center, Cancer Center of Yunnan Province, Yunnan Cancer Hospital, The Third Affiliated Hospital of Kunming Medical University, Kunming, China

- 2 Lauterbur Research Center for Biomedical Imaging, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Malignant tumors are a serious threat to public health. Among them, lung cancer, which causes the most cancer deaths worldwide, poses a major danger to human health. With the development of artificial intelligence (AI) and its integration with medicine, AI research on malignant lung tumors has become increasingly important. This article reviews the value of computer-aided diagnosis (CAD), deep learning with neural networks, radiomics, molecular biomarkers, and digital pathology in the diagnosis, treatment, and prognosis of malignant lung tumors.

Introduction

The GLOBOCAN 2020 cancer report released by the International Agency for Research on Cancer (IARC) of the World Health Organization shows that lung cancer is the leading cause of cancer death in men and that, among women, its death rate is second only to that of breast cancer (1), illustrating its serious effect on human health. The rise of AI is changing traditional strategies for tumor diagnosis, treatment, and prognosis. AI uses computers to simulate human thinking and behavior. With the introduction of machine learning and deep learning algorithms and the rise of big data, AI is playing an increasingly important role in medicine. Applied to medicine, AI can reduce doctors' workload, allocate medical resources more effectively, improve diagnostic accuracy, and help improve patient prognosis. This article reviews the application of AI in lung cancer.

Basic Concepts of AI

In 1959, Ledley and colleagues proposed a mathematical model for computer-aided diagnosis for the first time and applied it to a group of lung cancer cases, pioneering the field. In 1966, Ledley first put forward the concept of “Computer Aided Diagnosis” (CAD). Before machine learning and deep learning emerged, CAD mainly combined computer technology with mathematical models (2). For example, some scholars used Gaussian scale space and a multi-scale Gaussian filterbank to build a model for detecting pulmonary nodules on chest radiographs; the model detected 67% of nodules, close to the 70% achieved by manual detection (3). This result suggested that CAD had great potential in the field of lung cancer. Deep learning is a machine learning technique based on artificial neural networks, which draw on the morphology, network structure, and information processing of the human brain to build computer models that process information in a similar way and thereby enhance human cognitive ability. With the advent of big data, neural network learning has evolved from traditional shallow networks to deep neural networks. The modern concept of deep learning is commonly traced to the paper “Reducing the dimensionality of data with neural networks” by Hinton and Salakhutdinov (4). Machine learning, a branch of artificial intelligence, and deep learning, a branch of machine learning, now play important roles. Combined with computer-aided detection/computer-aided diagnosis (CADe/CADx), they are used not only to detect nodules but also to differentiate benign from malignant lung nodules and to classify and stage lung cancer.
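The Gaussian scale-space idea behind early nodule CAD (3) can be illustrated with a minimal sketch. It is not the cited model: it assumes a scale-normalized Laplacian-of-Gaussian (LoG) filterbank, a common scale-space blob detector, applied to a synthetic image in which a nodule is modeled as a bright Gaussian blob. All function names are hypothetical. For a Gaussian blob of standard deviation s, the σ²-normalized LoG response at the blob center peaks at σ = s, so the filterbank scale with the strongest response gives a rough estimate of nodule size.

```python
import math

def log_kernel_value(dx, dy, sigma):
    """Laplacian of a 2D Gaussian, evaluated at the integer offset (dx, dy)."""
    r2 = dx * dx + dy * dy
    gauss = math.exp(-r2 / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)
    return (r2 - 2 * sigma ** 2) / sigma ** 4 * gauss

def normalized_log_response(image, cy, cx, sigma):
    """Sign-flipped, sigma^2-normalized LoG response at (cy, cx).
    Bright blobs give positive responses."""
    radius = int(math.ceil(3 * sigma))  # truncate the kernel at 3 sigma
    total = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = cy + dy, cx + dx
            if 0 <= y < len(image) and 0 <= x < len(image[0]):
                total += log_kernel_value(dx, dy, sigma) * image[y][x]
    return -sigma ** 2 * total

def best_scale(image, cy, cx, sigmas):
    """Return the filterbank scale with the strongest blob response at (cy, cx)."""
    return max(sigmas, key=lambda s: normalized_log_response(image, cy, cx, s))

# Synthetic 31x31 "nodule": a Gaussian blob with a standard deviation of 2 pixels.
size, center, blob_sigma = 31, 15, 2.0
image = [[math.exp(-((x - center) ** 2 + (y - center) ** 2) / (2 * blob_sigma ** 2))
          for x in range(size)] for y in range(size)]
print(best_scale(image, center, center, [1.0, 2.0, 3.0, 4.0]))  # 2.0
```

A real detector would evaluate every pixel and keep local maxima across both space and scale; evaluating only the known center keeps the sketch short.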
At the same time, structured reports (SRs) in radiology play an important guiding role in lung cancer treatment decisions and can strengthen communication between radiologists and clinicians. Quality, data quantification, and accessibility are the three main factors driving the shift from the current free-text reporting (FTR) format to SR (5, 6), and all three are closely related to AI. Some scholars have used machine learning or neural network models to extract structured content from FTR and verified the feasibility of this approach (7, 8).

Image Processing

Medical Image Segmentation

Medical image segmentation is an important foundation of clinical research. There are two kinds of medical image segmentation: segmentation based on local spatial features, such as gray level and texture, and segmentation based on edge information (9). Some scholars segmented 20 brain, 50 breast, 50 lung cancer, and 20 spleen scans using adaptive geographic distance (AGD) combined with SVM-based interactive machine learning (IML), and obtained better results than manual annotation (10). Besides machine learning, deep learning is also widely used for medical image segmentation. Building on maximum intensity projection (MIP), some researchers modified the U-Net architecture into a deep residual separable neural network (DRS-CNN) for lung tumor segmentation and concluded that DRS-CNN is more efficient and has fewer parameters than U-Net, FCN, and SegNet (11). These deep learning networks all share one problem: they need a large amount of manually labeled data for training. A generative adversarial network (GAN) consists of two networks, a generator and a discriminator; the cycle-consistent GAN (CycleGAN) couples two generator-discriminator pairs and can be trained in an unsupervised manner without paired labels. The results show that an improved CycleGAN needs no manual labeling, is robust to noise, and reaches the level of manual labeling (12). In addition, LGAN, a GAN-based model, has been shown to outperform U-Net (13). Some scholars have also used a GAN strategy to build a U-Net-Generative Adversarial Network (U-Net-GAN), with U-Net as the generator and fully convolutional networks (FCNs) as discriminators, and obtained reliable segmentation results on chest CT. These studies show that GAN-based models have great potential for building higher-performance segmentation models (14).
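Segmentation results such as those above are usually compared with manual annotation using an overlap metric. The evaluation protocols of the cited studies are not given here, so the sketch below simply assumes the Dice similarity coefficient, one widely used choice, computed on sets of foreground pixel coordinates. The masks and the function name are made up for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two sets of foreground pixel coordinates."""
    pred, truth = set(pred), set(truth)
    if not pred and not truth:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * len(pred & truth) / (len(pred) + len(truth))

# Hypothetical example: automatic vs. manual tumor mask on a tiny 2D slice.
manual = {(2, 2), (2, 3), (3, 2), (3, 3)}
auto = {(2, 3), (3, 2), (3, 3), (4, 3)}
print(dice_coefficient(auto, manual))  # 0.75
```

A Dice value of 1 means perfect overlap and 0 means none; storing masks as coordinate sets keeps the sketch dependency-free, whereas real pipelines would operate on image arrays.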

Image Reconstruction and Fusion

PET uses a radioactive tracer that decays by emitting positrons; each positron annihilates with an electron, producing a pair of photons. Detectors record these photons, and the resulting image of metabolic activity is used to diagnose disease. Producing images of diagnostic quality requires a full dose of radioactive tracer, which increases the risk of radiation exposure (15), whereas too low a dose increases noise and degrades image quality. Therefore, some scholars have asked whether images meeting diagnostic requirements can be reconstructed from low-dose PET data. Some scholars used CycleWGANs to improve the quality of low-dose PET images of lung cancer, compared them with traditional denoising methods, RED-CNN, and 3D-cGAN, and concluded that CycleWGANs effectively preserve edges and SUVs (16). Others have used sparse-view data acquisition to achieve low-dose, high-quality imaging (17). In addition, the resolution of PET is much lower than that of CT, so PET is fused with the anatomical information of CT to aid diagnosis. Some scholars used a CNN to improve PET/CT image fusion and achieved a foreground detection accuracy of 99.29%, showing that CNNs can significantly improve image fusion (18). Others proposed a recurrent fusion network (RFN) and compared it with early fusion, late fusion, and high fusion and with three tumor segmentation methods (ResNet, DenseNet, and 3D U-Net); the results show that RFN provides more accurate segmentation (19).
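The dose-noise trade-off can be made concrete with a simplified model. Assuming that the counts recorded in a PET voxel follow a Poisson distribution, and ignoring reconstruction, scatter, and attenuation, the relative noise (standard deviation divided by mean) is 1/√N for an expected count N, so cutting the dose tenfold raises relative noise by about √10 ≈ 3.2. The count levels below are hypothetical, and the sketch uses only Python's standard library.

```python
import math
import random

def sample_poisson(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def relative_noise(mean_counts, n_samples=5000, seed=0):
    """Coefficient of variation (std / mean) of simulated Poisson counts in one voxel."""
    rng = random.Random(seed)
    xs = [sample_poisson(mean_counts, rng) for _ in range(n_samples)]
    mu = sum(xs) / len(xs)
    var = sum((x - mu) ** 2 for x in xs) / (len(xs) - 1)
    return math.sqrt(var) / mu

full_dose = relative_noise(100.0)  # hypothetical expected counts per voxel at full dose
low_dose = relative_noise(10.0)    # one tenth of the dose
print(f"full dose: {full_dose:.3f} (theory {1 / math.sqrt(100):.3f})")
print(f"low dose:  {low_dose:.3f} (theory {1 / math.sqrt(10):.3f})")
```

Denoising networks such as those cited above aim to recover full-dose image quality from the noisier low-dose data that this toy model describes.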

Application of AI to Diagnosis

CAD, CNN and GAN Based Diagnosis

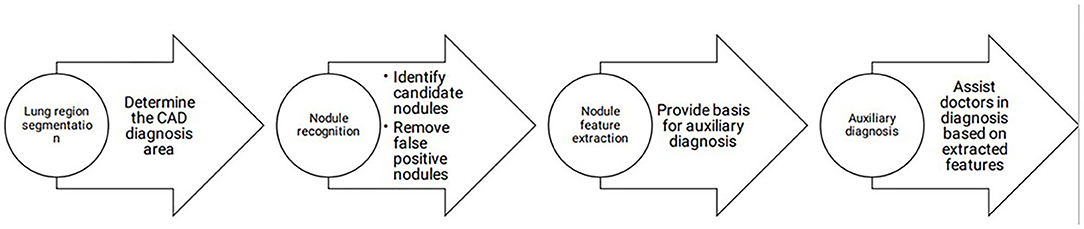

Early detection and early treatment of lung cancer are essential for a good prognosis. Given the considerable workload of pulmonary nodule screening, combining CADe/CADx with manual reading greatly improves the efficiency of clinical work and reduces the rate of missed diagnoses. At present, CAD detects more nodules than manual reading: its sensitivity is high, but its specificity needs to improve to reduce the false-positive rate (20). A schematic representation of the CAD workflow is shown in Figure 1.
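The sensitivity and specificity trade-off described above follows from standard confusion-matrix definitions. The counts below are hypothetical and not taken from any cited study; nodule CAD studies also often report false positives per scan, which this sketch does not cover.

```python
def screening_metrics(tp, fp, tn, fn):
    """Standard detection metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),          # share of true nodules that are found
        "specificity": tn / (tn + fp),          # share of negatives correctly rejected
        "false_positive_rate": fp / (fp + tn),  # equals 1 - specificity
        "ppv": tp / (tp + fp),                  # share of positive calls that are real
    }

# Hypothetical reading session, not data from the cited studies.
m = screening_metrics(tp=90, fp=60, tn=240, fn=10)
for name, value in m.items():
    print(f"{name}: {value:.2f}")
```

Here a CAD system with 90% sensitivity still has a PPV of only 0.60, which is why reducing false positives matters as much as raising sensitivity.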

Combining deep learning with lung tumor diagnosis has advanced the field. In lung cancer diagnosis, AI is mainly used for image recognition, medical image segmentation, lung nodule extraction and recognition, pathological diagnosis, and the search for tumor markers.

Regarding lung cancer image recognition, some scholars have used a deep convolutional neural network (CNN) to develop a system that automatically recognizes tumor regions in lung cancer pathological images (21). Others have used the Mask Regional Convolutional Neural Network (Mask R-CNN) architecture to develop a deep learning algorithm that classifies cells in the lung cancer tumor microenvironment (TME) and simultaneously extracts cell characteristics to predict prognosis. The results show that the spatial organization of different cell types predicts patient survival and is related to the gene expression of biological pathways (22). Thus, AI can identify tumor regions on lung cancer pathological images and segment the various cells of the tumor microenvironment. Regarding image segmentation, medical images have lower resolution and lower contrast than natural images. In addition, lung cancers are irregular in shape and can show spiculation, lobulation, pleural indentation, and other features with unclear edges. Calcification, cavitation, and pleural effusion may also be present, which makes segmentation harder. Deep learning can improve the accuracy of image recognition and classification, and CNNs are still being optimized. In one study, two doctors with limited diagnostic experience read 120 suspected lung cancer cases with nodules larger than 3 mm in diameter, with and without a CAD system built on the deep learning Faster R-CNN framework.
The results show that with the deep learning CAD system, the doctors' diagnostic sensitivity for lung cancer nodules improved significantly, while the positive predictive value decreased significantly. Reading time was shortened, especially for suspicious nodules 3–6 and 6–10 mm in diameter (23), which can effectively relieve the clinical workload. For lung nodule detection, compared with traditional machine learning methods, deep learning can quickly learn features of different dimensions, shorten feature selection and computation time, and significantly improve detection efficiency. The convolutional neural network is the most commonly used deep network for this task. The deeper a CNN is, the richer the nodule features it extracts; however, greater depth also prolongs training time. Future work should therefore focus on extracting more nodule features while shortening training time. GANs are also increasingly used in lung nodule detection, where they are especially valuable when too little data is available to train a deep learning model. Studies have shown that a GAN can generate a large number of images on which a deep learning model is then built and trained, improving classification accuracy by about 20% (24). This kind of GAN-based data augmentation can generate large amounts of data, easing the burden of manual annotation and the shortage of data samples in deep learning (25). During the COVID-19 pandemic, many GAN-based studies achieved good results, supporting these conclusions (26–28).
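One concrete reason deeper CNNs can capture richer nodule features is that each added convolution layer enlarges the receptive field, the region of the input that influences a single output unit; each layer also adds computation, which is part of why training takes longer. The sketch below assumes a stack of identical layers with a fixed kernel size and stride and no dilation or pooling; it is a simplification, not a model of the cited Faster R-CNN system, and the function name is hypothetical.

```python
def receptive_field(num_layers, kernel_size=3, stride=1):
    """Receptive field (in input pixels) of a stack of identical convolution layers."""
    rf, jump = 1, 1  # jump = spacing, in input pixels, between adjacent output units
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf

for depth in (1, 2, 4, 8):
    print(depth, receptive_field(depth))  # 3, 5, 9, 17 pixels with 3x3 kernels, stride 1
```

With stride 1 the receptive field grows only linearly with depth, which is why practical detectors also use strided convolutions or pooling to see larger nodules and their surroundings.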

Differentiation of Benign and Malignant Pulmonary Disease

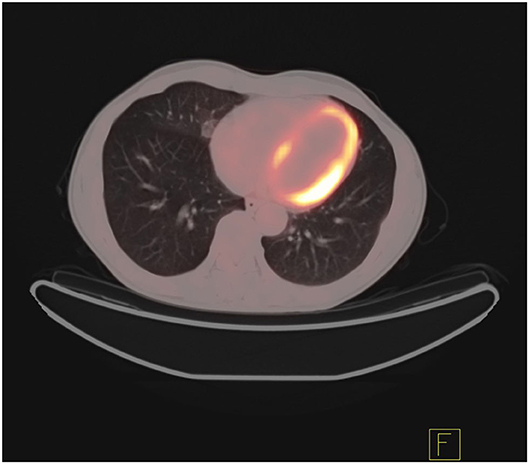

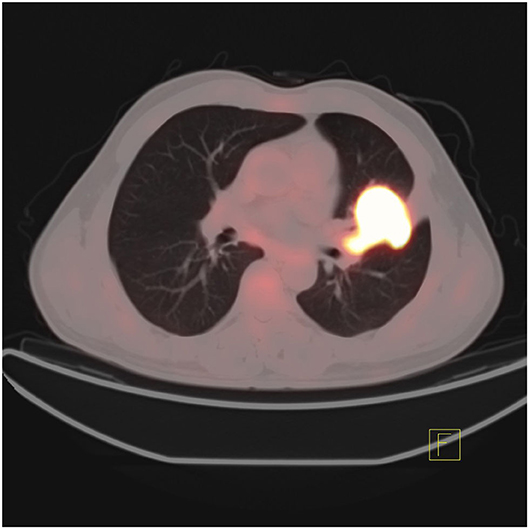

Differentiating benign from malignant pulmonary nodules is another essential part of computer-aided diagnosis. Figures 2 and 3 show that benign and malignant nodules are not easy to distinguish with the naked eye on PET/CT (Figure 2: non-metabolic invasive adenocarcinoma on PET/CT; Figure 3: non-metabolic granulomatous inflammation on PET/CT). To distinguish benign from malignant nodules, AI methods extract image features such as nodule size, shape, texture, and semantic features. Using machine learning, some scholars classified texture features with an SVM and achieved an AUC of 0.9270 (29); others classified shape and texture features with an SVM and obtained an AUC of 0.9337 (30). Deep learning has also been used to identify lung nodules. Some scholars were the first to use a deep belief network (DBN) and a CNN to classify benign and malignant lung nodules, with sensitivities of 73.40% and 73.30%, respectively (31). End-to-end deep learning frameworks based on CNNs followed, such as the multicrop convolutional neural network (MC-CNN), which achieved an AUC of 0.93 (32), and 3D CNNs (33). In addition, some scholars built a hierarchical semantic convolutional neural network (HSCNN) based on semantic features to detect and identify nodules, with an AUC of 0.8780 (34). Compared with conventional machine learning, end-to-end deep learning models reduce the workload of data annotation.
However, deep learning requires large amounts of training data. To address this problem, some scholars have proposed the transferable multimodel ensemble (TMME) algorithm (35) and the Fuse-TSD algorithm (36), yielding AUC values of 0.9778 and 0.9665, respectively. These results indicate that AI performs well in lung nodule identification.
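Most results in this section are reported as AUC values. As a reminder of what the number means, the ROC AUC equals the probability that a randomly chosen malignant nodule receives a higher model score than a randomly chosen benign one, with ties counted as one half (the Mann-Whitney formulation). The scores below are hypothetical.

```python
def auc_mann_whitney(scores_pos, scores_neg):
    """Probability that a random malignant case outscores a random benign one
    (ties count one half); this equals the area under the ROC curve."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

malignant = [0.9, 0.8, 0.7, 0.4]  # hypothetical model scores
benign = [0.6, 0.3, 0.2, 0.1]
print(auc_mann_whitney(malignant, benign))  # 0.9375
```

Only one of the 16 malignant-benign pairs (0.4 vs. 0.6) is ranked the wrong way, giving 15/16 = 0.9375. The pairwise loop is O(n²) and is meant for illustration; sorting-based implementations scale better.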

Methods to Improve Diagnosis Accuracy

While improving diagnostic sensitivity, reducing the false-positive rate is also an important research direction. False positives arise from the complex structure of the lung. Apart from the confounding effect of lung tissue and large blood vessels, benign and malignant nodules are difficult to distinguish because inflammatory tissue has a density similar to that of tumor tissue and both can show high glucose metabolism. In addition, complications such as pleural adhesions and pleural effusion make it difficult to distinguish tumor tissue from normal lung tissue. Radiomics has been applied to reduce the false-positive rate. Some scholars used machine learning algorithms to construct a nomogram from radiomic features; in the training and validation sets, the false-positive rate dropped from 30.9% and 30.4%, respectively, with the doctor's diagnosis alone to 9.1% and 5.4% (37). Others used a multipath 3D CNN to build a model based on the size, shape, and background information of suspicious nodules, which significantly reduced the false-positive rate (38). These studies show that combining AI with medical imaging can reduce the false-positive rate.

Trying to Detect Histological Types

Pathological diagnosis is critical to the final treatment decision and prognosis, but it takes doctors considerable time and energy. Some scholars used a CNN based on the EfficientNet-B3 architecture to build a model that predicts whether pathological images indicate lung cancer (39). In addition, because 80% of lung adenocarcinomas contain multiple histological subtypes, identifying these subtypes is important for guiding treatment. Therefore, some scholars used deep convolutional neural networks to classify lung adenocarcinoma subtypes for the first time (40). These studies achieved good results, indicating that AI can be combined with pathological diagnosis to reduce doctors' workload and improve diagnostic accuracy. For finding tumor markers, liquid biopsy has the advantages of being noninvasive, allowing real-time dynamic detection, and being repeatable. However, the concentration of target substances in liquid biopsy samples is low, which limits detection sensitivity. Machine learning models that interpret the signals in the samples can effectively improve detection sensitivity. Applications of AI in liquid biopsy include Foundation One, which is based on cancer genomics (41).

The Role of Artificial Intelligence in Therapy

Targeted Therapy

Lung cancer is histologically divided into small-cell lung cancer (SCLC) and non-small-cell lung cancer (NSCLC). NSCLC accounts for 85% of cases and is further divided into squamous cell carcinoma, adenocarcinoma, adenosquamous carcinoma, and large cell carcinoma (42). Conventional treatments for lung cancer include surgical resection, radiotherapy, and chemotherapy. Targeted therapy plays an increasingly important role owing to its individualized approach, excellent efficacy, and relatively few adverse reactions. Targeted therapy uses drugs that act on receptor proteins, enzymes, and genes involved in tumor cell proliferation to disrupt tumor growth. Current targets in NSCLC include EGFR (epidermal growth factor receptor), the EML4-ALK fusion gene (43), the ROS1 fusion gene, RAS mutations, and MET amplification. The most critical step in targeted therapy is identifying the relevant target. The primary traditional detection method is sequencing, but intratumoral heterogeneity may make the results of sampling and sequencing inaccurate (44).

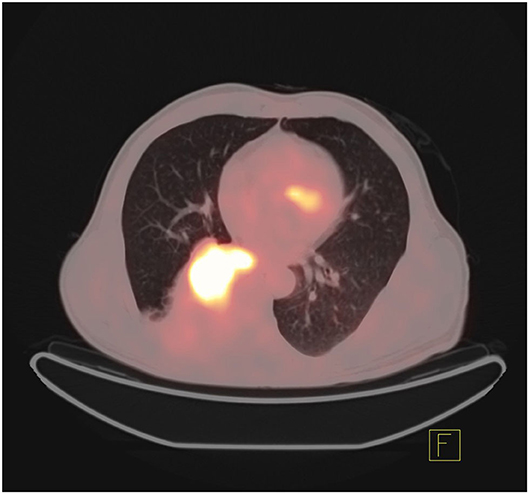

At the same time, obtaining tissue surgically is invasive and may cause tumor metastasis, which makes repeated testing of target genes in later stages of the disease difficult. Moreover, biopsy cannot be performed in all clinical cases (45), so some patients may lose the opportunity for targeted therapy. In recent years, to achieve noninvasive, simple, and rapid detection, some scholars have combined radiomics and deep learning with CT and PET images to predict gene mutations. Figures 4 and 5 show that EGFR-mutant and wild-type tumors are not easy to distinguish on PET/CT (Figure 4: EGFR mutant; Figure 5: EGFR wild type). In radiomics, some scholars extracted features from PET/CT images and used logistic regression to build a model predicting EGFR mutations, with AUC values of 0.79 and 0.85 in the training and validation sets, respectively. They also built a clinical model based on sex and smoking history, with AUC values of 0.75 and 0.69 in the training and validation sets, respectively. The combined radiomics and clinical model achieved AUC values of 0.86 and 0.87 in the training and validation sets, respectively (45). In addition, some scholars used a multivariate random forest algorithm and a logistic regression model to screen out the image features most relevant to EGFR mutations on segmented PET/CT images and then used the supervised XGBoost machine learning algorithm to build a prediction model for EGFR mutation subtypes. The AUC was 0.77 for exon 19 deletion, 0.92 for the exon 21 L858R point mutation, and 0.87 for EGFR mutation overall (46).
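The combined radiomics and clinical model in (45) is a logistic regression over several inputs. The sketch below shows only the general mechanics, fitting a logistic regression by batch gradient descent in pure Python. The three features (a radiomics score, female sex, never-smoker status), the data values, and the function names are hypothetical; in practice a library implementation such as scikit-learn's LogisticRegression would normally be used.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Predicted probability of the positive class (here: EGFR-mutant) for one case."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def log_loss(w, b, X, y):
    """Mean binary cross-entropy of the model on (X, y)."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = predict_proba(w, b, xi)
        total -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total / len(X)

def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of the log-loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical cases: [radiomics score, female (0/1), never-smoker (0/1)]; label 1 = EGFR-mutant.
X = [[0.9, 1, 1], [0.8, 1, 0], [0.7, 0, 1], [0.6, 1, 1],
     [0.3, 0, 0], [0.2, 0, 0], [0.4, 1, 0], [0.1, 0, 1]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = fit_logistic(X, y)
print(f"training log-loss: {log_loss(w, b, X, y):.3f}")
print(f"P(mutant) for a new case: {predict_proba(w, b, [0.75, 1, 1]):.2f}")
```

Putting radiomic and clinical variables into one linear predictor is what allows a combined model to outperform either source alone when the two carry complementary information; performance should be judged on a separate validation set, as in (45), not on the training log-loss printed here.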
Scholars have also compared the gradient tree boosting algorithm with the random forest (RF) algorithm for predicting lung cancer subtypes and EGFR mutations. Comparing the AUC values of the two algorithms, they concluded that gradient tree boosting has better predictive ability than random forest (47). Machine learning algorithms such as SVM have also been used to predict target mutations (48). Because building a machine learning model requires precise manual segmentation, some scholars have applied end-to-end deep learning to predict EGFR mutation status and thereby simplify the delineation step. Deep learning models such as the 2D small residual convolutional network (SResCNN) and 3D DenseNets built with 3D CNNs have since been developed. However, current studies generally share problems such as insufficient sample sizes (49, 50).
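To show what gradient tree boosting does, here is a minimal sketch of gradient boosting with squared-error loss and one-split regression trees (stumps) on a single feature. Each round fits a stump to the current residuals, which are the negative gradient of the squared loss, and adds it with a shrinkage factor. This is a simplified regression illustration with made-up data and hypothetical function names, not the classification setup or software used in (47). A random forest differs in that it averages many trees grown independently on bootstrap samples rather than adding trees sequentially.

```python
def fit_stump(x, r):
    """Least-squares one-split regression stump on a 1D feature x for targets r."""
    best = None
    for t in sorted(set(x))[:-1]:  # candidate thresholds keep both leaves non-empty
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    return lambda v: lm if v <= t else rm

def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    """Squared-loss gradient boosting: each stump is fitted to the current residuals."""
    base = sum(y) / len(y)
    stumps = []

    def predict(v):
        return base + learning_rate * sum(s(v) for s in stumps)

    for _ in range(n_rounds):
        residuals = [yi - predict(xi) for xi, yi in zip(x, y)]
        stumps.append(fit_stump(x, residuals))
    return predict

def mse(model, x, y):
    return sum((model(xi) - yi) ** 2 for xi, yi in zip(x, y)) / len(y)

x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9, 5.0, 5.2]
baseline = sum(y) / len(y)
model = gradient_boost(x, y)
print(f"constant-model MSE: {mse(lambda v: baseline, x, y):.3f}")
print(f"boosted-model MSE:  {mse(model, x, y):.3f}")
```

The shrinkage factor slows learning so that many small corrections are combined, which usually generalizes better than a few large steps; library implementations add deeper trees, subsampling, and classification losses.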

Immunotherapy

Immune checkpoint inhibitors (ICIs) are another effective treatment for lung cancer. Immunotherapy uses ICIs to restore the immune response against tumor cells and thereby slow tumor growth. At present, ICIs in lung cancer mainly act on the PD-1/PD-L1 pathway. Although immunotherapy has made significant progress in lung cancer treatment, ICIs are not effective for all patients and work in fewer than 30% of lung cancer patients (51). Therefore, as with targeted therapy, the amount of PD-L1 protein in tumor tissue must be measured by biopsy and immunohistochemistry before immunotherapy, and this biopsy has the same problems as the targeted therapy biopsy described above. Some scholars combined gene-sequencing estimates of CD8 cell abundance with contrast-enhanced CT to characterize the imaging features of CD8 cell tumor infiltration, and then used linear elastic-net regression to develop a radiomics-based signature of CD8 cell expression. The aim was to predict the tumor immunophenotype, and hence whether ICIs would be effective, and to predict the response after ICI treatment. The signature achieved an AUC of 0.74 for classifying CD8 cell abundance (high vs. low) and an AUC of 0.67 for gene expression in CD8 cells (52). Other scholars combined CT radiomics with clinical information to predict PD-L1 expression, with an AUC of 0.848 in the validation set (53). In addition, some scholars combined clinical data and PET/CT radiomics with a deep learning SResCNN to build a PD-L1 prediction model. They also used this model to predict the prognosis of patients receiving immunotherapy and achieved good results, with an AUC of 0.82 (54).
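The elastic-net regression mentioned for (52) combines lasso (L1) and ridge (L2) penalties, so it can shrink uninformative radiomic features to exactly zero while keeping the fit stable. The sketch below implements plain cyclic coordinate descent for the objective (1/2n)·||y − Xw||² + α·l1_ratio·||w||₁ + (α·(1 − l1_ratio)/2)·||w||², which is the penalty form used by scikit-learn's ElasticNet. It assumes centered data with no intercept; the toy data, in which only the first feature carries signal, and the function names are hypothetical.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def elastic_net(X, y, alpha, l1_ratio, n_iter=100):
    """Cyclic coordinate descent for the elastic-net objective (no intercept)."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iter):
        for j in range(d):
            # correlation of feature j with the residual that excludes feature j
            rho = sum(X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(d) if k != j))
                      for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, alpha * l1_ratio) / (z + alpha * (1 - l1_ratio))
    return w

# Hypothetical centered data: y depends only on the first feature (y = 2 * x1).
X = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
y = [2, -2, 2, -2]
w = elastic_net(X, y, alpha=0.5, l1_ratio=0.5)
print(w)  # first weight shrunk from 2.0 to about 1.4; second weight exactly 0.0
```

The L1 part produces the exact zero for the irrelevant feature, while both penalties shrink the informative coefficient below its unpenalized value of 2.0; in practice α and l1_ratio are chosen by cross-validation.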
Beyond radiomics, some scholars measured RNA expression levels in patients with relapsed NSCLC who received PD-1/PD-L1 treatment, used machine learning to select features from the resulting gene-expression data, and built a model that accurately predicts whether a patient is suitable for anti-PD-L1 treatment (55). Some scholars argue that a single clinical characteristic such as PD-1 cannot independently and accurately predict whether ICIs will benefit a patient. Therefore, after comparing several machine learning algorithms, such as GBM and XGBoost, they chose LightGBM to build a comprehensive prediction model from multiple clinical features, including patient characteristics, laboratory results, tumor size, gene mutation status, metastatic sites, treatment route, and PD-1 inhibitor type and response. The model achieved an AUC of 0.788, better than models built on any single clinical feature (56). These results show that combining AI with immunotherapy can help build better predictive models and support the clinical treatment decisions most beneficial to patients. A schematic diagram of the radiomics workflow is shown in Figure 6 (52).

Radiotherapy

In the treatment of early lung cancer, especially in patients who cannot undergo surgery, stereotactic ablative radiotherapy (SABR) plays an important role; the 3-year survival rate of patients with inoperable non-small cell lung cancer who received SABR was 55.8% (57). With in-depth study of AI in image segmentation, some scholars have tried to develop an automatic lung segmentation model for ablative radiotherapy through deep learning. They found that although the segmentation results are not yet comparable to manual segmentation, the approach is feasible (58). At the same time, aiming at precision treatment, some scholars used Deep Profiler, a deep learning neural network, to derive an image fingerprint from CT images and found that the radiotherapy failure rate was significantly lower for patients with low scores than for those with high scores (59). While SABR benefits patients, it also has side effects, such as radiation-induced lung injury (RILI) (60), chest wall pain, and rib fractures (61). Some scholars have studied how to predict these side effects, and RILI in particular has been predicted with AI from various angles. For the first time, scholars extracted radiomic features from the gross tumor volume (GTV) in CT images of patients treated at two medical institutions and combined them with clinical and dosimetric variables to build regularized models predicting the occurrence and timing of local lung fibrosis (LF); however, the models were not applicable to predicting disease-free survival (DFS) or overall survival (OS) (62).
Using data from three medical institutions, some scholars used LightGBM to develop three models, based on dose-volume indices (DVIs), radiomics, and a combination of the two, to predict grade 2 or higher radiation pneumonitis. The ROC-AUC and PR-AUC values of the three models were 0.660 ± 0.054 and 0.272 ± 0.052, 0.837 ± 0.054 and 0.510 ± 0.115, and 0.846 ± 0.049 and 0.531 ± 0.116, respectively (63). These results indicate that there is still considerable room for AI development in SABR treatment of lung cancer.
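The gap between the ROC-AUC and PR-AUC values above is typical when the outcome is uncommon, because precision depends on how many false positives are ranked above each true case. The sketch below computes both metrics for a hypothetical ranking with 2 positives among 10 cases. Average precision is used as the PR-AUC estimate, which is one common convention and an assumption here, since (63) may have used a different estimator; tied scores are not handled, and the function names are hypothetical.

```python
def roc_auc(scores, labels):
    """Pairwise (Mann-Whitney) ROC AUC; ties count one half."""
    pos = [s for s, l in zip(scores, labels) if l]
    neg = [s for s, l in zip(scores, labels) if not l]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(scores, labels):
    """Mean of the precision values at the rank of each positive case."""
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    n_pos = sum(labels)
    hits, ap = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            ap += (hits / rank) / n_pos
    return ap

# Hypothetical risk scores; 1 = grade 2+ pneumonitis (2 of 10 patients).
scores = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20]
labels = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
print(roc_auc(scores, labels))            # 0.875
print(average_precision(scores, labels))  # 0.75
```

The same ranking looks strong by ROC-AUC (14 of 16 positive-negative pairs correct) but weaker by average precision, because the second positive appears only after two false alarms (precision 2/4).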

The Role of Artificial Intelligence in Predicting the Prognosis of Lung Tumors

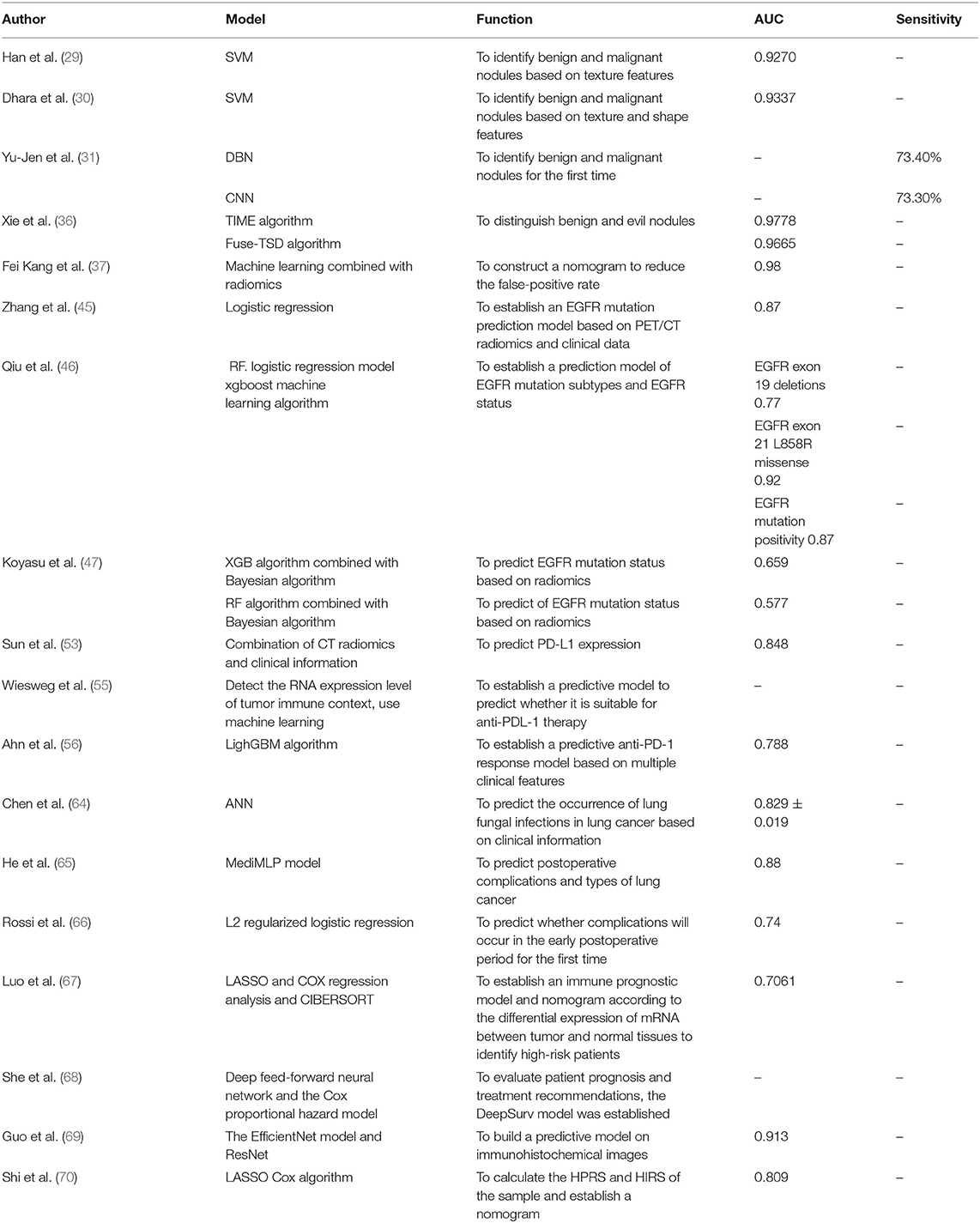

An accurate prognosis can guide the selection of the most beneficial treatment for lung cancer patients. Treatment and prognosis are affected by TNM staging. With the development of AI, lung cancer diagnosis and treatment have made great progress, and AI can also provide more accurate prognoses so that treatment decisions can be based on the predicted outcomes. Choosing the most beneficial treatment helps prevent unnecessary treatment. Regarding complications, some scholars used artificial neural networks (ANNs) to build a model predicting fungal lung infection in lung cancer patients from the following variables: age, antibiotic use, low serum albumin concentration, radiotherapy, surgery, low hemoglobin, hyperlipidemia, and length of hospital stay. The AUC was 0.829 ± 0.019 (64). Some scholars proposed a medical MLP (MediMLP) based on the multilayer perceptron (MLP). This model uses the Grad-CAM algorithm for feature selection to determine whether complications will occur after lung cancer surgery and of which type (lung, other organs, whole body); the results indicated that the duration of the postoperative indwelling drainage tube is the key factor in whether complications occur (65). Other scholars used wearable activity trackers to collect patient-generated health data (PGHD) and patient-reported outcomes (PROs) after hospital discharge and used an L2-regularized logistic regression model to predict whether complications would occur in the early postoperative period. In that study, complications after discharge were most closely related to the length of hospital stay and complications before discharge. This remote monitoring model is the first to predict complications after discharge, and it was found to improve survival and make more effective use of medical resources (66).
Regarding survival and risk stratification of lung cancer patients, some scholars combined clinical data and PET/CT radiomics with a deep learning SResCNN to build a PD-L1 immunotherapy prediction model. Its deep learning score (DLS) predicted durable clinical benefit (DCB), overall survival (OS), and progression-free survival (PFS), and the authors concluded that DLS combined with clinical features accurately predicts DCB, OS, and PFS (54). Some scholars built an immune prognostic model of two immune genes (ANLN and F2) using LASSO and Cox regression on mRNA differentially expressed between tumor and normal tissues, used the CIBERSORT software to analyze immune infiltration, and identified patients at high risk of a poor prognosis. They then combined the model with pathological findings to build a prognostic nomogram, which achieved a higher AUC for prediction (67). The DeepSurv model, built on a deep feed-forward neural network and the Cox proportional hazards model, used clinical information (sex, age, marital status), tumor characteristics (location, size, histological type and grade, and TNM stage), and treatment details from the SEER database to evaluate prognosis and recommend treatment; it showed better predictive ability than TNM staging. The authors also developed a user input view, a survival prediction view, and a treatment recommendation view to visualize the DeepSurv model (68). Some scholars used the EfficientNet model to segment tumor cells and tumor-infiltrating cells in tissue microarrays (TMAs), used ResNet on immunohistochemical images to extract features related to OS and recurrence-free survival (RFS), and established a reliable prediction model (69).
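Survival models such as DeepSurv are often compared with TNM staging using Harrell's concordance index (C-index); the text above does not state which metric was used, so this is an illustrative assumption. The C-index is the fraction of comparable patient pairs in which the patient who had the event earlier was assigned the higher risk. The sketch below is a plain O(n²) version; the survival times, event flags (1 = event observed, 0 = censored), risk scores, and function name are hypothetical.

```python
def concordance_index(times, events, risk_scores):
    """Harrell's C: among comparable pairs, the share in which the patient with the
    earlier observed event was assigned the higher risk (ties in risk count one half)."""
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # a pair is comparable if patient i had an observed event before time j
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5
    return concordant / comparable

times = [5, 10, 12, 20]       # hypothetical follow-up in months
events = [1, 1, 0, 1]         # the third patient is censored
risks = [0.9, 0.6, 0.7, 0.2]  # hypothetical model risk scores
print(concordance_index(times, events, risks))  # 0.8
```

Of the five comparable pairs, only the one between the patient with an event at 10 months and the censored patient at 12 months is ranked the wrong way, giving 4/5 = 0.8. A C-index of 0.5 corresponds to random ranking.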
Because hypoxia in the tumor environment results from an imbalance between oxygen supply and demand, some scholars used WGCNA to identify hypoxia-related gene modules, then used the LASSO Cox algorithm or a logistic regression model and RF to screen out the most relevant hypoxia-related prognostic genes, built a formula, and calculated each sample's hypoxia-related prognostic risk score (HPRS). They concluded that a higher HPRS corresponds to worse OS. Multivariate Cox regression including age, stage, and sex confirmed the strong predictive ability of HPRS, and a nomogram built from HPRS and clinicopathological characteristics achieved high accuracy (70). To date, lung cancer prognostic models have been built from radiomics, genomics, clinical features, and pathological results. These models show good predictive ability in experimental data, which is significant for guiding the choice of lung cancer treatment. Table 1 shows the development of AI in the field of lung cancer.
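Gene-signature scores such as the HPRS are typically computed as a weighted sum of the selected genes' expression levels, with weights taken from the fitted LASSO Cox model, after which patients are split into high- and low-risk groups. The formula from (70) is not reproduced here: the gene names, coefficients, expression values, the median cut-off, and the function names below are all hypothetical.

```python
from statistics import median

def risk_scores(expression, coefficients):
    """Linear prognostic score per sample: sum of coefficient * expression."""
    return [sum(coefficients[g] * sample[g] for g in coefficients) for sample in expression]

def stratify(scores):
    """Label samples above the median score as high risk, the rest as low risk."""
    cut = median(scores)
    return ["high" if s > cut else "low" for s in scores]

# Hypothetical coefficients from a fitted LASSO Cox model (positive = higher hazard).
coefficients = {"GENE_A": 0.8, "GENE_B": -0.5}
expression = [
    {"GENE_A": 2.0, "GENE_B": 1.0},
    {"GENE_A": 0.5, "GENE_B": 2.0},
    {"GENE_A": 1.5, "GENE_B": 0.0},
    {"GENE_A": 0.2, "GENE_B": 0.4},
]
scores = risk_scores(expression, coefficients)
print([round(s, 2) for s in scores])  # [1.1, -0.6, 1.2, -0.04]
print(stratify(scores))               # ['high', 'low', 'high', 'low']
```

Because the LASSO penalty sets most coefficients to zero, only a handful of genes enter the score; the resulting groups would then be compared with Kaplan-Meier curves and Cox regression, as described above.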

Discussion

For the diagnosis of pulmonary nodules, AI has improved the detection rate, but attention should also be paid to improving specificity in order to reduce the false-positive rate. Images of lung cancer contain information that is not visible to the naked eye; with the help of AI, radiomics, and genomics, this information can be extracted and combined with clinical information for patient diagnosis, treatment, and prognosis. Current research still faces the following problems. At present, most AI models have been tested in a single ethnic population, so data from multiple sources are needed to determine whether the models can be applied to other populations (71). In addition, prediction and classification models built on deep learning behave as black boxes because neural networks are not readily interpretable, so further study is needed on how these models compute their outputs and reach their judgments. At the same time, the specific relationship between deep learning and radiomics should be explored to establish better models. Previous studies (45, 56) indicate that combining clinical features, semantic features, radiomics, and deep learning yields better prediction or classification than models built on a single factor; how best to combine these factors therefore remains a question for future work. The development of AI is inseparable from big data, and sufficient, high-quality, standardized data are essential for training deep learning models. Standardized, shared databases should therefore be established for research so that AI can be better used in lung cancer diagnosis, treatment, and prognosis. Finally, although the application of AI in medical care has great room for development, it also faces other problems, such as ethical, psychological, and legal issues.
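The trade-off between detection and false positives mentioned above follows directly from the definitions: specificity = TN / (TN + FP), and the false-positive rate FP / (FP + TN) equals 1 - specificity, so improving one improves the other. A minimal sketch with hypothetical counts; the function name is illustrative.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return (sensitivity, specificity, false-positive rate) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # fraction of positive cases the model flags
    specificity = tn / (tn + fp)          # fraction of negative cases correctly rejected
    false_positive_rate = fp / (fp + tn)  # equals 1 - specificity
    return sensitivity, specificity, false_positive_rate


# Hypothetical counts: 100 malignant and 200 benign nodules.
sens, spec, fpr = detection_metrics(tp=90, fp=40, tn=160, fn=10)
print(sens, spec, fpr)  # 0.9 0.8 0.2
```

For nodule detection (CADe), where true negatives are hard to define, false positives are commonly reported per scan rather than as a rate.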
As AI is developed and applied, especially if it is one day used in end-of-life decisions, new principles and regulations may be needed to govern medical AI, so that the most favorable decisions are made for patients and the goal of “doing no harm” is achieved (72, 73). To this end, doctors should neither accept the integration of AI into medical care uncritically nor resist it without reason, but should actively participate in and promote its development (74). In addition, given the black-box nature of deep learning, patients' fears should not be ignored: their right to informed consent must be ensured, and the benefits and potential risks should be explained so as to address both excessive fear and overconfidence (75). In medical disputes involving AI, responsibility may be divided among doctors, AI system manufacturers, and regulatory agencies (76), so relevant laws and regulations must be actively improved to resolve such disputes.

Author Contributions

ZH, LC, and YW conceived and designed the study. YW, HC, YP, JL, FY, and CY retrieved and analyzed the documents. YP collected the figures. YW wrote the manuscript. ZH and LC supervised the study, reviewed, and edited the manuscript. All authors approved the final manuscript.

Funding

This study was supported by the Project funded by China Postdoctoral Science Foundation (No. 2019M653501), the National Natural Science Foundation of China (No. 81960496), Yunnan Fundamental Research Projects (No. 202101AT070050), the Funding Project of Oriented Postdoctoral Training in Yunnan Province, the 100 Young and Middle-aged Academic and Technical Backbone Incubation Projects of Kunming Medical University, Reserve candidates for Kunming's young and middle-aged academic and technical leaders (17th), and Yunnan health training project of high-level talents (H-2018006) and Top Young Talents in Yunnan Ten Thousand Talents Program (2020).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J Clin. (2021) 71:209–49. doi: 10.3322/caac.21660

2. Dong Z, Ledley RS. Processing X-ray images to eliminate irrelevant structures that mask important features. Comput Med Imaging Graph. (2004) 28:321–31. doi: 10.1016/j.compmedimag.2004.06.001

3. Schilham AMR, van Ginneken B, Loog M. A computer-aided diagnosis system for detection of lung nodules in chest radiographs with an evaluation on a public database. Med Image Anal. (2006) 10:247–58. doi: 10.1016/j.media.2005.09.003

4. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. (2006) 313:504–7. doi: 10.1126/science.1127647

5. European Society of Radiology (ESR). ESR paper on structured reporting in radiology. Insights Imaging. (2018) 9:1–7. doi: 10.1007/s13244-017-0588-8

6. Granata V, Grassi R, Miele V, Larici AR, Sverzellati N, Cappabianca S, et al. Structured reporting of lung cancer staging: a consensus proposal. Diagnostics (Basel). (2021) 11:1569. doi: 10.3390/diagnostics11091569

7. Steinkamp JM, Chambers C, Lalevic D, Zafar HM, Cook TS. Toward complete structured information extraction from radiology reports using machine learning. J Digit Imaging. (2019) 32:554–64. doi: 10.1007/s10278-019-00234-y

8. Steinkamp J, Chambers C, Lalevic D, Cook T. Automatic fully-contextualized recommendation extraction from radiology reports. J Digit Imaging. (2021) 34:374–84. doi: 10.1007/s10278-021-00423-8

9. Sharma N, Aggarwal LM. Automated medical image segmentation techniques. J Med Phys. (2010) 35:3–14. doi: 10.4103/0971-6203.58777

10. Bounias D, Singh A, Bakas S, Pati S, Rathore S, Akbari H, et al. Interactive machine learning-based multi-label segmentation of solid tumors and organs. Appl Sci (Basel). (2021) 11:7488. doi: 10.3390/app11167488

11. Dutande P, Baid U, Talbar S. Deep Residual Separable Convolutional Neural Network for lung tumor segmentation. Comput Biol Med. (2021) 141:105161. doi: 10.1016/j.compbiomed.2021.105161

12. Wang L, Guo D, Wang G, Zhang S. Annotation-efficient learning for medical image segmentation based on noisy pseudo labels and adversarial learning. IEEE Trans Med Imaging. (2021) 40:2795–807. doi: 10.1109/TMI.2020.3047807

13. Tan J, Jing L, Huo Y, Li L, Akin O, Tian Y, et al. Lung segmentation in CT scans using generative adversarial network. Comput Med Imaging Graph. (2021) 87:101817. doi: 10.1016/j.compmedimag.2020.101817

14. Dong X, Lei Y, Wang T, Thomas M, Tang L, Curran WJ, et al. Automatic multiorgan segmentation in thorax CT images using U-net-GAN. Med Phys. (2019) 46:2157–68. doi: 10.1002/mp.13458

15. An L, Zhang P, Adeli E, Wang Y, Ma G, Shi F, et al. Multi-level canonical correlation analysis for standard-dose PET image estimation. IEEE Trans Image Process. (2016) 25:3303–15. doi: 10.1109/TIP.2016.2567072

16. Zhou L, Schaefferkoetter JD, Tham IWK, Huang G, Yan J. Supervised learning with cyclegan for low-dose FDG PET image denoising. Med Image Anal. (2020) 65:101770. doi: 10.1016/j.media.2020.101770

17. Rui X, Cheng L, Long Y, Fu L, Alessio AM, Asma E, et al. Ultra-low dose CT attenuation correction for PET/CT: analysis of sparse view data acquisition and reconstruction algorithms. Phys Med Biol. (2015) 60:7437–60. doi: 10.1088/0031-9155/60/19/7437

18. Kumar A, Fulham M, Feng D, Kim J. Co-Learning Feature Fusion Maps from PET-CT Images of Lung Cancer. IEEE Trans Med Imaging. (2019) doi: 10.1109/TMI.2019.2923601

19. Bi L, Fulham M, Li N, Liu Q, Song S, Dagan Feng D, et al. Recurrent feature fusion learning for multi-modality pet-ct tumor segmentation. Comput Methods Programs Biomed. (2021) 203:106043. doi: 10.1016/j.cmpb.2021.106043

20. Van Ginneken B, Schaefer-Prokop CM, Prokop M. Computer-aided diagnosis: how to move from the laboratory to the clinic. Radiology. (2011) 261:719–32. doi: 10.1148/radiol.11091710

21. Wang S, Chen A, Yang L, Cai L, Xie Y, Fujimoto J, et al. Comprehensive analysis of lung cancer pathology images to discover tumor shape and boundary features that predict survival outcome. Sci Rep. (2018) 8:10393. doi: 10.1038/s41598-018-27707-4

22. Wang S, Rong R, Yang DM, Fujimoto J, Yan S, Cai L, et al. Computational staining of pathology images to study the tumor microenvironment in lung cancer. Cancer Res. (2020) 80:2056–66. doi: 10.1158/0008-5472.CAN-19-1629

23. Kozuka T, Matsukubo Y, Kadoba T, Oda T, Suzuki A, Hyodo T, et al. Efficiency of a computer-aided diagnosis (CAD) system with deep learning in detection of pulmonary nodules on 1-mm-thick images of computed tomography. Jpn J Radiol. (2020) 38:1052–61. doi: 10.1007/s11604-020-01009-0

24. Onishi Y, Teramoto A, Tsujimoto M, Tsukamoto T, Saito K, Toyama H, et al. Automated pulmonary nodule classification in computed tomography images using a deep convolutional neural network trained by generative adversarial networks. Biomed Res Int. (2019) 2019:6051939. doi: 10.1155/2019/6051939

25. Nishio M, Fujimoto K, Matsuo H, Muramatsu C, Sakamoto R, Fujita H. Lung cancer segmentation with transfer learning: usefulness of a pretrained model constructed from an artificial dataset generated using a generative adversarial network. Front Artif Intell. (2021) 4:694815. doi: 10.3389/frai.2021.694815

26. Shah PM, Ullah H, Ullah R, Shah D, Wang Y, Islam SU, et al. DC-GAN-based synthetic X-ray images augmentation for increasing the performance of EfficientNet for COVID-19 detection. Expert Syst. (2021) e12823.

27. Motamed S, Rogalla P, Khalvati F. Data augmentation using Generative Adversarial Networks (GANs) for GAN-based detection of Pneumonia and COVID-19 in chest X-ray images. Inform Med Unlocked. (2021) 27:100779. doi: 10.1016/j.imu.2021.100779

28. Sakib S, Tazrin T, Fouda MM, Fadlullah ZM, Guizani M. DL-CRC: deep learning-based chest radiograph classification for COVID-19 detection: a novel approach. IEEE Access. (2020) 8:171575–89. doi: 10.1109/ACCESS.2020.3025010

29. Han F, Wang H, Zhang G, Han H, Song B, Li L, et al. Texture feature analysis for computer-aided diagnosis on pulmonary nodules. J Digit Imaging. (2015) 28:99–115. doi: 10.1007/s10278-014-9718-8

30. Dhara AK, Mukhopadhyay S, Dutta A, Garg M, Khandelwal N. A Combination of shape and texture features for classification of pulmonary nodules in lung CT images. J Digit Imaging. (2016) 29:466–75. doi: 10.1007/s10278-015-9857-6

31. Hua KL, Hsu CH, Hidayati SC, Cheng WH, Chen YJ. Computer-aided classification of lung nodules on computed tomography images via deep learning technique. Onco Targets Ther. (2015) 8:2015–22. doi: 10.2147/OTT.S80733

32. Shen W, Zhou M, Yang F, Yu D, Dong D, Yang C, et al. Multi-crop Convolutional Neural Networks for lung nodule malignancy suspiciousness classification. Pattern Recognit. (2017) 61:663–73. doi: 10.1016/j.patcog.2016.05.029

33. Hussein S, Cao K, Song Q, Bagci U. Risk stratification of lung nodules using 3D CNN-based multi-task learning. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). (2017). p. 249–60. doi: 10.1007/978-3-319-59050-9_20

34. Shen S, Han SX, Aberle DR, Bui AA, Hsu W. An interpretable deep hierarchical semantic convolutional neural network for lung nodule malignancy classification. Expert Syst Appl. (2019) 128:84–95. doi: 10.1016/j.eswa.2019.01.048

35. Xie Y, Xia Y, Zhang J, Feng DD, Fulham M, Cai W. Transferable multi-model ensemble for benign-malignant lung nodule classification on chest CT. Lect Notes Comput Sci. (2017) 10435:656–64. doi: 10.1007/978-3-319-66179-7_75

36. Xie Y, Zhang J, Xia Y, Fulham M, Zhang Y. Fusing texture, shape and deep model-learned information at decision level for automated classification of lung nodules on chest CT. Inf Fusion. (2018) 42:102–10. doi: 10.1016/j.inffus.2017.10.005

37. Kang F, Mu W, Gong J, Wang S, Li G, Li G, et al. Integrating manual diagnosis into radiomics for reducing the false positive rate of 18F-FDG PET/CT diagnosis in patients with suspected lung cancer. Eur J Nucl Med Mol Imaging. (2019) 46:2770–9. doi: 10.1007/s00259-019-04418-0

38. Yuan H, Fan Z, Wu Y, Cheng J. An efficient multi-path 3D convolutional neural network for false-positive reduction of pulmonary nodule detection. Int J Comput Assist Radiol Surg. (2021) 16:2269–77. doi: 10.1007/s11548-021-02478-y

39. Kanavati F, Toyokawa G, Momosaki S, Rambeau M, Kozuma Y, Shoji F, et al. Weakly-supervised learning for lung carcinoma classification using deep learning. Sci Rep. (2020) 10:9297. doi: 10.1038/s41598-020-66333-x

40. Wei JW, Tafe LJ, Linnik YA, Vaickus LJ, Tomita N, Hassanpour S. Pathologist-level classification of histologic patterns on resected lung adenocarcinoma slides with deep neural networks. Sci Rep. (2019) 9:3358. doi: 10.1038/s41598-019-40041-7

41. Chalmers ZR, Connelly CF, Fabrizio D, Gay L, Ali SM, Ennis R, et al. Analysis of 100,000 human cancer genomes reveals the landscape of tumor mutational burden. Genome Med. (2017) 9:34. doi: 10.1186/s13073-017-0424-2

42. Molina JR, Yang P, Cassivi SD, Schild SE, Adjei AA. Non-small cell lung cancer: Epidemiology, risk factors, treatment, and survivorship. Mayo Clin Proc. (2008) 83:584–94. doi: 10.1016/S0025-6196(11)60735-0

43. Wu J, Savooji J, Liu D. Second- and third-generation ALK inhibitors for non-small cell lung cancer. J Hematol Oncol. (2016) 9:19. doi: 10.1186/s13045-016-0251-8

44. Zhang L, Zhang Y, Chang L, Yang Y, Fang W, Guan Y, et al. Intratumor heterogeneity comparison among different subtypes of non-small-cell lung cancer through multi-region tissue and matched ctDNA sequencing. Mol Cancer. (2019) 18:7. doi: 10.1186/s12943-019-0939-9

45. Zhang J, Zhao X, Zhao Y, Zhang J, Han J. Value of pre-therapy 18F-FDG PET/CT radiomics in predicting EGFR mutation status in patients with non-small cell lung cancer. Eur J Nucl Med Mol Imaging. (2020) 47:1137–46. doi: 10.1007/s00259-019-04592-1

46. Liu Q, Sun D, Li N, Kim J, Feng D, Huang G, et al. Predicting EGFR mutation subtypes in lung adenocarcinoma using 18F-FDG PET/CT radiomic features. Transl Lung Cancer Res. (2020) 9:549–62. doi: 10.21037/tlcr.2020.04.17

47. Koyasu S, Nishio M, Isoda H, Nakamoto Y, Togashi K. Usefulness of gradient tree boosting for predicting histological subtype and EGFR mutation status of non-small cell lung cancer on 18F FDG-PET/CT. Ann Nucl Med. (2019) 34:49–57. doi: 10.1007/s12149-019-01414-0

48. Jiang M, Zhang Y, Min J, Guo Y, Jie Y. Assessing EGFR gene mutation status in non-small cell lung cancer with imaging features from PET/CT. Nucl Med Commun. (2019) 40:842–9. doi: 10.1097/MNM.0000000000001043

49. Mu W, Jiang L, Zhang JY, Shi Y, Gray JE, Tunali I, et al. Non-invasive decision support for NSCLC treatment using PET/CT radiomics. Nat Commun. (2020) 11:5228. doi: 10.1038/s41467-020-19116-x

50. Zhao W, Yang J, Ni B, Bi D, Sun Y, Xu M, et al. Toward automatic prediction of EGFR mutation status in pulmonary adenocarcinoma with 3D deep learning. Cancer Med. (2019) 8:3532–43. doi: 10.1002/cam4.2233

51. Garon EB, Rizvi NA, Hui R, Leighl N, Balmanoukian AS, Eder JP, et al. Pembrolizumab for the treatment of non–small-cell lung cancer. N Engl J Med. (2015) 372:2018–28. doi: 10.1056/NEJMoa1501824

52. Sun R, Limkin EJ, Vakalopoulou M, Dercle L, Champiat S, Han SR, et al. A radiomics approach to assess tumour-infiltrating CD8 cells and response to anti-PD-1 or anti-PD-L1 immunotherapy: an imaging biomarker, retrospective multicohort study. Lancet Oncol. (2018) 19:1180–91. doi: 10.1016/S1470-2045(18)30413-3

53. Sun Z, Hu S, Ge Y, Wang J, Duan S, Hu C, et al. Radiomics study for predicting the expression of PD-L1 in non-small cell lung cancer based on CT images and clinicopathologic features. J Xray Sci Technol. (2020) 28:449–59. doi: 10.3233/XST-200642

54. Mu W, Jiang L, Shi Y, Tunali I, Gray JE, Katsoulakis E, et al. Non-invasive measurement of PD-L1 status and prediction of immunotherapy response using deep learning of PET/CT images. J Immunother Cancer. (2021) 9:e002118. doi: 10.1136/jitc-2020-002118

55. Wiesweg M, Mairinger F, Reis H, Goetz M, Kollmeier J, Misch D, et al. Machine learning reveals a PD-L1–independent prediction of response to immunotherapy of non-small cell lung cancer by gene expression context. Eur J Cancer. (2020) 140:76–85. doi: 10.1016/j.ejca.2020.09.015

56. Ahn BC, So JW, Synn CB, Kim TH, Kim JH, Byeon Y, et al. Clinical decision support algorithm based on machine learning to assess the clinical response to anti–programmed death-1 therapy in patients with non–small-cell lung cancer. Eur J Cancer. (2021) 153:179–89. doi: 10.1016/j.ejca.2021.05.019

57. Timmerman R, Paulus R, Galvin J, Michalski J, Straube W, Bradley J, et al. Stereotactic body radiation therapy for inoperable early stage lung cancer. JAMA. (2010) 303:1070–6. doi: 10.1001/jama.2010.261

58. Wong J, Huang V, Giambattista JA, Teke T, Kolbeck C, Giambattista J, et al. Training and validation of deep models for lung stereotactic ablative radiotherapy using retrospective radiotherapy planning contours. Front Oncol. (2021) 11:1–9. doi: 10.3389/fonc.2021.626499

59. Lou B, Doken S, Zhuang T, Wingerter D, Gidwani M, Mistry N, et al. An image-based deep learning framework for individualizing radiotherapy dose. Lancet Digit Health. (2019) 1:e136–47. doi: 10.1016/S2589-7500(19)30058-5

60. Hanania AN, Mainwaring W, Ghebre YT, Hanania NA, Ludwig M. Radiation-induced lung injury: assessment and management. Chest. (2019) 156:150–62. doi: 10.1016/j.chest.2019.03.033

61. Bongers EM, Haasbeek CJA, Lagerwaard FJ, Slotman BJ, Senan S. Incidence and risk factors for chest wall toxicity after risk-adapted stereotactic radiotherapy for early-stage lung cancer. J Thorac Oncol. (2011) 6:2052–7. doi: 10.1097/JTO.0b013e3182307e74

62. Bousabarah K, Blanck O, Temming S, Wilhelm M-L, Hoevels M, Baus WW, et al. Radiomics for prediction of radiation-induced lung injury and oncologic outcome after robotic stereotactic body radiotherapy of lung cancer: results from two independent institutions. Radiat Oncol. (2021) 16:74. doi: 10.1186/s13014-021-01805-6

63. Adachi T, Nakamura M, Shintani T, Mitsuyoshi T, Kakino R, Ogata T, et al. Multi-institutional dose-segmented dosiomic analysis for predicting radiation pneumonitis after lung stereotactic body radiation therapy. Med Phys. (2021) 48:1781–91. doi: 10.1002/mp.14769

64. Chen J, Chen J, Ding HY, Pan QS, Hong WD, Xu G, et al. Use of an artificial neural network to construct a model of predicting deep fungal infection in lung cancer patients. Asian Pac J Cancer Prev. (2015) 16:5095–9. doi: 10.7314/APJCP.2015.16.12.5095

65. He T, Guo J, Chen N, Xu X, Wang Z, Fu K, et al. MediMLP: Using Grad-CAM to extract crucial variables for lung cancer postoperative complication prediction. IEEE J Biomed Health Inform. (2020) 24:1762–71. doi: 10.1109/JBHI.2019.2949601

66. Rossi LA, Melstrom LG, Fong Y, Sun V. Predicting post-discharge cancer surgery complications via telemonitoring of patient-reported outcomes and patient-generated health data. J Surg Oncol. (2021) 123:1345–52. doi: 10.1002/jso.26413

67. Luo C, Lei M, Zhang Y, Zhang Q, Li L, Lian J, et al. Systematic construction and validation of an immune prognostic model for lung adenocarcinoma. J Cell Mol Med. (2020) 24:1233–44. doi: 10.1111/jcmm.14719

68. She Y, Jin Z, Wu J, Deng J, Zhang L, Su H, et al. Development and validation of a deep learning model for non-small cell lung cancer survival. JAMA Netw Open. (2020) 3:e205842. doi: 10.1001/jamanetworkopen.2020.5842

69. Guo H, Diao L, Zhou X, Chen JN, Zhou Y, Fang Q, et al. Artificial intelligence-based analysis for immunohistochemistry staining of immune checkpoints to predict resected non-small cell lung cancer survival and relapse. Transl Lung Cancer Res. (2021) 10:2452–74. doi: 10.21037/tlcr-21-96

70. Shi R, Bao X, Unger K, Sun J, Lu S, Manapov F, et al. Identification and validation of hypoxia-derived gene signatures to predict clinical outcomes and therapeutic responses in stage I lung adenocarcinoma patients. Theranostics. (2021) 11:5061–76. doi: 10.7150/thno.56202

71. Wang S, Shi J, Ye Z, Dong D, Yu D, Zhou M, et al. Predicting EGFR mutation status in lung adenocarcinoma on computed tomography image using deep learning. Eur Respir J. (2019) 53:1800986. doi: 10.1183/13993003.00986-2018

72. Kulikowski CA. Beginnings of Artificial Intelligence in Medicine (AIM): computational artifice assisting scientific inquiry and clinical art—with reflections on present AIM challenges. Yearb Med Inform. (2019) 28:249–56. doi: 10.1055/s-0039-1677895

73. Geis JR, Brady AP, Wu CC, Spencer J, Ranschaert E, Jaremko JL, et al. Ethics of artificial intelligence in radiology: summary of the joint European and North American multisociety statement. Radiology. (2019) 293:436–40. doi: 10.1148/radiol.2019191586

74. Arnold MH. Teasing out artificial intelligence in medicine: an ethical critique of artificial intelligence and machine learning in medicine. J Bioeth Inq. (2021) 18:121–39. doi: 10.1007/s11673-020-10080-1

75. Schiff D, Borenstein J. How should clinicians communicate with patients about the roles of artificially intelligent team members? AMA J Ethics. (2019) 21:E138–45. doi: 10.1001/amajethics.2019.138

76. Jaremko JL, Azar M, Bromwich R, Lum A, Alicia Cheong LH, Gibert M, et al. Canadian association of radiologists white paper on ethical and legal issues related to artificial intelligence in radiology. Can Assoc Radiol J. (2019) 70:107–18. doi: 10.1016/j.carj.2019.03.001

Keywords: lung cancer, AI, CAD, deep learning, radiomics

Citation: Wang Y, Cai H, Pu Y, Li J, Yang F, Yang C, Chen L and Hu Z (2022) The value of AI in the Diagnosis, Treatment, and Prognosis of Malignant Lung Cancer. Front. Radiol. 2:810731. doi: 10.3389/fradi.2022.810731

Received: 07 November 2021; Accepted: 30 March 2022;

Published: 06 May 2022.

Edited by:

Tianming Liu, University of Georgia, United States

Reviewed by:

Yubing Tong, University of Pennsylvania, United States

Lorenzo Faggioni, University of Pisa, Italy

Copyright © 2022 Wang, Cai, Pu, Li, Yang, Yang, Chen and Hu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Long Chen, lonechen1983@hotmail.com; Zhanli Hu, zl.hu@siat.ac.cn

†These authors share first authorship

Yue Wang1†, Haihua Cai, Yongzhu Pu, Long Chen, Zhanli Hu