The Scientific Impact Derived From the Disciplinary Profiles

- ¹Institute of Psychology, University of Tartu, Tartu, Estonia

- ²Grant Office, University of Tartu, Tartu, Estonia

- ³Department of Psychology, University of Warwick, Coventry, United Kingdom

The disciplinary profiles of the mean citation rates across 22 research areas were analyzed for 107 countries/territories that published at least 3,000 papers exceeding the entrance thresholds of the Essential Science Indicators (ESI; Clarivate Analytics) between January 1, 2009, and December 31, 2019. The matrix of pairwise differences between all profiles was analyzed with a non-metric multidimensional scaling (MDS) algorithm, which recovered a two-dimensional geometric space describing these differences. The two dimensions, Dim1 and Dim2, described the 5,671 pairwise differences between countries' disciplinary profiles with sufficient accuracy (stress = 0.098). Dim1 correlated strongly (r = 0.81, N = 107, p < 0.0001) with the Indicator of a Nation's Scientific Impact (INSI), a composite of the average citation rate, the top citation rate, and the number of research areas in which a country/territory entered the ESI. The scientific impact ranking of countries derived from the pairwise differences between disciplinary profiles appears more accurate and realistic than rankings based on more traditional citation indices.


Although not perfect, the number of times a scientific paper has been cited since its publication is an objective and easily determined indicator of its scientific impact, as was foreseen long before counting citations became practically feasible (Garfield, 1955). After the expected link between scientific and economic wealth was established (countries whose scientists tend to publish highly cited papers also had a higher GDP per capita), the mean citation rate acquired the status of the most reliable measure of the scientific quality of nations (May, 1997; Rousseau and Rousseau, 1998; King, 2004; Harzing and Giroud, 2014; Prathap, 2017). However, some countries, for example, Sweden and Finland, were noticed to have lower mean citation rates than other countries with a comparable level of scientific development, such as Switzerland and the Netherlands (Karlsson and Persson, 2012; Öquist and Benner, 2015). Scandinavian papers published with international co-authors were also observed to be cited more often than purely domestic papers (Glänzel, 2000). In addition, a gap was noticed between national mean citation rates and the proportion of highly cited papers that countries' scientists were publishing; this gap could be considered an index of complacency, showing satisfaction with relatively modest scientific ambitions (Allik, 2013; Lauk and Allik, 2018).

These and similar problems shifted bibliometric research from impact scores based on average citation values toward indicators that reflect the top of the citation distribution, such as the number of papers reaching the highest citation ranks (van Leeuwen et al., 2003). In line with this general trend, a composite index, the High Quality Science Index (HQSI; Allik, 2013; Allik et al., 2020a), was proposed to characterize nations by combining the mean citation rate per paper with the percentage of papers that reached the top 1% of citations in a given research area and age cohort of published papers. Although the average citation values and the top of the citation distribution are highly correlated, typically r = 0.80 or higher (Allik, 2013), combining these two indicators into a composite index made it possible to compensate for minor discrepancies between them.

Despite these improvements, country rankings based on citation frequencies still often seem counterintuitive. For example, very few experts would have expected Panama to become the country whose scientists publish papers with the highest citation rate in the world (Monge-Najera and Ho, 2015; Confraria et al., 2017; Erfanmanesh et al., 2017; Allik et al., 2020a). One possible reason for such implausible rankings is that the selected top layer of papers is not representative of a nation's total scientific production (Allik et al., 2020a). When the Essential Science Indicators (ESI; Clarivate Analytics) database was designed, it was decided to divide all of science (except the humanities) into 22 research areas with quite different publication and citation rates. However, requiring a minimum number of citations to enter the ESI in each research field created a situation in which it may be advantageous for a country not to enter the ESI in relatively weak research areas, which could decrease its average citation rate. As Allik et al. (2020a) showed, leaving weaker publications out of the count may artificially increase a nation's mean citation rate. To deal with this problem, a new indicator, the Indicator of a Nation's Scientific Impact (INSI), was proposed, which takes into account, in addition to the average and the top citation rates, the number of research areas in which each country/territory succeeded in entering the ESI (Allik et al., 2020b). This modification made the scientific impact ranking of countries/territories more plausible, although not entirely. For example, the Republic of Georgia, which had the 5th highest mean citation rate, was shifted five positions down in the ranking because it failed to exceed the ESI entrance threshold in 11 out of 22 scientific fields. However, Panama, which also failed in 11 areas, dropped only two positions in the ranking and nevertheless remained ahead of the Netherlands, Denmark, and the United Kingdom, not to mention the USA, Canada, and Germany.

Characteristics of the disciplinary structure have also been noted as a factor affecting the competitive advantages of national sciences (Yang et al., 2012; Bongioanni et al., 2014, 2015; Cimini et al., 2014; Harzing and Giroud, 2014; Radosevic and Yoruk, 2014; Albarran et al., 2015; Lorca and de Andrés, 2019; Pinto and Teixeira, 2020). For example, it has been argued that an archaic disciplinary structure is one of the reasons why Russia and other former communist countries still lag behind Western nations (Kozlowski et al., 1999; Markusova et al., 2009; Adams and King, 2010; Guskov et al., 2016; Jurajda et al., 2017; Tregubova et al., 2017; Shashnov and Kotsemir, 2018). In a comprehensive study of how disciplinary structure relates to the competitive advantage of different nations in science, Harzing and Giroud (2014) showed that the countries with the fastest increase in scientific productivity during the periods 1994–2004 and 2002–2012 maintained relatively stable and fairly well-balanced disciplinary structures. They also identified groups of countries with distinct patterns of specialization. For example, one group of countries with a highly developed knowledge infrastructure emphasized the social sciences. Another group had a rather balanced research profile with a slight advantage in the physical sciences. Yet another group consisted mainly of Asian countries with a competitive advantage in the engineering sciences (Harzing and Giroud, 2014). Although this study shed light on somewhat different routes toward scientific excellence, it remains unclear whether there are truly separate routes or only one general highway that guarantees advancement in the world ranking.

One problem with existing research on scientific disciplinary profiles is that previous studies typically involved a relatively small number of nations. For example, Harzing and Giroud (2014) analyzed the disciplinary profiles of 34 countries across 21 disciplines, while Almeida et al. (2009) examined the disciplinary profiles of 26 European countries. Another study analyzed 27 European countries across 27 disciplines over the period 1996–2011 (Bongioanni et al., 2014). Thelwall and Levitt (2018) analyzed the relative citation impact of 2.6 million articles from 26 fields in 25 countries published from 1996 to 2015. Pinto and Teixeira (2020) examined the disciplinary profiles of 65 countries over a broad period (1980–2016). Several studies analyzed 16 G7 and BRICS countries (Yang et al., 2012; Shashnov and Kotsemir, 2018; Yue et al., 2018). Li (2017) explored the disciplinary profiles of 45 countries, which is still a relatively small fraction of the nations capable of a substantial scientific contribution. Given that there are an estimated 100 nations with sufficiently advanced science, the need for more inclusive studies is obvious.

The Aim of the Present Study

To advance existing research, the present study aims to examine the disciplinary profiles of the mean citation rates for 107 countries or territories whose scientists made substantial contributions to the world's essential science. Following the recommendation to use indicators reflecting the top of the citation distribution (van Leeuwen et al., 2003), we used publications that the ESI selected on the basis of their top citation rates. For each country/territory that had exceeded the entrance thresholds, a disciplinary profile was formed from its mean citation rates across the 22 broad disciplines that the ESI uses to monitor publication and citation performance.

Comparing the disciplinary profiles of any two countries shows how similar or dissimilar their disciplinary strengths and weaknesses are. From the pairwise (dis)similarities between disciplinary profiles, a matrix of distances between all countries/territories can be constructed. By applying a multidimensional scaling (MDS) algorithm to this matrix, we may hope to recover a low-dimensional geometric space that represents these data as if they were points in that space (Borg and Groenen, 2005). If the axes of this space have a meaningful interpretation, they may offer a novel way to construct an index of the scientific impact of nations that is not based on the average or the top values of the citation distribution alone.

Methods

Data were retrieved from the latest available update of the ESI (Clarivate Analytics, updated on March 12, 2020; https://clarivate.com/products/essential-science-indicators/) that covered an 11-year period from January 1, 2009, until December 31, 2019. This update contained over 16 million Web of Science (WoS) documents, which were cited over 221 million times with an average frequency of 13.5 times per document.

To be included in the ESI, journals, papers, institutions, and authors need to exceed a minimum number of citations, obtained by ranking the journals, researchers, and papers in a research field in descending order of citation count and then selecting the top fraction or percentage. For authors and institutions, the threshold is set at the top 1%; for countries and journals, it is set at the top 50% over the 11-year period. The main purpose of dividing science into fields is to balance the different publication and citation frequencies of research areas. Consequently, the ESI entrance thresholds differ considerably across research areas. For example, in clinical medicine a country/territory needed 16,012 citations to pass the ESI threshold, whereas the respective figures for mathematics and economics & business were 494 and 321.
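
The threshold rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Clarivate's implementation: the helper names `entrance_threshold` and `passes_threshold`, the rounding of the top fraction up to a whole number of entities, the treatment of reaching the threshold as passing, and the toy citation totals are all hypothetical.

```python
import math


def entrance_threshold(citation_counts, top_fraction):
    """Return the citation count needed to fall within the top fraction.

    Hypothetical helper (not an ESI API): entities (papers, authors,
    institutions, countries, or journals) in one research field are ranked
    by citation count in descending order, and the threshold is the count
    of the last entity that still falls inside the top fraction.
    """
    if not citation_counts:
        raise ValueError("need at least one entity")
    if not 0 < top_fraction <= 1:
        raise ValueError("top_fraction must be in (0, 1]")
    ranked = sorted(citation_counts, reverse=True)
    # Assumption: the size of the top fraction is rounded up, keeping at least one entity.
    n_kept = max(1, math.ceil(len(ranked) * top_fraction))
    return ranked[n_kept - 1]


def passes_threshold(citations, threshold):
    """Assumption: an entity enters the field if it reaches the threshold."""
    return citations >= threshold


# Toy citation totals of six hypothetical countries in one field (not ESI data).
country_totals = [50_000, 20_000, 12_000, 9_000, 3_000, 1_200]
threshold = entrance_threshold(country_totals, top_fraction=0.5)  # top 50% for countries
print(threshold)                           # 12000
print(passes_threshold(9_000, threshold))  # False
```

Because the threshold is recomputed separately for each field, a field with many heavily cited entities (such as clinical medicine) ends up with a far higher bar than a small field.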

Among the 149 countries/territories that passed the ESI threshold in at least one research field, several published only a small number of papers. For example, researchers from the Seychelles, Bermuda, and the Vatican published 421, 404, and 257 papers, respectively, that surpassed the disciplinary entrance thresholds during the last 11 years. To include only countries with a sufficient number of papers, we analyzed countries that published at least 3,000 papers during the 11-year period. This threshold was slightly lower than in previous studies, where it was 4,000 (Allik, 2013; Lauk and Allik, 2018; Allik et al., 2020b), in order to include as many countries/territories as possible that make a substantial contribution to world science. Applying this criterion, 107 countries/territories were included in the analyses, about 72% of all countries/territories admitted to the ESI. The disciplinary profiles of these 107 countries/territories were retrieved from the ESI, and the mean citation rates across the 22 research areas are reproduced in Table 1 without any modifications. Lowering the criterion further to 2,000 papers would have extended the list by 16 additional countries: Mozambique, Bolivia, Democratic Republic of Congo, Bahrain, Cambodia, Ivory Coast, Jamaica, Madagascar, Yemen, Moldova, Syria, Libya, Mongolia, Trinidad and Tobago, and Montenegro. Because scientific strength can be measured by the number of disciplines in which a country/territory succeeded in entering the ESI, we excluded these 16 countries: they typically exceeded the entrance thresholds in only three to four research areas and in no more than eight, which is <40% of all research areas.

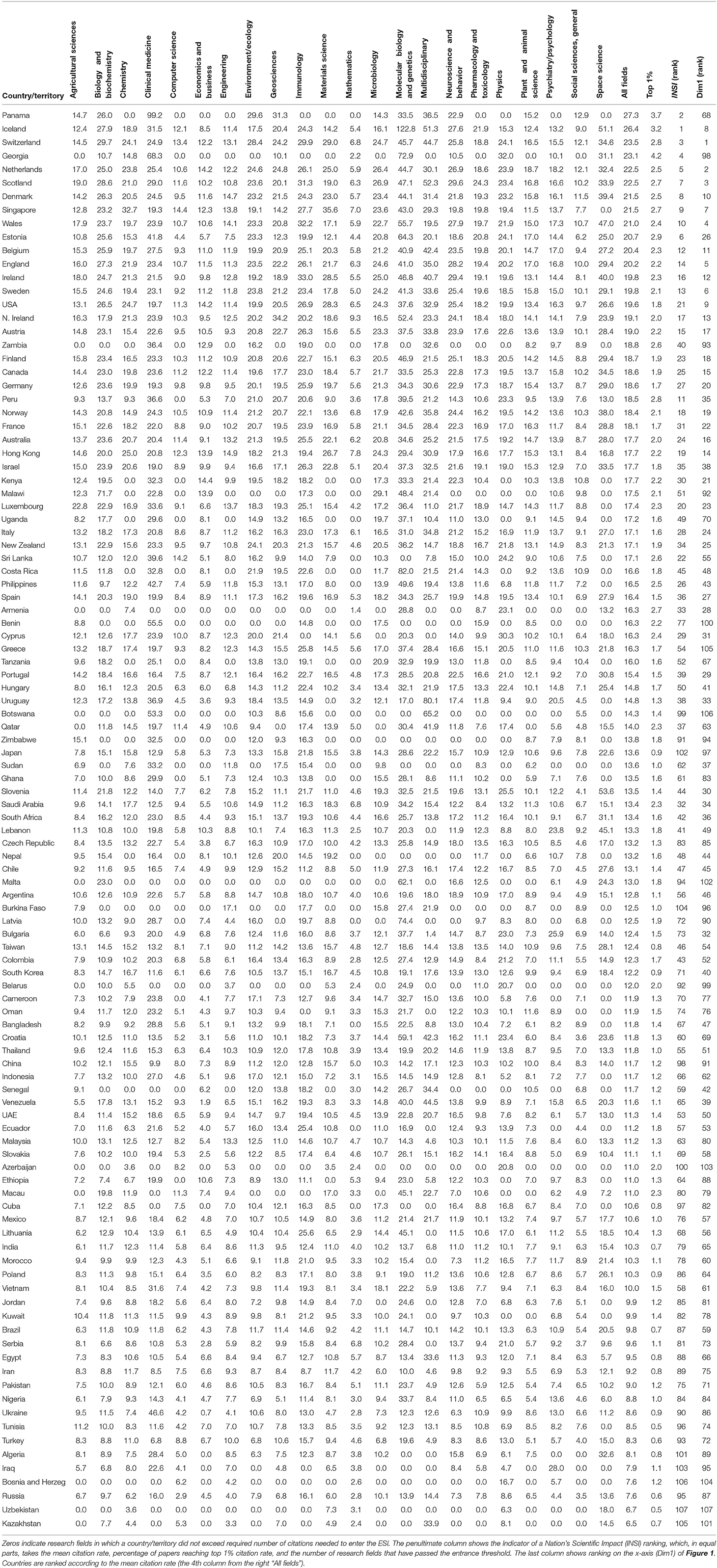

Table 1. The mean citation rates in 22 research fields and the average citation rate for 107 countries/territories that published 3,000 or more papers able to enter the ESI for the period 2009–2019.

Indicator of a Nation's Scientific Impact (INSI)

The penultimate column [“INSI (rank)”] in Table 1 presents each country/territory's INSI score (ranking), which is the average of three components (Allik et al., 2020b). The first component is the country/territory's mean citation rate, that is, the number of citations divided by the number of papers (the 4th column from the right, “All fields”). The second component is the percentage of papers that reached the top 1% citation rate in the respective research area and age cohort (the 3rd column from the right, “Top 1%”). The third component is the number of research areas in which the country/territory entered the ESI (the number of nonzero entries in the first 22 columns). For example, large countries such as the USA, Germany, China, and Russia surpassed the ESI entrance thresholds in all 22 research fields, whereas 49 (46%) of the 107 countries/territories failed to enter the ESI in at least one research area. Before averaging, the three INSI components were normalized to a mean of zero and a standard deviation of one. Thus, the INSI scores in the penultimate column are expressed in standard deviation units, showing how far below or above the average of all 107 countries/territories each one scored.
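
The INSI computation described above can be sketched as follows. This is a minimal sketch under two assumptions: the components are standardized with the population standard deviation (the text does not say whether the population or sample formula was used), and a field counts as entered when its mean citation rate is nonzero. The function names and the toy data are hypothetical.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and SD 1 (population SD, an assumption)."""
    m, s = mean(values), pstdev(values)
    if s == 0:
        # All countries identical on this component: it cannot discriminate.
        return [0.0 for _ in values]
    return [(v - m) / s for v in values]


def insi_scores(mean_citation_rates, top1_percentages, profiles):
    """Average of three standardized components, one score per country.

    mean_citation_rates: "All fields" column (citations / papers)
    top1_percentages:    "Top 1%" column
    profiles:            per-country lists of field-level mean citation rates,
                         with 0 for fields the country failed to enter
    """
    areas_entered = [sum(1 for rate in profile if rate > 0) for profile in profiles]
    components = (
        z_scores(mean_citation_rates),
        z_scores(top1_percentages),
        z_scores(areas_entered),
    )
    # zip(*components) yields the three standardized values of each country.
    return [mean(country) for country in zip(*components)]


# Three hypothetical countries with four research fields each.
scores = insi_scores(
    mean_citation_rates=[20.0, 10.0, 15.0],
    top1_percentages=[2.0, 1.0, 1.5],
    profiles=[[5, 3, 2, 1], [4, 0, 0, 1], [3, 2, 0, 1]],
)
print([round(s, 3) for s in scores])  # [1.225, -1.225, 0.0]
```

Because every component is standardized before averaging, the resulting scores are in standard-deviation units, which matches the interpretation of the INSI column given above.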

Table 1 also reproduces the mean citation rates of each country/territory in the 22 research fields. Zeros represent research fields in which a country/territory failed to enter the ESI. For example, Benin, Bosnia and Herzegovina, and Uzbekistan had 16 zeros in their disciplinary profiles. Because no entry means that no citations were recorded, we treated those research areas as having a zero citation rate.

To analyze the 107 disciplinary profiles across 22 research fields, we used MDS, which transforms “distances” or “proximities” among a set of N objects into a configuration of N points in a geometric space with the smallest possible number of dimensions. Non-metric MDS assumes that only the ranks of the distances are known or relevant and produces a map that reproduces these ranks as well as possible. We applied the non-metric Guttman-Lingoes MDS algorithm (Borg and Groenen, 2005) as implemented in the Statistica (Dell Inc.) software package. Before applying the MDS algorithm, we computed a matrix of pairwise (dis)similarities between the disciplinary profiles of all pairs of countries/territories. The absolute differences across the 22 disciplines were summed and used as the measure of (dis)similarity between each pair of countries/territories. The result was a symmetric 107 × 107 matrix with 11,449 elements, each giving the City Block (Manhattan) distance between a pair of countries/territories, including a main diagonal of zeros representing each country's distance from itself. To compress the large range of these (dis)similarities, the sums of absolute differences were normalized across the 107 countries/territories (mean of zero, standard deviation of one).
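
The construction of the (dis)similarity matrix can be sketched in Python as below. This is an illustration, not the authors' Statistica workflow: the helper names are hypothetical, and the optional per-field standardization follows one reading of the Figure 2 caption (“normalized citation rates”), whereas the text describes normalizing the summed differences. The Guttman-Lingoes MDS step itself is not reproduced here.

```python
from statistics import mean, pstdev


def standardize_fields(profiles):
    """Z-score each research field (column) across countries.

    Assumption: one possible reading of "normalized citation rates";
    constant columns are set to 0.
    """
    columns = list(zip(*profiles))
    scaled_columns = []
    for column in columns:
        m, s = mean(column), pstdev(column)
        scaled_columns.append([(x - m) / s if s else 0.0 for x in column])
    return [list(row) for row in zip(*scaled_columns)]


def city_block(p, q):
    """Manhattan distance: sum of absolute field-by-field differences."""
    return sum(abs(a - b) for a, b in zip(p, q))


def dissimilarity_matrix(profiles, normalize_fields=False):
    """Symmetric n x n matrix of City Block distances with a zero diagonal."""
    data = standardize_fields(profiles) if normalize_fields else profiles
    n = len(data)
    return [[city_block(data[i], data[j]) for j in range(n)] for i in range(n)]


# Three hypothetical countries, two fields each (zeros = field not entered).
D = dissimilarity_matrix([[1, 2], [3, 5], [0, 0]])
print(D)  # [[0, 5, 3], [5, 0, 8], [3, 8, 0]]
```

With 107 profiles the function returns a 107 × 107 matrix (11,449 elements), which would then be passed to a non-metric MDS routine.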

Results

Table 1 presents the mean citation rates across 22 research fields for each country/territory. Entries are ranked by the mean citation rate (the 4th column from the right, “All fields”). Panama (27.3), Iceland (26.4), and Switzerland (23.5) had the highest mean citation rates (note that fields with zeros were not used in calculating the mean citation rate). Among the 107 countries/territories, publications by Russian (7.6), Uzbek (6.7), and Kazakh (6.5) scientists had the smallest impact on world science in terms of citations.

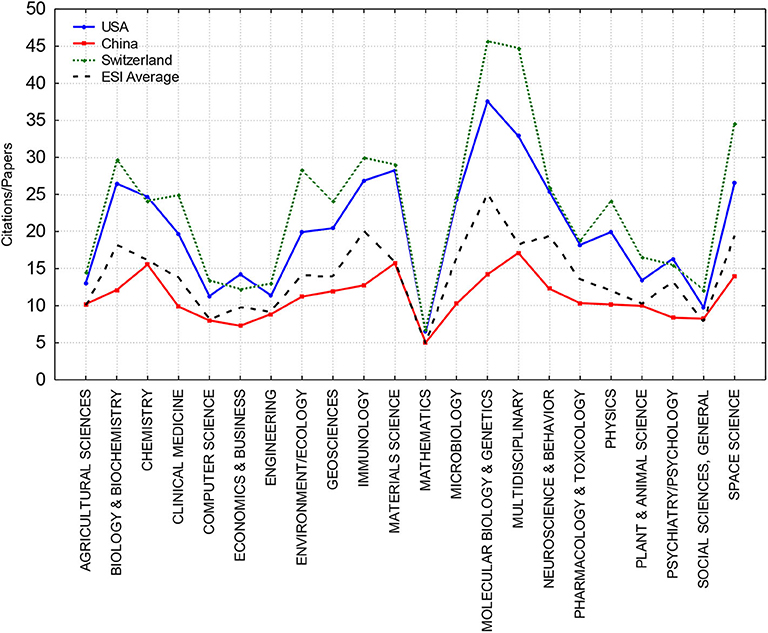

To give a better impression of the disciplinary profiles, Figure 1 displays the mean citation rates across 22 research areas for Switzerland, the USA, and China. These three nations were chosen for illustrative purposes only: China and the USA were the two most prolific scientific nations in the world, publishing over 2 million and 4 million papers, respectively, during the 11-year period, whereas Switzerland was one of the most efficient by mean citation rate. Note that all three nations entered the ESI in all 22 disciplines. The ESI average citation rate in each research area is shown as a black broken line, providing a baseline against which each nation can be compared. Switzerland, a long-time front-runner in efficiency, has a higher citation rate than the USA in almost all research areas. Although the impact of Chinese science is growing (Leydesdorff and Wagner, 2009; Leydesdorff et al., 2014), its mean citation rate is still below the ESI average in almost all 22 research fields.

Figure 1. The mean citation rates across 22 research fields for three countries, USA (blue), China (red), and Switzerland (green), compared with the ESI average (black broken line).

The penultimate column in Table 1 presents the INSI ranking of each country/territory. According to this ranking, the highest-quality science is produced in Iceland, Panama, Switzerland, the Republic of Georgia, Scotland, Estonia, the Netherlands, Singapore, Denmark, and Wales. Because these are all relatively small countries, this is consistent with previous findings that small countries seem to have an advantage in publishing high-impact scientific papers (Allik et al., 2020a,b). According to the INSI, publications by researchers from Iraq, Burkina Faso, Kazakhstan, Bosnia and Herzegovina, and Uzbekistan had the smallest impact among these 107 countries/territories. It is also interesting that China, despite its increasing research volume, occupied a position in the middle of the INSI ranking (59th), whereas Russia was very close to the bottom (95th).

Next, we examined how the disciplinary profiles of the mean citation rates were related to the overall scientific impact of countries/territories. A previous study (Bongioanni et al., 2014) proposed a complex index, borrowed from the physics of magnetism, to estimate the overlap between countries' disciplinary profiles. Here, we preferred a simpler approach: the sum of absolute differences across all 22 fields between two disciplinary profiles (see Methods section). A two-dimensional solution was optimal (stress = 0.098), indicating that all differences between countries/territories can be placed on a plane with sufficient accuracy (cf. Mair et al., 2016).
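
The stress value quoted above measures how poorly the two-dimensional configuration reproduces the rank order of the dissimilarities. The sketch below computes Kruskal's stress-1, one common definition, using monotone (isotonic) regression via the pool-adjacent-violators algorithm. This is a swapped-in technique and an assumption: Statistica's Guttman-Lingoes procedure uses rank images and may report a differently defined stress, so the hypothetical function `kruskal_stress1` is illustrative only and would not necessarily reproduce 0.098.

```python
import math
from itertools import combinations


def pava(values):
    """Pool-adjacent-violators: least-squares non-decreasing fit to values."""
    blocks = []  # each block is [sum, count]
    for v in values:
        blocks.append([v, 1])
        # Merge backwards while the block means decrease.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)
    return fitted


def kruskal_stress1(dissimilarities, coords):
    """sqrt(sum (d - d_hat)^2 / sum d^2) over all pairs i < j.

    d are Euclidean distances in the configuration; d_hat are disparities,
    i.e. the monotone fit of d ordered by the input dissimilarities.
    Ties in the dissimilarities keep their input order (a simplification).
    """
    pairs = list(combinations(range(len(coords)), 2))
    dist = {(i, j): math.dist(coords[i], coords[j]) for i, j in pairs}
    ordered = sorted(pairs, key=lambda p: dissimilarities[p[0]][p[1]])
    disparities = pava([dist[p] for p in ordered])
    num = sum((dist[p] - d_hat) ** 2 for p, d_hat in zip(ordered, disparities))
    den = sum(dist[p] ** 2 for p in ordered)
    return math.sqrt(num / den)


D = [[0, 10, 30], [10, 0, 20], [30, 20, 0]]
print(kruskal_stress1(D, [(0, 0), (1, 0), (3, 0)]))            # 0.0
print(round(kruskal_stress1(D, [(0, 0), (3, 0), (1, 0)]), 4))  # 0.378
```

The first configuration preserves the rank order of the dissimilarities perfectly, so its stress is zero; the second reverses it and is penalized.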

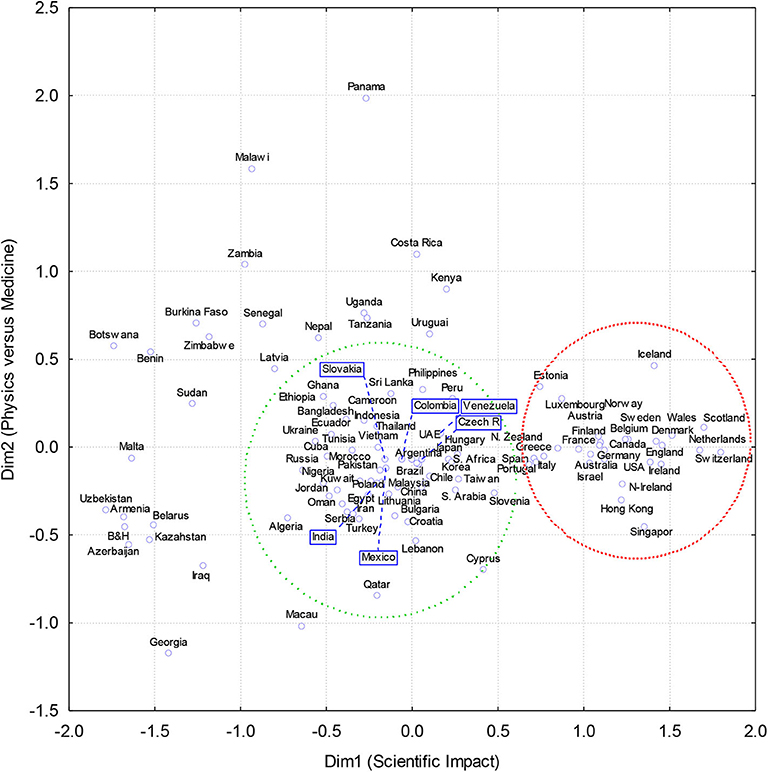

Figure 2 shows the two-dimensional plot derived from the MDS of the (dis)similarities between the disciplinary profiles of the mean citation rates. The first dimension, Dim1, can be identified as a country/territory's overall scientific impact. The rankings of countries/territories on Dim1 are presented in the last column of Table 1 [“Dim1 (rank)”]. The correlation between Dim1 and the INSI was r = 0.81 (N = 107, p < 0.0001), which is higher than the correlations between Dim1 and each of the three INSI components: the mean citation rate (r = 0.64, p < 0.00001), the percentage of top-cited papers (r = 0.35, p < 0.001), and the number of areas represented in the ESI (r = 0.77, p < 0.00001). After excluding the two largest outliers, Panama and Georgia, the correlation increased to r = 0.88. This indicates that the scientific impact of nations can be measured with the Dim1 scores with approximately the same accuracy as with the INSI. It is important to emphasize that this ranking was obtained while ignoring the absolute mean citation rates, which are the foundation of the INSI. When the MDS algorithm searched for a transformation of the pairwise differences AB, AC, and BC between any triple of disciplinary profiles A, B, and C that approximately satisfied AB + BC ≈ AC, information about the absolute elevation of the profiles was lost. Because this triangulation rule held with reasonable accuracy, all differences between profiles could be arranged on a linear scale.
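
The triangulation rule AB + BC ≈ AC can be made concrete for the City Block metric used here. For a single field, |a - b| + |b - c| = |a - c| exactly when b lies between a and c, so the summed distances are additive exactly when profile B lies between A and C in every field. The three profiles below are hypothetical; the sketch only illustrates this geometric point and is not part of the original analysis.

```python
def city_block(p, q):
    """Manhattan distance between two disciplinary profiles."""
    return sum(abs(a - b) for a, b in zip(p, q))


def between_in_every_field(a, b, c):
    """True if each field value of b lies between those of a and c."""
    return all(min(x, z) <= y <= max(x, z) for x, y, z in zip(a, b, c))


def is_additive(a, b, c, tol=1e-9):
    """Check the triangulation rule AB + BC == AC (within tol)."""
    return abs(city_block(a, b) + city_block(b, c) - city_block(a, c)) <= tol


A = [2.0, 5.0, 1.0]
B = [4.0, 7.0, 3.0]        # between A and C in every field
B_off = [4.0, 10.0, 3.0]   # overshoots C in the second field
C = [9.0, 8.0, 6.0]

print(city_block(A, B), city_block(B, C), city_block(A, C))           # 6.0 9.0 15.0
print(is_additive(A, B, C), between_in_every_field(A, B, C))          # True True
print(is_additive(A, B_off, C), between_in_every_field(A, B_off, C))  # False False
```

When profiles are ordered in this "between" fashion, their pairwise distances behave like distances along a single line, which is the intuition behind arranging the differences on a linear scale.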

Figure 2. Two-dimensional plot of a non-metric Guttman-Lingoes multidimensional scaling analysis of the similarities between countries' citation profiles (City Block or Manhattan metric of the normalized citation rates).

To illustrate the advantages of this derived ranking of scientific impact [Table 1, last column “Dim1 (rank)”] over previous ones, consider the changes in the ranking positions of countries whose high positions may not be entirely justified. According to the mean citation rate, by which countries/territories are listed in Table 1, Panama (27.3) was number one in the world, Georgia (23.1) was in 4th position, and Peru (18.5) occupied the 22nd position. Because the INSI penalizes failures to reach the ESI in any research area, countries/territories that were not successful in all 22 research areas were expected to lose positions in the ranking. Because both Georgia and Panama failed to enter the ESI in 11 research areas, they were shifted down in the INSI ranking to the 2nd and 5th positions, respectively. Peru, in contrast, failed to reach the ESI in only one field (computer science), and its position in the INSI ranking rose to 12th.

Compared with these relatively small changes between rankings based on the mean citation rate and on the INSI, the changes in countries' ranking positions on Dim1 derived from the MDS analysis were more substantial. According to their positions on Dim1 (the last column in Table 1), Georgia, Panama, and Peru occupied the 98th, 68th, and 35th positions, respectively. Thus, compared with the mean citation rate ranking, Georgia, Panama, and Peru dropped 94, 67, and 13 positions, respectively. Their disciplinary profiles were more similar to those of nations near these new positions. The two countries with profiles most similar to Panama's in this new ranking were Tanzania and Bangladesh. Georgia was placed between Sudan and Belarus, not between Belgium and Ireland as in the INSI ranking. These changes in ranking positions explain the relatively modest correlation between Dim1 and the mean citation rate (r = 0.64) mentioned above.
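
The rank shifts quoted above follow directly from the positions given in the text; the short check below recomputes them (positive values mean a drop in the ranking).

```python
# Positions taken from the text: mean citation rate ranking vs. Dim1 ranking.
mean_rate_rank = {"Panama": 1, "Georgia": 4, "Peru": 22}
dim1_rank = {"Panama": 68, "Georgia": 98, "Peru": 35}

# Difference in rank position for each country (larger = bigger drop).
drop = {country: dim1_rank[country] - mean_rate_rank[country] for country in mean_rate_rank}
print(drop)  # {'Panama': 67, 'Georgia': 94, 'Peru': 13}
```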

The second dimension, Dim2, was more difficult to interpret because no clear pattern emerged. It had the largest positive correlations with the mean citation rates in Clinical Medicine (r = 0.49, p < 0.00001) and Social Sciences (r = 0.50, p < 0.00001) and, in contrast, negative correlations with the mean citation rates in Physics (r = −0.49, p < 0.00001) and Mathematics (r = −0.49, p < 0.00001). It therefore seems fair to say that this dimension contrasts human-centered sciences with physics- and mathematics-centered sciences.
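
The interpretation of Dim2 rests on correlating each country's coordinate on that dimension with its mean citation rate in each field. A minimal sketch of that step, assuming plain Pearson correlations and using hypothetical function names and toy data, is:

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def field_correlations(dim_scores, profiles, field_names):
    """Correlate one MDS dimension with every field column, strongest positive first."""
    columns = zip(*profiles)  # one tuple of country values per field
    r = {name: pearson_r(dim_scores, col) for name, col in zip(field_names, columns)}
    return sorted(r.items(), key=lambda item: item[1], reverse=True)


# Four hypothetical countries, two fields; field "A" rises with the dimension, "B" falls.
dim2 = [1.0, 2.0, 3.0, 4.0]
profiles = [[1, 4], [2, 3], [3, 2], [4, 1]]
print(field_correlations(dim2, profiles, ["A", "B"]))  # [('A', 1.0), ('B', -1.0)]
```

Fields at the top of the sorted list pull a country toward the positive end of the dimension, and fields at the bottom pull it toward the negative end; reading both ends together is what supports the human-centered versus physics/mathematics contrast.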

Two distinct clusters can be identified on the plot. We caution that these two clusters were identified impressionistically, for heuristic purposes only, and not as the result of any rigorous procedure. The first cluster (surrounded by the red circle) represents the cream of the crop in the world of science. This cluster of 29 countries includes mainly European countries, such as the Netherlands, Scotland, and Switzerland, but also others, such as the USA, Singapore, Hong Kong, Canada, Australia, Israel, and New Zealand. Although it has been noted that the scientific wealth of Hong Kong and Singapore is declining (Horta, 2018), they firmly belong to this group of world science leaders. A common feature of these 29 countries/territories is that they all passed the ESI entrance thresholds in all 22 research areas.

Another group (green circle) unites not only many of the world's largest countries (China, Russia, Brazil, and India) but also smaller countries such as Slovenia, Ecuador, and Hungary. Whereas the large countries in this cluster were successful in all 22 research areas, the smaller ones may have difficulty collecting enough citations to exceed the ESI entrance thresholds in some research areas. Outside these two groups (circles) are mainly African countries (upper part) and post-communist countries (lower part), which are scattered along Dim2.

These two clusters resemble two clusters identified previously (Bongioanni et al., 2015, Figure 2). Bongioanni et al. (2015) identified one cluster of countries with a prominent biomedical disciplinary profile, such as the US and the Netherlands, and another cluster of countries with a conspicuous physical-sciences profile, such as China and Russia. Many Central, Southern, and Eastern European countries also belonged to this second group, as did India, Indonesia, and Mexico. However, there are notable differences between the findings of Bongioanni et al. (2015) and those of the present study. According to Bongioanni et al. (2015), Turkey is in the same group as the UK and the Netherlands; in the current study, Turkey's nearest neighbors are Serbia and Iran in the second group. In addition, Estonia and Portugal were classified differently. According to Bongioanni et al. (2015), these two countries belong to the less scientifically advanced group of nations, whereas in our classification they more likely belong to the leading group of scientific nations. These discrepancies probably arise from the different measures of (dis)similarity between disciplinary profiles.

Discussion

Experts have suggested that new impact indicators should not be introduced unless they have clear added value relative to existing indicators (Waltman, 2016). Indeed, the average citation rate and the percentage of papers reaching the top of the citation distribution have proved to be trusted and reliable indicators of the scientific wealth of nations (May, 1997; Rousseau and Rousseau, 1998; van Leeuwen et al., 2003; King, 2004; Halffman and Leydesdorff, 2010; Prathap, 2017). Very serious arguments are needed to introduce yet another indicator. Although warnings are still issued not to treat citations as the only constituent of scientific quality (MacRoberts and MacRoberts, 2018; Aksnes et al., 2019), citation indicators have become the most convenient measures of the scientific strength of nations (May, 1997; Rousseau and Rousseau, 1998; King, 2004; Harzing and Giroud, 2014; Prathap, 2017). Nevertheless, some country rankings based on citation statistics did not look credible (Allik, 2013; Allik et al., 2020a,b). One possible cause of these counterintuitive rankings, as mentioned above, appears to be the selectivity of databases, which are the main tools for extracting what is believed to be essential in science (Allik et al., 2020a,b). Although the top of the citation distribution appears to be a more informative characteristic of scientific impact than indicators based on average values (van Leeuwen et al., 2003), the selectivity of databases unintentionally eliminates “losers” whose inclusion would have decreased the mean citation rate. Thus, a nation's scientific impact can be increased not only by the number of highly cited papers but also by excluding papers that could lower its mean citation rate (Allik et al., 2020a).
To improve citation indicators, a new measure, the INSI, was proposed, which also takes into account the number of research areas in which a country/territory succeeded in entering the ESI. This amendment moved the rankings in the right direction, although not radically enough (Allik et al., 2020b). As noted above, disciplinary profiles appear to differ between scientifically developed and non-leading countries (Kozlowski et al., 1999; Almeida et al., 2009; Yang et al., 2012; Bongioanni et al., 2014, 2015; Harzing and Giroud, 2014; Carley et al., 2017; Li, 2017; Daraio et al., 2018; Shashnov and Kotsemir, 2018). For example, it was noted that one reason why post-communist countries still lag behind their Western counterparts is their archaic disciplinary structure, which reflects, among other things, the demands of the former totalitarian regimes (Kozlowski et al., 1999; Markusova et al., 2009; Jurajda et al., 2017). The openness of national science systems was observed to be correlated with scientific impact: the more internationally engaged a nation is, in terms of co-authorship and researcher mobility, the higher the impact of its scientific work (Wagner et al., 2018). It was also suggested that geographical proximity, one of the strongest incentives for cooperation, may be a principal factor behind the similarity between disciplinary profiles (Almeida et al., 2009). Although pairs such as Finland–Norway, England–Scotland, Netherlands–Belgium, and Denmark–Sweden (Almeida et al., 2009, Figure 4) support this idea, an equally large number of neighboring countries (e.g., Panama–Colombia, Peru–Ecuador, Georgia–Armenia, Estonia–Latvia) have distinctly different levels of scientific impact.
BRICS countries were also observed to differ from the scientifically leading countries, typically G7 members, not only in overall scientific impact but also in the disciplinary structure of their science (Bornmann et al., 2015; Li, 2017; Shashnov and Kotsemir, 2018; Yue et al., 2018). For example, the competitive advantage of a group of nations including the Netherlands, the USA, the UK, Canada, and Israel was found to be an emphasis on social and biomedical research (Harzing and Giroud, 2014). The disciplinary citation profiles of G7 and BRICS countries are noticeably different. For instance, most G7 countries performed well in Space Science, which was not a strength of BRICS countries (Shashnov and Kotsemir, 2018; Yue et al., 2018). Despite these differences, there seems to be a common evolutionary pattern of convergence in national disciplinary profiles (Bongioanni et al., 2014; Bornmann et al., 2015; Li, 2017).

Typically, disciplinary profiles have been analyzed to discover the clusters to which nations belong. Another approach, adopted in this study, was to see whether a small number of dimensions can summarize the (dis)similarities between disciplinary profiles (cf. Borg and Groenen, 2005). A priori, it did not seem likely that the similarities and dissimilarities between disciplinary profiles would follow a distinct pattern that could be described by a low-dimensional space. Like any other human enterprise, science is a complex institution, and countries may differ in how they prioritize various research fields. For example, Panama, in collaboration with the Smithsonian Institution (one of the world's largest museum, education, and research complexes), invested in the study of tropical ecosystems by creating a branch of the Smithsonian in Panama, which attracted the best researchers in this area from around the world (cf. Rubinoff and Leigh, 1990). Another example, mentioned earlier, is Georgia, which allocated considerable resources to physics in order to develop partnerships with large international collaborative networks. As a result, Georgia achieved the highest mean citation rate in physics (on average 32 citations per paper; see Table 1, column “Physics”). Inspecting Table 1, one may also notice, with some surprise, that Kenya had the highest impact among the 107 nations in economics and business: papers published by Kenyan economists collected 14.4 citations on average (column “Economics and business”). Kenya benefited from the research unit of the United Nations Environment Programme in Nairobi, which is devoted to the economics of ecosystem management and ecosystem services (cf. Ivanova, 2007).
Given the accomplishments of deCODE genetics and of Kári Stefánsson and his colleagues (Hakonarson et al., 2003), it is not surprising that Iceland took first place in the impact ranking for molecular biology and genetics (column “Molecular biology and genetics”). These examples seem to suggest that nations may have different keys to success in producing high-quality science.
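The dimensional approach described above starts from a matrix of pairwise (dis)similarities between national profiles. The sketch below assumes Euclidean distance as the dissimilarity measure (the exact measure is not restated in this section) and uses invented toy profiles; the helper names are hypothetical. It also checks the pair count: with 107 countries there are 107 × 106 / 2 = 5,671 distinct pairs, the number of pairwise differences reported in the abstract.

```python
import math
from itertools import combinations


def euclidean(p, q):
    """Euclidean distance between two equal-length citation-rate profiles."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def dissimilarity_matrix(profiles):
    """Symmetric n x n matrix of pairwise profile distances (zero diagonal)."""
    n = len(profiles)
    d = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        d[i][j] = d[j][i] = euclidean(profiles[i], profiles[j])
    return d


def n_pairs(n):
    """Number of distinct unordered pairs among n countries/territories."""
    return n * (n - 1) // 2


# Hypothetical toy profiles: mean citations per paper in three research areas.
# A 0.0 entry stands for a field below the ESI entrance threshold.
profiles = [
    [12.0, 10.0, 8.0],  # country A
    [11.0, 9.0, 7.0],   # country B
    [4.0, 0.0, 3.0],    # country C
]
d = dissimilarity_matrix(profiles)
print(round(d[0][1], 3))  # 1.732, i.e. sqrt(3)
print(n_pairs(107))       # 5671
```

In the real analysis each profile would have 22 entries, one per ESI research area, and the resulting 107 × 107 matrix would be the input to the MDS algorithm.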

Nevertheless, the (dis)similarities between disciplinary profiles could be arranged along a single ranking dimension, which corresponded to scientific impact as measured by conventional indicators such as the INSI. This demonstrated that, despite differences in nations' competitive advantages, what mattered was overall impact across disciplines, not how this impact was allocated among research areas. To attain success, it was essential to have an evenly high level of citations relative to the ESI average across as many disciplines as possible, because low impact, or failure even to exceed the entrance thresholds, in one or several research areas is a key factor that diminishes overall scientific impact. This may also indicate that the attempts of research funding agencies to prioritize disciplines in their budgets are not as effective as is usually claimed. The results of this study suggest that the only thing really worth prioritizing is scientific excellence, irrespective of the discipline in which it is demonstrated. To our satisfaction, the impact ranking derived from the (dis)similarities between disciplinary profiles was free from the anomalies that traditional citation indicators typically exhibit. These results support the idea of a common route toward scientific excellence in which disciplinary peculiarities support general advancement (Bongioanni et al., 2014; Li, 2017; Thelwall and Levitt, 2018).
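The argument that an evenly high citation level matters more than a single standout field can be made concrete with a toy calculation. The sketch below is an illustration only, not the INSI and not the MDS-derived ranking: it assumes hypothetical field-specific world baselines, expresses each field's mean citation rate relative to that baseline, counts a field below the entrance threshold as zero, and takes an unweighted mean across all fields.

```python
def relative_profile(rates, baselines):
    """Field-by-field citation rate divided by a (hypothetical) world baseline.

    Fields absent from `rates` (below the ESI entrance threshold) count as 0.
    """
    return [rates.get(field, 0.0) / base for field, base in baselines.items()]


def overall_level(rates, baselines):
    """Unweighted mean of the relative profile across all fields."""
    rel = relative_profile(rates, baselines)
    return sum(rel) / len(rel)


# Hypothetical world baselines (mean citations per paper) for four fields.
baselines = {"physics": 10.0, "chemistry": 12.0, "economics": 5.0, "genetics": 20.0}

# Evenly 20% above the baseline in every field.
even = {"physics": 12.0, "chemistry": 14.4, "economics": 6.0, "genetics": 24.0}
# Twice the baseline in physics, but economics is below the entrance threshold.
spiky = {"physics": 20.0, "chemistry": 14.4, "genetics": 24.0}

print(round(overall_level(even, baselines), 3))   # 1.2
print(round(overall_level(spiky, baselines), 3))  # 1.1
```

Even a doubled citation rate in physics does not compensate for the missing field: the uneven profile ends up at about 1.1 times the baseline on average, below the evenly strong one at 1.2.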

In conclusion, previous attempts to construct indicators of the scientific impact of nations were based on average or top-citation statistics. However, the country rankings based on these indicators often look problematic and counterintuitive. Most of these anomalies arise when nations fail to exceed the ESI entrance thresholds in weaker research areas, where they did not collect a sufficient number of citations (Allik et al., 2020a,b). To correct these implausible rankings, we proposed also taking into account the number of research areas in which each country/territory failed to exceed the ESI entrance thresholds (Allik et al., 2020b). This was an improvement, but it did not eliminate problematic rankings entirely. In this study, we proposed a novel approach in which scientific impact is derived from an MDS analysis of the (dis)similarities between the disciplinary profiles of mean citation rates. Scientific impact emerged as a dimension of the recovered geometric space, and this dimension characterized the quality of national science surprisingly well, without the impact being artificially inflated by the omission of data in weaker research areas. Because the shapes of the disciplinary profiles seemed to be irrelevant, only the cumulative citation rate across all disciplines appears to matter for a country's position in the scientific impact ranking.
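The core idea of recovering an impact ranking as a dimension of a geometric space fitted to profile (dis)similarities can be sketched in a few lines. Note the substitution: the study used a non-metric MDS algorithm, whereas this sketch swaps in classical (Torgerson) metric MDS, extracts only the leading dimension by power iteration, and runs on invented toy profiles with Euclidean distances. It illustrates the principle and does not reproduce the authors' analysis.

```python
import math


def distances(profiles):
    """Pairwise Euclidean distances between profiles."""
    return [[math.dist(p, q) for q in profiles] for p in profiles]


def double_center(d):
    """Torgerson double-centering of squared distances: B = -1/2 * J D^2 J."""
    n = len(d)
    sq = [[x * x for x in row] for row in d]
    row_means = [sum(row) / n for row in sq]
    col_means = [sum(sq[i][j] for i in range(n)) / n for j in range(n)]
    grand_mean = sum(row_means) / n
    return [[-0.5 * (sq[i][j] - row_means[i] - col_means[j] + grand_mean)
             for j in range(n)] for i in range(n)]


def first_dimension(d, iters=1000):
    """Coordinates on the leading classical-MDS dimension via power iteration."""
    b = double_center(d)
    n = len(b)
    # Start away from the constant vector, which B maps to zero.
    v = [float(i) for i in range(n)]
    lam = 0.0
    for _ in range(iters):
        w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return [0.0] * n
        v = [x / norm for x in w]
        lam = norm  # converges to the leading eigenvalue (B is PSD here)
    return [math.sqrt(lam) * x for x in v]


def orient(coords, profiles):
    """MDS axes have arbitrary sign; flip so the axis rises with mean citation level."""
    means = [sum(p) / len(p) for p in profiles]
    c_bar, m_bar = sum(coords) / len(coords), sum(means) / len(means)
    cov = sum((c - c_bar) * (m - m_bar) for c, m in zip(coords, means))
    return coords if cov >= 0 else [-c for c in coords]


# Hypothetical toy profiles (mean citations per paper in three fields);
# 0.0 marks a field below the ESI entrance threshold.
profiles = [
    [12.0, 10.0, 8.0],  # A
    [9.0, 8.0, 7.0],    # B
    [6.0, 5.0, 0.0],    # C
    [3.0, 2.0, 0.0],    # D
]
dim1 = orient(first_dimension(distances(profiles)), profiles)
print([round(x, 2) for x in dim1])
```

With these toy profiles the recovered order along the first dimension is A, B, C, D, following the overall citation level. A non-metric MDS (for example, scikit-learn's `MDS` with `metric=False`) would instead fit only the rank order of the dissimilarities.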

This study has several limitations. The decision to include countries that published 3,000 or more papers (instead of the previously used 4,000) during the 11-year period was a discretionary one. However, some tests with different numbers of countries demonstrated that the final MDS plot was invariant to this number and preserved its general configuration. Another potentially problematic decision was to assign a citation rate of zero to unrepresented fields. We can only guess how replacing these zeros with the actual citation rates, which are expected to be close to nil anyway, would have changed the results. Unfortunately, the ESI does not provide information about the number of publications and their citation rates that fall below the entrance thresholds. Although we were among the first to notice that spurious country rankings may be created by the ESI's most valuable property, its exclusive focus on the top of the citation distribution, we have little evidence that applying MDS to the disciplinary profiles provides the best answer to the problem. In one of our previous papers (Allik et al., 2020b), we tried to correct rankings by taking into account the number of research areas in which each country/territory failed to exceed the ESI entrance thresholds. Although the spurious rankings were diminished, the improvement was less spectacular than that achieved with the MDS of the disciplinary profiles used in this study. Additional studies are needed to establish the best formula for taking missing research fields into account.
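The zero-replacement decision lends itself to a simple sensitivity check: recompute a pairwise distance after imputing a small positive value instead of zero for the missing fields, and measure how much the distance moves. The sketch below assumes Euclidean distance, and both the profiles and the imputed value of 0.5 citations per paper are hypothetical; as noted above, the ESI does not report the sub-threshold data needed to do this with real values.

```python
import math


def fill_missing(profile, fields, value=0.0):
    """Vector over `fields`; fields absent from `profile` get `value`."""
    return [profile.get(f, value) for f in fields]


def distance(p, q):
    """Euclidean distance between two profile vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


fields = ["physics", "chemistry", "economics", "genetics"]
# Hypothetical profiles (mean citations per paper); y lacks two fields
# because they fall below the ESI entrance threshold.
x = {"physics": 12.0, "chemistry": 11.0, "economics": 6.0, "genetics": 15.0}
y = {"physics": 8.0, "chemistry": 7.0}

d_zero = distance(fill_missing(x, fields), fill_missing(y, fields))
# Hypothetical small stand-in for the unknown sub-threshold citation rate.
d_small = distance(fill_missing(x, fields, 0.5), fill_missing(y, fields, 0.5))
relative_change = (d_zero - d_small) / d_zero
print(round(d_zero, 3), round(d_small, 3), round(relative_change, 3))
```

Here the distance shrinks by under 4%. In a full check, every distance involving a country with missing fields would be recomputed and the MDS configuration refitted, so that the recovered rankings could be compared.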

Data Availability Statement

All datasets generated for this study are included in the article.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adams, J., and King, C. (2010). Global Research Report: Russia. Research and Collaboration in the New Geography of Science. Leeds: Evidence.

Aksnes, D. W., Langfeldt, L., and Wouters, P. (2019). Citations, citation indicators, and research quality: an overview of basic concepts and theories. Sage Open 9:2158244019829575. doi: 10.1177/2158244019829575

Albarran, P., Perianes-Rodriguez, A., and Ruiz-Castillo, J. (2015). Differences in citation impact across countries. J. Assoc. Inform. Sci. Technol. 66, 512–525. doi: 10.1002/asi.23219

Allik, J. (2013). Factors affecting bibliometric indicators of scientific quality. Trames J. Human. Soc. Sci. 17, 199–214. doi: 10.3176/tr.2013.3.01

Allik, J., Lauk, K., and Realo, A. (2020a). Factors predicting the scientific wealth of nations. Cross. Cult. Res. 54, 364–397. doi: 10.1177/1069397120910982

Allik, J., Lauk, K., and Realo, A. (2020b). Indicators of the scientific impact of nations revisited. Trames J. Human. Soc. Sci. 24, 231–250. doi: 10.3176/tr.2020.2.07

Almeida, J. A. S., Pais, A., and Formosinho, S. J. (2009). Science indicators and science patterns in Europe. J. Informetr. 3, 134–142. doi: 10.1016/j.joi.2009.01.001

Bongioanni, I., Daraio, C., Moed, H. F., and Ruocco, G. (2015). Comparing the Disciplinary Profiles of National and Regional Research Systems by Extensive and Intensive Measures. Available online at: https://pdfs.semanticscholar.org/4406/daed0bde9f3367b1596b97d1fcc3aac360c4.pdf

Bongioanni, I., Daraio, C., and Ruocco, G. (2014). A quantitative measure to compare the disciplinary profiles of research systems and their evolution over time. J. Informetr. 8, 710–727. doi: 10.1016/j.joi.2014.06.006

Borg, I., and Groenen, P. J. F. (2005). Modern Multidimensional Scaling: Theory and Applications, 2nd Edn. New York, NY: Springer.

Bornmann, L., Wagner, C., and Leydesdorff, L. (2015). BRICS countries and scientific excellence: a bibliometric analysis of most frequently cited papers. J. Assoc. Inform. Sci. Technol. 66, 1507–1513. doi: 10.1002/asi.23333

Carley, S., Porter, A. L., Rafols, I., and Leydesdorff, L. (2017). Visualization of disciplinary profiles: enhanced science overlay maps. J. Data Inform. Sci. 2, 68–111. doi: 10.1515/jdis-2017-0015

Cimini, G., Gabrielli, A., and Labini, F. S. (2014). The scientific competitiveness of nations. PLoS ONE 9:e113470. doi: 10.1371/journal.pone.0113470

Confraria, H., Godinho, M. M., and Wang, L. L. (2017). Determinants of citation impact: a comparative analysis of the Global South versus the Global North. Res. Policy 46, 265–279. doi: 10.1016/j.respol.2016.11.004

Daraio, C., Fabbri, F., Gavazzi, G., Izzo, M. G., Leuzzi, L., Quaglia, G., and Ruocco, G. (2018). Assessing the interdependencies between scientific disciplinary profiles. Scientometrics 116, 1785–1803. doi: 10.1007/s11192-018-2816-5

Erfanmanesh, M., Tahira, M., and Abrizah, A. (2017). The publication success of 102 nations in Scopus and the performance of their Scopus-indexed journals. Publish. Res. Q. 33, 421–432. doi: 10.1007/s12109-017-9540-5

Garfield, E. (1955). Citation indexes for science: a new dimension in documentation through association of ideas. Science 122, 108–111. doi: 10.1126/science.122.3159.108

Glänzel, W. (2000). Science in Scandinavia: a bibliometric approach. Scientometrics 48, 121–150. doi: 10.1023/A:1005640604267

Guskov, A., Kosyakov, D., and Selivanova, I. (2016). Scientometric research in Russia: impact of science policy changes. Scientometrics 107, 287–303. doi: 10.1007/s11192-016-1876-7

Hakonarson, H., Gulcher, J. R., and Stefánsson, K. (2003). deCODE genetics, Inc. Pharmacogenomics 4, 209–215. doi: 10.1517/phgs.4.2.209.22627

Halffman, W., and Leydesdorff, L. (2010). Is inequality among universities increasing? Gini coefficients and the elusive rise of elite universities. Minerva 48, 55–72. doi: 10.1007/s11024-010-9141-3

Harzing, A. W., and Giroud, A. (2014). The competitive advantage of nations: an application to academia. J. Informetr. 8, 29–42. doi: 10.1016/j.joi.2013.10.007

Horta, H. (2018). The declining scientific wealth of Hong Kong and Singapore. Scientometrics 117, 427–447. doi: 10.1007/s11192-018-2845-0

Ivanova, M. (2007). Designing the United Nations Environment Programme: a story of compromise and confrontation. Int. Environ. Agree. Politic. Law Econom. 7, 337–361. doi: 10.1007/s10784-007-9052-4

Jurajda, S., Kozubek, S., Munich, D., and Skoda, S. (2017). Scientific publication performance in post-communist countries: still lagging far behind. Scientometrics 112, 315–328. doi: 10.1007/s11192-017-2389-8

Karlsson, S., and Persson, O. (2012). The Swedish Production of Highly Cited Papers. Stockholm: Vetenskapsrådet.

Kozlowski, J., Radosevic, S., and Ircha, D. (1999). History matters: the inherited disciplinary structure of the post-communist science in countries of Central and Eastern Europe and its restructuring. Scientometrics 45, 137–166. doi: 10.1007/BF02458473

Lauk, K., and Allik, J. (2018). A puzzle of Estonian science: how to explain unexpected rise of the scientific impact. Trames J. Human. Soc. Sci. 22, 329–344. doi: 10.3176/tr.2018.4.01

Leydesdorff, L., and Wagner, C. (2009). Is the United States losing ground in science? A global perspective on the world science system. Scientometrics 78, 23–36. doi: 10.1007/s11192-008-1830-4

Leydesdorff, L., Wagner, C. S., and Bornmann, L. (2014). The European Union, China, and the United States in the top-1% and top-10% layers of most-frequently cited publications: competition and collaborations. J. Informetr. 8, 606–617. doi: 10.1016/j.joi.2014.05.002

Li, N. (2017). Evolutionary patterns of national disciplinary profiles in research: 1996-2015. Scientometrics 111, 493–520. doi: 10.1007/s11192-017-2259-4

Lorca, P., and de Andrés, J. (2019). The importance of cultural factors in R&D intensity. Cross Cult. Res. 53, 483–507. doi: 10.1177/1069397118813546

MacRoberts, M. H., and MacRoberts, B. R. (2018). The mismeasure of science: citation analysis. J. Assoc. Inform. Sci. Technol. 69, 474–482. doi: 10.1002/asi.23970

Mair, P., Borg, I., and Rusch, T. (2016). Goodness-of-fit assessment in multidimensional scaling and unfolding. Multivar. Behav. Res. 51, 772–789. doi: 10.1080/00273171.2016.1235966

Markusova, V. A., Jansz, M., Libkind, A. N., Libkind, I., and Varshavsky, A. (2009). Trends in Russian research output in post-Soviet era. Scientometrics 79, 249–260. doi: 10.1007/s11192-009-0416-0

May, R. M. (1997). The scientific wealth of nations. Science 275, 793–796. doi: 10.1126/science.275.5301.793

Monge-Najera, J., and Ho, Y. S. (2015). Bibliometry of Panama publications in the Science Citation Index Expanded: publication type, language, fields, authors and institutions. Rev. Biol. Trop. 63, 1255–1266. doi: 10.15517/rbt.v63i4.21112

Öquist, G., and Benner, M. (2015). “Why are some nations more successful than others in research impact? A comparison between Denmark and Sweden,” in Incentives and Performance: Governance of Research Organizations, eds I. M. Welpe, J. Wollersheim, S. Ringelhan, and M. Osterloh (Cham: Springer International Publishing), 241–257.

Pinto, T., and Teixeira, A. A. C. (2020). The impact of research output on economic growth by fields of science: a dynamic panel data analysis, 1980–2016. Scientometrics 123, 945–978. doi: 10.1007/s11192-020-03419-3

Prathap, G. (2017). Scientific wealth and inequality within nations. Scientometrics 113, 923–928. doi: 10.1007/s11192-017-2511-y

Radosevic, S., and Yoruk, E. (2014). Are there global shifts in the world science base? Analysing the catching up and falling behind of world regions. Scientometrics 101, 1897–1924. doi: 10.1007/s11192-014-1344-1

Rousseau, S., and Rousseau, R. (1998). The scientific wealth of European nations: taking effectiveness into account. Scientometrics 42, 75–87. doi: 10.1007/BF02465013

Rubinoff, I., and Leigh, E. G. (1990). Dealing with diversity: the Smithsonian Tropical Research Institute and tropical biology. Trends Ecol. Evolut. 5, 115–118. doi: 10.1016/0169-5347(90)90165-A

Shashnov, S., and Kotsemir, M. (2018). Research landscape of the BRICS countries: current trends in research output, thematic structures of publications, and the relative influence of partners. Scientometrics 117, 1115–1155. doi: 10.1007/s11192-018-2883-7

Thelwall, M., and Levitt, J. M. (2018). National scientific performance evolution patterns: retrenchment, successful expansion, or overextension. J. Assoc. Inform. Sci. Technol. 69, 720–727. doi: 10.1002/asi.23969

Tregubova, N. D., Fabrykant, M., and Marchenko, A. (2017). Countries versus disciplines: comparative analysis of post-Soviet transformations in academic publications from Belarus, Russia and Ukraine. Comparat. Sociol. 16, 147–177. doi: 10.1163/15691330-12341414

van Leeuwen, T. N., Visser, M. S., Moed, H. F., Nederhof, T. J., and van Raan, A. F. J. (2003). Holy Grail of science policy: exploring and combining bibliometric tools in search of scientific excellence. Scientometrics 57, 257–280. doi: 10.1023/A:1024141819302

Wagner, C. S., Whetsell, T., Baas, J., and Jonkers, K. (2018). Openness and impact of leading scientific countries. Front. Res. Metr. Anal. 3:10. doi: 10.3389/frma.2018.00010

Waltman, L. (2016). A review of the literature on citation impact indicators. J. Informetr. 10, 365–391. doi: 10.1016/j.joi.2016.02.007

Yang, L. Y., Yue, T., Ding, J. L., and Han, T. (2012). A comparison of disciplinary structure in science between the G7 and the BRIC countries by bibliometric methods. Scientometrics 93, 497–516. doi: 10.1007/s11192-012-0695-8

Keywords: disciplinary profiles, scientific impact, Essential Science Indicators, multidimensional scaling, bibliometrics

Citation: Allik J, Lauk K and Realo A (2020) The Scientific Impact Derived From the Disciplinary Profiles. Front. Res. Metr. Anal. 5:569268. doi: 10.3389/frma.2020.569268

Received: 03 June 2020; Accepted: 17 August 2020;

Published: 16 October 2020.

Edited by:

Juan Ignacio Gorraiz, Universität Wien, Austria

Reviewed by:

Hamid R. Jamali, Charles Sturt University, Australia

Zaida Chinchilla-Rodríguez, Consejo Superior de Investigaciones Científicas (CSIC), Spain

Copyright © 2020 Allik, Lauk and Realo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jüri Allik, juri.allik@ut.ee
