Psychometric properties of the TACT framework—Determining rigor in qualitative research

- ¹Higher Education Development Centre, University of Otago, Dunedin, New Zealand

- ²Faculty of Health Sciences and Medicine, Bond University, Gold Coast, QLD, Australia

- ³Otago Business School, University of Otago, Dunedin, New Zealand

Introduction: The credibility of qualitative research has long been debated, with critics emphasizing the lack of rigor and the challenges of demonstrating it. In qualitative research, rigor encompasses explicit, detailed descriptions of various research stages, including problem framing, study design, data collection, analysis, and reporting. The diversity inherent in qualitative research, which originates from various beliefs and paradigms, makes it challenging to establish universal guidelines for determining its rigor. Additionally, researchers' thought processes in qualitative studies often go unrecorded, which further complicates the assessment of research quality.

Methods: To address these concerns, this article builds on the TACT framework, which was developed to teach postgraduate students and those new to qualitative research to identify and apply rigorous principles and indicators in qualitative research. The research reported in this article focuses on creating a scale designed to evaluate the psychometric properties of the TACT framework. This involves analyzing the stability of its dimensions and understanding its effectiveness as a tool for teaching and research.

Results: The study's findings indicate that the TACT framework, when assessed through the newly developed scale, exhibits stable dimensions consistent with rigorous qualitative research principles. The framework effectively guides postgraduate students and new researchers in assessing the rigor of qualitative research processes and outcomes.

Discussion: The application of the TACT framework and its evaluation scale reveals several insights. First, it demonstrates the framework's utility in bridging the gap in pedagogical tools for teaching rigor in qualitative research methods. Second, it highlights the framework's potential in providing a structured approach to undertaking qualitative research, which is essential given this field's diverse methodologies and paradigms. However, the TACT framework remains a guide to enhancing rigor throughout the various phases of qualitative research; it is by no means a measure of rigor.

Conclusion: The TACT framework and its accompanying evaluative scale represent significant steps toward standardizing and enhancing the rigor of qualitative research, particularly for postgraduate students and early career researchers. While they do not solve all challenges associated with obtaining and demonstrating rigor in qualitative research, they provide a valuable tool for assessing and ensuring research quality, thereby addressing some of the longstanding criticisms of the quality of research obtained through qualitative methods.

Introduction and related research

The qualitative research methodology comprises several individually unique methods, with limited standardization of approaches and procedures. This lack of methodological standardization is problematic in achieving rigor (Williams et al., 2020). The scarcity of research is not surprising, considering the diverse range of approaches through which qualitative research can be conducted. Rigor, broadly defined, entails the capacity to be extremely thorough, systematic, consistent, methodical, and cautious. For several years, the literature has emphasized the value of rigor in qualitative studies (Guba and Lincoln, 1981; Morse et al., 2002; Lietz et al., 2006; Morse, 2015; Noble and Smith, 2015). For instance, Tong and Dew (2016) suggested that qualitative studies must be conducted using a rigorous approach and that the findings need to be comprehensively reported. Achieving rigor requires demonstrating that research outcomes can be applied to solve problems (Noble and Smith, 2015). It also means that the entire process of undertaking research is systematic and methodically transparent and that findings are accurately reported (Johnson et al., 2020).

Issues of rigor and relevance are likely to vary in complexity depending on the types of research questions, participants' characteristics and project size (Camfield, 2019).

Ensuring and upholding consistency in the approach, analysis, and reporting of research outcomes is of utmost importance due to the growing variety of qualitative research methods and designs (Daniel, 2019). While the significance of ensuring rigor in qualitative research methods is acknowledged (Daniel, 2018; Forero et al., 2018), the literature is divided on how to achieve it in practice. Some researchers have established universal criteria and standards for evaluating qualitative research grounded in interpretative ontologies (Shenton, 2004), while others have called for systematic and standardized procedures similar to those used in quantitative research (Morse et al., 2002). The adoption of general guidelines for evaluating qualitative research studies has faced criticism, as universal standards for judging qualitative research outcomes undermine the complexity and plurality of qualitative research methodology (Yardley, 2000; Dixon-Woods et al., 2004).

The complexity and plurality of methods in qualitative research can be attributed to its epistemic subjectivity, with the interpretation of data based on what is observed and how it was observed enriching the researcher's reflections and experiences of the social world (El Hussein et al., 2015; Hartman, 2015; Noble and Smith, 2015; Cypress, 2017). Barbour (2001) cautioned against using a checklist for determining rigor, asserting that improper use could reduce qualitative research to a set of technical procedures, thereby undermining the unique contribution of the systematic approach inherent to qualitative research.

Despite the diverse perspectives on achieving rigor in research presented in the literature, a universal framework is needed to provide students with clear guidance in research methods (Daniel, 2018). Such a generic framework acts as a decision-support tool, offering a comprehensive structure for novice qualitative researchers. Conducting meaningful qualitative research involves making numerous decisions, some of which are intricate and counterintuitive. This article presents the psychometric properties of the TACT framework (Daniel, 2018, 2019). TACT was designed to assist qualitative researchers in evaluating and determining the level of rigor in their studies. The framework comprises four indicators or dimensions: trustworthiness, auditability, credibility, and transferability (TACT).

The TACT framework

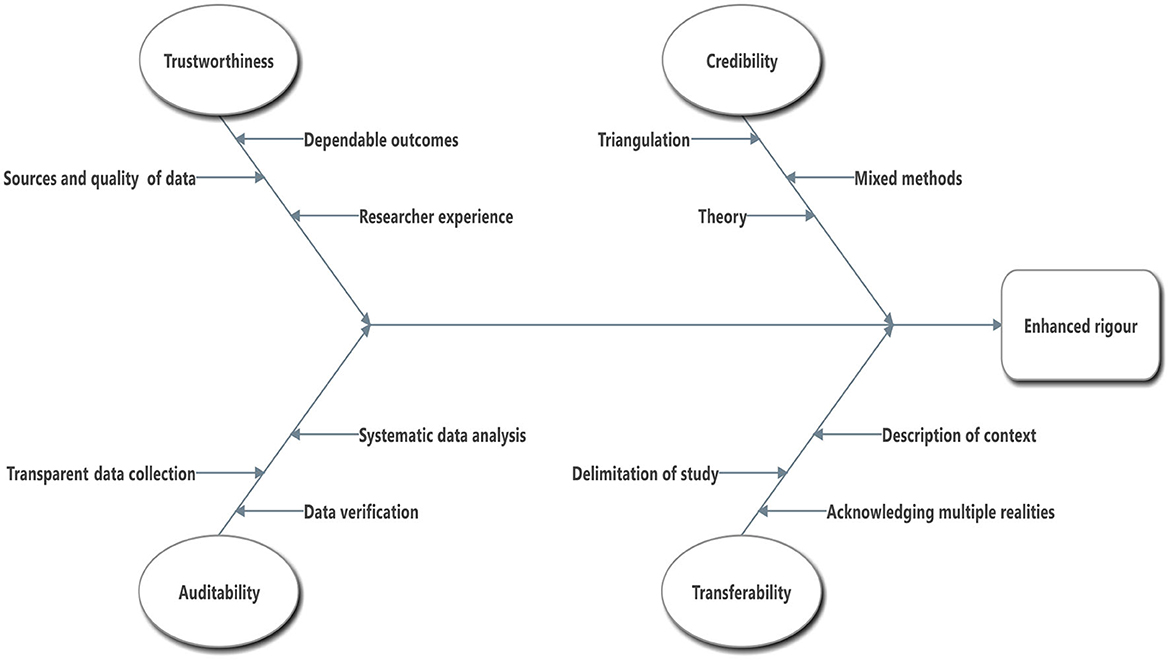

The TACT framework, which stands for trustworthiness, auditability, credibility, and transferability, offers researchers indicators to assess the rigor of qualitative research outcomes (Figure 1). These indicators were initially developed by Daniel (2018) based on the literature. The framework draws on other efforts to enhance the quality of qualitative research outcomes, such as studies by Morse et al. (2002), Koch (2006), and Johnson et al. (2020).

Figure 1. TACT model for assessing rigor in qualitative research (Daniel, 2018).

Trust is a fundamental aspect of ensuring the rigor of qualitative research. It has long been used to gauge the truthfulness of research findings. Guba (1981) presented a model of rigor in qualitative research centered on four aspects of trustworthiness: truth value, applicability, consistency, and neutrality. However, applying this model can be complex and time-consuming, requiring various strategies and situating findings within participants' views (Lietz et al., 2006; Sinkovics and Ghauri, 2008). Reflexivity, the researcher's self-awareness of their thoughts and actions in different contexts, can contribute to achieving trustworthiness (Meyrick, 2006).

Trustworthiness

Trust is a fundamental element for meaningfully interpreting qualitative research outcomes. It involves demonstrating relevance and confidence in research findings and establishing the authenticity of the results. Researchers achieve trustworthiness through a systematic and methodical approach that demonstrates consistency in data analysis and interpretation. Trust is also essential in maintaining the overall integrity of research outcomes. This integrity in the qualitative research process ensures trustworthiness without advocating for a singular approach or disregarding opposing viewpoints.

Auditability

Auditability refers to a systematic recording of the research process, commonly known as an “audit trail.” Researchers present a clear pathway of decisions made during the research, facilitating reflection on the process. Auditability includes external and internal aspects. External auditability allows end-users to review research findings, while internal auditability involves scrutinizing methodological integrity concerning research questions, design, analysis, and conclusions.

Credibility

Credibility is crucial in all qualitative research outcomes. It involves demonstrating the ultimate truth in research conclusions and validating the authenticity and reliability of the findings. Researchers achieve credibility by ensuring that research design, data collection methods, analysis, and reporting align coherently with the research outcomes. Triangulation, member checking, peer debriefing, and prolonged engagement with participants are strategies researchers can use to enhance credibility.

Transferability

Transferability enables researchers to apply research outcomes from one qualitative study to other similar settings or groups of people, offering valuable lessons. To achieve transferability, researchers must describe the study context and sample characteristics. Expert knowledge of participants and their understanding of the phenomenon under study are critical factors in the recruitment and selection of the sample. Detailed descriptions of real-life settings and participants' worldviews contribute to achieving transferability.

In summary, the TACT framework provides valuable indicators to assess the rigor of qualitative research outcomes, encompassing trustworthiness, auditability, credibility, and transferability. Researchers can use these indicators to enhance the quality and applicability of their qualitative research findings.

Purpose of the study

This study explores factors associated with achieving rigor in qualitative research studies, identifying the strategies needed to support them. The study is part of a large research programme looking at various ways to support the pedagogy of research methods.

The primary purpose of this study was to develop a comprehensive measure for assessing rigor in qualitative research studies and provide validity and reliability evidence to support its use for research purposes. We also wanted to examine the relationship between TACT constructs and some selected demographic factors. This study attempts to answer the following research questions:

1. How do we develop a measure of rigor in qualitative research?

2. To what extent is there evidence to support the factorial validity of the TACT (trustworthiness, auditability, credibility and transferability)?

3. To what extent are TACT factors associated with degree and stages in a postgraduate programme, academic division, and methodology experience?

Method and materials

Participants

We invited researchers to participate in this study through an online questionnaire that remained open for 2 years. The participants were 434 researchers at a research-intensive public university in New Zealand. Participants were enrolled in workshops on advanced topics in qualitative research methods taught by one of the authors. Of the participants, 49.5% (n = 215) were at PhD level, 26.3% (n = 114) were at Masters level, 1.8% (n = 8) were at diploma level, and 21.0% (n = 91) were staff members. Six participants did not report their degree programme. Representation by academic division was 30.9% humanities (n = 134), 26.0% health science (n = 113), 23.3% commerce (n = 101), 7.8% science (n = 34), and 9.4% interdisciplinary (n = 41). Eleven participants did not provide their academic division.

Concerning the status of research, 33.2% (n = 144) were planning research, 30.9% (n = 134) were writing up a thesis, and 27.4% (n = 119) were doing research. The remaining 7.8% (n = 34) were either making amendments to the thesis, awaiting graduation or not a student/staff member. Three participants did not report their stage in the programme. Regarding self-reported experience (feeling comfortable) with methodology, 30.6% reported qualitative, 27.0% mixed methods, 24.7% quantitative, 5.3% all three traditions, and 2.3% qualitative and quantitative; 3.2% did not provide information on their methodological experience.

Instrument development

The four dimensions of TACT were identified from various discourses of rigor in the literature on qualitative research methods. The verification of the TACT started with the development of a rating tool. The initial TACT scale contained 16 items to assess four dimensions of rigor in qualitative research studies: trustworthiness, auditability, credibility and transferability. Participants rated each item on a 5-point Likert scale, where 1 = very important, 2 = important, 3 = neutral, 4 = less important, and 5 = not important. For ease of interpretation, the items in the present study were reverse-coded so that higher scores indicate greater importance.
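The reverse-coding step can be illustrated with a minimal sketch. The function names and the response values below are hypothetical and are not taken from the study's data or analysis scripts; the sketch only assumes the 5-point scale described above.

```python
# Minimal sketch of reverse-coding a 5-point Likert item.
# As administered: 1 = very important ... 5 = not important.
# After recoding:  5 = very important ... 1 = not important.
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_code(response: int) -> int:
    """Flip a response so that higher scores mean greater importance."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response {response} is outside the 1-5 scale")
    return SCALE_MIN + SCALE_MAX - response


def subscale_mean(responses: list[int]) -> float:
    """Mean of the reverse-coded items that belong to one dimension."""
    recoded = [reverse_code(r) for r in responses]
    return sum(recoded) / len(recoded)


# Hypothetical raw answers of one respondent to four items of one dimension.
raw_items = [1, 2, 1, 3]
print([reverse_code(r) for r in raw_items])  # [5, 4, 5, 3]
print(subscale_mean(raw_items))              # 4.25
```

With this coding, a subscale mean above 4 corresponds to ratings between "important" and "very important".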

In this study, we examined the psychometric characteristics of the TACT scale using a Structural Equation Modeling (SEM) approach to provide validity and reliability evidence for its use with tertiary education researchers. The items in the TACT scale are shown in the Appendix.

Summary of statistical analyses

We conducted the data analyses in three stages. First, we checked the data for outliers and missing cases. Second, we adopted a Structural Equation Modeling (SEM) approach, focusing on Confirmatory Factor Analysis (CFA), to evaluate the factorial structure of the TACT scale using the Mplus 7 software (Muthén and Muthén, 2012). The hypothesized model was compared to alternative (competing) models. We then examined the convergent and discriminant validity of the scale.

In the third stage, we utilized multivariate analysis of variance (MANOVA), followed by univariate analyses, to explore potential significant mean differences in TACT components across participants' degree and status in the programme, academic divisions, and methodology experience. An alpha level of 0.05 was used as a guideline for determining the significant effects of variables. The p-values for the univariate analyses were corrected relative to the number of subscales (i.e., the p-value for significance = 0.05/number of subscales). We also reported effect size measures, specifically partial eta squared (η2), to indicate the magnitude of the significant differences. Small, medium and large effects correspond to η2 values of 0.01, 0.06, and 0.14, respectively (Richardson, 2011).
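The correction and the effect-size benchmarks described above can be sketched as follows. This is an illustration only: the function names and the sums of squares are hypothetical, and treating the three benchmarks as lower bounds of each category is an assumption made for this example.

```python
# Sketch of the Bonferroni-style alpha correction and of partial eta squared.
# All names and numeric inputs are illustrative assumptions.


def bonferroni_alpha(alpha: float, n_subscales: int) -> float:
    """Per-test significance threshold: alpha divided by the number of subscales."""
    return alpha / n_subscales


def partial_eta_squared(ss_effect: float, ss_error: float) -> float:
    """Partial eta squared = SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


def effect_size_label(eta_sq: float) -> str:
    """Label an effect using the 0.01 / 0.06 / 0.14 benchmarks as lower bounds."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


# Four TACT subscales give a corrected threshold of 0.05 / 4.
print(bonferroni_alpha(0.05, 4))  # 0.0125

# Hypothetical sums of squares for one univariate test.
eta_sq = partial_eta_squared(ss_effect=4.0, ss_error=46.0)
print(round(eta_sq, 2), effect_size_label(eta_sq))  # 0.08 medium
```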

The Weighted Least Squares Mean and Variance Adjusted (WLSMV) method was chosen as the Confirmatory Factor Analysis (CFA) estimator due to the data's ordered-categorical nature. Lubke and Muthén (2004) demonstrated that treating ordinal data as continuous can lead to inaccurate outcomes. WLSMV, a robust estimation technique, is recommended for modeling ordinal data (Flora and Curran, 2004; Brown, 2006). Robust estimation techniques are instrumental and effective when dealing with non-normal data distributions (Finney and DiStefano, 2006) because they apply a scaling factor to account for non-normality (Muthén and Muthén, 2006).

As suggested in the literature, we used several different goodness of fit indices to compare the different models and evaluate model-data fit (Cheung and Rensvold, 2002; Fan and Sivo, 2005, 2007). Each of these indices reflects a different aspect of model fit and may not be equally sensitive to different model conditions (Fan and Sivo, 2007). Therefore, it is essential to use multiple indices rather than relying on a single measure (Hair et al., 2010).

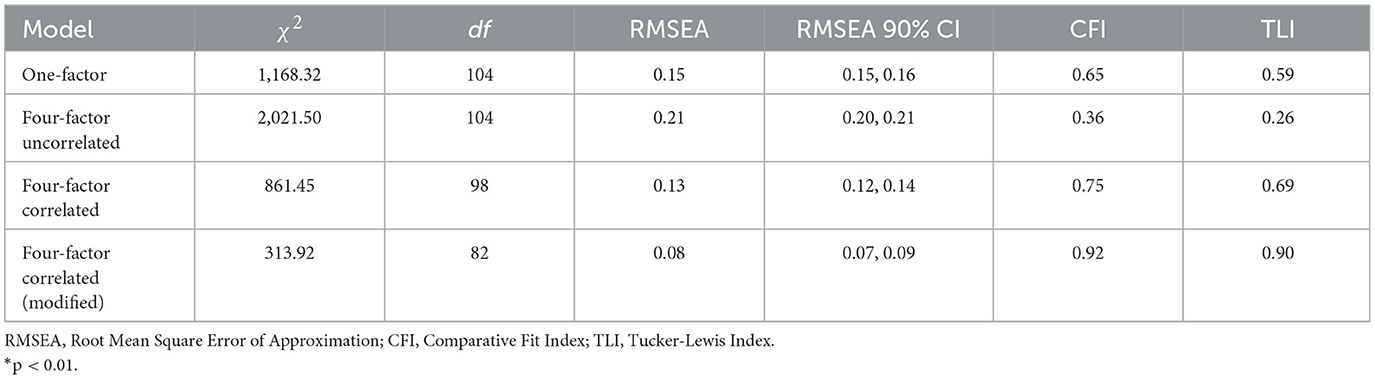

We evaluated model fit using the Root Mean Square Error of Approximation (RMSEA), the Comparative Fit Index (CFI), and the Tucker-Lewis index (TLI). We also reported the chi-square (χ2) values, but these were not used for model fit decisions due to their sensitivity to sample size, model complexity, and distribution of variables. Based on the literature, the criteria for “acceptable” or “good” fit (Browne and Cudeck, 1992; MacCallum et al., 1996; Hu and Bentler, 1998; Hair et al., 2010) included: a non-significant chi-square (χ2), RMSEA with values < 0.08 indicating an acceptable fit and values < 0.05 indicating a good fit, and CFI and TLI with values >0.90 being indicative of reasonable fit and values >0.95 indicating a good fit.
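As a rough illustration, the cut-offs above can be written as a small decision rule. Combining the three indices into one label by requiring all of them to pass is an assumption made for this sketch, not a procedure reported in the study, and the index values are invented.

```python
# Sketch of the RMSEA / CFI / TLI cut-offs as a decision rule.
# Requiring all three indices to pass is an illustrative assumption.


def classify_fit(rmsea: float, cfi: float, tli: float) -> str:
    """Return 'good', 'acceptable' or 'poor' from the three fit indices."""
    if rmsea < 0.05 and cfi > 0.95 and tli > 0.95:
        return "good"
    if rmsea < 0.08 and cfi > 0.90 and tli > 0.90:
        return "acceptable"
    return "poor"


# Hypothetical index values for three candidate models.
print(classify_fit(rmsea=0.04, cfi=0.97, tli=0.96))  # good
print(classify_fit(rmsea=0.07, cfi=0.93, tli=0.91))  # acceptable
print(classify_fit(rmsea=0.13, cfi=0.75, tli=0.69))  # poor
```

Because the inequalities are strict, a value reported to two decimals that sits exactly on a cut-off cannot be classified without the unrounded estimate.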

After assessing the model fit as part of factorial validation, we further examined item loadings, factor correlations and reliabilities to provide evidence for convergent and discriminant validity. The limitations of coefficient alpha (α) as a reliability estimate are well-reported in the literature (Sijtsma, 2009; Teo and Fan, 2013). Therefore, we calculated and reported McDonald's (1999) omega (ω) for each TACT dimension as a better reliability estimate.
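For readers unfamiliar with omega, the sketch below computes it from standardized loadings using the common formula for a congeneric one-factor model, ω = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). It assumes uncorrelated residuals, which is a simplification; the loadings are hypothetical and are not the values estimated in this study.

```python
# Sketch of McDonald's omega for one factor, assuming standardized loadings
# and uncorrelated residuals. The loadings below are invented.


def mcdonald_omega(loadings: list[float]) -> float:
    """omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances)."""
    common = sum(loadings) ** 2
    # With standardized indicators, each residual variance is 1 - loading^2.
    residual = sum(1.0 - lam ** 2 for lam in loadings)
    return common / (common + residual)


hypothetical_loadings = [0.6, 0.7, 0.8]
omega = mcdonald_omega(hypothetical_loadings)
print(round(omega, 3))  # 0.745
print(omega >= 0.70)    # True: meets the commonly used 0.70 level
```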

Results

Descriptive statistics

We did not identify any univariate outliers that affected the results. The proportion of missing cases for each TACT item was minimal, ranging from zero for most items to 2%. Rather than deleting these cases, we used the Expectation Maximization (EM) algorithm to impute the missing values. The means and standard deviations for the four factors of the TACT scale are summarized in Table 1.

Our results indicated that factor means ranged from 3.89 to 4.37, suggesting that most participants endorsed the statements as “important” or “very important.” The standard deviations ranged from 0.55 to 0.72, indicating that the dispersion of responses for each factor was somewhat similar. However, the item scores ranged from 1.00 to 5.00, showing that some researchers rated the statements as “not important” or “less important.” For example, 65 (15%) researchers surprisingly reported that “ensuring the outcomes of a qualitative research study can be verified by theory/literature” was not important or less important for them.

Confirmatory factor analysis (CFA)

To provide validity evidence for the internal factor structure of the TACT measure, we tested and compared the goodness-of-fit of different competing models as suggested in the literature (Noar, 2003; Strauss and Smith, 2009). The hypothesized four-factor correlated TACT model was compared to two other alternative competing models. The alternative models included: (a) a one-factor (unidimensional) model that assumed all manifest variables loaded on a single factor and (b) a four-factor uncorrelated (orthogonal) model that suggests all the factors in the model are unrelated.

Support for the one-factor model would mean that we are measuring a unidimensional construct and that researchers are not differentiating the factors that assess rigor in qualitative research. Evidence for the four-factor orthogonal model would indicate that TACT factors are distinct and independent. Support for the hypothesized four-factor correlated (oblique) model would suggest that researchers distinguish among four TACT factors that are related to each other. The goodness-of-fit measures of hypothesized and alternative models are summarized in Table 2.

As evident from Table 2, the results of the unidimensional and four-factor uncorrelated models revealed that these models did not represent the sample data sufficiently. The RMSEA, CFI, and TLI values did not meet the commonly accepted fit criteria. The fit of the hypothesized four-factor correlated model (RMSEA = 0.13; CFI = 0.75; TLI = 0.69) was not adequate either. To pinpoint the sources of misfit, we further examined factor loadings, residual matrices and modification indices. We found that the factor loading for the second trustworthiness item was relatively low (λ = 0.29).

A closer examination of the wording of this item, “Ensuring research outcomes conform to research's assumptions or a well-established theory or both,” revealed that it was the only double-barrelled item, which was probably cognitively challenging for respondents, so the item was removed from the scale. Examination of the modification indices suggested that adding residual covariances to the model would improve the fit. The largest modification indices were noted between items TW3 and TW4 and between items CR3 and CR4. Applying these modifications resulted in a significant improvement in model fit. The results revealed an acceptable model fit for the modified four-factor model (RMSEA = 0.08; CFI = 0.92; TLI = 0.90). Standardized factor loadings and reliabilities for the revised model are presented in Table 3.

Standardized factor loadings for the hypothesized model were significant and ranged from 0.50 to 0.82, supporting convergent validity. All of the items were strong indicators of the factors they were related to. The omega reliability estimates exceeded the recommended level (0.70). Factor correlations are presented in Table 4.

The correlations among the TACT factors ranged from 0.23 to 0.86, indicating the presence of discriminant validity evidence. The strongest correlations were observed between trustworthiness and auditability and between transferability and auditability. Additionally, we noted modest correlations among other pairs of factors. The results from CFA analyses and reliability estimates supported the validity evidence at both the item and construct levels of the TACT scale. Thus, the proposed research model's constructs are deemed suitable for further analyses.

Differences on TACT

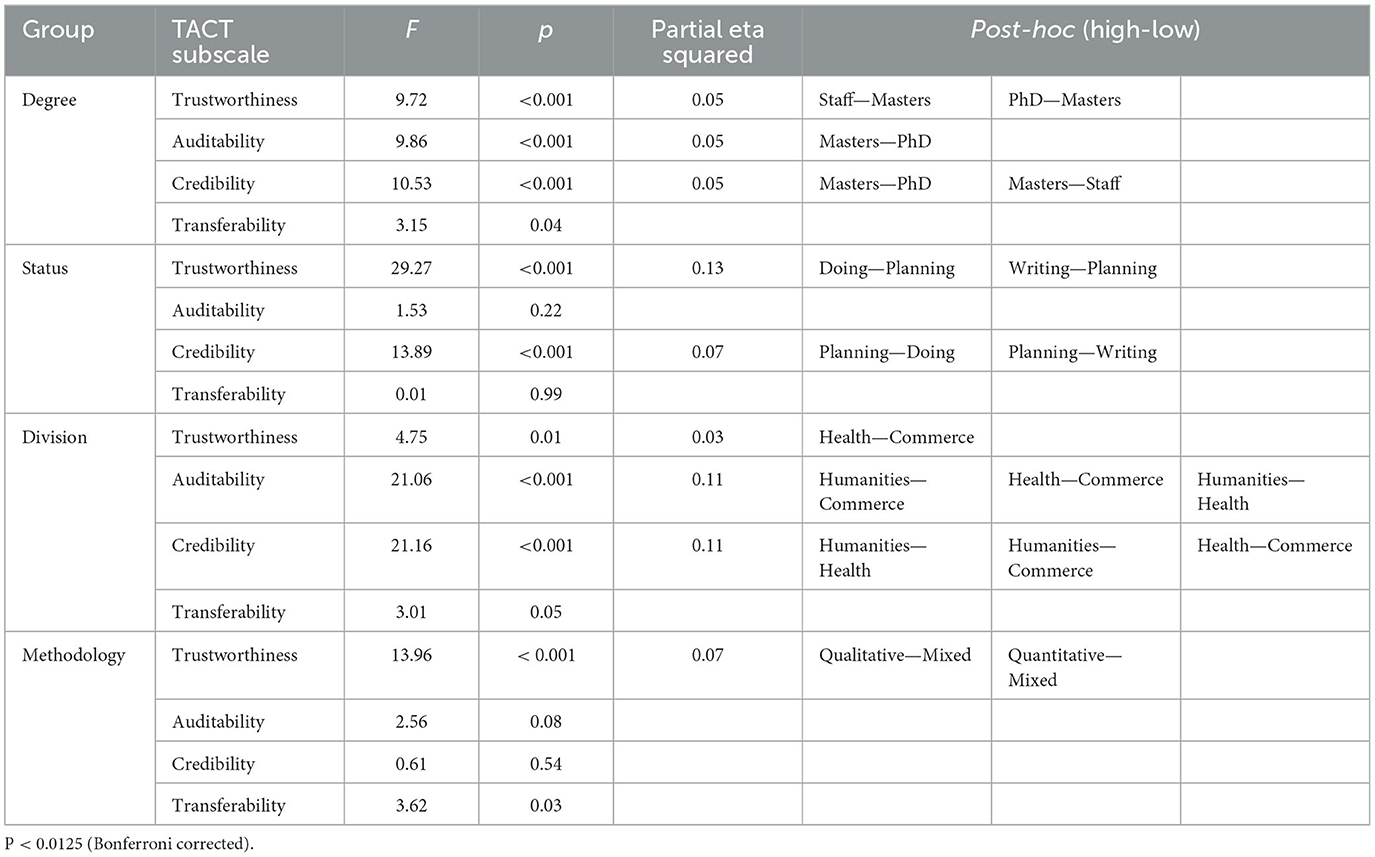

A multivariate analysis of variance (MANOVA) was employed to determine whether significant differences exist in TACT components with respect to researchers' degree and status in the programme, academic division, and methodology experience. Dependent variables were the researchers' mean scores on each TACT subscale. Independent variables were degree in the programme (masters, PhD, and staff), stage in the programme (planning research, doing research, and writing a thesis), academic division (commerce, humanities, and health sciences), and methodology experience (qualitative, quantitative, and mixed methods). We omitted groups with small sample sizes from the analyses. MANOVA results revealed significant differences (Wilks' Lambda, p < 0.001) in the dimensions of the TACT based on researchers' degree and status in the programme, academic division, and methodology experience. Further univariate and post-hoc comparisons (with Bonferroni correction) were conducted and are summarized in Table 5.

Degree differences

Significant variations were observed across the TACT subscales based on participants' degree affiliations. Noteworthy disparities were evident in the “Trustworthiness,” “Auditability,” and “Credibility” dimensions. Post-hoc comparisons indicated that PhD, Masters, and staff participants rated these factors differently, emphasizing the role of academic qualifications in shaping perceptions of research rigor.

Status distinctions

Exploring differences in research status revealed substantial discrepancies in the appraisal of “Trustworthiness” and “Credibility” subscales. Participants engaged in distinct research phases, encompassing “Doing,” “Planning,” and “Writing,” exhibited divergent evaluations of these dimensions. These findings illuminate the dynamic interplay between research activities and the perceived rigor of research endeavors.

Divisional variances

The academic division to which participants belonged yielded meaningful variations in the assessment of “Auditability” and “Credibility” dimensions. Participants affiliated with different academic domains demonstrated divergent ratings, underscoring the influence of disciplinary perspectives on appraisals of research rigor.

Methodological perspectives

Participants' research methodologies engendered significant disparities in evaluating the “Trustworthiness” and “Transferability” subscales. Notable differences were observed between qualitative, mixed, and quantitative research practitioners. These findings reflect the nuanced interrelationships between research paradigms and perceptions of research rigor.

Discussion and conclusion

The most challenging aspects of conducting high-quality research include making sense of voluminous data, imposing order, structure and meaning, identifying helpful information, making it logical, sensible and meaningful, and assessing the quality of qualitative research outcomes. In response to these challenges, this study aimed to develop a comprehensive measure to assess rigor in qualitative research studies and provide validity evidence to support its use for research purposes. By doing so, this research contributes to the advancement of rigor within the qualitative research domain. Notably, employing confirmatory factor analysis (CFA), the study establishes empirical support for the multidimensional structure of the TACT, which comprises four factors: trustworthiness, auditability, credibility, and transferability.

The magnitude of the factor loadings demonstrated that items were strong indicators of their respective dimensions within the TACT framework. The observed correlations among factors were in the expected direction, providing further validity evidence. The computed omega reliability estimates surpassed the established threshold of 0.70, indicative of the robust internal consistency of the measured constructs. These findings collectively endorse the TACT as a valuable measure for assessing rigor in qualitative research. To our knowledge, this is the first analytical tool developed explicitly to measure the level of rigor in qualitative research.

The MANOVA findings shed light on the intricate interconnectedness among academic credentials, research status, academic disciplines, and research methodologies, collectively enriching the diverse spectrum of perspectives regarding research rigor.

The TACT framework equips researchers with informative indicators to navigate the assessment of rigor. While not rigid, these indicators serve as theoretically and empirically grounded guidelines. Qualitative research methods are used to explore complex social phenomena. The lack of standardization in these methods and the challenge of replicating findings have led to growing criticism (Barbour, 2001; Filep et al., 2018). Notably, these critiques have described qualitative methods as biased and limited in generalizability (Cope, 2014; Hayashi et al., 2019).

Implications for pedagogy and research

Teaching rigor in qualitative research presents challenges partly because rigor, like many other social phenomena in qualitative research, is highly abstract and constructed, with meanings that can differ from one person to another. Because the constituent dimensions of rigor have been identified and validated, teachers of research methods can provide students with concrete elements that they can critically inspect and discuss, and can emphasize the importance of maintaining rigor throughout the research process. Teachers can use the TACT framework to show students how to integrate rigor when framing research questions, designing studies, collecting and analyzing data, and reporting findings clearly and precisely. The framework also highlights the importance of reflexivity in qualitative research by providing students with clear indicators they should think critically about in their research design and decision-making. This active reflection will help students justify their approach at each research stage.

Introducing the TACT framework as an anchor of rigor in qualitative research can lead to higher quality and credibility. Researchers well-versed in rigorous qualitative methods are better equipped to produce trustworthy findings that contribute meaningfully to their field of study. By upholding rigorous research practices, the validity and reliability of qualitative research can be enhanced, thus bolstering the confidence of scholars, policymakers, and practitioners in the outcomes. Additionally, a focus on rigor in qualitative research can pave the way for more transparent and replicable research practices, fostering a culture of accountability and openness within the research community. This, in turn, promotes a positive impact on advancing knowledge and addressing complex social and cultural phenomena robustly and meaningfully.

Suggestions for future research

The four dimensions of rigor in the TACT framework are not obligatory benchmarks of rigor but rather serve as important indicators that guide researchers in enhancing the quality of research outcomes. These dimensions encourage researchers to consider thoughtful strategies to enhance research quality by addressing one or more of these facets. Further validation and replication studies involving the TACT measure remain necessary to substantiate its stability, reliability and construct validity. We believe conducting measurement invariance analyses across various settings or time points holds significant potential for fruitful research. Researchers may also be interested in examining the extent to which scores on the TACT measure are associated with gender, ethnicity or the age of the researchers.

Although the four-factor model provided the best fit, we acknowledge that there remains potential for enhancing the psychometric properties of the TACT scale. In this study, we tried to model and interpret a second-order (higher-order) factor model with the four scale factors subordinating to a single second-order factor. However, we were unable to assess the goodness-of-fit of this model and compare it with other models due to the covariance matrix not being positive definite, resulting in an inadmissible solution. Thus, we encourage researchers to consider and test a higher-order factor model which could potentially encapsulate a broader and more overarching conceptualization of the rigor construct.
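To illustrate what a non-positive-definite matrix means in practice, the sketch below tests a symmetric matrix by attempting a Cholesky factorization, which succeeds only when the matrix is positive definite. The two matrices are invented for illustration; the source does not report which estimated matrix was affected or its values.

```python
import math

# Sketch: check positive definiteness of a symmetric matrix via Cholesky.
# Both example matrices below are hypothetical.


def is_positive_definite(matrix: list[list[float]]) -> bool:
    """Return True if a Cholesky factor exists, i.e. every pivot is positive."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - partial
                if pivot <= 0.0:
                    return False  # a non-positive pivot rules out positive definiteness
                lower[i][j] = math.sqrt(pivot)
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    return True


# A coherent set of correlations among three hypothetical factors.
admissible = [[1.0, 0.5, 0.3],
              [0.5, 1.0, 0.4],
              [0.3, 0.4, 1.0]]

# Mutually inconsistent correlations: two very high, one near zero.
inadmissible = [[1.0, 0.9, 0.1],
                [0.9, 1.0, 0.9],
                [0.1, 0.9, 1.0]]

print(is_positive_definite(admissible))    # True
print(is_positive_definite(inadmissible))  # False
```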

In the future, we will explore whether the TACT framework can be applied to domains of qualitative research that often do not fall neatly into a logical sequence of steps, such as researching the unconscious and Indigenous research. Central to this exploration is applying TACT's key elements, such as Auditability, to test whether integrating this element into every stage of the research process, including problem definition, design, methods, and outcomes, can improve the quality of research findings and foster more profound understanding and constructive dialogue among scholars and stakeholders.

In the context of Indigenous Research Methods (IRM) with a focus on decolonization, it would be worthwhile to use the TACT framework in the entire research cycle and analyze whether aspects such as Trustworthiness and Auditability can empower historically marginalized communities to understand and trust the process and outcome of the research. We believe that applying TACT across various stages of research, from question formulation to presentation, encourages the inclusion of colonized communities' perspectives, making them partners in the research instead of subjects being researched and exploited. It is possible that such an approach shifts from traditional, top-down methods toward a more collaborative, culturally sensitive, and transparent model. Furthermore, the psychometric validation of the TACT tool suggests that it may be a suitable guide for researchers working within decolonized epistemologies and ontologies, which could foster a paradigm shift toward more inclusive, respectful research methodologies.

Limitations

A notable limitation of this study is the use of self-report instruments for data collection, which introduces the possibility of response bias. A second limitation concerns the sample composition. Because we used convenience and voluntary sampling, our sample may not represent the entire population. Consequently, we encourage future investigations to administer the TACT measure to larger and more representative samples to enhance the generalizability of the findings.

We acknowledge that the TACT framework presented in this article is quantitatively oriented and structured, which may limit its ability to adequately capture the intricacies of areas such as researching the unconscious. Research into the unconscious is predominantly qualitative and fluid, marked by complexities that do not fit neatly within the rigid parameters of quantitative methods or the logical steps of qualitative methods. Structured, numerically oriented frameworks such as TACT may not grasp the full depth and breadth of the unconscious, an area that frequently defies conventional structured or logical qualitative research approaches. However, TACT can still help researchers who study the unconscious to document their procedures, approaches, and rationales at all stages of the research process, and they can use it as a reflective tool.

Further, it is worth noting that researching the unconscious is influenced by myriad factors that are generally not quantifiable. These include cultural influences, psychological dynamics, and social elements, which collectively weave a complex tapestry that defines the unconscious. Understanding this aspect of human cognition requires an approach capable of navigating these diverse and often subtle influences, which may not lend themselves to straightforward quantitative measurement or strict structural frameworks. This calls for a more flexible and nuanced method of studying the unconscious, one that appreciates the rich and varied dimensions contributing to its formation.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of Otago Human Ethics. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

BD: Conceptualization, Data curation, Methodology, Validation, Writing – original draft, Writing – review & editing. MA: Formal analysis, Methodology, Project administration, Writing – original draft, Writing – review & editing. SC: Investigation, Methodology, Resources, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frma.2023.1276446/full#supplementary-material

References

Barbour, R. S. (2001). Checklists for improving rigour in qualitative research: a case of the tail wagging the dog? Br. Med. J. 322, 1115–1117. doi: 10.1136/bmj.322.7294.1115

Brown, T. (2006). Confirmatory Factor Analysis for Applied Research. Guildford; New York, NY: Guildford Press.

Browne, M. W., and Cudeck, R. (1992). Alternative ways of assessing model fit. Sociol. Methods Res. 21, 230–258. doi: 10.1177/0049124192021002005

Camfield, L. (2019). Rigour and ethics in the world of big-team qualitative data: experiences from research in international development. Am. Behav. Sci. 63, 604–621. doi: 10.1177/0002764218784636

Cheung, G. W., and Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Struct. Eq. Model. 9, 233–255. doi: 10.1207/S15328007SEM0902_5

Cope, D. G. (2014). Methods and meanings: credibility and trustworthiness of qualitative research. Oncol. Nurs. For. 40, 89–91. doi: 10.1188/14.ONF.89-91

Cypress, B. S. (2017). Rigour or reliability and validity in qualitative research: perspectives, strategies, reconceptualisation, and recommendations. Dimens. Crit. Care Nurs. 36, 253–263. doi: 10.1097/DCC.0000000000000253

Daniel, B. K. (2018). Empirical verification of the “TACT” framework for teaching rigour in qualitative research methodology. Qual. Res. J. 18, 262–275. doi: 10.1108/QRJ-D-17-00012

Daniel, K. (2019). Using the TACT framework to learn the principles of rigour in qualitative research. Electr. J. Bus. Res. Methods 17, 118–129. doi: 10.34190/JBRM.17.3.002

Dixon-Woods, M., Shaw, R. L., Agarwal, S., and Smith, J. A. (2004). The problem of appraising qualitative research. Br. Med. J. Qual. Saf. 13, 223–225. doi: 10.1136/qshc.2003.008714

El Hussein, M., Jakubec, S. L., and Osuji, J. (2015). Assessing the FACTS: a mnemonic for teaching and learning the rapid assessment of rigour in qualitative research studies. Qual. Rep. 20, 2237. doi: 10.46743/2160-3715/2015.2237

Fan, X., and Sivo, S. A. (2005). Sensitivity of fit indexes to misspecified structural or measurement model components: rationale of two-index strategy revisited. Struct. Eq. Model. 12, 343–367. doi: 10.1207/s15328007sem1203_1

Fan, X., and Sivo, S. A. (2007). Sensitivity of fit indices to model misspecification and model types. Multivar. Behav. Res. 42, 509–529. doi: 10.1080/00273170701382864

Filep, C. V., Turner, S., Eidse, N., Thompson-Fawcett, M., and Fitzsimons, S. (2018). Advancing rigour in solicited diary research. Qual. Res. 18, 451–470. doi: 10.1177/1468794117728411

Finney, S. J., and DiStefano, C. (2006). “Nonnormal and categorical data in structural equation modelling,” in Structural Equation Modelling: A Second Course, eds G. R. Hancock and R. O. Mueller (Greenwich, CT: Information Age), 269–314.

Flora, D. B., and Curran, P. J. (2004). An empirical evaluation of alternative methods of estimation for confirmatory factor analysis with ordinal data. Psychol. Methods 9, 466–491. doi: 10.1037/1082-989X.9.4.466

Forero, R., Nahidi, S., De Costa, J., Mohsin, M., Fitzgerald, G., Gibson, N., et al. (2018). Application of four-dimension criteria to assess the rigour of qualitative research in emergency medicine. BMC Health Serv. Res. 18, 120. doi: 10.1186/s12913-018-2915-2

Guba, E. G. (1981). Criteria for assessing the trustworthiness of naturalistic inquiries. Educ. Commun. Technol. J. 29, 75–91. doi: 10.1007/BF02766777

Guba, E. G., and Lincoln, Y. S. (1981). Effective Evaluation: Improving the Usefulness of Evaluation Results Through Responsive and Naturalistic Approaches. San Francisco, CA: Jossey-Bass.

Hair, J. F., Black, W. C., Babin, B. J., and Anderson, R. E. (2010). Multivariate Data Analysis, 7th Edn. Englewood Cliffs: Prentice-Hall.

Hartman, T. (2015). 'Strong multiplicity': an interpretive lens in the analysis of qualitative interview narratives. Qual. Res. 15, 22–38. doi: 10.1177/1468794113509259

Hayashi, Jr. P., Abib, G., and Hoppen, N. (2019). Validity in qualitative research: a processual approach. Qual. Rep. 24, 98–112. doi: 10.46743/2160-3715/2019.3443

Hu, L., and Bentler, P. M. (1998). Fit indices in covariance structure modelling: sensitivity to under parameterised model misspecification. Psychol. Methods 3, 424–453. doi: 10.1037/1082-989X.3.4.424

Johnson, J. L., Adkins, D., and Chauvin, S. (2020). A review of the quality indicators of rigour in qualitative research. Am. J. Pharmaceut. Educ. 84, ajpe7120. doi: 10.5688/ajpe7120

Koch, T. (2006). Establishing rigour in Qualitative Research: the decision trail. J. Adv. Nurs. 53, 91–103. doi: 10.1111/j.1365-2648.2006.03681.x

Lietz, C. A., Langer, C. L., and Furman, R. (2006). Establishing trustworthiness in qualitative research in social work implications from a study regarding spirituality. Qual. Soc. Work 5, 441–458. doi: 10.1177/1473325006070288

Lubke, G. H., and Muthén, B. O. (2004). Applying multigroup confirmatory factor models for continuous outcomes to Likert scale data complicates meaningful group comparisons. Struct. Eq. Model. 11, 514–534. doi: 10.1207/s15328007sem1104_2

MacCallum, R. C., Browne, M. W., and Sugawara, H. M. (1996). Power analysis and determination of sample size for covariance structure modelling. Psychol. Methods 1, 130. doi: 10.1037/1082-989X.1.2.130

Meyrick, J. (2006). What is good qualitative research? A first step towards a comprehensive approach to judging rigour/quality. J. Health Psychol. 11, 799–808. doi: 10.1177/1359105306066643

Morse, J. M. (2015). Critical analysis of strategies for determining rigour in qualitative inquiry. Qual. Health Res. 25, 1212–1222. doi: 10.1177/1049732315588501

Morse, J. M., Barrett, M., Mayan, M., Olson, K., and Spiers, J. (2002). Verification strategies for establishing reliability and validity in qualitative research. Int. J. Qual. Methods 1, 13–22. doi: 10.1177/160940690200100202

Muthén, L. K., and Muthén, B. O. (2006). Mplus User's Guide, 4th Edn. Los Angeles, CA: Muthén & Muthén.

Muthén, L. K., and Muthén, B. O. (2012). Mplus: Statistical Analysis With Latent Variables: User's Guide. Los Angeles, CA: Muthén & Muthén.

Noar, S. M. (2003). The role of structural equation modelling in scale development. Struct. Eq. Model. 10, 622–647. doi: 10.1207/S15328007SEM1004_8

Noble, H., and Smith, J. (2015). Issues of validity and reliability in qualitative research. Evid. Based Nurs. 18, 34–35. doi: 10.1136/eb-2015-102054

Richardson, J. T. (2011). Eta squared, and partial eta squared as measures of effect size in educational research. Educ. Res. Rev. 6, 135–147. doi: 10.1016/j.edurev.2010.12.001

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Educ. Inform. 22, 63–75. doi: 10.3233/EFI-2004-22201

Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's alpha. Psychometrika 74, 107–120. doi: 10.1007/s11336-008-9101-0

Sinkovics, R. R., and Ghauri, P. N. (2008). Enhancing the trustworthiness of qualitative research in international business. Manag. Int. Rev. 48, 689–714. doi: 10.1007/s11575-008-0103-z

Strauss, M. E., and Smith, G. T. (2009). Construct validity: advances in theory and methodology. Ann. Rev. Clin. Psychol. 5, 1–25. doi: 10.1146/annurev.clinpsy.032408.153639

Teo, T., and Fan, X. (2013). Coefficient alpha and beyond: issues and alternatives for educational research. Asia-Pacific Educ. Research. 22, 209–213. doi: 10.1007/s40299-013-0075-z

Tong, A., and Dew, M. A. (2016). Qualitative research in transplantation: ensuring relevance and rigour. Transplantation 100, 710–712. doi: 10.1097/TP.0000000000001117

Williams, V., Boylan, A. M., and Nunan, D. (2020). Critical appraisal of qualitative research: necessity, partialities and the issue of bias. Br. Med. J. Evid. Based Med. 25, 9–11. doi: 10.1136/bmjebm-2018-111132

Keywords: rigor, qualitative research, validity, confirmatory factor analysis (CFA), TACT

Citation: Daniel BK, Asil M and Carr S (2024) Psychometric properties of the TACT framework—Determining rigor in qualitative research. Front. Res. Metr. Anal. 8:1276446. doi: 10.3389/frma.2023.1276446

Received: 12 August 2023; Accepted: 13 December 2023; Published: 08 January 2024.

Edited by:

Maximus Monaheng Sefotho, University of Johannesburg, South Africa

Reviewed by:

Neo Pule, University of Johannesburg, South Africa

Mandu Selepe, South African College of Applied Psychology, South Africa

Copyright © 2024 Daniel, Asil and Carr. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ben Kei Daniel, ben.daniel@otago.ac.nz
