Robotic Assembly of Timber Structures in a Human-Robot Collaboration Setup

- 1The Maersk McKinney Moller Institute, SDU Robotics, University of Southern Denmark, Odense, Denmark

- 2CREATE Section for Civil and Architectural Engineering, University of Southern Denmark, Odense, Denmark

The construction sector is investigating wood as a highly sustainable material for fabrication of architectural elements. Several researchers in the field of construction are currently designing novel timber structures as well as novel solutions for fabricating such structures, i.e. robot technologies which allow for automation of a domain dominated by skilled craftsman. In this paper, we present a framework for closing the loop between the design and robotic assembly of timber structures. On one hand, we illustrate an extended automation process that incorporates learning by demonstration to learn and execute a complex assembly of an interlocking wooden joint. On the other hand, we describe a design case study that builds upon the specificity of this process, to achieve new designs of construction elements, which were previously only possible to be assembled by skilled craftsmen. The paper provides an overview of a process with different levels of focus, from the integration of a digital twin to timber joint design and the robotic assembly execution, to the development of a flexible robotic setup and novel assembly procedures for dealing with the complexity of the designed timber joints. We discuss synergistic results on both robotic and construction design innovation, with an outlook on future developments.

1 Introduction

In recent years, the field of construction robotics has gained in popularity, primarily because of a shifting mindset towards a sustainable, reusable and carbon-efficient society. One of the leading construction methods in this domain is robotic timber construction which focuses on sustainable design, manufacturing and assembly processes. The widespread diffusion of computational workflows to link design and simulation to real-world assembly operations has opened opportunities for efficient non-standard timber construction and increased the overall level of automation. With the progressive diffusion of robotic and automation technologies, the construction domain can also harness a higher level of automation in their production processes, essential to meet increasing demands for material and carbon efficiency and relieving labor-intensive and repetitive tasks. While the robotic fabrication of timber structures with Computer Numerical Control (CNC) technology relies on consolidated methods (Yuan et al., 2016), the automated assembly of wood structures is still a challenging task, especially in development of highly efficient structures that require custom design and assembly strategies. Working with a non-homogeneous material e.g., wood presents various challenges for robotic-driven assembly processes. Piece-specific material features, weather-related shape variations, geometrical imprecisions and occurring tolerances shall be carefully considered in the overall process. This paper presents a new framework for tackling such challenges in the context of the robot-based collaborative assembly of timber truss structures connected through novel lap-joint connections that interlock elements through complex assembly motions. These elements guarantee mechanical capacity through tight insertions that, however, can be difficult to achieve with common industrial robots and assembly strategies. Therefore, we introduce the concept of Learning by Demonstration (LbD) strategies based on Dynamic Movement Primitives (DMP) to teach robots how to perform such operations, thus omitting conventional robot programming, which for task like this is very complex. The first part of the paper introduces a robot-friendly design case study for a structural truss based on discrete wood elements, presenting innovative joinery features that allow assembly and disassembly through complex assembly motions. The second part describes the underlying digital twin and the pipeline connecting the phases of design, task planning and assembly. Lastly, adaptation procedures based on the task dynamics which arise during the assembly execution are presented. The effectiveness of the framework will be shown on a timber structure assembly executed by two collaborative robots.

The main contributions of the paper are

• a digital framework, linking the design and simulation of timber trusses with the robot based assembly procedures through a digital twin,

• design of novel interlocking timber truss joints, taking into account the capabilities and limitations of a collaborative robot,

• robotic LbD assembly method with adaptation capabilities, for execution of the demonstrated motion under various workspace conditions.

2 State of the Art

Extensive research has been conducted internationally with a particular effort on enhancing the robotic assembly of timber structures (Stumm et al., 2018; Thoma et al., 2019; Helm et al., 2016; Eversmann, 2017; Søndergaard et al., 2016; Naboni et al., 2021; Kunic et al., 2021b). Recent works have been looking into robotic assembly strategies for structures that rely on friction-based wood/wood connections to achieve structural strength through tight-fitting. While these techniques are found in historical traditional construction methods worldwide (Zwerger, 2015), they have been poorly implemented in current construction practices in favor of more straightforward techniques where steel plates are added to fixate the wooden elements (Leśko, 2016). The process is materially inefficient and presents strong geometrical limitations that compromise the effective construction possibilities. Advancements in CNC technologies and automation give new possibilities for fast and efficient fabrication of traditionally established wood-wood joinery, which used to be time consuming and required a high level of dexterity from the woodworking craftsmen. Experimental structures have been constructed with the use of interlocking half-lap joints to connect straight timber beams (Chai et al., 2019); integrative finger joints for the construction of plate-based (Krieg et al., 2015) and folded (Robeller et al., 2017) structural shells; interlocking timber tiles shaped like a puzzle, held by tension and gravity, to form structural arches (Kuma and Associates, 2020). The assembly of these structures primarily relies on manual work, as the tight interlocking of such connections requires complex robot tool path planning and advanced force control to be realized.

In the existing literature, we can find three main approaches to the problem of tight fit wood assembly. The first one relies on force/torque monitoring and poses data collection as seen in (Stumm et al., 2018; Stumm and Brell-Çokcan, 2019) and in the case of wood/wood lap joints assembly for spatial frame structures thought reinforcement learning methods (Apolinarska et al., 2021). The second method relies on computer vision to provide the necessary localization of the wooden elements and validation of the assembly operations, combined with geometrical adaptations of joinery elements to gain positioning precision during the assembly of reversible discrete structures (Kunic et al., 2021a).

Similarly, plate structures with multiple through-tenon joints have been automatically assembled, relying on fiducial markers and chamfered geometries that guide linear assembly motions (Rogeau et al., 2020). The third method is based on custom mechanical devices to apply additional clamping forces in the assembly process. Leung et al. (2021) utilizes custom-built remote-controlled clamps to apply large assembly forces and correct misalignment’s between wooden elements. At the same time Robeller et al. (2017) introduced a custom-built end-effector for vibration-assisted assembly of through-tenons joints, where the assembly forces are monitored with a load cell. Koerner-Al-Rawi et al. (2020) utilized two coordinated industrial robotic arms to assemble wood lattices through rotation and locking, building upon traditional Japanese Chidori joints.

In the work mentioned before, conventional industrial robots were utilized in order to automate the timber assembly tasks. In recent years we can see a shifting trend where collaborative robots are being utilized for collaborative tasks (Kunic et al., 2021a) in timber construction, where the workspace is shared between the human and the robot. Those systems follow the idea of the Industry 4.0 (Bragança et al., 2019), where the industrial systems are linked with the cloud, are able to be programmed with service/skill based programming structures (Schou et al., 2018) and enable interaction with agents, such as digital twins in a working environment (Malik and Brem, 2021). Furthermore, new manufacturing trends, focusing on efficiency, user friendliness and flexibility of the manufacturing process were introduced (Gašpar et al., 2020), replacing the need for specialized automation equipment, which governed the production processes in the past. Furthermore, the newly introduced automation technologies paved the way for methods such as LbD (Billard et al., 2008) and interaction control with the environment, thanks to the integrated sensors and controllers of the automation systems.

For a standard LbDscenario, robot trajectories describing the task are recorded (Deniša and Ude, 2015) by a human operator. While this information is adequate for robot tasks where the robot is not in contact with the human or the environment, for in-contact tasks e.g., assembly (Abu-Dakka et al., 2015) or polishing (Gams et al., 2013), dynamic data arising during the demonstration and execution has to be considered.

In order to execute tasks in contact with the environment, the execution framework has to take into account also the adaptability and generalizability aspect of the robot motion. Therefore, in many publications, demonstrated robot data is represented with Dynamic Movement Primitives (Ijspeert et al., 2013; Ude et al., 2014). With this framework, kinematic and dynamic robot trajectories and sensor readings can be represented in unified way, and can easily be adapted to a new situation with the help of modulation and time scaling. Furthermore, the robot data can be reused for synthesizing new movements (Kramberger et al., 2017; Lončarević et al., 2021) from a database of pre-recorded movements.

For in-contact tasks, where uncertainties e.g., manufacturing tolerances and material inconsistency, play a major factor, the before mentioned framework can efficiently be exploited, when coupled with a force control strategy as shown in Rozo et al. (2013), Koropouli et al. (2012), and Kormushev et al. (2011). Moreover, adaptation can also be achieved by changing the impedance of the robot, depending on the requirements of the task. By changing the stiffness parameters of the impedance controller, the interaction forces can be adjusted between the robot and the environment (Buchli et al., 2011). Pastor et al. (2011) introduced a method for real-time adaptation of the demonstrated robot motion depending on the measured force/torque data. The authors present an adaptive controller for learning and adaptation of demonstrated motion, where measured and learned force/torque feedback was utilized for in-contact robot tasks. Furthermore, a review of online adaptation of impedance control parameters for human robot interaction (Müller et al., 2018) and in-contact execution tasks are presented in the work of Abu-Dakka and Saveriano (2020).

3 Case - Study of Digital Design and Assembly of Complex Timber Truss Structures

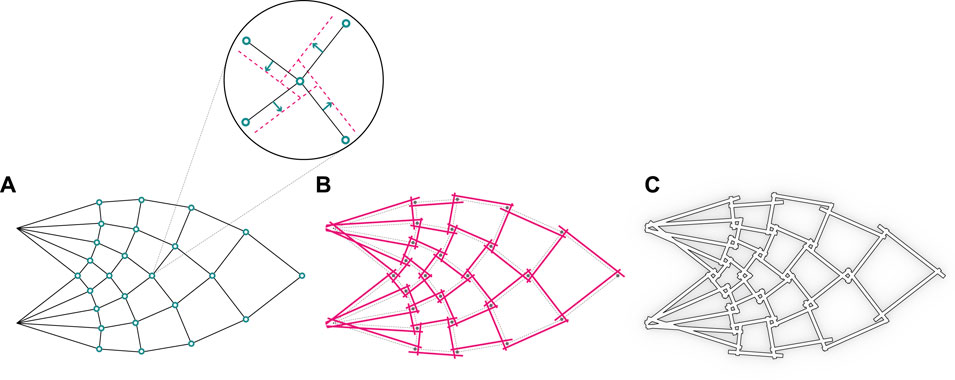

The urge to build more sustainable and circularity oriented in combination with modern computational design tools opens new perspectives for the construction industry. Driven by such motivations, we introduce a concept for reciprocal timber truss structures featuring innovative cross-lap interlocking joints. The presented design method promotes material and structural efficiency while proposing robot-friendly and assembly-aware features. The global structural design method is inspired by Antony Michell’s famed theoretical study (Michell, 1904) on the optimal truss structures that use a minimal amount of material while providing maximum structural capacity. In particular, we focus on the case of a cantilevered element with a vertical point load at the full span length. A frame based on quad elements is generated following ideal load paths (Figure 1).

FIGURE 1. The process of structural design inspired by Michell’s optimal truss: (A) Michell’s optimal truss, (B) Revised structure with reciprocal connections, (C) The final structure made of timber studs.

Our approach revisits such a structural solution, shifting from a typical strut-and-pin system to reciprocal connections (Larsen, 2007) based on interlocking cross-lap joints. This method enables timber-timber joinery without the need for additional steel fasteners while still providing structural integrity.

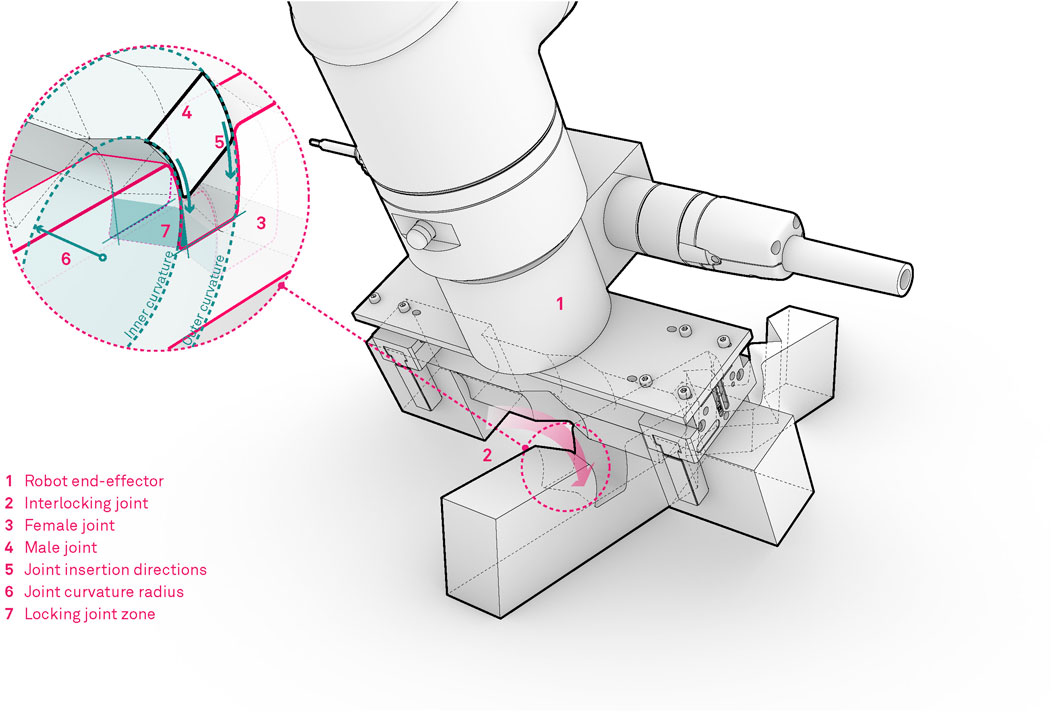

The cross-lap joint is here re-invented and designed with three-dimensional interlocking features derived from an idealized assembly trajectory that locks into position two or more wood studs through spatial translation and rotation. An initial inclined angle is followed by a rotational motion as shown in Figure 2, leaving no margin for the studs to be moved or removed unless the reversed trajectory is applied. The filleted edges and semi-circular cross-section of the joint ensure a smooth robotic positioning and a tight interlocking in the joint. The wood studs are placed along the compression and tension elements from Michell’s truss. To manufacture such a joint, CNC milling has to be adopted in order to ensure the described properties.

4 Methodology

In this section, the relevant methods outlining the digital robotic timber assembly framework, will be presented.

4.1 Digital Design Framework for Robot-Based Assembly

In this work computational design algorithms are developed to manage the global and local design parameters and transform these into robot targets and plan assembly trajectories. A bi-directional communication between the computer design environment and the physical setup is established, and constant updates on the overall assembly process and specific design targets are possible due to feedback from the construction procedures. The process is further aided by the human demonstration method, which allows for contact rich assembly skills to be transferred to the robot. Following Cimino et al. (Cimino et al., 2019), we apply digital twins to digitally mirror the state of the physical entities (Kritzinger et al., 2018) (i.e. timber structures and robot technologies) in order to support the analysis, process planning and operation monitoring of the setup.

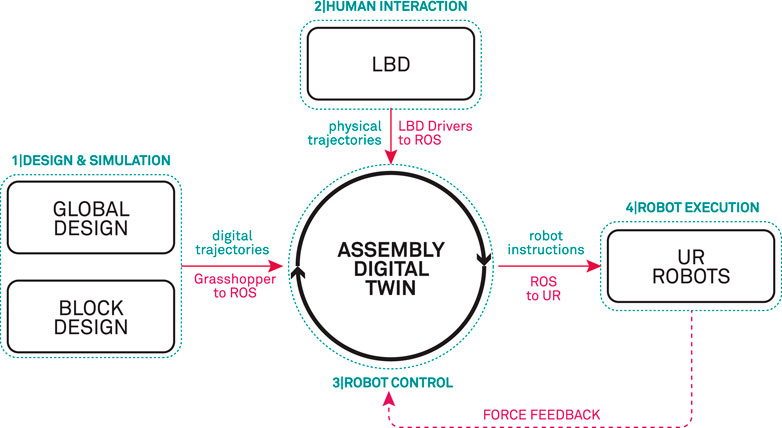

The overall digital twin robotic assembly framework (outlined in Figure 3) is organized in the following phases:

• In phase 1 – Design & Simulation of wooden blocks is implemented in Grasshopper (Rutten and McNeel, 2007) which represents a visual algorithmic editor plugin for Rhinoceros1, commonly used in the architectural design industry. In this stage the 3D model of the global truss structure is designed and stored. Additionally, the global structure contains the information of how each specific 3D modeled timber block is utilized in the global structure. This data is directly linked to a parametric structural model that can autonomously generate such data for different design cases. For more information on the parametric design of timber structures we direct the reader to our previous work Naboni et al. (2021). On this basis, geometric data is utilized to plan and simulate robot trajectories from the material stock feed to the location of insertion above a specific joint connection.

• In phase 2 – Human demonstration of the assembly task is recorded and interpreted. The demonstrated data represents a coordinated robot movement by physically grabbing and guiding the robot along the desired path, consisting of translational and rotational trajectories represented in operational space.

• phase 3 – Robot Control works as a digital twin with Robot Operating System (ROS) as the underlying communication between the service oriented control structures. Each robot action e.g., point to point movement, screwdriver operation, etc., is represented as service with specific inputs and outputs Naboni et al. (2021). Section 5.3 describes how the demonstrated data is utilized in the service oriented control structure. In this phase, all of the collected digital planning information from phase 1 and physical trajectories from phase 2 are compiled to a set of composite instructions giving an assembly sequence for a specific timber structure.

• In phase 4 – Physical robots are used to execute the tasks following the sequence of operations (from phase 3). In this work we make use of the collaborative robots with integrated force/torque sensors, by which the data measured during the assembly execution, is collected and sent back to the digital twin as a form of feedback – which is used to evaluate the success rate of a specific assembly execution (see Sec. 5.2). If the execution is successful the assembly digital twin schedules the next task.

FIGURE 3. Outline of the digital framework, linking the design and simulation, LbD and the physical level of the robot execution in an assembly digital twin.

4.2 System Description and Robot Cell Design

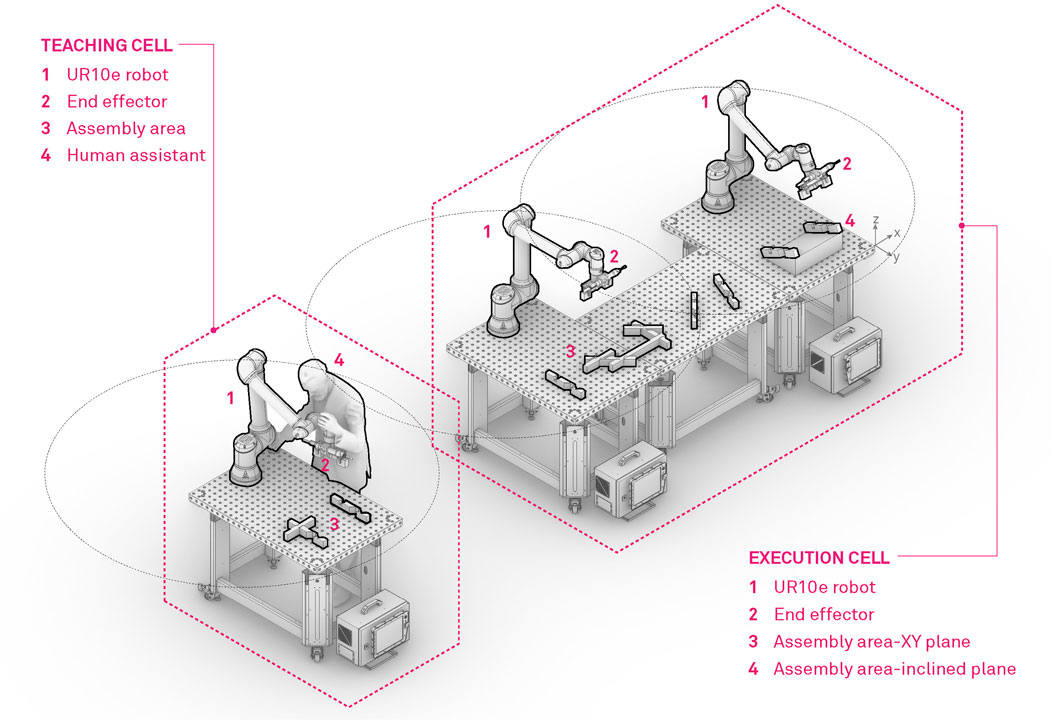

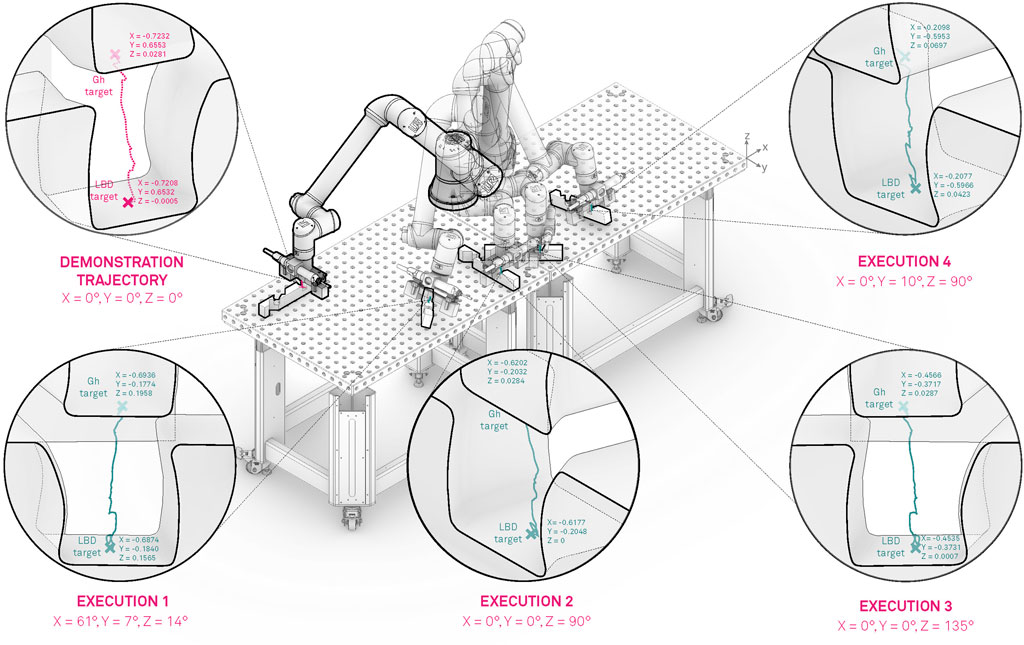

The experimental set-up for the robotic assembly of complex timber truss structures is based on two separate robotic cells, namely the Teaching cell and the Execution cell which are outlined in Figure 4. Both cells are equipped with universal Robot UR10e robots mounted on a Siegmund welding table. The Teaching cell is primarily designed for human-robot collaboration where LbD methods are applied to teach the robot highly complex and articulated moves which are required for assembly of tight interlocking timber joints. On the other hand, the Execution cell consists of two robots with dedicated tools, mounted on three tables, covering a full range of 4.6 m. With this configuration large timber structures can be assembled. Furthermore, in the execution cell, we combine timber layered assembly methods, consisting of pick and place, automatic and collaborative robotic screwing and vision localization of timber trusses, presented in our previous work Naboni et al. (2021), with the newly developed methods based on LbD.

FIGURE 4. Robot concept cell, designed for fabrication of timber structures. On the left hand side of the figure, the teaching cell is represented, where the operator teaches the robot actions with the help of LbD. The right hand side, represents the execution cell, where the thought actions with a combination of manipulation and robot screwing actions are deployed for robotic assembly of timber structures.

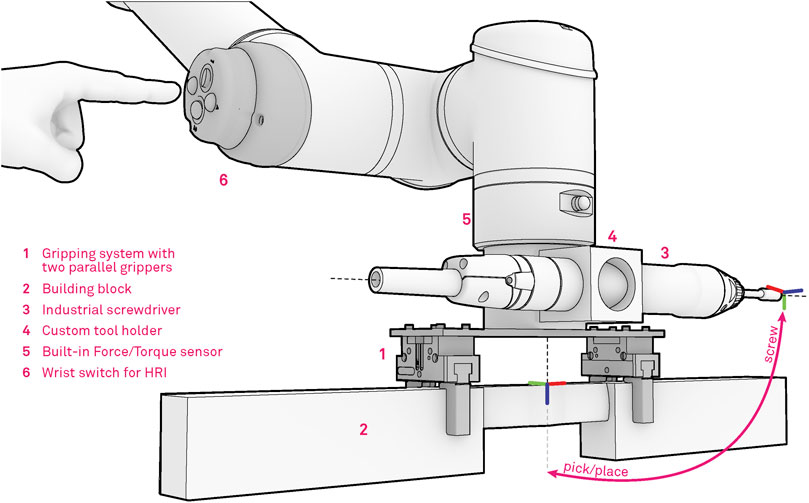

Each robot is equipped with a custom designed end-effector suitable for handling of wooden trusses and assembly of complex and tight timber joints, which allows for both the manipulation and screwing procedures (Figure 5). The gripper tool consists of two parallel Schunk JGP-64 pneumatic grippers which are rigidly mounted on a mounting plate at a distance of 20 cm. The mounting distance is designed based on the wooden stud design and allows for handling studs from 0.25 to 1.5 m. For this purpose specialized gripper fingers were designed and manufactured from sheet metal, featuring bend metal contact features which are suitable for grasping timber studs and can accommodate eventual manufacturing and material imprecisions.

FIGURE 5. Robotic tool for timber assembly, consisting of two parallel industrial pneumatic grippers, automatic screwdriver and the HRI interface.

Additionally, an industrial screwdriver is mounted within a custom-made steel flange, placed at a 90-degree angle from the gripper to avoid any form of collision between the tools while enabling multi-phase assembly procedures. The developed assembly system also takes full advantage of the built-in force-torque sensor which is placed within the robot flange and is used to record forces and torques during assembly procedures. The information is then passed to the digital twin for verification purposes or directly to the robot controller for adaptation purposes during the assembly. Finally, a custom interface for Human Robot Interaction (HRI), is mounted on the fourth joint of the robot. In our previous work (Naboni et al. (2021)), we applied this interface for collaborative task executions with a human operator. In this work we make use of the interface primarily in the demonstration phase. The human demonstrator can trigger various modes e.g., robot free drive, start/stop recording, gripper actions, while demonstrating the desired movement. With this setup the operator can easily demonstrate the assembly knowledge, which can later be executed in an assembly sequence.

5 Human – Robot Collaboration Setup for Timber Assembly

For LbD in connection with assembly tasks, several methods commonly known in robotics (Billard et al., 2008) can be employed. LbD and reuse of robot motion represents an easy, efficient and user-friendly way to program complex robot motion, not only for assembly but also for other tasks in robotics e.g., robotic reaching, polishing and grinding.

In construction automation, more specifically construction of timber structures, two main robotic approaches are arising from the robotic community. The first deals with layered design, where the robot paths can be extracted from the design directly and the designed timber blocks are assembled in pick and place type fashion. The second approach deals with more complex assembly structures, where the joints of the structures are designed in a way that they are self locking. In this case, the human operator has to intervene and execute the assembly (Naboni et al. (2021)), because programming of robot trajectories is very cumbersome and it takes a lot of resources and time. Some recent work in this field proposes reinforcement learning (Apolinarska et al. (2021)), to learn a specific assembly policy in simulation and then transfer the knowledge to a robotic system. This approach gives a policy which can be used for a single assembly setup and is not easily generalizable. To overcome this hurdle, in this paper we make use of LbD for leaning assembly policies for complex joints in timber assembly, which can be easily executed, adapted and generalized to various timber structure constraints.

5.1 Demonstration of Assembly Policies for Timber Structures

In this work we exploit one of the LbD methods called kinesthetic guiding (Hersch et al. (2008); Kramberger et al. (2017)) shown in Figure 6, as the main method for demonstrating human skills. Kinesthetic guiding enables us to record robot data by simply grabbing the robot and guiding it until the desired motion is achieved. In the process we record the 6-D Cartesian space and joint space movement trajectories. Please note that this method cannot be exploited on all robot manipulators, the manipulator must have the capability of active gravity compensation e.g., free drive, which is a common feature in collaborative robotics. For industrial robots, where this feature is commonly not available, motion demonstration with haptic devices can be applied.

In order to record the desired data, a human operator physically guides the robot along the desired trajectory and thus receives the same feedback from the environment as the robot. Consequently, the demonstrated trajectories are from the human perspective optimal, because the human can transfer the desired motion and adapt the demonstration to account for the environmental discrepancies if needed. The actual data we record with every demonstration is the following:

where pi, qi are the measured Cartesian space positions and orientations (represented as unit quaternions) and ςi are the joint angles measured at every time step during the demonstration ti, i = 1, … , T and T denotes the number of measurements in the training set. For a more detailed explanation of orientations encoded in the quaternion space we refer the reader to the following papers Ude et al. (2010, 2014). In addition to the kinematic data, we also record the corresponding dynamic characteristics of the demonstrated motion

Fm represents the recorded forces and Mm the recorded moments with an integrated force-torque sensor, during the replay of the demonstrated assembly task where the recorded joint space trajectory consisting of a time series of recorded joint angles ς is re-executed, represented in the base frame of the robot. With this approach, only the relevant dynamic data is recorded and it will be utilized for comparison purposes to outline the effectiveness of the adaptation procedures explained in the next section.

5.2 Assembly Policy Representation for Assembly of Timber Struts

In the paper we are primarily focusing on Cartesian space trajectories; therefore, to represent the recorded motion we exploit the Cartesian space Dynamic Movement Primitives (CDMPs) originally presented by Ude et al. (2014). The framework consists of a mass, spring, damper system with well-defined attractor dynamics providing a stable system for trajectory representation (Ijspeert et al. (2013)). CDMPs generate an autonomous control policy, which is robust to external perturbations and can be utilized for modulation of robot trajectories. The second order dynamical system models the progression of the trajectory and can be easily learned from a human demonstration. In CDMPs, the positional part of the demonstrated movement is treated in the same way as in the standard DMP formulation (Ijspeert et al. (2013)), whereas the orientational part of the trajectory is represented by unit quaternions and require special treatment, both in the nonlinear dynamic equations and during the integration. The system is represented with the following set of equations:

where τ represents the time scaling of the movement, gp, gq are the desired final positions g = [x, y, z] and orientations of the demonstrated movement in Carthesian space. In this framework, the orientations are represented as a unit quaternions q = v + u ∈ S3, where S is a unit sphere, representing a singularity free representation. For additional information on the mathematical representation in quaternion space, we direct the reader to the work of Ude et al. (2014). z and η represent the time scaled linear and angular velocity of the dynamical system and p and q correspond to the position and orientation of the demonstrated motion. The system is initialized at p = p0, q = q0, corresponding to the start pose of the demonstrated motion. Furthermore, parameters αp, αq, βp, βq and αx are related to the mass-spring-damper control parameters of the second order system, which influence its behavior. If the parameters are set in the following manner; τ > 0, [αp, αq] = 4 [βp, βq] > 0 and αx > 0, then the dynamical system has a unique and stable point attractor at [p, q] = [gp, gq], z = 0.

Additionally, s represents the exponential phase variable, which synchronizes all of the CDMPs in the system. Given the initial condition s (0) = 1, Eq. 5 can be solved analytically by s(t) = exp (αxt/τ), which characterizes the end of the encoded movement. Additionally, the shape of the demonstrated movement is encoded as a non-linear forcing term fp(s) and fq(s) for Cartesian positions and orientations separately. The term is a combination of radial basis functions, which essentially enables the robot to follow a demonstrated movement from the beginning to the end of the trajectory.

The afore mentioned free parameters,

5.3 Coupling the Demonstrated Data With the Digital Twin

The demonstrated knowledge, explained in the previous section, is represented as a self-contained LbD service in the execution framework. The service-based architecture provides a simple and efficient way to extend and plug in new services to the assembly digital twin. In this section we will explain the idea of the LbD service and how it is incorporated into the assembly digital twin.

The LbD service represents a self-contained framework for execution of demonstrated trajectories encoded with CDMPs. The input to the service is a set of target poses supplied by the global designer, which correspond to the target locations of the wooden blocks in the final assembly. The idea of the LbD service is to incorporate the supplied information and modulate the demonstrated trajectory to prepare it for execution with adaptation at any location in the workspace of the robot.

5.3.1 Modulation of the Demonstrated Motion, Based on the Assembly Digital Twin Input

DMPs and CDMPs are known for their modulation properties (Ijspeert et al. (2013)), this means that if a user changes the target goal of the position or orientation representation, the DMP with its dynamics ensures stable convergence. In order to exploit this property, in this work we adopted the modulation formulation presented in Ginesi et al. (2021) for the positional part of the trajectory and extend it to take into account also the orientational part of the trajectory. Thus, we reformulate the standard CDMP equations (Eq. 3-4), to account for the modulation factor, given by the rotation matrix Rp for the positional part (Ginesi et al. (2021)) and a novel orientation factor Rq represented in unit quaternion space.

The multiplication of the forcing term with the modulation factors accounts for the orientational and translational transformation of the entire demonstrated trajectory in accordance to the given inpute pose. Furthermore, we couple the reformulated equations with a force coupling term to enable adaptation of the demonstrated motion, based on the force input during the execution, more will be presented in Section 5.4.

In order to modulate the demonstrated trajectory with the associated target poses, given by the global design unit, we first have to calculate the relative start pose

In the equations

In order to get the rotation matrix representation Rp, we transform the axis angle representation to rotation matrix as outlined in Siciliano et al. (2008).

Calculating the relative transformation in orientation space represented with unit quaternions needs a special treatment. Unlike positions, which can be represented as a 3-D vector, the orientation quaternions are represented in S which is a unit sphere, therefore the transform between two unit quaternions is represented as an angular velocity that rotates the first quaternion e.g., q0,new into the second quaternion

With this formulation we get two transformation vectors

5.4 Adaptation of the Modulated Demonstrated Motion

In order to ensure a successful execution of the transformed demonstrated motion, we exploit the interaction forces measured during the execution with a force-torque sensor mounted at the wrist of the manipulator. The force measurements are used in a force control setting in order to add compliance to the system. The aim of this approach is to adapt the robot motion to small displacements, arising from the imperfections in the manufacturing process of the timber studs, positioning errors in the workspace and robot tool calibration imprecisions. The problem of unforeseen displacements is that, during the assembly process, small displacements can cause large forces being exerted on the work peace or the manipulator and consequently leading to a failed execution or even damage the hardware. In the presented approach we do not aim to control a specific force during the assembly, but to follow the demonstrated kinematic trajectory in order to execute the complex joint assembly procedure. For this reason we extend the original CDMP formulation (Eq. 3) with applying a compliance PI controller directly to the acceleration level of the CDMP (Eq. 9) enabling adaptation of the encoded motion directly while being executed. The compliance controller outputs are defined for the positional (Fc) and orientational (Mc) part of the CDMP, separately as follows:

In the equations Fd, Md represent the desired forces and torques and Fm, Mm the measured forces and torques, for each tool axis separately. Kp,F, Kp,M and Ki,F, Ki,M are positive defined gains of the PI controller. With this setup we can efficiently adapt to the environmental displacement, while minimising the interaction forces, ensuring a safe and reliable execution of the assembly task.

6 Experiments

In this section we will present the evaluation results of the proposed timber assembly framework. The experiments were conducted on the execution platform described in Sec. 4.2. The evaluation of the assembly approach, was executed several times at arbitrary placement locations of the mock up wooden studs in the workspace of the robot.

6.1 Demonstration of the Complex Joint Assembly Task

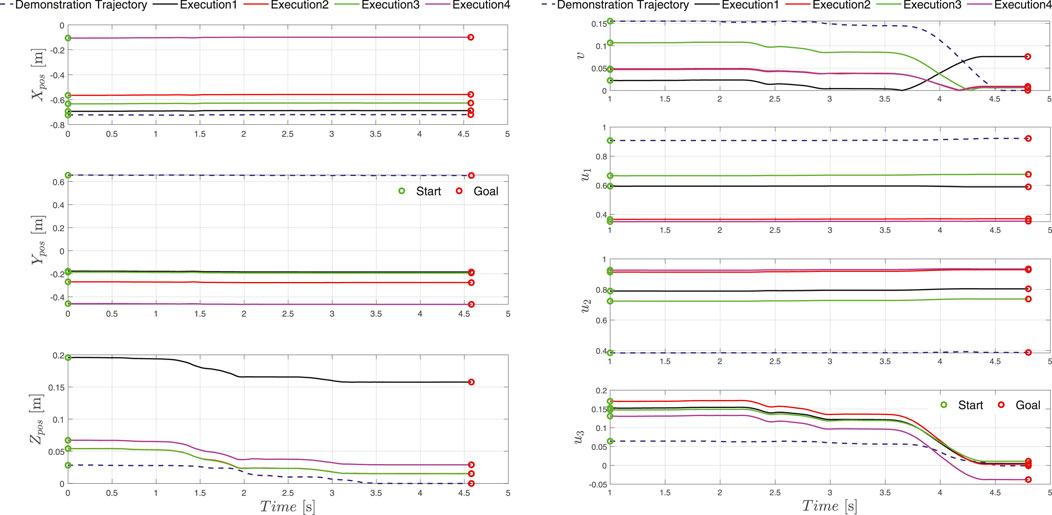

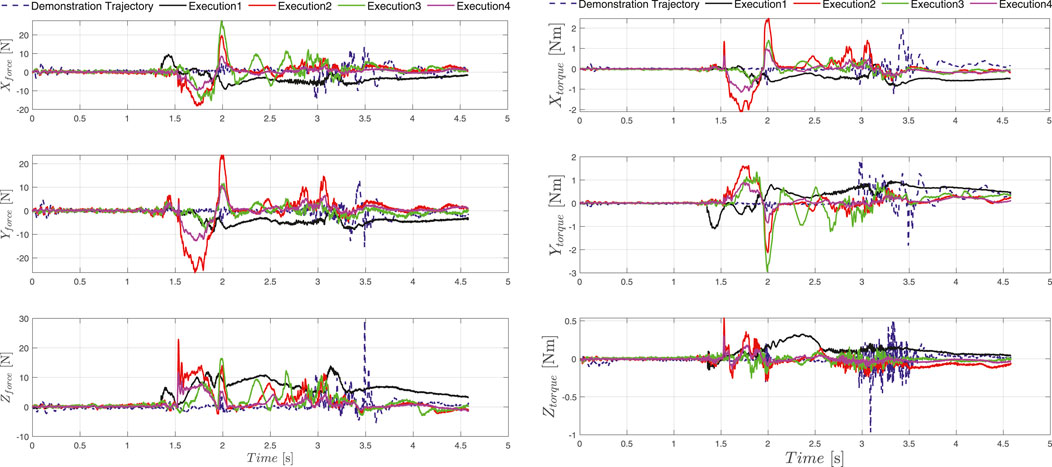

In the initial phase we recorded several demonstrations of the given joint assembly task. Initially the human operator set the robot into free drive mode e.g., active gravity compensation, with the help of the HRI interface and started to demonstrate the desired movement. Guiding the UR10e robot is substantially harder then compared to a KUKA iiwa or Franka panda robot, which come with active torque measurement in each joint, making the gravity compensation mode feel much smoother in comparison whit the UR10e, where the joint torques are estimated threw motor current measurement. In our previous work (Kramberger et al. (2017)) we applied iterative learning control coupled with force adaptation in order to optimize the demonstrated trajectory providing good results compared to an untrained human demonstrator. In this work we skipped this step and trained the human operator to perform the assembly task as best as possible with the robot. The outcome was recorded and is visualized in Figure 7 in the upper left zoomed in field with pink. A visual representation of the demonstration phases is given in Figure 8 and the time sequence of the recorded robot positions in Figure 9 with blue dashed lines. After the demonstration phase, we re-executed the kinematic trajectory and recorded the net force-torques arising during the demonstration which can be seen in Figure 10 represented with dashed lines. The force-torque measurement serves as a ground truth data for later comparison with the compliance execution, which will be elaborated in the following section.

FIGURE 7. Modulation and execution of the demonstrated motion in various locations of the robot workspace.

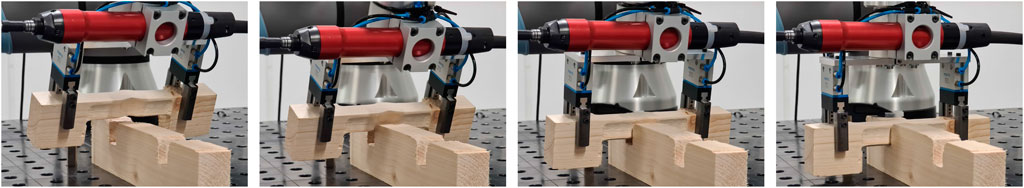

FIGURE 8. Execution of the demonstrated trajectory in 4 phases (approach (left), alignment (middle-left), interlocking (middle-right) and final configuration (right)).

FIGURE 9. Execution position (left) and orientation (right) trajectories recorded at five locations in the workspace of the robot. The goal location given by the global design unit is depicted as a red circle, whereas the transformed start location of the trajectory is presented with a green circle. The demonstrated trajectory is depicted with dashed lines and the transformed executed trajectories are presented with solid lines.

FIGURE 10. The demonstrated force-torque profile is represented with dashed blue line, solid lines represent forces and torques during the execution of the demonstrated assembly tasks at different locations of the timber studs in the workspace of the robot.

For proving the effectiveness of the proposed framework, we manufactured pine timber blocks with a KUKA KR240 R3330 industrial robot which is quipped with a CNC end mill and the KUKA CNC software package. The robot toolpath was defined in the Computer Aided Manufacturing (CAM) extension of the Fusion 3602 software. Afterwards, the generated G-code was parametrically translated into robot movements using the KUKA PRC (Braumann and Brell-Cokcan, 2011) plug-in for Grasshopper. With this approach, a 0.5 mm fabrication tolerance was achieved and thus we can ensure a tight connection and at the same time provide enough space for the execution of the demonstrated assembly motion. Furthermore, the manufacturing process left uneven surface finish on the test studs, which are the outcome of the imperfections in the wood. For this reason, the manufactured studs slightly differ one from another and therefore, to ensure a successful robotic assembly, the force adaptation procedures have to be applied.

6.2 Execution of the Demonstrated Assembly Task in Connection With the Digital Twin

After the demonstration phase, the obtained data was stored in the database associated with the assembly digital twin. In the execution digital twin the CDMP execution is defined as a service call, with the input corresponding to the new pose goal of the demonstrated trajectory, specified by the global design unit. The output of the service is a positional and dynamical signal, characterizing the success rate of the execution. The success rate is evaluated based on:

• comparing the new goal pose given by the global design unit and the actual measured Tool Center Point (TCP) pose of the robot after the execution finished. If the poses match, the execution was successful, on the other hand it signals a failure and the execution is repeated.

• Force-torque measurements during the execution. If the forces and torques, exceed a threshold defined by the average force and torque value from the net forces and torques recorded during the replay of the demonstration, the execution fails and vice versa.

The evaluation mechanism, signals the assembly digital twin to proceed with the assembly plan, re-execute the assembly sequence or set the robot into collaboration mode signalling the human to assist with the assembly. Similar evaluation procedures were implemented in our previous work dealing with collaborative robot screwing (Naboni et al. (2021)) and were extended in this work to accommodate the assembly execution with demonstrated data.

In total we evaluated 100 executions randomly covering the entire workspace of the robot with a success rate of 93%. The assembly execution sequence segmentation in 4 phases e.g. approach (new transformed start configuration), alignment, interlocking and final configuration (new goal pose specified by the global design unit) is depicted in Figure 8. Furthermore, the five execution examples where the orientation and position is significantly changed compared to the demonstrated trajectory are shown in Figures 7–9 for the kinematic trajectories and in Figure 10 for the dynamic trajectory. In the seven failed execution cases, the evaluation mechanism was triggered mainly based on too high execution forces. This was the outcome of imprecision’s in the placement of the assembly strut on the table, misalignment discrepancy during the grasping of the assembled strut or manufacturing imperfections which caused jamming at certain test locations. To mitigate this problem the goal pose given by the global design unit was re-evaluated and aligned by hand so that it matched, and in the second try the assembly strut was re-grasped from the fixture and the assembly re-executed. Focusing on the execution of the assembly task, it can be seed in Figure 10 that the execution force-torque measurements (solid lines) are on average lower then the force-torque measurements recorded after the demonstration (dashed lines). The application of the force-torque adaptation method, described in Sec. 5.4, contributed to a better overall performance of the execution.

Assembly of wooden trusses can in almost all cases be considered as a planar assembly problem, therefore the variations in assembly joint orientation is considered around a single axis e.g., robot TCP defined z-axis. For this reason, we conducted the majority of the experiments on the x-y plane (table plane), two instances can be observed in Figures 7–9 outlined as Execution two and 3. To show that the orientation transformation method described in Sec. 5.3.1 works for all arbitrary given orientations, we conducted an experiment with a target orientation specified in x-y and y-z plane (Execution 4) and a target orientation and position specified in all six principal axis (Execution 1). The successful execution of the assembly task is visible in Figures 7–9 and in Figure 10 with black and magenta color, showing that the force-torque measurement during the execution is comparable with the planar executions, furthermore proving that the accuracy of the proposed transformation method works adequately.

7 Conclusion

In this paper we propose a framework for assembly of complex timber trusses with a robotic manipulator. In the robotics domain the task can be related to the peg in hole assembly problem, where small positional discrepancies, usually caused by imprecise grasping, positioning of the work peace or the manufacturing process, lead to high forces applied on the work peace or manipulator. Furthermore, the assembly task cannot be programmed in an efficient way with conventional robot programming techniques. Therefore, we utilize LbD to transfer the human assembly skills onto the robotic system. For this reason we devised a teaching robot cell, where the human expert operator can teach the relevant assembly motions, which can later be transferred to the execution cell. In the paper we introduce the concept of the assembly digital twin, which directly links the architectural truss and structure design, with the robotic assembly, which is further enhanced with assembly methods utilizing learning by demonstration. With this setup we can efficiently and with a high success rate, assemble timber structures in layered fashion as well as complex joinery.

The aim of this work is to show the synergy between the structural design process and robot execution, with witch we are able to perform complex assembly tasks utilizing LbD and execute them at any given position and angle of the timber truss given by the global structural design. Therefore, we harnessed the modulation properties of the CDMPs and enhanced them with a novel orientation transformation system, which enables us to precisely transform the entire encoded motion according to the pose specified by the global assembly designer. Furthermore, we coupled a force compliance controller directly to the acceleration part of the CDMP, to facilitate adaptation to environmental changes during the assembly execution, thus minimizing the interaction force-torques and maximizing the success rate of the assembly.

The performance of the proposed framework was evaluated in several experiments, where a single human assembly demonstration was executed under various poses in the workspace of the robot. The pose input was provided by the global designer and used for transforming the demonstrated trajectories. We demonstrated, that a complex joint can be assembled at any arbitrary pose in the robots workspace, with a high success rate, despite the manufacturing imperfection in the wooden studs. The experiments also show, that the added compliance in the system adds to a higher success probability, and efficiently compensates for misalignment’s and manufacturing errors of the designed wooden studs, which in turn increases the robustness of the assembly strategy.

In future work, we will focus on extending the framework in order to be integrated with the overall timber assembly system, we are building. Furthermore we will investigate the possibility of up scaling, in order to facilitate even more complex and big timber trusses with a larger robot.

Data Availability Statement

Enquiries related to the data presented in the original contribution, should be addressed to the corresponding author directly.

Ethics Statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

AKr, CSc and CSl provided the initial suggestion for the system design and the robotic methods, AKu and RN developed the design methods for the timber trusses, prepared the figures and wrote the associated design sections and introduction of the paper. AKr, II and CSc collaborated on the implementation of the proposed robot control methods and presentation of the results. AKr wrote the conclusions, methodology, robot assembly and experimental sections. With the combined effort of all authors, the paper was revised and written according to the current state.

Funding

This research was supported by the SDU I4.0 lab. initiative.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The handling Editor declared a past co-authorship with one of the authors AKr.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

2https://www.autodesk.com/products/fusion-360/overview

References

Abu-Dakka, F. J., Nemec, B., Jørgensen, J. A., Savarimuthu, T. R., Krüger, N., and Ude, A. (2015). Adaptation of Manipulation Skills in Physical Contact with the Environment to Reference Force Profiles. Auton. Robot 39, 199–217. doi:10.1007/s10514-015-9435-2

Abu-Dakka, F. J., and Saveriano, M. (2020). Variable Impedance Control and Learning—A Review. Front. Robotics AI 7, 177. doi:10.3389/frobt.2020.590681

Apolinarska, A. A., Pacher, M., Li, H., Cote, N., Pastrana, R., Gramazio, F., et al. (2021). Robotic Assembly of Timber Joints Using Reinforcement Learning. Automation in Construction 125, 103–125. doi:10.1016/j.autcon.2021.103569

Billard, A., Calinon, S., Dillmann, R., and Schaal, S. (2008). “Robot Programming by Demonstration,” in Springer Handbook of Robotics (Springer), 1371–1394. doi:10.1007/978-3-540-30301-5_60

Bragança, S., Costa, E., Castellucci, I., and Arezes, P. M. (2019). A Brief Overview of the Use of Collaborative Robots in Industry 4.0: Human Role and Safety. Occup. Environ. Saf. Health, 641–650. doi:10.1007/978-3-030-14730-3_68

Braumann, J., and Brell-Cokcan, S. (2011). “Parametric Robot Control: Integrated Cad/cam for Architectural Design,” in Integration Through Computation - Proceedings of the 31st Annual Conference of the Association for Computer Aided Design in Architecture (Calgary, Canada: ACADIA 2011), 242–251.

Buchli, J., Theodorou, E., Stulp, F., and Schaal, S. (2011). Variable Impedance Control a Reinforcement Learning Approach. Robotics: Sci. Syst. VI, 153.

Chai, H., Marino, D., So, C. P., and Yuan, P. F. (2019). “Design for Mass Customization Robotic Realization of a Timber Tower with Interlocking Joints,” in Ubiquity and Autonomy - Paper Proceedings of the 39th Annual Conference of the Association for Computer Aided Design in Architecture (Austin, TX: ACADIA 2019), 564–572.

Cimino, C., Negri, E., and Fumagalli, L. (2019). Review of Digital Twin Applications in Manufacturing. Comput. Industry 113, 103–130. doi:10.1016/j.compind.2019.103130

Deniša, M., and Ude, A. (2015). Synthesis of New Dynamic Movement Primitives through Search in a Hierarchical Database of Example Movements. Int. J. Adv. Robotic Syst. 12, 137–150.

Eversmann, P. (2017). Digital Fabrication in Education Strategies and Concepts for Large-Scale Projects. CAAD EDUCATION - TEACHING 1, 333–342. doi:10.1007/s41693-017-0006-2

Gams, A., Nemec, B., Zlajpah, L., Wachter, M., Ijspeert, A., Asfour, T., et al. (2013). “Modulation of Motor Primitives Using Force Feedback: Interaction with the Environment and Bimanual Tasks,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IEEE), 5629–5635. doi:10.1109/iros.2013.6697172

Gašpar, T., Deniša, M., Radanovič, P., Ridge, B., Savarimuthu, T. R., Kramberger, A., et al. (2020). Smart Hardware Integration with Advanced Robot Programming Technologies for Efficient Reconfiguration of Robot Workcells. Robotics and Computer-Integrated Manufacturing 66, 101979.

Ginesi, M., Sansonetto, N., and Fiorini, P. (2021). Overcoming Some Drawbacks of Dynamic Movement Primitives. Robotics Autonomous Syst. 144, 103844. doi:10.1016/j.robot.2021.103844

Helm, V., Knauss, M., Kohlhammer, T., Gramazio, F., and Kohler, M. (2016). Additive Robotic Fabrication of Complex Timber Structures. A Comput. Approach, 29–44. doi:10.4324/9781315678825-3

Hersch, M., Guenter, F., Calinon, S., and Billard, A. (2008). Dynamical System Modulation for Robot Learning via Kinesthetic Demonstrations. IEEE Trans. Robot. 24, 1463–1467. doi:10.1109/tro.2008.2006703

Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., and Schaal, S. (2013). Dynamical Movement Primitives: Learning Attractor Models for Motor Behaviors. Neural Comput. 25, 328–373. doi:10.1162/neco_a_00393

Koerner-Al-Rawi, J., Park, K. E., Phillips, T. K., Pickoff, M., and Tortorici, N. (2020). Robotic Timber Assembly. Constr. Robot. 4, 175–185. doi:10.1007/s41693-020-00045-6

Kormushev, P., Calinon, S., and Caldwell, D. G. (2011). Imitation Learning of Positional and Force Skills Demonstrated via Kinesthetic Teaching and Haptic Input. Adv. Robotics 25, 581–603. doi:10.1163/016918611x558261

Koropouli, V., Hirche, S., and Lee, D. (2012). “Learning and Generalizing Force Control Policies for Sculpting,” in IEEE International Conference on Intelligent Robots and Systems (IROS) (Algarve, Portugal: Vilamoura), 1493–1498. doi:10.1109/iros.2012.6385957

Kramberger, A., Gams, A., Nemec, B., Chrysostomou, D., Madsen, O., and Ude, A. (2017). Generalization of Orientation Trajectories and Force-Torque Profiles for Robotic Assembly. Robotics Autonomous Syst. 98, 333–346. doi:10.1016/j.robot.2017.09.019

Krieg, O. D., Schwinn, T., and Menges, A. (2015). “Biomimetic Lightweight Timber Plate Shells: Computational Integration of Robotic Fabrication, Architectural Geometry and Structural Design,” in Advances in Architectural Geometry 2014. doi:10.1007/978-3-319-11418-7_8

Kritzinger, W., Karner, M., Traar, G., Henjes, J., and Sihn, W. (2018). Digital Twin in Manufacturing: A Categorical Literature Review and Classification. IFAC-PapersOnLine 51, 1016–1022. doi:10.1016/j.ifacol.2018.08.474

Kuma and Associates (2020). Botanical pavilion. Available at: https://kkaa.co.jp/works/architecture/botanical-pavilion/?fbclid=IwAR0TlmCQ5Tqbzj35vMMJO-PESEFqIP9TDUZZ6z0uXCWm6LQFifRSZqJlmZs, (Accessed 01 10 2021).

Kunic, A., Kramberger, A., and Naboni, R. (2021a). “Cyber-Physical Robotic Process for Re-configurable Wood Architecture. Closing the Circular Loop in wood Architecture,” in Proceedings of the 39th eCAADe Conference. Towards a new, configurable architecture SUBMITTED.

Kunic, A., Naboni, R., Kramberger, A., and Schlette, C. (2021b). Design and Assembly Automation of the Robotic Reversible Timber Beam. Automation in Construction 123, 103531. doi:10.1016/j.autcon.2020.103531

Leśko, A. (2016). Modern Moment Resisting Timber Connections – Theory and Numerical Modelling. Proced. Eng. 153, 400–406.

Leung, P. Y. V., Apolinarska, A. A., Tanadini, D., Gramazio, F., and Kohler, M. (2021). “Automatic Assembly of Jointed Timber Structure Using Distributed Robotic Clamps,” in Proceedings of the 26th International Conference of the Association for Computer-Aided Architectural Design Research in Asia (Hong Kong: CAADRIA), 583–592.

Lončarević, Z., Pahič, R., Ude, A., Gams, A., et al. (2021). Generalization-based Acquisition of Training Data for Motor Primitive Learning by Neural Networks. Appl. Sci. 11, 1013.

Malik, A. A., and Brem, A. (2021). Digital Twins for Collaborative Robots: A Case Study in Human-Robot Interaction. Robotics and Computer-Integrated Manufacturing 68, 102092. doi:10.1016/j.rcim.2020.102092

Michell, A. G. M. (1904). Lviii. The Limits of Economy of Material in Frame-Structures. Lond. Edinb. Dublin Phil. Mag. J. Sci. 8, 589–597. doi:10.1080/14786440409463229

Müller, F., Janetzky, J., Behrnd, U., Jäkel, J., and Thomas, U. (2018). “User Force-dependent Variable Impedance Control in Human-Robot Interaction,” in 2018 IEEE 14th International Conference on Automation Science and Engineering (CASE), 1328–1335. doi:10.1109/coase.2018.8560340

Naboni, R., Kunic, A., Kramberger, A., and Schlette, C. (2021). Design, Simulation and Robotic Assembly of Reversible Timber Structures. Construction Robotics 5, 1–10. doi:10.1007/s41693-020-00052-7

Pastor, P., Righetti, L., Kalakrishnan, M., and Schaal, S. (2011). “Online Movement Adaptation Based on Previous Sensor Experiences,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 365–371. doi:10.1109/iros.2011.6095059

Robeller, C., Weinand, Y., Helm, V., Thoma, A., Gramazio, F., and Kohler, M. (2017). “Robotic Integral Attachment,” in Fabricate (London, United Kingdom: UCL Press), 92–97. doi:10.2307/j.ctt1n7qkg7.16

Rogeau, N., Tiberghien, V., Latteur, P., and Weinand, Y. (2020). “Robotic Insertion of Timber Joints Using Visual Detection of Fiducial Markers,” in Proceedings of the 37th International Symposium on Automation and Robotics in Construction (ISARC), 491–498. doi:10.22260/isarc2020/0068

Rozo, L., Jiménez, P., and Torras, C. (2013). A Robot Learning from Demonstration Framework to Perform Force-Based Manipulation Tasks. Intel. Serv. Robotics 6, 33–51. doi:10.1007/s11370-012-0128-9

Schou, C., Andersen, R. S., Chrysostomou, D., Bøgh, S., and Madsen, O. (2018). Skill-based Instruction of Collaborative Robots in Industrial Settings. Robotics and Computer-Integrated Manufacturing 53, 72–80. doi:10.1016/j.rcim.2018.03.008

Søndergaard, A., Amir, O., Eversmann, P., Piskorec, L., Stan, F., Gramazio, F., et al. (2016). “Topology Optimization and Robotic Fabrication of Advanced Timber Space-Frame Structures,” in Robotic Fabrication in Architecture, Art and Design 2016 (Springer), 190–203.

Stumm, S., and Brell-Çokcan, S. (2019). Haptic Programming. Robotic Fabrication Architecture, Art Des., 44–58. doi:10.1007/978-3-319-92294-2_4

Stumm, S., Devadass, P., and Brell-Cokcan, S. (2018). Haptic Programming in Construction. Constr. Robot. 2, 3–13. doi:10.1007/s41693-018-0015-9

Thoma, A., Adel, A., Helmreich, M., Wehrle, T., Gramazio, F., and Kohler, M. (2019). Robotic Fabrication of Bespoke Timber Frame Modules. Robotic Fabrication Architecture, Art Des. 2018, 447–458. doi:10.1007/978-3-319-92294-2_34

Ude, A., Gams, A., Asfour, T., and Morimoto, J. (2010). Task-specific Generalization of Discrete and Periodic Dynamic Movement Primitives. IEEE Trans. Robot. 26, 800–815. doi:10.1109/tro.2010.2065430

Ude, A., Nemec, B., Petrič, T., and Morimoto, J. (2014). “Orientation in Cartesian Space Dynamic Movement Primitives,” in 2014 IEEE International Conference on Robotics and Automation (ICRA), 2997–3004. doi:10.1109/icra.2014.6907291

Yuan, P. F., Chai, H., Yan, C., and Zhou, J. J. (2016). “Robotc Fabrication of Structural Performance-Based Timber Grid-Shell in Large-Scale Building Scenario,” in ACADIA 2016: Posthuman Frontiers: Data, Designers, and Cognitive Machines - Proceedings of the 36th Annual Conference of the Association for Computer Aided Design in Architecture, 196–205.

Keywords: learning by demonstration, assembly of timber structures, digital twin, robotic assembly, robotic fabrication

Citation: Kramberger A, Kunic A, Iturrate I, Sloth C, Naboni R and Schlette C (2022) Robotic Assembly of Timber Structures in a Human-Robot Collaboration Setup. Front. Robot. AI 8:768038. doi: 10.3389/frobt.2021.768038

Received: 31 August 2021; Accepted: 16 November 2021;

Published: 27 January 2022.

Edited by:

Matteo Saveriano, University of Innsbruck, AustriaReviewed by:

Julien Gamerro, Swiss Federal Institute of Technology Lausanne, SwitzerlandAysegul Ucar, Firat University, Turkey

Copyright © 2022 Kramberger, Kunic, Iturrate, Sloth, Naboni and Schlette. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aljaz Kramberger, alk@mmmi.sdu.dk

Aljaz Kramberger

Aljaz Kramberger Anja Kunic2

Anja Kunic2  Iñigo Iturrate

Iñigo Iturrate Christoffer Sloth

Christoffer Sloth Christian Schlette

Christian Schlette