Human–Machine Telecollaboration Accelerates the Safe Deployment of Large-Scale Autonomous Robots During the COVID-19 Pandemic

- 1 School of Mechanical and Aerospace Engineering, Nanyang Technological University, Nanyang, Singapore

- 2 Autonomous Driving Lab, Alibaba DAMO Academy, Hangzhou, China

Introduction

Robots are increasingly used in today’s society (Torresen, 2018; Scassellati and Vázquez, 2020). Although full autonomy is the end goal, the intelligence and capabilities of current robots remain limited. Some form of human supervision and guidance is therefore still required to deploy robots robustly in society, especially in complex and emergency situations (Nunes et al., 2018; Li et al., 2019). However, a supervisor’s performance can be affected by their varying physical and psychological states, as well as by distractions from unrelated activities. Involving people in robot operation therefore introduces uncertainty and safety risks.

The COVID-19 pandemic has made robot supervision even more challenging (Feizi et al., 2021). Beyond maintaining the operator’s performance, it is now also necessary to avoid close contact between the operator and others in the workplace and to keep the operator away from potential onsite hazards. Teleoperation, which keeps humans in the control loop but at a distance, offers a solution in this context. However, it has limitations. Teleoperation requires continuous human supervision and control, which increases workload. Because operators observe the environment through monitors, their field of view is limited, and they can hardly feel the motion, forces, and vibrations that the robot experiences on the remote side. This further restricts their situational awareness during supervision and hinders the safe deployment of robots.

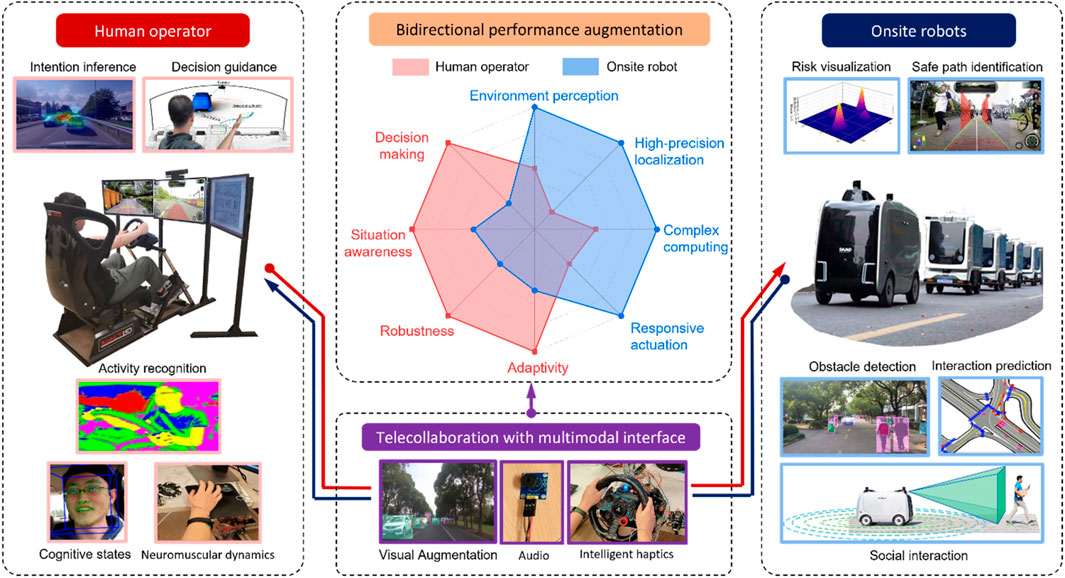

To overcome these limitations and further advance safe robot operation, we propose a human–machine telecollaboration paradigm that features bidirectional performance augmentation with hybrid intelligence. In the existing teleoperation architecture, a robot only receives and executes human commands in one direction. In contrast, telecollaboration, as shown in Figure 1, fuses and leverages hybrid intelligence from both the human and the robot while keeping human operators away from onsite hazards. This improves the overall safety, efficiency, and robustness of the human–robot system and enables the deployment of large-scale autonomous robots in society. The technology has been implemented in more than 200 of Alibaba’s autonomous delivery robots, which now serve more than 160 communities across 52 cities in China during the COVID-19 pandemic (Alibaba Group). With remote help from human operators, a single robot can, in one day, deliver up to 300 packages while performing more than 40 million obstacle detections and over 5,000 interactions. Recently, the delivery robot fleet reached the milestone of one million parcel deliveries. The key features of the telecollaboration framework are as follows.

Human–Machine Bidirectional Augmentation Framework

Because humans and machines each have strengths and weaknesses, there are no fixed master and slave roles in this telecollaboration framework. Operators and machines complement and augment each other via multimodal interfaces for perception, decision-making, planning, and control. The performance and status of both humans and robots are monitored and assessed simultaneously in real time, and control authority is adaptively allocated to, or fully transferred between, humans and machines according to their states. Because the robots’ abilities are enhanced by remote human guidance, they can function normally most of the time. Operators need to intervene only when necessary, which reduces their workload because full-time engagement is not required. Ideally, one operator can supervise multiple robots simultaneously via telecollaboration. Conversely, because the robots receive help from humans, the requirements on onboard hardware and the complexity of the robots’ algorithms can be reduced. This promotes low-cost, large-scale deployment of robots in society, built on mutual trust between humans and robots.
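The idea that one operator intervenes only when necessary can be sketched as a simple triage step, under stated assumptions: each robot reports a self-assessed confidence score, robots at or above a threshold keep operating autonomously, and the rest are queued for the operator, least confident first. The function name `dispatch`, the robot IDs, the scores, and the 0.6 threshold are hypothetical and are not taken from the deployed system.

```python
def dispatch(confidences, threshold=0.6):
    """Split a fleet into autonomous robots and a help queue for one operator.

    confidences: dict mapping a (hypothetical) robot ID to a self-assessed
    confidence score in [0, 1]. Robots at or above `threshold` keep operating
    autonomously; the others are queued for the operator.
    """
    autonomous = sorted(rid for rid, c in confidences.items() if c >= threshold)
    # Sort requests by (confidence, robot ID): the least confident robot is served first
    help_queue = [rid for c, rid in sorted(
        (c, rid) for rid, c in confidences.items() if c < threshold)]
    return autonomous, help_queue


fleet = {"R1": 0.95, "R2": 0.40, "R3": 0.82, "R4": 0.55}
autonomous, help_queue = dispatch(fleet)
print(autonomous)  # ['R1', 'R3']
print(help_queue)  # ['R2', 'R4']
```

The threshold trades operator workload against reliance on autonomy: lowering it means fewer interventions, while raising it routes more robots to the operator.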

During human–machine collaboration, conflicts may arise when the intentions and expectations of the human operator and the machine diverge. To mitigate such conflicts within the proposed telecollaboration framework, we further designed a reference-free control algorithm in which the status, abilities, and intentions of the human operator and the robot are monitored separately (Huang et al., 2020). Control authority is then allocated to each party according to its individually assessed performance, ensuring the safety, stability, and smoothness of the overall human–machine system.
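The general idea of allocating control authority from separately assessed performances can be illustrated with a simple weighted-blending scheme. This is a hedged sketch, not the reference-free algorithm of Huang et al. (2020): the [0, 1] scoring scale, the proportional sharing rule, the 0.2 transfer floor, and the function names `allocate_authority` and `blend` are all assumptions made for illustration.

```python
def allocate_authority(human_score, machine_score, floor=0.2):
    """Return alpha, the human's share of control authority, in [0, 1].

    Scores are hypothetical performance assessments in [0, 1]. If exactly one
    party falls below `floor`, authority is fully transferred to the other;
    otherwise it is shared in proportion to the scores.
    """
    for s in (human_score, machine_score):
        if not 0.0 <= s <= 1.0:
            raise ValueError("scores must lie in [0, 1]")
    human_ok, machine_ok = human_score >= floor, machine_score >= floor
    if human_ok and not machine_ok:
        return 1.0
    if machine_ok and not human_ok:
        return 0.0
    total = human_score + machine_score
    # Both scores zero: give authority to the machine (e.g. a safe-stop behaviour; an assumption)
    return human_score / total if total > 0.0 else 0.0


def blend(u_human, u_machine, alpha):
    """Shared control command u = alpha * u_h + (1 - alpha) * u_m."""
    return alpha * u_human + (1.0 - alpha) * u_machine


alpha = allocate_authority(0.75, 0.25)  # human assessed as more capable
print(alpha, blend(1.0, -1.0, alpha))   # 0.75 0.5
```

Proportional sharing lets authority shift smoothly as assessments change, while the floor captures the full-transfer case in which one party is judged unable to control.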

Augmented Machine Intelligence Under Human Guidance

Robots are not purely mechanical actuators; they have a degree of intelligence and can operate autonomously under normal conditions. However, because their abilities are limited, robots can face considerable challenges in complex situations that they may not be able to handle by themselves (Rossi et al., 2020). In such situations, human intervention via telecollaboration is needed to provide guidance and compensation at either the strategic or the tactical level. Through multimodal interfaces, including image processing, gesture recognition, natural language processing, and affective computing, the operator’s intentions can be inferred and passed to the machine to enhance its reasoning (Yang et al., 2018). Recently, we proposed a human–machine cooperative rapidly exploring random tree (RRT) algorithm that incorporates human preferences and corrective actions, improving the safety, smoothness, and human-likeness of robot planning and tracking control (Huang et al., 2021). In addition, to further improve machine intelligence, we are developing a human-in-the-loop deep reinforcement learning (DRL) method that fuses human skills into the DRL agent through real-time guidance during learning, which improves the agent’s learning curve (Wu et al., 2021).
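One simple way to inject a human preference into RRT planning is to bias the sampler toward a waypoint suggested by the operator. The sketch below is a generic goal-biased 2D RRT with such a bias; it is not the cooperative RRT of Huang et al. (2021). The waypoint-biasing rule, circular obstacles, parameter names and values (`p_human`, `sigma`, `step`, and so on), and the function names are assumptions.

```python
import math
import random


def collision_free(p, q, obstacles, resolution=0.05):
    """Return True if segment p->q avoids every circular obstacle (cx, cy, r)."""
    n = max(1, math.ceil(math.dist(p, q) / resolution))
    for i in range(n + 1):
        t = i / n
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def steer(p, q, step):
    """Move from p toward q by at most `step`."""
    d = math.dist(p, q)
    if d <= step:
        return q
    return (p[0] + step * (q[0] - p[0]) / d, p[1] + step * (q[1] - p[1]) / d)


def human_guided_rrt(start, goal, obstacles, human_waypoint=None, bounds=(0.0, 10.0),
                     step=0.5, goal_tol=0.5, p_goal=0.1, p_human=0.3, sigma=0.5,
                     max_iter=5000, seed=0):
    """Goal-biased RRT whose sampling is also biased toward an operator waypoint."""
    rng = random.Random(seed)
    lo, hi = bounds
    nodes, parent = [start], [None]
    for _ in range(max_iter):
        r = rng.random()
        if r < p_goal:
            sample = goal
        elif human_waypoint is not None and r < p_goal + p_human:
            # Human preference: sample around the operator's waypoint, clamped to the map
            sample = tuple(min(max(rng.gauss(c, sigma), lo), hi) for c in human_waypoint)
        else:
            sample = (rng.uniform(lo, hi), rng.uniform(lo, hi))
        near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        new = steer(nodes[near], sample, step)
        if not collision_free(nodes[near], new, obstacles):
            continue
        nodes.append(new)
        parent.append(near)
        if math.dist(new, goal) <= goal_tol and collision_free(new, goal, obstacles):
            if new != goal:
                nodes.append(goal)
                parent.append(len(nodes) - 2)
            # Walk back through the parent links to recover the path
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


obstacles = [(5.0, 5.0, 1.5)]
path = human_guided_rrt((1.0, 1.0), (9.0, 9.0), obstacles, human_waypoint=(2.0, 8.0))
```

Setting `human_waypoint=None` recovers a plain goal-biased RRT, so the effect of the operator’s input is confined to the sampling distribution.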

Augmented Human Performance Leveraging Machine Intelligence

Compared with robots, humans exhibit greater robustness and adaptability in scene understanding and decision-making; however, their abilities are limited in areas such as high-precision perception, localization, measurement, and complex computation (Fani et al., 2018; Su et al., 2020). In remote collaboration in particular, the operator’s field of view and physical sense of the remote scene are restricted. Fortunately, these deficiencies can be compensated for and augmented by machines (Drnach and Ting, 2019). Multimodal, multidimensional onsite information, including simultaneous localization and mapping (SLAM) results, obstacle detections, and potential risk assessments, can be processed by the robots using onboard sensors, sent back over telecommunication links, and visualized on monitors to improve the operator’s situational awareness. In addition, once deployed in society, robots must interact with humans, so interaction awareness and forecasting are important for safe robot operation. Recently, we proposed a deep learning model that uses only 1 s of historical data to accurately predict a robot’s motion and its interactions with surrounding agents up to 8 s ahead; it won first place in the Interaction Prediction track of the 2021 Waymo Open Dataset Challenges (Mo et al., 2022). Visualizing these interaction predictions helps operators understand the situation and make safe decisions. Multimodal interfaces, including computer vision, augmented reality, virtual reality, electromyography, and haptics, have also been explored to identify the operator’s cognitive and physical states, allowing the automation to provide personalized assistance and thereby improving the operator’s task performance during telecollaboration (Lv et al., 2021).
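To make the 1 s history and 8 s horizon concrete, the sketch below uses constant-velocity extrapolation, a common simple baseline and not the graph-attention model of Mo et al. (2022), and then finds the closest predicted approach between two agents, the kind of quantity an operator display might highlight. The 10 Hz sampling rate, the example tracks, and the function names are assumptions.

```python
import math


def predict_constant_velocity(history, dt=0.1, horizon_s=8.0):
    """Extrapolate a track of (x, y) positions sampled every `dt` seconds."""
    if len(history) < 2:
        raise ValueError("need at least two past positions")
    span = (len(history) - 1) * dt  # length of the observed window in seconds
    vx = (history[-1][0] - history[0][0]) / span
    vy = (history[-1][1] - history[0][1]) / span
    x0, y0 = history[-1]
    steps = round(horizon_s / dt)
    return [(x0 + vx * k * dt, y0 + vy * k * dt) for k in range(1, steps + 1)]


def min_separation(traj_a, traj_b, dt=0.1):
    """Closest predicted distance between two time-aligned trajectories and when it occurs."""
    if len(traj_a) != len(traj_b):
        raise ValueError("trajectories must have the same length")
    k = min(range(len(traj_a)), key=lambda i: math.dist(traj_a[i], traj_b[i]))
    return math.dist(traj_a[k], traj_b[k]), (k + 1) * dt


# 1 s of history at 10 Hz (11 samples): robot heading +x, pedestrian heading -y
robot_hist = [(0.1 * k, 0.0) for k in range(11)]
ped_hist = [(5.0, 5.0 - 0.1 * k) for k in range(11)]
robot_pred = predict_constant_velocity(robot_hist)
ped_pred = predict_constant_velocity(ped_hist)
gap, t = min_separation(robot_pred, ped_pred)
print(f"closest approach {gap:.2f} m at t = {t:.1f} s")  # closest approach 0.00 m at t = 4.0 s
```

A learned interaction model replaces the extrapolation step, but the downstream check, and its value as an operator cue, stays the same.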

Conclusion

The results of large-scale, real-world robot deployment demonstrate the feasibility and effectiveness of the proposed telecollaboration paradigm. The high-level framework, submodules, and algorithms developed here have great potential to be extended to a wide range of robotics and human–machine interaction applications. Nevertheless, deploying robots in society is a systems-engineering task that requires collaboration across many areas, such as AI, robotics, control, telecommunications, human factors, psychology, privacy, security, ethics, and law. In the future, further multidisciplinary efforts, including human-like autonomy, cognitive robotics, multimodal human–machine interfaces, low-cost perception, high-definition maps, 5G communication, cloud and edge computing, and safe AI, are needed to develop a harmonious ecosystem for societies in which humans and robots coexist.

Author Contributions

ZH and YZ developed the algorithms, designed and performed experiments, processed and analyzed data, and interpreted results. QL developed the algorithms, designed and performed experiments. CL supervised the project, designed the system concept, methodology and experiments, interpreted results, and wrote the paper. All authors reviewed the manuscript.

Funding

This work was supported in part by the SUG-NAP Grant of Nanyang Technological University, Singapore; the A*STAR Project (SERC 1922500046); and the Alibaba Group, through the Alibaba Innovative Research Program and the Alibaba–Nanyang Technological University Joint Research Institute (No. AN-GC-2020-012).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alibaba Group. Alibaba’s Driverless Robots Just Made Their One Millionth E-Commerce Delivery. Available at: https://www.alizila.com/alibaba-driverless-robots-one-millionth-ecommerce-delivery/ (Accessed April 3, 2022).

Drnach, L., and Ting, L. H. (2019). Ask This Robot for a Helping Hand. Nat. Mach. Intell. 1, 8–9. doi:10.1038/s42256-018-0013-0

Fani, S., Ciotti, S., Catalano, M. G., Grioli, G., Tognetti, A., Valenza, G., et al. (2018). Simplifying Telerobotics: Wearability and Teleimpedance Improves Human-Robot Interactions in Teleoperation. IEEE Robot. Automat. Mag. 25 (1), 77–88. doi:10.1109/mra.2017.2741579

Feizi, N., Tavakoli, M., Patel, R. V., and Atashzar, S. F. (2021). Robotics and AI for Teleoperation, Tele-Assessment, and Tele-Training for Surgery in the Era of COVID-19: Existing Challenges, and Future Vision. Front. Robot. AI 8, 610677. doi:10.3389/frobt.2021.610677

Huang, C., Huang, H., Zhang, J., Hang, P., Hu, Z., and Lv, C. (2021). Human-machine Cooperative Trajectory Planning and Tracking for Safe Automated Driving. IEEE Trans. Intell. Transportation Syst. doi:10.1109/tits.2021.3109596

Huang, C., Lv, C., Naghdy, F., and Du, H. (2020). Reference-free Approach for Mitigating Human-Machine Conflicts in Shared Control of Automated Vehicles. IET Control Theory Appl. 14 (18), 2752–2763. doi:10.1049/iet-cta.2020.0289

Li, L., Wang, X., Wang, K., Lin, Y., Xin, J., Chen, L., et al. (2019). Parallel Testing of Vehicle Intelligence via Virtual-Real Interaction. Sci. Robot. doi:10.1126/scirobotics.aaw4106

Lv, C., Li, Y., Xing, Y., Huang, C., Cao, D., Zhao, Y., et al. (2021). Human-Machine Collaboration for Automated Driving Using an Intelligent Two‐Phase Haptic Interface. Adv. Intell. Syst. 3 (4), 2000229. doi:10.1002/aisy.202000229

Mo, X., Huang, Z., Xing, Y., and Lv, C. (2022). Multi-agent Trajectory Prediction with Heterogeneous Edge-Enhanced Graph Attention Network. IEEE Trans. Intell. Transportation Syst. doi:10.1109/tits.2022.3146300

Nunes, A., Reimer, B., and Coughlin, J. F. (2018). People Must Retain Control of Autonomous Vehicles. Nature 556 (7700), 169–171. doi:10.1038/d41586-018-04158-5

Rossi, S., Rossi, A., and Dautenhahn, K. (2020). The Secret Life of Robots: Perspectives and Challenges for Robot's Behaviours during Non-interactive Tasks. Int. J. Soc. Robotics 12 (6), 1265–1278. doi:10.1007/s12369-020-00650-z

Scassellati, B., and Vázquez, M. (2020). The Potential of Socially Assistive Robots during Infectious Disease Outbreaks. Sci. Robot. 5, eabc9014. doi:10.1126/scirobotics.abc9014

Su, H., Qi, W., Yang, C., Sandoval, J., Ferrigno, G., and Momi, E. D. (2020). Deep Neural Network Approach in Robot Tool Dynamics Identification for Bilateral Teleoperation. IEEE Robot. Autom. Lett. 5 (2), 2943–2949. doi:10.1109/lra.2020.2974445

Torresen, J. (2018). A Review of Future and Ethical Perspectives of Robotics and AI. Front. Robot. AI 4, 75. doi:10.3389/frobt.2017.00075

Wu, J., Huang, Z., Huang, C., Hu, Z., Hang, P., Xing, Y., et al. (2021). Human-in-the-loop Deep Reinforcement Learning with Application to Autonomous Driving. arXiv:2104.07246.

Keywords: human-machine telecollaboration, hybrid intelligence, autonomous robots, COVID-19 pandemic, human-robot interaction

Citation: Hu Z, Zhang Y, Li Q and Lv C (2022) Human–Machine Telecollaboration Accelerates the Safe Deployment of Large-Scale Autonomous Robots During the COVID-19 Pandemic. Front. Robot. AI 9:853828. doi: 10.3389/frobt.2022.853828

Received: 13 January 2022; Accepted: 30 March 2022;

Published: 13 April 2022.

Edited by:

Yanan Li, University of Sussex, United Kingdom

Reviewed by:

Jing Luo, Changsha University of Science and Technology, China

Shuo Cheng, The University of Tokyo, Japan

Copyright © 2022 Hu, Zhang, Li and Lv. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chen Lv, lyuchen@ntu.edu.sg
