Opinion attribution improves motivation to exchange subjective opinions with humanoid robots

- ¹Graduate School of Engineering Science, Osaka University, Osaka, Japan

- ²Advanced Telecommunications Research Institute International (ATR), Kyoto, Japan

- ³RIKEN, Kyoto, Japan

In recent years, the development of robots that can engage in non-task-oriented dialogue with people, such as chat, has received increasing attention. This study aims to clarify the factors that improve the user’s willingness to talk with robots in non-task-oriented dialogues (e.g., chat). A previous study reported that exchanging subjective opinions makes such dialogue enjoyable and enthusiastic. In some cases, however, the robot’s subjective opinions are not realistic, i.e., the user believes that the robot does not have such opinions and therefore cannot attribute them to the robot. For example, if a robot says that alcohol tastes good, it may be difficult to imagine the robot actually holding that opinion. In such cases, the user’s motivation to exchange opinions may decrease. In this study, we hypothesize that, regardless of the type of robot, opinion attribution affects the user’s motivation to exchange opinions with humanoid robots. We examined this effect by preparing a variety of opinions and presenting two kinds of humanoid robots. The experimental results suggest that not only the users’ interest in the topic but also whether they attribute the subjective opinions to the robots influences their motivation to exchange opinions. A further analysis revealed that motivation was significantly higher for the android than for the small robot when users were interested in the topic but did not attribute the opinion, whereas it was significantly higher for the small robot when users were not interested in the topic but attributed the opinion. Because the android was more motivating when users were interested in the topic even if they did not attribute the opinion, androids may be useful in situations involving opinions that cannot be attributed to humanoid robots.

1 Introduction

This study aims to clarify the factors that improve the user’s willingness to talk with robots in non-task-oriented dialogues (e.g., chat). Such robots are expected to play an important role in various applications, such as communication support for elderly people (Su et al., 2017). Moreover, as the importance of communication is increasingly recognized, there is great social demand for dialogue robots.

The spoken dialogue systems required for these robots can be classified into two types (Chen et al., 2017): task-oriented (e.g., Williams and Young, 2007) and non-task-oriented (e.g., Wallace, 2009) dialogue. In task-oriented dialogue, the dialogue strategy aims to achieve a specific goal, such as finding products (Yan et al., 2017) or reserving seats (Seneff and Polifroni, 2000). In non-task-oriented dialogue, by contrast, the goal is to continue the dialogue itself by stimulating the user’s motivation. Hence, many studies have aimed at generating linguistically connected utterances. ELIZA (Weizenbaum, 1966), a famous non-task-oriented dialogue system, uses a dialogue database and response patterns that enable it to continue a dialogue by reusing parts of the user’s speech without limiting the topic. Recently, some studies have aimed to generate system utterances with more natural connections by selecting candidate utterances based on human-human dialogue data (Huang et al., 2022). Other studies have focused on dialogue breakdown detection, aiming to avoid utterances that lack linguistic connections (Higashinaka et al., 2016). While linguistic consistency is an essential part of dialogue, stimulating the user’s motivation to talk is also necessary for non-task-oriented dialogue. Even if the utterances are linguistically correct, the user will soon get bored without the motivation to talk to the system. Therefore, to continue a dialogue, the system should stimulate the user’s desire to interact.

One method to stimulate the user’s motivation is to recognize the user’s interests and talk about them. Some studies have proposed methods to estimate the user’s current level of interest from various types of information (e.g., Hirayama et al., 2011). Uchida et al. developed a dialogue system that estimates the user’s preferences (Uchida et al., 2021). Other methods estimate user interests from linguistic information (Chen et al., 2022) or from a combination of linguistic and non-linguistic information (Yu et al., 2019).

A previous study reported that exchanging subjective opinions makes chats enjoyable and enthusiastic (Tokuhisa and Terashima, 2009). Exchanging subjective opinions can be a form of self-disclosure, which plays an important role in the development of intimacy in interpersonal relationships (Altman and Taylor, 1973). Aligning internal states in communication builds mutual trust by making the participants’ action choices more predictable (Katagiri et al., 2013). Yuan et al. developed a system that achieves human-robot mental alignment (Yuan et al., 2022) in the context of explainable artificial intelligence (XAI) (Edmonds et al., 2019). Therefore, exchanging subjective opinions is effective for building intimacy with the interlocutor and can be a means of stimulating motivation for dialogue.

In this study, we define a subjective opinion as “an evaluation made by an individual on a target.” For example, if the robot evaluates pasta as tasty, the robot’s subjective opinion is “the pasta is tasty.” From the previous studies mentioned above, we infer that expressing subjective opinions can be an effective way for robots to make dialogue enjoyable and enthusiastic for the user. However, another study has stated that people hardly attribute subjective experiences related to value (good or bad) to robots (Sytsma and Machery, 2010). Therefore, even if the robot states that a food tastes good, the user is unlikely to attribute the experience of eating the food to it or to believe that the robot actually holds that opinion. Thus, in some cases, the robot’s subjective opinions are not realistic, i.e., the user does not feel that the robot has its own opinions. This study refers to this phenomenon as “the user does not attribute subjective opinions to the robot.” We speculate that this phenomenon may reduce the user’s motivation to exchange opinions with the robot.

This study hypothesizes that, regardless of the type of robot, opinion attribution affects the user’s motivation to exchange opinions with robots. For example, if a robot says that alcohol tastes good, it may be difficult to imagine the robot actually holding that opinion. On such topics, the user’s motivation to exchange opinions is likely to decrease. The contribution of this study is to show that opinion attribution is important for exchanging opinions with robots and to generalize the findings of previous studies to communication robots. Furthermore, people interact differently with different types of agents (Uchida et al., 2017a; 2020). By considering the robot’s appearance and its impact on dialogue, we also discuss strategies for selecting the type and embodiment of communication robots for different classes of dialogue. In summary, the contributions of this study are as follows:

• To clarify the influence of opinion attribution to humanoid robots on users’ motivation to exchange opinions with them.

• To clarify the topics that improve the user’s motivation to exchange opinions with each type of humanoid robot.

2 Related work

Our study is related to a cognitive phenomenon: mental state attribution. It is defined as “the cognitive capacity to reflect upon one’s own and other persons’ mental states such as beliefs, desires, feelings and intentions” (Brüne et al., 2007) and helps us understand others (Dennett, 1989; Mithen, 1998; Epley et al., 2007; Heider, 2013). Many previous studies reported that a more human-like appearance increases the degree of mental state attribution to robots (Krach et al., 2008; Broadbent et al., 2013; Takahashi et al., 2014; Martini et al., 2016; 2015; Xu and Sar, 2018; Banks, 2020; Manzi et al., 2020). In contrast, our study specifically focuses on the effect of opinion attribution on users’ motivation to exchange opinions with humanoid robots. Another previous study investigated the effect of the robot’s appearance on users’ cooperative attitude toward it (Goetz et al., 2003). Whereas that study investigated users’ cooperative attitudes in a task, this study focuses on the content of the robot’s speech.

In our previous study, we investigated the effect of opinion attribution on motivation to talk with a human-like android (Uchida et al., 2019). We asked participants to evaluate, for a variety of opinions (e.g., the taste of food and the performance of a computer), whether they attributed each opinion to the android and whether they wanted to talk with it. We found that both the user’s interest in the android’s opinions and the attribution of the subjective opinions to it influence their motivation for dialogue. Because that study used only the android, the result could not be generalized to all dialogue robots. In addition, it evaluated motivation with the question “whether or not you want to dialogue” (Uchida et al., 2019). Since dialogue involves various factors, it remained unclear whether opinion attribution specifically influences the exchange of subjective opinions.

Recently, there have been many studies on artificial systems that engage users in conversations such as chatting, aiming to continue the dialogue itself (i.e., non-task-oriented dialogue). This trend has been driven by developments in natural language processing (e.g., GPT-4 (OpenAI, 2023)) and in speech recognition technology (Baevski et al., 2020), which has made tremendous progress in recognizing user speech. However, long-term non-task-oriented dialogues with users comparable to human-human dialogues have not yet been realized. For such long-term dialogues, it is important to exchange subjective opinions (Tokuhisa and Terashima, 2009). However, it has been suggested that when an android with a human-like appearance expresses a subjective opinion that cannot be attributed to it, the user’s motivation to talk decreases (Uchida et al., 2019). In this study, we examined whether this finding applies to other humanoid robots and how opinion attribution and motivation to exchange opinions differ depending on the type of robot.

3 Materials and methods

We adopted the same experimental procedure as the previous study (Uchida et al., 2019), using an android (ERICA (Glas et al., 2016)) and a small robot (SOTA). The android is a humanoid robot that closely resembles a human in appearance, whereas the small robot has a machine-like appearance. The hypothesis of this experiment is that, regardless of the type of robot, both attributing opinions to the robot and the user’s interest in the topic increase the motivation to exchange opinions.

3.1 Condition

We investigated the above hypothesis by preparing various opinion items and asking participants to evaluate whether they believe that the robot can evaluate the target of each opinion and whether they want to talk with the robot about it. Some studies in human-robot interaction (HRI) use video-based evaluations (Woods et al., 2006; Maeda et al., 2021). A previous study showed that video and live evaluations can be similar, confirming moderate to high agreement between evaluations of a robot shown in a video and of the same robot in a real environment (Woods et al., 2006). In studies of mental state attribution, most papers presented participants with some kind of robot representation as the stimulus, and video was reported to be the second most common type of representation (Thellman et al., 2022). Following the previous study that directly examined the effect of opinion attribution on dialogue motivation (Uchida et al., 2019), this study also evaluates the robots using videos.

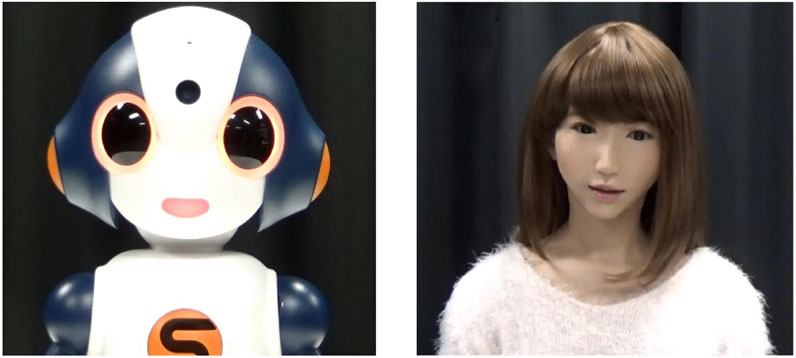

We prepared two conditions, one for evaluating the android and the other for evaluating the small robot. Each participant evaluated both conditions, which allowed them to apply the same personal criteria to both robots when attributing opinions and when evaluating the topics on which they would like to exchange opinions. The order in which participants watched the robot videos was counterbalanced. Because participants might imagine different robots from written instructions alone, we controlled their background knowledge by showing an introductory video for each robot (small robot: https://youtu.be/5SD8PL9rRgo, android: https://youtu.be/j1RJsN3p4JY), which also informed them that the robots are dialogue robots. Screenshots of the android and the small robot are shown in Figure 1.

In the videos, the android says “I am an android,” and the small robot says “I am a robot.” Each video is 5 s long. The robots said nothing beyond these lines so that the script would not affect the evaluation of opinion attribution. The android has an extremely human-like appearance and 44 degrees of freedom (DOF). Its lip, head, and torso movements are automatically generated from its voice using systems developed in previous studies (Ishi et al., 2012; Sakai et al., 2016). It also blinks at random timings. The small robot is table-top sized and has 8 DOF. Its lip movements were expressed by switching an LED at its mouth on and off in synchronization with its voice, using a function installed in the robot by default.

3.2 Procedure

Participants watched one robot’s video and then answered a preliminary questionnaire and a main questionnaire about that robot. They then repeated this procedure for the other robot. In the preliminary questionnaire, participants answered the questions below, which assess their knowledge of the robots and their impressions of them.

• preQ1 (Knowledge): “Do you know the android/robot you just saw in the video?” (I know/I do not know)

• preQ2 (Age): “How old do you think the android/robot you just saw in the video is?”

• preQ3 (Interest in robots): “How interested are you in the android/robot you just saw in the video?” (0. not interested, 1. slightly interested, 2. interested, and 3. very interested)

• preQ4 (Preference): “How much did you like the android/robot you just saw in the video?” (0. I did not like it, 1. I liked it a little, 2. I liked it, and 3. I liked it a lot)

For each robot, participants chose one option for each question. The numbers of the chosen options in preQ3 and preQ4 are used as scores in the analysis. We designed preQ3 and preQ4 based on a previous study that evaluated impressions of robots (Matsumoto, 2021).

If participants evaluated whether they attribute a specific opinion (e.g., “curry is delicious”) to the robots and whether they want to talk with them about it, their motivation to exchange opinions might depend not only on whether the opinion can be attributed but also on whether they agree or disagree with it. Therefore, in the main questionnaire, we asked participants to evaluate whether the robot can judge each opinion (e.g., “taste of food”) and whether they want to talk with the robot about it. We prepared 100 opinions, such as “taste of food,” “beauty of paintings,” and “difficulty of mathematics.” We refer to the target of an opinion (e.g., food) as a “topic.” Following the definition of a subjective opinion (an evaluation of a target), each opinion was created by combining a topic (e.g., food) with the noun form of an adjective (e.g., deliciousness of food). The topics were selected from a list of 100 topics in a previous study (Yamauchi et al., 2013), and for each topic one adjective was selected from a list of 100 adjectives (Mizukami, 2014).
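As a minimal sketch of how such items can be represented (our own illustration, not the authors’ materials), each opinion below pairs a topic with the noun form of an adjective and is phrased without a stance, so participants judge whether the robot could evaluate the target rather than whether they agree with a verdict. The class name `Opinion` and its fields are hypothetical; the example items are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    """Hypothetical representation of one questionnaire item."""
    topic: str    # target of the evaluation, e.g. "food"
    quality: str  # noun form of an adjective, e.g. "deliciousness"

    def text(self) -> str:
        # Phrased as "<quality> of <topic>": the item names what is evaluated
        # but states no verdict (unlike "curry is delicious").
        return f"{self.quality} of {self.topic}"


# Example items mentioned in the text; the full list of 100 is in the Supplementary Appendix.
examples = [
    Opinion("food", "deliciousness"),
    Opinion("paintings", "beauty"),
    Opinion("mathematics", "difficulty"),
]

for item in examples:
    print(item.text())
```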

For each opinion, participants evaluated whether they thought the robot could judge it and whether they wanted to talk about it with the robot. Since the motivation to exchange opinions is also affected by interest in the topic, we also asked them to evaluate how interested they were in the topic. We used the following questions.

• Q1 (Motivation to exchange opinions): “On each topic, how much do you want to exchange opinions with the android/robot you just saw in the video?” (0. I do not agree, 1. I slightly agree, 2. I agree, and 3. I extremely agree)

• Q2 (Opinion attribution): “For each topic, do you think the android/robot you just saw in the video can judge it? For example, regarding ‘Interestingness of TV programs,’ please answer ‘I do not agree’ if you think the android cannot judge whether a TV program is interesting, and ‘I slightly agree,’ ‘I agree,’ or ‘I extremely agree’ according to the degree to which you think it can.” (0. I do not agree, 1. I slightly agree, 2. I agree, and 3. I extremely agree)

• Q3 (Interest in topic): “How much interest do you have in each topic?” (0. not interested, 1. slightly interested, 2. interested, and 3. extremely interested)

For each topic, participants chose one of the four options for each question. The numbers of the chosen options are used as scores in the analysis. We did not use a conventional Likert scale because its neutral option (“neither”) would have no clear interpretation as an intermediate category in this experiment; hence, we adopted the four options above.

4 Results

Ninety-five Japanese people (48 males and 47 females; mean age, 24.747 years; variance, 6.041) participated in this experiment. They were recruited via crowdsourcing.

4.1 Knowledge and impression

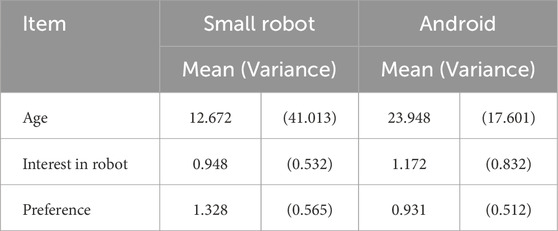

We evaluated the participants’ knowledge of the robots and their impressions of them in the preliminary questionnaire. Of the participants, 18.947% knew the android and 30.526% knew the small robot. Because knowledge of the robots could bias the results of the experiment, we limited the following analysis to the 58 participants (30 males and 28 females; mean age, 24.638 years; variance, 5.679) who knew neither robot. The results of the other items in the preliminary questionnaire are shown in Table 1.
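The reported participant figures can be cross-checked with simple arithmetic. The sketch below assumes that both percentages are relative to all 95 participants and that the 58 retained participants are exactly those who knew neither robot; under those assumptions, inclusion-exclusion implies that 10 participants knew both robots. These derived counts are a back-calculation for illustration, not numbers reported by the study.

```python
TOTAL = 95
KNEW_NEITHER = 58  # participants kept for the analysis

# Back-calculate counts from the reported percentages (assumption: base = all 95 participants).
knew_android = round(TOTAL * 18.947 / 100)  # 17.99965 rounds to 18
knew_small = round(TOTAL * 30.526 / 100)    # 28.9997 rounds to 29

knew_either = TOTAL - KNEW_NEITHER                   # excluded participants
knew_both = knew_android + knew_small - knew_either  # inclusion-exclusion

print(knew_android, knew_small, knew_either, knew_both)  # 18 29 37 10
```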

4.2 Relationship between motivation to exchange opinions and interest in topic/opinion attribution

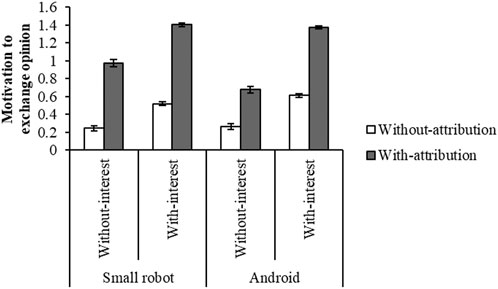

We investigated the effects of opinion attribution to the robots and of interest in the topic on the motivation to exchange opinions. Regarding opinion attribution (Q2), we divided the data into two groups: a with-attribution group (“1. I slightly agree, 2. I agree, and 3. I extremely agree”) and a without-attribution group (“0. I do not agree”). Of the 11,600 ratings (58 participants × 100 opinions × 2 robots), 5,724 were without attribution and 5,876 were with attribution; thus, 50.655% of the ratings were with attribution. Similarly, regarding interest in the topic (Q3), we divided the data into two groups: a with-interest group (“1. slightly interested, 2. interested, 3. extremely interested”) and a without-interest group (“0. not interested”). Figure 2 shows the mean and standard error of the motivation to exchange opinions for each condition.
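The dichotomization and the per-condition summary can be sketched as follows. This is an illustration under stated assumptions: each rating is assumed to be a tuple `(robot, q1, q2, q3)` of 0 to 3 scores, one per participant, opinion, and robot, and the standard error is taken as the sample standard deviation divided by the square root of the cell size. The function names are hypothetical, and the authors may have computed these quantities differently.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev


def has_attribution(q2: int) -> bool:
    # 0 = "I do not agree" -> without attribution; 1 to 3 -> with attribution
    return q2 >= 1


def has_interest(q3: int) -> bool:
    # 0 = "not interested" -> without interest; 1 to 3 -> with interest
    return q3 >= 1


def cell_summary(ratings):
    """ratings: iterable of (robot, q1, q2, q3) tuples.
    Returns {(robot, interest, attribution): (mean Q1, standard error, n)}."""
    cells = defaultdict(list)
    for robot, q1, q2, q3 in ratings:
        cells[(robot, has_interest(q3), has_attribution(q2))].append(q1)
    summary = {}
    for key, values in cells.items():
        # A standard error needs at least two values in the cell.
        se = stdev(values) / sqrt(len(values)) if len(values) > 1 else float("nan")
        summary[key] = (mean(values), se, len(values))
    return summary


# Reported counts: 5,876 of the 11,600 ratings (58 x 100 x 2) were with attribution.
share_with_attribution = 100 * 5876 / (58 * 100 * 2)
print(round(share_with_attribution, 3))
```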

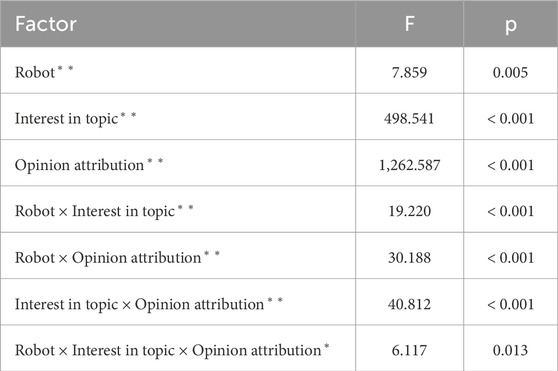

A three-way ANOVA (robot × interest in topic × opinion attribution), conducted using the statistical software HAD (Shimizu, 2016), revealed significant main effects of the robot (F (1, 11,592) = 7.859, p = 0.005), interest in topic (F (1, 11,592) = 498.541, p < 0.001), and opinion attribution (F (1, 11,592) = 1,262.587, p < 0.001). It also revealed significant two-way interactions of robot × interest in topic (F (1, 11,592) = 19.220, p < 0.001), robot × opinion attribution (F (1, 11,592) = 30.188, p < 0.001), and interest in topic × opinion attribution (F (1, 11,592) = 40.812, p < 0.001), as well as a significant three-way interaction of robot × interest in topic × opinion attribution (F (1, 11,592) = 6.117, p = 0.013), as shown in Table 2.
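Two details of the reported statistics can be checked by hand. First, the error degrees of freedom, 11,592, equal the 11,600 ratings minus the 8 cells of the 2 × 2 × 2 design, which is consistent with each rating being treated as one observation. Second, because F(1, ν) is the square of a t statistic with ν degrees of freedom and ν is very large here, p is well approximated by the two-sided normal tail, erfc(√(F/2)). The sketch below uses only Python’s standard library; the helper names are hypothetical, and it is an approximation for checking, not a reimplementation of HAD.

```python
from math import erfc, sqrt


def error_df(n_obs: int, levels=(2, 2, 2)) -> int:
    """Residual df of a full-factorial between-groups ANOVA: N minus the number of cells."""
    cells = 1
    for k in levels:
        cells *= k
    return n_obs - cells


def p_from_f1(f: float) -> float:
    """Approximate p for F(1, df2) when df2 is very large.

    F(1, df2) equals t**2 with df2 degrees of freedom; for df2 around 11,592 the
    t distribution is practically standard normal, so p ~ P(|Z| > sqrt(F)),
    which equals erfc(sqrt(F / 2)).
    """
    return erfc(sqrt(f / 2))


print(error_df(58 * 100 * 2))  # 11592
for f in (7.859, 6.117, 8.943):
    print(f, round(p_from_f1(f), 3))
```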

Since the three-way interaction was significant, we conducted simple interaction effect tests. They revealed a significant simple interaction effect of robot × interest in topic in the with-attribution condition (F (1, 11,592) = 21.380, p < 0.001). Since this simple interaction effect was significant, we conducted simple-simple main effect tests. They revealed significant simple-simple main effects of opinion attribution in the small robot and without-interest condition (F (1, 11,592) = 225.866, p < 0.001), in the small robot and with-interest condition (F (1, 11,592) = 1,002.086, p < 0.001), in the android and without-interest condition (F (1, 11,592) = 77.061, p < 0.001), and in the android and with-interest condition (F (1, 11,592) = 732.093, p < 0.001).

Another simple interaction effect test revealed significant simple interaction effects of robot × opinion attribution in both the without-interest condition (F (1, 11,592) = 21.307, p < 0.001) and the with-interest condition (F (1, 11,592) = 8.943, p = 0.003). The subsequent simple-simple main effect tests revealed significant simple-simple main effects of interest in the small robot and without-attribution condition (F (1, 11,592) = 58.846, p < 0.001), in the small robot and with-attribution condition (F (1, 11,592) = 100.192, p < 0.001), in the android and without-attribution condition (F (1, 11,592) = 82.584, p < 0.001), and in the android and with-attribution condition (F (1, 11,592) = 314.164, p < 0.001).

Another simple interaction effect test revealed significant simple interaction effects of interest in topic × opinion attribution in both the small robot condition (F (1, 11,592) = 7.558, p = 0.006) and the android condition (F (1, 11,592) = 39.829, p < 0.001). The subsequent simple-simple main effect tests revealed significant simple-simple main effects of the robot in the without-interest and with-attribution condition (F (1, 11,592) = 33.256, p < 0.001) and in the with-interest and without-attribution condition (F (1, 11,592) = 9.663, p = 0.002).

These results suggest that not only the user’s interest in the topic but also opinion attribution to the robot increases the motivation to exchange opinions, regardless of the robot type. Moreover, motivation was significantly higher for the android than for the small robot when participants were interested in the topic but did not attribute the opinion, whereas it was significantly higher for the small robot when participants were not interested in the topic but attributed the opinion.

4.3 Motivation to exchange opinions and opinion attribution for each robot and topic field

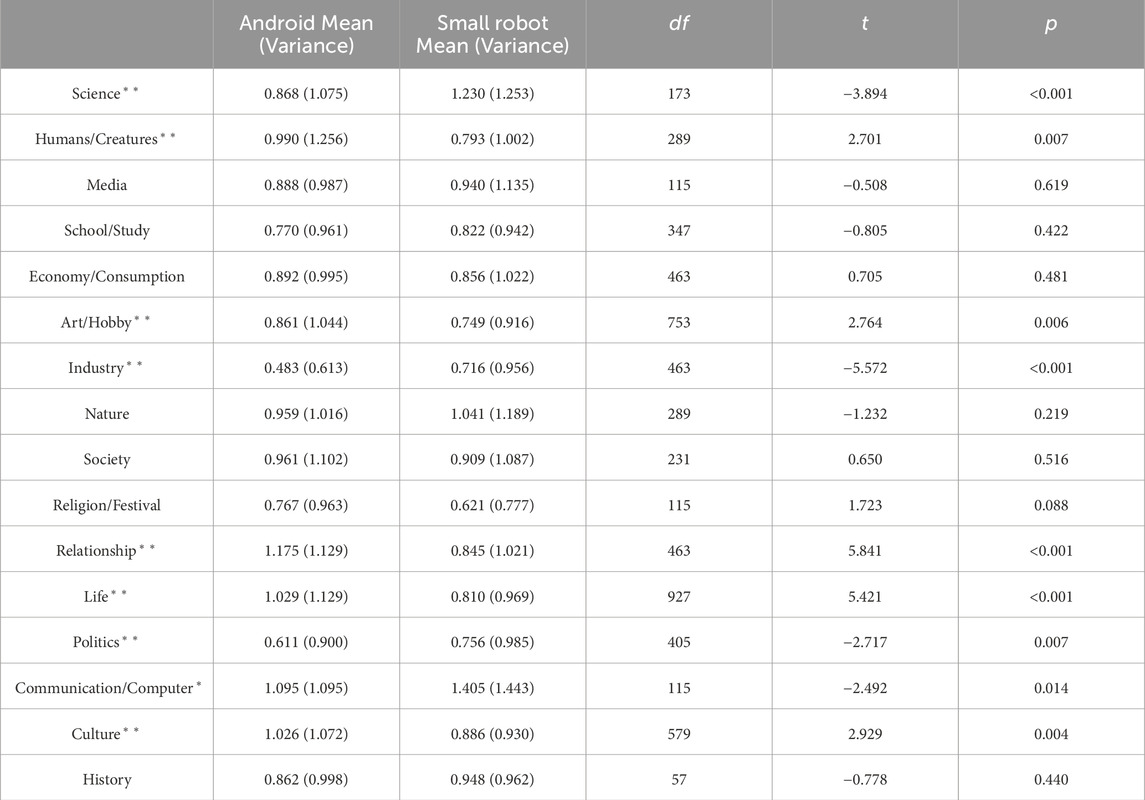

We also analyzed the motivation to exchange opinions and the opinion attribution by topic field as well as by robot type. The fields follow the categorization in a previous study (Yamauchi et al., 2013) and are listed in the Supplementary Appendix of this paper. Table 3 shows the mean and variance of the motivation for each robot by topic field and in total. The score scale is [0, 3]. Paired t-tests comparing the mean motivation between robots in each topic field revealed significant differences in the following topic fields: “Science” (t (173) = −3.894, p < 0.001), “Humans/Creatures” (t (289) = 2.701, p = 0.007), “Art/Hobby” (t (753) = 2.764, p = 0.006), “Industry” (t (463) = −5.572, p < 0.001), “Relationship” (t (463) = 5.841, p < 0.001), “Life” (t (927) = 5.421, p < 0.001), “Politics” (t (405) = −2.717, p = 0.007), “Communication/Computer” (t (115) = −2.492, p = 0.014), and “Culture” (t (579) = 2.929, p = 0.004). This result suggests that people are more willing to exchange opinions with the android in Humans/Creatures (88–92), Art/Hobby (41–53), Relationship (27–34), Life (11–26), and Culture (1–10), whereas they are more willing to do so with the small robot in Science (98–100), Industry (69–76), Politics (81–87), and Communication/Computer (59–60). The numbers in parentheses after each topic field denote the opinion numbers in the Supplementary Appendix.
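The degrees of freedom reported above equal 58 × (number of opinions in the field) − 1, for example 58 × 3 − 1 = 173 for Science (opinions 98–100), which is consistent with pairing each participant’s android and small-robot ratings of the same opinion. A minimal paired t-test under that assumption is sketched below; differences are taken as android minus small robot, matching the sign convention in which negative t values indicate higher scores for the small robot. The helper names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

N_PARTICIPANTS = 58


def paired_t(android, small_robot):
    """Paired t-test on matched ratings (same participant, same opinion).

    Differences are android minus small robot, so a negative t means the
    small robot scored higher. Returns (t, degrees of freedom).
    """
    if len(android) != len(small_robot) or len(android) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [a - s for a, s in zip(android, small_robot)]
    t = mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))
    return t, len(diffs) - 1


def field_df(first: int, last: int, n_participants: int = N_PARTICIPANTS) -> int:
    """df for a field spanning opinion numbers first..last: number of pairs minus one."""
    return n_participants * (last - first + 1) - 1


print(field_df(98, 100))  # Science: 173
print(field_df(11, 26))   # Life: 927
```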

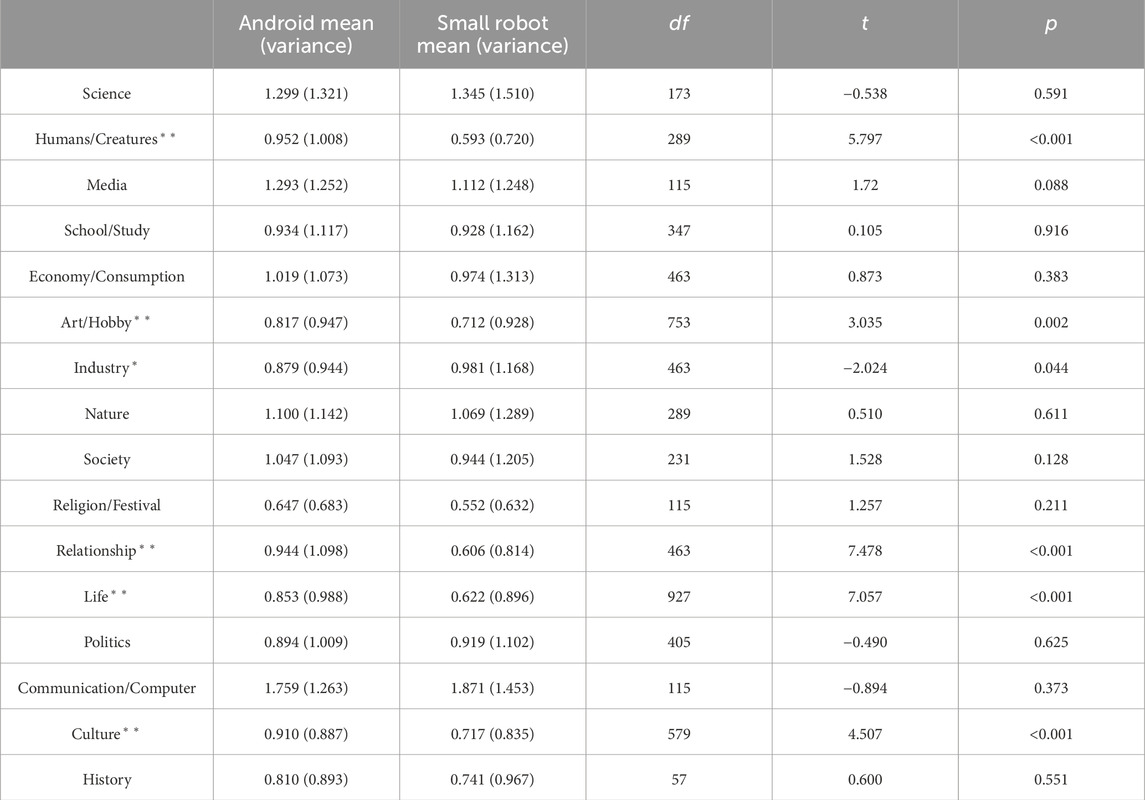

Table 4 lists the mean and variance of the opinion attribution for each robot by topic field. The score scale is [0, 3]. Paired t-tests comparing the mean opinion attribution between robots in each topic field revealed significant differences in the following topic fields: “Humans/Creatures” (t (289) = 5.797, p < 0.001), “Art/Hobby” (t (753) = 3.035, p = 0.002), “Industry” (t (463) = −2.024, p = 0.044), “Relationship” (t (463) = 7.478, p < 0.001), “Life” (t (927) = 7.057, p < 0.001), and “Culture” (t (579) = 4.507, p < 0.001). This result suggests that people attribute opinions more to the android in Humans/Creatures (88–92), Art/Hobby (41–53), Relationship (27–34), Life (11–26), and Culture (1–10), whereas they attribute opinions more to the small robot in Industry (69–76). The numbers in parentheses after each topic field denote the opinion numbers in the Supplementary Appendix.

5 Discussion

In this study, we hypothesized that opinion attribution affects the user’s motivation to exchange opinions with humanoid robots. The experimental results suggest that this attribution improves their motivation. Moreover, approximately half of the subjective opinions were attributed to the humanoid robots; this proportion may increase as robotics and AI technologies develop. Another analysis revealed that motivation was significantly higher for the android when participants were interested in the topic but did not attribute the opinion, whereas it was significantly higher for the small robot when they were not interested but attributed the opinion. The results of this experiment supported the hypothesis and suggest that the importance of opinion attribution can be generalized to humanoid robots. The contributions of this study are that we clarified the influence of opinion attribution to humanoid robots on users’ motivation to exchange opinions with them and identified the topics that improve the user’s motivation to exchange opinions with each type of humanoid robot. While interest in a topic is a self-oriented factor formed by one’s past experiences, opinion attribution is an other-oriented factor formed by the conversation partner (here, the android and the small robot). Based on these findings, both self-oriented and other-oriented factors must be considered when designing dialogue robots that stimulate the user’s motivation to exchange opinions.

Many dialogue systems generate their utterances by referring to human-human dialogue datasets (e.g., Huang et al., 2022). These datasets likely also contain utterances expressing subjective opinions that cannot be attributed to a robot. Based on the results of this study, when implementing a dialogue system on a robot, it is necessary to consider not only the linguistic connection of utterances but also whether the subjective opinions can be attributed to the robot. One strategy to avoid this problem is to avoid topics on which the user cannot attribute opinions to the robot. However, given robots’ abilities, few opinions can be attributed to them, which means that the subjective opinions that can be exchanged between the user and the robot are extremely limited. Even if a robot told us that the coffee it had was excellent, we would not believe that it understands the taste of coffee. Yet we frequently talk about topics such as the taste of coffee, and this limitation could make the user lose motivation to exchange opinions with the robot. To stimulate the user’s motivation on any topic, it is necessary to make the user imagine that the robot can judge the target topic (i.e., to make the user attribute the opinion to the robot).

Moreover, we investigated the effect of the robot type on the motivation to exchange opinions. Motivation was significantly higher for the android when participants were interested in the topic but did not attribute the opinion, whereas it was significantly higher for the small robot when they were not interested but attributed the opinion. Situations in which people are interested in a topic but do not attribute opinions include chatting about casual things; androids could be useful in such interactions. Situations in which people are not interested but attribute opinions include listening to a lecture on a subject that does not interest them; the small robot may be useful in such educational situations. Furthermore, the finding that people want to exchange opinions with androids on topics that interest them, even without attributing the opinions to the androids, indicates the usefulness of androids as dialogue robots.

In this study, we further compared the motivation and the opinion attribution between robots for each topic field. The motivation scores for humans/creatures, art/hobby, relationship, life, and culture were significantly higher for the android. Many of these fields are related to humans and their society, which may be because the android’s appearance closely resembles a human’s. In contrast, the scores for science, industry, politics, and communication/computer were significantly higher for the small robot. These fields are mostly related to science and technology, which may be attributed to the small robot’s non-human appearance. We also examine whether the mean scores exceed 1, since “disagree” is scored as 0 and “slightly agree” as 1 in the questionnaire. For both robots, the mean scores exceeded 1 only for communication/computer, probably because robots are an embodiment of information technology. The motivation scores did not exceed 2 for any topic field (see Table 3), which indicates that the motivation is not high. This may be because the interaction was limited to a short video observation, which was not enough to arouse the desire to exchange opinions. The Uncanny Valley (Mori et al., 2012) is a phenomenon in which extremely human-like artificial entities can appear uncanny. Research on this phenomenon investigates familiarity, which differs from the motivation to exchange opinions targeted in this study. Nevertheless, it is worth discussing the relationship with this phenomenon. Our results on motivation show that the android scores significantly higher in some cases and the small robot in others. Thus, it may be difficult to explain the motivation solely on the basis of the robots’ human-likeness. It should also be noted that there are cases in which this phenomenon does not apply to androids (Bartneck et al., 2009).

Next, we discuss the opinion attribution between robots for each topic field. In the topic fields of humans/creatures, art/hobby, relationship, life, and culture, more subjective opinions were attributed to the android than to the small robot, whereas in industry, more were attributed to the small robot. These results resemble those for motivation, and the reasons may also be similar. Many previous studies have shown that the more human-like robots become, the more mental states are attributed to them (Krach et al., 2008; Broadbent et al., 2013; Takahashi et al., 2014; Martini et al., 2016; 2015; Xu and Sar, 2018; Banks, 2020; Manzi et al., 2020). Although opinions can be considered part of mental states, the results of this study suggest that, for some topics, people attribute opinions more to a small robot than to a more human-like android. This may indicate that topics and tasks should be considered when addressing the attribution issue. For both robots, the topic fields with mean scores above 1 were science, media, nature, and communication/computer. This may derive from the characteristics of robots themselves, for the same reason discussed for motivation. The topic fields whose mean scores exceeded 1 for opinion attribution but not for motivation were science, media, and nature. Although these topic fields have a relatively high degree of opinion attribution to the robots, they may not stimulate a desire for dialogue because exchanging opinions about them may be limited to specific groups, such as experts in those fields. The attribution scores did not exceed 2 for any topic field (see Table 4), which indicates that the attribution is not high. This may also stem from the short video observation.

Here, we discuss the relationship between robots' appearance (embodiment) and intelligence. The degree of opinion attribution is strongly influenced by the level of development of robot and AI technologies. The results of this study showed that the degree of opinion attribution differs between the two types of robot, presumably because they look different. Because attributing an opinion implies attributing the ability to make judgments, this may indicate that appearance influences perceived intelligence. Previous studies have also reported that the appearance of robots and agents affects people's impressions of them (Uchida et al., 2017b). The results of this study may help clarify this relationship. Since not only appearance but also voice may have an influence, various communication modalities should be investigated. It is also necessary to examine how opinion attribution is affected by the presence or absence of a physical body, for example, by comparing a robot in front of the user with a virtual agent (e.g., Kidd and Breazeal, 2004; Powers et al., 2007) or a text chatbot system (e.g., Shuster et al., 2022).

Finally, we address the limitations of this study. Following the previous study (Uchida et al., 2019), this study evaluated the robots through videos. The results may differ between video-based evaluation and evaluation in which participants actually see the robots, because video conveys less information about the robots' modalities. As the android has a body that closely resembles a human's and can interact through various modalities (e.g., gestures), opinion attribution and motivation to exchange opinions may change through interaction. We used an android, a humanoid robot that closely resembles a human in appearance, and a small robot with a machine-like appearance; the results therefore may not generalize to all humanoid robots. Moreover, although significant differences were found in some evaluations, the absolute values are not large. Addressing this will require designing the interaction between the robot and the user. It is also necessary to examine how the evaluations change when the robots interact or perform tasks, rather than just introducing themselves unilaterally as in this study. Investigating the relationship between opinion attribution and motivation to exchange opinions through interaction with the robots is also left for future research. Although we adopted the topic classification used in a previous study (Yamauchi et al., 2013), the classification may vary depending on the participants. In addition, an opinion as defined in this study consists not only of a topic but also of an adjective (in noun form) paired with it; therefore, a systematic investigation of the types of adjectives is necessary. Furthermore, the results may vary with the participant group, specifically with participants' attributes (e.g., occupation and culture) and their knowledge of the robots. Future studies should take these attributes into account, as well as the participants' degree of involvement with robots in daily life.

6 Conclusion

This study explored how the user’s motivation to exchange opinions with humanoid robots is influenced by the attribution of opinions to them. We examined the effect by preparing various opinions for two kinds of humanoid robots. The experimental results suggest that not only the users’ interest in the topic but also the attribution of the subjective opinions to the robots influences their motivation to exchange opinions. Another analysis revealed that the android significantly increased motivation when participants were interested in the topic and did not attribute the opinions, whereas the small robot significantly increased it when participants were not interested and did attribute the opinions.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Advanced Telecommunications Research Institute International. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

TU, TM, and HI contributed to conception and design of the study. TU organized the database. TU performed the statistical analysis. TU wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by JST ERATO HI Symbiotic Human Robot Interaction Project JPMJER1401 (HI), JST PRESTO Grant Number JPMJPR23I2 (TU), and JSPS KAKENHI under grant numbers 19H05692 (TM), 19H05693 (HI), and 22K17949 (TU).

Acknowledgments

The authors would like to thank Midori Ban for assistance with the analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2024.1175879/full#supplementary-material

Footnotes

1https://www.vstone.co.jp/products/sota/

References

Altman, I., and Taylor, D. A. (1973). Social penetration: the development of interpersonal relationships. Holt, Rinehart and Winston.

Baevski, A., Zhou, Y., Mohamed, A., and Auli, M. (2020). wav2vec 2.0: a framework for self-supervised learning of speech representations. Adv. neural Inf. Process. Syst. 33, 12449–12460.

Banks, J. (2020). Theory of mind in social robots: replication of five established human tests. Int. J. Soc. Robotics 12, 403–414. doi:10.1007/s12369-019-00588-x

Bartneck, C., Kanda, T., Ishiguro, H., and Hagita, N. (2009). “My robotic doppelgänger-a critical look at the uncanny valley,” in RO-MAN 2009-The 18th IEEE international symposium on robot and human interactive communication (IEEE), 269–276.

Broadbent, E., Kumar, V., Li, X., Sollers, J., Stafford, R. Q., MacDonald, B. A., et al. (2013). Robots with display screens: a robot with a more humanlike face display is perceived to have more mind and a better personality. PloS one 8, e72589. doi:10.1371/journal.pone.0072589

Brüne, M., Abdel-Hamid, M., Lehmkämper, C., and Sonntag, C. (2007). Mental state attribution, neurocognitive functioning, and psychopathology: what predicts poor social competence in schizophrenia best? Schizophrenia Res. 92, 151–159. doi:10.1016/j.schres.2007.01.006

Chen, C., Wang, X., Yi, X., Wu, F., Xie, X., and Yan, R. (2022). “Personalized chit-chat generation for recommendation using external chat corpora,” in Proceedings of the 28th ACM SIGKDD conference on knowledge discovery and data mining, 2721–2731.

Chen, H., Liu, X., Yin, D., and Tang, J. (2017). A survey on dialogue systems: recent advances and new frontiers. Acm Sigkdd Explor. Newsl. 19, 25–35. doi:10.1145/3166054.3166058

Edmonds, M., Gao, F., Liu, H., Xie, X., Qi, S., Rothrock, B., et al. (2019). A tale of two explanations: enhancing human trust by explaining robot behavior. Sci. Robotics 4, eaay4663. doi:10.1126/scirobotics.aay4663

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi:10.1037/0033-295x.114.4.864

Glas, D. F., Minato, T., Ishi, C. T., Kawahara, T., and Ishiguro, H. (2016). “Erica: the erato intelligent conversational android,” in Robot and human interactive communication (RO-MAN), 2016 25th IEEE international symposium on (IEEE), 22–29.

Goetz, J., Kiesler, S., and Powers, A. (2003). “Matching robot appearance and behavior to tasks to improve human-robot cooperation,” in Robot and human interactive communication, 2003. Proceedings. ROMAN 2003. The 12th IEEE international workshop on (IEEE), 55–60.

Heider, F. (2013). The psychology of interpersonal relations. London, England, United Kingdom: Psychology Press.

Higashinaka, R., Funakoshi, K., Kobayashi, Y., and Inaba, M. (2016). “The dialogue breakdown detection challenge: task description, datasets, and evaluation metrics,” in Proceedings of the tenth international conference on language resources and evaluation. LREC’16, 3146–3150.

Hirayama, T., Sumi, Y., Kawahara, T., and Matsuyama, T. (2011). “Info-concierge: proactive multi-modal interaction through mind probing,” in The Asia Pacific signal and information processing association annual summit and conference (Elsevier).

Huang, Y., Wu, X., Chen, S., Hu, W., Zhu, Q., Feng, J., et al. (2022). Cmcc: a comprehensive and large-scale human-human dataset for dialogue systems. Proc. Towards Semi-Supervised Reinf. Task-Oriented Dialog Syst. (SereTOD) 23, 48–61. doi:10.1186/s12863-022-01065-7

Ishi, C. T., Liu, C., Ishiguro, H., and Hagita, N. (2012). “Evaluation of formant-based lip motion generation in tele-operated humanoid robots,” in 2012 IEEE/RSJ international conference on intelligent robots and systems (IEEE), 2377–2382.

Katagiri, Y., Takanashi, K., Ishizaki, M., Den, Y., and Enomoto, M. (2013). Concern alignment and trust in consensus-building dialogues. Procedia-Social Behav. Sci. 97, 422–428. doi:10.1016/j.sbspro.2013.10.254

Kidd, C. D., and Breazeal, C. (2004). “Effect of a robot on user perceptions,” in 2004 IEEE/RSJ international conference on intelligent robots and systems (IROS)(IEEE Cat. No. 04CH37566) (IEEE), 4, 3559–3564.

Krach, S., Hegel, F., Wrede, B., Sagerer, G., Binkofski, F., and Kircher, T. (2008). Can machines think? interaction and perspective taking with robots investigated via fmri. PloS one 3, e2597. doi:10.1371/journal.pone.0002597

Maeda, R., Brščić, D., and Kanda, T. (2021). Influencing moral behavior through mere observation of robot work: video-based survey on littering behavior. Proc. 2021 ACM/IEEE Int. Conf. human-robot Interact., 83–91. doi:10.1145/3434073.3444680

Manzi, F., Peretti, G., Di Dio, C., Cangelosi, A., Itakura, S., Kanda, T., et al. (2020). A robot is not worth another: exploring children’s mental state attribution to different humanoid robots. Front. Psychol. 11, 2011. doi:10.3389/fpsyg.2020.02011

Martini, M. C., Gonzalez, C. A., and Wiese, E. (2016). Seeing minds in others–can agents with robotic appearance have human-like preferences? PloS one 11, e0146310. doi:10.1371/journal.pone.0146310

Martini, M. C., Murtza, R., and Wiese, E. (2015). “Minimal physical features required for social robots,” in Proceedings of the human factors and ergonomics society annual meeting (Los Angeles, CA: SAGE Publications), 1438–1442.

Matsumoto, M. (2021). Fragile robot: the fragility of robots induces user attachment to robots. Int. J. Mech. Eng. Robotics Res. 10, 536–541. doi:10.18178/ijmerr.10.10.536-541

Mithen, S. J. (1998). The prehistory of the mind: a search for the origins of art, religion and science.

Mizukami, Y. (2014). Compiling synonym lists (adjectives) based on practical standards for Japanese language education. J. Jissen Jpn. Lang. Literature 85, 1–20. (in Japanese).

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robotics automation Mag. 19, 98–100. doi:10.1109/mra.2012.2192811

Powers, A., Kiesler, S., Fussell, S., and Torrey, C. (2007). Comparing a computer agent with a humanoid robot. Proc. ACM/IEEE Int. Conf. Human-robot Interact., 145–152. doi:10.1145/1228716.1228736

Sakai, K., Minato, T., Ishi, C. T., and Ishiguro, H. (2016). “Speech driven trunk motion generating system based on physical constraint,” in 2016 25th IEEE international symposium on robot and human interactive communication (RO-MAN) (IEEE), 232–239.

Seneff, S., and Polifroni, J. (2000). “Dialogue management in the mercury flight reservation system,” in Proceedings of the 2000 ANLP/NAACL workshop on conversational systems-volume 3 (Association for Computational Linguistics (ACL)), 11–16.

Shimizu, H. (2016). An introduction to the statistical free software HAD: suggestions to improve teaching, learning and practice data analysis. J. Media, Inf. Commun. 1, 59.

Shuster, K., Xu, J., Komeili, M., Ju, D., Smith, E. M., Roller, S., et al. (2022). Blenderbot 3: a deployed conversational agent that continually learns to responsibly engage.

Su, M.-H., Wu, C.-H., Huang, K.-Y., Hong, Q.-B., and Wang, H.-M. (2017). “A chatbot using lstm-based multi-layer embedding for elderly care,” in 2017 international conference on orange technologies (ICOT), 70–74. doi:10.1109/ICOT.2017.8336091

Sytsma, J., and Machery, E. (2010). Two conceptions of subjective experience. Philos. Stud. 151, 299–327. doi:10.1007/s11098-009-9439-x

Takahashi, H., Terada, K., Morita, T., Suzuki, S., Haji, T., Kozima, H., et al. (2014). Different impressions of other agents obtained through social interaction uniquely modulate dorsal and ventral pathway activities in the social human brain. cortex 58, 289–300. doi:10.1016/j.cortex.2014.03.011

Thellman, S., de Graaf, M., and Ziemke, T. (2022). Mental state attribution to robots: a systematic review of conceptions, methods, and findings. ACM Trans. Human-Robot Interact. (THRI) 11, 1–51. doi:10.1145/3526112

Tokuhisa, R., and Terashima, R. (2009). “Relationship between utterances and enthusiasm in non-task-oriented conversational dialogue,” in Proceedings of the 7th SIGdial workshop on discourse and dialogue. Stroudsburg, PA, United States: (Association for Computational Linguistics), 161–167.

Uchida, T., Minato, T., and Ishiguro, H. (2019). The relationship between dialogue motivation and attribution of subjective opinions to conversational androids. Trans. Jpn. Soc. Artif. Intell. 34. (in Japanese).

Uchida, T., Minato, T., Nakamura, Y., Yoshikawa, Y., and Ishiguro, H. (2021). Female-type android’s drive to quickly understand a user’s concept of preferences stimulates dialogue satisfaction: dialogue strategies for modeling user’s concept of preferences. Int. J. Soc. Robotics 13, 1499–1516. doi:10.1007/s12369-020-00731-z

Uchida, T., Takahashi, H., Ban, M., Shimaya, J., Minato, T., Ogawa, K., et al. (2020). Japanese young women did not discriminate between robots and humans as listeners for their self-disclosure -pilot study-. Multimodal Technol. Interact. 4, 35. doi:10.3390/mti4030035

Uchida, T., Takahashi, H., Ban, M., Shimaya, J., Yoshikawa, Y., and Ishiguro, H. (2017a). “A robot counseling system — what kinds of topics do we prefer to disclose to robots?,” in 2017 26th IEEE international symposium on robot and human interactive communication (IEEE), 207–212. doi:10.1109/ROMAN.2017.8172303

Uchida, T., Takahashi, H., Ban, M., Shimaya, J., Yoshikawa, Y., and Ishiguro, H. (2017b). “A robot counseling system—what kinds of topics do we prefer to disclose to robots?,” in 2017 26th IEEE international symposium on robot and human interactive communication (RO-MAN) (IEEE), 207–212.

Weizenbaum, J. (1966). ELIZA — a computer program for the study of natural language communication between man and machine. Commun. ACM 9, 36–45. doi:10.1145/365153.365168

Williams, J. D., and Young, S. (2007). Partially observable markov decision processes for spoken dialog systems. Comput. Speech and Lang. 21, 393–422. doi:10.1016/j.csl.2006.06.008

Woods, S., Walters, M., Koay, K. L., and Dautenhahn, K. (2006). “Comparing human robot interaction scenarios using live and video based methods: towards a novel methodological approach,” in 9th IEEE international workshop on advanced motion control, 2006 (IEEE), 750–755.

Xu, X., and Sar, S. (2018). “Do we see machines the same way as we see humans? a survey on mind perception of machines and human beings,” in 2018 27th IEEE international symposium on robot and human interactive communication (RO-MAN) (IEEE), 472–475.

Yamauchi, H., Hashimoto, N., Kaneniwa, K., and Tajiri, Y. (2013). Practical standards for Japanese language education. Hituzi Shobo.

Yan, Z., Duan, N., Chen, P., Zhou, M., Zhou, J., and Li, Z. (2017). “Building task-oriented dialogue systems for online shopping,” in Proceedings of the AAAI conference on artificial intelligence, 31.

Yu, Z., Ramanarayanan, V., Lange, P., and Suendermann-Oeft, D. (2019). “An open-source dialog system with real-time engagement tracking for job interview training applications,” in Advanced social interaction with agents (Springer), 199–207.

Keywords: subjective opinion, opinion attribution, motivation, dialogue robot, android, humanoid robot

Citation: Uchida T, Minato T and Ishiguro H (2024) Opinion attribution improves motivation to exchange subjective opinions with humanoid robots. Front. Robot. AI 11:1175879. doi: 10.3389/frobt.2024.1175879

Received: 28 February 2023; Accepted: 30 January 2024;

Published: 19 February 2024.

Edited by:

Fumio Kanehiro, National Institute of Advanced Industrial Science and Technology (AIST), Japan

Reviewed by:

Soroush Sadeghnejad, Amirkabir University of Technology, Iran

Tommaso Pardi, Independent Researcher, London, United Kingdom

Copyright © 2024 Uchida, Minato and Ishiguro. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Takahisa Uchida, uchida.takahisa@irl.sys.es.osaka-u.ac.jp
