Choosing Ethics Over Morals: A Possible Determinant to Embracing Artificial Intelligence in Future Urban Mobility

- 1Global Urban Studies, School of Planning, Design, and Construction & Global Urban Studies Program, Michigan State University, East Lansing, MI, United States

- 2Department of Computer Science and Engineering, Michigan State University, East Lansing, MI, United States

- 3Department of Social Science, School of Planning, Design, and Construction, Michigan State University, East Lansing, MI, United States

Artificial Intelligence (AI) is becoming integral to human life, and the successful wide-scale uptake of autonomous and automated vehicles (AVs) will depend upon people's willingness to adopt and accept AI-based technology and its choices. A person's state of mind, a fundamental belief that evolves out of an individual's character, personal choices, intrinsic motivation, and general way of life and that shapes perceptions about how society should be governed, influences perception of AVs. The state of mind includes perceptions about governance of autonomous vehicles' artificial intelligence (AVAI) and thus has an impact on a person's willingness to adopt and use AVs. However, one determinant, whether AVAI should be driven by society's ethics or the driver's morals, a “state of mind” variable, has not been studied. We asked 1,473 student, staff, and faculty respondents at a university campus whether they prefer that an AVAI learn its owner's own personal morals (one's own principles) or adopt societal ethics (codes of conduct provided by an external source). Respondents were almost evenly split on whether AVAI should rely on ethics (45.6%) or morals (54.4%). Personal morals and societal ethics are not necessarily distinct and different. Sometimes both overlap and discrepancies are settled in court. However, with an AVAI these decision algorithms must be preprogrammed, and the fundamental difference is thus whether an AI should learn from the individual driver (the status quo of how we drive today) or from society, incorporating millions of drivers' choices. Both are bounded by law. Regardless, to successfully govern artificial intelligence in cities, policy-makers must bridge the deep divide between individuals who choose morals over ethics and vice versa.

Introduction

Autonomous, driverless, and self-driving vehicles have begun to emerge as a promising use of AI technologies (Pan, 2016; Faisal et al., 2019), because these machines may play a key role in “autonomous cities” of the future (Allam, 2020; Cugurullo, 2020). Intrinsic to any autonomous city is artificial intelligence (Allam, 2020; Cugurullo, 2020), which constantly analyzes large datasets, also called big data. Using big data, an AI can model and integrate a tremendous number of urban functions, and through those models draw conclusions about how best to govern a city. However, modeling with big data, especially for urban environments, can be flawed, biased, and in a worst-case scenario wrong (Barns, 2021). Biased datasets raise questions as to the exact role an AI should play in urban governance and decision making despite its powerful ability to enhance human life. For example, an urban AI may be able to protect people from disasters (Yigitcanlar et al., 2020a,b) and reduce cities' carbon emissions (Allam, 2020; Acheampong et al., 2021), but may also severely and irrevocably change cities for the worse (Cugurullo, 2021).

Essentially, an AI is an algorithm, frequently embedded into machines, that can make its own choices, oftentimes without additional human input (Stone et al., 2016). Some of these decisions may balance cause and effect and include ethical or moral decisions with which humans may struggle (Boström and Yudkowsky, 2014). We ask whether an autonomous vehicle's artificial intelligence (AVAI) should operate according to societal ethics or its end user's moral code, with ethics being defined as what society views as correct and morality being defined as what an individual views as correct (Bartneck et al., 2007; Applin, 2017). An AVAI may have to make a choice between protecting a pedestrian or its occupants (Hengstler et al., 2016; Etzioni and Etzioni, 2017). People may prefer AVAI to be programmed to benefit society in the abstract, but people also do not trust (and would not purchase) cars that would sacrifice their own life for the lives of others (Shabanpour et al., 2018). The moral and ethical debates about AVAI decision making discussed in this paper are a subset of the broader ethical, moral, and political implications of AVs, which include privacy, surveillance, social inequality, uneven access, and algorithmic discrimination against specific groups of people. Fully autonomous vehicles imply the presence of artificial intelligence performing safety-critical control functions, at the heart of which ethical and moral decisions over their governance in cities must be made.

Over the past decade, much social science research has been devoted to understanding sentiments toward AVAI. To date, researchers agree that sentiments vary with demographics: young people, especially males, show more affinity toward AI than seniors (Liang and Lee, 2017; Kaur and Rampersad, 2018; Dos Santos et al., 2019); younger people are also more likely to ride in an AV or automated taxi (Pakusch et al., 2020). Comparatively few studies have focused on people's intrinsic motivation to adopt or sentiments toward AVs, or more precisely, what we define as their “state of mind” related to AI or AVs. For example, previous related experiences such as familiarity with and trust in technology increase willingness to adopt, while risk-aversion to technology hinders its wide-spread adoption (Smith and Ulu, 2017; Schleich et al., 2019; Liu and Liu, 2021). One hypothesis that, to the best of our knowledge, has not been tested is whether a person's state of mind determines their affinity toward AI: specifically, whether a person who prefers ethics over morals is more likely to be comfortable with AVs, and whether those people share similar characteristics. We thus intend to examine whether a preference for ethics or morals predicts willingness to adopt. Our contribution to the literature relates to sentiment toward AVs, AVAI, and user preference. It starts to fill a critical gap in understanding how, and with what type of disruption, autonomous vehicles may affect cities and societies, continuing the explorations of these complex disruptions by Yigitcanlar et al. (2020a,b).

We seek to understand how people prefer AVAIs to be programmed: should an AVAI learn its behavior from society at large (ethics) or learn the owner's own personal code of behavior (morals), and, given that choice, does state of mind have any relevance to perception of, familiarity with, or willingness to adopt AVs? We found that people are almost evenly split between ethics and morals, but those who favor AVAIs adopting society's ethics are more willing to adopt, more familiar with, and have more favorable perceptions of AVs. We find that individuals who are younger, male, not white, and who have favorable perceptions of AVs express a statistically significantly greater willingness to adopt. Controlling for these factors, we find that individuals' familiarity with AVs and their beliefs about whether AVs should rely on ethics or morals are not statistically significantly associated with their willingness to adopt AVs. The contributions of our study are relevant to academia, as an introduction to the state of mind variable as a factor in AV adoption, and to industry: most AV programming proceeds by incorporating society's ethics as laws, and, when connected to other vehicles, the AVAI can learn through big data from millions of drivers interacting on roadways. In contrast, every car currently operating on roads is guided by the driver's own morals bounded by laws. Further, different corporations choose different paths to program their vehicles in gray areas of the law, in which programmers must pre-declare how AVs make choices. These alternative options stretch well beyond the binary of users' individual morals vs. societal ethics; they are an important source of values that emerge from the for-profit companies developing the technology and that may reflect neither users' morals nor society's ethics.
Lastly, given the likelihood that AV technology will proliferate, better understanding user perceptions can ultimately lead to a faster adoption and better understanding of travel behavior. Given that people are split in their perception of whether ethics or morals should guide AVAIs, policy-makers need to bridge this gap when governing mobility in autonomous cities.

Literature Review

Even assuming that the worst-performing AV exceeds human capabilities, and that human drivers are replaced entirely with their automated counterparts, it would be naïve to believe such systems to be infallible. While AVs may connect to one another and analyze their environments to coordinate safe maneuvering (Siegel et al., 2017), environmental factors such as a child darting into the road will inevitably force AVs to choose among unavoidable incidents. More mundane decisions, e.g., whether to yield to an oncoming vehicle or take the right of way, will occur frequently, requiring ethical or moral decisions to be made daily. These potential shortcomings are an argument against vehicle automation: while society may suffer fewer casualties upon the introduction of AVs, some situations may have had more favorable outcomes when guided by human judgement.

Confronted with a challenge, humans rely on reasoning and reflexes to identify and execute a solution. Bringing similar judgment to automated vehicles may be desirable, not because humans are more “correct” than computers, but because reasoning and instinct may provide computers with a useful “backstop” in the absence of clear programming. Such a system may counter AV opponents' arguments by providing assurance that the AI's code is not merely rational and logical, but rather supported by a common and shared moral or ethical code. In particular, people's adoption of AI is likely if AI solutions remain a tool to support work and do not replace human-centered interaction (Kassens-Noor et al., 2021a). Precedents exist in other fields that have had to make crucial choices between the greater public good and individual choices, as they too have come to rely on AI analyzing big data. Crucially, big data analytics is inundated with ethical questions about when, how, and why someone's data should be used and how decisions are being made based on it (Spector-Bagdady and Jagsi, 2018; Barns, 2021). For example, one such field is medical technology, which frequently presents similar morals vs. ethics questions for treatments involving scarce resources and end-of-life care (Demiris et al., 2006). The Covid-19 pandemic brought these critical questions painfully to light when AI and doctors had to choose how to distribute oxygen in overflowing hospitals (Zheng et al., 2021). Another example is disaster recovery and preparedness, which can be enhanced by technology (Yigitcanlar et al., 2020a,b) when AI evaluates big data, such as Twitter posts, to support search and rescue operations and supply distribution. However, these AI decisions raise ethical and moral questions centered around who gets what (Geale, 2012).

Autonomous vehicles (AVs) and their associated artificial intelligence (AI) are similarly prolific in the ethical quandaries their existence produces (Liu and Liu, 2021). Before these technologies proliferate into our everyday lives, governments deciding over autonomous cities would be well-served to better understand exactly how individuals and society as a whole all want these machines to behave, especially in critical situations. In this manuscript, we consider the role morals and ethics may play in driving the adoption of AVs and examine contemporary approaches to incorporating morals and ethics into self-driving development, as well as potential future directions.

Ethical and Moral AIs

A core component of AI is the ability to make decisions in response to the environment (Stone et al., 2016). Some choices an AI may make are likely to include an ethical or moral component. An AI must take heed of the same “social requirements” that humans have, foresee the consequences of their decisions, and avoid harm to humans (Boström and Yudkowsky, 2014). The topic of ethics and AI is hotly debated, as are the legal implications of an AV injuring a person (De Sio, 2017), as well as their policy implications (Acheampong et al., 2021). In the interest of the public good lies the notion of making sustainable choices that serve society in the long-term. Yet, individual choices and preferences will play a key role and are likely to prevail, such as the preference for privately owned vehicles, even if our society becomes more autonomous. In contrast, currently there are numerous ways in which an AI is and can be programmed to make choices that are not bound by sustainability.

An AVAI's morals may be pre-loaded into the machine using a transparent algorithm, one that humans constantly regulate, monitor, and update (Rahwan, 2018). Such an algorithm may be required to place specific values on outcomes and weigh them against one another, reminiscent of the classical ethical dilemma involving a train, where a person must make the choice to save multiple human lives by directly ending the life of a single individual. However, this may be overcome by allowing an AVAI more agency and giving it the capacity to seek out additional outcomes to maximize good, though this would increasingly empower an AVAI (Vamplew et al., 2018). Yet another option would involve removing the human element even further: for instance, each individual AVAI need not undergo the process of learning from outcomes but could simply learn from previous AVAIs while consistently allowing new data to be analyzed and used in decisions going forward. For example, there is little need to teach machines ethics (if this can be done in the first place), thus allowing AI to adjust their own reference framework (Etzioni and Etzioni, 2017). Cell phones could also prove to be a valuable data source for AVAIs to learn from, as well as provide information about pedestrian location and travel behavior (Wang et al., 2018).

In contrast to limited human involvement, maximizing human input is another option for developing ethical AVAIs; a system where humans are constantly checking AVAI for ethical correctness is another possible way to limit unethical behavior (Arnold and Scheutz, 2018). Some even go so far as to suggest that all AVAIs be built with a form of off-switch, or a system in which humans are always the go-to for determining moral correctness. However, systems like the above may run counter to the concept of an AVAI as an autonomous entity and may even lead to erroneous behavior or stalled learning (Arnold and Scheutz, 2018). Finally, speaking of ethics and AVAI raises the question of whether their creation itself is a “good” idea. Arguments are being made suggesting that if AVAIs are allowed to author their own moral codes, such codes could potentially value “machine nature,” as opposed to human values (Bryson, 2018, p. 23).

Regardless of how an AVAI makes its choices, perception of them will still matter. Educated people, those with higher incomes, and males report being the least concerned about the technology compared to other demographics (Liang and Lee, 2017). Fears can range from issues surrounding privacy, to economic components such as job loss, to whether an AVAI will act in an ethical way. Usefulness also builds trust in technology: as more people become aware of the benefits of AI, trust increases (Mcknight et al., 2011). When medical students were asked about their opinions of AI, the majority agreed that AI could revolutionize certain aspects of the medical field, and males, specifically those who were more familiar with technology in general, had a more favorable opinion of AI than did their female counterparts (Dos Santos et al., 2019). Media also has a major impact on how people perceive AVs (Du et al., 2021), with positive examples in media leading to positive sentiments.

The physical appearance of an AI-associated system can affect a person's sentiment toward it. An AI inside of an anthropomorphic robot generates more empathy than does one inhabiting a robotic vacuum cleaner, or inside a car's dashboard. Additionally, AIs are increasingly able to emulate human emotions, which will likely lead to more favorable/pleasant interactions (Nomura et al., 2006; Riek et al., 2009; Huang and Rust, 2018). Increasing people's trust of AI may be as simple as having the initial stages of an interaction preformulated, and/or programming an AI to behave in a more human-like fashion (Liang and Lee, 2016).

The State of Ethical Codes for AVs

Other studies have sought to outline an ethical code for how an AVAI should behave. Perhaps most notable is the moral machine experiment conducted at MIT, in which millions of participants displayed their own moral code when faced with hypothetical moral quandaries (Awad et al., 2018). The moral machine experiment found dramatic differences in moral preference for people based on age, geography, sex, and more, showing how complicated AVAI questions are. However, results from the moral machine experiment may not be entirely accurate, as some research argues that using data collected in this manner, or big data in general, is inherently flawed (Etienne, 2020; Barns, 2021). The moral machine experiment particularly drew critique for its use of normative ends, its inadequacy in supporting ethical and juridical discussions to determine the moral settings for autonomous vehicles, and the inner fallacy behind computational social choice methods when applied to ethical decision-making (Etienne, 2021).

Nonetheless, Germany, undeterred by this complexity, became the first government in the world to create a twenty-point code of conduct for how AVs should behave (Luetge, 2017). The German code notes: “The protection of individuals takes precedence over all other utilitarian considerations” (Luetge, 2017, p. 549). Other studies confirm that utilitarian ethics may be what guides AVAI in the future (Faulhaber et al., 2019). It is likely that other countries will adopt similar codes, though how those codes will differ remains to be seen; if the moral machine's observation bleeds into reality, then these codes are likely to be extremely different. Concerns and arguments over which moral or ethical code an AVAI adopts may also seriously slow the technology's spread (Mordue et al., 2020).

Sentiment Toward AVs: Risk-Aversion, Trust, and Familiarity

AVs are slated to enter our surroundings as preeminent smart AIs that will make decisions impacting our fate as mobility users. For example, an AVAI may be responsible for analyzing road conditions, plotting courses, or recognizing a user; but an AVAI may also be responsible for more critical functions like when to brake or swerve to avoid hitting another vehicle or pedestrian (Hengstler et al., 2016; Etzioni and Etzioni, 2017). Trust in technology to make life and death decisions is intertwined with predictability and dependability, though perceptions are not always rational. One might expect teams of programmers, ethicists, and engineers to work together to imagine and plan for atypical scenarios such that an AVAI may make instant and informed decisions based on accurate data, learned rules, and known outcomes. In practice, there are an infinite number of scenarios, and AVAIs must operate on imperfect information fed into probabilistic models. Rather than designing a system to determine with certainty who lives and who dies, development teams instead make estimates from imperfect information and develop models to operate safely with a high probability in all known and unknown scenarios. It is failures to act appropriately, rather than deliberate action, that result in harm to passengers or bystanders. Algorithms do not choose to kill; rather, inadequate data, improper logic, or poor modeling cause unexpected behavior. Yet, consumers perseverate on “intent”—and struggle to understand how an automated system could bring humans to harm, as AVs are held to a higher standard than human drivers (Ackerman, 2016).

Although AVs will likely improve road safety, there will remain an element of risk at play, and AI-human interactions themselves may also result in injury (Major and Harriott, 2020). In general, more risk-averse people are less likely to adopt new technology, regardless of how benign or beneficial it is (Smith and Ulu, 2017; Kaur and Rampersad, 2018; Schleich et al., 2019). The perception of risk appears to be a major factor in a person's willingness to adopt AVs (Wang and Zhao, 2019). Perception of risk is situational, though, and in some cases people may perceive risk differently when interacting with new technology. For example, people are more willing to allow an AVAI to harm a pedestrian than they would be to harm one themselves, were they driving the vehicle (Gill, 2018). Notably, those who have experienced automobile accidents in their past are more likely to adopt an AV, indicating that they view traditional driving as riskier given their history (Bansal and Kockelman, 2018). The perception of risk is likely correlated with trust; increasing trust may in turn reduce the perception of risk.

The main AV concern impacting trust is safety (Haboucha et al., 2017; Rezaei and Caulfield, 2020). Trust in automotive companies, manufacturers, and regulatory agencies increases a person's willingness to adopt AVs, and the same is true for perceived usefulness and the perception that one can regain control (Choi and Ji, 2015; Wen et al., 2018; Dixon et al., 2020). In particular, safety concerns, such as crashes, accidents, or failure to recognize human and animal life, are the primary reasons why people distrust AVs (Kassens-Noor et al., 2020, 2021b). In general, older people and females are less trusting of AVs than are other demographics (Bansal et al., 2016). Geography impacts trust as well, even in culturally similar places such as the U.S. and U.K. (Schoettle and Sivak, 2014). Given that familiarity with AVs, except as depicted in the media, is rare, trust in other technology, like smart phones, can be used as a proxy until wide-scale deployment increases familiarity. One of the most effective means to increase trust may be for individuals to interact with the technology on a personal basis: studies have found that after individuals interact with AVs, their opinions change for the positive; they are better able to conceptualize the benefits of the technology and are more willing to ride in an AV (Pakusch and Bossauer, 2017; Wicki and Bernauer, 2018; Salonen and Haavisto, 2019). Just as trust influences the perception of risk, familiarity appears to positively influence trust.

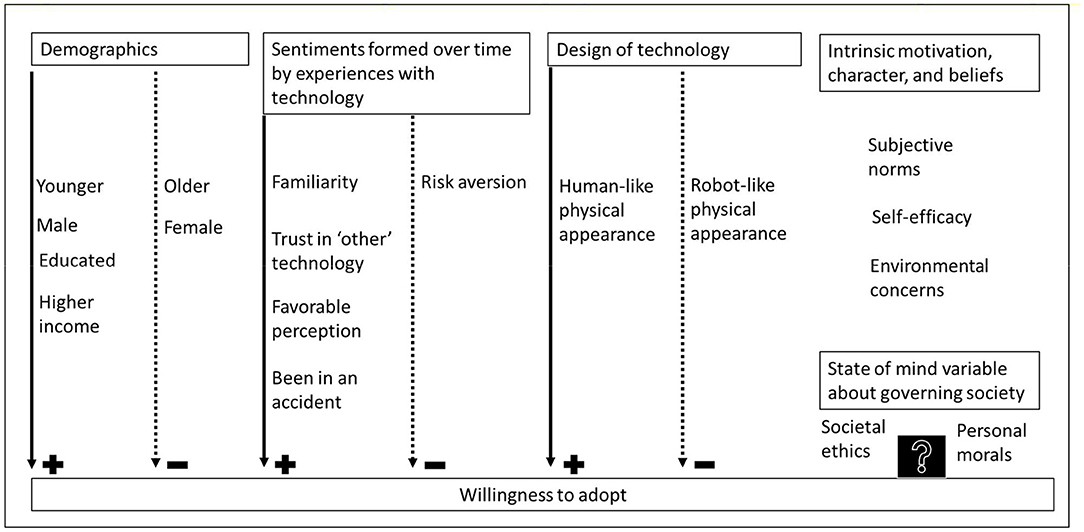

In general, familiarity with technology appears to impact willingness to adopt said technology. This is true for AVs as well: as a person's familiarity increases, so too does their willingness to adopt (Haboucha et al., 2017; Liljamo et al., 2018; Gkartzonikas and Gkritza, 2019). In particular, Gkartzonikas and Gkritza (2019) summarize the many studies conducted on AVs, focusing on the likelihood of AV adoption and identifying different factors that may affect behavioral intention to ride in AVs. These factors include the level of awareness of AVs, consumer innovativeness, safety, trust of strangers, environmental concerns, relative advantage, compatibility, and complexity, subjective norms, self-efficacy, and driving-related seeking scale (Gkartzonikas and Gkritza, 2019, p. 335). Moreover, attitudes toward and familiarity with technology often have a greater influence on willingness to adopt than do other demographic factors (Zmud et al., 2016). Interestingly, though, with regard to AVs, familiarity was not found to increase willingness to pay, at least in one study (Bansal and Kockelman, 2018). As AVs continue to proliferate throughout all levels of society, and as the likelihood of individuals interacting with them grows, familiarity with AVs is, on average, likely to increase. This in turn may foster trust and reduce the perception of risk, further increasing the chances that AVs will become a standard part of the modern world (Figure 1).

Figure 1. State of mind variables integrated into an exemplary framework of influences on AV adoption willingness. Source: for an expanded framework of influences, see Gkartzonikas and Gkritza (2019).

Morals and Ethics in Society and as Drivers

Demographics, too, may play a role in whether a person values ethics over their own morals or vice versa. The moral machine experiment found that women and men often approach ethical and moral situations differently (Awad et al., 2018). In other examples women were found to generally make the same moral decisions as men when presented with dilemmas, except in situations where a person must actively choose to harm one person to save many people (Capraro and Sippel, 2017). The same study also suggests that emotional elements to ethical or moral dilemmas have a greater impact on women. Another study, this time in nurses, also found that women may be more impacted by moral dilemmas and as such may be more negatively affected by them (O'Connell, 2015). There is no consensus, however, as other research asserts that women make moral decisions based on deontological outcomes, whereas men were found to have more utilitarian ethics, and that these differences are significant (Friesdorf et al., 2015). Finally, women may have stronger moral identities and thus are more inflexible when presented with dilemmas (Kennedy et al., 2017).

Based on the literature, demographics, sentiments, experiences, and design, among many other factors, influence a person's willingness to adopt autonomous vehicles. Our work addresses an important question on the relationship between people's ethical and moral perceptions and their willingness to accept and adopt Autonomous Vehicles' Artificial Intelligence(s), their decision-making, and implications. Given that connected AVs are intended to optimize city functions and autonomous mobility across society, we test two hypotheses related to a state of mind variable about governing society to measure willingness to adopt AVs based on ethics and morals while controlling for factors that have proven to enhance adoption. Thus, we test:

• whether a person who prefers ethics over morals is more likely to be comfortable with AVs,

• whether those people share similar characteristics.

Methods

Data Collection

During the spring of 2019, the human resources department of a large public Midwestern university emailed a link to an online Qualtrics survey to all faculty, staff, and students. Responses to the survey aimed to elicit respondents' sentiment toward autonomous vehicles and artificial intelligence.

Sample

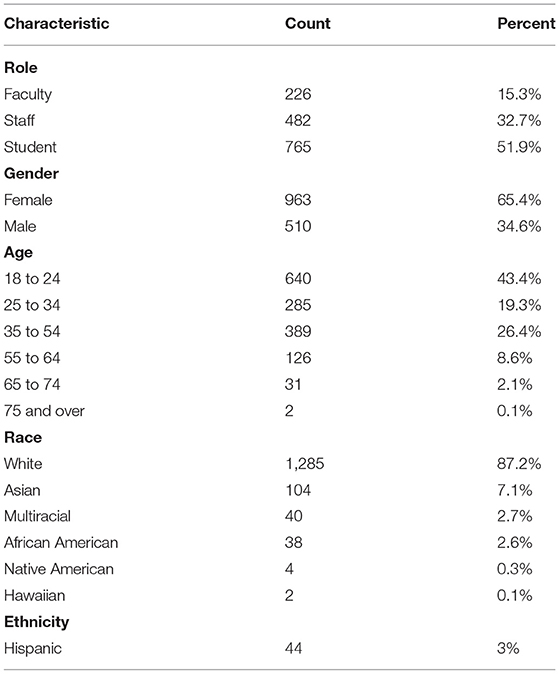

A total of 3,370 respondents started the survey, but here we focus on an analytic sample of the 1,473 students, staff, and faculty who provided complete responses to the items described below (see Table 1). This represents a convenience sample that is not necessarily representative of the university's or state's population, so the analyses reported below should be viewed as exploratory and descriptive, rather than as confirmatory tests of hypotheses. A majority of the sample were students (51.9%), with smaller numbers of staff (32.7%) and faculty (15.3%). The majority of respondents were female (65.4%) and white (87.2%), and under the age of 55 (89.2%).

Dependent Variable, Willingness to Adopt

Respondents were asked “I would ride in an autonomous/self-driving ___” for nine modes of AV: shuttle, bus, light rail, subway, train, car, pod, bike, scooter, and motorcycle. Responses were recorded on a 5-point Likert scale ranging from strongly agree to strongly disagree. Respondents' willingness to adopt AVAIs was measured using their mean response across these nine modes, yielding a willingness to adopt scale with high reliability (α = 0.9062).
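As a minimal sketch of how such a scale and its reliability coefficient can be computed, the Python fragment below averages each respondent's item responses and applies the standard formula for Cronbach's alpha. The response values are hypothetical and are not the survey data; the numeric coding (higher values meaning stronger agreement) and the function names `scale_scores` and `cronbach_alpha` are assumptions made for illustration only.

```python
from statistics import mean, variance


def scale_scores(rows):
    """Mean item response per respondent (one row per respondent)."""
    return [mean(row) for row in rows]


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items table of numeric scores."""
    k = len(rows[0])                      # number of items in the scale
    columns = list(zip(*rows))            # transpose: one tuple per item
    item_variances = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variances / total_variance)


# Hypothetical responses (4 respondents x 3 items), coded 1-5 with
# higher values meaning stronger agreement. Not the study's data.
responses = [
    [5, 4, 5],
    [2, 2, 1],
    [3, 3, 4],
    [1, 2, 1],
]

scores = scale_scores(responses)   # one willingness score per respondent
alpha = cronbach_alpha(responses)  # internal consistency of the items
```

Alpha approaches 1 when respondents answer the items consistently, which is why a high value supports summarizing the separate mode items as a single mean score.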

Independent Variables

In addition to measuring respondents' sex (male = 1), race (white = 1, non-white = 0), and age (six categories), we also measured their familiarity with and perception of AVs, and their preference for AVAI to rely on society's ethics or their own morals. Familiarity with AVs was measured using their agreement, on a 5-point Likert scale ranging from strongly agree to strongly disagree, with the statement: “I would say I am familiar with autonomous/self-driving vehicles.” Perception of AVs was measured using their agreement, on a 5-point Likert scale ranging from strongly agree to strongly disagree, with the statement: “I would say my perception about autonomous/self-driving vehicles is positive.” Finally, preference for AVAI's reliance on ethics or morals was measured by asking: “Would you want autonomous technology to learn your morals or shall it adopt society's ethics as governed by law or chosen by hundreds of thousands?” These questions were pilot-tested with five faculty members and 17 students.

Results

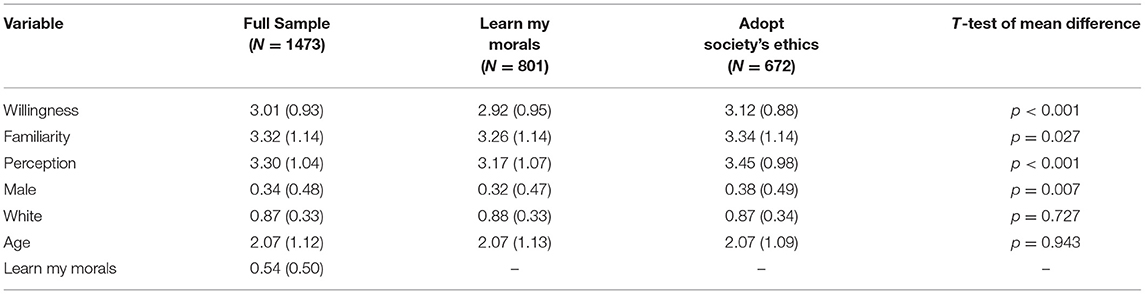

Table 2 reports the mean and standard deviation of each variable for the full sample, and separately for subgroups who indicated that autonomous technology should “learn your morals” or “adopt society's ethics.” We find that respondents reported moderate willingness to adopt AVs (M = 3.01), familiarity with AVs (M = 3.32), and perceptions of AVs (M = 3.30). They were nearly evenly split between whether AVs should “learn your morals” (54%) or “adopt society's ethics” (46%). However, those believing that AVs should adopt society's ethics were statistically significantly more willing to adopt AVs (3.12 vs. 2.92, p < 0.001), more familiar with AVs (3.34 vs. 3.26, p = 0.027), and had more favorable perceptions of AVs (3.45 vs. 3.17, p < 0.001). Those favoring ethics over morals for AVs were also marginally more likely to be men (38% vs. 32%, p = 0.07), but did not differ in terms of race or age.
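The text does not state which test produced these p-values. Purely as an illustration of how two subgroup means can be compared, the sketch below computes Welch's t statistic and approximates the two-sided p-value with a standard normal distribution, an approximation that is reasonable only for large groups. The data and the function name `welch_test` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_test(group_a, group_b):
    """Return (t, approximate two-sided p) for a difference in group means.

    Uses unequal-variance (Welch) standard errors. The p-value relies on a
    normal approximation, which is an assumption suited to large samples.
    """
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    t = (mean(group_a) - mean(group_b)) / standard_error
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p


# Hypothetical willingness scores for the two preference groups.
ethics_group = [3.4, 3.1, 2.9, 3.6, 3.2, 3.0, 3.3, 2.8]
morals_group = [2.9, 3.0, 2.6, 3.1, 2.7, 2.8, 3.2, 2.5]

t_stat, p_value = welch_test(ethics_group, morals_group)
```

A positive `t_stat` here would indicate a higher mean in the first group; with samples as small as this toy example, an exact t distribution would be preferable to the normal approximation.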

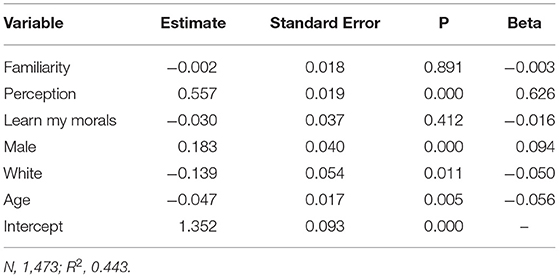

To understand the factors that are associated with an individual's willingness to adopt AVs, we estimated an OLS regression (see Table 3). We find that individuals who are younger, male, do not identify as white, and who have favorable perceptions of AVs express a statistically significantly greater willingness to adopt. Interestingly, controlling for these factors, we find that individuals' familiarity with AVs and their beliefs about whether AVAIs should rely on ethics or morals are not statistically significantly associated with their willingness to adopt AVs.
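For readers less familiar with the method, the following sketch shows what an OLS fit does: it finds the intercept and coefficients that minimize squared prediction error by solving the normal equations. The two predictors (a male indicator and a perception rating) mirror variables named in the text, but the data are hypothetical, the model is far smaller than the one in Table 3, and the function name `ols` is an assumption of this example.

```python
def ols(predictors, outcome):
    """Least-squares coefficients [intercept, b1, b2, ...].

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination.
    Assumes the predictors are not perfectly collinear.
    """
    rows = [[1.0] + [float(v) for v in row] for row in predictors]
    p = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * y for r, y in zip(rows, outcome))]
        for i in range(p)
    ]
    for col in range(p):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, p), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(p):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][p] / a[i][i] for i in range(p)]


# Hypothetical respondents: (male = 1, perception rating 1-5).
predictors = [[1, 4], [0, 3], [1, 2], [0, 5], [1, 5], [0, 1]]
willingness = [3.8, 2.9, 2.4, 4.1, 4.4, 1.5]

intercept, b_male, b_perception = ols(predictors, willingness)
```

Each coefficient is the expected change in willingness for a one-unit change in that predictor with the others held fixed, which is the sense in which the regression "controls for" the remaining factors.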

Discussion

Governments around the world must determine how and to what extent they will allow AI to make decisions for the good of society. The technology and its choice-making algorithms will play a major role in the future autonomous city (Allam, 2020; Cugurullo, 2020): it can keep us safe from disasters (Yigitcanlar et al., 2020a,b), help reduce contributions to climate change (Allam, 2020; Acheampong et al., 2021), limit crashes (Major and Harriott, 2020), and more. But will we also allow it to make life-or-death decisions that have traditionally been in the power of an individual? Even if a majority of people agree as to an answer, the datasets used to create an AVAI may also be flawed and may also provoke a myriad of ethical dilemmas (Barns, 2021). Moreover, not only can these questions create policy bottlenecks (Gkartzonikas and Gkritza, 2019; Acheampong et al., 2021), they can also pose challenges for manufacturers.

If AVs and AI are to become widespread, manufacturers of AVs and AIs may have to adapt their products to specific cultures, including the process by which an AI makes its decisions (Applin, 2017). In this instance, choosing between the ethics of society, expressed as millions of drivers making choices on the road, and learning from an individual driver is a fundamental difference in how AVAIs can be programmed. Morals, ethics, and philosophy have long been considered in relation to AI, with recent advances making such discussions relevant to self-driving. However, manufacturers and suppliers may lack ethics teams (Baram, 2019) and consider ethics and morals only once a system is completed or a problem has been encountered (Webster, 2017). This presents a problem: if society is to maximize the potential benefits of AVs, then we must ensure that their implementation is conducted in a meaningful, equitable, and deliberate fashion, in a way that considers the preferences and opinions of all members of society. Given that our survey showed an almost even preference split, our state of mind variable signals an implementation problem in governing cities and in ensuring widespread adoption and understanding of AVAIs. If we as a group cannot decide how we want these machines to behave, then their introduction will surely cause strife and disagreement. Public policy will essentially provide the regulations that shape how AVs operate, embedding moral principles and ethics into algorithms. Thus, while individuals will not in reality have the “power” to ensure that their own moral principles, rather than those of society, prevail in AVs, they will have a choice about whether to purchase an AV or ride in a particular AV depending on how it is programmed. A better understanding of people's sentiments, preferences, and expectations can therefore help lead to a more amenable and efficient adoption.

Researchers have explored the role and implementation of morals and ethics in AVs, often using the Trolley Problem formulation, which assumes that a vehicle knows its own state and that of its environment perfectly, and thus that outcomes are definite. Utilitarianism is a typical starting point and posits that minimizing total harm is optimal, even if bystanders or vehicle occupants are put at risk by an AVAI's decision. While individuals claim to support this approach, they may not buy vehicles subscribing to this philosophy, as personal harm may result (Awad et al., 2018; Kaur and Rampersad, 2018). Potential passengers may find it easier to subscribe to Kant's “duty bound” deontological philosophy, wherein AVAIs may be operated according to invariant rules, even if those rules are only optimal in most cases. Google's self-driving vehicles did this, striving to hit the smallest object detected when a collision is deemed unavoidable, no matter the object type (Smith, 2019). Other AVAIs may make distinctions among objects (for example, that it is better to hit a traffic cone than a car, or a car than a person), whether through explicit rules or learned outcomes. Some manufacturers may follow the principle of “first, do no harm,” preferring injury through inaction rather than deliberate decision. AV companies have programmed in logical “safety nets” such that if a human were to stand in front of the vehicle, it will wait indefinitely rather than risk injuring that pedestrian by creeping forward, perhaps even when an approaching ambulance would need the AV to clear a path to the hospital. Rather than addressing morals and ethics explicitly, Tesla mimics human behavior (Smith, 2019), though it might be argued that with sufficient training data, moral and ethical philosophy may be learned implicitly. Outside of these examples, the authors have discussed with employees of automotive manufacturers and suppliers solutions ranging from user-configurable preferences, to preserving the vehicle purchaser (or licensee) at all costs, to rolling “digital dice” as a means of determining behavior. The state of morals and ethics in self-driving implementation is evolving rapidly, though today it takes a back seat to more immediate technical challenges.
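
To make the contrast between an invariant rule and a harm-minimizing rule concrete, the following is a minimal Python sketch and not any manufacturer's implementation. The `Obstacle` type, the frontal-area and harm-score fields, and all numeric values are hypothetical and chosen only for illustration; like the Trolley Problem formulation, the sketch assumes the vehicle perceives every obstacle perfectly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obstacle:
    name: str
    size_m2: float        # hypothetical frontal area reported by perception
    expected_harm: float  # hypothetical harm score: 0 (none) to 1 (severe)


def smallest_object_rule(obstacles):
    """Invariant rule: choose the smallest detected object, ignoring its type."""
    return min(obstacles, key=lambda o: o.size_m2)


def minimize_harm_rule(obstacles):
    """Utilitarian rule: choose the object with the lowest expected harm."""
    return min(obstacles, key=lambda o: o.expected_harm)


# Illustrative scene with made-up values.
scene = [
    Obstacle("traffic cone", 0.3, 0.0),
    Obstacle("pedestrian", 0.6, 1.0),
    Obstacle("parked car", 2.5, 0.2),
]

# With the cone present, both rules pick the same target.
both_agree = smallest_object_rule(scene) == minimize_harm_rule(scene)

# Without the cone, the rules diverge: the size-based rule selects the
# pedestrian, while the harm-based rule selects the parked car.
scene_without_cone = scene[1:]
by_size = smallest_object_rule(scene_without_cone)
by_harm = minimize_harm_rule(scene_without_cone)
```

In this toy scene the two rules agree while the traffic cone is present and diverge once it is removed, which is the kind of case in which an invariant rule is "only optimal in most cases."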

AI and other autonomous technologies are slated to begin making decisions in our daily lives, for better or worse (Allam, 2020; Cugurullo, 2020). How people would prefer these machines to behave, however, is understudied. By introducing the concept of state of mind, a set of variables related to a person's intrinsic motivation, we analyze whether a preference for society's ethics or for personal morals is a determinant in choosing AVs. In essence, we found that the ethics/morals variable is correlated with familiarity, perception, and gender, with some caveats. Respondents were almost evenly split on whether an AVAI should rely on society's ethics or the driver's own morals when making choices. Those who believe that AVs should adopt society's ethics were statistically significantly more willing to adopt AVs, were more familiar with AVs, and had more favorable perceptions of AVs. However, controlling for these factors, we find that individuals' familiarity with AVs and their beliefs about whether AVAIs should rely on ethics or morals are not statistically significantly associated with their willingness to adopt AVs. In contrast, other studies have found that familiarity does positively influence willingness to ride in or adopt AVs (Haboucha et al., 2017; Liljamo et al., 2018; Gkartzonikas and Gkritza, 2019). Further, attitudes have proven to wield more influence on technology adoption than demographic variables (Zmud et al., 2016).

We also find that individuals who are younger, male, not white, and who have favorable perceptions of AVs express a statistically significantly greater willingness to adopt. This finding largely aligns with most studies, which identify males (Liang and Lee, 2017; Dos Santos et al., 2019) and younger people (Bansal et al., 2016) as more likely to accept the new technology. Because the sample was racially homogeneous, we are cautious in interpreting the finding that non-white respondents are more willing to adopt; future research should examine whether this replicates in more racially heterogeneous samples.

Using the example of autonomous mobility, we introduced a variable (ethics vs. morals) that may help governments decide how to direct the development of AIs and their power over governing future cities. However, our study tested only a binary variable and did not expand the survey to include other powerful and potential sources of values for AVs that are introduced by different car manufacturers and their programmers. Future studies of state of mind variables that include not only a broader sample of the general population but also targeted focus groups with manufacturers would allow a broader view of perceptions and reasoning about how AVs can and should be programmed. As comparatively little attention has been paid to decisions about how these new technologies enter our everyday lives, governments need a better understanding of how we as a society want these machines to behave, especially in critical situations.

Conclusions

This study asks a simple question: do potential AVAI users believe morals or ethics should guide AVAIs? Survey findings from a university campus suggest that people are divided on which should guide AVAIs' choices, but that those who believe AVAIs should be guided by ethics are more willing to adopt AVAIs than those who believe they should be guided by morals. However, after controlling for individuals' familiarity with AVAIs, perceptions of AVAIs, and demographic characteristics, this apparent effect disappears. That is, beliefs about whether AVAIs should be guided by morals or ethics appear to be unassociated with willingness to adopt AVAIs. This may help explain engineers' decision to postpone the problem, arguing that whether we instill or program ethics or morals into our vehicles is inconsequential.

This study has several limitations. Because it was conducted on a university campus, the survey only included people associated with the university, and thus the sample was biased toward both younger people and those with more education. It could be that within the demographic scope of this research, which was primarily a highly educated and relatively advantaged group, familiarity was simply a secondary consideration, or that people associated with higher education are already familiar with the technology beyond the point at which familiarity can influence their opinions. A similar survey of the general population may therefore reveal different patterns. Additionally, many of the constructs of interest (e.g., familiarity, morality) are likely multidimensional but were measured here using single items, which reduces their reliability and their ability to fully capture these constructs. Future studies should consider using or developing multi-item scales to assess these constructs. Lastly, this survey forced respondents to choose between morals and ethics, although the two need not be distinctly different. Nonetheless, this study represents a preliminary exploratory and descriptive study of a large university sample and provides a starting point for related future work on states of mind in AV programming.

The core topic of this paper, whether and to what extent people's state of mind matters in the adoption of AVAIs, nevertheless remains relevant and deserves further study given the limited nature of our sample. While our theoretical morals vs. ethics question may appear simple, the reality of implementation is extremely complex: the technology sector, car manufacturers, and internet providers are rapidly developing technology for the future of mobility without engaging with human state of mind variables, such as familiarity, willingness to adopt, and the one newly introduced in this paper, the preference for whether morals or ethics should guide AVAIs. Given that respondents' preferences show an almost 50/50 morals/ethics split, further exploration of the future of AI-enabled choice-making is necessary. In contrast, the AV industry is plowing down a path that may differ significantly from reality. These states of mind, which may not be comprehensive, together ultimately determine the adoption of AVAIs into society. Future work should focus on identifying further state of mind variables and testing them via perception studies on representative populations to help identify their influence on embracing the idea of AVAIs in the future of mobility.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Internal Review Board, Michigan State University. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

EK-N and JS: study conception and design. EK-N: data collection. EK-N and JS: analysis and interpretation of results. EK-N, JS, and TD: draft manuscript preparation. All authors reviewed the results and approved the final version of the manuscript.

Funding

This work was supported by the Center of Business and Social Analytics at Michigan State University.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors thank Zachary Neal for his help with the analysis and interpretation.

References

Acheampong, R. A., Cugurullo, F., Gueriau, M., and Dusparic, I. (2021). Can autonomous vehicles enable sustainable mobility in future cities? Insights and policy challenges from user preferences over different urban transport options. Cities 112:103134. doi: 10.1016/j.cities.2021.103134

Ackerman, E. (2016). People want driverless cars with utilitarian ethics, unless they're a passenger. IEEE Spectrum. Available online at: http://spectrum.ieee.org/cars-thatthink/transportation/self-driving/people-want-driverless-cars-with-utilitarian-ethics-unless-theyre-a-passenger (accessed October 6, 2021).

Allam, Z. (2020). The Rise of Autonomous Smart Cities: Technology, Economic Performance and Climate Resilience. London: Springer Nature. doi: 10.1007/978-3-030-59448-0

Applin, S. (2017). Autonomous vehicle ethics: stock or custom?. IEEE Consum. Electron. Mag. 6, 108–110. doi: 10.1109/MCE.2017.2684917

Arnold, T., and Scheutz, M. (2018). The “big red button” is too late: an alternative model for the ethical evaluation of AI systems. Ethics Inf. Technol. 20, 59–69. doi: 10.1007/s10676-018-9447-7

Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., et al. (2018). The moral machine experiment. Nature 563, 59–64. doi: 10.1038/s41586-018-0637-6

Bansal, P., and Kockelman, K. M. (2018). Are we ready to embrace connected and self-driving vehicles? A case study of Texans. Transportation 45, 641–675. doi: 10.1007/s11116-016-9745-z

Bansal, P., Kockelman, K. M., and Singh, A. (2016). Assessing public opinions of and interest in new vehicle technologies: an Austin perspective. Transport. Res. C Emerg. Technol. 67, 1–4. doi: 10.1016/j.trc.2016.01.019

Baram, M. (2019). Why the trolley dilemma is a terrible model for trying to make self-driving cars safer. Fast Company. Available online at: https://www.fastcompany.com/90308968/why-the-trolley-dilemma-is-a-terrible-model-for-trying-to-make-self-driving-cars-safer (accessed October 6, 2021).

Barns, S. (2021). Out of the loop? On the radical and the routine in urban big data. Urban Stud. doi: 10.1177/00420980211014026 [Epub ahead of print].

Bartneck, C., Suzuki, T., Kanda, T., and Nomura, T. (2007). The influence of people's culture and prior experiences with Aibo on their attitude towards robots. AI Soc. 21, 217–230. doi: 10.1007/s00146-006-0052-7

Boström, N., and Yudkowsky, E. (2014). The Ethics of Artificial Intelligence. The Cambridge handbook of artificial intelligence. Cambridge: Cambridge University Press. 316–334. doi: 10.1017/CBO9781139046855.020

Bryson, J. J. (2018). Patiency is not a virtue: the design of intelligent systems and systems of ethics. Ethics Inf. Technol. 20, 15–26. doi: 10.1007/s10676-018-9448-6

Capraro, V., and Sippel, J. (2017). Gender differences in moral judgment and the evaluation of gender-specified moral agents. Cogn. Process. 18, 399–405. doi: 10.1007/s10339-017-0822-9

Choi, J. K., and Ji, Y. G. (2015). Investigating the importance of trust on adopting an autonomous vehicle. Int. J. Hum. Comput. Interact. 31, 692–702. doi: 10.1080/10447318.2015.1070549

Cugurullo, F. (2020). Urban artificial intelligence: from automation to autonomy in the smart city. Front. Sustain. Cities 2:38. doi: 10.3389/frsc.2020.00038

Cugurullo, F. (2021). Frankenstein Urbanism: Eco, Smart and Autonomous Cities, Artificial Intelligence and the End of the City. London: Routledge. doi: 10.4324/9781315652627

De Sio, F. S. (2017). Killing by autonomous vehicles and the legal doctrine of necessity. Ethical Theory Moral Pract. 20, 411–429. doi: 10.1007/s10677-017-9780-7

Demiris, G., Oliver, D. P., and Courtney, K. L. (2006). Ethical considerations for the utilization of telehealth technologies in home and hospice care by the nursing profession. Nurs. Admin. Q. 30, 56–66. doi: 10.1097/00006216-200601000-00009

Dixon, G., Sol Hart, P., Clarke, C., O'Donnell, N. H., and Hmielowski, J. (2020). What drives support for self-driving car technology in the United States?. J. Risk Res. 23, 275–287. doi: 10.1080/13669877.2018.1517384

Dos Santos, D. P., Giese, D., Brodehl, S., Chon, S. H., Staab, W., Kleinert, R., et al. (2019). Medical students' attitude towards artificial intelligence: a multicentre survey. Eur. Radiol. 29, 1640–1666. doi: 10.1007/s00330-018-5601-1

Du, H., Zhu, G., and Zheng, J. (2021). Why travelers trust and accept self-driving cars: an empirical study. Travel Behav. Soc. 22, 1–9. doi: 10.1016/j.tbs.2020.06.012

Etienne, H. (2020). When AI ethics goes astray: a case study of autonomous vehicles. Soc. Sci. Comput. Rev. 1–11. doi: 10.1177/0894439320906508

Etienne, H. (2021). The dark side of the ‘Moral Machine’ and the fallacy of computational ethical decision-making for autonomous vehicles. Law Innov. Technol. 13, 85–107. doi: 10.1080/17579961.2021.1898310

Etzioni, A., and Etzioni, O. (2017). Incorporating ethics into artificial intelligence. J. Ethics 21, 403–418. doi: 10.1007/s10892-017-9252-2

Faisal, A., Kamruzzaman, M., Yigitcanlar, T., and Currie, G. (2019). Understanding autonomous vehicles: a systematic literature review on capability, impact, planning and policy. J. Transport Land Use 12, 45–72. doi: 10.5198/jtlu.2019.1405

Faulhaber, A. K., Dittmer, A., Blind, F., Wächter, M. A., Timm, S., Sütfeld, L. R., et al. (2019). Human decisions in moral dilemmas are largely described by utilitarianism: virtual car driving study provides guidelines for autonomous driving vehicles. Sci. Eng. Ethics 25, 399–418. doi: 10.1007/s11948-018-0020-x

Friesdorf, R., Conway, P., and Gawronski, B. (2015). Gender differences in responses to moral dilemmas: a process dissociation analysis. Pers. Soc. Psychol. Bull. 41, 696–713. doi: 10.1177/0146167215575731

Geale, S. K. (2012). The ethics of disaster management. Disaster Prevent. Manag. doi: 10.1108/09653561211256152

Gill, T. (2018). “Will self-driving cars make us less moral? Yes, they can,” in E - European Advances in Consumer Research Volume 11, eds M. Geuens, M. Pandelaere, M. T. Pham, and I. Vermeir (Duluth, MN: ACR European Advances), 220–221. Available online at: http://www.acrwebsite.org/volumes/1700212/volumes/v11e/E-11 (accessed October 6, 2021).

Gkartzonikas, C., and Gkritza, K. (2019). What have we learned? A review of stated preference and choice studies on autonomous vehicles. Transport. Res. C Emerg. Technol. 98, 323–337. doi: 10.1016/j.trc.2018.12.003

Haboucha, C. J., Ishaq, R., and Shiftan, Y. (2017). User preferences regarding autonomous vehicles. Transport. Res. C Emerg. Technol. 78, 37–49. doi: 10.1016/j.trc.2017.01.010

Hengstler, M., Enkel, E., and Duelli, S. (2016). Applied artificial intelligence and trust—The case of autonomous vehicles and medical assistance devices. Technol. Forecast. Soc. Change 105, 105–120. doi: 10.1016/j.techfore.2015.12.014

Huang, M. H., and Rust, R. T. (2018). Artificial intelligence in service. J. Serv. Res. 21, 155–172. doi: 10.1177/1094670517752459

Kassens-Noor, E., Cai, M., Kotval-Karamchandani, Z., and Decaminada, T. (2021b). Autonomous vehicles and mobility for people with special needs. Transport. Res. A Policy Pract. doi: 10.1016/j.tra.2021.06.014

Kassens-Noor, E., Wilson, M., Cai, M., Durst, N., and Decaminada, T. (2020). Autonomous vs. self-driving vehicles: the power of language to shape public perceptions. J. Urban Technol. doi: 10.1080/10630732.2020.1847983 [Epub ahead of print].

Kassens-Noor, E., Wilson, M., Kotval-Karamchandani, Z., Cai, M., and Decaminada, T. (2021a). Living with autonomy: public perceptions of an AI mediated future. J. Plan. Educ. Res. doi: 10.1177/0739456X20984529 [Epub ahead of print].

Kaur, K., and Rampersad, G. (2018). Trust in driverless cars: investigating key factors influencing the adoption of driverless cars. J. Eng. Technol. Manag. 48, 87–96. doi: 10.1016/j.jengtecman.2018.04.006

Kennedy, J. A., Kray, L. J., and Ku, G. (2017). A social-cognitive approach to understanding gender differences in negotiator ethics: the role of moral identity. Organ. Behav. Hum. Decis. Process. 138, 28–44. doi: 10.1016/j.obhdp.2016.11.003

Liang, Y., and Lee, S. A. (2016). Advancing the strategic messages affecting robot trust effect: the dynamic of user-and robot-generated content on human–robot trust and interaction outcomes. Cyberpsychol. Behav. Soc. Netw. 19, 538–544. doi: 10.1089/cyber.2016.0199

Liang, Y., and Lee, S. A. (2017). Fear of autonomous robots and artificial intelligence: evidence from national representative data with probability sampling. Int. J. Soc. Robot. 9, 379–384. doi: 10.1007/s12369-017-0401-3

Liljamo, T., Liimatainen, H., and Pöllänen, M. (2018). Attitudes and concerns on automated vehicles. Transport. Res. F Traffic Psychol. Behav. 59, 24–44. doi: 10.1016/j.trf.2018.08.010

Liu, P., and Liu, J. (2021). Selfish or utilitarian automated vehicles? Deontological evaluation and public acceptance. Int. J. Hum. Comput. Interact. 37, 1231–1242. doi: 10.1080/10447318.2021.1876357

Luetge, C. (2017). The German ethics code for automated and connected driving. Philos. Technol. 30, 547–558. doi: 10.1007/s13347-017-0284-0

Major, L., and Harriott, C. (2020). “Autonomous agents in the wild: human interaction challenges,” in Robotics Research. Springer Proceedings in Advanced Robotics, Vol. 10, eds N. Amato, G. Hager, S. Thomas, and M. Torres-Torriti (Cham: Springer), 67–74. doi: 10.1007/978-3-030-28619-4_9

Mcknight, D. H., Carter, M., Thatcher, J. B., Clay, P. F., et al. (2011). Trust in a specific technology: An investigation of its components and measures. ACM Trans. Manage. Inform. Syst. 2, 1–25. doi: 10.1145/1985347.1985353

Mordue, G., Yeung, A., and Wu, F. (2020). The looming challenges of regulating high level autonomous vehicles. Transport. Res. A Policy Pract. 132, 174–187. doi: 10.1016/j.tra.2019.11.007

Nomura, T., Kanda, T., and Suzuki, T. (2006). Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI Soc. 20, 138–150. doi: 10.1007/s00146-005-0012-7

O'Connell, C. B. (2015). Gender and the experience of moral distress in critical care nurses. Nurs. Ethics 22, 32–42. doi: 10.1177/0969733013513216

Pakusch, C., and Bossauer, P. (2017). “User acceptance of fully autonomous public transport,” in 14th International Conference on e-Business (Shanghai), 52–60. doi: 10.5220/0006472900520060

Pakusch, C., Meurer, J., Tolmie, P., and Stevens, G. (2020). Traditional taxis vs automated taxis–Does the driver matter for millennials?. Travel Behav. Soc. 21, 214–225. doi: 10.1016/j.tbs.2020.06.009

Pan, Y. (2016). Heading toward artificial intelligence 2.0. Engineering 2, 409–413. doi: 10.1016/J.ENG.2016.04.018

Rahwan, I. (2018). Society-in-the-loop: programming the algorithmic social contract. Ethics Inf. Technol. 20, 5–14. doi: 10.1007/s10676-017-9430-8

Rezaei, A., and Caulfield, B. (2020). Examining public acceptance of autonomous mobility. Travel Behav. Soc. 21, 235–246. doi: 10.1016/j.tbs.2020.07.002

Riek, L. D., Rabinowitch, T. C., Chakrabarti, B., and Robinson, P. (2009). “How anthropomorphism affects empathy toward robots,” in Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction (La Jolla, CA), 245–246. doi: 10.1145/1514095.1514158

Salonen, A. O., and Haavisto, N. (2019). Towards autonomous transportation. passengers' experiences, perceptions and feelings in a driverless shuttle bus in Finland. Sustainability 11:588. doi: 10.3390/su11030588

Schleich, J., Gassmann, X., Meissner, T., and Faure, C. (2019). A large-scale test of the effects of time discounting, risk aversion, loss aversion, and present bias on household adoption of energy-efficient technologies. Energy Econ. 80, 377–393. doi: 10.1016/j.eneco.2018.12.018

Schoettle, B., and Sivak, M. (2014). A survey of public opinion about autonomous and self-driving vehicles in the US, the UK, and Australia. University of Michigan, Ann Arbor, Transportation Research Institute. Available online at: http://hdl.handle.net/2027.42/108384 (accessed October 6, 2021).

Shabanpour, R., Golshani, N., Shamshiripour, A., and Mohammadian, A. K. (2018). Eliciting preferences for adoption of fully automated vehicles using best-worst analysis. Transport. Res. C Emerg. Technol. 93, 463–478. doi: 10.1016/j.trc.2018.06.014

Siegel, J. E., Erb, D. C., and Sarma, S. E. (2017). A survey of the connected vehicle landscape—Architectures, enabling technologies, applications, and development areas. IEEE Trans. Intell. Transport. Syst. 19, 2391–2406. doi: 10.1109/TITS.2017.2749459

Smith, J. (2019). The Trolley Problem Isn't Theoretical Anymore. Towards Data Science. Available online at: https://towardsdatascience.com/trolley-problem-isnt-theoretical-2fa92be4b050 (accessed October 6, 2021).

Smith, J. E., and Ulu, C. (2017). Risk aversion, information acquisition, and technology adoption. Operat. Res. 65, 1011–1028. doi: 10.1287/opre.2017.1601

Spector-Bagdady, K., and Jagsi, R. (2018). Big data, ethics, and regulations: implications for consent in the learning health system. Med. Phys. 45:e845. doi: 10.1002/mp.12707

Stone, P., Brooks, R., Brynjolfsson, E., Calo, R., Etzioni, O., Hager, G., et al. (2016). Artificial intelligence and life in 2030: One hundred year study on artificial intelligence. Report of the 2015-2016 study panel. Stanford University, Stanford, CA, United States. Available online at: http://ai100.stanford.edu/2016-report (accessed October 6, 2021).

Vamplew, P., Dazeley, R., Foale, C., Firmin, S., and Mummery, J. (2018). Human-aligned artificial intelligence is a multiobjective problem. Ethics Inf. Technol. 20, 27–40. doi: 10.1007/s10676-017-9440-6

Wang, S., and Zhao, J. (2019). Risk preference and adoption of autonomous vehicles. Transport. Res. A Policy Pract. 126, 215–229. doi: 10.1016/j.tra.2019.06.007

Wang, Z., He, S. Y., and Leung, Y. (2018). Applying mobile phone data to travel behaviour research: a literature review. Travel Behav. Soc. 11, 141–155. doi: 10.1016/j.tbs.2017.02.005

Webster, K. (2017). Philosophy Prof Wins NSF Grant on Ethics of Self-Driving Cars. Lowell, MA: UMass Lowell. Available online at: https://www.uml.edu/news/stories/2017/selfdrivingcars.aspx (accessed October 6, 2021).

Wen, J., Chen, Y. X., Nassir, N., and Zhao, J. (2018). Transit-oriented autonomous vehicle operation with integrated demand-supply interaction. Transport. Res. C Emerg. Technol. 97, 216–234. doi: 10.1016/j.trc.2018.10.018

Wicki, M., and Bernauer, T. (2018). Public Opinion on Route 12: Interim report on the first survey on the pilot experiment of an automated bus service in Neuhausen am Rheinfall. ISTP Paper Series 3. ETH Zurich, Zürich, Switzerland.

Yigitcanlar, T., Butler, L., Windle, E., Desouza, K. C., Mehmood, R., and Corchado, J. M. (2020a). Can building “artificially intelligent cities” safeguard humanity from natural disasters, pandemics, and other catastrophes? An urban scholar's perspective. Sensors 20:2988. doi: 10.3390/s20102988

Yigitcanlar, T., Desouza, K. C., Butler, L., and Roozkhosh, F. (2020b). Contributions and risks of Artificial Intelligence (AI) in building smarter cities: insights from a systematic review of the literature. Energies 13:1473. doi: 10.3390/en13061473

Zheng, H., Zhu, J., Xie, W., and Zhong, J. (2021). Reinforcement Learning Assisted Oxygen Therapy for Covid-19 Patients Under Intensive Care. Bethesda, MD: National Institutes of Health. arXiv [preprint]. arXiv:2105.08923v1.

Zmud, J., Sener, I. N., and Wagner, J. (2016). Consumer Acceptance and Travel Behavior: Impacts of Automated Vehicles. Bryan: Texas A&M Transportation Institute. Available online at: https://rosap.ntl.bts.gov/view/dot/32687 (accessed October 6, 2021).

Keywords: automation, morals (morality), ethics, autonomy, artificial intelligence, autonomous vehicle, automated

Citation: Kassens-Noor E, Siegel J and Decaminada T (2021) Choosing Ethics Over Morals: A Possible Determinant to Embracing Artificial Intelligence in Future Urban Mobility. Front. Sustain. Cities 3:723475. doi: 10.3389/frsc.2021.723475

Received: 10 June 2021; Accepted: 23 September 2021;

Published: 28 October 2021.

Edited by:

Federico Cugurullo, Trinity College Dublin, Ireland

Reviewed by:

Ransford A. Acheampong, The University of Manchester, United Kingdom

Luis Alvarez Leon, Dartmouth College, United States

Copyright © 2021 Kassens-Noor, Siegel and Decaminada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: E. Kassens-Noor, ekn@msu.edu
