Bronchoscopy using a head-mounted mixed reality device—a phantom study and a first in-patient user experience

- 1 Department of Thoracic Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway

- 2 Department of Circulation and Medical Imaging, Faculty of Medicine, Norwegian University of Science and Technology (NTNU), Trondheim, Norway

- 3 Department of Health Research, SINTEF Digital, Trondheim, Norway

- 4 Department of Medicine, Levanger Hospital, Nord-Trøndelag Health Trust, Levanger, Norway

- 5 Department of Research, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway

- 6 Department of Computer Science (IDI), Faculty of Information Technology and Electrical Engineering (IE), Norwegian University of Science and Technology (NTNU), Trondheim, Norway

Background: Bronchoscopy for peripheral lung lesions may involve image sources such as computed tomography (CT), fluoroscopy, radial endobronchial ultrasound (R-EBUS), and virtual/electromagnetic navigation bronchoscopy, each typically shown on a separate monitor. Our objective was to evaluate the feasibility of replacing these multiple monitors with a head-mounted display (HMD) that always provides the relevant image data in the bronchoscopist's line of sight.

Methods: A total of 17 pulmonologists wearing an HMD (Microsoft® HoloLens 2) performed bronchoscopy with electromagnetic navigation in a lung phantom. The bronchoscopists first performed endobronchial inspection and navigation to a target, followed by endobronchial ultrasound (EBUS) bronchoscopy. The HMD experience was evaluated with a questionnaire. Finally, the HMD was used for airway inspection and electromagnetic navigation in two patients presenting with hemoptysis.

Results: In the phantom study, the perceived quality of video and ultrasound images was assessed using a visual analog scale, with 100% representing optimal image quality. The score for video quality was 58% (95% confidence interval [CI] 48%–68%) and for ultrasound image quality, the score was 43% (95% CI 30%–56%). Contrast, color rendering, and resolution were all considered suboptimal. Despite adjusting the brightness settings, video image rendering was considered too dark. Navigation to the target for biopsy sampling was accomplished by all participants, with no significant difference in procedure time between experienced and less experienced bronchoscopists. The overall system latency for the image stream was 0.33–0.35 s. Fifteen of the 17 pulmonologists would consider using HoloLens 2 for navigation to peripheral targets, and two would not consider using HoloLens in bronchoscopy at all. In the patient study, airway inspection was feasible in both patients.

Conclusion: Bronchoscopy using an HMD was feasible in a lung phantom and in two patients. Video and ultrasound image quality was considered inferior to that of video monitors. HoloLens 2 was suboptimal for airway and mucosa inspection but may be adequate for virtual bronchoscopy navigation.

1 Introduction

In pulmonary medicine, bronchoscopy plays a crucial role in the inspection of airways and the sampling of lung lesions. However, the bronchoscope has limited access to small-caliber airways, so for peripheral lung tumors the diagnostic yield relies on image modalities other than video alone (Burks and Akulian, 2020). Navigation to the target is aided by computed tomography (CT) scans acquired before the procedure. CT images can be presented as two-dimensional (2D) slices or segmented into a virtual bronchoscopy that serves as a roadmap to a lesion. These images can be integrated into an electromagnetic navigation system with tracked instruments. Additionally, cone-beam CT, fluoroscopy, or endobronchial ultrasound (EBUS) can be used intraoperatively for target confirmation. Currently, all these image modalities are displayed on separate monitors, requiring the bronchoscopist to constantly shift attention between screens. An important clinical motivation is to make these image sources readily available, so that the bronchoscopist does not have to keep turning toward different monitors in a bronchoscopy suite cluttered with screens. The workflow could be improved by always displaying the relevant image source within the bronchoscopist's line of sight on a head-mounted display (HMD) equipped with augmented reality (AR).

In contrast to virtual reality, which provides an entirely artificial environment, AR combines computer-generated virtual elements with the real world. In medicine, these virtual elements can be medical imaging data, allowing complex anatomy to be presented as three-dimensional (3D) holograms. Studies in congenital heart surgery (Brun et al., 2018) and oncologic kidney surgery (Wellens et al., 2019) have shown that surgical planning with 3D visualizations improves the understanding of anatomy.

An increasing number of studies have focused on exploring optical see-through (OST) HMDs such as HoloLens, Epson Moverio, or Magic Leap, especially in the field of surgery. These devices can operate wirelessly and be activated by hand gestures or voice, allowing real-time display of imaging data during invasive procedures. In the surgical context, OST-HMDs have become the preferred choice over video see-through (VST) HMDs, which may introduce time lag and limit the field of view to the camera’s perspective. Given the need for close communication with nearby assistants during bronchoscopy procedures, an OST-HMD would be a natural fit.

In medicine, HoloLens is the most studied optical see-through device (Doughty et al., 2022). HoloLens offers simultaneous localization and mapping (SLAM) using its inertial measurement unit and time-of-flight depth cameras. In neurosurgery, HoloLens has been used to overlay 3D CT images onto patients' heads, providing trajectories for ventricular drainage procedures (Li et al., 2018). External navigation systems, such as electromagnetic (EM) or optical navigation systems, can be integrated with HoloLens to enhance accuracy. Such multimodal navigation systems have been explored in phantom models in both vascular surgery (García-Vázquez et al., 2018) and orthopedics (Condino et al., 2018).

Replacing video monitors with HoloLens was demonstrated by Al Janabi et al. (2020) in simulated ureteroscopy procedures, in which video endoscopy, fluoroscopy, and CT imaging data were displayed in 2D on HoloLens. Compared with conventional monitors, the procedures were more time-efficient and performance was better. However, research on HMDs in pulmonary medicine is scarce. In a recent report, four experienced pulmonologists performed bronchoscopy on a lung phantom using the OST-HMD Moverio BT-35E. The lung phantom was displayed as virtual bronchoscopy on the HMD, showing a centerline to five virtual targets. All targets up to the fifth bronchial division were reached and biopsy sampling was simulated, although without comparison with the traditional monitor approach (Okachi et al., 2022).

Our study aimed to investigate whether the OST-HMD HoloLens could replace conventional video monitors in bronchoscopy. We transferred three types of 2D image data to the HMD: the bronchoscopy video stream, ultrasound images from an EBUS bronchoscope, and navigation data, either segmented from CT scans or shown directly as CT images. We explored the usefulness of an OST-HMD during bronchoscopy in a lung phantom and in two patients, with the goal of assessing how bronchoscopists perceived the OST-HMD experience in terms of image quality, workflow, and ergonomics. This study is a preliminary step toward optimizing bronchoscopy for peripheral lung targets by always displaying the relevant images in the bronchoscopist's line of sight.

2 Materials and methods

2.1 Study design and outcome

In this three-part proof-of-concept study, pulmonologists were recruited to perform mixed reality bronchoscopy with an OST-HMD. Seventeen pulmonologists participated in bronchoscopy performed on a lung phantom. The first part involved regular bronchoscopy with the addition of electromagnetic navigation bronchoscopy (ENB) for navigating to a peripheral target. The second part was EBUS bronchoscopy on the phantom. Finally, the third part involved regular bronchoscopy conducted on two patients by one of the pulmonologists.

The main outcome of the study was the evaluation of user experience by the 17 participating pulmonologists, which was performed using questionnaires with a scoring system. Additionally, the purpose of performing bronchoscopy with OST-HMD on two patients was to examine the feasibility and to refine the quality of the video streams.

2.2 Electromagnetic navigation bronchoscopy

Electromagnetic navigation bronchoscopy is an advanced guidance technique that uses preoperative image data for navigation during real-time bronchoscopy. CT imaging data can be segmented to generate a virtual bronchoscopy that displays a route to the target. With electromagnetic tracking of the bronchoscope, its location within the virtual 3D bronchial tree can be determined during the examination. Alternatively, the tracked bronchoscope can be located in a 2D stack of CT slices.
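Locating a tracked sensor in a 2D stack of CT slices amounts to mapping the sensor position from tracker coordinates into CT coordinates with a registration transform, then picking the nearest slice. The sketch below is a minimal illustration of that idea, not CustusX's implementation. It assumes a 4×4 homogeneous tracker-to-CT transform is already known (in the study it came from centerline-based registration), that axial slices are uniformly spaced along the CT z axis, and that all function names and numeric values are hypothetical.

```python
import math


def transform_point(T, p):
    """Apply a 4x4 homogeneous rigid transform (nested lists, row-major) to a 3D point."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


def axial_slice_index(p_ct, z_origin_mm, spacing_mm, n_slices):
    """Index of the axial CT slice nearest to a point in CT coordinates, clamped to the volume."""
    # Round half up explicitly (Python's round() uses banker's rounding).
    idx = math.floor((p_ct[2] - z_origin_mm) / spacing_mm + 0.5)
    return max(0, min(n_slices - 1, idx))


# Hypothetical tracker-to-CT registration: a pure 10 mm translation along z.
T_tracker_to_ct = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 10],
    [0, 0, 0, 1],
]

# Hypothetical sensor tip position reported by the EM tracker (mm).
tip_ct = transform_point(T_tracker_to_ct, (5.0, 5.0, 20.0))
slice_idx = axial_slice_index(tip_ct, z_origin_mm=0.0, spacing_mm=1.0, n_slices=300)
```

Recomputing `slice_idx` for every new tracker sample is what makes scrolling through the CT stack hands-free: the displayed slice simply follows the sensor tip. Real CT volumes may have a flipped z axis or a non-zero origin, which is why both are parameters here.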

ENB was provided by the open-source research navigation platform CustusX (SINTEF, Trondheim, Norway, http://www.custusx.org) (Askeland et al., 2016) with the Aurora® EM tracking system (Northern Digital Inc., Waterloo, ON, Canada). An EM tracking sensor with six degrees of freedom (DOF) (Aurora, Northern Digital Inc., Waterloo, ON, Canada) was attached to the tip of the bronchoscope. Preoperative CT imaging data of both the phantom and the patients underwent automated airway segmentation in CustusX, which provided virtual bronchoscopy and a centerline route to the target (Lervik Bakeng et al., 2019). Registration of the CT images to the position of the phantom or patient on the table was based on automated centerline estimation from the tracked bronchoscope during the initial phase of the procedures (Hofstad et al., 2014). CustusX can combine and display both preoperative and real-time intraoperative images, and its feasibility in bronchoscopy has been demonstrated in two studies by Sorger et al.: the overall navigation accuracy was 10.0 ± 3.8 mm in a patient feasibility study (Sorger et al., 2017), and the mean target error in an earlier lung phantom study was 2.8 ± 1.0 mm (Sorger et al., 2015).
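A centerline route to a target can be understood as a path through the segmented airway tree, from the trachea to the centerline point closest to the target. The following is a simplified sketch of that concept and not the planning method of Lervik Bakeng et al. (2019). It assumes the airway centerline is stored as a tree of nodes with parent pointers and 3D positions; the node names and coordinates are hypothetical.

```python
import math


def nearest_node(positions, target):
    """Centerline node whose position is closest (Euclidean) to the target."""
    return min(positions, key=lambda node: math.dist(positions[node], target))


def route_to_target(parent, positions, target):
    """Path from the tree root (trachea) to the centerline node nearest the target."""
    node = nearest_node(positions, target)
    path = []
    while node is not None:
        path.append(node)
        node = parent[node]  # walk back toward the root
    path.reverse()
    return path


# Hypothetical, heavily simplified airway tree (positions in mm).
parent = {"trachea": None, "RMB": "trachea", "LMB": "trachea", "RLL": "RMB"}
positions = {
    "trachea": (0.0, 0.0, 0.0),
    "RMB": (10.0, 0.0, -20.0),
    "LMB": (-10.0, 0.0, -20.0),
    "RLL": (15.0, 0.0, -60.0),
}
route = route_to_target(parent, positions, target=(16.0, 1.0, -65.0))
```

Because each airway branch has exactly one parent, walking back from the end node gives the unique route through the tree; a real planner would also have to handle targets lying outside the segmented airways.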

2.3 Head-mounted display and image stream transmission

We used Microsoft® HoloLens 2 (Microsoft Corp, Redmond, WA, United States) in the lung phantom study and with the second patient; HoloLens 1 was used with the first patient. Two image sources, virtual bronchoscopy with EM navigation and the bronchoscopy video, were presented on the semi-transparent displays of HoloLens, with ultrasound images added in the EBUS part of the study. The image sources followed the operator's line of sight instead of being fixed on conventional video monitors.

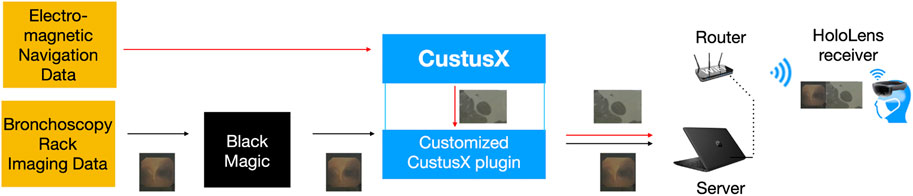

The electronic transmission pathways of image streams are depicted in Figure 1. The bronchoscopy video stream was captured by Blackmagic Web Presenter (Blackmagic Design, Fremont, CA, United States) and transferred to CustusX, which also generated the ENB video stream. Through a custom C++ CustusX plugin, both video streams were sent by WiFi to HoloLens and presented side-by-side in the line of sight of the operator. Additional image processing such as scaling, zooming, and down-sizing could be defined for each image source.
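The text does not describe the wire format used by the custom CustusX plugin. To illustrate what sending two independent image streams over one network connection involves, the sketch below uses a hypothetical length-prefixed framing: each frame carries a small header (stream ID, width, height, payload length) followed by raw pixel bytes, so the receiver can tell video frames from navigation frames. The header layout and every name here are assumptions, not the study's protocol.

```python
import io
import struct

# Hypothetical header: stream id (uint8), width (uint16), height (uint16),
# payload length in bytes (uint32), all in network byte order.
HEADER = struct.Struct("!BHHI")


def encode_frame(stream_id, width, height, payload):
    """Prefix raw image bytes with the header so frames can be split on the receiving side."""
    return HEADER.pack(stream_id, width, height, len(payload)) + payload


def read_exact(stream, n):
    """Read exactly n bytes, since a network read may return fewer bytes than requested."""
    buf = b""
    while len(buf) < n:
        chunk = stream.read(n - len(buf))
        if not chunk:
            raise EOFError("stream closed mid-frame")
        buf += chunk
    return buf


def decode_frame(stream):
    """Read one frame and return (stream_id, width, height, payload)."""
    stream_id, width, height, length = HEADER.unpack(read_exact(stream, HEADER.size))
    return stream_id, width, height, read_exact(stream, length)


# Usage: interleaved frames from stream 0 (video) and stream 1 (navigation).
buffer = io.BytesIO(encode_frame(0, 4, 2, b"abcdefgh") + encode_frame(1, 2, 2, b"wxyz"))
first = decode_frame(buffer)
second = decode_frame(buffer)
```

With a TCP socket, `socket.makefile("rb")` returns a file-like object that can be passed to `decode_frame` in place of the in-memory buffer.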

FIGURE 1. Electronic transmission pathways of images provided from the bronchoscopy rack and electromagnetic navigation data are shown as endoscopy video and 2D CT slice data (used in patient study), respectively. The image sources are finally put together and displayed on the HoloLens.

On the HMD, a HoloLens application was implemented in Unity (Unity Technologies, California, United States). To receive the video streams over WiFi, a video receiver was implemented in C# within the application. Each image stream was converted to a Unity-compatible texture and applied to plane objects in the Unity scene: two rectangles, one for each video stream. The planes were presented within the device's field of view, which is 34° on HoloLens 1 and 52° on HoloLens 2.
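How large the two side-by-side planes can be before they are clipped depends on the field of view and on how far away they are placed. The sketch below works through that geometry under stated assumptions. It treats the quoted field of view as diagonal, assumes a rectilinear projection, and takes the display aspect ratio and viewing distance as inputs that should be replaced with the device's actual values. The 16:9 plane aspect and all function names are hypothetical.

```python
import math


def visible_extent(diag_fov_deg, aspect_w, aspect_h, distance_m):
    """Width and height (m) of the region visible at distance_m, given a diagonal FOV."""
    half_diag = distance_m * math.tan(math.radians(diag_fov_deg) / 2)
    diag_units = math.hypot(aspect_w, aspect_h)
    width = 2 * half_diag * aspect_w / diag_units
    height = 2 * half_diag * aspect_h / diag_units
    return width, height


def side_by_side_planes(diag_fov_deg, aspect_w, aspect_h, distance_m, plane_aspect=16 / 9):
    """Largest (width, height) of two equal planes placed side by side that both fit in view."""
    width, height = visible_extent(diag_fov_deg, aspect_w, aspect_h, distance_m)
    # Limited either by half the visible width or by the full visible height.
    plane_w = min(width / 2, height * plane_aspect)
    return plane_w, plane_w / plane_aspect
```

For example, `side_by_side_planes(52, 3, 2, 1.5)` gives the largest plane size for a 52° diagonal field of view at 1.5 m under an assumed 3:2 display aspect. Because visible width scales linearly with distance, moving the planes farther away does not change how much of the field of view they occupy, only their size in the scene.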

Running two video streams simultaneously limits image rendering and frame rate. The HoloLens WiFi bandwidth is sufficient for two video sources at a frame rate of 20 Hz and an image resolution of 720p, whereas the Olympus video processing unit outputs 30 Hz at 1080p. PNG compression/decompression is available to reduce bandwidth usage, but it was not used here because it further increases transmission lag. Images were transmitted using the YUV color model over a local network in the 5 GHz band. The HoloLens WiFi proved to be the bottleneck for streaming latency.
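Because the frames were sent without compression, the required network throughput follows directly from resolution, frame rate, and pixel format. The arithmetic below shows why the 1080p, 30 Hz source had to be reduced. The text states only that YUV was used, so the chroma subsampling (and therefore the bytes per pixel) is an assumption, as is reading "720p" as 1280 × 720 pixels.

```python
# Bytes per pixel for common 8-bit YUV layouts (which layout was used is an assumption).
BYTES_PER_PIXEL = {"yuv444": 3.0, "yuv422": 2.0, "yuv420": 1.5}


def raw_bitrate_mbps(width, height, fps, layout, n_streams=1):
    """Uncompressed video bit rate in Mbit/s."""
    bits_per_frame = width * height * BYTES_PER_PIXEL[layout] * 8
    return bits_per_frame * fps * n_streams / 1e6


scenarios = {
    "two streams, 720p, 20 Hz, YUV 4:2:2": raw_bitrate_mbps(1280, 720, 20, "yuv422", n_streams=2),
    "two streams, 720p, 20 Hz, YUV 4:2:0": raw_bitrate_mbps(1280, 720, 20, "yuv420", n_streams=2),
    "one stream, 1080p, 30 Hz, YUV 4:2:2": raw_bitrate_mbps(1920, 1080, 30, "yuv422"),
}
```

Under these assumptions, two 720p streams at 20 Hz need about 590 Mbit/s (4:2:2) or 442 Mbit/s (4:2:0), while a single 1080p stream at 30 Hz already needs about 995 Mbit/s. This illustrates why lowering resolution and frame rate, rather than compressing, was the way to stay within the headset's WiFi capacity without adding lag.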

With HoloLens 1, the image delay for the first patient (Patient I) was noticeably long. HoloLens 2 offers more memory, a faster CPU, and improved WiFi capabilities, and when the application was ported to HoloLens 2, further optimizations to reduce transmission lag were added to the streaming code. Each image source was captured and received in an independent thread to minimize delay. The latency was estimated from a video recorded with HoloLens 2 that showed the bronchoscopy video monitor.
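Two ideas from this paragraph can be sketched in a few lines. First, one receiver thread per image source, so that a slow stream does not hold up the other. The sketch additionally assumes a "latest frame only" policy, in which each thread overwrites a shared slot instead of queueing frames, so the renderer never displays a stale backlog; the text does not say whether the study did this. Second, estimating latency from a recording: if the delay appears as an offset of N frames between the monitor and the streamed image, the latency is N divided by the recording frame rate, with a resolution of one frame period. The capture frame rate and all names are hypothetical.

```python
import threading


class LatestFrameSlot:
    """Holds only the most recent frame; older frames are overwritten, not queued."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None
        self._count = 0  # number of frames received so far

    def put(self, frame):
        with self._lock:
            self._frame = frame
            self._count += 1

    def get(self):
        with self._lock:
            return self._count, self._frame


def receive_loop(source, slot):
    """Hypothetical receiver: `source` is any iterable yielding decoded frames."""
    for frame in source:
        slot.put(frame)


def latency_from_frame_offset(offset_frames, capture_fps):
    """Latency estimate (s) and its one-frame resolution (s) from a recording."""
    period = 1.0 / capture_fps
    return offset_frames * period, period


# Usage: one thread per image source, each feeding its own slot.
slots = {"video": LatestFrameSlot(), "navigation": LatestFrameSlot()}
sources = {
    "video": [f"video-{i}" for i in range(5)],
    "navigation": [f"nav-{i}" for i in range(3)],
}
threads = [
    threading.Thread(target=receive_loop, args=(sources[name], slots[name]))
    for name in slots
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

At an assumed 30 fps recording, a 10-frame offset corresponds to about 0.33 s, and the measurement cannot resolve differences smaller than about 0.033 s. A range such as 0.33–0.35 s is therefore about as fine as a frame-counting estimate can report.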

2.4 Additional equipment used in the studies

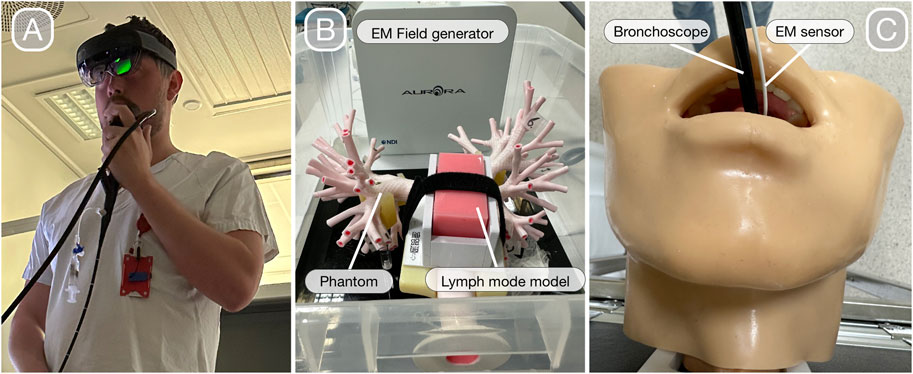

• Lung phantom: Ultrasonic Bronchoscopy Simulator LM-099, KOKEN CO., LTD, Tokyo, Japan (Figure 2)

• Conventional video bronchoscopes:

o Olympus BF-P180 (phantom study)

o Olympus BF-H190, EVIS EXERA III, diameter 6 mm, working channel 2.0 mm (Patient I)

o Olympus BF-P190, EVIS EXERA III, diameter 4.1 mm, working channel 2.0 mm (Patient II)

• EBUS bronchoscope (Olympus BF-UC180F)

• Bronchoscopy rack (all from Olympus, Tokyo, Japan):

o Video processing unit (Olympus EVIS EXERA III CV-190 Plus)

o Light source (Olympus EVIS EXERA III CLV-190)

o Ultrasound processing unit for EBUS (Olympus EVIS EUS EU-ME2)

FIGURE 2. Bronchoscopy with HoloLens 2 (A). Lung phantom with the lymph node model for regular bronchoscopy and EBUS with an electromagnetic field generator in the background that enables navigated bronchoscopy (B). Extension of the lung phantom with upper airways for bronchoscope intubation (C).

2.5 Experimental setup of the phantom study

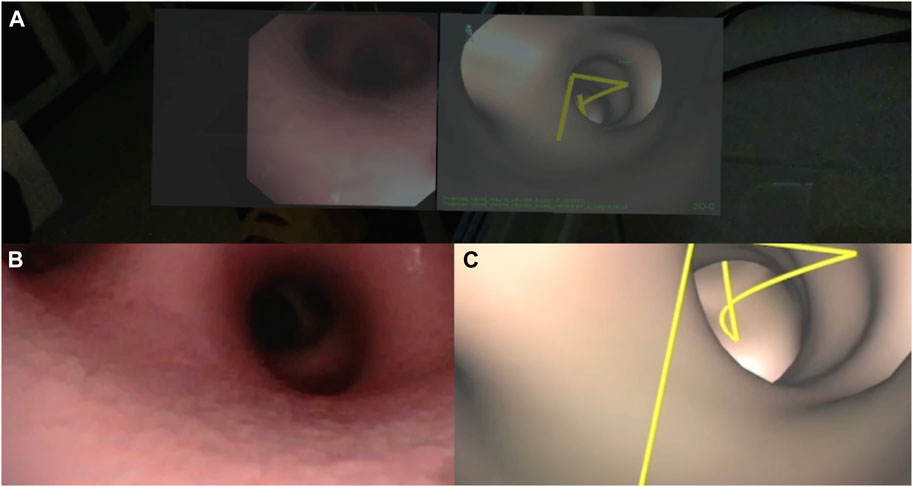

A lung phantom that included upper airways was examined with a conventional video bronchoscope (Figures 2B, C). First, the pulmonologists intubated the phantom with the bronchoscope and inspected the left lung using the video monitor. The same procedure was then repeated with HoloLens 2 for comparison. Next, they were asked to perform one endobronchial forceps biopsy of a target located in front of the fourth bronchial division in the right lower lobe while using HoloLens 2. During the sampling maneuver, the bronchoscopy video was presented side-by-side with ENB displayed as virtual bronchoscopy guidance to the target (Figure 3). The lighting in the bronchoscopy suite was the same for all participants and darker than during regular bronchoscopy. The brightness of HoloLens 2 was set one step below the highest level, because the highest level caused disturbing color saturation.

FIGURE 3. Video bronchoscopy and virtual bronchoscopy with a yellow centerline to target. Screenshot from HoloLens 2 (A) during the lung phantom study with the video on the left and virtual bronchoscopy on the right. The lower left shows the corresponding bronchoscopy video rendered on the video monitor (B) and the lower right shows virtual bronchoscopy from ENB displayed on the navigation platform CustusX (C).

Finally, artificial lymph nodes in the lung phantom were examined using an EBUS bronchoscope. The participant was presented with the bronchoscopy video and endobronchial ultrasound images displayed on the monitor for comparison. Subsequently, the ultrasound image was rendered on HoloLens 2 for the participant’s evaluation.

2.6 User experience evaluation and statistics

User experience was evaluated with a questionnaire completed immediately after the phantom test. The questionnaire focused on the perceived image quality of the video and ultrasound presented on HoloLens 2. Image quality was assessed with a visual analog scale (VAS) that had been validated in a multi-center study evaluating single-use bronchoscopes (Flandes et al., 2020). The VAS was also used to rate the difficulty of intubation. Potential adverse symptoms experienced by the operators were evaluated using a seven-point Likert scale taken from a validated virtual reality neuroscience questionnaire (Kourtesis et al., 2019); the same scale was used to assess color rendering perception and the difficulty of handling the biopsy forceps. Other aspects evaluated included brightness perception (too light/convenient/too dark), whether there were any annoying image delays (yes/no), whether HoloLens 2 would be too heavy for prolonged procedures of 45–60 min (too heavy/not too heavy), the expected impact of HoloLens 2 on procedure duration (potentially shortened/same as with monitors/prolonged), and the situations in which HoloLens 2 could replace the regular monitor (inspecting the bronchial tree/navigation to peripheral targets/no situations). Each participant's experience with bronchoscopy and EBUS bronchoscopy was recorded and classified as novice (<10 procedures), intermediate (10–500), or expert (>500). Procedural time was measured and compared between expert bronchoscopists and the groups with less experience.
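The VAS results are reported as means with 95% confidence intervals. The text does not state how the intervals were computed; a common choice for a small sample is the t-based interval, mean ± t × SD / √n, sketched below. The critical value is passed in rather than computed, to avoid a dependency on a statistics library; 2.120 is the two-sided 95% value of Student's t with 16 degrees of freedom (n = 17). The function name and example scores are hypothetical, not the study data.

```python
import math
import statistics


def mean_ci(values, t_crit):
    """Mean and t-based confidence interval: mean +/- t_crit * SD / sqrt(n)."""
    n = len(values)
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(n)  # sample SD (n - 1 denominator)
    return m, m - t_crit * se, m + t_crit * se


# Two-sided 95% critical value of Student's t with n - 1 = 16 degrees of freedom.
T_CRIT_DF16 = 2.120

# Usage with hypothetical VAS scores (%) from 17 raters.
example_scores = [55, 60, 70, 40, 65, 58, 62, 45, 50, 75, 68, 52, 48, 60, 57, 63, 59]
example_mean, example_lo, example_hi = mean_ci(example_scores, T_CRIT_DF16)
```

With 17 raters the interval is roughly ±1.06 standard deviations wide around the mean, which is why VAS ranges such as 48%–68% are fairly broad even when the spread of scores is moderate.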

Statistical analyses were performed using GraphPad Prism software, version 9.5.1. The procedural time between groups with different levels of expertise was compared using the Mann–Whitney U test with a significance level of 5%.
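The Mann–Whitney U test compares two independent groups by ranking all observations together and checking whether one group's ranks are systematically higher. The sketch below computes U with average ranks for ties and a two-sided p-value from the normal approximation without tie or continuity correction. This is a simplification: for small groups, software such as GraphPad Prism may report an exact p-value instead, so results can differ. Function names and example data are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values given the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """U statistic (smaller of U1, U2) and two-sided normal-approximation p-value."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, p


# Usage with hypothetical procedure times (s) for two experience groups.
u_example, p_example = mann_whitney_u([95, 130, 110, 150, 88], [100, 105, 120, 98])
```

The test is rank-based, so a single very slow participant cannot dominate the comparison the way it would with a t-test, which suits small groups with skewed procedure times.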

2.7 Patient study

The clinical study involved two patients referred to the Department of Thoracic Medicine with hemoptysis. We chose to include patients with hemoptysis because this condition requires bronchial inspection, the procedures are relatively short, and invasive sampling is rarely needed. Neither patient had CT findings that could explain the episodes of hemoptysis. The bronchoscopies were performed under moderate sedation using midazolam 3–4 mg IV and alfentanil 0.75 mg IV.

Patient I was a 45-year-old woman with asthma and hemoptysis. The purpose of the bronchoscopy was to inspect the airways. On HoloLens 1, the pulmonologist was presented with the bronchoscopy video along with EM navigation displayed as axial CT slices. The electromagnetic sensor attached to the bronchoscope enabled real-time, hands-free scrolling of the CT slices.

Patient II was a 32-year-old man with hemoptysis. The primary purpose of the bronchoscopy was to inspect the airways. To test the rendering of EM navigation on HoloLens 2 more extensively, three target spots were marked in the preprocedural CT: one in each upper lobe and one in the left lower lobe, positioned up to the fourth bronchial generation. For each target, a yellow centerline route indicated the path to reach it. A thinner bronchoscope was used to reach these targets. During the bronchoscopy, the EM navigation data were displayed as axial CT images. The EM field generator was placed lateral to the patient's right arm.

2.8 Ethics approval

The patient study was approved by the Regional Ethical Committee (REC 193927). The two patients provided informed consent to participate. In order to ensure patient safety, an additional pulmonologist was present, observing the bronchoscopy procedure on a conventional video monitor.

3 Results

3.1 Phantom study

In the lung phantom study, the 17 participating pulmonologists were aged 34–67 years (mean 47 years). Eight were classified as experts, having previously performed more than 500 bronchoscopies. None of the participants were novices in bronchoscopy, although two had performed fewer than 100 bronchoscopies. However, four of the 17 participants were novices in EBUS bronchoscopy.

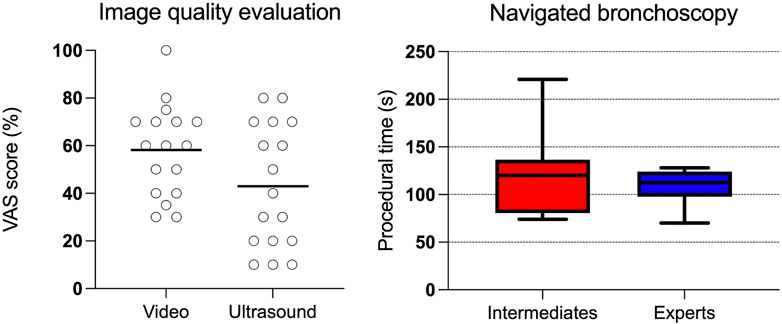

The difficulty of bronchoscope intubation using HoloLens 2 was graded on a scale from 0% (extremely easy) to 100% (extremely difficult); the average score was 27% (95% confidence interval [CI] 16%–37%). The average VAS scores for global image quality (from 0%, no image, to 100%, optimal image quality) were 58% (95% CI 48%–68%) for the bronchoscopy video and 43% (95% CI 30%–56%) for ultrasound (Figure 4). The average scores for video bronchoscopy image resolution and image contrast were 56% (95% CI 47%–65%) and 52% (95% CI 44%–60%), respectively. Participants rated the color rendering quality, in terms of uncovering pathology, as low to neutral. Eleven of the 17 participants considered the video images too dark (Figure 3A), while for EBUS, five participants found the images too dark and three found them too bright. Five of the 17 pulmonologists reported being annoyed by image latency. The image delay with HoloLens 2 in the phantom study and with the second patient (Patient II) was 0.33–0.35 s.

FIGURE 4. Dot plot (left) showing user evaluation of the video and ultrasound bronchoscopy image quality with a VAS score, with 100% being optimal image quality. The box plot (right) shows procedural time for navigating to the target in the right lower lobe with biopsy forceps sampling. Participants with intermediate experience (intermediates) had performed 10–500 bronchoscopies and experts more than 500.

The mean procedural time, from the phantom's mouth to the right lower lobe target with biopsy forceps, was 119 s (95% CI 84–154 s) for the group with intermediate experience and 109 s (95% CI 93–125 s) for experts (Figure 4). There was no statistically significant difference between the two groups (p = 0.67). All participants successfully navigated to the target for biopsy sampling. Handling the biopsy forceps while using HoloLens 2 was rated neutral in terms of difficulty (neither easy nor difficult). Five participants believed that bronchoscopy procedures would be prolonged with HoloLens 2, while five others believed that they would be shortened.

Two of the pulmonologists found HoloLens 2 too heavy for procedures lasting 45–60 min. Regarding adverse symptoms, six participants reported mild to very mild dizziness and two reported mild nausea during the procedures. Fifteen of the 17 pulmonologists considered HoloLens 2 suitable for replacing the monitors during navigation to peripheral targets, one considered it suitable for bronchial inspection, and two considered it unsuitable for any situation.

3.2 Patient study

Patient I: During bronchoscopy with HoloLens 1, a noticeable transmission delay (>0.5 s) made orientation difficult, and the video could not keep up with the relatively fast movements of a standard bronchoscopy. No pathology was found, but for safety reasons the bronchial inspection had to rely on a second pulmonologist viewing the conventional video monitor. The EM tracking was displayed as 2D CT slices derived from the positions of the tracked bronchoscope. To enable scrolling of the CT slices, an EM tracking sensor was inserted into the bronchoscope's working channel.

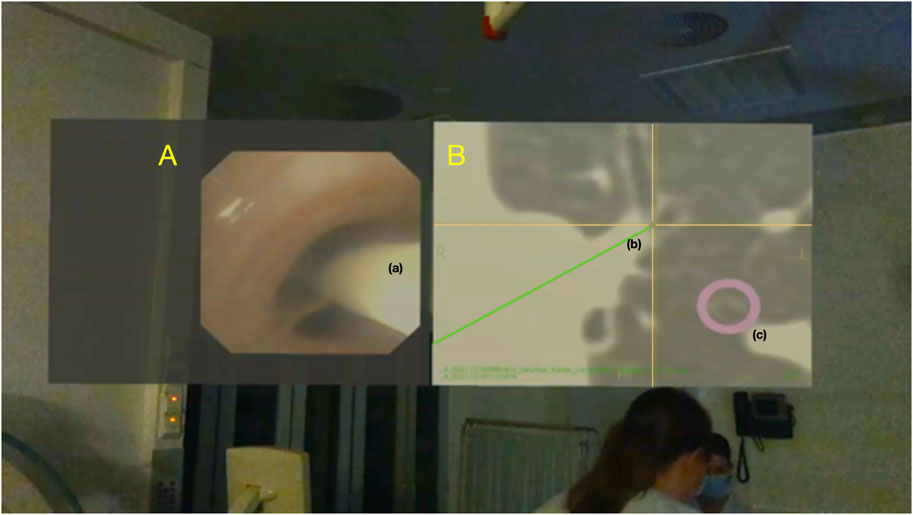

Patient II: HoloLens 2 was used, and the video-to-HoloLens transmission delay was 0.33–0.35 s, similar to the phantom study. With the transmission delay accounted for, the bronchoscopy proceeded without complications and followed an ordinary workflow. No intrabronchial pathology was found. The default brightness of the bronchoscopy video displayed on HoloLens was considered too low, and an improvement was suggested. During the bronchoscopy, the pulmonologist reached the target in the right upper lobe using the axial CT plane tracked by the EM sensor in the working channel. However, the patient had type 1 diabetes mellitus and wore an intradermal glucose monitor, which distorted the electromagnetic field and made reliable navigation to the other targets difficult. Nevertheless, the rendering of CT imaging data on HoloLens was considered adequate (Figure 5).

FIGURE 5. Screenshot from HoloLens 2 used with patient II, showing the bronchoscopy video (A) with a 6-DOF tracking sensor (a) advanced through a subsegmental bronchus. The position of the sensor tip (a) is displayed in the axial plane CT (B) as the center of a yellow cross (b) superimposed on the patient’s bronchial tree. The assigned target is marked as a purple ring (c).

4 Discussion

All 17 pulmonologists successfully performed navigated bronchoscopy with targeted forceps biopsy on a lung phantom, and 15 expressed willingness to replace the video monitor with an HMD (HoloLens 2) for this purpose. Navigation with the HMD was easily adopted, with no significant difference in procedural time between the most experienced bronchoscopists and the less experienced ones. The patient study demonstrated the feasibility of using HoloLens, and HoloLens 2, with its more capable hardware, was more effective in minimizing video transmission delay and maintaining an ordinary workflow. The noticeably long image transmission delay with HoloLens 1 in the first patient made it difficult to keep up with the natural pace of a bronchoscopy.

The overall image quality, as perceived by the pulmonologists, was considered suboptimal for both video and ultrasound. The quality of video images received a VAS score of 58 out of 100. In comparison, a Spanish multi-center study evaluating a disposable single-use bronchoscope (Ambu aScope4™) with known inferior image quality reported a score of 80 out of 100 (Flandes et al., 2020). Various factors related to image quality seemed to contribute to the result of our study. The low luminance of microdisplays in the OST-HMD is a known challenge (Doughty et al., 2022), which was also observed in our study, despite manually optimizing the brightness setting on HoloLens 2 as the automated default brightness appeared to be too low. Additionally, ambient lighting in the study setting was reduced to a minimum to mitigate this issue.

One of the pulmonologists commented on the poor control of brightness intensity, which made dark areas appear too dark and bright areas too bright within the image frame. Significant non-uniformity of luminance across virtual images displayed on HoloLens 2 has been reported (Chen et al., 2021). This non-uniformity can also affect color rendering, which was rated low to neutral in our study. Non-uniformity can be mitigated by flat-field correction, in which software compensates for the luminance non-uniformity (Johnson A. A. et al., 2022). Implementing such corrections could potentially improve the perceived image quality.
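A generic form of software flat-field correction for a display is to measure the relative luminance the display produces at each pixel, then scale each input pixel so that every pixel ends up at the level of the dimmest region. The sketch below shows that idea; it is not necessarily the method of Johnson A. A. et al. (2022). It assumes the luminance map has already been measured (for example with a camera looking through the display), that pixel values are in linear light rather than gamma-encoded, and that the names and values are hypothetical.

```python
def flat_field_gain(luminance_map):
    """Per-pixel gain that equalizes a measured relative luminance map.

    Every pixel is scaled down to the dimmest measured value, so the displayed
    image becomes uniform at the cost of overall brightness.
    """
    floor = min(min(row) for row in luminance_map)
    if floor <= 0:
        raise ValueError("luminance map must be strictly positive")
    return [[floor / value for value in row] for row in luminance_map]


def precompensate(image, gain):
    """Multiply a linear-light image (nested lists) by the per-pixel gain."""
    return [
        [pixel * g for pixel, g in zip(image_row, gain_row)]
        for image_row, gain_row in zip(image, gain)
    ]


# Hypothetical 2x2 luminance map measured on the display (1.0 = brightest point).
luminance = [[1.0, 0.5], [0.8, 0.5]]
image = [[200.0, 200.0], [200.0, 200.0]]
corrected = precompensate(image, flat_field_gain(luminance))

# What the viewer would see: the corrected input times the display's luminance response.
perceived = [[c * l for c, l in zip(cr, lr)] for cr, lr in zip(corrected, luminance)]
```

The design trade-off is visible in the example: uniformity is achieved by dimming the brighter regions (here every pixel ends up at half the original peak). On a device whose main weakness is already low luminance, flat-field correction alone could therefore make images look darker, so it would likely need to be combined with a brighter display or darker surroundings.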

In a study comparing AR OST-HMDs in surgery, HoloLens 1 performed better in contrast perception than other OST-HMDs such as ODG R-7 and Moverio BT-200 (Qian et al., 2017). HoloLens 1 renders images with a liquid crystal on silicon (LCoS) display, whereas HoloLens 2 uses laser beam scanning (LBS) display technology, so these findings may not transfer directly. In our study with HoloLens 2, the perceived image contrast was suboptimal. It is possible that the combination of low luminance and luminance non-uniformity influenced the assessment of contrast rendering. The poor perceived ultrasound image quality could also be influenced by these factors, as well as by the difficulty of examining the lymph node model of the lung phantom itself (ultrasound imaging is easier in a patient setting). Notably, four of the participating pulmonologists were novices in EBUS.

Excellent image rendering is crucial for mucosal inspection of the bronchial tree in patients. However, our study indicates that HoloLens 2 may not be the best OST-HMD for rendering endoscopic video images during bronchoscopy. The newer Magic Leap 2, with its LCoS display and dynamic dimming function, may better address the problem of low luminance. Additionally, the Moverio BT-35E, which has already been studied in bronchoscopy (Okachi et al., 2022), comes with flip-up shades that reduce the impact of ambient light. Another option could be a VST-HMD: compared with surgery, an uninterrupted view of the surroundings or of real-world elements is less important in bronchoscopy. In contrast to the bronchoscopy video, virtual bronchoscopy for navigation on HoloLens 2 was well received by the pulmonologists.

Image transmission delay is important when invasive tissue sampling is performed. In a study by Nguyen et al. using ultrasound images on HoloLens 1, a latency of 0.080 s was considered too long for real-time ultrasound-guided sampling (Nguyen et al., 2021). In a recent study of ultrasound combined with machine learning-driven lesion segmentation and tracking information on HoloLens 2, the latency was estimated to be 0.143 s (Costa et al., 2023). In our study with HoloLens 2, the transmission delay was estimated to be 0.33–0.35 s, making it unsuitable for real-time ultrasound-guided needle sampling. Although five of the pulmonologists in our study were annoyed by the image delay, it did not interfere with the assigned task of video-supported biopsy forceps sampling.

Two of the pulmonologists found HoloLens 2 too heavy to wear for procedures lasting 45–60 min, and six reported mild to very mild discomfort, such as dizziness and nausea, while wearing the device. In studies of electromagnetic navigation bronchoscopy (ENB) with sampling of peripheral tumors, the mean total procedure time was 27 min with ENB alone (Eberhardt et al., 2007) and 51 min with the addition of fluoroscopy (Gildea et al., 2006). In complex bronchial interventions of such durations, wearing HoloLens 2 may lead to more frequent discomfort. In the endoscopy study of Al Janabi et al. (2020), mild discomfort from using HoloLens was reported by 10% of the participants, with eye fatigue and neck strain being the most common complaints. In the study of Okachi et al. (2022), none of the four pulmonologists experienced eye or body fatigue during or after bronchoscopy using Moverio BT-35E, although the duration of the procedures was not reported. The 2–3 h battery life of HoloLens 2 (Microsoft, 2022) is not expected to limit bronchoscopy procedures.

The patient study is limited by the inclusion of only two patients and one examining pulmonologist. The user experience may vary among bronchoscopists and with the type of bronchoscopy procedure performed. Target accuracy was not specifically addressed in our study, as it relies on an external EM navigation system rather than on the navigation capabilities of HoloLens 2; the accuracy of that navigation system has been studied elsewhere (Sorger et al., 2017). Ambient lighting in the bronchoscopy suite was set darker than usual during our study, and results may differ under brighter conditions. Our study also did not include fluoroscopy or the other image modalities used for navigation and target confirmation in current bronchoscopy research, such as cone-beam CT and augmented fluoroscopy (Burks and Akulian, 2020).

A limitation of the phantom study is that procedural time using HoloLens 2 was not compared with that of the standard monitor approach. However, we argue that in these procedures, the pulmonologists’ understanding of 3D bronchial anatomy is more important than procedural time. If line-of-sight access to different image modalities during bronchoscopy improves this understanding, it would have significant clinical implications. Mirroring the mixed results of studies that have measured procedural time, the pulmonologists in our study had divided opinions on whether procedural times would be shortened with an HMD. In a study of orthopedic surgery using fluoroscopy on a tibia model, procedure time did not decrease with the use of an HMD, and the number of fluoroscopy images taken remained the same. However, more head turns were observed with the conventional monitor approach (Johnson A. A. et al., 2022). When tracking information is added to an HMD, procedure time has been found to either stay the same or increase, as seen in studies of robotic-assisted surgery (Iqbal et al., 2021) and ultrasound combined with machine-learning segmentation (Costa et al., 2023). On the other hand, the ureteroscopy study of Al Janabi et al. (2020) showed a 73-s reduction in procedural time and improved outcomes. In a small study of six patients undergoing percutaneous dilatational tracheostomy, displaying the bronchoscopy video on an HMD (Airscouter WD-200B) was perceived to make the procedures faster and safer, as reorientation to a monitor was unnecessary (Gan et al., 2019).

In our study, the video monitor was presented in 2D on the HMD display. The use of AR with 3D lung anatomy during thoracic cancer surgery has been studied by Li et al. (2020) in 142 patients undergoing lung cancer surgery. In endoscopy, a study comparing 2D with 3D vision showed that the number of errors and the time spent on various manual tasks were reduced with 3D vision on an HMD (Stewart et al., 2022). We cannot rule out the benefit of providing a real-time bird’s-eye view to the bronchoscopist navigating the small airways with tracked instruments (without camera access), for example by superimposing the 3D bronchial tree on the patient’s chest. Furthermore, seamless switching between image views and modalities on HoloLens could be achieved with voice commands or other, more automated methods. Future studies could implement newer image sources and techniques for bronchoscopy navigation and adopt newer mixed reality devices, as this technology is still in its early stages.
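
One hypothetical way to organize such switching is a small dispatcher that maps recognized voice phrases, and optionally events from tracked instruments, to the image source shown on the HMD. The Python sketch below is illustrative only: the view names, the spoken phrases, and event names such as `radial_probe_inserted` are assumptions, and it does not use any HoloLens or speech-recognition API. In practice, the phrases would come from the headset's speech recognizer and the events from the navigation system.

```python
from enum import Enum


class View(Enum):
    """HYPOTHETICAL image sources that could be routed to the HMD."""
    VIDEO = "bronchoscope video"
    ULTRASOUND = "R-EBUS ultrasound"
    NAVIGATION = "EM navigation / virtual bronchoscopy"
    CT = "CT slices"


# HYPOTHETICAL voice commands: normalized phrase -> view to display.
VOICE_COMMANDS = {
    "show video": View.VIDEO,
    "show ultrasound": View.ULTRASOUND,
    "show navigation": View.NAVIGATION,
    "show ct": View.CT,
}

# HYPOTHETICAL automated rules: instrument event -> view to display.
AUTO_RULES = {
    "radial_probe_inserted": View.ULTRASOUND,
    "radial_probe_removed": View.VIDEO,
}


class ViewSwitcher:
    """Tracks the active view and switches on commands or events."""

    def __init__(self, initial: View = View.VIDEO) -> None:
        self.active = initial
        self.history: list[View] = [initial]

    def _switch_to(self, target: View) -> View:
        # Record a switch only when the view actually changes.
        if target is not self.active:
            self.active = target
            self.history.append(target)
        return self.active

    def on_phrase(self, phrase: str) -> View:
        """Handle a recognized phrase; unknown phrases leave the view as is."""
        normalized = " ".join(phrase.lower().split())
        if normalized == "go back" and len(self.history) > 1:
            self.history.pop()
            self.active = self.history[-1]
            return self.active
        if normalized in VOICE_COMMANDS:
            return self._switch_to(VOICE_COMMANDS[normalized])
        return self.active

    def on_event(self, event: str) -> View:
        """Handle an instrument event; unknown events are ignored."""
        if event in AUTO_RULES:
            return self._switch_to(AUTO_RULES[event])
        return self.active
```

Ignoring unrecognized phrases keeps ordinary conversation in the bronchoscopy suite from changing the display, while the event rules illustrate how some switches could happen without any command at all.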

5 Conclusion

Replacing video monitors with an HMD (HoloLens 2) for displaying multiple image sources in bronchoscopy was feasible in a lung phantom and in two patients. However, the quality of video and ultrasound images on the HMD was considered inferior to that on conventional video monitors. HoloLens 2 was deemed suboptimal for airway and mucosa inspection but may be suitable for virtual bronchoscopy navigation.

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Regional Ethical Committee (REC Central) 193927. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

AK-A, EH, and GK implemented the bronchoscopy experiments with HoloLens and electromagnetic navigation. AK-A and GK wrote the manuscript draft. GK developed the software for image transmission to HoloLens. HL, TL, TA, and HS have supervised the work. All authors contributed to the article and approved the submitted version.

Funding

The study was funded by the joint research committee, St. Olavs hospital and Faculty of Medicine, Norwegian University of Science and Technology (NTNU), SINTEF and Department of Research, St. Olavs hospital.

Acknowledgments

The authors would like to thank Jan Gunnar Skogås, Department of Research, St. Olavs hospital for providing study equipment. Furthermore, they would like to thank Marit Setvik and Solfrid Meier Løwensprung, Department of Thoracic Medicine, St. Olavs hospital for their contribution in assisting the bronchoscopies.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al Janabi, H. F., Aydin, A., Palaneer, S., Macchione, N., Al-Jabir, A., Khan, M. S., et al. (2020). Effectiveness of the HoloLens mixed-reality headset in minimally invasive surgery: A simulation-based feasibility study. Surg. Endosc. 34, 1143–1149. doi:10.1007/s00464-019-06862-3

Askeland, C., Solberg, O. V., Bakeng, J. B., Reinertsen, I., Tangen, G. A., Hofstad, E. F., et al. (2016). CustusX: An open-source research platform for image-guided therapy. Int. J. Comput. Assist. Radiol. Surg. 11, 505–519. doi:10.1007/s11548-015-1292-0

Brun, H., Bugge, R. A. B., Suther, L. K. R., Birkeland, S., Kumar, R., Pelanis, E., et al. (2018). Mixed reality holograms for heart surgery planning: First user experience in congenital heart disease. Eur. Heart J. Cardiovasc. Imaging 20, 883–888. doi:10.1093/ehjci/jey184

Burks, A. C., and Akulian, J. (2020). Bronchoscopic diagnostic procedures available to the pulmonologist. Clin. Chest Med. 41, 129–144. doi:10.1016/j.ccm.2019.11.002

Chen, C. P., Cui, Y., Ye, Y., Yin, F., Shao, H., Lu, Y., et al. (2021). Wide-field-of-view near-eye display with dual-channel waveguide. Photonics 8, 557. doi:10.3390/photonics8120557

Condino, S., Turini, G., Parchi, P. D., Viglialoro, R. M., Piolanti, N., Gesi, M., et al. (2018). How to build a patient-specific hybrid simulator for orthopaedic open surgery: Benefits and limits of mixed-reality using the Microsoft HoloLens. J. Health Eng. 1, 5435097. doi:10.1155/2018/5435097

Costa, N., Ferreira, L., de Araújo, A. R. V. F., Oliveira, B., Torres, H. R., Morais, P., et al. (2023). Augmented reality-assisted ultrasound breast biopsy. Sensors 23, 1838. doi:10.3390/s23041838

Doughty, M., Ghugre, N. R., and Wright, G. A. (2022). Augmenting performance: A systematic review of optical see-through head-mounted displays in surgery. J. Imaging 8, 203. doi:10.3390/jimaging8070203

Eberhardt, R., Anantham, D., Herth, F., Feller-Kopman, D., and Ernst, A. (2007). Electromagnetic navigation diagnostic bronchoscopy in peripheral lung lesions. Chest 131, 1800–1805. doi:10.1378/chest.06-3016

Flandes, J., Giraldo-Cadavid, L. F., Alfayate, J., Fernández-Navamuel, I., Agusti, C., Lucena, C. M., et al. (2020). Bronchoscopist's perception of the quality of the single-use bronchoscope (Ambu aScope4™) in selected bronchoscopies: A multicenter study in 21 Spanish pulmonology services. J. Respir. Res. 320, 320. doi:10.1186/s12931-020-01576-w

Gan, A., Cohen, A., and Tan, L. (2019). Augmented reality-assisted percutaneous dilatational tracheostomy in critically ill patients with chronic respiratory disease. J. Intensive Care Med. 34, 153–155. doi:10.1177/0885066618791952

García-Vázquez, V., von Haxthausen, F., Jäckle, S., Schumann, C., Kuhlemann, I., Bouchagiar, J., et al. (2018). Navigation and visualisation with HoloLens in endovascular aortic repair. Innov. Surg. Sci. 3, 167–177. doi:10.1515/iss-2018-2001

Gildea, T. R., Mazzone, P. J., Karnak, D., Meziane, M., and Mehta, A. C. (2006). Electromagnetic navigation diagnostic bronchoscopy: A prospective study. Am. J. Respir. Crit. Care Med. 174, 982–989. doi:10.1164/rccm.200603-344OC

Hofstad, E. F., Sorger, H., Leira, H. O., Amundsen, T., and Langø, T. (2014). Automatic registration of CT images to patient during the initial phase of bronchoscopy: A clinical pilot study. Med. Phys. 41, 041903. doi:10.1118/1.4866884

Iqbal, H., Tatti, F., and Rodriguez y Baena, F. (2021). Augmented reality in robotic assisted orthopaedic surgery: A pilot study. J. Biomed. Inf. 120, 103841. doi:10.1016/j.jbi.2021.103841

Johnson, A. A., Reidler, J. S., Speier, W., Fuerst, B., Wang, J., and Osgood, G. M. (2022). Visualization of fluoroscopic imaging in orthopedic surgery: Head-mounted display vs conventional monitor. Surg. Innov. 29, 353–359. doi:10.1177/1553350620987978

Johnson, M., Zhao, C., Varshney, A., and Beams, R. (2022). “Digital precompensation for luminance nonuniformities in augmented reality head mounted displays,” in IEEE international symposium on mixed and augmented reality adjunct (ISMAR-Adjunct), (Singapore), 477–482. doi:10.1109/ISMAR-Adjunct57072.2022.00100

Kourtesis, P., Collina, S., Doumas, L. A. A., and MacPherson, S. E. (2019). Validation of the virtual reality neuroscience questionnaire: Maximum duration of immersive virtual reality sessions without the presence of pertinent adverse symptomatology. Front. Hum. Neurosci. 13, 417. doi:10.3389/fnhum.2019.00417

Lervik Bakeng, J. B., Hofstad, E. F., Solberg, O. V., Eiesland, J., Tangen, G. A., Amundsen, T., et al. (2019). Using the CustusX toolkit to create an image guided bronchoscopy application: Fraxinus. PLoS ONE 14, e0211772. doi:10.1371/journal.pone.0211772

Li, C., Zheng, B., Yu, Q., Yang, B., Liang, C., and Liu, Y. (2020). Augmented reality and 3-dimensional printing technologies for guiding complex thoracoscopic surgery. Ann. Thorac. Surg. 112, 1624–1631. doi:10.1016/j.athoracsur.2020.10.037

Li, Y., Chen, X., Wang, N., Zhang, W., Li, D., Zhang, L., et al. (2018). A wearable mixed-reality holographic computer for guiding external ventricular drain insertion at the bedside. J. Neurosurg. 131, 1599–1606. doi:10.3171/2018.4.JNS18124

Microsoft (2022). HoloLens 2 technical specifications. Available at: https://www.microsoft.com/en-us/hololens/hardware (accessed May 3, 2022).

Nguyen, T., Plishker, W., Matisoff, A., Sharma, K., and Shekhar, R. (2021). HoloUS: Augmented reality visualization of live ultrasound images using HoloLens for ultrasound-guided procedures. Int. J. Comput. Assist. Radiol. Surg. 17, 385–391. doi:10.1007/s11548-021-02526-7

Okachi, S., Ito, T., Sato, K., Iwano, S., Shinohara, Y., Itoigawa, H., et al. (2022). Virtual bronchoscopy-guided transbronchial biopsy simulation using a head-mounted display: A new style of flexible bronchoscopy. Surg. Innov. 8, 811–813. doi:10.1177/15533506211068928

Qian, L., Barthel, A., Johnson, A., Osgood, G., Kazanzides, P., Navab, N., et al. (2017). Comparison of optical see-through head-mounted displays for surgical interventions with object-anchored 2D-display. Int. J. Comput. Assist. Radiol. Surg. 12, 901–910. doi:10.1007/s11548-017-1564-y

Sorger, H., Hofstad, E. F., Amundsen, T., Langø, T., Bakeng, J. B., and Leira, H. O. (2017). A multimodal image guiding system for navigated ultrasound bronchoscopy (EBUS): A human feasibility study. PLoS One 12, e0171841. doi:10.1371/journal.pone.0171841

Sorger, H., Hofstad, E. F., Amundsen, T., Langø, T., and Leira, H. O. (2015). A novel platform for electromagnetic navigated ultrasound bronchoscopy (EBUS). Int. J. Comput. Assist. Radiol. Surg. 11, 1431–1443. doi:10.1007/s11548-015-1326-7

Stewart, C. L., Fong, A., Payyavula, G., DiMaio, S., Lafaro, K., Tallmon, K., et al. (2022). Study on augmented reality for robotic surgery bedside assistants. J. Robot. Surg. 16, 1019–1026. doi:10.1007/s11701-021-01335-z

Wellens, L. M., Meulstee, J., van de Ven, C. P., Terwisscha van Scheltinga, C. E. J., Littooij, A. S., van den Heuvel-Eibrink, M. M., et al. (2019). Comparison of 3-dimensional and augmented reality kidney models with conventional imaging data in the preoperative assessment of children with Wilms tumors. JAMA Netw. Open 2, e192633. doi:10.1001/jamanetworkopen.2019.2633

Keywords: bronchoscopy, head-mounted display, HoloLens, mixed reality, augmented reality, electromagnetic navigation bronchoscopy, mixed reality bronchoscopy

Citation: Kildahl-Andersen A, Hofstad EF, Sorger H, Amundsen T, Langø T, Leira HO and Kiss G (2023) Bronchoscopy using a head-mounted mixed reality device—a phantom study and a first in-patient user experience. Front. Virtual Real. 4:940536. doi: 10.3389/frvir.2023.940536

Received: 10 May 2022; Accepted: 14 June 2023;

Published: 27 June 2023.

Edited by:

Marientina Gotsis, University of Southern California, United States

Reviewed by:

Aldo Badano, United States Food and Drug Administration, United States

Vangelis Lympouridis, University of Southern California, United States

Copyright © 2023 Kildahl-Andersen, Hofstad, Sorger, Amundsen, Langø, Leira and Kiss. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Håkon Olav Leira, hakon.o.leira@ntnu.no
