- 1 Department of Emergency Medicine, University of Alberta, Edmonton, AB, Canada

- 2 Department of Translational Medicine, Centre for Research and Training in Disaster Medicine, Humanitarian Aid and Global Health, Università del Piemonte Orientale, Novara, Italy

- 3 Adjunct Faculty, Harvard/BIDMC Disaster Medicine Fellowship, Boston, MA, United States

- 4 Komfo Anokye Teaching Hospital, Kumasi, Ghana

- 5 Kumasi Centre for Collaborative Research in Tropical Medicine (KCCR), College of Health Sciences, Kwame Nkrumah University of Science and Technology (KNUST), Kumasi, Ghana

- 6 Accident & Emergency Medicine Academic Unit, The Chinese University of Hong Kong, Hong Kong, Hong Kong SAR, China

- 7 School of Medicine, The University of Notre Dame Australia, Perth, WA, Australia

- 8 Department of Accident and Emergency Medicine, Faculty of Medicine, Jordan University of Science and Technology, Irbid, Jordan

- 9 Centro Único Coordinador de Ablación e Implante, Tierra del Fuego AIAS, Argentina

Background: Although artificial intelligence (AI) technologies have existed since the 1950s, they have largely been beyond the reach of most disaster medicine (DM) practitioners. Since the introduction of ChatGPT in 2022, there has been a surge of proposed applications for AI in disaster medicine. However, AI development is largely guided by vendors in high-income countries, and little is known about the needs of practitioners. This study provides an international perspective on the clinical problems that DM practitioners would like to see addressed by AI.

Materials and methods: A three-round online Delphi study was conducted with 131 international DM experts. In round one, experts were asked: “What specific clinical questions or problems in Disaster Medicine would you like to see addressed by artificial intelligence guided clinical decision support?” Statements from the first round were analyzed and collated for subsequent rounds, in which participants rated the importance of each statement on a 7-point linear scale.

Results: In round one, 77 participants proposed 539 statements, which were collated into 47 statements for subsequent rounds. In round two, 89 participants gave 3,008 ratings, and no statements reached consensus. In round three, 63 participants gave 2,942 ratings, and five statements reached consensus: distribution of disaster patients within the hospital, estimating the size of the affected population, hazard vulnerability analysis, acquisition and distribution of resources, and transportation routing. Experts tended to rate the use of AI for ethics, mental health, cultural sensitivity, or difficult treatment decisions as less important.

Conclusions: In this online Delphi study DM practitioners expressed a preference for AI tools that would help with the logistical support of their clinical responsibilities. Participants appeared to have much less support for the use of AI in making difficult or critical decisions. Development of AI for clinical decision support should focus on the needs of the users and be guided by an international perspective.

Introduction

Although artificial intelligence (AI) technologies have been in existence since the 1950s, they have largely remained beyond the reach of disaster medicine practitioners. However, with the public introduction of ChatGPT in November 2022, there has been a surge of proposed applications of these technologies in the field of disaster medicine. A recent bibliometric study mapped current research at the intersection of disaster medicine and AI (1). This included areas such as disaster monitoring and prediction, AI-based geospatial technology, decision support systems, social media analysis, machine learning algorithms for disaster risk reduction, and the utilization of big data and deep learning for disaster management.

However, little is known about which applications are most valuable to clinical practitioners in disaster medicine. Currently, disaster medicine applications of AI are largely guided by vendors concentrated in high-income countries, which have robust information technology infrastructure and long histories of advances in computing science. Yet both natural and man-made disasters are global phenomena, and low- and middle-income countries are often the most affected by these events. Consequently, when vendors independently decide which AI products to develop for disaster medicine, the resulting tools may not fully meet the needs of disaster medicine practitioners.

The goal of this study was to provide an international perspective on the clinical problems that disaster medicine practitioners would like to see addressed by artificial intelligence technologies. The primary outcome was a prioritized list of statements that reached consensus.

Materials and methods

Study design

The study was a three-round Delphi study administered electronically. Delphi studies are structured, iterative research methods in which a panel of selected experts anonymously responds to a series of questionnaires over multiple rounds, with each round incorporating summarized feedback from the previous one, enabling participants to reconsider and refine their views until a stable consensus or clearly defined range of opinions is reached on a complex or uncertain topic (2).

The first round included open-ended and demographic questions. The second and third rounds included ratings of specific statements developed by the study team.

Study setting

The study was performed with an international group of expert panelists.

Participants and ethical review

From the onset, this study was envisioned as an international study emphasizing equity, diversity, and inclusion. The authorship team, composed of clinicians and researchers with recognized experience in disaster medicine, identified through professional networks and peer recognition, was specifically designed to ensure that all world regions were represented. A single author from each world region formed the authorship team, and each author was responsible for recruiting expert participants from their region.

Participant recruitment was performed in a three-step process. Firstly, each author was asked to identify potential participants whom they believed to be experts in disaster medicine. Authors were free to use their own judgement in identifying and contacting the experts, which could include personal contacts or liaison with other institutions and organizations. No formal criteria for classifying someone as a disaster medicine expert were provided. Identified experts within each world region were contacted by the author from that region and asked whether they would be willing to participate. Those who agreed had their names forwarded to the study coordinator (MV). Secondly, the study coordinator sent an email to the potential participants, including the study information sheet and informed consent document. Those who consented proceeded to the third step, in which they created a username and password on the study's electronic Delphi platform.

The study was approved by the Human Research Ethics Board of the University of Alberta under study ID PRO 00135232.

Interventions

All Delphi rounds were conducted online using STAT59 (STAT59 Services Ltd, Edmonton, AB).

Outcome measures

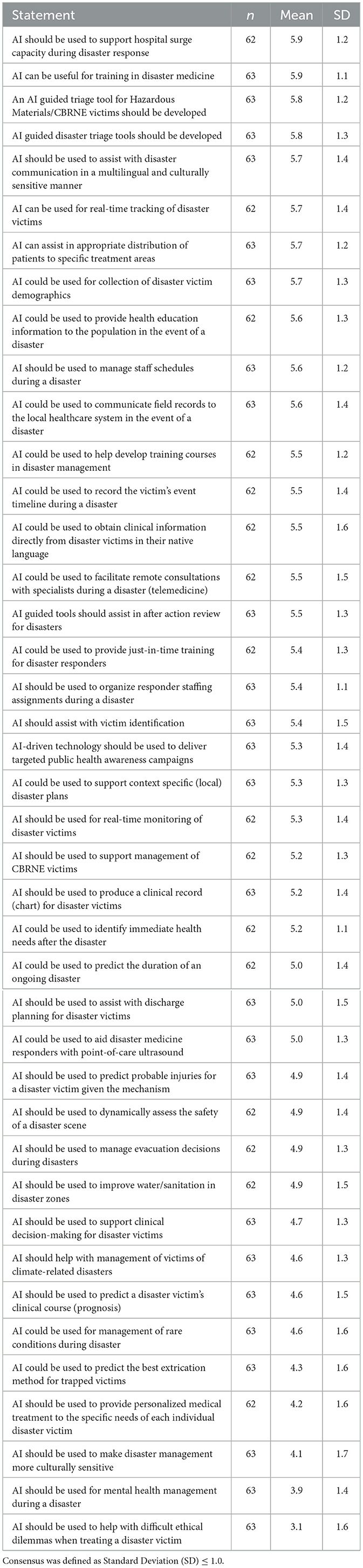

The primary study outcome was a prioritized list of statements that reached consensus. The analysis used parametric statistics, namely the mean and standard deviation (SD), an approach previously shown to be valid and reliable for 7-point linear scale data (3). Consensus, meaning the degree to which experts agreed with one another, was defined a priori as an SD of ≤ 1.0 on the 7-point linear scale. Statements were prioritized by their mean score on the 7-point scale.
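To make the consensus rule concrete, the following is a minimal Python sketch, not the STAT59 implementation. It assumes that ratings are integers from 1 to 7, that skipped ratings are recorded as `None` and excluded, and that the SD is the sample SD (n - 1 denominator); the paper does not state which SD formula was used. The function names and example statements are hypothetical.

```python
from statistics import mean, stdev

CONSENSUS_SD = 1.0  # a priori consensus threshold on the 7-point scale


def summarize(ratings):
    """Return (mean, SD, reached_consensus) for one statement.

    Skipped ratings are passed as None and ignored. The sample SD
    (n - 1 denominator) is an assumption made for this sketch.
    """
    scores = [r for r in ratings if r is not None]
    if len(scores) < 2:
        raise ValueError("at least two ratings are needed to compute an SD")
    m = mean(scores)
    sd = stdev(scores)
    return m, sd, sd <= CONSENSUS_SD


def prioritize(statements):
    """List consensus statements as (name, mean, SD), highest mean first."""
    agreed = []
    for name, ratings in statements.items():
        m, sd, consensus = summarize(ratings)
        if consensus:
            agreed.append((name, m, sd))
    return sorted(agreed, key=lambda row: row[1], reverse=True)


# Hypothetical ratings for illustration only (not study data).
example = {
    "Statement A (hypothetical)": [6, 7, 6, 5, 6, None, 7],
    "Statement B (hypothetical)": [2, 7, 4, 6, 1, 5, 3],
}

for name, m, sd in prioritize(example):
    print(f"{name}: mean {m:.1f}, SD {sd:.1f}")
```

In this toy example, Statement A has tightly clustered ratings and passes the SD ≤ 1.0 rule, while Statement B has widely spread ratings and is excluded. Consensus is decided first, and only then are the passing statements ordered by mean.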

Data collection and analysis

During the first survey round, participants were asked several demographic questions including: country of origin, language used at home, region of residence, professional discipline, clinical work environment, sex at birth, gender identity, self-identification as a visible minority, self-identification as having a disability, years of practice in disaster medicine, and age.

In addition, the first round asked a single content-related question: “What specific clinical questions or problems in Disaster Medicine would you like to see addressed by artificial intelligence guided clinical decision support?” Each participant was asked to provide a minimum of 10 and a maximum of 30 proposed statements. This survey round was conducted from March 19 to July 24, 2024.

Following the first (open) round of the Delphi study, proposals were analyzed by a subset of the study team (JMF, MV, and MC), assisted by the AI-guided technology of the STAT59 software. During this analysis, proposed statements were checked for alignment with the initial goals of the study. Proposals repeated by multiple participants were grouped, and any proposals that did not address the original research question were removed. The resulting statements were advanced to the second Delphi round.

During the second round of the Delphi study, participants were again invited to return to the STAT59 platform. They were asked to rate each statement on the final list from the first round using a linear scale from one to seven, with one being “not at all important” and seven being “very important.”

Following the second round, the statements were analyzed again. Any statements with a standard deviation of less than or equal to 1.0 were considered to have reached consensus and were dropped from the third round. In the third round, participants were once again invited to the STAT59 platform to rate the remaining statements on the same one-to-seven scale. This time, they were shown their own previous rating from round two, as well as the mean rating from all other experts. After the completion of round three, the statements that reached consensus, with a standard deviation of less than or equal to 1.0, were ranked based on their mean score. This ranked list formed the results of the study.
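The round-to-round logic described above can be sketched in Python as follows. This is a hypothetical illustration rather than the STAT59 code: the data layout (statement mapped to {panelist: rating}), the function names, and the use of the sample SD are assumptions, and statements with fewer than two ratings are simply carried forward.

```python
from statistics import mean, stdev

SD_THRESHOLD = 1.0  # consensus cut-off defined a priori


def split_round(round_ratings):
    """Split one round into consensus statements and those carried forward.

    round_ratings maps statement -> {panelist_id: rating or None}.
    Returns ({statement: mean}, [statements for the next round]).
    """
    consensus, carried = {}, []
    for statement, by_panelist in round_ratings.items():
        scores = [r for r in by_panelist.values() if r is not None]
        # Assumption: too few ratings to judge agreement -> carry forward.
        if len(scores) >= 2 and stdev(scores) <= SD_THRESHOLD:
            consensus[statement] = mean(scores)
        else:
            carried.append(statement)
    return consensus, carried


def round3_feedback(by_panelist, panelist):
    """Return a panelist's own round-two rating and the mean of all other experts."""
    own = by_panelist.get(panelist)
    others = [r for p, r in by_panelist.items() if p != panelist and r is not None]
    return own, (mean(others) if others else None)


def rank(consensus):
    """Rank consensus statements by descending mean score."""
    return sorted(consensus.items(), key=lambda item: item[1], reverse=True)


# Hypothetical round-two data (not study data).
round2 = {
    "S1": {"p1": 6, "p2": 2, "p3": 7},
    "S2": {"p1": 5, "p2": 6, "p3": None},
}
agreed, next_round = split_round(round2)
print(agreed, next_round)
print(round3_feedback(round2["S1"], "p2"))
print(rank(agreed))
```

In this toy example, S2 reaches consensus and would be dropped from round three, while S1 is carried forward; panelist p2 would then see their own rating of 2 alongside the other experts' mean of 6.5.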

Of note, no demographic questions were mandatory. Furthermore, participants were able to skip rating any specific statement if they desired.

Sample size

No formal sample size calculation exists for Delphi studies. Generally, Delphi studies with more than 8–10 participants have been shown to provide statistically precise estimates when using a 7-point linear scale (3). However, the number of participants in this study was deliberately much larger to promote best practices of diversity and inclusion. Because the primary goal was to recruit enough experts to provide a global opinion on the research question, an initial target of 10–20 experts per world region was set.

Results

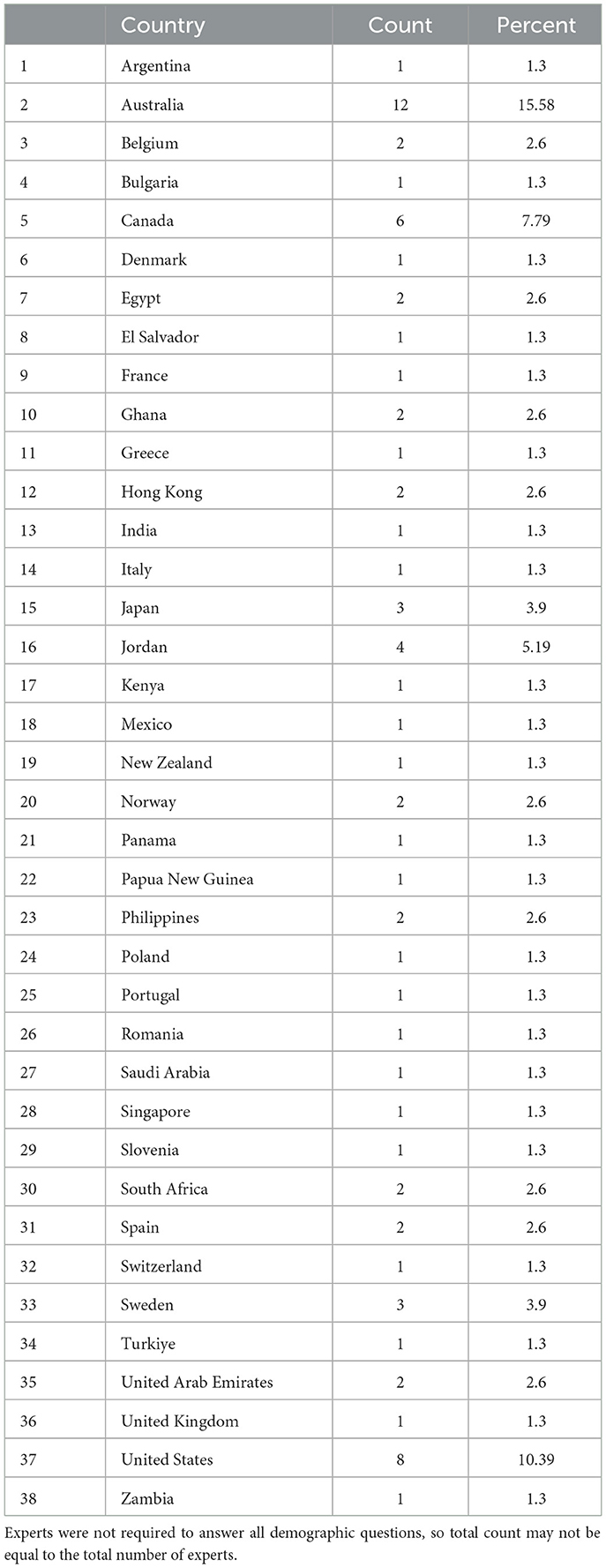

The final expert panel consisted of 131 participants who were recruited from 38 different countries (Table 1). Of note, not all experts completed every round of the study. In addition, not all experts answered all demographic questions. Of the 77 participants who answered the demographic questions, 29 (37.7%) were female and 48 (62.3%) were male. Participant age ranged from 30 to 73 (mean 46.6). Two participants (2.6%) self-identified as having a disability. Eleven participants (14.3%) self-identified as a visible minority.

Practitioners came from 15 different disciplines including physicians (46; 48.4%), nurses (25; 26.3%), paramedics (10; 10.5%), and others (10; 10.5%).

The mean number of years of experience as a disaster medicine specialist was 19.2 (range <1–34 years).

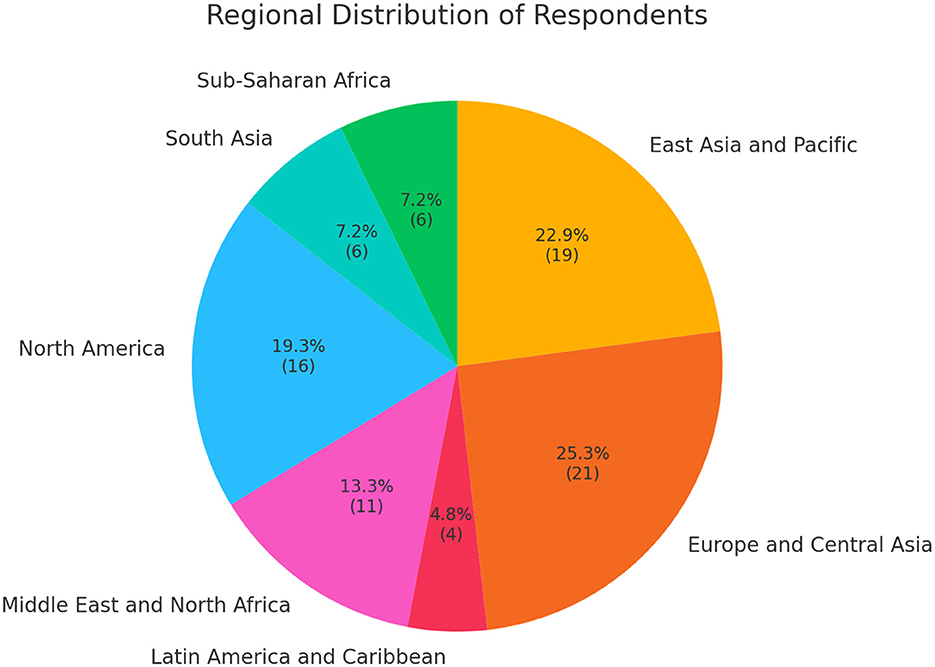

Participants came from all seven world regions (Figure 1), although the majority were from Europe and Central Asia, East Asia and the Pacific, and North America.

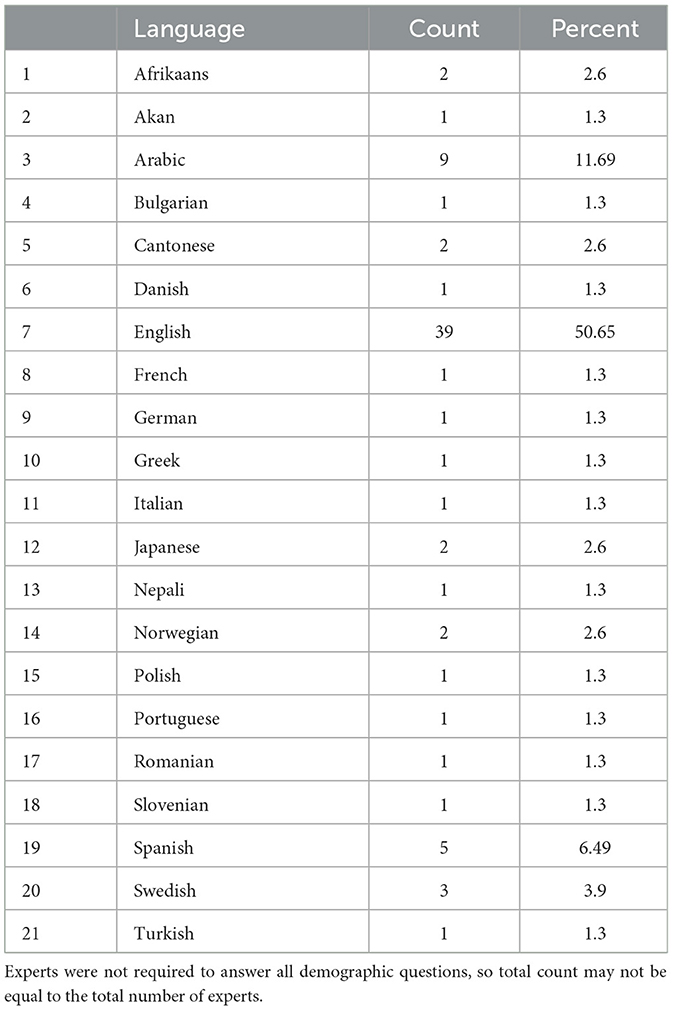

Most participants (39; 50.7%) gave English as their primary language spoken at home. However, 20 other languages were also represented (Table 2).

In the initial round (round one), 77 participants gave 539 proposed statements (Supplemental File S1). During the analysis, these statements were collated into 47 unique statements, which were advanced to the subsequent rounds.

In round two, 89 participants rated 47 statements for a total of 3,008 ratings. No statements reached consensus in round two.

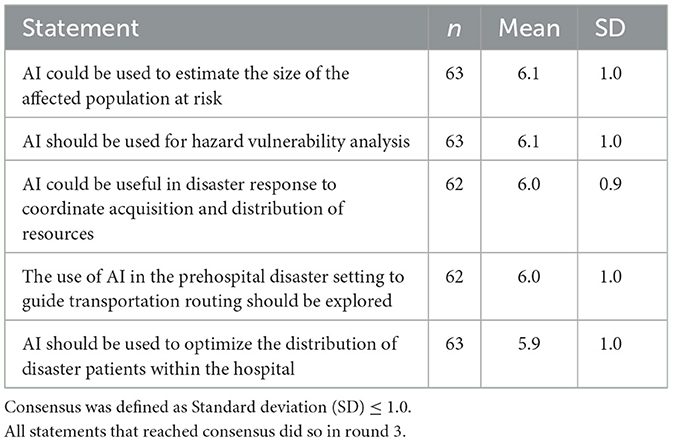

Because no statements reached consensus in round two, round three also consisted of 47 statements. In round three, 63 participants gave 2,942 ratings, and five statements reached consensus (Table 3). The mean scores on the one-to-seven scale for these statements ranged from 5.9 to 6.1, and their standard deviations ranged from 0.9 to 1.0. Two statements tied for the highest overall rating (mean 6.1/7): use of AI for estimating the size of an affected population at risk, and use of AI for hazard vulnerability analysis. Two statements tied for the second-highest rating (mean 6.0/7): use of AI to coordinate the acquisition and distribution of resources, and use of AI in the prehospital disaster setting to guide transportation routing. A fifth statement reached consensus with a mean of 5.9/7: use of AI to optimize the distribution of disaster patients within the hospital. Forty-two statements did not reach consensus after round three (Table 4).
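Because participants could skip statements, the rating totals can be compared with the maximum possible in each round. The short Python calculation below does this under two assumptions not stated in the paper: that every participant counted in a round was shown all 47 statements, and that each rating is counted once. The names used are illustrative only.

```python
STATEMENTS = 47  # statements presented in rounds two and three

# Participant and rating counts as reported in the Results.
ROUNDS = {
    "Round two": (89, 3008),
    "Round three": (63, 2942),
}


def completeness(participants, ratings, statements=STATEMENTS):
    """Fraction of the maximum possible ratings that were actually given."""
    possible = participants * statements
    return ratings / possible


for name, (participants, ratings) in ROUNDS.items():
    share = completeness(participants, ratings)
    possible = participants * STATEMENTS
    print(f"{name}: {ratings} of {possible} possible ratings ({share:.1%})")
```

Under these assumptions, about 72% of possible ratings were given in round two (3,008 of 4,183) and about 99% in round three (2,942 of 2,961).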

The five highest-ranking statements that did not reach consensus (Table 4) all had a mean of 5.7 or above, with standard deviations ranging from 1.1 to 1.3.

The five lowest-ranking statements (Table 4) had mean importance scores ranging from 3.1 to 4.3. Of note, none of the lower-ranking statements reached consensus.

Discussion

Interpretation and previous studies

In this online international Delphi study, 131 participants from 38 countries formed the expert panel, and 77 of them proposed 539 statements in the first round. Through two subsequent rating rounds, five statements reached consensus.

The participants rated the use of AI to estimate the size of the affected population at risk as one of the two joint highest-ranking statements (mean 6.1/7). Several other recently published studies have also explored this topic. A recent scoping review of artificial intelligence applications in urban ecosystem-based disaster risk reduction found 76 publications addressing disasters such as floods, heat-related illness, and fires (4). Artificial intelligence-guided prediction rules have also been developed to forecast COVID-19 cases as a model for future pandemics (5). In addition, an AI-based framework tailored explicitly for earthquake emergencies accurately predicted the population requiring relief supplies using historical earthquake data, socioeconomic indicators, and vulnerability indicators (6). Nonetheless, researchers note that many barriers to developing these solutions remain, including data quality, privacy, computational resources, and integration with existing systems (7).

The other statement tied for the highest ranking was the use of AI for hazard vulnerability analysis (mean 6.1/7). Several authors have published ongoing research in this area. For instance, AI-driven models have been developed to assist with risk mitigation for natural hazards, power outages, and earthquakes (8, 9). One recent study used geospatial artificial intelligence to map flood hazards on road networks in Portugal (10). Artificial intelligence models coupled with geographic information systems may also hold promise for predicting risks due to climate change (11). However, researchers note that present AI systems may lack human precision and contextual understanding (12).

Tied for third place, with a mean of 6.0/7, was the statement that AI could be useful in disaster response to coordinate the acquisition and distribution of resources. A recent scoping review of 66 studies revealed a wide variety of AI applications across strategic and operational supply chain management phases and highlighted emerging techniques such as explainable AI, neurosymbolic systems, and federated learning (13). Published AI-guided research on healthcare supply chains may hold promise in this area (14). Some research reports that AI systems enable 40% faster recovery during crises compared with traditional methods, and that automated decision support systems reduce response times to supply chain disruptions by almost 65% (15). Machine learning algorithms also appear to improve resource allocation in the emergency department for certain diagnoses (16). Nonetheless, implementation challenges and ethical considerations in ensuring equitable healthcare delivery still need to be addressed (15).

Tied with the third-ranked statement (mean 6.0/7) was the use of AI in the pre-hospital disaster setting to guide transportation routing. Considerable research is already under way on the use of AI for ambulance dispatch in the pre-hospital setting (17–19). Unlike traditional ambulance dispatch systems, AI systems can incorporate large amounts of data into transport decisions, such as real-time patient data from the scene, bed occupancy, and the availability of emergency surgeries or procedures (20). A recent scoping review of 32 studies on ambulance dispatch found that AI-enhanced emergency call triage and ambulance allocation reduced response times by up to 10–20% (21). However, the authors note that “future development should focus on real-time adaptive systems, ethical implementation, improved data integration across the care continuum, and rigorous evaluation of real-time patient outcomes” (21).

Finally, the fifth most important statement, according to participants (mean 5.9/7), was that AI should be used to optimize the distribution of disaster patients within the hospital. Ongoing research on the benefits of AI-guided emergency department flow is encouraging (22, 23). A recent scoping review found that machine learning algorithms improved resource allocation, quality of care, and length of stay when applied to emergency department flow (24). Authors have also noted that resource allocation information could facilitate converting hospital wards into dedicated units based on predicted bed demand (25). However, little research has investigated its use in surge capacity or disaster situations.

Several high-ranking statements that did not reach consensus also merit consideration. These include the use of AI for training in disaster medicine. A recent scoping review of 64 articles on artificial intelligence-enhanced remote technologies for disaster medicine training concluded that these technologies promote learning incentives but that their quality remains uncertain (26). In this study, some experts suggested that AI be used to support hospital triage capacity during disaster response and to triage mass casualty incident victims. However, published studies investigating AI tools for purposes such as hospital triage have reported conflicting levels of practitioner comfort with allowing AI to make high-stakes decisions (27). Many experts supported the use of AI for triage of victims of hazardous materials and chemical/biological/radiological/nuclear/explosive (CBRNE) incidents. While much of the research in this field is still in its infancy, researchers are exploring the use of AI for protecting frontline CBRNE providers, analyzing hypoxia severity at triage, and measuring signature intelligence (28–30). Some experts also supported the use of AI to aid with distributing patients to specific treatment areas. When interpreting these statements, which had high mean scores but did not reach consensus, it is important to recall that Delphi studies assess consensus as a measure of the experts' agreement with one another. For these statements, the experts did not agree with one another on the level of importance, even though the overall mean was high. From a practical perspective, this shows that not all experts were equally supportive of these initiatives. However, this study did not investigate why the experts disagreed or under what circumstances they would have reached consensus.

Several statements ranked very low in importance, with means ranging from 3.1 to 4.3 out of 7. These included AI to predict the best extrication methods for trapped victims, AI to provide personalized medical treatment to specific victims, AI to make disaster management more culturally sensitive, AI for mental health management during disasters, and AI to help with difficult ethical dilemmas when treating a disaster victim. Interestingly, these lowest-ranking statements all appear to share a theme: the more human or personalized aspects of disaster medicine. This may suggest that disaster practitioners hold a strong sense of responsibility for their decision-making process. Some research suggests that using AI for difficult moral decisions may erode this sense of responsibility (31). Moreover, a recent scoping review on the ethics of AI in healthcare concluded that existing laws and ethical frameworks have not kept pace with current or future applications of artificial intelligence in healthcare (32). Of note, although the overall mean ratings for these low-ranking statements were low, none of them reached consensus. This indicates that support for these statements varied among practitioners and may warrant further clarification.

Strengths and limitations

The major strength of this project is its international perspective. This was achieved by emphasizing equity, diversity, and inclusion from the beginning of the study, including in the development of the authorship team. The participation of 131 participants from 38 countries and 15 disciplines helped ensure that the study maintained a global perspective.

A second strength of the study lies in the Delphi methodology itself. Because the study was conducted entirely online with anonymous responses, the tendency for groupthink to limit the panel's creativity was mitigated. Furthermore, in a Delphi study, all participants are given an equal opportunity to voice their opinions, and all opinions carry equal weight. This contrasts with focus groups or expert panels, where dominant personalities can often overwhelm the opinions of other members of the group. This was particularly important for this topic, as it helped ensure that dominant voices from high-income countries did not overpower those from low- and middle-income countries.

A third strength of this study involved the direct participation of clinical practitioners. Throughout this study, the emphasis was on clinical practitioners' needs. The study purposefully did not focus on AI experts. AI experts tend to think more about what can be done and how it will be done. In contrast, clinicians are more likely to think of what will help their work and what will be useful for practical purposes.

Unfortunately, this study has several limitations. Firstly, the Delphi method, while one of the more rigorous methods of obtaining expert opinion, is still based on expert opinion. However, this aligns with the study's specific objective, which was to establish a consensus of opinion. Secondly, although the study made every possible effort to include a balanced view from all world regions, the study team was unable to recruit a large number of experts from Latin America, sub-Saharan Africa, and South Asia. To some extent, this may reflect where most academic and research efforts in disaster medicine are concentrated, namely North America, East Asia and the Pacific, and Europe and Central Asia. In addition, because experts were recruited directly through the personal contacts of the study authors, there is a risk of inclusion bias. Thirdly, only a small number of statements reached consensus. While this may truly reflect the heterogeneity of disaster practitioners' needs, further rounds might have produced additional consensus statements. Finally, there was mild attrition of experts across the study rounds. However, the number of experts in all three rounds was well above the minimum commonly used for Delphi studies.

Practice implications

The major value proposition of this project is the prioritized list of AI-guided technologies that practitioners find desirable. This list is intended to provide a clear pathway for vendors to create AI-guided applications that will benefit clinicians. The most desirable applications suggest that practitioners are most interested in tools that provide logistical support to help them perform their jobs better. Conversely, experts appeared less interested in AI for difficult decision-making. This appears to contrast with the approach of many current AI vendors, which often focuses on making decisions rather than supporting practitioners' decisions. In essence, practitioners want AI to assist them in their duties, not to replace them.

Fundamentally, development of AI technologies for disaster medicine may require re-thinking the approach to user research. Currently, most technology developers base their decisions on user experience (U/X) research: a systematic study of how real or potential customers interact with a product or service, with the explicit goal of shaping design and content to drive clicks, sustain engagement, and ultimately convert interest into purchases (33). In contrast, disaster medicine AI development should focus not on “what sells” but rather on what practitioners need to assist them in their clinical duties.

Research implications

While this study provides perspective on disaster medicine practitioners' overall priorities for AI-guided clinical decision support, further research is required to delineate the details. These statements can serve as targets for AI development, but each will require further investigation. User experience research will be necessary to ensure that the tools are usable by practitioners. Furthermore, all AI-guided technology should undergo rigorous testing and validation for accuracy before being used for clinical decision-making. This research should maintain a global perspective and continue to involve practitioners worldwide.

A survey of current AI research in disaster medicine shows a preponderance of opinion pieces, frameworks, editorials, calls for action, and discussions of the potential of AI (1). What is clearly missing is evidence-based research.

This study demonstrates the utility and feasibility of research through online international Delphi methodology. This methodology can be useful for further research in more specialized areas of disaster medicine clinical decision support or for other topics. For instance, this paper did not pursue detailed preferences for such important topics as training, risk reduction, human resources, simulation, or education.

Conclusions

In this online Delphi study of 131 disaster medicine practitioners, participants clearly stated a preference for AI-guided clinical decision support that would help with the logistical support of their disaster medicine responsibilities. Participants appeared to have much less support for the use of AI in making difficult or critical decisions. Therefore, AI app development for clinical decision support should focus on the needs of the users and be guided by an international perspective.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Human Research Ethics Board of the University of Alberta under Study ID PRO 00135232. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

JMF: Project administration, Visualization, Data curation, Formal analysis, Conceptualization, Writing – review & editing, Methodology, Software, Investigation, Writing – original draft. MV: Visualization, Data curation, Conceptualization, Formal analysis, Writing – review & editing. JB: Writing – review & editing, Data curation. KH: Data curation, Writing – review & editing. JC: Data curation, Writing – review & editing. LR: Data curation, Writing – review & editing. ES: Writing – review & editing, Data curation. MC: Formal analysis, Data curation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors wish to thank the following Delphi experts who have consented to be acknowledged in the final publication: Rafael Castro Delgado, Ally Hutton, Jared Bly, Nicholas Kman, Zerina Tomkins, Fredrik Femtehjell Friberg, Alaa Oteir, Saurabh Dalal, Carlo Brugiotti, Knut Magne Kolstadbråten, Jamla Rizek, Tudor Codreanu, Eman Shaikh, Franco Chamberlain, Natalie Simakoloyi, Simon Herman, Kurtulus Aciksari, Daniel Kollek, Jacquie Hennessy, Kai Hsiao, Zainab Alhussaini, Einar Cruz, Jessica Ryder, Tristan Jones, Maicol Zanellati, Kate Maki, Barbara Karbo, Felix Ho, Conrad Ng, Suresh Pokharel, Stacey Abbott, Ahmad Oqlat, Sheena Teed, Alex Thompson, Karin Hugelius, Soren Mikkelsen, Frank Van Trimpont, Theo Ligthelm, Angie Bistaraki, Krzysztof Goniewicz, Adela Golea, Tatsuhiko Kubo, Kate Rotheray, Chun Tat Lui, Yohan Robinson, Grace Maina, Carlos E. Orellana-Jimenez, Katie Wilson, Angela Peca, Greg Penney, James Lee, Mariya Georgieva, Sonoe Mashino, Mahmoud Alwidyan, Fabian Rosas, Jorge Neira, Tehnaz Boyle, Angelika Underwood, Kavita Varshney, Jesica Valeria Bravo Gutierrez, Kwabena Danso, Maki MacDermot, Emad Abu Yaqeen, Vaclav Jordan, Bettina Evio, Tobias Gauss, Asmaa Alkafafy, Anja Westman, Lenard Cheng, Ahmed Alshemeili, John Ri, Elias Saade, Francisco Luis Pérez Caballero. The authors would also like to thank Mrs. Sandra Franchuk for her editorial assistance.

Conflict of interest

JMF is the CEO and founder of STAT59. MV has performed contract work for STAT59.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. ChatGPT (versions 4.5 and 5.0) was used to correct grammar, spelling, and sentence structure. No citations were introduced by ChatGPT, and no diagrams or plots were created by ChatGPT. The author(s) retain responsibility for all content.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/femer.2025.1698372/full#supplementary-material

Abbreviations

AI, artificial intelligence; DM, disaster medicine; U/X, user experience.

References

1. Wibowo A, Amri I, Surahmat A, Rusdah R. Leveraging artificial intelligence in disaster management: a comprehensive bibliometric review. Jamba. (2025) 17:1776. doi: 10.4102/jamba.v17i1.1776

2. Keeney S, Hasson F, McKenna H. The Delphi Technique in Nursing and Health Research. Oxford: Wiley-Blackwell (2010).

3. Franc JM, Hung KKC, Pirisi A, Weinstein ES. Analysis of Delphi Study 7-point linear scale data by parametric methods: use of the mean and standard deviation. Methodol Innov. (2023) 16:226–33. doi: 10.1177/20597991231179393

4. Dai D, Bo M, Ren X, Dai K. Application and exploration of artificial intelligence technology in urban ecosystem-based disaster risk reduction: a scoping review. Ecol Indicat. (2024) 158:111565. doi: 10.1016/j.ecolind.2024.111565

5. Sen A, Stevens NT, Tran NK, Agarwal RR, Zhang Q, Dubin JA. Forecasting daily COVID-19 cases with gradient boosted regression trees and other methods: evidence from US cities. Front Public Health. (2023) 11:1259410. doi: 10.3389/fpubh.2023.1259410

6. Biswas S, Kumar D, Hajiaghaei-Keshteli M, Bera UK. An AI-based framework for earthquake relief demand forecasting: a case study in Türkiye. Int J Disaster Risk Reduct. (2024) 102:104287. doi: 10.1016/j.ijdrr.2024.104287

7. Velev D, Zlateva P. Challenges of artificial intelligence application for disaster risk management. Int Arch Photogramm Remote Sens Spatial Inf Sci. (2023) XLVIII-M-1-2023:387–94. doi: 10.5194/isprs-archives-XLVIII-M-1-2023-387-2023

8. Plevris V. AI-driven innovations in earthquake risk mitigation: a future-focused perspective. Geosciences. (2024) 14:244. doi: 10.3390/geosciences14090244

9. Esparza M, Li B, Ma J, Mostafavi A. AI meets natural hazard risk: a nationwide vulnerability assessment of data centers to natural hazards and power outages. Int J Disaster Risk Reduct. (2025) 126:105583. doi: 10.1016/j.ijdrr.2025.105583

10. Rezvani SMHS, Silva MJF, de Almeida NM. Mapping geospatial AI flood risk in national road networks. ISPRS Int J Geo Inf. (2024) 13:323. doi: 10.3390/ijgi13090323

11. Diehr J, Ogunyiola A, Dada O. Artificial intelligence and machine learning-powered GIS for proactive disaster resilience in a changing climate. Ann GIS. (2025) 31:287–300. doi: 10.1080/19475683.2025.2473596

12. Yazdi M, Zarei E, Adumene S, Beheshti A. Navigating the power of artificial intelligence in risk management: a comparative analysis. Safety. (2024) 10:42. doi: 10.3390/safety10020042

13. Teixeira AR, Ferreira JV, Ramos AL. Intelligent supply chain management: a systematic literature review on artificial intelligence contributions. Information. (2025) 16:399. doi: 10.3390/info16050399

14. Long P, Lu L, Chen Q, Chen Y, Li C, Luo X. Intelligent selection of healthcare supply chain mode - an applied research based on artificial intelligence. Front Public Health. (2023) 11:1310016. doi: 10.3389/fpubh.2023.1310016

15. Faith Chidinma O, Benjamin Gyedu A, Tobias Kwame A. Artificial intelligence in healthcare supply chain management: enhancing resilience and efficiency in U.S. medical supply distribution. EPRA Int J Econ Bus Manage Stud. (2025):285–91. doi: 10.36713/epra19901

16. Lee S, Reddy Mudireddy A, Kumar Pasupula D, Adhaduk M, Barsotti EJ, Sonka M, et al. Novel machine learning approach to predict and personalize length of stay for patients admitted with syncope from the emergency department. J Pers Med. (2022) 13:7. doi: 10.3390/jpm13010007

17. Attiah A, Kalkatawi M. AI-powered smart emergency services support for 9-1-1 call handlers using textual features and SVM model for digital health optimization. Front Big Data. (2025) 8:1594062. doi: 10.3389/fdata.2025.1594062

18. Selvan C, Anwar BH, Naveen S, Bhanu ST. Ambulance route optimization in a mobile ambulance dispatch system using deep neural network (DNN). Sci Rep. (2025) 15:14232. doi: 10.1038/s41598-025-95048-0

19. Hede SN, Shivu Kumar MH, Loni N, Y S. AI based real-time call classification and dispatch through mathematical modelling. TechRxiv. (2025). doi: 10.36227/techrxiv.175296411.19300897/v1

20. Kim JH, Kim MJ, Kim HC, Kim HY, Sung JM, Chang HJ, et al. Novel artificial intelligence-enhanced digital network for prehospital emergency support: community intervention study. J Med Internet Res. (2025) 27:e58177. doi: 10.2196/58177

21. Miles J, Brady M, Smiath L, Cotterill C, Levey C. The use of artificial intelligence in the out of hospital care settings: a scoping review. medRxiv [preprint]. (2025). doi: 10.1101/2025.04.04.25325245

22. Hodgson NR, Saghafian S, Martini WA, Feizi A, Orfanoudaki A. Artificial intelligence-assisted emergency department vertical patient flow optimization. J Pers Med. (2025) 15:219. doi: 10.2139/ssrn.5219798

23. Moreno-Sánchez PA, Aalto M, van Gils M. Prediction of patient flow in the emergency department using explainable artificial intelligence. Digital Health. (2024) 10. doi: 10.1177/20552076241264194

24. Tyler S, Olis M, Aust N, Patel L, Simon L, Triantafyllidis C, et al. Use of artificial intelligence in triage in hospital emergency departments: a scoping review. Cureus. (2024) 16:e59906. doi: 10.7759/cureus.59906

25. Fatahi M. A review of how artificial intelligence could influence the emergency department workflow. J Health Med Sci. (2025) 8:48–58. doi: 10.31014/aior.1994.08.01.228

26. Kao CL, Chien LC, Wang MC, Tang JS, Huang PC, Chuang CC, et al. The development of new remote technologies in disaster medicine education: a scoping review. Front Public Health. (2023) 11:1029558. doi: 10.3389/fpubh.2023.1029558

27. Da'Costa A, Teke J, Origbo JE, Osonuga A, Egbon E, Olawade DB. AI-driven triage in emergency departments: a review of benefits, challenges, and future directions. Int J Med Inform. (2025) 197:105838. doi: 10.1016/j.ijmedinf.2025.105838

28. Seyedin H, Moslehi S, Tavan A, Narimani S. The role of artificial intelligence in protecting frontline forces in CBRNE incidents. Prog Disaster Sci. (2025) 26:100443. doi: 10.1016/j.pdisas.2025.100443

29. Nanini S, Abid M, Mamouni Y, Wiedemann A, Jouvet P, Bourassa S. Machine and deep learning models for hypoxemia severity triage in CBRNE emergencies. Diagnostics. (2024) 14:2763. doi: 10.3390/diagnostics14232763

30. Rios E, Frascà D. Latest trends in MASINT technologies for CBRNE threats. Adv Mil Technol. (2024) 19:131–47. doi: 10.3849/aimt.01876

31. Salatino A, Prevel A, Caspar E, Bue SL. Influence of AI behavior on human moral decisions, agency, and responsibility. Sci Rep. (2025) 15:12329. doi: 10.1038/s41598-025-95587-6

32. Prakash S, Balaji JN, Joshi A, Surapaneni KM. Ethical conundrums in the application of artificial intelligence (AI) in healthcare - a scoping review of reviews. J Pers Med. (2022) 12:1914. doi: 10.3390/jpm12111914

Keywords: artificial intelligence, disaster medicine, clinical decision support, machine learning, Delphi, equity, diversity, inclusion

Citation: Franc JM, Verde M, Bonney J, Hung KKC, Cuthbertson J, Raffee L, Serra E and Caviglia M (2025) Applications of artificial intelligence-guided clinical decision support in disaster medicine: an international Delphi study. Front. Disaster Emerg. Med. 3:1698372. doi: 10.3389/femer.2025.1698372

Received: 03 September 2025; Accepted: 02 October 2025;

Published: 29 October 2025.

Edited by:

Robert Wunderlich, University of Tübingen, Germany

Reviewed by:

Jan Wnent, University Medical Center Schleswig-Holstein, Germany

Francesco Barbero, ASL Città di Torino, Italy

Copyright © 2025 Franc, Verde, Bonney, Hung, Cuthbertson, Raffee, Serra and Caviglia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jeffrey Michael Franc, jeffrey.franc@ualberta.ca

†ORCID: Jeffrey Michael Franc orcid.org/0000-0002-2421-3479

Manuela Verde orcid.org/0000-0003-0476-1723

Joseph Bonney orcid.org/0000-0002-1717-6243

Kevin K. C. Hung orcid.org/0000-0001-8706-7758

Joseph Cuthbertson orcid.org/0000-0003-3210-8115

Liqaa Raffee orcid.org/0000-0001-8020-8677

Eduardo Serra orcid.org/0009-0009-5065-230X

Marta Caviglia orcid.org/0000-0002-4164-4756