Feedback control of collective dynamics in an oscillator population with time-dependent connectivity

- Institute of Physics and Astronomy, University of Potsdam, Potsdam, Germany

We present a numerical study of pulsatile feedback-based control of synchrony level in a highly-interconnected oscillatory network. We focus on a nontrivial case when the system is close to the synchronization transition point and exhibits collective rhythm with strong amplitude modulation. We pay special attention to technical but essential steps like causal real-time extraction of the signal of interest from a noisy measurement and estimation of instantaneous phase and amplitude. The feedback loop’s parameters are tuned automatically to suppress synchrony. Though the study is motivated by neuroscience, the results are relevant to controlling oscillatory activity in ensembles of various natures and, thus, to the rapidly developing field of network physiology.

1 Introduction

The merger of control science and nonlinear dynamics ideas led to essential achievements in controlling chaos, coherence of noisy dynamics, and noise-induced motion; see (Schöll and Schuster, 2008) for a review. Another field of application is controlling the level of synchrony. As is known, synchronization is a general and frequently encountered phenomenon that may be beneficial or harmful (Winfree, 1967; Kuramoto, 1984; Pikovsky et al., 2001; Strogatz, 2003; Osipov et al., 2007). So, synchronizing low-power generators helps to sum their outputs and thus create a high-power source; see, e.g., (Tiberkevich et al., 2009). Synchronization is vital for the stable operation of power grids (Dörfler and Bullo, 2012) and cardiac pacemakers (Jalife, 1984). Examples of an opposite value stem from neuroscience, where pathologically increased synchrony is believed to be a cause of, e.g., Parkinson’s disease (Tass, 1999; Tass, 2000) and pedestrian bridge dynamics (Dallard et al., 2001; Eckhardt et al., 2007). This paper addresses the case of a negative impact. It discusses suppressing undesired synchrony in a highly interconnected population of active, self-sustained oscillators - a problem of general interest for network physiology (Ivanov, 2021).

Two approaches to this problem are known from the literature: the open-loop (Tass, 1999; 2001; 2002) and closed-loop control (Rosenblum and Pikovsky, 2004a; b; Popovych et al., 2005; Lin et al., 2013; Wilson and Moehlis, 2015; Holt et al., 2016; Zhou et al., 2017). The most known and tested open loop scheme is the coordinated reset (Tass, 2003; Popovych and Tass, 2012; Adamchic et al., 2014; Manos et al., 2021; Munjal et al., 2021; Khaledi-Nasab et al., 2022); it requires stimulation via multiple electrodes. The feedback schemes exploit one measuring and one stimulating electrode. Furthermore, the feedback techniques can be categorized as continuous-time and pulsatile methods. Here, we concentrate on the latter case, suitable for neuroscience applications such as deep brain stimulation (DBS) (Benabid et al., 1991; 2009; Kühn and Volkmann, 2017), where only stimulation by pulses is possible.

The main features of our modeling study are as follows. 1) We suggest a network model with time-dependent global connectivity to imitate brain activity data with strong amplitude modulation. 2) We consider that one cannot measure the rhythm of interest directly but shall extract it from its mixture with other rhythms and noise. 3) We implement a realistic scheme for real-time estimating the signal’s phase and amplitude. 4) We suggest an adaptive control approach to tune the stimulation on the fly. Our main result is that we automatically find two vulnerable phases per oscillation period and achieve suppression of the strongly modulated activity by stimulation at these phases only.

In the rest of this section, we briefly review the main results in the feedback-based manipulation of the ensemble synchrony and specify our problem in more detail.

1.1 Feedback control of collective synchrony: state of the art

All known closed-loop techniques assume one can monitor the activity in question and stimulate at least a large part of the oscillator population. The population is supposed to be highly interconnected, so the mean-filed approximation is justified. If continuous-time stimulation is possible, one achieves the desired goal by feeding back a delayed or phase-shifted measurement (Rosenblum and Pikovsky, 2004b; Tukhlina et al., 2007). The explanation is simple: elements of the population maintain synchrony due to forcing by the mean field. If stimulation compensates for the mean field, the oscillators naturally desynchronize due to frequency inhomogeneity and individual noisy perturbations. It means the stimulation shall be approximately in antiphase to the measurement and have roughly the same amplitude. Thus, the problem reduces to determining the proper phase shift and amplification; this can be done by trial and error or by an adaptive control scheme (Montaseri et al., 2013). The paramount property of this technique is the stimulation tends to zero, or practically to the level of noise, as soon as the control goal is achieved and the undesired rhythm declines. Therefore, the scheme is denoted as the vanishing stimulation. In a practical situation, one measures a mixture of the activity to be suppressed with other rhythms and noise, so filtration shall be a part of the feedback scheme.

Another way to describe the mechanism of the continuous feedback stimulation is to assume that the collective mode appears via the Hopf bifurcation and write the corresponding normal form equation. Linear delayed or non-delayed feedback (Rosenblum and Pikovsky, 2004b; Tukhlina et al., 2007) changes the linear term and thus stabilizes the otherwise unstable origin. On the level of individual oscillators, this corresponds to the asynchronous state. The normal form representation clarifies the effect of the nonlinear feedback (Popovych et al., 2005): it changes the nonlinear term in the normal form equation, thus reducing the collective mode’s amplitude without causing the bifurcation.

In many applications, especially in neuroscience, continuous-time stimulation is not feasible and has to rely on a pulsatile feedback scheme. The most straightforward solution is to employ the continuous feedback signal to modulate a high-frequency pulse train (Popovych et al., 2017). Another approach is to modulate the pulses’ amplitude following the measurement but stimulate only at the vulnerable phases (Rosenblum, 2020); in this way, one can essentially reduce the intervention in the system. In this paper, we follow this path and simulate the desynchronizing feedback accounting for such practical issues as extracting the signal of interest from its mixture with noise and real-time estimation of the signal’s phase and amplitude. We suggest a network model with time-dependent connectivity to mimic the time course of data registered from Parkinsonian subjects and demonstrate a successful synchrony control of such a network by appropriately timed charge-balanced pulses. Next, we propose and discuss an algorithm for automatically tuning feedback parameters.

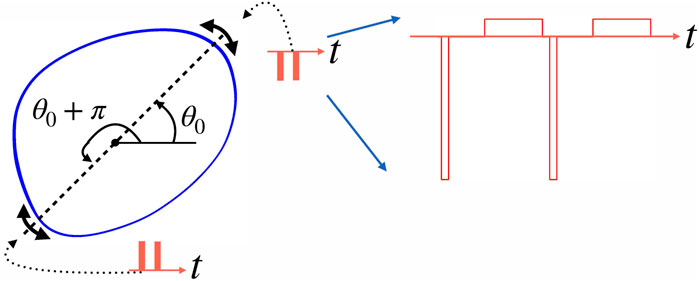

Before modeling the pulsatile feedback-based desynchronization, we discuss why certain oscillation phases are vulnerable. Consider a limit-cycle motion subject to an external stimulus that acts along some unknown direction. This direction depends on the system’s equations and how the stimulus enters these equations. Since we do not know the system’s equations, we cannot predict how the given stimulus influences the oscillation amplitude. However, we can say that the stimulus pushes the system off the limit cycle. If we apply the stimulus at such a phase that it pushes the system towards the unstable steady state inside the cycle, this action is optimal for desynchronization. Indeed, the ensemble’s synchronous state corresponds to the collective activity’s limit-cycle oscillation, while the asynchronous state corresponds to a fixed-point solution. Thus, there exists an optimal, vulnerable phase θ0. Obviously, stimulation at θ0 + π with a stimulus of opposite polarity is also optimal. Unfortunately, we cannot guess θ0. We can only apply stimuli at different phases and look at the response.

2 Modeling the closed-loop desynchronization

2.1 Simulating the amplitude-modulated activity

A commonly used model of collective dynamics in a highly interconnected oscillatory network is a mean-field coupled population. In computational neuroscience, the models frequently incorporate two groups of units, modeling excitatory and inhibitory neurons; in this case, the coupling organization may be more complex. The common feature of the models from this class is that they produce a nearly periodic collective mode. The deviation from the periodicity is due to the finite-size effect and, sometimes, weak collective chaos that is more pronounced if individual units are chaotic. However, the envelope of the rhythm to be suppressed does not vanish, and currently available techniques successfully treat this case. We concentrate here on the case when the activity of our interest is waxing and waning, as frequently observed in neuroscience measurements [for an example, see the time plots of band-passed beta-band activity from a patient with Parkinson’s disease in (Rosenblum et al., 2021)].

We use a phenomenological model to simulate the waxing and waning patterns when the activity bursts are interrupted by quiescent epochs. We take N globally coupled heterogeneous1 Bonhoeffer–van der Pol oscillators:

where k = 1, …, N is the oscillator index, and the coupling is organized via the mean field X = N−1 ∑kxk. Function P(t) describes external stimulation, and the parameter ψ quantifies how the stimuli affect the system and determines the phase beneficial for stimulation. For the following, we fix ψ = π/4. In the simulation of the feedback loop, this parameter is considered unknown, and a search algorithm is used to find an appropriate stimulation phase.

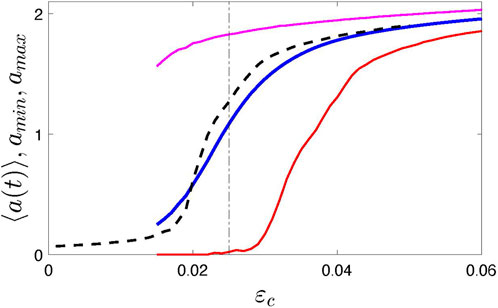

A new feature of the model is the time-dependent coupling strength ɛ(t). We assume that it fluctuates around some central value ɛc. For simulations, we use a simple algorithm; namely, we let ɛ(t) be a piece-wise constant function of time, ɛ(t) = ɛn = const for tn ≤ t < tn+1 = tn + τn, where ɛn and τn are random numbers, chosen from a uniform distribution between ɛc ∓Δɛ and τmin, τmax, respectively. Figure 1 illustrates the synchronization transition in the ensemble of N = 1,000 elements for Δɛ = 0.015, τmin = 200 and τmax = 500. Here, for the order parameter, we take the oscillation amplitude2, averaged over a long time interval, ⟨a(t)⟩. We see, that for 0.015 ≲ɛc ≲ 0.03 we obtain strongly modulated mean field X. The time plots are given in the Results section below. For comparison, we also show the synchronization transition for the usual globally-coupled model with ɛ = const (formally, it corresponds to Δɛ = 0). For simulations, we exploited the fourth-order Runge-Kutta scheme with the time step 0.1.

FIGURE 1. Synchronization transition in system (1) illustrated by the dependence of the time-averaged amplitude ⟨a(t)⟩ on the coupling strength. Black dashed line presents the standard case when the coupling is time-independent, i.e., Δɛ = 0. Blue, red, and magenta solid lines show the averaged, minimal, and maximal amplitude, respectively. Vertical dotted-dashed line indicates the value ɛc = 0.025 used in the following; this value corresponds to the strongly modulated mean field with vanishing envelope, see time plots in Figure 4.

The suggested model is certainly purely phenomenological. However, it is natural to assume that, e.g., neuronal networks in the brain are not frozen but permanently altered, e.g., due to plasticity (Manos et al., 2021; Asl et al., 2022; Khaledi-Nasab et al., 2022). Real-world networks are nonautonomous, adaptive (Gross and Blasius, 2008; Berner et al., 2023), and subject to internal fluctuations and external perturbations. Though the account of all details is impossible, a phenomenological model can help to describe the dynamics close to the synchronization transition point.

2.2 Real-time preprocessing

2.2.1 Measurement and filtering

Measuremental noise is inevitable. This noise and some irrelevant rhythms are often intense and contaminate the useful signal, so filtration becomes necessary before phase and amplitude estimation. For real-time applications, the filter can use only the current and previous measurement points, i.e., it must be causal. Thus, we implement a finite impulse response bandpass filter of length 2M + 1 points. The filter length M determines its quality: a longer digital filter provides better signal attenuation for the frequencies outside the desired band. Finite impulse response filters have linear phase response, which means they do not distort the signal but delay it by M points. Thus, the filter’s output is delayed by Mδ, where δ is the sampling rate. Hence, we can obtain only delayed values of the instantaneous phase and amplitude. If the delay is small, we can estimate the actual phase as θ(t) ≈ θ(t − Mδ) + ωMδ, where ω is the average oscillation frequency. However, the larger the delay, the worse the estimation because the system is noisy and exhibits phase diffusion. Thus, the filter shall not be too long. On the other hand, short filters are not efficient. Hence, there is an optimal filter semilength M.

2.2.2 Causal phase and amplitude estimation

Here, we implement an algorithm exploiting a non-resonant linear oscillator described in (Rosenblum et al., 2021). The idea is as follows. Suppose, for a moment, our signal is harmonic, and we use it to drive a linear damped oscillator; we use this oscillator as a virtual “measuring device.” The phase and amplitude of the oscillator are related to those of the driving force by well-known resonance curve formulas. Since we know the state of the linear oscillator, we invert the resonance relations to obtain the phase and amplitude of the force, i.e., the phase and amplitude of the analyzed signal. The crucial step is to choose the proper parameters for the “device.” By choosing the linear oscillator’s frequency much larger than the signal’s frequency and selecting appropriate damping, we can ensure that the amplitude and frequency response is approximately flat in a large interval of input frequencies. We need two oscillators to achieve this: a strongly-damped one yields a nearly constant amplitude response for frequencies much smaller than the resonant one, while a weakly-damped one provides a nearly constant phase response. The algorithm also works for the force with slowly varying amplitude and frequency. Practically, we substitute each linear oscillator with the corresponding differential equation and solve it numerically for the discretely-spaced input. Reference (Rosenblum et al., 2021) provides an efficient numerical scheme. The approach has demonstrated its efficiency in tests with bandpassed beta activity of Parkinsonian subjects (Busch et al., 2022).

2.3 Stimulation

We model stimulation by short stimuli. Thus, P(t) = ∑np(t − tn), where tn are the instants of stimulus’s application and p(t) describes the pulse shape. The stimuli have a finite length Ts, i.e., p(t) = 0 for t∉[0, Ts]. In many neuroscience applications, an additional requirement applies: the stimuli must be charge-balanced to avoid charge accumulation in the live tissue. Thus, we use bipolar stimuli consisting of two rectangular pulses of opposite polarity and equal area to fulfill the condition

FIGURE 2. This schematic figure illustrates the algorithm of stimulation at vulnerable phases. Let such phase be θ0. It means that a negative pulse applied at this phase pushes the point on the limit cycle (blue closed curve) towards the unstable steady state inside the cycle (filled circle), and so does a positive stimulus applied at θ0 + π. Practically, we check whether the circular distance between the current phase θ(t) and optimal phase θ0 is smaller than the tolerance parameter α. So, the system is stimulated if the phase is in the vicinity of θ0 or θ0 + π, as indicated by double-headed arrows. Generally, several stimuli (shown by red bars) fulfill this condition; for this illustration, we assume that this number is two. The right part of the figure shows the actual stimulus shape used in simulations. Here, two negative stimuli are shown. Each stimulus consists of a narrow but high rectangular pulse followed after some gap by a compensating pulse that is low but wide.

Next, we discuss the intensity of the stimulation. We implement a feedback algorithm with the feedback factor ɛfb < 0. It means the signal’s instantaneous amplitude a(t) determines the stimulation amplitude An, i.e., the height of the narrow rectangular pulse of the nth stimulus: An = A(tn) = max(ɛfba(t), − A0) for stimulation around θ0 and An = A(tn) = −max(ɛfba(t), − A0) for stimulation around θ0 + π.3 The parameter A0 determines the maximal allowed stimulation amplitude.

Until now, we assumed that the optimal (vulnerable) phase θ0 and the feedback factor ɛfb are known. In an actual experiment, we must determine these parameters, i.e., we need an adaptive control scheme. A natural approach is to try stimulation with different θ0, ɛfb and look at whether the signal’s amplitude is enhanced or suppressed. The known algorithms (Montaseri et al., 2013; Rosenblum, 2020) work well for signals without strong amplitude modulation, both for time-continuous and pulsatile stimulation. However, our tests find that these adaptation schemes become unstable for strong modulation, i.e., in the case treated in this paper.

We solve the problem by exploiting a trial-and-error algorithm. It means we try to stimulate at various phases and look when the stimulation is most efficient. However, this is not that trivial for the case of a burst-like, amplitude-modulated signal because we cannot tell the amplitude reduction due to stimulation from that due to internal dynamics. Indeed, the data’s essential feature is that the amplitude decreases practically to zero from time to time without any stimulation. On the other hand, even if we stimulate at the proper phase, the amplitude can grow due to increased coupling within the oscillator population. We approach the problem as follows.

In the first step, we do not stimulate but calculate the autonomous system’s average amplitude aaut. Next, in the learning phase, we start with some initial values for ɛfb and then gradually change θ0 from zero to 2πNcycl. Namely, we stimulate as described for m = 5 oscillation periods and then check whether acurr < amin. Here, acurr is the current amplitude value, averaged over m periods, and amin is the minimal amplitude value (Initially, we set amin = 0.3aaut.) If acurr < amin, we set amin = acurr and choose the current value for the optimal phase as θopt = θ0. Then, we stimulate the next m cycles and check the condition acurr < amin again. If acurr > amin but decreases, we make no changes and continue stimulation; otherwise we adjust the parameters as

where Δθ is the maximal allowed step, e.g., Δθ = 2π/25, and

where f is a decreasing function of |ɛfb|. Using such a function, we avoid feedback that is too strong. For real-time applications, it is beneficial to choose a computationally efficient function; we choose

3 Results

In this section, we show the results of feedback-based suppression of the noise-contaminated mean field X(t) generated by the globally coupled population described by Eq. 1. For the observable used by the control scheme, we take XN = X + σξ, where ξ is white Gaussian noise with zero average and standard deviation one. We fix σ = 3, i.e., the noise is strong.

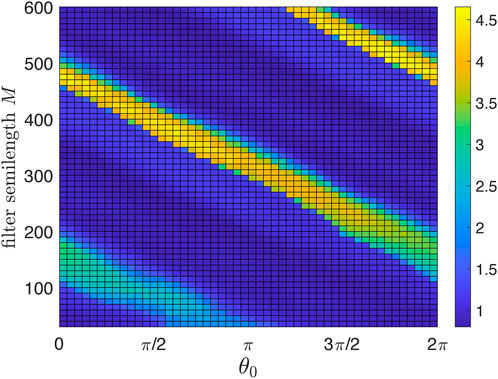

As an auxiliary step, we demonstrate in Figure 3 the effect of the digital filter.4 Above, we argued that there is an optimal filter semilength M. Here, we show the suppression degree in dependence on M. To concentrate on this issue, we consider the deterministic case of Eq. 1 with ɛ = const = 0.03. We scan M and θ0 and show by color coding the suppression factor S = S(θ0, M). We define the suppression factor as the ratio of the autonomous system’s standard deviation over the stimulated system’s standard deviation (the latter is computed after a sufficiently long transient). As expected, short filters are inefficient, while for long filters, the stimulation setup becomes more sensitive to the choice of θ0. For the following, we choose M = 350; for this filter, good suppression is achieved for 0.64π ≲θ0 ≲ 0.84π.

FIGURE 3. Suppression factor (color-coded) as a function of the stimulation phase θ0 and causal filter semilength M. Short filters are not efficient and, therefore, yield poor suppression. Large M ensures much better filtration but increases the delay introduced by the filter and thus spoils the overall performance of the feedback desynchronization scheme. The optimal M is about 350. For larger M, the maximal suppression factor remains nearly the same, but the algorithm becomes more sensitive to the choice of θ0.

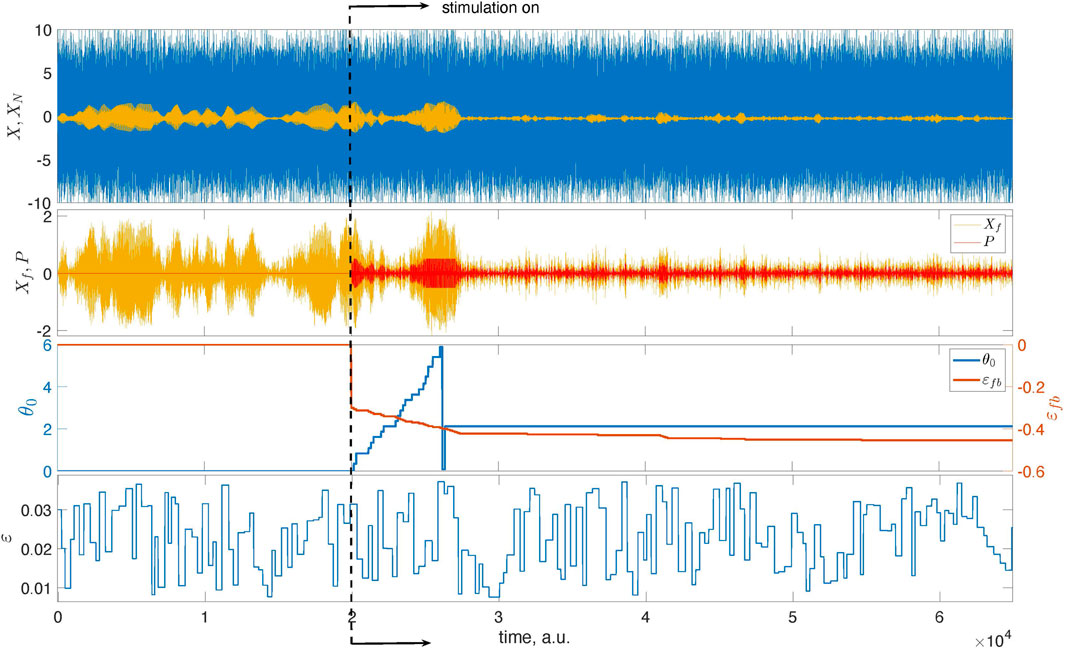

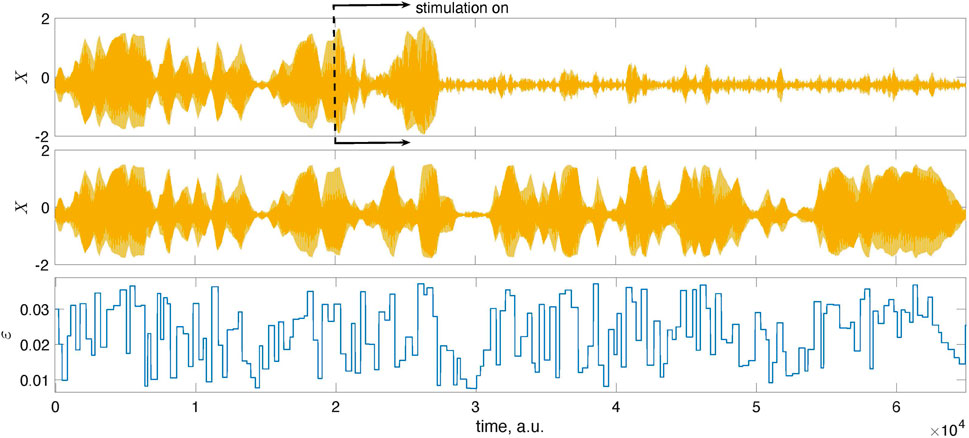

Figure 4 presents the main results. Now, we exploit the model with the time-dependent coupling with parameters ɛc = 0.025 and Δɛ = 0.015.5 We run the unstimulated system for t < 2 ⋅ 104, then turn on the stimulation and successfully suppress the collective oscillation. We take Ncycl = 1 for the adaptation epoch. The estimated optimal phase θopt = 0.67π corresponds to the domain of efficient suppression for the chosen filter.

FIGURE 4. Illustration of the feedback-based pulsatile desynchronization. The top panel presents the mean field X of system (1) and its mixture with noise, XN. The latter is used as the input to the feedback loop. The second panel from the top presents Xf, that is, the filtered series XN, and the stimulation that is turned on at t = 2 ⋅ 104. At this instant, the learning epoch begins. During this epoch, the stimulation phase θ0 grows from zero to 2π. After that, the stimulation continues with the optimal value of θ0. The bottom panel presents the time-dependent coupling.

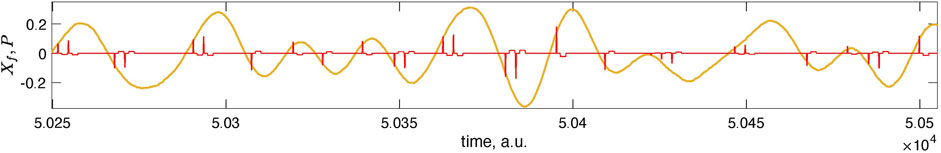

Figure 5 and Figure 6 provide further illustrations for this test. In Figure 5, we show a short epoch of the data from Figure 4 for the suppressed state magnified so that one sees individual stimuli. Figure 6 proves that the decrease of the amplitude is indeed due to stimulation and not internal dynamics. To demonstrate this, we run the system twice, with the same initial conditions and the same realization of the random time-dependent coupling ɛ(t). However, we switch on the stimulation in the first case while the system remains autonomous in the second.

FIGURE 5. Here, we magnify the short epoch of the data from Figure 4 (second panel from the top). In this magnification, one can see individual stimuli. Negative polarity pulses appear near the optimal phase θopt, while positive pulses appear near θopt + π. There are one or two pulses in the neighborhood of the vulnerable phase for the chosen parameters.

FIGURE 6. The mean fields X of the population (1) shown here were obtained from two different runs: in the top panel, the stimulation was turned on at the instant indicated by the dashed line, while in the middle panel, we show the results for the autonomous, unstimulated system. The system was started with the same initial conditions in both runs and simulated using the same time-dependent coupling. Naturally, for t < 2 ⋅ 104, the signals coincide. For larger times, they differ due to stimulation.

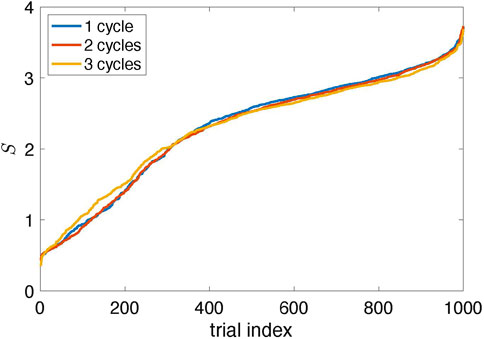

The natural question is whether the suggested algorithm always finds the proper stimulation parameters. The answer is no. The results in Figure 4 are typical, but sometimes the algorithm fails. Since it traces the amplitude variation in response to stimulation, the errors are inevitable because the amplitude can occasionally reduce due to internal dynamics, not stimulation. To estimate the probability of a failure, we performed 1,000 runs with different random realizations of the time-dependent coupling and computed the suppression factor S. We repeated this test with two and three learning cycles, letting θ0 in the learning phase grow to 4π and 6π, respectively. The results shown in Figure 7 demonstrate that the stimulation algorithm fails in

FIGURE 7. Suppression factor obtained in 1,000 trials with different realizations of the random time-dependent coupling ɛ(t) and for Ncycl = 1, 2, 3; it means that in the learning epoch, the stimulation phase θ0 changed from zero to 2πNcycl (The data are sorted in ascending order.) Approximately 90% of trials yield suppression of the collective mode (S > 1); about 70% provide significant suppression (S > 2).

4 Discussion

We presented a detailed simulation of the pulsatile feedback-based control of collective synchrony, concentrating on the case when the observed dynamics exhibit strong amplitude modulation; this case is relevant, e.g., for neuroscience. To simulate such modulated activity, we introduced a model of an oscillatory network with global randomly fluctuating coupling. We assumed that the input to the feedback loop is a mixture of the ensemble mean field and broad-band noise of high intensity. We demonstrated an efficient network desynchronization by incorporating a bandpass filter into the feedback loop and using an adaptation algorithm to tune the stimulation setup. We emphasize that we stimulate at vulnerable phases only and reduce the stimulation amplitude as soon as the goal is achieved, thus minimizing the intervention into the system. This property may be helpful for applications in life sciences and network physiology (Ivanov, 2021).

The results show that contamination of the rhythm in question by other spectrally separated rhythms and noise is not an obstacle. The feedback loop compensates for the delay introduced by a causal bandpass digital filter. Substituting the simple filter used in our simulations with a more advanced one [see, e.g. (Smetanin et al., 2020)] may improve the overall performance.

The algorithm finding the optimal stimulation phase and feedback factor can possibly be improved. One option to enhance its performance is to restart the learning epoch after some time if the suppression factor after the first search for optimal parameters is too small. An alternative approach is to substitute the adaptation algorithm by inference of the amplitude response of the system (Duchet et al., 2020; Cestnik et al., 2022) using specially designed test stimulation; the inferred information can then be used for suppressing stimulation.

Our simulations use bipolar charge-balanced stimuli consisting of two rectangular pulses with a gap in between. As known (Popovych O. V. et al., 2017; Popovych and Tass, 2019), the gap influences the stimulation efficiency, and optimizing the stimulus’s shape remains challenging (Wilson and Moehlis, 2014; Mau and Rosenblum, 2022). We notice that the desynchronization task simplifies without this application-specific charge balance requirement, i.e., when monopolar stimuli are allowed.

Finally, we stress that we paid particular attention to the computational efficiency of all building blocks of the control loop. Neither the phase and amplitude estimation nor optimal parameters search require functions computation but only arithmetical operations. Thus, the feedback scheme can be used in real-time applications and easily implemented in specialized hardware. Therefore, the presented results can be helpful in ongoing experimental research on phase-specific deep brain stimulation (Rosin et al., 2011; Cagnan et al., 2013; 2017; Little et al., 2013; Holt et al., 2019; McNamara et al., 2020). As a possible direction for future studies, we mention a further development of a network neuronal model exhibiting amplitude-modulated, burst-like behavior that will account for different types of plasticity, external inputs, internal fluctuations, and other realistic properties of the pathological pacemaker.

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Author contributions

MR: Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The author acknowledges helpful discussions with A. Pikovsky.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1Parameters Ik determine oscillator frequencies; they are taken from a Gaussian distribution with the mean 0.6 and standard deviation 0.1. These parameters ensure that all units are in the oscillatory state and have different frequencies.

2For the amplitude calculation we exploit the MATLAB® envelope function; this function uses the spline interpolation between the local maxima to provide a smooth envelope of a time series.

3We remind that we compute the instantaneous amplitude for the filtered and, hence, time-delayed signal.

4We used the MATLAB® fir1 function.

5Parameters of the stimuli are: width of the first pulse is 0.2, gap is 1, and width of the compensating pulse is 1.6; the minimal distance between the pulses is Δ = 0.2. Further parameters are: α = 0.1π, A0 = 0.5.

References

Adamchic, I., Hauptmann, C., Barnikol, U., Pawelczyk, N., Popovych, O., Barnikol, T., et al. (2014). Coordinated reset neuromodulation for Parkinson’s disease: proof-of-concept study. Mov. Disord. 29, 1679–1684. doi:10.1002/mds.25923

Asl, M. M., Vahabie, A.-H., Valizadeh, A., and Tass, P. (2022). Spike-timing-dependent plasticity mediated by dopamine and its role in Parkinson’s disease pathophysiology. Front. Netw. Physiol. 2, 817524. doi:10.3389/fnetp.2022.817524

Benabid, A., Chabardes, S., Mitrofanis, J., and Pollak, P. (2009). Deep brain stimulation of the subthalamic nucleus for the treatment of Parkinson’s disease. Lancet Neurol. 8, 67–81. doi:10.1016/S1474-4422(08)70291-6

Benabid, A., Pollak, P., Gervason, C., Hoffmann, D., Gao, D., Hommel, M., et al. (1991). Long-term suppression of tremor by chronic stimulation of the ventral intermediate thalamic nucleus. Lancet 337, 403–406. doi:10.1016/0140-6736(91)91175-t

Berner, R., Gross, T., Kuehn, C., Kurths, J., and Yanchuk, S. (2023). Adaptive dynamical networks. Phys. Rep. 1031, 1–59. doi:10.1016/j.physrep.2023.08.001

Busch, J., Feldmann, L., Kühn, A., and Rosenblum, M. (2022). Real-time phase and amplitude estimation of neurophysiological signals exploiting a non-resonant oscillator. Exp. Neurol. 347, 113869. doi:10.1016/j.expneurol.2021.113869

Cagnan, H., Brittain, J.-S., Little, S., Foltynie, T., Limousin, P., Zrinzo, L., et al. (2013). Phase dependent modulation of tremor amplitude in essential tremor through thalamic stimulation. Brain 136, 3062–3075. doi:10.1093/brain/awt239

Cagnan, H., Pedrosa, D., Little, S., Pogosyan, A., Cheeran, B., Aziz, T., et al. (2017). Stimulating at the right time: phase-specific deep brain stimulation. Brain 40, 132–145. doi:10.1093/brain/aww286

Cestnik, R., Mau, E. T. K., and Rosenblum, M. (2022). Inferring oscillator’s phase and amplitude response from a scalar signal exploiting test stimulation. New J. Phys. 24, 123012. doi:10.1088/1367-2630/aca70a

Dallard, P., Fitzpatrick, T., Flint, A., Low, A., Smith, R. R., Willford, M., et al. (2001). London millennium bridge: pedestrian-induced lateral vibration. J. Bridge Eng. 6, 412–417. doi:10.1061/(asce)1084-0702(2001)6:6(412)

Dörfler, F., and Bullo, F. (2012). Synchronization and transient stability in power networks and nonuniform Kuramoto oscillators. SIAM J. Control Optim. 50, 1616–1642. doi:10.1137/110851584

Duchet, B., Weerasinghe, G., Cagnan, H., Brown, P., Bick, C., and Bogacz, R. (2020). Phase-dependence of response curves to deep brain stimulation and their relationship: from essential tremor patient data to a Wilson-Cowan model. J. Math. Neurosci. 10, 4. doi:10.1186/s13408-020-00081-0

Eckhardt, B., Ott, E., Strogatz, S. H., Abrams, D. M., and McRobie, A. (2007). Modeling walker synchronization on the millennium bridge. Phys. Rev. E 75, 021110. doi:10.1103/PhysRevE.75.021110

Gross, T., and Blasius, B. (2008). Adaptive coevolutionary networks: a review. J. R. Soc. Interface 5, 259–271. doi:10.1098/rsif.2007.1229

Holt, A., Wilson, D., Shinn, M., Moehlis, J., and Netoff, T. (2016). Phasic burst stimulation: a closed-loop approach to tuning deep brain stimulation parameters for Parkinson’s disease. PLoS Comput. Biol. 12, e1005011. doi:10.1371/journal.pcbi.1005011

Holt, A. B., Kormann, E., Gulberti, A., Pötter-Nerger, M., McNamara, C. G., Cagnan, H., et al. (2019). Phase-dependent suppression of beta oscillations in Parkinson’s disease patients. J. Neurosci. 39, 1119–1134. doi:10.1523/JNEUROSCI.1913-18.2018

Ivanov, P. (2021). The new field of network physiology: building the human physiolome. Front. Netw. Physiol. 1, 711778. doi:10.3389/fnetp.2021.711778

Jalife, J. (1984). Mutual entrainment and electrical coupling as mechanisms for synchronous firing of rabbit sino-atrial pace-maker cells. J. Physiol. Lond. 356, 221–243. doi:10.1113/jphysiol.1984.sp015461

Khaledi-Nasab, A., Kromer, J., and Tass, P. (2022). Long-lasting desynchronization of plastic neuronal networks by double-random coordinated reset stimulation. Front. Netw. Physiol. 2, 864859. doi:10.3389/fnetp.2022.864859

Kühn, A., and Volkmann, J. (2017). Innovations in deep brain stimulation methodology. Mov. Disord. 32, 11–19. doi:10.1002/mds.26703

Lin, W., Pu, Y., Guo, Y., and Kurths, J. (2013). Oscillation suppression and synchronization: frequencies determine the role of control with time delays. EPL Europhys. Lett. 102, 20003. doi:10.1209/0295-5075/102/20003

Little, S., Pogosyan, A., Neal, S., Zavala, B., Zrinzo, L., Hariz, M., et al. (2013). Adaptive deep brain stimulation in advanced Parkinson disease. Ann. Neurol. 74, 449–457. doi:10.1002/ana.23951

Manos, T., Diaz-Pier, S., and Tass, P. (2021). Long-term desynchronization by coordinated reset stimulation in a neural network model with synaptic and structural plasticity. Front. Physiol. 12, 716556. doi:10.3389/fphys.2021.716556

Mau, E. T. K., and Rosenblum, M. (2022). Optimizing charge-balanced pulse stimulation for desynchronization. Chaos 32, 013103. doi:10.1063/5.0070036

McNamara, C. G., Rothwell, M., and Sharott, A. (2020). Phase-dependent closed-loop modulation of neural oscillations in vivo. bioRxiv. doi:10.1101/2020.05.21.102335

Montaseri, G., Javad Yazdanpanah, M., Pikovsky, A., and Rosenblum, M. (2013). Synchrony suppression in ensembles of coupled oscillators via adaptive vanishing feedback. Chaos 23, 033122. doi:10.1063/1.4817393

Munjal, T., Silchenko, A., Pfeifer, K., Han, S., Yankulova, J., Fitzgerald, M., et al. (2021). Treatment tone spacing and acute effects of acoustic coordinated reset stimulation in tinnitus patients. Front. Netw. Physiol. 1, 734344. doi:10.3389/fnetp.2021.734344

Osipov, G., Kurths, J., and Zhou, C. (2007). Synchronization in oscillatory networks. Berlin Heidelberg: Springer-Verlag.

Pikovsky, A., Rosenblum, M., and Kurths, J. (2001). Synchronization. A universal concept in nonlinear sciences. Cambridge: Cambridge University Press.

Popovych, O., Lysyansky, B., Rosenblum, M., Pikovsky, A., and Tass, P. (2017a). Pulsatile desynchronizing delayed feedback for closed-loop deep brain stimulation. PLOS One 12, e0173363. doi:10.1371/journal.pone.0173363

Popovych, O. V., Hauptmann, C., and Tass, P. A. (2005). Effective desynchronization by nonlinear delayed feedback. Phys. Rev. Lett. 94, 164102. doi:10.1103/PhysRevLett.94.164102

Popovych, O. V., Lysyansky, B., and Tass, P. A. (2017b). Closed-loop deep brain stimulation by pulsatile delayed feedback with increased gap between pulse phases. Sci. Rep. 7, 1033. doi:10.1038/s41598-017-01067-x

Popovych, O. V., and Tass, P. A. (2012). Desynchronizing electrical and sensory coordinated reset neuromodulation. Front. Hum. Neurosci. 6, 58. doi:10.3389/fnhum.2012.00058

Popovych, O. V., and Tass, P. A. (2019). Adaptive delivery of continuous and delayed feedback deep brain stimulation - a computational study. Sci. Rep. 9, 10585. doi:10.1038/s41598-019-47036-4

Rosenblum, M. (2020). Controlling collective synchrony in oscillatory ensembles by precisely timed pulses. Chaos Interdiscip. J. Nonlinear Sci. 30, 093131. doi:10.1063/5.0019823

Rosenblum, M., Pikovsky, A., Kühn, A., and Busch, J. (2021). Real-time estimation of phase and amplitude with application to neural data. Sci. Rep. 11, 18037. doi:10.1038/s41598-021-97560-5

Rosenblum, M. G., and Pikovsky, A. S. (2004a). Controlling synchronization in an ensemble of globally coupled oscillators. Phys. Rev. Lett. 92, 114102. doi:10.1103/PhysRevLett.92.114102

Rosenblum, M. G., and Pikovsky, A. S. (2004b). Delayed feedback control of collective synchrony: an approach to suppression of pathological brain rhythms. Phys. Rev. E. 70, 041904. doi:10.1103/PhysRevE.70.041904

Rosin, B., Slovik, M., Mitelman, R., Rivlin-Etzion, M., Haber, S. N., Israel, Z., et al. (2011). Closed-loop deep brain stimulation is superior in ameliorating parkinsonism. Neuron 72, 370–384. doi:10.1016/j.neuron.2011.08.023

Smetanin, N., Belinskaya, A., Lebedev, M., and Ossadtchi, A. (2020). Digital filters for low-latency quantification of brain rhythms in real time. J. Neural Eng. 17, 046022. doi:10.1088/1741-2552/ab890f

Tass, P. (2001). Effective desynchronization with a resetting pulse train followed by a single pulse. Europhys. Lett. 55, 171–177. doi:10.1209/epl/i2001-00397-8

Tass, P. (2002). Effective desynchronization with bipolar double-pulse stimulation. Phys. Rev. E 66, 036226. doi:10.1103/PhysRevE.66.036226

Tass, P. A. (1999). Phase resetting in medicine and biology. Stochastic modelling and data analysis. Berlin: Springer-Verlag.

Tass, P. A. (2000). Stochastic phase resetting: a theory for deep brain stimulation. Prog. Theor. Phys. Suppl. 139, 301–313. doi:10.1143/ptps.139.301

Tass, P. A. (2003). A model of desynchronizing deep brain stimulation with a demand-controlled coordinated reset of neural subpopulations. Biol. Cybern. 89, 81–88. doi:10.1007/s00422-003-0425-7

Tiberkevich, V., Slavin, A., Bankowski, E., and Gerhart, G. (2009). Phase-locking and frustration in an array of nonlinear spin-torque nano-oscillators. Appl. Phys. Lett. 95, 262505. doi:10.1063/1.3278602

Tukhlina, N., Rosenblum, M., Pikovsky, A., and Kurths, J. (2007). Feedback suppression of neural synchrony by vanishing stimulation. Phys. Rev. E. 75, 011918. doi:10.1103/PhysRevE.75.011918

Wilson, D., and Moehlis, J. (2014). Optimal chaotic desynchronization for neural populations. SIAM J. Appl. Dyn. Syst. 13, 276–305. doi:10.1137/120901702

Wilson, D., and Moehlis, J. (2015). Clustered desynchronization from high-frequency deep brain stimulation. PLOS Comput. Biol. 11, e1004673. doi:10.1371/journal.pcbi.1004673

Winfree, A. T. (1967). Biological rhythms and the behavior of populations of coupled oscillators. J. Theor. Biol. 16, 15–42. doi:10.1016/0022-5193(67)90051-3

Keywords: network physiology, oscillations, connectivity, non-autonomous dynamics, synchrony control

Citation: Rosenblum M (2024) Feedback control of collective dynamics in an oscillator population with time-dependent connectivity. Front. Netw. Physiol. 4:1358146. doi: 10.3389/fnetp.2024.1358146

Received: 19 December 2023; Accepted: 23 January 2024;

Published: 02 February 2024.

Edited by:

Eckehard Schöll, Technical University of Berlin, GermanyReviewed by:

Yanhua Hong, Bangor University, United KingdomThanos Manos, CY Cergy-Paris University—ENSEA—CNRS, France

Oleksandr Popovych, Helmholtz Association of German Research Centres (HZ), Germany

Copyright © 2024 Rosenblum. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michael Rosenblum, mros@uni-potsdam.de

Michael Rosenblum

Michael Rosenblum