Emotion and attention effects: is it all a matter of timing? Not yet

- Department of Psychological and Brain Sciences, Indiana University, Bloomington, IN, USA

Controversy surrounds the relationship between emotion and attention in brain and behavior. Two recent studies acquired millisecond-level data to investigate the timing of emotion and attention effects in the amygdala (Luo et al., 2010; Pourtois et al., 2010). Both studies argued that the effects of emotional content temporally precede those of attention and that prior discrepancies in the literature may stem from the temporal characteristics of the functional MRI (fMRI) signal. Although both studies provide important insights about the temporal unfolding of affective responses in the brain, several issues are discussed here that qualify their results. Accordingly, it may not yet be time to accept the conclusion that “automaticity is a matter of timing”. Indeed, emotion and attention may be more closely linked than suggested in the two studies discussed here.

The relationship between emotion and cognition has been the target of a large conceptual and empirical literature. One particular facet of this question pertains to the link between emotion and attention (emotion here understood in the sense of affective processing). Is the perception of emotion-laden stimuli “automatic”? This question has received considerable interest because specific answers (“yes” or “no”) suggest potentially different relationships between emotion and cognition (more or less independence between the two, respectively). Evidence both for and against automaticity has been presented. For instance, emotional faces evoke responses in the amygdala when attention is diverted to other stimuli (Vuilleumier et al., 2001; Anderson et al., 2003). These and many related findings suggest that at least some types of emotional perception occur outside of top-down directed attention. Other findings have suggested, however, that the perception of emotion-laden items requires attention, as revealed by attentional manipulations that were designed to more strongly consume processing resources, leaving relatively few for the processing of unattended emotional items (Pessoa et al., 2002, 2005; Bishop et al., 2004, 2007; Hsu and Pessoa, 2007; Silvert et al., 2007; Lim et al., 2008). Overall, the automaticity debate, as it relates to the role of attention in affective processing, remains unresolved and controversial (Pessoa et al., 2010, submitted).

Although the scope of the present piece precludes a more in-depth discussion of automaticity, some comments are in order here. Broadly speaking, although automaticity is a concept that is operationalized in quite different ways across studies, it can be characterized as involving processing occurring independently of the availability of processing resources, not affected by intentions and strategies, and not necessarily tied to conscious processing (Posner and Snyder, 1975; Jonides, 1981; Tzelgov, 1997). A frequent strategy to probe potential automaticity is thus to manipulate the focus of attention, for instance, by evaluating the extent to which task-irrelevant information influences performance of a central task (Pashler, 1998). In this context, the degree to which processing resources are consumed by the attentional manipulation is at times described as the attentional load of the task (Lavie, 1995). For further discussion of conceptual issues and shortcomings surrounding the notion of automaticity, please see Pessoa et al. (submitted).

Techniques that provide fast temporal information at the millisecond level have been used to probe the timing of affective processing in humans. In the context of the relationship between emotional perception and attention, two recent studies are particularly noteworthy because they explicitly manipulated attention and emotion while brain signals were measured with magnetoencephalography (MEG) or intracranial recordings in an attempt to evaluate responses evoked in the amygdala – which is often considered to be a “signature” of affective processing. These studies are also important because a possible concern with fMRI studies, which have investigated this question in some depth, is that the technique may be relatively blind to fast effects. In other words, rapid effects of emotional items that are independent of attention may have been missed by fMRI, which only provides a low-pass version of the associated neural events. The goal of the present contribution is to briefly discuss these two studies (for a more comprehensive review of the relationship between emotion and attention, see Pessoa et al., submitted).

In the first study, MEG was employed to investigate responses in the amygdala while participants viewed task-irrelevant fearful and neutral faces (Luo et al., 2010). On each trial, the observer’s task consisted in discriminating the orientation of peripherally located bars (same or different?). As in previous studies, attention was manipulated by varying task difficulty. During the low-load condition, the bar orientation difference was high (90°), making the task very easy. During the high-load condition, the bar orientation difference was low (15°), making the task relatively hard.

Magnetoencephalography responses revealed a significant main effect of facial expression in the left amygdala. Specifically, increased gamma-band activity was observed in response to fearful relative to neutral expressions very soon after stimulus onset (30–60 ms). Consistent with the notion of automaticity, no main effect of attentional load or load-by-expression interaction was detected during this early time window. In contrast, such an interaction was observed in the right amygdala at a later time (280–340 ms). Specifically, under the low-load condition, increased gamma-band activity was observed for fearful relative to neutral expressions; but, under high-load conditions, there was no effect of emotional expression on amygdala responses during this later time window. Taken together, the authors propose that “emotional automaticity is a matter of timing”, and suggest that fMRI may simply miss the fast, first pass of emotional information, which presumably would be automatic.

Localizing sources with EEG and/or MEG data is a complex problem, and it is not entirely clear that signals from deep structures in the brain, such as the amygdala, can be localized with certainty at this point in time (see below). An approach that bypasses this problem is to record directly from the amygdala in humans (e.g., during presurgical preparation). That was the strategy adopted by Pourtois et al. (2010), who employed the same paradigm as an earlier fMRI study (Vuilleumier et al., 2001), in which two houses (e.g., to the left and right of fixation) and two faces (e.g., below and above fixation) were employed. The subject’s task was to determine if the horizontal or vertical stimulus pair was identical or not. Recordings from face-sensitive sites in the lateral amygdala showed an early and systematic differential neural response between fearful and neutral faces, regardless of attention. Differences were observed from 140 to 290 ms. Furthermore, comparing trials with task-relevant versus task-irrelevant faces (regardless of emotional expression) revealed a sustained attentional effect in the left amygdala, but starting only at 710 ms post stimulus onset.

The two studies above provide important advances in our attempt to understand the interactions between emotion and attention. Specifically, by employing techniques that offer millisecond temporal resolution, the studies attempted to determine the temporal evolution of affective processing and how it is influenced by attention. However, the two studies also pose important questions, as discussed in what follows.

Let us consider the MEG study first. The amygdala is a deep structure in the brain and, accordingly, one that is challenging to probe with techniques such as electroencephalography (EEG) and MEG. Some investigators have suggested that advanced source analysis techniques are capable of estimating signals from this structure (Ioannides et al., 1995; Streit et al., 2003); for a recent evaluation, see also Cornwell et al. (2008). In particular, Luo et al. (2010) suggest that synthetic aperture magnetometry is well suited in this case. These so-called adaptive beamformer techniques reconstruct the source activity on a predefined three-dimensional grid using a weighted sum of sensor data (Vrba and Robinson, 2001; Barnes and Hillebrand, 2003). Other studies suggest, however, that localizing signals to structures deep in the brain may be problematic. For instance, in one investigation, simultaneous MEG and invasive EEG recordings indicated that epileptic activity restricted to medial temporal structures cannot reliably be detected by MEG and that an extended cortical area of at least 6–8 cm² involving the basal temporal lobe is necessary to generate a reproducible MEG signal (Baumgartner et al., 2000); see also Mikuni et al. (1997). It is thus unclear whether amygdala responses can be reliably estimated with MEG at the moment.
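The “weighted sum of sensor data” can be written compactly. The expression below is a generic unit-gain, minimum-variance beamformer given only as an illustrative sketch; it is not necessarily the exact estimator used by Luo et al. (2010), and all symbols are introduced here for illustration: $\mathbf{b}(t)$ is the vector of sensor measurements, $\mathbf{C}$ the sensor covariance matrix, and $\mathbf{l}_r$ the lead field (forward model) of a source at grid location $r$ with a fixed orientation.

```latex
\hat{s}_r(t) = \mathbf{w}_r^{\top}\,\mathbf{b}(t),
\qquad
\mathbf{w}_r = \frac{\mathbf{C}^{-1}\,\mathbf{l}_r}{\mathbf{l}_r^{\top}\,\mathbf{C}^{-1}\,\mathbf{l}_r}
```

Under this formulation the weights satisfy $\mathbf{w}_r^{\top}\mathbf{l}_r = 1$. Because the lead field of a deep source is small in magnitude, the corresponding weights tend to be large, so sensor noise is amplified in the estimate; this is one way to see why signals attributed to deep structures call for careful validation.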

The results by Luo et al. (2010) further indicated that responses in the amygdala are modulated by affective content within 30–40 ms, possibly via a fast pathway. Yet, the timing is puzzling in light of known response latencies in the visual system. For instance, the earliest responses in the lateral geniculate nucleus (LGN) of the thalamus, which receives direct retinal input, are observed at approximately 30 ms, and on average they are around 33 ms (for the magno system) and 50 ms (for the parvo system) (Lamme and Roelfsema, 2000). When considering neuronal response latencies, other issues are important, too. In addition to the latency itself, one needs to consider “computation time”. It has been suggested (Tovee and Rolls, 1995) that most of the information encoded by visual neurons may be available in segments of activity 100 ms long, that a fair amount of information is available in 50-ms segments, and that some is available even in 20–30 ms segments (note that these segments take into account response latency, namely they consider neuronal spikes after a certain delay). Although these figures demonstrate the remarkable speed of neuronal computation (at least under some conditions), they add precious milliseconds to the time required to, for instance, discriminate between stimuli (e.g., hypothetical differential responses in the LGN would be expected to appear at 60 ms post stimulus onset or later). An additional consideration is that responses in humans are possibly slower than in monkeys, further adding time. For instance, in one human study (Yoshor et al., 2007), the fastest recording sites had latencies just under 60 ms, and were probably located in V1 (or possibly V2). In the monkey, the fastest responses in V1 can be observed under 40 ms (Lamme and Roelfsema, 2000).

What are the response latencies of neurons in the amygdala? In the monkey, amygdala responses typically range from 100 to 200 ms (Leonard et al., 1985; Nakamura et al., 1992; Gothard et al., 2007; Kuraoka and Nakamura, 2007) – although shorter response latencies to unspecific stimuli (e.g., fixation spots) have at times been reported (Gothard et al., 2007). Differences in evoked responses between threat and neutral or appeasing facial expressions in the monkey amygdala have been reported in the range of 120–250 ms (Gothard et al., 2007). Intracranial studies in humans generally find the earliest single-unit responses around 200 ms (Oya et al., 2002; Mormann et al., 2008). And in a patient study, affective modulation of amygdala responses was also observed starting at 200 ms (Krolak-Salmon et al., 2004); see also (Oya et al., 2002).

Recently, we have advocated that bypass systems involving the cortex may rapidly convey affective information throughout the brain (Pessoa and Adolphs, submitted). This “parallel processing” architecture would allow fast affective responses around 100–150 ms post stimulus onset. Interestingly, this potential time course matches the one observed in the intracranial study by Pourtois et al. (2010), in which affective influences were observed starting at 140 ms. A potential concern with that study, however, is that the task employed was not challenging. Specifically, the patient was correct 95% of the time during face trials, and 97% of the time during house trials. Whereas this made for balanced task performance for faces and houses (and was probably determined by the testing of a patient in the context of neurosurgery), in all likelihood, the task was not sufficiently demanding. As demonstrated in the past, processing resources “spill over” when the central task is not sufficiently taxing (Lavie, 1995, 2005), and therefore effects of valence under these conditions are not entirely surprising. Although the effect of valence can be referred to as “automatic” in the sense of implicit processing of task-irrelevant information, the task does not allow for a stricter test of automaticity in terms of obligatory processing (Pessoa et al., submitted).

In light of these remarks on capacity limitations, it is worth considering that even in the MEG study, the attentional manipulation may not have been sufficiently strong given that observers performed at 83% correct in the high-load condition. In contrast, in a similar bar-orientation task, performance was at 64% correct during the most demanding condition (Pessoa et al., 2002); notably, in another bar-orientation study, we did observe valence effects on reaction time when performance was 79% correct, but these disappeared when performance was at approximately 60% correct (Erthal et al., 2005). In general, attentional manipulations that more completely exhaust processing capacity must be sought, as in similar questions of the need for attention during scene perception in which a clearer demonstration of the impact of the attentional manipulation is provided (Li et al., 2002).

A second important issue with the intracranial study refers to the timing of the attention effect, which was reported to start at around 700 ms. As noted by the authors, this timing is considerably later than effects of attention on sensory processing, which can be observed around 100 ms or earlier (Luck et al., 2000). As the authors suggested, this late effect may have been due to other task-related differences associated with processing the emotional significance of the faces. In any case, the effect would seem to have had a different origin than the modulation of visual processing by attentional mechanisms.

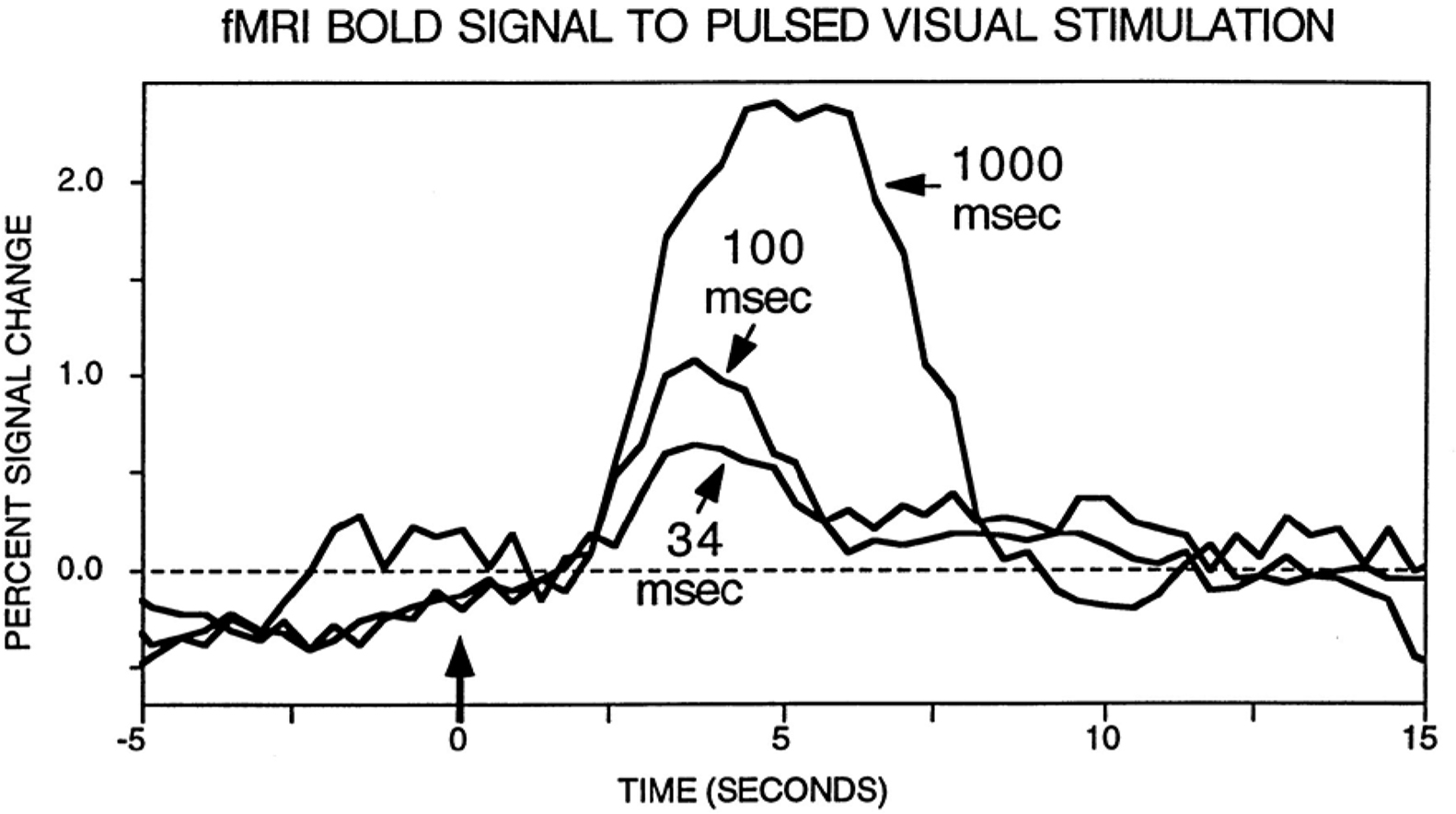

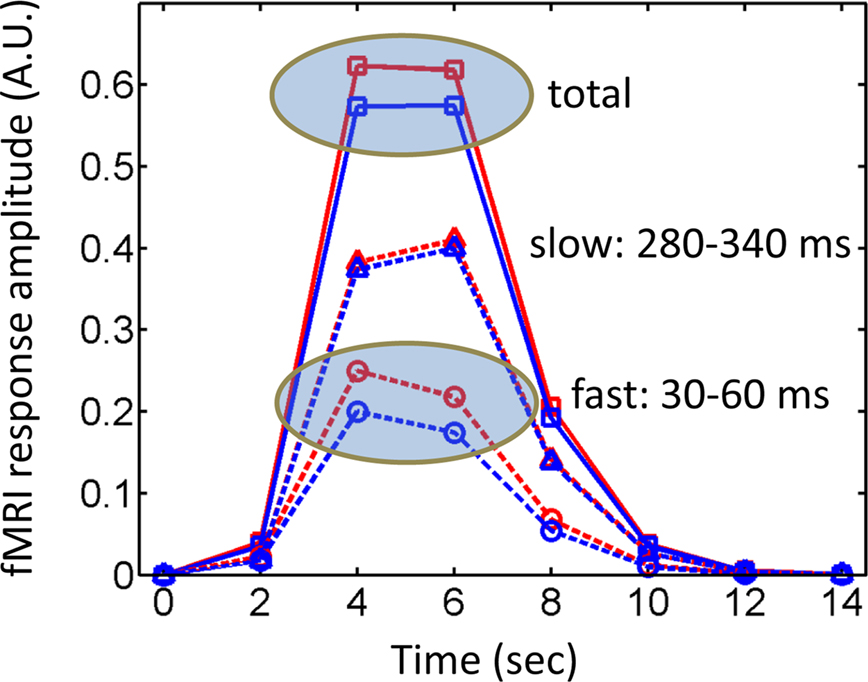

A final issue merits discussion here. A common objection to fMRI is that it might not be sensitive to brief events. Whereas this is a possible concern given the low-pass characteristics of the BOLD signal, several examples show that this problem does not necessarily hold. Perhaps surprisingly at the time, the BOLD signal was indeed found to be sensitive to transient events, as originally demonstrated by Savoy et al. (1995) (Figure 1). Importantly, fMRI responses have been consistently reported for stimuli that are presented very briefly (∼30 ms) and even masked, a result replicated many times with affective stimuli (Morris et al., 1998; Whalen et al., 1998), in particular. Thus, whereas it is clearly desirable to obtain millisecond-level data, such as provided by techniques like MEG, fMRI is certainly not blind to brief, transient events, as further illustrated in the simulations summarized in Figure 2.

Figure 1. fMRI responses to brief stimuli. Original results by Savoy et al. (1995) illustrating that a clear signal change is observed for very brief events. Figure reproduced from Rosen et al. (1998).

Figure 2. Simulated fMRI responses and timing. During the hard condition in the study by Luo et al. (2010), fast responses varied as a function of valence, whereas later responses did not – i.e., attention affected the latter, but not the former. However, fast responses are not inherently invisible to fMRI, and are expected to generate differential fMRI responses, as suggested by the simulated responses labeled “fast”. The “slow” component was also simulated and no differential responses would be expected (the slight displacement was used for display only). A typical fMRI study would pick up the “total” signal containing the contributions of both fast and slow components and, in theory, should be sensitive to the differences that were present only in the first time window (see also Figure 1). Simulated responses were generated by convolving an initial input function with a canonical hemodynamic response (Cohen, 1997). The input function can be viewed as a boxcar with “fast events” occurring between 30–60 ms and “slow events” occurring between 280–340 ms (a virtual temporal resolution of 10 ms was used in the definition of the boxcar events and in the convolution operation). The “fast” effect of emotional content was simulated by assuming a boxcar of intensity 0.2 versus 0.25 for affective and neutral conditions, respectively. Finally, it was assumed that fMRI signals were sampled every 2 s. Red lines: affective conditions; blue lines: neutral conditions; dashed lines: fast and slow components; solid lines: total signal; A.U.: arbitrary units.
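The procedure described in the Figure 2 caption can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used to generate the figure: the gamma-variate form and its parameters (`b = 8.6`, `c = 0.547` s) are values commonly associated with Cohen (1997) and are assumed here, as are the 30-s simulated window and the amplitude of the “slow” component (`SLOW_AMP`), neither of which is given in the caption. The “fast” amplitudes, the event windows, the 10-ms resolution, and the 2-s sampling are taken from the caption as written.

```python
import math

STEP_MS = 10          # virtual temporal resolution (from the caption)
TR_MS = 2000          # fMRI sampling interval (from the caption)
DURATION_MS = 30000   # simulated window; an assumption, not in the caption
DT = STEP_MS / 1000.0 # step size in seconds

def hrf(t, b=8.6, c=0.547):
    """Gamma-variate hemodynamic response, scaled to unit peak.
    The parameter values are assumptions (see lead-in)."""
    if t <= 0.0:
        return 0.0
    peak = (b * c) ** b * math.exp(-b)  # value of t**b * exp(-t/c) at t = b*c
    return (t ** b) * math.exp(-t / c) / peak

def boxcar(start_ms, stop_ms, amplitude, n):
    """Boxcar input that is `amplitude` on [start_ms, stop_ms) and 0 elsewhere."""
    return [amplitude if start_ms <= i * STEP_MS < stop_ms else 0.0
            for i in range(n)]

def convolve(signal, kernel):
    """Discrete causal convolution, truncated to the length of `signal`."""
    n = len(signal)
    out = [0.0] * n
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # the inputs are sparse, so skip silent samples
        for j in range(n - i):
            out[i + j] += s * kernel[j] * DT
    return out

def simulate(fast_amp, slow_amp):
    """Return the 'total' (fast + slow) response sampled every TR."""
    n = DURATION_MS // STEP_MS
    kernel = [hrf(i * DT) for i in range(n)]
    fast = convolve(boxcar(30, 60, fast_amp, n), kernel)     # 30-60 ms events
    slow = convolve(boxcar(280, 340, slow_amp, n), kernel)   # 280-340 ms events
    total = [f + s for f, s in zip(fast, slow)]
    return total[:: TR_MS // STEP_MS]

SLOW_AMP = 0.2  # hypothetical; identical across conditions (no slow-window effect)
affective = simulate(0.2, SLOW_AMP)   # fast amplitudes as listed in the caption
neutral = simulate(0.25, SLOW_AMP)
difference = [n - a for n, a in zip(neutral, affective)]
print("largest sampled difference (A.U.):", max(abs(d) for d in difference))
```

Because the two conditions differ only in the 30–60 ms window, any nonzero entry in `difference` comes from the “fast” component alone. Whether a difference of that size would be detectable in practice depends on the noise level, which this sketch does not model.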

In summary, both studies described here provide important insights about the temporal unfolding of affective responses in the brain. Both studies argued that the effects of emotional content temporally precede those of attention and that prior discrepancies in the literature may have stemmed from the temporal characteristics of the fMRI signal. However, the points raised here suggest that several important issues concerning the two studies remain to be elucidated by future investigations. Generally, as previously stated, stronger manipulations of attention will be critically important, as well as a clearer understanding of the extent to which they consume different types of processing resources (Pessoa et al., submitted). In particular, in the context of the MEG study, more conclusive evidence of amygdala involvement is needed (ideally via more thorough validation procedures). Furthermore, a better understanding of the timing of the MEG effect is required, as it appears to conflict with extant data on monkeys and humans (including the findings of the intracranial study). Taken together, it is suggested that it may not yet be time to accept the conclusion that “emotional automaticity is a matter of timing”: emotion and attention may be more closely linked than suggested in the two studies discussed here.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Support for this work was provided in part by the National Institute of Mental Health (R01 MH071589). I would like to thank James Blair and Patrik Vuilleumier for comments on a previous version of the manuscript, Srikanth Padmala for generating the simulated responses in Figure 2, and Shruti Japee for feedback on MEG methods.

References

Anderson, A. K., Christoff, K., Panitz, D., De Rosa, E., and Gabrieli, J. D. (2003). Neural correlates of the automatic processing of threat facial signals. J. Neurosci. 23, 5627–5633.

Barnes, G. R., and Hillebrand, A. (2003). Statistical flattening of MEG beamformer images. Hum. Brain Mapp. 18, 1–12.

Baumgartner, C., Pataraia, E., Lindinger, G., and Deecke, L. (2000). Neuromagnetic recordings in temporal lobe epilepsy. J. Clin. Neurophysiol. 17, 177–189.

Bishop, S. J., Duncan, J., and Lawrence, A. D. (2004). State anxiety modulation of the amygdala response to unattended threat-related stimuli. J. Neurosci. 24, 10364–10368.

Bishop, S. J., Jenkins, R., and Lawrence, A. D. (2007). Neural processing of fearful faces: effects of anxiety are gated by perceptual capacity limitations. Cereb. Cortex 17, 1595–1603.

Cohen, M. S. (1997). Parametric analysis of fMRI data using linear systems methods. Neuroimage 6, 93–103.

Cornwell, B. R., Carver, F. W., Coppola, R., Johnson, L., Alvarez, R., and Grillon, C. (2008). Evoked amygdala responses to negative faces revealed by adaptive MEG beamformers. Brain Res. 1244, 103–112.

Erthal, F. S., de Oliveira, L., Mocaiber, I., Pereira, M. G., Machado-Pinheiro, W., Volchan, E., and Pessoa, L. (2005). Load-dependent modulation of affective picture processing. Cogn. Affect. Behav. Neurosci. 5, 388–395.

Gothard, K. M., Battaglia, F. P., Erickson, C. A., Spitler, K. M., and Amaral, D. G. (2007). Neural responses to facial expression and face identity in the monkey amygdala. J. Neurophysiol. 97, 1671–1683.

Hsu, S. M., and Pessoa, L. (2007). Dissociable effects of bottom-up and top-down factors on the processing of unattended fearful faces. Neuropsychologia 45, 3075–3086.

Ioannides, A. A., Liu, M. J., Liu, L. C., Bamidis, P. D., Hellstrand, E., and Stephan, K. M. (1995). Magnetic field tomography of cortical and deep processes: examples of “real-time mapping” of averaged and single trial MEG signals. Int. J. Psychophysiol. 20, 161–175.

Jonides, J. (1981). Attention and Performance XI, eds M. I. Posner and O. Marin (Hillsdale, NJ: Erlbaum), 187–205.

Krolak-Salmon, P., Henaff, M. A., Vighetto, A., Bertrand, O., and Mauguiere, F. (2004). Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: a depth electrode ERP study in human. Neuron 42, 665–676.

Kuraoka, K., and Nakamura, K. (2007). Responses of single neurons in monkey amygdala to facial and vocal emotions. J. Neurophysiol. 97, 1379–1387.

Lamme, V. A., and Roelfsema, P. R. (2000). The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 23, 571–579.

Lavie, N. (1995). Perceptual load as a necessary condition for selective attention. J. Exp. Psychol. Hum. Percept. Perform. 21, 451–468.

Lavie, N. (2005). Distracted and confused?: selective attention under load. Trends Cogn. Sci. 9, 75–82.

Leonard, C. M., Rolls, E. T., Wilson, F. A., and Baylis, G. C. (1985). Neurons in the amygdala of the monkey with responses selective for faces. Behav. Brain Res. 15, 159–176.

Li, F. F., VanRullen, R., Koch, C., and Perona, P. (2002). Rapid natural scene categorization in the near absence of attention. Proc. Natl. Acad. Sci. U.S.A. 99, 9596–9601.

Lim, S. L., Padmala, S., and Pessoa, L. (2008). Affective learning modulates spatial competition during low-load attentional conditions. Neuropsychologia 46, 1267–1278.

Luck, S. J., Woodman, G. F., and Vogel, E. K. (2000). Event-related potential studies of attention. Trends Cogn. Sci. 4, 432–440.

Luo, Q., Holroyd, T., Majestic, C., Cheng, X., Schechter, J., and Blair, R. J. (2010). Emotional automaticity is a matter of timing. J. Neurosci. 30, 5825–5829.

Mikuni, N., Nagamine, T., Ikeda, A., Terada, K., Taki, W., Kimura, J., Kikuchi, H., and Shibasaki, H. (1997). Simultaneous recording of epileptiform discharges by MEG and subdural electrodes in temporal lobe epilepsy. Neuroimage 5, 298–306.

Mormann, F., Kornblith, S., Quiroga, R. Q., Kraskov, A., Cerf, M., Fried, I., and Koch, C. (2008). Latency and selectivity of single neurons indicate hierarchical processing in the human medial temporal lobe. J. Neurosci. 28, 8865–8872.

Morris, J. S., Ohman, A., and Dolan, R. J. (1998). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470.

Nakamura, K., Mikami, A., and Kubota, K. (1992). Activity of single neurons in the monkey amygdala during performance of a visual discrimination task. J. Neurophysiol. 67, 1447–1463.

Oya, H., Kawasaki, H., Howard, M. A. III, and Adolphs, R. (2002). Electrophysiological responses in the human amygdala discriminate emotion categories of complex visual stimuli. J. Neurosci. 22, 9502–9512.

Pessoa, L., McKenna, M., Gutierrez, E., and Ungerleider, L. G. (2002). Neural processing of emotional faces requires attention. Proc. Natl. Acad. Sci. U.S.A. 99, 11458–11463.

Pessoa, L., Oliveira, L., and Pereira, M. G. (2010). Attention and emotion. Scholarpedia J. 5, 6314.

Pessoa, L., Padmala, S., and Morland, T. (2005). Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. Neuroimage 28, 249–255.

Posner, M. I., and Snyder, C. R. R. (1975). “Attention and cognitive control,” in Information Processing and Cognition: The Loyola Symposium, ed. R. L. Solso (Hillsdale, NJ: Erlbaum), 55–85.

Pourtois, G., Spinelli, L., Seeck, M., and Vuilleumier, P. (2010). Temporal precedence of emotion over attention modulations in the lateral amygdala: intracranial ERP evidence from a patient with temporal lobe epilepsy. Cogn. Affect. Behav. Neurosci. 10, 83–93.

Rosen, B. R., Buckner, R. L., and Dale, A. M. (1998). Event-related functional MRI: past, present, and future. Proc. Natl. Acad. Sci. U.S.A. 95, 773–780.

Savoy, R. L., Bandettini, P. A., O’Craven, K. M., Kwong, K. K., Davis, T. L., Baker, J. R., Weisskoff, R. M., and Rosen, B. R. (1995). Proc. Soc. Magn. Reson. Med. 450.

Silvert, L., Lepsien, J., Fragopanagos, N., Goolsby, B., Kiss, M., Taylor, J. G., Raymond, J. E., Shapiro, K. L., Eimer, M., and Nobre, A. C. (2007). Influence of attentional demands on the processing of emotional facial expressions in the amygdala. Neuroimage 38, 357–366.

Streit, M., Dammers, J., Simsek-Kraues, S., Brinkmeyer, J., Wolwer, W., and Ioannides, A. (2003). Time course of regional brain activations during facial emotion recognition in humans. Neurosci. Lett. 342, 101–104.

Tovee, M. J., and Rolls, E. T. (1995). Information encoding in short firing rate epochs by single neurons in the primate temporal visual cortex. Vis. Cogn. 2, 35–58.

Tzelgov, J. (1997). Specifying the relations between automaticity and consciousness: a theoretical note. Conscious. Cogn. 6, 441–451.

Vrba, J., and Robinson, S. E. (2001). Signal processing in magnetoencephalography. Methods 25, 249–271.

Vuilleumier, P., Armony, J. L., Driver, J., and Dolan, R. J. (2001). Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron 30, 829–841.

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., and Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418.

Keywords: emotion, attention, timing, automaticity

Citation: Pessoa L (2010) Emotion and attention effects: is it all a matter of timing? Not yet. Front. Hum. Neurosci. 4:172. doi: 10.3389/fnhum.2010.00172

Received: 22 June 2010;

Paper pending published: 20 July 2010;

Accepted: 11 August 2010;

Published online: 14 September 2010

Edited by:

Hauke R. Heekeren, Max Planck Institute for Human Development, Germany

Reviewed by:

Sonia Bishop, University of California at Berkeley, USA

David Zald, Vanderbilt University, USA

Copyright: © 2010 Pessoa. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Luiz Pessoa, Department of Psychological and Brain Sciences, Indiana University, 1101 E 10th Street, Bloomington, IN 47405, USA. e-mail: lpessoa@indiana.edu