- 1 Department of Psychology, University of Nevada Las Vegas, Las Vegas, NV, USA

- 2 The Rotman Research Institute, Baycrest Centre for Geriatric Care, Toronto, ON, Canada

Auditory perception and cognition entail both low-level and high-level processes, which are likely to interact with each other to create our rich conscious experience of soundscapes. The research that we review here has revealed numerous influences of high-level factors, such as attention, intention, and prior experience, on conscious auditory perception. Recent studies have also shown that auditory scene analysis tasks can exhibit multistability in a manner very similar to ambiguous visual stimuli, presenting a unique opportunity to study neural correlates of auditory awareness and the extent to which mechanisms of perception are shared across sensory modalities. Research has also led to a growing number of techniques through which auditory perception can be manipulated and even completely suppressed. Such findings have important consequences for our understanding of the mechanisms of perception and should also allow scientists to precisely distinguish the contributions of different higher-level factors.

Introduction

Understanding conscious experience of the external world has been a pursuit of theorists since the early days of experimental psychology. For example, Wundt and Titchener were among those who used introspection of their own perceptions to try to arrive at the fundamental units of experience (Boring, 1953; Danzinger, 1980). However, since then perception science and other areas of experimental psychology and neuroscience have been dominated by more objective psychophysical methods of understanding perception that have, as a consequence or by design, pushed the inquiry of subjective experience to the background. This objective measurement of perception has provided exquisite information about our perceptual skills to detect, discriminate, and categorize particular stimuli, and the underlying neuro-computational mechanisms of these abilities.

Recently, however, theorists have made an important contribution to reviving the scientific study of consciousness, perhaps most notably by defining accessible empirical problems such as how to explain the generation of perceptual awareness or consciousness (Crick and Koch, 1995, 2003), which we operationally define as the explicit reporting of a particular stimulus or how it is perceptually organized. This has led to investigations into the necessary and sufficient conditions for people to be aware of stimuli, especially in visual perception. For example, researchers have investigated the role of particular brain areas (Leopold and Logothetis, 1999; Tong et al., 2006; Donner et al., 2008) and particular neural processes such as feedback from higher to lower areas (Pascual-Leone and Walsh, 2001; Hochstein and Ahissar, 2002; Lamme, 2004; Wibral et al., 2009) that are associated with visual awareness. In many cases, these investigations have made use of multistable visual stimuli that can be perceived in more than one way (e.g., the well-known Necker cube, Long and Toppino, 2004), enabling the investigation of changes in perception without any confounding stimulus changes. The development of techniques to manipulate whether people are aware of particular stimuli (Kim and Blake, 2005) has additionally led to evaluating awareness (e.g., of a prior stimulus) as an independent variable (i.e., rather than studying awareness as the outcome variable) that can affect perception of subsequent stimuli (e.g., Kanai et al., 2006; for a review Koch and Tsuchiya, 2007). Much less work of these types has been done on auditory awareness, but several promising lines of research have begun, which we discuss in detail below.

In this review of the literature, we focus on three main types of research on auditory perception. First, we review research that demonstrates effects of attention and other high-level factors on auditory perceptual organization, with an emphasis on the difficulty in manipulating attention separately from other factors. Next, we discuss the fact that perception of sound objects exhibits the hallmarks of multistability and therefore shows promise for future studies of auditory perception and its underlying neural mechanisms. In this section, we also review research on the neural correlates of subjective auditory perception, which provides clues as to the areas of the brain that determine perception of sound objects. Finally, we discuss a number of recent demonstrations in which auditory events can be made imperceptible, which like their visual counterparts can enable researchers to identify the mechanisms of auditory awareness. Some of the studies that have been done permit interesting comparisons between perception of sound and conscious perception of stimuli in other sensory modalities. When possible, we will point out the similarities and differences across modalities, and point out the need for future research to delineate the extent to which similar phenomena and similar mechanisms are present across the senses during perception.

Auditory Scene Analysis as a Framework to Study Awareness

Auditory scene analysis (ASA) is a field of study that has been traditionally concerned with how the auditory system perceptually organizes incoming sounds from different sources in the environment into sound objects or streams, such as discrete sounds (e.g., phone ringing, gunshot) or sequences of sounds (e.g., melody, voice of a friend in crowded restaurant), respectively (Bregman, 1990). For example, in a crowded restaurant in which many people are talking at the same time, an individual must segregate the background speech from his or her dining partner’s speech and group the various sound components of the partner’s speech appropriately into a meaningful stream of words. ASA has mainly been studied with the goal of understanding how listeners segregate and group sounds; however, research in this field has also developed paradigms that are highly suitable for studying more general perceptual mechanisms and how low-level stimulus factors and higher-level factors such as attention, intention, and previous knowledge influence perception. In ASA studies, participants are often asked to report on their subjective experience of hearing two or more segregated patterns; and as mentioned earlier, when sounds are kept constant the operation of perceptual mechanisms can be studied directly without confounding effects of stimulus manipulations. However, indirect performance-based measures of segregation can also be informative because they tend to show the same effects as subjective measures (e.g., Roberts et al., 2002; Stainsby et al., 2004; Micheyl and Oxenham, 2010). Another important aspect of many ASA studies is that they often use rather simple arrangements of sounds that are easy to generate and manipulate. They also do not involve many of the complications associated with using real-world sounds (e.g., speech and music), such as the activation of long-term memory or expertise-related processes. 
Thus, such high-level processes can be controlled and studied with relative ease.

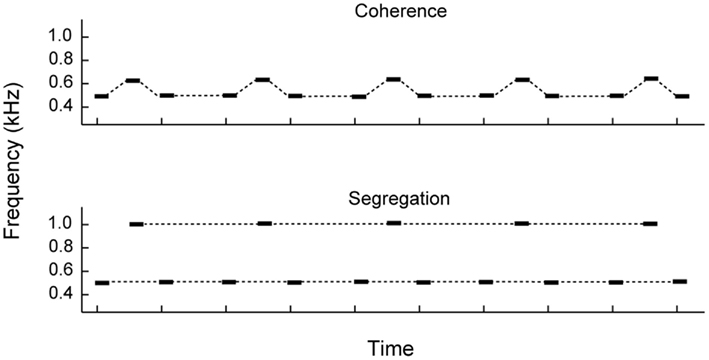

Bregman (1990) proposed two main classes of ASA mechanisms: (1) primary mechanisms that process incoming mixtures of sounds in an automatic fashion using simple transformations, and (2) schema-based mechanisms that are more likely to be attention-, intention-, and knowledge-dependent. An example of the operation of primary ASA is the well-known effect of frequency separation (Δf) during segregation of sequential tone patterns (Miller and Heise, 1950; Bregman and Campbell, 1971; Van Noorden, 1975). In the laboratory, auditory stream segregation has been studied extensively as an example of sequential segregation by playing two alternating pure tones of different frequencies (A and B) in a repeating pattern (e.g., ABA-ABA-…, where “-” corresponds to a silence), as shown in Figure 1. At first, the tones are heard as a single stream with a galloping rhythm, but after several repetitions of the sequence, the tones are often heard as splitting into two streams or “streaming” (i.e., A-A-A-A… and B—B—…). The larger the Δf between the A and B tones and the more rapidly they are presented, the more likely participants report hearing two streams as opposed to one stream. The characteristic time course of pure-tone streaming, called buildup, is likely to have its basis in the adaptation of frequency-tuned neurons in early brainstem and/or primary cortical stages of processing (Micheyl et al., 2005; Pressnitzer et al., 2008; for reviews, Micheyl et al., 2007a; Snyder and Alain, 2007). But more recent research has shown that a number of stimulus cues besides pure-tone frequency can result in perception of streaming, even cues that are known to be computed in the central auditory system (for reviews, Moore and Gockel, 2002; Snyder and Alain, 2007). 
This evidence that streaming occurs at central sites raises the possibility that auditory perception results from a combination of activity at multiple levels of the auditory system, including those that can be influenced by schema-based mechanisms.
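
The ABA- stimulus described above can be sketched in a few lines of code. This is only an illustrative sketch, not the procedure of any cited study: the function name `aba_sequence`, the choice to express Δf in semitones, and the default 100 ms slot duration are all assumptions made for the example.

```python
def aba_sequence(freq_a_hz, delta_f_semitones, slot_ms=100.0, n_triplets=3):
    """Return (onset_ms, frequency_hz) events for a repeating ABA- pattern.

    A frequency of None marks the silent slot ("-") that closes each triplet.
    Hypothetical helper: parameter names and defaults are assumptions.
    """
    # Assumption: B lies delta_f_semitones above A on a log-frequency scale.
    freq_b_hz = freq_a_hz * 2.0 ** (delta_f_semitones / 12.0)
    pattern = [freq_a_hz, freq_b_hz, freq_a_hz, None]  # A, B, A, silence

    events = []
    for triplet in range(n_triplets):
        for slot, freq in enumerate(pattern):
            # Every slot (tone or silence) occupies the same duration.
            onset_ms = (triplet * len(pattern) + slot) * slot_ms
            events.append((onset_ms, freq))
    return events
```

Increasing `delta_f_semitones` or decreasing `slot_ms` corresponds to the two manipulations that, according to the text, make reports of two streams more likely.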

Figure 1. In auditory stream segregation experiments, low and high tones are alternated repeatedly. When the frequency difference between the tones is small (top), this typically leads to perception of one coherent stream. For large frequency differences (bottom), the sequence is more likely to be heard as two segregated streams.

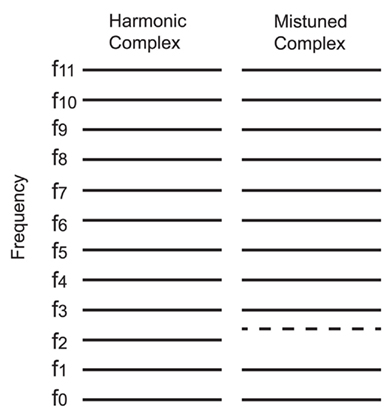

In addition to segregation of sequential patterns, another important aspect of scene analysis is the segregation of sounds that occur concurrently, such as when two individuals speak at exactly the same time. In social gatherings, human listeners must perceptually integrate the simultaneous components originating from one person’s voice (i.e., fundamental frequency or f0, and harmonics that are integer-multiples of f0) and segregate these from concurrent sounds of other talkers. Psychophysical research has identified several cues that influence how concurrent sounds will be grouped together (for reviews, Carlyon, 2004; Alain, 2007; Ciocca, 2008). For instance, sounds that are harmonically related, begin at the same time and originate from the same location are more likely to emanate from the same physical object than those that are not. In the laboratory, experimenters can induce the perception of concurrent sound objects by mistuning one spectral component (i.e., a harmonic) from an otherwise periodic harmonic complex tone (see Figure 2). Low harmonics mistuned by about 4–6% of their original value stand out from the complex so that listeners report hearing two sounds: a complex tone and another sound with a pure-tone quality (Moore et al., 1986). While several studies have investigated the role that attention plays in auditory stream segregation, which we review below, far less research has been done on the impact of high-level factors on concurrent sound segregation.

Figure 2. In mistuned harmonic experiments, a complex harmonic sound composed of frequency components that are all multiples of the fundamental frequency (f0) is heard as a single sound with a buzzy quality (left). When one of the components is mistuned, it stands out as a separate pure-tone object in addition to the remaining complex sound (right).
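
The component frequencies of such a stimulus follow directly from the definition: each harmonic is an integer multiple of f0, and the mistuned component is shifted by a percentage of its original value. The sketch below is a minimal illustration under that reading; the function name `harmonic_complex` and its parameters are hypothetical and not taken from the cited studies.

```python
def harmonic_complex(f0_hz, n_harmonics, mistuned_harmonic=None, mistuning_pct=0.0):
    """Return the component frequencies (Hz) of a harmonic complex tone.

    Harmonic k has frequency k * f0_hz. If mistuned_harmonic is given,
    that one component is shifted by mistuning_pct percent of its
    original (in-tune) value. Hypothetical helper for illustration only.
    """
    freqs = []
    for k in range(1, n_harmonics + 1):
        freq = k * f0_hz
        if k == mistuned_harmonic:
            freq *= 1.0 + mistuning_pct / 100.0
        freqs.append(freq)
    return freqs
```

For example, `harmonic_complex(200.0, 10)` gives a fully in-tune complex, while `harmonic_complex(200.0, 10, mistuned_harmonic=3, mistuning_pct=5.0)` shifts only the third harmonic, within the 4–6% range at which low harmonics are reported to stand out.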

Effects of High-Level Factors on Auditory Scene Analysis

Attention

Attention during auditory stream segregation. Psychophysical studies have shown that buildup of stream segregation is modulated by attention, suggesting the involvement of high-level factors in perception of streaming. In these studies, participants were presented with an ABA- pattern to one ear. The role attention plays in auditory stream segregation was examined by assessing the buildup of streaming while participants were engaged in a separate auditory task, visual task, or non-sensory task in which they counted backward (Carlyon et al., 2001, 2003; Thompson et al., 2011). Engaging participants in a primary task diverted attention away from the ABA- pattern. When attending to the ABA- pattern, participants showed a typical pattern of buildup. However, when attending to the other task for the first part of the ABA- pattern, participants failed to show any sign of buildup when they switched their attention to the pattern. Thus, buildup either did not occur while attention was diverted to the primary task or it was reset following the brief switch in attention (Cusack et al., 2004), a distinction that has been quite difficult to resolve using psychophysical measurements. These effects occurred regardless of the task used to capture attention (Carlyon et al., 2003), suggesting that buildup involves mechanisms within central auditory areas, multimodal pathways, and/or in peripheral areas that can be influenced in a top-down fashion by attention. To explain these results, Cusack et al. (2004) proposed a hierarchical model of stream segregation. According to this model, preattentive mechanisms segregate streams based on acoustic features (e.g., Δf) and attention-dependent buildup mechanisms further break down outputs (streams) of this earlier process that are attended to. For example, when talking to a friend at a concert, low-level processes automatically segregate the friend’s voice from the music.
However, since attention is allocated to the friend’s voice and not the concert, buildup processes do not further decompose the music into its constituent parts (e.g., guitar, drums, bass, etc.; also, see Alain and Arnott, 2000).

Consistent with this model, Snyder et al. (2006) provided event-related potential (ERP) evidence for at least two mechanisms contributing to stream segregation: an early preattentive segregation mechanism and an attention-dependent buildup mechanism. In particular, auditory cortical responses (P2 and N1c) to an ABA- pattern increased in amplitude with increasing Δf and correlated with behavioral measures of streaming; this enhancement occurred even when attention was directed away from the ABA- pattern. Additionally, a temporally broad enhancement following the onset of an ABA- pattern progressively increased in positivity throughout the course of the pattern. The time course of this progressive increase indicated a strong link with the buildup of streaming. Importantly, this enhancement was diminished when participants’ attention was directed away from the ABA- pattern. These findings support the existence of an attention-dependent buildup mechanism in addition to a preattentive segregation mechanism. Also, since buildup-related processes were measured during passive listening, these findings are more consistent with an effect of sustained attention as opposed to the possibility that buildup is simply reset following brief switches in attention (cf. Cusack et al., 2004).

However, Sussman et al. (2007) showed that buildup does not always require attention. They showed that deviant stimuli embedded within a high-tone stream of an ABA- pattern resulted in a mismatch negativity response during perception of two streams (Sussman et al., 1999, 2007). Furthermore, deviants were more likely to evoke a mismatch negativity when they occurred at the end of ABA- patterns compared to when they occurred early on, consistent with the time course of buildup. Importantly, these findings were similar whether or not the ABA- patterns were attended, suggesting that attention may not be required for buildup to occur, in contrast to the findings discussed above. Because this study used relatively large Δfs, it is possible that attention only modulates buildup in the absence of robust segregation cues (i.e., large Δf; Sussman et al., 2007). Indeed, Snyder et al. (2006) included several conditions with Δfs smaller than that used by Sussman et al. (2007). Additionally, close inspection of Cusack et al. (2004) shows that preattentive buildup processes were more prevalent for larger than smaller Δf conditions.

Several additional physiological studies have examined the effects of selective attention on streaming. These studies have supported a gain model in which attention to a target stream enhances neural processing of sounds within that stream while suppressing unattended streams. An early ERP study showed that selective attention to a stream facilitated early sensory processing of that stream and inhibited processing of unattended streams (Alain and Woods, 1994). More recent studies have focused on the effects of selective attention on continuous neural activity to sound streams. For example, in addition to enhanced transient responses generated in associative auditory areas (Bidet-Caulet et al., 2007), selective attention enhanced steady-state responses generated in primary auditory cortex to attended streams (Bidet-Caulet et al., 2007; Elhilali et al., 2009b; Xiang et al., 2010). Furthermore, these responses were entrained to the rhythm of the target stream and constrained by known entrainment capabilities within auditory cortex (i.e., better entrained for low vs. high frequencies; Xiang et al., 2010). High-density ERP and neuromagnetic studies have recently examined neural responses to continuous speech streams played amongst distracting speech (Kerlin et al., 2010; Ding and Simon, 2012). Both studies demonstrated that low-frequency (4–8 Hz) speech envelope information was represented in the auditory cortex of listeners. These representations were measured as either a continuous low-frequency response phase-locked to the speech (Kerlin et al., 2010) or a phase-locked N1-like neuromagnetic response that was primarily driven by low-frequency features of the speech (Ding and Simon, 2012). Consistent with a gain model, selectively attending to a speech stream enhanced the continuous low-frequency response to the attended speech and (possibly) suppressed responses to unattended speech (Kerlin et al., 2010). 
In a separate study, attention enhanced an N1-like response to attended speech and suppressed responses to unattended speech (Ding and Simon, 2012). In this latter case, the relatively short latency of these effects suggests that attention modulated bottom-up segregation and/or selection processes. Furthermore, this finding generalizes similar effects of selective attention on the auditory N1 ERP response from simple tones (Hillyard et al., 1973) to more naturalistic speech stimuli. Taken together, these findings are consistent with a gain model in which attention to a sound stream improves its neural representation while suppressing representations of irrelevant streams.

An issue with this type of gain model is that it is not uncommon for separate streams of speech to share similar acoustic features and, accordingly, activate overlapping neuronal receptive fields. In this case, attention-related enhancement or suppression would act on both attended and unattended streams. Therefore, in addition to gain, attention may also serve to narrow neuronal receptive fields of neurons within the auditory cortex (Ahveninen et al., 2011). This would, in effect, increase feature selectivity and decrease the likelihood that separate streams of speech activate overlapping neurons. To test this model, participants were presented with target sequences of repeating tones embedded within notch-filtered white noise that did not overlap with the frequency of the target. Auditory cortical responses (N1) to unattended sounds were reduced in amplitude reflecting lateral inhibition from the masker. In contrast, these attenuated effects disappeared for attended target stimuli. Here, selective attention may have narrowed the width of the receptive fields processing the target stream and, consequently, increased the representational distance between task-relevant and task-irrelevant stimuli. Furthermore, these neuronal changes correlated with behavioral measures of target detection suggesting that attention-related receptive field narrowing aided segregation, in addition to any helpful effects of gain.

A third way in which selective attention influences neural processes of streaming is enhancing temporal coherence between neuronal populations. In particular, attention to a target stream enhanced synchronization between distinct neuronal populations (both within and across hemispheres) responsible for processing stimuli within that stream and this correlated with behavioral measures of streaming (Elhilali et al., 2009b; Xiang et al., 2010). Enhanced synchronization may have facilitated the perceptual boundary between acoustic features belonging to attended and unattended streams as detected by a temporal coherence mechanism (Shamma et al., 2011). Consistent with the role of temporal coherence in streaming, when presented with a modified ABA- pattern in which low- (A) and high- (B) pitched tones were played simultaneously rather than sequentially participants reported hearing one stream even for very large Δfs (Elhilali et al., 2009a). Taken together, these physiological studies revealed at least three ways in which attention modulated streaming: (1) enhanced processing of the stimuli within the task-relevant stream and suppressed processing of those within the task-irrelevant stream (Alain and Woods, 1994; Bidet-Caulet et al., 2007; Elhilali et al., 2009b; Kerlin et al., 2010; Xiang et al., 2010; Ding and Simon, 2012), (2) enhanced feature selectivity for task-relevant stimuli (Ahveninen et al., 2011), and (3) enhanced temporal coherence between distinct neuron populations processing task-relevant stimuli (Elhilali et al., 2009b; Xiang et al., 2010).

Jones et al. (1981) theorized that rhythmic attention plays a role in the stream segregation process. Rhythmic attention is assumed to be a time-dependent process that dynamically fluctuates in a periodic fashion between a high and low state (Large and Jones, 1999). According to this theory, rhythmic attention aids listeners in picking up relations between adjacent and non-adjacent events when they are nested in a common rhythm. Therefore, when stimuli have a regular periodic pattern, rhythmic attention can detect sounds that do and do not belong to that stream. Indeed, when two streams of tones differed in rhythm they were more likely to be segregated even for tones relatively close in frequency (Jones et al., 1981). These findings are consistent with physiological studies that showed steady-state brain responses to be entrained to the rhythm of the segregated target stream (Elhilali et al., 2009b; Xiang et al., 2010). However, follow-up studies to Jones et al. (1981) have yielded conflicting results. For example, Rogers and Bregman (1993) showed that a context sequence of B-only tones was similarly likely to increase segregation in a short ABA- pattern whether the context sequence matched or mismatched the ABA- rhythm. Therefore, manipulating rhythm only minimally enhanced the effect of Δf during perception of streaming. However, it is not clear whether the buildup observed during these single-tone contexts was mediated by similar mechanisms as those that are active while listening to an ABA- context pattern (Thompson et al., 2011). Therefore, it is possible that rhythmic attention modulates these two types of buildup in a different manner. Studies by Alain and Woods (1993, 1994) also provided little evidence that rhythm has a role in streaming. They showed that the likelihood of segregating a target stream of tones from distracters was similar for sequences that had regular or irregular rhythms.
However, because the rhythms of target and distracter streams were never manipulated independently, rhythm could not be used as a reliable cue for segregation. Therefore, in light of these issues, it still seems possible that rhythmic attention may modulate stream segregation, especially in cases where Δf is not sufficient for segregation to occur.

Indeed, the role of rhythmic attention in streaming has been the focus of several recent studies, which have proven more consistent with the ideas of Jones et al. (1981). For example, when Δfs were small, listeners were more likely to segregate an irregular target stream from a distracter stream when the distracter was isochronous (Andreou et al., 2011). However, given a large enough Δf, rhythm had a marginal influence on measures of streaming. Therefore, it may be that large Δfs are a dominant cue for streaming, but that listeners consider other cues such as rhythm when Δf is small. Other studies, in which participants detected a target melody interleaved with irrelevant melodies, showed that participants used rhythmic pattern to attend to points in time during which notes of the target melody occurred (Dowling et al., 1987) and reduce the distracting effects of irrelevant melodies (Devergie et al., 2010). Finally, listeners used rhythmic differences between streams to maintain perception of segregated streams (Bendixen et al., 2010). A plausible explanation for these results is that attention to the task-relevant stream was facilitated when the target stream had a regular rhythm distinct from other streams. Additionally, increased suppression of isochronous distracter streams facilitated attention to an irregular task-relevant stream. Taken together, studies suggest that rhythmic attention may modulate streaming, perhaps in conditions in which more salient cues are unavailable, but more work is needed to assess the generality of these findings.

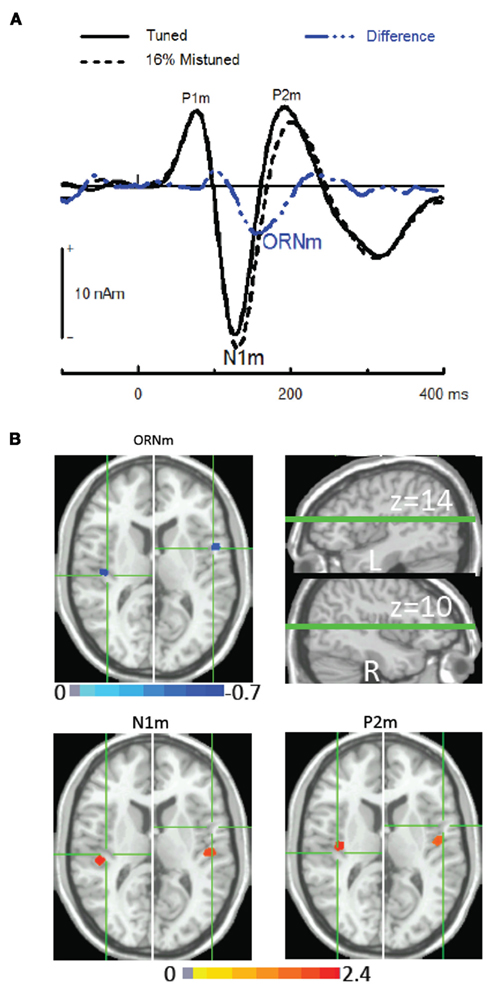

Attention during concurrent sound segregation. As with stream segregation, scalp-recorded ERPs have proven helpful in investigating the role of attention during concurrent sound perception because they allow one to examine the processing of auditory stimuli while they occur outside the focus of attention. Alain et al. (2001) measured auditory ERPs while participants were presented with harmonic complex tones with or without a mistuned harmonic; in one condition they indicated whether they heard one vs. two sounds, while in another condition they listened passively (i.e., read a book of their choice, with no response required). The main finding was an increased negativity that was superimposed on the N1 and P2 waves elicited by the sound onset. Figure 3 shows examples of neuromagnetic activity elicited by tuned and mistuned stimuli and the corresponding difference wave referred to as the object-related negativity (ORN), so named because its amplitude correlated with the observers’ likelihood of hearing two concurrent sound objects. The ERP recording by Alain et al. (2001) during the passive listening condition was instrumental in showing that the ORN, thought to index concurrent sound segregation and perception, occurred automatically. The proposal that low-level concurrent sound segregation mechanisms are not under attentional control was confirmed in subsequent ERP studies using active listening paradigms that varied auditory (Alain and Izenberg, 2003) or visual attentional demands (Dyson et al., 2005).

Figure 3. A neural marker of concurrent sound segregation based on harmonicity. (A) Neuromagnetic activity elicited by harmonic complexes that had all harmonics in tune or the third harmonic mistuned by 16% of its original value. The magnetic version of the ORN (ORNm) is isolated in the difference wave between responses elicited by the tuned and mistuned stimuli. The group mean responses are from 12 young adults. (B) Source modeling using the beamforming technique called event-related synthetic aperture magnetometry (ER-SAM). The activation maps (group image results) are overlaid on a brain image conforming to Talairach space. Green cross hairs highlight the location of the peak maxima for the ORNm sources (blue) derived from subtracting the ER-SAM results for the 0% mistuned stimulus from that of the 16% mistuned stimulus. For comparison, ER-SAM source maps at the time interval of the peak N1m and P2m responses (red) are plotted at the same ORNm axial (z-plane) level.
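
The difference-wave logic behind the ORN can be illustrated with a minimal sketch. It assumes two averaged waveforms sampled at the same time points and subtracts the tuned response from the mistuned response, matching the direction of the subtraction described for the source maps in Figure 3. The function names are hypothetical, and the sketch omits everything a real analysis would need (filtering, baseline correction, statistics).

```python
def difference_wave(mistuned_response, tuned_response):
    """Point-by-point difference (mistuned minus tuned) of two averaged waveforms.

    Both inputs are sequences of amplitudes sampled at identical time points.
    Hypothetical helper: the subtraction direction is an assumption.
    """
    if len(mistuned_response) != len(tuned_response):
        raise ValueError("waveforms must have the same number of samples")
    return [m - t for m, t in zip(mistuned_response, tuned_response)]


def peak_negativity(wave, times_ms):
    """Return (latency_ms, amplitude) of the most negative sample in a waveform."""
    if len(wave) != len(times_ms):
        raise ValueError("wave and times_ms must have the same length")
    idx = min(range(len(wave)), key=lambda i: wave[i])
    return times_ms[idx], wave[idx]
```

Applying `peak_negativity` to the output of `difference_wave` gives the latency and amplitude of the largest negative deflection, which is the kind of measure that would then be related to listeners' reports of hearing two objects.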

In addition to providing evidence for primary sound segregation, ERPs also revealed attention-related effects during the perception of concurrent sound objects. Indeed, when listeners were required to indicate whether they heard one or two sounds, the ORN was followed by a positive wave that peaked about 400 ms after sound onset, referred to as the P400 (Alain et al., 2001). It was present only when participants were required to make a response about the stimuli and hence is thought to index perceptual decision-making. Like the ORN, the P400 amplitude correlated with perception and was larger when participants were more likely to report hearing two concurrent sound objects. Together, these ERP studies revealed that both bottom-up (attention-independent) and top-down controlled processes are involved in concurrent sound perception.

In the ERP studies reviewed above, the perception of concurrent sound objects and mistuning were partly confounded, making it difficult to determine whether the ORN indexes conscious perception or simply the amount of mistuning. If the ORN indexes perception of concurrent sound objects, then it should also be present when concurrent sounds are segregated on the basis of other cues such as spatial location. McDonald and Alain (2005) examined the role of location on concurrent sound perception. Using complex harmonic tones with or without a mistuned harmonic, these authors found that the likelihood of reporting two concurrent sound objects increased when the harmonic was presented at a different location than the remaining harmonics of the complex. Interestingly, the effect of spatial location on perception of concurrent sound objects was paralleled by an ORN. The results from this study indicated that the ORN was not limited to mistuning but rather relates to the subjective experience of hearing two different sounds simultaneously. Moreover, this study showed that listeners can segregate sounds based on harmonicity or location alone and that a conjunction of harmonicity and location cues contributes to sound segregation primarily when harmonicity is ambiguous. Results from another research group also found an ORN during concurrent sound segregation with cues other than harmonicity, further supporting the interpretation that the ORN is related to conscious perception rather than stimulus processing (Johnson et al., 2003; Hautus and Johnson, 2005). However, an even stronger test of this account would be to present multistable versions of the mistuned harmonic (i.e., with an intermediate amount of mistuning) to see if the ORN is enhanced when listeners hear two objects compared to when they hear one object for the exact same stimulus.

Though the mistuned harmonic paradigm has proven helpful in identifying neural correlates of concurrent sound perception, the conclusions from these studies often rely on subjective assessment. Moreover, it is unclear whether the mechanisms involved in parsing a mistuned harmonic in an otherwise harmonic complex share similarities with those involved during the segregation and identification of over-learned stimuli such as speech sounds. In addition to data-driven processes, speech stimuli are likely to engage schema-driven processes during concurrent speech segregation and identification. To examine whether prior findings using the mistuned harmonic paradigms were generalizable to more ecologically valid stimuli, Alain et al. (2005) recorded ERPs while participants performed the double vowel task. The benefit of this task is that it provides a more direct assessment of speech separation and also evokes processes involved in acoustic identification. Here, participants were presented with a mixture of two phonetically different synthetic vowels, either with the same or different f0, and participants were required to indicate which two vowels were presented. As previously reported in the behavioral literature (e.g., Chalikia and Bregman, 1989; Assmann and Summerfield, 1990), accuracy in identifying both vowels improved by increasing the difference in the f0 between the two vowels. This improvement in performance was paralleled by an ORN that reflected the difference in f0 between the two vowels. As with the mistuned stimuli, the ORN during speech segregation was present in both attend and ignore conditions, consistent with the proposal that concurrent speech segregation may involve an attention-independent process. 
In summary, while it is not yet possible to propose a comprehensive account of how the nervous system accomplishes concurrent sound segregation, such an account will likely include multiple neuro-computational principles and multiple levels of processing in the central auditory system.

Intention

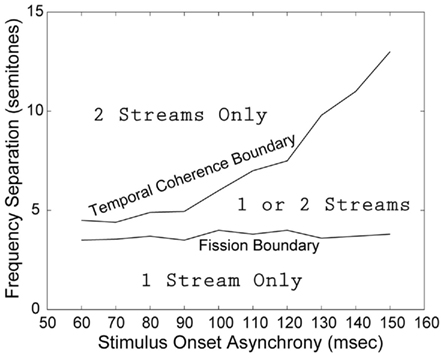

One of the first in-depth investigations of streaming provided an elegant demonstration of the large influence that manipulating an observer’s intention can have on conscious perception (Van Noorden, 1975). Participants listened to an ABA- pattern in which the A tone started out being much higher (or lower) than the B tone and increased (or decreased) in frequency after each presentation while the B tone stayed constant. This resulted in a continuously changing Δf between the A and B tones, and thus a continuously changing likelihood of hearing one or two streams. The stimulus onset asynchrony (SOA) from one tone to the next was also varied to promote auditory streaming. In addition to the changing stimulus, the participants’ intention varied as a result of the following instructions: (1) try to hear a single stream, or (2) try to hear two streams. The participants’ continuous “one stream” vs. “two streams” responses as a function of Δf and SOA provided a way to assess the limits of hearing a sequence of tones as integrated or segregated (see Figure 4). When participants were asked to hold the two stream percept, the Δf below which this was no longer possible was called the “fission boundary,” and did not vary much with SOA. In contrast, when participants were asked to hold the one stream percept, the Δf above which this was no longer possible (the “temporal coherence boundary”) varied substantially with SOA. Importantly, these two perceptual boundaries did not overlap with each other, resulting in a large number of combinations of Δf and SOA in which either percept was possible. Not only did this demonstrate the large effect intention can have on conscious perception, it also suggested other properties associated with conscious visual perception such as hysteresis and multistability (cf. Hock et al., 1993), foreshadowing more recent research to be discussed in detail below. 
Interestingly, Van Noorden used the term “attentional set” instead of “intention” to describe the manipulated variable in his study, which raises the important possibility that the effects he observed were due most directly to the scope of the listener’s selective attention, directed either to both the A and B tones or to just one of the tones. Thus, while selective attention may be a mediating mechanism for the effect of intention to hear a particular perceptual organization on perception, it might not be the only way that a listener’s intention can affect conscious perception. Given that surprisingly little research has been done since Van Noorden’s study to distinguish between effects of attention and intention, at either the behavioral or neurophysiological level, this remains a rich area to be investigated further.
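
As an illustration of the stimulus structure only, the ABA- sequence described above can be sketched as a schedule of tone onsets and frequencies. This is a minimal sketch and not Van Noorden's actual procedure: the function name `aba_schedule`, the use of semitones to express Δf, and all parameter values are assumptions made for the example.

```python
def aba_schedule(f_b_hz, start_df_semitones, step_semitones, n_triplets, soa_s):
    """Return a list of (onset_s, freq_hz, label) tuples for an ABA- sequence.

    Each cycle is A, B, A followed by a silent slot (the "-"), so one cycle
    spans four SOAs. The B tone stays constant; the A tone starts
    start_df_semitones away from B and moves by step_semitones per cycle.
    Expressing the frequency separation in semitones is an assumption of
    this sketch, not something specified in the text.
    """
    events = []
    for i in range(n_triplets):
        df = start_df_semitones + i * step_semitones  # current A-B separation
        f_a = f_b_hz * 2 ** (df / 12)                 # semitones -> Hz
        t0 = i * 4 * soa_s                            # onset of this cycle
        events.append((t0, f_a, "A"))
        events.append((t0 + soa_s, f_b_hz, "B"))
        events.append((t0 + 2 * soa_s, f_a, "A"))
        # the fourth slot (t0 + 3 * soa_s) is left silent
    return events


if __name__ == "__main__":
    # Hypothetical values: B fixed at 1000 Hz, A starting one octave above
    # and descending one semitone per cycle, 100 ms between tone onsets.
    for onset, freq, label in aba_schedule(1000.0, 12.0, -1.0, 3, 0.1):
        print(f"{onset:.2f} s  {label}  {freq:.1f} Hz")
```

Sweeping the A tone while holding the SOA fixed traces one vertical line through the Δf-by-SOA space; repeating the sweep at other SOAs and under each instruction would, in principle, map the two boundaries.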

Figure 4. A reproduction of Van Noorden’s (1975) streaming diagram, showing the combinations of frequency separation and stimulus onset asynchrony between low and high tones that lead to perception of only one stream, only two streams, or either perceptual organization.

Prior experience

One way to study higher-order cognitive processes during perception is to assess the impact of prior experience, which can inform the role of explicit and implicit memory during perception. For example, streaming studies have tested for effects of prior knowledge of stimuli as a possible mediating mechanism for a listener’s intention to hear segregated patterns in an auditory scene. In one early study, listeners were presented with two melodies at the same time, with the tones of melody A interleaved with the tones of melody B (i.e., A1, B1, A2, B2,…, where A1 is the first note of melody A). This results in a melody that is more complex than the typical ABA- pattern used for streaming experiments because the A and B tones frequently change during a trial (Dowling, 1973). When both melodies were familiar tunes, it was easier to identify them when the frequency ranges of the two melodies were greatly separated, as in standard streaming paradigms. Importantly, when the name of one of the tunes was given prior to hearing the interleaved melodies, it was easier to perceptually segregate it even when the two melodies were closer in pitch, demonstrating an effect of prior knowledge on perceptual segregation. However, knowing the name of the background melody did not help participants identify the target melody, suggesting that prior knowledge does not attenuate the distracting influence of background sounds (also, see Newman and Evers, 2007). Instead, it seems more likely that attentional focus upon expected notes in the target melody helped segregate it from the background. A later study directly tested this idea, showing that target melodies with events presented at points of high temporal expectation due to the rhythm of the A and B melodies were recognized better than melodies with events presented at points of low expectation (Dowling et al., 1987). 
This form of temporal attention is consistent with the dynamic attending theory of Jones and colleagues (Jones, 1976; Jones and Boltz, 1989; Large and Jones, 1999). A caveat to the work by Dowling on effects of familiarity is a more recent study showing that previously unfamiliar interleaved melodies were not easier to segregate when the target melody had just been presented by itself prior to the interleaved melodies (Bey and McAdams, 2002). Thus, the beneficial effects resulting from familiarity may only occur when the patterns are stored in long-term memory. Alternatively, it is possible that representations for familiar melodies are simply stronger than short-term traces for melodies that have been presented only once, regardless of the storage mechanism.

In some cases discussed thus far it is difficult to rule out attention as the most direct factor that enhances processing when manipulating familiarity of stimuli or the listener’s intention. However, it is also possible that familiarity, priming, and other memory-related factors might be able to directly influence perception through non-attention-related mechanisms. For example, adults of all ages benefit from semantic predictability of words in a sentence segregation task (Pichora-Fuller et al., 1995). Another study showed that complex stimuli that are embedded in noise became easier to segregate when they were presented repeatedly, as long as on each new presentation they were mixed with a different noise (McDermott et al., 2011). Because the noises are unlikely to be perceived as auditory objects prior to the first time they are successfully segregated, this result suggests that short-term memory traces are able to automatically facilitate segregation. Finally, studies of streaming context effects have shown that both prior stimuli and prior perception of those stimuli can have large effects on a subsequent perceptual decision, an example of implicit memories influencing perception (Snyder et al., 2008, 2009a,b; Snyder and Weintraub, 2011; for similar findings in continuity perception, see Riecke et al., 2009, 2011; for related research in speech perception, see McClelland et al., 2006). In particular, a prior ABA- pattern with a large Δf biases following patterns to be heard as one stream, a contrastive or suppressive effect; in contrast, prior perception of two streams biases subsequent patterns to be heard with the same percept, a facilitative effect. Importantly, these streaming context effects are likely to be implicit because listeners are not explicitly asked to compare prior and current patterns nor are they typically aware that the prior patterns are affecting their perception. 
Also of note is that the context effects are consistent with similar effects of prior stimuli and prior percepts observed in vision, suggesting the operation of general memory mechanisms that implicitly influence perception (Pearson and Brascamp, 2008).

One account of perception, known as reverse hierarchy theory, might help explain how high-level factors such as intention and prior experience might enhance segregation (Hochstein and Ahissar, 2002). This theory assumes that a stimulus activates the sensory system in a bottom-up manner without conscious access to each low-level representation; when the information finally reaches a high-level representation, this is accessed in the form of a gist or category related to the stimulus (also, see Oliva and Torralba, 2001; Greene and Oliva, 2009). Once this high-level activation occurs, low-level representations of the stimulus can be accessed only in specific circumstances in which a top-down path is possible. The reverse hierarchy theory is consistent with visual learning studies and a number of other visual phenomena including change blindness and illusory conjunctions (Hochstein and Ahissar, 2002). Recently, the theory was also able to predict novel findings in a word segregation task (Nahum et al., 2008). Hebrew-speaking listeners were unable to use a low-level binaural difference cue that would have aided segregation when the task was to make a semantic judgment on one of two possible Hebrew words that were phonologically similar (e.g., /tamid/ and /amid/). This was likely due to the fact that the semantic task primarily involved accessing high-level representations and the acoustically similar words were processed in highly overlapping ascending auditory pathways. Interestingly, even when the task was not inherently high-level such as in word identification, binaural cues were not used unless they were available on every trial within a block, suggesting that listeners implicitly learn over the course of a block of trials not to access low-level representations unless they are consistently useful. 
For our purposes, these results are interesting because they support a theory that might be able to explain how high-level information about stimuli and recent experience can guide the accessing of low-level cues for conscious auditory perception.

Multistability

The fact that subjective and objective measures of perception can be substantially modulated by attention and other high-level factors suggests that auditory perception is multistable like visual perception (Leopold and Logothetis, 1999; Long and Toppino, 2004; Pearson and Brascamp, 2008). However, it was not until relatively recently that a thorough quantitative comparison was made between auditory and visual multistable perception (Pressnitzer and Hupé, 2006). In this study, the authors assessed auditory streaming using ABA- patterns with an intermediate Δf presented with many more repetitions per trial than usual. The same observers were also tested on perceptual segregation of moving plaid patterns, which has been studied in detail at the psychophysical (e.g., Hupé and Rubin, 2003) and neurophysiological (e.g., Movshon et al., 1985) level. Perception of the moving plaid pattern was appropriate for this comparison with perception of ABA- patterns because the two stimuli share a number of psychophysical properties. First, they are both segregation tasks, resulting in either the perception of a single pattern or two distinct patterns. Second, in both paradigms the initial perception is of a single pattern and only after a buildup period does perception of two patterns occur. The study went further by showing that after the initial switch to perceiving two patterns, observers then showed similar stochastic switching between the two percepts in both modalities. And the initial period of perceiving one stream was longer in duration than subsequent periods of either stable percept. They also showed that it was possible to intentionally control perception but it was not possible to completely eliminate switching between percepts, consistent with the findings of Van Noorden (1975) discussed earlier. 
The finding that even for ABA- patterns with rather large or rather small Δf values (i.e., not “ambiguous”) switching between one and two streams continued to occur, despite an overall bias for one percept, emphasizes the robustness of multistability in streaming (Denham and Winkler, 2006).

Pressnitzer and Hupé (2006) further showed that despite the similar multistable perceptual phenomena in the visual and auditory paradigms, the number of switches per unit time in one modality did not predict the switching rate in the other modality, suggesting similar but functionally distinct mechanisms for controlling perception in vision and hearing. In a subsequent study, these authors further explored the mechanisms controlling multistable perception by presenting visual and auditory patterns at the same time (Hupé et al., 2008). In the first experiment, they presented ABA- and plaid patterns together and participants reported any switches observed in each modality. In the second experiment, they presented ABA- and apparent motion patterns together that were spatially and temporally coupled with each other, in order to increase the likelihood of cross-modal interactions in perception. The results showed that a switch in one modality did increase the likelihood of switching in the other modality, that the likelihood of perceiving the same percept in the two modalities was higher than expected based on chance, and these two effects were largest for the experiment using cross-modally coupled patterns. Thus, while there is likely to be interaction between the two modalities in controlling perception, this latter finding suggested that there is not a supramodal mechanism that controls perception in both modalities; rather, perceptual mechanisms in vision and hearing may interact depending on how likely signals in the two modalities are coming from the same physical objects in the environment. This conclusion is consistent with a study showing that intentional control over perceptual interpretations is strongly enhanced when stimuli are cross-modally consistent with each other (van Ee et al., 2009).

Neurophysiological studies also support the idea that perception may be determined primarily within modality-specific brain areas. In vision, the majority of findings show robust correlates in areas that are thought to be primarily dedicated to visual processing (Leopold and Logothetis, 1999; Tong et al., 2006). In hearing, although there are only a few studies on neural correlates of multistable perception, the findings also suggest the involvement of auditory-specific processes. However, it is important to be cautious in interpreting the precise role of brain areas measured in neurophysiological studies because of the correlational nature of the data.

In a streaming study measuring neuromagnetic brain activity signals from the superior temporal plane, small modulations in sensory-evoked response amplitude were observed depending on whether listeners were hearing two streams. These perception-related modulations occurred in similar components as those that were modulated by increased Δf, but they were smaller in amplitude (Gutschalk et al., 2005). Intracranial ERPs from several lateral superior temporal lobe locations measured during neurosurgery in epilepsy patients also showed some dependence on perception, but these were also much less robust compared to Δf-dependent modulations (Dykstra et al., 2011). In a functional magnetic resonance imaging (fMRI) study, listeners showed more activity in auditory cortex when hearing two streams as opposed to one stream (Hill et al., 2011). In another fMRI study, which examined neural correlates of switching between one- and two-stream percepts, switching-related activations were observed in non-primary auditory cortex as well as the auditory thalamus in a manner that suggested the importance of thalamo-cortical interactions in determining perception (Kondo and Kashino, 2009). In an fMRI study on streaming using inter-aural time difference as the cue to segregating A and B tones, switching-related activity in the auditory cortex was again found, in addition to activity in the inferior colliculus, which is an important brainstem area for processing binaural information (Schadwinkel and Gutschalk, 2011). Future studies should directly compare the effect of perceiving one vs. two streams and the effect of switching between perceiving one and two streams; without such a direct comparison using the same participants and similar stimuli, it is difficult to determine whether similar brain circuits are implicated in these possibly distinct processes.

Exceptions to evidence for modality-specific auditory perception mechanisms are fMRI studies showing enhanced activity while perceiving two streams compared to perceiving one stream in the intraparietal sulcus, an area that is thought to also be involved in visual perceptual organization and attention shifting (Cusack, 2005; Hill et al., 2011). Interestingly, increasing the spectral coherence of complex acoustic stimuli in such a way that increases perceptual segregation also modulated the fMRI signals in intraparietal sulcus, in addition to the superior temporal sulcus, a higher-order auditory processing area (Teki et al., 2011). However, these brain modulations were observed while participants were not making perceptual judgments, so the extent to which they reflect perceptual processing, as opposed to automatic stimulus processing, is unclear. At this point it is difficult to conclusively state which of the brain areas found to correlate with perception in these studies are most likely to be important for determining perception because of the different stimuli and tasks used. But these studies have provided a number of candidate areas that should be studied in future neurophysiological studies, as well as studies that assess the consequences of disrupted processing in the candidate areas.

Although other ASA tasks (e.g., mistuned harmonic segregation) have not been studied as thoroughly for signs of multistable perception observed in streaming, it stands to reason that they would show some of the same phenomena and could be useful in determining the generality of the streaming findings. For example, a multistable speech perception phenomenon is verbal transformation in which repeated presentation of a word results in the perceived word changing to another word, often with many different interpretations during a single trial (e.g., the four-phoneme stimulus TRESS being heard as the following sequence of words “stress, dress, stress, dress, Jewish, Joyce, dress, Jewess, Jewish, dress, floris, florist, Joyce, dress, stress, dress, purse”; Warren, 1968). Ditzinger and colleagues showed that rather than randomly changing between all the possible alternatives, pairs of alternatives tended to alternate with each other, suggesting that the principles underlying the phenomenon are more similar to other multistable phenomena (Ditzinger et al., 1997b; Tuller et al., 1997). Indeed, a dynamic systems model that was similar to a model of multistable visual perception was able to reproduce the time course of verbal transformations (Ditzinger et al., 1997a).

A more recent study took a different theoretical approach to verbal transformations by trying to explain them in terms of auditory streaming and grouping mechanisms (Pitt and Shoaf, 2002). Listeners were presented with three-phoneme (consonant–vowel–consonant) pseudowords and reported instances of hearing transformations in addition to instances of hearing more than one stream of sounds. A large majority of the transformations reported were accompanied by hearing more than one stream of sounds, suggesting that part of the original pseudoword was segregated from the remainder, changing how the remainder sounded. Changes in perception also occurred for sine-wave speech that was repeated, with transformations occurring after more stimulus repetitions when perceived as speech rather than as tones, suggesting an influence of top-down knowledge on stabilizing perception, consistent with evidence from streaming paradigms discussed above. Behavioral evidence that overt and covert speech production constrains perception of verbal transformations (Sato et al., 2006) further implicates speech-specific (e.g., articulatory) mechanisms as being important for generating verbal transformations, as does neurophysiological activity in left inferior frontal speech areas associated with transformations (Sato et al., 2004; Kondo and Kashino, 2007; Basirat et al., 2008).

In addition to speech perception paradigms, signs of multistable perception have also been observed in a variety of musical tasks (e.g., Deutsch, 1997; Toiviainen and Snyder, 2003; Repp, 2007; Iversen et al., 2009). Additional research on musical multistability would be especially interesting in light of evidence suggesting distinct mechanisms for resolving ambiguous stimuli in vision vs. hearing and speech-specific mechanisms in verbal transformations. For instance, it would be important to determine whether different types of ambiguous auditory stimuli (e.g., speech vs. music) are resolved in distinct neural circuits. This would suggest that multistability is controlled not by centralized mechanisms in only a few brain areas but rather by the normal dynamics that are available throughout the cerebral cortex or other brain areas.

From Sounds to Conscious Percepts, or Not

While the research described above demonstrates the promise of using segregation paradigms to understand the role of high-level factors in resolving ambiguous stimuli, another important topic is to understand why some auditory stimuli fail to become accessible to awareness in the first place. Fortunately, researchers have developed a number of clever techniques, often inspired by similar research in vision, to manipulate whether an auditory event is made consciously accessible to observers. Such techniques are critical to understand the mechanisms underlying stimulus awareness, and also evaluating the influence of being aware of a stimulus on processing subsequent stimuli, separate from the influence of other factors such as attention (Koch and Tsuchiya, 2007).

Energy Trading

Traditional ASA theory (Bregman, 1990) makes a common, but perhaps erroneous, assumption of the existence of energy trading. According to the energy trading hypothesis, if one auditory component contributes to two objects simultaneously, then the total energy in that component should be split between the two objects so that the sum of the amount of energy the component contributes to each object equals the total amount of energy in the component. Research on this topic provides important insights about how low-level sound components contribute to perception of auditory objects and streams. However, the object representations in a scene do not always split the total amount of energy available in a zero-sum fashion (Shinn-Cunningham et al., 2007). In this study, a pure-tone target was used that could be perceptually grouped with either a rhythmic sequence of pure tones of the same frequency (tone sequence) or with concurrent pure tones of different frequencies (a vowel). If the target was incorporated into the vowel, the category of the vowel would change from /I/ to /ε/, and if the target was incorporated into the sequence, its rhythm would change from “galloping” to “even.” The tone sequence, vowel, and target were presented together with varying spatial configurations. The target could be presented at the same spatial location as the vowel (or tone sequence) to increase the probability of perceptual grouping, or the target could be presented at a different spatial location. The authors conducted trials in which listeners attended to the vowel while ignoring the tone sequence or vice versa.
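
The zero-sum constraint described above can be written compactly. The notation here is introduced only for illustration and is not taken from the cited studies: $E_c$ is the total energy in a shared component, and $E_{c \to 1}$ and $E_{c \to 2}$ are the portions of that energy perceptually attributed to the two objects.

```latex
% Energy trading hypothesis (illustrative notation, assumed for this sketch):
% the energy a shared component contributes to two objects sums to its total.
E_{c \to 1} + E_{c \to 2} = E_c, \qquad E_{c \to 1} \ge 0, \quad E_{c \to 2} \ge 0
```

Under this formulation, any case in which the two perceived contributions do not sum to $E_c$ counts as a departure from energy trading.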

They found that in the attend-tone block, listeners heard the target as contributing to the tone sequence in all spatial configurations, except when the target was presented at the same location as the vowel. Oddly, in the attend-vowel block, when the feature was presented at the same spatial location as the vowel, the feature did not group with the vowel – the vowel was perceived as /I/. Because the target did not contribute to either percept (the tone sequence or the vowel), it was as if the target tone disappeared from the mixture. This curious case of a feature disappearing suggests that energy trading does not always hold between objects in scenes and that there can be sounds in a scene that do not reach conscious perception even though they are otherwise audible. Shinn-Cunningham et al. (2007) further suggest that listeners require more evidence to allocate an auditory component to a sound in a perceptual figure than to reject it to the auditory ground. It should be noted that in two other studies (Shinn-Cunningham et al., 2008; Shinn-Cunningham and Schwartz, 2010), the same researchers used a simultaneous tone complex rather than a vowel as a competing sound with the tone sequence, and found results that were more consistent with energy trading (see also Leung et al., 2011). However, these two studies also used a richer harmonic target sound, which changed the perceived pitch of the tone complex when the target was integrated.

Another line of research that is problematic for the energy trading hypothesis is the well-established finding of duplex perception: an auditory component can contribute to two sounds at the same time (Rand, 1974; Fowler and Rosenblum, 1990). Duplex perception was first demonstrated by Rand (1974). In this study, the second and third formant transitions from a syllable, e.g., “da,” were presented to one ear while the rest of the syllable (i.e., the first formant and the remaining second and third formants) was presented to the other ear. This stimulus generated two simultaneous percepts: listeners reported hearing a fully intact syllable in one ear and a non-speech chirp-like sound in the other ear. The identity (“da” vs. “ga”) of the syllable was determined by the third formant transition. Even though the critical feature for identification of the syllable was presented at a separate spatial location from the rest of the syllable, the feature was integrated with the other components to create a coherent, identifiable percept (while at the same time creating the separate percept of a chirp).

Duplex perception has been found to be surprisingly resistant to a variety of other manipulations of the third formant transition, such as SOA (e.g., Bentin and Mann, 1990; Nygaard and Eimas, 1990; Nygaard, 1993), amplitude differences (Cutting, 1976; Whalen and Liberman, 1987; Bentin and Mann, 1990), f0 (Cutting, 1976), and periodicity differences (Repp and Bentin, 1984). The effect is so strong that it has even been found to occur when the isolated formant transition is not necessary to form a coherent percept (Nygaard and Eimas, 1990). Duplex perception phenomena are not limited to speech objects. For example, when two simultaneous piano notes are presented to one ear while a single note is presented simultaneously to the other ear, the resulting percept is of both the single tone and a fused chord (Pastore et al., 1983). Duplex perception also has been demonstrated with environmental sounds (see Fowler and Rosenblum, 1990).

In summary, it is necessary to either modify ASA theory (Bregman, 1990) or to look beyond it for an explanation of the non-veridical perceptual organization of auditory scenes. Collectively, the findings of duplex perception and the recent case of feature non-allocation contradict the energy trading hypothesis and call into question the amount of low-level detail we are aware of in our acoustic environment (cf. Nahum et al., 2008). Future research on energy trading using denser and more naturalistic auditory scenes is needed to provide a more complete picture of how ASA is accomplished to generate our conscious perception of auditory objects and streams.

Change Deafness

Change deafness is the surprising failure to notice striking changes to auditory scenes. An analogous phenomenon has been extensively studied in the visual domain, where it is referred to as change blindness (for reviews, see Rensink, 2002; Simons and Rensink, 2005). A related auditory phenomenon was actually demonstrated as early as the work of Cherry (1953), who showed that changes to an unattended stream of auditory input (such as a change of the speaker’s identity) are often missed while shadowing a spoken message presented to an attended stream of auditory input (Vitevitch, 2003; Sinnett et al., 2006). Studies using the one-shot technique, in which presentation of a scene is followed by an interruption and then either the same or a modified scene, have been the most common way of examining change deafness. Listeners were found to often miss changes to environmental objects, such as a dog barking changing to a piano tune (e.g., Eramudugolla et al., 2005; Gregg and Samuel, 2008, 2009). It is important to note that change deafness occurs even though scene sizes are typically quite small: ∼45% change deafness occurred in Gregg and Samuel (2008) with just four objects per scene. An understanding of the mechanisms underlying change deafness has the potential to inform several issues in auditory perception, such as the completeness of our representation of the auditory world, the limitations of the auditory perceptual system, and how auditory perception may limit auditory memory for objects (for a review, see Snyder and Gregg, 2011). Change deafness might also be useful for studying unconscious processing of changes, as well as the mechanisms that enable changes to reach awareness.

One study has shown that change deafness is reduced with directed attention to the changing object (Eramudugolla et al., 2005). In this study, a 5-s scene was presented, followed by a burst of white noise, and then another 5-s scene that was either the same or different. On Different trials, either an object from Scene 1 was deleted in Scene 2 or two objects switched spatial locations from Scene 1 to Scene 2. The experimental task was to report whether the two scenes were the “Same” or “Different,” and substantial change deafness was found when not attending to the to-be-changed object. However, when attention was directed to the to-be-changed object via a verbal cue, change detection performance was nearly perfect. One problem with this study, however, is that attention cues were always valid. As a result, participants could have listened for the cued sound in Scene 2, rather than actually comparing the two scenes. An interesting question to address in future research is what aspects of auditory objects must be attended to enhance performance.

Failures to detect changes may not necessarily reflect a failure to encode objects in scenes. Gregg and Samuel (2008) presented an auditory scene, followed by a burst of noise, and then another scene that was either the same as or different from the first scene. Participants performed a change detection task, followed by an object-encoding task, in which they indicated which of two objects they had heard in one of the two scenes. Gregg and Samuel found that object-encoding had a lower error rate than change detection (28 vs. 53%). This study also found that the acoustics of a scene were a critical determinant of change deafness: performance improved when the object that changed was more acoustically distinct from the sound it replaced. But the acoustic manipulation had no effect on object-encoding performance, even though it resulted in more spectral differences within one of the scenes. Gregg and Samuel suggested that successful change detection may not be based on object identification, as is traditionally assumed to underlie visual scene perception (e.g., Biederman, 1987; Edelman, 1998; Ullman, 2007), but is instead accomplished by comparing global acoustic representations of the scenes.

Recently, however, McAnally et al. (2010) distinguished between object-encoding on detected and not detected change trials and found that performance in identifying which object was deleted was near ceiling when changes were detected but at chance when changes were not detected. This finding suggests that changes may only be detected if objects are well encoded, contrary to the findings of Gregg and Samuel (2008). However, it should be noted that the extent of change deafness that occurred in McAnally et al. (2010) was quite modest. They obtained 15% change deafness for scene sizes of four objects, whereas Gregg and Samuel obtained 45% change deafness for scene sizes of four objects. One potential reason for the discrepancy across studies may be that the task in McAnally et al. (2010) did not elicit much change deafness. In their study, a changed scene consisted of an object that was missing, rather than an object replaced by a different object as in Gregg and Samuel. Despite the task differences, the results of McAnally et al. (2010) do question the extent to which objects are encoded during change deafness, and this is an issue that warrants further investigation.
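The logic of this conditional analysis can be sketched in a few lines of Python: object-encoding accuracy is computed separately for change trials on which the change was detected and for those on which it was missed. The trial records and field names below are hypothetical and serve only to illustrate the computation; they are not data from either study.

```python
def encoding_accuracy_by_detection(trials):
    """Split object-encoding accuracy by whether the change was detected.

    Each trial is a dict with two boolean fields (hypothetical names):
    'detected' (the change was reported) and 'encoded' (the object was
    correctly identified). Returns accuracy per trial type, or None when
    a trial type has no trials.
    """
    counts = {"detected": [0, 0], "missed": [0, 0]}  # [correct, total]
    for trial in trials:
        key = "detected" if trial["detected"] else "missed"
        counts[key][0] += int(trial["encoded"])
        counts[key][1] += 1
    return {k: (c / n if n else None) for k, (c, n) in counts.items()}


# Toy change trials, invented for illustration only.
toy_trials = [
    {"detected": True, "encoded": True},
    {"detected": True, "encoded": True},
    {"detected": False, "encoded": True},
    {"detected": False, "encoded": False},
]
result = encoding_accuracy_by_detection(toy_trials)
```

In this toy example, a single encoding accuracy pooled over all four trials would not reveal that accuracy differs between detected and missed changes, which is the kind of dependence the conditional analysis is designed to expose.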

One major issue in the change deafness research is the question of whether change deafness actually reflects verbal or semantic processing limitations, rather than a sensory-level process. Gregg and Samuel (2009) have shown that abstract identity information seems to be encoded preferentially compared to intricate physical detail. In this experiment, within-category changes (e.g., a large dog barking changing to a small dog barking) were missed more often than between-category changes (e.g., a large dog barking changing to a piano tune). It is important to note that this result occurred even though acoustic distance for within- and between-category changes was controlled. In fact, the finding that within-category changes elicited more change deafness was so robust that it occurred even when the within-category changes were acoustically advantaged compared to between-category changes. Gregg and Samuel did not address the specific nature of the high-level representation being used; it is possible that subjects may have been forming a mental list of verbal labels for all of the objects in the pre-change scene, as has been suggested (Demany et al., 2008). Alternatively, higher-order representations might be activated that reflect the semantic similarity between objects within and between categories.

In summary, change deafness is a relatively new and intriguing line of research. Future research is needed to resolve theoretical issues about why failures to detect auditory changes occur. For example, it remains unclear to what extent sensory, attention, memory, or comparison processes are responsible for failures to detect changes, and how the interaction of these processes contributes to change deafness.

Masking

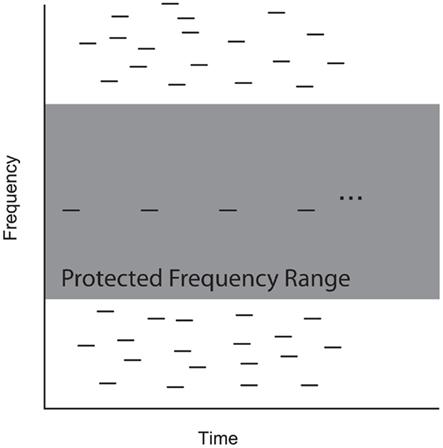

Masking of a target stimulus by another stimulus presented around the same time has been used extensively to study low-level mechanisms of auditory processing. Typically, masking has been observed most strongly when the target and masking stimuli are similar in acoustic features such as frequency, which can be attributed to interference in early frequency-specific stages of processing (e.g., Moore, 1978). This form of masking is referred to as “energetic masking,” in contrast to “informational masking,” which is assumed to occur when sounds do not have acoustic overlap. Rather, informational masking is assumed to take place at later anatomical sites in the auditory system and to result from a variety of higher-level factors including perceptual grouping and attention (Durlach et al., 2003a; Kidd et al., 2007; Shinn-Cunningham, 2008). The notion of informational masking has generated interesting research that can inform perceptual mechanisms relevant to the current discussion. In particular, a variant of the multi-tone masker paradigm (see Figure 5) bears some similarity to streaming paradigms in its use of repeating pure tones (Neff and Green, 1987). An important difference, however, is the fact that the task typically used in informational masking experiments is to detect whether a fixed-frequency tone is present or absent in a scene along with numerous other masking tones of different frequencies. Peripheral masking can be prevented by not presenting any of the masking tones within a critical band around the target tone.

Figure 5. In informational masking experiments, presenting a series of fixed-frequency target tones in the midst of a multi-tone masker stimulus can prevent awareness of the target, even when the masker tones are prevented from overlapping in frequency with the target by using a protected frequency range.
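The protected-region constraint can be made concrete with a short sketch. The Python function below draws masker-tone frequencies at random on a logarithmic frequency axis and rejects any that fall inside a protected band centered on the target. All parameter values (target frequency, frequency range, number of masker tones, and a protected band of one third of an octave on each side of the target) are illustrative assumptions, not values taken from the studies cited; actual experiments typically define the protected band in terms of the critical bandwidth.

```python
import math
import random


def draw_masker_frequencies(target_hz, n_tones, lo_hz=200.0, hi_hz=5000.0,
                            protected_octaves=1.0 / 3.0, rng=None):
    """Return n_tones masker frequencies (Hz) outside a protected band.

    The protected band spans protected_octaves below and above target_hz.
    All default values are hypothetical, chosen only for illustration.
    """
    if rng is None:
        rng = random.Random()
    band_lo = target_hz * 2.0 ** (-protected_octaves)
    band_hi = target_hz * 2.0 ** protected_octaves
    if band_lo <= lo_hz and band_hi >= hi_hz:
        raise ValueError("protected band covers the whole frequency range")
    freqs = []
    while len(freqs) < n_tones:
        # Sample uniformly on a log-frequency axis.
        f = math.exp(rng.uniform(math.log(lo_hz), math.log(hi_hz)))
        # Reject tones that would fall inside the protected band.
        if band_lo <= f <= band_hi:
            continue
        freqs.append(f)
    return sorted(freqs)


# One random masker for a 1000-Hz target (seeded for reproducibility).
maskers = draw_masker_frequencies(target_hz=1000.0, n_tones=8,
                                  rng=random.Random(0))
```

Redrawing the masker frequencies on every trial or burst, while the target frequency stays fixed, would mimic the uncertainty that is thought to give rise to informational rather than energetic masking.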

Several results in the literature have demonstrated interesting similarities between factors that cause streaming and factors that cause release from informational masking. In particular, faster presentation rate, greater target-mask dissimilarity, and cueing the location of the target all facilitate release from masking (Kidd et al., 1994, 2003, 2005; Durlach et al., 2003b; Micheyl et al., 2007b). Similarities may also exist at the neural level: in one study a long-latency response from secondary auditory cortex occurred in response to target tones in a multi-tone masker, but only when participants detected them; remarkably, when the tones were not detected all long-latency brain responses were conspicuously absent (Gutschalk et al., 2008). The response was referred to as an awareness-related negativity (ARN) and was later in latency than (but had similar scalp distribution to) the well-studied N1 response (Näätänen and Picton, 1987), which is consistent with the involvement of negative long-latency responses in streaming and concurrent sound segregation (e.g., Alain et al., 2001; Gutschalk et al., 2005; Snyder et al., 2006). Activity from primary auditory cortex was present regardless of whether the target was detected, strongly suggesting that neural activity must reach beyond primary auditory cortex in order to generate perception. The results were also consistent with the reduction in the N1 observed when sounds are ignored and during sleep (Crowley and Colrain, 2004). The N1 is thought to be an obligatory stimulus-driven response, but if the ARN were related to the N1 (as was suggested by similar source locations), this study would be the first to demonstrate that the N1 generators require participants to be aware of a stimulus to be activated. However, some caution is warranted because the ARN was found to have a longer latency than is typical of the N1, and could therefore be more related to a later negative wave (Nd), which is linked to selective attention (Hansen and Hillyard, 1980). This raises the possibility that the ARN could simply be an index of fluctuations in attention, rather than a direct correlate of awareness.

These results are interesting to compare with findings from a single patient with bilateral superior temporal auditory cortex lesions due to stroke, who performed well on sound detection tasks as long as attention was paid to the tasks (Engelien et al., 2000). However, it is not totally clear what the exact experience of this patient was. In particular, the patient may have had normal conscious experience of detecting sounds as long as enough attention was used; alternatively, the patient may have had little conscious experience of the sounds that he was nevertheless able to reliably detect, in an analogous fashion to patients with blindsight as a result of visual cortex damage (e.g., Stoerig and Cowey, 1997). The same patient showed activation during attention to auditory tasks in a number of brain areas, measured by positron emission tomography, such as in the prefrontal and middle temporal cortices, caudate nucleus, putamen, thalamus, and the cerebellum. Thus, detection of sounds (whether accompanied by conscious experience of the sound or not) may be possible by activating non-auditory brain areas, raising the question of the extent to which superior temporal auditory cortex is necessary or sufficient for awareness to occur. For example, it is possible that the ARN found by Gutschalk et al. (2008) is the result of input from higher-level brain areas that are responsible for generating awareness. Recently, evidence in support of the importance of feedback for generating awareness was found by recording electrophysiological responses in patients in a vegetative state, who compared to controls showed a lack of functional connectivity from frontal to temporal cortex during processing of changes in pure-tone frequency (Boly et al., 2011; for evidence from the visual domain supporting the importance of top-down feedback for perceptual awareness in fully awake, non-brain-damaged individuals, see Pascual-Leone and Walsh, 2001; Wibral et al., 2009).

Subliminal Speech

Recently, researchers have made speech inaudible to determine the extent of auditory priming that can occur without awareness of the priming stimulus. This is one of the few examples of research that has addressed the necessity or sufficiency of auditory awareness for prior stimuli to influence later processing. In one study, priming words were made inaudible by attenuation, time-compression, and masking with time reversals of other time-compressed words immediately before and after the priming words (Kouider and Dupoux, 2005). Compressing words so they were as short as 35 or 40% of their original duration led to very little awareness of the primes as measured on independent tests in which participants had to decide whether the masked sound was a word vs. non-word or a word vs. reversed word. The test word, which was not attenuated or compressed, was played immediately after the priming word (and simultaneously with the post-priming mask). Non-word pairs were also used that were the same or acoustically similar. Based on the speed with which participants made word vs. non-word decisions about the target, this study showed that repetition of the same word caused priming (i.e., faster responses compared to unrelated prime–target pairs) at all time compressions, including ones that made the prime inaudible (35 and 40%), although the priming effect was larger for audible primes (50 and 70%). Priming also occurred when the prime and target were the same words spoken by different-gender voices, even for the 35% compression level, suggesting that subliminal priming can occur at the abstract word level, independent of the exact acoustics of the sound. Priming effects did not occur for non-words or for semantically related (but acoustically different) words at the subliminal compression levels, suggesting that semantic processing may require conscious perception of words.
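As a minimal sketch of how such a priming effect is quantified, the function below takes lexical-decision response times for unrelated and repeated prime–target pairs and returns the difference of their means, so that a positive value indicates faster responses after a repeated prime. The function name and the response times are hypothetical and are not taken from Kouider and Dupoux (2005).

```python
from statistics import mean


def priming_effect_ms(rt_unrelated_ms, rt_repeated_ms):
    """Mean RT for unrelated pairs minus mean RT for repeated pairs (ms)."""
    if not rt_unrelated_ms or not rt_repeated_ms:
        raise ValueError("both conditions need at least one response time")
    return mean(rt_unrelated_ms) - mean(rt_repeated_ms)


# Invented response times (ms), for illustration only.
effect = priming_effect_ms([650, 700, 690], [640, 660, 650])
```

Computing this difference separately at each compression level would allow the size of the effect to be compared between inaudible and audible primes.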

A second study used primes that were compressed to 35% of their original duration, but this time the researchers made the prime audible on some trials by presenting it with a different inter-aural time difference compared to the masking sounds (Dupoux et al., 2008). Again, priming occurred for masked words but not for masked non-words; priming occurred for both words and non-words when unmasked; and priming was larger for unmasked compared to masked sounds. Additionally, priming did not decline with longer prime–target delays for unmasked words, but the effect declined rapidly for masked sounds over the course of 1000 ms, suggesting a qualitatively different type of robust memory storage for audible sounds.

The basic masked priming effect was recently confirmed by a separate group, who additionally showed that priming occurs mainly for targets with few phonological neighbors (Davis et al., 2010). But a recent study found semantic priming using auditory prime–target word pairs (Daltrozzo et al., 2011), in contrast to the study by Kouider and Dupoux (2005). However, the more recent study made the primes impossible to categorize by presenting them at very low intensity, rather than by time-compression and masking, which could account for the discrepant findings.

Kouider et al. (2010) recently performed an fMRI study using their masking paradigm. They showed priming-related suppression of activity, which may prevent processing of stimuli that have already been presented (Schacter et al., 2004). Decrease in activity was found in the left superior temporal auditory cortex (including Heschl’s gyrus and planum temporale) for within-gender word pairs and cross-gender word pairs, and in the right insula for within-gender word pairs. For non-words, a different pattern of activity decrease was found in the frontal lobe and caudate nucleus, in addition to response enhancement in the superior temporal cortex. The function of the brain changes should be interpreted cautiously, however, because the magnitudes of activity decrease did not correlate with the magnitudes of behavioral priming. Nevertheless, the results do show that information about unconscious auditory stimuli can reach fairly high levels of processing, with the particular brain areas involved being dependent on the familiarity or meaningfulness of the stimuli.

Speech-priming results are also interesting to compare with a recent study that used fMRI to measure acoustic sentence processing in individuals who were fully awake, lightly sedated, or deeply sedated (Davis et al., 2007). Neural activity in temporal and frontal speech-processing areas continued to differentiate sentences from matched noise stimuli in light sedation and superior temporal responses continued in deep sedation. In contrast, neural activity did not distinguish sentences with vs. without semantically ambiguous words, consistent with the lack of semantic priming observed by Kouider and Dupoux (2005) but inconsistent with the study by Daltrozzo et al. (2011).

Auditory Attentional Blink

Attentional blink (AB) refers to a phenomenon in which the correct identification of a first target (T1) impairs the processing of a second target (T2) when it is presented within several hundred milliseconds after T1 (e.g., Broadbent and Broadbent, 1987; Raymond et al., 1992; Chun and Potter, 1995). Although the AB has been studied primarily in the visual modality, there is some evidence to suggest that AB also occurs in the auditory modality (e.g., Duncan et al., 1997; Soto-Faraco et al., 2002; Tremblay et al., 2005; Vachon and Tremblay, 2005; Shen and Mondor, 2006).