- Department of Philosophy, Université Lille-Nord de France, UMR 8163, Lille, France

Motivationally unconscious (M-unconscious) states are unconscious states that can directly motivate a subject’s behavior and whose unconscious character typically results from a form of repression. The basic argument for M-unconscious states claims that they provide the best explanation for some seemingly non-rational behaviors, like akrasia, impulsivity, or apparent self-deception. This basic argument has been challenged on theoretical, empirical, and conceptual grounds. Drawing on recent work on apparent self-deception and on the “cognitive unconscious,” I assess those objections. I argue that (i) there is a good theoretical argument for the existence of the M-unconscious, but (ii) most empirical vindications of the M-unconscious miss their target, and (iii) the conceptual objections compel us to modify the classical picture of the M-unconscious. I conclude that M-unconscious states and processes must be affective states and processes that the subject really feels and experiences – and which are in this sense conscious – even though they are not, or not well, cognitively accessible to him. Dual-process psychology and the literature on cold–hot empathy gaps partly support the existence of such M-unconscious states.

It is usually easy to explain someone’s behavior. We can often read the motives that explain his actions directly on his moves and expressions. When we cannot, it is always possible to ask the person the reason for his actions. I would normally explain why John pulled the trigger of the gun while pointing it at his wife by citing his desire to get rid of her and his belief that this is an excellent way of doing just that. If I had some doubts, I could ask John. He might tell me that he’s no murderer and he just wanted to wound her. I might accordingly revise my explanation. Provided that John is “in good faith,” I should end up with a pretty good explanation. This explanation will have the following form: a motive for an action A – roughly1 a desire for a given outcome and the belief that A is the best way to achieve this outcome – explains the action A. There are much tougher cases though, in which this method will not be of much help, even if the subject whose behavior we are trying to interpret can answer all our questions, and even if he is perfectly honest.

Akrasia and Impulsivity

The cases I am thinking of have bewildered philosophers ever since Plato. They involve actions that do not seem rational. Consider, for example, weakness of the will, or akrasia. Someone who is akratic seems to be acting against his preferences. A typical example involves someone, say Paul, who sees someone else’s wallet on the ground, who knows he should not take it, wants to be honest “and everything”, prefers, all things considered, not to take it… and yet steals the wallet. Paul “sees the better and approves it, but he follows the worse”. Notice that Paul might be, but need not be, acting impulsively. He might just as well pursue the worse, so to speak, “in cold blood”. In any case, he will in good faith deny having the preference that would explain his behavior, so it seems reasonable not to attribute this preference to him. But how, then, should we explain his misdemeanor? (Compare “John chose A over B even though he judged A to be more expensive/less pretty…” with “John chose A over B even though he judged that, all things considered, it was better to choose B”.)

Apparent Self-Deception

Consider also cases of “self-deception”. A victim of self-deception seems to be deceiving himself into believing something false. He believes something against the evidence, and he seems to do so intentionally. For example, Mary, who was recently diagnosed with cancer, claims that the diagnosis is mistaken, and she really seems to believe it, but she avoids her physicians as well as conversations on medical topics. She seems somehow to be lying to herself about her disease. “Self-deception” names both the phenomenon (“someone seems to be deceiving herself”) and its natural explanation (“this person actually deceives herself”). We shall see that many reject this natural explanation, so it will be useful to have two different names here. We will call the phenomenon apparent self-deception, and its natural explanation self-deception. In any case, being “apparently self-deceived”, Mary will in good faith deny having the intention to blind herself, so it will be difficult to explain why she does not acknowledge her disease and why she avoids doctors.

The Motivational Unconscious (M-unconscious)

Because the motives that would most naturally explain the mysterious actions of Mary and Paul are not acknowledged by them, it might be tempting, in both cases, to claim that they have unconscious motives. There is an additional, and so to speak logical, reason to claim that there must be unconscious motives in the case of apparent self-deception. If Mary really deceived herself into falsely believing that she is perfectly healthy, as it seems she did, it would be paradoxical to suppose that her motive (her belief that she is sick and her desire to deceive herself into believing that she is not) was conscious. Indeed, we cannot be deceived by someone when we are aware of his or her desire to deceive us, or even when we are aware that what he or she says is false. The only way to solve this paradox, it might be claimed, and to make sense of the possibility of deceiving oneself, is to posit unconscious motives. If Mary only unconsciously knows that she is ill, and if she only unconsciously desires to believe that she is not ill, then she might actually deceive herself. Notice that this last argument does not rely on the claims that Mary is in good faith when she speaks about her motives and that conscious motives are always available to introspection. It only relies on the claim that she is really deceiving herself, as she seems to be, and that this logically implies unconscious motives. On that account, apparent self-deception would result from self-deception, and self-deception would not only prevent the subject from being conscious of some things; it would manage to do so because it is motivated by unconscious beliefs and desires. Apparent self-deception, that is, would be a form of repression.

We can call motivational unconscious (M-unconscious) the kind of unconscious posited by those explanations. The M-unconscious is defined by the following three constraints:

• I. It is a form of unconscious: M-unconscious states are unconscious.

• II. It is a motivating unconscious: M-unconscious states can be motives for actions.

• III. It is a motivated unconscious: the unconscious character of M-unconscious states typically results from a form of repression.

The Basic Theoretical Argument for the M-unconscious

The basic argument for the M-unconscious is the one I just gave. It argues for the existence of M-unconscious states on the grounds that they provide the best explanation of some seemingly non-rational behaviors like self-deception. This is, roughly, Freud’s first argument for the unconscious in his 1915 paper “The Unconscious” (Freud, 1974, pp. 2991–3025). Actually, even before he used the notion of the unconscious, Freud had started to interpret phobias, obsessive-compulsive disorders, and conversion disorders in terms of self-deception, as “neuro-psychoses of defense” (Freud, 1974, pp. 301–313). He then explained self-deception in terms of unconscious mental states and argued that M-unconscious states also manifest themselves in other, benign, phenomena such as dreams and slips (lapsus).

It should be noted that even though Freud is probably one of the greatest theorists of such an M-unconscious, he is neither the first nor the last. Nussbaum (1994, p. 133) convincingly argues that Lucretius, and probably Epicurus, appealed to an M-unconscious fear of death in order to explain some of our irrational behaviors. In the same vein, some contemporary psychologists have also postulated M-unconscious states quite unlike those referred to by Freud (e.g., Yalom, 1980). The notion of M-unconscious is thus wider than that of the “Freudian unconscious”.

The recent fate of this notion, however, was shaped by that of Freud’s legacy. The M-unconscious endured attacks on at least four partially distinct fronts in the last century.

Theoretical Objections

On the theoretical side, it was argued that the basic argument for the M-unconscious is not sound. It is an abductive argument: an argument “to the best explanation”. It argues for the existence of M-unconscious states on the ground that they provide the best explanation of some of our behaviors. In order for such an argument to be conclusive though, it is not enough to claim that there are real cases of akrasia and apparent self-deception. It must be shown that the rival explanations of the same phenomena are not better. As we shall see, Jaspers (1997) already disputed that claim, and many philosophers have recently put forward very neat accounts of apparent self-deception which do not appeal to unconscious states.

Empirical (and Epistemological) Objections

The basic argument is also a theoretical argument: it relies on folk-psychological observations, clinical reports, and individual narratives rather than on controlled empirical experiments. It is moreover dubious that M-unconscious states have been directly observed. Some have argued, in the wake of the logical positivists, that the reality of apparent self-deception and of the M-unconscious should accordingly not be granted. It has even been argued not only that the M-unconscious had failed to be empirically ascertained, but also that it was bound to fail, for principled reasons. I will not, however, consider those well-known epistemological objections here.

Conceptual Objections

Finally, it has been claimed that the very concept of M-unconscious is inconsistent or at least very problematic and that it should be reformed, if not eliminated (Sartre, 1976; Wittgenstein, 1982).

Outline

Those attacks probably contributed to the withdrawal of the M-unconscious from scientific psychology. Studies of the M-unconscious were replaced by experiments focused on the “cognitive unconscious”, which is defined not by its connection to motivation but only by its unconscious character. The latter seemed much simpler (one might say much dumber) and for that reason much less problematic than the M-unconscious.

The face of the cognitive unconscious has, however, drastically changed since Kihlstrom (1987) contrasted it with the “psychoanalytic unconscious”. Recent developments in cognitive neurosciences have shown that unconscious states are many, and that they can occupy a wide array of functions long thought to be the prerogative of consciousness. Not only can we perceive objects unconsciously, we can also perceive some of the high-level features of those objects, like the meaning of words (Dehaene and Naccache, 2001). We can learn rules unconsciously (Cleeremans et al., 1998). We can unconsciously appraise things or persons (Fazio and Williams, 1986; Bargh et al., 1996). Some results even suggest that self-regulation and metacognition can also occur unconsciously (Hassin et al., 2005). In the meantime, philosophers of cognitive science have shown how we could meet some of the difficulties raised by the idea of a rich unconscious mental life, of unconscious representations, and so on. All this led Uleman (2005), in the introduction to a collection of essays devoted to the topic, to conclude that we should now talk of the “new unconscious” and that “the list of psychological processes carried out in the new unconscious is so extensive that it raises two questions: what, if anything, cannot be done without awareness? What is consciousness for?”.

By an interesting twist of fate, this new understanding of the cognitive unconscious has also led to an important revival of some Freudian ideas, some researchers even claiming that after years of neglect, the Freudian unconscious had finally been vindicated.

The aim of this paper is to take advantage of those recent developments to reassess the basic argument for a motivational unconscious at a theoretical, empirical, and conceptual level. This task is somewhat too broad, and I will have to restrict it by focusing on the version of the basic argument that appeals to apparent self-deception. This limitation is justified by the central role of apparent self-deception in the motivational unconscious: repression is nothing but the way partisans of the motivational unconscious explain apparent self-deception. It is also justified by the quality and the variety of the research devoted to this topic over the last 30 years. I will first show that the basic argument can be rescued and developed into a cogent general theoretical argument for the M-unconscious. This general argument concludes that the M-unconscious provides the best explanation of apparent self-deception, or rather of what we know of it from folk-psychological observations, psychiatric case histories, and first-person narratives (see Section 2).

I will then investigate some recent “empirical vindications” of the motivational unconscious (see Section 3). Here again, I will have to restrict my overview to three influential series of studies that try to empirically certify the existence of apparent self-deception in order to prove the existence of the M-unconscious. Despite its limitations, this overview will allow us to spot some shortcomings shared by many studies in the same vein, shortcomings which explain what I take to be the rather limited success of this “vindicating enterprise.”

Finally I will tackle what I perceive to be the two major conceptual problems posed by the M-unconscious (see Section 4).

The outcome of this threefold review will be an original picture of the M-unconscious which I call affective and neo-dissociationist. According to that picture, M-unconscious states and processes are affective states and processes that the subject really feels and experiences – and which are in this sense conscious – even though they are not, or not well, cognitively accessible to him. This picture of the M-unconscious is affective in that M-unconscious states and processes are essentially affective. It is neo-dissociationist in that, even if M-unconscious states are in some sense unconscious, they are in another important sense conscious, the role of repression being to maintain the dissociation of two forms of consciousness rather than to expel some states from a monolithic consciousness. Dual-process psychology and the literature on cold–hot empathy gaps partly support this version of the M-unconscious. I argue that this version not only has some empirical plausibility but is also the only one that can escape the two conceptual problems (see Section 5).

Those four sections are not unrelated. Indeed we will see that the alternative explanations of apparent self-deception found in the first part will help in criticizing the empirical studies in the second part, and that the conceptual problems which afflict the M-unconscious will allow us to diagnose the relative failure of the empirical vindication. I will indeed argue that the M-unconscious must be quite unlike what we have been looking for in most empirical studies.

1 Personal Level Explanations and the M-unconscious

Before addressing the theoretical, the empirical, and the conceptual objections confronting the M-unconscious we need to introduce some terminology and to make the definition of the M-unconscious more articulate.

We shall see, in the next section, that there are very simple and now very common explanations that could replace the ones in terms of M-unconscious. The explanations I am thinking of are framed in terms of mere “unconscious” brain states, activation levels, and neurotransmitters. They are what we might call purely neurophysiological explanations. Such neurophysiological explanations have been put forward to explain depression and addiction2, and there is no reason to suppose that similar explanations are not available in the case of apparent self-deception. It is important to understand that explanations of this kind are radically different from the ones in terms of the M-unconscious.

1.1 Motives and Causes

The distinction between motives and mere causes is crucial in this respect. By citing motives, folk-psychological explanations not only allow us to predict actions; they also “make sense” of them. They justify them. If John desires to wound his wife and points his gun at her, it makes sense for him to pull the trigger. His action is in that respect justified by his motive. By only citing causes, purely neurophysiological accounts also allow us to predict actions, but they do not make sense of them3. When a judge asks you “why did you do it?”, he is asking for motives, not for mere causes. When a neurophysiologist asks the same question, he might be asking for mere causes. It is in this technical sense of motivation that M-unconscious states can be motives for action. By positing some states which are unconscious but are motives nonetheless, explanations in terms of the M-unconscious accordingly prolong rather than revise folk-psychological explanations.

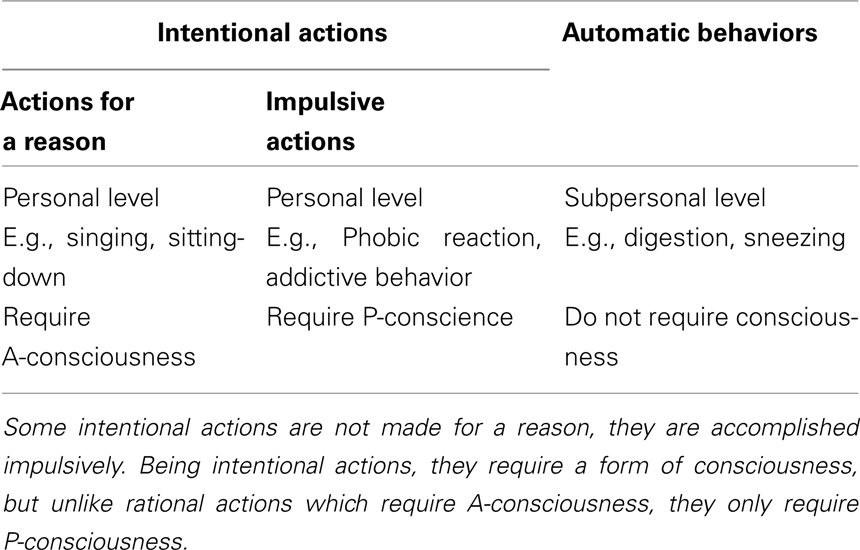

1.2 Personal and Subpersonal Explanations

It is customary, since Dennett (1969), to say that motives and the kind of “sense-making” explanations in which they figure belong to the personal level as opposed to the subpersonal or even parapersonal level. This terminology reflects the fact that this kind of explanation mentions states, events and processes that we would naturally ascribe to a person. Other explanations, on the other hand, can mention states, events or processes that we would naturally attribute to a proper part (“sub”) of a person like his brain, or to quasi-subjects (“para”) within the person. Neuropsychological explanations are, for example, typically subpersonal.

1.3 The Three Constraints on a Motivational Unconscious

These distinctions can help us make the three constraints on an M-unconscious more precise. M-unconscious states (processes, events…) are not only unconscious (I). They are also motivating:

II. The M-unconscious is a motivating unconscious. M-unconscious states can be motives for the subject’s actions; they are states that can explain those actions at the personal level.

M-unconscious states are also typically motivated: their unconscious nature typically results from repression. Repression in this sense includes the expulsion from consciousness of a conscious state as well as the “defense” processes that keep an unconscious state away from consciousness. Here again, it should be insisted that repression is a personal level phenomenon that can be compared to hiding something from someone. It is something the subject does for a motive, hence something he does intentionally4. It is not a subpersonal happening, and it is not the action of a small homunculus within the subject. As it is intentional, it is not the mere collateral effect of some intentional actions of the subject either. When the bombing of a bridge causes civilian losses, the latter are sometimes unintended. They are a mere collateral effect of an intentional action. In such cases, “killing civilians” is not an intentional action but “bombing the bridge” is, even though both expressions can be used to describe what the subject did. Repressing a mental state is in that respect like bombing the bridge rather than like killing civilians:

III. The M-unconscious is a motivated unconscious. M-unconscious states typically result from a form of repression, which is an intentional action of the subject5.

2 Theoretical Objections Against an M-unconscious

The basic argument for an M-unconscious has been challenged, as we said, on theoretical grounds. I will try to show that the M-unconscious can actually withstand those criticisms quite well.

2.1 Against Subpersonal Accounts of Apparent Self-Deception

The simplest rivals to the explanation of apparent self-deception in terms of M-unconscious states are framed at a purely subpersonal level. Such explanations would provide unconscious causes for the behaviors under scrutiny, and they would not commit to the existence of unconscious motives. Correspondingly, they would not try to make sense of those behaviors. The gist of Jaspers’s (1997) early objections to Freud was precisely that the latter had not shown the need to reject such subpersonal explanations of those non-rational behaviors in favor of personal level ones6.

Why should we favor personal level explanations? One answer has it that personal level explanations should, when they are possible, always be favored over subpersonal explanations. It could be claimed, for example, that we should by default maximize the intelligibility of the subjects we interpret (this is a – rather hasty – way to understand the “principle of charity”; Davidson, 1984). In such a bold form, however, this claim is not tenable. I just remembered a minute ago that I needed to buy some bread. Why a minute ago rather than a minute earlier? This question has an answer in subpersonal terms. I take it, however, that it would in general be totally unreasonable to look for another, complementary, answer framed in personal terms. At least in normal circumstances, there is just no plausible explanation of such a phenomenon in personal terms7.

What is true is that in psychology, as everywhere, a good explanation should by default save the appearances, and that some of the phenomena we are trying to explain, unlike remembering a minute ago to buy some bread, do indeed seem to be phenomena that can be explained in personal terms. By definition, apparently self-deceived subjects seem to be deceiving themselves. If we want to save this appearance we will have to explain this phenomenon in personal terms. Moreover, and pace eliminativism (Churchland, 1981), personal level explanations have many virtues, which might justify favoring them when they are not too implausible. They have some explanatory virtues: they are simple and easy to understand, they are also quite general and have relatively good predictive capacities. Although it is probably more controversial, I take it that they also have some moral virtues. They make it easier, for example, to consider the subject as responsible for his deeds and to empathize with him.

2.2 The Paradoxes of Self-Deception

Those are reasons to favor, by default at least, a personal level explanation of apparent self-deception. Now personal level explanations can appeal to conscious states as well as unconscious states. Why should we prefer unconscious states in some cases? Why should we, furthermore, appeal to repression? We outlined a basic argument to the effect that personal level explanations of apparent self-deception must invoke unconscious motives and repression. This argument claims that other explanations of the same phenomenon will lead to paradoxes. Even if their primary target was not usually the M-unconscious, in the last 40 years, philosophers of mind have precisely articulated those paradoxes and have shown that there are many other ways to escape them and to explain apparent self-deception which do not appeal to unconscious states. Those alternative solutions to the paradoxes, and the alternative explanations of apparent self-deception which rely on them, threaten the basic argument. We should accordingly review those articulate versions of the paradoxes and assess their various solutions.

Self-deception can give rise to two paradoxes (Mele, 2001; Davidson, 2004).

The static paradox of self-deception

1. In order to deceive someone into believing P, as opposed to merely inducing someone to believe P, one has to believe the contrary proposition ~P.

2. In order to be deceived into believing P one must believe P.

3. If someone believes P and believes ~P he believes (P and ~P).

4. So by (1–3), in order to deceive oneself into believing P, one must believe (P and ~P).

This is paradoxical because, although apparent self-deception is certainly irrational, it does not seem so irrational as to involve belief in a blatant contradiction.
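The static paradox can be set out schematically. The notation is introduced here only for illustration and is an assumption of this sketch, not the author’s: $B_s(\cdot)$ reads “subject $s$ believes that”, and $\mathrm{SD}_s(P)$ reads “$s$ deceives $s$ into believing $P$”. Premise (3) is the agglomeration principle for belief, and (4) follows from (1)–(3) by chaining the conditionals.

$$
\begin{aligned}
&(1)\quad \mathrm{SD}_s(P) \rightarrow B_s(\neg P) && \text{($s$ as deceiver)}\\
&(2)\quad \mathrm{SD}_s(P) \rightarrow B_s(P) && \text{($s$ as deceived)}\\
&(3)\quad B_s(P) \wedge B_s(\neg P) \rightarrow B_s(P \wedge \neg P) && \text{(agglomeration)}\\
&(4)\quad \mathrm{SD}_s(P) \rightarrow B_s(P \wedge \neg P) && \text{from (1)--(3)}
\end{aligned}
$$

Since (4) follows validly from the premises, any way of escaping it must give up or qualify at least one of (1), (2), or (3).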

The strategic paradox has to do with the absurdity of the project or intention of deceiving myself rather than with the absurdity of the state of being self-deceived.

The strategic paradox of self-deception

1. In order to deceive someone intentionally I must know (and hence believe) that I am trying to deceive him.

2. In order for someone to be deceived by me, he must believe that I am not trying to deceive him.

3. If I believe that I am trying to deceive myself and if I believe that I am not, I believe that (I am and I am not trying to deceive myself).

4. So by (1–3), in order to deceive myself I must believe that (I am and I am not trying to deceive myself).

This is paradoxical, again, because apparent self-deception does not seem that irrational.

The M-unconscious provides, as we said, a very natural solution to those paradoxes. When someone apparently deceives herself into believing P, she consciously believes P and unconsciously believes ~P. This solves the static paradox because one can believe P consciously and believe ~P unconsciously without believing (P and ~P). Moreover, the intention of deceiving oneself must itself be unconscious, so the subject may not believe that she has it. This solves the strategic paradox.
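The M-unconscious solution to the static paradox can be sketched by indexing belief. In this illustrative notation (an assumption of this sketch, not the author’s), $B^{c}_s$ and $B^{u}_s$ stand for the conscious and unconscious beliefs of subject $s$. Agglomeration is assumed to hold only within a single index, so the step from believing $P$ and believing $\neg P$ to believing $(P \wedge \neg P)$ is blocked across the conscious/unconscious divide:

$$
\begin{aligned}
&B^{x}_s(P) \wedge B^{x}_s(Q) \rightarrow B^{x}_s(P \wedge Q) && x \in \{c, u\}\\
&B^{c}_s(P) \wedge B^{u}_s(\neg P) \;\not\Rightarrow\; B^{x}_s(P \wedge \neg P) && \text{for either } x
\end{aligned}
$$

The strategic paradox is handled in the same way: if the intention to deceive oneself is held unconsciously, nothing forces the subject to hold the conscious belief that she is trying to deceive herself.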

According to this explanation, self-deception stems from an unconscious motive (the intention to deceive oneself) and results in a repressed, unconscious belief (the belief that ~P). The natural character of this solution does not suffice, however, to establish that it is the best solution to the paradoxes, and that the M-unconscious accordingly provides the best personal level explanation of apparent self-deception. There has been a considerable amount of philosophical and psychological theorizing on apparent self-deception in the last 40 years, and many have proposed alternative explanations of this phenomenon that are spelled out in personal terms but do not appeal to unconscious states. In order to be conclusive, the general argument for the M-unconscious must show that those explanations are not as good as the one in terms of the M-unconscious. Reviewing those explanations will prove useful because they provide interesting variants of the Freudian explanations, variants that we should keep in mind when we assess the empirical evidence for the M-unconscious.

2.3 Explaining Apparent Self-Deception in Terms of a Divided Self

Ironically enough, with one notable exception (Fingarette, 1969), the debate on apparent self-deception has largely ignored the latter Freudian solution. It has concentrated instead on a close cousin of it, which postulates a separation within the self between two centers of beliefs and agency.

2.3.1 Cognitive separations and the static paradox

One can indeed solve the static paradox by postulating that the conflicting beliefs are both conscious but that they are hidden from each other by a form of separation. This involves construing the self as divided into different centers of conscious beliefs.

2.3.2 Agentive separations and the strategic paradox

In order to get rid of the strategic paradox we must go further and suppose that the intention to deceive, just like the belief that P which contributes to motivate it, is also separated from the rest of the subject. The separation will thus be agentive as well as cognitive.

When a subject is self-deceived, a part of himself would be deceiving another part of himself. Partisans of such a solution disagree on the degree of autonomy one should grant to those parts of the self. Rorty (1988) pictures them as full-blown homunculi. Pears (1987) acknowledges different centers of agency but tries not to picture them as full-blown subjects. However, the mere suggestion that the self is literally divided into multiple centers of agency is already awkward enough. It is one thing to say that someone has different, and maybe conflicting, faculties and inclinations; it is quite another to say that he is constituted by different centers of agency! Davidson (1983) acknowledges the problem. He actually takes it, wrongly I believe, to be a problem afflicting the Freudian conception of the mind (Davidson, 2004, pp. 170–171). He tries to eschew it by talking of semi-autonomous divisions or quasi-independent structures (Davidson, 2004, p. 181) while making clear that “the idea of a quasi-autonomous division is not one that demands a little agent in the division” (Davidson, 2004, p. 181). Davidson later emphasized that “the image [he] wished to invite was not, then, that of two minds each somehow able to act like an independent agent; the image is rather that of a single mind not wholly integrated; a brain suffering from a perhaps temporary self-inflicted lobotomy.” I think these remarks point in the right direction, which is, we shall see, that of the M-unconscious approach to self-deception and of its neo-dissociationist variant (see Section 6.1). The problem is that it is not clear that Davidson has the resources to solve the strategic paradox and to endorse one of those approaches while refusing, as he does, to spell out more precisely the nature of this lack of integration8 and to resort to the concept of (un-)consciousness9.

The divided self approach to apparent self-deception is not only metaphysically extravagant. It suffers from a deeper, seldom noted, problem10. It cannot, by itself, explain apparent self-deception. Suppose there are two centers of agency in someone, a deceiver and a deceived one. The deceived center of agency has reasons to be deceived: believing something false will alleviate his pains and worries. But what reasons could the deceiving center have to deceive the deceived center? By definition, two distinct centers of agency can have independent motives for acting. Just like your reasons for pulling the trigger are not in general reasons for me to help you do so, the deceived center’s reasons for being deceived will not in general be reasons for the deceiver to do the deceiving. The partisan of the divided self approach owes us a story here, a story which he unfortunately never provides.

It should be noted, by the way, that this idea of a divided self, for which Sartre (1992) notoriously criticized Freud and which Davidson also attributes to him, was not in fact endorsed by Freud11. As Gardner (2006, pp. 148–153) has argued at length, “The ego, id, and superego, as parts of the soul, do war, but they are not each of them warring souls.”

2.4 A First Deflationary Account: Apparent Self-Deception as Diachronic Self-Deception

Many philosophers have insisted that we can avoid the problems posed by the divided self approach without espousing a Freudian approach to apparent self-deception. They have tried to explain this phenomenon in a deflationary way, that is, without positing a present intention to deceive oneself. Some have proposed that self-deception is actually diachronic. When I decide not to write down a future appointment in my notebook, hoping that I will eventually forget it, I can manage to deceive myself without paradox because it is, so to speak, my present self that deceives my future self. All cases of apparent self-deception, argue the partisans of the diachronic self-deception approach, would be like that. A subject would form, at time t, the intention to deceive himself at a later time t’. The problem with this proposal is that in at least some cases of apparent self-deception (let us call them cases of severe apparent self-deception), the subject displays a form of (synchronic) inner conflict. This conflict can be witnessed in equivocal behavior, as in the example of the self-deceived patient who claims not to suffer from cancer but simultaneously avoids her physicians. In that case as in others, the conflict can also surface in abnormal emotional reactions associated with the assertion of P. Just like liars, subjects of severe forms of apparent self-deception can, for example, show heightened autonomic responses when making their claim (Bonanno et al., 1995). Diachronic accounts cannot explain severe apparent self-deception. They do not, accordingly, threaten the M-unconscious approach.

2.5 A Second Deflationary Account: Apparent Self-Deception as Motivationally Biased Beliefs

Another deflationary account of apparent self-deception consists in explaining away the impression that the subject intends to deceive himself at one point or another. There is ample empirical evidence that our belief formation mechanisms are often biased by our desires. Mele (1997, 2001) has argued that motivationally biased beliefs could explain cases of apparent self-deception. Consider, for example, a young man who recently lost his father. To alleviate his pain, he tries to remember the good moments he spent with him, so he focuses on situations in which his father was very kind and comes to forget darker aspects of his personality. He ends up falsely believing that his father was a very gentle man. Here the belief is certainly biased by the young man’s desires. His belief might even correspond to what he desires to be the case. But it is better described as a case of wishful thinking than as a case of self-deception, for the subject never had to form the intention to believe or to forget anything. His desires biased his belief, and thus caused it, but they did not motivate it. To put the same point another way, the belief was not formed intentionally; it was only a happy collateral effect. Mele (1997, 2001) claims that all cases of apparent self-deception are like that.

Like the preceding one, however, this approach cannot account for the inner conflicts that are characteristic of severe cases of apparent self-deception. It also suffers from a specificity problem (Bermúdez, 2000). We have many desires that do not give rise to apparent self-deception. Theories of apparent self-deception that appeal to the subject’s intention to deceive himself can readily account for this specificity: apparent self-deception would only arise when a subject not only desires P, or even desires to believe P, but also intends to believe P. It is not obvious that the theory of apparent self-deception as motivationally biased beliefs can give a satisfying account of the specificity of apparent self-deception (although see Mele, 2001, chap. 3).

2.6 The General Argument for a M-unconscious

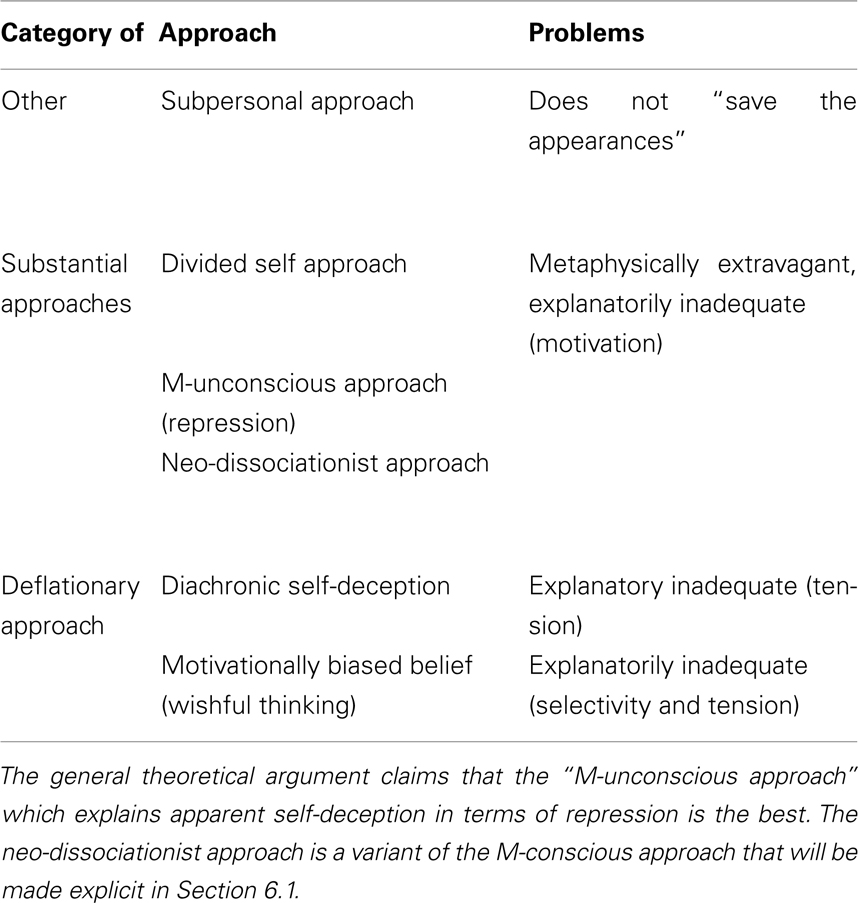

Having reviewed alternative approaches to self-deception and their problems (Table 1), we are in a position to state the general theoretical argument for the M-unconscious.

1. Exclusion of deflationary approaches. Cases of apparent self-deception that involve selectivity and inner conflict are better explained in a non-deflationary way.

2. Folk-psychological observation. Apparent self-deception sometimes involves selectivity and inner conflict.

3. General explanatory principle. A case of apparent self-deception which is better explained in a non-deflationary way involves a form of motivational unconscious.

The general explanatory principle rests on the supposition that subjects are not too irrational [so that they cannot believe plain contradictions like (P and not P)] and on the rejection of the divided self and the subpersonal approaches to apparent self-deception. The exclusion of deflationary approaches rests on the fact that deflationary approaches to apparent self-deception, which do not posit a present intention to deceive oneself, cannot explain the presence of inner conflict or selectivity.

3 Empirical Evidence for a M-unconscious

The theoretical reasons to posit an M-unconscious rely on folk-psychological observations and on the general explanatory principle, which itself depends on contentious methodological claims. Such reasons would not weigh much against empirical evidence to the effect that there are no M-unconscious states. The general argument is in that sense provisional, and it would be desirable for its conclusion to be empirically confirmed.

Many recent studies have been said to provide the needed confirmation. I will review three influential series of studies, on memory suppression, confabulation, and anosognosia for plegia respectively, and argue that they mostly miss their target. Only the studies on anosognosia come close to vindicating the M-unconscious, but they tend to favor a neo-dissociationist variant of the M-unconscious approach, according to which the repressed states are not unambiguously unconscious.

3.1 Memory Suppression

In a much quoted study, Anderson and Green (2001) have shown that subjects can intentionally suppress unwanted memories. Their conclusion drew on the following “think/no-think paradigm”:

• In the first, preliminary phase, subjects were trained to learn some cue-target word pairs.

• In the experimental phase, which could be repeated several times, they were shown the cue. For some words they had to think about the target (“think” condition) while for others they had to suppress thoughts about the target (“no think” condition).

• In the final phase the subject’s memory of the target associated with each cue was assessed.

Anderson and Green (2001) showed that the think/no-think instruction had a significant effect on recall. The “think” instruction made retrieval of the target easier, while the “no think” instruction made it harder. This effect was, moreover, strengthened by the number of repetitions of the experimental phase. Encouraging retrieval with rewards did not affect those results. Anderson and Green (2001, p. 303) concluded that their study “provides a mechanistic basis for the voluntary form of repression (suppression) proposed by Freud”. Schacter (2001) noted that this claim was overstated at best, given that the targets learned and suppressed were not emotionally loaded. The think/no-think paradigm has, however, recently been applied to targets with negative emotional content, showing that “when cognitive control mechanisms are directed toward suppression, their effect on memory representations is heightened for emotional compared with neutral stimuli” (Depue et al., 2006, 2007).

This is not, however, enough to vindicate a motivational unconscious. These studies do show that a subject can voluntarily forget something, but they do not show that the suppressed memory persists in an unconscious form. It could simply have been destroyed. As Kihlstrom (2002) has pointed out, “there was no evidence presented of persisting unconscious influence of the suppressed items. And there was no evidence that the ‘amnesia’ could be ‘reversed’.” Such evidence, however, would be required in order to show the existence of a motivational unconscious.

This failure has to do with the fact that memory suppression is a form of apparent self-deception which can straightforwardly be explained in deflationary terms as a form of diachronic self-deception, and which does not require, for that reason, the presence of unconscious mental states.

3.2 Motivated Confabulation

The term “confabulation” was coined at the beginning of the twentieth century to refer to the condition of some amnesic patients who seemed to invent, in “good faith,” narratives about themselves and about the world, and who showed little concern about the plausibility of their stories. In his monograph devoted to the topic, Hirstein (2006) offers the following description of an encounter with a confabulating patient:

A neurologist enters a hospital room and approaches an older man sitting up in bed. The neurologist greets him, examines his chart, and after a brief chat in which the man reports feeling fine, asks him what he did over the weekend. The man offers in response a long, coherent description of his going to a professional conference in New York City and planning a project with a large research team, all of which the doctor writes down. The only problem with this narration is that the man has been in the hospital the entire weekend, in fact for the past three months. What is curious is that the man is of sound mind, yet genuinely believes what he is saying. When the doctor informs him that he is mistaken, he replies, “I will have to check with my wife about that,” then seems to lose interest in the conversation.

A similar phenomenon has since been found to affect some patients suffering from anosognosia for plegia, Anton syndrome, and hemineglect. In an experimental setting, it can also affect patients whose corpus callosum has been severed (“split-brain” patients). In all those cases, the situation is broadly similar. Information coming from a given source is abnormally scarce (short-term memory in Korsakoff’s syndrome, proprioception in anosognosia for hemiplegia, vision in Anton syndrome, sensory information exchanged through the corpus callosum in split-brain patients, etc.). When information from this source is solicited, the patients seem to fill the gaps by concocting stories.

Finally, some have argued that normal subjects are also prone to confabulation when asked to justify an attitude whose cause is not normally available to introspection (Nisbett and Wilson, 1977; see also Bortolotti and Cox, 2009, pp. 957–958; Dennett, 1992). This has led some to widen the meaning of confabulation. We will say that while confabulation in the narrow sense can only affect patients suffering from amnesia, confabulation in the wide sense is associated with other pathologies as well as with normal subjects. In this section, we will only deal with confabulation in the narrow sense.

While explanations of confabulation commonly appeal to cognitive factors, recent studies have suggested that they should also invoke motivational factors, and even M-unconscious states.

3.2.1 Cognitive explanations

Many have noted that classical cognitive explanations of confabulation suffer from important shortcomings. It is customary to divide cognitive explanations of mental disorders into three categories:

• bottom-up, “empiricist” theories explain it in terms of an experiential deficit,

• top-down, “rationalist12” theories explain it in terms of an executive function (“rationality”) deficit,

• “two-factor13” accounts explain it in terms of both kinds of deficits.

As confabulation (in the narrow sense) is connected to a memory deficit, purely rationalist accounts are implausible. We are left with two groups of explanations. An inability to retrieve the relevant memories, or to situate them properly in time, combined with a normal gap-filling mechanism could plausibly give rise to something like confabulation. However, it is well known that amnesia does not always give rise to confabulation. This has led some to postulate, in addition to the memory deficit, an executive dysfunction that leaves patients unable to properly assess the hypotheses they form to fill the gaps in their memories. The occurrence of such a deficit is plausible, since frontal sites responsible for the cognitive control and monitoring of thoughts are frequently damaged in confabulating patients (Fotopoulou et al., 2004; Turnbull et al., 2004). This two-factor explanation might not be entirely satisfactory, however, since it cannot account for confabulation in the wide sense, which does not seem to require an executive dysfunction. It might be answered that we should not expect a single causal mechanism to underlie all forms of confabulation. Another problem with this account is that it resembles the so-called two-factor explanations of delusions. It might not, accordingly, be in a position to differentiate between delusions and confabulations (some actually welcome this consequence; see Bortolotti and Cox, 2009, pp. 955–956).

3.2.2 Motivational influences

The objections against cognitive explanations of confabulation, even though they might not be totally conclusive, might invite one to look for alternative explanations. There are reasons to think that motivational factors can also play a role in confabulation. It has long been observed that the content of confabulations is often self-serving. This clinical observation has started to gain empirical confirmation. Using independent raters, Fotopoulou et al. (2008b) showed that confabulating patients distorted their previous experiences in more pleasant ways than did healthy and amnesic controls (Solms, 2000; Fotopoulou et al., 2004; and Turnbull et al., 2004 provide convergent data).

Among others, Mark Solms took the motivational account of confabulation to vindicate some Freudian ideas (Solms, 2000; Turnbull and Solms, 2007). It does not, however, vindicate the Freudian unconscious. For all those studies show is that the false beliefs of the confabulating patients fit their desires. They constitute a case of apparent self-deception. They give us no reason, however, to think that this apparent self-deception should be explained in terms of M-unconscious mental states rather than in a deflationary way, as a form of motivationally biased belief formation (a form of mere wishful thinking). They do not show that the patients have an unconscious true belief that contradicts their conscious false belief, or an unconscious motive to acquire this conscious false belief. In order to do that, an account of confabulation would have either to give direct empirical evidence of conflicting unconscious mental states or, at least, to show that because the patients’ apparent self-deception involves selectivity and inner conflict, it cannot be accounted for in a deflationary way.

3.2.3 Lack of selectivity

As far as confabulation in the narrow sense is concerned, no direct empirical evidence of such unconscious states is available yet. Furthermore, the patients’ apparent self-deception does not exhibit the features that would rule out an explanation in terms of a motivational bias. First, the content of confabulation is not specific enough to suggest that it is intentional: confabulations tend to bear on the patients’ past, but this can be explained by their amnesia, which facilitates wishful thinking on this topic. This is indeed how Fotopoulou et al. (2008a) explain the phenomenon: “both confabulation and its motivated content result from a deficit in the control and regulation of memory retrieval, which allows motivational factors to acquire a greater role than usual in determining which memories are selected for retrieval. To this extent, the self-enhancing content of confabulation could be explained as a neurogenic exaggeration of normal self-serving memory distortion.”

3.2.4 Lack of inner conflict

Second, the patients do not exhibit signs of an inner conflict. Their behavior has not been shown to be ambivalent. Hirstein (2006, p. 180) remarks that they have a hyporesponsive or unresponsive autonomic system and suggests that they would not show the emotional reaction that is characteristic of lying. Conjoined with the lack of selectivity, this apparent lack of inner conflict tends to show that confabulation in the narrow sense is a form of facilitated wishful thinking rather than a form of self-deception.

3.3 Anosognosia for Plegia

Patients with anosognosia for plegia deny their paralysis. They can also produce narratives “covering” their ailment, and thus confabulate in the wide sense. They constitute an interesting case because (i) the explanation of their pathology seems to require, like that of confabulation in the narrow sense, the appeal to motivational factors, (ii) but they do sometimes show signs of an inner conflict. I tackle this second point first.

3.3.1 Clinical evidence of inner conflicts

It has been observed clinically that many patients seem to be somehow cognizant of their condition. Ramachandran (2009) for example describes the following case (notice that he appeals to a divided self approach to resolve the apparent inconsistency of the patient):

An intelligent and lucid patient I saw recently claimed that her own left arm was not paralyzed and that the lifeless left arm on her lap belonged to her father who was “hiding under the table.” Yet when I asked her to touch her nose with her left hand she used her intact right hand to grab and raise the paralyzed hand – using the latter as a “tool” to touch her nose! Clearly somebody in there knew that her left arm was paralyzed and that the arm on her lap was her own, but “she” – the person I was talking to – didn’t know.

Other patients seem to avoid bimanual tasks. Ramachandran also described what he interpreted as “reaction formations” betraying the patients’ knowledge. One of his patients for example claimed “I can’t wait to go back to two-fisted beer drinking” (Ramachandran and Blakeslee, 1998, p. 139). Another wrongly claimed “I tied the shoelace with both my hands” (Ramachandran and Blakeslee, 1998, p. 139).

3.3.2 Empirical evidence of inner conflicts

Several measures have recently been put forward to assess those apparent inner conflicts. Marcel et al. (2004) asked patients to estimate their bimanual abilities in the first-person form (“In your present state how well, compared with your normal ability, can you…”) and in the third-person form (“If I were in your present state, how well would I be able to…”). They concluded that 15–50% of the patients suffering from anosognosia with right-brain damage showed greater overestimation of their abilities when asked in the first person rather than in the third person. Marcel et al. (2004) also obtained indirect acknowledgments of the deficit by asking the patients about their weak limb “in a series of different ways (e.g., in a tentative, confidential manner)”: “Is it ever naughty? Does it ever not do what you want?” While most patients suffering from anosognosia expressed incomprehension or replied negatively, five right-brain damaged patients replied affirmatively. One patient responded conspiratorially, “Oh yes! In fact, if it doesn’t do what I want, I’m going to hit it.” As the authors acknowledge, “It is not yet clear (…) what the relevant aspect of [the] question was: type of description, confidentiality of interaction, emotional tone, or infantile speech.”

Bisiach and Geminiani (1991) report that some patients may deny their plegia while spontaneously avoiding bimanual actions, whereas others explicitly acknowledge their plegia but spontaneously engage in bimanual actions. Such dissociations between implicit and explicit knowledge of plegia were recently tested by Cocchini et al. (2010) on a group of 30 right-brain damaged patients. They found that two patients exhibited a selective implicit anosognosia and eight patients exhibited a selective explicit anosognosia. Finally, Nardone et al. (2007) tested two patients using an attentional-capture paradigm. Unlike non-anosognosic controls, they showed longer simple reaction times when a word related to movement was presented with the target, suggesting, again, an implicit awareness of their deficit.

There is thus consistent evidence that some patients suffering from anosognosia, unlike patients suffering from confabulation in the narrow sense, have conflicting mental states relative to their plegia. We shall also see that, like the latter, their condition seems to result from motivational influences.

3.3.3 The explanatory argument for motivational influences

The denial of illness is a false belief that is self-serving and probably pleasant. But this is not enough to show that it is influenced by motivational factors, for it could be self-serving, so to speak, by chance (I believe that the weather is sunny today, which actually conforms to my desire, but my desire had no causal influence on my belief). One reason to affirm that it is influenced by motivational factors appeals to the insufficiency of non-motivational explanations of anosognosia. Like classical explanations of confabulation (in the narrow sense), classical cognitive explanations of anosognosia divide roughly into two groups. According to the empiricist accounts, the patient would deny his plegia because of a proprioceptive loss, hemispatial neglect, or a more subtle somatosensory deficit, such as an impairment of the feedforward mechanism responsible for the monitoring and control of limb movements (see Vuilleumier, 2004 for a review). Those empiricist theories are unsatisfactory because double dissociations between those somatosensory deficits and anosognosia have been observed and, more deeply, because those somatosensory deficits cannot explain why the patient maintains his denial despite the contrary evidence that other reliable sources (vision, testimony, etc.) keep providing14. In response, some theorists have proposed a two-factor theory that posits an executive problem, such as confusion or inflexibility. Those executive problems would, however, have to be very severe, and many data suggest that they are actually absent in some patients (Marcel et al., 2004; Vuilleumier, 2004).

The insufficiency of both kinds of cognitive theories might be seen as vindicating the causal role of motivational factors in anosognosia (Levy, 2008). Even when they are conjoined with experiential and executive deficits, however, motivational factors will not be sufficient to explain all cases of anosognosia. Prigatano et al. (2010), for example, report a patient suffering from anosognosia and blindness. Because of hemineglect, she was no more aware of her visual experience than of her proprioceptive experience in her left hemifield. She nevertheless denied her plegia but acknowledged her blindness. It would be ad hoc to suppose that she had more motivation to deny her plegia than her blindness. All this suggests that the shortcomings of traditional explanations are often due to the fact that anosognosia is not a unitary phenomenon with a single underlying cause, rather than to their neglect of motivational factors.

3.3.4 The direct argument for motivational influences

This is not to say that motivational factors play no role in the explanation of some forms of anosognosia. Indeed, some of the data collected by Marcel et al. (2004, p. 33) suggest precisely that they do. They observed, for example, that “men over-estimated their abilities on car driving more than women and only in the first-person version of the question.” This overestimation might be motivated by self-esteem, car-driving abilities being more relevant to self-esteem for men than for women. The following observation is also interesting in that respect. After giving the patients a bimanual task, Marcel et al. (2004) asked them to explain their failure. A significant portion of patients gave bizarre answers (e.g., “I should use a robot,” or “My arm has a cold”), suggesting both an implicit knowledge of their handicap and “a desperate attempt at defense against [explicit] acknowledgment” (Marcel et al., 2004, p. 34).

Let us take stock. The false beliefs of some patients with anosognosia seem to arise from motivational factors. Those patients are apparently self-deceived. Moreover, they have conflicting attitudes toward their plegia, which suggests that this case of apparent self-deception is better explained in terms of a present intention to deceive oneself than in a deflationary way, as a form of diachronic self-deception or as a mere motivationally biased belief. This gives us an empirical argument for the existence of a motivational unconscious. The force of this argument should not be overestimated, though. It is actually a variant of the general argument:

1. Exclusion of deflationary approaches. Cases of apparent self-deception which involve selectivity and inner conflict are better explained in a non-deflationary way.

2. Empirical observation. Anosognosia is a form of apparent self-deception which involves an inner conflict.

3. General explanatory principle. A case of apparent self-deception which is better explained in a non-deflationary way involves a form of motivational unconscious.

The only difference is that the existence of the relevant case of apparent self-deception is supported by empirical evidence concerning anosognosia (premise 2), whereas in the original argument it is supported by folk-psychological observations about normal behavior. The progress is meager, given that the general principle is far more plausible in the case of normal behavior than in the case of anosognosia. The general explanatory principle, it should be recalled, rests on the assumption that subjects are rational enough to detect obvious inconsistencies between their conscious attitudes. It is not obvious, however, that those patients who suffer from anosognosia and are relevantly self-deceived (those who exhibit a form of inner conflict) satisfy this rationality assumption.

More fundamentally, the general explanatory principle rests on various premises that might be deemed controversial, and we should expect an empirical confirmation to give us independent support for its conclusion. It rests, for example, on the claim that apparently self-deceived subjects seem to be deceiving themselves and that we should by default save this appearance. Such premises, again, would not weigh much against empirical evidence favoring alternative explanations of the apparent self-deception afflicting the patients. Some influential researchers have indeed argued for such alternative explanations. Hirstein (2006) has argued for an explanation in subpersonal terms; Marcel et al. (2004) have argued for an explanation in personal but conscious terms. I will argue that while the empirical evidence gathered so far seems to exclude Hirstein’s account, it also seems to favor Marcel’s neo-dissociationist account over those that picture the repressed states as unambiguously unconscious.

3.3.5 Subpersonal self-deception?

Hirstein (2006) has claimed that cases of apparent self-deception in patients suffering from anosognosia could be explained without having to postulate that the subject knows about his plegia and intends to forget about it. Hirstein’s point rests mainly on the fact that the selectivity of the patient’s confabulations can be explained without positing an intention to deceive oneself (Hirstein, 2006, p. 230) and on the patients’ attenuated autonomic response, which would betray an absence of inner conflict. Although he does not address the issue, Hirstein could accommodate some of the evidence we have reviewed so far, which indicates a form of inner conflict, by claiming that even though some parts of the patient’s brain represent his plegia and control his belief that he is healthy, the subject himself, as opposed to his brain, does not believe, unconsciously or otherwise, that he suffers from a paralysis and does not intend, consciously or otherwise, to believe that he is healthy. He could claim, in other words, that the patient’s “deceptive intention” and “knowledge” are subpersonal.

3.3.6 Conscious self-deception?

I take it, however, that the results of Marcel et al. (2004) support the claim that some patients do represent their condition at a personal level. They show that the patients can, when asked in the proper way, report their handicap (cf. Levy, 2008, pp. 235–238). Those striking results, however, tend to show too much. They tend to show not only that the patients have genuine personal level attitudes toward their plegia, but also that these attitudes are conscious. More precisely, they tend to show that the patients consciously believe that they are paralyzed. After all, reportability is good enough evidence of consciousness. Marcel and his colleagues accordingly opt for an interpretation of apparent self-deception among patients suffering from anosognosia in terms of conscious self-deception:

Some patients seemed to show a genuine dissociation within awareness according to the manner or viewpoint of the question. Such a split in consciousness, often referred to as a dissociative state, has been said to occur in a variety of circumstances. (Marcel et al., 2004, p. 33)

Such an option might seem problematic because other measures reveal that the patients consciously believe that they are not paralyzed. Marcel et al. (2004, p. 33) suggest that this “is only a problem if we assume a strict singularity of consciousness and a logical consistency within any one segment of consciousness. That is, genuine dissociations and conflicts within awareness may be possible.” I will come back to the question of the “strict singularity of consciousness” when we put forward a neo-dissociationist approach to self-deception, according to which repressed states are in some important sense conscious even though they are not readily accessible to the subject for rational control (see Sections 6.1–6.2). For now, it is enough to realize that the patients could simply fail to be rational enough to monitor their inconsistencies, and that they could accordingly believe consciously both that they do and that they do not suffer from a paralysis.

4 Conceptual Objections to a M-unconscious

It might be no accident that two leading groups of researchers who recognize the conflicting nature of some forms of anosognosia nevertheless reject an explanation of this conflict in terms of unconscious personal level states. In doing so, they implicitly get around two conceptual objections that make the very notion of a motivational unconscious problematic. I will argue that, while those two objections do not make M-unconscious states impossible, they at least make them quite different from what most empirical studies have been looking for. They thus also help explain why the empirical studies we have scrutinized have not provided decisive evidence for a motivational unconscious.

Those two conceptual objections are related to the second and third constraints on a motivational unconscious, respectively. They rely on the tension between those constraints and the first one. The first objection claims that personal level mental states like motives must necessarily be conscious (see Section 4.1). The second objection claims that one could not repress a mental state without being conscious of it, so that repression is a priori impossible (see Section 4.2). In this section, I will review those two objections and some answers to them that I deem inadequate. I will then put forward my own neo-dissociationist answer.

4.1 A First Conceptual Objection: Can Personal Level States be Unconscious?

Typical personal level mental states are conscious (at least as far as occurrent mental states are concerned). Typical unconscious brain states are not personal level mental states. Some researchers have suggested that unconscious mental states of a personal level would simply be chimerical. Wittgenstein for example considered that personal level accounts could only explain our actions by providing motives or reasons for them (he often seems to assimilate motives and reasons) and that unconscious mental states could not provide such motives or reasons for our actions but only mere causes for them15. Jaspers (1997) also considered that unconscious, as opposed to merely “unnoticed,” mental states could not figure in personal level explanations16.

4.1.1 Challenging the conscious-unconscious distinction

Even if it were acknowledged that some personal level mental states could be unconscious, there would still be a problem of criterion. On what grounds should we judge that an unconscious state is a personal level mental state? Consciousness and reportability are the most common and most natural criteria for the personal level, but they are useless when it comes to assessing the existence of unconscious personal level mental states. Levy (2008) argues at length that the empirical evidence supports the claim that patients suffering from anosognosia really do “acknowledge,” at a personal level, their plegia. To counter Hirstein’s (2006) contention that this “acknowledgment” is only subpersonal because it is not available to them, he has to argue that availability comes in degrees and that this acknowledgment is somehow available to them. The idea seems to be that, at a certain level of availability, we can say that the patient has some consciousness of his condition and accordingly acknowledges it at a personal level, even though he is not fully conscious of it. I think that this is actually a good strategy, and we shall see that a defender of the M-unconscious might have to adopt a similar neo-dissociationist strategy, arguing that M-unconscious states are not totally unconscious. But unless more is said, it might seem like an ad hoc maneuver against those who contend that either a state is sufficiently available to the subject to be a personal level state, in which case it is conscious, or it is not, in which case it is unconscious. We will come back to that point later (see Section 5.4.2).

4.1.2 Challenging the personal-subpersonal distinction

Alternatively, instead of making the border between the conscious and the unconscious murkier, one might try to challenge the distinction between personal and subpersonal mental states. Bermúdez (2000), for example, has argued that personal level explanations are not autonomous, in that some subpersonal level mental states can, and should, figure in some explanations that “make sense” of an action. He mentions, among others, explanations of the behavior of blindsight patients (Weiskrantz, 1986) and explanations of skillful behavior (some subpersonal motor processes can explain why the tennis champion managed to put the ball just here and not a few inches further, behind the line; see also Pacherie, 2008a). I agree with the general claim that some subpersonal states can figure in explanations that make sense of an action. I am not sure, however, that such subpersonal states can, in those explanations, play the role of M-unconscious states. In particular, if the notion of M-unconscious is consistent, there should be some M-unconscious intentions capable on their own of making sense of some actions, namely, intentions to deceive oneself. It is dubious, however, that subpersonal intentions can, on their own, make sense of actions, or even that there can be such a thing as a subpersonal intention (an intention of whom?). This brings us to the second conceptual problem.

4.2 The Fundamental Problem of the M-unconscious: Is it Possible to Repress an Unconscious State?

We saw that the notion of M-unconscious allows us to solve the paradoxes of self-deception. It is, however, exposed to what one might call a “revenge problem”17. The problem, put briefly, is that in order to act on something intentionally I must be conscious of the thing I act on. Otherwise I could not control that thing sufficiently for my action to count as intentional. This is true even if we understand “intentional,” as we do, in a minimal sense that does not involve anything like deliberation. When John shoots his wife in anger, if his action is intentional, he must be conscious of his wife and of the fact that she is in a position to be shot. In the same way, if, while attending a conference, I close the door of the room and thereby prevent a very noisy man from entering, we will not say that I intentionally prevented him from entering unless I was aware of him. More generally, if I am not conscious of X and X happens because of me, then it happens, so to speak, “blindly” rather than intentionally: as a consequence of some automatic, subpersonal process in me, or as a mere collateral effect of something else that I do intentionally. This principle of conscious agency, connecting intentional action and consciousness, applies to repression. It entails that the subject must be conscious of the states he represses, which contradicts the claim that they are unconscious. This revenge problem18 is, I take it, the fundamental problem of the motivational unconscious. If M is a typical M-unconscious state, that is, an M-unconscious state that results from repression, the following three claims cannot all be true:

1. The repression of M is intentional (The M-unconscious is a Motivated Unconscious)

2. In order to repress something intentionally one must be conscious of that thing (Principle of conscious agency)

3. The subject is not conscious of M19 (The M-unconscious is unconscious)

(1) and (3) are definitional. (2), the principle of conscious agency, is widely endorsed by psychologists who treat intentional control as a measure of consciousness (Debner and Jacoby, 1994). It has been defended on intuitive grounds: intentional actions, as opposed to automatic behaviors and mere collateral effects, must not be “blind.” Here is a more cautious argument connecting consciousness and action through the notion of control:

• If a subject acts intentionally on X, X must be under his intentional control.

• If X is under the intentional control of a subject, that subject must be conscious of X.
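The revenge problem can be set out as a short derivation. In the sketch below, which uses illustrative notation introduced here rather than the author’s own, Rep_int(S, M) says that S intentionally represses M, Ctrl(S, M) that M is under S’s intentional control, and Con(S, M) that S is conscious of M. Lines (2a) and (2b) instantiate the two bullet points above for the case of repression and jointly yield (2). Con is deliberately left unspecified as to the sense of “conscious,” since that is where any equivocation would have to lie.

```latex
\begin{align*}
&(1)\quad \mathrm{Rep}_{\mathrm{int}}(S, M) && \text{the repression of } M \text{ is intentional}\\
&(2a)\quad \mathrm{Rep}_{\mathrm{int}}(S, M) \rightarrow \mathrm{Ctrl}(S, M) && \text{acting intentionally on } M \text{ requires control of } M\\
&(2b)\quad \mathrm{Ctrl}(S, M) \rightarrow \mathrm{Con}(S, M) && \text{controlling } M \text{ requires being conscious of } M\\
&(3)\quad \neg\,\mathrm{Con}(S, M) && S \text{ is not conscious of } M\\
&(4)\quad \mathrm{Ctrl}(S, M) && \text{from (1) and (2a)}\\
&(5)\quad \mathrm{Con}(S, M) && \text{from (4) and (2b)}\\
&(6)\quad \bot && \text{from (5) and (3)}
\end{align*}
```

If “conscious” means the same thing in (2b) and (3), the contradiction at (6) is unavoidable; the only way out is to deny one premise or to show that the two occurrences of Con pick out different notions.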

5 An Affective, Neo-Dissociationist Picture of the M-unconscious

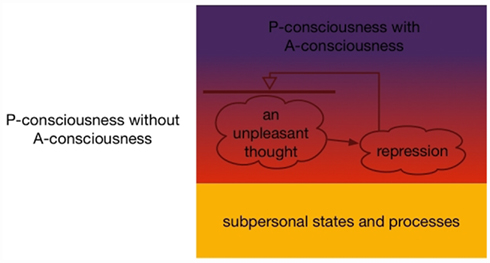

If the concept of M-unconscious is to be consistent, the argument (1–3), according to which M-unconscious states are both conscious and unconscious, must rest on an equivocation. This hypothesis is indeed quite plausible. Consciousness is a famously ambiguous and slippery notion (Chalmers, 1996; Siewert, 1998; Block, 2004). As Block (2004) puts it, “there are a number of very different ‘consciousnesses’. (…) These concepts are often partly or totally conflated, with bad results.” The fundamental problem of the M-unconscious might be one of those “bad results.” It could indeed be avoided if “conscious” did not have the same meaning in (2) and in (3). Since consciousness and agency are related by the principle of conscious agency, distinctions between forms of consciousness are likely to mirror distinctions between forms of intentional control. I will indeed argue that we should distinguish phenomenal consciousness from access-consciousness on the one hand (Section 5.1), and impulsive from rational forms of intentional control on the other (Section 5.2). Repression is, I will argue, an impulsive process, and repressed states are conscious in the phenomenal sense even if the subject is not access-conscious of them and accordingly cannot control them rationally. This will allow us to solve the fundamental problem of the M-unconscious and, as we shall see, the first conceptual problem as well.

5.1 The Ambiguity of Consciousness

5.1.1 Access-consciousness (A-consciousness)

One of the difficulties surrounding the task of defining consciousness is that the word applies to different kinds of things. We can talk of

• conscious creatures (this man is conscious),

• conscious mental states (his perception of the grass is conscious),

• and conscious contents (he is conscious of the grass).

As the examples make clear, these senses are related. In particular, a subject is conscious of R if he has a conscious state with the content R. The risk of confusion is even greater when the conscious content is itself a mental state, as when I am conscious of my desire to go to the sea, for in that case the desire, which is a conscious content, can also be a conscious state. To avoid ambiguity, I will always use the locution “conscious of” to refer to conscious contents.

Consider now a perceptual state which represents that the grass in front of me is green. We can say that this perceptual state is conscious (or that I am conscious of the grass being green) to mean that I can report that the grass is green, that I can reason about this fact and infer that it often rains in this part of the country, etc. This sense of consciousness underlies reportability. It is called A-consciousness.

Access-consciousness (A-consciousness) If a subject S has a state X which represents R, we say that X is A-conscious, or that S is A-conscious of R, if R is broadcast for free use in the rational control of behavior (I adapt this definition from Block, 2004).

A-consciousness marks a degree of availability, or a quality of access. We can indeed classify degrees of availability depending on the kind of behavior the available information can yield:

a. Minimally available information. Information is minimally available when it is only represented at a subpersonal level and does not normally influence the subject’s overt behavior. Brain potentials reveal that patients suffering from prosopagnosia covertly recognize faces (Renault et al., 1989); this recognition, however, seems to be, at least in certain patients, only minimally accessible (Bruyer, 1991).

b. Subliminal information 1. At a higher degree of accessibility, the information influences the subject’s behavior in subtle ways. One can think for example of the priming effects of masked stimuli (Marcel, 1983; Dehaene and Naccache, 2001; Kouider et al., 2006).

c. Subliminal information 2. At a slightly higher degree, the subject can report the information in forced-choice situations (think of the visual information available to blindsight patients; Weiskrantz, 1986).

d. Subliminal information 2’. The information might also be available in such a way that, although the subject cannot report it spontaneously, he could have done so if his attention had not been impeded. This is the case for stimuli shown during an attentional blink (Sergent and Dehaene, 2005; Dehaene et al., 2006, call such subliminal stimuli “preconscious”).

e. Non-categorical spontaneous report. The information might be available in such a way that the subject can spontaneously report it but only in a non-categorical way. For example, a subject who feels depressed might be unable to categorize his feeling as depression and yet be able to discriminate when this feeling changes or disappears.

f. Categorical spontaneous report. At a higher level, the subject is also able to categorize the information.

g. Memory. At a higher level still, the subject can store the information in memory.

h. Predicting and planning. Finally, the subject can use it to make predictions, to plan, etc. The typical supraliminal perception of “medium sized dry goods,” for example, allows us to do all those things.

The degree of availability to which A-consciousness corresponds is the degree of availability that allows rational control. In Block’s official definition, “The ‘rational’ is [just] meant to rule out the kind of automatic control that obtains in blindsight” (Block, 2004), so rational control and A-consciousness would probably start at (e). For reasons that will appear later20, I think it is better to understand “rational” in a more substantial sense, and to consider that A-consciousness, and the kind of rational control that characterizes it, start at (f). It will be useful to give a name to the kind of rational control and A-consciousness involved in normal supraliminal perception (h): we will say that subjects are robustly A-conscious of its contents and can use the information it provides for highly rational control.

5.1.2 Phenomenal consciousness (P-consciousness)

Whatever the precise degree of availability it requires, A-consciousness differs conceptually from phenomenal consciousness (P-consciousness). My phenomenally conscious states are those states there is something it is like to be in (Nagel, 1974), those states that have a subjective or qualitative character. They are what we call subjective experiences. While access-consciousness is tied to rationality, phenomenality is tied to affectivity21. It should be noted, however, that even if affects and emotions are paradigmatic phenomenal states, perceptions and episodes of inner speech are also phenomenally conscious. Some have even argued that abstract thoughts could be conscious in this sense as well (Siewert, 1998; Pitt, 2004). As with A-consciousness, we can attribute P-consciousness both to states and to their contents. Thus, if a subject has a subjective experience X of R, we can say that he is P-conscious of R or that X is P-conscious.

Phenomenal consciousness (P-consciousness) We say that a subject’s mental state is P-conscious if it is a subjective experience. We say that subject S is P-conscious of R if he has a subjective experience of R.

It has often been noted that, because of their subjectivity, P-conscious states seem to involve a form of implicit or marginal reflexivity (Kriegel, 2004). What it’s like to have an experience is always what it’s like for me to have an experience because, to put it simply, I experience my subjective experiences. In what follows, it will be useful to remember this suggestion that we are always P-conscious of our P-conscious states, if only in an implicit and marginal manner.

5.1.3 The (un-)consciousness of M-unconscious states

We can now come back to the fundamental problem of the M-unconscious. In what sense should “unconscious” be taken in (3)? In what sense should a subject be unconscious of his M-unconscious states? If a subject has an M-unconscious state M, he should be unable to report M. More broadly, he should be unable to use the information that he is in M to make rational decisions. The subject should accordingly be A-unconscious of M. We can thus rephrase (3) as:

3’. S is A-unconscious of M (The M-unconscious is unconscious)

If we are to defuse the second conceptual objection, we must show that there is a sense of consciousness, say consciousness*, such that

i. consciousness* is a form of consciousness in an intuitive sense.

ii. if S is conscious* of M, M is under his intentional control.

iii. S can be conscious* of M without being A-conscious of M. S can be conscious* of M, that is, without M being under his rational control.

(iii) seems to stand in tension with (ii) and (i). I will argue that this tension is only apparent and that we have independent reasons to believe that P-consciousness can satisfy (i–iii). I will indeed show that there is an important distinction between two forms of intentional control that mirrors the distinction between A-consciousness and P-consciousness:

• There is a form of control, impulsive control, that is intentional without being rational.

• This intentional form of control is associated, as the principle of conscious agency requires, with a form of consciousness, namely P-consciousness.

• A subject can be P-conscious of something he is not A-conscious of.

In the picture that will emerge, M-unconscious states are typically affective states that the subject experiences viscerally even though he is not A-conscious of them. He can control them impulsively but not rationally, and repression is thus an impulsive process.
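Once “conscious” is disambiguated in this way, the reconstruction of the revenge problem no longer yields a contradiction. The sketch below restates the argument in illustrative notation introduced here, not the author’s own: Rep_int stands for intentional repression, Con_P and Con_A for P- and A-consciousness, and Ctrl_imp and Ctrl_rat for impulsive and rational control. It assumes, as this section claims, that the principle of conscious agency links impulsive control to P-consciousness rather than to A-consciousness.

```latex
\begin{align*}
&(1)\quad \mathrm{Rep}_{\mathrm{int}}(S, M) && \text{the repression of } M \text{ is intentional}\\
&(2'a)\quad \mathrm{Rep}_{\mathrm{int}}(S, M) \rightarrow \mathrm{Ctrl}_{\mathrm{imp}}(S, M) && \text{repression is an impulsive process}\\
&(2'b)\quad \mathrm{Ctrl}_{\mathrm{imp}}(S, M) \rightarrow \mathrm{Con}_{P}(S, M) && \text{impulsive control requires P-consciousness}\\
&(3')\quad \neg\,\mathrm{Con}_{A}(S, M) && S \text{ is A-unconscious of } M\text{, so } \neg\,\mathrm{Ctrl}_{\mathrm{rat}}(S, M)\\
&(\mathrm{iii})\quad \mathrm{Con}_{P}(S, M) \not\Rightarrow \mathrm{Con}_{A}(S, M) && \text{P-consciousness does not entail A-consciousness}\\
&\quad\;\; \mathrm{Con}_{P}(S, M) \wedge \neg\,\mathrm{Con}_{A}(S, M) && \text{from (1), (2'a), (2'b), (3'); consistent given (iii)}
\end{align*}
```

The conclusion is no longer a contradiction but exactly the profile attributed to M-unconscious states: felt, and impulsively controllable, yet not available for rational control.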