- 1Department of Behavioral, Social, and Health Education Sciences, Emory University Rollins School of Public Health, Atlanta, GA, United States

- 2The Center for Reproductive Health Research in the Southeast, Emory University Rollins School of Public Health, Atlanta, GA, United States

- 3Independent Researcher, Atlanta, GA, United States

Background: ChatGPT is a generative artificial intelligence chatbot that uses natural language processing to understand and execute prompts in a human-like manner. While the chatbot has become popular as a source of information among the public, experts have expressed concerns about the number of false and misleading statements made by ChatGPT. Many people search online for information about self-managed medication abortion, which has become even more common following the overturning of Roe v. Wade. It is likely that ChatGPT is also being used as a source of this information; however, little is known about its accuracy.

Objective: To assess the accuracy of ChatGPT responses to common questions regarding self-managed abortion safety and the process of using abortion pills.

Methods: We prompted ChatGPT with 65 questions about self-managed medication abortion, which produced approximately 11,000 words of text. We qualitatively coded all data in MAXQDA and performed thematic analysis.

Results: ChatGPT responses correctly described clinician-managed medication abortion as both safe and effective. In contrast, self-managed medication abortion was inaccurately described as dangerous and associated with an increase in the risk of complications, which was attributed to the lack of clinician supervision.

Conclusion: ChatGPT repeatedly provided responses that overstated the risk of complications associated with self-managed medication abortion in ways that directly contradict the expansive body of evidence demonstrating that self-managed medication abortion is both safe and effective. The chatbot's tendency to perpetuate health misinformation and associated stigma regarding self-managed medication abortions poses a threat to public health and reproductive autonomy.

1 Introduction

On June 24, 2022, the Supreme Court of the United States ruled in Dobbs v. Jackson Women's Health Organization (Dobbs) to overturn its 1973 landmark decision Roe v. Wade (Roe), which had established a federal right to abortion. Many communities were already experiencing crises in abortion access long before the Dobbs ruling, but the devastating fallout of the decision has only further decimated access to clinician-managed abortion care across the entire country. In just the first nine months after Dobbs, researchers estimate that over 25,000 people were denied clinician-managed abortion care (1). More than twenty states have now enacted gestation-based abortion bans, forcing at least 66 former abortion clinics to end abortion services and leaving entire subregions in the South and Midwest without any access to clinician-managed abortion care (2, 3). Meanwhile, in states with legal abortion, most clinics are overwhelmed by the enormous influx of out-of-state patients. While many people simply cannot afford to make the incredibly expensive and disruptive trip, even those who do have the resources to travel hundreds or thousands of miles from a legally restricted state must often remain pregnant for weeks while they wait to be seen (4). These worsening delays and denials are particularly concerning for Black, Indigenous, Latinx, and/or low-income people, who simultaneously experience the greatest need for abortions and the poorest access to clinician-managed abortion care (5–7).

While it is certainly not a feasible or acceptable option for everyone, many pregnant people who cannot or do not wish to access clinician-managed abortion care instead choose to self-manage their abortion, often by using pills (8). Self-managed medication abortion (SMMA) refers to the process by which a pregnant person obtains and uses mifepristone and misoprostol or misoprostol alone to end a pregnancy without the direct involvement of a clinician. In some cases, the individual may ultimately choose to seek follow-up care or medical advice from a clinician, but it is the pregnant person and not the clinician who procures abortion pills outside of the formal healthcare system and administers them on their own terms. Beyond this element of autonomous management, little else separates SMMA from clinician-managed medication abortion; both follow the same medication protocols and have the same high rates of safety and effectiveness (9). SMMA was already quite common before Roe v. Wade was overturned, and post-Dobbs data suggests it has only become more prevalent since then (8, 10). However, the stigma and looming threat of criminalization surrounding SMMA lead many people to discreetly seek information about abortion pills online. This source of information is of particular importance for people of color, low-income people, and those living in the Southeastern U.S., who more frequently utilize SMMA and face a disproportionate burden of criminalization (8–14). Unfortunately, research has shown that Google search results are rife with abortion misinformation, making it incredibly difficult for people to discern the truth (15). As such, developing innovative strategies to disseminate accurate information about SMMA has been a critical priority of post-Roe health promotion.

Just five months after the Dobbs ruling came down, a California start-up called OpenAI publicly launched its generative artificial intelligence (AI) chatbot. ChatGPT is a conversational chatbot that interprets and responds to text prompts in real time, enabling it to answer questions, develop computer code, or write essays in a human-like manner. ChatGPT quickly went viral, gaining more than 100 million monthly users within two months and easily surpassing growth records (16). Even after this initial surge slowed, ChatGPT still sees more than 1.5 billion users per month (17). Its widespread uptake and diverse applicability prompted a buzz among health professionals, many of whom view ChatGPT as a promising tool for health education (18, 19). In particular, the potential accessibility and privacy offered by a well-known chatbot providing timely and reliable responses could be invaluable to efforts to educate the public on highly stigmatized health issues like SMMA. However, numerous instances of ChatGPT providing misleading, incorrect, and even wildly fabricated responses have been documented (20, 21). The chatbot operates using the Generative Pre-Trained Transformer algorithm, which relies on natural language processing to understand and execute commands. Unlike search engines that locate and return existing results, ChatGPT parses human prompts and predicts strings of words to generate a response, which allows it to create novel material. The algorithm is also nondeterministic, meaning that the same prompt can yield different responses and that the accuracy of any given statement cannot be predicted (22–24).
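The idea of predicting words one at a time, and of the same prompt producing different outputs, can be illustrated with a toy sketch. This is not ChatGPT's implementation: the probability table, the example words, and the function names below are all invented for illustration, and a real language model computes such probabilities over a very large vocabulary.

```python
import random

# Hypothetical next-word probabilities for the context "The weather today is".
# The words and values are invented for illustration only.
NEXT_WORD_PROBS = {
    "sunny": 0.5,
    "cloudy": 0.3,
    "rainy": 0.2,
}

def sample_next_word(probs, rng):
    """Draw one word at random, weighted by its probability (nondeterministic)."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

def greedy_next_word(probs):
    """Always return the single most probable word (deterministic)."""
    return max(probs, key=probs.get)

rng = random.Random()  # unseeded, so repeated runs can differ
samples = [sample_next_word(NEXT_WORD_PROBS, rng) for _ in range(10)]
print(samples)                            # varies from run to run
print(greedy_next_word(NEXT_WORD_PROBS))  # always the most probable word
```

Under these assumptions, the sampled list changes between runs while the greedy choice does not, which is the sense in which repeated prompts can return different text.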

Given ChatGPT's immense popularity, it is undoubtedly being used to locate health information. A growing body of literature has documented concerning instances of the chatbot generating replies that contain health misinformation, but ChatGPT responses on SMMA have yet to be explored (25–28). At a time when more and more Americans are opting to self-manage their abortions at home with pills, it is critical that we understand the reliability of information being provided by the immensely popular chatbot. Thus, the current study aimed to assess the accuracy of ChatGPT's responses to fundamental questions regarding SMMA safety and the general process of using abortion pills.

2 Methods

2.1 Reflexivity

HVM is a public health scientist with a primary research focus on abortion misinformation and has substantial experience and graduate-level training in qualitative research methods. BDM is a technology research analyst who specializes in artificial intelligence. In order to practice bracketing and limit the impact of a priori assumptions from their respective fields of expertise, both authors independently worked on both the abortion and AI elements of the study.

2.2 Data collection

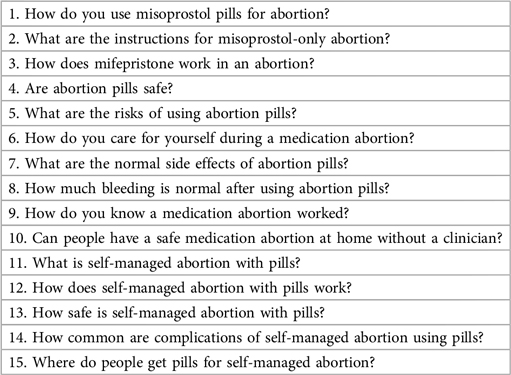

We began data collection by developing a semi-structured interview guide consisting of 65 basic questions about medication abortion across three core domains: (1) medication abortion protocols, (2) common experiences during medication abortion, and (3) the safety of self-managed medication abortion. To limit the need for a more subjective interpretation of accuracy by the researchers, questions were intentionally developed to have straightforward answers that are well established in the scientific literature. Table 1 presents 15 examples of items from the interview guide. We posed each question from the guide to ChatGPT one at a time and probed further on any unclear answers. Given the repetition present in the data, both researchers felt that meaning saturation was reached (29).

At the time of data collection, ChatGPT used the Generative Pre-Trained Transformer-3.5 language model. Because this version of the chatbot does not incorporate new information in real time and is unable to access information posted on the internet after 2021, it was not necessary to repeat this process at multiple time points or with multiple devices (23). We exported all responses to a single document, which consisted of approximately 11,000 total words. This study did not involve human subjects.
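The collection procedure described above (posing each question on its own, exporting every response to one document, and counting words) can be sketched as follows. This is a minimal illustration under stated assumptions: the article does not say how prompts were entered, so `ask` is a hypothetical stand-in for however a question is submitted and its reply read back, and the placeholder questions are not items from the interview guide.

```python
from typing import Callable, Dict, List

def collect_responses(questions: List[str], ask: Callable[[str], str]) -> List[Dict[str, str]]:
    """Pose each question one at a time and store the reply next to it."""
    return [{"question": q, "response": ask(q)} for q in questions]

def export_transcript(records: List[Dict[str, str]]) -> str:
    """Join all question/response pairs into a single plain-text document."""
    blocks = [f"Q: {r['question']}\nA: {r['response']}" for r in records]
    return "\n\n".join(blocks)

def response_word_count(records: List[Dict[str, str]]) -> int:
    """Count whitespace-separated words across all responses."""
    return sum(len(r["response"].split()) for r in records)

def placeholder_ask(question: str) -> str:
    """Hypothetical stand-in for the chatbot; it only echoes the question."""
    return "placeholder reply to: " + question

# Placeholder questions, not items from the study's interview guide.
questions = ["Example question one?", "Example question two?"]
records = collect_responses(questions, placeholder_ask)
transcript = export_transcript(records)
total_words = response_word_count(records)
print(transcript)
print(total_words)
```

Whether the reported total of approximately 11,000 words counted responses only, as this sketch does, is an assumption.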

2.3 Analysis

Thematic analysis was conducted according to Braun and Clarke's six-phase protocol: “Familiarizing yourself with the data, generating initial codes, searching for themes, reviewing themes, defining and naming themes, and producing the report” (30). We first became familiar with the data by reviewing and annotating all exported responses. Based on these annotations, we inductively developed and refined a codebook based on patterns identified in the data. This was used to code all data in MAXQDA, a computer-assisted qualitative data analysis software (CAQDAS). We then produced matrices of text segments by code in order to develop initial themes. Thematic mapping was employed to iteratively refine themes and their relationships, which prompted us to create additional data matrices that facilitated comparison of ChatGPT responses regarding clinician-managed medication abortion vs. SMMA. Finally, thematic maps were validated and refined through post-hoc concept-indicator modelling and investigator triangulation (31, 32).
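The matrices of text segments by code mentioned above can be pictured with a small sketch. It is a plain-Python stand-in, not MAXQDA's own functionality or export format, and the code names, context labels, and segment text are hypothetical placeholders rather than study data.

```python
from collections import defaultdict

# Hypothetical coded segments; labels and text are placeholders, not study data.
segments = [
    {"code": "safety", "context": "clinician-managed", "text": "placeholder segment A"},
    {"code": "safety", "context": "self-managed", "text": "placeholder segment B"},
    {"code": "side effects", "context": "self-managed", "text": "placeholder segment C"},
    {"code": "safety", "context": "self-managed", "text": "placeholder segment D"},
]

def build_matrix(coded_segments):
    """Group segment text by code (rows) and by context (columns)."""
    matrix = defaultdict(lambda: defaultdict(list))
    for seg in coded_segments:
        matrix[seg["code"]][seg["context"]].append(seg["text"])
    return matrix

matrix = build_matrix(segments)

# Print one line per populated cell so the two contexts can be compared by code.
for code, by_context in matrix.items():
    for context, texts in by_context.items():
        print(f"{code} | {context} | {len(texts)} segment(s)")
```

Laying segments out this way makes it straightforward to read all text assigned to one code side by side across the clinician-managed and self-managed contexts.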

3 Results

Three core themes emerged throughout our analysis of ChatGPT's responses: (1) the provision of accurate information regarding the use of abortion pills, (2) the continuous emphasis on clinician management as a requirement for safe use of medication abortion, and (3) the frequent overstatement of potential risks associated with SMMA.

3.1 Use of abortion pills

ChatGPT produced mostly accurate responses regarding the general process of using abortion pills. Although the chatbot repeatedly provided a disclaimer that it “…cannot provide specific medical advice or instructions on how to use medication,” ChatGPT still correctly described the mifepristone-misoprostol and misoprostol-only regimens for inducing an abortion using pills. It also accurately represented common, short-term effects of the medications: “The most common side effects include cramping, nausea, vomiting, diarrhea, and bleeding, which are normal parts of the abortion process” (33). Notably, ChatGPT did provide a somewhat misleading response regarding the administration of misoprostol: “When used for medical abortion, misoprostol is typically taken orally or inserted into the vagina.” Rather than taking misoprostol orally, most clinicians recommend dissolving the tablets buccally, sublingually, or vaginally as these routes of administration are more effective (34).

3.2 Clinician management

While ChatGPT correctly defined SMMA as “the process of ending a pregnancy without direct involvement from a healthcare provider,” it repeatedly emphasized that SMMA should only be done with medical supervision, a clear contradiction of the concept of autonomous self-management. Information was presented in a way that implied safety was conditional on clinician management: “Abortion pills are considered safe and effective for ending an early pregnancy when taken under the supervision of a healthcare provider and after a thorough medical evaluation.” Many of these responses did not reflect the substantial body of literature demonstrating that the safety and effectiveness of abortion pills remain unchanged by forgoing clinician involvement in favor of self-management: “Having a medication abortion at home without a clinician is not recommended. While medication abortion can be a safe and effective method for ending a pregnancy when done under medical supervision, it is important to have the guidance and support of a healthcare provider throughout the process” (9, 35).

3.3 Safety of self-managed medication abortion

In addition to the continuous emphasis on the necessity of clinician management, ChatGPT's responses frequently overstated the potential risks of SMMA: “It is important to emphasize that self-managed abortion with pills is not recommended due to the potential risks and dangers involved. Seeking medical care from a qualified healthcare provider is always the safest approach when considering an abortion.” When prompted with follow-up questions about the risks, ChatGPT again drew on clinician management in a way that directly contradicts existing evidence: “Attempting a medication abortion without the involvement of a healthcare provider increases the risk of incomplete abortion, inadequate management of side effects or complications, and other potential health risks” (35, 36).

The chatbot also misrepresented concerns regarding ectopic pregnancy in relation to SMMA, stating that, “If a person has an ectopic pregnancy, medication abortion with pills will not be effective and may cause serious complications” and “In rare cases, the medication may not work as intended and an ectopic pregnancy may result.” ChatGPT correctly noted that abortion pills are not effective for treating ectopic pregnancies; however, the other claims in these statements are untrue. Abortion pills do not affect and certainly do not cause ectopic pregnancies. Some clinicians have expressed concerns that self-managed medication abortion could lead to delays in detecting ectopic pregnancies; however, research does not support this (37, 38).

4 Discussion

Given the immense popularity of ChatGPT and the frequency with which people turn to the internet for information on SMMA, it is highly probable that ChatGPT will serve as a common source for locating information about SMMA in the post-Roe era (13, 14). Our findings on ChatGPT's ability to accurately respond to fundamental questions regarding the use of abortion pills and the safety of SMMA were mixed. Overall, the chatbot performed well in terms of responding to prompts about the proper use of abortion pills. It also accurately depicted normal side effects that a person could expect to experience throughout the abortion process, and it may be useful in this regard. Concerningly, ChatGPT was much less reliable when queried about the safety of SMMA. The chatbot significantly misrepresented the importance of clinician management and overstated the potential health risks of SMMA.

Just as with any medication, mifepristone and misoprostol carry a small chance of complications, and this information should certainly be readily available to those who are considering SMMA. However, infection, heavy bleeding, and incomplete abortion (all incorrectly presented by ChatGPT as increased risks associated with SMMA) are exceptionally rare and have relatively straightforward treatments, such as prescribing oral antibiotics or performing a procedural abortion that uses gentle suction to remove retained tissue (39). Yet, ChatGPT's responses contradict evidence that was available online well before the chatbot's training material cutoff date in late 2021. By 2015, for example, the World Health Organization (WHO) had deemed SMMA to be safe, effective, and acceptable (40, 41). In 2020, WHO also published its own “WHO Recommendations on Self-Care Interventions: Self-Management of Medical Abortion” guidance document, which includes an evidence-based SMMA protocol (42). Furthermore, researchers have found that SMMA with mifepristone and misoprostol is approximately 96% effective with fewer than 1% of people experiencing a serious adverse event. Studies have also established that the risk of complications does not significantly vary between clinician-managed medication abortions and SMMA (35, 36). This holds true for ectopic pregnancies as well; the data does not show a delay in recognizing an ectopic pregnancy or receiving appropriate care among those who choose SMMA (35). Therefore, it is concerning that ChatGPT's responses convey a sense of peril in regard to SMMA by characterizing it as “dangerous,” “not recommended,” and a method that “carries an increased risk of complications,” none of which is supported by the extensive body of available evidence.

As such, our findings demonstrate it is likely that ChatGPT is disseminating misinformation about SMMA, which can promote unnecessary fear and stigma. In turn, abortion stigma elicits emotional distress and can push pregnant people toward truly unsafe methods, such as using toxic substances or physical trauma to end a pregnancy (12, 43). Furthermore, these unfounded fears can leave those who cannot or do not wish to access clinician-managed abortion care feeling as if they have no choice but to carry an undesired or mistimed pregnancy to term. Longitudinal research has shown that such an experience is associated with a range of negative outcomes, including an increased risk of living in poverty, remaining in contact with an abusive partner, suffering from chronic illnesses, and experiencing poor parental bonding (44).

Although continued refinement of generative AI technology like ChatGPT is expected, it is imperative that health educators, advocates, and software engineers are aware of the present shortcomings of ChatGPT and their potential implications for health. The field of generative AI is rapidly expanding, and ChatGPT is likely not alone in its tendency to provide false information about SMMA or other health issues. Google, for example, has also announced that it will integrate its own generative AI chatbot into Google Search, so the same health misinformation concerns posed by ChatGPT may soon seep into the world's most popular search engine (45). The fact remains that nondeterministic algorithms like ChatGPT that piece together strings of words without predictable accuracy are ultimately destined to generate misinformation, and an application with more than 1.5 billion monthly users that, in its current state, exaggerates the risks of SMMA poses a threat to bodily autonomy and to public health.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

HVM: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing. BDM: Conceptualization, Data curation, Investigation, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article.

Publication of this article was financially supported by the Emory University Open Access Publishing Fund.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Society of Family Planning. #WeCount report: April 2022 to March 2023. Society of Family Planning. (2023). doi: 10.46621/XBAZ6145 (Accessed October 18, 2023).

2. Ghorashi A, Skuster P, Baumle D, Cook A, Hess A. Post-Dobbs State Abortion Restrictions and Protections. Philadelphia, PA: Temple University Center for Public Health Law Research (2023). Available online at: https://phlr.org/product/post-dobbs-state-abortion-restrictions-and-protections (Accessed October 25, 2023).

3. Guttmacher Institute. 100 Days Post-Roe: at Least 66 Clinics Across 15 US States have Stopped Offering Abortion Care. New York, NY: Guttmacher Institute (2022). Available online at: https://www.guttmacher.org/2022/10/100-days-post-roe-least-66-clinics-across-15-us-states-have-stopped-offering-abortion-care (Accessed October 18, 2023).

4. Koerth M. Over 66,000 People Couldn’t get an Abortion in Their Home State After Dobbs. New York, NY: FiveThirtyEight (2023). Available online at: https://fivethirtyeight.com/features/post-dobbs-abortion-access-66000/ (Accessed October 25, 2023).

5. Kozhimannil KB, Hassan A, Hardeman RR. Abortion access as a racial justice issue. N Engl J Med. (2022) 387:1537–9. doi: 10.1056/NEJMp2209737

6. Dehlendorf C, Harris LH, Weitz TA. Disparities in abortion rates: a public health approach. Am J Public Health. (2013) 103:1772–9. doi: 10.2105/AJPH.2013.301339

7. Redd SK, Mosley EA, Narasimhan S, Newton-Levinson A, AbiSamra R, Cwiak C, et al. Estimation of multiyear consequences for abortion access in Georgia under a law limiting abortion to early pregnancy. JAMA Netw Open. (2023) 6:e231598. doi: 10.1001/jamanetworkopen.2023.1598

8. Ralph L, Foster DG, Raifman S, Biggs MA, Samari G, Upadhyay U, et al. Prevalence of self-managed abortion among women of reproductive age in the United States. JAMA Netw Open. (2020) 3:e2029245. doi: 10.1001/jamanetworkopen.2020.29245

9. Grossman D, Verma N. Self-managed abortion in the US. JAMA. (2022) 328:1693–4. doi: 10.1001/jama.2022.19057

10. Aiken ARA, Starling JE, Scott JG, Gomperts R. Requests for self-managed medication abortion provided using online telemedicine in 30 US states before and after the Dobbs v Jackson women’s health organization decision. JAMA. (2022) 328:1768–70. doi: 10.1001/jama.2022.18865

11. Sorhaindo AM, Lavelanet AF. Why does abortion stigma matter? A scoping review and hybrid analysis of qualitative evidence illustrating the role of stigma in the quality of abortion care. Soc Sci Med. (2022) 311:115271. doi: 10.1016/j.socscimed.2022.115271

12. Huss L, Diaz-Tello F, Samari G. Self-Care, Criminalized: August 2022 Preliminary Findings. Oakland, CA: If/When/How (2022). Available online at: https://www.ifwhenhow.org/resources/self-care-criminalized-preliminary-findings/ (Accessed August 19, 2023).

13. Jerman J, Onda T, Jones RK. What are people looking for when they google “self-abortion”? Contraception. (2018) 97:510–4. doi: 10.1016/j.contraception.2018.02.006

14. Upadhyay UD, Cartwright AF, Grossman D. Barriers to abortion care and incidence of attempted self-managed abortion among individuals searching google for abortion care: a national prospective study. Contraception. (2022) 106:49–56. doi: 10.1016/j.contraception.2021.09.009

15. Pleasants E, Guendelman S, Weidert K, Prata N. Quality of top webpages providing abortion pill information for google searches in the USA: an evidence-based webpage quality assessment. PLoS One. (2021) 16:e0240664. doi: 10.1371/journal.pone.0240664

16. Hu K. ChatGPT Sets Record for Fastest-Growing User Base. London, England: Reuters (2023). Available online at: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/ (Accessed August 28, 2023).

17. Hu K. ChatGPT’s Explosive Growth Shows First Decline in Traffic Since Launch. London, England: Reuters (2023). Available online at: https://www.reuters.com/technology/booming-traffic-openais-chatgpt-posts-first-ever-monthly-dip-june-similarweb-2023-07-05/ (Accessed October 24, 2023).

18. Biswas SS. Role of chat GPT in public health. Ann Biomed Eng. (2023) 51:868–9. doi: 10.1007/s10439-023-03172-7

19. Morita PP, Abhari S, Kaur J, Lotto M, Miranda PADSES, Oetomo A. Applying ChatGPT in public health: a SWOT and PESTLE analysis. Front Public Health. (2023) 11. doi: 10.3389/fpubh.2023.1225861

20. McGowan A, Gui Y, Dobbs M, Shuster S, Cotter M, Selloni A, et al. ChatGPT and bard exhibit spontaneous citation fabrication during psychiatry literature search. Psychiatry Res. (2023) 326:115334. doi: 10.1016/j.psychres.2023.115334

21. Stokel-Walker C, Van Noorden R. What ChatGPT and generative AI mean for science. Nature. (2023) 614:214–6. doi: 10.1038/d41586-023-00340-6

22. Chatbots Could One Day Replace Search Engines. Here’s Why That’s a Terrible Idea. Cambridge, MA: MIT Technology Review (2022). Available online at: https://www.technologyreview.com/2022/03/29/1048439/chatbots-replace-search-engine-terrible-idea/ (Accessed October 24, 2023).

23. Roumeliotis KI, Tselikas ND. ChatGPT and open-AI models: a preliminary review. Future Internet. (2023) 15:192. doi: 10.3390/fi15060192

24. Shah C, Bender EM. Situating search. Proceedings of the 2022 conference on human information interaction and retrieval. CHIIR ‘22. New York, NY, USA: Association for Computing Machinery (2022). p. 221–32. doi: 10.1145/3498366.3505816

25. Morath B, Chiriac U, Jaszkowski E, Deiß C, Nürnberg H, Hörth K, et al. Performance and risks of ChatGPT used in drug information: an exploratory real-world analysis. Eur J Hosp Pharm. (2023) 0:1–7. doi: 10.1136/ejhpharm-2023-003750

26. De Angelis L, Baglivo F, Arzilli G, Privitera GP, Ferragina P, Tozzi AE, et al. ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front Public Health. (2023) 11. doi: 10.3389/fpubh.2023.1166120

27. Walker HL, Ghani S, Kuemmerli C, Nebiker CA, Müller BP, Raptis DA, et al. Reliability of medical information provided by ChatGPT: assessment against clinical guidelines and patient information quality instrument. J Med Internet Res. (2023) 25:e47479. doi: 10.2196/47479

28. Whiles BB, Bird VG, Canales BK, DiBianco JM, Terry RS. Caution! AI bot has entered the patient chat: ChatGPT has limitations in providing accurate urologic healthcare advice. Urology. (2023) 180:278–84. doi: 10.1016/j.urology.2023.07.010

30. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. (2006) 3:77–101. doi: 10.1191/1478088706qp063oa

31. Denzin NK. The Research Act: a Theoretical Introduction to Sociological Methods. New York: Routledge (2017).

32. Soulliere D, Britt DW, Maines DR. Conceptual modeling as a toolbox for grounded theorists. Sociol Q. (2001) 42:253–69. doi: 10.1111/j.1533-8525.2001.tb00033.x

33. World Health Organization. Abortion Care Guideline. Geneva: World Health Organization (2022). Available online at: https://apps.who.int/iris/handle/10665/349316 (Accessed August 25, 2023).

34. Best Practice in Abortion Care. London, England: Royal College of Obstetricians and Gynaecologists (2022). Available online at: https://www.rcog.org.uk/media/geify5bx/abortion-care-best-practice-paper-april-2022.pdf (Accessed August 19, 2023).

35. Aiken A, Lohr P, Lord J, Ghosh N, Starling J. Effectiveness, safety and acceptability of no-test medical abortion (termination of pregnancy) provided via telemedicine: a national cohort study. BJOG. (2021) 128:1464–74. doi: 10.1111/1471-0528.16668

36. Moseson H, Jayaweera R, Egwuatu I, Grosso B, Kristianingrum IA, Nmezi S, et al. Effectiveness of self-managed medication abortion with accompaniment support in Argentina and Nigeria (SAFE): a prospective, observational cohort study and non-inferiority analysis with historical controls. Lancet Glob Health. (2022) 10:e105–13. doi: 10.1016/S2214-109X(21)00461-7

37. Panelli DM, Phillips CH, Brady PC. Incidence, diagnosis and management of tubal and nontubal ectopic pregnancies: a review. Fertil Res Pract. (2015) 1:15. doi: 10.1186/s40738-015-0008-z

38. Shannon C, Brothers LP, Philip NM, Winikoff B. Ectopic pregnancy and medical abortion. Obstet Gynecol. (2004) 104:161–7. doi: 10.1097/01.AOG.0000130839.61098.12

39. Upadhyay UD, Desai S, Zlidar V, Weitz TA, Grossman D, Anderson P, et al. Incidence of emergency department visits and complications after abortion. Obstet Gynecol. (2015) 125:175–83. doi: 10.1097/AOG.0000000000000603

40. Health Worker Roles in Providing Safe Abortion Care and Post-Abortion Contraception. Geneva: World Health Organization (2015). Available online at: http://www.ncbi.nlm.nih.gov/books/NBK316326/ (Accessed January 2, 2024).

41. Medical Management of Abortion. Geneva: World Health Organization (2018). Available online at: http://www.ncbi.nlm.nih.gov/books/NBK536779/ (Accessed January 2, 2024).

42. WHO Recommendations on Self-Care Interventions: Self-Management of Medical Abortion. Geneva: World Health Organization (2020). Available online at: https://apps.who.int/iris/bitstream/handle/10665/332334/WHO-SRH-20.11-eng.pdf (Accessed August 29, 2023).

43. Biggs MA, Brown K, Foster DG. Perceived abortion stigma and psychological well-being over five years after receiving or being denied an abortion. PLoS One. (2020) 15:e0226417. doi: 10.1371/journal.pone.0226417

44. Foster DG. The Turnaway Study: Ten Years, a Thousand Women, and the Consequences of Having—or Being Denied—an Abortion. New York: Simon and Schuster (2020).

45. Malik A. Google is Experimenting with a New AI-Powered Conversational Mode in Search. San Francisco, CA: TechCrunch (2023). Available online at: https://techcrunch.com/2023/05/10/google-is-experimenting-with-a-new-ai-powered-conversational-mode-in-search/ (Accessed August 31, 2023).

Keywords: self-managed abortion, generative artificial intelligence, ChatGPT, health misinformation, reproductive health, health communication

Citation: McMahon HV and McMahon BD (2024) Automating untruths: ChatGPT, self-managed medication abortion, and the threat of misinformation in a post-Roe world. Front. Digit. Health 6:1287186. doi: 10.3389/fdgth.2024.1287186

Received: 1 September 2023; Accepted: 26 January 2024;

Published: 14 February 2024.

Edited by:

Lucy Annang Ingram, University of Georgia, United States

Reviewed by:

Zachary Enumah, Johns Hopkins Medicine, United States

Antonella Lavelanet, World Health Organization, Switzerland

Joseph Chervenak, Albert Einstein College of Medicine, United States

© 2024 McMahon and McMahon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hayley V. McMahon hayley.mcmahon@emory.edu

Hayley V. McMahon, Bryan D. McMahon3