- Institute of Cognitive Science, University of Osnabrueck, Osnabrueck, Germany

It is assumed that the cause of cognitive and behavioral capacities of living systems is to be found in the complex structure-function relationship of their brains; a property that is still difficult to decipher. Based on a neurodynamics approach to embodied cognition this paper introduces a method to guide the development of modular neural systems in the direction of enhanced cognitive abilities. It formally uses the synchronization of subnetworks to split the dynamics of coupled systems into synchronized and asynchronous components. The concept of a synchronization core is introduced to represent a whole family of parameterized neurodynamical systems living in a synchronization manifold. It is used to identify those coupled systems having a rich spectrum of dynamical properties. Special coupling structures, called generative, are identified which allow the synchronized dynamics to become more “complex” than the dynamics of the isolated parts. Furthermore, a criterion for coupling structures is given which, in addition to the synchronized dynamics, also allows for asynchronous dynamics by destabilizing the synchronization manifold. The large class of synchronization equivalent systems contains networks with very different coupling structures and weights, all sharing the same dynamical properties. To demonstrate the method a simple example is discussed in detail.

1. Introduction

That the complexity of neural systems, however defined, is the source of the cognitive and behavioral capacities of living systems is an almost unquestioned assumption underlying most discussions about the impressive abilities of brains and brain-like systems. Hidden in the term complexity is the question of the still enigmatic structure-function-relationship in these systems. This paper will use a theoretical approach to tackle this problem from the point of view of modular neurodynamics. It is largely motivated by evolutionary robotics techniques (Nolfi and Floreano, 2000; Harvey et al., 2005; Nolfi et al., 2016), which were applied to study simple neural network solutions for the control of animat behavior (Dean, 1998; Guillot and Meyer, 2001).

If one is using the term complexity in the context of brain-like systems there are at least two different aspects which have to be addressed. One concerns the connectivity of networks; and there are useful measures to quantify what one would term complexity of network connectivity (Sporns et al., 2000; Sporns, 2002). In general, these measures will gain their importance for larger networks.

The other aspect is concerned with the properties of the dynamics running on these neural networks. Complexity in this context will be related to what Ashby (1958) called the law of requisite variety. It states that the larger the variety of actions available to a control system, the larger the variety of perturbations it is able to compensate. This translates into saying that the larger the number of appropriate “states” available to a neural system, the more behavior-relevant actions it may generate to cope with vital or life-threatening external situations as they occur. To represent these “appropriate states” we identified the so-called dynamical forms of a neural system (Pasemann, 2017).

A crucial question to ask is that for the relationship between the complexity of the connectivity and the complexity of the dynamics which is realizable on a given network. In the context of brain sciences this has been discussed for instance in Sporns et al. (2000) and Sporns (2002, 2017).

For evolution or development of cognitive neural systems modularity is certainly a good design principle. But already Szentágothai and Arbib (1974), as well as Freeman (1975), formulated the modular architecture of the nervous system as a fundamental organizational principle, where certain brain areas are made up of smaller, repeating neural circuits, which are locally interacting functional units generating the overall performance of the system. Thus, it should be a promising strategy to develop larger neural networks with enriched cognitive faculties by coupling smaller networks in such a way that the resulting system has an extended capacity for sensorimotor control and enhanced cognitive solutions for challenging environmental situations. This is one goal of a modular neurodynamics approach to embodied cognition (Anderson, 2003; Ziemke, 2003).

Concerning “living” systems, neural dynamics is permanently driven by external (and metabolic) sensor signals which can serve, in a first approximation, as (slowly varying) control parameters. For a given set of such parameters the corresponding dynamical system may have a global attractor or a multitude of co-existing attractors forming a so-called attractor landscape. A small change of parameters may change the whole attractor landscape only marginally: attractors and their basin boundaries are only slightly deformed, they are morphing (Negrello and Pasemann, 2008). But at certain critical parameter values the number and/or types of attractors change abruptly; i.e., a bifurcation occurs. In Pasemann (2017) it was argued that what is of relevance for behavior is a whole class of parameterized dynamical systems, called a dynamical form.

Now, to change behavior (or a mental content) the system should switch from one dynamical form to another dynamical form. In a sensory-motor loop sensor signals can drive parameters across a bifurcation set into a different parameter domain, thus switching to a different dynamical form with a different number and/or different types of attractors. The co-existence of a multitude of attractors in a dynamical form relates to multi-stability, and is functional, for instance, for memory properties. The existence of a larger variety of dynamical forms in a neural structure will enable the system to discern more relevant external (and internal) situations. Moreover, in the spirit of the law of requisite variety, it is a reasonable hypothesis to assume that having more dynamical forms available after the coupling of neuromodules will provide an advantage for the development of cognitive abilities. The goal of coupling subsystems therefore is to enlarge the behavioral or cognitive capacities of neural control systems.

To have a guideline for our analysis we will rely on a set of reasonable assumptions concerning the properties of a network. First, we will allow neurons to have positive or negative self-connections. Second, the neuromodules which are to be coupled are assumed to be strongly connected in the graph theoretical sense, and the coupling of neuromodules is assumed to be recurrent, so that the resulting system is again strongly connected. Recurrent coupling will always introduce some additional cycles (in graph theory parlance) into the system and therefore will also influence the dynamics of the composed system.

Synchronization of neuron activities across separated areas of brains was often discussed as a fundamental mechanism underlying cognitive processes. In particular, synchronization was understood as a solution to the so-called binding problem (Von der Malsburg, 1995; Singer, 1996; Melloni et al., 2007; Maurer, 2016). But its contribution to life-sustaining behavioral performance is still not fully understood and is perhaps strongly overestimated (Santos et al., 2012).

Instead of addressing the many roles ascribed to synchronization, in this paper synchronization is considered as a formal tool to understand how synaptic coupling of neuromodules can lead to larger systems with a richer dynamical spectrum. The idea here is the following: Suppose that for the composed system already the lower dimensional synchronized dynamics is at least as rich—for instance in terms of the number of attractors—as that of the uncoupled neuromodules. If in addition to this synchronized dynamics there exists a multitude of asynchronous attractors outside the synchronization manifold, then the coupled system is said to have a richer dynamical spectrum than its isolated parts; i.e., the composed system will provide a larger reservoir for different cognitive processes. The goal here, then, is to find criteria for such a situation.

For that we introduce the concept of a synchronization core of a composed system. It relates the synchronized dynamics to that of a specific neural network of lower dimension, the dynamics of which may be known. This concept allows one to classify a whole family of synchronization equivalent neural networks all having different connectivities but carrying qualitatively the same [i.e., topologically conjugate (Strogatz, 1996)] synchronization dynamics; i.e., they have the same synchronization core. Concerning the structure-function-relationship in neural systems this approach helps to derive conditions for the dynamical “complexity” of coupled systems.

To enhance the dynamics of given larger neural systems one may think about their decomposition into synchronization equivalent submodules, thus identifying the modules together with their coupling structure. Module connectivities and/or couplings can then be optimized for a more complex neurodynamics.

The organization of the paper is as follows. In the next section a neuromodule is introduced as a parameterized family of discrete-time dynamical systems together with the structure of the underlying neural network. The corresponding concepts of attractor landscapes and dynamical forms are briefly recalled.

Section 3 then will present some basic definitions to characterize the synchronized and asynchronous dynamics of coupled neuromodules (compare also Pasemann, 1999; Pasemann and Wennekers, 2000). It introduces synchronization and obstruction weight matrices, synchronization cores, and discusses the stability of synchronized and asynchronous dynamics and the decomposition of larger networks into synchronizable submodules. The following section 4 then presents a simple example for demonstrating the arguments in the former sections. Finally, the paper concludes with a short discussion of results.

2. Neuromodules

Before discussing the role synchronization may play in helping to understand the interplay between connectivity and dynamics of coupled neural networks we will introduce some basic notation.

In the following Nn(θ, w) refers to a neural network with n neurons, given bias terms θ ∈ ℝn and (n × n) weight matrix w ∈ ℝn×n. The transfer function of neurons is chosen to be the sigmoid τ : = tanh.

Although a neuromodule is generally understood as a neural network which can be a part of a larger neural system, here, being interested mainly in the qualitative aspects of dynamics on networks with standard sigmoidal neurons, we will restrict our considerations to discrete-time neurodynamics and describe a neuromodule as a parameterized family of dynamical systems , where A ⊂ ℝn denotes the activation state space of the network, Q ⊂ ℝ(n+1)×n the parameter space, and the map f : Q × A → A the discrete-time dynamics defined by

Here, ai denotes the activation of neuron i, wij the synaptic weight from neuron j to unit i, and θi the sum of a fixed bias term and its stationary (slowly varying) external input Ii. The output oi of unit i is then given by oi = τ (ai) : = tanh(ai); and the output space, denoted by A*, corresponds to the open n-cube A* = (−1, 1)n ⊂ ℝn. Denoting a parameter set by ρ : = (θ, w) ∈ Q we also write fρ for the dynamics of the underlying neural network Nn(θ, w).
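The update rule described above can be sketched in a few lines of Python. The sketch assumes the standard additive form a_i(t+1) = θ_i + Σ_j w_ij tanh(a_j(t)), which matches the roles the text assigns to a_i, w_ij, and the bias terms; the function names `step` and `orbit` and the demo weights are hypothetical and not taken from the paper.

```python
import math

def step(theta, w, a):
    """One discrete-time update, assuming the additive form
    a_i(t+1) = theta_i + sum_j w[i][j] * tanh(a_j(t))."""
    outputs = [math.tanh(x) for x in a]  # o_j = tanh(a_j), always in (-1, 1)
    n = len(a)
    return [theta[i] + sum(w[i][j] * outputs[j] for j in range(n))
            for i in range(n)]

def orbit(theta, w, a0, steps):
    """Return the list of activation states a(0), a(1), ..., a(steps)."""
    a = list(a0)
    states = [a]
    for _ in range(steps):
        a = step(theta, w, a)
        states.append(a)
    return states

# Hypothetical 2-neuron demo values (illustrative only, not from the paper).
theta_demo = [0.0, 0.0]
w_demo = [[0.0, 0.5], [-0.5, 0.0]]
demo_orbit = orbit(theta_demo, w_demo, [0.1, -0.2], 50)
```

Here `w[i][j]` follows the convention of the text: it is the weight of the connection from neuron j to neuron i.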

2.1. Connectivity, Structure, and Configuration

To better understand the relation between dynamical and network properties we discern between the connectivity of a network Nn(θ, w), its structure, and its configuration.

The connectivity of a network Nn(θ, w) is best reflected by its adjacency matrix C(w) which is given by the (n × n)-matrix with zero elements on the main diagonal and entries Cij(w) defined by

i.e., the adjacency matrix ignores self-connections (loops) of neurons.

Furthermore, if we want to put emphasis on the fact that there exist inhibitory as well as excitatory synaptic connections in the network Nn(θ, w) we will refer to the structure matrix CS(w), also called the structure, of the network Nn(θ, w) given by

The structure CS(w) of a network Nn(θ, w) thus describes the polarity of inter-connections as well as that of self-connections. It is often represented by a signed directed graph. Keeping in mind the underlying neural network Nn(θ, w) of the neuromodule , we also write for its structure. The structure together with the corresponding family of parameterized dynamical systems is what we usually refer to as a neuromodule.
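As a minimal sketch of the two matrices, the following Python functions derive a connectivity and a structure from a given configuration w. The encoding is an assumption: the adjacency entry is taken to be 1 wherever an off-diagonal weight is non-zero, and the structure entry is taken to be the sign (+1, −1, or 0) of the weight, including self-connections. The function names are hypothetical.

```python
def adjacency(w):
    """Connectivity C(w): 1 where an off-diagonal weight is non-zero.
    Self-connections (the diagonal) are ignored, as stated in the text."""
    n = len(w)
    return [[1 if (i != j and w[i][j] != 0) else 0 for j in range(n)]
            for i in range(n)]

def sign(x):
    """Return +1, -1, or 0 according to the sign of x."""
    return (x > 0) - (x < 0)

def structure(w):
    """Structure CS(w): assumed encoding of polarity as sign(w_ij),
    covering inter-connections as well as self-connections."""
    return [[sign(x) for x in row] for row in w]

# Hypothetical configuration: inhibitory self-connection on neuron 0,
# one excitatory and one inhibitory inter-connection.
w_example = [[-0.5, 2.0], [-1.5, 0.0]]
C_example = adjacency(w_example)    # [[0, 1], [1, 0]]
CS_example = structure(w_example)   # [[-1, 1], [-1, 0]]
```

Many configurations map to the same structure, and many structures to the same connectivity, which is the many-to-one relation the following paragraphs rely on.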

An explicitly given weight matrix w of a neuromodule is called its configuration. Finally, the bias terms together with the synaptic weights wij of the weight matrix w ∈ ℝn×n represent the parameters ρ ∈ Q ⊂ ℝ(1+n)×n of the neuromodule .

Thus, given a certain connectivity C(w) of a network Nn(θ, w), there can be a manifold of different structures CS(w) consistent with this connectivity. And there will be a manifold of configurations wij for one and the same neural structure CS(w).

Given a parameter vector ρ there may exist not only one attractor, but many attractors of the same type or even of different types. The metaphor attractor landscape Lρ for fρ then represents the state space M marked by all attractors, their basins of attraction together with their basin boundaries. A dynamical form can then be understood as a bundle of such landscapes over a certain set of parameters for which the corresponding dynamical systems fρ are structurally stable; i.e., topologically conjugate (Pasemann, 2017).

Because a practicable and meaningful complexity measure for our purposes seems to be not yet at hand, we will use the term richness of the dynamical spectrum in a purely intuitive way to characterize the dynamical properties of a neuromodule. As an example: a neural network Nn(θ, w) with given weight matrix w, allowing only a global fixed point attractor for all bias terms θ is assumed to be dynamically less rich than a network for which, for instance, a period doubling route to chaos can be observed in a bifurcation diagram. On the other hand, a network allowing a parameter domain providing a dynamical form with k different attractors is termed to be dynamically richer than a network providing only l < k coexisting attractors, where k is the maximal number of attractors for all (A, fρ), ρ ∈ Q inherited by a given structure CS(w).

2.2. Coupled Neuromodules

In the following an n-dimensional neuromodule is assumed to be given by a strongly connected neural network Nn(θ, w); i.e., its weight matrix w is assumed to be irreducible.

Let and denote two neuromodules with (n × n)-weight matrices wA and wB, respectively. Their corresponding parameter sets are denoted by ρA = (θA, wA) ∈ Q and ρB = (θB, wB) ∈ Q′, and the neural activations of module and will be denoted by ai and bi, i = 1, ..., n, respectively.

A system composed of and corresponds to a neuromodule , with M : = (A × B), h : R × M → M, and (Q × Q′) ⊂ R; i.e., the new parameters space R includes the parameters of and and is enlarged by the weights of synaptic connections between neurons of the two modules and .

The connections from module to module are described by an (n × n)-coupling matrix wAB, connections from to by (n × n)-coupling matrix wBA. Thus, the (2n × 2n) weight matrix wM of the coupled system is of the form

The pair (wAB, wBA) of matrices comprising the coupling connections is simply called the coupling of modules and . It is called recurrent if wAB ≠ 0 and wBA ≠ 0. A recurrent coupling (wAB, wBA) is called symmetric, iff wAB = wBA. Recurrent couplings will lead again to strongly connected neural networks with irreducible weight matrix wM; i.e., to neuromodules.
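The block form of the coupled weight matrix and the claim about strong connectivity can be checked with a short Python sketch. It assumes the block ordering [[wA, wAB], [wBA, wB]], which follows from the convention that w_ij is the weight from neuron j to neuron i; the helper names and the demo weights are hypothetical.

```python
def coupled_weight_matrix(wA, wB, wAB, wBA):
    """Assemble the (2n x 2n) matrix, assuming the block layout
    [[wA, wAB], [wBA, wB]]."""
    top = [row_a + row_ab for row_a, row_ab in zip(wA, wAB)]
    bottom = [row_ba + row_b for row_ba, row_b in zip(wBA, wB)]
    return top + bottom

def reachable(w, start, forward=True):
    """Nodes reachable from `start` (forward) or able to reach it (backward).
    An edge j -> i exists when w[i][j] != 0."""
    n = len(w)
    seen = {start}
    stack = [start]
    while stack:
        u = stack.pop()
        for v in range(n):
            has_edge = (w[v][u] != 0) if forward else (w[u][v] != 0)
            if has_edge and v not in seen:
                seen.add(v)
                stack.append(v)
    return seen

def is_strongly_connected(w):
    """True if every node reaches, and is reached from, node 0;
    this is equivalent to irreducibility of w."""
    n = len(w)
    return (len(reachable(w, 0, True)) == n
            and len(reachable(w, 0, False)) == n)

# Hypothetical demo: two identical odd 2-cycles with a symmetric coupling.
w_mod = [[0.0, 1.0], [-1.0, 0.0]]
w_cpl = [[-1.0, 0.0], [0.0, 0.0]]
zero = [[0.0, 0.0], [0.0, 0.0]]
wM_coupled = coupled_weight_matrix(w_mod, w_mod, w_cpl, w_cpl)
wM_uncoupled = coupled_weight_matrix(w_mod, w_mod, zero, zero)
```

In this demo the recurrently coupled system is strongly connected, while the uncoupled block-diagonal matrix is reducible.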

3. Dynamics of Coupled Neuromodules

The dynamics of coupled neuromodules will depend on the strength of the coupling connections and on their type, i.e., being excitatory or inhibitory. It will also depend on the type (even or odd) and length of the cycles established by the coupling connections. Recall that a cycle is termed even (odd) if the number of inhibitory connections in the cycle is even (odd). To get some first results concerning the effect of different couplings we assume that the neuromodules and have the same dimension n. For the more general case where modules have different dimensions see e.g., Pasemann and Wennekers (2000).

In the following denotes a system of coupled n-modules and with weight matrix wM given by Equation (4). The activation state space M = A × B of the coupled system is 2n-dimensional, and its parameterized discrete-time dynamics will be denoted by

where ρ : = (ρA, ρB, wA, wB, wAB, wBA) denotes a set of parameters for the coupled system (M, hρ). The dynamics hρ is then given for i = 1, . . . , n in the form

Definition Given a coupled system . Suppose there exists a subset , such that implies

where (a(t; a0), b(t; b0)) denotes the orbit in M under hρ through the initial condition . Then this process is called a (complete) synchronization of modules and if κ = 1. If κ = −1 the dynamics is called (completely) anti-synchronous. Otherwise the dynamics is called asynchronous.

3.1. Synchronized Module Dynamics

Let denote the system of coupled modules and which can be completely synchronized. Then the synchronized dynamics is restricted to the synchronization manifold Ms which is an n-dimensional hyperspace Ms ≅ ℝn ⊂ M. One can immediately verify the following lemma by subtracting Equation (6) from Equation (5); i.e., by equating ai = bi, i = 1, . . . , n.

Lemma 3.1. Let the parameter sets ρA ∈ Q, ρB ∈ Q′ of the modules and satisfy

Then every orbit of hρ : M → M with initial condition a(0) = b(0) ∈ Ms is constrained to Ms for all times; i.e., Ms is an invariant manifold for hρ.

Equation (8) is called the synchronization condition for coupled neuromodules, and it shows that synchronization can be achieved for modules with different weight matrices wA and wB, as well as with different coupling matrices wAB and wBA, as long as (8) is satisfied.
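A minimal Python sketch of such a check is given below. It assumes that the coupled update has the additive form in which module A receives wA·tanh(a) + wAB·tanh(b) and module B receives wB·tanh(b) + wBA·tanh(a); equating a = b then yields θA = θB together with wA + wAB = wB + wBA, and this is the form of the condition tested here. The function names and all numerical values are hypothetical.

```python
def mat_add(x, y):
    """Entry-wise sum of two equally sized matrices."""
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]

def satisfies_sync_condition(thetaA, thetaB, wA, wB, wAB, wBA, tol=1e-12):
    """Check the assumed form of the synchronization condition:
    thetaA == thetaB and wA + wAB == wB + wBA (up to tol)."""
    biases_equal = all(abs(p - q) <= tol for p, q in zip(thetaA, thetaB))
    lhs = mat_add(wA, wAB)
    rhs = mat_add(wB, wBA)
    weights_match = all(abs(l - r) <= tol
                        for row_l, row_r in zip(lhs, rhs)
                        for l, r in zip(row_l, row_r))
    return biases_equal and weights_match

# Hypothetical demo: different modules and different couplings
# that nevertheless satisfy the condition.
theta = [0.3, 0.0]
wA_demo = [[0.0, 1.0], [-1.0, 0.0]]
wB_demo = [[-0.2, 1.0], [-1.0, 0.0]]
wAB_demo = [[-0.5, 0.0], [0.0, 0.0]]
wBA_demo = [[-0.3, 0.0], [0.0, 0.0]]
sync_ok = satisfies_sync_condition(theta, theta, wA_demo, wB_demo,
                                   wAB_demo, wBA_demo)
```

The demo illustrates the remark above: the two modules and the two coupling matrices differ, yet the sums wA + wAB and wB + wBA coincide.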

For a more detailed study of the synchronized dynamics it is appropriate to introduce new coordinates (ξ, η) for M which are parallel and orthogonal, respectively, to the synchronization manifold Ms:

An orbit on the synchronization manifold Ms then will be given by ηi = 0, i = 1, . . . , n. Furthermore, the hyperspace M⊥ ⊂ M defined by ξi = 0, i = 1, . . . , n, will be called the obstruction manifold.

In terms of the (ξ, η)-coordinates the general dynamics hρ : M → M of the coupled system then reads

where i = 1, . . . , n, and the functions G± are defined by

These functions have the following properties:

where τ′ denotes the derivative of the sigmoid τ = tanh. Recall that 0 < τ′(x) ≤ 1, x ∈ ℝ, and τ′(0) = 1 so that the linearization Dhρ of the general dynamics hρ at the origin 0 ∈ M is equal to the weight matrix wM of the coupled system; i.e.,

Defining the synchronization matrix w+ by

and setting

the synchronized dynamics is given, in terms of the ξ-coordinates, by the n equations

Thus, the synchronized dynamics corresponds to that of an n-module with weight matrix w+ depending on the bias terms θ : = θA = θB. It may have fixed point attractors as well as periodic, quasiperiodic, or chaotic attractors, all constrained to Ms. Although the persistence of the synchronized dynamics is guaranteed by the synchronization conditions (8), it is not at all clear that the synchronized dynamics (18) is asymptotically stable, in the sense that small perturbations will not desynchronize the system. Thus, an orbit in M may be an attractor for the synchronized dynamics but not for the global dynamics hρ of the coupled system (Ashwin et al., 1996).

Furthermore, if the synchronization conditions (8) are satisfied then the corresponding obstruction dynamics is given in terms of the η-coordinates by the equations

where w− denotes the obstruction matrix defined by

Observe that the obstruction dynamics does not depend on bias terms, as long as θA = θB. This means that the origin η0 ∈ M⊥ is always a fixed point for the η-dynamics (19). As long as η0 ∈ M⊥ is an asymptotically stable fixed point for the corresponding synchronized dynamics will be asymptotically stable. Recall that the obstruction matrix w− is identical with the linearization of the obstruction dynamics at the origin; i.e., .

Usually asymptotic stability of Ms is discussed in terms of the synchronization Liapunov exponents and the transversal Liapunov exponents , i = 1, . . . , n, for the synchronized dynamics on Ms, as for instance in Pasemann and Wennekers (2000). There it was shown that the synchronization manifold Ms is asymptotically stable if the largest transversal Liapunov exponent satisfies for all orbits in Ms. But since this is the case if all eigenvalues ϵi of the obstruction matrix w− satisfy |ϵi| < 1, i = 1, . . . , n, the asymptotic stability of the synchronization manifold Ms can be controlled by the obstruction matrix w− alone.

Thus, to reserve the full dynamical spectrum provided by an n-module with weight matrix w+ for a pure synchronized dynamics of a coupled system one just has to choose a coupling (wAB, wBA) such that the moduli of the eigenvalues of the corresponding obstruction matrix w− are all smaller than 1 (compare the example in section 4, Figure 12).

To get a reasonable estimate for the weights one can apply for instance a theorem like the one in Hammer and Tiňo (2003) to obtain

Theorem 3.2. Given an n-dimensional obstruction dynamics with respect to an obstruction matrix w−. If

then the origin η0 ∈ M⊥ is a global fixed point attractor for , and the corresponding synchronization dynamics is asymptotically stable.

Following the Liapunov exponent approach as in Pasemann and Wennekers (2000) one will trivially derive

Lemma 3.3. Let Nn(θ, w) be a synchronizable neuromodule with synchronization matrix w+ and obstruction matrix w−. Let ϵi, i = 1, . . . , n, denote the eigenvalues of the obstruction matrix w−. If

then the synchronization manifold Ms is asymptotically stable.
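The eigenvalue criterion stated in the text (all eigenvalues of w− with modulus smaller than 1) can be sketched for the 2 × 2 case as follows. The sketch assumes w+ = wA + wAB and w− = wA − wBA, which is what the assumed additive coupled update gives for the sum and difference directions; the restriction to 2 × 2 matrices, the function names, and the demo weights are choices made for illustration only.

```python
import cmath

def mat_add(x, y):
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]

def mat_sub(x, y):
    return [[p - q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]

def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return ((tr + disc) / 2, (tr - disc) / 2)

def obstruction_is_contracting(w_minus):
    """Criterion from the text: all eigenvalue moduli of w- below 1."""
    return all(abs(ev) < 1.0 for ev in eigenvalues_2x2(w_minus))

# Hypothetical demo: identical odd 2-cycles with a symmetric coupling.
w_demo = [[0.0, 0.8], [-0.8, 0.0]]
c_demo = [[-0.5, 0.0], [0.0, 0.0]]
w_plus_demo = mat_add(w_demo, c_demo)    # assumed w+ = wA + wAB
w_minus_demo = mat_sub(w_demo, c_demo)   # assumed w- = wA - wBA
stable_demo = obstruction_is_contracting(w_minus_demo)
```

For these demo values both w+ and w− have determinant 0.64 and complex eigenvalues, so all moduli equal 0.8 and the criterion is met; scaling the cycle weights up to 2.0 pushes the moduli to 2 and violates it.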

Correspondingly, to destabilize a synchronization manifold Ms, one has to choose a coupling (wAB, wBA) such that the eigenvalues of the obstruction matrix w− are non-zero with moduli large enough to make contributions to the positivity of the largest transversal exponent .

3.2. Synchronization Cores

Being interested again not only in the synchronized dynamics of a specific configuration of coupled neuromodules, which depends on a given set of parameters (θ, w) ∈ R, but in the full dynamical spectrum of synchronization available for coupled neuromodules we refer to the following

Definition Given a system of coupled modules and with coupling (wAB, wBA) satisfying (8). The structure CS(w+) of its synchronization matrix w+ is called the synchronization core of the coupled system .

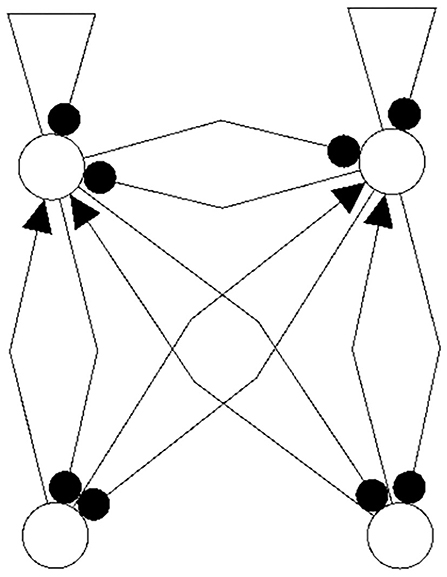

There can be many different structures and module configurations of and with many different coupling matrices wAB and wBA all leading to the same synchronization core. An example can be seen in section 4, Figure 14, where three different structures all have the same synchronization core w+ ∈ ℝn × n shown in Figure 5 (right). All these different modules and different couplings will lead to the same parameterized family of synchronized dynamics. Therefore, at the same time a synchronization core w+ stands for a whole class of synchronizable neural networks of dimension n, and we define

Definition Two coupled systems and with synchronization cores CS(w+) and CS(w′+) are called synchronization equivalent if they have the same synchronization core; i.e., there exists a coupling (wAB, wBA) such that

With respect to the structure-function relationship in coupled systems one then can ask for the effects different couplings (wAB, wBA) will have for the resulting dynamics on the modular neural network. To answer this question the following definition is introduced.

Definition Given a system of coupled modules and with coupling matrices wAB and wBA satisfying (8). Let CS(w+) denote its synchronization core. If there exists at least one pair of indices (i, j) such that but and , then the corresponding coupling (wAB, wBA) is called generative. Otherwise it is called conservative.

A synchronization core CS(w+) may be identical to the structures CS(wA) or CS(wB) of the isolated modules or , or it may be of different type. If it is generative it represents a family of parameterized dynamical systems different from those of the modules and . And it may allow for a richer dynamical spectrum than that of the isolated parts and .
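The distinction between generative and conservative couplings can be illustrated by a small Python sketch. It rests on two assumptions that should be read as such: that w+ = wA + wAB, and that a coupling counts as generative when the synchronization core has a non-zero entry at some position (i, j) where both module structures have a zero entry. The function names and demo weights are hypothetical.

```python
def sign(x):
    return (x > 0) - (x < 0)

def structure(w):
    """Assumed encoding of CS(w): entry-wise sign of the weights."""
    return [[sign(x) for x in row] for row in w]

def sync_core(wA, wAB):
    """Structure of the assumed synchronization matrix w+ = wA + wAB."""
    w_plus = [[p + q for p, q in zip(ra, rab)] for ra, rab in zip(wA, wAB)]
    return structure(w_plus)

def is_generative(wA, wB, wAB):
    """Assumed reading of the definition: some core entry is non-zero
    where both isolated module structures are zero."""
    core = sync_core(wA, wAB)
    sA, sB = structure(wA), structure(wB)
    n = len(core)
    return any(core[i][j] != 0 and sA[i][j] == 0 and sB[i][j] == 0
               for i in range(n) for j in range(n))

# Hypothetical demo with identical odd 2-cycles.
w_cycle = [[0.0, 1.0], [-1.0, 0.0]]
new_self_loop = [[-0.5, 0.0], [0.0, 0.0]]   # adds a self-connection
reinforcing = [[0.0, 0.5], [0.0, 0.0]]      # strengthens an existing link
gen_flag = is_generative(w_cycle, w_cycle, new_self_loop)
con_flag = is_generative(w_cycle, w_cycle, reinforcing)
```

In the demo, the first coupling creates a self-connection in the core that neither module has, while the second only changes the strength of a connection that already exists.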

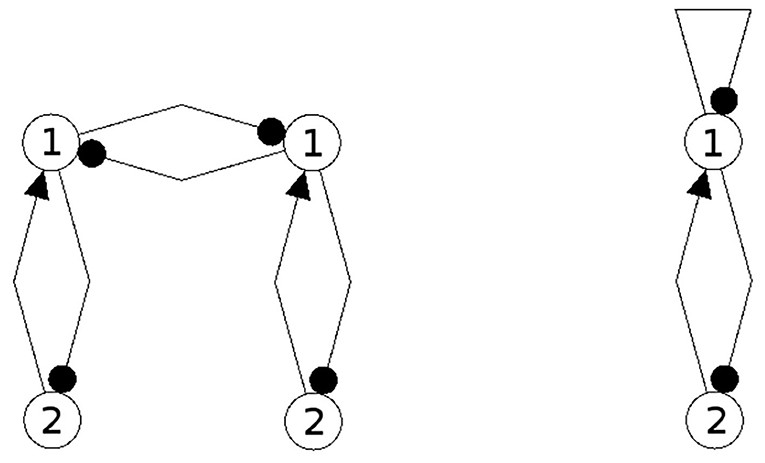

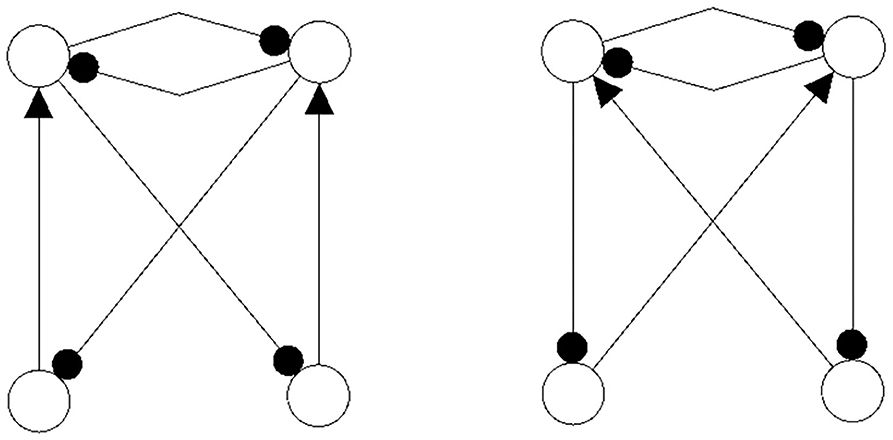

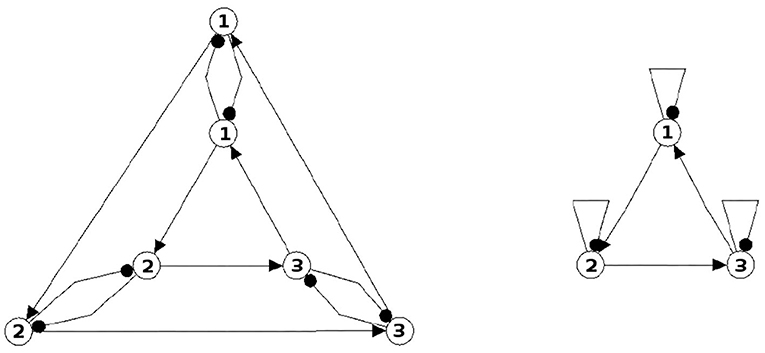

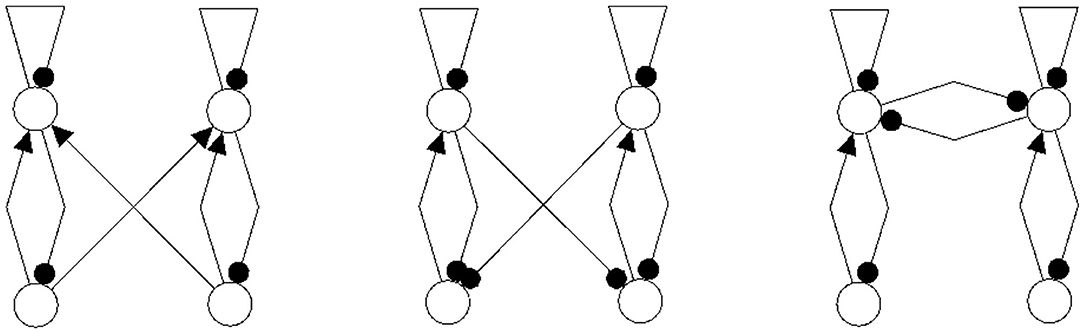

In Figures 1, 2 examples of a conservative and of a generative coupling of two odd 3-cycles are shown (left). For odd 3-cycles one observes, besides global fixed point attractors, only one dynamical form with co-existing period-2 and period-6 attractors (Pasemann, 1995). To illustrate the differences, the graphs are twisted (middle) revealing that a generative coupling introduces an additional 4-cycle into the system. That this coupling is generative can be seen by the synchronization core (right).

Figure 1. Conservative coupling of two odd 3-cycles (left), the same structure but twisted (middle), and the synchronization core CS(w+) (right).

Figure 2. Generative coupling of two odd 3-cycles (left), the same structure but twisted (middle), and the synchronization core CS(w+) (right).

Although in the first case the synchronization core is again an odd 3-cycle, in the generative case one observes a new odd 2-cycle in the synchronization core. The resulting 3-dimensional synchronized dynamics in the 6-dimensional network now displays many new periodic attractors up to quasi-periodicity, as simulation experiments show. This supports the hypothesis that generative couplings of neuromodules can enable a richer dynamical spectrum than that of the isolated modules.

3.3. Decomposition of Neural Networks

Having used synchronization so far as a tool to compose larger neural systems from smaller neuromodules to derive a richer dynamical spectrum one may now ask if it can also be used to determine how larger systems can be configured in such a way that their dynamical spectrum is enhanced. For that we try to decompose a large network into two sub-modules which can be completely synchronized. One can then optimize the system by choosing an appropriate coupling (wAB, wBA) which gives the desired properties of the corresponding synchronization and obstruction matrices w+ and w−. So first we have

Definition Given a neuromodule Nn(θ, w) of even dimension n = 2m with structure CS(w). It is called decomposable into synchronizable submodules, or s-decomposable, if there exist two recurrent sub-modules and with structures CS(wA) and CS(wB), and a coupling (wAB, wBA) which satisfies the synchronization condition (8) such that

Equation (22) is called an s-decomposition of Nn(θ, w). A neuromodule Nn(θ, w), n = 2m, is called basic if it is not s-decomposable.

Definition Given an s-decomposable neuromodule Nn(θ, w) with submodules and , coupling (wAB, wBA), and synchronization matrix w+. Its s-decomposition is called generative if the synchronization matrix w+ is generative. Otherwise it is called conservative. A neural network is called basic if it is not s-decomposable.

Under the assumed condition of complete synchronization, it is clear that an s-decomposable neuromodule Nn(θ, w) must be of even dimension. But s-decompositions may also be generalized to partially synchronized neuromodules (Pasemann and Wennekers, 2000).

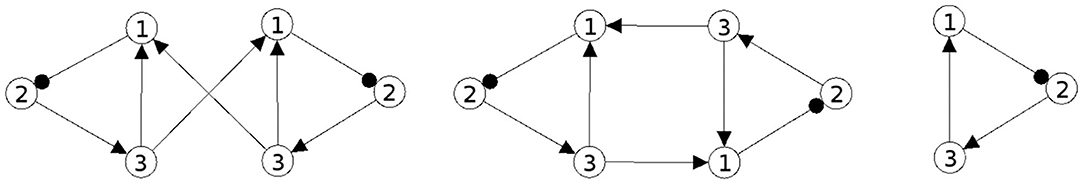

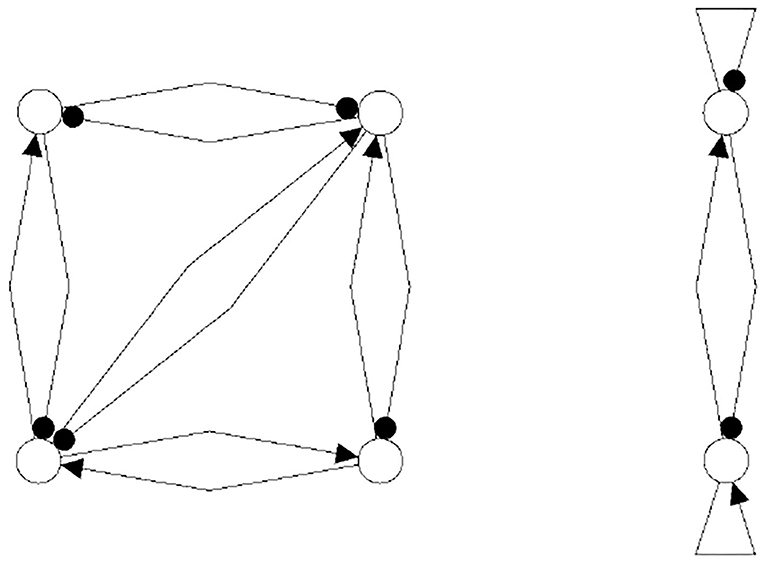

The following figures show examples of a basic module with six neurons (Figure 3), and an s-decomposable module with its synchronization core CS(w+), demonstrating that this s-decomposition is generative (Figure 4).

Figure 3. A six-neuron module which is not s-decomposable into two three-neuron modules. It is a basic module.

Figure 4. A six-neuron neuromodule (left) which is s-decomposable and the resulting synchronization core CS(w+) (right), showing that its s-decomposition is generative.

Analyzing the generative synchronization core CS(w+) of the s-decomposable module in Figure 4, one observes again that the synchronized dynamics has a richer dynamical spectrum than the isolated 3-modules. For instance, the core CS(w+) allows for quasiperiodic attractors, although the parts, that is, the basic (even or odd) 3-cycles, can display only r-periodic attractors with r = 1, 3 and r = 2, 6, respectively.

The identification of neural structures that are s-decomposable or basic may help to develop networks with a desired rich dynamical spectrum. Proving conjectures like the following can help to find larger classes of such structures:

• If the connectivity of a neural network corresponds to the Cayley graph of a finitely generated Abelian group, then it is s-decomposable.

• If the connectivity of a neural network does not contain a cycle of even length, then it is not s-decomposable.

4. A Simple Example

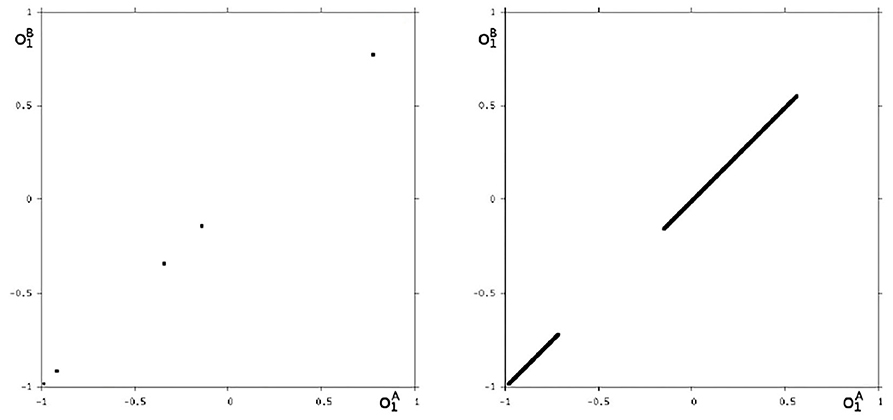

Some interesting features of coupled neural networks can be studied already for the special case of coupling identical modules. For our discussion this is not an essential restriction, but allows a simpler notation. To illustrate the foregoing theoretical arguments we therefore choose the following simple example. Coupled are two identical odd 2-cycles without self-couplings (compare Figure 5) leading to a 4-dimensional neural network . With respect to the synchronization condition (8) the notation then reduces to bias terms satisfying

and with w := wA = wB one has

Such odd 2-cycles come in only two dynamical forms: one with a global fixed point attractor, and one with a global period-4 attractor.

The recurrent coupling wcoup = wBA = wAB is chosen to have only one non-zero element

so that the synchronization and obstruction matrices read

This leads to the structure of the composed system depicted in Figure 5 (left) and to its synchronization core CS(w+) (right). One observes that the recurrent coupling wcoup is generative: it generates a negative self-connection of neuron 1 which was absent in the original 2-neuron networks. But structures like the synchronization core CS(w+) allow for configurations which display periodic, quasi-periodic and even chaotic attractors (Pasemann, 2002), so that already the synchronized dynamics of the coupled system has a much richer dynamical spectrum than the original odd 2-cycles.
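The invariance of the synchronization manifold for this kind of example can be checked numerically. The following Python sketch couples two identical odd 2-cycles through a single negative entry acting on neuron 1. All numerical values are hypothetical placeholders and are not the weights used in the paper; the additive form of the coupled update and the relation w+ = w + wcoup are likewise assumptions.

```python
import math

def coupled_step(theta, w, w_coup, a, b):
    """One update of two identical modules with symmetric coupling,
    assuming each module adds w_coup * tanh(other module's activations)."""
    ta = [math.tanh(x) for x in a]
    tb = [math.tanh(x) for x in b]
    n = len(a)
    a_next = [theta[i] + sum(w[i][j] * ta[j] + w_coup[i][j] * tb[j]
                             for j in range(n)) for i in range(n)]
    b_next = [theta[i] + sum(w[i][j] * tb[j] + w_coup[i][j] * ta[j]
                             for j in range(n)) for i in range(n)]
    return a_next, b_next

def core_step(theta, w_plus, xi):
    """One update of the n-dimensional module with weight matrix w+."""
    t = [math.tanh(x) for x in xi]
    n = len(xi)
    return [theta[i] + sum(w_plus[i][j] * t[j] for j in range(n))
            for i in range(n)]

# Hypothetical values (placeholders, not the paper's configuration).
theta = [0.5, 0.0]
w = [[0.0, 2.0], [-2.0, 0.0]]          # odd 2-cycle, no self-connections
w_coup = [[-1.5, 0.0], [0.0, 0.0]]     # single non-zero coupling element
w_plus = [[w[i][j] + w_coup[i][j] for j in range(2)] for i in range(2)]

# One step from a synchronized state agrees with the core dynamics.
a0 = [0.1, 0.2]
a1, b1 = coupled_step(theta, w, w_coup, a0, list(a0))
one_step_error = max(abs(x - y) for x, y in zip(a1, core_step(theta, w_plus, a0)))

# A synchronized initial condition stays synchronized.
a, b = list(a0), list(a0)
max_gap = 0.0
for _ in range(200):
    a, b = coupled_step(theta, w, w_coup, a, b)
    max_gap = max(max_gap, max(abs(x - y) for x, y in zip(a, b)))
```

The sketch only confirms that orbits starting on the manifold remain there; it says nothing about whether perturbed orbits return to it, which is the stability question taken up next.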

To go into deeper analysis we consider first the stability properties of the synchronized dynamics on Ms. Because here the eigenvalues of w+ and w− have identical moduli, from section 3.1 we know that the largest synchronization exponent here is equal to the largest transversal exponent:

Thus, for orbits will never be asymptotically stable on all of M; and in fact, synchronized chaos will always be hyperchaotic.

To analyze this situation we choose the weights of the synchronization matrix w+ large enough to allow for chaotic dynamics by setting

which is obtained by setting

The obstruction matrix then reads

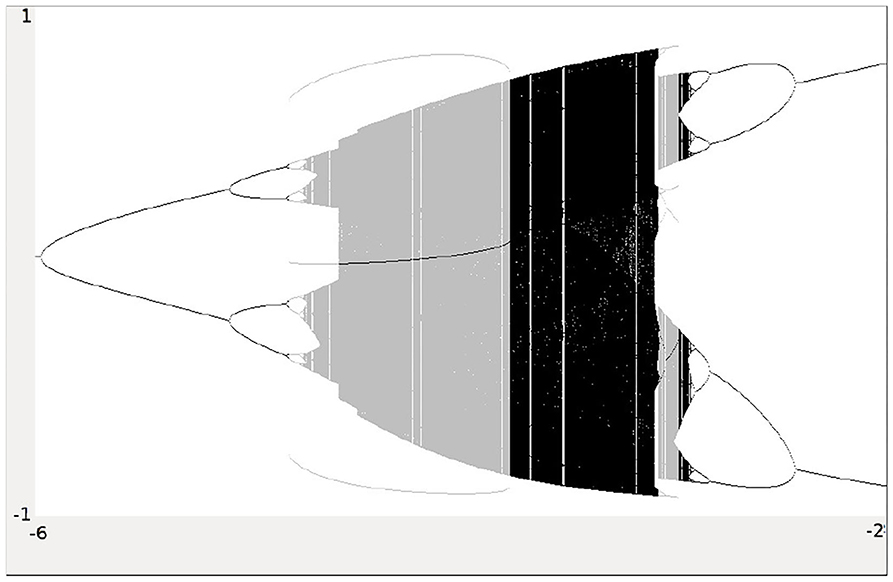

Analyzing the synchronous dynamics using the synchronization matrix (27) and fixing θ2 = 0 one realizes in the corresponding bifurcation diagram for θ1 (Figure 6) a chaotic domain around −4.75 < θ1 < −3.1.

Figure 6. Bifurcation diagram for the varied bias term θ1 of the synchronous dynamics according to w+ given by (27). The bias term θ2 = 0 is fixed. Shown is the mean output of the 2-neuron module corresponding to the synchronization core CS(w+) in Figure 5 (right). Coexisting attractors are marked by gray shaded domains.

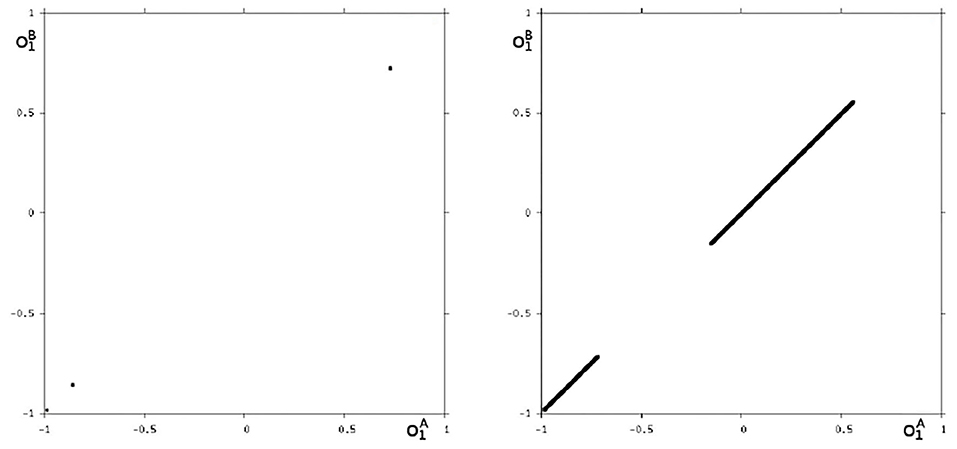

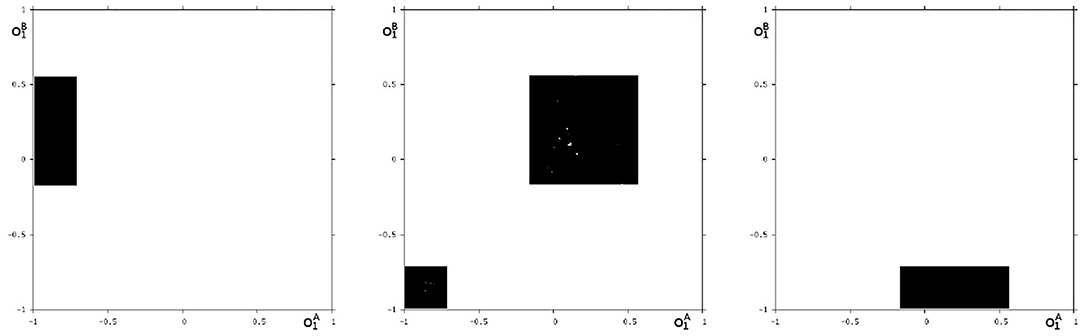

Because this configuration has quite interesting dynamical properties and may serve as an example for what was called a “rich dynamical spectrum,” we will identify the coexistent attractors for certain bias values θ1 from this interval. For that we use their projections onto the -output space. As suggested by the bifurcation diagram in Figure 6 we check the situation for θ1 = −4 and find five different attractors for this configuration: a period-3 and a chaotic attractor in the synchronization manifold Ms shown in Figure 7, and an asynchronous period-6 attractor together with two asynchronous chaotic attractors, as can be seen in Figure 8. They are derived by using random initial conditions in the 4-dimensional output space M*. One further observes that all asynchronous attractors are symmetric with respect to the synchronization manifold Ms, which is typical for coupled identical modules (Pasemann, 1999).
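The search for coexisting attractors with random initial conditions can be sketched as follows. This is a minimal illustration under stated assumptions: the module dynamics is taken to be the additive discrete-time form a(t+1) = θ + w σ(a(t)) plus the coupling term, the transfer function is assumed to be tanh, and the weights are hypothetical rather than those of Equation (27). The helper names `step`, `settle`, and `survey` are invented for this sketch.

```python
import numpy as np

def step(a_A, a_B, w, w_coup, theta):
    """One update of two identical, symmetrically coupled modules (assumed dynamics)."""
    s_A, s_B = np.tanh(a_A), np.tanh(a_B)   # assumed transfer function
    return theta + w @ s_A + w_coup @ s_B, theta + w @ s_B + w_coup @ s_A

def settle(a_A, a_B, w, w_coup, theta, transient=2000):
    """Iterate long enough to discard the transient."""
    for _ in range(transient):
        a_A, a_B = step(a_A, a_B, w, w_coup, theta)
    return a_A, a_B

def survey(w, w_coup, theta, trials=20, seed=0, tol=1e-9):
    """Label the final state of each random initial condition by its distance to Ms."""
    rng = np.random.default_rng(seed)
    labels = []
    for _ in range(trials):
        a_A, a_B = rng.uniform(-1.0, 1.0, size=(2, w.shape[0]))
        a_A, a_B = settle(a_A, a_B, w, w_coup, theta)
        on_manifold = np.max(np.abs(a_A - a_B)) < tol
        labels.append("synchronous" if on_manifold else "asynchronous")
    return labels

# Hypothetical parameters; only theta follows the bias values quoted in the text.
w = np.array([[0.0, 2.0], [-2.0, 0.0]])
w_coup = np.array([[-6.0, 0.0], [0.0, 0.0]])
theta = np.array([-4.0, 0.0])

labels = survey(w, w_coup, theta)
print({name: labels.count(name) for name in set(labels)})
```

Separating the individual attractors within each group would additionally require comparing the settled orbits themselves, for example by their period or by their projections, which this sketch leaves out.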

Figure 7. The two synchronized attractors in Ms for θ1 = −4: A period-3 and a chaotic attractor. Shown are their projections to the -output space.

Figure 8. The three asynchronous attractors in M for θ1 = −4: a period-6, and two chaotic attractors. Shown are their projections to the -output space.

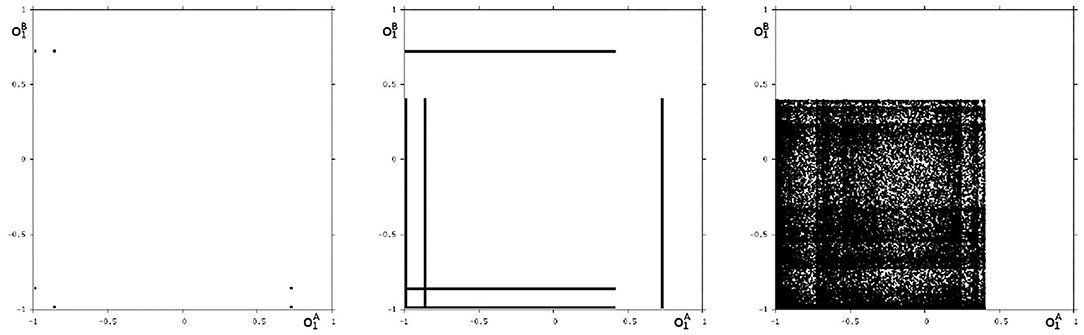

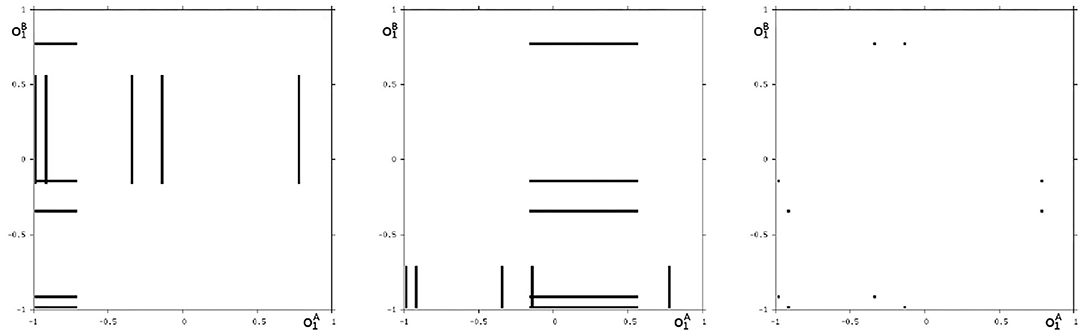

We may also look at the situation for θ1 = −3.0, where coexisting attractors can be expected according to the shaded structure in Figure 6. Using again randomly chosen initial conditions in M*, we are able to identify at least eight different coexisting attractors: In the synchronization manifold Ms a period-5 attractor coexists with a chaotic attractor (Figure 9). Furthermore, there are six asynchronous attractors; one of them is a period-10 attractor, and the other five are chaotic. The projections to the -output space of all these attractors are displayed in Figures 10, 11.

Figure 9. The two synchronized attractors for θ1 = −3: a period-5 and a chaotic attractor. Shown are their projections to the -output space.

Figure 10. Three asynchronous chaotic attractors for θ1 = −3. Shown are their projections to the -output space.

Figure 11. The additional two asynchronous chaotic attractors for θ1 = −3, and an asynchronous period-10 attractor for θ1 = −3. Shown are their projections to the -output space.

4.1. Globally Stable Synchronization Manifolds

If one wants to keep the full dynamical spectrum of the synchronization core CS(w+) with w+ as in Equation (27), but wants the dynamics to remain strictly synchronous for all values of θ, one has to choose a configuration such that the synchronization manifold Ms is globally stable. This is guaranteed if the obstruction matrix w−, according to section 3.1, has eigenvalues all satisfying |λi| < 1, i = 1, . . . , n. For that, instead of (29), one may choose an obstruction matrix

which has eigenvalues −0.984 and −0.366, respectively. Then one may define a convenient weight matrix w = wA = wB for the modules and calculate the corresponding coupling wcoup = wAB = wBA as in the following

The corresponding configuration is shown in Figure 12 having the same synchronization core CS(w+) as the structure discussed above (Figure 5).

Figure 12. The example of a conservative coupling of 2n-modules allowing the same dynamical spectrum corresponding to the synchronization matrix w+ (27) in a globally stable synchronization manifold Ms.
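The procedure of this subsection can be sketched in a few lines. The sketch assumes the relations w+ = w + wcoup and w− = w − wcoup, so that w and wcoup follow from a chosen pair (w+, w−) by taking half the sum and half the difference. Both matrices below are hypothetical stand-ins, not the matrices of the paper, and the function names are invented for this illustration.

```python
import numpy as np

def spectral_radius(m):
    """Largest eigenvalue modulus of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(m))))

def split_weights(w_plus, w_minus):
    """Recover module weights and coupling, assuming w+ = w + w_coup and w- = w - w_coup."""
    w = 0.5 * (w_plus + w_minus)
    w_coup = 0.5 * (w_plus - w_minus)
    return w, w_coup

# Hypothetical synchronization matrix and a contracting obstruction matrix.
w_plus = np.array([[-6.0, 2.0], [-2.0, 0.0]])
w_minus = np.array([[-0.5, 0.6], [-0.6, 0.0]])

# Criterion quoted in the text: all eigenvalues of w- have modulus below 1.
print("spectral radius of w-:", spectral_radius(w_minus))

w, w_coup = split_weights(w_plus, w_minus)
print("module weights w:\n", w)
print("coupling w_coup:\n", w_coup)
```

In this hypothetical case the module matrix w already carries the self-connection of neuron 1, so the synchronization core has the same structure as the parts.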

4.2. Discussion of the Example; Generative Couplings

Although the example of this section is very simple, the dynamics for the two specific parameter values characterized in the figures above clearly demonstrate what we termed a “rich dynamical spectrum.” It also makes clear that this richness is a direct consequence of the generative coupling of the two odd 2-cycles. Furthermore, the strong coupling leads to a large modulus of the eigenvalues of the synchronization matrix w+ and also of the obstruction matrix w− given in (29). Therefore, the largest transversal Lyapunov exponent is also positive, and the synchronization manifold Ms is destabilized; but, recall, orbits with initial conditions in Ms will stay in Ms. With initial conditions outside of Ms one observes additional asynchronous attractors in the state space M of the coupled system, even though the synchronization condition (8) is satisfied. Thus, it depends essentially on the initial conditions whether the coupled system will follow a synchronous or an asynchronous orbit.
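The role of the two matrices in this argument can be made explicit with a short derivation. It is a sketch under assumptions: the module dynamics is taken to be the standard additive discrete-time form with transfer function σ, the modules are identical, and the coupling is symmetric; the symbols a_A, a_B, and δ are introduced here for illustration only.

```latex
% Assumed dynamics of two identical, symmetrically coupled modules
a_A(t+1) = \theta + w\,\sigma(a_A(t)) + w^{\mathrm{coup}}\,\sigma(a_B(t)), \qquad
a_B(t+1) = \theta + w\,\sigma(a_B(t)) + w^{\mathrm{coup}}\,\sigma(a_A(t)).

% Synchronized dynamics on M_s, where a_A = a_B = a
a(t+1) = \theta + w^{+}\,\sigma(a(t)), \qquad w^{+} = w + w^{\mathrm{coup}}.

% Deviation from M_s, \delta = a_A - a_B, linearized around a synchronized orbit
\delta(t+1) = w^{-}\,\bigl[\sigma(a_A(t)) - \sigma(a_B(t))\bigr]
            \approx w^{-}\,D\sigma(a(t))\,\delta(t), \qquad w^{-} = w - w^{\mathrm{coup}}.
```

Under these assumptions δ(0) = 0 implies δ(t) = 0 for all t, which is the invariance of Ms, while the growth or decay of small deviations is governed by products of the matrices w− Dσ(a(t)) along the synchronized orbit.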

Further analysis shows that smaller negative coupling values will drive the synchronized dynamics of the coupled system into a domain with quasi-periodic attractors. But because the modulus of the largest eigenvalue of w− still remains large enough to destabilize the synchronization manifold Ms, there will still exist some asynchronous attractors in M. In fact, besides a synchronized quasi-periodic attractor in Ms there is a multitude of asynchronous quasi-periodic attractors in M for certain values of the bias term θ1. Even if one changes the strength of the connections w12 = −w21, the synchronization manifold Ms will stay unstable, and asynchronous attractors will continue to exist until the modulus of the largest eigenvalue of w− is smaller than 1.0. This demonstrates that a generative coupling guarantees the coexistence of synchronous and asynchronous attractors over a large θ-parameter domain.

It should be mentioned here that one may even couple non-recurrent networks, like the feedforward networks in Figure 13, to obtain the same synchronization core CS(w+) as in our example (Figure 5, right). So synchronization cores are well defined not only for coupled neuromodules but, more generally, also for coupled non-recurrent networks. To observe the same synchronized dynamics of such systems it is only necessary that they have the same synchronization matrix w+ and obstruction matrix w−. This indicates that in general the class of synchronization equivalent networks comprises a large number of structures that are all able to carry exactly the same types of synchronized dynamics. It also indicates that generative couplings of “simple” networks can lead to networks with a much richer dynamical spectrum than that observed for the original subsystems.

4.3. Discussion of the Example; Conservative Couplings

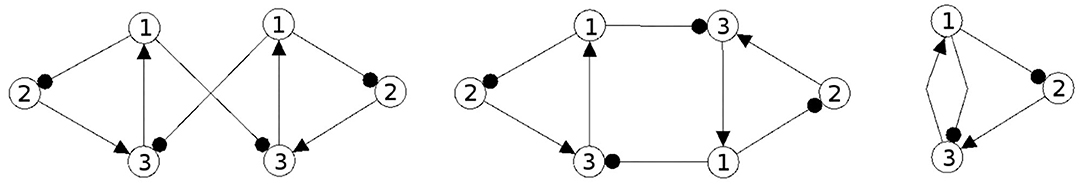

What, then, is the effect of conservative couplings? Because the result of these couplings is a synchronization core CS(w+) which is the same as the structure of the parts, it will not generate a synchronized dynamics with a richer dynamical spectrum than that of the parts. Examples of such non-generative couplings, all having the same synchronization core CS(w+) as our example (Figure 5), are shown in Figure 14. One can therefore infer that all the corresponding different network configurations will have a synchronized dynamics which is identical to the synchronized dynamics discussed in the example above. But here the dynamical spectrum is identical with that of the coupled 2-neuron networks (Pasemann, 2002).

Figure 14. Examples of network structures all having the same synchronization core CS(w+) as our example shown in Figure 5 right. They all have the same parameterized family of 2-dimensional synchronized dynamics.
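The distinction between generative and conservative couplings can be illustrated by comparing signed connection structures. The sketch below is a simplification under assumptions: it takes w+ = w + wcoup, represents a structure by the sign pattern of a weight matrix, and labels every coupling that changes this pattern as generative. The function names and all weights are hypothetical.

```python
import numpy as np

def structure(m, eps=1e-12):
    """Signed connection structure: +1, -1, or 0 for each entry."""
    return np.sign(np.where(np.abs(m) < eps, 0.0, m)).astype(int)

def coupling_type(w, w_coup):
    """Compare the structure of the parts with that of the synchronization core."""
    same = np.array_equal(structure(w), structure(w + w_coup))
    return "conservative" if same else "generative"

w_coup = np.array([[-5.0, 0.0], [0.0, 0.0]])

# Odd 2-cycle without self-connection: the coupling adds a new connection.
w_cycle = np.array([[0.0, 2.0], [-2.0, 0.0]])
print(coupling_type(w_cycle, w_coup))

# Module that already has the negative self-connection: structure is unchanged.
w_self = np.array([[-1.0, 2.0], [-2.0, 0.0]])
print(coupling_type(w_self, w_coup))
```

Both hypothetical pairs share the same signed structure of w+, so in this simplified picture they would belong to the same synchronization core while differing in the structure of their parts.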

4.4. Discussion of the Example; Relation to K-Sets

One may further remark that, based on the neurophysiological findings, Freeman (1975) identified 10 basic neurodynamical modules, the Katchalsky K-sets, that help to explain how neural populations can create the complex dynamics essential for cognition. One of the simplest modules, called the Freeman KII-set, consists of two excitatory and two inhibitory populations. The structure CS(KII) of a KII-set is displayed in Figure 15 (left) together with its synchronization core CS(w′+) (right). Although this synchronization core has an additional positive self-connection, the synchronized dynamics of the KII-set is not qualitatively different from that of the example discussed above, i.e., it has the same dynamical spectrum. Having this in mind, it is not a surprise that a corresponding structure for artificial neurons served as a versatile module for dynamic memory designs, for robust classification, for pattern recognition, and for navigation tasks (Kozma, 2008; Kozma and Freeman, 2009).

Figure 15. The structure of a Freeman KII-set CS(KII) (left) and its synchronization core CS(w′+) (right).

5. Summary

Based on the assumption that cognitive abilities of brains and brain-like systems rest on their dynamical properties, the development of artificial neural networks providing cognitive abilities calls for systems having a manifold of different non-trivial attractors between which the system can be switched by external (and internal) sensor signals (Pasemann, 2017).

As a guideline for generating neural systems with such a desired “rich dynamical spectrum,” for instance by applying an evolutionary algorithm, we propose to couple synchronizable subsystems with a certain dynamical spectrum to obtain a neural network with an even richer dynamical spectrum. For two neuromodules of dimension n, represented by their signed connections (structures), which can be synchronized by applying appropriate interconnections, we observe that the resulting synchronized dynamics of the 2n-dimensional network is identical to that of an n-dimensional neuromodule, defined by the synchronization matrix w+ and named the synchronization core CS(w+).

Using so-called generative couplings, even the n-dimensional synchronized dynamics may have a richer dynamical spectrum than the isolated n-dimensional subsystems. Furthermore, based on the obstruction matrix w−, criteria are given for the co-existence of asynchronous attractors outside of the synchronization manifold, so that attractors for the synchronized dynamics exist at the same time as attractors for an asynchronous dynamics; i.e., the dynamical spectrum of the composed system is much richer. Thus, a network composed according to these rules will provide a larger variety of attractors for use as memories or for action control. For applications, as usual, which attractor comes into action depends on bias terms (slow sensor inputs), the crossing of bifurcation manifolds, or applied initial conditions (Pasemann, 2017).

To demonstrate the basic ideas, we concentrated on the recurrent coupling of two identical 2-dimensional neuromodules, two 2-cycles. Although this is an extremely simple configuration, we made visible a manifold of co-existing synchronous and asynchronous attractors for what was a generative coupling. For such 2 × 2-dimensional networks conservative (non-generative) couplings were also discussed, and an example supports the presumption that the concept of generative couplings can be extended to subsystems that are not strongly recurrent but still synchronizable. A comparison with Freeman KII-sets, which were derived from neurophysiological findings, is also given.

From our results one can infer that the class of synchronization equivalent neural structures contains a large number of even-dimensional networks, all sharing the same properties of their synchronous dynamics. Furthermore, networks displaying a rich dynamical spectrum do not need to have many connections. This observation may be related to the small-world view of network functionality (Watts and Strogatz, 1998).

Although the concepts of the paper relate only to completely synchronized even-dimensional composed systems, a generalization to partially synchronized neural networks (Pasemann and Wennekers, 2000) is straightforward.

The ultimate goal of this approach is to develop strategies for selecting reasonable neuromodules which can serve as individuals for the initial population of an evolutionary neuro-robotics run. Evolutionary programs, for instance the Interactively Constrained Neuro-Evolution (ICONE) program (Rempis and Pasemann, 2012), are prepared to use populations of neuromodules (instead of neurons) and coupling connections as basic elements for the evolution of behavior-relevant neural control networks for autonomous robots.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The author would like to thank the Institute for Cognitive Science, University of Osnabrück, for its hospitality and support, and Thomas Wennekers for his valuable suggestions.

References

Anderson, M. L. (2003). Embodied cognition: a field guide. Artif. Intell. 149, 91–130. doi: 10.1016/S0004-3702(03)00054-7

Ashby, W. R. (1958). Requisite variety and its implications for the control of complex systems. Cybernetica 1, 83–99.

Ashwin, P., Buescu, J., and Stewart, I. (1996). From attractor to chaotic saddle: a tale of transverse instability. Nonlinearity 9:703. doi: 10.1088/0951-7715/9/3/006

Dean, J. (1998). Animats and what they can tell us. Trends Cogn. Sci. 2, 60–67. doi: 10.1016/S1364-6613(98)01120-6

Guillot, A., and Meyer, J. A. (2001). The animat contribution to cognitive systems research. Cogn. Syst. Res. 2, 157–165. doi: 10.1016/S1389-0417(01)00019-5

Hammer, B., and Tiňo, P. (2003). Recurrent neural networks with small weights implement definite memory machines. Neural Comput. 15, 1897–1929. doi: 10.1162/08997660360675080

Harvey, I., Di Paolo, E., Wood, R., Quinn, M., and Tuci, E. (2005). Evolutionary robotics: a new scientific tool for studying cognition. Artif. Life 11, 79–98. doi: 10.1162/1064546053278991

Kozma, R. (2008). Intentional systems: review of neurodynamics, modeling, and robotics implementation. Phys. Life Rev. 5, 1–21. doi: 10.1016/j.plrev.2007.10.002

Kozma, R., and Freeman, W. J. (2009). The KIV model of intentional dynamics and decision making. Neural Netw. 22, 277–285. doi: 10.1016/j.neunet.2009.03.019

Maurer, H. (2016). Integrative synchronization mechanisms in connectionist cognitive neuroarchitectures. Comput. Cogn. Sci. 2:3. doi: 10.1186/s40469-016-0010-8

Melloni, L., Molina, C., Pena, M., Torres, D., Singer, W., and Rodriguez, E. (2007). Synchronization of neural activity across cortical areas correlates with conscious perception. J. Neurosci. 27, 2858–2865. doi: 10.1523/JNEUROSCI.4623-06.2007

Negrello, M., and Pasemann, F. (2008). Attractor landscapes and active tracking: the neurodynamics of embodied action. Adapt. Behav. 16, 196–216. doi: 10.1177/1059712308090200

Nolfi, S., Bongard, J., Husbands, P., and Floreano, D. (2016). “Evolutionary robotics,” in Springer Handbook of Robotics, eds B. Siciliano and O. Khatib (Berlin; Heidelberg: Springer), 2035–2068. doi: 10.1007/978-3-319-32552-1_76

Nolfi, S., and Floreano, D. (2000). Evolutionary Robotics: The Biology, Intelligence, and Technology of Self-Organizing Machines. London: MIT Press.

Pasemann, F. (1995). Characterization of periodic attractors in neural ring networks. Neural Netw. 8, 421–429. doi: 10.1016/0893-6080(94)00085-Z

Pasemann, F. (1999). Synchronous and asynchronous chaos in coupled neuromodules. Int. J. Bifurc. Chaos 9, 1957–1968. doi: 10.1142/S0218127499001425

Pasemann, F. (2002). Complex dynamics and the structure of small neural networks. Netw. Comput. Neural Syst. 13, 195–216. doi: 10.1080/net.13.2.195.216

Pasemann, F. (2017). Neurodynamics in the sensorimotor loop: representing behavior relevant external situations. Front. Neurorobot. 11:5. doi: 10.3389/fnbot.2017.00005

Pasemann, F., and Wennekers, T. (2000). Generalized and partial synchronization of coupled neural networks. Netw. Comput. Neural Syst. 11, 41–61. doi: 10.1088/0954-898X_11_1_303

Rempis, C. W., and Pasemann, F. (2012). “An interactively constrained neuro-evolution approach for behavior control of complex robots,” in Variants of Evolutionary Algorithms for Real-World Applications, Vol. 87, eds R. Chiong, T. Weise, and Z. Michalewicz (Berlin; Heidelberg: Springer), 305–341. doi: 10.1007/978-3-642-23424-8_10

Santos, B. A., Barandiaran, X. E., and Husbands, P. (2012). Synchrony and phase relation dynamics underlying sensorimotor coordination. Adapt. Behav. 20, 321–336. doi: 10.1177/1059712312451859

Singer, W. (1996). “Neuronal synchronization: a solution to the binding problem,” in The Mind-Brain Continuum: Sensory Processes, eds R. R. Llinás and P. S. Churchland (Cambridge, MA: MIT Press), 101–130.

Sporns, O. (2002). Network analysis, complexity, and brain function. Complexity 8, 56–60. doi: 10.1002/cplx.10047

Sporns, O. (2017). The future of network neuroscience. Netw. Neurosci. 1, 1–2. doi: 10.1162/NETN_e_00005

Sporns, O., Tononi, G., and Edelman, G. M. (2000). Connectivity and complexity: the relationship between neuroanatomy and brain dynamics. Neural Netw. 13, 909–922. doi: 10.1016/S0893-6080(00)00053-8

Szentágothai, J., and Arbib, M. A. (1974). Conceptual Models of Neural Organization: Based on a Work Session of the Neurosciences Research Program, Vol. 12. Cambridge, MA: MIT Press.

Von der Malsburg, C. (1995). Binding in models of perception and brain function. Curr. Opin. Neurobiol. 5, 520–526. doi: 10.1016/0959-4388(95)80014-X

Watts, D. J., and Strogatz, S. H. (1998). Collective dynamics of ‘small-world’ networks. Nature 393, 440–442. doi: 10.1038/30918

Keywords: connectivity (B), neurodynamics, complexity, synchronization, modularity

Citation: Pasemann F (2021) Modular Neurodynamics and Its Classification by Synchronization Cores. Front. Syst. Neurosci. 15:606074. doi: 10.3389/fnsys.2021.606074

Received: 14 September 2020; Accepted: 27 January 2021;

Published: 12 March 2021.

Edited by:

Alessandro E. P. Villa, Neuro-Heuristic Research Group (NHRG), Switzerland

Reviewed by:

Ehren Lee Newman, Indiana University Bloomington, United States

Jiang Wang, Tianjin University, China

Copyright © 2021 Pasemann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frank Pasemann, ZnJhbmsucGFzZW1hbm5AdW5pLW9zbmFicnVlY2suZGU=

Frank Pasemann
