- 1Dipartimento di Neuroscienze, Biomedicina e Movimento, University of Verona, Verona, Italy

- 2Dipartimento di Neuroscienze, University of Parma, Parma, Italy

- 3Center for Mind/Brain Sciences, University of Trento, Trento, Italy

Adults exposed to affective facial displays produce specific rapid facial reactions (RFRs), which are of lower intensity in males than in females. We investigated this sex difference in a population of 60 primary school children (30 F; 30 M) aged 7–10 years. We recorded the surface electromyographic (EMG) signal from the corrugator supercilii and the zygomatici muscles while children watched affective facial displays. Results showed the expected smiling RFR to smiling faces and the expected frowning RFR to sad faces. A systematic difference between male and female participants was observed, with boys showing smaller EMG responses than age-matched girls. We demonstrate that sex differences in the somatic component of affective motor patterns are already present in childhood.

Introduction

In the general population, males score lower than females on empathy-related personality traits. This observation has been well documented in children and adults, to the point that some authors define the male brain by its lesser empathic capacities relative to the female one (Baron-Cohen, 2002; Lawrence et al., 2004; Coll et al., 2012; Michalska et al., 2013). This sexual dimorphism in humans is thought to be the product of evolutionary pressure related to mating and parental behaviors, i.e., parental investment is much greater for females than for males (Decety and Svetlova, 2012). Measuring empathy is challenging. Explicit self-reports by means of validated questionnaires represent the gold standard for empathy assessment (Lawrence et al., 2004) and may be supported by a constellation of ancillary physiological (Dimberg et al., 2002) and imaging (Michalska et al., 2013) measures. Physiological measures of empathy generally consist of recording affective motor responses to others’ emotional states. The general rationale for this is rooted in the “affective” theory of empathy (Lawrence et al., 2004), which defines empathy as the capacity to re-enact others’ affective states.

Affective motor patterns recruit specific visceral and somatic effectors. Visceral responses (e.g., an increase in heart rate or a change in sweat production) serve the homeostasis of internal functions; somatic affective movements, in contrast, have a communicative role (e.g., smiling or yelling). One of the most studied somatic components of affective movements is facial expression. Individuals exposed to the affective displays of conspecifics produce stereotypical facial movements that are specific to the observed facial expression. These movements are a robust and replicated finding and are referred to as rapid facial reactions (RFRs; Dimberg, 1982; Thunberg and Dimberg, 2000; Larsen et al., 2003; Weyers et al., 2009; Fujimura et al., 2010; Rymarczyk et al., 2011; Likowski et al., 2012); for a review see Cattaneo and Pavesi (2014). They may follow a mimetic pattern (as in smiling in response to a smile) or a reactive pattern (as in producing a fearful facial posture in response to an angry face) relative to the emotional facial expression of the observed person (Dimberg, 1982; Moody et al., 2007). Subjects are unaware of their own RFRs, which are not modified by superimposed voluntary movements. Another characteristic of RFRs is their sub-second onset latency, which has been documented in the 300–700 ms range (Dimberg et al., 2000). According to some authors, RFRs initiate or modulate affective states in the observer and are therefore potentially a fundamental link in the chain of events that mediates inter-individual affective communication (Dimberg et al., 2002; Larsen et al., 2003; McIntosh et al., 2006; Niedenthal et al., 2010). Consequently, RFRs have been used as a tool to investigate empathy in special populations, such as people diagnosed with autism spectrum disorder (Clarke et al., 2005; McIntosh et al., 2006; Beall et al., 2008), or in people with particular personality traits (Scarpazza et al., 2018).
Since their early descriptions, RFRs have been shown to be produced unevenly by the two sexes (Dimberg and Lundquist, 1990; Lang et al., 1993; Thunberg and Dimberg, 2000; Sonnby-Borgstrom et al., 2008; Hermans et al., 2009; Huang and Hu, 2009; Neufeld et al., 2016). Males produce RFRs of smaller amplitude than females, possibly because of the greater parental investment and empathy of females compared with males. RFRs have been recorded in children (McIntosh et al., 2006; Beall et al., 2008; Oberman et al., 2009; Deschamps et al., 2012, 2014; Geangu et al., 2016). A recent, very large web-based study confirmed sex differences in facial reactions to affective stimuli and suggested that these differences may not be purely quantitative (females more than males): in adults, qualitative differences were also found, with women being more reactive to positive emotional stimuli and men being more reactive to negative stimuli (McDuff et al., 2017). To date, sex differences in RFRs have not been explicitly investigated in children, and only a partial report in pre-pubertal children is available in the literature (McManis et al., 2001). The present report aims to fill this gap in current knowledge.

Materials and Methods

Participants

We examined a group of 60 typically developing children (30 males and 30 females; age range: 7 years 8 months to 10 years 4 months) randomly recruited among the pupils of a local public primary school in Parma (Italy). They had no history of pre-term birth or neurodevelopmental disorders. Ninety-five percent of the participants were Caucasian, consistent with the general Italian population¹. All participants had normal or corrected-to-normal vision. Written informed consent was obtained from each participant’s parents, and the full assent of each child was obtained before the session. The study was approved by the local ethics committee (Committee for Human Experimentation of the University of Parma).

Visual Stimuli

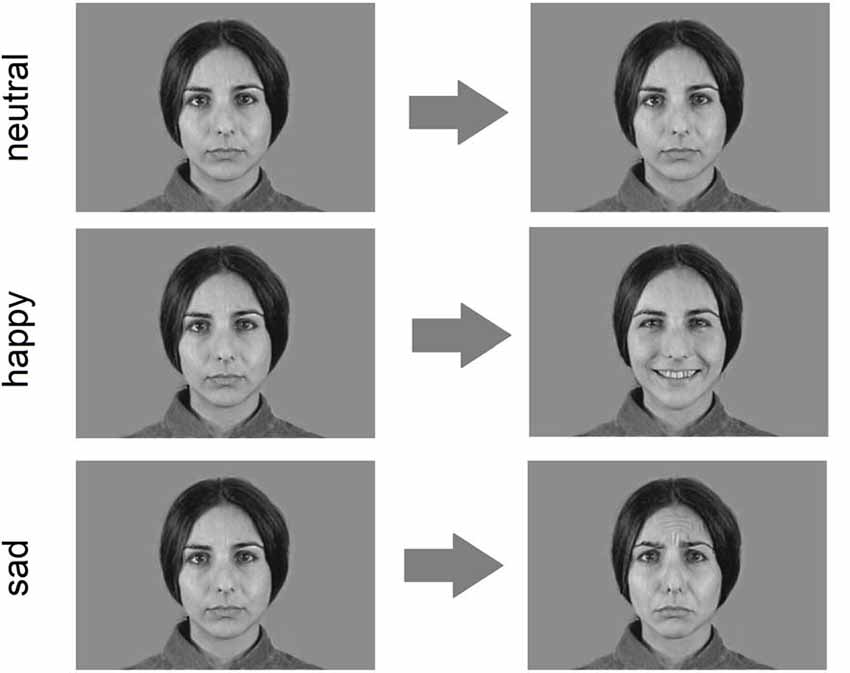

Visual stimuli were videos of faces changing from an initial neutral expression to a full-fledged emotional expression. We built the movies from grayscale photographs (14.5 cm high × 21 cm wide) selected from the Montreal Set of Facial Displays of Emotion (Beaupré and Hess, 2005), representing neutral, happy and sad expressions of Caucasian, Asian and African males and females. For each actor, the neutral face was morphed into the full emotion in steps of 10% by means of morphing software (Sqirlz Morph 1). In this way we obtained, for each actor and emotion, 11 frames spanning the range of facial expression from neutral to 100% of the emotion. All frames were mounted in sequence to obtain a short video clip of 2930 ms. The clip in the neutral condition consisted of a static sequence of 11 frames of the neutral face. The three stimulus types (happy, sad and neutral) are shown in Figure 1. The final stimulus set comprised 2 (actor gender) × 3 (actor ethnicity) × 3 (emotions) × 2 (different actors within each category) = 36 clips. These were presented on a 15.4″ computer screen placed about 60 cm from the participant.
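The size of the stimulus set and the morph schedule follow directly from the factorial design described above. The short Python sketch below enumerates the 36 clips and the morph level of each of the 11 frames. The factor labels are illustrative, and the equal per-frame duration (2930 ms / 11 frames, about 266 ms) is our assumption, since the text does not state how frames were timed; the sketch does not reproduce the image morphing itself.

```python
from itertools import product

# Factors of the stimulus set as described in the text (labels are illustrative).
ACTOR_GENDERS = ("female", "male")
ETHNICITIES = ("Caucasian", "Asian", "African")
EMOTIONS = ("happy", "sad", "neutral")
ACTORS_PER_CELL = 2

N_FRAMES = 11      # morph levels 0%, 10%, ..., 100%
CLIP_MS = 2930     # clip duration reported in the text


def build_stimulus_list():
    """Enumerate every clip of the 2 x 3 x 3 x 2 design."""
    return [
        {"actor_gender": g, "ethnicity": e, "emotion": emo, "actor": a}
        for g, e, emo, a in product(
            ACTOR_GENDERS, ETHNICITIES, EMOTIONS, range(ACTORS_PER_CELL)
        )
    ]


def morph_levels(emotion):
    """Percentage of the target expression shown in each frame.

    Emotional clips step from 0% to 100% in 10% increments; the neutral
    clip repeats the neutral face (0%) in all frames.
    """
    if emotion == "neutral":
        return [0] * N_FRAMES
    return [10 * i for i in range(N_FRAMES)]


def frame_duration_ms():
    # Assumption: every frame is displayed for the same duration.
    return CLIP_MS / N_FRAMES


if __name__ == "__main__":
    stimuli = build_stimulus_list()
    print(len(stimuli))                       # 36 clips
    print(morph_levels("sad"))                # [0, 10, ..., 100]
    print(round(frame_duration_ms(), 1))      # 266.4 ms per frame
```

Each emotion accounts for 12 of the 36 clips (2 genders × 3 ethnicities × 2 actors), which matches the 12 happy, 12 sad and 12 neutral clips per block described in the Procedure.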

Procedure

Participants were greeted by the experimenters, shown the recording laboratory and given time to familiarize themselves with the environment and the equipment. They were shown the recording electrodes and allowed to handle them. To avoid biasing the participants, we made no reference to facial movements or muscles during the initial briefing. Instead, participants were told that we were measuring skin temperature from various parts of the body, and a few fake electrodes were attached to the skin of the hands and feet (Dimberg and Thunberg, 1998). Trials were initiated manually by the experimenter. The experimental session was divided into two blocks. In the first block, named “passive observation”, participants were only asked to watch the clips attentively. In the second block (“explicit rating”), they were asked to name the emotion expressed in each clip. Verbal responses were logged for further analysis. Each of the two blocks consisted of 36 stimuli: 12 clips of happy expressions, 12 clips of sad expressions and 12 clips of neutral expressions. At the end of the session, we asked all participants to perform maximal contractions of the two recorded facial muscles. This measure was used during data processing for between-subject normalization.

EMG Recordings, Pre-processing and Analysis

Facial EMG was recorded bilaterally from the zygomatici muscles and the corrugator supercilii with Ag/AgCl electrodes (recording area of 28 mm²). Muscle activity was continuously recorded using an electroencephalograph (Micromed-system, Italy), amplified ×1000 and digitized at a sampling rate of 512 Hz. The EMG signal was band-pass filtered (20–250 Hz) and rectified. The rectified EMG signal was then processed in the following steps: (1) all EMG values were divided by the EMG recorded during maximal contraction, to improve comparability between subjects; EMG data were therefore expressed as a percentage of maximal contraction rather than as absolute values. (2) Continuous EMG recordings were parsed into trials using the window from −800 ms to +2800 ms relative to stimulus onset. (3) Data were averaged in consecutive 400 ms bins, yielding nine consecutive EMG values for each trial. (4) The two pre-stimulus bins were averaged to create a baseline EMG value. (5) The seven post-stimulus bins were baseline-corrected by subtracting the baseline value from them. In the resulting data, positive values indicate increased EMG activity and negative values indicate decreased EMG activity relative to baseline. (6) EMG data from each bin were averaged within subjects according to the emotion displayed. The resulting dataset included, for each subject, a total of twelve (2 muscles × 2 sides × 3 emotions) series of seven bins each.
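The processing steps above can be sketched with NumPy and SciPy for a single muscle on one side. This is a minimal illustration, not the authors’ code, and it rests on assumptions the text does not specify: a zero-phase 4th-order Butterworth band-pass, the mean rectified amplitude of the maximal-contraction recording as the 100% reference, and stimulus onsets given as sample indices. The function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 512                    # sampling rate reported in the text (Hz)
BAND = (20.0, 250.0)        # band-pass edges (Hz); 250 Hz is just below Nyquist (256 Hz)
PRE_MS, POST_MS, BIN_MS = 800, 2800, 400
N_PRE_BINS = PRE_MS // BIN_MS          # 2 baseline bins


def condition(raw, mvc_raw, order=4):
    """Band-pass filter and rectify, then express EMG as % of maximal contraction.

    Assumptions: zero-phase Butterworth filter of the given order; the mean
    rectified amplitude of the maximal-contraction recording is the 100% level.
    """
    sos = butter(order, BAND, btype="bandpass", fs=FS, output="sos")
    rectified = np.abs(sosfiltfilt(sos, raw))
    mvc = np.abs(sosfiltfilt(sos, mvc_raw)).mean()
    return 100.0 * rectified / mvc


def epoch_bin_baseline(emg, onsets):
    """Steps (2)-(5): epoch, average 400 ms bins, subtract the baseline.

    Returns an array (n_trials, 7) of baseline-corrected post-stimulus bins.
    """
    pre = int(PRE_MS * FS / 1000)      # 409 samples
    post = int(POST_MS * FS / 1000)    # 1433 samples
    if any(o - pre < 0 or o + post > len(emg) for o in onsets):
        raise ValueError("an epoch window falls outside the recording")
    trials = np.stack([emg[o - pre:o + post] for o in onsets])
    # A 400 ms bin is 204.8 samples at 512 Hz, so bin edges are rounded.
    n_bins = (PRE_MS + POST_MS) // BIN_MS          # 9 bins
    edges = np.round(np.linspace(0, pre + post, n_bins + 1)).astype(int)
    bins = np.stack(
        [trials[:, a:b].mean(axis=1) for a, b in zip(edges[:-1], edges[1:])],
        axis=1,
    )
    baseline = bins[:, :N_PRE_BINS].mean(axis=1, keepdims=True)
    return bins[:, N_PRE_BINS:] - baseline


def average_by_emotion(post_bins, emotions):
    """Step (6): average trials of the same emotion for one muscle and side."""
    emotions = np.asarray(emotions)
    return {str(e): post_bins[emotions == e].mean(axis=0) for e in np.unique(emotions)}
```

Running the three functions for each muscle and side of a subject yields the 2 × 2 × 3 = 12 seven-bin series described above. Normalizing before epoching, as in step (1), means that every later value is already a percentage of maximal contraction.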

Statistical Analysis of EMG Data

The processed EMG data were analyzed by means of a mixed-design ANOVA with one between-subjects factor, SEX (2 levels: male or female), and four within-subjects factors: SIDE (2 levels: left or right), MUSCLE (2 levels: corrugator supercilii and zygomatici), EMOTION (3 levels: neutral, happy and sad) and BIN (7 levels, corresponding to the seven post-stimulus bins). Post hoc comparisons were made with t-tests with Bonferroni correction where appropriate. Greenhouse-Geisser (GG) correction was applied where appropriate. Effect size was expressed by means of the eta-squared coefficient. All statistics were performed with the STATISTICA software (StatSoft Inc.).
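The Greenhouse-Geisser correction rescales both degrees of freedom of an F test by an estimate of sphericity, epsilon. The sketch below is not the STATISTICA implementation; it computes epsilon for a single within-subject factor from the double-centred covariance matrix of its levels (Box’s formula) and then the corrected p-value with SciPy’s F distribution. Interaction terms use the same formula applied to the covariance of the interaction contrasts, which is not shown here.

```python
import numpy as np
from scipy.stats import f as f_dist


def gg_epsilon(data):
    """Greenhouse-Geisser epsilon for one within-subject factor.

    data: array (n_subjects, k_levels), one column per factor level.
    epsilon = tr(S*)^2 / ((k - 1) * tr(S* S*)), where S* is the double-centred
    covariance matrix of the levels; it lies between 1/(k - 1) and 1.
    """
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    cov = np.cov(data, rowvar=False)
    centre = np.eye(k) - np.ones((k, k)) / k
    s_star = centre @ cov @ centre
    return np.trace(s_star) ** 2 / ((k - 1) * np.sum(s_star * s_star))


def gg_adjusted_p(F, df1, df2, eps):
    """p-value of F after multiplying both degrees of freedom by epsilon."""
    return f_dist.sf(F, eps * df1, eps * df2)
```

For two-level factors such as SIDE and MUSCLE, epsilon is always 1, so the correction only changes p-values for terms that involve EMOTION (3 levels) or BIN (7 levels). Smaller epsilon values shrink the degrees of freedom and therefore yield larger, more conservative p-values.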

Analysis of Behavioral Data

The explicit assessments of emotional expressions were classified offline into five macro-emotional categories (happiness, sadness, anger, disgust and fear). Accuracy was calculated as the ratio of correct responses to total responses. Accuracy was analyzed by a mixed-design ANOVA with one between-subjects factor, SEX (2 levels: male or female), and one within-subjects factor, EMOTION (2 levels: happy and sad). Post hoc comparisons were made with t-tests with Bonferroni correction where appropriate.
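Once verbal responses have been classified into the five macro-categories, accuracy and the distribution of error types reduce to simple counts. The sketch below assumes that the offline classification has already been done (the mapping from children’s words to categories is not described in the text and is not shown), and the example answers are toy data.

```python
from collections import Counter

MACRO_CATEGORIES = {"happiness", "sadness", "anger", "disgust", "fear"}


def _check(responses):
    unknown = set(responses) - MACRO_CATEGORIES
    if unknown:
        raise ValueError(f"responses not classified into macro categories: {unknown}")


def accuracy(responses, target):
    """Correct responses divided by total responses for one clip type."""
    if not responses:
        raise ValueError("no responses to score")
    _check(responses)
    return sum(r == target for r in responses) / len(responses)


def error_breakdown(responses, target):
    """Fraction of the wrong responses that fall into each other category."""
    _check(responses)
    errors = Counter(r for r in responses if r != target)
    total = sum(errors.values())
    return {cat: n / total for cat, n in errors.items()} if total else {}


if __name__ == "__main__":
    # Toy answers for one child's sadness clips (not real data).
    answers = ["sadness", "disgust", "sadness", "fear", "sadness", "disgust"]
    print(accuracy(answers, "sadness"))         # 0.5
    print(error_breakdown(answers, "sadness"))  # disgust 2/3, fear 1/3
```

The per-child accuracies for happy and sad clips are the values that would enter the SEX × EMOTION ANOVA; the error breakdown corresponds to the percentages of disgust and fear errors reported in the Results.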

Results

EMG

As a preliminary analysis, we looked for inhomogeneity in the pool of stimuli. In particular, we qualitatively inspected the EMG responses to single stimuli to identify whether any were systematically more or less effective than others in evoking RFRs. We then assessed quantitatively, by means of a 4-way ANOVA (STIMULUS SEX*STIMULUS ETHNICITY*EMOTION*PARTICIPANT SEX), whether any effect of the ethnicity or sex of the stimulus was evident. Neither main effects nor interactions involving STIMULUS SEX and STIMULUS ETHNICITY were evident (all p-values > 0.18).

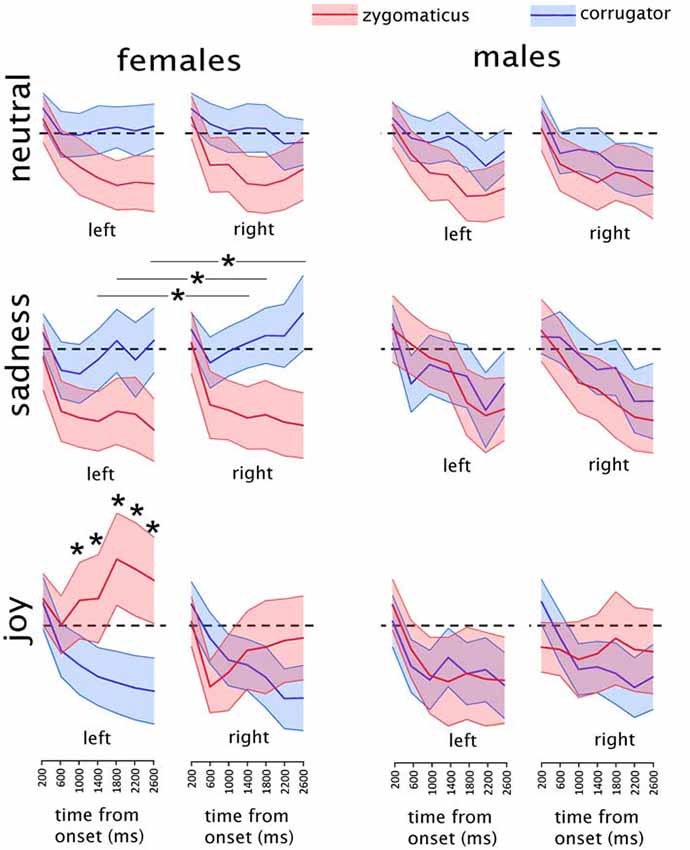

EMG results of the main analysis are illustrated in Figure 2. Given our a priori interest in specific muscular responses to specific emotions, we considered as results of interest only the interactions involving SEX, MUSCLE and EMOTION. Other effects, for example a main effect of MUSCLE, are not informative for the present work and will not be discussed. The omnibus ANOVA showed a significant 5-way interaction of SEX*SIDE*MUSCLE*EMOTION*BIN (F(12,696) = 3.6893, p = 0.00002; GG-adjusted p = 0.0004; eta-squared = 0.06). To investigate this interaction, we split the data by sex and ran two separate SIDE*MUSCLE*EMOTION*BIN ANOVAs, one for females and one for males.
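The degrees of freedom and effect sizes can be cross-checked from the design alone. In a mixed design, the numerator df of an interaction is the product of (levels − 1) over its factors, and the error df multiplies the within-subject part by (subjects − groups): here 1·1·1·2·6 = 12 and 12 × (60 − 2) = 696. The eta-squared values reported in the Results appear to correspond to partial eta-squared, η²p = F·df1 / (F·df1 + df2), to two decimals. The sketch below is only an arithmetic check of the reported statistics, not a re-analysis; the function names are hypothetical.

```python
from math import prod


def mixed_anova_df(term_within, term_between, n_subjects, n_groups):
    """Numerator and error df for one term of a mixed-design ANOVA.

    term_within / term_between: level counts of the factors in the term.
    n_groups: number of between-subjects cells in the whole design.
    """
    within = prod(k - 1 for k in term_within)
    between = prod(k - 1 for k in term_between)
    return within * between, within * (n_subjects - n_groups)


def partial_eta_squared(F, df1, df2):
    """Partial eta-squared recovered from an F statistic and its df."""
    return F * df1 / (F * df1 + df2)


# (label, F, df1, df2, reported eta-squared), copied from the Results.
REPORTED = [
    ("omnibus 5-way", 3.6893, 12, 696, 0.06),
    ("female MUSCLE*EMOTION*BIN", 10.846, 12, 348, 0.27),
    ("female happy SIDE*MUSCLE*BIN", 12.147, 6, 174, 0.30),
    ("female sad MUSCLE*BIN", 5.4237, 6, 174, 0.16),
]

if __name__ == "__main__":
    print(mixed_anova_df((2, 2, 3, 7), (2,), 60, 2))   # (12, 696)
    for label, F, df1, df2, reported in REPORTED:
        pes = partial_eta_squared(F, df1, df2)
        print(f"{label}: {pes:.2f} (reported {reported:.2f})")
```

The same function gives (12, 348) for the female MUSCLE*EMOTION*BIN term and (6, 174) for the per-side MUSCLE*BIN terms, matching the df reported below.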

Figure 2. Mean results of the electromyographic (EMG) recordings in males and females in each experimental condition. Asterisks indicate the time bins in which a significant difference was found between the two muscles. In the “sadness” condition, asterisks are accompanied by a line because they refer to data collapsed across the two sides. Shaded areas represent the 95% confidence interval of the mean. The EMG activity is expressed as a percentage of maximal EMG activity, baseline-corrected by subtraction of the pre-stimulus EMG (see “Materials and Methods” section for details on EMG pre-processing). Dashed black lines indicate y = 0, corresponding to the pre-stimulus baseline EMG.
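A shaded band like those in Figure 2 can be computed per bin as mean ± t(0.975, n − 1) × SEM across subjects. The caption does not state how the intervals were obtained, so the t-based interval below is an assumption; with n = 30 children per group, the t quantile is about 2.05.

```python
import numpy as np
from scipy import stats


def mean_ci95(values):
    """Mean and two-sided 95% CI of the mean across subjects, per time bin.

    values: array (n_subjects, n_bins). Uses the t distribution with n - 1 df.
    """
    values = np.asarray(values, dtype=float)
    n = values.shape[0]
    mean = values.mean(axis=0)
    sem = values.std(axis=0, ddof=1) / np.sqrt(n)
    half_width = stats.t.ppf(0.975, df=n - 1) * sem
    return mean, mean - half_width, mean + half_width
```

The lower and upper arrays can be passed to a fill-between plotting routine, such as matplotlib’s `fill_between`, with the bin centres on the x-axis.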

Male Group

The sub-ANOVA on male participants did not show any significant interaction of interest, i.e., involving MUSCLE and EMOTION (all p values > 0.12). The remaining exploratory analysis was therefore limited to the female participants.

Female Group

In the female group a significant interaction of MUSCLE*EMOTION*BIN (F(12,348) = 10.846, p < 0.000001; GG-adjusted p < 0.000001; eta-squared = 0.27) was found, without any effect of SIDE. Data from the female group were further split into three separate ANOVAs, one for each of the three stimulus types (happy, sad and neutral).

Female Group, Happiness Clips

The analysis on happiness clips showed a significant SIDE*MUSCLE*BIN interaction (F(6,174) = 12.147, p < 0.000001; GG-adjusted p < 0.000001; eta-squared = 0.30). This interaction was further explored by means of two MUSCLE*BIN ANOVAs, one for each side.

Female Group, Happiness Clips, Right Side

The ANOVA on the right side showed a significant MUSCLE*BIN interaction (F(6,174) = 6.882, p = 0.000001; GG-adjusted p = 0.0001; eta-squared = 0.19). In the post hoc analyses, nominally significant differences between the two muscles were found at the 400–800 ms bin (t(29) = 2.1; p = 0.04), at the 2000–2400 ms bin (t(29) = −2.2; p = 0.04) and at the 2400–2800 ms bin (t(29) = −2.1; p = 0.04). However, none of them survived the Bonferroni-adjusted threshold for seven comparisons (p = 0.007), and they will therefore not be considered further.

Female Group, Happiness Clips, Left Side

The ANOVA on the left side showed a significant MUSCLE*BIN interaction (F(6,174) = 11.421, p < 0.000001; GG-adjusted p < 0.000001; eta-squared = 0.28). Post hoc tests were performed by paired-sample t-tests comparing values from the two muscles in the seven different bins. Given the seven comparisons, the p-value threshold was Bonferroni-adjusted to p = 0.007. Significantly different values between the two muscles were found at the 1200–1600 ms bin (t(29) = −2.90; p = 0.006), at the 1600–2000 ms bin (t(29) = −3.99; p = 0.0004), at the 2000–2400 ms bin (t(29) = −3.75; p = 0.0007) and at the 2400–2800 ms bin (t(29) = −3.67; p = 0.0009).
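The bin-by-bin post hoc procedure described above (paired t-tests between the two muscles in each of the seven bins, with a Bonferroni threshold of 0.05 / 7 ≈ 0.00714) can be sketched with SciPy. The argument order, corrugator minus zygomaticus, is our assumption; it would give negative t values where zygomatic activity is larger, as in the happiness results, and positive values where corrugator activity is larger, as in the sadness results.

```python
import numpy as np
from scipy.stats import ttest_rel

BIN_LABELS = ["0-400", "400-800", "800-1200", "1200-1600",
              "1600-2000", "2000-2400", "2400-2800"]   # ms after stimulus onset


def muscle_posthoc(corrugator, zygomatic, alpha=0.05):
    """Paired t-tests corrugator vs zygomatic in each post-stimulus bin.

    corrugator, zygomatic: arrays (n_subjects, 7) for one side and one emotion.
    Returns the Bonferroni threshold and (bin, t, p, significant) per bin.
    """
    corrugator = np.asarray(corrugator, dtype=float)
    zygomatic = np.asarray(zygomatic, dtype=float)
    if corrugator.shape != zygomatic.shape or corrugator.shape[1] != len(BIN_LABELS):
        raise ValueError("expected two arrays of shape (n_subjects, 7)")
    threshold = alpha / len(BIN_LABELS)          # Bonferroni: 0.05 / 7
    res = ttest_rel(corrugator, zygomatic, axis=0)
    rows = [
        (label, t, p, bool(p < threshold))
        for label, t, p in zip(BIN_LABELS, res.statistic, res.pvalue)
    ]
    return threshold, rows
```

With 30 children per group, each test has 29 degrees of freedom, matching the t(29) values reported here.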

Female Group, Sadness Clips

The analysis on sadness clips showed a significant MUSCLE*BIN interaction (F(6,174) = 5.4237, p = 0.00004; GG-adjusted p = 0.004; eta-squared = 0.16) without any effect of SIDE. Post hoc tests were performed by paired-sample t-tests comparing values from the two muscles in the seven different bins. Given the seven comparisons, the p-value threshold was Bonferroni-adjusted to p = 0.007. Significantly different values between the two muscles were found at the 1200–1600 ms bin (t(29) = 3.091; p = 0.004), at the 1600–2000 ms bin (t(29) = 3.10; p = 0.004) and at the 2400–2800 ms bin (t(29) = 3.85; p = 0.0006).

Female Group, Neutral Clips

The analysis on neutral clips showed a MUSCLE*BIN interaction (F(6,174) = 2.2220, p = 0.043, eta-squared = 0.07), which did not survive the GG-adjustment (adjusted p = 0.07) and therefore will not be considered any further.

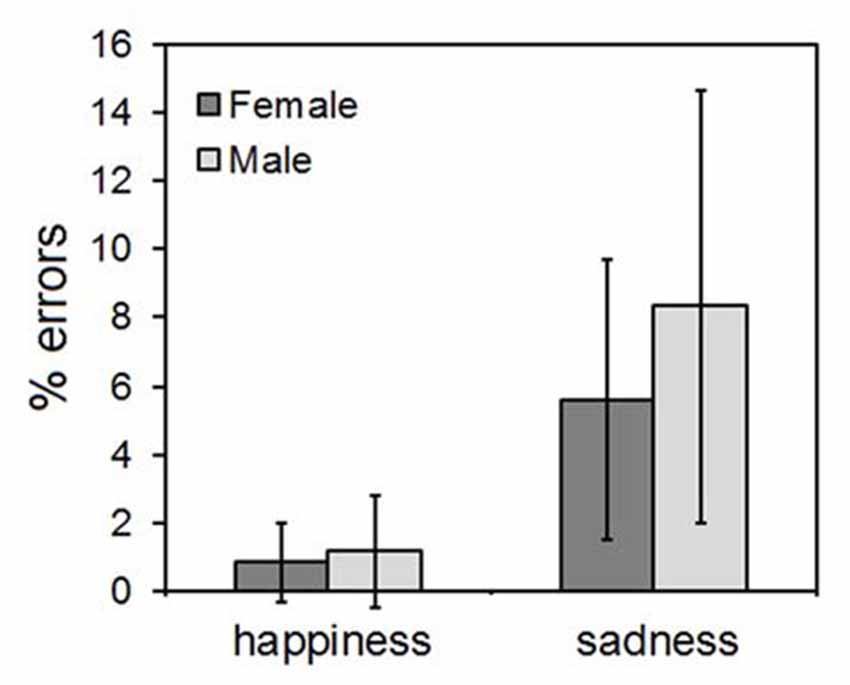

Explicit Emotion Recognition

The ANOVA yielded only a main effect of the EMOTION factor, indicating that more errors were made in evaluating the sadness clips than the happiness clips (F(1,58) = 8.44, p = 0.003). Wrong responses to the sadness clips fell into two main categories: disgust (41% of errors) and fear (37% of errors). Neither a significant main effect of SEX (F(1,58) = 0.59, p = 0.41) nor a significant SEX*EMOTION interaction (F(1,58) = 0.28, p = 0.54) was found. The results are illustrated in Figure 3.

Figure 3. Results of the explicit emotional ratings. Error bars indicate 95% confidence interval of the mean.

Discussion

Main Finding: Females Show More RFRs Than Males

The present study shows that, in a population of Italian children aged 7–10 years, RFRs to affective facial displays can be readily elicited only in female participants, similar to what has been reported in the literature for adults (Dimberg and Lundquist, 1990; Thunberg and Dimberg, 2000; Sonnby-Borgstrom et al., 2008; Hermans et al., 2009; Huang and Hu, 2009). As in adults, RFRs are evident in the EMG activity within one second, with a progressive increase of zygomatici activity and a decrease of corrugator supercilii activity upon viewing happy faces and, vice versa, an increase of corrugator supercilii activity and a decrease of zygomatici activity upon viewing sad faces. This pattern is more evident for happy than for sad stimuli, which is consistent with the only significant effect in the behavioral data, i.e., a larger number of errors with sad than with happy faces.

The Possible Communicative Role of RFRs, Beyond Mere Mimicry

What do these data tell us about behavioral differences between males and females? To answer this question, one would need to know which psychological processes RFRs are a marker of. Unfortunately, we are far from a univocal interpretation of RFRs as a biomarker of internal processes. The hypothesis that they represent mere mimicry of observed affective displays is simplistic, which makes it less likely that RFRs are a marker of “empathic” capacities. The current evidence seems to point to a broader communicative role of RFRs. Facial expressions are considered to have been shaped by evolution as a non-verbal communicative tool among conspecifics (Darwin, 1872; Ekman and Oster, 1979). In this context, RFRs are often referred to as mimicry of emotional activity. However, RFRs seem to be more complex patterns of affective motor response than mere mimicry. They represent affective responses to certain evolutionarily or biologically relevant stimuli, which can be facial expressions just as well as snakes or spiders (Cacioppo et al., 1986; Dimberg, 1986). In fact, RFRs are readily elicited by affectively charged scenes in the absence of facial displays, and therefore their potential role in the reception of communicative affective signals is weak. Moreover, when RFRs are produced in response to facial displays, they do not necessarily follow a mimetic pattern but can be reactive (as in producing a fear expression in response to an angry face; Moody et al., 2007). Taken together, these data seem to point to a communicative role of RFRs that is tuned more to sending information to conspecifics than to receiving information from them. In light of this assumption, the present data are less likely to indicate a “less empathic” brain in our population of male children and more likely to reflect a lesser propensity to communicate socially relevant affective information within the group. At least in part, it is possible to infer attitudes from facial EMG responses.
The impact of social context on mimicry has been studied with facial EMG by Bourgeois and Hess (2008), who showed that the level of facial mimicry varies as a function of group membership.

Acquired or Innate Origin of Sex Differences?

Finding neuro-behavioral differences at a developmental age raises the question of whether we are describing a genetically determined, innate pattern or a pattern acquired during early development through interaction with the environment. We know from the literature that RFRs are present in children as young as 6 years (Deschamps et al., 2012), and it has recently been shown (Geangu et al., 2016) that 3-year-old children already display RFRs to faces, but not to bodily emotion expressions, unlike adults, in whom RFRs are elicited by both facial and bodily emotions (Magnée et al., 2007). The presence of RFRs in 3-year-old children seems to point to a hard-wired mechanism at their origin. In contrast, we cannot speculate about the innate or acquired origin of the sex difference we found, nor do we think our study is appropriately designed to test such a hypothesis. Indeed, several culturally mediated factors, extraneous to basic emotional processing, can modulate RFRs. For instance, recently formed negative or positive attitudes toward the observed actors can invert RFR patterns (Likowski et al., 2008). RFRs may be modulated by the attribution of fairness to the actor (Hofman et al., 2012). Facial expression is strongly influenced by social context (Vrana and Gross, 2004). In particular, group membership can modulate RFRs, which tend to be more evident in response to in-group faces (Yabar et al., 2006). Finally, task-related factors could also underlie the observed difference between male and female participants. Performance anxiety can modulate RFRs (Dimberg and Thunberg, 2007). Perceived task difficulty and task demands can also generate RFR-like responses: frowning-like RFRs have been shown to contaminate responses to positive affective displays when a task is resource-demanding (Lishner et al., 2008).
In light of these observations, any difference in the human environment surrounding boys and girls could have affected their early personality traits. Nevertheless, the present finding is robust and has considerable implications for further research, especially from a methodological point of view, as outlined in the following section.

Value of the Present Data for RFR Research in Human Development

Whatever the cause, the present data clearly indicate that sex differences in RFRs are already expressed in the age bracket of the present study, and they are therefore of considerable value, at least at a methodological level. RFRs are increasingly used to test pathogenetic hypotheses on neurodevelopmental disorders (McIntosh et al., 2006; Press et al., 2010; Deschamps et al., 2014), and therefore increasingly in pediatric populations. The original finding of sex differences in RFRs in adults (Thunberg and Dimberg, 2000) greatly influenced the selection of experimental populations in the subsequent literature. We believe that a similar finding in children should prompt similar caution when testing developmental disorders.

Conclusion

We found that the sexual dimorphism in RFRs that is well documented in adults is already present at pre-pubertal age. Seven- to ten-year-old boys showed less EMG activity in facial muscles than their female peers. Uncertainty about the functional role of RFRs limits the interpretation of the data. Embodied cognition theories postulate a role for RFRs in the awareness of one’s own and others’ emotional states. RFRs may also have a role in non-verbal communication, similarly to overt facial affective displays. In this respect, the present data are in line with known differences between male and female affective behavior, though they do not resolve whether these differences are acquired or innate. Importantly, RFRs are generally used in research as a physiological marker of affective processes, and the present finding raises relevant methodological issues in terms of population selection.

Author Contributions

LC, VV and SB designed the study and collected data. LC and LT analyzed the data. All authors wrote the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

References

Baron-Cohen, S. (2002). The extreme male brain theory of autism. Trends Cogn. Sci. 6, 248–254. doi: 10.1016/s1364-6613(02)01904-6

Beall, P. M., Moody, E. J., McIntosh, D. N., Hepburn, S. L., and Reed, C. L. (2008). Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. J. Exp. Child Psychol. 101, 206–223. doi: 10.1016/j.jecp.2008.04.004

Beaupré, M. G., and Hess, U. (2005). Cross-cultural emotion recognition among Canadian ethnic groups. J. Cross. Cult. Psychol. 36, 355–370. doi: 10.1177/0022022104273656

Bourgeois, P., and Hess, U. (2008). The impact of social context on mimicry. Biol. Psychol. 77, 343–352. doi: 10.1016/j.biopsycho.2007.11.008

Cacioppo, J. T., Petty, R. E., Losch, M. E., and Kim, H. S. (1986). Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol. 50, 260–268. doi: 10.1037//0022-3514.50.2.260

Cattaneo, L., and Pavesi, G. (2014). The facial motor system. Neurosci. Biobehav. Rev. 38, 135–159. doi: 10.1016/j.neubiorev.2013.11.002

Clarke, T. J., Bradshaw, M. F., Field, D. T., Hampson, S. E., and Rose, D. (2005). The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception 34, 1171–1180. doi: 10.1068/p5203

Coll, M. P., Budell, L., Rainville, P., Decety, J., and Jackson, P. L. (2012). The role of gender in the interaction between self-pain and the perception of pain in others. J. Pain 13, 695–703. doi: 10.1016/j.jpain.2012.04.009

Decety, J., and Svetlova, M. (2012). Putting together phylogenetic and ontogenetic perspectives on empathy. Dev. Cogn. Neurosci. 2, 1–24. doi: 10.1016/j.dcn.2011.05.003

Deschamps, P. K. H., Schutte, I., Kenemans, J. L., Matthys, W., and Schutter, D. J. L. G. (2012). Electromyographic responses to emotional facial expressions in 6–7 year olds: a feasibility study. Int. J. Psychophysiol. 85, 195–199. doi: 10.1016/j.ijpsycho.2012.05.004

Deschamps, P., Munsters, N., Kenemans, L., Schutter, D., and Matthys, W. (2014). Facial mimicry in 6–7 year old children with disruptive behavior disorder and ADHD. PLoS One 9:e84965. doi: 10.1371/journal.pone.0084965

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology 19, 643–647. doi: 10.1111/j.1469-8986.1982.tb02516.x

Dimberg, U. (1986). Facial reactions to fear-relevant and fear-irrelevant stimuli. Biol. Psychol. 23, 153–161. doi: 10.1016/0301-0511(86)90079-7

Dimberg, U., and Lundquist, L. O. (1990). Gender differences in facial reactions to facial expressions. Biol. Psychol. 30, 151–159. doi: 10.1016/0301-0511(90)90024-q

Dimberg, U., and Thunberg, M. (1998). Rapid facial reactions to emotional facial expressions. Scand. J. Psychol. 39, 39–45. doi: 10.1111/1467-9450.00054

Dimberg, U., and Thunberg, M. (2007). Speech anxiety and rapid emotional reactions to angry and happy facial expressions. Scand. J. Psychol. 48, 321–328. doi: 10.1111/j.1467-9450.2007.00586.x

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi: 10.1111/1467-9280.00221

Dimberg, U., Thunberg, M., and Grunedal, S. (2002). Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn. Emot. 16, 449–471. doi: 10.1080/02699930143000356

Ekman, P., and Oster, H. (1979). Facial expressions of emotion. Annu. Rev. Psychol. 30, 527–554. doi: 10.1146/annurev.ps.30.020179.002523

Fujimura, T., Sato, W., and Suzuki, N. (2010). Facial expression arousal level modulates facial mimicry. Int. J. Psychophysiol. 76, 88–92. doi: 10.1016/j.ijpsycho.2010.02.008

Geangu, E., Quadrelli, E., Conte, S., Croci, E., and Turati, C. (2016). Three-year-olds’ rapid facial electromyographic responses to emotional facial expressions and body postures. J. Exp. Child Psychol. 144, 1–14. doi: 10.1016/j.jecp.2015.11.001

Hermans, E. J., van Wingen, G., Bos, P. A., Putman, P., and van Honk, J. (2009). Reduced spontaneous facial mimicry in women with autistic traits. Biol. Psychol. 80, 348–353. doi: 10.1016/j.biopsycho.2008.12.002

Hofman, D., Bos, P. A., Schutter, D. J. L. G., and van Honk, J. (2012). Fairness modulates non-conscious facial mimicry in women. Proc. R. Soc. B Biol. Sci. 279, 3535–3539. doi: 10.1098/rspb.2012.0694

Huang, H. Y., and Hu, S. (2009). Sex differences found in facial EMG activity provoked by viewing pleasant and unpleasant photographs. Percept. Mot. Skills 109, 371–381. doi: 10.2466/pms.109.2.371-381

Lang, P. J., Greenwald, M. K., Bradley, M. M., and Hamm, A. O. (1993). Looking at pictures: affective, facial, visceral, and behavioral reactions. Psychophysiology 30, 261–273. doi: 10.1111/j.1469-8986.1993.tb03352.x

Larsen, J. T., Norris, C. J., and Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40, 776–785. doi: 10.1111/1469-8986.00078

Lawrence, E. J., Shaw, P., Baker, D., Baron-Cohen, S., and David, A. S. (2004). Measuring empathy: reliability and validity of the Empathy Quotient. Psychol. Med. 34, 911–919. doi: 10.1017/s0033291703001624

Likowski, K. U., Mühlberger, A., Gerdes, A. B. M., Wieser, M. J., Pauli, P., and Weyers, P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Front. Hum. Neurosci. 6:214. doi: 10.3389/fnhum.2012.00214

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2008). Modulation of facial mimicry by attitudes. J. Exp. Soc. Psychol. 44, 1065–1072. doi: 10.1016/j.jesp.2007.10.007

Lishner, D. A., Cooter, A. B., and Zald, D. H. (2008). Rapid emotional contagion and expressive congruence under strong test conditions. J. Nonverbal Behav. 32, 225–239. doi: 10.1007/s10919-008-0053-y

Magnée, M. J. C. M., Stekelenburg, J. J., Kemner, C., and de Gelder, B. (2007). Similar facial electromyographic responses to faces, voices, and body expressions. Neuroreport 18, 369–372. doi: 10.1097/wnr.0b013e32801776e6

McDuff, D., Kodra, E., el Kaliouby, R., and LaFrance, M. (2017). A large-scale analysis of sex differences in facial expressions. PLoS One 12:e0173942. doi: 10.1371/journal.pone.0173942

McIntosh, D. N., Reichmann-Decker, A., Winkielman, P., and Wilbarger, J. L. (2006). When the social mirror breaks: deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev. Sci. 9, 295–302. doi: 10.1111/j.1467-7687.2006.00492.x

McManis, M. H., Bradley, M. M., Berg, W. K., Cuthbert, B. N., and Lang, P. J. (2001). Emotional reactions in children: verbal, physiological, and behavioral responses to affective pictures. Psychophysiology 38, 222–231. doi: 10.1017/s0048577201991140

Michalska, K. J., Kinzler, K. D., and Decety, J. (2013). Age-related sex differences in explicit measures of empathy do not predict brain responses across childhood and adolescence. Dev. Cogn. Neurosci. 3, 22–32. doi: 10.1016/j.dcn.2012.08.001

Moody, E. J., McIntosh, D. N., Mann, L. J., and Weisser, K. R. (2007). More than mere mimicry? The influence of emotion on rapid facial reactions to faces. Emotion 7, 447–457. doi: 10.1037/1528-3542.7.2.447

Neufeld, J., Ioannou, C., Korb, S., Schilbach, L., and Chakrabarti, B. (2016). Spontaneous facial mimicry is modulated by joint attention and autistic traits. Autism Res. 9, 781–789. doi: 10.1002/aur.1573

Niedenthal, P. M., Mermillod, M., Maringer, M., and Hess, U. (2010). The Simulation of Smiles (SIMS) model: embodied simulation and the meaning of facial expression. Behav. Brain Sci. 33, 417–433. doi: 10.1017/s0140525x10000865

Oberman, L. M., Winkielman, P., and Ramachandran, V. S. (2009). Slow echo: facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev. Sci. 12, 510–520. doi: 10.1111/j.1467-7687.2008.00796.x

Press, C., Richardson, D., and Bird, G. (2010). Intact imitation of emotional facial actions in autism spectrum conditions. Neuropsychologia 48, 3291–3297. doi: 10.1016/j.neuropsychologia.2010.07.012

Rymarczyk, K., Biele, C., Grabowska, A., and Majczynski, H. (2011). EMG activity in response to static and dynamic facial expressions. Int. J. Psychophysiol. 79, 330–333. doi: 10.1016/j.ijpsycho.2010.11.001

Scarpazza, C., Làdavas, E., and Cattaneo, L. (2018). Invisible side of emotions: somato-motor responses to affective facial displays in alexithymia. Exp. Brain Res. 236, 195–206. doi: 10.1007/s00221-017-5118-x

Sonnby-Borgstrom, M., Jönsson, P., and Svensson, O. (2008). Gender differences in facial imitation and verbally reported emotional contagion from spontaneous to emotionally regulated processing levels. Scand. J. Psychol. 49, 111–122. doi: 10.1111/j.1467-9450.2008.00626.x

Thunberg, M., and Dimberg, U. (2000). Gender differences in facial reactions to fear-relevant stimuli. J. Nonverbal Behav. 24, 45–51. doi: 10.1023/A:1006662822224

Vrana, S. R., and Gross, D. (2004). Reactions to facial expressions: effects of social context and speech anxiety on responses to neutral, anger, and joy expressions. Biol. Psychol. 66, 63–78. doi: 10.1016/j.biopsycho.2003.07.004

Weyers, P., Mühlberger, A., Kund, A., Hess, U., and Pauli, P. (2009). Modulation of facial reactions to avatar emotional faces by nonconscious competition priming. Psychophysiology 46, 328–335. doi: 10.1111/j.1469-8986.2008.00771.x

Keywords: facial electromyography, emotions, mirror neurons, sadness, empathy, imitation, infancy, development

Citation: Cattaneo L, Veroni V, Boria S, Tassinari G and Turella L (2018) Sex Differences in Affective Facial Reactions Are Present in Childhood. Front. Integr. Neurosci. 12:19. doi: 10.3389/fnint.2018.00019

Received: 28 December 2017; Accepted: 04 May 2018;

Published: 23 May 2018.

Edited by:

Marcelo Fernandes Costa, Universidade de São Paulo, Brazil

Reviewed by:

Radwa Khalil, Jacobs University Bremen, Germany

Rossella Breveglieri, Università degli Studi di Bologna, Italy

Copyright © 2018 Cattaneo, Veroni, Boria, Tassinari and Turella. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luca Turella, luca.turella@gmail.com

Luigi Cattaneo, Vania Veroni, Sonia Boria², Giancarlo Tassinari and Luca Turella