- 1Graduate School of Biomedical Engineering, University of New South Wales, Sydney, NSW, Australia

- 2Norwich Medical School, University of East Anglia, Norwich, UK

- 3School of Medical Sciences, University of New South Wales, Sydney, NSW, Australia

The clinical distinction between Alzheimer's disease (AD) and behavioral variant frontotemporal dementia (bvFTD) remains challenging and largely dependent on the experience of the clinician. This study investigates whether objective machine learning algorithms using supportive neuroimaging and neuropsychological clinical features can aid the distinction between the two diseases. Retrospective neuroimaging and neuropsychological data of 166 participants (54 AD; 55 bvFTD; 57 healthy controls) were analyzed via a Naïve Bayes classification model. A subgroup of patients (n = 22) had pathologically confirmed diagnoses. Results show that a combination of gray matter atrophy and neuropsychological features allowed a correct classification of 61.47% of cases at clinical presentation. More importantly, there was a clear dissociation between imaging and neuropsychological features, with the latter having the greater diagnostic accuracy (51.38 vs. 62.39%, respectively). These findings indicate that, at presentation, machine learning classification of bvFTD and AD is mostly based on cognitive and not imaging features. This highlights the urgent need to develop better biomarkers for both diseases, but also emphasizes the value of machine learning in determining the predictive diagnostic features in neurodegeneration.

Introduction

Clinical diagnosis of neurodegenerative diseases at presentation remains challenging, in particular for phenotypically similar diseases such as Alzheimer's disease (AD) and behavioral variant frontotemporal dementia (bvFTD). Diagnostic criteria have been established and revised for both diseases (Dubois et al., 2007; Rascovsky et al., 2011), with amnesia seen as a classic symptom of AD, whereas behavioral changes and executive impairments are core criteria for bvFTD. However, recent evidence has shown that AD patients can present with dysexecutive and behavioral changes (Possin et al., 2013). Similarly, a substantial proportion of bvFTD patients, including pathologically confirmed patients, have been reported to show levels of amnesia similar to those found in AD (Hornberger et al., 2010; Hornberger and Piguet, 2012; Bertoux et al., 2014).

These findings increase the challenge for clinicians in distinguishing between these two diseases at first presentation. One potential aid to clinical diagnosis would be the use of machine/statistical learning algorithms that objectively interpret supportive diagnostic information (e.g., neuroimaging, cognition) alongside the core diagnostic features. Such classifiers have recently been shown to accurately distinguish AD patients from healthy controls (Zhang et al., 2011; Zhou et al., 2014). However, classification against healthy individuals has limited clinical utility, as distinguishing patients with neurodegeneration from healthy individuals is relatively straightforward. A more useful application would be to employ machine learning algorithms for the diagnostic distinction between different neurodegenerative diseases.

The current study addresses this issue by employing a Naïve Bayes classifier model to distinguish between individuals with clinically diagnosed AD or bvFTD in a large clinical sample, and to automatically separate these two disease classes from healthy age-matched controls at clinical presentation. Critically, a subset of patients had pathologically confirmed diagnoses. Finally, to avoid circularity, the algorithm did not use any core diagnostic features (such as the Cambridge Behavioural Inventory) to distinguish patients, because these features were used in the initial clinical diagnosis, which provided the reference against which the algorithm's performance was compared (except for the pathologically confirmed cases, for which pathology provided the final diagnosis). Instead, the algorithm used only supportive diagnostic features (i.e., neuroimaging of atrophy and neuropsychology). Our findings thus illustrate, for the first time, how supportive information can aid the clinical diagnosis of these similar and diagnostically challenging neurodegenerative conditions.

Methods

Participants

A total of 166 participants were selected (54 AD; 55 bvFTD; 57 healthy controls) from the FRONTIER (Frontotemporal Dementia Research Group) patient database, Sydney, Australia. All bvFTD patients met current consensus criteria (Rascovsky et al., 2011) with insidious onset, decline in social behavior and personal conduct, emotional blunting, and loss of insight. Patients with a known genetic mutation associated with bvFTD were not included in the study. All AD patients met revised NINCDS-ADRDA diagnostic criteria for probable AD (Dubois et al., 2007). Pathological confirmation of diagnosis was available for 22 patients (9 AD; 13 bvFTD).

Healthy controls were selected from a healthy volunteer panel or were spouses/carers of patients. The South Eastern Sydney and Illawarra Area Health Service and the University of New South Wales human ethics committees approved the study. Written informed consent was obtained from the participant or the primary caregiver in accordance with the Declaration of Helsinki.

Neuropsychological Assessment

All participants underwent cognitive screening using the Addenbrooke's Cognitive Examination-Revised (ACE-R; Mioshi et al., 2006). The ACE-R yields a total score out of 100 and includes subsections for attention/orientation, memory, verbal fluency, language, and visuospatial abilities.

The frontotemporal dementia rating scale (FRS; Mioshi et al., 2010) was used to determine patients' disease severity. The Cambridge Behavioural Inventory (CBI; Wedderburn et al., 2008) was used as a behavioral disturbance measure.

Patients also underwent a comprehensive cognitive assessment, including the Hayling test (Burgess and Shallice, 1996), which assesses inhibition/response suppression; the backward digit span, evaluating working memory; lexical letter fluency tasks, assessing verbal initiation; the Trail Making Test (Reitan, 1955), evaluating flexibility; recall of the Rey Complex Figure (Rey, 1941) and the Doors and People test (Baddeley et al., 1995), two visual memory tests; the Rey Auditory Verbal Learning Test (RAVLT; Rey, 1964), assessing verbal memory; and a facial emotion recognition test based on Ekman faces (Ekman and Friesen, 1975). The cognitive assessments therefore covered a broad range of cognitive domains: executive function (Digit Span; Hayling; FAS letter fluency; Trails), memory (Rey Figure recall; RAVLT recall and recognition; Doors and People), and emotion recognition (Ekman faces test). Total scores or subscores of each test were used in the Bayesian classification analysis.

MRI Acquisition and Analysis

All patients and controls underwent the same imaging protocol to obtain whole-brain T1-weighted images using a 3T Philips MRI scanner with a standard quadrature head coil (8 channels). The 3D T1-weighted sequences were acquired as follows: coronal orientation, 1 × 1 mm² in-plane resolution, slice thickness 1 mm, TR/TE = 5.8/2.6 ms. MRI analysis was conducted using a voxel-based morphometry (VBM) pipeline on the three-dimensional T1-weighted scans, using the FSL-VBM toolbox in the FMRIB Software Library package (http://www.fmrib.ox.ac.uk/fsl/). The first step involved extracting the brain from all scans using the BET algorithm in the FSL toolbox, with a fractional intensity threshold of 0.22. Each scan was visually checked after brain extraction to ensure both that no brain matter was excluded and that no non-brain matter was included (e.g., skull, optic nerve, dura mater; Smith et al., 2004).

A gray matter template specific to this study was then built by selecting 20 scans from each group (total n = 60). An equal number of scans per group was used to ensure equal representation and thus avoid potential bias toward any single group's topography during registration. Template scans were then registered to the Montreal Neurological Institute standard space (MNI 152) using a non-linear b-spline representation of the registration warp field, resulting in a study-specific gray matter template at 2 × 2 × 2 mm³ resolution in standard space (Rueckert et al., 1999; Andersson et al., 2007). In parallel, the brain-extracted scans were processed with FMRIB's Automated Segmentation Tool (FAST v4.0) to segment tissue into cerebrospinal fluid (CSF), gray matter, and white matter. Specifically, this was done via a hidden Markov random field model and an associated expectation-maximization algorithm (Zhang et al., 2001).

The FAST algorithm also corrected for spatial intensity variations, such as bias field or radio-frequency inhomogeneities in the scans, resulting in partial volume maps of the scans. Next, the gray matter partial volume maps were non-linearly registered to the study-specific template via a non-linear b-spline representation of the registration warp. These maps were then modulated by dividing by the Jacobian of the warp field, to correct for any contraction/enlargement caused by the non-linear component of the transformation (Good et al., 2002). After normalization and modulation, the gray matter maps were smoothed using an isotropic Gaussian kernel (standard deviation = 3 mm; full width at half maximum = 8 mm).
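For reference, the standard deviation σ of a Gaussian kernel and its full width at half maximum are related by FWHM = 2√(2 ln 2)·σ ≈ 2.355·σ. The short Python sketch below is a generic conversion (not part of the FSL pipeline); under this relation, σ = 3 mm corresponds to a FWHM of about 7.1 mm, and a FWHM of 8 mm to σ ≈ 3.4 mm.

```python
import math

# FWHM of a Gaussian = 2 * sqrt(2 * ln 2) * sigma (about 2.355 * sigma)
FWHM_PER_SIGMA = 2.0 * math.sqrt(2.0 * math.log(2.0))

def sigma_to_fwhm(sigma_mm: float) -> float:
    """Full width at half maximum (mm) of a Gaussian kernel with std sigma_mm."""
    return FWHM_PER_SIGMA * sigma_mm

def fwhm_to_sigma(fwhm_mm: float) -> float:
    """Standard deviation (mm) of a Gaussian kernel with the given FWHM."""
    return fwhm_mm / FWHM_PER_SIGMA

print(f"sigma = 3 mm -> FWHM = {sigma_to_fwhm(3.0):.2f} mm")  # 7.06 mm
print(f"FWHM = 8 mm -> sigma = {fwhm_to_sigma(8.0):.2f} mm")  # 3.40 mm
```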

Based on the known spread of pathology in bvFTD and AD (Seeley et al., 2008), we selected a priori a subset of brain regions from the normalized, smoothed gray matter maps for the Bayesian classification analysis. Region boundaries were defined using the cortical and subcortical Harvard-Oxford probabilistic atlases. The selected regions were: (1) amygdala; (2) hippocampus; (3) medial temporal lobe; (4) temporal pole; (5) dorsolateral prefrontal cortex (DLPFC); (6) ventromedial prefrontal cortex (VMPFC); (7) striatum; and (8) insula. For the selected regions, gray matter intensities were extracted and multiplied by the mean of the values in the smoothed registered gray matter to give total volume for each region and participant. The volumes were then corrected for total intracranial volume, as well as for age and gender.
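The text does not spell out the exact volume computation or the form of the covariate correction. A minimal NumPy sketch, assuming (hypothetically) that a region's gray matter volume is its mean partial-volume value multiplied by the region's size in 2 × 2 × 2 mm³ voxels, and that "corrected for" means regressing out total intracranial volume (TIV), age, and gender by ordinary least squares, could look like this. The function names are illustrative and are not FSL tools.

```python
import numpy as np

VOXEL_VOLUME_MM3 = 2.0 * 2.0 * 2.0  # 2 x 2 x 2 mm^3 voxels in standard space

def roi_gm_volume(gm_map: np.ndarray, roi_mask: np.ndarray) -> float:
    """Hypothetical ROI volume (mm^3): mean gray matter partial-volume value
    inside the atlas mask multiplied by the mask's volume."""
    mask = roi_mask.astype(bool)
    mean_gm = gm_map[mask].mean()
    region_volume = mask.sum() * VOXEL_VOLUME_MM3
    return float(mean_gm * region_volume)

def residualize(volumes: np.ndarray, covariates: np.ndarray) -> np.ndarray:
    """Remove the linear effect of covariates (e.g., TIV, age, gender coded 0/1)
    from each column of `volumes` (subjects x regions) by least squares.
    Column means are added back so values stay on a volume-like scale."""
    design = np.column_stack([np.ones(len(covariates)), covariates])
    beta, *_ = np.linalg.lstsq(design, volumes, rcond=None)
    return volumes - design @ beta + volumes.mean(axis=0)
```

Whether the regression is fitted on all participants or on controls only is a design choice the source does not specify; fitting on controls only is a common alternative.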

The brain images could, of course, be segmented into smaller sub-regions, for example into separate left and right hemisphere regions, but given the limited data set available for learning a pattern recognition model, this would risk overfitting during the training phase. We therefore conservatively limited the pool to eight MRI volumetric features.

Data Preparation

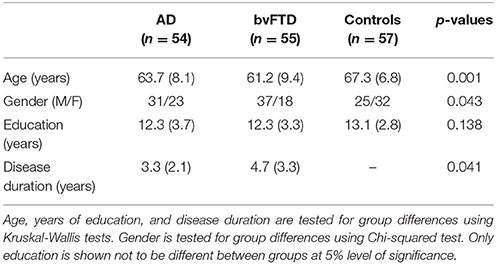

Participants were divided into three classes based on their disease classification (two disease classes, and one control class) as shown in Table 1.

Table 1. Three classes of data, which include two disease classes, Alzheimer's disease (AD) and behavioral variant frontotemporal dementia (bvFTD), and a control group.

For each participant, a vector of up to 25 numerical features was available, comprising the 8 MRI volumetric features and 17 neuropsychological features. These data were arranged in two data matrices, denoted Xscan and Xcog, respectively. The concatenation of both matrices was denoted Xall = (Xscan, Xcog). Each row represents one subject and each column one feature variable.
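The matrix layout described above can be sketched in NumPy as follows. The values are random placeholders rather than study data, and encoding an unavailable test score as NaN is an assumption about representation, chosen because it lets later steps skip missing entries explicitly.

```python
import numpy as np

# Illustrative shapes only: 8 MRI volumes and 17 neuropsychological scores per
# subject; the values are random placeholders, not study data.
rng = np.random.default_rng(0)
n_subjects = 5
X_scan = rng.normal(size=(n_subjects, 8))   # rows: subjects, columns: regions
X_cog = rng.normal(size=(n_subjects, 17))   # rows: subjects, columns: test scores
X_cog[1, 3] = np.nan                        # a missing test score is marked as NaN

X_all = np.hstack([X_scan, X_cog])          # X_all = (X_scan, X_cog), 25 columns
print(X_all.shape)                          # (5, 25)
print(int(np.isnan(X_all).sum()))           # 1
```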

Because a number of neuropsychological scores were unavailable for several subjects, the capacity of these cognitive assessments to discriminate AD from bvFTD is expected to be underestimated. A summary of the extent of this missing data is provided in Supplementary Table 1.

To compare the performance of a multivariate classifier model in discriminating the two disease classes, AD and bvFTD (and, in a second step, the three classes AD, bvFTD, and controls), using different combinations of the available features as input, the following analyses were performed.

Naïve Bayes Classification

The Naïve Bayes classification method was adopted in this study primarily for its ability to handle missing features, which occur for some of the neuropsychological assessments (Liu et al., 2005; Shi and Liu, 2011). A Naïve Bayes classifier is a simple probabilistic classifier based on the application of Bayes' theorem (described mathematically below) with the assumption of probabilistic independence between every pair of features. In practice this assumption rarely holds, as certain features can be correlated, but Naïve Bayes classifiers demonstrate remarkably robust performance on features that are not strictly independent (Zhang, 2004). Given a discrete class label $Y$ and $n$ features, $x_1$ through $x_n$, Bayes' theorem states the following relationship:

$$P(Y \mid x_1, \ldots, x_n) = \frac{P(Y)\, P(x_1, \ldots, x_n \mid Y)}{P(x_1, \ldots, x_n)}$$

where $P(Y \mid x_1, \ldots, x_n)$ is the posterior probability of class $Y$ being correct given the observed features in the vector $X = (x_1, \ldots, x_n)$. Using the naïve assumption that the features are conditionally independent of each other given the class,

$$P(x_i \mid Y, x_1, \ldots, x_{i-1}, x_{i+1}, \ldots, x_n) = P(x_i \mid Y),$$

the relationship is simplified to:

$$P(Y \mid x_1, \ldots, x_n) = \frac{P(Y) \prod_{i=1}^{n} P(x_i \mid Y)}{P(x_1, \ldots, x_n)} \propto P(Y) \prod_{i=1}^{n} P(x_i \mid Y)$$

Because the denominator does not depend on $Y$, the estimated class label output as a decision from the classifier model, denoted $\hat{Y}$, is the one that maximizes the expression $P(Y) \prod_{i=1}^{n} P(x_i \mid Y)$:

$$\hat{Y} = \arg\max_{Y} \; P(Y) \prod_{i=1}^{n} P(x_i \mid Y)$$

The Naïve Bayes classifier used two steps to classify data, implemented with the MATLAB Statistics and Machine Learning Toolbox 2014b (MathWorks, Natick, MA, USA):

• Training step: Using training data, the method estimates the parameters of the probability distributions of xi for each Y, assuming that the xi are conditionally independent; that is, for each disease class Y, and each feature variable xi, the probability density P(xi|Y) is approximated with the available training data. In lay terms, P(xi|Y) is the probability of observing a value for the variable xi given a particular disease class. The feature xi can be either discrete or continuous, and either would suggest a different model for the probability density function, P(xi|Y). Since distributions are assumed independent, during training, missing instances for a particular feature are not included in the frequency count (for discrete variables) or distribution estimate (for continuous variables, using a Gaussian smoothing kernel function).

• Prediction step: For any unseen testing data, the method uses the previously estimated distributions to compute the value $P(Y) \prod_{i=1}^{n} P(x_i \mid Y)$, which is proportional to the posterior probability $P(Y \mid x_1, \ldots, x_n)$ (as shown above), for each possible class $Y$; either $Y \in$ {AD, bvFTD} in the first analysis or $Y \in$ {AD, bvFTD, control} in the second. The classifier then chooses the winning class, $\hat{Y}$, as the class that maximizes this value. During testing, for observations with some (but not all) features missing, the algorithm estimates the class label using only the non-missing features.
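The two steps above can be sketched in plain NumPy. This is an illustrative re-implementation under stated assumptions, not the authors' MATLAB code: the class name `KernelNaiveBayes` is hypothetical, each class-conditional density P(x_i|Y) is a Gaussian kernel density estimate with a Silverman rule-of-thumb bandwidth (MATLAB may choose its bandwidth differently), and NaN marks a missing score. Scores are summed in log space, which leaves the arg max unchanged while avoiding numerical underflow when many small densities are multiplied.

```python
import numpy as np

def silverman_bandwidth(samples: np.ndarray) -> float:
    """Rule-of-thumb kernel bandwidth (an assumption, not MATLAB's exact rule)."""
    n = len(samples)
    sd = np.std(samples, ddof=1) if n > 1 else 1.0
    return max(1.06 * sd * n ** (-1 / 5), 1e-3)  # floor avoids a zero bandwidth

class KernelNaiveBayes:
    """Naive Bayes with one Gaussian-kernel density per (class, feature).
    NaN entries are skipped in both training and prediction."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "KernelNaiveBayes":
        self.classes_ = np.unique(y)
        self.log_priors_ = {c: np.log(np.mean(y == c)) for c in self.classes_}
        # Training step: keep the observed (non-NaN) values of each feature per class
        self.samples_ = {}
        for c in self.classes_:
            Xc = X[y == c]
            self.samples_[c] = [Xc[~np.isnan(Xc[:, j]), j] for j in range(X.shape[1])]
        return self

    @staticmethod
    def _log_kde(value: float, samples: np.ndarray) -> float:
        """log P(x_i = value | Y) from a Gaussian kernel density estimate."""
        h = silverman_bandwidth(samples)
        z = (value - samples) / h
        density = np.mean(np.exp(-0.5 * z ** 2)) / (h * np.sqrt(2 * np.pi))
        return float(np.log(density + 1e-300))  # guard against log(0)

    def predict(self, X: np.ndarray) -> np.ndarray:
        labels = []
        for row in X:
            # Prediction step: use only features observed for this subject that
            # also have training values in every class
            usable = [j for j, v in enumerate(row) if not np.isnan(v)
                      and all(len(self.samples_[c][j]) > 0 for c in self.classes_)]
            # log P(Y) + sum_i log P(x_i | Y): the log of the naive Bayes numerator
            scores = {c: self.log_priors_[c]
                      + sum(self._log_kde(row[j], self.samples_[c][j]) for j in usable)
                      for c in self.classes_}
            labels.append(max(scores, key=scores.get))  # arg max over classes
        return np.array(labels)
```

Usage, with synthetic data: `KernelNaiveBayes().fit(X_train, y_train).predict(X_test)`, where test rows may contain NaN for unavailable scores.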

10-Fold Cross-validation

Rather than relying on a single split of the data into training and testing sets, 10-fold cross-validation was used to obtain a better estimate of how the model would behave on a general data set, by averaging out the variation introduced by selecting any one training/testing split. The 109 AD and bvFTD subjects (or 166 subjects when also including controls) were randomly divided into 10 similar-sized groups such that the proportion of subjects from each disease class was approximately equal within each group. In each of the 10 cross-validation runs, nine groups were used for training and the remaining group was withheld for testing, so that each group served as testing data exactly once. Within each run, the classifier was trained on the nine training groups as outlined above. Because removing exceptionally noisy or highly correlated features before training might improve performance in the testing phase, the following feature selection procedure was performed as a pre-processing step within the training phase of each fold, without using any of that fold's testing data.
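A minimal sketch of the stratified 10-fold scheme described above, assuming NumPy and hypothetical helper names (`stratified_kfold`, `cross_validate`). Each class is shuffled and dealt round-robin across folds, so class proportions are roughly equal in every fold and fold sizes differ by at most one; `fit_predict` stands for any function that trains on the nine training folds and labels the held-out fold.

```python
import numpy as np

def stratified_kfold(y: np.ndarray, n_splits: int = 10, seed: int = 0) -> list:
    """Split subject indices into n_splits test folds with roughly equal class
    proportions: each class is shuffled and dealt round-robin across folds."""
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(n_splits)]
    position = 0  # continues across classes so fold sizes differ by at most one
    for c in np.unique(y):
        for i in rng.permutation(np.flatnonzero(y == c)):
            folds[position % n_splits].append(int(i))
            position += 1
    return [np.array(sorted(f), dtype=int) for f in folds]

def cross_validate(X, y, fit_predict, n_splits=10, seed=0):
    """Each fold is the test set exactly once; the remaining folds form the
    training set. Returns pooled out-of-fold predictions for every subject."""
    y_pred = np.empty_like(y)
    for test_idx in stratified_kfold(y, n_splits, seed):
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        y_pred[test_idx] = fit_predict(X[train], y[train], X[test_idx])
    return y_pred
```

With 166 subjects this yields six folds of 17 and four of 16, consistent with the fold sizes given for the three-class analysis; with 109 subjects, nine folds of 11 and one of 10.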

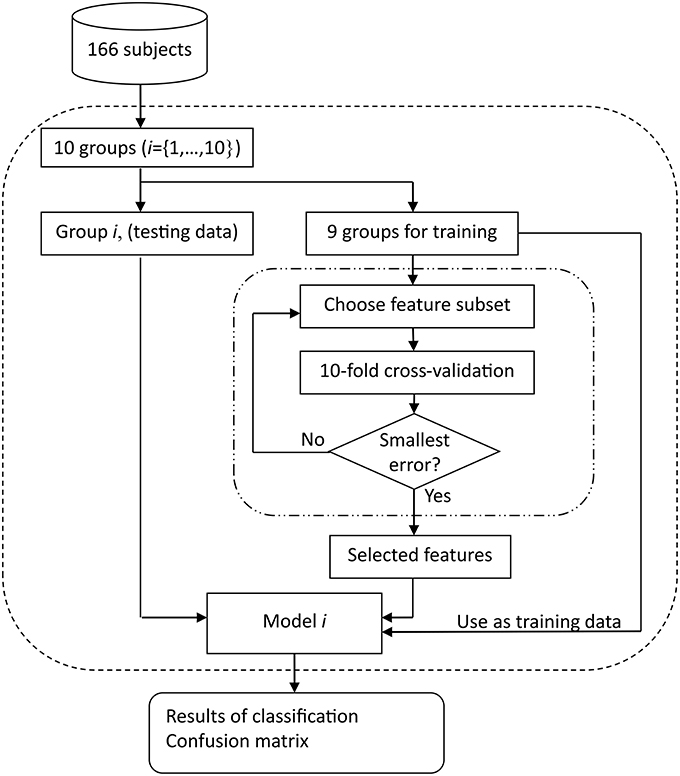

Feature Selection

As mentioned above, each training set contained data from nine subject groups. Starting with an empty candidate feature subset, features were added sequentially to the candidate subset until adding further features no longer improved the classification accuracy; this accuracy was estimated using a second (inner) 10-fold cross-validation procedure within the training set to evaluate each candidate feature subset. Figure 1 illustrates the entire process of classification and feature selection.

Figure 1. Block diagram of training and testing of Naïve Bayes classification model. One outer loop performs the testing, using 10 different groups with approximately 16 or 17 subjects in each group when n = 166 for three-way classification of AD, bvFTD, and control. The nine groups used for training in each run are subject to further feature selection to remove redundant or noisy features; each candidate feature subset is evaluated using an inner 10-fold cross-validation procedure.
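The feature selection loop can be sketched as greedy sequential forward selection wrapped around an inner stratified cross-validation. This is an illustration under assumptions, not the study's MATLAB implementation: the helper names are hypothetical, and a simple nearest-centroid classifier stands in for the Naïve Bayes model to keep the example short (any `fit_predict(X_train, y_train, X_test)` function can be plugged in). As in the text, selection stops when no remaining feature improves inner-CV accuracy.

```python
import numpy as np

def inner_cv_accuracy(X, y, fit_predict, n_splits=10, seed=0):
    """Accuracy of `fit_predict` estimated by stratified k-fold CV, using only
    the (outer) training data passed in."""
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for c in np.unique(y):  # stratify: spread each class across the folds
        idx = rng.permutation(np.flatnonzero(y == c))
        fold_of[idx] = np.arange(len(idx)) % n_splits
    correct = 0
    for k in range(n_splits):
        test = fold_of == k
        if not test.any():
            continue
        correct += np.sum(fit_predict(X[~test], y[~test], X[test]) == y[test])
    return correct / len(y)

def forward_selection(X, y, fit_predict, n_splits=10, seed=0):
    """Start from an empty subset; repeatedly add the feature that most improves
    inner-CV accuracy; stop when no addition improves it."""
    selected, best_acc = [], 0.0
    remaining = list(range(X.shape[1]))
    while remaining:
        scores = {j: inner_cv_accuracy(X[:, selected + [j]], y, fit_predict, n_splits, seed)
                  for j in remaining}
        j_best = max(scores, key=scores.get)
        if scores[j_best] <= best_acc:
            break  # no candidate improves accuracy
        selected.append(j_best)
        remaining.remove(j_best)
        best_acc = scores[j_best]
    return selected, best_acc

def nearest_centroid(X_train, y_train, X_test):
    """Stand-in classifier (hypothetical): assign each test row to the class
    with the closest training mean."""
    classes = np.unique(y_train)
    centroids = np.array([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(dists, axis=1)]
```

Because the subset is chosen separately within each outer training set, the selected features can differ between outer folds, which is what the histograms in Figures 2 and 3 summarize.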

Performance Metrics

Classification performance was evaluated using both classification accuracy and Cohen's kappa statistic (Cohen, 1968). Approximate confidence intervals for accuracy are also reported. They were derived from the accuracy calculated from the confusion matrix (pooling the classification results from all 10 cross-validation folds) and the number of subjects for which a classification result was obtained, under the approximation that all results were drawn from a single fixed classifier model (rather than from the cross-validation procedure actually used). These intervals are therefore approximate: interval estimation assumes independent classification results, which does not strictly hold here, because test data in one fold also serve as training data for the other folds.
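A minimal sketch of these metrics computed from a pooled confusion matrix. Two choices here are assumptions rather than details stated in the source: the kappa is the unweighted form, and the confidence interval is a normal-approximation (Wald) interval, p ± z·√(p(1 − p)/n). The confusion matrix in the usage line is hypothetical, not a study result.

```python
import math
import numpy as np

def accuracy_kappa_ci(confusion, z=1.96):
    """Accuracy, unweighted Cohen's kappa, and a Wald confidence interval for
    accuracy from a pooled confusion matrix (rows: true, columns: predicted)."""
    C = np.asarray(confusion, dtype=float)
    n = C.sum()
    p_o = np.trace(C) / n                                  # observed agreement = accuracy
    p_e = (C.sum(axis=0) * C.sum(axis=1)).sum() / n ** 2   # chance agreement from marginals
    kappa = (p_o - p_e) / (1 - p_e)
    half_width = z * math.sqrt(p_o * (1 - p_o) / n)
    return p_o, kappa, (p_o - half_width, p_o + half_width)

# Hypothetical 2 x 2 example: accuracy 0.8, kappa 0.6, 95% CI about (0.72, 0.88)
acc, kappa, ci = accuracy_kappa_ci([[40, 10], [10, 40]])
```

Kappa discounts the agreement expected by chance from the class marginals, which is why it is reported alongside raw accuracy.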

Evaluating Three Different Feature Sets

To compare the usefulness of the MRI volume features and the neuropsychological assessment (cognitive and neuropsychiatric) features, three different starting feature sets (before feature selection begins), Xscan, Xcog, and Xall, were evaluated using the procedure shown in Figure 1.

Results

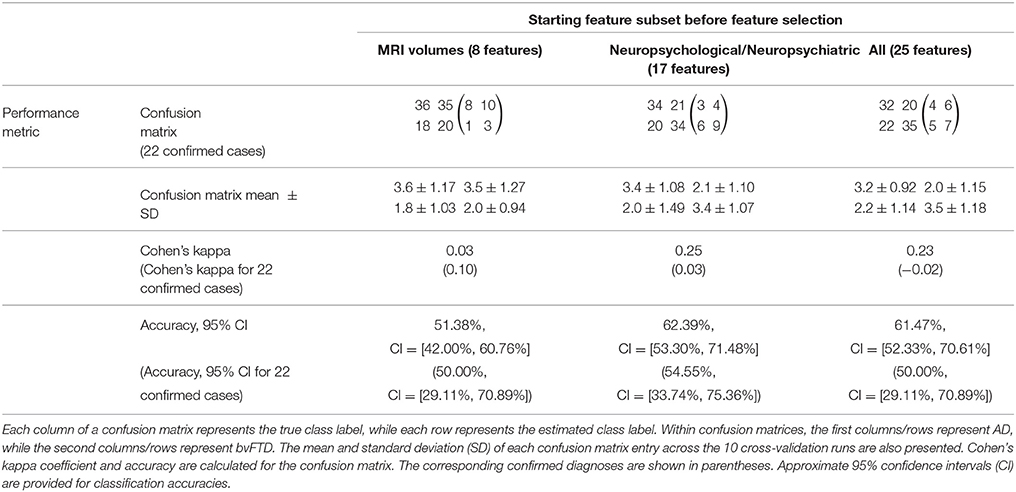

Classifying AD and bvFTD

Table 2 shows the classification results in discriminating AD and bvFTD (without considering the control group). Using the MRI volume features as input, the machine learning algorithm correctly classified 51.4% of bvFTD and AD patients at presentation (50% when considering only the 22 pathologically confirmed cases). In contrast, the neuropsychological scores achieved higher discrimination accuracy, correctly identifying 62.4% of bvFTD and AD cases. Not surprisingly, given the low classification accuracy of the MRI volumes, the combined feature set (MRI volumes and neuropsychological scores) did not improve on the neuropsychological features alone, achieving a slightly lower 61.5% correct discrimination between bvFTD and AD.

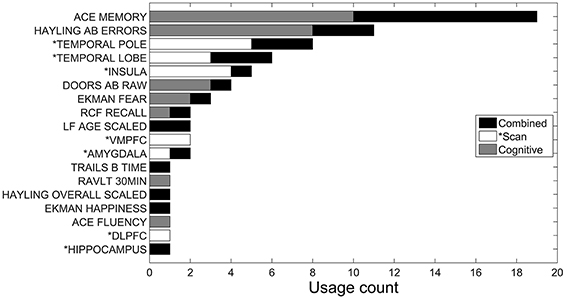

Figure 2 shows a histogram of the features selected in each of the 10 outer cross-validation runs, for each starting feature set (MRI volumes, neuropsychological assessment, or both combined). The more frequently a feature is selected, the more consistently it contributes to the classification task. The features contributing to successful discrimination varied considerably across runs. Using only MRI volume features (white bars in Figure 2), six of the eight MRI regions were selected at least once; the exceptions were the striatum (never selected when discriminating between AD and bvFTD, and therefore not shown in Figure 2) and the hippocampus. The most frequently selected regions were the temporal pole, insula, and temporal lobe. For the neuropsychological features (gray bars in Figure 2), 7 of the 17 were selected at least once. The ACE-R memory subscore, Hayling AB errors, Doors and People test, and facial emotion recognition of fear scores were each selected more than twice, and the ACE-R memory subscore and Hayling AB errors were selected more than twice as often as the next most frequently selected neuropsychological feature (Doors and People test scores).

Figure 2. Accumulated feature selection results of 10-fold cross validation in discriminating AD and bvFTD using three different feature sets: MRI volumes (*Scan), neuropsychological (Cognitive), and both combined. Y-axis shows the name of selected features and X-axis shows the accumulated count of a corresponding feature being selected over the 10-folds. Three sets of features are displayed in different colors.

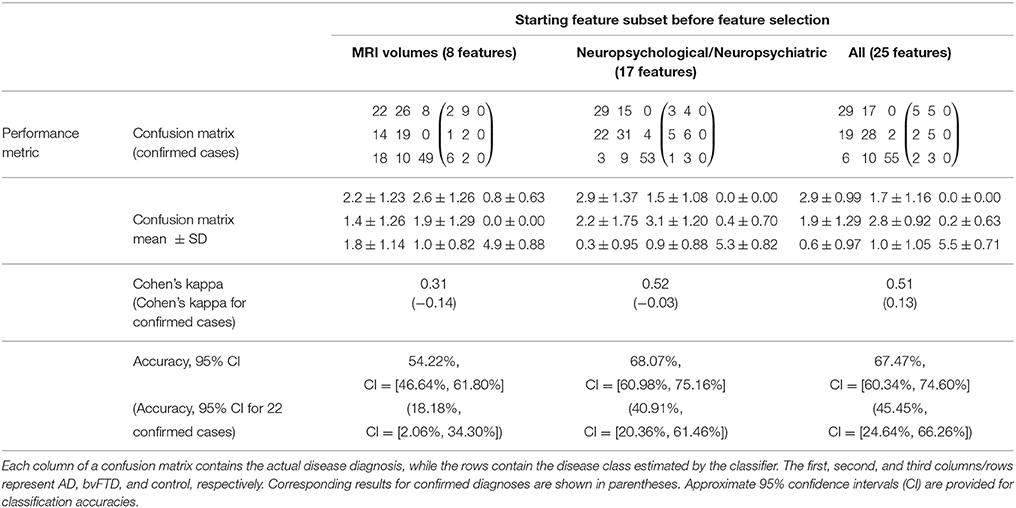

Classifying AD, bvFTD, and Controls

Table 3 shows the classification results in discriminating the AD, bvFTD, and control classes. MRI features achieved an accuracy of 54.2% (18.2% when considering only the 22 confirmed cases). As in the two-class analysis, the three-class classification performed better using neuropsychological features, with an accuracy of 68.1%. The combination of MRI and neuropsychological features achieved an accuracy of 67.5% (although the confidence intervals overlap almost entirely).

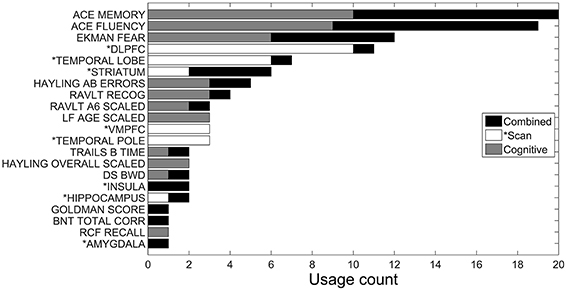

The corresponding feature selection results are shown in Figure 3. When using only MRI features, the most frequently selected features were the DLPFC, temporal lobe, VMPFC, and temporal pole. When using neuropsychological features, the most frequently selected features were the ACE-R memory and ACE-R fluency subscores as well as facial emotion recognition of fear. When all (neuropsychological and imaging) features were combined, these same three neuropsychological features remained among the most frequently selected; however, the DLPFC and temporal lobe (the two most frequently selected features when using only MRI features) were each selected in only one of the 10 cross-validation runs. This suggests that the neuropsychological features already carried much of the same discriminative information as the scan features. Interestingly, when scan and neuropsychological features were combined, the striatum was selected twice as often (rising from two to four selections).

Figure 3. Accumulated feature selection results of 10-fold cross validation in discriminating AD, bvFTD, and control classes using three different feature sets: MRI volumes (*Scan), neuropsychological (Cognitive), and both combined. Y-axis shows the name of selected features and X-axis shows the accumulated count of a corresponding feature being selected over the 10-folds. Three sets of features are displayed in different colors.

Discussion

To our knowledge, this is the first study to use machine learning algorithms to differentiate AD from bvFTD specifically. Results showed that neuropsychological scores, particularly tests of emotion recognition, memory screening, and executive function, achieved the best classification results. Cortical volumes of a subset of frontal, temporal, and insular regions were the most distinctive anatomical features for distinguishing the groups.

Virtually all previous machine learning studies in neurodegeneration have focused on AD and its prodromal stages (Walhovd et al., 2010; Cuingnet et al., 2011; Hinrichs et al., 2011; Zhang et al., 2011; Zhou et al., 2014), and only one study has examined discriminating AD from the broader clinical spectrum of frontotemporal lobar degeneration (FTLD; Klöppel et al., 2008). In addition, virtually all these studies have focused mostly on neuroimaging features, and none has attempted to distinguish specifically between AD and bvFTD, whereas the current study additionally used neuropsychological features and included a pathologically confirmed subgroup of patients.

On a cognitive level, the most salient neuropsychological features for classifying AD and bvFTD were measures of emotion recognition (Ekman faces), inhibition (Hayling), visual episodic memory (Doors and People), and verbal memory screening (ACE-R memory). These findings corroborate previous results showing that, at presentation, emotion recognition deficits and disinhibition are hallmarks of bvFTD while being relatively absent in AD (Hornberger et al., 2011; Bertoux et al., 2015). In contrast, the prevalent episodic memory problems of AD patients were most distinctive for this group, although some bvFTD patients can show impaired episodic memory performance (Hornberger et al., 2010; Bertoux et al., 2014). More specifically, a subgroup of bvFTD patients can show severe episodic memory problems, which limits the utility of episodic memory in the diagnostic distinction between the two diseases. Future machine learning studies comparing such amnestic bvFTD patients with AD patients would be important to confirm this notion. Finally, similar neuropsychological features discriminated the groups when controls were added to the analysis, further supporting the robustness of the findings.

On an anatomical level, the temporal pole and insula were the most distinctive features for distinguishing AD and bvFTD. The insula has previously been shown to be among the earliest regions to become atrophic in bvFTD (Perry et al., 2006) and is selectively affected compared with AD. The identification of the temporal lobe as a distinguishing feature is an intriguing result, as both AD and bvFTD show significant changes in this region. Nevertheless, atrophy of the temporal pole, which accounts for a large part of the temporal lobe, might explain this finding, as it is strongly associated with bvFTD pathology (Whitwell et al., 2009). The atrophy findings are therefore dominated by the bvFTD atrophy pattern spanning temporal pole and insular regions, whereas, interestingly, prefrontal regions (DLPFC, VMPFC) and medial temporal lobe regions contributed little to classification accuracy. Consistent with this, volumes of the VMPFC and DLPFC, as well as of the temporal lobe and pole, contributed strongly to classification only in the analysis that included controls.

Interestingly, neuropsychological features outperformed cortical volume features in classifying bvFTD and AD (62.4 vs. 51.4% for neuropsychological and cortical volume features, respectively). More intriguingly, combining atrophy and neuropsychological features did not increase classification accuracy. This suggests redundancy among the variables, with neuroimaging and cognitive features seemingly representing the same dysfunction. Finally, similar classification results were observed when the analysis was restricted to the pathologically confirmed cases, for which the neuropsychological measures showed a classification rate of 54.6% and atrophy features an even lower accuracy of 50.0%. The difference in sample size between the overall group (n = 109) and the pathologically confirmed cases (n = 22) may explain the difference in classification accuracy for the neuropsychological features between the analyses (62.4% for n = 109 and 54.6% for n = 22). Still, it is important that classification results were relatively similar in the pathological subgroup, as pathological confirmation remains the gold standard of definite diagnosis in both diseases.

It is interesting to note that the previous study by Klöppel et al. (2008) achieved much higher sensitivity and specificity (94.7 and 83.3%, respectively) using MRI atrophy contrasts between AD and FTLD, showing that parietal and frontal changes were particularly informative for identifying AD and FTLD, respectively. However, the inclusion of language-variant FTLD together with behavioral-variant FTLD, as well as the exclusion of bvFTD patients with memory impairment, could explain the difference from our results, as AD and bvFTD have been shown to overlap to a large degree on scan-based measures (Hornberger and Piguet, 2012; Hornberger et al., 2012; De Souza et al., 2013), whereas other FTLD clinical subtypes (sv-FTD; nfv-PPA) show more distinct scan features (Gorno-Tempini et al., 2011). Another key difference between Klöppel et al.'s study and ours is that we used more specific regions (e.g., VMPFC) as neuroimaging features instead of entire cortical lobes (e.g., frontal lobe), which may have lowered the overall discriminative power.

Another novelty of our study was the use of a three-way classification (AD, bvFTD, and controls) in a post-hoc analysis, which allowed the patient groups to be contrasted with controls simultaneously. While these results cannot be compared directly with other reports in the literature, an approximate comparison can be made with several reported attempts to distinguish AD from controls. Previous studies showed good sensitivity and specificity (>80% sensitivity and >90% specificity) of imaging measures for distinguishing AD from controls (Hamelin et al., 2015). In our results (Table 3), using the neuroimaging features resulted in 8 healthy controls being erroneously classified as AD patients, and 28 patients (18 AD and 10 bvFTD) being wrongly classified as controls. In contrast, using neuropsychological scores in the model resulted in far fewer errors when classifying controls and patients. Interestingly, these results are similar to those of Hinrichs et al. (2011), who reported that both cognitive and neuroimaging features contributed to predicting which MCI patients progressed to full-blown AD, with neuroimaging features contributing slightly more to the classification. As noted above, it is currently unclear how closely cognitive and neuroimaging atrophy features map onto each other; however, even if there is some redundancy, a complementary diagnostic and classification approach can potentially corroborate a diagnosis based on only one feature type. There is clearly great scope to explore this further, in particular for distinguishing neurodegenerative conditions from each other.

Despite these promising results, our findings have limitations. In particular, only a subset of patients had a pathologically confirmed diagnosis; ideally, all patients would have had pathological confirmation. Still, the pathologically confirmed participants showed results similar to those of the clinical cohort. A further limitation may lie in the selection of specific neuroimaging and cognitive features for the analysis. As outlined in the Methods, the a priori rationale was to include features that have been shown to be most sensitive and specific to the respective pathologies. However, this means that other features, which could potentially have allowed better classification, were not considered in the current analysis. There may also be a small positive bias in the results due to the registration of brain images prior to the machine-learning analysis performed here (that is, images were normalized using available data outside of the cross-validation loop); however, omitting this registration would likely introduce a larger negative bias, because age and gender covariates also correlate with tissue volumes. Missing data among the neuropsychological assessment features will also have lowered the reported accuracy relative to what would be achievable with complete data; hence, neuropsychological assessment could outperform MRI in this diagnostic task by a greater margin than presented here. Finally, although the sample size is large by the standards of clinical studies, it poses a challenge for modeling techniques such as the one used here. In particular, a small sample size relative to the number of features can make the observed performance worse than the true performance achievable in practice, owing to overfitting during feature selection and training, i.e., large variation in the features selected between cross-validation runs. It would therefore be important to replicate our results in larger, independent samples. Still, we believe that the current findings are important and highlight how, in the near future, clinicians could use novel computational techniques at the single-patient level to aid their clinical diagnoses.
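Naïve Bayes can, in principle, still classify a case with missing neuropsychological scores by dropping the likelihood terms of the unobserved features, a property examined by Shi and Liu (2011). The sketch below is a hypothetical Gaussian Naïve Bayes written in Python with NumPy; it is not the implementation used in this study, whose handling of missing values is not described in this section. It estimates per-class means and variances while ignoring NaN entries, and at prediction time treats each missing feature as contributing log(1) = 0 to the class score. The class name `MissingAwareGaussianNB`, the variance floor, and the toy data are assumptions for illustration.

```python
import numpy as np


class MissingAwareGaussianNB:
    """Hypothetical Gaussian naive Bayes that ignores missing (NaN) features."""

    def __init__(self, var_floor=1e-6):
        # Small constant added to every variance to avoid division by zero.
        self.var_floor = var_floor

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        # Class priors estimated from training-set frequencies.
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        # Per-class, per-feature statistics over observed values only.
        # (A feature missing for every member of a class would give NaN here.)
        self.mean_ = np.array([np.nanmean(X[y == c], axis=0) for c in self.classes_])
        self.var_ = np.array([np.nanvar(X[y == c], axis=0) for c in self.classes_])
        self.var_ = self.var_ + self.var_floor
        return self

    def predict_log_joint(self, X):
        """Return log p(class) + sum of log p(feature | class) over observed features."""
        X = np.asarray(X, dtype=float)
        observed = ~np.isnan(X)                  # shape (n_samples, n_features)
        X_filled = np.where(observed, X, 0.0)    # placeholder, masked out below
        # Broadcast to shape (n_samples, n_classes, n_features).
        diff = X_filled[:, None, :] - self.mean_[None, :, :]
        log_pdf = -0.5 * (np.log(2.0 * np.pi * self.var_)[None, :, :]
                          + diff ** 2 / self.var_[None, :, :])
        # A missing feature contributes 0 to the log score (it is marginalized out).
        log_pdf = np.where(observed[:, None, :], log_pdf, 0.0)
        return self.log_prior_[None, :] + log_pdf.sum(axis=2)

    def predict(self, X):
        return self.classes_[np.argmax(self.predict_log_joint(X), axis=1)]


# Synthetic toy data (two features, two classes); not data from this study.
TOY_X = [[0.0, 0.0], [0.2, -0.1], [-0.1, 0.1], [np.nan, 0.05],
         [3.0, 3.0], [3.2, 2.9], [2.9, np.nan], [3.1, 3.1]]
TOY_Y = ["AD"] * 4 + ["bvFTD"] * 4

if __name__ == "__main__":
    clf = MissingAwareGaussianNB().fit(TOY_X, TOY_Y)
    # Each query case has one feature missing.
    print(clf.predict([[0.1, np.nan], [np.nan, 3.0]]))
```

Under the naïve conditional-independence assumption, dropping a term is equivalent to integrating that feature out, which is appropriate if values are missing at random; it does, however, ignore any information carried by the pattern of missingness itself.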

Taken together, this study used a machine learning classifier to distinguish AD and bvFTD. Although the findings are promising, the separability of the three groups, and in particular of the two patient groups, was lower than expected. Cortical volume in temporo-insular regions yielded a classification accuracy of 51.4% between AD and bvFTD, whereas neuropsychological scores of emotion recognition, cognitive inhibition, and memory reached approximately 62.4% accuracy. These results suggest that machine-learning classifiers for AD and bvFTD should rely more on cognitive performance than on cortical volumes, and that such classifiers can provide clinicians with objective supportive information under diagnostic uncertainty.

Author Contributions

JW and SR analyzed the data and developed the model. MB, JH, and MH conducted the experiments and obtained data from patients. All co-authors contributed to writing and proofreading the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported in part by funding to ForeFront, a collaborative research group dedicated to the study of frontotemporal dementia and motor neuron disease, from the National Health and Medical Research Council (NHMRC) (APP1037746) and the Australian Research Council (ARC) Centre of Excellence in Cognition and its Disorders Memory Node (CE11000102).

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fnagi.2016.00119

References

Andersson, J. L. R., Jenkinson, M., and Smith, S. (2007). Non-Linear Optimisation. FMRIB Technical Report TR07JA2. Available online at: http://www.fmrib.ox.ac.uk/analysis/techrep

Baddeley, A. D., Wilson, B. A., and Kopelman, M. D. (1995). Handbook of Memory Disorders. London: John Wiley and Sons Ltd.

Bertoux, M., de Souza, L. C., Corlier, F., Lamari, F., Bottlaender, M., Dubois, B., et al. (2014). Two distinct amnesic profiles in behavioral variant frontotemporal dementia. Biol. Psychiatry 75, 582–588. doi: 10.1016/j.biopsych.2013.08.017

Bertoux, M., de Souza, L. C., Sarazin, M., Funkiewiez, A., Dubois, B., and Hornberger, M. (2015). How preserved is emotion recognition in Alzheimer disease compared with behavioral variant frontotemporal dementia? Alzheimer Dis. Assoc. Disord. 29, 154–157. doi: 10.1097/WAD.0000000000000023

Burgess, P. W., and Shallice, T. (1996). Response suppression, initiation and strategy use following frontal lobe lesion. Neuropsychologia 34, 263–276. doi: 10.1016/0028-3932(95)00104-2

Cohen, J. (1968). Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol. Bull. 70, 213–220. doi: 10.1037/h0026256

Cuingnet, R., Gerardin, E., Tessieras, J., Auzias, G., Lehéricy, S., Habert, M. O., et al. (2011). Automatic classification of patients with Alzheimer's disease from structural MRI: a comparison of ten methods using the ADNI database. Neuroimage 56, 766–781. doi: 10.1016/j.neuroimage.2010.06.013

De Souza, L. C., Chupin, M., Bertoux, M., Lehéricy, S., Dubois, B., Lamari, F., et al. (2013). Is hippocampal volume a good marker to differentiate Alzheimer's disease from frontotemporal dementia? J. Alzheimers Dis. 36, 57–66. doi: 10.3233/JAD-122293

Dubois, B., Feldman, H. H., Jacova, C., Dekosky, S. T., Barberger-Gateau, P., Cummings, J., et al. (2007). Research criteria for the diagnosis of Alzheimer's disease: revising the NINCDS-ADRDA criteria. Lancet Neurol. 6, 734–746. doi: 10.1016/S1474-4422(07)70178-3

Good, C. D., Scahill, R. I., Fox, N. C., Ashburner, J., Friston, K., Chan, D., et al. (2002). Automatic differentiation of anatomical patterns in the human brain: validation with studies of degenerative dementias. Neuroimage 17, 29–46. doi: 10.1006/nimg.2002.1202

Gorno-Tempini, M. L., Hillis, A. E., Weintraub, S., Kertesz, A., Mendez, M., Cappa, S. F., et al. (2011). Classification of primary progressive aphasia and its variants. Neurology 76, 1006–1014. doi: 10.1212/WNL.0b013e31821103e6

Hamelin, L., Bertoux, M., Bottlaender, M., Corne, H., Lagarde, J., Hahn, V., et al. (2015). Sulcal morphology as a new imaging marker for the diagnosis of early onset Alzheimer's disease. Neurobiol. Aging 36, 2932–2939. doi: 10.1016/j.neurobiolaging.2015.04.019

Hinrichs, C., Singh, V., Xu, G., and Johnson, S. C. (2011). Predictive markers for AD in a multi-modality framework: an analysis of MCI progression in the ADNI population. Neuroimage 55, 574–589. doi: 10.1016/j.neuroimage.2010.10.081

Hornberger, M., Geng, J., and Hodges, J. R. (2011). Convergent grey and white matter evidence of orbitofrontal cortex changes related to disinhibition in behavioural variant frontotemporal dementia. Brain 134(Pt 9), 2502–2512. doi: 10.1093/brain/awr173

Hornberger, M., and Piguet, O. (2012). Episodic memory in frontotemporal dementia: a critical review. Brain 135(Pt 3), 678–692. doi: 10.1093/brain/aws011

Hornberger, M., Piguet, O., Graham, A. J., Nestor, P. J., and Hodges, J. R. (2010). How preserved is episodic memory in behavioral variant frontotemporal dementia? Neurology 74, 472–479. doi: 10.1212/WNL.0b013e3181cef85d

Hornberger, M., Wong, S., Tan, R., Irish, M., Piguet, O., Kril, J., et al. (2012). In vivo and post-mortem memory circuit integrity in frontotemporal dementia and Alzheimer's disease. Brain 135, 3015–3025. doi: 10.1093/brain/aws239

Klöppel, S., Stonnington, C. M., Chu, C., Draganski, B., Scahill, R. I., Rohrer, J. D., et al. (2008). Automatic classification of MR scans in Alzheimer's disease. Brain 131, 681–689. doi: 10.1093/brain/awm319

Liu, P., Lei, L., and Wu, N. (2005). A quantitative study of the effect of missing data in classifiers, in The Fifth International Conference on Computer and Information Technology CIT, (Shanghai), 28–33.

Mioshi, E., Dawson, K., Mitchell, J., Arnold, R., and Hodges, J. R. (2006). The Addenbrooke's Cognitive Examination Revised (ACE-R): a brief cognitive test battery for dementia screening. Int. J. Geriatr. Psychiatry 21, 1078–1085. doi: 10.1002/gps.1610

Mioshi, E., Hsieh, S., Savage, S., Hornberger, M., and Hodges, J. R. (2010). Clinical staging and disease progression in frontotemporal dementia. Neurology 74, 1591–1597. doi: 10.1212/WNL.0b013e3181e04070

Perry, R. J., Graham, A., Williams, G., Rosen, H., Erzinçlioglu, S., Weiner, M., et al. (2006). Patterns of frontal lobe atrophy in frontotemporal dementia: a volumetric MRI study. Dement. Geriatr. Cogn. Disord. 22, 278–287. doi: 10.1159/000095128

Possin, K. L., Feigenbaum, D., Rankin, K. P., Smith, G. E., Boxer, A. L., Wood, K., et al. (2013). Dissociable executive functions in behavioral variant frontotemporal and Alzheimer dementias. Neurology 80, 2180–2185. doi: 10.1212/WNL.0b013e318296e940

Rascovsky, K., Hodges, J. R., Knopman, D., Mendez, M. F., Kramer, J. H., Neuhaus, J., et al. (2011). Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain 134(Pt 9), 2456–2477. doi: 10.1093/brain/awr179

Reitan, R. M. (1955). The relation of the trail making test to organic brain damage. J. Consult. Psychol. 19, 393–394. doi: 10.1037/h0044509

Rey, A. (1941). L'examen psychologique dans les cas d'encephalopathie traumatique. Arch. Psychol. 28, 215–285.

Rueckert, D., Sonoda, L. I., Hayes, C., Hill, D. L., Leach, M. O., Hose, D. R., et al. (1999). Nonrigid registration using free-form deformations: application to breast MR images. IEEE Trans. Med. Imaging 18, 712–721. doi: 10.1109/42.796284

Seeley, W. W., Crawford, R., Rascovsky, K., Kramer, J. H., Weiner, M., Miller, B. L., et al. (2008). Frontal paralimbic network atrophy in very mild behavioral variant frontotemporal dementia. Arch. Neurol. 65, 249–255. doi: 10.1001/archneurol.2007.38

Shi, H., and Liu, Y. (2011). Naïve Bayes vs. support vector machine: resilience to missing data, in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 7003 LNAI(PART 2) (Taiyuan), 680–687.

Smith, S. M., Jenkinson, M., Woolrich, M. W., Beckmann, C. F., Behrens, T. E., Johansen-Berg, H., et al. (2004). Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23(Suppl. 1), S208–S219. doi: 10.1016/j.neuroimage.2004.07.051

Walhovd, K. B., Fjell, A. M., Brewer, J., McEvoy, L. K., Fennema-Notestine, C., Hagler, D. J., et al. (2010). Combining MR imaging, positron-emission tomography, and CSF biomarkers in the diagnosis and prognosis of Alzheimer disease. Am. J. Neuroradiol. 31, 347–354. doi: 10.3174/ajnr.A1809

Wedderburn, C., Wear, H., Brown, J., Mason, S. J., Barker, R. A., Hodges, J., et al. (2008). The utility of the Cambridge Behavioural Inventory in neurodegenerative disease. J. Neurol. Neurosurg. Psychiatr. 79, 500–503. doi: 10.1136/jnnp.2007.122028

Whitwell, J. L., Przybelski, S. A., Weigand, S. D., Ivnik, R. J., Vemuri, P., Gunter, J. L., et al. (2009). Distinct anatomical subtypes of the behavioural variant of frontotemporal dementia: a cluster analysis study. Brain 132, 2932–2946. doi: 10.1093/brain/awp232

Zhang, Y., Brady, M., and Smith, S. (2001). Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Trans. Med. Imaging 20, 45–57. doi: 10.1109/42.906424

Zhang, D., Wang, Y., Zhou, L., Yuan, H., and Shen, D. (2011). Multimodal classification of Alzheimer's disease and mild cognitive impairment. Neuroimage 55, 856–867. doi: 10.1016/j.neuroimage.2011.01.008

Zhang, H. (2004). The optimality of naive Bayes, in The Seventeenth International Florida Artificial Intelligence Research Society Conference Proceedings (Miami Beach, FL: FLAIRS).

Keywords: machine learning, AD, bvFTD, classification, Bayesian, MRI

Citation: Wang J, Redmond SJ, Bertoux M, Hodges JR and Hornberger M (2016) A Comparison of Magnetic Resonance Imaging and Neuropsychological Examination in the Diagnostic Distinction of Alzheimer's Disease and Behavioral Variant Frontotemporal Dementia. Front. Aging Neurosci. 8:119. doi: 10.3389/fnagi.2016.00119

Received: 21 January 2016; Accepted: 09 May 2016;

Published: 16 June 2016.

Edited by:

Agustin Ibanez, Instituto de Neurociencia Cognitiva y Traslacional (INCyT), Argentina

Reviewed by:

Bogdan O. Popescu, Colentina Clinical Hospital, Romania

Javier Escudero, University of Edinburgh, UK

Copyright © 2016 Wang, Redmond, Bertoux, Hodges and Hornberger. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michael Hornberger, m.hornberger@uea.ac.uk
