- School of Mathematical Sciences, Zhejiang University, Hangzhou, China

Topological data analysis is becoming more and more popular in recent years. It has found various applications in many different fields, for its convenience in analyzing and understanding the structure and dynamic of complex systems. We used topological data analysis to analyze the firings of a network of stochastic spiking neurons, which can be in a sub-critical, critical, or super-critical state depending on the value of the control parameter. We calculated several topological features regarding Betti curves and then analyzed the behaviors of these features, using them as inputs for machine learning to discriminate the three states of the network.

1 Introduction

The critical brain hypothesis that the brain operates near a critical phase transition point for optimal information processing functions (Beggs and Plenz, 2003; Kinouchi and Copelli, 2006; Beggs, 2008; Shew et al., 2011; Shew and Plenz, 2013) has been studied for 20 years. Though there are controversies (Touboul and Destexhe, 2010, 2017; Destexhe and Touboul, 2021), this hypothesis is still well supported (Fontenele et al., 2019; Plenz et al., 2021; Beggs, 2022). Therefore, it remains an important problem about how to determine whether a neural system is critical or not. Usually, it is assessed via neural avalanches (Beggs and Plenz, 2003). When the distributions of avalanche size and duration follow power-law and the corresponding exponents obey the crackling scaling relation (Muñoz et al., 1999; Sethna et al., 2001), the neural system is considered critical. However, as far as we know, there are yet no sufficient conditions for the criticality of neural systems, and much more studies should be devoted to finding more methods for assessing the criticality in neuroscience.

On the other hand, recently applied topology has become an important tool in neuroscience and found numerous applications in several aspects, for its convenience in analyzing and understanding the structure and dynamic of neural systems. By constructing simplicial complex from brain activity data, one can identify the topological features of functional modules, hierarchical organization, and diseases in brain. For example, in 2015, the Blue Brain Project reconstructed a data-driven model of the rat neocortical microcircuitry which supports the diverse information processing (Markram et al., 2015), and later in 2017, they did a further topological analysis on the microcircuitry and found that the high-dimensional topological structure appeared and disintegrated with the spatial-temporal stimulus (Reimann et al., 2017). Moreover, by applying the persistent homology and nerve theorem, one can extract global features of the external environment by analyzing the co-firing activities of cells (Curto and Itskov, 2008; Giusti et al., 2015). For instance, in the hippocampus, by modeling the place cells with different firing parameters (i.e., firing rates, place field size, and number of neurons), the computation of persistent homology on the co-firing activities gives a topological information about the environment and the learning region wherein the corresponding parameters can make the model generate stable topological representation (Dabaghian et al., 2012). Furthermore, many other topological analysis methods and biophysiological factors were considered in the spatial learning in the hippocampus. Arai et al. (2014) explored the effects of θ phase precession on spatial learning, and later on, they evaluated the effect of decaying connections between hippocampus neurons (Babichev et al., 2018) and the replay mechanism (Babichev et al., 2019) using a novel topological technique called zigzag persistent homology. Topological data analysis (TDA) is also successfully applied in other spatial cognitive systems such as head-direction cells, grid cells, and conjunctive cells that span low-dimension topological structures embedded in high-dimensional neural activity space (Curto, 2016; Kang et al., 2021; Gardner et al., 2022) in which persistent cohomology techniques are used. Moreover, persistent homology has been used to analyze the spiking data generated from artificial neural network (Spreemann et al., 2018; Bardin et al., 2019) in which spike train correlations were seen as the input to construct simplicial complex.

With these wide applications in neuroscience, TDA may also be a promising candidate for addressing the problem regarding criticality. As a first step, we applied TDA to the firings of a network of stochastic spiking neurons in order to see how topological features would behave as the network dynamics change and if TDA can discriminate the three states of the network, i.e., sub-critical, critical, and super-critical states.

2 An integrate-and-fire neural network

For our purposes we considered the firing dynamics of an excitatory/inhibitory stochastic integrate-and-fire network, which has a mean-field directed percolation critical point with a fully connected structure (Girardi-Schappo et al., 2021). With this critical point, the network has three states depending on the control parameter, i.e., critical, sub-critical, and super-critical states.

Here, we briefly introduce the model. The network is composed of N neurons, and each neuron is a stochastic leaky integrate-and-fire unit with discrete time step equal to 1 ms, connected in an all-to-all graph. The membrane potential of each neuron i, either excitatory (E) or inhibitory (I), evolves as

where μi is the leakage parameter, is an external current, NE and NI are, respectively, the number of excitatory and inhibitory neurons, and the non-negative element Jij (Wij) gives the synaptic weight between the jth presynaptic excitatory (inhibitory) neuron and the ith postsynaptic neuron. Jij = 0 or Wij = 0 means that the neurons are not connected. is a stochastic Boolean variable denoting if at time t a neuron fires () or not (). It turns to 1 with a piecewise linear sigmoidal probability Φ(V),

where θ is the firing threshold, Γ is the firing gain constant (Brochini et al., 2016), Θ(·) is the Heaviside function, and VS = θ+1/Γ is the saturation potential. The total number of neurons in the network is N = NE+NI, with p = NE/N and q = NI/N.

The average over neurons of Eq. (1) yields the mean-field of the network, which presents a mean-field directed percolation (MF-DP) critical point (Girardi-Schappo et al., 2020, 2021):

where J = 〈Jij〉, W = 〈Wij〉, , and μ = 〈μi〉 are mean-field approximations, with 〈.〉 denoting average over neurons, and . Denoting and g = W/J (which intuitively represents the level of inhibition), then the critical point will lie at (see Girardi-Schappo et al., 2021 for a detailed derivation)

where μ = 0 will be taken in this study, since this is valid, and μ>0 does not present any new phenomenology (Girardi-Schappo et al., 2021).

3 Methods for analysis

To analyze the firings of the network introduced above, we do topological data analysis here. First, we introduce the topological framework that is necessary for TDA.

3.1 Topological framework

3.1.1 Simplicial complex

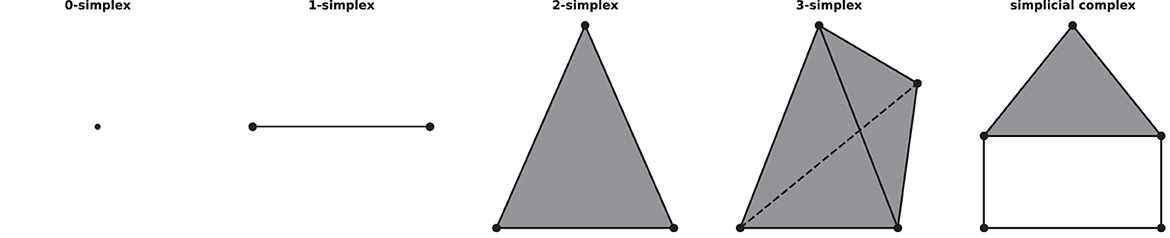

A simplicial complex is a mathematical structure in algebraic topology for approximating the shape and properties of spaces, constructed from the basic geometric objects, i.e., simplices (plural of simplex). Given a set of vertices V, a n-simplex is defined as the convex hull of (n+1) affine independent vertices in V. For example, geometrically, a 0-simplex is a vertex or a point, a 1-simplex is an edge, a 2-simplex is a triangle, and so on. A simplicial complex can be constructed from simplices if they satisfy two conditions: first, if a simplex is included in the simplicial complex, then so are its faces, for example, if a triangle is a part of a simplicial complex, its three edges and vertices must be part of the complex as well; second, the intersection of any two simplices in the complex is either empty or a face of them, see Figure 1 for an example of simplices and a simplicial complex.

Figure 1. Examples of simplices and a simplicial complex, 0-, 1-, 2-, 3-simplex, and a simplicial complex (which consists of five 0-simplices, five 1-simplices, and one 2-simplex) from left to right.

By defining the simplices and how they construct a simplicial complex, we can capture the structure of a specific space. In this study, we constructed the simplicial complex by treating the spike trains of neurons as the vertices in a complex, and the weight value between two vertices is equal to the dissimilarity of their corresponding spike trains. We will construct the commonly used Vietoris-Rips complex. That is, the complex is consisted of all vertices at first. Then, given a threshold, an edge between any two vertices is added to the complex if the weight value between them is less than the threshold. Moreover, a triangle or higher-dimensional simplex is added also if all its edges are in the complex. After adding all such simplices, a Vietoris-Rips complex with regard to the threshold is obtained.

3.1.2 Betti numbers and persistent homology

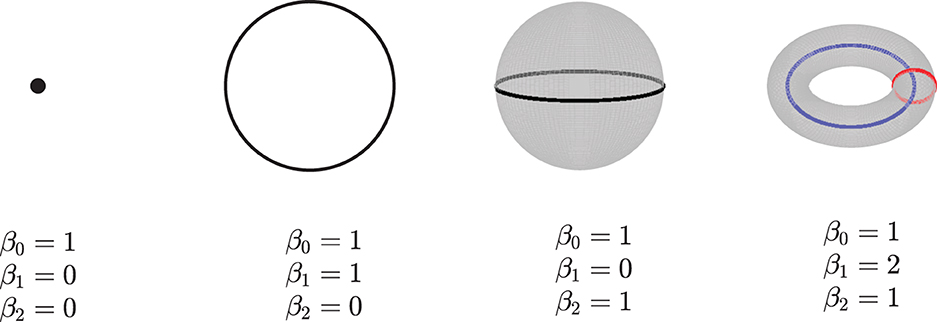

Given a simpilicial complex Σ, let Hk(Σ) donate the k-dimensional homology of Σ. The dimension of Hk(Σ) which counts the k-dimensional “holes” in Σ is called the kth Betti numbers, βk, i.e, β0 gives the number of connected components, β1 is the number of loops in Σ, β2 is the number of voids, and so on. See Figure 2 for an example. Here, we only consider the zeroth betti numbers and the first betti numbers.

Figure 2. Examples of betti numbers, from left to right - a point, a circle, a 2-dimensional sphere, and a 2-dimensional torus. All of them have one connected component, so their 0-dimension betti number β0 is 1. The circle has one loop, so its 1-dimension betti number β1 is 1. Both the sphere and torus have a 2-dimension void inside; thus, their β2 are 1. Furthermore, the torus has two loops, and its β1 is equal to 2.

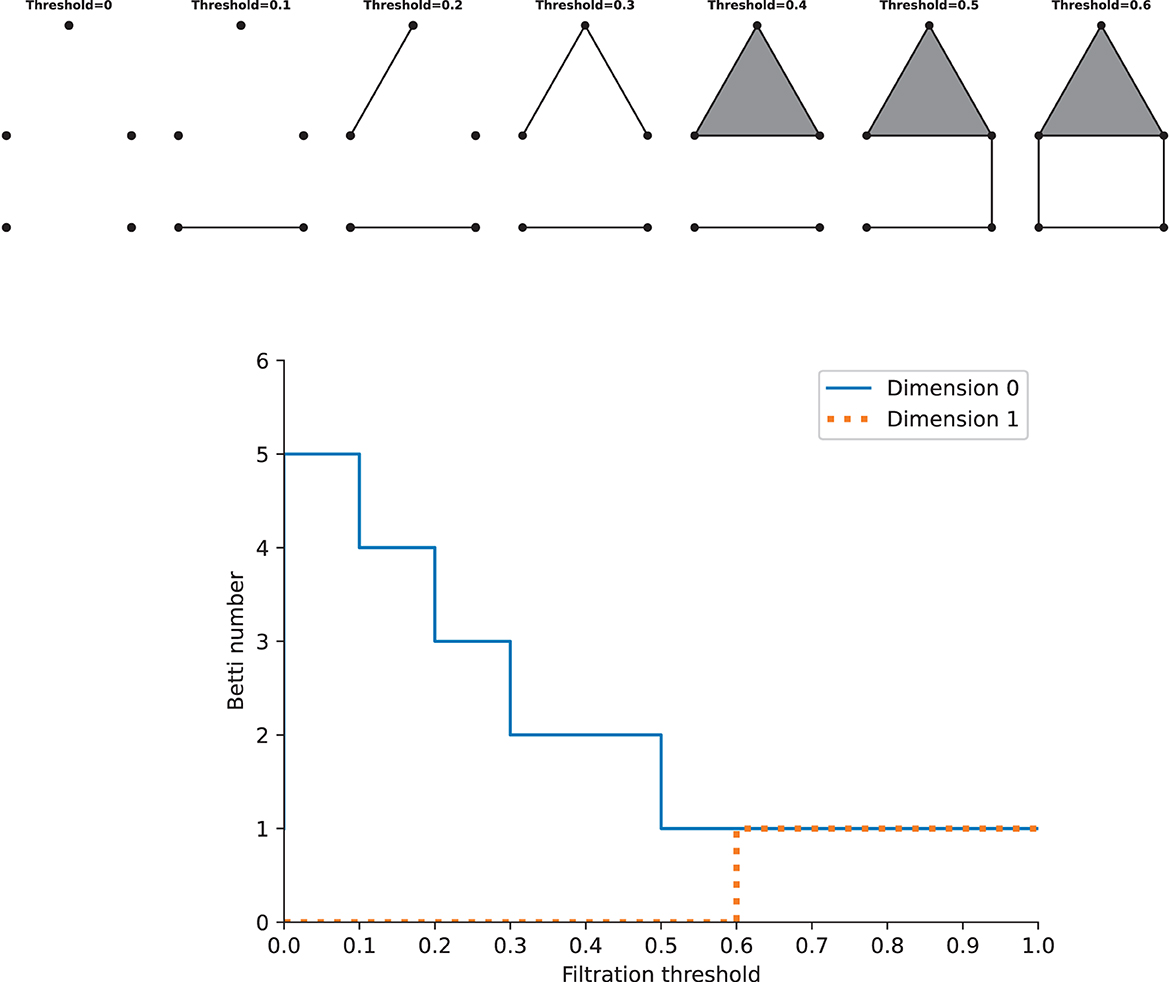

As stated above, we can construct one complex via a single constant threshold. However, its homology could not represent the properties of the underlying space well, and it is difficult to choose a suitable threshold. Instead, we need to build a filtration, which is a nested family of complexes with regard to an increasing sequence of thresholds. At the beginning of the filtration, it consists of vertices only, i.e., there are only 0-simplices. As the threshold increases from zero to one (the maximum value of the weight), higher-dimensional simplices will appear. By this construction, we will get a nested family of complexes, and betti numbers can be computed in each complex. Calculating the homology of the complexes of all thresholds requires the persistent homology theory, which gives a way to study how the topological features such as “holes” change across the filtration. Given two parameters ϵ0 and ϵ1, if the feature at Hk(ϵ0) is still present at Hk(ϵ1), it is said that the feature persists from ϵ0 to ϵ1, and the features that persist more longer as the threshold increases are more significant, while others are considered as noise. The lifetime that records the “birth” (the time when holes appear) and “death” (the time when holes disappear) of features could offer more information across the filtration about the underlying data, so we can track the betti numbers in each dimension as a function of the filtration threshold, which gives rise to Betti curves. See Figure 3 for an example. The features of the Betti curves will be analyzed later.

Figure 3. Examples of a filtration and the Betti cuvre. Top: the simplicial complexs with increasing filtration thresholds. Bottom: the Betti curves of the simplicial complexs in dimension 0 and 1. Note that this is just an illustration, the distance on the graph should not be taken as the true distance between different points.

3.2 Analyzing network dynamics

In the following, we applied a method based on persistent homology to analyze network dynamics using topological features of spaces built from various spike train distances (Bardin et al., 2019). Three different measures of spike train similarity are chosen here for analyzing the spiking data, i.e., the Pearson correlation, SPIKE-synchronicity (Kreuz et al., 2015), and SPIKE-distance (Kreuz et al., 2013).

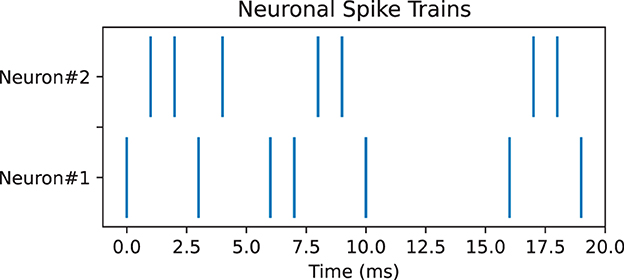

Pearson correlation can be used to measure the correlation between spike trains recorded from different neurons. It compares the spike trains of two neurons and estimates whether neurons tend to fire together or exhibit temporal dependencies, which can provide insights into the functional connectivity and information processing in neural circuits (Cohen and Kohn, 2011). However, Pearson correlation encounters many challenges such as temporal precision and time lagged correlations; thus, it is necessary to use other measures as well for complements. Here, we used two additional measures, namely, SPIKE-synchronicity and SPIKE-distance. SPIKE-synchronicity counts the simultaneous appearances of spikes in two spike trains, while SPIKE-distance measures the dissimilarity between spike trains. Notice that both Pearson correlation and SPIKE-synchronicity are bounded in [0, 1], with value 1 indicating the spike trains are identical, and they are converted to similarity measures through the function x↦1−x. In contrast, spike trains with larger SPIKE-distance value are considered more dissimilar. Importantly, these three measures all depend on the size of the time window, and for simplicity here, we used the same time-binning as in Bardin et al. (2019). For computing SPIKE-synchronicity and SPIKE-distance, we used the Python package PySpike (Mulansky and Kreuz, 2016), while Pearson correlation was computed by the Python package Elephant (Denker et al., 2018). Note that given two spike trains, these measures may have very different values, see Figure 4 for an example.

Figure 4. Pair of neuronal spike trains for which Pearson correlation measure is 0.17, SPIKE-synchronicity measure is 1, and SPIKE-distance measure is 0.31, with a time bin of 4 ms.

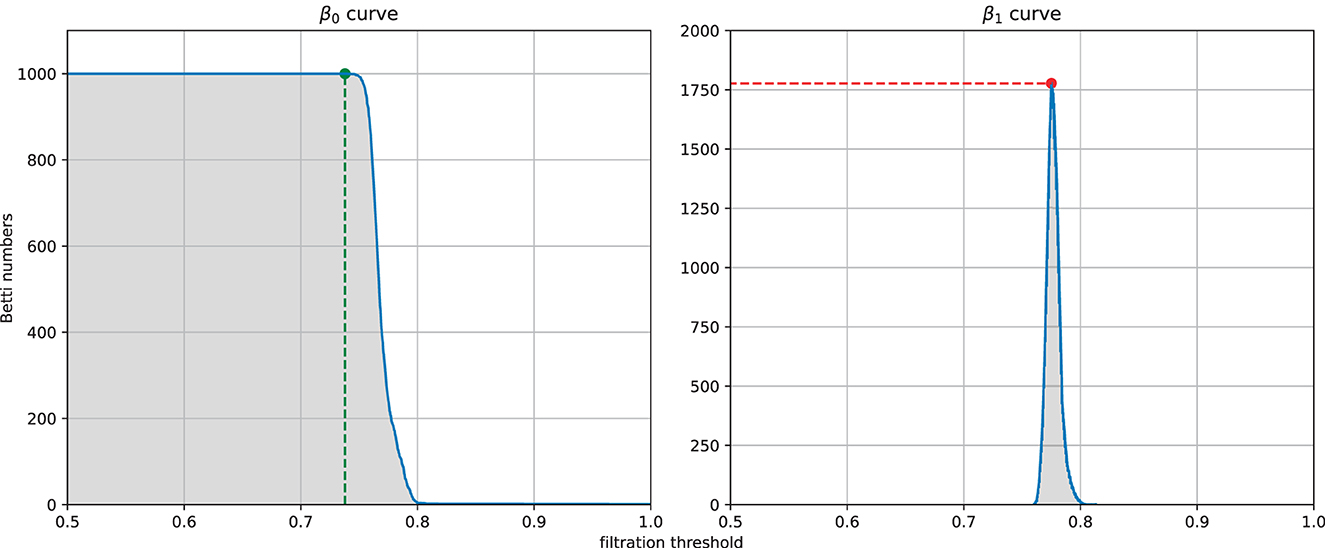

From each similarity measure, we can computed the persistent homology of the Vietoris-Rips complex of the weighted graph and then extracted four features from the zeroth and first Betti curves. The four features (see Figure 5) are the filtration value at which the Betti-0 curve starts to decrease (we called it the turning point of the Betti-0 curve), the area under the Betti-0 curve, the global maximum of the Betti-1 curve, and the area under the Betti-1 curve, which are referred to later as feature 1, 2, 3, 4, respectively. Since there are three different similarity measures, a total of 12 features can be extracted.

Figure 5. Example of the four features extracted from a filtration: the filtration threshold at which the Betti-0 curve starts to decrease (left, green), the global maximum (right, red) of the Betti-1 curve, and the area under each curve (gray area) starting from 0.

These features can then used as the input for machine learning in order to discern information about the global network dynamics. Following Bardin et al. (2019), machine learning here was achieved by the support vector machine (SVM, a supervised machine learning algorithm used for classification and regression) methods (Cortes and Vapnik, 1995) using a radial basis function (a real-valued function whose value depends only on the distance from some center point) kernel with L2-regularization (a kind of distance). We trained an L2-regularized SVM classifier on our selected standardized features to identify the different regimes, whose regularizing hyperparameter was selected with a tenfold cross-validation (a resampling procedure used to evaluate machine learning models on a limited data sample). During training, an 80%-20% training-testing sample partition was used, and performance of the classifier was evaluated by an accuracy score.

We considered three different classifications, aiming to see if topological features can capture some essential characteristics of each dynamical regime that help to distinct themselves from others. The first one discards the critical state and only distinguishes super-critical from sub-critical states, and the second one distinguishes all three states, while the last one distinguishes critical from the other two non-critical states. The last one is to investigate whether the critical state bears some special topological features, motivated by the variety of functional benefits of criticality in neural networks (Kinouchi and Copelli, 2006; Beggs, 2008; Shew et al., 2011; Shew and Plenz, 2013).

4 Results

In order to see how topological features change as the network goes through the super-critical, critical, and sub-critical states, we generated network dynamics for different values of the control parameter g by numerical simulations. We varied g from 1.20 to 1.80 in a step of 0.01 and run 10 simulations for each value of g. To discriminate better between critical and non-critical states later, we generated another 190 simulations for the critical point g = 1.50 so that there are enough samples of critical states.

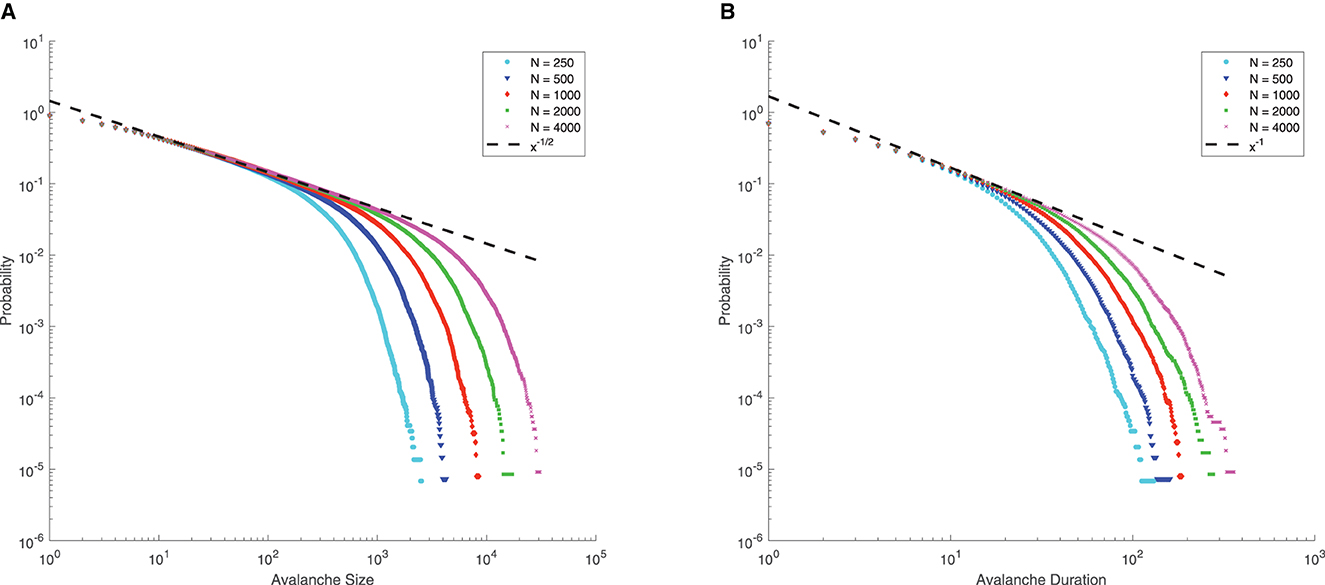

Note that for μ = 0, p = 0.8, q = 0.2, Γ = 10, J = 10, from Eq. (4), we can see that the mean-field critical point lies at g = 1.5, which is only reached in the limit of infinite network size. Nevertheless, for a finite-size network, we still consider g = 1.5 as the critical point since the distributions of avalanche size and duration at g = 1.5 seem to follow power-law, along with finite-size effects (the exponential decay occurs at larger size as N increases, see Figure 6), which is commonly considered as a signature of criticality in neuroscience (Beggs and Plenz, 2003).

Figure 6. Complementary cumulative distribution function (CCDF) of neuronal avalanches at g = 1.5, for μ = 0, p = 0.8, q = 0.2, Γ = 10, J = 10. (A) CCDF of avalanche size; (B) CCDF of avalanche duration. Dashed lines indicate mean-field predictions. Both distributions start to be power-law like, followed by an exponential cutoff that grows with network size N, indicative of the finite-size effect. These suggest that for finite-size networks the mean-field critical point g = 1.5 can still be considered critical.

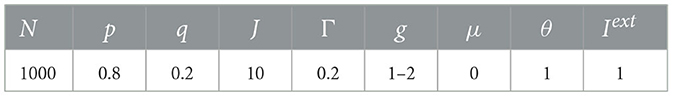

For simplicity and limited computational resources, in all our numerical simulations, unless otherwise stated, we used a fixed total number N = 1000 neurons and set p = 0.8 and q = 0.2 as reported for cortical data (Somogyi et al., 1998). Note that though for living neural networks the ratio of excitatory to inhibitory neurons varies from 3:1 to 9:1 and remains roughly constant for different sensory areas within a species (Alreja et al., 2022), this ratio would not alter the dynamics of the network here (Girardi-Schappo et al., 2020). Each simulation was run for 1,000,000 time steps, with the first transient 10,000 steps discarded. One time step is set to be equal to a biologically plausible time, i.e., 1 ms, with the spike of a neuron takes one time step here. We set the external field h = 0 and used an offline driving mechanism to keep the network dynamics on, which means that a randomly chosen neuron is set to be active immediately after the activity of the network died out. See Table 1 for values of parameters used in the simulations.

For each simulation, we first computed three pairwise spike train similarity measures and then the persistent homology of the Vietoris-Rips complex of the weighted graph. Features discussed above from the zeroth and first Betti curves are then extracted. A total of 12 features were extracted during each simulation, see Figure 7.

Figure 7. Features extracted from simulations. Values of features are averaged over 10 simulations for each value of g. For each row, one same feature is displayed, respectively, for three spike train similarity measures, i.e., the Pearson correlation, SPIKE-synchronicity, and SPIKE-distance. And the four different features used here are (A–C) the turning point of the Betti-0 curve; (D–F) the area under the Betti-0 curve; (G–I) the maximum of the Betti-1 curve; (J–L) the area under the Betti-1 curve.

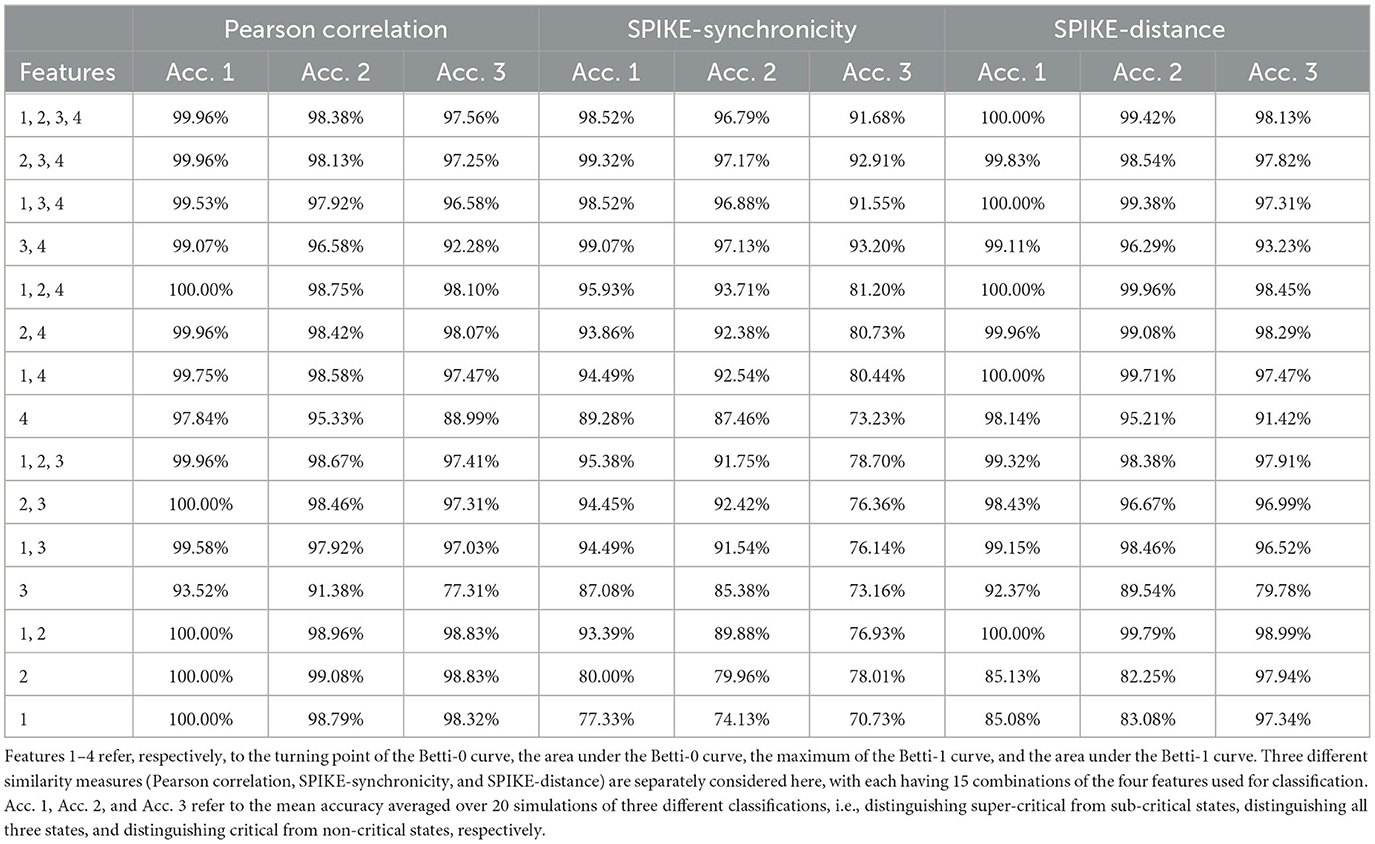

From Figure 7, one can see that the four features of Pearson correlation and SPIKE-distance measure behave quite similarly. When g>1.5, i.e., the network is in a sub-critical state, all features increase as g becomes larger, while for g < 1.5, i.e., when the network is in a super-critical state, all features first increase and then decrease. This may have reflected that under these two dissimilarity measures, the overall distance among all neurons increases and the groups they form due to their co-activity are connected more complicatedly as inhibition gets stronger (g gets larger). We predict that it is easy to discriminate the super-critical, critical, and sub-critical states using features of these two measures since the curves are relatively monotonous in a certain interval around the critical state g = 1.5. Meanwhile, we conjecture that the smoother the curves, the easier the classification. These are verified in our classifying results, see Table 2.

However, for SPIKE-synchronicity measure, things are quite different. Overall, as g increases, feature 1 first increases and then decreases up to a certain level, feature 2 behaves similarly but it increases again not far from the critical point g = 1.5, feature 3 first increases and then seems to decrease back to the starting level, while feature 4 increases all the time. We have no idea why it behaves so differently from the other two measures. A possible explanation is that SPIKE-synchronicity measure reflects more accurately the synchronous activity of neurons and it may have been influenced a lot by the special role of criticality. This requires more future study that provides deep insights into the meanings of these features. Nevertheless, we conjecture that compared to the other two measures, the classification accuracy would drop a bit when using the four features of SPIKE-synchronicity measure, which again can be confirmed in Table 2.

Of course, different combinations of features would result in different accuracy of classification. But overall, we find that a very high accuracy of classification would be gained when using features whose changing curve over g is smooth and relatively monotonous in a certain interval around the critical point g = 1.5, as can be seen from Figure 7 and Table 2.

5 Discussion

In this study, we applied TDA to a stochastic excitatory/inhibitory network model that has a mean-field critical point (Girardi-Schappo et al., 2020, 2021). With the existence of a critical point, the network can be super-critical, critical, or sub-critical. We are interested in the behaviors of topological features of the network as it changes among these different dynamical regimes. Three different similarity measures are considered here for constructing simplices that are basic elements of TDA, namely, Pearson correlation, SPIKE-synchronicity, and SPIKE-distance. Four features regarding Betti curves are calculated for analysis, which are then used as inputs for machine learning to discriminate the three states of the network. However, it should be noted that the behaviors of such features are yet hard to explain. Betti-0 number counts the number of connected components in the complex, and the turning point of and the area under Betti-0 curve reflects, respectively, the smallest distance and the overall distance of all vertices. Betti-l number counts the number of 1-dimensional “holes” in the complex. The global maximum of and the area under the Betti-1 curve are difficult to understand, and they may have reflected the underlying complicated distributions of local groups due to their firing activity. Why these features vary as in Figure 7 remains to be uncovered.

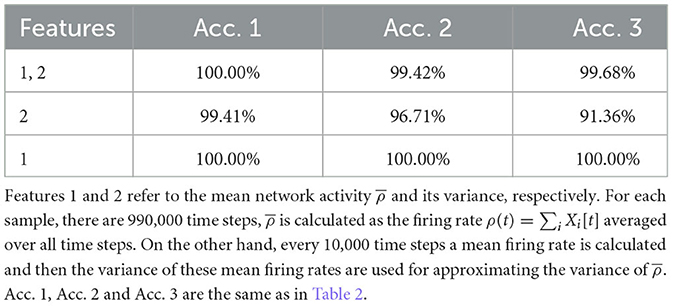

Nevertheless, our study shows that topological features can reflect many important implicit aspects of network dynamics, and some of these features make it possible to classify different dynamical regimes of the network easily. This is a promising direction for developing new methods for assessing the criticality of neural systems. Nevertheless, the study here bares many limitations. First of all, we only considered a special network model in its mean-field case, and more realistic ones with various connections could be studied. Second, there is only one control parameter g in our network model, which reduces the difficulty of classification sufficiently, as compared to the work of Bardin et al. (2019), where there are two control parameters. In fact, for our case, there are simpler classification methods. For example, using the mean network activity and its variance as the input for machine learning can classify the three regimes with high accuracy as well, see Table 3. Then why do we choose TDA? Mainly because we think TDA is a method that is worth developing since it contains more underlying information, and it uses only the firing data which is not limited to the model used here. When more complicated models and more regimes are considered, classification is very likely to become much more difficult, and TDA could be more useful then. Of course, this requires more future studies.

Apart from that, the resolution of the control parameter g in this study is chosen to be 0.01. Though we think it is relatively small, it may happen that as the resolution gets higher and higher, the classification would ultimately fail due to the lack of enough samples. Nevertheless, up to a suitable resolution, the classification should work, and its accuracy would be still high as long as there are enough samples.

Additionally, we did not provide any control for the results. For example, we did not show how TDA measures behave on shuffled data. As we checked, after shuffling the firing data, the correlations between neurons reduced greatly, and consequently, the elements of distance matrices became very close to each other, which resulted in an extremely long time of the computation of the features that is beyond our computational resources. But based on these distance matrices, we think the features of such shuffled data would not display much changes as compared to the original data.

Moreover, we applied three commonly used similarity measures for analyzing spike trains, but there is no guarantee that these measures capture all or the most important aspects of network dynamics best, so are the four features we chose for analysis. More measures and features could be explored to reflect network dynamics better. More future work could be devoted to investigating whether there are topological features (or combinations of them) that reflect the super-critical, critical, and sub-critical states of neural networks unambiguously, which is very meaningful given the various functional benefits (Kinouchi and Copelli, 2006; Beggs, 2008; Shew et al., 2011; Shew and Plenz, 2013) that criticality can offer to neural systems.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

XB: Conceptualization, Data curation, Formal analysis, Software, Writing—original draft, Writing—review & editing. CY: Conceptualization, Methodology, Software, Validation, Visualization, Writing—review & editing. JZ: Funding acquisition, Project administration, Writing—review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alreja, A., Nemenman, I., and Rozell, C. J. (2022). Constrained brain volume in an efficient coding model explains the fraction of excitatory and inhibitory neurons in sensory cortices. PLoS Comput. Biol. 18, e1009642. doi: 10.1371/journal.pcbi.1009642

Arai, M., Brandt, V., and Dabaghian, Y. (2014). The effects of theta precession on spatial learning and simplicial complex dynamics in a topological model of the hippocampal spatial map. PLoS Comput. Biol. 10, e1003651. doi: 10.1371/journal.pcbi.1003651

Babichev, A., Morozov, D., and Dabaghian, Y. (2018). Robust spatial memory maps encoded by networks with transient connections. PLoS Comput. Biol. 14, e1006433. doi: 10.1371/journal.pcbi.1006433

Babichev, A., Morozov, D., and Dabaghian, Y. (2019). Replays of spatial memories suppress topological fluctuations in cognitive map. Netw. Neurosci. 3, 707–724. doi: 10.1162/netn_a_00076

Bardin, J.-B., Spreemann, G., and Hess, K. (2019). Topological exploration of artificial neuronal network dynamics. Netw. Neurosci. 3, 725–743. doi: 10.1162/netn_a_00080

Beggs, JM. (2008). The criticality hypothesis: how local cortical networks might optimize information processing. Philos. Trans., Math. Phys. Eng. 366, 329–343. doi: 10.1098/rsta.2007.2092

Beggs, J., and Plenz, D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177. doi: 10.1523/JNEUROSCI.23-35-11167.2003

Beggs, J. M. (2022). Addressing skepticism of the critical brain hypothesis. Front. Comput. Neurosci. 16, 703865. doi: 10.3389/fncom.2022.703865

Brochini, L., Costa, A., Abadi, M., Roque, A., Stolfi, J., and Kinouchi, O. (2016). Phase transitions and self-organized criticality in networks of stochastic spiking neurons. Sci. Rep. 6, 35831. doi: 10.1038/srep35831

Cohen, M. R., and Kohn, A. (2011). Measuring and interpreting neuronal correlations. Nat. Neurosci. 14, 811–819. doi: 10.1038/nn.2842

Cortes, C., and Vapnik, V. (1995). Support-vector networks. Mach. Learn. 20, 273–297. doi: 10.1007/BF00994018

Curto, C. (2016). What can topology tell us about the neural code? Bull. New Ser. Am. Math. Soc. 54, 63–78. doi: 10.1090/bull/1554

Curto, C., and Itskov, V. (2008). Cell groups reveal structure of stimulus space. PLoS Comput. Biol. 4, e1000205. doi: 10.1371/journal.pcbi.1000205

Dabaghian, Y., Mémoli, F., Frank, L., and Carlsson, G. (2012). A topological paradigm for hippocampal spatial map formation using persistent homology. PLoS Comput. Biol. 8, e1002581. doi: 10.1371/journal.pcbi.1002581

Denker, M., Yegenoglu, A., and Grün, S. (2018). Collaborative HPC-enabled workflows on the HBP collaboratory using the elephant framework. Neuroinformatics 2018, P19. doi: 10.12751/incf.ni2018.0019

Destexhe, A., and Touboul, J. D. (2021). Is there sufficient evidence for criticality in cortical systems? Eneuro 8, eneuro.0551-20.2021. doi: 10.1523/ENEURO.0551-20.2021

Fontenele, A. J., de Vasconcelos, N. A. P., Feliciano, T., Aguiar, L. A. A., Soares-Cunha, C., Coimbra, B., et al. (2019). Criticality between cortical states. Phys. Rev. Lett. 122, 208101. doi: 10.1103/PhysRevLett.122.208101

Gardner, R. J., Hermansen, E., Pachitariu, M., Burak, Y., Baas, N. A., Dunn, B. A., et al. (2022). Toroidal topology of population activity in grid cells. Nature 602, 123–128. doi: 10.1038/s41586-021-04268-7

Girardi-Schappo, M., Brochini, L., Costa, A., Carvalho, T., and Kinouchi, O. (2020). Synaptic balance due to homeostatically self-organized quasicritical dynamics. Phys. Rev. Res. 2, 1. doi: 10.1103/PhysRevResearch.2.012042

Girardi-Schappo, M., Galera, E. F., Carvalho, T. T. A., Brochini, L., Kamiji, N. L., Roque, A. C., et al. (2021). A unified theory of E/I synaptic balance, quasicritical neuronal avalanches and asynchronous irregular spiking. J. Phys. 2, 045001. doi: 10.1088/2632-072X/ac2792

Giusti, C., Pastalkova, E., Curto, C., and Itskov, V. (2015). Clique topology reveals intrinsic geometric structure in neural correlations. Proc. Nat. Acad. Sci. 112, 13455–13460. doi: 10.1073/pnas.1506407112

Kang, L., Xu, B., and Morozov, D. (2021). Evaluating state space discovery by persistent cohomology in the spatial representation system. Front. Comput. Neurosci. 15, 616748. doi: 10.3389/fncom.2021.616748

Kinouchi, O., and Copelli, M. (2006). Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351. doi: 10.1038/nphys289

Kreuz, T., Chicharro, D., Houghton, C., Andrzejak, R. G., and Mormann, F. (2013). Monitoring spike train synchrony. J. Neurophysiol. 109, 1457–1472. doi: 10.1152/jn.00873.2012

Kreuz, T., Mulansky, M., and Bozanic, N. (2015). Spiky: a graphical user interface for monitoring spike train synchrony. J. Neurophysiol. 113, 3432–3445. doi: 10.1152/jn.00848.2014

Markram, H., Muller, E., Ramaswamy, S., Reimann, M. W., Abdellah, M., Sanchez, C. A., et al. (2015). Reconstruction and simulation of neocortical microcircuitry. Cell 163, 456–492. doi: 10.1016/j.cell.2015.09.029

Mulansky, M., and Kreuz, T. (2016). Pyspike—a python library for analyzing spike train synchrony. SoftwareX 5, 183–189. doi: 10.1016/j.softx.2016.07.006

Muñoz, M. A., Dickman, R., Vespignani, A., and Zapperi, S. (1999). Avalanche and spreading exponents in systems with absorbing states. Phys. Rev. E 59, 6175–6179. doi: 10.1103/PhysRevE.59.6175

Plenz, D., Ribeiro, T. L., Miller, S. R., Kells, P. A., Vakili, A., and Capek, EL. (2021). Self-organized criticality in the brain. Front. Phys. 9, 639389. doi: 10.3389/fphy.2021.639389

Reimann, M. W., Nolte, M., Scolamiero, M., Turner, K., Perin, R., Chindemi, G., et al. (2017). Cliques of neurons bound into cavities provide a missing link between structure and function. Front. Comput. Neurosci. 11, 48. doi: 10.3389/fncom.2017.00048

Sethna, J. P., Dahmen, K. A., and Myers, C. R. (2001). Crackling noise. Nature 410, 242–250. doi: 10.1038/35065675

Shew, W. L., and Plenz, D. (2013). The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100. doi: 10.1177/1073858412445487

Shew, W. L., Yang, H., Yu, S., Roy, R., and Plenz, D. (2011). Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J. Neurosci. 31, 55–63. doi: 10.1523/JNEUROSCI.4637-10.2011

Somogyi, P., Tamás, G., Lujan, R., and Buhl, E. H. (1998). Salient features of synaptic organisation in the cerebral cortex. Brain Res. Rev. 26, 113–135. doi: 10.1016/S0165-0173(97)00061-1

Spreemann, G., Dunn, B., Botnan, M. B., and Baas, N. A. (2018). Using persistent homology to reveal hidden covariates in systems governed by the kinetic ising model. Phys. Rev. E 97, 032313. doi: 10.1103/PhysRevE.97.032313

Touboul, J., and Destexhe, A. (2010). Can power-law scaling and neuronal avalanches arise from stochastic dynamics? PLoS ONE 5, e8982. doi: 10.1371/journal.pone.0008982

Keywords: topological data analysis, persistent homology, spiking neural network, Betti curves, criticality

Citation: Bai X, Yu C and Zhai J (2024) Topological data analysis of the firings of a network of stochastic spiking neurons. Front. Neural Circuits 17:1308629. doi: 10.3389/fncir.2023.1308629

Received: 06 October 2023; Accepted: 06 December 2023;

Published: 04 January 2024.

Edited by:

Mauricio Girardi-Schappo, Federal University of Santa Catarina, BrazilReviewed by:

Osame Kinouchi, University of São Paulo, BrazilJohn M. Beggs, Indiana University Bloomington, United States

Copyright © 2024 Bai, Yu and Zhai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chaojun Yu, MTE4MzUwMTdAemp1LmVkdS5jbg==

Xiaotian Bai

Xiaotian Bai Chaojun Yu

Chaojun Yu Jian Zhai

Jian Zhai