- 1Britton Chance Center for Biomedical Photonics, Wuhan National Laboratory for Optoelectronics- Huazhong University of Science and Technology, Wuhan, China

- 2Key Laboratory of Biomedical Photonics of Ministry of Education, College of Life Science and Technology, Huazhong University of Science and Technology, Wuhan, China

The recognition of brain nuclei is the basis for localizing brain functions. Traditional histological research, represented by atlas illustration, achieves the goal of nucleus boundary recognition by manual delineation, but it has become increasingly difficult to extend this handmade method to delineating brain regions and nuclei from large datasets acquired by the recently developed single-cell-resolution imaging techniques for the whole brain. Here, we propose a method based on a closed cubic spline (CCS), which can automatically segment the boundaries of nuclei that differ to a relatively high degree in cell density from the surrounding areas and has been validated on model images and Nissl-stained microimages of mouse brain. It may even be extended to the segmentation of target outlines on MRI or CT images. The proposed method for the automatic extraction of nucleus boundaries would greatly accelerate the illustration of high-resolution brain atlases.

Introduction

The brain consists of massive nuclei with different functions. In neuroscience research, the precise recognition and delineation of nucleus boundaries is the crux of brain area identification and atlas illustration.

The contemporary automatic recognition methods for nucleus boundaries are mainly based on MR images (Wiegell et al., 2003; van der Lijn et al., 2008) and micro-optical images (McDonald, 1982; Geisler et al., 2003). In MR images and low-resolution histological images, the distribution of gray level in a nucleus is homogenous, facilitating the application of many classical image-segmentation techniques (Balafar et al., 2010). In contrast, nuclei on cytoarchitectural images at single-cell resolution do not exhibit an even and continuous distribution of the gray level but, rather, clusters of discrete recognizable neurons. Microscopic images clearly show that the cell morphologies and cell densities of brain nuclei differ from each other, resulting in blurry borders between nuclei and their neighboring areas (Gahr, 1997). The illustration of traditional histological brain atlases, for instance, Brodmann's human brain atlas (Brodmann, 1908), the mouse brain in stereotaxic coordinates (Paxinos and Franklin, 2004) and the Allen Reference Atlas (Dong, 2008), relies on the experience of anatomical experts (Brunjes et al., 2005), who draw the boundaries of nuclei with smooth curves on a limited number of sections according to the morphology and density differences of the imaged cells. These handmade atlases have been widely used in neurobiological research. However, given the rise of whole-brain continuous imaging techniques with single-cell resolution (Li et al., 2010), the data volume of sections from a single mouse brain has exceeded what was acquired with traditional methods. As a result, it has become unrealistic to manually segment the boundaries of brain regions and nuclei. Thus, the development of method for the automatic recognition and segmentation of entire and continuous boundaries of nuclei on large, high-resolution histological images is urgently needed.

Some efforts have been made for automatic parcellation of brain regions and nuclei on single-cell-resolution histological images. Xiang et al. segmented the abducens nucleus based on the Gabor wavelet, which yielded discontinuous boundaries (Xiang et al., 2004). Mesejo et al. segmented the pyramidal cell layer and granular cell layer of the hippocampus formation (Mesejo et al., 2012, 2013). However, their selected sections were thick, and the cells of the two segmented structures overlapped one another, resulting in a homogeneous distribution of the gray level. The Otsu thresholding and Chan-Vese model applied in their work were only suitable for the segmentation of these homogeneous regions, not targets made up of isolated cells. Amunts et al. automatically acquired the dividing lines between neighboring brain regions in the cortex by extracting the curve features from gray level index profiles on Nissl-stained images (Amunts et al., 2013). Meyer et al. divided the anterior olfactory nucleus region into several parts by statistically analyzing the cell distribution (Meyer et al., 2006). However, they only manually delineated the outline of brain structures and then automatically computed the dividing lines among the subregions within; they did not directly segment an entire closed outline of the brain regions and nuclei from original cytoarchitectural images. Briefly, the methods mentioned above all have some shortcomings in segmenting continuous boundaries of nuclei consisting of isolated cell bodies.

In this paper, we propose a method to automatically segment the outlines of nuclei whose cell densities differ to a relatively high degree relative to their surrounding areas. This method uses a closed cubic spline (CCS) as its action object and guides the automatic evolution of CCS in the whole image sequence based on the features extracted from the surrounding images of the CCS control points, and the entire closed outline of the target nucleus on the whole image sequence was successfully acquired. The proposed method was applied to nuclei with densely packed cells, such as the mitral cell layer of the olfactory bulb, and nuclei with low cell density but surrounded by areas of high cell density, such as the molecular layer of the cerebellar lobe. This method was also extended to the segmentation of non-nucleus targets.

Method

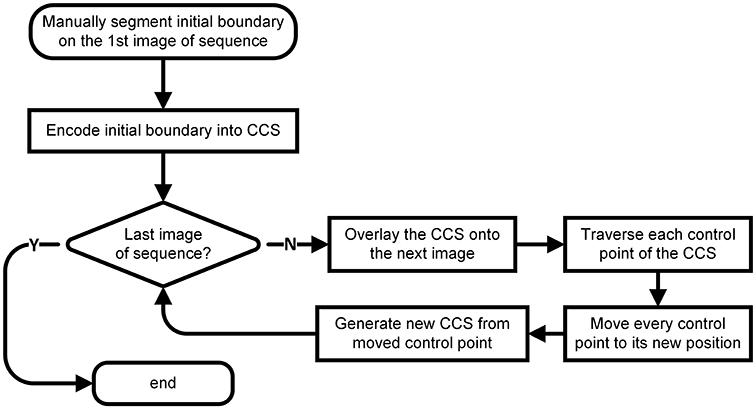

The whole workflow is shown as Figure 1. First, we manually delineated the boundary of the target nucleus on the first image of the given image sequence and extracted the feature points of the boundary, which we used to construct a CCS that was overlaid on the image next to the first one. Then, we traversed each control point of the CCS and moved each to its new position on the new image by calculating the image similarities and maximizing the difference between the inner and outer sides of each control point. After traversing all control points, we generated a new boundary based on the control points at their new positions. We repeated this process throughout the image sequence. A detailed explanation of every step of our method follows.

Manual Segmentation of the Initial Boundary and Extraction of Control Points

The manual segmentation of the boundary of nucleus on the first image of the sequence was implemented by the Segmentation Editor module of Amira (FEI, Hillsboro, OR, USA). The segmented boundary was saved as a label image, in which the gray value of the pixels inside the boundary was 255 and that of the ones outside the boundary was 0.

Using the corner detection algorithms of Harris (Harris and Stephens, 1988), Log (Lindeberg, 1998) and Gilles (Gilles, 1999), we extracted the points that could describe the shape features of the segmented boundary, which could then be used as CSS control points.

CCS Encoding of the Initial Boundary

The CCS was a type of smooth and closed curve comprising n control points and n segments. At each control point, the CCS was continuous and differentiable (Press, 2007). The CCS was deduced from the control point set according to Kershaw (1972).

The coordinates of any point on the ith segment of the CCS could be interpolated by the following equation set depending on variable t (t∈[0, 1]):

where bxj and byj (j = 1, 2, 3) are the parameters of the ith segment of the CCS. When variable t was given a value 0 or 1, the values of x and y corresponded to the coordinates of the start or end point of the ith segment, respectively. Eight parameters were needed to describe one segment, and thus, a total of 8n parameters is required to describe the whole CCS. Note that the expressions of x(t) and y(t) are symmetrical. We deduced bx1−bx4 in Equation (1) as an example, which can be extended to the deduction of a total of 4n parameters of the x coordinate of CCS.

The CCS was denoted as C, and the start and end points of the ith segment of C were marked as Pi−1 and Pi, respectively. We denoted the control points of C to be P = {P1, P2, …, Pn}, and thus, for the ith segment of C, we have

where Pi−1(x) and Pi(x), respectively, are the x coordinates of Pi−1 and Pi, and and are the first derivatives of the x coordinates at Pi−1(x) and Pi(x) with respect to t. According to (3), we have

Given the continuous and differentiable characteristics of the CCS at any control point, we have

Substituting the second-derivative expression of the CCS into Equation (5), we have

Then, combining Equations (4) and (6) to eliminate parameter b, we can acquire the equation set with respect to P and P′:

The above equations can then be rewritten as a matrix multiplication expression, as shown below:

By solving the matrix Equation (8), we obtain , where i = 1,2,…,n. After substituting the values of into Equation (4), we attain the 4n parameters for the x coordinate of the CCS. The same procedure was used to acquire the 4n parameters for the y coordinate.

Control Point Evolution of CCS

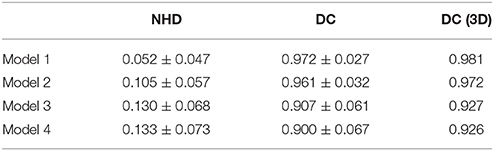

To iterate each control point of the CCS, at control point Pk, we extracted the surrounding image Ikalong the normal direction vk of Pk. The width and height were w and h, respectively, as shown in Figure 2A. We then overlaid the CCS onto the next section of the image sequence and extracted the surrounding image of Pk along its normal direction, as shown in Figure 2B. The width of was also w, whereas the height was the height of Ik with a sliding range r. We let the point Pk slide along vk in and denoted the moving Pk as . We defined the distance of and the bottom edge of as x. A sliding window Win, whose height was h and width was w, was then constructed along the direction of vk with as its center. The value of x was required to be an integer with pixel as the unit, and thus, there were only r possible values of x.

Figure 2. Control point evolution. (A) The nth image of the sequence. The green curve is a segment of the computed CCS in the current image. Pk is the kth control point of CCS, and vk is the normal vector at the point Pk. Centered on Pk, the black rectangle Ik is the surrounding image of Pk, which was extracted along vk, with width w and height h. (B) The n+1st image of the sequence. The green curve is the CCS segment of the nth image, and the blue curve is the evolved new CCS segment, on which the control point Pkmoved to its new position along vk and is denoted . The black rectangle is the surrounding image of Pk, with width w and the height h+r. (C) is divided by the moving point into two parts: Iin and Iout. When the difference in the mean gray values of Iin and Iout is maximized, the moving point is labeled , and its coordinate is xd. (D) Centered on the moving point, a sliding window Win is constructed. When the content of Win reaches maximal similarity with Ik, the moving point is marked as , and its coordinate is xs.

As shown in Figure 2C, with a line through , can be divided into an inner part and outer part, which are denoted as Iinand Iout, respectively. The mean gray values of these two parts are indicated as meanin and meanout, respectively. When Pk moved to the position marked where |meanin−meanout| was maximized, the value of x was denoted xd, indicating that the difference between Iin and Iout was maximized. Then, we calculated the similarities between Win and Ik given all possible values of x. The measurements of similarity included the sum the variance of the gray value, the covariance, the correlation coefficient and the angle cosine. The values of x with maximal similarity under the 4 criteria were recorded and sorted. The median of the 4 values of x is denoted xs and was regarded as the value of x at which Win and Ik exhibited the best match, as shown in Figure 2D.

The value of x was determined by both xd and xs. We constructed a minimum object function as indicated below, where α and β were constant:

The value of x that minimized the object function f was

Therefore, x can be seen as a linear combination of xd and xs. As α increased, xs weighed more, indicating that the value of x was increasingly related to the similarity of the two images surrounding the control point on neighboring sections; as β increased, xd weighed more, and thus, x was more determined by the difference between the inner and outer parts of the surrounding image divided by the control point. According to our experience, the values of α and β were both set to 0.5.

Subsequently, the point Pk moved to its new position, as shown in Figure 2B. The calculation above was performed for every control point of the CCS, giving the new positions of all the control points.

Reconstruction of CCS from Evolved Control Points

The method of constructing a new CCS from control points with new coordinates was the same as described above. Note that if the shape of a contour was complicated, the neighboring nodes of the CCS might exchange their positions, causing intersections of the CCS around these control points. To avoid these intersections, we applied the procedures below:

(1) Calculate the distance between every control point and its nth (n = 1, 2, …, m−1, where m is the number of control points of CCS) neighboring control points on the right side;

(2) Define D as the index depth, T1 as the thresholding of the nearest distance between control points, and any control point together with any of its right-side neighbor as a control point pair. Find all of the control point pairs with distances less than T1 and differences between their index numbers less than D. If the distance between the two points of a control point pair was less than T1, remove one from the pair and the points in between.

(3) Calculate the distance between the remaining control points and insert new control points between the neighboring control points with distances exceeding a manually assigned maximal distance threshold T2.

The values of T1, T2 and D were set to 10, 20 and 4, respectively, based on our experience.

Design of CCS Toolkit

We also developed a Matlab toolkit named CCSToolkit, which can be downloaded from https://github.com/VBNProject/CCS-Segmentation-Toolkit. This toolkit includes 22 functions that implement the code necessary for our method and the common operations on the CCS. The functions in the toolkit can be grouped into 3 categories: construction/decomposition of the CCS, information extraction and CCS computation.

Construction Method of Model Data

To validate our method, we created several image sequences that simulated nuclei formed by packed cells with randomly generated particles. The process was as follows.

(1) In a limited space (200 × 200 × 200 voxels), we defined a spherical area with radius 60 and center coordinate (100, 100, 100) as the target nucleus. The remaining area was defined as background.

(2) We traversed every voxel in the created 3D space. For voxels in the nucleus and background areas, we generated randomly distributed particles with radii in the range [2, 3] and gray level values in the range [140, 255] by different probabilities Po and Pb, respectively, to simulate different cell densities within and outside the target nucleus.

(3) We stored the created 3D space as an image sequence.

Evaluation Method

We evaluated the results obtained using our method on model images using a supervised evaluation strategy. The chosen evaluation parameters were the Dice coefficient (DC) (Dice, 1945) and the Hausdorff distance (Huttenlocher et al., 1993). NHD is used for measuring the similarities between the segmentation results and real boundaries, while DC is used for calculating the overlapping of segmented area and the area surrounded by real boundaries.

In any given image from the model image sequence, we defined the real area occupied by the target nucleus as Ar, and the calculated area as Ac; thus, the DC was defined as follows:

Here, for convenience, the area was approximated as the pixel number, and Ar was defined as the number of pixels occupied by the nucleus area. Considering that the randomly generated particles belonging to the nucleus area could be on the boundary of the nucleus, we adopted the convention that the outside area of these particles was included in Ar.

We then defined Sr to be the real boundary of the nucleus area and sr to be any pixel that belonged to Sr. Thus, the shortest distance from any pixel p to Sr was

The Hausdorff distance was defined as

where Sc was defined as the calculated boundary of the nucleus and sc as any pixel that belonged to Sc.

To avoid the influence of the target's area on the value of the Hausdorff distance, we normalized the Hausdorff distance by the real perimeter of the target area as below:

Results

We validated our method using the 4 created models and applied the DC and Normalized Hausdorff distance (NHD) to evaluate the precision of our method. Next, we tested our method on Nissl-stained mouse brain images acquired by Micro-Optical Sectioning Tomography system (MOST) (Li et al., 2010). Finally, we applied our method to the segmentation of the outline of a mouse brain using the coronal sections acquired by MRI and CT.

Model Testing

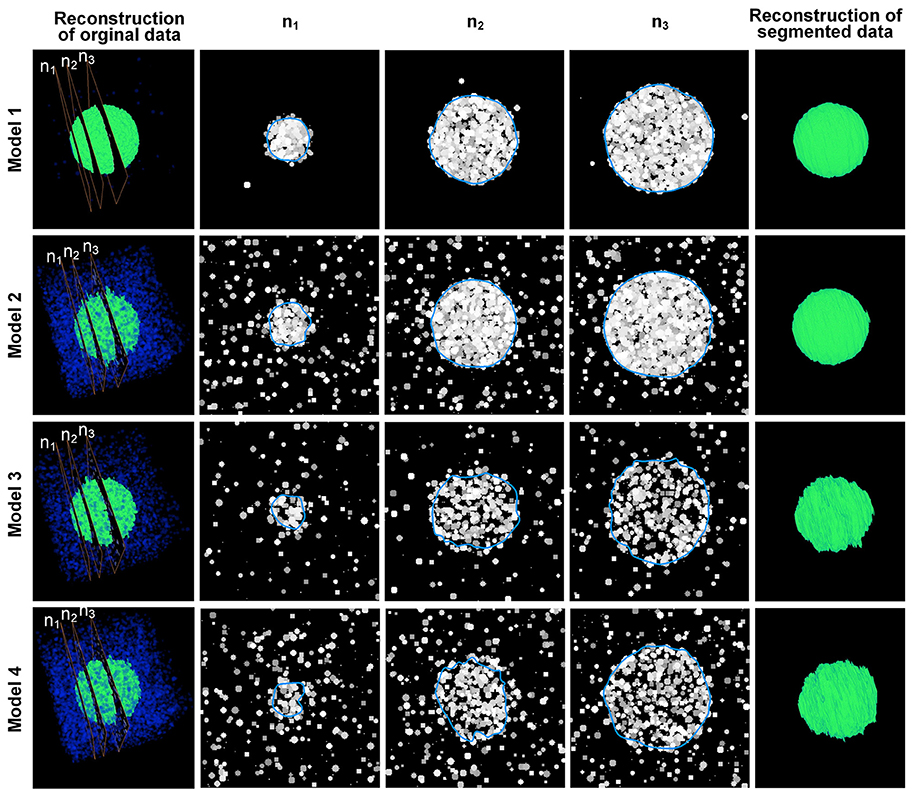

Using different Po and Pb values, we created 4 sets of model image data, which are shown in the 1st-4th rows in Figure 3. In model 1, the probability of the occurrence of particles in the target area was much higher than in the background area; thus, the particles in the target area were densely packed, and the boundary of the target area was the most unambiguous of all the tested models. In model 2, the probability of the occurrence of particles in the background area was higher than that in model 1, which could disturb the decision making about the boundary of the target area. In model 3, the possibility of particles occurring in the target area was lower than that in model 1, resulting in a blurry boundary around the target area. In model 4, the possibility of the occurrence of particles in the background area was higher than that in model 3, and this model had the blurriest boundaries.

Figure 3. Results of the model data. The 1st–4th rows show the computational results obtained using 4 model datasets. The 1st column is the 3D reconstruction of the image sequence of the model data. Blue indicates the “cells” in the background area, whereas green indicates the “cells” in the nucleus area. n1 to n3 are 3 sections chosen from the image sequences that correspond to the 2nd–4th columns. The blue curves in the 2nd–4th columns are the computed CCS of the outline of the “nuclei” in the model data. The 5th row shows the 3D reconstruction of the computation results. The (Po, Pb) parameters of the 4 models are (2%, 0.001%), (2%, 0.1%), (0.7%, 0.05%), and (0.7%, 0.1%), respectively.

The values of the parameters w, h and r of model 1 and model 2 were set to 30, 20, and 20, respectively. For model 3 and model 4, the aforementioned parameters were set to 80, 40, and 20, respectively, to reduce the effects of the particles of the background area. Figure 3 shows that our method could extract the boundaries of nuclei in areas with different nucleus-area densities and background densities. It could also be seen that some particles next to the computed boundaries were not included. These particles were firstly appeared isolatedly around the target nucleus. When these particles were starting to contact with the border of nucleus, our method would not include them into the segmented boundary immediately, until more particles appeared around these particles in the next section of the image sequence. This strategy can reduce the influence of noises effectively.

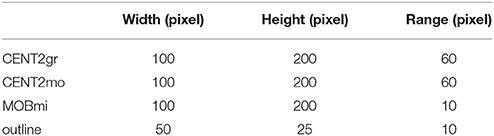

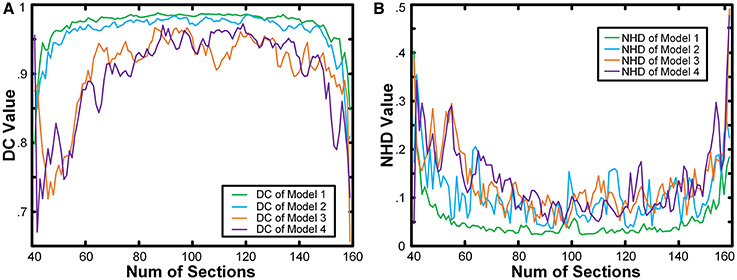

We calculated the boundaries of the nucleus from the 41st to the 159th images of the whole model dataset and compared the results with the real boundaries according to NHD and DC. The statistical results are shown in Figure 4. In the first and last images of the sequence, in which the target nucleus appeared and vanished, because the area was small, the segmentation result could have been more easily influenced by particles outside the nucleus, leading to a higher NHD value and a lower DC value and implying a higher bias of the calculation results obtained using the real boundaries. However, the DC values of all the images in the 4 models exceeded 0.65, and the NHD values were lower than 0.5. In different models, as the content of the model image became more complicated and the particle density of the nucleus area decreased, the DC value decreased gradually, and the NHD value increased slowly, which corresponded to a slow decrease in the segmentation precision. The mean value and standard deviation of the NHD and DC values are listed in the first and second column of Table 1. From the table, we can see that the average DC of each model image exceeded 0.90 and that the NHD was lower than 0.15. We also evaluated the 3D reconstruction results of 4 models with DC. The results are also shown in the third column of Table 1. We could see that all the DC values of the 4 models are above 0.90, which shows a good match between our segmentation results and the real boundaries.

Figure 4. Quantitative analysis of the results obtained from model images. The NHD and DC of the results obtained from the 4 model image datasets. The horizontal axis is the number of images in the sequence. The vertical axis in (A) is the NHD value, whereas in (B), it is the DC value.

Test on Real Cytoarchitectural Images

To validate our method for single-cell-resolution image sequences, we selected a 3D mouse whole-brain dataset with 0.35-μm horizontal resolution and 1-μm axial resolution acquired by the MOST system and saved as consecutive coronal sections. The dataset was acquired from an 8-week-old C57BL/6 male mouse (Jackson Laboratory). All of the animal experiments followed procedures approved by the Institutional Animal Ethics Committee of Huazhong University of Science and Technology.

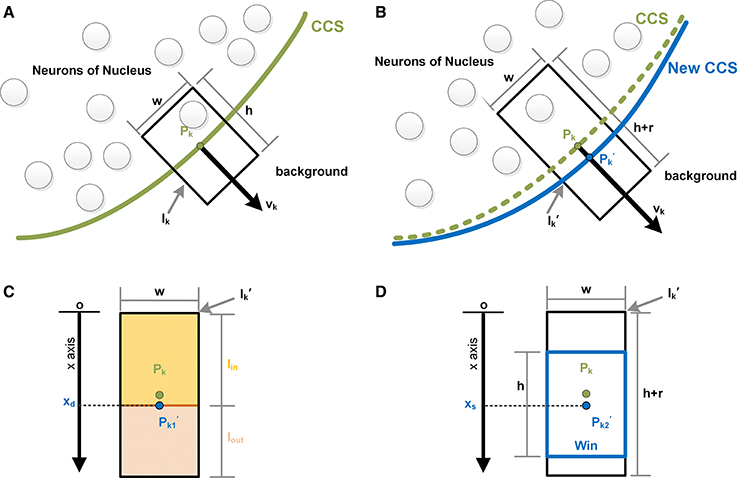

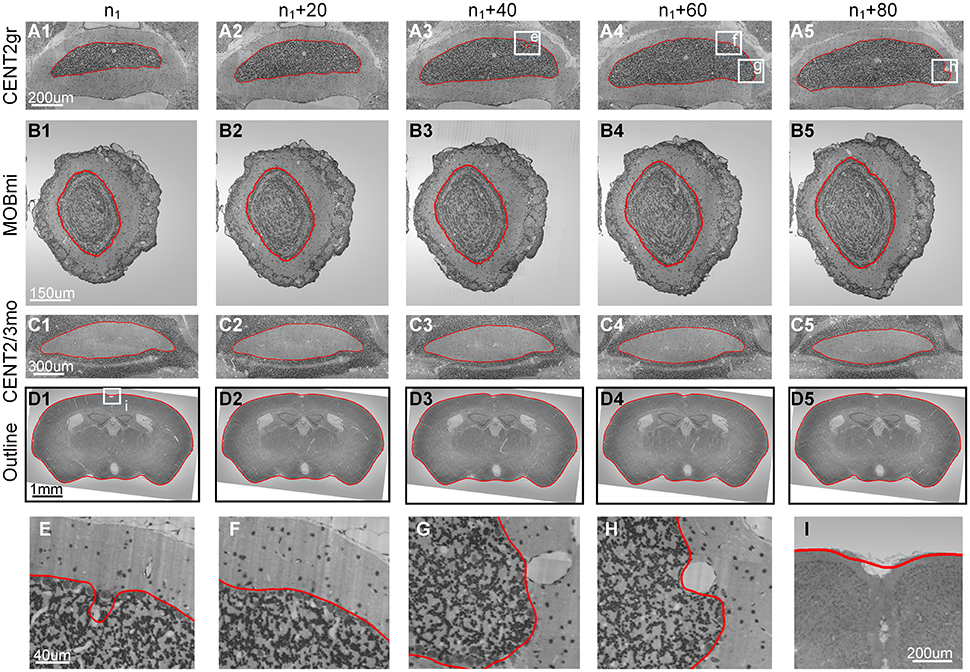

We selected the image sequence containing the granular layer of Lobule II (CENT2gr) to demonstrate the performance of our method in a typically encountered situation. The cells in CENT2gr are compact, whereas the cells in the surrounding area are sparse, which was similar to model 3. Based on our method, we correctly extracted the boundaries of this selected nucleus in 100 consecutive coronal sections (Figures 5A1–A5).

Figure 5. Results from cytoarchitectural images. The results obtained by applying our method to 4 cytoarchitectural image datasets. (A1–D5) The 1st–4th rows present the contour evolution processes of CENT2gr, CENT2/3mo, MOBmi and the whole mouse brain. (E–I) The 5th row gives the computational details. The red curves are the computed CCS. n1 represents the 20th section of the image sequence of every dataset. The image to the right of each column is the 20th image after it.

We then used the mitral layer of the main olfactory bulb (MOBmi) to evaluate our method in a more complicated situation. The MOBmi is a very thin circular area with relatively high cell density. The outside and inside of the MOBmi are the outer plexiform layer (MOBopl) and inner plexiform layer (MOBipl), respectively. The cell densities of these two nuclei are lower than those of MOBmi but not drastically so. We evaluated our method on a series of 100 coronal sections to determine the border between MOBmi and MOBopl, as shown in Figures 5B1–B5.

Our method can also be applied to extract the boundaries of nuclei with low cell density that are surrounded by areas with high cell density. On coronal sections of mouse brain, the molecular layer of Lobule II (CENT2mo) and Lobule III (CENT3mo) of the cerebellum have low cell density and are surrounded by the granular layers of Lobule II (CENT2gr) and Lobule III (CENT3gr). Using our method, we extracted the boundary of CENT2mo and CENT3mo as a whole (Figures 5C1–C5).

Finally, this method could even be applied to the segmentation of traditional non-nucleus objects with an even distribution of gray level values. We used the outline of the mouse brain in coronal sections for demonstration. We manually segmented the outline of the mouse brain in the first section and then calculated the outline of the mouse brain in the subsequent sections (Figures 5D1–D5).

As shown in Figure 5, the segmentation results were more or less influenced by the existence of blood vessels with high gray level values that traversed through the boundaries of the nuclei in Nissl-stained sections. Figures 5G,H show the blood vessels at the border of the nucleus that then stretched to press the border toward the inside. Before a blood vessel had vanished, the border did not immediately retract, as shown in Figure 5E. When the blood vessel disappeared, the segmented boundary converged to the real boundary of the nucleus, as shown in Figure 5F. Note that our method was unable to fit the sharp hollows of the target's boundary very well due to the smoothness and continuousness of CCS in math, as shown in Figure 5E. Considering the different sizes of the aforementioned target nuclei, the computational parameters, namely, width (w), height (h) and sliding range (r), were set to different values (Table 2).

The Outline Segmentation of MRI/CT Mouse Brain Images

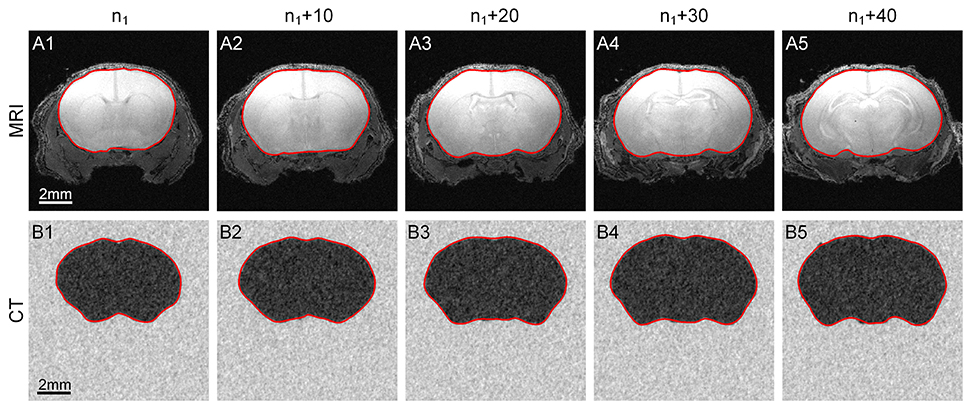

Our method can be applied to MRI and CT data in addition to cytoarchitectural images. We used a C57BL/6 mouse brain MRI dataset (Bruker Biospect, 7.0 T/20 cm, 300 MHz) with a resolution of 60 μm/pixel and dimensions of 200 × 200 × 320. Fifty coronal sections were chosen for testing, and the results are shown in the first row of Figure 6.

Figure 6. Segmentation of MRI and CT data. The results obtained by applying our method to MRI and CT data. (A1–A5) The 1st row shows the contour evolution process in MR images. (B1–B5) The 2nd row shows the process using CT data. The red curves are the computed CCS of the outline of the target area. n1 represents the 10th section of the image sequence of every dataset. The image to the right of each column is the 10th image after it.

We then selected a C57BL/6 mouse brain CT dataset (UltraBright, Oxford Instruments; PaxScan2520V, Varian Medical Systems. 50 kV, 40 W) with a resolution of 31.8 μm/pixel and dimensions of 800 × 800 × 800; 50 sections were chosen for our test. Note that the gray level range of the acquired original CT data was -0.402 to 0.136. We extended this range to 0–255 for convenience. The results are shown in the second row of Figure 6.

The parameters w, h and r used to analyze the MRI and CT data were set to 10, 5 and 3, respectively.

Discussion

We developed a method to extract the boundaries of nuclei with relatively high cell density in an image sequence with CCS, which can also be used for the segmentation of nuclei with low cell density that are surrounded by areas with high cell density. Our method is also able to extract the boundaries of traditional non-nucleus objects.

Compared to our method, the classical segmentation algorithms used in the image segmentation field are difficult to apply to the segmentation of nuclei consisting of scattered cell bodies. The traditional thresholding method tends to extract the boundaries of isolated cells but not the whole boundary of the nucleus. The region-growing method is suitable for the segmentation of target areas with homogenous gray level distributions. The snake algorithm is easily disturbed by cells near the boundary, whereas the level set method tends to cause the boundary to converge onto isolated cells.

In the past, the boundaries of nuclei in high-resolution cytoarchitectural images were manually delineated based on experts' experience. With the emergence of high-resolution data-acquisition techniques, such as MOST, it has become unrealistic to draw lines on tens of thousands of sections by hand. Our method can automatically segment the boundaries of nuclei that differ in cell density to a high degree relative to their surrounding area, thereby partially automating the delineation of nucleus boundaries in high-resolution cytoarchitectural images and ensuring future application in the illustration of high-resolution brain atlases.

Our method has some limitations. In the mouse brain, many nuclei have complicated morphologies, and their boundaries occasionally split and merge along the coronal direction, which cannot be handled by our method. Our next goal is to extract nuclei with complicated morphologies and solve this problem by determining a series of conditions to detect the occurrence of the splitting and merging of nucleus boundaries.

Author Contributions

ZF and QL designed the proposed method, and ZF also coded the CSS toolkit and completed the performance testing. AL and HG provided the data needed for testing and giving many essential advices on articles writing and model testing. QL provided the support in hardware and given many essential advices. ZF drafted the manuscript and all authors reviewed and approved the final version of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We appreciate Zhengchao Xu and Chaozhen Tan for testing the CCS Toolkit, and the financial supports from the National Program on Key Basic Research Project (973 Program, Grant No. 2015CB755602), National Natural Science Foundation of China (Grant No. 91432116) and National Key Scientific Instrument and Equipment Development Program of China (Grant No. 2012YQ030260).

References

Amunts, K., Lepage, C., Borgeat, L., Mohlberg, H., Dickscheid, T., Rousseau, M.-É., et al. (2013). BigBrain: an ultrahigh-resolution 3D human brain model. Science 340, 1472–1475. doi: 10.1126/science.1235381

Balafar, M. A., Ramli, A. R., Saripan, M. I., and Mashohor, S. (2010). Review of brain MRI image segmentation methods. Artif. Intell. Rev. 33, 261–274. doi: 10.1007/s10462-010-9155-0

Brodmann, K. (1908). Beiträge zur histologischen lokalisation der groβhirnrinde. J. Psychol. Neurol. 10, 231–246.

Brunjes, P. C., Illig, K. R., and Meyer, E. A. (2005). A field guide to the anterior olfactory nucleus (cortex). Brain Res. Rev. 50, 305–335. doi: 10.1016/j.brainresrev.2005.08.005

Dice, L. R. (1945). Measures of the amount of ecologic association between species. Ecology 26, 297–302. doi: 10.2307/1932409

Dong, H. W. (2008). The Allen Reference Atlas: A Digital Color Brain Atlas of the C57Bl/6J Male Mouse. Hoboken, NJ: John Wiley & Sons Inc.

Gahr, M. (1997). How should brain nuclei be delineated? Consequences for developmental mechanisms and for correlations ofarea size, neuron numbers and functions of brain nuclei. Trends Neurosci. 20, 58–62. doi: 10.1016/S0166-2236(96)10076-X

Geisler, S., Andres, K. H., and Veh, R. W. (2003). Morphologic and cytochemical criteria for the identification and delineation of individual subnuclei within the lateral habenular complex of the rat. J. Comp. Neurol. 458, 78–97. doi: 10.1002/cne.10566

Gilles, D. S. (1999). Robust Description and Matching of Images. dissertation's thesis, University of Oxford, Oxford, UK.

Harris, C., and Stephens, M. (1988). A combined corner and edge detector. Proc. Alvey Vis. Conf. 15, 147–151. doi: 10.5244/C.2.23

Huttenlocher, D. P., Klanderman, G. A., and Rucklidge, W. J. (1993). Comparing images using the Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 15, 850–863. doi: 10.1109/34.232073

Kershaw, D. (1972). The orders of approximation of the first derivative of cubic splines at the knots. Math. Comput. 26, 191–198. doi: 10.1090/S0025-5718-1972-0300403-0

Li, A., Gong, H., Zhang, B., Wang, Q., Yan, C., Wu, J., et al. (2010). Micro-optical sectioning tomography to obtain a high-resolution atlas of the mouse brain. Science 330, 1404–1408. doi: 10.1126/science.1191776

Lindeberg, T. (1998). Feature detection with automatic scale selection. Int. J. Comput. Vis. 30, 79–116. doi: 10.1023/A:1008045108935

McDonald, A. J. (1982). Cytoarchitecture of the central amygdaloid nucleus of the rat. J. Comp. Neurol. 208, 401–418. doi: 10.1002/cne.902080409

Mesejo, P., Cagnoni, S., Costalunga, A., and Valeriani, D. (2013). “Segmentation of histological images using a metaheuristic-based level set approach,” in Proceedings of the 15th Annual Conference Companion on Genetic and Evolutionary Computation (New York, NY: ACM), 1455–1462. doi: 10.1145/2464576.2466808

Mesejo, P., Ugolotti, R., Cagnoni, S., Cunto, F. D., and Giacobini, M. (2012). “Automatic segmentation of hippocampus in histological images of mouse brains using deformable models and random forest,” in 25th International Symposium on Computer-Based Medical Systems (CBMS) (Rome: IEEE), 1–4. doi: 10.1109/cbms.2012.6266318

Meyer, E. A., Illig, K. R., and Brunjes, P. C. (2006). Differences in chemo-and cytoarchitectural features within pars principalis of the rat anterior olfactory nucleus suggest functional specialization. J. Comp. Neurol. 498, 786–795. doi: 10.1002/cne.21077

Paxinos, G., and Franklin, K. B. (2004). The Mouse Brain in Stereotaxic Coordinates. Sydney: Gulf Professional Publishing.

Press, W. H. (2007). Numerical Recipes 3rd Edition: The Art of Scientific Computing. Cambridge; New York, NY: Cambridge University Press.

van der Lijn, F., den Heijer, T., Breteler, M. M., and Niessen, W. J. (2008). Hippocampus segmentation in MR images using atlas registration, voxel classification, and graph cuts. Neuroimage 43, 708–720. doi: 10.1016/j.neuroimage.2008.07.058

Wiegell, M. R., Tuch, D. S., Larsson, H. B., and Wedeen, V. J. (2003). Automatic segmentation of thalamic nuclei from diffusion tensor magnetic resonance imaging. Neuroimage 19, 391–401. doi: 10.1016/S1053-8119(03)00044-2

Keywords: closed cubic spline, brain, histological image, nucleus segmentation, automatic

Citation: Feng Z, Li A, Gong H and Luo Q (2016) An Automatic Method for Nucleus Boundary Segmentation Based on a Closed Cubic Spline. Front. Neuroinform. 10:21. doi: 10.3389/fninf.2016.00021

Received: 12 March 2016; Accepted: 02 June 2016;

Published: 16 June 2016.

Edited by:

Arjen Van Ooyen, Vrije Universiteit Amsterdam, NetherlandsReviewed by:

Alexander K. Kozlov, Royal Institute of Technology, SwedenHidetoshi Ikeno, University of Hyogo, Japan

Copyright © 2016 Feng, Li, Gong and Luo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qingming Luo, cWx1b0BtYWlsLmh1c3QuZWR1LmNu

Zhao Feng

Zhao Feng Anan Li

Anan Li Hui Gong

Hui Gong Qingming Luo

Qingming Luo